1. Introduction

The future quantum internet will enable connectivity between quantum information processing (QIP) nodes through the continuous distribution of entanglement [1,2]. In stricter terms, this means high-rate, long-distance transmission of multipartite quantum states with high fidelity with respect to maximally entangled states of the same dimension. For the simplest case of two QIP nodes, this is the fidelity with respect to a Bell state [3]. In general, the requirements of high rate and high fidelity pull in opposite directions in entanglement generation processes. The widely used sources of entangled photon pairs based on nonlinear media illustrate this [4]: increasing the pump power generates more photon pairs, but it also generates more multi-pair states, which reduce the overall state fidelity. Preserving both rate and fidelity after long-distance transmission is an even bigger challenge, albeit one that many research groups around the world are pursuing [5,6,7]. The core idea is to break long distances down into shorter stretches, called elementary links. Over each elementary link, entanglement can be efficiently distributed in a heralded manner, and quantum memories preserve the successful outcomes. A chain of elementary links acts as a quantum repeater, in which the intrinsic losses of the channel are mitigated by rounds of entanglement swapping between the states held in the quantum memories [8]. Finally, the entanglement is distributed to the end nodes [9], yielding a network of interconnected quantum processors. Some argue that the so-called "killer application" of a quantum internet has not yet been identified. Even so, two prospects drive progress and push for innovative solutions: secure communication based on quantum key distribution, and increased processing power through distributed quantum computing, both on a global scale.

Satellite-based quantum communication opens the door to long-distance entanglement distribution with a reduced number of elementary links in the quantum repeater chain. Apart from the tens of kilometers of atmosphere, light propagates through vacuum on its path to (or from) the satellite. The dominant loss term is therefore the free-space path loss (FSPL), which scales quadratically with distance rather than exponentially, as the loss in an optical fiber does [10]. In ground-based networks, quantum repeater nodes must be placed at accessible locations, and the fiber can rarely be laid in a straight line between two consecutive nodes, as the metropolitan experiments of [11,12] show. Satellites are also independent of the local topography, which could make them useful quantum repeater nodes even for shorter distances. Furthermore, a quantum internet spanning the entire globe needs transoceanic links, which strongly favors non-ground-based repeater nodes that space-based links can provide.

Flying a space-qualified quantum payload on a satellite is expensive and complex. In addition, current quantum memory platforms cannot yet support the long-distance links that make satellites beneficial. Together, these pose significant challenges for space-based quantum communication, which currently attracts only a small number of early adopters. The Micius satellite was the pioneering quantum satellite mission [13], but it is not the only one [14]. Therefore, the main focus remains on ground-based quantum networks [12,15,16,17]. A shift in perspective requires significant advances in the enabling technologies, namely sources of entangled photon pairs and quantum memories for light. It also requires a clear definition of the benefits of a satellite-based link, together with the requirements under which these benefits can materialize [18,19,20,21].

Here, we contribute to the latter with an analysis of entanglement distribution rates between two arbitrary nodes in a quantum network that allows both ground- and satellite-based links. We start by breaking down the building blocks of a quantum repeater and rearranging them to fit a space segment, while accounting for its practical constraints. This allows us to write entanglement distribution rate equations for both satellite- and ground-based repeater channels. These rates are then mapped onto the edge weights of a graph representing the network, and a best-path algorithm identifies the most beneficial architecture. The analysis can incorporate dynamic conditions of the satellite link, such as the weather, stray light, and the satellite orbit, using realistic data. We show that, as expected, satellites offer significant advantages for longer-distance links. Significant effort has already been devoted to modeling and analyzing the achievable entanglement distribution rates in practical quantum networks [22,23,24,25]. The present work is complementary, allowing a quick and broad analysis of practical quantum repeater architectures. The paper discusses the distance thresholds beyond which satellite-based quantum communication becomes advantageous, the identified limits of both the analysis and current technology, and potential avenues for improvement.

2. Quantum Repeaters: Ground and Space Architectures

A quantum repeater combines the multimodality (temporal, spectral, etc.) of a quantum memory with heralded entanglement swapping. This decouples the success probabilities of the individual elementary links, so the repeater beats the bounds of direct transmission [26]. Different choices of protocol, encoding, platform, and wavelength exist [27]. Even so, the essential functional blocks of a quantum repeater can be narrowed down to four: sources of entanglement, quantum memories, Bell State Measurement units, and quantum channels.

The sources must generate entanglement at the best compromise between high rate and high fidelity, in as many multiplexed modes as possible. They also need an optical interface, so that optical photons can carry the quantum information over the quantum channels with minimal attenuation and decoherence. Quantum memories come in many flavours, and a single platform often implements both memory and source in the same physical system [5,7,28]. Since this is not the general case [6], the two functional blocks are treated separately here. The memory is expected to store a quantum state in a large number of modes with high efficiency. The Bell State Measurement (BSM) unit projects the joint state of two non-interacting photons onto the basis of maximally entangled bipartite states (the Bell basis). It is at the core of the entanglement swapping protocol [29]. The BSM is expected to efficiently produce projective measurement results that can be conveyed classically to the quantum memories to swap entanglement in a heralded fashion [30].

Here, an efficiency is understood as the probability that a functional block performs as expected. For the source, this is the probability of emitting exactly one pair of entangled photons in a given attempt, as opposed to zero pairs or two or more pairs. For the quantum memory, it is the probability of successfully storing and retrieving a photon from an entangled pair without disturbing its quantum state. For the BSM, it is the probability of successfully projecting and detecting the joint photon state.
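The source trade-off between rate and fidelity can be made concrete. The sketch below assumes, as an illustration not stated in the text, that a single-mode nonlinear source emits pairs with thermal statistics, P(n) = μ^n/(1+μ)^(n+1), where μ is the mean number of pairs per attempt set by the pump power. The function name `pair_probabilities` and the μ values are hypothetical.

```python
# Sketch under an assumed model (not stated in the text): a single-mode
# nonlinear source with thermal pair statistics,
#     P(n) = mu**n / (1 + mu)**(n + 1),
# where mu is the mean number of pairs per attempt, which grows with pump power.


def pair_probabilities(mu):
    """Return (P(no pair), P(exactly one pair), P(two or more pairs))."""
    p0 = 1.0 / (1.0 + mu)
    p1 = mu / (1.0 + mu) ** 2
    p_multi = 1.0 - p0 - p1  # analytically mu**2 / (1 + mu)**2
    return p0, p1, p_multi


if __name__ == "__main__":
    for mu in (0.01, 0.1, 0.5, 1.0):  # illustrative pump settings
        p0, p1, p_multi = pair_probabilities(mu)
        # Share of non-empty attempts that carry multi-pair noise: mu / (1 + mu).
        share = p_multi / (p1 + p_multi)
        print(f"mu={mu:<5} P(1)={p1:.4f} P(>=2)={p_multi:.4f} multi-pair share={share:.3f}")
```

In this model the single-pair probability peaks at 0.25 for μ = 1. The multi-pair share μ/(1+μ) keeps rising with μ, which is the tension between rate and fidelity described above.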

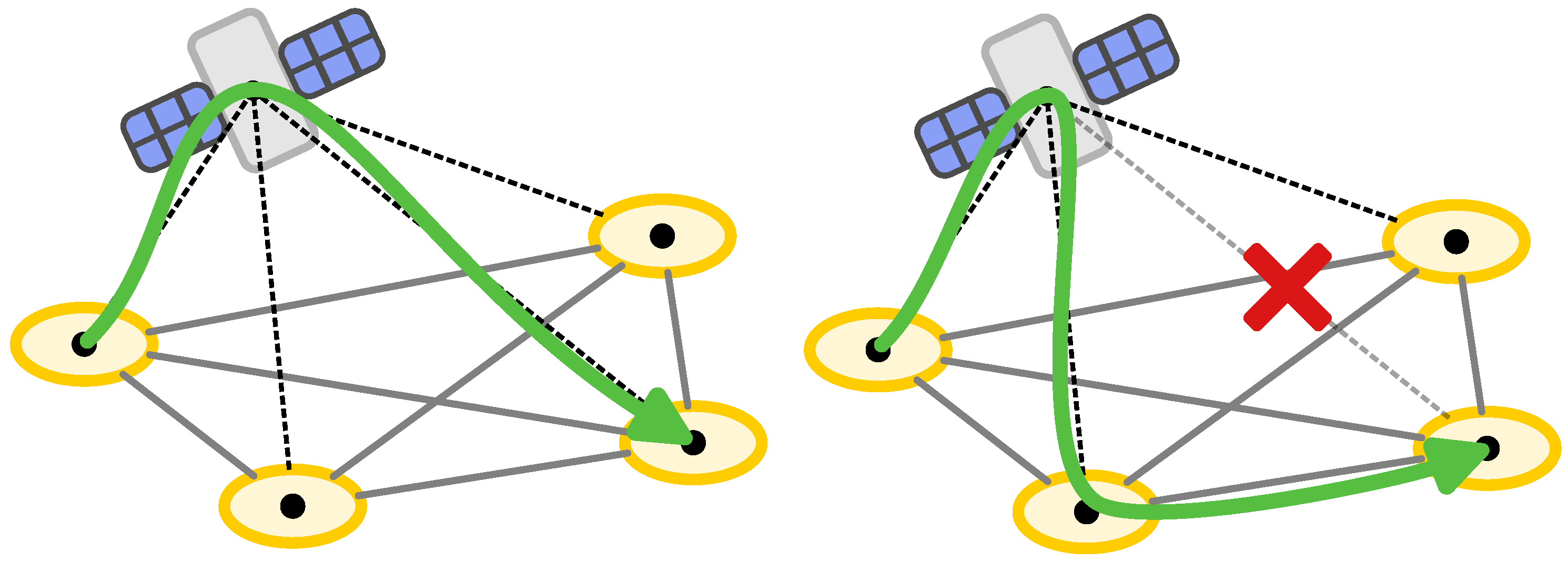

Consider first the natural arrangement of the functional blocks within an elementary link [26,27,30]: a source and a memory on either side, and a central BSM. Based on this alone, it would be natural to assign the BSM to the satellite. Two ground stations, each equipped with a source and a memory, would then beam photons up to the satellite. Successful measurement results would be transmitted down to the ground stations, leaving the states stored in the memories in a joint entangled state. For a ground-based architecture, this configuration is extremely attractive, since the direction of propagation of the photonic qubits is irrelevant.

For the space segment, however, the thin layer of atmosphere close to the ground breaks the symmetry between the two transmission directions, unlike in the fiber-based ground segment. The configuration in which the satellite receives a beam from a ground station is called uplink. The opposite configuration, in which the satellite sends beams to the ground stations, is called downlink. In uplink, the beam traverses the atmosphere during the first few kilometers of propagation. Even the slightest angular deviation introduced there therefore translates into a significant beam-spot displacement at the satellite, after hundreds of kilometers of propagation. Downlink does not suffer from the same problem, because the beam only interacts with the atmosphere at the very end of its propagation path. Depending on the atmospheric turbulence, the total loss of the uplink exceeds that of the downlink by a factor between 10 and 100 [31]. Therefore, the downlink configuration, i.e., photonic qubits transmitted from the satellite to the ground stations, is chosen as the baseline in this work.
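For a rough sense of scale, take an angular deviation of $\delta\theta = 5\,\mu\mathrm{rad}$ acquired near the ground; these numbers are illustrative and are not taken from this work. In the uplink, after the remaining path to a satellite $\ell = 500$ km away, this gives a displacement of approximately

$$\Delta x_{\mathrm{up}} \approx \delta\theta\,\ell = (5\times10^{-6})(5\times10^{5}\,\mathrm{m}) = 2.5\,\mathrm{m}.$$

In the downlink, the same deviation acts only over roughly the last 10 km of propagation:

$$\Delta x_{\mathrm{down}} \approx (5\times10^{-6})(10^{4}\,\mathrm{m}) = 5\,\mathrm{cm}.$$

This simple lever-arm estimate ignores beam broadening and turbulence statistics. It only shows why the position of the atmosphere along the path matters.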

The downlink configuration constrains the design of a quantum repeater's space segment containing a satellite, since the source, rather than the BSM, sits at the center point between the two ground stations. Placing the source on the satellite is practical, since such sources have been demonstrated in orbit [13,14], but it shifts all the complexity to the ground stations. Entanglement distribution over lossy channels is probabilistic. Successful rounds must therefore be heralded by a classical signal from a BSM-like unit, so that the quantum memories can hold on to those specific modes. Proposals such as [32] combine the memories and the BSM heralding protocol into a single system. The functional blocks can, however, easily be kept separate, in which case a source and a BSM must be included in each ground station.

In this final configuration, the satellite beams photon pairs down to Earth, with one photon of each pair reaching each of the two ground stations. There, each photon coming from space meets, in a BSM, a photon generated on the ground whose partner is stored in the local quantum memory. Upon simultaneous heralding events at both ground-based BSMs, entanglement swapping leaves the quantum memories in an entangled state. The block diagrams of both the space and ground repeater segments introduced so far are presented in Figure 1. These are not the only possible architectures. They do, however, offer a practical short- to mid-term option and assign intuitive functional roles to each block.

The functional blocks (quantum memory, source of entanglement, BSM, and quantum channel), each equipped with efficiencies and fidelities, provide a powerful framework for analysis. With it, one can compute the overall efficiency of a given concatenation of space and ground segments joined by rounds of entanglement swapping: a so-called automated repeater chain (ARC) [27,33]. The ARC is assumed to perform successive attempts of entanglement distribution over each segment at a fixed rate $R$. In the bipartite entanglement distribution scheme considered here, $R$ translates efficiencies into probabilities, and those into an average number of Bell pairs available at the end nodes per unit time.

We assume minimal decoherence as the photonic qubits propagate through the quantum channels and negligible dark counts on the BSM detectors. We also assume storage times long enough for the heralding signals to reach the quantum memories before the quantum states are lost. Under these assumptions, the performance of an ARC reduces to its achievable rate of entanglement distributed to its end nodes. One can start by assigning efficiencies $\eta_s$, $\eta_m$, $\eta_{\mathrm{BSM}}$, and $\eta_c(L)$ to the source, memory, BSM, and channels, respectively. Here, $L$ denotes the optical path distance between the center point of an elementary link and either one of its nodes. The channel efficiency is a function of distance, scaling exponentially for ground segments and quadratically for space segments.

To arrive at the ground-based elementary link (EL) rate equation [27], one starts from the success probability of a single attempt. For a ground segment, this is simply the product $\eta_s^{2}\,\eta_m^{2}\,\eta_{\mathrm{BSM}}\,\eta_c^{g}(L)^{2}$: two sources, two memories, one central BSM, and two channel arms. Subtracting this value from unity gives the probability of failure in a single mode. Raising the result to the power of the number of available multiplexing modes, $M$, gives the probability that no mode succeeds within a given attempt. Subtracting the latter from unity yields the probability that at least one mode is successfully swapped and stored in the quantum memories. Multiplying by $R$ then gives the EL rate:

$$R_{\mathrm{EL}}^{g} = R\left[1-\left(1-\eta_s^{2}\,\eta_m^{2}\,\eta_{\mathrm{BSM}}\,\eta_c^{g}(L)^{2}\right)^{M}\right], \tag{1}$$

$$\eta_c^{g}(L) = e^{-\alpha L}. \tag{2}$$

The steps towards the space-based EL rate equation are the same. However, the different arrangement of the functional blocks must be accounted for: a source on the satellite, plus a source, a memory, and a BSM at each ground station. The different channel losses must also be accounted for:

$$R_{\mathrm{EL}}^{s} = R\left[1-\left(1-\eta_s^{3}\,\eta_m^{2}\,\eta_{\mathrm{BSM}}^{2}\,\eta_c^{s}(L_A)\,\eta_c^{s}(L_B)\right)^{M}\right], \tag{3}$$

$$\eta_c^{s}(L) \propto \frac{1}{(\theta L)^{2}}. \tag{4}$$

The factors $\alpha$ in Eq. 2 and $\theta$ in Eq. 4 denote the fiber attenuation coefficient and the half-angle divergence of the free-space optical beam, respectively. For a ground segment, the distance $L$ corresponds directly to the distance between each node and the central point. For a space segment, the distances $L_A$ and $L_B$ are measured from each ground station to the satellite. An ARC is formed by concatenating its $n$ segments through $n-1$ steps of successful entanglement swapping, leading to a final probability of entanglement distribution of the form:

$$P_{\mathrm{ARC}} = \eta_{\mathrm{BSM}}^{\,n-1}\prod_{i=1}^{n} P_{\mathrm{EL},i}, \tag{5}$$

where $P_{\mathrm{EL},i} = R_{\mathrm{EL},i}/R$ is the per-attempt success probability of the $i$-th segment.
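The elementary-link and chain probabilities can be evaluated numerically. The following is a minimal sketch under stated assumptions:

- Single-attempt success is the product of block efficiencies: $\eta_s^2\eta_m^2\eta_{\mathrm{BSM}}\eta_c(L)^2$ for a ground segment and $\eta_s^3\eta_m^2\eta_{\mathrm{BSM}}^2\eta_A\eta_B$ for a space segment.
- The $M$ modes succeed independently.
- Each of the $n-1$ swaps succeeds with $\eta_{\mathrm{BSM}}$.

All parameter values and function names are hypothetical.

```python
import math

# Hypothetical block efficiencies and multiplexing (not the values used in this work).
ETA_S = 0.9      # source
ETA_M = 0.9      # memory (store and retrieve)
ETA_BSM = 0.5    # Bell state measurement
MODES = 1000     # multiplexed modes M
ALPHA_PER_KM = 0.2 * math.log(10) / 10  # 0.2 dB/km expressed as a natural-log coefficient


def eta_fiber(length_km):
    """Ground channel efficiency exp(-alpha * L)."""
    return math.exp(-ALPHA_PER_KM * length_km)


def multiplexed(p_single, modes=MODES):
    """Probability that at least one of `modes` independent modes succeeds."""
    if p_single >= 1.0:
        return 1.0
    # 1 - (1 - p)**M, evaluated stably for very small p.
    return -math.expm1(modes * math.log1p(-p_single))


def p_el_ground(half_length_km):
    """Ground EL: two sources, two memories, one central BSM, two fiber arms of length L."""
    p = ETA_S**2 * ETA_M**2 * ETA_BSM * eta_fiber(half_length_km) ** 2
    return multiplexed(p)


def p_el_space(eta_a, eta_b):
    """Space EL: satellite source plus, at each station, a source, a memory and a BSM."""
    p = ETA_S**3 * ETA_M**2 * ETA_BSM**2 * eta_a * eta_b
    return multiplexed(p)


def p_arc(segment_probs):
    """n segments joined by n - 1 swaps, each succeeding with probability ETA_BSM."""
    n = len(segment_probs)
    return ETA_BSM ** (n - 1) * math.prod(segment_probs)


if __name__ == "__main__":
    rate_hz = 1e9  # hypothetical attempt rate R
    print("one 100 km ground EL:", rate_hz * p_el_ground(50.0), "pairs/s")
    print("two 50 km ground ELs:", rate_hz * p_arc([p_el_ground(25.0)] * 2), "pairs/s")
```

The `expm1`/`log1p` form avoids losing precision when the single-mode probability is tiny, as it is for long fiber arms.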

The potential benefit of a space segment therefore hinges on whether its quadratic loss scaling can overcome both the exponential scaling of the fiber and the increased complexity of the ground-station hardware. One further factor makes this condition more stringent. The distance covered by a ground segment can be approximated by the distance between the two ground stations, while for a space segment the distance depends on the altitude of the satellite. In other words, two ground stations separated by 100 km can be connected by 100 km of optical fiber. A satellite orbiting at an altitude of at least 500 km, however, makes the optical path of the space segment much longer.

The expression of Eq. 5 tells only part of the story. As discussed above, entanglement distribution in a quantum network relies on transmitting entangled particles with both high rate and high fidelity. The secret key rate (SKR) combines both requirements of an ARC into a single meaningful parameter [34]. It is widely used as a figure of merit for the quality of quantum networks, although new benchmarking procedures have been proposed, e.g., [35]. The SKR also allows relevant practical issues to be evaluated. The most pressing of these for satellite quantum channels is the impact of stray light.
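The two loss scalings can be compared with a toy calculation. This sketch makes several simplifying assumptions:

- The Earth is flat, and the satellite is directly above the midpoint between the stations at 500 km altitude.
- The fiber attenuates 0.2 dB/km.
- The downlink loss is purely geometric in the far field, $(D_R/(2\theta L))^2$, with $\theta = 5\,\mu$rad and a 1 m receiver diameter $D_R$.

Atmosphere, pointing, coupling, and the extra ground-station hardware are ignored. The numbers only show how the two scalings behave, not where the threshold found in this work lies.

```python
import math


def fiber_loss_db(distance_km, alpha_db_per_km=0.2):
    """Fiber loss in dB grows linearly with distance (exponential in transmission)."""
    return alpha_db_per_km * distance_km


def midpoint_range_km(separation_km, altitude_km):
    """Flat-Earth distance from a station to a satellite above the stations' midpoint."""
    return math.hypot(altitude_km, separation_km / 2.0)


def downlink_loss_db(range_km, half_divergence_rad=5e-6, rx_diameter_m=1.0):
    """Far-field geometric loss -10 log10[(D_R / (2 theta L))**2], capped at 0 dB."""
    spot_radius_m = half_divergence_rad * range_km * 1e3
    transmission = min(1.0, (rx_diameter_m / (2.0 * spot_radius_m)) ** 2)
    return -10.0 * math.log10(transmission)


if __name__ == "__main__":
    for separation in (100, 300, 500, 1000):
        slant = midpoint_range_km(separation, altitude_km=500.0)
        two_downlinks = 2 * downlink_loss_db(slant)  # eta_A * eta_B in dB
        print(f"{separation:5d} km: fiber {fiber_loss_db(separation):6.1f} dB, "
              f"two downlinks {two_downlinks:5.1f} dB (slant range {slant:.0f} km)")
```

With these toy numbers, the fiber loss overtakes the combined downlink loss somewhere between 100 km and 300 km of ground separation. The long slant path keeps the satellite penalty roughly constant at short separations.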

Stray light refers to unwanted background photons that reach the receiver along the path of the photonic qubits transmitted by the satellite and are not removed by filtering. These photons reduce the quantum information capacity of the link. The present analysis neglects decoherence during transmission through the channel. Stray light, however, has a severe impact on the results of the BSM units, especially during the day, when the solar radiance is at its maximum. To evaluate the impact of these unwanted photons on the overall fidelity of the states distributed over the ARCs, a decoherence channel model is assumed. The states produced by the source are considered to be Werner states (noisy EPR pairs) [36], with a density matrix of the form:

$$\rho_W = W\,|\Phi^{+}\rangle\langle\Phi^{+}| + \frac{1-W}{4}\,\mathbb{I}_4, \tag{6}$$

where $|\Phi^{+}\rangle$ is a maximally entangled Bell state, $W$ is the Werner parameter of the source (associated with its fidelity with respect to $|\Phi^{+}\rangle$), and $\mathbb{I}_4$ is the $4\times4$ identity matrix. The final Werner parameter of the states available at the ground stations can be estimated by concatenating, i.e., multiplying, the Werner parameters of the individual functional blocks. These are assumed to be fixed, except for that of the BSM.
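The Werner-state model can be checked directly in plain Python. The sketch builds $W|\Phi^+\rangle\langle\Phi^+| + (1-W)\mathbb{I}_4/4$ in the computational basis and evaluates its Bell-state fidelity, which should equal the standard closed form $(1+3W)/4$. It also chains block Werner parameters by multiplication, as described above. The block values and helper names are hypothetical.

```python
import math

# Bell state |Phi+> = (|00> + |11>)/sqrt(2) in the basis |00>, |01>, |10>, |11>.
PHI_PLUS = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def werner_state(w):
    """rho = w |Phi+><Phi+| + (1 - w) I_4 / 4 as a 4x4 real matrix (list of rows)."""
    return [[w * PHI_PLUS[i] * PHI_PLUS[j] + (1 - w) * (i == j) / 4
             for j in range(4)] for i in range(4)]


def bell_fidelity(rho):
    """F = <Phi+| rho |Phi+> for a real density matrix."""
    return sum(PHI_PLUS[i] * rho[i][j] * PHI_PLUS[j]
               for i in range(4) for j in range(4))


def chained_werner(*werner_params):
    """Concatenate functional blocks by multiplying their Werner parameters."""
    return math.prod(werner_params)


if __name__ == "__main__":
    w_link = chained_werner(0.98, 0.99, 0.95)  # hypothetical source, memory, BSM values
    rho = werner_state(w_link)
    print(f"W = {w_link:.4f}, F = {bell_fidelity(rho):.4f}, "
          f"(1 + 3W)/4 = {(1 + 3 * w_link) / 4:.4f}")
```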

The Werner parameter of the swapped states due to the action of the BSM can be estimated from the Coincidence-to-Accidental Ratio (CAR), which approximates the signal-to-noise ratio (SNR) achievable at the BSM. In the regime of low channel-induced decoherence, the CAR provides an upper bound on the fringe visibility. This visibility is extracted from coincidence measurements performed on the joint state delivered by the ARC segment. The visibility, in turn, is directly associated with the Werner parameter of the link, in which the term introduced by the BSM dominates:

$$W_{\mathrm{BSM}} \approx \frac{\mathrm{CAR}-1}{\mathrm{CAR}+1}. \tag{7}$$

With this expression, one can connect the stray-light radiance impinging on the BSM detectors to the overall entanglement quality generated in an ARC segment. First, the stray photon rate is derived from the following quantities:

- the solar radiance $L_\lambda$, which can be approximated by a black-body radiation profile;
- the receiver telescope aperture area ($A$) and solid angle ($\Omega$);
- the photonic qubit bandwidth ($\Delta\lambda$);
- the operational wavelength ($\lambda$).

The rate is then

$$N_{\mathrm{stray}} = \frac{L_\lambda\,A\,\Omega\,\Delta\lambda\,\lambda}{hc}, \tag{8}$$

where $h$ is Planck's constant and $c$ the speed of light. The rate $N_{\mathrm{stray}}$ is then translated into the term $p_n$, the probability of detecting a stray photon within a time window of the detectors. This assumes that stray detection events are uncorrelated and therefore homogeneously distributed over time. Hence $p_n = N_{\mathrm{stray}}\,\delta t$, where $\delta t$ is the time resolution, limited by the detector's jitter or response time. Finally, the photonic qubits are expected within a well-defined time window $\tau$, therefore:

In Eq. 9, the entanglement generation probability $P_{\mathrm{EL}}$ is not squared, since it already presumes pairs of photons arriving at the BSM. From Eq. 9, one can calculate the estimated entanglement quality delivered by a given ARC segment, which is directly proportional to the overall Werner parameter of the segment. This parameter is obtained by multiplying $W_{\mathrm{BSM}}$ by the individual Werner parameters of the other functional blocks of the elementary link, $W_s$ and $W_m$ for source and memory, respectively. Relevant for the SKR is the quantum bit error rate (QBER), approximated as

$$Q = \frac{1-W}{2} \tag{10}$$

[37]. The QBER can be used to calculate the secret key fraction per distributed entangled pair over the elementary link:

$$r = 1 - f\,H_2(Q) - H_2(Q), \tag{11}$$

where $f$ is a constant representing the inefficiency of the classical steps of the quantum key distribution protocol, and $H_2$ is the binary Shannon entropy function. The SKR is calculated as the product $R\,P_{\mathrm{EL}}\,r$. It is heavily influenced by the entanglement generation probability of the elementary link, since this probability also affects $W_{\mathrm{BSM}}$ through the CAR, as indicated in Eq. 9. This makes $P_{\mathrm{EL}}$ the most significant term to optimize. The point matters for the best-path estimation results of Section 3: the SKR can only be computed at the end nodes of an ARC, while the optimization considers each individual segment.
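The chain from CAR to key rate can be sketched for one elementary link under stated assumptions:

- $W_{\mathrm{BSM}}$ is taken at the CAR-limited visibility bound $(\mathrm{CAR}-1)/(\mathrm{CAR}+1)$.
- The QBER is $Q=(1-W)/2$.
- The key fraction takes the asymptotic form $r = 1-(1+f)H_2(Q)$, clipped at zero.
- The CAR is an input here, since its dependence on stray light (Eq. 9) is not reproduced in this sketch.

The value $f = 1.16$ and all other inputs are hypothetical.

```python
import math


def h2(q):
    """Binary Shannon entropy in bits."""
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -q * math.log2(q) - (1.0 - q) * math.log2(1.0 - q)


def werner_from_car(car):
    """Assumed W_BSM: the CAR-limited visibility bound (CAR - 1) / (CAR + 1)."""
    return (car - 1.0) / (car + 1.0)


def key_fraction(w_total, f=1.16):
    """Assumed key fraction r = 1 - (1 + f) H2(Q) with Q = (1 - W)/2, clipped at 0."""
    qber = (1.0 - w_total) / 2.0
    return max(0.0, 1.0 - (1.0 + f) * h2(qber))


def link_skr(rate_hz, p_el, w_source, w_memory, car, f=1.16):
    """SKR = R * P_EL * r for one elementary link (all inputs hypothetical)."""
    w_total = w_source * w_memory * werner_from_car(car)
    return rate_hz * p_el * key_fraction(w_total, f)


if __name__ == "__main__":
    for car in (10.0, 100.0, 1000.0):
        print(f"CAR = {car:6.0f}: SKR = {link_skr(1e9, 1e-3, 0.99, 0.99, car):.3e} bit/s")
```

With these inputs a CAR of 10 yields no key at all, while higher CAR values recover most of the raw pair rate. This illustrates why stray light matters so much.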

2.1. Modeling Free-Space Optical Channels

In the space segment, a satellite is the connecting point between two ground stations (A and B), and the entanglement generation probability depends on the channel efficiencies $\eta_A$ and $\eta_B$. The orbit of the satellite determines its position at any given time, while the ground stations are static, so these two terms may differ greatly. Each efficiency has four major contributing factors:

- the geometrical losses due to beam divergence and limited receiver telescope dimensions, $\eta_{\mathrm{geo}}$;
- the pointing losses due to vibrations, atmospheric turbulence, and relative movement between transmitter and receiver stations, $\eta_{\mathrm{point}}$;
- the effects of molecular and aerosol scattering in the atmosphere, $\eta_{\mathrm{atm}}$;
- the efficiency of coupling the incoming free-space optical beam into a medium that allows integration into the BSM unit, in general an optical fiber, $\eta_{\mathrm{fib}}$.

Given the individual contributions, $\eta_{A,B} = \eta_{\mathrm{geo}}\,\eta_{\mathrm{point}}\,\eta_{\mathrm{atm}}\,\eta_{\mathrm{fib}}$.

A detailed practical analysis of the first term can be found in [38]. It takes into account the sizes of both the receiving and transmitting telescopes, the size of the beam after propagation with a certain divergence, clipping of the beam at the transmitting telescope, and obscurations in the telescopes. Assuming on-axis propagation and the far-field approximation, an optimal ratio $\beta$ between the transmitter telescope's diameter and the beam waist can be derived [38]:

where $\gamma$ is the obscuration ratio. Given a few other design parameters, the design ratio $\beta$ allows the geometrical efficiency $\eta_{\mathrm{geo}}$ to be written as follows:

where $A_T$ and $A_R$ are the aperture areas of the transmitting and receiving telescopes, respectively, $\lambda$ is the operational wavelength, and $\ell$ is the distance between the two telescopes. The beam waist $w_0$ is set by the choice of transmitter telescope diameter and Eq. 12. It in turn defines the half-angle beam divergence $\theta \approx \lambda/(\pi w_0)$, in radians. The divergence is then used to compute the pointing efficiency $\eta_{\mathrm{point}}$ through [39]:

where $\theta_p$ is the half-angle pointing error, also in radians, which can be mitigated with an active pointing and tracking system [13]. Unlike the geometrical and pointing terms, the remaining atmospheric term, $\eta_{\mathrm{atm}}$, can only be modeled empirically, by the following expression [40]:

where:

- $C$ is the cloud coverage factor;
- $\sigma_0$ is the extinction coefficient at sea level, which is a function of the weather visibility $V$ and the operational wavelength $\lambda$ [41];
- $H$ is the scale parameter (in meters);
- $Z$ is the zenith angle between the ground station and the satellite.

Most parameters of Eq. 15 are conditional, since they change with the conditions of the channel. That is also the case for

, since the atmospheric conditions such as turbulence can impact its value differently depending on their intensity [

40]. Assuming the ground stations are equipped not only with pointing tracking hardware to minimize the effects of Eq.

14, but also with an adaptive optics system [

42], the optimistic assumption of

is used for the remainder of the analysis.
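Geometry alone already makes $\eta_A$ and $\eta_B$ differ. The following sketch swaps in a simpler textbook model rather than the detailed treatment of [38]. It computes the slant range from zenith angle and altitude on a spherical Earth. It then computes the fraction of a far-field Gaussian beam (1/e² radius $w = \theta\ell$) collected by a circular aperture of radius $a$, which is $1-\exp(-2a^2/w^2)$. Obscuration, clipping, pointing, atmosphere, and coupling are ignored. The wavelength, waist, receiver size, and zenith angles are hypothetical.

```python
import math

R_EARTH_KM = 6371.0  # mean Earth radius


def slant_range_km(zenith_rad, altitude_km):
    """Station-to-satellite distance on a spherical Earth for a given zenith angle."""
    r_sat = R_EARTH_KM + altitude_km
    return (math.sqrt(r_sat**2 - (R_EARTH_KM * math.sin(zenith_rad)) ** 2)
            - R_EARTH_KM * math.cos(zenith_rad))


def gaussian_collection(range_km, half_divergence_rad, rx_diameter_m):
    """Fraction of a far-field Gaussian beam (1/e^2 radius theta*l) inside a circular aperture."""
    w = half_divergence_rad * range_km * 1e3  # beam radius at the receiver, metres
    a = rx_diameter_m / 2.0
    return 1.0 - math.exp(-2.0 * a**2 / w**2)


if __name__ == "__main__":
    wavelength_m, waist_m = 1550e-9, 0.1       # hypothetical transmitter
    theta = wavelength_m / (math.pi * waist_m)  # far-field half-angle divergence
    etas = {}
    for station, zenith_deg in (("A", 20.0), ("B", 60.0)):
        ell = slant_range_km(math.radians(zenith_deg), altitude_km=700.0)
        etas[station] = gaussian_collection(ell, theta, rx_diameter_m=1.0)
        print(f"{station}: slant range {ell:.0f} km, eta_geo {etas[station]:.3e}")
    print("eta_A * eta_B (geometry only):", etas["A"] * etas["B"])
```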

3. Hybrid Ground/Space ARC Network Simulation

With both the ground and space segment architectures (Figure 1) and their associated performance metrics (Eqs. 5, 9, and 11) in hand, one can optimize the entanglement generation rate between any two end nodes of an ARC embedded in a network. To do so, a framework with two major components is introduced. The first is a potential future pan-European quantum network, presented in Figure 2, with over 100 selected nodes representing major European cities distributed throughout the territory. The second is a method to optimize the ARC connection between any pair of end nodes in this network. The method evaluates all connections between the nodes using the performance metrics introduced so far, maps them onto the edge weights of a graph, and searches for the best path.

The evaluation of the hybrid ARC network first builds a ground/space graph, hereafter called the "hybrid" graph, and then analyzes each unique pair of end nodes. First, a static graph is constructed in which every node is connected to every other node via a fiber link. This graph is constructed only once, since it is assumed not to change over time. The second graph, based on space-segment connections, is constructed for each time window considered in the evaluation, at one-minute resolution for practical purposes. For each time window, the weather conditions (visibility $V$ and cloud coverage) at each node of the network are taken from a historical database [43]. Without loss of generality, the simulation uses the weather conditions of 1 January 2024. The time of day determines both the stray-light radiance and the position of the satellite relative to the ground stations; the satellite is in a polar orbit at an altitude of 700 km. Each node is assumed to be able to communicate directly with any other node, via either space or ground segments, so the two graphs are merged into a single hybrid graph.

To that end, each node-to-node connection is evaluated individually based on its performance metric. Since the ARC rate $R$ is assumed to be the same for ground and space segments, one can introduce the secret key probability, $\mathrm{SKP} = \mathrm{SKR}/R$. Because $\mathrm{SKP} \leq 1$, setting the edge weights to $-\log(\mathrm{SKP})$ ensures that they are never negative and that they increase as the SKP (and thus the SKR) worsens. For each node pair, the better-performing segment is assigned to the corresponding edge of the hybrid graph. This pruning converges to a single hybridized ground/space graph. Then, Dijkstra's algorithm [44], which minimizes the total path weight in a graph, finds the best path between the chosen nodes, as pictorially presented in Figure 3. The resulting path type (ground-only, space-only, or hybrid) and the final SKR are recorded, and the process moves on to the next round.
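The graph step can be sketched compactly in Python, under these assumptions:

- Per-pair SKP values are already available; they are hypothetical inputs here.
- For each node pair, the better of the ground and space options is kept. Ties go to ground in this simplification, whereas the text breaks ties with an additional tuple entry.
- Each edge is weighted by $-\ln(\mathrm{SKP})$.

Dijkstra's algorithm then runs with a binary heap from Python's standard `heapq` module. Function names are illustrative, not taken from this work.

```python
import heapq
import math


def hybrid_adjacency(ground_skp, space_skp):
    """Keep the better of the ground/space SKP per node pair and weight it by -ln(SKP)."""
    merged = {}
    for options, kind in ((ground_skp, "ground"), (space_skp, "space")):
        for pair, skp in options.items():
            key = tuple(sorted(pair))
            if skp > merged.get(key, (0.0, None))[0]:  # SKP of 0 is never stored (pruned)
                merged[key] = (skp, kind)              # strict '>' keeps ground on ties
    adjacency = {}
    for (u, v), (skp, kind) in merged.items():
        weight = -math.log(skp)  # summed weights along a path <-> product of SKPs
        adjacency.setdefault(u, []).append((v, weight, kind))
        adjacency.setdefault(v, []).append((u, weight, kind))
    return adjacency


def best_path(adjacency, src, dst):
    """Dijkstra's algorithm; returns (nodes, segment kinds, product of SKPs) or None."""
    dist = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    done = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue  # stale heap entry
        done.add(u)
        if u == dst:
            break
        for v, w, kind in adjacency.get(u, []):
            if d + w < dist.get(v, math.inf):
                dist[v] = d + w
                prev[v] = (u, kind)
                heapq.heappush(heap, (d + w, v))
    if dst not in dist:
        return None  # no usable path: a "fail"
    nodes, kinds, node = [dst], [], dst
    while node != src:
        node, kind = prev[node]
        nodes.append(node)
        kinds.append(kind)
    return nodes[::-1], kinds[::-1], math.exp(-dist[dst])


def classify(result):
    """Label a path as fail, ground-only, space-only, or hybrid."""
    if result is None:
        return "fail"
    kinds = set(result[1])
    if kinds <= {"ground"}:
        return "ground-only"
    if kinds == {"space"}:
        return "space-only"
    return "hybrid"


if __name__ == "__main__":
    ground = {("A", "B"): 0.2, ("B", "C"): 0.2, ("A", "C"): 0.001}
    space = {("C", "A"): 0.05}
    result = best_path(hybrid_adjacency(ground, space), "A", "C")
    print(result, classify(result))
```

In the usage example, the direct satellite link (SKP 0.05) beats the two-hop ground route (0.2 × 0.2 = 0.04). The path is therefore labeled space-only.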

The described procedure has its own limitations. First, the weight estimated by Dijkstra's algorithm at the end of each round does not represent the actual achievable SKR. The sum of the weights along a given path corresponds to the product of the individual SKPs achieved by each segment of the path. This product would be a meaningful value for the probability of entanglement generation, since probabilities can be multiplied. It does not, however, necessarily equal the achievable SKR across a multi-segment path, because the key fraction depends nonlinearly on the end-to-end Werner parameter. To avoid edge cases that misrepresent the quality of an ARC, each edge carries a tuple containing the SKP, the entanglement generation probability, and the Werner parameter. The latter is used to break ties between the ground and space segments when choosing the edge for the final graph. At the end of a round, the end-to-end SKR is calculated from the total entanglement generation probability across the ARC (Eq. 5) and the total Werner parameter across the ARC:

$$W_{\mathrm{ARC}} = \prod_{i=1}^{n} W_i. \tag{16}$$
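The gap between the path weight and the true end-to-end key can be illustrated numerically. This sketch assumes the same key-fraction form as before, $r = 1-(1+f)H_2((1-W)/2)$ clipped at zero, and one $\eta_{\mathrm{BSM}}$ factor per swap. It compares two quantities: the product of per-segment SKPs, which is what the summed Dijkstra weights encode, and the SKP computed from the chained probability and the chained Werner parameter. Multiplying either by $R$ gives a rate. All inputs are hypothetical.

```python
import math


def h2(q):
    """Binary Shannon entropy in bits."""
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -q * math.log2(q) - (1.0 - q) * math.log2(1.0 - q)


def key_fraction(w, f=1.16):
    """Assumed asymptotic key fraction with Q = (1 - W)/2, clipped at zero."""
    return max(0.0, 1.0 - (1.0 + f) * h2((1.0 - w) / 2.0))


def end_to_end_skp(p_segments, w_segments, eta_bsm=0.5, f=1.16):
    """Key per attempt from the chained probability and the chained Werner parameter."""
    p_arc = eta_bsm ** (len(p_segments) - 1) * math.prod(p_segments)
    w_arc = math.prod(w_segments)
    return p_arc * key_fraction(w_arc, f)


def product_of_segment_skps(p_segments, w_segments, f=1.16):
    """What the summed Dijkstra weights encode: the product of per-segment SKPs."""
    return math.prod(p * key_fraction(w, f) for p, w in zip(p_segments, w_segments))


if __name__ == "__main__":
    p, w = [0.5, 0.5], [0.95, 0.95]
    print("product of segment SKPs:", product_of_segment_skps(p, w))
    print("end-to-end SKP:         ", end_to_end_skp(p, w))
```

For these inputs, the proxy overestimates the end-to-end value. The reason is that the key fraction of the chained state falls faster than the product of the per-segment key fractions, which is why the final SKR is recomputed at the end of each round.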

Another constraint concerns computational efficiency. Dijkstra's algorithm converges quickly to the final solution, but building the hybrid graph for each time window is a bottleneck. The fiber channel, unlike the free-space channel, does not change significantly over time, so the ground connections can be computed once and stored a priori. In doing so, one notices that the number of ground-based elementary links that optimally connects two given nodes depends on the set of parameters: functional block efficiencies, Werner parameters, available modes, and total distance. Assuming equal functional block parameters for ground and space segments and normalizing the efficiencies would put more focus on the relative performance of the different architectures. Even so, obtaining the results with realistic physical parameters is paramount. We set

,

,

,

– in keeping with the long-term (∼10-20 years) expectations for the physical platforms of the functional blocks [27]. As shown in the next Section, the algorithm divides the total distance in the network of Figure 2 into several concatenated elementary links of ∼150 km each, which increases the overall rate.
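The precomputation of ground routes amounts to choosing how many equal segments to use for a given distance. The sketch below brute-forces the number of ground segments $n$ that maximizes $\eta_{\mathrm{BSM}}^{n-1}P_{\mathrm{EL}}(d/n)^n$. It reuses the multiplexed elementary-link model assumed earlier, with hypothetical efficiencies, so the optimal segment length it finds need not match the ∼150 km reported here.

```python
import math

# Hypothetical parameters (not the values used in this work).
ETA_S, ETA_M, ETA_BSM = 0.9, 0.9, 0.5
MODES = 1000
ALPHA_PER_KM = 0.2 * math.log(10) / 10  # 0.2 dB/km as a natural-log coefficient


def p_el_ground(segment_km):
    """Multiplexed ground EL probability; each fiber arm is half the segment."""
    p = ETA_S**2 * ETA_M**2 * ETA_BSM * math.exp(-ALPHA_PER_KM * segment_km)
    return -math.expm1(MODES * math.log1p(-p))  # 1 - (1 - p)**M


def p_chain(total_km, n):
    """n equal ground segments joined by n - 1 swaps."""
    return ETA_BSM ** (n - 1) * p_el_ground(total_km / n) ** n


def optimal_segments(total_km, n_max=50):
    """Brute-force search for the number of segments maximising the chain probability."""
    return max(range(1, n_max + 1), key=lambda n: p_chain(total_km, n))


if __name__ == "__main__":
    for total in (100.0, 300.0, 600.0, 1000.0):
        n = optimal_segments(total)
        print(f"{total:6.0f} km -> {n} segment(s) of {total / n:.0f} km, "
              f"P = {p_chain(total, n):.3e}")
```

A brute-force scan is adequate here because the search space is small and the ground table only has to be built once.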

4. Results

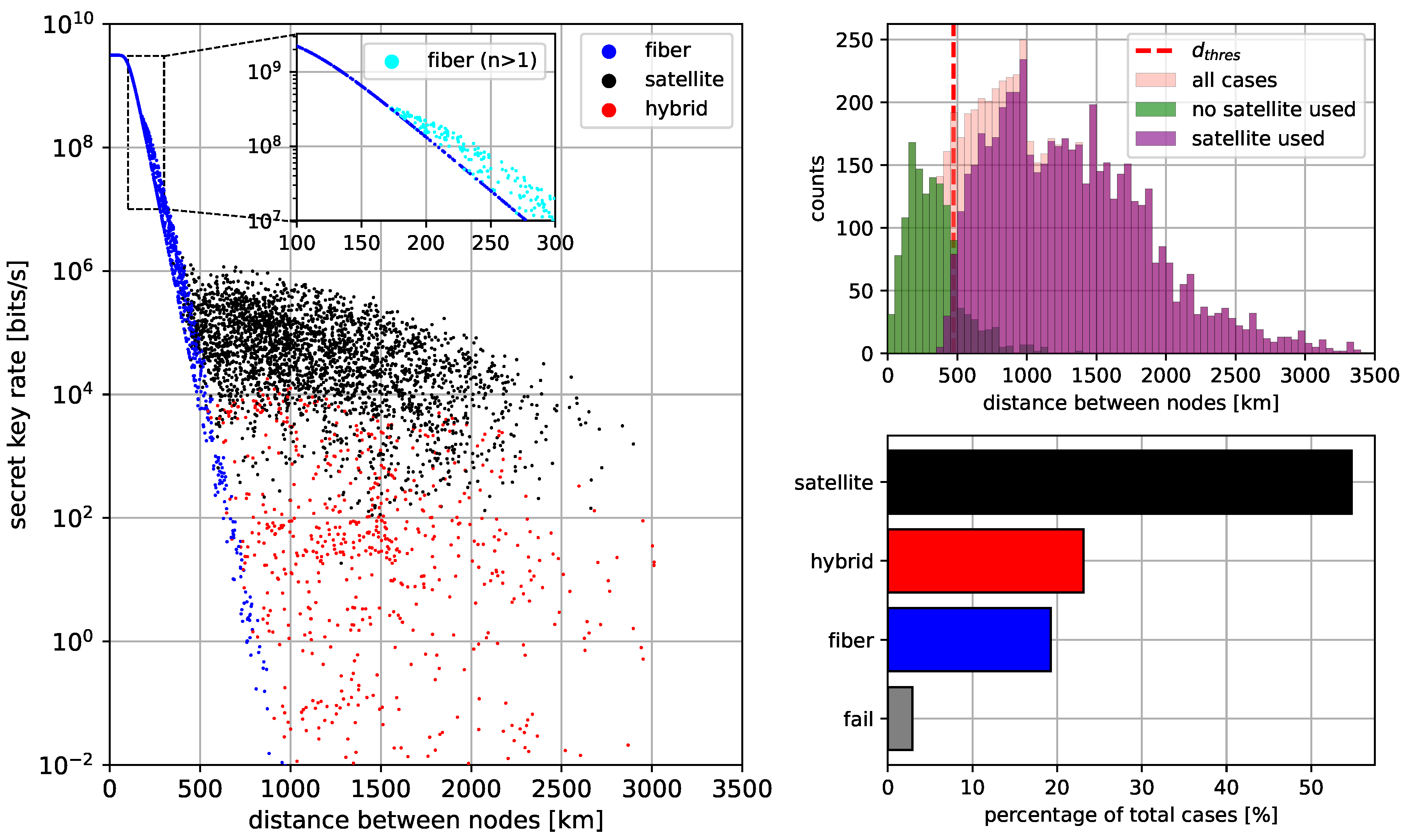

We start by evaluating the network of Figure 2 assuming a fixed satellite positioned 700 km above Zurich, so that the satellite is in constant line of sight for most pairs of ground stations. We further assume night-time operation only. This decouples the performance impact of the space segment from issues associated with managing the satellite. Figure 4 presents the results for all unique pairs of end nodes, evaluated according to the protocol described in Section 3. They are shown in a scatter plot on the left-hand side and colour-coded as follows: blue represents ground-only, black represents space-only, and red represents hybrid. In some cases no key material can be extracted from the link (fail). These cases do not appear in the scatter plot; instead, the bottom right panel shows the total number of each individual channel choice.

The choice of node placement itself introduces a bias in the average distance between QIPs, since metropolitan links (shorter than 100 km) are not included in the network of Figure 2. This choice follows from the observation, clear from Eqs. 1 and 3, that satellites are unlikely to benefit short-range quantum networks. A comparison at short distances would therefore be fruitless. Finally, Table 1 details the parameters used in the simulation. Solar radiation, cloud cover, visibility, and city coordinate data are scraped from an open-source weather API [43].

The results depicted in Figure 4 show distinct regimes in the fixed-satellite scenario. In the first, for end nodes more than 150 km apart, multiple ground segments are chosen instead of a single segment, as expected from Eq. 1 and the parameters detailed in the previous Section. The second is the switch from ground preference to space preference, which happens at around 500 km and is further highlighted in the histogram in the top right corner. To determine the threshold distance $d_{\mathrm{th}}$ of this regime switch, the histograms of choices with and without satellites are each fitted to a log-normal distribution. The intersection point of the fitted curves is then calculated and marked by the dashed red vertical line. The clear preference for space segments beyond $d_{\mathrm{th}}$ is noteworthy: the preference itself is expected, but identifying a practical regime switch can be significant. Also noteworthy is the large spread of the black dots, which reflects the dynamic nature of the weather conditions: links of similar length can perform very differently depending on the weather. Finally, hybrid solutions are frequent. This indicates that the ability to switch between space and ground segments is a valuable feature of the future quantum internet, since even long-distance ARCs benefit from having both available.
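The threshold estimate can be sketched with the standard library alone. This illustration assumes that each histogram is represented by its raw distance samples and fitted by maximum likelihood: $\mu$ and $\sigma$ are the mean and population standard deviation of the log-distances. It also assumes that each fitted pdf is scaled by its sample count so that the curves follow the histogram heights. The crossing is then located by bisection between the two fitted medians. Function names and the sample data are hypothetical.

```python
import math
from statistics import fmean, pstdev


def fit_lognormal(samples):
    """Maximum-likelihood (mu, sigma) of a log-normal fit."""
    logs = [math.log(x) for x in samples]
    return fmean(logs), pstdev(logs)


def lognormal_pdf(x, mu, sigma):
    return (math.exp(-((math.log(x) - mu) ** 2) / (2 * sigma**2))
            / (x * sigma * math.sqrt(2 * math.pi)))


def threshold_distance(ground_km, space_km, iters=200):
    """Crossing of the count-scaled log-normal fits, searched between the two medians."""
    mu_g, s_g = fit_lognormal(ground_km)
    mu_s, s_s = fit_lognormal(space_km)

    def diff(x):
        return (len(ground_km) * lognormal_pdf(x, mu_g, s_g)
                - len(space_km) * lognormal_pdf(x, mu_s, s_s))

    lo, hi = math.exp(mu_g), math.exp(mu_s)  # medians of the two fits
    if diff(lo) * diff(hi) > 0:
        raise ValueError("fitted curves do not cross between the two medians")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if diff(lo) * diff(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    ground_choices = [120.0, 180.0, 250.0, 300.0, 380.0, 450.0]  # hypothetical distances
    space_choices = [420.0, 600.0, 800.0, 1100.0, 1500.0, 2000.0]
    print(f"d_th ~ {threshold_distance(ground_choices, space_choices):.0f} km")
```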

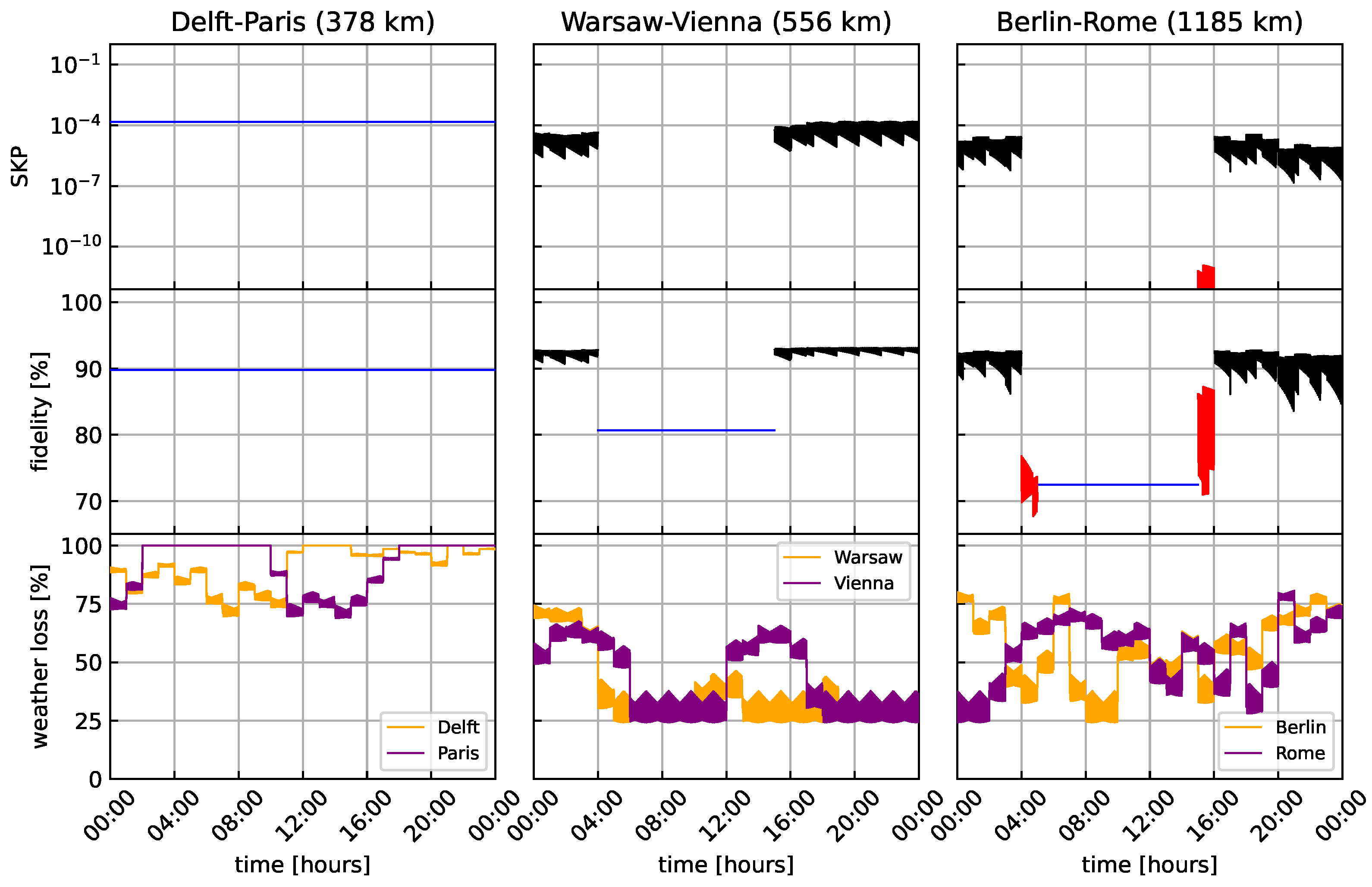

Moving on from the ideal case where the satellite and weather conditions are static requires taking into account the dynamic variations of the free-space optical channel. To do so, the procedure detailed in

Section 3 can be employed to evaluate the performance of the network over time. Unfortunately, combining the dynamics of each different unique combination of end node couples into a single figure is not straightforward. Therefore, we select three combinations of end nodes, expressing different length regimes, and evaluate its performance with a minute accuracy. Here, we also evaluate different satellite constellations of up to 30 satellites in a synchronous polar orbit. The results, presented in

Figure 5, uncover the complex interplay between end-node distances and connection choices given the satellite availability (set to 25), the end-to-end fidelity – heavily influenced by the stray light –, and the weather conditions. The end-to-end fidelity is calculated taking into account spectral, temporal, and spatial filters available at the ground stations. The spectral and temporal filters are designed based on the ARC rate

GHz, so the stray light radiance is spectrally filtered accordingly, and the CAR, calculated following Eq.

9, uses a detection window inversely proportional to the rate. The spatial filter performance is calculated based on a 50

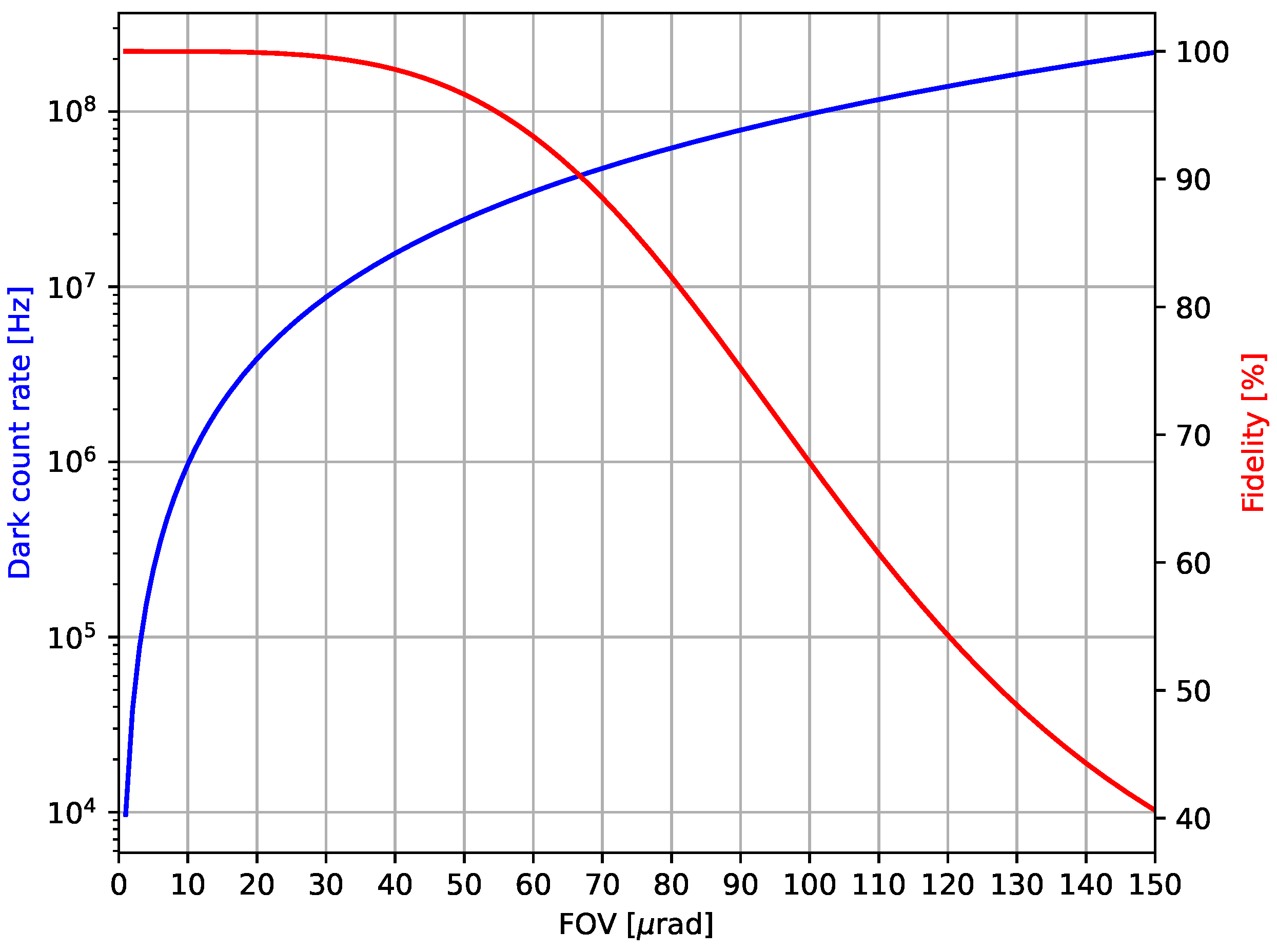

field-of-view (FOV), typical for modern satellite and ground station telescopes, and is strongly dependent on the achievable pointing accuracy. As the results of

Figure 6 demonstrate, improving the pointing accuracy and further filtering the signal spatially has a two-pronged effect: although it reduces the amount of photonic qubits that are coupled into the ground stations, it also significantly reduces the effect of stray light, and is an important step towards enabling day-time operation. For the results described here, the maximum count rate of the detector is assumed to be in the GHz regime (a parameter achievable in the long-term scenario), and an FOV of 50

rad is chosen.
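How the filters act on stray light follows from the stray-photon rate expression given in Section 2 (radiance × aperture × solid angle × bandwidth, divided by the photon energy). The sketch below assumes a circular FOV with solid angle $2\pi(1-\cos(\mathrm{FOV}/2))$. It also assumes a spectral bandwidth matched to the ARC rate, $\Delta\lambda = \lambda^2 R/c$, and uses the $1/R$ detection window as the time bin for $p_n$. The sky radiance, aperture, and wavelength are placeholders, not measured or paper values.

```python
import math

H = 6.62607015e-34   # Planck constant (J s)
C = 299_792_458.0    # speed of light (m/s)


def solid_angle_sr(full_fov_rad):
    """Solid angle of a circular FOV: 2*pi*(1 - cos(fov/2)) = 4*pi*sin^2(fov/4)."""
    return 4.0 * math.pi * math.sin(full_fov_rad / 4.0) ** 2


def filters_from_rate(rate_hz, wavelength_m):
    """Assumed filter design: bandwidth ~ R (in frequency), detection window ~ 1/R."""
    bandwidth_m = wavelength_m**2 * rate_hz / C
    return bandwidth_m, 1.0 / rate_hz


def stray_photon_rate(radiance_w_m3_sr, aperture_m2, full_fov_rad, bandwidth_m, wavelength_m):
    """N = L_lambda * A * Omega * d_lambda / (h c / lambda), in photons per second."""
    photon_energy_j = H * C / wavelength_m
    return (radiance_w_m3_sr * aperture_m2 * solid_angle_sr(full_fov_rad)
            * bandwidth_m / photon_energy_j)


if __name__ == "__main__":
    wavelength = 1550e-9                    # hypothetical operating wavelength
    bandwidth, window = filters_from_rate(1e9, wavelength)
    sky_radiance = 1e6                      # placeholder, W m^-3 sr^-1 (1 W m^-2 sr^-1 um^-1)
    aperture = math.pi * 0.5**2             # 1 m diameter receiver (hypothetical)
    for fov in (200e-6, 100e-6, 50e-6):
        n_stray = stray_photon_rate(sky_radiance, aperture, fov, bandwidth, wavelength)
        print(f"FOV {fov * 1e6:4.0f} urad: N = {n_stray:.3e} /s, p_n = {n_stray * window:.3e}")
```

Halving the FOV cuts the stray rate by about a factor of four. Raising the ARC rate narrows the detection window but, under this filter assumption, widens the spectral filter, so the two effects partly offset each other.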

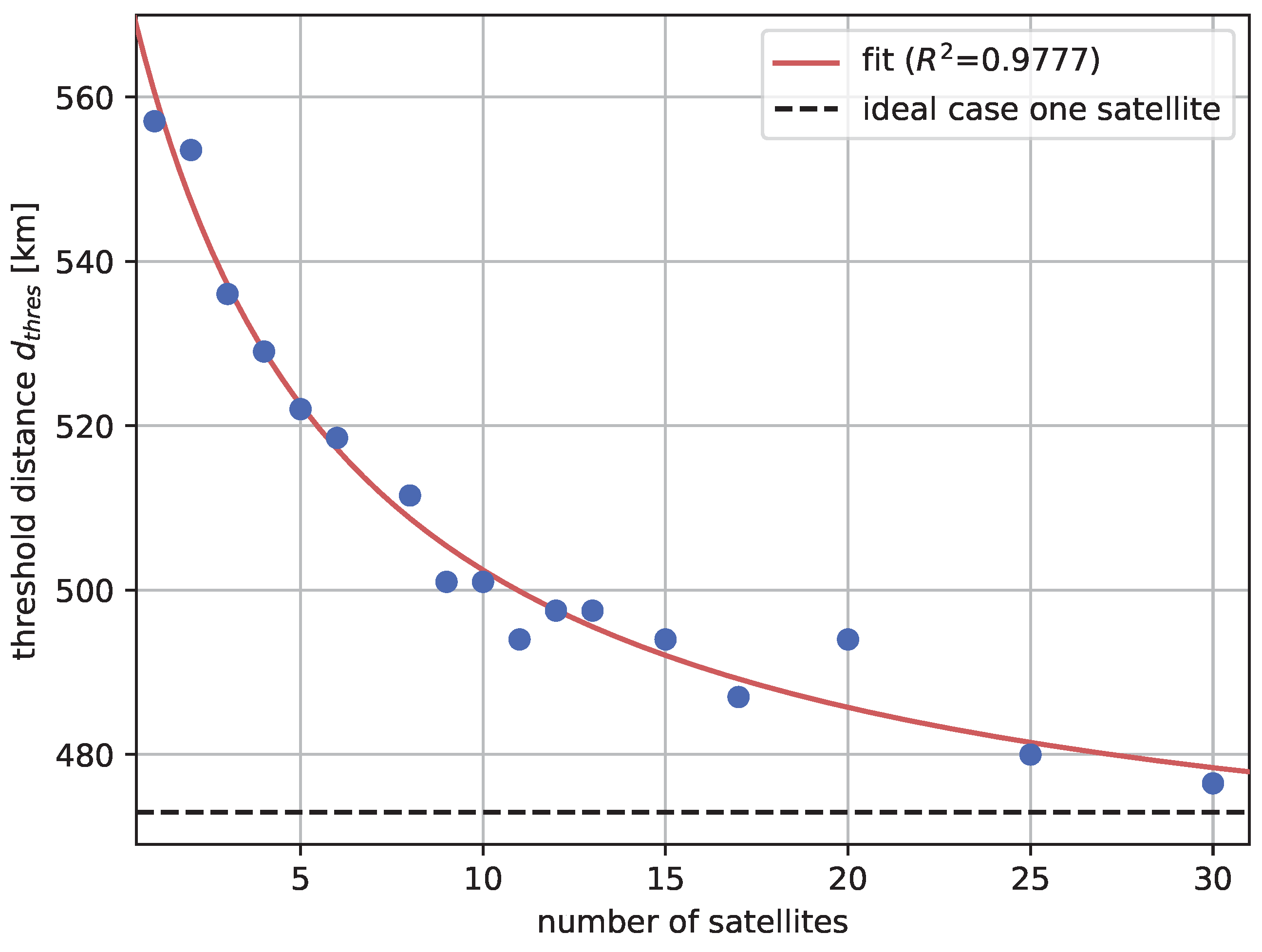

The figures make clear that the connection choices change significantly over a day, so realistic performance cannot be inferred solely from the idealized analysis of Figure 4. In particular, one may ask how the threshold distance $d_{\mathrm{th}}$ changes over a longer time frame, such as the full day considered in Figure 5. Fortunately, the chosen figure of merit, key generation, yields a quantity that can be accumulated, for instance the key material extracted over a day. Combining this new performance parameter with the $d_{\mathrm{th}}$ analysis makes it possible to analyze every unique end-node pair of the proposed network, including the dynamic constraints. Furthermore, the impact of a satellite constellation can be extracted and then related to the total deployment cost of the network's space segment. Figure 7 depicts the values of $d_{\mathrm{th}}$. It indicates that a regime switch similar to that identified in Figure 4 (460 km) can be achieved with around 20 available satellites.
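Key material accumulates over time, so the daily figure of merit can be sketched as a sum of per-minute SKRs. This snippet assumes, for illustration, that in each minute the network uses the larger of two options: the best ground-only SKR, or the SKR through any satellite in view. It ignores hybrid paths. The visibility model in the usage example is a toy with staggered phases, not an orbital propagation, and all rates are hypothetical.

```python
def daily_key_bits(ground_skr, satellite_skr_by_minute, minute_s=60.0):
    """Sum the key (bits) over one-minute windows, using the best option in each window.

    ground_skr: per-minute SKR (bit/s) of the best ground-only path (hypothetical input).
    satellite_skr_by_minute: per-minute list of SKRs through each satellite in view.
    """
    if len(ground_skr) != len(satellite_skr_by_minute):
        raise ValueError("both inputs must cover the same minutes")
    total = 0.0
    for g, sats in zip(ground_skr, satellite_skr_by_minute):
        total += max([g, *sats]) * minute_s
    return total


if __name__ == "__main__":
    minutes = 24 * 60
    ground = [0.5] * minutes  # hypothetical ground-only SKR, bit/s
    for n_sats in (0, 10, 20, 30):
        # Toy visibility: satellite k is in view for 10 of every 100 minutes,
        # with staggered phases; this is not an orbital propagation.
        in_view = [[5.0] * sum(1 for k in range(n_sats) if (m + 7 * k) % 100 < 10)
                   for m in range(minutes)]
        print(n_sats, "satellites:", daily_key_bits(ground, in_view), "bits/day")
```

Relating the daily key to the number of satellites in this way is what allows a constellation size to be weighed against its deployment cost.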