Submitted:

27 February 2024

Posted:

27 February 2024

Abstract

Keywords:

1. Introduction

- Having understood how machine learning-based models work, attackers increasingly rely on asymmetrical methods, uploading images and videos under various pretexts to evade detection, and none of the proposed models can single-handedly be effective against such attacks.

- Very small changes to a blacklisted Uniform Resource Locator (URL) cause the blacklist/whitelist phishing detection method to fail. The absence of a worldwide centralized database of whitelisted or blacklisted URLs makes this method even more vulnerable: if company X blacklists a phishing URL on its internal server, the attacker can still use it successfully against company Y.

- Machine learning phishing detection methods that rely on features such as URL, webpage content, website traffic, search engine, WHOIS record, and Page Rank have their own vulnerabilities. First, such a classifier will misclassify a phishing URL hosted on a hacked or compromised server as benign, leading to false negatives. Second, using domain age as a training feature leads to a higher false positive rate, because the URL of a newly registered legitimate company website will be misclassified: the domain name was recently registered, the page rank is zero, and the traffic is low. Third, the values of those features are obtained from third-party websites, which is another concern: what happens if the third-party website is experiencing downtime?

- The visual similarity-based heuristic method compares the pre-stored signatures of an old website, such as images, font styles, page layout, and screenshots, with those of the new website, and it generally has difficulty detecting anomalies in a newly hosted phishing site.

- The majority of existing machine learning models are trained on textual features of the Uniform Resource Locator (URL), such as "#", ".", Internet Protocol address, URL length, and domain levels. This does not help, because any phisher or attacker with a little knowledge of web technologies can create what we call a "friendly URL", whatever the programming language (Java, C#, Python, PHP) or framework adopted, to avoid all those features. With a friendly URL, such models are bound to misclassify, leading to an increase in the false negative rate.
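The blacklist and friendly-URL points above can be illustrated with a minimal sketch. The blacklist entry, the example URLs, and the particular feature set are hypothetical and chosen only for illustration; they are not the data or features used in this work.

```python
from urllib.parse import urlparse

# Hypothetical blacklist entry; a real deployment would hold many URLs.
BLACKLIST = {"http://secure-login.example.com/verify"}


def is_blacklisted(url: str) -> bool:
    """Exact-match lookup, as performed by a naive blacklist."""
    return url in BLACKLIST


def lexical_features(url: str) -> dict:
    """A few textual URL features of the kind commonly used for training."""
    host = urlparse(url).hostname or ""
    return {
        "length": len(url),
        "dots": url.count("."),
        "hashes": url.count("#"),
        "has_ip_host": host.replace(".", "").isdigit(),
        "domain_levels": len(host.split(".")) if host else 0,
    }


original = "http://secure-login.example.com/verify"
variant = "http://secure-login.example.com/verify?x=1"  # minute change
noisy = "http://192.168.10.5/a.b.c/login.php#id=1.2.3"   # "classic" phishing look
friendly = "http://example.org/account/login"            # friendly URL

print(is_blacklisted(original), is_blacklisted(variant))
print(lexical_features(noisy))
print(lexical_features(friendly))
```

The one-parameter variant is no longer matched by the exact lookup, and the friendly URL carries none of the suspicious markers (IP host, "#", many dots) that a purely lexical model would look for.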

2. Related Work

2.1. Natural Language Processing (NLP)

2.2. Long Short-Term Memory (LSTM)

3. Experimental Setup

3.1. Dataset

3.2. Settings

- Layer 1 (URL-Based Training): We performed traditional machine learning training on the first layer using the Mendeley phishing dataset. Out of 87 possible features, we used chi2 from the sklearn feature selection library to select the best 19 features, having set the hyper-parameter k-value to 7 for optimal result. The dataset was split so that 80% was used for training and the remaining 20% for validation tests. We chose random forest because of its suitability for URL-based phishing detection relative to other classifiers [26,27,28,29,30,31]. During iteration, we set both the depth and the random state to several values in search of the optimal result, but observed only a negligible change in accuracy up to a depth of 39. With depth > 39, the accuracy remained constant until we increased the random state of the tree to 1, after which we observed a small change. We finally settled on setting the random state to 0 so that each tree remains the same each time it is generated.

- Layer 2 (Image Processing): In this layer, the Hypertext Markup Language (HTML) of the actual phishing webpage is web-scraped behind the scenes, without any actual navigation, for security purposes. Scraping the HTML content in this way protects the server and the network from potential drive-by attacks that might originate from the phishing site. All HTML markup syntax was removed from the extracted HTML with regular expressions (regex), as we needed only the content between the opening and closing body tags, which is the section served by the web server to potential victims on a phishing website. This step securely brings whatever content (text, videos, or images) would be served to a potential victim into the framework for a series of processing steps, which ensures that such content cannot evade detection.
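The Layer 2 step can be sketched roughly as follows, using only the Python standard library. This is an illustration under our own assumptions, not the exact implementation: the function names, the regular expressions, the removal of script and style blocks, and the choice to keep `src`/`href` values (so that media paths survive the tag stripping) are all hypothetical.

```python
import html
import re
from urllib.request import Request, urlopen

BODY_RE = re.compile(r"<body\b[^>]*>(.*?)</body\s*>", re.IGNORECASE | re.DOTALL)
SCRIPT_STYLE_RE = re.compile(
    r"<(script|style)\b[^>]*>.*?</\1\s*>", re.IGNORECASE | re.DOTALL
)
TAG_RE = re.compile(r"<[^>]+>")
LINK_ATTR_RE = re.compile(r"""\b(?:src|href)\s*=\s*["']([^"']+)["']""", re.IGNORECASE)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download the raw HTML over HTTP; nothing is rendered or executed."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def _tag_to_text(match: re.Match) -> str:
    """Replace a tag by the src/href values it carries (assumption)."""
    return " " + " ".join(LINK_ATTR_RE.findall(match.group(0))) + " "


def body_text(page_html: str) -> str:
    """Keep only what sits between <body> and </body>, without markup."""
    match = BODY_RE.search(page_html)
    body = match.group(1) if match else page_html
    body = SCRIPT_STYLE_RE.sub(" ", body)   # drop inline scripts and styles
    body = TAG_RE.sub(_tag_to_text, body)   # strip the remaining tag syntax
    return " ".join(html.unescape(body).split())


sample = (
    '<html><head><title>t</title></head><body class="x">'
    "<h1>Verify your account</h1>"
    '<video src="http://evil.example/clip.mp4"></video>'
    "<script>alert(1)</script><p>Enter &amp; confirm</p></body></html>"
)
print(body_text(sample))
```

`fetch_html` is shown only to make the "no navigation" idea concrete; in the sketch the page is never opened in a browser, so no script on it runs.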

| max_depth | random_state | accuracy (%) |
|---|---|---|
| 30 | 0 | 90.1 |
| 20 | 0 | 90.0 |
| 10 | 0 | 88.1 |
| 40 | 1 | 88.2 |
| 50 | 1 | 89.8 |
| 60 | 1 | 88.1 |
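The feature selection and 80/20 split described for Layer 1 can be sketched as below. This is a standard-library illustration of chi-squared scoring for non-negative features (class-wise observed feature sums compared against the sums expected from class frequencies), not the sklearn code used in the experiments. The toy data, the function names, and `k=2` are hypothetical.

```python
import random


def chi2_scores(X, y):
    """Chi-squared statistic of each non-negative feature against the labels."""
    n, d = len(X), len(X[0])
    classes = sorted(set(y))
    totals = [sum(row[j] for row in X) for j in range(d)]
    scores = []
    for j in range(d):
        score = 0.0
        for c in classes:
            observed = sum(row[j] for row, label in zip(X, y) if label == c)
            expected = totals[j] * (y.count(c) / n)
            if expected > 0:
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores


def select_k_best(X, y, k):
    """Return the indices of the k highest-scoring features and the reduced data."""
    scores = chi2_scores(X, y)
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    keep = sorted(ranked[:k])
    return keep, [[row[j] for j in keep] for row in X]


def train_validation_split(X, y, validation_fraction=0.2, seed=0):
    """Shuffle with a fixed seed, then split into training and validation sets."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    cut = int(round(len(idx) * (1 - validation_fraction)))
    train, val = idx[:cut], idx[cut:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in val], [y[i] for i in val])


# Toy data: feature 0 tracks the label, feature 1 is constant, feature 2 is weak.
X = [[1, 1, 0], [1, 1, 1], [1, 1, 0], [1, 1, 1], [1, 1, 1],
     [0, 1, 1], [0, 1, 0], [0, 1, 1], [0, 1, 0], [0, 1, 0]]
y = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

scores = chi2_scores(X, y)
kept, X_reduced = select_k_best(X, y, k=2)
X_train, y_train, X_val, y_val = train_validation_split(X_reduced, y)
print(scores, kept, len(X_train), len(X_val))
```

In scikit-learn, the selected features would then be passed to a `RandomForestClassifier` with `max_depth` and `random_state` set to the values swept in the table; fixing `random_state` makes the trees reproducible between runs.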

Algorithm 1. LSTM Model Training for Natural Language Processing (NLP) task

- Layer 3 (Speech Synthesis): At the previous layer, the HTML of the potential phishing site was web-scraped behind the scenes, without any actual navigation, for security purposes. The content returned from layer 2 is then iterated through with a "for" loop. The loop goes through every filtered word in the sentence and returns only the list of words with a video extension; the returned list is automatically the full path of those videos on the server where they are hosted.
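The loop described for Layer 3 can be sketched as follows. The list of video extensions, the function name, and the sample text are assumptions made for illustration; the source does not specify which extensions are checked.

```python
# Assumed set of video extensions; the actual list is not given in the text.
VIDEO_EXTENSIONS = (".mp4", ".webm", ".avi", ".mov", ".mkv")


def video_paths(words):
    """Return the words that end in a video extension (i.e. video paths)."""
    paths = []
    for word in words:
        candidate = word.strip("\"'()<>,;")
        # Ignore any query string or fragment when checking the extension.
        stem = candidate.split("?", 1)[0].split("#", 1)[0]
        if stem.lower().endswith(VIDEO_EXTENSIONS):
            paths.append(candidate)
    return paths


text = (
    "Verify your account http://evil.example/clip.mp4 Enter & confirm "
    '"http://evil.example/intro.WEBM?x=1"'
)
found = video_paths(text.split())
print(found)
```

Because the check is applied to whole whitespace-separated words, each match is already the full path of the video on the hosting server, ready to be passed to the next processing step.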

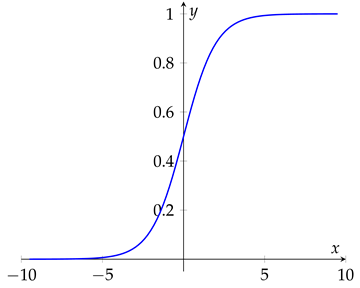

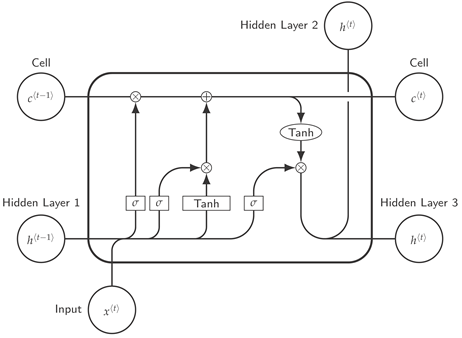

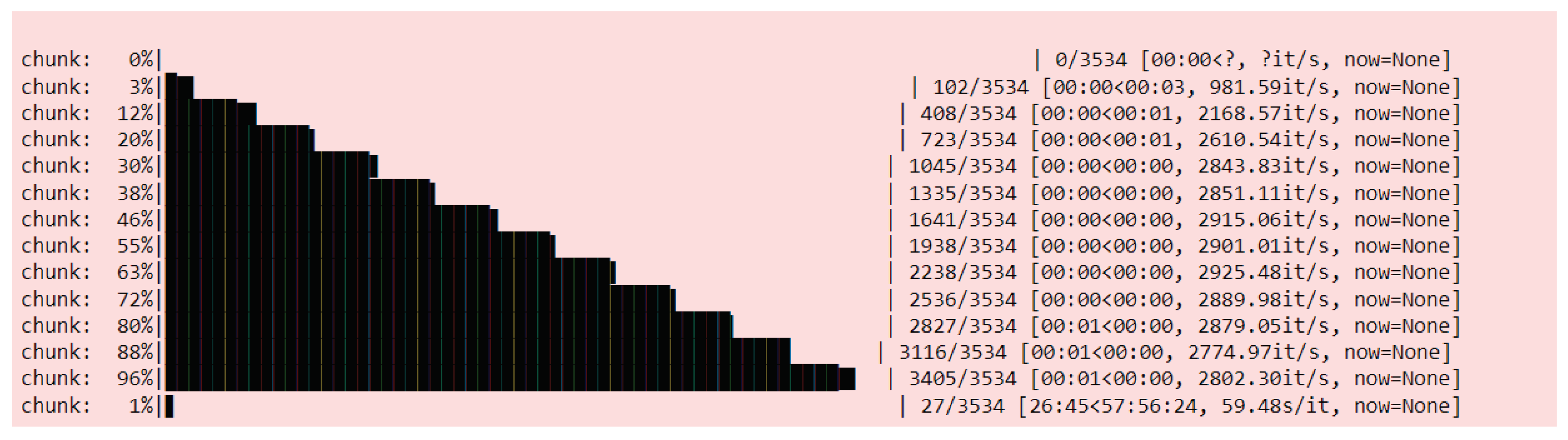

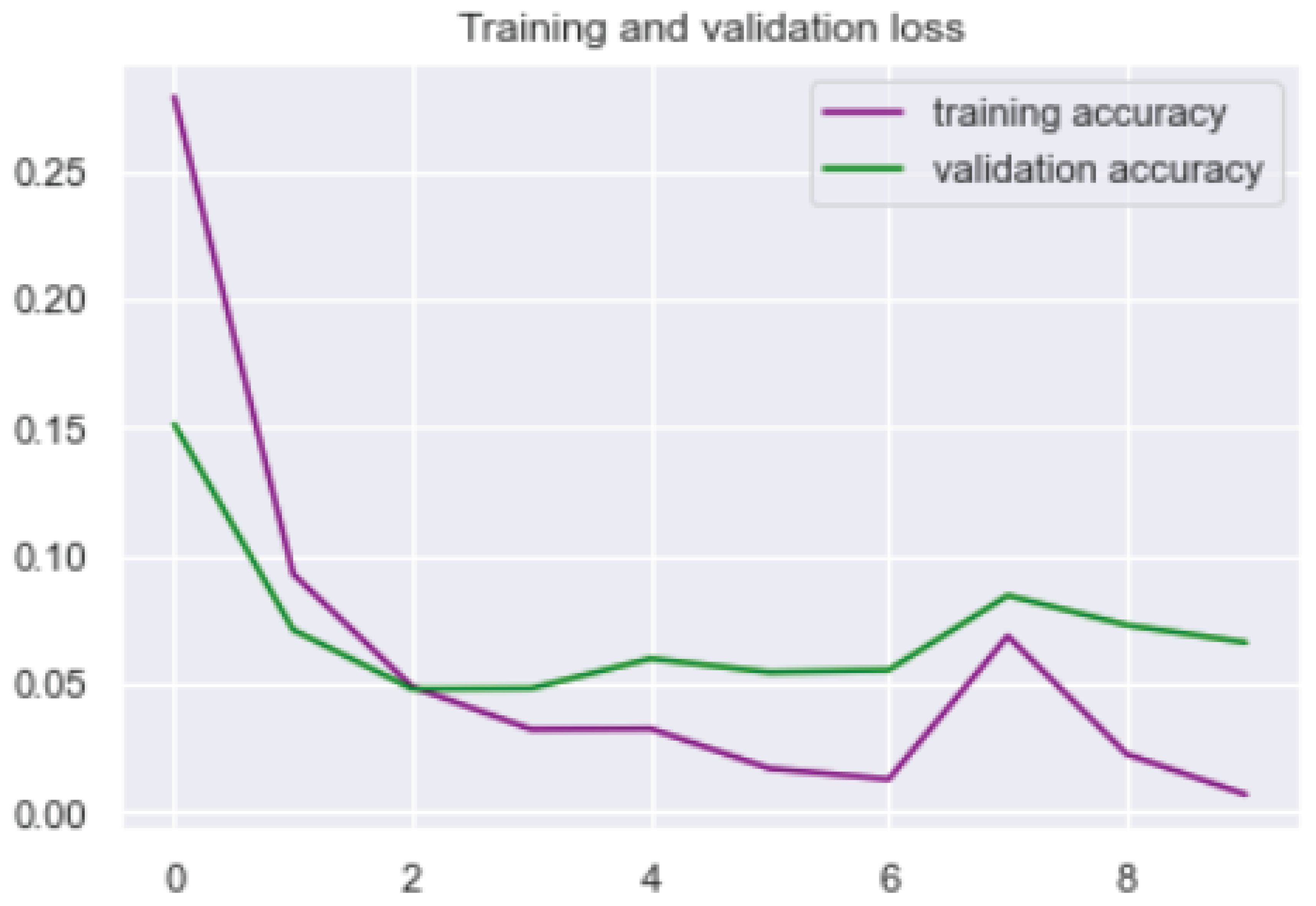

- Layer 4 (Speech Synthesis): We chose the LSTM network because of the effective solution it offers to the vanishing and exploding gradient problems, which are the long-term dependency problems of recurrent neural networks. The cell state in the LSTM network serves as a memory for the network, giving it the ability to remember the past. At layer 4, we have all the processed text content output by each of the previous layers, and there is a need to capture every long-term dependency, short-term dependency, and sequence, which the cell state in the LSTM network can provide, to ensure a more accurate prediction.
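For reference, the role of the cell state can be made explicit with the standard LSTM update equations. The notation below is the common textbook one and is our assumption, not taken from the experiments: $x_t$ is the input at step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, and $\odot$ the element-wise product.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The cell state $c_t$ is updated additively: when the forget gate $f_t$ stays close to 1, information (and its gradient) from earlier steps is carried forward largely unchanged, which is how the network retains long-term dependencies.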

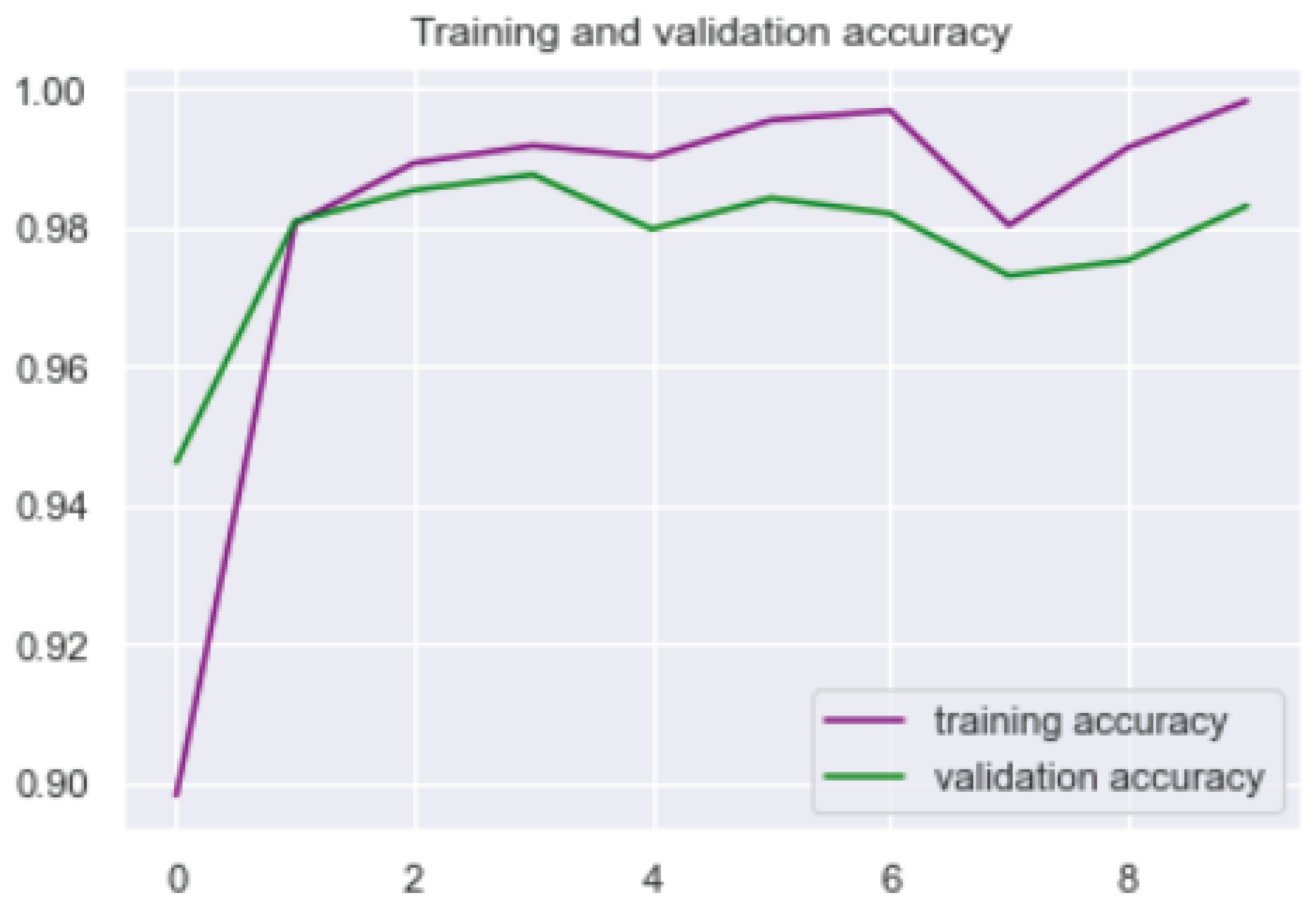

4. Framework Adaptability and Performance Evaluation

5. Conclusions

6. Limitations and Future Research Direction

References

- Sarker, O.; Jayatilaka, A.; Haggag, S.; Liu, C.; Babar, M.A. A Multi-vocal Literature Review on challenges and critical success factors of phishing education, training and awareness. Journal of Systems and Software 2024, 208, 111899.

- Jain, A.K.; Gupta, B. A survey of phishing attack techniques, defence mechanisms and open research challenges. Enterprise Information Systems 2022, 16, 527–565.

- Ige, T.; Marfo, W.; Tonkinson, J.; Adewale, S.; Matti, B.H. Adversarial Sampling for Fairness Testing in Deep Neural Network. arXiv 2023, arXiv:2303.02874.

- Uçar, M.K.; Nour, M.; Sindi, H.; Polat, K.; others. The effect of training and testing process on machine learning in biomedical datasets. Mathematical Problems in Engineering 2020, 2020.

- Alkhalil, Z.; Hewage, C.; Nawaf, L.; Khan, I. Phishing attacks: A recent comprehensive study and a new anatomy. Frontiers in Computer Science 2021, 3, 563060.

- Abdulrahman, L.M.; Ahmed, S.H.; Rashid, Z.N.; Jghef, Y.S.; Ghazi, T.M.; Jader, U.H. Web Phishing Detection Using Web Crawling, Cloud Infrastructure and Deep Learning Framework. Journal of Applied Science and Technology Trends 2023, 4, 54–71.

- Aljofey, A.; Jiang, Q.; Rasool, A.; Chen, H.; Liu, W.; Qu, Q.; Wang, Y. An effective detection approach for phishing websites using URL and HTML features. Scientific Reports 2022, 12, 8842.

- Anitha, J.; Kalaiarasu, M. A new hybrid deep learning-based phishing detection system using MCS-DNN classifier. Neural Computing and Applications 2022, 1–16.

- Ghaleb Al-Mekhlafi, Z.; Abdulkarem Mohammed, B.; Al-Sarem, M.; Saeed, F.; Al-Hadhrami, T.; Alshammari, M.T.; Alreshidi, A.; Sarheed Alshammari, T. Phishing websites detection by using optimized stacking ensemble model. Computer Systems Science and Engineering 2022, 41, 109–125.

- Jain, A.K.; Gupta, B.B. A machine learning based approach for phishing detection using hyperlinks information. Journal of Ambient Intelligence and Humanized Computing 2019, 10, 2015–2028.

- Jain, A.K.; Gupta, B.B. A novel approach to protect against phishing attacks at client side using auto-updated white-list. EURASIP Journal on Information Security 2016, 2016, 1–11.

- Okomayin, A.; Ige, T.; Kolade, A. Data Mining in the Context of Legality, Privacy, and Ethics. 2023.

- Zieni, R.; Massari, L.; Calzarossa, M.C. Phishing or not phishing? A survey on the detection of phishing websites. IEEE Access 2023, 11, 18499–18519.

- Muneer, A.; Ali, R.F.; Al-Sharai, A.A.; Fati, S.M. A Survey on Phishing Emails Detection Techniques. 2021 International Conference on Innovative Computing (ICIC); IEEE, 2021; pp. 1–6.

- Wang, Y. A survey of phishing detection: from an intelligent countermeasures view. 2022 IEEE Conference on Telecommunications, Optics and Computer Science (TOCS); IEEE, 2022; pp. 761–769.

- Adewale, S.; Ige, T.; Matti, B.H. Encoder-Decoder Based Long Short-Term Memory (LSTM) Model for Video Captioning. arXiv 2023, arXiv:2401.02052.

- Yaswanth, P.; Nagaraju, V. Prediction of Phishing Sites in Network using Naive Bayes compared over Random Forest with improved Accuracy. 2023 Eighth International Conference on Science Technology Engineering and Mathematics (ICONSTEM); IEEE, 2023; pp. 1–5.

- Ige, T.; Kiekintveld, C. Performance Comparison and Implementation of Bayesian Variants for Network Intrusion Detection. arXiv 2023, arXiv:2308.11834.

- Karim, A.; Shahroz, M.; Mustofa, K.; Belhaouari, S.B.; Joga, S.R.K. Phishing Detection System Through Hybrid Machine Learning Based on URL. IEEE Access 2023, 11, 36805–36822.

- Ishwarya, R.; Muthumani, S.; PG, S.S.K.; Suriya, S. Seperation of Phishing Emails Using Probabilistic Classifiers. 2023 9th International Conference on Advanced Computing and Communication Systems (ICACCS); IEEE, 2023; Vol. 1, pp. 1676–1679.

- Omari, K. Comparative Study of Machine Learning Algorithms for Phishing Website Detection. International Journal of Advanced Computer Science and Applications 2023, 14.

- Magdacy Jerjes, A.Z.A.; Dawod, A.Y.; Abdulqader, M.F. Detect Malicious Web Pages Using Naive Bayesian Algorithm to Detect Cyber Threats. Wireless Personal Communications 2023, 1–13.

- Nishitha, U.; Kandimalla, R.; Vardhan, R.M.M.; Kumaran, U. Phishing Detection Using Machine Learning Techniques. 2023 3rd Asian Conference on Innovation in Technology (ASIANCON); IEEE, 2023; pp. 1–6.

- Mustafa, T.; Karabatak, M. Feature Selection for Phishing Website by Using Naive Bayes Classifier. 2023 11th International Symposium on Digital Forensics and Security (ISDFS); IEEE, 2023; pp. 1–4.

- Jaya, T.; Kanyaharini, R.; Navaneesh, B. Appropriate Detection of HAM and Spam Emails Using Machine Learning Algorithm. 2023 International Conference on Advances in Computing, Communication and Applied Informatics (ACCAI); IEEE, 2023; pp. 1–5.

- Alazaidah, R.; Al-Shaikh, A.; AL-Mousa, M.; Khafajah, H.; Samara, G.; Alzyoud, M.; Al-Shanableh, N.; Almatarneh, S. Website Phishing Detection Using Machine Learning Techniques. Journal of Statistics Applications & Probability 2024, 13, 119–129.

- Shukla, S.; Misra, M.; Varshney, G. HTTP header based phishing attack detection using machine learning. Transactions on Emerging Telecommunications Technologies 2024, e4872.

- van Geest, R.; Cascavilla, G.; Hulstijn, J.; Zannone, N. The applicability of a hybrid framework for automated phishing detection. Computers & Security 2024, 103736.

- Olukoya, D. Heterogeneous Ensemble Feature Selection and Multilevel Ensemble Approach to Machine Learning Phishing Attack Detection.

- Shaukat, M.W.; Amin, R.; Muslam, M.M.A.; Alshehri, A.H.; Xie, J. A hybrid approach for alluring ads phishing attack detection using machine learning. Sensors 2023, 23, 8070.

- Joshi, K.; Bhatt, C.; Shah, K.; Parmar, D.; Corchado, J.M.; Bruno, A.; Mazzeo, P.L. Machine-learning techniques for predicting phishing attacks in blockchain networks: A comparative study. Algorithms 2023, 16, 366.

- Alsubaei, F.S.; Almazroi, A.A.; Ayub, N. Enhancing Phishing Detection: A Novel Hybrid Deep Learning Framework for Cybercrime Forensics. IEEE Access 2024.

- Jayaraj, R.; Pushpalatha, A.; Sangeetha, K.; Kamaleshwar, T.; Shree, S.U.; Damodaran, D. Intrusion detection based on phishing detection with machine learning. Measurement: Sensors 2024, 31, 101003.

- Zhu, E.; Cheng, K.; Zhang, Z.; Wang, H. PDHF: Effective phishing detection model combining optimal artificial and automatic deep features. Computers & Security 2024, 136, 103561.

- Adebowale, M.A.; Lwin, K.T.; Hossain, M.A. Intelligent phishing detection scheme using deep learning algorithms. Journal of Enterprise Information Management 2023, 36, 747–766.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).