1. Introduction

The worldwide population is growing older, especially in certain regions such as the South of Spain [1]. The development of new tools to support the work of formal caregivers in retirement homes (homes where older people live together to be cared for) in certain monotonous tasks motivates the use of different technologies that usually fall under the term Ambient Assisted Living (AAL). Among these technologies, Socially Assistive Robots (SAR), designed to provide assistance through social interaction [2], have emerged as a promising option, due to their proactivity, autonomy, adaptability and potential acceptability. Moreover, they can act as powerful social facilitators when correctly integrated in shared environments [3,4,5]. However, the successful integration of these technologies in real-world contexts remains one of the main challenges that projects in this research field face. The lack of results based on long-term experimentation in real-world settings and the limited consideration of the user perspective in the early design and evaluation stages, along with difficulties in correctly describing, evaluating and regulating these devices [6], are currently preventing SAR from being widely used. As for evaluation, the performance assessment should consider a social perspective beyond the technical dimension. Moreover, SAR performance should be evaluated in real scenarios and under everyday task conditions. However, approaches that consider long-term evaluation "in the wild", i.e., in real-world settings without the constraints of test-bed or lab scenarios, are still uncommon [6].

This study aims to contribute to the development of socially accepted and efficient SAR. Hence, the methodology to actively involve users in the design process of the SAR, which combines aspects of human-centred design and participatory design approaches, is described in Section 2. Here, the users are both the residents (the older people living in the retirement home) and the formal caregivers taking care of them. Possible use cases, designed from the insights gained in interviews and a focus group with the stakeholders, are enumerated in Section 3. Section 3.2 and Section 4 provide details about the implementation of the first use case with a working platform within a pilot test deployed in the retirement home, which is described in Section 5. The first results of the pilot test are detailed in Section 5.6. In addition, the interface of the robotic platform was improved throughout the design, implementation and roll-out of the pilot test, with feedback from the stakeholders being sought at several stages of the process. The process of interface design and improvement is detailed in Section 6.

Throughout the whole process, the AUSUS evaluation framework, developed in the authors’ previous research [7], was used to detect possible interaction problems with the robotic platform, and stakeholders’ feedback and emerging needs were considered during the continuous and iterative definition of the use case and interface design. The main purpose of the AUSUS evaluation framework is to achieve a holistic and ’in place’ evaluation of Human-Robot Interaction (HRI) for SAR, considering first and foremost the needs of older adults in terms of usability, accessibility, user experience and acceptance criteria.

2. Current Challenges for Socially Assistive Robots

SAR have become the research focus of many recent studies [5,8,9], and in particular have been conceived to support caregivers in retirement homes. SAR are proposed to take over simple and repetitive tasks, leaving more time for the caregiver to provide personalized care. However, despite the existence of commercial social robots since the beginning of the 21st century, they still face limitations in adapting their behaviour to their possible interlocutors. Moreover, the design of SAR deployed in a retirement home should consider the frequently limited interaction capabilities of residents, the design of accessible and natural interfaces, and content adapted to cultural aspects and personal tastes [10].

One possible approach to providing robots with social skills is based on the definition of architectures such as ROCARE (Robotic Coach Architecture for Elder Care) [11], which evaluates how to provide the robot with social skills in a similar way to the development of human social cognition: starting with models of dyadic or one-to-one interaction, moving on to triadic interaction, in which intention is already involved, and eventually reaching collaborative interaction. Other approaches consider usability and accessibility as the key aspects in the process of endowing social robots with interaction capabilities. Thus, they focus on the interface design and consider the naturalness of the interaction as the key feature for the engagement of different users [12]. A well-designed interface should allow users to socialize with, or at least interact with, robots without previous knowledge of or experience with robots. As in everyday interaction, naturalness is usually related to the need for multimodal interfaces that facilitate interaction with users, avoiding accessibility barriers that hinder interaction [13,14].

However, the inclusion of accessibility criteria in the interaction metaphors and interfaces is still uncommon in HRI, even in social robotics. Indeed, in the case of SAR designed as companion robots (e.g., PARO, AIBO, ifbot), whose main goal is the improvement of the emotional state, the evaluation in terms of accessibility and usability requirements is more limited [15]. One of the main reasons for this limitation is the added complexity arising from the additional specifications of SAR in terms of task variety, security, and technological challenges. To the authors’ knowledge, only a few exceptions, such as the CLARC EU Project (ECHORD++ FP7-ICT-601116)¹, have considered accessibility in HRI for a SAR.

Even with the generational narrowing of the digital gap, recent studies still show limitations in terms of the acceptability of current technology. In this sense, there are studies that evaluate design requirements with different stakeholders, as well as the impact and degree of acceptance of new technologies [14,15,16]. In particular, the study of Winkle et al. [17] provides a complete analysis of a mutual shaping approach that combines elements of human-centred design and participatory design of a SAR. As the main outcome, a significant shift in participants’ acceptance was found as a consequence of sharing and shaping the knowledge. Our research follows this same approach, and deepens and puts into practice previous outcomes about the contribution of stakeholders’ involvement in the SAR design in terms of acceptability and adequacy [18], with the aim of obtaining both a pragmatic and a reflexive-methodological conclusion.

Following the principles of user-centred design for a SAR conceived for a retirement home, a key aspect to consider is the set of user interface (UI) requirements, in particular the design and development of interfaces that are accessible and usable. Indeed, as stated in previous studies with older adults, the lack of previous experience with similar technologies, together with natural changes and limitations associated with aging [12], usually entails additional constraints in the interaction with Information and Communication Technologies (ICT). Hence, the robotic platform needs to be designed in an inclusive way, providing an accessible interface that allows all users to interact with the system with the same opportunities. In this paper, the main accessibility guidelines and recommendations in the Human-Computer Interaction (HCI) [19] and Human-Robot Interaction (HRI) [20] fields are followed and specified as system requirements. Moreover, our experience in previous research projects [21], where older adults interacted with robot platforms, is considered in the analysis and design process.

3. Definition of the Use Case

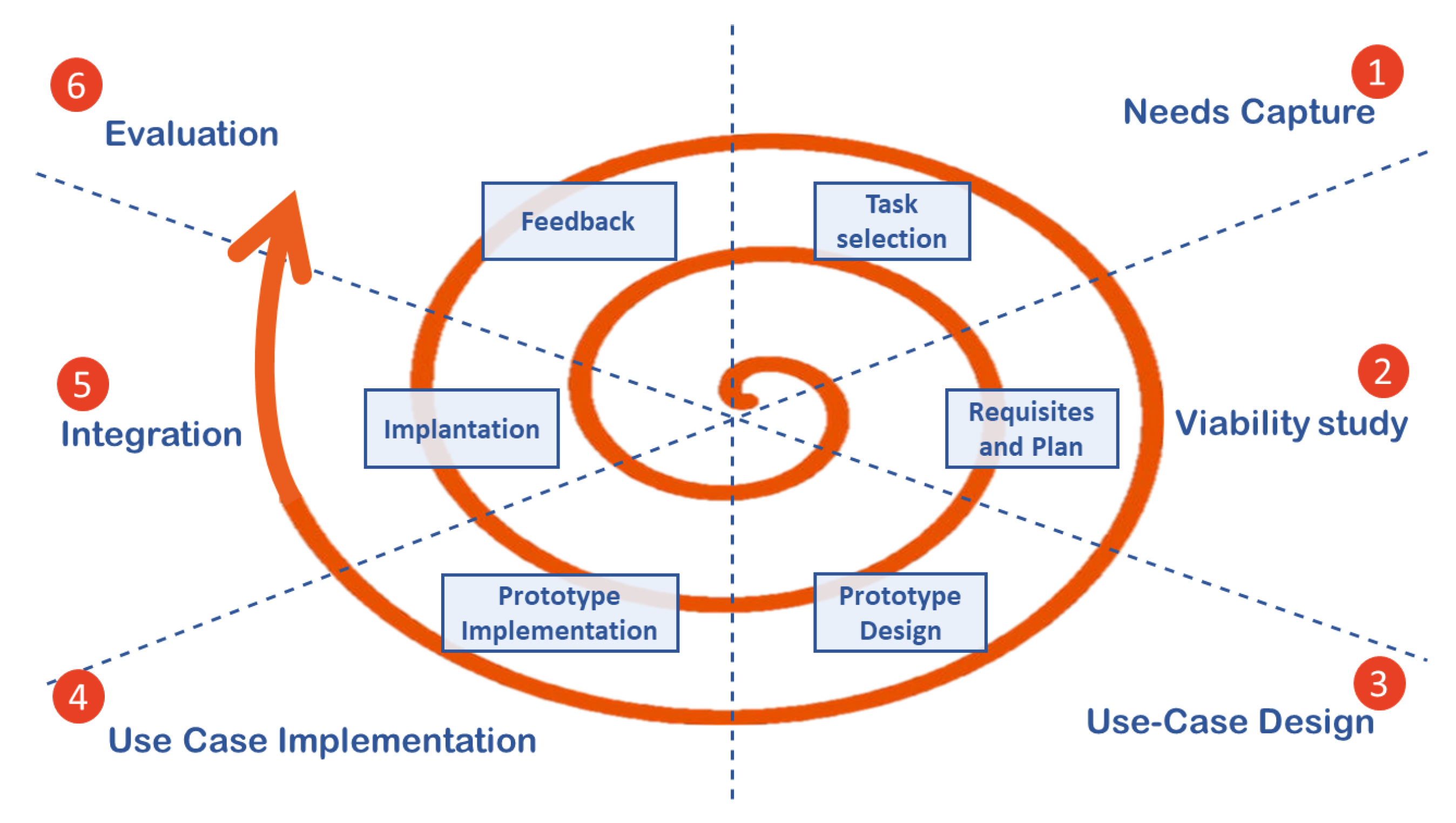

HCI, Software Engineering, Human-Factors Engineering and Assistive and Rehabilitation Technology disciplines propose different guidelines for the design of human-robot interfaces. These guidelines have been considered for the definition of this use case, using an iterative design approach (Figure 1) that is explained in detail in the next subsections.

The particularity and added value of our approach lie in the degree of involvement of the stakeholders and in the focus on understanding and meeting their needs. The principles of the human-centred approach [22,23] and participatory design [24] have been combined, not as a "toolkit" of methods, but to be constantly adapted to the situated and specific context [25] of the retirement home’s organisational environment, the users’ characteristics and the use case scenario. This study details how this approach has been followed during the whole design and evaluation process.

Participants have been classified into three categories depending on their expected level of interaction with the SAR and their role in the AAL. Thus, the primary group consists of residents, healthcare professionals and caregivers working in the retirement home; the secondary group consists of relatives, visitors, healthcare professionals and caregivers (formal and informal) not working in the retirement home; and the tertiary group refers to the other workers (e.g., management staff, external hardware and software providers).

Figure 1. Human-centred Methodology and Life Cycle.

3.1. Capture of Retirement Home Needs and Viability Study

In this phase, the needs of the users at a specific retirement home (Vitalia Teatinos in Málaga, Spain) have been analysed through ethnographic observations and workshops with the primary stakeholders and the engineers. First, needs and technical limitations of the robotic platform (e.g., physical barriers) were analysed by observing the environment and daily life routines in three different rooms. During one of these visits, a participatory workshop using several methods (collaborative mind-mapping, post-it notes and task sorting) allowed a preliminary assessment of some tasks in which a basic robot without arms could be useful. For this workshop, eight primary participants were recruited by the occupational therapist: four men and four women; three residents and five professionals (occupational therapist, physical therapist, psychologist, social worker and nurse). The session lasted 90 minutes and, as a result, the main needs of residents and caregivers working at the retirement home were identified and sorted. The advantage of using a participatory design approach in this case was that the users themselves decided which robotic functionality was most appropriate for the retirement home according to their own needs, thus contributing more actively to the design of the robotic system and increasing the likelihood of the platform being accepted in the retirement home.

The engineering team took this list of tasks and requirements as the end-user input, and analysed the feasibility of properly implementing those tasks within the limitations of the available robotic platform. Some examples of the SAR tasks, sorted according to the primary stakeholders’ priorities, are: (i) postural control; (ii) selection of the menu options for lunch and dinner; (iii) announcer of events at the retirement home. According to the analysis of needs, priorities and viability, the integration of the robot in the retirement home started with the simplest task: the announcer (Section 3.2).

3.2. Design of the First Use Case: Announcer Task

This use case was selected to be implemented first on the basis of (i) its technical simplicity, which would allow designing basic prototype interfaces that could be iteratively improved; and (ii) the active role of the robot as a social entity in the retirement home, as it provides information about the social agenda and activities. Thus, with a long-term evaluation in mind, this task could promote a faster integration of the SAR in the residents’ daily routines. In this use case the robot moves around the room, informing about the different activities or events scheduled for each of the resident groups. Different voices were tested, the first option being the Microsoft Helena Spanish voice. Although the robot was teleoperated for the first tests in the real environment, tasks such as autonomous navigation and people detection were also integrated and tested in controlled environments during the design phase. These autonomous behaviours have been gradually integrated in the real use case as soon as they fulfilled robustness, safety and efficiency requirements. In fact, the robot currently performs the use case autonomously in the retirement home, now that it meets the conception of a "human-friendly robot", that is to say, one in which interaction and safety issues are inseparable [26]. It is no coincidence that the terms “human-friendly robots” or “human-friendly robot design” in the literature [27] are more technology-centred than human-centred. However, in this research, design decisions have been made in accordance with the human-centred approach.

The main specifications that were considered in the announcer’s design were centred around two interfaces.

The operator interface, whose functionalities should include: (i) remote-operation controls that allow the robot to be moved easily around two different rooms; (ii) controls to introduce new events while it is active (weather, recommendations, etc.); (iii) a web interface that automatically updates the agenda every week and allows programming new events.

The interface of the robot with the residents. This interface should meet the following requirements, gathered iteratively from the recommendations of the stakeholders in the participatory design, and also based on the users’ abilities, disabilities and interaction needs according to a user-centred approach: (i) be multimodal, supporting voice, images and text displayed on a screen to inform about the events; (ii) display specific events associated with birthdays or anniversaries; (iii) signal an upcoming announcement with a repetitive horn sound in order to attract attention; (iv) say goodbye and return to the home position after the announcement.

The procedure of the use case consisted of the robot visiting the two selected rooms once a day and announcing the agenda. For that purpose, the robot autonomously moved to the most appropriate position to make the announcement. Some randomization was included in the selection of these positions to avoid mechanical repetition. Moreover, the teleoperator could always choose different positions before and during the experiment, to ensure that everyone present properly understood the announced information regardless of their visual or hearing limitations.
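The randomized choice of announcement positions described above could be sketched as follows. This is only an illustrative sketch, not the implementation used on the robot: the room names, the coordinates and the functions `pick_spot` and `plan_daily_round` are hypothetical, and the rule of avoiding the previous day's spot is an assumption about what "avoiding mechanical repetition" might mean in practice.

```python
import random

# Hypothetical candidate announcement spots per room (illustrative map coordinates).
CANDIDATE_SPOTS = {
    "room_a": [(1.0, 2.0), (3.5, 2.0), (2.0, 4.5)],
    "room_b": [(0.5, 1.0), (4.0, 3.0)],
}


def pick_spot(room, previous=None, rng=random):
    """Pick a random spot in `room`, avoiding the previously used one when possible."""
    options = [spot for spot in CANDIDATE_SPOTS[room] if spot != previous]
    if not options:
        # Only one candidate exists, so repetition cannot be avoided.
        options = CANDIDATE_SPOTS[room]
    return rng.choice(options)


def plan_daily_round(last_spots=None, rng=random):
    """Return one announcement spot per room for today's round."""
    last_spots = last_spots or {}
    return {
        room: pick_spot(room, last_spots.get(room), rng)
        for room in CANDIDATE_SPOTS
    }


if __name__ == "__main__":
    yesterday = plan_daily_round()
    today = plan_daily_round(last_spots=yesterday)
    print(yesterday, today)
```

A teleoperator override, as mentioned in the text, would simply replace the entry for a room in the returned plan before the robot starts moving.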

4. Robotic Platforms and Interfaces

Figure 2 shows one of the two similar robotic platforms, named CLARA [21], developed in the recently concluded CLARC EU Project. The proposed methodology (Section 3), in which users’ feedback leads to constant adjustment, redesign and extension of the functionality and features of the robot, greatly benefits from having these two similar platforms available. Hence, one platform is always deployed in the retirement home, while the other is used to implement and check updates. Moreover, the physical appearance of these robots was defined by end users in participatory meetings organized in the CLARC EU Project. The positive feedback collected from users during that project, and the positive first impressions of users during the pilot tests, led us to keep this external appearance for the robot.

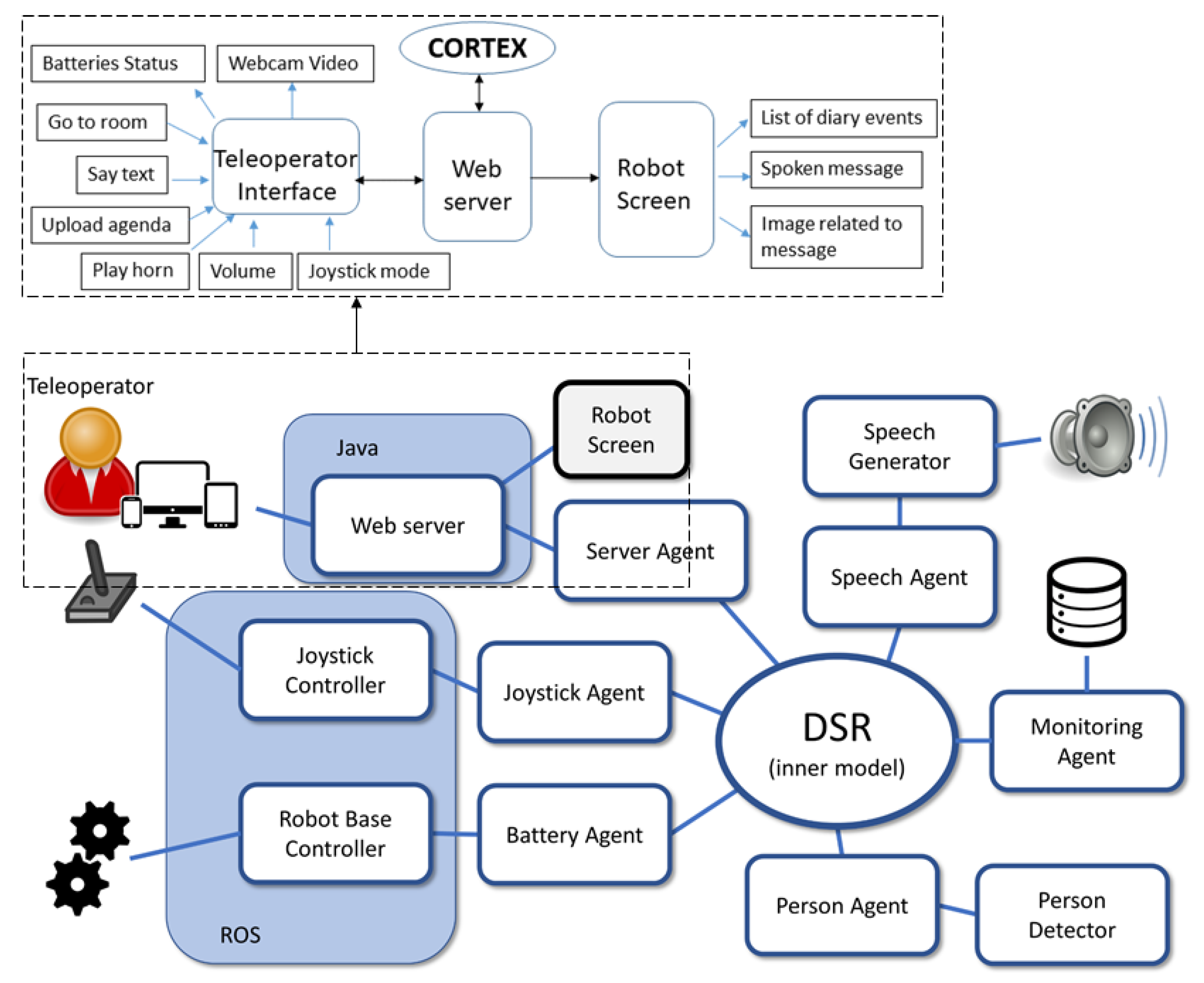

The CLARA robot uses the CORTEX cognitive architecture [28], which makes it easier for the robot to adapt to new scenarios and to incorporate new use cases. Hence, it is possible to use this robotic platform, initially designed to conduct functional, cognitive and motor tests in one-to-one interactions, for a completely new set of tasks without changing its inner software architecture. Figure 3 shows the CORTEX components for the announcer use case, which are in charge of different tasks (monitoring, interfacing, speech generation, battery management, etc.). All of them are connected to the Deep State Representation (DSR) graph. This graph works as the inner representation of the world and as a shared blackboard through which all the modules communicate via the so-called agents. Most of the proposed architecture is programmed using the Robocomp framework [28], although the Robot Operating System (ROS) framework [29] is also used for some components connected via dedicated proxies. All modules rely on the Ice middleware [30] for communication.
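The idea of a shared graph acting as a blackboard for several agents can be illustrated with a toy example. The sketch below is not the CORTEX or DSR API and does not use Robocomp, ROS or Ice; the classes `SharedGraph`, `BatteryAgent` and `SpeechAgent`, the node name `"robot"` and the 20% battery threshold are all hypothetical and chosen only to show the communication pattern.

```python
from collections import defaultdict


class SharedGraph:
    """Toy shared world model: nodes with attributes, plus change subscribers."""

    def __init__(self):
        self.nodes = {}
        self._subscribers = defaultdict(list)

    def subscribe(self, node_id, callback):
        """Register a callback invoked whenever `node_id` is updated."""
        self._subscribers[node_id].append(callback)

    def update(self, node_id, **attrs):
        """Write attributes to a node and notify every subscriber of that node."""
        self.nodes.setdefault(node_id, {}).update(attrs)
        for callback in self._subscribers[node_id]:
            callback(node_id, self.nodes[node_id])


class BatteryAgent:
    """Writes the battery level into the shared graph."""

    def __init__(self, graph):
        self.graph = graph

    def report(self, level):
        self.graph.update("robot", battery=level)


class SpeechAgent:
    """Reacts to changes in the shared graph; never talks to BatteryAgent directly."""

    def __init__(self, graph):
        self.spoken = []
        graph.subscribe("robot", self.on_robot_change)

    def on_robot_change(self, node_id, attrs):
        if attrs.get("battery", 100) < 20:  # assumed threshold, for illustration
            self.spoken.append("I need to recharge.")


if __name__ == "__main__":
    graph = SharedGraph()
    speech = SpeechAgent(graph)
    battery = BatteryAgent(graph)
    battery.report(80)
    battery.report(15)
    print(speech.spoken)
```

The point of the pattern is that agents only read from and write to the shared representation, so a new use case can add or swap agents without touching the existing ones.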

Figure 3. Software architecture for CLARA.

The Teleoperation interface and the Information interface described in Section 3.2 have both been implemented and connected to the CORTEX architecture through a web server. The Teleoperation interface can be displayed on the teleoperator’s computer, tablet or mobile phone. The Information interface is displayed on the touch screen placed on the robot’s torso, and its design and evaluation process is detailed in Section 6.

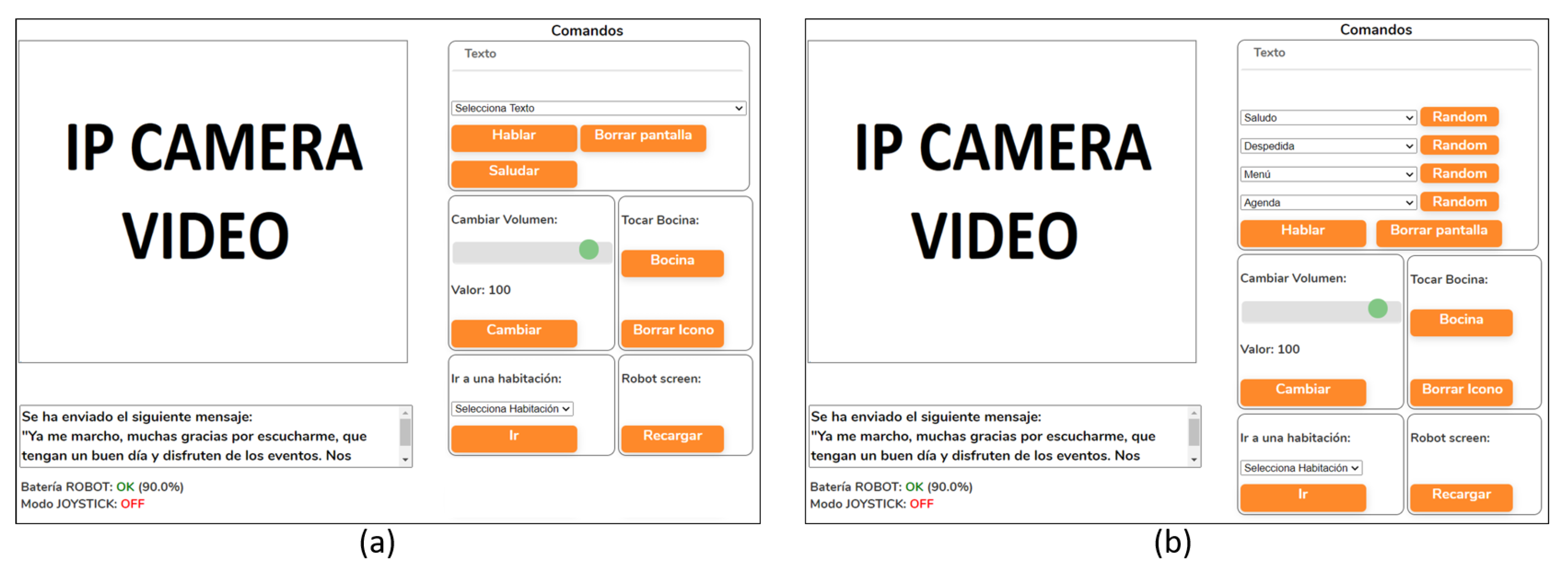

The Teleoperation interface allows a remote operator to control the robot. Its first version (Figure 4a) provided the following options to the operator: (i) to announce a message that can be written by the teleoperator or selected from a list; (ii) to announce a randomly generated message to greet the residents; (iii) to play some specific sounds (horn); (iv) to set the robot’s speaking volume; (v) to go to a mapped location. Additionally, this interface displays a live video stream from a webcam installed on the robot, the battery levels of both the robot and the teleoperation joystick, the selected navigation mode (joystick/autonomous), and a text console with information about the actions executed by the robot. The only update to this interface after the tests (Section 5.6) was the creation of four different topics for both the list of selectable messages and the randomly generated ones: greetings, goodbye, food menu and events agenda (Figure 4b).

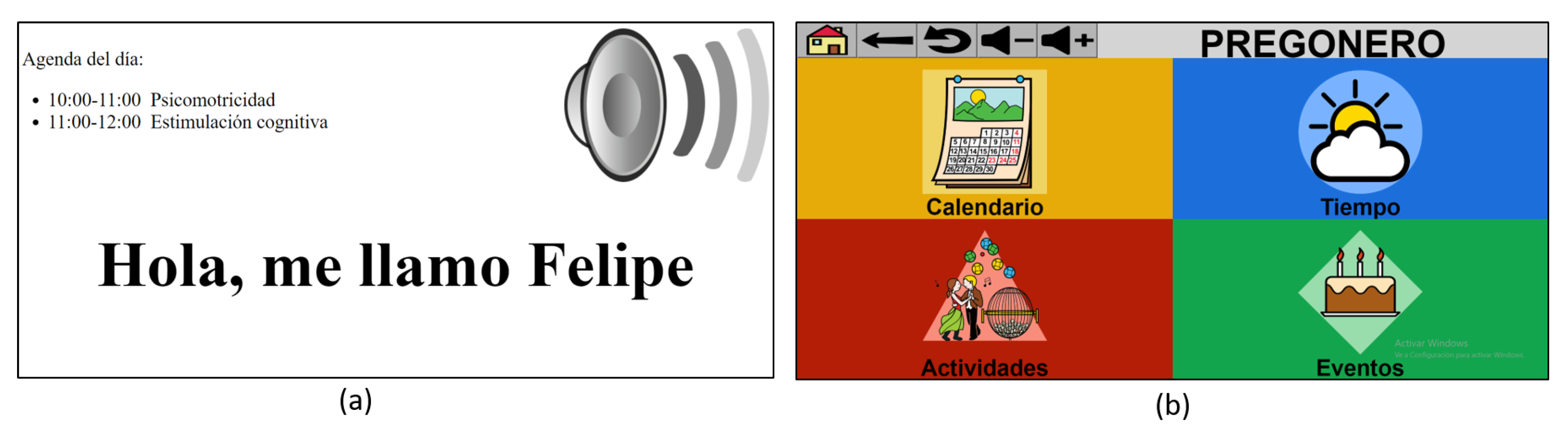

The Information interface (Figure 5) presents visual information targeted at residents. In its first version, the screen showed a list with a brief version of the daily agenda, as well as a transcript of the robot’s speech at any given moment, or a loudspeaker icon when the horn sound was being played (Figure 5a). This interface was substantially modified after the pilot tests, as explained in Section 5.6. The new Information interface (Figure 5b) can be used by the residents to get information via the touch screen. A key requirement for this interface is accessibility. Hence, messages are delivered by the robot as a voice announcement while their transcription is displayed on the screen, augmented with suitable representative icons. Displayed colours and text have been selected considering accessibility criteria. Icons have been downloaded from the ARASAAC Augmentative and Alternative Communication symbols database².

Figure 4. Teleoperation web interface. (a) Old interface. (b) New interface.

Figure 5. Screen Robot interface. (a) Old interface. (b) New interface.

5. Pilot Experiment

The SAR capabilities and features, and the use cases, are designed to follow an incremental redesign process (see Section 3), in which users’ feedback is employed to improve the solution in successive iterations. Moreover, the system will incorporate different use cases to be evaluated in the long term, as well as new interaction and interface capabilities.

This section describes the first pilot experiment that was performed in the retirement home in two phases. Each phase consisted of one execution of the announcer use case. A fortnight separated the two phases, giving time to the technical team to incorporate several updates to the SAR, as described below.

5.1. Aims

The first of these phases was designed with the following objectives:

From a technological perspective, the objectives were (i) to detect possible issues in the SAR performance during the interaction in the retirement home (in a real environment, in contrast to a controlled one); and (ii) to technically evaluate the interfaces to detect accessibility and usability barriers.

From a social perspective, the objectives were (i) to introduce the robot to the residents, to explain to them the main aims of the project and to make them aware that it would be present in their daily lives for a few weeks for the first pilot experiment; (ii) to evaluate the first impressions of the residents about the SAR as a phase of a participatory design, by involving them in the process of deciding certain aspects of the robot’s functionality and gathering their recommendations about the interface design.

The second phase of the pilot experiment was intended to prepare the long-term experiments to be carried out with the residents. The main aims were (i) to design the evaluation setup and protocol to execute the announcer use case, following a participatory design approach and involving the caregivers in the process: time to be spent in each room, frequency and positions where the message should be repeated, etc.; (ii) to draw conclusions about the best way to gather users’ feedback (by interviews, questionnaires, focus groups or observation); and (iii) to assess the extent to which the prototyping cycle is able to incorporate valuable improvements from the collected feedback, by analysing the SAR performance once the minor technical improvements suggested in the first phase had been implemented.

5.2. Participants

Two categories of end-users were involved in this first pilot experiment: residents and professionals in the retirement home. Visitors were not yet included in these experiments, although they will be present in the long-term evaluation process.

From an initial participatory workshop with the occupational therapist and the technical team, it was found that only two of the seven common rooms in the retirement home were suitable for the pilot experiment, owing to the lower degree of dependency of the residents who used them and to the physical characteristics of the rooms, which allowed the robotic platform to move around. Hence, the inclusion criteria for this pilot test admitted participants who had no cognitive impairment, or only a very mild one (i.e., a Mini Mental Test score over 24). In the two phases of the pilot, the robot interacted with around 40 residents (approx. 20 per room), most of whom were present in the introduction session. For this first pilot experiment, no further inclusion criteria related to their abilities/disabilities or preferences were taken into account. The occupational therapist presenting the robot, along with several caregivers (2-3 per room), was also present during the experiments.

The tests were performed in a real environment, without changing the daily routines of the retirement home at all. Hence, people who were not initially included in the experiments, including relatives and professionals, met the SAR in the corridors. Moreover, during the experiment there were residents and caregivers going in and out of the rooms. Finally, during the first phase of the experiment, and due to the very positive response of the residents, the therapists asked for the robot to be moved around an open-air terrace, where other residents were enjoying some activities related to the upcoming carnival, so that they could also meet it.

The technical team for this pilot test consisted of two engineers, who were responsible for teleoperating the robot using a tablet and a joystick. These operators were also in charge of observing the residents’ interactions with the robot and providing feedback about the usability and accessibility of the interfaces, following a user-centred approach. The technical team also included a rehabilitation specialist who prepared and conducted the initial contact with the residents.

5.3. Material

The pilot test was linked to complementary research questions (technical viability, usability and accessibility, utility, and acceptability), aimed at evaluating both the technical performance of the SAR and its interfaces and, regarding social aspects, the first impressions that the robot produced in the residents and professionals at Vitalia. Following a user-centred design, data was collected from two main sources: (i) video recordings from the camera mounted on the robot; (ii) informal interviews with the residents, caregivers and technicians.

Before the experiments, the participants filled in an informed consent form. As detailed above, testing in a daily life field scenario means that unexpected people were around the robot or even interacted with it. Privacy was always guaranteed, as images and videos taken inside the retirement home were subject to Vitalia’s regulations on this particular aspect.

5.4. Environment

The evaluation was conducted in a real scenario: the Vitalia Teatinos retirement home, in Málaga (Spain). Each phase of the pilot consisted of one execution of the announcer use case. These phases were performed on two different days (the second phase two weeks after the first one), in February 2020.

Two shared rooms in the retirement home were used for the pilot experiment. These rooms were the living rooms used by the participants to interact with other residents or to perform daily activities.

The rooms were located consecutively in the same corridor, and both were also connected to an outdoor terrace. They are spacious, with several chairs. Some residents were sitting in these chairs during the tests. Others were walking around or standing up. Many of them were doing other activities or speaking with other residents when the robot arrived. Moreover, 2-3 caregivers were also in the rooms. No TV or music was played in the rooms during the evaluation phases in order to allow people to listen to the robot.

5.5. Procedure

Before the pilot experiment took place, consent forms were distributed among caregivers working in Vitalia Teatinos, and they helped residents fill in the consent form during their daily routines, some days before the pilot started.

In the first phase, the residents and professionals in the retirement home were informed about the project aims and told that a robotic platform would go to the two specified rooms to announce events and the daily menu, so the residents and professionals who wanted to participate in the test stayed in these rooms. Moreover, in order to help with the introduction of the SAR in the daily life of the retirement home, the occupational therapist of Vitalia suggested the activity of naming the robot, following a participatory design, by choosing and agreeing together on a name. The robot was finally named ’Felipe Vitálico’ by the residents participating in the experiment.

During the two phases, the robotic platform was configured to be fully teleoperated by the technicians. The robot’s voice volume was fixed at the maximum level, to allow the robot to speak aloud and be heard in the whole room. The technicians stayed out of the rooms while the robot was performing the use case. However, they always kept a line of sight to check the interaction between the users and the robot. In addition, the teleoperator could make the robot utter simple phrases such as an introduction, a greeting or a farewell, to exhibit more interaction capabilities when a resident approached the robot to initiate a conversation.

The robot started the session in the corridor. Then, it moved to the first room. Once it was located in an adequate spot in the middle of the room, it made a horn sound (similar to a town crier horn) to capture the attention of the users around. Then, it said hello and started announcing the events and the menu. The announcement was repeated twice, as many residents did not hear it properly the first time. Once the announcements were done, it said goodbye and moved out to the other room. In that second room, due to the hour in which the tests were performed, tables and chairs were distributed in groups, ready for lunch-time. Residents were walking around or were sitting in groups. Hence, the robot was teleoperated to approach each of the three main groups, and made the announcements for each of them. After it finished announcing the events and menu, it said goodbye and moved directly to its charging place.
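The sequence of actions in one announcer round can be summarised as a simple script. The following is a simplified sketch under stated assumptions: the function `announcer_steps`, the action names and the English phrases are hypothetical placeholders (the robot spoke Spanish), and the per-group approach used in the second room is omitted for brevity.

```python
def announcer_steps(rooms, agenda, menu, repeats=2):
    """Yield (action, argument) pairs for one announcer round.

    The round follows the order described in the text: move to a room,
    play the horn, greet, announce events and menu (repeated), say goodbye,
    and finally return to the charging place.
    """
    for room in rooms:
        yield ("go_to", room)
        yield ("play_sound", "horn")
        yield ("say", "Hello!")
        for _ in range(repeats):
            for event in agenda:
                yield ("say", event)
            yield ("say", f"Today's menu: {menu}")
        yield ("say", "Goodbye!")
    yield ("go_to", "charging_place")


if __name__ == "__main__":
    for step in announcer_steps(["room_1", "room_2"], ["Bingo at 17:00"], "soup"):
        print(step)
```

Keeping the round as plain data of this kind makes it easy for a teleoperator interface to insert, skip or repeat individual steps, which is how the session actually unfolded in the second room.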

Figure 6. Felipe Vitálico working at Vitalia Teatinos.

5.6. Results

This subsection presents the preliminary results, obtained following a user-centred design and based on observation and informal interviews with professionals, residents and the robot teleoperators of the evaluation group. These preliminary results about additional needs and requirements were used to detect possible interaction problems. Moreover, during the informal interviews and by using a participatory design, the users were able to propose changes to the interface design and functionalities, which are described below.

5.6.1. Technical Issues and Recommendations

The first impression from the pilot experiment, even with such a simple and controlled use case, is the large gap between the robot’s interaction capabilities in the real scenario and its normal operation in a controlled environment. The complexity of interaction in a crowded environment (noise, people with limited interaction capabilities, barriers, uncontrolled events, etc.) became evident when contrasted with the first impressions obtained in the lab. However, interesting conclusions could be drawn for the redesign of the teleoperation interface for the second intervention, and for the initial design of other use cases of incremental complexity, as detailed below.

Teleoperation interface. Selecting phrases from pop-up lists quickly proved not to be a valid method for fluent interaction. The teleoperator did not have time to write, or even to select, a proper phrase when, for example, someone said ’hello’ to the robot and expected a response from it. The "delay", from a conversational mechanism perspective [31], limits fluid interaction and can be seen as a disadvantage rather than an improvement. Moreover, while this issue might have been mitigated if the robot had incorporated some mechanism to acknowledge the reception of a phrase, the difficulty of robustly and automatically recognizing users’ speech in the environment of the retirement home made this option unfeasible for the platforms employed.

Hence, we changed the teleoperation interface to allow the teleoperator to make the robot speak by simply clicking a button. The cognitive architecture of the robot selects a random phrase (or a phrase composed of random components) when that button is clicked. Figure 4b shows the new teleoperation interface. As depicted, the interface incorporates more commands that allow selecting a phrase from a pop-up list or randomly picking a phrase, as an alternative to writing it. Four categories were used to group these phrases: greetings, saying goodbye, event information and menu choices for the day.
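A one-click phrase generator of the kind described above might look like the following sketch. It is only illustrative: the dictionary `PHRASE_PARTS`, the function `random_phrase`, the category keys and the English phrase fragments are all hypothetical, and composing a phrase from one opener and one ending is just one possible reading of "a phrase composed of random components".

```python
import random

# Hypothetical phrase components per category: (openers, endings).
PHRASE_PARTS = {
    "greetings": (["Hello", "Good morning"], ["everyone!", "how are you today?"]),
    "goodbye": (["Goodbye", "See you soon"], ["everyone!", "have a nice day!"]),
    "events": (["Today's activity is", "Don't forget"], ["bingo at five.", "the music workshop."]),
    "menu": (["Today's lunch is", "On the menu today:"], ["vegetable soup.", "grilled fish."]),
}


def random_phrase(category, rng=random):
    """Compose a phrase for `category` from one random opener and one random ending."""
    openers, endings = PHRASE_PARTS[category]
    return f"{rng.choice(openers)} {rng.choice(endings)}"


if __name__ == "__main__":
    # Each button in the teleoperation interface would map to one category.
    for category in PHRASE_PARTS:
        print(category, "->", random_phrase(category))
```

With this structure, a single click is enough to answer a resident's greeting, which addresses the conversational delay noted for the first version of the interface.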

Person detection. The robot’s ability to detect people facing it and willing to interact with it is significantly poorer when the robot works in crowded daily life environments than when it works in a controlled lab environment. The Person Detection System of the robot allowed the teleoperator to send a greetings command each time the SAR detected a person. However, with only a vision-based human detection system, the ability of the robot to detect people is limited. Therefore, the system was changed to allow the teleoperator to send a greetings command whenever needed. In addition, a more robust multimodal detection system is being developed. In particular, a new system that fuses visual features, Deep Neural Network training, laser scans and RFID readings is being tested in the laboratory [32]. This system will be further reinforced by an Automatic Speech Recognition module, as described in [33], able to detect when a person greets the robot.

Voice volume. While the maximum volume of the robot’s loudspeakers was adequate in a moderately quiet laboratory environment, it was not enough for the robot to be heard in the crowded shared rooms of the retirement home. The situation was even worse when the robot moved outdoors. As a result, only people closer than approximately 1.5 m could properly understand it. When the therapists asked people in the shared rooms to keep silent, that range could reach 2.5 m or 3 m around the robot. Consequently, many residents said they could barely understand what the robot said.

This intelligibility problem was corrected between phases one and two of the pilot test by fitting the robot with more powerful (10 W) loudspeakers and making holes to increase the voice projection. In the second phase of the experiment, these upgrades allowed the robot to be heard anywhere in the room.

Attention catching mechanisms. Residents and professionals in the shared rooms were expecting the robot to come in, as they had been informed about it beforehand. However, they easily lost attention after the robot began to speak. Some of the reasons were: (i) the robot remained static while speaking; (ii) its voice was monotonous; (iii) the person did not understand it properly.

We recommend addressing these issues by: (i) providing the robot with back-channelling behaviours that acknowledge the interlocutor or simply attract attention. The first proposal to be tested will make the robot perform small, randomized and spontaneous movements while speaking. These movements could help to keep the attention of the residents, although some tuning will be necessary to avoid annoying and useless motion; (ii) evaluating different voice generation systems for the robot, or using recorded messages; (iii) using more sounds to attract attention during the speech, in the same way that the horn sound is played before the robot begins the announcements.

5.6.2. User’s Acceptance of the Robotic System

Acceptance evaluation. In these first pilot experiments, acceptance was evaluated through observations and informal interviews. The acceptability questionnaires that had been prepared were ultimately not administered, due to the lack of time and the busy schedule that the residents had that day. This decision followed the guidelines in the signed agreements, which, on ethical grounds, required the experiment to avoid overtiring the residents, becoming invasive in any manner, or disrupting their usual organisation. Moreover, the retirement home was closed to visits only a few days after the second phase of the pilot due to the COVID-19 pandemic.

Our recommendation for future experiments is to be ready for busy schedules with different options: (i) ask the professionals whether they could help the residents to fill in online post-test questionnaires; (ii) gather general information from the workers in focus groups or participatory workshops; (iii) complete the post-test questionnaires with a reduced number of users (not all the users in the room).

First impressions. Judging by the observations alone, they were very positive. Indeed, only one of the forty residents involved objected to the robot’s presence, and the occupational therapist indicated that this was the normal behaviour of this participant when facing changes in their routine.

During the first few minutes, all the residents focused their attention on the robot, to the point where many of them noticed that one of the robot’s eyes was slightly bigger than the other. Moreover, many of them indicated their willingness to integrate it into their daily activities and, in some cases, even introduced it to their grandchildren.

The participatory process of naming the robot was particularly enjoyable and was a determining factor in the users’ acceptance of the robot. As a relevant example of how the perception of the robot evolved during these first few minutes, the initially hesitant resident wanted to name it after her child once she had seen it move and speak.

Both residents and caregivers seemed to use the SAR (their ’Felipe’) as a good excuse to have a good time. Hence, its role as a social facilitator was fully demonstrated, even in this pilot test. Their initiative to bring the robot to the terrace to meet the other residents was also interesting.

The involvement of residents in the participatory workshop organized before the pilot test was also very positive: these people talked about the robot to the other residents (even before seeing it for the first time), and were eager to see how the robot looked and performed.

Some relatives met the robot in the corridor when it was moving from one room to another. They wanted to take pictures, asked the researchers about the capabilities and features of the robot, and were glad about the initiative.

The reaction and acceptance of the professionals working in the retirement home when they saw the robot was also noteworthy, as they could see that the robot was able to successfully perform the functionality they had decided on in the first participatory workshops. Furthermore, they were also interested in the current and future features of the robot, and explored the possibility of using it for different purposes and users in the future (e.g., for people with dementia, or in interventions for drug addictions).

False expectations. Although the first interventions were positive in showing the robot’s potential, false expectations were also easily created. Despite the robot’s non-human appearance and the occupational therapist’s explanations of its basic task-adapted functionality and limitations, most of the residents demanded greater functionality. For example, when it announced the menu choices for the day, four of them asked it to provide additional new dishes, and they really thought the robot could understand what they were saying and could intercede in changing the menu. In general, residents expected the behaviours of human face-to-face interaction [34] to be maintained when interacting with Felipe. Thus, these types of robots are perceived from the beginning more as social facilitators than as useful tools, so dyadic and even triadic interaction modalities [11] must be considered from the beginning. These results led to a redesign phase based, first, on improving the speech module, either by improving the teleoperation interface shown in Figure 4 or by using advanced language models to automate this module with artificial intelligence algorithms (although it does not yet include speech recognition), and second, on including autonomous human recognition in the capabilities of the robot.

Information interface. Many residents indicated that they preferred to access information such as that provided by the robot through touch-screen or voice-accessible menus. Therefore, the use of the chest screen was modified, not only to display the announced information in a visible form but also to make information accessible through touch interaction. Figure 5b shows our first prototype for the new chest screen interface, in which accessibility criteria were considered a key part of the design process. The ongoing process of designing and evaluating this interface, with feedback from stakeholders, is described in Section 6.

6. Improvement and Evaluation of the Robot Information Interface

Following participatory design principles, the user feedback gained from the pilot experiment was employed to enhance the interfaces of the announcer use case. Once the interfaces were implemented, it was necessary to evaluate these updated versions with real users and the final robotic platform (an autonomous robot which approaches the users and announces events) before deploying the robot CLARA in the retirement home (2nd phase of the pilot). The complexity of the interaction with the robotic system stems from the need to control the proper evolution of the communication among the software components shown in Figure 3 and the hardware, and to update in real time the parameters closely related to adapting the interaction to real conditions, such as keeping the right distance from the residents, robot position, luminosity conditions, noise control, etc.

This section describes the iterative process of designing and evaluating the robot’s information interface based on the AUSUS framework [7], and its continuous adaptation based on the results obtained.

As this project was carried out during the pandemic, it was not always possible to involve older adults in the retirement home, so the evaluations were carried out in different phases:

Evaluation in a controlled environment: the interfaces were improved, taking into account the opinion of real users, in a controlled environment. People who were not as vulnerable to COVID-19 as the older adults interacted with the interfaces in one of the researchers’ laboratories and gave feedback on how to improve them. This evaluation is described in detail in Section 6.1 and led to the improvement of the interfaces prior to their use with older people in the retirement home.

Evaluation in the retirement home: this evaluation implements the second phase of the pilot experiment, in which the improved interfaces were used for five months in the retirement home. Older people and the staff at the retirement home could interact with the robot, and objective data about this interaction are detailed in Section 6.2.

6.1. Evaluation in a Controlled Environment

This section describes the user evaluation that was conducted to assess the usability, accessibility, user experience and acceptability of the robot’s information interface according to the AUSUS methodology. This evaluation was performed in two phases: (1) partial and informal evaluations, performed during the development of the interfaces. The procedure combined user-centred design and participatory design approaches, allowing the participants to express their impressions, recommendations and feedback after a free interaction with the robot interfaces, which helped the researchers to redesign the interfaces according to refined requirements; (2) formal evaluations, carried out after the interfaces had been redesigned using the previous outcomes, which followed specific procedures to assess Usability, Accessibility and Acceptability.

Participants. Ten participants (6 males and 4 females) were voluntarily involved in the evaluation.

Table A2 shows that all participants were familiar with using phones, tablets or computers for communication, work, study or entertainment. Only 30% of the participants had interacted with drones or robots for research and study. It is worth noting that 30% of participants regarded their interaction with intelligent virtual assistants as interaction with robots.

The selected sample reflects diversity in terms of communication abilities, age, gender, and experience in using electronic devices and robots. Cognitive disabilities were considered an exclusion criterion for selecting participants for the evaluation. Nine of the participants were Spanish and one was Iranian. In Table A1 (Appendix A) the severity of each disability is described using a 5-point Likert scale, where 1 is the most severe case (I can’t hear, see, move, etc.) and 5 is not having a disability at all (I can hear, see, move perfectly, etc.). Table A1 shows that a quarter of the participants have severe hearing and visual disabilities. The majority of the participants would like to interact with robots in the future in welfare, work spaces, the home and entertainment, and as personal assistants. Two participants expressed their unwillingness to interact with robots in the future, and neither of them had ever interacted with a robot before. One of the participants stressed the importance of using robots in all aspects of life, provided that humans are not replaced by them.

Environment. The evaluation sessions were carried out in the Artificial Intelligence SCALAB and GigaDB laboratories at the Leganés campus of the Carlos III University of Madrid, in June 2021. At that time, the evaluation of the interfaces could not be conducted at the retirement home due to the COVID-19 access restrictions during the pandemic period. Only the participants, the robot and the evaluator were present during the sessions, to provide a comfortable and private environment and to help participants focus while interacting and accomplishing the tasks required for the evaluation.

Materials. The following methods and materials were used:

Questionnaires. Pre-test and post-test questionnaires were used to collect data from the participants. In the pre-test questionnaire, eighteen questions were used to define participants’ socio-demographic information and their experience with electronic devices and robots. These data allowed the researchers to perform a deeper analysis of the results, correlated with the participants’ previous experience and socio-demographic information. The post-test questionnaire, detailed in Appendix A, included 26 questions to investigate participants’ responses on usability, accessibility and acceptance aspects of both the hardware and software interfaces of the use case. These questions were answered on a 5-point Likert scale, where 1 corresponds to "strongly disagree" and 5 corresponds to "totally agree".

Introduction and pre-test information: legal procedures and pre-test questionnaire. Once the participants were selected, the goal of the evaluation process was explained to them. Then, the participants signed a consent form. Finally, the ten participants answered the pre-test questionnaire.

Evaluation session. In order to test the robot interface in depth, the participants were provided with a list of tasks to be accomplished during their interaction with the robot. Seventeen short and direct tasks were designed to ensure that all possible interaction modes of the robot were used during the experiment. These tasks involved obtaining information about the scheduled activities in their daily agenda, checking the date and time, and obtaining information about the weather and about the birthdays of the residents and staff. Moreover, the users could adjust the robot’s voice settings.

Post-test feedback. After each evaluation session, the researchers had an informal interview with the participants, in which they also filled in the post-test questionnaire using a computer.

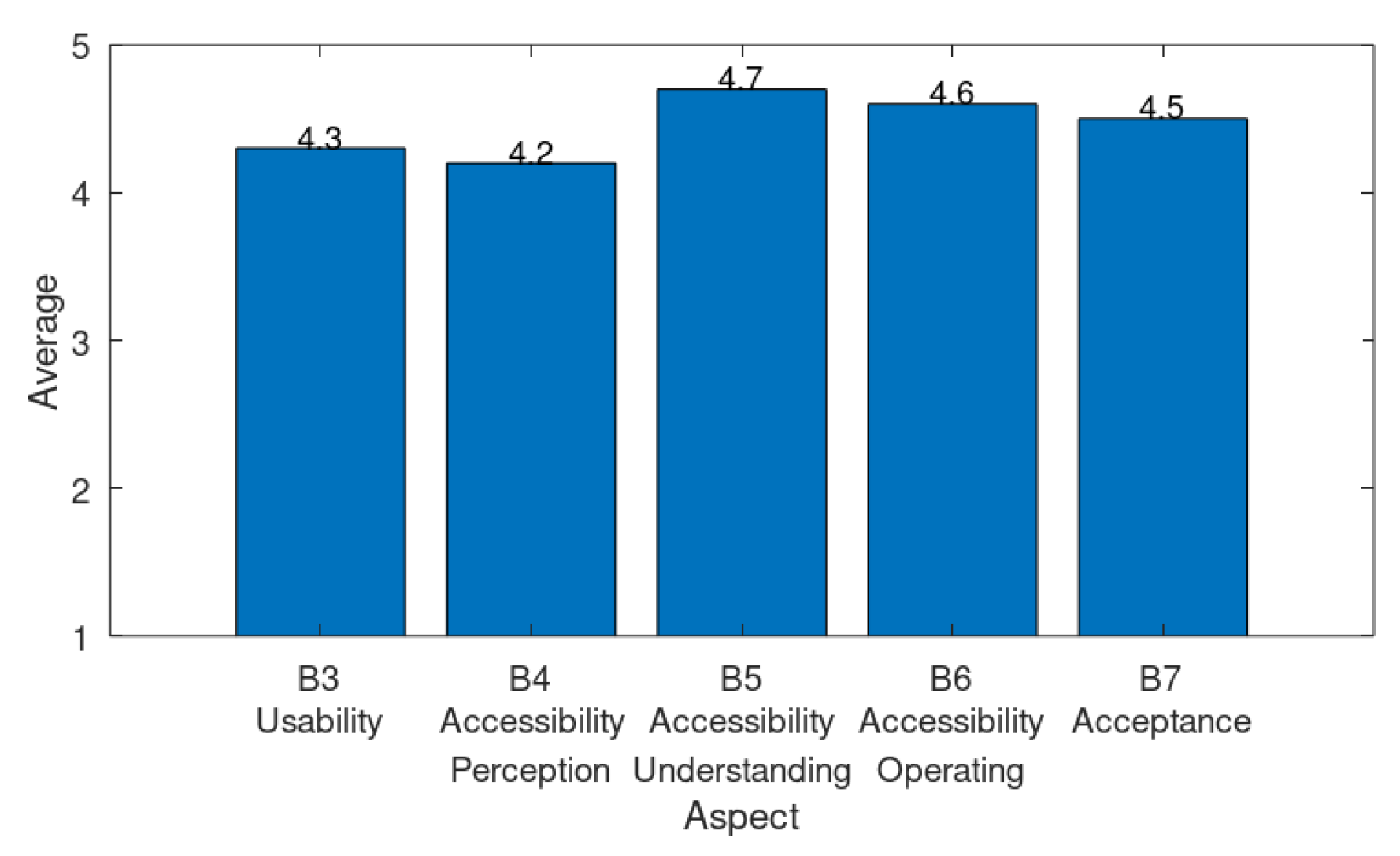

The completeness of the questionnaires was checked, and the responses obtained from the post-test questionnaires and interviews were analysed and correlated with participants’ characteristics and previous experience, which were defined based on the responses to the pre-test questionnaires. The results obtained for the three factors (usability, accessibility and user acceptance) can be found in Appendix B (Tables A3 to A7), and Figure 7 depicts the average values for every table.

Figure 7.

Average outcomes for AUSUS evaluation.

Usability. Table A3 shows the six questions that evaluated usability: five were answered on a 5-point Likert scale and one was an open-ended question. The global usability was rated as 4.3 on average (Figure 7). The questions focused on four factors: intuitive interaction, effectiveness, interface appearance and robustness. In summary: the majority of participants (7 of 10) agreed that the interfaces were intuitive and easy to understand. Understanding was limited (Neutral answer) only by the foreign mother tongue of one user and the moderate visual disability of another. All participants agreed that the robot was effective in assisting them to complete the required task, except one user who reported little experience using electronic devices and had both hearing and visual disabilities (Neutral answer); the interface appearance was well appreciated by all the users, except for those with moderate and severe visual disabilities; the interface was robust in general and just 4 out of 6 users detected some issues: (i) the voice of the robot did not match the subtitle displayed on the screen (essentially they were not synchronized, because the subtitle lagged one to two seconds behind the robot’s speech); and (ii) the buttons were small, and users confused the functionality of the buttons that control voice parameters such as volume, velocity or tone.

Accessibility. Fourteen 5-point Likert scale questions were devoted to assessing the accessibility of the robot interfaces. More precisely, these questions evaluated to what extent the robot’s interfaces were perceivable, understandable and operable. Results for each subfactor are detailed in Tables A4, A5 and A6, with average values of 4.2, 4.7 and 4.6 respectively (see Figure 7). Regarding the perceivable factor, the users indicated that the robot’s voice was not clear enough and should be corrected before its integration at the retirement home; the information provided through the display (including the captioning of the robot’s voice) was clearly perceived by the majority of the users, all of whom were able to read everything from one meter away from the screen. Regarding the understandable factor, the participants were able to understand the robot’s speech, the purpose of each interface of the events announcer application, and the flow of interaction with these interfaces. Moreover, they all found the application windows logically ordered. Finally, regarding the operable factor, the participants were in general able to operate the application’s software and hardware interaction components, such as the touch screen and voice volume buttons, and they were able to navigate through the town crier application windows. One user had problems adjusting the volume of the robot because the buttons were not big enough for him.

User acceptance. Table A7 contains questions designed to ascertain whether participants were satisfied with how the robot performed its functions, and to determine their willingness to use the robot in the future. An average value of 4.5 was obtained for this factor (see Figure 7), and in the interviews the participants indicated in general that they were satisfied with the robot’s interface and would like to use it in the future for the same purpose and for other tasks.
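As an illustration of how per-factor averages such as those plotted in Figure 7 can be obtained from 5-point Likert answers, the sketch below averages all answers given to the questions of one factor. This is an assumption about the aggregation, not a description of the authors’ exact procedure, and the responses shown are hypothetical placeholders, not the study’s data.

```python
from statistics import mean

# Hypothetical responses on a 1-5 Likert scale: one list per question,
# one value per participant. These are NOT the data of the study.
responses = {
    "usability": [[5, 4, 4, 3, 5], [4, 4, 5, 5, 3]],
    "acceptance": [[5, 5, 4, 4, 5]],
}


def factor_average(question_scores):
    """Average all answers given to all questions of one factor."""
    all_answers = [score for question in question_scores for score in question]
    return round(mean(all_answers), 1)


for factor, scores in responses.items():
    print(factor, factor_average(scores))
```

Averaging per question first and then across questions would give the same value here, because every hypothetical question has the same number of answers; with missing answers the two options can differ.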

Evaluation recommendations

After the in-depth evaluation, important recommendations were elicited from the users’ feedback and incorporated into the version used in the long-term experiment in the retirement home. Some of these recommendations directly affected the interface design, for example: the letter size of the interfaces should be configurable, in order to make the interface more accessible to users with visual disabilities; a more intuitive interface is needed for users who have a combination of hearing and visual disabilities and are not used to digital devices; it is especially important to synchronize the robot’s voice with the captioning; a clearer and more natural voice for the robot would help users to understand it better.

Advantages and Limits

One of the main advantages of this evaluation with younger users is that, thanks to their active participation, usability and accessibility barriers were detected, and the researchers could improve the interfaces to achieve a better user experience and acceptance before integrating them in the next pilot phase in the retirement home. Moreover, it made it possible to obtain a prototype of the interfaces and evaluate it formally, based on a scientific evaluation framework, while meeting the criterion of not disturbing the final users too much.

However, as a limitation, we are aware that the interaction characteristics of the people involved in this evaluation do not necessarily match the interaction characteristics of the stakeholders in the retirement home.

It must also be noted that the novelty factor may have introduced a bias in the outcomes, which may disappear when the long-term evaluation is completed.

Nevertheless, this evaluation is considered valuable because it helped the researchers to improve the interfaces, although evaluations of the interfaces with the users of the retirement home are still needed.

The next subsection details a mid-term evaluation carried out in the retirement home.

6.2. Evaluation in the Retirement Home

An initial and mid-term evaluation of the performance of the interfaces with the users in the retirement home was also carried out, based on quantitative parameters, once it was possible to return to the retirement home during the COVID-19 period. The main aim of this evaluation was to objectively measure the use of the touch interface on the robot’s torso by the older adults.

For these tests in the Vitalia Teatinos retirement home (Málaga), the users’ ages ranged from 65 to 85 years. Nine residents were actively involved in the tests, four men and five women. The evaluation lasted for five months, from November 15th, 2021, to July 21st, 2022 (during this period, again due to COVID-19, there was another lockdown from December 2021 to March 2022).

The environment and procedure were similar to those of the pilot experiment described in Section 5.4 and Section 5.5 respectively. The main difference in the procedure is that, while the users were interacting with the robotic platform, it saved some anonymized interaction data for further objective analysis. The main outcomes of this analysis are detailed next.

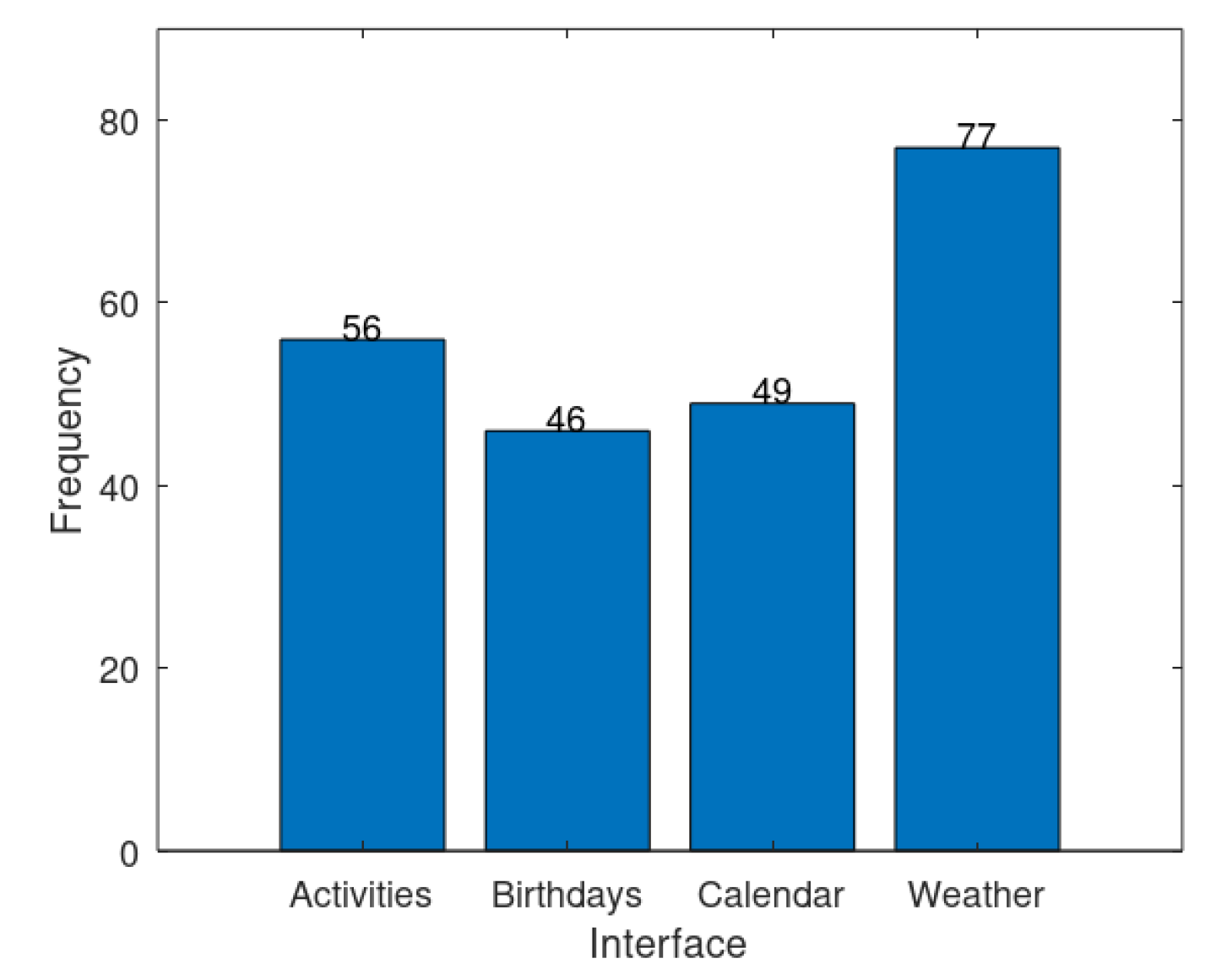

First, the number of times that each interface was selected from the main interface on the chest screen of the robot was measured (see Figure 5b). Figure 8 shows that most requests were related to the weather information.

Figure 8.

Number of times that every interface has been selected.

The users’ handling of the volume control on the robot interface was also monitored. In particular, the number of times that users tried to increase the volume once it had already been set to its maximum value was considered of interest, since it can be a sign of problems with the loudspeaker volume and/or intelligibility. An attempt to increase the volume beyond its maximum value was detected in only 5.26% of the tasks of Figure 8. Based on this value, it can be concluded that the loudspeaker volume was satisfactory most of the time.
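The following Python sketch shows one way such a percentage could be derived from the interaction logs. The log format (a starting volume plus a list of `volume_up` and `volume_down` events per task), the volume step of 1 and the function name `over_max_share` are assumptions made for illustration; the paper does not describe the actual format of the anonymized data.

```python
def over_max_share(tasks, max_volume):
    """Percentage of tasks with at least one 'volume up' press at max volume.

    Each task is a dict with a hypothetical layout:
    {"start_volume": int, "events": ["volume_up", "volume_down", ...]}.
    """
    flagged = 0
    for task in tasks:
        volume = task["start_volume"]
        hit_ceiling = False
        for event in task["events"]:
            if event == "volume_up":
                if volume >= max_volume:
                    # The user asked for more volume than is available.
                    hit_ceiling = True
                else:
                    volume += 1
            elif event == "volume_down":
                volume = max(0, volume - 1)
        flagged += hit_ceiling
    return 100.0 * flagged / len(tasks) if tasks else 0.0


# Hypothetical log of four tasks; only the first one hits the ceiling.
example_tasks = [
    {"start_volume": 9, "events": ["volume_up", "volume_up"]},
    {"start_volume": 5, "events": ["volume_up"]},
    {"start_volume": 10, "events": ["volume_down", "volume_up"]},
    {"start_volume": 3, "events": []},
]
print(over_max_share(example_tasks, max_volume=10))
```

Counting tasks rather than individual presses keeps one frustrated user who taps many times from dominating the indicator.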

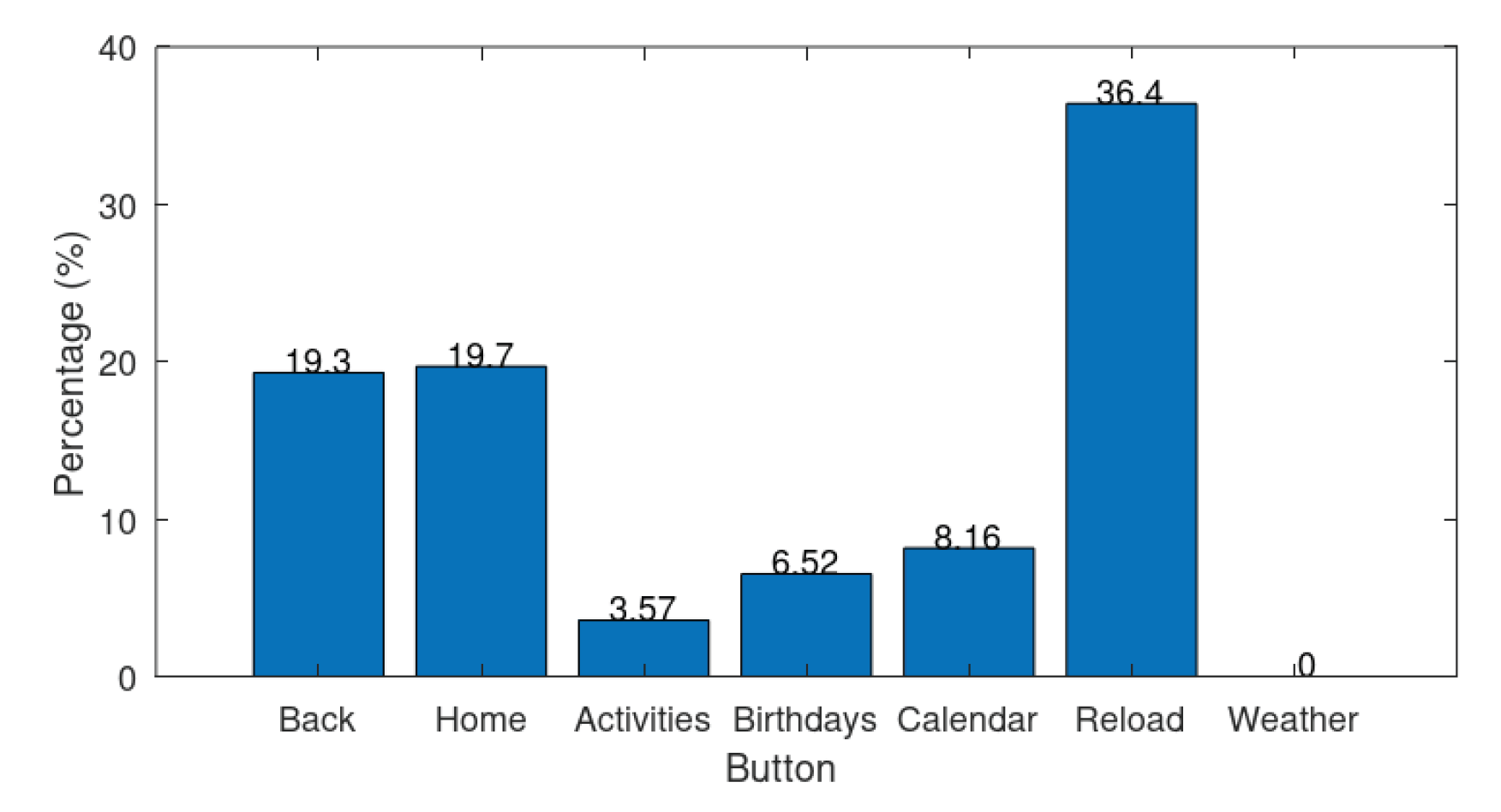

Regarding the touch control of the screen, the researchers observed that some of the users tried to press the same button in the interface several times in a row. For this reason, the number of times that users pressed a button on the screen more than once within a time window of three seconds was also measured. Figure 9 shows, for each button, the percentage of attempts to press it that involved one or more consecutive repeated taps. This percentage is low for the buttons that select the main tasks: "Activities", "Birthdays", "Calendar" and "Weather". However, it is significantly higher for the "Back", "Home" and "Reload" buttons. Figure 5b shows that the main difference among these buttons is their size: the smaller ones present the higher percentages. This finding, which suggests that larger buttons are better in these situations, will be considered in the next redesign of the interfaces.

Figure 9.

Percentage of the number of times that every button has been consecutively pressed.
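A measure of this kind can be sketched in Python as follows. The three-second window comes from the text; the log format (a list of timestamped taps), the grouping rule and the function name `repeated_press_percentages` are assumptions introduced for illustration and may differ from the analysis actually performed.

```python
from collections import defaultdict

REPEAT_WINDOW_S = 3.0  # time window stated in the text


def repeated_press_percentages(taps, window=REPEAT_WINDOW_S):
    """Per button, the percentage of press attempts needing more than one tap.

    `taps` is a list of (timestamp_seconds, button_name) tuples.
    Consecutive taps on the same button at most `window` seconds apart
    are grouped into a single attempt (an assumed grouping rule).
    """
    attempts = defaultdict(int)
    repeated = defaultdict(int)
    last_button, last_time, counted = None, None, False
    for timestamp, button in sorted(taps):
        same_attempt = button == last_button and timestamp - last_time <= window
        if same_attempt:
            if not counted:
                # Count each multi-tap attempt only once.
                repeated[button] += 1
                counted = True
        else:
            attempts[button] += 1
            counted = False
        last_button, last_time = button, timestamp
    return {b: 100.0 * repeated[b] / attempts[b] for b in attempts}


# Hypothetical log: "Back" is tapped three times in a row, then once later.
example_taps = [
    (0.0, "Back"), (1.0, "Back"), (2.5, "Back"), (10.0, "Back"),
    (20.0, "Weather"), (30.0, "Weather"),
]
print(repeated_press_percentages(example_taps))
```

Grouping taps into attempts, instead of counting raw taps, makes the percentages of small and large buttons directly comparable.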

Finally, in addition to collecting objective measures and observations during the interaction sessions, informal interviews were conducted with the stakeholders after each session. In summary, as in the evaluation with younger users, the users found the interfaces usable (intuitive, effective, robust, and with a look and feel they liked). They also found some accessibility barriers, most of them already detected in previous evaluations, such as the volume of the loudspeakers on some occasions, the captioning delay when the robot was speaking, the position of the screen, or the robot’s inability to establish a social dialogue with the older adults, among others. Despite these accessibility issues, they were generally very satisfied with the functionality and interfaces of the robot and were willing to interact with it in the future for this or other use cases. In fact, after the evaluation sessions, most of the users gave us more ideas for future tasks for the robot, such as making appointments with doctors, reminding them to take medication, or even reminding them when their relatives are coming to visit, among others.

7. Conclusions

The main scientific contributions of this study are fourfold. First, it details a use case where a SAR is integrated in a retirement home to study users’ mid-term adherence, and the acceptability and utility of the robotic system. Second, it describes in detail the research and evaluation methodology, which follows a user-centred and participatory design, and how the different methods were employed during the specification and implementation of the use case. Third, the usefulness and effectiveness of the AUSUS framework, previously proposed by the authors [7], is validated. Finally, the study has made it possible to iteratively introduce improvements to the platform, by establishing new technological challenges derived from its evaluation in real environments [32, 33].

In particular, a participatory design workshop in the initial definition of the use case made it possible, from both a pragmatic and a reflexive perspective, to identify needs and encourage stakeholders’ participation. Also, consistent with the participatory design characteristic of mutual learning, technical information about the robot was shared between researchers-engineers and participants, so as to eliminate false expectations at this early stage. Furthermore, the active participation of the users throughout the process, following a participatory design approach, made them much more involved in the project, facilitating the acceptance of and attachment to the robotic platform in the retirement home, and the success of the project.

Regarding the user-centred design approach, from the initial interviews to the final evaluation of the system, and by conceiving the robot and its ecosystem with person-centred care as the main goal, we found that this approach could significantly increase the well-being of residents. Indeed, as reported by the stakeholders, attention should be paid, firstly, to allowing caregivers to spend more quality time with the older adults by reducing their workload in repetitive or simple tasks, and secondly, to making the robot a social facilitator by adapting its behaviour to the older adults’ acceptability criteria. Moreover, in each iteration of the interface design and evaluation process, users could see that their recommendations and initial interaction problems were considered, and that the system was improved step by step thanks to their help. This made the users co-participants, sharing responsibility for the success of the integration. Following similar approaches [9, 14, 17], the use case presented here helped to gather first empirical insights, which constitute intermediate hypotheses that will be examined during the next iterative phases. Indeed, these insights have already allowed us to delimit a simple task that fulfils both premises, and that will also facilitate the definition of more complex tasks with an accessible interaction adapted to the residents’ expectations and needs.

The first pilot test presented in this study has shown that the announcer robot makes a very good first impression on all users. It is important to highlight that social facilitation is a key added value of social robots with respect to other assistive technologies. This pilot experiment has also shown that this design process, which is iterative and combines human-centred and participatory design approaches, will have to strive to avoid rejection (caused by false expectations) on the one hand, and boredom (caused by an overly naive robot) on the other. We strongly believe that keeping users in the design process is the key to succeeding in this challenging scenario.

This study also describes in depth the user evaluation carried out during and after the programming of the robot’s interfaces. Users’ participation has allowed us to improve the interfaces before starting the long-term pilot integration at the retirement home, which is currently being conducted.

Hence, while the retirement home was closed to visits and experiments due to the COVID-19 pandemic, the robot was provided in the lab with all the updates suggested during the pilot study. Moreover, two other use cases were defined and implemented, so that Felipe was able to return to the retirement home in 2022 with a completely new set of features to be shown, used, evaluated and redesigned by the residents and professionals of Vitalia Teatinos. These long-term evaluations, which last for months, are currently being performed. While their analysis lies outside the scope of this paper, the experiments conducted so far for the announcer use case validate the proposed methodology, showing very good qualitative results in terms of acceptability and utility.

We are currently working on improving the robot’s interfaces and the implementation of the use case, together with new use cases to carry out long-term evaluations in the residential home. The long-term evaluation will avoid bias in the results due to the novelty of the robotic platform.

Author Contributions

Conceptualization, J.P. Bandera, A. Iglesias and R. Viciana; methodology, A. Iglesias, K. Lan, M. Qbilat and R. Viciana; software, A. Tudela, R. Marfil and J.M. Perez-Lorenzo.; validation, A. Iglesias, K. Lan, A. Tudela, A. Jerez and A. Hurtado; formal analysis, A. Iglesias and K. Lan; investigation, A. Tudela, A. Iglesias, M. Q-bilat, R. Marfil and J.P. Bandera.; resources, A. Jerez.; data curation, A. Iglesias, A. Jerez, J.M. Perez-Lorenzo.; writing—original draft preparation, A. Iglesias, R. Viciana, A. Tudela, J.P. Bandera, J.M. Perez-Lorenzo.; writing—review and editing, A. Iglesias, R. Viciana, M. Qbilat, J.P. Bandera, J.M. Perez-Lorenzo.; visualization, J.P. Bandera.; supervision, A. Iglesias, J.P. Bandera.; project administration, J.P. Bandera.; funding acquisition, J.P. Bandera, A. Iglesias and R. Viciana. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been funded by the regional Project AT17-5509-UMA ’ROSI’ (Plan Andaluz de Investigación, Desarrollo e Innovación - PAIDI 2020, Junta de Andalucía) and UMA18-FEDERJA-074 ’ITERA’ (Programa Operativo FEDER Andalucía 2014-2020. Convocatoria 2018), the National Research Projects CSO2017-86747-R, RTI2018-099522-A-C44, TED2021-131739B-C21 and PDC2022-133597-C42 and the grant for the requalification of the Spanish University System (2021-23) by the Ministry of Science, Innovation and Universities and UC3M.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Provincial Ethical Committee of the Andalusian Public Healthcare System (Comité de Ética de la Investigación Provincial de Málaga, session held on 24/09/2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data generated or analysed during this study are included in this published article. The data sources (use case recordings, interviews, etc.) employed to generate these data are not publicly available because they contain sensitive personal information.

Acknowledgments

The authors would like to thank the people who were involved in the evaluation of the system, at UC3M and Vitalia Teatinos retirement home. In particular, we would like to thank Ana for her willingness, enthusiasm and help in making this project a reality. We would also like to thank Dr Kyriaki Papageorgiou, who has enriched this project by being part of it. Finally, we would like to thank Mario Siles and José Javier Bosh from the Universidad Carlos III de Madrid for their ideas on how to improve the robot display interface.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AAL | Ambient Assisted Living |
| DSR | Deep State Representation |
| HCI | Human-Computer Interaction |
| HRI | Human-Robot Interaction |
| ICT | Information and Communication Technologies |
| ROS | Robot Operating System |
| SAR | Socially Assistive Robot |

Appendix A HRI User’s Evaluation. Participant’s Characteristics

The characteristics of the users enrolled in the evaluation of the robot interface are detailed in Tables A1 and A2. The participants self-reported their abilities regarding vision, hearing, etc. using Likert scales.

Table A1.

Participants’ characteristics (1).

| Characteristics | Categories | Percentages |
| --- | --- | --- |
| Age | 20-30 | 60% |
| | 40-50 | 10% |
| | 50-60 | 20% |
| | 60-70 | 10% |
| Gender | Male | 60% |
| | Female | 40% |
| Nationality | Spanish | 90% |
| | Iranian | 10% |
| Type of disabilities (if any) | Hearing disabilities | (High) 10%, (Moderate) 10%, (Low) 30%, and (None) 50% |
| | Hearing aids | None 100% |
| | Visual disabilities | (High) 20%, (Moderate) 40%, (Low) 20%, and (None) 20% |
| | Visual aids | Glasses 70% |
| | Motor disabilities | (Moderate) 10%, (Low) 20%, and (None) 70% |
| | Motor aids | None 100% |
| | Reading agility | (Moderate) 20%, (Low) 30%, and (None) 50% |

Table A2.

Participants’ characteristics (2).

| Characteristics | Categories | Percentages |
| --- | --- | --- |
| Experience in using electronic devices | Using mobile phones | (Frequently) 30%, (Moderate use) 40%, (Low use) 20%, and (None) 10% |
| | Other uses of the phone beside calls | (Messaging, calls, social networks, and surfing the internet) 80%, and (games and shopping) 20% |
| | Using computers or tablets | (Frequently/High use) 30%, (Moderate use) 50%, (Low use) 10%, and (None) 10% |
| | Using computer or tablet for | (For work and study) 60% and (for work, study, and entertainment) 40% |
| Experience in interaction with robots | I have ever interacted with a robot | (None) 30%, (rarely) 30%, (low use) 20%, (moderate use) 10%, and (frequently/high use) 10% |
| | Type of used robot and what for | (Intelligent virtual assistant) 30%, (study and research) 30%, (drones) 10% |
| | Opinions about interacting with robots and the preferred use cases of robots | (I don’t like to interact with robots) 20%, (welfare) 10%, (work and home) 40%, (personal assistive) 10%, (entertainment) 10%, (in all aspects of life without replacing individuals) 10% |

Appendix B HRI User’s Evaluation. Questionnaire Results

This appendix details the questions answered by the users, together with the mean and standard deviation of their responses:

- Usability Questionnaire in Table A3
- Accessibility (perception) Questionnaire in Table A4
- Accessibility (understandable) Questionnaire in Table A5
- Accessibility (operating) Questionnaire in Table A6
- Satisfaction Questionnaire in Table A7
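As an illustration of how a per-question mean and standard deviation can be obtained from Likert responses, the following minimal sketch may be useful. It rests on assumptions that are not stated in the paper: a 5-point scale, the sample (n − 1) standard deviation, and a hypothetical list of responses. The function name `summarize_likert` is likewise illustrative and is not part of the evaluation software.

```python
from statistics import mean, stdev


def summarize_likert(responses, low=1, high=5):
    """Return (mean, sample standard deviation) for one questionnaire item.

    Assumptions (not taken from the paper): answers lie on a low..high
    Likert scale and the sample (n - 1) standard deviation is reported.
    """
    if len(responses) < 2:
        raise ValueError("at least two responses are needed for a sample SD")
    if any(r < low or r > high for r in responses):
        raise ValueError(f"responses must lie between {low} and {high}")
    # Round to one and two decimals, the precision used in the tables.
    return round(mean(responses), 1), round(stdev(responses), 2)


# Hypothetical answers from ten participants to a single question.
hypothetical_item = [5, 5, 5, 5, 5, 5, 5, 5, 4, 4]
item_mean, item_sd = summarize_likert(hypothetical_item)
print(f"mean = {item_mean}, SD = {item_sd}")
```

With the hypothetical responses above, the script prints a mean of 4.8 and a standard deviation of 0.42. Using the population standard deviation (`statistics.pstdev`) instead would give slightly smaller values for the same answers.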

Table A3.

Questions dedicated to evaluate usability aspect.

| # | Factors | Questions | Mean | Standard deviation (SD) |
| --- | --- | --- | --- | --- |
| 1 | Intuitive interaction | I find the robotic application intuitive. | 4.2 | 0.63 |
| 2 | Intuitive interaction | The displayed information on the screen is easy to understand. | 4.2 | 0.91 |
| 3 | Effectiveness | I have been able to perform tasks easily. | 4.3 | 0.67 |
| 4 | Effectiveness | I have not needed any help during the completion of the tasks. | 4.4 | 0.52 |
| 5 | Interfaces appearance | Interfaces appearance helped me to clearly distinguish the different available functions. | 4.3 | 1.06 |
| 6 | Robustness | I have found errors in the interfaces, and they are... | * | * |

Table A4.

Questions dedicated to evaluate accessibility-perception factor.

| # | Factors | Questions | Mean | Standard deviation (SD) |
| --- | --- | --- | --- | --- |
| 7 | Perception | The robot voice was clear to me. | 3.3 | 0.82 |
| 8 | | I was able to read the displayed subtitles on the robot screen at all times. | 4.6 | 0.70 |
| 9 | | It was easy to perceive the displayed messages and subtitles at the same time and along with the robot voice. | 4.2 | 0.79 |
| 10 | | The colors chosen for the interfaces made it easy to read the information. | 4.8 | 0.42 |
| 11 | | The used font size was appropriate for reading at a one-meter distance. | 4.2 | 1.03 |

Table A5.

Questions dedicated to evaluate accessibility-understanding factor.

| # | Factors | Questions | Mean | Standard deviation (SD) |
| --- | --- | --- | --- | --- |
| 12 | Understanding | I was able to understand what the robot was saying at all times. | 4.7 | 0.48 |
| 13 | | I have clearly understood that the purpose of the main screen was to click on each button to access the calendar, birthdays, weather, and activities interfaces respectively. | 4.9 | 0.32 |
| 14 | | I have clearly understood that the purpose of the calendar interfaces was to know the current day and time. And to click on any day to check the scheduled activities for that day. | 4.6 | 0.52 |
| 15 | | I have clearly understood that the purpose of the birthday interface was to show all of today’s birthdays and those for the next two days. | 4.7 | 0.48 |
| 16 | | I have clearly understood that the purpose of the weather interface was to know the weather forecast for today and the next few days. | 5 | 0.00 |
| 17 | | I have clearly understood that the purpose of the activities interface was to know the schedule of activities for today. | 4.8 | 0.42 |
| 18 | | I have found the order of the application windows was logical. | 4.4 | 0.52 |

Table A6.

Questions dedicated to evaluate accessibility-operating factor.

| # | Factors | Questions | Mean | Standard deviation (SD) |
| --- | --- | --- | --- | --- |
| 19 | Operating | At all times, I have been able to tap the robot screen to navigate through the application windows. | 4.9 | 0.33 |
| 20 | | The application has enabled me to control the volume of the robot voice, so I can hear it perfectly. | 4.3 | 0.95 |
| 21 | | At any moment, I have known what is the running function of the application, and how to return to the main interface. | 4.5 | 0.71 |

Table A7.

Questions dedicated to evaluate user acceptance-satisfaction factor.

| # | Factors | Questions | Mean | Standard deviation (SD) |
| --- | --- | --- | --- | --- |
| 22 | Satisfaction | I liked how the robot told me what the day it was, and what activities were on the calendar for that day. I’d also like it to do so in the future. | 4.6 | 0.70 |
| 23 | | I liked how the robot told me the birthdays of today and the next few days. I’d also like it to do so in the future. | 4.4 | 0.84 |
| 24 | | I liked how the robot told me the forecast for today and the next few days. I’d also like it to do so in the future. | 4.6 | 0.70 |
| 25 | | I liked how the robot told me the scheduled activities of the day. I’d like it to do so in the future. | 4.5 | 0.71 |
| 26 | | In the future, I’d like the robot to be more complete and to include new tasks. | 4.2 | 0.92 |

References

- Proyección de población de Andalucía por ámbitos subregionales 2009-2035, 2012.

- Feil-Seifer, D.; Mataric, M. Defining Socially Assistive Robotics. In Proceedings of the 2005 IEEE 9th Int. Conf. on Rehabilitation Robotics, July 2005, pp. 465–468.

- Li, Y.; Liang, N.; Effati, M.; Nejat, G. Dances with Social Robots: A Pilot Study at Long-Term Care. Robotics 2022, 11, 96.

- Abdi, J.; et al. Scoping review on the use of socially assistive robot technology in elderly care. BMJ Open 2018, 8.

- Anghel, I.; Cioara, T.; Moldovan, D.; Antal, M.; Pop, C.D.; Salomie, I.; Pop, C.B.; Chifu, V.R. Smart Environments and Social Robots for Age-Friendly Integrated Care Services. International Journal of Environmental Research and Public Health 2020, 17.

- Seibt, J.; Damholdt, M.F.; Vestergaard, C. Integrative social robotics, value-driven design, and transdisciplinarity. Interaction Studies 2020, 21, 111–144.

- Iglesias, A.; Viciana, R.; Pérez-Lorenzo, J.; Lan Hing Ting, K.; Tudela, A.; Marfil, R.; Dueñas, A.; Bandera, J. Towards long term acceptance of Socially Assistive Robots in retirement houses: use case definition. In Proceedings of the 2020 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC); 2020; pp. 134–139.

- Booth, K.E.; Mohamed, S.C.; Rajaratnam, S.; Nejat, G.; Beck, J.C. Robots in retirement homes: Person search and task planning for a group of residents by a team of assistive robots. IEEE Intelligent Systems 2017, 32, 14–21.

- Kriegel, J.; et al. Socially Assistive Robots (SAR) in In-Patient Care for the Elderly. Studies in Health Technology and Informatics 2019, 260, 178–185.

- Kachouie, R.; et al. Socially Assistive Robots in Elderly Care: A Mixed-Method Systematic Literature Review. International Journal of Human-Computer Interaction 2014, 30, 369–393.

- Fan, J.; Bian, D.; Zheng, Z.; Beuscher, L.; Newhouse, P.; Mion, L.; Sarkar, N. A Robotic Coach Architecture for Elder Care (ROCARE) Based on Multi-user Engagement Models. IEEE Transactions on Neural Systems and Rehabilitation Engineering 2016, PP, 1–1.

- Breazeal, C. Affective Interaction between Humans and Robots. In Proceedings of the Advances in Artificial Life; Kelemen, J.; Sosík, P., Eds., Berlin, Heidelberg, 2001; pp. 582–591.

- Obrenovic, Z.; Abascal, J.; Starcevic, D. Universal accessibility as a multimodal design issue. Commun. ACM 2007, 50, 83–88.

- Courbet, L.; et al. Preliminary Evaluation of a Digital Diary for Elder People in Nursing Homes; 2017; pp. 178–194.

- Keizer, R.A.C.M.O.; et al. Using socially assistive robots for monitoring and preventing frailty among older adults: a study on usability and user experience challenges. Health and Technology 2019, 9, 595–605.

- Astorga, M.; Cruz-Sandoval, D.; Favela, J. A Social Robot to Assist in Addressing Disruptive Eating Behaviors by People with Dementia. Robotics 2023, 12, 29.

- Winkle, K.; et al. Mutual shaping in the design of socially assistive robots: A case study on social robots for therapy. International Journal of Social Robotics 2019, pp. 1–20.