1. Introduction

Induction motors are widely preferred in railway applications due to their mechanical robustness, high overload capacity, and affordability [1]. However, maintaining an optimal temperature in traction motors is crucial for maximizing their efficiency and ensuring safe and reliable operation of railway propulsion systems. Traction motors operate under fluctuating load demands and ambient conditions, which can cause components such as the windings to overheat and lead to motor failure. Real-time temperature monitoring is therefore necessary. Conventionally, temperatures are estimated accurately using detailed physics-based thermal models. However, the computational demand of such techniques limits their adaptability to real-world conditions, so the thermal models are typically calibrated beforehand in a controlled experimental environment. The introduction of digital technologies such as smart sensors and the Internet of Things (IoT) has transformed how thermal model parameters are calibrated. These technologies enable a shift from conventional, lab-based calibration methods to real-time analysis of operational data. Continuous monitoring with IoT and smart sensors enables early identification of thermal issues, which reduces the risk of system failure and improves predictive maintenance and operational efficiency.

In recent years, the surge of interest in machine learning (ML) techniques has been considered promising tools for automating monitoring and control in induction motor drivers. These data-driven models [

2], particularly employing neural networks, offer promising results in temperature prediction and parameter estimation. A machine learning model can be trained to empirically estimate temperature using data collected from test benches [

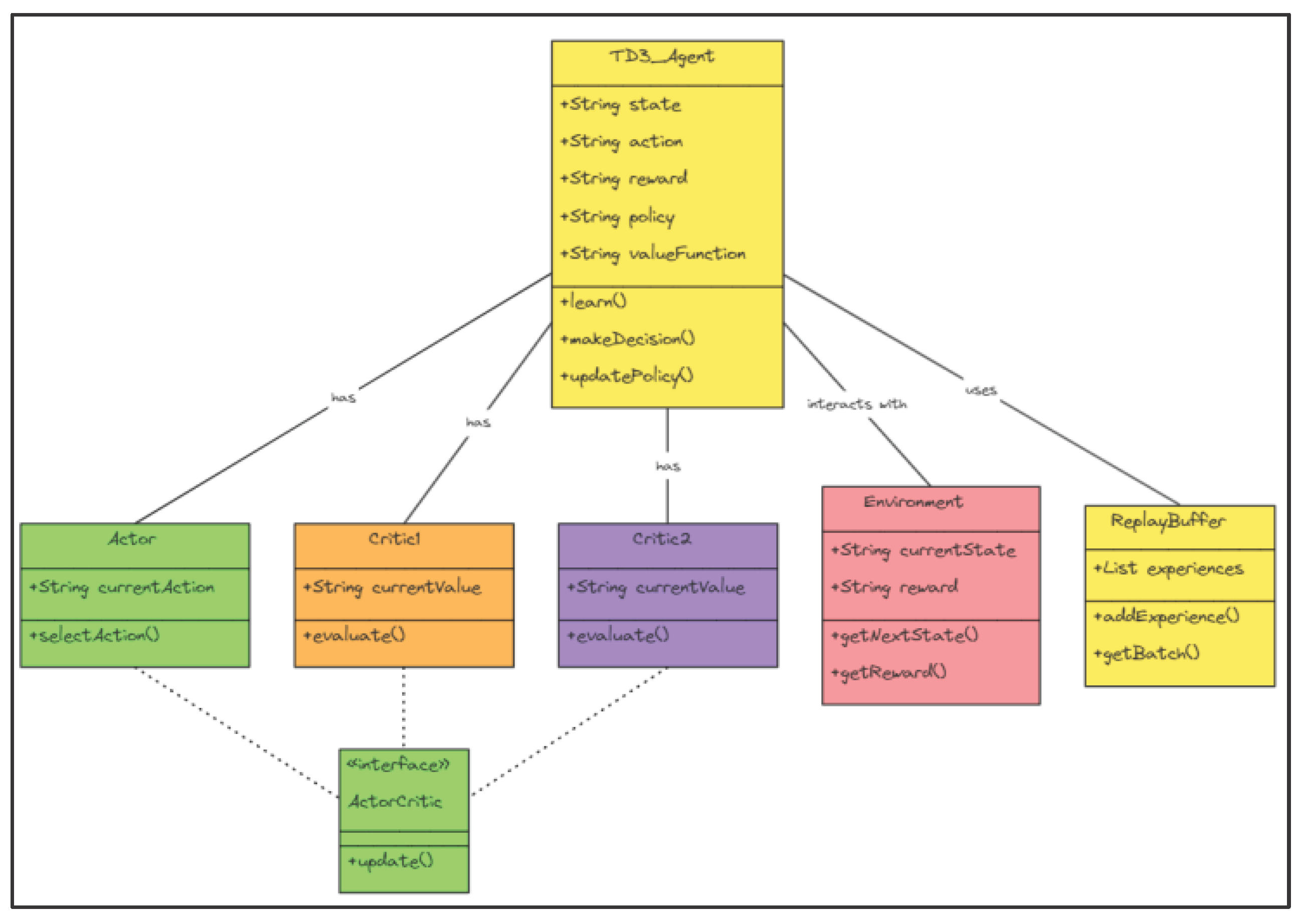

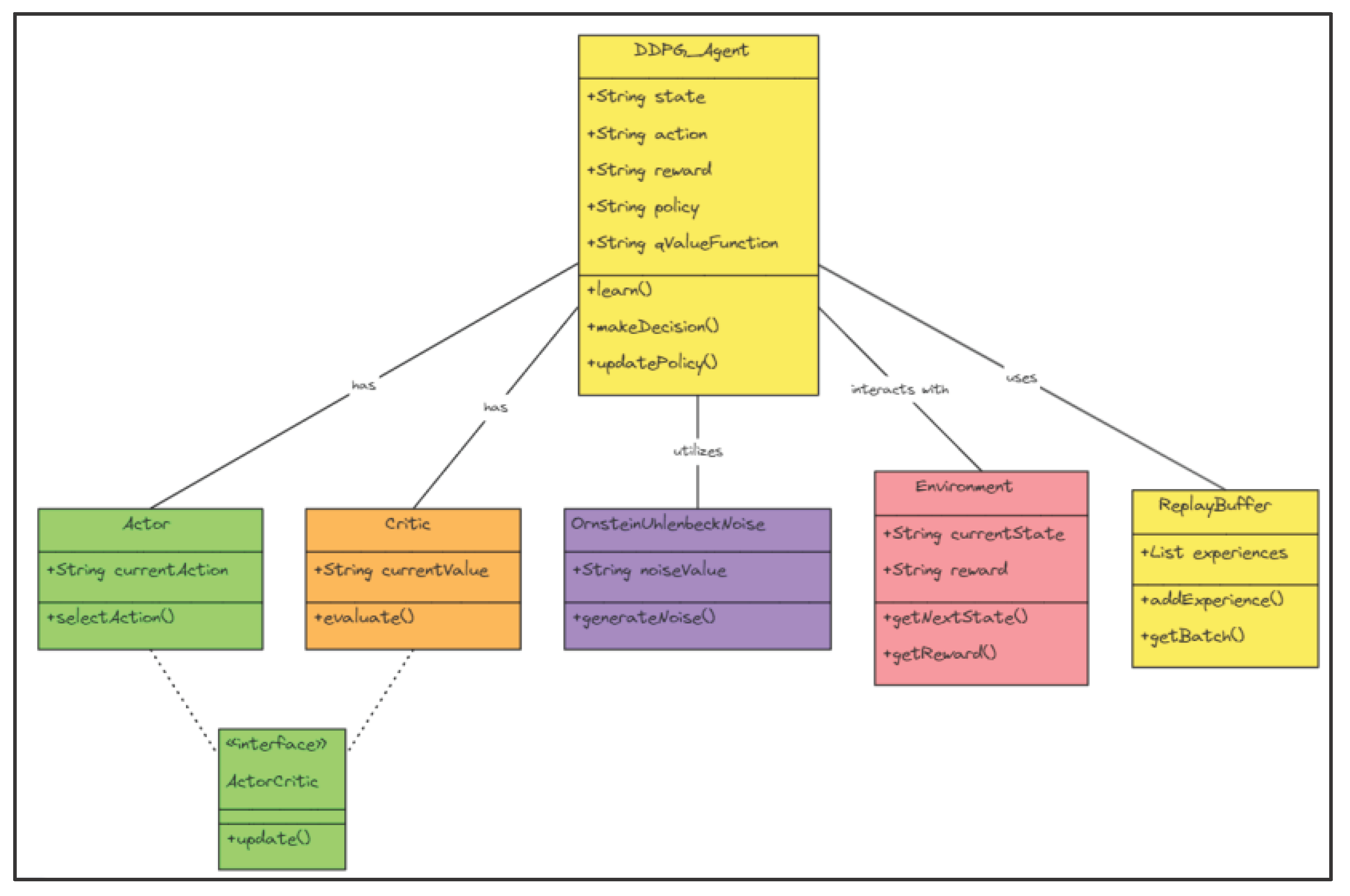

2]. Moreover, supervised ML models have been explored for real-time estimation of parameters such as rotor resistance in in-duction motor control systems. Recurrent neural networks (RNNs) and convolutional neural networks (CNNs) are widely used for sequence learning tasks and can manage high-dynamic nature scenarios. Additionally, reinforcement learning (RL) has emerged as another promising data-driven approach for electric motor drive control [

3]. RL techniques, which learn through trial and error without the need for supervised data labeling, rely on a reward function to guide the learning process. This allows for continuous improvement of control policies based on feedback, showcasing the evolving landscape of ML applications in motor drive monitoring and control.

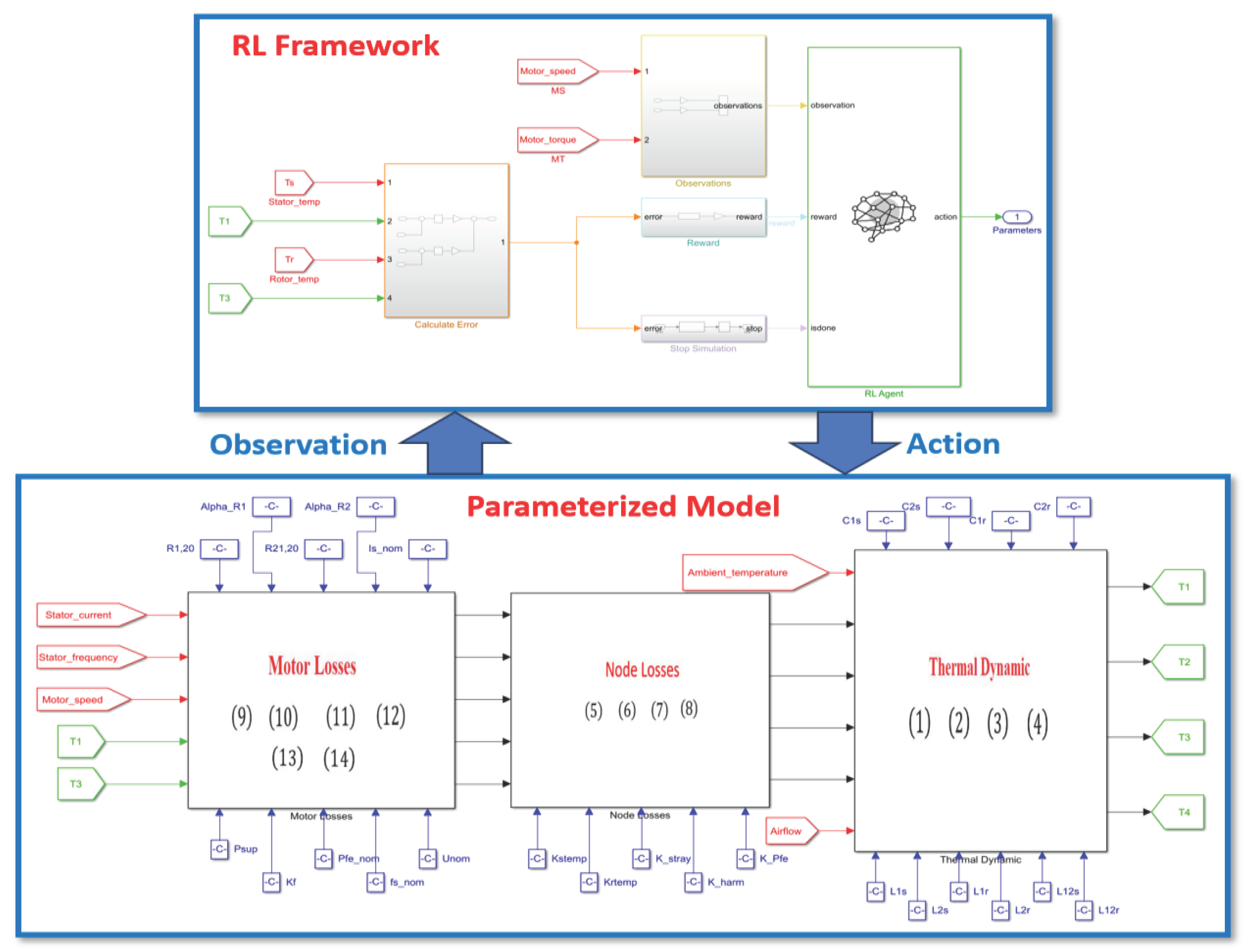

This work contributes a data-driven, reinforcement learning-based model for parameter estimation and accurate prediction with thermal models, using measured and tool-generated driving-cycle data. The choice of reinforcement learning agent depends critically on the pattern of the driving-cycle data and on the specific problem to be solved. This approach not only challenges traditional calibration methods but also contributes to the development of more adaptive and efficient thermal management strategies for railway propulsion systems.

The structure of this paper is outlined as follows: A background and related work is presented in

Section 2.

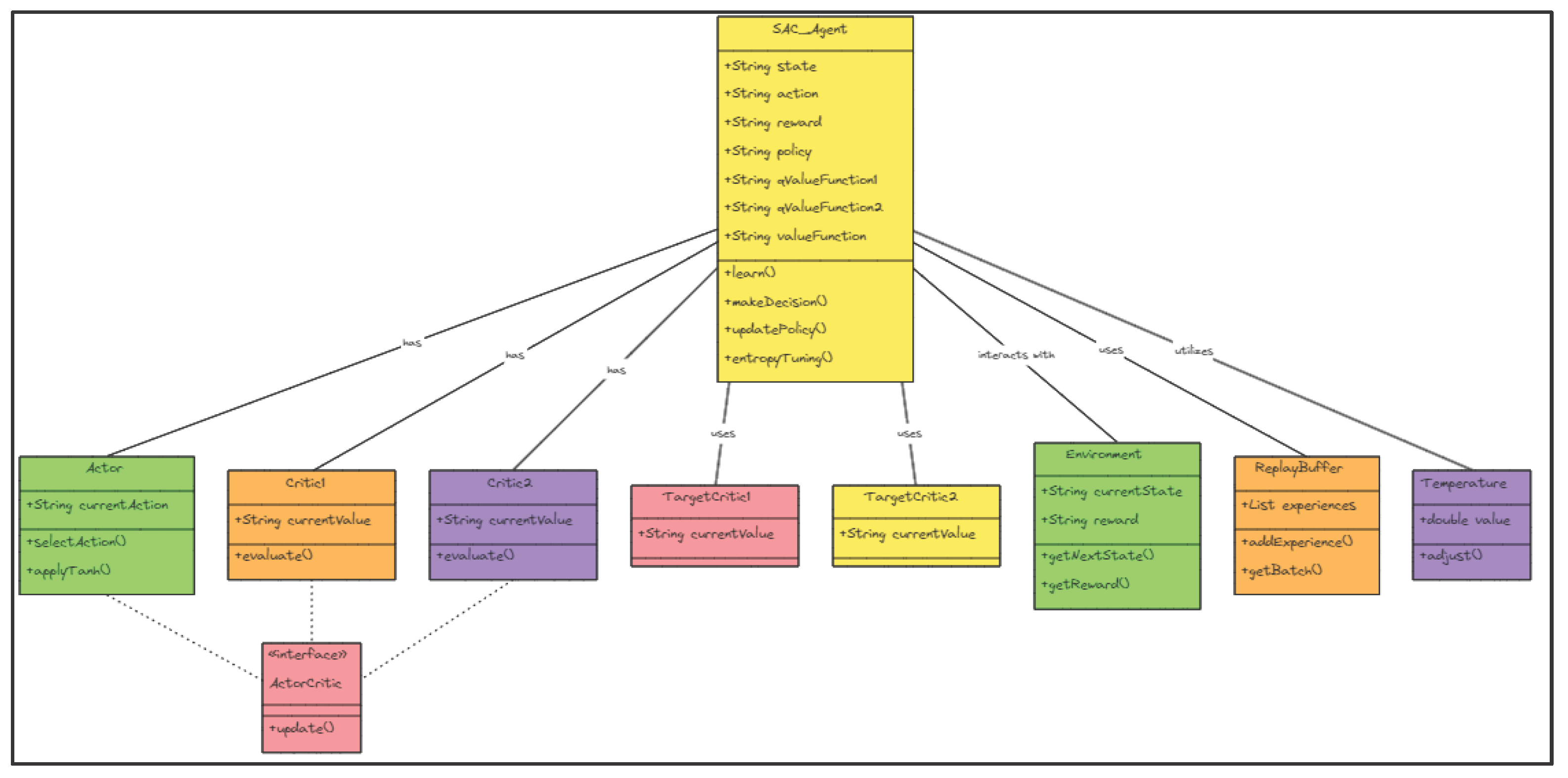

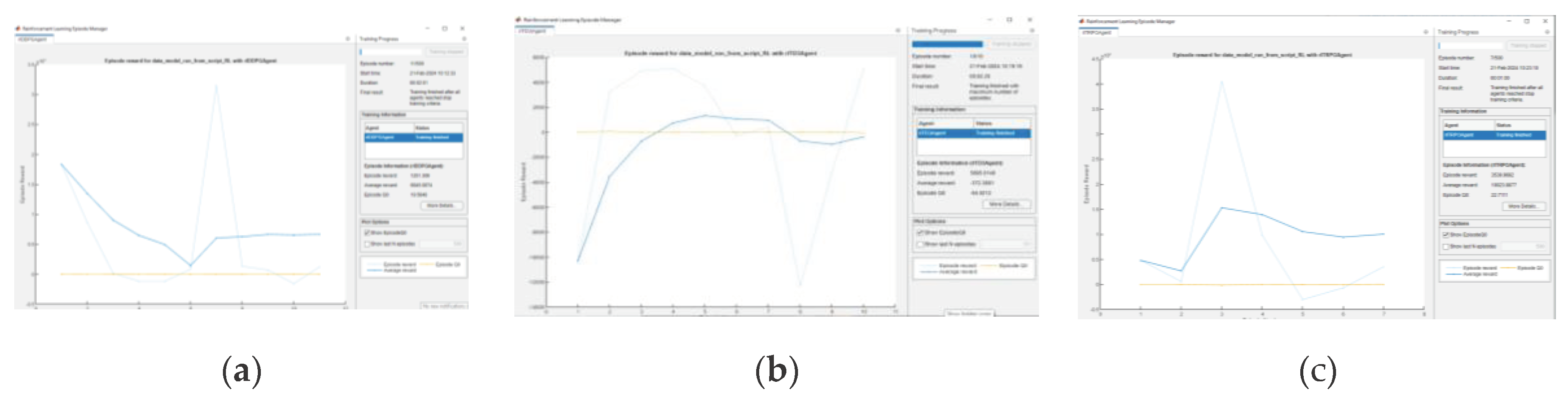

Section 3 introduces the multiple agents reinforcement learning (RL) framework developed for the optimization of the model. Details regarding the dataset and the training methodology are also discussed in

Section 3.

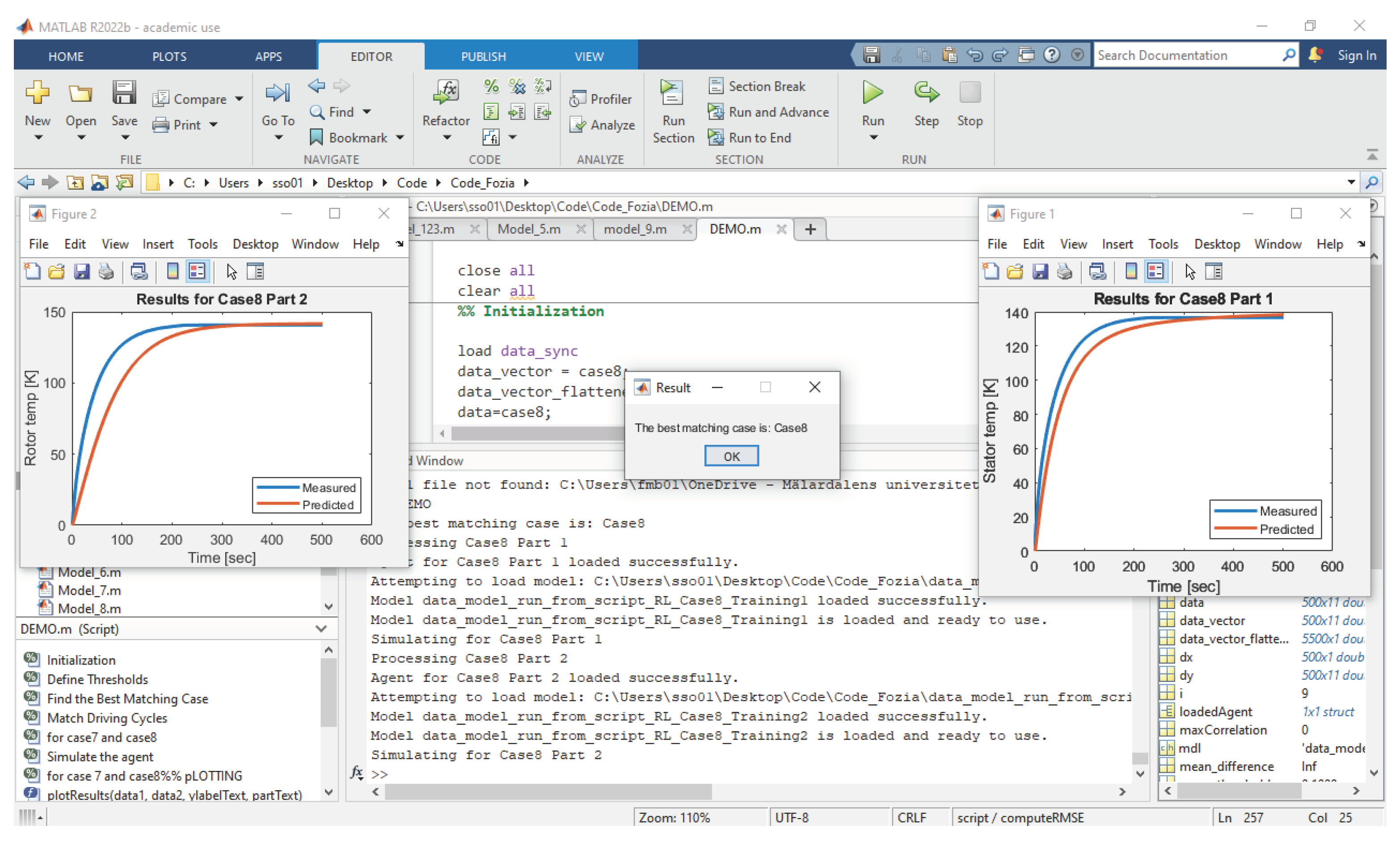

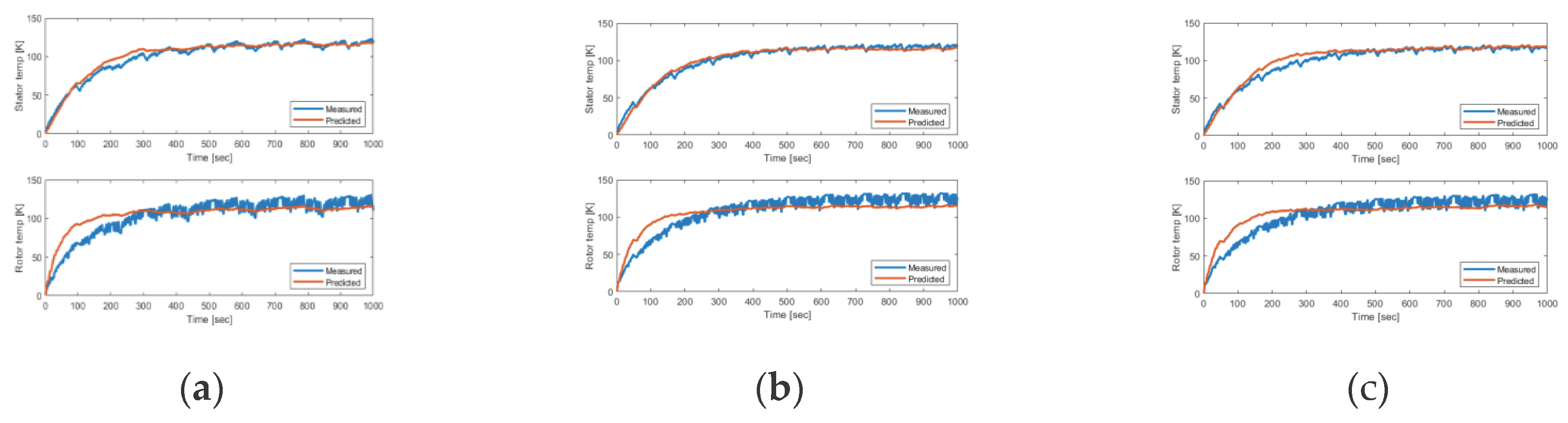

Section 4 presents and analyzes the outcomes of the thermal model. Finally,

Section 5 offers concluding thoughts and directions for future research.

2. Background and Related Work

Traction drive systems are subject to dynamically changing operating conditions, which makes run-time temperature tracking imperative to prevent thermal overloading. Maximizing motor utilization in terms of torque and power output can lead to high current loads and hence higher heat loads in the windings. Such thermal overloading increases the likelihood of motor failure if the temperature exceeds the rated thermal capability of the insulation. Induction motors are the most widely used motors in railway propulsion applications to date because of their high efficiency, reliability, mechanical robustness, and low cost [4]. Their performance varies nonlinearly with temperature, frequency, saturation, and operating point, which makes temperature monitoring essential: the temperature information is fed back to the controller so that it can compensate electrical signals such as voltage and current.

There are several established direct and indirect methods for estimating the temperature of different motor parts. Direct methods, such as installing contact-based sensors in the stator and rotor, are the simplest means of measurement. For rotating parts, data transmission requires special arrangements, such as slip rings or telemetry. In either case, installing sensors requires integration effort and additional cost, and it adds complexity because the sensors are inaccessible for replacement in case of failure or detuning. Hence, model-based estimation techniques have been the focus of research over the past decade [5,6]. In these techniques, temperatures are estimated from measurements of temperature-dependent electrical parameters, either offline or online. The dynamic behavior of the model must be accounted for accurately to avoid estimation errors. These approaches are also invasive and can disturb normal operation [7].
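As a minimal illustration of this indirect principle, the sketch below assumes that the stator winding resistance follows the linear copper model R(T) = R_ref·(1 + α·(T − T_ref)), with an assumed coefficient α ≈ 0.00393 1/K at 20 °C, and inverts it to obtain an average winding temperature from a resistance measurement. The function names and numerical values are hypothetical.

```python
# Minimal sketch: indirect winding-temperature estimation from a resistance
# measurement. Assumes a linear copper resistance model; all numbers are
# hypothetical illustration values, not data from this study.

ALPHA_CU = 0.00393  # assumed temperature coefficient of copper at 20 degC, 1/K


def resistance_at(temp_c: float, r_ref: float, t_ref: float = 20.0) -> float:
    """Winding resistance at temp_c under R = R_ref * (1 + alpha * (T - T_ref))."""
    return r_ref * (1.0 + ALPHA_CU * (temp_c - t_ref))


def winding_temperature(r_meas: float, r_ref: float, t_ref: float = 20.0) -> float:
    """Invert the linear model: T = T_ref + (R_meas / R_ref - 1) / alpha."""
    return t_ref + (r_meas / r_ref - 1.0) / ALPHA_CU


if __name__ == "__main__":
    r_ref = 0.050  # hypothetical phase resistance at 20 degC, ohm
    r_meas = resistance_at(120.0, r_ref)  # resistance a 120 degC winding would show
    print(f"R = {r_meas:.5f} ohm -> T = {winding_temperature(r_meas, r_ref):.1f} degC")
```

The inversion also shows why measurement accuracy matters: a resistance error equal to 1 % of R_ref shifts the estimate by 0.01/α, or roughly 2.5 K.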

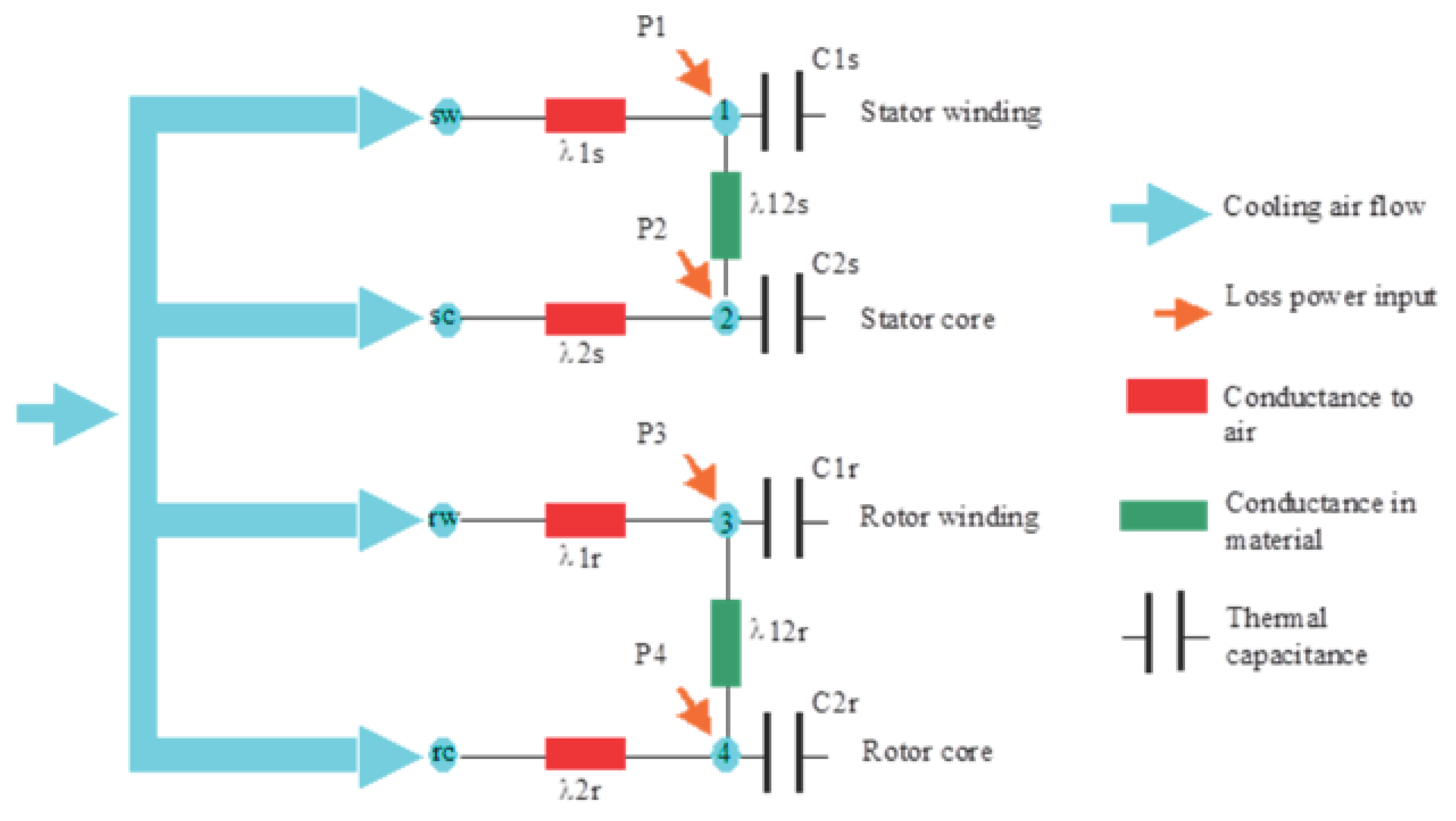

Thermal analysis using computational fluid dynamics (CFD) or finite element analysis (FEA) of the heat equation can achieve good accuracy in temperature estimation. However, both approaches demand rigorous modeling effort and high computational resources, which rules them out for real-time monitoring from the outset. An accurate yet computationally light alternative is the lumped-parameter thermal network (LPTN) model. An LPTN model condenses the heat transfer process into a thermal equivalent circuit based on heat transfer theory. In this model, the heat conduction and convection paths between nodes are represented as thermal resistances, and heat storage is represented as thermal capacitances. The electromechanical power conversion losses, such as winding losses, core losses, and mechanical and windage losses, are provided to the LPTN model as heat-source inputs from which the node temperatures are computed.
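To make the equivalent-circuit description concrete, the sketch below integrates a hypothetical two-node LPTN: a winding node coupled through a thermal resistance to a core/frame node, which in turn is coupled to the ambient air. Each node obeys the heat balance C·dT/dt = P_loss − Σ(T − T_neighbor)/R. The parameter values, node choice, and class name are assumptions for illustration, not a model from this study.

```python
# Hypothetical two-node LPTN sketch; parameter values are assumed, not measured.
from dataclasses import dataclass


@dataclass
class TwoNodeLPTN:
    """Winding node "w" and core/frame node "s", with the ambient air as reference."""
    c_w: float = 5.0e3   # winding thermal capacitance, J/K (assumed)
    c_s: float = 2.0e4   # core/frame thermal capacitance, J/K (assumed)
    r_ws: float = 0.02   # winding-to-core thermal resistance, K/W (assumed)
    r_sa: float = 0.01   # core-to-ambient thermal resistance, K/W (assumed)

    def derivatives(self, t_w, t_s, p_w, p_s, t_amb):
        """Node heat balances C*dT/dt = loss input - net heat outflow; returns (dT_w/dt, dT_s/dt)."""
        q_ws = (t_w - t_s) / self.r_ws    # heat flow winding -> core, W
        q_sa = (t_s - t_amb) / self.r_sa  # heat flow core -> ambient, W
        return (p_w - q_ws) / self.c_w, (p_s + q_ws - q_sa) / self.c_s

    def simulate(self, p_w, p_s, t_amb, t0, dt, n_steps):
        """Forward-Euler integration with constant losses; returns final (T_w, T_s)."""
        t_w = t_s = t0
        for _ in range(n_steps):
            d_w, d_s = self.derivatives(t_w, t_s, p_w, p_s, t_amb)
            t_w, t_s = t_w + dt * d_w, t_s + dt * d_s
        return t_w, t_s


if __name__ == "__main__":
    model = TwoNodeLPTN()
    # 2 kW winding loss, 1 kW core loss, 25 degC ambient, 5000 s simulated with dt = 1 s
    t_w, t_s = model.simulate(p_w=2000.0, p_s=1000.0, t_amb=25.0, t0=25.0, dt=1.0, n_steps=5000)
    print(f"winding {t_w:.1f} degC, core {t_s:.1f} degC")
```

With constant losses, the network settles to the analytical steady state T_s = T_amb + (P_w + P_s)·R_sa = 55 °C and T_w = T_s + P_w·R_ws = 95 °C. Forward Euler is used only for brevity; the time step must stay well below the smallest thermal time constant for the integration to remain stable.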

The complexity of an LPTN model depends on the number of nodes chosen for the network. Generally, white-box LPTNs, based purely on analytical equations, are more accurate but involve many thermal parameters that can be difficult to calculate in practice [8]. A reduced-order structure with fewer nodes is computationally lightweight and hence more suitable for online deployment. Because this approach represents only the dominant heat transfer paths, expert domain knowledge is essential both for choosing the structure and for setting the parameter values. Several reduced-order models, typically categorized as light-grey (5-15 nodes) or dark-grey (2-5 nodes) LPTN models, have been developed to estimate temperatures. Reduced-order LPTN models nevertheless require estimates of many parameters that are not well known or cannot be calculated from analytical equations [9,10]. Identifying these parameters through empirical tuning is therefore a crucial step in these studies [11,12,13]. The parameter identification procedure can be stated as an optimization problem in which the parameter values are varied until the LPTN model output matches the experimental measurements. Both gradient-based and gradient-free optimization methods have been used for this purpose. In [11], the parameters are identified using deterministic inverse methods, such as the Gauss-Newton and Levenberg-Marquardt methods, and stochastic inverse methods, such as genetic algorithms.
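The identification problem can be illustrated on a deliberately small case. The sketch below assumes a one-node LPTN heated by a constant loss P, whose step response T(t) = T_amb + k·(1 − e^(−t/τ)) with k = P·R and τ = R·C is fitted to synthetic heating-test data by a Gauss-Newton method with step halving. The data, starting values, and names are hypothetical; the cited works fit multi-node LPTNs to measured data.

```python
# Damped Gauss-Newton identification of a hypothetical one-node LPTN.
import math

P_LOSS = 2000.0  # constant loss input during the heating test, W (assumed)
T_AMB = 25.0     # ambient temperature, degC (assumed)


def step_response(t, k, tau):
    """One-node LPTN under constant loss: T(t) = T_amb + k * (1 - exp(-t / tau)), k = P*R."""
    return T_AMB + k * (1.0 - math.exp(-t / tau))


def sse(times, temps, k, tau):
    """Sum of squared errors between measured and modeled temperatures."""
    return sum((y - step_response(t, k, tau)) ** 2 for t, y in zip(times, temps))


def gauss_newton(times, temps, k, tau, max_iter=200):
    """Fit (k, tau) by Gauss-Newton with step halving; returns (R, C, k, tau)."""
    for _ in range(max_iter):
        # Residuals and analytical Jacobian columns df/dk and df/dtau
        res = [y - step_response(t, k, tau) for t, y in zip(times, temps)]
        jk = [1.0 - math.exp(-t / tau) for t in times]
        jt = [-k * t * math.exp(-t / tau) / tau**2 for t in times]
        # Normal equations (J^T J) delta = J^T r, solved directly for two unknowns
        a11 = sum(x * x for x in jk)
        a12 = sum(x * z for x, z in zip(jk, jt))
        a22 = sum(z * z for z in jt)
        g1 = sum(x * e for x, e in zip(jk, res))
        g2 = sum(z * e for z, e in zip(jt, res))
        det = a11 * a22 - a12 * a12
        dk = (g1 * a22 - g2 * a12) / det
        dtau = (a11 * g2 - a12 * g1) / det
        # Step halving keeps tau positive and the cost strictly decreasing
        cost, step = sse(times, temps, k, tau), 1.0
        while step > 1e-8:
            k_new, tau_new = k + step * dk, tau + step * dtau
            if tau_new > 0.0 and sse(times, temps, k_new, tau_new) < cost:
                break
            step *= 0.5
        else:
            break  # no cost-reducing step left: converged to numerical precision
        k, tau = k_new, tau_new
        if abs(step * dk) < 1e-10 and abs(step * dtau) < 1e-8:
            break
    r_th = k / P_LOSS
    return r_th, tau / r_th, k, tau


if __name__ == "__main__":
    true_r, true_c = 0.02, 3.0e4                # K/W and J/K, used only to synthesize data
    times = [60.0 * i for i in range(1, 61)]    # one sample per minute for one hour
    temps = [step_response(t, P_LOSS * true_r, true_r * true_c) for t in times]
    r_th, c_th, _, _ = gauss_newton(times, temps, k=20.0, tau=300.0)
    print(f"R = {r_th:.4f} K/W, C = {c_th:.0f} J/K")
```

Fitting k and τ instead of R and C keeps the two Jacobian columns on closer scales, and R and C are recovered afterwards. Replacing the step-halving loop with an adaptive damping term added to JᵀJ would turn this into the Levenberg-Marquardt method.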

The thermal parameters of a traction motor are not constant, owing to nonlinear thermal effects. The convective heat transfer coefficients in the air gap and in the end-cap region depend nonlinearly on the rotor speed and the ambient temperature. Similarly, in an air-cooled motor, heat transfer from the stator frame depends on the ambient temperature and the airflow rate. Sciascera et al. [9] employed an iterative parameter tuning procedure based on sequential quadratic programming (SQP) to obtain the uncertain thermal parameters of the LPTN. However, the computational cost of such tuning procedures is high because the parameters are time-variant. To improve computational efficiency, the dependence of the state matrix on the phase current was approximated by a polynomial.

Loss components that are input to the LPTN model, such as the winding losses, can be calculated from the measured current and the winding resistance. However, the winding resistance depends on temperature, which is itself a state variable of the thermal model. Furthermore, the core loss cannot be measured directly. A common approach to determining iron losses is to measure the total power losses and subtract the winding losses. Hence, any errors in the total and winding losses propagate directly into the iron loss values. To deal with these uncertainties, Gedlu et al. [14] used an extended iron loss model as input to a low-order LPTN model for temperature estimation. This spatially resolved loss model calculates the core losses individually for each node. In addition to the heat transfer coefficients, the uncertain parameters of the core loss equations are tuned within their feasible search space using particle swarm optimization (PSO) to minimize the estimation error relative to empirical measurements.
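The error propagation in this subtraction approach can be shown with a few lines of arithmetic. The sketch below assumes a three-phase winding loss 3·I²·R(T) with a linearly temperature-dependent phase resistance and infers the iron loss as the remainder of a measured total loss; the current, resistance, and total loss are hypothetical values.

```python
# Loss separation by subtraction: P_fe = P_total - P_cu, with P_cu = 3 * I^2 * R(T).
# Hypothetical numbers; shows how winding-loss errors end up in the iron-loss estimate.

ALPHA_CU = 0.00393  # assumed copper temperature coefficient at 20 degC, 1/K


def copper_loss(i_rms: float, r20: float, temp_c: float) -> float:
    """Three-phase winding loss with a linearly temperature-dependent phase resistance."""
    r_phase = r20 * (1.0 + ALPHA_CU * (temp_c - 20.0))
    return 3.0 * i_rms**2 * r_phase


def iron_loss_by_subtraction(p_total: float, p_cu: float) -> float:
    """Iron (core) loss inferred as the remainder of the measured total loss."""
    return p_total - p_cu


if __name__ == "__main__":
    i_rms, r20, p_total = 300.0, 0.03, 15000.0  # A, ohm, W (all assumed)
    p_fe_hot = iron_loss_by_subtraction(p_total, copper_loss(i_rms, r20, 120.0))
    p_fe_cold = iron_loss_by_subtraction(p_total, copper_loss(i_rms, r20, 20.0))
    print(f"iron loss with R at the actual 120 degC winding temperature: {p_fe_hot:.0f} W")
    print(f"iron loss if R is wrongly taken at 20 degC:                  {p_fe_cold:.0f} W")
```

In this example, evaluating the winding resistance at 20 °C instead of 120 °C raises the inferred iron loss from about 3.7 kW to 6.9 kW, because the iron loss is a small difference between two larger quantities.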

Xiao and Griffo [13] presented an online, measurement-informed thermal parameter estimation method based on a recursive Kalman filter. A pulse-width-modulation-based estimation method is used to measure the rotor temperature, and the temperatures of three nodes, namely the stator core, winding, and rotor, are predicted. The input losses for the LPTN model are derived with a model-based approach supported by finite element analysis. The identification problem is formulated as a state observer with eight states: three correspond to the node temperatures, and the remaining five represent the unknown thermal resistances of the LPTN. The nonlinearity of the model is handled by the continuously updated linearization of the extended Kalman filter (EKF).
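The state-augmentation idea behind such an observer can be shown on a much smaller problem. The sketch below assumes a single-node LPTN with one unknown thermal conductance G = 1/R, appends G to the state vector as a random-walk state, and runs an extended Kalman filter on noisy synthetic temperature measurements. All names, noise levels, and parameter values are hypothetical, and the model is far simpler than the three-node, eight-state observer of [13].

```python
# Minimal EKF sketch: joint estimation of one node temperature T and one unknown
# thermal conductance G = 1/R by state augmentation (hypothetical single-node model).
import random

DT, C_TH, T_AMB = 10.0, 3.0e4, 25.0  # time step (s), capacitance (J/K), ambient (degC); assumed


def f_step(temp, g, p_loss):
    """Discrete single-node LPTN: T[k+1] = T[k] + dt/C * (P[k] - G * (T[k] - T_amb))."""
    return temp + DT / C_TH * (p_loss - g * (temp - T_AMB))


def ekf_identify(meas, losses, g0, q=(1e-4, 1e-6), r_meas=0.01):
    """EKF on the augmented state x = [T, G]; returns the final estimate (T, G)."""
    temp, g = meas[0], g0
    p = [[1.0, 0.0], [0.0, 400.0]]  # initial covariance: G is highly uncertain
    for y, p_loss in zip(meas[1:], losses[:-1]):
        # Predict: Jacobian of the transition evaluated at the previous estimate
        f = [[1.0 - DT * g / C_TH, -DT * (temp - T_AMB) / C_TH], [0.0, 1.0]]
        temp = f_step(temp, g, p_loss)  # G is a random walk, so its prediction is unchanged
        fp = [[sum(f[i][m] * p[m][j] for m in range(2)) for j in range(2)] for i in range(2)]
        p = [[sum(fp[i][m] * f[j][m] for m in range(2)) + (q[i] if i == j else 0.0)
              for j in range(2)] for i in range(2)]
        # Update with the measured temperature (H = [1, 0])
        s = p[0][0] + r_meas
        k0, k1 = p[0][0] / s, p[1][0] / s
        innov = y - temp
        temp, g = temp + k0 * innov, g + k1 * innov
        p = [[(1.0 - k0) * p[0][0], (1.0 - k0) * p[0][1]],
             [p[1][0] - k1 * p[0][0], p[1][1] - k1 * p[0][1]]]
    return temp, g


def synthetic_run(g_true=50.0, n=3000, noise=0.1, seed=0):
    """Square-wave losses and noisy temperatures generated by the same model with a known G."""
    rng = random.Random(seed)
    losses = [3000.0 if (k * DT // 600) % 2 == 0 else 1000.0 for k in range(n)]
    temps = [T_AMB]
    for p_loss in losses[:-1]:
        temps.append(f_step(temps[-1], g_true, p_loss))
    return [t + rng.gauss(0.0, noise) for t in temps], losses


if __name__ == "__main__":
    meas, losses = synthetic_run()
    _, g_est = ekf_identify(meas, losses, g0=30.0)
    print(f"estimated G = {g_est:.1f} W/K (R = {1.0 / g_est:.4f} K/W), true G = 50 W/K")
```

The Jacobian entry linking G to the predicted temperature is proportional to T − T_amb, so the filter leaves G essentially unchanged during the first samples and only starts correcting it once the node heats up.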

As described by Wallscheid [7], parameter identification can follow either a local or a global approach. In [12], a three-node LPTN is parametrized using a global approach. A sequence of interdependent identification steps was followed, in which experimental data were used to determine the thermal parameters. The model uses measurement-based loss inputs derived from quantities available in the motor electrical control unit, such as motor speed and electric currents. The identification approach is built on the idea of mapping the linear time-varying parameters to a set of time-invariant models, each valid within a chosen operating environment. By adapting the relevant boundary conditions over several identification cycles, a consistent parameter set for the whole operating region could thus be obtained. Wallscheid [27] used PSO combined with SQP to identify the varying thermal parameters of a four-node LPTN model. The power losses were measured over the complete operating range while the node temperatures were held constant. To deal with the resulting uncertainty, the loss distribution among the nodes is treated as part of the parameter identification procedure.
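A basic global-best PSO is sketched below to show how a swarm search can identify LPTN parameters. It fits the thermal resistance and capacitance of a hypothetical one-node LPTN to synthetic heating-test data within a box of assumed bounds. It omits the SQP refinement stage and the loss-distribution parameters of the cited works, and the coefficients (inertia 0.7, acceleration 1.5) are common textbook choices rather than values from those studies.

```python
# Global-best particle swarm optimization fitting a hypothetical one-node LPTN.
import math
import random

P_LOSS, T_AMB = 2000.0, 25.0  # assumed heating-test conditions (W, degC)


def model(t, r_th, c_th):
    """Step response of a one-node LPTN with resistance r_th (K/W) and capacitance c_th (J/K)."""
    return T_AMB + P_LOSS * r_th * (1.0 - math.exp(-t / (r_th * c_th)))


def rmse(params, times, temps):
    """Root-mean-square error between the model and the measured temperatures."""
    r_th, c_th = params
    return math.sqrt(sum((y - model(t, r_th, c_th)) ** 2 for t, y in zip(times, temps)) / len(times))


def pso(cost, bounds, n_particles=30, n_iter=300, w=0.7, c1=1.5, c2=1.5, seed=1):
    """Minimize cost within box bounds; returns (best position, best cost)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    p_best = [p[:] for p in pos]
    p_cost = [cost(p) for p in pos]
    g_idx = min(range(n_particles), key=p_cost.__getitem__)
    g_best, g_cost = p_best[g_idx][:], p_cost[g_idx]
    for _ in range(n_iter):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                # Inertia + pull toward the personal best + pull toward the swarm best
                vel[i][d] = (w * vel[i][d]
                             + c1 * rng.random() * (p_best[i][d] - pos[i][d])
                             + c2 * rng.random() * (g_best[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # clamp to the search box
            c = cost(pos[i])
            if c < p_cost[i]:
                p_best[i], p_cost[i] = pos[i][:], c
                if c < g_cost:
                    g_best, g_cost = pos[i][:], c
    return g_best, g_cost


if __name__ == "__main__":
    times = [60.0 * i for i in range(1, 61)]
    temps = [model(t, 0.02, 3.0e4) for t in times]  # synthetic data from assumed true values
    best, err = pso(lambda x: rmse(x, times, temps), bounds=[(0.005, 0.1), (5.0e3, 1.0e5)])
    print(f"R = {best[0]:.4f} K/W, C = {best[1]:.0f} J/K, RMSE = {err:.3f} K")
```

In a hybrid PSO-SQP scheme, the best particle returned here would serve as the starting point of a local, gradient-based refinement.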

While the global approach captures all operating regions of the machine more robustly than the local approach, it can become problematic when the parameter space to be identified is large and highly nonlinear [7].

The growing interest in machine learning (ML) techniques over the past decade has made them potentially viable tools for automated monitoring and motor drive control. A pure ML model does not draw on expert knowledge from classical heat transfer theory and estimates the temperature empirically. In this setting, the model parameters are fitted solely to collected test-bench or observational data [2,15]. A widely used ML algorithm for temperature prediction is linear regression, which has low computational complexity [16,17]. However, because linear regression is a static, linear time-invariant mapping without memory of past inputs, it does not capture the thermal dynamics of the machine.
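To illustrate why a static regression struggles, the sketch below generates synthetic temperatures from an assumed first-order thermal lag driven by a square-wave loss profile, then fits ordinary least squares once on the instantaneous loss and once on an exponentially weighted moving average (EWMA) of the loss. The EWMA feature, the time constant, and all values are hypothetical illustration choices; the EWMA is included only to show that the missing ingredient is memory of past inputs.

```python
# Static vs. memory-based linear regression on synthetic thermal data (hypothetical sketch).
import math


def ols_rmse(x, y):
    """Fit y = b0 + b1*x by ordinary least squares and return the RMSE of the fit."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sum((xi - mx) ** 2 for xi in x)
    b0 = my - b1 * mx
    return math.sqrt(sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y)) / n)


def ewma(x, alpha):
    """EWMA s_k = alpha * x_k + (1 - alpha) * s_(k-1), starting from s_(-1) = 0."""
    out, s = [], 0.0
    for xi in x:
        s = alpha * xi + (1.0 - alpha) * s
        out.append(s)
    return out


def synthetic_data(dt=10.0, tau=600.0, r_th=0.02, t_amb=25.0, n=600):
    """Square-wave losses driving an assumed first-order thermal lag (exact discretization)."""
    a = math.exp(-dt / tau)
    losses = [3000.0 if (k // 60) % 2 == 0 else 1000.0 for k in range(n)]
    temps, t = [], t_amb
    for p in losses:
        t = t_amb + a * (t - t_amb) + (1.0 - a) * r_th * p
        temps.append(t)
    return losses, temps, a


if __name__ == "__main__":
    losses, temps, a = synthetic_data()
    print(f"RMSE, instantaneous loss as feature: {ols_rmse(losses, temps):.2f} K")
    print(f"RMSE, EWMA of loss as feature:       {ols_rmse(ewma(losses, 1.0 - a), temps):.2e} K")
```

The EWMA fit is exact here only because its smoothing factor 1 − e^(−Δt/τ) was matched to the assumed time constant; with measured machine data, such time constants are not known in advance.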

For sequence learning tasks in highly dynamic environments, recurrent and convolutional neural networks represent the state of the art in classification and estimation performance. In the study by Kirchgässner et al. [16], deep recurrent and convolutional neural networks with residual connections are empirically evaluated for predicting the temperature profiles of the stator teeth, winding, and yoke. The idea is to parameterize the neural networks entirely from empirical data, without relying on domain expertise. Supervised ML models have also been investigated for online estimation of parameters such as the rotor resistance and mutual inductance in the control system of an induction motor [18]. In that work, a simple two-layer artificial neural network (ANN) is trained by minimizing the error between the rotor flux linkages obtained from an analytical induction motor (IM) voltage model and the output of the ANN. Feedforward and recurrent networks are used so that the ANN serves as a memory of the estimated parameters and computes the electrical parameters during transients.

While data-driven methods are effective at predicting temperature, their tuned parameters are not interpretable, and such models cannot reach the same estimation accuracy with as few model parameters as an LPTN model. As a further development, to reduce the need for expert-knowledge-based calibration and to account for uncertainties in the input power losses, Kirchgässner [19] proposed a deep-learning-based temperature model, the thermal neural network, which unifies consolidated knowledge in the form of heat-transfer-based LPTNs with data-driven nonlinear function approximation through supervised machine learning.

Reinforcement learning (RL) is another promising data-driven technique that has been explored for the control of electric machine drives [20,21]. RL methods learn by trial and error and do not require supervision of each data sample. Instead, a reward function provides reward signals throughout the learning process, so the control policy can be improved continuously based on measurement feedback. In this paper, a data-driven, reinforcement learning-based parametrization method is proposed to tune a thermal model of induction traction motors. In the following section, the parameterized model is developed, and the reinforcement learning framework is presented.
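The reward-driven, trial-and-error loop described above can be illustrated with the simplest form of RL, a single-state multi-armed bandit. In the sketch below, which is a hypothetical toy and not the framework proposed in this paper, each action selects a candidate thermal resistance for a one-node LPTN, the environment returns a noisy synthetic heating test, and the reward is the negative RMSE between prediction and measurement. An epsilon-greedy policy balances exploration and exploitation, and no labeled "correct" parameter is ever shown to the agent.

```python
# Epsilon-greedy bandit sketch: trial-and-error selection of a thermal parameter
# from reward signals alone. Hypothetical toy, not the RL framework of this paper.
import math
import random

P_LOSS, T_AMB, C_TH = 2000.0, 25.0, 3.0e4  # assumed heating-test conditions
TIMES = [60.0 * i for i in range(1, 61)]   # one sample per minute for one hour


def simulate(r_th):
    """One-node LPTN step response for a candidate thermal resistance r_th (K/W)."""
    return [T_AMB + P_LOSS * r_th * (1.0 - math.exp(-t / (r_th * C_TH))) for t in TIMES]


def run_bandit(candidates, episodes, eps=0.1, noise=0.5, true_r=0.02, seed=0):
    """Return (mean reward per action, pull count per action) after `episodes` trials."""
    rng = random.Random(seed)
    plant = simulate(true_r)           # stands in for the real motor (assumed)
    q = [0.0] * len(candidates)        # sample-average reward estimate per action
    n = [0] * len(candidates)          # number of times each action was tried
    for _ in range(episodes):
        if 0 in n:                     # try every action once first
            a = n.index(0)
        elif rng.random() < eps:       # explore
            a = rng.randrange(len(candidates))
        else:                          # exploit the best estimate so far
            a = max(range(len(candidates)), key=q.__getitem__)
        measured = [x + rng.gauss(0.0, noise) for x in plant]  # new noisy test episode
        pred = simulate(candidates[a])
        reward = -math.sqrt(sum((p - m) ** 2 for p, m in zip(pred, measured)) / len(TIMES))
        n[a] += 1
        q[a] += (reward - q[a]) / n[a]  # incremental mean update
    return q, n


if __name__ == "__main__":
    cands = [0.010, 0.015, 0.020, 0.025, 0.030]
    q, n = run_bandit(cands, episodes=300)
    best = max(range(len(cands)), key=q.__getitem__)
    print(f"selected R = {cands[best]} K/W after {n[best]} of {sum(n)} trials")
```

A full RL formulation would add states and sequential decisions; the bandit keeps only the reward-driven trial-and-error element.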