Submitted: 29 February 2024

Posted: 01 March 2024

Abstract

Keywords:

1. Introduction

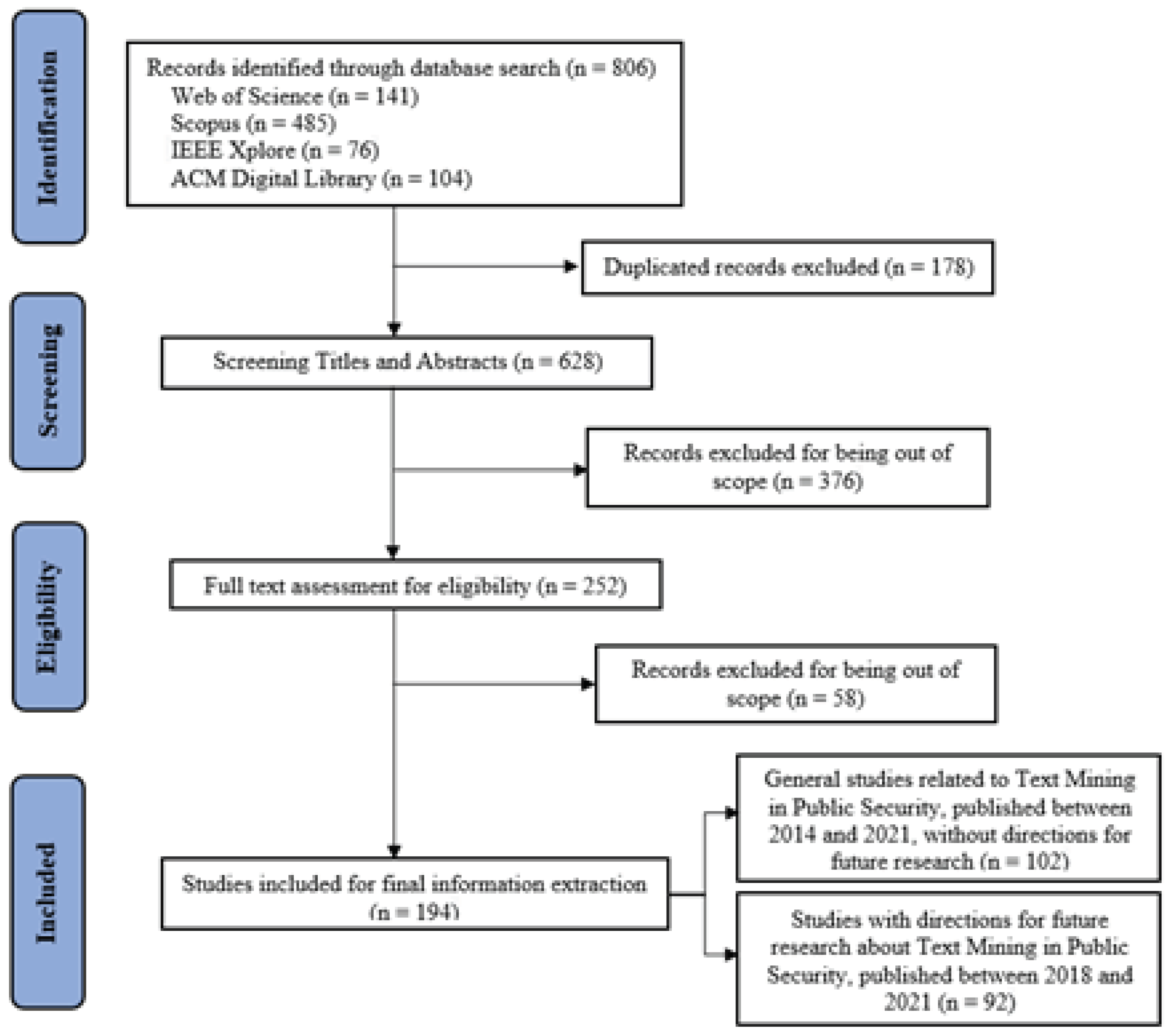

2. Survey Process

2.1. Methodological Summary

2.2. Review Conduction

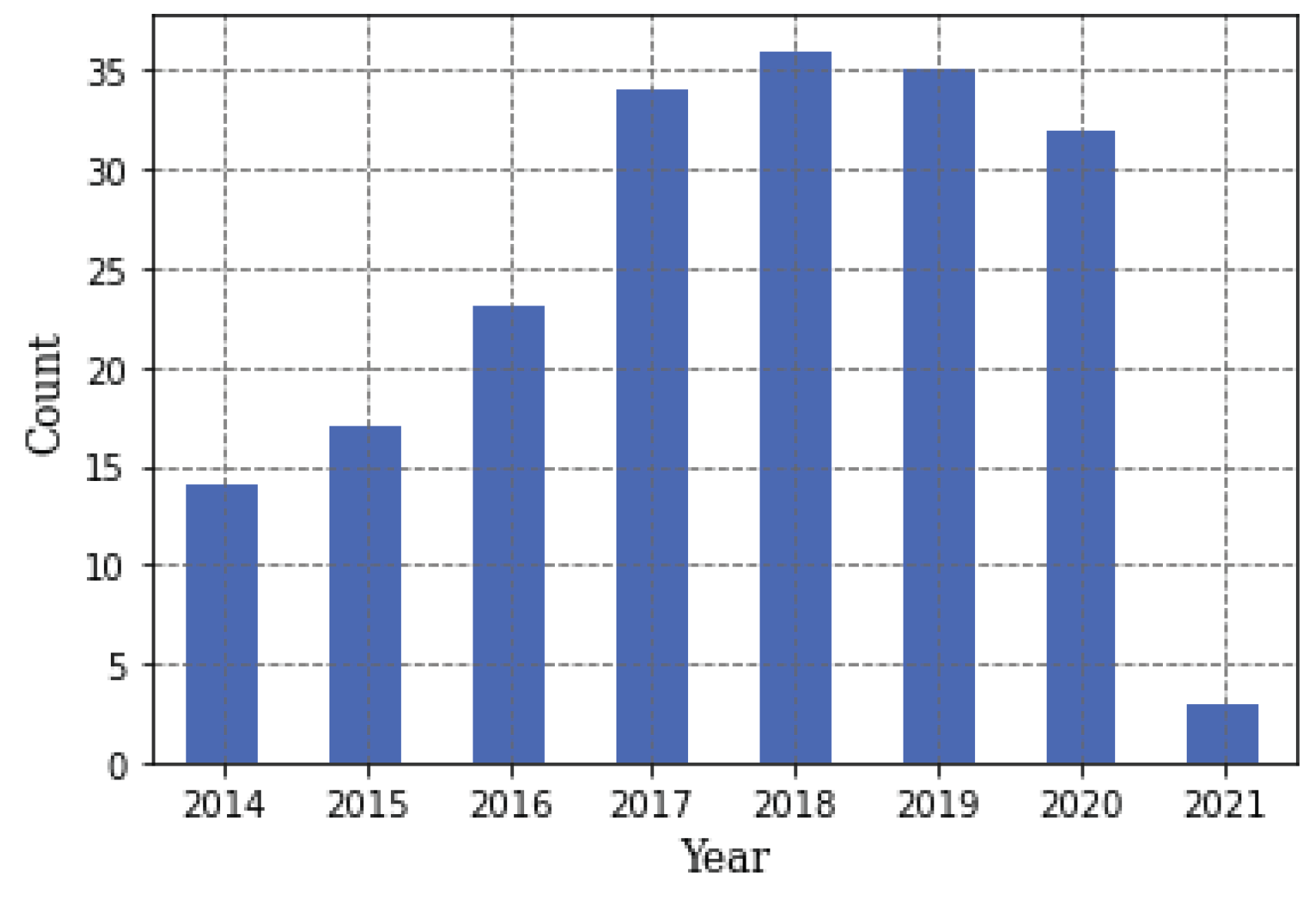

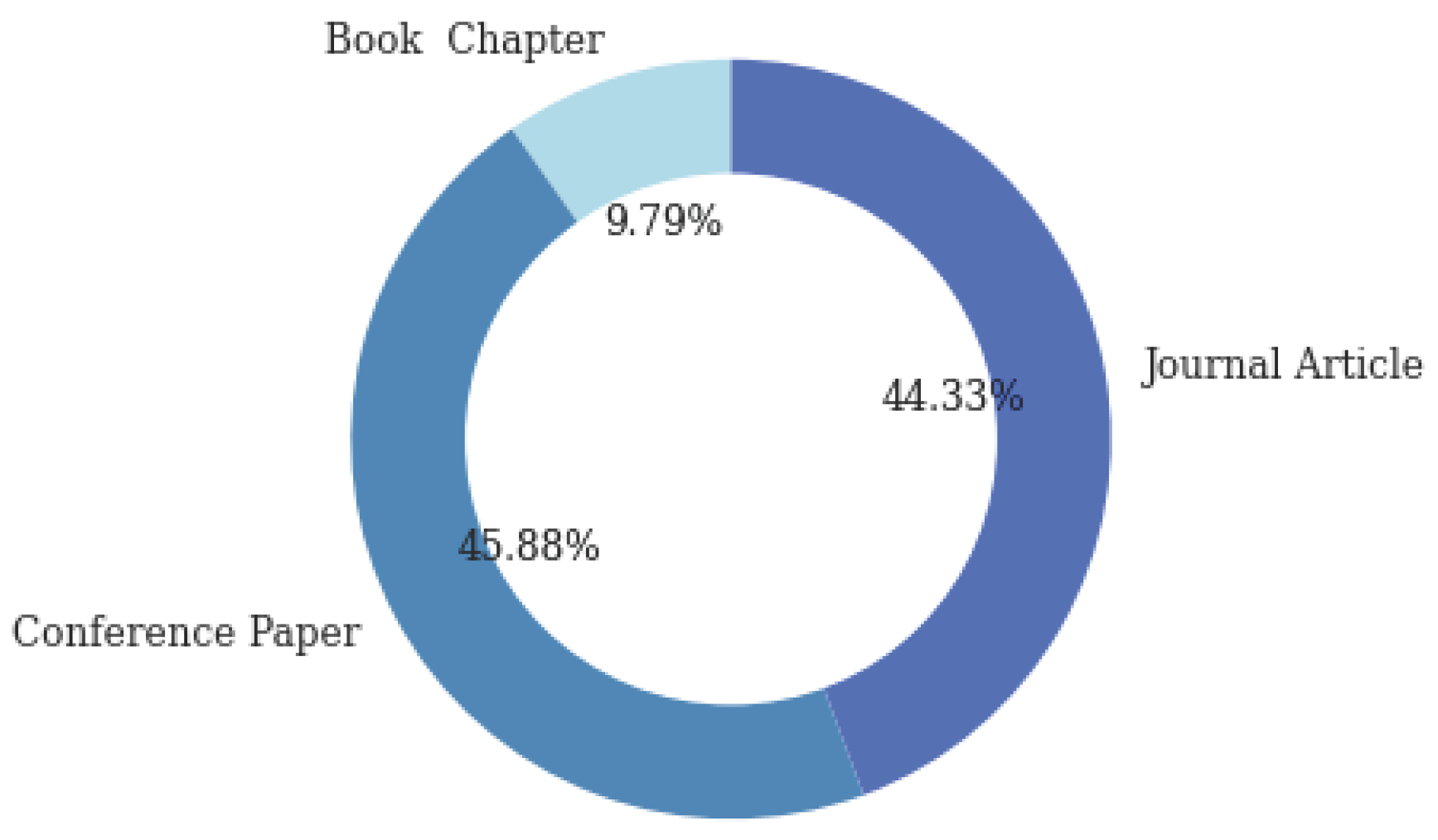

3. Results and Discussion

3.1. General Findings

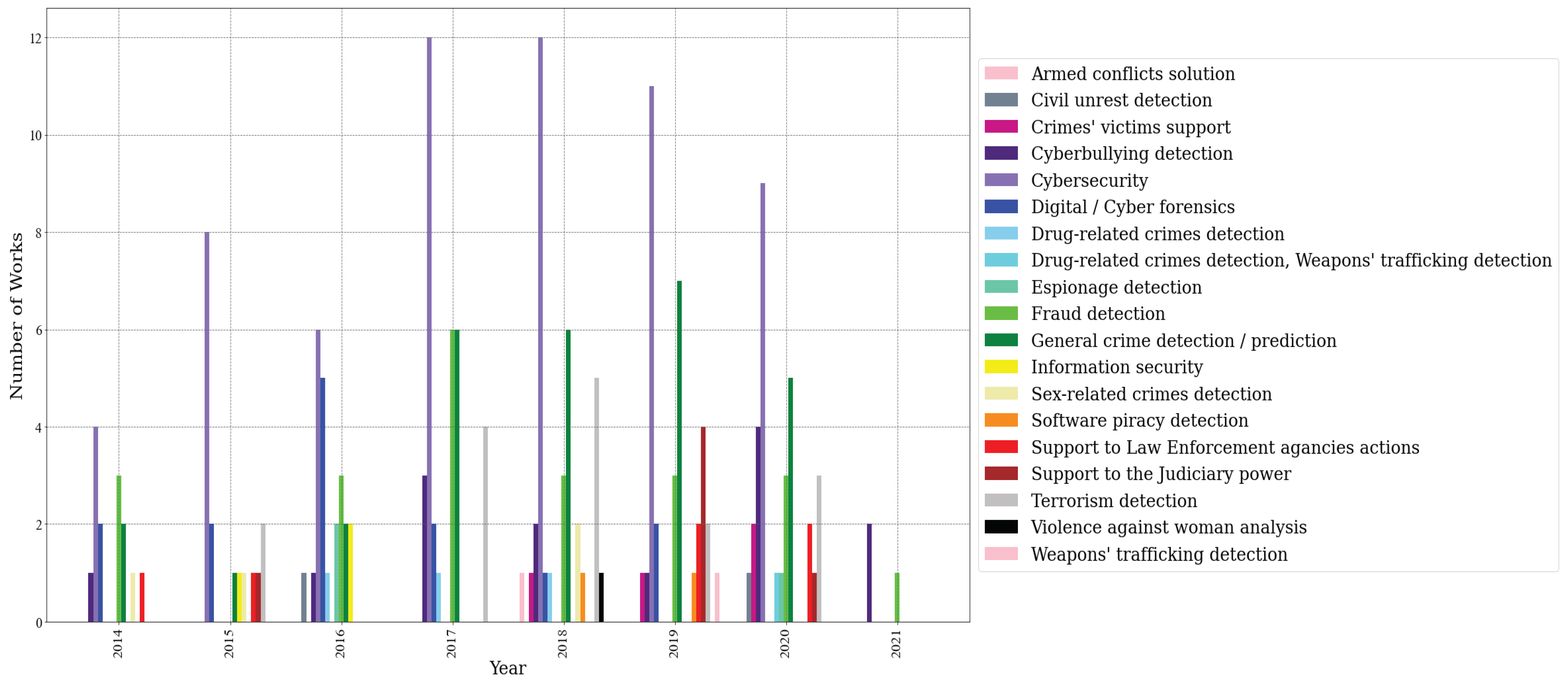

3.2. Application areas for text mining in public security

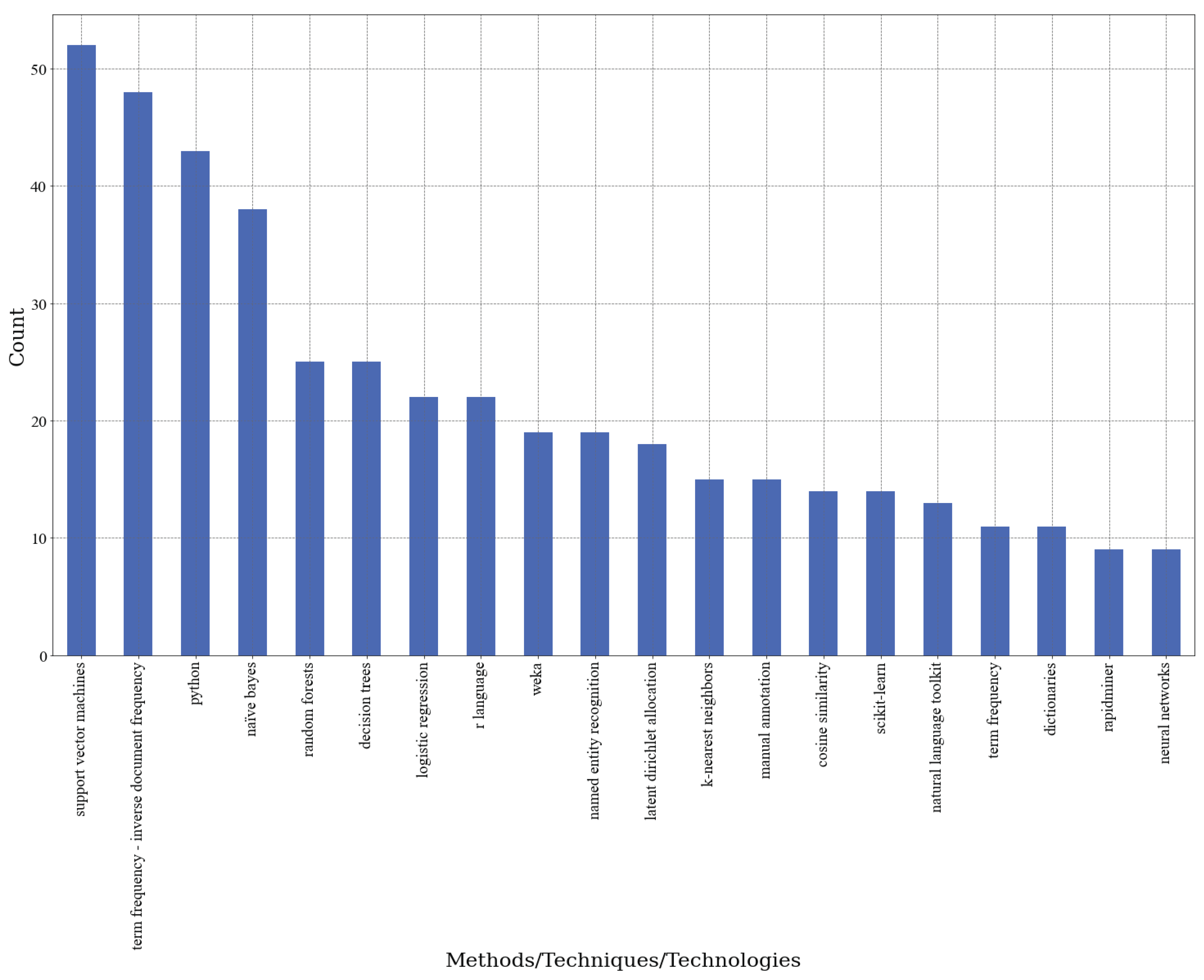

3.3. Text mining techniques and technologies applied in public security

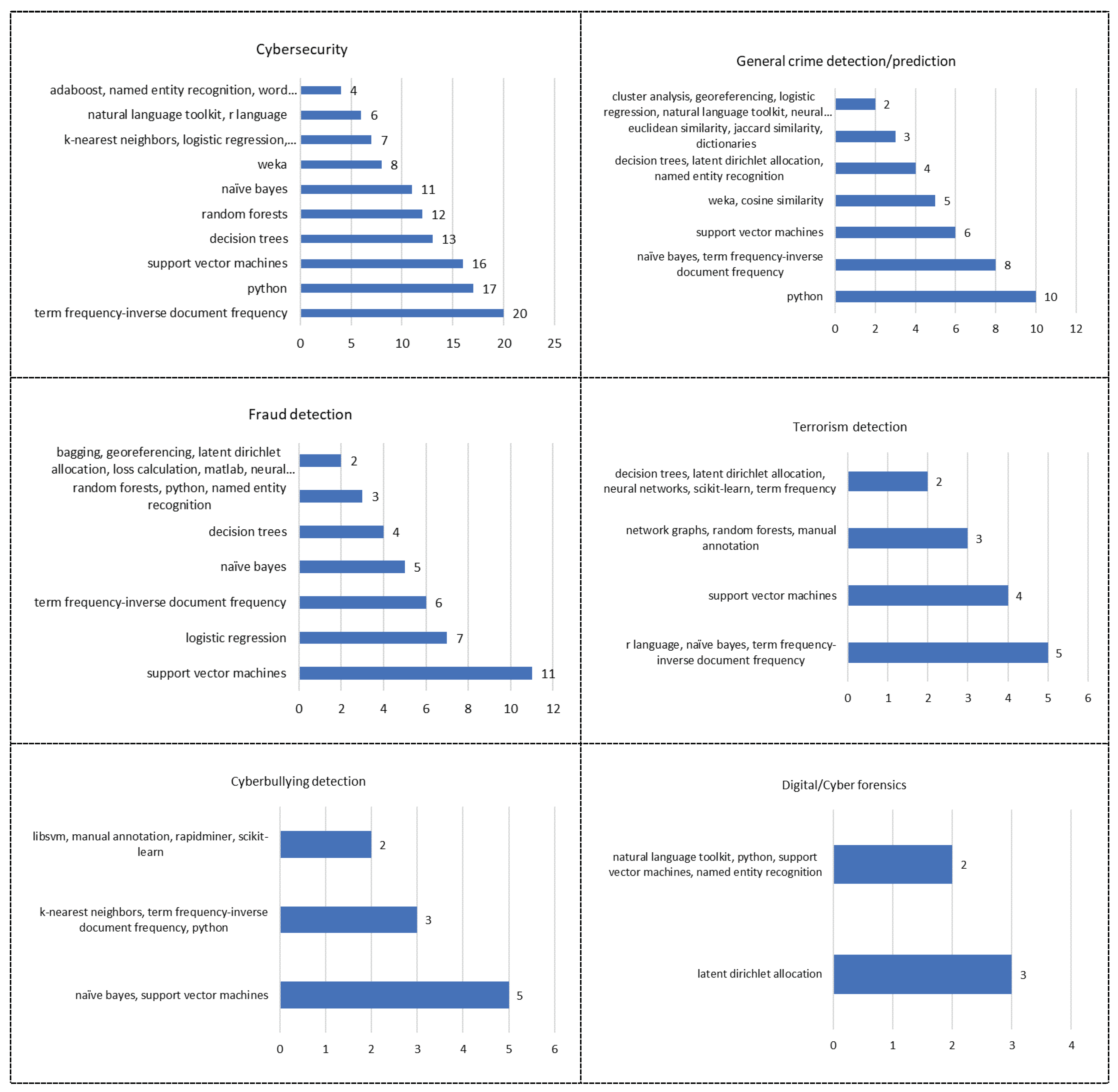

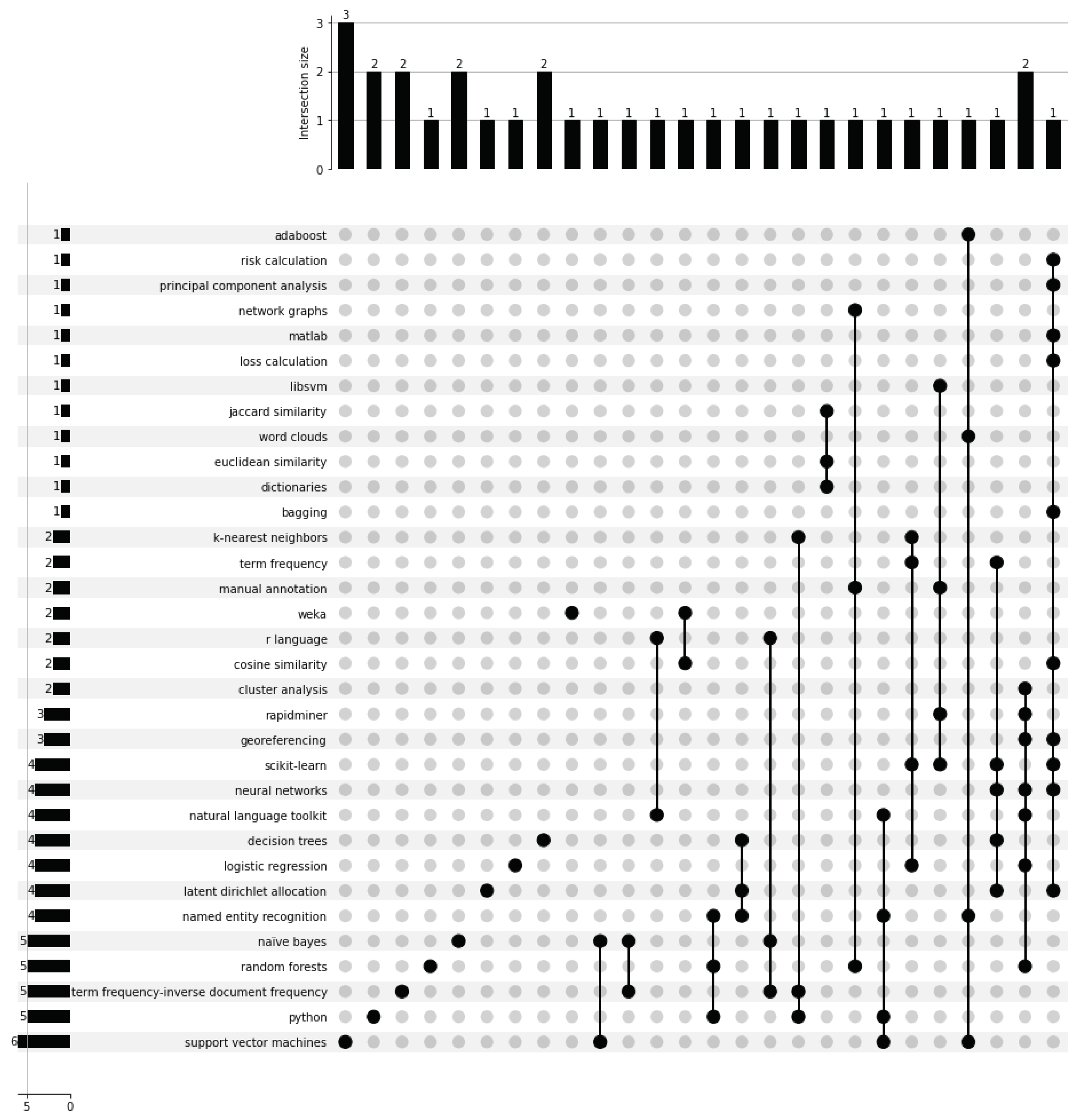

Most Frequent Techniques and Technologies by Application Area

3.4. Identifying Opportunities and Challenges for Text Mining in Public Security-Related Applications

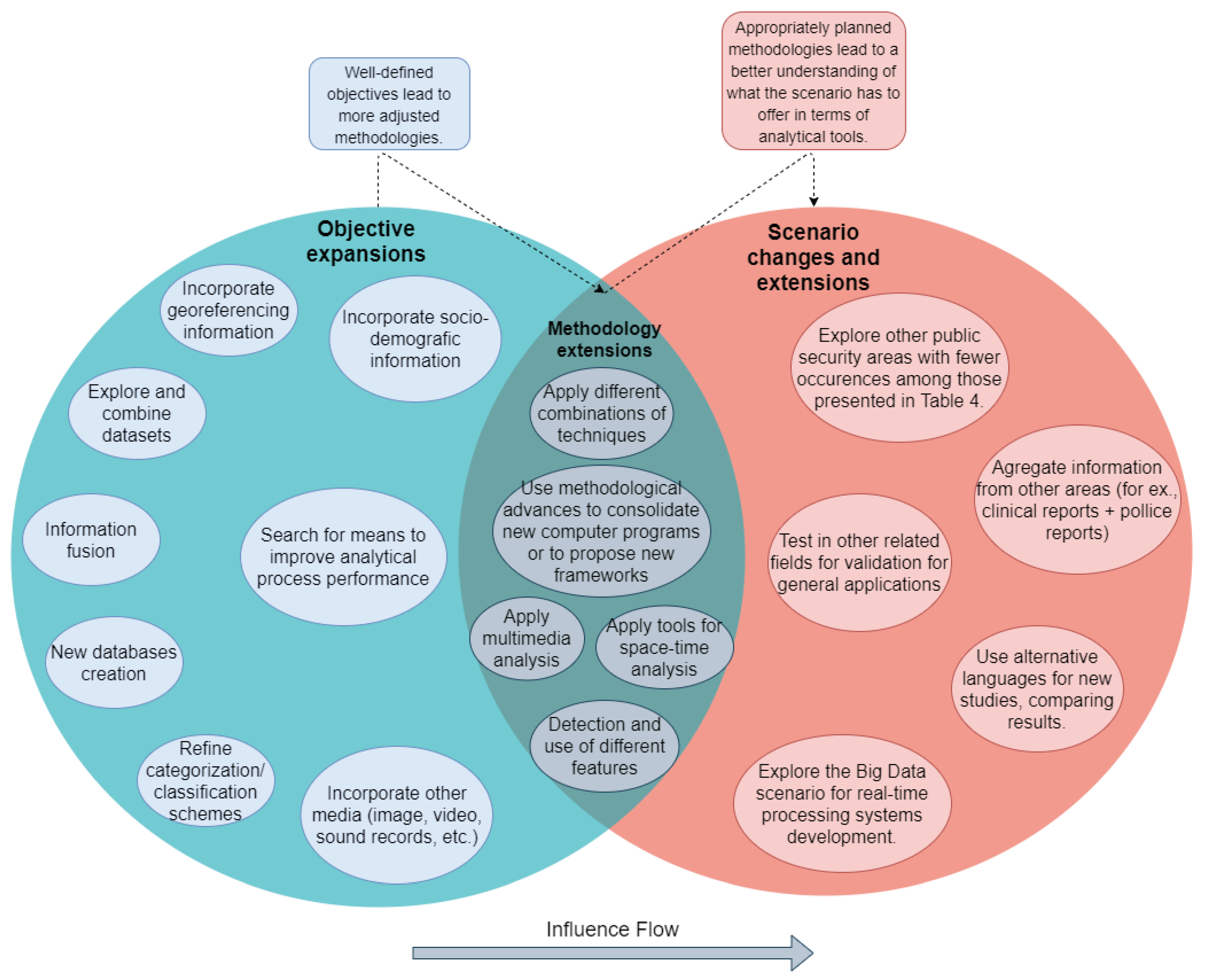

3.4.1. Research Directions: Outlining a Research Agenda

Objectives Expansions

Methodological Extensions

Scenario Changes and Extensions

4. Conclusion

5. Update

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zainol, Z.; Jaymes, M.T.H.; Nohuddin, P.N.E. VisualUrText: A Text Analytics Tool for Unstructured Textual Data. In Proceedings of the Journal of Physics: Conference Series; Computer Science Department, Faculty of Science and Defence Technology, Universiti Pertahanan Nasional Malaysia, Kem Sungai Besi, Kuala Lumpur, Malaysia; 2018; Vol. 1018. [Google Scholar]

- de Carvalho, V.D.H.; Costa, A.P.C.S. Towards Corpora Creation from Social Web in Brazilian Portuguese to Support Public Security Analyses and Decisions. Libr. Hi Tech 2022, in press. [Google Scholar] [CrossRef]

- Zhang, Y.; Chen, M.; Liu, L. A Review on Text Mining. In Proceedings of the Proceedings of the IEEE International Conference on Software Engineering and Service Sciences, ICSESS; School of Computer Science and Engineering, Beihang University, Beijing, China, 2015; Vol. 2015-Novem, pp. 681–685.

- de Carvalho, V.D.H.; Nepomuceno, T.C.C.; Costa, A.P.C.S. An Automated Corpus Annotation Experiment in Brazilian Portuguese for Sentiment Analysis in Public Security. In Lecture Notes in Business Information Processing; 2020; Vol. 384 LNBIP, pp. 99–111. ISBN 9783030462239. [Google Scholar]

- Shahzad, F.; Xiu, G.; Shafique Khan, M.A.; Shahbaz, M. Predicting the Adoption of a Mobile Government Security Response System from the User’s Perspective: An Application of the Artificial Neural Network Approach. Technol. Soc. 2020, 62, 101278. [Google Scholar] [CrossRef]

- Boulos, M.N.K.; Sanfilippo, A.P.; Corley, C.D.; Wheeler, S. Social Web Mining and Exploitation for Serious Applications : Technosocial Predictive Analytics and Related Technologies for Public Health, Environmental and National Security Surveillance. Comput. Methods Programs Biomed. 2010, 100, 16–23. [Google Scholar] [CrossRef] [PubMed]

- Kowalski, R.; Esteve, M.; Jankin Mikhaylov, S. Improving Public Services by Mining Citizen Feedback: An Application of Natural Language Processing. Public Adm. 2020, 98, 1011–1026. [Google Scholar] [CrossRef]

- de Carvalho, V.D.H.; Nepomuceno, T.C.C.; Poleto, T.; Costa, A.P.C.S. The COVID-19 Infodemic on Twitter: A Space and Time Topic Analysis of the Brazilian Immunization Program and Public Trust. Trop. Med. Infect. Dis. 2022, 7. [Google Scholar] [CrossRef] [PubMed]

- de Carvalho, V.D.H.; Todaro, M.S.F.; dos Santos, R.J.R.; Nepomuceno, T.C.C.; Poleto, T.; Figueiredo, C.J.J.; Turet, J.G.; de Moura, J.A. AI-Driven Decision Support in Public Administration: An Analytical Framework. In Information Technology and Systems; Rocha, Á., Ferrás, C., Diez, J.H., Rebolledo, M.D., Eds.; Springer, Cham, 2024; pp. 237–246.

- Zhang, W.; Zuo, N.; He, W.; Li, S.; Yu, L. Factors Influencing the Use of Artificial Intelligence in Government: Evidence from China. Technol. Soc. 2021, 66, 101675. [Google Scholar] [CrossRef]

- Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques (The Morgan Kaufmann Series in Data Management Systems); 2011; ISBN 0123814790. [Google Scholar]

- Hashimi, H.; Hafez, A.; Mathkour, H. Selection Criteria for Text Mining Approaches. Comput. Human Behav. 2015, 51, 729–733. [Google Scholar] [CrossRef]

- Tseng, Y.-H.; Ho, Z.-P.; Yang, K.-S.; Chen, C.-C. Mining Term Networks from Text Collections for Crime Investigation. Expert Syst. Appl. 2012, 39, 10082–10090. [Google Scholar] [CrossRef]

- Poleto, T.; Nepomuceno, T.C.C.; de Carvalho, V.D.H.; Friaes, L.C.B.; de, O.; de Oliveira, R.C.P.; Figueiredo, C.J.J. Information Security Applications in Smart Cities: A Bibliometric Analysis of Emerging Research. Futur. Internet 2023, 15, 393. [Google Scholar] [CrossRef]

- Tutun, S.; Khasawneh, M.T.; Zhuang, J. New Framework That Uses Patterns and Relations to Understand Terrorist Behaviors. Expert Syst. Appl. 2017, 78, 358–375. [Google Scholar] [CrossRef]

- de Carvalho, V.D.H.; Nepomuceno, T.C.C.; Poleto, T.; Turet, J.G.; Costa, A.P.C.S. Mining Public Opinions on COVID-19 Vaccination: A Temporal Analysis to Support Combating Misinformation. Trop. Med. Infect. Dis. 2022, 7, 256. [Google Scholar] [CrossRef]

- Sundermann, C.V.; Domingues, M.A.; Sinoara, R.A.; Marcacini, R.M.; Rezende, S.O. Using Opinion Mining in Context-Aware Recommender Systems: A Systematic Review. Inf. 2019, 10, 1–45. [Google Scholar] [CrossRef]

- O’Mara-Eves, A.; Thomas, J.; McNaught, J.; Miwa, M.; Ananiadou, S. Using Text Mining for Study Identification in Systematic Reviews: A Systematic Review of Current Approaches. Syst. Rev. 2015, 4, 5. [Google Scholar] [CrossRef] [PubMed]

- Nepomuceno, T.C.C.; Piubello Orsini, L.; de Carvalho, V.D.H.; Poleto, T.; Leardini, C. The Core of Healthcare Efficiency: A Comprehensive Bibliometric Review on Frontier Analysis of Hospitals. Healthcare 2022, 10, 1316. [Google Scholar] [CrossRef] [PubMed]

- Usai, A.; Pironti, M.; Mital, M.; Aouina Mejri, C. Knowledge Discovery out of Text Data: A Systematic Review via Text Mining. J. Knowl. Manag. 2018, 22, 1471–1488. [Google Scholar] [CrossRef]

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering, 2007.

- Robinson, P.H.; Dubber, M.D. The American Model Penal Code: A Brief Overview. New Crim. Law Rev. 2007, 10, 319–341. [Google Scholar] [CrossRef]

- de Carvalho, V.D.H.; Costa, A.P.C.S. Exploring Text Mining and Analytics for Applications in Public Security: An in-Depth Dive into a Systematic Literature Review. Socioecon. Anal. 2023, 1, 5–55. [Google Scholar] [CrossRef]

- Aghababaei, S.; Makrehchi, M. Mining Twitter Data for Crime Trend Prediction. Intell. Data Anal. 2018, 22, 117–141. [Google Scholar] [CrossRef]

- Das, P.; Das, A.K. Graph-Based Clustering of Extracted Paraphrases for Labelling Crime Reports. Knowledge-Based Syst. 2019, 179, 55–76. [Google Scholar] [CrossRef]

- Qazi, N.; Wong, B.L.W. An Interactive Human Centered Data Science Approach towards Crime Pattern Analysis. Inf. Process. Manag. 2019, 56. [Google Scholar] [CrossRef]

- Lal, S.; Tiwari, L.; Ranjan, R.; Verma, A.; Sardana, N.; Mourya, R. Analysis and Classification of Crime Tweets. Procedia Comput. Sci. 2020, 167, 1911–1919. [Google Scholar] [CrossRef]

- Badii, A.; Tiemann, M.; Adderley, R.; Seidler, P.; Evangelio, R.H.; Senst, T.; Sikora, T.; Panattoni, L.; Raffaelli, M.; Cappel-Porter, M.; et al. MOSAIC: Multimodal Analytics for the Protection of Critical Assets. In Proceedings of the Proceedings of the 2014 International Conference on Signal Processing and Multimedia Applications (SIGMAP); IEEE: Vienna, 2014; pp. 311–320. [Google Scholar]

- Bisio, F.; Meda, C.; Zunino, R.; Surlinelli, R.; Scillia, E.; Ottaviano, A. Real-Time Monitoring of Twitter Traffic by Using Semantic Networks. In Proceedings of the Proceedings of the 2015 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2015 - ASONAM ’15; ACM Press: New York, New York, USA, 2015; pp. 966–969. [Google Scholar]

- Basilio, M.P.; Pereira, V.; Brum, G. Identification of Operational Demand in Law Enforcement Agencies: An Application Based on a Probabilistic Model of Topics. Data Technol. Appl. 2019, 53, 333–372. [Google Scholar] [CrossRef]

- Behmer, E.-J.; Chandramouli, K.; Garrido, V.; Mühlenberg, D.; Müller, D.; Müller, W.; Pallmer, D.; Pérez, F.J.; Piatrik, T.; Vargas, C. Ontology Population Framework of MAGNETO for Instantiating Heterogeneous Forensic Data Modalities. In IFIP Advances in Information and Communication Technology; Fraunhofer Institute for Optronics, System Technologies and Image Exploitation IOSB, Karlsruhe, Germany, 2019; Vol. 559, pp. 520–531.

- Basilio, M.P.; Brum, G.S.; Pereira, V. A Model of Policing Strategy Choice. J. Model. Manag. 2020, 15, 849–891. [Google Scholar] [CrossRef]

- Hou, J.; Li, X.; Yao, H.; Sun, H.; Mai, T.; Zhu, R. BERT-Based Chinese Relation Extraction for Public Security. IEEE Access 2020, 8, 132367–132375. [Google Scholar] [CrossRef]

- Nikolic, V.; Markoski, B.; Ivkovic, M.; Kuk, K.; Djikanovic, P. Information Retrieval for Unstructured Text Documents in Serbian into the Crime Domain. In Proceedings of the Proceedings of the 16th IEEE International Symposium on Computational Intelligence and Informatics; IEEE: Budapest, 2015; pp. 267–271. [Google Scholar]

- Iftikhar, A.; Jaffry, S.W.U.Q.; Malik, M.K. Information Mining from Criminal Judgments of Lahore High Court. IEEE Access 2019, 7, 59539–59547. [Google Scholar] [CrossRef]

- Pina-Sánchez, J.; Roberts, J. V.; Sferopoulos, D. Does the Crown Court Discriminate Against Muslim-Named Offenders? A Novel Investigation Based on Text Mining Techniques. Br. J. Criminol. 2019, 59, 718–736. [Google Scholar] [CrossRef]

- Pina-Sánchez, J.; Grech, D.; Brunton-Smith, I.; Sferopoulos, D. Exploring the Origin of Sentencing Disparities in the Crown Court: Using Text Mining Techniques to Differentiate between Court and Judge Disparities. Soc. Sci. Res. 2019, 84, 102343. [Google Scholar] [CrossRef] [PubMed]

- Xia, Y.; Cai, T.; Zhong, H. Effect of Judges’ Gender on Rape Sentencing: A Data Mining Approach to Analyze Judgment Documents. China Rev. 2019, 19, 125–149. [Google Scholar]

- Gomes, T.; Ladeira, M. A New Conceptual Framework for Enhancing Legal Information Retrieval at the Brazilian Superior Court of Justice. In Proceedings of the Proceedings of the 12th International Conference on Management of Digital EcoSystems; ACM: New York, NY, USA, 2 November 2020; pp. 26–29. [Google Scholar]

- Al-Nabki, M.W.; Fidalgo, E.; Alegre, E.; Fernández-Robles, L. Improving Named Entity Recognition in Noisy User-Generated Text with Local Distance Neighbor Feature. Neurocomputing 2020, 382, 1–11. [Google Scholar] [CrossRef]

- Elkhawas, A.I.; Abdelbaki, N. Malware Detection Using Opcode Trigram Sequence with SVM. In Proceedings of the Proceedings of the 26th International Conference on Software, Telecommunications and Computer Networks (SoftCOM); IEEE, September 2018; pp. 1–6. [Google Scholar]

- Cichosz, P. A Case Study in Text Mining of Discussion Forum Posts: Classification with Bag of Words and Global Vectors. Int. J. Appl. Math. Comput. Sci. 2018, 28, 787–801. [Google Scholar] [CrossRef]

- Al-saif, H.; Al-dossari, H. Detecting and Classifying Crimes from Arabic Twitter Posts Using Text Mining Techniques. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 377–387. [Google Scholar] [CrossRef]

- Alothman, B.; Rattadilok, P. Android Botnet Detection: An Integrated Source Code Mining Approach. In Proceedings of the Proceedings of the 12th International Conference for Internet Technology and Secured Transactions (ICITST); IEEE, December 2017; pp. 111–115. [Google Scholar]

- Hadad, T.; Puzis, R.; Sidik, B.; Ofek, N.; Rokach, L. Application Marketplace Malware Detection by User Feedback Analysis. Commun. Comput. Inf. Sci. 2018, 867, 1–19. [Google Scholar] [CrossRef]

- Maktabar, M.; Zainal, A.; Maarof, M.A.; Kassim, M.N. Content Based Fraudulent Website Detection Using Supervised Machine Learning Techniques. In Hybrid Intelligent Systems; Abraham, A., Muhuri, P.K., Muda, A.K., Gandhi, N., Eds.; Springer: New Delhi, 2018; Vol. 734, pp. 294–304. [Google Scholar]

- Martín, A.; Calleja, A.; Menéndez, H.D.; Tapiador, J.; Camacho, D. ADROIT: Android Malware Detection Using Meta-Information. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI); 2016; pp. 1–8. [Google Scholar]

- Thao, T.P.; Yamada, A.; Murakami, K.; Urakawa, J.; Sawaya, Y.; Kubota, A. Classification of Landing and Distribution Domains Using Whois’ Text Mining. In Proceedings of the Proceedings - 16th IEEE International Conference on Trust, Security and Privacy in Computing and Communications, 11th IEEE International Conference on Big Data Science and Engineering and 14th IEEE International Conference on Embedded Software and Systems; 2017; pp. 1–8. [Google Scholar]

- Monish, H.; Pandey, A.C. A Comparative Assessment of Data Mining Algorithms to Predict Fraudulent Firms. In Proceedings of the Proceedings of the Confluence 2020 - 10th International Conference on Cloud Computing, Data Science and Engineering; Jaypee Institute of Information Technology: Noida, India, 2020; pp. 117–122. [Google Scholar]

- Lee, T.-H.; Sung, W.-K.; Kim, H.-W. A Text Mining Approach to the Analysis of Information Security Awareness: Korea, United States, and China. In Proceedings of the Pacific Asia Conference on Information Systems, PACIS 2016 - Proceedings; 2016. 2016. [Google Scholar]

- Noel, G.E.; Peterson, G.L. Applicability of Latent Dirichlet Allocation to Multi-Disk Search. Digit. Investig. 2014, 11, 43–56. [Google Scholar] [CrossRef]

- Kuang, D.; Brantingham, P.J.; Bertozzi, A.L. Crime Topic Modeling. Crime Sci. 2017, 6, 12. [Google Scholar] [CrossRef]

- Sundarkumar, G.G.; Ravi, V.; Nwogu, I.; Govindaraju, V. Malware Detection via API Calls, Topic Models and Machine Learning. In Proceedings of the Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE); IEEE: Gothenburg, 2015; pp. 1212–1217. [Google Scholar]

- Yang, B.; Jiang, J.; Li, N. Towards Discovering Covert Communication through Email Spam. In Proceedings of the IFIP Advances in Information and Communication Technology; 2016; Vol. 486, pp. 191–201. [Google Scholar]

- Chung, W.; Liu, J.; Tang, X.; Lai, V.S.K. Extracting Textual Features of Financial Social Media to Detect Cognitive Hacking. In Proceedings of the 2018 IEEE International Conference on Intelligence and Security Informatics, ISI 2018; 2018; pp. 244–246. [Google Scholar]

- Parapar, J.; Losada, D.E.D.E.; Barreiro, Á. Combining Psycho-Linguistic, Content-Based and Chat-Based Features to Detect Predation in Chatrooms. J. Univers. Comput. Sci. 2014, 20, 213–239. [Google Scholar]

- Sonowal, G.; Kuppusamy, K.S. SmiDCA: An Anti-Smishing Model with Machine Learning Approach. Comput. J. 2018, 61, 1143–1157. [Google Scholar] [CrossRef]

- Birks, D.; Coleman, A.; Jackson, D. Unsupervised Identification of Crime Problems from Police Free-Text Data. Crime Sci. 2020, 9. [Google Scholar] [CrossRef]

- Bozyiğit, A.; Utku, S.; Nasibov, E. Cyberbullying Detection: Utilizing Social Media Features. Expert Syst. Appl. 2021, 179, 115001. [Google Scholar] [CrossRef]

- Chen, W.; Aspinall, D.; Gordon, A.D.; Sutton, C.; Muttik, I. A Text-Mining Approach to Explain Unwanted Behaviours. In Proceedings of the Proceedings of the 9th European Workshop on System Security - EuroSec ’16; ACM Press: New York, 2016; pp. 1–6. [Google Scholar]

- Dong, F.; Yuan, S.; Ou, H.; Liu, L. New Cyber Threat Discovery from Darknet Marketplaces. In Proceedings of the Proceedings of the 2018 IEEE Conference on Big Data and Analytics (ICBDA); IEEE, November 2018; pp. 62–67.

- Aboluwarin, O.; Andriotis, P.; Takasu, A.; Tryfonas, T. Optimizing Short Message Text Sentiment Analysis for Mobile Device Forensics. In Proceedings of the 2th International Conference on Digital Forensics; New Delhi, 2016; pp. 69–87. ISBN 9783319462783. [Google Scholar]

- Chandra, N.; Khatri, S.K.; Som, S. Anti Social Comment Classification Based on KNN Algorithm. In Proceedings of the Proceedings of the 6th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO); IEEE, September 2017; pp. 348–354.

- Gil, V.D.; Betancur, J.D.; Puerta, I.C.; Montoya, L.M.; Sepulveda, J.M. The Femicide in Colombia and Mexico: A Text Mining Analysis. Turkish Online J. Des. Art Commun. 2018, 2018, 170–177. [Google Scholar] [CrossRef] [PubMed]

- Savaliya, B.R.; Philip, C.G. Email Fraud Detection by Identifying Email Sender. In Proceedings of the Proceedings of the 2017 International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS); IEEE: Chennai, August 2017; pp. 1420–1422. [Google Scholar]

- Gowri, S.; Anandha Mala, G.S.; Divya, G. Enhancing the Digital Data Retrieval System Using Novel Techniques. J. Theor. Appl. Inf. Technol. 2014, 66, 481–489. [Google Scholar]

- Karystianis, G.; Adily, A.; Schofield, P.; Knight, L.; Galdon, C.; Greenberg, D.; Jorm, L.; Nenadic, G.; Butler, T. Automatic Extraction of Mental Health Disorders From Domestic Violence Police Narratives: Text Mining Study. J. Med. Internet Res. 2018, 20, e11548. [Google Scholar] [CrossRef]

- Karystianis, G.; Adily, A.; Schofield, P.W.; Greenberg, D.; Jorm, L.; Nenadic, G.; Butler, T. Automated Analysis of Domestic Violence Police Reports to Explore Abuse Types and Victim Injuries: Text Mining Study. J. Med. Internet Res. 2019, 21. [Google Scholar] [CrossRef]

- Po, L.; Rollo, F. Building an Urban Theft Map by Analyzing Newspaper Crime Reports. Proc. - 13th Int. Work. Semant. Soc. Media Adapt. Pers. SMAP 2018 2018, 13–18. [Google Scholar] [CrossRef]

- Ruano-Ordás, D.; Fdez-Riverola, F.; Méndez, J.R. Concept Drift in E-Mail Datasets: An Empirical Study with Practical Implications. Inf. Sci. (Ny). 2018, 428, 120–135. [Google Scholar] [CrossRef]

- Andriansyah, M.; Purwanto, I.; Subali, M.; Sukowati, A.I.; Samos, M.; Akbar, A. Developing Indonesian Corpus of Pornography Using Simple NLP-Text Mining (NTM) Approach to Support Government Anti-Pornography Program. In Proceedings of the 2017 Second International Conference on Informatics and Computing (ICIC); IEEE, November 2017; pp. 1–4. [Google Scholar]

- Venčkauskas, A.; Karpavičius, A.; Damaševičius, R.; Marcinkevičius, R.; Kapočiūtė-Dzikienė, J.; Napoli, C. Open Class Authorship Attribution of Lithuanian Internet Comments Using One-Class Classifier. In Proceedings of the Proceedings of the 2017 Federated Conference on Computer Science and Information Systems; IEEE: Prague, 2017; Vol. 11, pp. 373–382. [Google Scholar]

- Das, P.; Das, A.K. An Application of Strength Pareto Evolutionary Algorithm for Feature Selection from Crime Data. In Proceedings of the Proceedings of the 8th International Conference on Computing, Communication and Networking Technologies (ICCCNT); IEEE: Dept. of Computer Science and Technology, Indian Institute of Engineering Science and Technology, Shibpur, Howrah, India, July 2017. pp. 1–6.

- Das, P.; Das, A.K. Crime Analysis against Women from Online Newspaper Reports and an Approach to Apply It in Dynamic Environment. In Proceedings of the Proceedings of the 2017 International Conference on Big Data Analytics and Computational Intelligence (ICBDAC); IEEE: Dept. of Computer Science and Technology, Indian Institute of Engineering Science and Technology, Shibpur, Howrah, India, March 2017; pp. 312–317.

- Almehmadi, A.; Joudaki, Z.; Jalali, R. Language Usage on Twitter Predicts Crime Rates. In Proceedings of the Proceedings of the 10th International Conference on Security of Information and Networks; ACM: New York, NY, USA, 2017; pp. 307–310. [Google Scholar]

- Margono, H.; Yi, X.; Raikundalia, G.K. Mining Indonesian Cyber Bullying Patterns in Social Networks. In Proceedings of the Proceedings of the 37th Australasian Computer Science Conference; ACS: Auckland, 2014; Vol. 147, pp. 115–124. [Google Scholar]

- Noviantho; Isa, S.M.; Ashianti, L. Cyberbullying Classification Using Text Mining. In Proceedings of the Proceedings of the 1st International Conference on Informatics and Computational Sciences (ICICoS); IEEE: Semarang, November 2017. pp. 241–246.

- Samtani, S.; Yu, S.; Zhu, H.; Patton, M.; Matherly, J.; Chen, H. Identifying SCADA Systems and Their Vulnerabilities on the Internet of Things: A Text-Mining Approach. IEEE Intell. Syst. 2018, 33, 63–73. [Google Scholar] [CrossRef]

- Das, P.; Das, A.K. A Two-Stage Approach of Named-Entity Recognition for Crime Analysis. In Proceedings of the 8th International Conference on Computing, Communications and Networking Technologies, ICCCNT 2017; 2017. [Google Scholar]

- Zaeem, R.N.; Manoharan, M.; Yang, Y.; Barber, K.S. Modeling and Analysis of Identity Threat Behaviors through Text Mining of Identity Theft Stories. Comput. Secur. 2017, 65, 50–63. [Google Scholar] [CrossRef]

- Liang, N.; Biros, D. Validating Common Characteristics of Malicious Insiders: Proof of Concept Study. In Proceedings of the Proceedings of the 49th Hawaii International Conference on System Sciences (HICSS); IEEE: Koloa, January, 2016; pp. 3716–3726. [Google Scholar]

- Nwafor, E.; Chowdhary, P.; Chandra, A. A Policy-Driven Framework for Document Classification and Enterprise Security. Proc. - 13th IEEE Int. Conf. Ubiquitous Intell. Comput. 13th IEEE Int. Conf. Adv. Trust. Comput. 16th IEEE Int. Conf. Scalable Comput. Commun. IEEE Int. 2017, 949–953. [CrossRef]

- Petrovskiy, M.; Chikunov, M. Online Extremism Discovering through Social Network Structure Analysis. In Proceedings of the Proceedings of the IEEE 2nd International Conference on Information and Computer Technologies (ICICT); IEEE, 2019. pp. 243–249.

- Saini, J.K.; Bansal, D. A Comparative Study and Automated Detection of Illegal Weapon Procurement over Dark Web. Cybern. Syst. 2019, 50, 405–416. [Google Scholar] [CrossRef]

- Zahra, K.; Azam, F.; Butt, W.H.; Ilyas, F. A Framework for User Characterization Based on Tweets Using Machine Learning Algorithms. In Proceedings of the ACM International Conference Proceeding Series; 2018; pp. 11–16. [Google Scholar]

- Bhardwaj, A.; Gupta, R. Qualitative Analysis of Financial Statements for Fraud Detection. In Proceedings of the Proceedings of the 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN); IEEE, October 2018. pp. 318–320.

- Karystianis, G.; Simpson, A.; Adily, A.; Schofield, P.; Greenberg, D.; Wand, H.; Nenadic, G.; Butler, T. Prevalence of Mental Illnesses in Domestic Violence Police Records: Text Mining Study. J. Med. Internet Res. 2020, 22, e23725. [Google Scholar] [CrossRef] [PubMed]

- Saldana, M.; Escobar, C.; Galvez, E.; Torres, D.; Toro, N. Mapping of the Perception of Theft Crimes from Analysis of Newspaper Articles Online. In Proceedings of the 2020 15th Iberian Conference on Information Systems and Technologies (CISTI); IEEE: Universidad Arturo Prat, Almirante Juan José Latorre 2901, Faculty of Engineering and Architecture, Antofagasta, 1244260, Chile, June 2020; Vol. 2020-June, pp. 1–7.

- Lee, P.S.; Owda, M.; Crockett, K. Novel Methods for Resolving False Positives during the Detection of Fraudulent Activities on Stock Market Financial Discussion Boards. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 1–10. [Google Scholar] [CrossRef]

- Mine, T.; Hirokawa, S.; Suzuki, T. Does Crime Activity Report Reveal Regional Characteristics? In Advances in Intelligent Systems and Computing; S., L., R., I., H, C., Eds.; Springer, Cham, 2019; pp. 582–598.

- Nedeljkovic, S.; Nikolic, V.; Cabarkapa, M.; Misic, J.; Randelovic, D. An Advanced Quick-Answering System Intended for the e-Government Service in the Republic of Serbia. ACTA Polytech. HUNGARICA 2019, 16, 153–174. [Google Scholar] [CrossRef]

- Roopa, V.; Induja, K. Customized Visualization of Email Using Sentimental and Impact Analysis in R. Commun. Comput. Inf. Sci. 2019, 1046, 144–154. [Google Scholar] [CrossRef]

- Miranda, E.; Aryuni, M.; Fernando, Y.; Kibtiah, T.M. A Study of Radicalism Contents Detection in Twitter: Insights from Support Vector Machine Technique. In Proceedings of the Proceedings of 2020 International Conference on Information Management and Technology, ICIMTech 2020; School of Information Systems, Bina Nusantara University, Information Systems Department, Jakarta, 11480, Indonesia, 2020; pp. 549–554.

- Alakrot, A.; Murray, L.; Nikolov, N.S. Dataset Construction for the Detection of Anti-Social Behaviour in Online Communication in Arabic. In Proceedings of the Procedia Computer Science; Elsevier B.V., 2018; Vol. 142, pp. 174–181. [Google Scholar]

- Concepcion-Sanchez, J.A.; Molina-Gil, J.; Caballero-Gil, P.; Santos-Gonzalez, I. Fuzzy Logic System for Identity Theft Detection in Social Networks. In Proceedings of the 2018 4th International Conference on Big Data Innovations and Applications (Innovate-Data); IEEE, August 2018; pp. 65–70. [Google Scholar]

- Husari, G.; Niu, X.; Chu, B.; Al-Shaer, E. Using Entropy and Mutual Information to Extract Threat Actions from Cyber Threat Intelligence. In Proceedings of the Proceedings of the 2018 IEEE International Conference on Intelligence and Security Informatics (ISI); IEEE, 2018; pp. 1–6.

- Zainal, K.; Jali, M.Z.; Hasan, A.B. Comparative Analysis of Danger Theory Variants in Measuring Risk Level for Text Spam Messages. In Advances in Intelligent Systems and Computing; Springer International Publishing, 2018; Vol. 753, pp. 133–152 ISBN 9783319787527.

- Silomon, J.A.M.; Roeling, M.P. Assessing Opinions on Software as a Weapon in the Context of (Inter)National Security. In Transactions on Computational Science XXXII; Gavrilova, M.L., Tan, C.J.K., Sourin, A., Eds.; Springer Berlin Heidelberg, 2018; Vol. 10830 LNCS, pp. 43–56 ISBN 9783662566718.

- Henseler, H.; Hyde, J. Technology Assisted Analysis of Timeline and Connections in Digital Forensic Investigations. In Proceedings of the CEUR Workshop Proceedings; Magnet Forensics, Waterloo, Canada, 2019; Vol. 2484, pp. 32–37.

- Ariffin, N.; Zainal, A.; Maarof, M.A.; Nizam Kassim, M. A Conceptual Scheme for Ransomware Background Knowledge Construction. In Proceedings of the Proceedings of the 2018 Cyber Resilience Conference (CRC); IEEE: School of Computing, Universiti Teknologi Malaysia, Johor, Malaysia, November 2018; pp. 1–4.

- Pires, M.; Georgieva, P. An Intelligent Tool for Detection of Phishing Messages. In Advances in Intelligent Systems and Computing; Department of Electronics Telecommunications and Informatics, University of Aveiro, Aveiro, Portugal, 2020; Vol. 942, pp. 116–125.

- Al-Khalisy, M.A.E.; Jehlol, H.B. Terrorist Affiliations Identifying through Twitter Social Media Analysis Using Data Mining and Web Mapping Techniques. J. Eng. Appl. Sci. 2018, 13, 7459–7464. [Google Scholar] [CrossRef]

- Ristea, A.; Kurland, J.; Resch, B.; Leitner, M.; Langford, C. Estimating the Spatial Distribution of Crime Events around a Football Stadium from Georeferenced Tweets. ISPRS Int. J. Geo-Information 2018, 7, 43. [Google Scholar] [CrossRef]

- Savaş, S.; Topaloğlu, N. Data Analysis through Social Media According to the Classified Crime. Turkish J. Electr. Eng. Comput. Sci. 2019, 27, 407–420. [Google Scholar] [CrossRef]

- Dastjerdi, A.R.; Foroghi, D.; Kiani, G.H. Detecting Manager’s Fraud Risk Using Text Analysis: Evidence from Iran. J. Appl. Account. Res. 2019, 20, 154–171. [Google Scholar] [CrossRef]

- Angenent, M.N.; Barata, A.P.; Takes, F.W. Large-Scale Machine Learning for Business Sector Prediction. In Proceedings of the Proceedings of the 35th Annual ACM Symposium on Applied Computing, March 30 2020; ACM: New York, NY, USA; pp. 1143–1146. [Google Scholar]

- Siering, M.; Muntermann, J.; Grčar, M. Design Principles for Robust Fraud Detection: The Case of Stock Market Manipulations. J. Assoc. Inf. Syst. 2021, 22, 156–178. [Google Scholar] [CrossRef]

- Correa, J.C.; García-Chitiva, M.D.P.; García-Vargas, G.R. A Text Mining Approach to the Text Difficulty of Latin American Peace Agreement. Rev. Latinoam. Psicol. 2018, 50, 61–70. [Google Scholar] [CrossRef]

- Mansour, S. Social Media Analysis of User’s Responses to Terrorism Using Sentiment Analysis and Text Mining. In Proceedings of the Procedia Computer Science; 2018; Vol. 140, pp. 95–103. [Google Scholar]

- Öztürk, N.; Ayvaz, S. Sentiment Analysis on Twitter: A Text Mining Approach to the Syrian Refugee Crisis. Telemat. Informatics 2018, 35, 136–147. [Google Scholar] [CrossRef]

- Balim, C.; Gunal, E.S. Automatic Detection of Smishing Attacks by Machine Learning Methods. In Proceedings of the 2019 1st International Informatics and Software Engineering Conference (UBMYK); IEEE: Afyon Kocatepe University, Dept. of Computer Programming, Sandikli Vocational School, Afyonkarahisar, Turkey, November 2019; pp. 1–3.

- Alatrista-Salas, H.; Morzán-Samamé, J.; Nunez-del-Prado, M. Crime Alert! Crime Typification in News Based on Text Mining. In Lecture Notes in Networks and Systems; Universidad del Pacífico, Av. Salaverry, Lima, 2020, Peru, 2020; Vol. 69, pp. 725–741.

- Giacalone, M.; Cusatelli, C.; Romano, A.; Buondonno, A.; Santarcangelo, V. Big Data and Forensics: An Innovative Approach for a Predictable Jurisprudence. Inf. Sci. (Ny). 2018, 426, 160–170. [Google Scholar] [CrossRef]

- Choi, Y.-J.; Jeon, B.-J.; Kim, H.-W. Identification of Key Cyberbullies: A Text Mining and Social Network Analysis Approach. Telemat. Informatics 2021, 56, 101504. [Google Scholar] [CrossRef]

- Adily, A.; Karystianis, G.; Butler, T. Text Mining Police Narratives to Identify Types of Abuse and Victim Injuries in Family and Domestic Violence Events. TRENDS ISSUES CRIME Crim. JUSTICE 2021, 1–12, WE - Emerging Sources Citation Index (ESCI). [Google Scholar] [CrossRef]

- Aldera, S.; Emam, A.; Al-Qurishi, M.; Alrubaian, M.; Alothaim, A. Online Extremism Detection in Textual Content: A Systematic Literature Review. IEEE Access 2021, 9, 42384–42396. [Google Scholar] [CrossRef]

- Alharbi, A.; Dong, H.; Yi, X.; Tari, Z.; Khalil, I. Social Media Identity Deception Detection: A Survey. ACM Comput. Surv. 2021, 54. [Google Scholar] [CrossRef]

- Al-Wesabi, F.N. An Optimized English Textwatermarking Method Based on Natural Language Processing Techniques. Comput. Mater. Contin. 2021, 69, 1519–1536. [Google Scholar] [CrossRef]

- Asante, A.; Feng, X. Content-Based Technical Solution for Cyberstalking Detection. In Proceedings of the 2021 3rd International Conference on Computer Communication and the Internet, ICCCI 2021; Institute of Electrical and Electronics Engineers Inc.: Catholic University College of Ghana, Department of Computing and Information Sciences, Fiapre-Sunyani, Ghana, 2021; pp. 89–95.

- Avgerinos Loutsaris, M.; Lachana, Z.; Alexopoulos, C.; Charalabidis, Y. Legal Text Processing: Combing Two Legal Ontological Approaches through Text Mining. In Proceedings of the DG.O2021: The 22nd Annual International Conference on Digital Government Research; Association for Computing Machinery: New York, NY, USA, 2021; pp. 522–532. [Google Scholar]

- Canossa, A.; Salimov, D.; Azadvar, A.; Harteveld, C.; Yannakakis, G. For Honor, for Toxicity: Detecting Toxic Behavior through Gameplay. Proc. ACM Hum.-Comput. Interact. 2021, 5. [Google Scholar] [CrossRef]

- Chen, H.; Hossain, M. Developing a Google Chrome Extension for Detecting Phishing Emails. In Proceedings of the EPiC Series in Computing; R., W., F., H., A., R., Eds.; EasyChair: University of Minnesota Crookston, MN, United States, 2021; Vol. 77, pp. 13–22.

- Corrêa, I.T.; Faria, E.R. An Analysis of Violence against Women Based on Victims’ Reports. In Proceedings of the Proceedings of the XVII Brazilian Symposium on Information Systems; Association for Computing Machinery: New York, NY, USA, 2021.

- de Oliveira, G.A.; de Sousa, R.T.; Albuquerque, R.D.; Villalba, L.J.G. Adversarial Attacks on a Lexical Sentiment Analysis Classifier. Comput. Commun. 2021, 174, 154–171. [Google Scholar] [CrossRef]

- Degadwala, S.; Vyas, D.; Hossain, M.R.; DIder, A.R.; Ali, M.N.; Kuri, P. Location-Based Modelling and Analysis of Threats by Using Text Mining. In Proceedings of the 2nd International Conference on Electronics and Sustainable Communication Systems, ICESC 2021; Institute of Electrical and Electronics Engineers Inc.: Sigma Institute of Engineering, Gujarat, Vadodara, India, 2021; pp. 1940–1944.

- Desmet, C.; Cook, D.J. Recent Developments in Privacy-Preserving Mining of Clinical Data. ACM/IMS Trans. Data Sci. 2021, 2. [Google Scholar] [CrossRef] [PubMed]

- Febriany, A.; Utama, D.N. Analysis Model for Identifying Negative Posts Based on Social Media. Int. J. Emerg. Technol. Adv. Eng. 2021, 11, 96–103. [Google Scholar] [CrossRef]

- Franchina, L.; Ferracci, S.; Palmaro, F. Detecting Phishing E-Mails Using Text Mining and Features Analysis. In Proceedings of the CEUR Workshop Proceedings; A., A., M., C., Eds.; CEUR-WS: HERMES Bay S.R.L, 2021; Vol. 2940, pp. 106–119.

- Gradoń, K.T.; Hołyst, J.A.; Moy, W.R.; Sienkiewicz, J.; Suchecki, K. Countering Misinformation: A Multidisciplinary Approach. Big Data Soc. 2021, 8. [Google Scholar] [CrossRef]

- Gryaznova, E.; Kirina, M. Defining Kinds of Violence in Russian Short Stories of 1900-1930: A Case of Topic Modelling With LDA and PCA. In Proceedings of the CEUR Workshop Proceedings; R.V., B., N.V., B., A.V., C., D.E., P., A.E., V., V.P., Z., Eds.; CEUR-WS: National Research University, Higher School of Economics, 123 Griboyedova emb., St. Petersburg, 190068, Russian Federation, 2021; Vol. 3090, pp. 281–290.

- Guo, W.Y.; Zeng, Q.T.; Duan, H.; Ni, W.J.; Liu, C. Process-Extraction-Based Text Similarity Measure for Emergency Response Plans. Expert Syst. Appl. 2021, 183. [Google Scholar] [CrossRef]

- Hajarian, M.; Khanbabaloo, Z. Toward Stopping Incel Rebellion: Detecting Incels in Social Media Using Sentiment Analysis. In Proceedings of the 2021 7th International Conference on Web Research (ICWR); IEEE, 19 May 2021; pp. 169–174. [Google Scholar]

- Hamza, M.; Jamila, M.; Lunn, J.; Aljumaili, W. Crime Geo Analytics Tool. In Proceedings of the 2021 14th International Conference on Developments in eSystems Engineering (DeSE); 2021; pp. 577–581. [Google Scholar]

- Herbert, F.; Schmidbauer-Wolf, G.M.; Reuter, C. Who Should Get My Private Data in Which Case? Evidence in the Wild. In Proceedings of Mensch Und Computer 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 281–293. [Google Scholar]

- Husain, F.; Uzuner, O. A Survey of Offensive Language Detection for the Arabic Language. ACM Trans. Asian Low-Resource Lang. Inf. Process. 2021, 20, 1–44. [Google Scholar] [CrossRef]

- Ignaczak, L.; Goldschmidt, G.; Da Costa, C.A.; Righi, R.D.R. Text Mining in Cybersecurity: A Systematic Literature Review. ACM Comput. Surv. 2021, 54. [Google Scholar] [CrossRef]

- Jiang, Y.; Atif, Y. An Approach to Discover and Assess Vulnerability Severity Automatically in Cyber-Physical Systems. In Proceedings of the 13th International Conference on Security of Information and Networks; Association for Computing Machinery: New York, NY, USA, 2021. [Google Scholar]

- Kusuma, N.; Suhardi. Detection of Online Prostitution in Twitter Platform Using Machine Learning Approach. In Proceedings of the 2021 3rd East Indonesia Conference on Computer and Information Technology (EIConCIT); 2021; pp. 55–60. [Google Scholar]

- Langton, S.; Bannister, J.; Ellison, M.; Haleem, M.S.; Krzemieniewska-Nandwani, K. Policing and Mental Ill-Health: Using Big Data to Assess the Scale and Severity of, and the Frontline Resources Committed to, Mental Ill-Health-Related Calls-for-Service. Policing A J. Policy Pract. 2021, 15, 1963–1976. [Google Scholar] [CrossRef]

- Lee, M.-C.; Vajiac, C.; Kulshrestha, A.; Levy, S.; Park, N.; Jones, C.; Rabbany, R.; Faloutsos, C. INFOSHIELD: Generalizable Information-Theoretic Human-Trafficking Detection. In Proceedings of the International Conference on Data Engineering; IEEE Computer Society: National Chiao Tung University, 2021; Vol. 2021-April, pp. 1116–1127. [Google Scholar]

- Liu, T.; Wang, S.; Fu, J.; Chen, L.; Wei, Z.; Liu, Y.; Ye, H.; Xu, L.; Wang, W.; Huang, X. Fine-Grained Element Identification in Complaint Text of Internet Fraud. In Proceedings of the International Conference on Information and Knowledge Management, Proceedings; Association for Computing Machinery: Fudan University, Shanghai, China; 2021; pp. 3268–3272. [Google Scholar]

- Meilani, Z.D.; Nizar, I.M.; Sunandar, M.F.; Hidayati, S.C. Lawyering Social: Navigating Legal Issues on Social Media Posts with a Low-Cost Data Algorithm. 2021 5th Int. Conf. Informatics Comput. Sci. (ICICOS 2021); 2021. [Google Scholar] [CrossRef]

- Meraliyev, B.; Kongratbayev, K.; Sultanova, N. Content Analysis of Extracted Suicide Texts From Social Media Networks by Using Natural Language Processing and Machine Learning Techniques. In Proceedings of the 2021 IEEE International Conference on Smart Information Systems and Technologies (SIST); 2021; pp. 1–6. [Google Scholar]

- Min, M.; Lee, J.J.; Park, H.; Lee, K. Honeypot System for Automatic Reporting of Illegal Online Gambling Sites Utilizing SMS Spam. In Proceedings of the World Automation Congress Proceedings; IEEE Computer Society: Korea University, Department of Information Security School of Cybersecurity, Seoul, South Korea, 2021; Vol. 2021-Augus. pp. 180–185.

- Mitra, S.; Tasnim, T.; Islam, M.A.R.; Khan, N.I.; Majib, M.S. A Framework to Detect and Prevent Cyberbullying from Social Media by Exploring Machine Learning Algorithms. In Proceedings of the 6th International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering, IC4ME2 2021; Institute of Electrical and Electronics Engineers Inc.: Military Institute of Science and Technology, Department of Computer Science and Engineering, Dhaka, 1216, Bangladesh, 2021.

- Mladenović, M.; Ošmjanski, V.; Stanković, S.V. Cyber-Aggression, Cyberbullying, and Cyber-Grooming: A Survey and Research Challenges. ACM Comput. Surv. 2021, 54. [Google Scholar] [CrossRef]

- Peng, H.; Li, J.; Song, Y.; Yang, R.; Ranjan, R.; Yu, P.S.; He, L. Streaming Social Event Detection and Evolution Discovery in Heterogeneous Information Networks. ACM Trans. Knowl. Discov. Data 2021, 15. [Google Scholar] [CrossRef]

- Permana, M.A.; Thohir, M.I.; Mantoro, T.; Ayu, M.A. Crime Rate Detection Based on Text Mining on Social Media Using Logistic Regression Algorithm. In Proceedings of the 7th International Conference on Computing, Engineering and Design, ICCED 2021; Institute of Electrical and Electronics Engineers Inc.: NusaPutra University, School of Computer Science, Sukabumi, Indonesia, 2021.

- Qureshi, K.A.; Sabih, M. Un-Compromised Credibility: Social Media Based Multi-Class Hate Speech Classification for Text. IEEE Access 2021, 9, 109465–109477. [Google Scholar] [CrossRef]

- Rahman, T.; Rohan, R.; Pal, D.; Kanthamanon, P. Human Factors in Cybersecurity: A Scoping Review. In Proceedings of the 12th International Conference on Advances in Information Technology; Association for Computing Machinery: New York, NY, USA, 2021. [Google Scholar]

- Rizwan, K.; Babar, S.; Nayab, S.; Hanif, M.K. HarX: Real-Time Harassment Detection Tool Using Machine Learning. In Proceedings of the International Conference of Modern Trends in ICT Industry: Towards the Excellence in the ICT Industries, MTICTI 2021; Institute of Electrical and Electronics Engineers Inc.: University of Faisalabad, Department of Computer Science: Faisalabad, Pakistan, 2021. [Google Scholar]

- Rovera, M.; Nanni, F.; Ponzetto, S.P. Event-Based Access to Historical Italian War Memoirs. J. Comput. Cult. Herit. 2021, 14. [Google Scholar] [CrossRef]

- Sajid, M.S.I.; Wei, J.; Abdeen, B.; Al-Shaer, E.; Islam, M.M.; Diong, W.; Khan, L. SODA: A System for Cyber Deception Orchestration and Automation. In Proceedings of the 37th Annual Computer Security Applications Conference; Association for Computing Machinery: New York, NY, USA, 2021; pp. 675–689. [Google Scholar]

- Samtani, S.; Li, W.; Benjamin, V.; Chen, H. Informing Cyber Threat Intelligence through Dark Web Situational Awareness: The AZSecure Hacker Assets Portal. Digit. Threat. 2021, 2. [Google Scholar] [CrossRef]

- Sasaki, S.; Miyamoto, Y. Source-Oriented POV Visualization for Multidimensional Analysis of International Conflicts and Terrorist Incidents with 5D World Map System. In Proceedings of the International Electronics Symposium 2021: Wireless Technologies and Intelligent Systems for Better Human Lives, IES 2021 - Proceedings; A.A., Y., A., K.N., H., H., P.A.M., P., F., G., M., R., Y.R., P., M., R., Eds.; Institute of Electrical and Electronics Engineers Inc.: Musashino University, Department of Data Science, 3-3-3 Ariake, Koto-ku, 135-8181, Japan, 2021. pp. 323–330.

- Seyler, D.; Li, L.; Zhai, C. Semantic Text Analysis for Detection of Compromised Accounts on Social Networks. In Proceedings of the 12th IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining; IEEE Press, 2021; pp. 417–424. [Google Scholar]

- Sharma, C.; Ramakrishnan, R.; Pendse, A.; Chimurkar, P.; Talele, K.T. Cyber-Bullying Detection via Text Mining and Machine Learning. In Proceedings of the 2021 12th International Conference on Computing Communication and Networking Technologies, ICCCNT 2021; Institute of Electrical and Electronics Engineers Inc.: Electronics Engineering Department, Sardar Patel Institute of Technology, Mumbai, India, 2021.

- Sheng, Q.; Zhang, X.; Cao, J.; Zhong, L. Integrating Pattern- and Fact-Based Fake News Detection via Model Preference Learning. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1640–1650. [Google Scholar]

- Shi, T.; Wang, N.; Zhang, L. LDA-CBOW-Based Mining Model for Risky Driving Behavior in Traffic Accidents. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing Ltd: Beijing Police College, Beijing, 102202, China, 2021; Vol. 2138.

- Singh Yadav, A.K.; Sora, M. Fraud Detection in Financial Statements Using Text Mining Methods: A Review. In Proceedings of the IOP Conference Series: Materials Science and Engineering; Dept. of CSE, NERIST, Itanagar, Arunachal Pradesh, India, 2021; Vol. 1020.

- Solanke, A.A.; Chen, X.; Ramirez-Cruz, Y. Pattern Recognition and Reconstruction: Detecting Malicious Deletions in Textual Communications. In Proceedings of the 2021 IEEE International Conference on Big Data, Big Data 2021; Y., C., H., L., Y., T., U., F., X., Z., X.T., H., S., B., X., L., J., Z., S., P., V., P., J., W., A., C., C., O., Eds.; Institute of Electrical and Electronics Engineers Inc.: University of Bologna, Cirsfid-AlmaAI, Bologna, Italy, 2021; pp. 2574–2582.

- Thaipisutikul, T.; Tuarob, S.; Pongpaichet, S.; Amornvatcharapong, A.; Shih, T.K. Automated Classification of Criminal and Violent Activities in Thailand from Online News Articles. In Proceedings of the KST 2021 - 2021 13th International Conference Knowledge and Smart Technology; Faculty of Information and Communication Technology, Mahidol University: Thailand, 2021; pp. 170–175. [Google Scholar]

- Toubes, D.R.; Araújo-Vila, N. The Treatment of Language in Travel Advisories as a Covert Tool of Political Sanction. Tour. Manag. Perspect. 2021, 40. [Google Scholar] [CrossRef]

- Tundis, A.; Melnik, M.; Naveed, H.; Mühlhäuser, M. A Social Media-Based over Layer on the Edge for Handling Emergency-Related Events. Comput. Electr. Eng. 2021, 96. [Google Scholar] [CrossRef]

- Ul Rehman, Z.; Abbas, S.; Khan, M.A.; Mustafa, G.; Fayyaz, H.; Hanif, M.; Saeed, M.A. Understanding the Language of ISIS: An Empirical Approach to Detect Radical Content on Twitter Using Machine Learning. Comput. Mater. Contin. 2021, 66, 1075–1090. [Google Scholar] [CrossRef]

- Wang, A.; Potika, K. Cyberbullying Classification Based on Social Network Analysis. In Proceedings of the IEEE 7th International Conference on Big Data Computing Service and Applications, BigDataService 2021; Institute of Electrical and Electronics Engineers Inc.: Department of Computer Science, San Jose State University, San Jose, United States, 2021; pp. 87–95.

- Wu, D.; Shi, W.; Ma, X. A Novel Real-Time Anti-Spam Framework. ACM Trans. Internet Technol. 2021, 21. [Google Scholar] [CrossRef]

- Wu, T.; Ma, W.; Wen, S.; Xia, X.; Paris, C.; Nepal, S.; Xiang, Y. Analysis of Trending Topics and Text-Based Channels of Information Delivery in Cybersecurity. ACM Trans. Internet Technol. 2021, 22. [Google Scholar] [CrossRef]

- Yao, S. Application of Data Mining Technology in Financial Fraud Identification. In Proceedings of the ACM International Conference Proceeding Series; Association for Computing Machinery: Business School, Nanjing University, Nanjing, 210023, China, 2021; pp. 2919–2922.

- Yin, X.; Zhu, Y.; Hu, J. A Comprehensive Survey of Privacy-Preserving Federated Learning: A Taxonomy, Review, and Future Directions. ACM Comput. Surv. 2021, 54. [Google Scholar] [CrossRef]

- Zaimi, R.; Hafidi, M.; Lamia, M. A Literature Survey on Anti-Phishing in Websites. In Proceedings of the 4th International Conference on Networking, Information Systems & Security; Association for Computing Machinery: New York, NY, USA, 2021. [Google Scholar]

- Shrestha, A.; Akrami, N.; Kaati, L. Introducing Digital-7: Threat Assessment of Individuals in Digital Environments. In Proceedings of the 12th IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining; IEEE Press, 2021; pp. 720–726. [Google Scholar]

- Pandya, D.D.; Amarawat, G.; Jadeja, A.; Degadwala, S.; Vyas, D. Analysis and Prediction of Location Based Criminal Behaviors Through Machine Learning. In Proceedings of the 2022 International Conference on Edge Computing and Applications (ICECAA); 2022; pp. 1324–1332. [Google Scholar]

- Chi, X. Research On Crime Feature Mining Based On Extraction of Spatio-Temporal Elements of Cases. In Proceedings of the 2022 15th International Symposium on Computational Intelligence and Design (ISCID); 2022; pp. 282–285. [Google Scholar]

- Labanan, R.M.; Muñoz, N.D.S. A Study on the Usability of Text Analysis on Web Artifacts for Digital Forensic Investigation. In Proceedings of the 2022 2nd International Conference in Information and Computing Research (iCORE); 2022; pp. 54–59. [Google Scholar]

- Tsur, A.M.; Nadler, R.; Sorkin, A.; Lipkin, I.; Gelikas, S.; Chen, J.; Benov, A. Patterns in Vehicle-Ramming Attacks. Isr. Med. Assoc. J. 2022, 24, 579–583. [Google Scholar]

- Raja, K.; Bakaraniya, P. A Review On Social Media Crime Related Users Prediction Methodology. In Proceedings of the 2022 Second International Conference on Artificial Intelligence and Smart Energy (ICAIS); 2022; pp. 698–703. [Google Scholar]

- Torres-Berru, Y.; López Batista, V.F. Data and Text Mining for the Detection of Fraud in Public Contracts: A Case Study of Ecuador’s Official Public Procurement System. In Proceedings of the Lecture Notes in Electrical Engineering; S., B., K., A., Eds.; Springer Science and Business Media Deutschland GmbH: Universidad de Salamanca Plaza de la Merced, Salamanca, Spain, 2022; Vol. 846 LNEE, pp. 116–127.

- Fridlund, M.; Brodén, D.; Jauhiainen, T.; Malkki, L.; Olsson, L.-J.; Borin, L. Trawling and Trolling for Terrorists in the Digital Gulf of Bothnia: Cross-Lingual Text Mining for the Emergence of Terrorism in Swedish and Finnish Newspapers, 1780-1926. In CLARIN: The Infrastructure for Language Resources; De Gruyter: Centre for Digital Humanities, Department of Literature, History of Ideas and Religion, University of Gothenburg, Gothenburg, Sweden, 2022; pp. 781–801; ISBN 978-311076737-7; 978-311076734-6.

- Liu, Y.; Xu, Z. An Empirical Analysis of Rape Sentence Based on SPSS. In Proceedings of the 2022 5th International Conference on Software Engineering and Information Management; Association for Computing Machinery: New York, NY, USA, 2022; pp. 182–187. [Google Scholar]

- Anastasiadis, M.; Aivatoglou, G.; Spanos, G.; Voulgaridis, A.; Votis, K. Combining Text Analysis Techniques with Unsupervised Machine Learning Methodologies for Improved Software Vulnerability Management. In Proceedings of the 2022 IEEE International Conference on Cyber Security and Resilience (CSR); 2022; pp. 273–278. [Google Scholar]

- Balasaraswathi, V.R.; Mary Shamala, L.; Hamid, Y.; Pachhaiammal Alias Priya, M.; Shobana, M.; Sugumaran, M. An Efficient Feature Selection for Intrusion Detection System Using B-HKNN and C2 Search Based Learning Model. Neural Process. Lett. 2022, 54, 5143–5167. [Google Scholar] [CrossRef]

- Müller, W.; Mühlenberg, D.; Pallmer, D.; Zeltmann, U.; Ellmauer, C.; Carrasco, F.J.P.; Garcia, A.G.; Demestichas, K.; Peppes, N.; Touska, D.; et al. Knowledge Engineering for Crime Investigation. In Proceedings of the World Multi-Conference on Systemics, Cybernetics and Informatics, WMSCI; N.C., C., J., H., B., S., M., S., Eds.; International Institute of Informatics and Cybernetics: Fraunhofer IOSB, Karlsruhe, 76131, Germany, 2022; Vol. 3, pp. 64–69.

- Nisha, M.; Jebathangam, J. Detection and Classification of Cyberbullying in Social Media Using Text Mining. In Proceedings of the 6th International Conference on Electronics, Communication and Aerospace Technology, ICECA 2022 - Proceedings; Institute of Electrical and Electronics Engineers Inc.: Vistas, Department of Computer Science, Chennai, India, 2022; pp. 856–861.

- Michell, C.; Winarto, C.N.; Bestari, L.; Ramdhan, D.; Chowanda, A. Systematic Literature Review of E-Wallet: The Technology and Its Regulations in Indonesia. In Proceedings of the 2022 International Conference on Information Technology Systems and Innovation, ICITSI 2022 - Proceedings; Institute of Electrical and Electronics Engineers Inc.: School of Computer Science, Bina Nusantara University, Computer Science Department, Jakarta, 11480, Indonesia, 2022; pp. 64–69.

- Edlund, J.; Brodén, D.; Fridlund, M.; Lindhé, C.; Olsson, L.-J.; Ängsal, M.P.; Öhberg, P. A Multimodal Digital Humanities Study of Terrorism in Swedish Politics: An Interdisciplinary Mixed Methods Project on the Configuration of Terrorism in Parliamentary Debates, Legislation, and Policy Networks 1968–2018. In Proceedings of the Lecture Notes in Networks and Systems; K., A., Ed.; Springer Science and Business Media Deutschland GmbH: KTH Speech, Music & Hearing, Department of Intelligent Systems, KTH Royal Institute of Technology, Stockholm, 100 44, Sweden, 2022; Vol. 295, pp. 435–449.

- Zhou, T.; Zhao, H.; Zhang, X. Keyword Extraction Based on Random Forest and XGBoost - An Example of Fraud Judgment Document. In Proceedings of the 2022 European Conference on Natural Language Processing and Information Retrieval (ECNLPIR); 2022; pp. 17–22. [Google Scholar]

- Bahaweres, R.B.; Nugrahanti, D.A. Implementation of Text Association Rules about Terrorism on Twitter in Indonesia. In Proceedings of the 2022 10th International Conference on Cyber and IT Service Management, CITSM 2022; Institute of Electrical and Electronics Engineers Inc.: Informatics Uin Syarif Hidayatullah Jakarta South, Tangerang, Indonesia, 2022.

- Husák, M.; Čermák, M. SoK: Applications and Challenges of Using Recommender Systems in Cybersecurity Incident Handling and Response. In Proceedings of the 17th International Conference on Availability, Reliability and Security; Association for Computing Machinery: New York, NY, USA, 2022. [Google Scholar]

- Li, J. Analyse of Influence of Adversarial Samples on Neural Network Attacks with Different Complexities. In Proceedings of the 2022 2nd International Signal Processing, Communications and Engineering Management Conference, ISPCEM 2022; Institute of Electrical and Electronics Engineers Inc.: Chongqing University of Posts and Telecommunications, Chongqing, China, 2022; pp. 329–333.

- Kovalchuk, O.; Banakh, S.; Masonkova, M.; Berezka, K.; Mokhun, S.; Fedchyshyn, O. Text Mining for the Analysis of Legal Texts. In Proceedings of the International Conference on Advanced Computer Information Technologies, ACIT; West Ukrainian National University, Department of Applied Mathematics, Ternopil, Ukraine, 2022; pp. 502–505.

- Wieck, F.; Stein, N. V.; Lower, M. Improving Safety in Europe-Detecting Counterfeit Certificates from FFP2 Masks: A Text-Mining Approach. In Proceedings of the ISPCE 2022 - IEEE International Symposium on Product Compliance Engineering; Institute of Electrical and Electronics Engineers Inc.: University of Wuppertal Wuppertal, Dept. of Product Safety and Quality, Germany, 2022.

- Febro-Naga, J.-D.; Tinam-isan, M.-A.-C. Exploring Cyber Violence against Women and Girls in the Philippines through Mining Online News. Comunicar 2022, 30, 121–133. [Google Scholar] [CrossRef]

- Min, M.; Lee, J.J.; Lee, K. Detecting Illegal Online Gambling (IOG) Services in the Mobile Environment. Secur. Commun. Networks 2022, 2022. [Google Scholar] [CrossRef]

- de Morais, J.P.M.; Merschmann, L.H. de C. A Cascade Approach for Gender Prediction from Texts in Portuguese Language. In Proceedings of the Brazilian Symposium on Multimedia and the Web; Association for Computing Machinery: New York, NY, USA, 2022; pp. 142–149. [Google Scholar]

- Panggabean, S.; Gata, W.; Setiawan, T.A. Analysis of Twitter Sentiment Towards Madrasahs Using Classification Methods. J. Appl. Eng. Technol. Sci. 2022, 4, 375–389. [Google Scholar] [CrossRef]

- Khandelwal, S.; Chaudhary, A. COVID-19 Pandemic & Cyber Security Issues: Sentiment Analysis and Topic Modeling Approach. J. Discret. Math. Sci. Cryptogr. 2022, 25, 987–997. [Google Scholar] [CrossRef]

- Aguerri, J.C.; Miró-Llinares, F.; Vila-Viñas, D. When Social Media Feeds Classic Punitivism on Media: The Coverage of the Glorification of Terrorism on XXI. Criminol. Crim. Justice 2022. [Google Scholar] [CrossRef]

- Wilson, M.; Spike, E.; Karystianis, G.; Butler, T. Nonfatal Strangulation During Domestic Violence Events in New South Wales: Prevalence and Characteristics Using Text Mining Study of Police Narratives. Violence Against Women 2022, 28, 2259–2285. [Google Scholar] [CrossRef] [PubMed]

- Withall, A.; Karystianis, G.; Duncan, D.; Hwang, Y.I.; Kidane, A.H.; Butler, T. Domestic Violence in Residential Care Facilities in New South Wales, Australia: A Text Mining Study. Gerontologist 2022, 62, 223–231. [Google Scholar] [CrossRef] [PubMed]

- Algefes, A.; Aldossari, N.; Masmoudi, F.; Kariri, E. A Text-Mining Approach for Crime Tweets in Saudi Arabia: From Analysis to Prediction. 2022 7th Int. Conf. Data Sci. Mach. Learn. Appl. (CDMA 2022) 2022, 109–114. [Google Scholar] [CrossRef]

- Boukabous, M.; Azizi, M. Multimodal Sentiment Analysis Using Audio and Text for Crime Detection. 2022 2nd Int. Conf. Innov. Res. Appl. Sci. Eng. Technol. 2022, 803–807.

- Gunarathne, P.; Rui, H.X.; Seidmann, A. Racial Bias in Customer Service: Evidence from Twitter. Inf. Syst. Res. 2022, 33, 43–54. [Google Scholar] [CrossRef]

- Feng, Y.Y.; Li, G.W.; Sun, X.L.; Li, J.P. Identification of Tourists’ Dynamic Risk Perception-the Situation in Tibet. Humanit. Soc. Sci. Commun. 2022, 9. [Google Scholar] [CrossRef] [PubMed]

- Unlu, A.; Yilmaz, K. Online Terrorism Studies: Analysis of the Literature. Stud. Confl. Terror. 2022. [Google Scholar] [CrossRef]

- Bridgelall, R. An Application of Natural Language Processing to Classify What Terrorists Say They Want. Soc. Sci. 2022, 11. [Google Scholar] [CrossRef]

- Ryu, H.; Kim, C.; Kim, J.; Lee, S.J.; Lee, J.Y. Understanding the Road Rage Behavior and Implications: A Textual Approach Using Legal Cases in Korea. Transp. Res. Interdiscip. Perspect. 2022, 16. [Google Scholar] [CrossRef]

- Mothe, J.; Ullah, M.Z.; Okon, G.; Schweer, T.; Jursenas, A.; Mandravickaite, J. Instruments and Tools to Identify Radical Textual Content. Information 2022, 13. [Google Scholar] [CrossRef]

- Charmanas, K.; Mittas, N.; Angelis, L. Predicting the Existence of Exploitation Concepts Linked to Software Vulnerabilities Using Text Mining. In Proceedings of the 25th Pan-Hellenic Conference on Informatics; Association for Computing Machinery: New York, NY, USA, 2022; pp. 352–356. [Google Scholar]

- Li, Y. Towards Forecasting Internet Financial Frauds Based on Advertising. In Proceedings of the 2022 8th International Conference on Big Data and Information Analytics, BigDIA 2022; Institute of Electrical and Electronics Engineers Inc.: Peking University, China, 2022; pp. 5–11.

- Tampus-Siena, M. Analyzing the Discussion of Gregorio Murder on Twitter Using Text Mining Approach. Comput. Hum. Behav. Reports 2022, 8. [Google Scholar] [CrossRef]

- Borah, A.R.; Krishna, B.V.S.; Shrinidhi, M.H.; Gouda, D.N.; Poojary, P. The Soul Safety. In Proceedings of the 2022 3rd International Conference on Communication, Computing and Industry 4.0, C2I4 2022; Institute of Electrical and Electronics Engineers Inc.: New Horizon College of Engineering, Department of Computer Science and Engineering, Bangalore, India, 2022.

- Chaudhary, M.; Bansal, D. Open Source Intelligence Extraction for Terrorism-Related Information: A Review. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2022, 12. [Google Scholar] [CrossRef]

- Lindstadt, C.; Boyer, B.P.; Ciszek, E.; Chung, A.; Wilcox, G. Drunk Girl: A Brief Thematic Analysis of Twitter Posts about Alcohol Use and #MeToo. Qual. Res. Reports Commun. 2022, 23, 90–104. [Google Scholar] [CrossRef]

- Alnazzawi, N. Using Twitter to Detect Hate Crimes and Their Motivations: The HateMotiv Corpus. Data 2022, 7. [Google Scholar] [CrossRef]

- Zhao, F.; Skums, P.; Zelikovsky, A.; Sevigny, E.L.; Swahn, M.H.; Strasser, S.M.; Huang, Y.; Wu, Y. Computational Approaches to Detect Illicit Drug Ads and Find Vendor Communities Within Social Media Platforms. IEEE/ACM Trans. Comput. Biol. Bioinforma. 2022, 19, 180–191. [Google Scholar] [CrossRef]

- Hsieh, H.-P.; Jiang, J.; Yang, T.-H.; Hu, R.; Wu, C.-L. Predicting the Success of Mediation Requests Using Case Properties and Textual Information for Reducing the Burden on the Court. Digit. Gov. Res. Pr. 2022, 2. [Google Scholar] [CrossRef]

- Sadlek, L.; Čeleda, P.; Tovarňák, D. Current Challenges of Cyber Threat and Vulnerability Identification Using Public Enumerations. In Proceedings of the 17th International Conference on Availability, Reliability and Security; Association for Computing Machinery: New York, NY, USA, 2022. [Google Scholar]

- Ferreira, F.; Duarte, J.; Ugulino, W. Automated Statistics Extraction of Public Security Events Reported Through Microtexts on Social Networks. In Proceedings of the ACM International Conference Proceeding Series; W., S., V.V., G.N., A., de L.F., R.C.G., B., A.R., G., Eds.; Association for Computing Machinery: Instituto Militar de Engenharia Rio de Janeiro, Rio de Janeiro, Brazil, 2022; Vol. Par F18047.

- Xu, Y.; Chen, G.; Liu, Q.; Xu, W.; Zhang, L.; Wu, J.; Fan, X. A Phishing Website Detection and Recognition Method Based on Naive Bayes. In Proceedings of the IEEE 6th Information Technology and Mechatronics Engineering Conference, ITOEC 2022; B., X., K., M., Eds.; Institute of Electrical and Electronics Engineers Inc.: Institute for Cyberspace Intelligence and Crime Governance, Zhejiang Police College, Hangzhou, China, 2022; pp. 1557–1562.

- de Carvalho, V.D.H.; Costa, A.P.C.S. Towards Corpora Creation from Social Web in Brazilian Portuguese to Support Public Security Analyses and Decisions. Libr. Hi Tech 2022. [Google Scholar] [CrossRef]

- Bridgelall, R. Applying Unsupervised Machine Learning to Counterterrorism. J. Comput. Soc. Sci. 2022, 5, 1099–1128. [Google Scholar] [CrossRef]

- Mantoro, T.; Permana, M.A.; Ayu, M.A. Crime Index Based on Text Mining on Social Media Using Multi Classifier Neural-Net Algorithm. Telkomnika (Telecommunication Comput. Electron. Control) 2022, 20, 570–579. [Google Scholar] [CrossRef]

- Casimiro, G.R.; Digiampietri, L.A. Authorship Attribution with Temporal Data in Reddit. In Proceedings of the XVIII Brazilian Symposium on Information Systems; Association for Computing Machinery: New York, NY, USA, 2022. [Google Scholar]

- Mansour Khoudja, A.; Loukam, M.; Belkredim, F.Z. Towards Author Profiling from Modern Standard Arabic Texts: A Review. In Proceedings of the Lecture Notes in Networks and Systems; X., Y., S., S., N., D., A., J., Eds.; Springer Science and Business Media Deutschland GmbH: Hassiba Benbouali University of Chlef, Chlef, Algeria, 2022; Vol. 235, pp. 745–753.

- Cascavilla, G.; Catolino, G.; Ebert, F.; Tamburri, D.A.; van den Heuvel, W.J. “When the Code Becomes a Crime Scene” Towards Dark Web Threat Intelligence with Software Quality Metrics. In Proceedings of the 2022 IEEE International Conference on Software Maintenance and Evolution (ICSME); 2022; pp. 439–443. [Google Scholar]

- Gupta, A.; Matta, P.; Pant, B. Identification of Cybercriminals in Social Media Using Machine Learning. In Proceedings of the 2022 International Conference on Smart Generation Computing, Communication and Networking, SMART GENCON 2022; Institute of Electrical and Electronics Engineers Inc.: Graphic Era Deemed to Be University, Graphic Era Hill University, Dehradun, India, 2022.

- Deguara, N.; Arshad, J.; Paracha, A.; Azad, M.A. Threat Miner - A Text Analysis Engine for Threat Identification Using Dark Web Data. In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data); 2022; pp. 3043–3052. [Google Scholar]

- Bifari, E.; Alhalabi, W. Exploring Narrative Court Documents for Use in Police Academic Education. In Proceedings of the 2022 14th IEEE International Conference on Computational Intelligence and Communication Networks, CICN 2022; Institute of Electrical and Electronics Engineers Inc.: King Abdulaziz University, Computer Science Department, Jeddah, Saudi Arabia, 2022; pp. 41–45.

- Aldossari, N.; Algefes, A.; Masmoudi, F.; Kariri, E. Data Science Approach for Crime Analysis and Prediction: Saudi Arabia Use-Case. In Proceedings of the 2022 Fifth International Conference of Women in Data Science at Prince Sultan University (WiDS PSU); 2022; pp. 20–25. [Google Scholar]

- Biswas, B.; Mukhopadhyay, A.; Bhattacharjee, S.; Kumar, A.; Delen, D. A Text-Mining Based Cyber-Risk Assessment and Mitigation Framework for Critical Analysis of Online Hacker Forums. Decis. Support Syst. 2022, 152. [Google Scholar] [CrossRef]

- Lyu, Y.; Wang, Z.; Ren, Z.; Ren, P.; Chen, Z.; Liu, X.; Li, Y.; Li, H.; Song, H. Improving Legal Judgment Prediction through Reinforced Criminal Element Extraction. Inf. Process. Manag. 2022, 59. [Google Scholar] [CrossRef]

- dos Santos, A.R.S.; Rodrigues, C.M. de O.; de Melo, H.B.S. Identifying Xenophobia in Twitter Posts Using Support Vector Machine with TF/IDF Strategy. In Proceedings of the XVIII Brazilian Symposium on Information Systems; Association for Computing Machinery: New York, NY, USA, 2022. [Google Scholar]

- Büsgen, A.; Klöser, L.; Kohl, P.; Schmidts, O.; Kraft, B.; Zündorf, A. From Cracked Accounts to Fake IDs: User Profiling on German Telegram Black Market Channels. In Proceedings of the Communications in Computer and Information Science; A., C., O., G., S., H., C., Q., Eds.; Springer Science and Business Media Deutschland GmbH: Aachen University of Applied Sciences, Aachen, 52066, Germany, 2023; Vol. 1860 CCIS, pp. 176–202.

- Al-Alawi, A.I.; A-Lmansouri, A.M. Artificial Intelligence in the Judiciary System of Saudi Arabia: A Literature Review. In Proceedings of the 2023 International Conference On Cyber Management And Engineering (CyMaEn); 2023; pp. 83–87. [Google Scholar]

- Karteris, A.; Tzanos, G.; Papadopoulos, L.; Soudris, D. Detection of Cyber Security Threats through Social Media Platforms. In Proceedings of the 2023 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW); 2023; pp. 820–823. [Google Scholar]

- Chauhan, R.; Upadhyay, S.; Vaidya, H. Fake News Detection Based on Machine Learning Algorithm. In Proceedings of the 2023 3rd International Conference on Innovative Sustainable Computational Technologies (CISCT); 2023; pp. 1–5. [Google Scholar]

- Aaditya Anil, K.; Sasikumar, K.; Nambiar, R.K.; Rohith, K.P.; Viji Rajendran, V. Unmasking Cyberbullies on Social Media Platforms Using Machine Learning. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies, ICCCNT 2023; Institute of Electrical and Electronics Engineers Inc.: Nss College of Engineering, Dept. of Computer Science, Kerala, Palakkad, India, 2023.

- Saravanan, S.; Menon, A.; Saravanan, K.; Hariharan, S.; Nelson, L.; Gopalakrishnan, J. Cybersecurity Audits for Emerging and Existing Cutting Edge Technologies. In Proceedings of the 2023 11th International Conference on Intelligent Systems and Embedded Design (ISED); 2023; pp. 1–7. [Google Scholar]

- Sun, P.; Zuo, Y.; Wang, Y. Classification Model for NAVTEX Navigational Warning Messages Based on Adaptive Weighted TF-IDF. In Proceedings of the Proceedings of the 10th Multidisciplinary International Social Networks Conference; Association for Computing Machinery: New York, NY, USA, 2023; pp. 133–142. [Google Scholar]

- Vajiac, C.; Lee, M.-C.; Kulshrestha, A.; Levy, S.; Park, N.; Olligschlaeger, A.; Jones, C.; Rabbany, R.; Faloutsos, C. DeltaShield: Information Theory for Human- Trafficking Detection. ACM Trans. Knowl. Discov. Data 2023, 17. [Google Scholar] [CrossRef]

- Saeed, A.; Khan, H.U.; Shankar, A.; Imran, T.; Khan, D.; Kamran, M.; Khan, M.A. Topic Modeling Based Text Classification Regarding Islamophobia Using Word Embedding and Transformers Techniques. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023. [Google Scholar] [CrossRef]

- Santiago, N.; Mendez, J. Analysis of Common Vulnerabilities and Exposures to Produce Security Trends. In Proceedings of the Proceedings of the 2022 International Conference on Cyber Security; Association for Computing Machinery: New York, NY, USA, 2023; pp. 16–19. [Google Scholar]

- Rahmat, R.F.; Aziira, A.H.; Purnamawati, S.; Pane, Y.M.; Faza, S.; Nadi, F. Classifying Indonesian Cyber Crime Cases under ITE Law Using a Hybrid of Mutual Information and Support Vector Machine. Int. J. Saf. Secur. Eng. 2023, 13, 835–844. [Google Scholar] [CrossRef]

- Correia, F.A.; Nunes, J.L.; Alves, P.H.; Lopes, H. Dynamic Topic Modeling with Tensor Decomposition as a Tool to Explore the Legal Precedent Relevance Over Time. In Proceedings of the Proceedings of the ACM Symposium on Document Engineering 2023; Association for Computing Machinery: New York, NY, USA, 2023. [Google Scholar]

- Pejic-Bach, M.; Jajic, I.; Kamenjarska, T. A Bibliometric Analysis of Phishing in the Big Data Era: High Focus on Algorithms and Low Focus on People. In Proceedings of the Procedia Computer Science; R., M., R., R., M.M., C.-C., D., D., E., P., Eds.; Elsevier B.V.: Faculty of Economics and Business, University of Zagreb, Square of John F. Kennedy 6, Zagreb, 10 000, Croatia, 2023; Vol. 219, pp. 91–98.

- Berhoum, A.; Meftah, M.C.E.; Laouid, A.; Hammoudeh, M. An Intelligent Approach Based on Cleaning up of Inutile Contents for Extremism Detection and Classification in Social Networks. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22. [Google Scholar] [CrossRef]

- Sivanantham, K.; Blessington Praveen, P.; Deepa, V.; Mohan Kumar, R. Cybercrime Sentimental Analysis for Child Youtube Video Dataset Using Hybrid Support Vector Machine with Ant Colony Optimization Algorithm. In Studies in Computational Intelligence; Springer Science and Business Media Deutschland GmbH: HCL Technologies: Tamilnadu, Coimbatore, India, 2023; Vol. 1080, pp. 175–193, ISBN 1860949X (ISSN). [Google Scholar]

- Gómez-Camacho, A.; Hunt-Gómez, C.I.; Núñez-Roman, F.; Esteban, A.N. “Not All Motherfuckers Are MENA, but Most MENA Are Motherfuckers”: Hate Speech on Twitter against Unaccompanied Foreign Minors. J. Lang. Aggress. Confl. 2023, 11, 256–278. [Google Scholar] [CrossRef]

- Zhen, Z.; Gao, J. Chinese Cyber Threat Intelligence Named Entity Recognition via RoBERTa-Wwm-RDCNN-CRF. Comput. Mater. Contin. 2023, 77, 299–321. [Google Scholar] [CrossRef]

- Parker, M.A.; Valdez, D.; Rao, V.K.; Eddens, K.S.; Agley, J. Results and Methodological Implications of the Digital Epidemiology of Prescription Drug References Among Twitter Users: Latent Dirichlet Allocation (LDA) Analyses. J. Med. Internet Res. 2023, 25. [Google Scholar] [CrossRef] [PubMed]

- Aljohani, E.J.; Yafooz, W.M.S.; Alsaeedi, A. Cyberbullying Detection Approaches: A Review. In Proceedings of the Proceedings of the 5th International Conference on Inventive Research in Computing Applications, ICIRCA 2023; Institute of Electrical and Electronics Engineers Inc.: College of Computer Science and Engineering, Taibah University, Department of Computer Science, Madinah, Saudi Arabia, 2023; pp. 1310–1316.

- Goldstein, E. V.; Mooney, S.J.; Takagi-Stewart, J.; Agnew, B.F.; Morgan, E.R.; Haviland, M.J.; Zhou, W.; Prater, L.C. Characterizing Female Firearm Suicide Circumstances: A Natural Language Processing and Machine Learning Approach. Am. J. Prev. Med. 2023, 65, 278–285. [Google Scholar] [CrossRef]

- Ptaszek, G.; Yuskiv, B.; Khomych, S. War on Frames: Text Mining of Conflict in Russian and Ukrainian News Agency Coverage on Telegram during the Russian Invasion of Ukraine in 2022. Media, War Confl. 2023. [Google Scholar] [CrossRef]

- Kovalchuk, O.; Banakh, S.; Kasianchuk, M.; Moskaliuk, N.; Kaniuka, V. Associative Rule Mining for the Assessment of the Risk of Recidivism. In Proceedings of the CEUR Workshop Proceedings; T., H., O., S., P.T., P., S., L., Eds.; CEUR-WS: West Ukrainian National University, 11 Lvivska str., Ternopil, 46009, Ukraine, 2023; Vol. 3373, pp. 376–387.

- Sood, P.; Sharma, C.; Nijjer, S.; Sakhuja, S. Review the Role of Artificial Intelligence in Detecting and Preventing Financial Fraud Using Natural Language Processing. Int. J. Syst. Assur. Eng. Manag. 2023, 14, 2120–2135. [Google Scholar] [CrossRef]

- Hui, V.; Eby, M.; Constantino, R.E.; Lee, H.; Zelazny, J.; Chang, J.C.; He, D.; Lee, Y.J. Examining the Supports and Advice That Women With Intimate Partner Violence Experience Received in Online Health Communities: Text Mining Approach. J. Med. Internet Res. 2023, 25. [Google Scholar] [CrossRef] [PubMed]

- Qiu, M.; Zhang, X.; Wang, X. An Ex-Convict Recognition Method Based on Text Mining. Int. J. Secur. Networks 2023, 18, 10–18. [Google Scholar] [CrossRef]

- Bashar, M.A.; Nayak, R.; Knapman, G.; Turnbull, P.; Fforde, C. An Informed Neural Network for Discovering Historical Documentation Assisting the Repatriation of Indigenous Ancestral Human Remains. Soc. Sci. Comput. Rev. 2023, 41, 2293–2317. [Google Scholar] [CrossRef]

- Salama, R.; Al-Turjman, F.; Altrjman, C.; Kumar, S.; Chaudhary, P. A Comprehensive Survey of Blockchain-Powered Cybersecurity- A Survey. In Proceedings of the 2023 International Conference on Computational Intelligence, Communication Technology and Networking (CICTN); 2023; pp. 774–777. [Google Scholar]

- Rahman, M.R.; Hezaveh, R.M.; Williams, L. What Are the Attackers Doing Now? Automating Cyberthreat Intelligence Extraction from Text on Pace with the Changing Threat Landscape: A Survey. ACM Comput. Surv. 2023, 55. [Google Scholar] [CrossRef]

- D., Y.; Panduro-Ramirez, J.; Buddhi, D.; Vekariya, V.; Pillai, B.G.; Tida, N. The Effective Role of Cyber Security in Supply Chain to Enhance Supply Chain Performance and Collaboration. In Proceedings of the 2023 3rd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE); 2023; pp. 838–842.

- Rathor, K.; Vidya, S.; Jeeva, M.; Karthivel, M.; Ghate, S.N.; Malathy, V. Intelligent System for ATM Fraud Detection System Using C-LSTM Approach. In Proceedings of the 2023 4th International Conference on Electronics and Sustainable Communication Systems (ICESC); 2023; pp. 1439–1444. [Google Scholar]

- Karthika, I.; Boomika, G.; Nisha, R.; Shalini, M.; Srivarshini, S.P. A Survey on Detecting and Preventing Hateful Comments on Social Media Using Deep Learning. In Proceedings of the Smart Innovation, Systems and Technologies; J., C., P., M., T., P., A., J., Eds.; Springer Science and Business Media Deutschland GmbH: Department of Computer Science and Engineering, M.Kumarasamy College of Engineering, Tamil Nadu, Karur, 639113, India, 2023; Vol. 312, pp. 285–298.

- Stalidi, C.; Popovici, E.-C.; Suciu, G. Preliminary Architecture and a Pilot Implementation for a Malicious Emails Detection Solution. In Proceedings of the 15th International Conference on Electronics, Computers and Artificial Intelligence, ECAI 2023 - Proceedings; Institute of Electrical and Electronics Engineers Inc.: Politehnica University of Bucharest, Beia Consult International, Faculty of Electronics, Telecommunications, and Information Technology, Telecommunications Dept., R&d Department, Bucharest, Romania, 2023.

- Chatzimarkaki, G.; Karagiorgou, S.; Konidi, M.; Alexandrou, D.; Bouras, T.; Evangelatos, S. Harvesting Large Textual and Multimedia Data to Detect Illegal Activities on Dark Web Marketplaces. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData); 2023; pp. 4046–4055. [Google Scholar]

- Sharanya, G.; Chandrasekaran, D.; Sre, M.D.; Sathiyanarayanan, M. Predicting Abnormal User Behaviour Patterns in Social Media Platforms Based on Process Mining. In Proceedings of the 2023 International Conference on Intelligent and Innovative Technologies in Computing, Electrical and Electronics (IITCEE); 2023; pp. 204–209.

- Guo, Z.; Wang, P.; Cho, J.H.; Huang, L.F. ACM Text Mining-Based Social-Psychological Vulnerability Analysis of Potential Victims To Cybergrooming: Insights and Lessons Learned. COMPANION WORLD WIDE WEB Conf. WWW 2023 2023, 1381–1388. [Google Scholar] [CrossRef]

- Lee, C.S.; Jang, A. Questing for Justice on Twitter: Topic Modeling of #StopAsianHate Discourses in the Wake of Atlanta Shooting. CRIME Delinq. 2023, 69, 2874–2900. [Google Scholar] [CrossRef]

- Bera, D.; Ogbanufe, O.; Kim, D.J. Towards a Thematic Dimensional Framework of Online Fraud: An Exploration of Fraudulent Email Attack Tactics and Intentions. Decis. Support Syst. 2023, 171. [Google Scholar] [CrossRef]

- Zhuchkova, S.; Kazun, A. Exploring Gender Bias in Homicide Sentencing: An Empirical Study of Russian Court Decisions Using Text Mining. HOMICIDE Stud. 2023. [Google Scholar] [CrossRef]

- Kumar, S. Negative Stances Detection from Multilingual Data Streams in Low-Resource Languages on Social Media Using BERT and CNN-Based Transfer Learning Model. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2024, 23. [Google Scholar] [CrossRef]

- Jones, G.M.; Santhiya, P.; Winster, S.G.; Sundar, R. An Intelligent Analysis of Mobile Evidence Using Sentimental Analysis. In Proceedings of the Lecture Notes in Electrical Engineering; S.J., P., N.K., C., B.N., G., S.S., I., Eds.; Springer Science and Business Media Deutschland GmbH: Department of Computer Science and Engineering, Sathyabama Institute of Science and Technology, Chennai, India, 2024; Vol. 1075 LNEE, pp. 317–330.

- Fiesler, C.; Zimmer, M.; Proferes, N.; Gilbert, S.; Jones, N. Remember the Human: A Systematic Review of Ethical Considerations in Reddit Research. Proc. ACM Hum.-Comput. Interact. 2024, 8. [Google Scholar] [CrossRef]

- Shen, B. Analysis and Comparison of Limitation Interests in Civil Procedure Law in the Context of Information Technology. Appl. Math. Nonlinear Sci. 2024, 9. [Google Scholar] [CrossRef]

- Karami, A.; Swan, S.C.; White, C.N.; Ford, K. Hidden in Plain Sight for Too Long: Using Text Mining Techniques to Shine a Light on Workplace Sexism and Sexual Harassment. Psychol. Violence 2024, 14, 1–13, WE - Social Science Citation Index (SSCI). [Google Scholar] [CrossRef]

- Karystianis, G.; Chowdhury, N.; Sheridan, L.; Reutens, S.; Wade, S.; Allnutt, S.; Kim, M.T.; Poynton, S.; Butler, T. Text Mining Domestic Violence Police Narratives to Identify Behaviours Linked to Coercive Control. CRIME Sci. 2024, 13. WE - Emerging Sources Citation Index (ESCI). [Google Scholar] [CrossRef]

- Albayari, R.; Abdallah, S.; Shaalan, K. Cyberbullying Detection Model for Arabic Text Using Deep Learning. J. Inf. Knowl. Manag. 2024. [Google Scholar] [CrossRef]

- Jiao, J.; He, P.; Zha, J. Factors Influencing Illegal Dumping of Hazardous Waste in China. J. Environ. Manage. 2024, 354. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhai, Y.; Fu, S.; Shi, M.; Jiang, X. Quantitative Analysis of Maritime Piracy at Global and Regional Scales to Improve Maritime Security. Ocean Coast. Manag. 2024, 248. [Google Scholar] [CrossRef]

- Salley, C.J.; Mohammadi, N.; Taylor, J.E. Safeguarding Infrastructure from Cyber Threats with NLP-Based Information Retrieval. In Proceedings of the Proceedings of the Winter Simulation Conference; IEEE Press, 2024; pp. 853–862. [Google Scholar]

| Research Questions | RQ1: Which application areas for text mining have been researched within the context of public security? RQ2: Which text mining techniques and technologies are most frequently employed in public security, both in general and in each application area? RQ3: What research opportunities and challenges exist for text mining in public security? |

| Databases | Scopus, Web of Science, IEEE Xplore, and ACM Digital Library. |