Submitted: 02 March 2024

Posted: 05 March 2024

Abstract

Keywords:

1. Introduction and summary

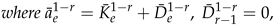

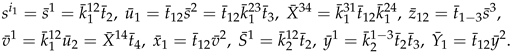

2. Foundations

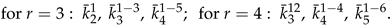

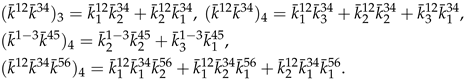

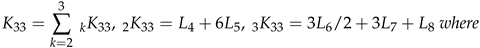

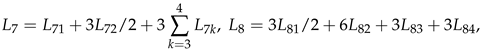

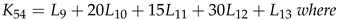

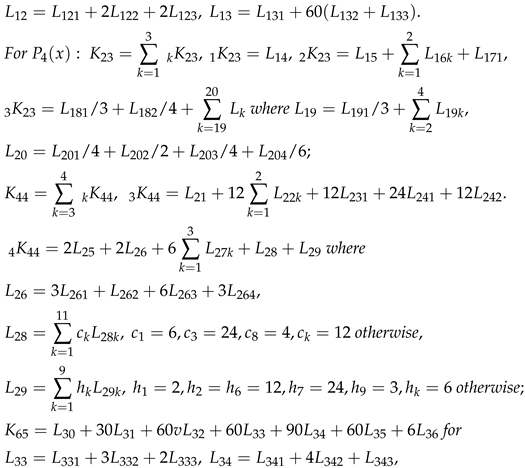

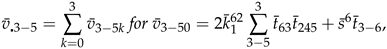

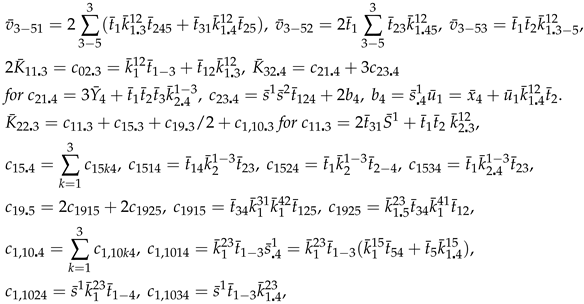

3. Cumulant Coefficients for when

4. Cumulant Coefficients for when

5. Cumulant Coefficients for Univariate

6. An Extension to Theorem 2.1

7. Discussion

8. Conclusion

Abbreviations

| Abbreviation | Meaning |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| DOAJ | Directory of open access journals |
| TLA | Three letter acronym |
| LD | linear dichroism |

Appendix A: Some comments on the references

References

- Barndorff-Nielsen, O.E. and Cox, D.R. (1989). Asymptotic techniques for use in statistics. Chapman & Hall, London.

- Bhattacharya, R.N. and Ranga Rao, R. (1976). Normal approximation and asymptotic expansions. Wiley, New York.

- Cai, T. (2005) One-sided confidence intervals in discrete distributions. Journal of Stat. Planning and Inference, 131, 63–88.

- Comtet, L. (1974) Advanced combinatorics. Reidel, Dordrecht.

- Cornish, E.A. and Fisher, R. A. (1937) Moments and cumulants in the specification of distributions. Rev. de l’Inst. Int. de Statist., 5, 307–322. Reproduced in The collected papers of R.A. Fisher, 4.

- Daniels, H.E. (1983) Saddlepoint approximations for estimating equations. Biometrika 70, 89–96.

- Daniels, H.E. (1987) Tail probability expansions. Intern. Statist. Review 55, 37–48.

- Fisher, R. A. and Cornish, E.A. (1960) The percentile points of distributions having known cumulants. Technometrics, 2, 209–225.

- Hill, G.W. and Davis, A.W. (1968) Generalised asymptotic expansions of Cornish-Fisher type. Ann. Math. Statist., 39, 1264–1273.

- James, G.S. and Mayne, A.J. (1962). Cumulants of functions of random variables. Sankhya A 24, 47–54.

- Stuart, A. and Ord, K. (1987). Kendall’s advanced theory of statistics, 1. 5th edition. Griffin, London.

- Withers, C.S. (1982) The distribution and quantiles of a function of parameter estimates. Annals of the Institute of Statistical Mathematics, Series A, 34 (1), 55–68. Corrigendum: http://freepages.misc.rootsweb.com/~kitwithers/research/1982a.pdf.

- Withers, C.S. (1983) Expansions for the distribution and quantiles of a regular functional of the empirical distribution with applications to nonparametric confidence intervals. Annals Statist., 11 (2), 577–587.

- Withers, C.S. (1984) Asymptotic expansions for distributions and quantiles with power series cumulants. J. Roy. Statist. Soc. B, 46, 389–396.

- Withers, C.S. (1987) Bias reduction by Taylor series. Commun. Statist. - Theor. Meth., 16, 2369–2383.

- Withers, C.S. (1988) Nonparametric confidence intervals for functions of several distributions. Annals of the Institute of Statistical Mathematics, Part A, 40 (4), 727–746.

- Withers, C.S. (1989) Accurate confidence intervals when nuisance parameters are present. Comm. Statist. - Theory and Methods, 18, 4229–4259.

- Withers, C.S. and Nadarajah, S. (2008) Edgeworth expansions for functions of weighted empirical distributions with applications to nonparametric confidence intervals, Journal of Nonparametric Statistics, 20, 751–768. http://www.informaworld.com/smpp/1162981568-85928283/content~db=all~content=a905308777.

- Withers, C.S. and Nadarajah, S. (2009) Charlier and Edgeworth expansions for distributions and densities in terms of Bell polynomials. Probab. Math. Statist. 29, 271–280.

- Withers, C.S. and Nadarajah, S. (2010a) The bias and skewness of M-estimators in regression, Electronic Journal of Statistics, 4, 1–14. http://projecteuclid.org/DPubS/Repository/1.0/Disseminate?view=body&id=pdfview_1&handle=euclid.ejs/1262876992.

- Withers, C.S. and Nadarajah, S. (2010b) Tilted Edgeworth expansions for asymptotically normal vectors. Annals of the Institute of Statistical Mathematics, 62 (6), 1113–1142.

- Withers, C.S. and Nadarajah, S. (2010c) The distribution and quantiles of functionals of weighted empirical distributions when observations have different distributions. Statistics and Probability Letters, 80 (13-14), 1093–1102.

- Withers, C.S. and Nadarajah, S. (2011a) Expansions for the distribution of M-estimates with applications to the multi-tone problem. ESAIM - Probability and Statistics, 15, 139–167.

- Withers, C.S. and Nadarajah, S. (2011b) Channel capacity for MIMO systems with multiple frequencies and delay spread. Applied Mathematics and Information Sciences, 5 (3), 480–483.

- Withers, C.S. and Nadarajah, S. (2011c) Reciprocity for MIMO systems. European Transactions on Telecommunications, 22 (6), 276–281.

- Withers, C.S. and Nadarajah, S. (2012a) Improved confidence regions based on Edgeworth expansions. Computational Statistics and Data Analysis, 56 (12), 4366–4380.

- Withers, C.S. and Nadarajah, S. (2012b) The distribution of Foschini’s lower bound for channel capacity. Advances in Applied Probability, 44 (1), 260–269.

- Withers, C.S. and Nadarajah, S. (2012c) Cornish-Fisher expansions for sample autocovariances and other functions of sample moments of linear processes. Brazilian Journal of Probability and Statistics, 26 (2), 149–166.

- Withers, C.S. and Nadarajah, S. (2012d) Cornish-Fisher expansions about the F-distribution. Applied Mathematics and Computation, 218 (15), 7947–7957.

- Withers, C.S. and Nadarajah, S. (2012e) Transformations of multivariate Edgeworth-type expansions. Statistical Methodology, 9 (3), 423–439.

- Withers, C.S. and Nadarajah, S. (2012f) Nonparametric estimates of low bias. Revstat Statistical Journal, 10 (2), 229–283.

- Withers, C.S. and Nadarajah, S. (2013a) Cornish-Fisher expansions for functionals of the partial sum empirical distribution. Statistical Methodology, 12, 1–15.

- Withers, C.S. and Nadarajah, S. (2013b) The distribution of the amplitude and phase of the mean of a sample of complex random variables. Journal of Multivariate Analysis, 113, 128–152.

- Withers, C.S. and Nadarajah, S. (2014a) The dual multivariate Charlier and Edgeworth expansions. Statistics and Probability Letters, 87 (1), 76–85.

- Withers, C.S. and Nadarajah, S. (2014b) Asymptotic properties of M-estimators in linear and nonlinear multivariate regression models. Metrika, 77, 647–673.

- Withers, C.S. and Nadarajah, S. (2014c) Expansions about the gamma for the distribution and quantiles of a standard estimate. Methodol. Comput. Appl. Probab., 16 (3), 693–713.

- Withers, C.S. and Nadarajah, S. (2015) Edgeworth-Cornish-Fisher-Hill-Davis expansions for normal and non-normal limits via Bell polynomials. Stochastics: An International Journal of Probability and Stochastic Processes, 87 (5), 794–805.

- Withers, C.S. and Nadarajah, S. (2020) The distribution and percentiles of channel capacity for multiple arrays. Sadhana, Indian Academy of Science, 45 (1), 1–25. https://www.ias.ac.in/article/fulltext/sadh/045/0155.

- Withers, C.S. and Nadarajah, S. (2022) Chain rules for multivariate cumulant coefficients. Stat, 11 (1), e451.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).