Submitted: 05 March 2024

Posted: 06 March 2024


Abstract

Keywords:

1. Introduction

1.1. Motivation and Significance

1.2. Research Objectives

2. Related Work

2.1. Lesk Algorithm

2.2. Disambiguation Based on Word2Vec
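
A typical Word2Vec-based approach, in the spirit of Orkphol and Yang (2019), works as follows. It represents the context and each candidate gloss as the average of their word vectors, then picks the sense with the highest cosine similarity. The sketch below assumes exactly that scheme. The function names and the 3-dimensional vectors are hypothetical toy values, not trained embeddings.

```python
import math


def mean_vector(words, vectors):
    """Average the vectors of in-vocabulary words; None if no word is known."""
    known = [vectors[w] for w in words if w in vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


def cosine(u, v):
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def disambiguate(context_words, sense_glosses, vectors):
    """Pick the sense whose averaged gloss vector is closest to the averaged context."""
    ctx = mean_vector(context_words, vectors)
    best, best_score = None, -math.inf
    for sense, gloss_words in sense_glosses.items():
        gloss_vec = mean_vector(gloss_words, vectors)
        if ctx is None or gloss_vec is None:
            continue  # skip senses we cannot represent
        score = cosine(ctx, gloss_vec)
        if score > best_score:
            best, best_score = sense, score
    return best


# Hypothetical toy embeddings, not trained Word2Vec vectors.
vectors = {
    "money": [1.0, 0.0, 0.0],
    "deposit": [0.9, 0.1, 0.0],
    "loan": [0.8, 0.0, 0.2],
    "river": [0.0, 1.0, 0.0],
    "water": [0.0, 0.9, 0.1],
}
sense_glosses = {"bank.n.01": ["river", "water"], "bank.n.02": ["money", "loan"]}
print(disambiguate(["deposit", "money"], sense_glosses, vectors))  # bank.n.02
```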

2.3. Disambiguation Based on Dependency Adaptability

- : the total number of dependency tuples with a specific dependency relation r, where the dominant word is  and the dependent word is .

- : the total number of dependency tuples with dependency relation r and dominant word .

- : the total number of dependency tuples with dependency relation r and dominant word .

- : the total number of dependency tuples with dependency relation r.
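
The counts defined above can be tallied in one pass over a parsed corpus. The sketch below makes two assumptions: dependency tuples are available as `(relation, dominant_word, dependent_word)` triples, and only the three distinct counts that survive in the definitions are needed (joint, per relation and dominant word, per relation). The triple format, the `dependency_counts` name and the toy tuples are hypothetical.

```python
from collections import Counter


def dependency_counts(tuples):
    """Tally counts from (relation, dominant, dependent) triples.

    Returns three Counters:
      joint[(r, dominant, dependent)]: tuples with relation r, this dominant and this dependent word
      by_dominant[(r, dominant)]:      tuples with relation r and this dominant word
      by_relation[r]:                  tuples with relation r
    Missing keys read as 0, as usual for Counter.
    """
    joint, by_dominant, by_relation = Counter(), Counter(), Counter()
    for relation, dominant, dependent in tuples:
        joint[(relation, dominant, dependent)] += 1
        by_dominant[(relation, dominant)] += 1
        by_relation[relation] += 1
    return joint, by_dominant, by_relation


# Hypothetical toy tuples: (relation, dominant word, dependent word)
corpus = [
    ("dobj", "plug", "loophole"),
    ("dobj", "plug", "hole"),
    ("dobj", "plug", "loophole"),
    ("nsubj", "plug", "lawmaker"),
]
joint, by_dominant, by_relation = dependency_counts(corpus)
print(joint[("dobj", "plug", "loophole")], by_dominant[("dobj", "plug")], by_relation["dobj"])  # 2 3 3
```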

2.4. Disambiguation Algorithm Based on Bi-LSTM Neural Network Model

2.5. WSD Algorithm Based on the GlossBERT Model

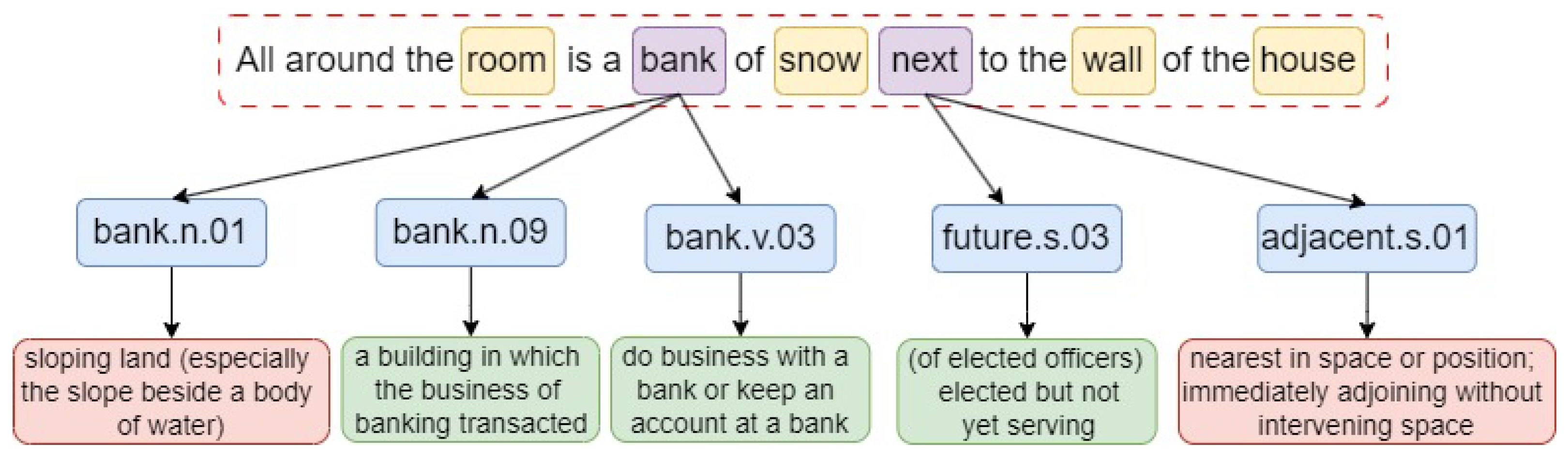

2.6. Word Sense Disambiguation Algorithm Based on the WordNet Knowledge Graph

3. Methodology

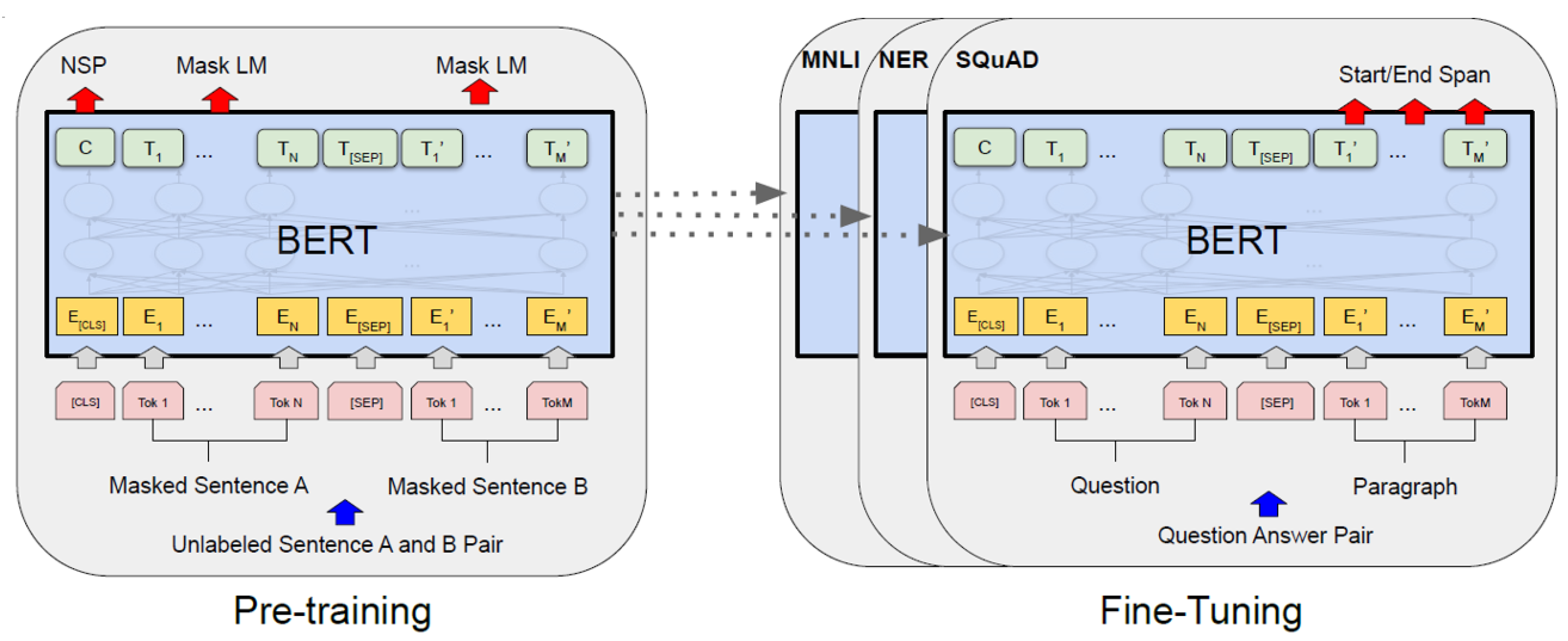

3.1. BERT Model and Its Features

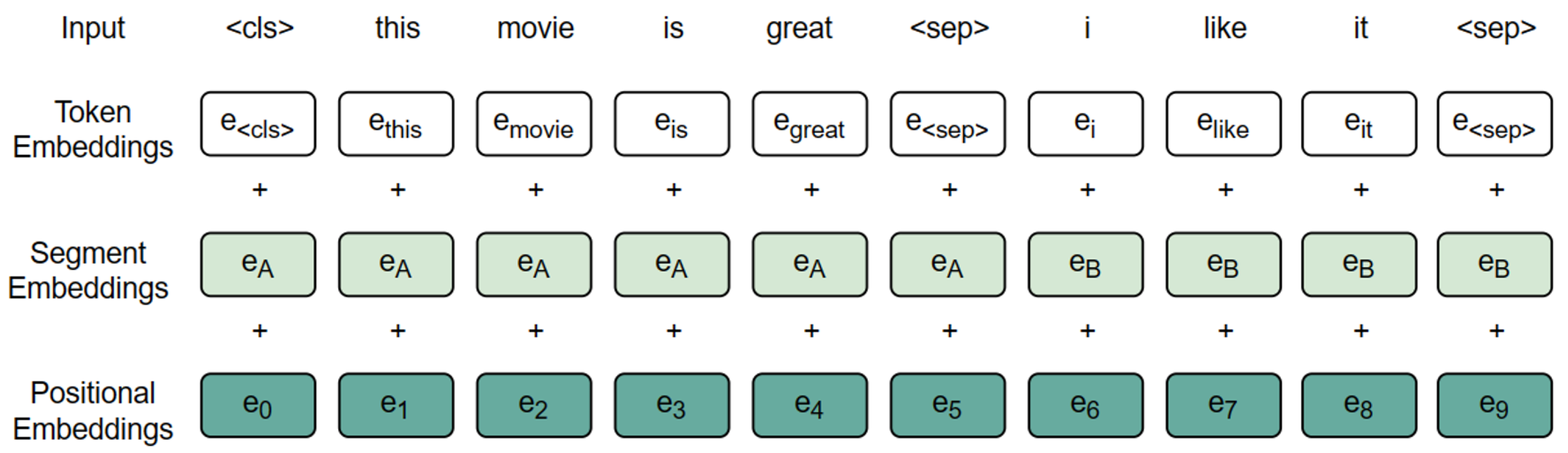

3.1.1. Embedding Process
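
In BERT (Devlin et al., 2019), each input position is represented by the element-wise sum of three vectors: a token embedding, a segment (sentence A/B) embedding and a position embedding. The real model then applies layer normalization and dropout, which this sketch omits. The tables are seeded random values with a toy hidden size (`DIM = 4`), and the token ids are hypothetical. Neither comes from pretrained weights.

```python
import random


def make_table(rows, dim, rng):
    """Hypothetical embedding table: `rows` random vectors of length `dim`."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(rows)]


def bert_input_embeddings(token_ids, segment_ids, token_table, segment_table, position_table):
    """Sum token, segment and position embeddings for each input position."""
    out = []
    for pos, (tok, seg) in enumerate(zip(token_ids, segment_ids)):
        out.append([t + s + p for t, s, p in
                    zip(token_table[tok], segment_table[seg], position_table[pos])])
    return out


rng = random.Random(0)
DIM = 4  # toy hidden size; BERT-base uses 768
token_table = make_table(rows=30, dim=DIM, rng=rng)     # toy vocabulary of 30 ids
segment_table = make_table(rows=2, dim=DIM, rng=rng)    # segment 0 (sentence A) / 1 (sentence B)
position_table = make_table(rows=16, dim=DIM, rng=rng)  # toy max length; BERT uses 512

# Hypothetical ids for "[CLS] w1 w2 [SEP] g1 g2 [SEP]": context is segment 0, gloss is segment 1.
token_ids = [1, 7, 8, 2, 12, 13, 2]
segment_ids = [0, 0, 0, 0, 1, 1, 1]
embeddings = bert_input_embeddings(token_ids, segment_ids, token_table, segment_table, position_table)
print(len(embeddings), len(embeddings[0]))  # 7 4
```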

3.1.2. Pre-Training Process of the BERT Model

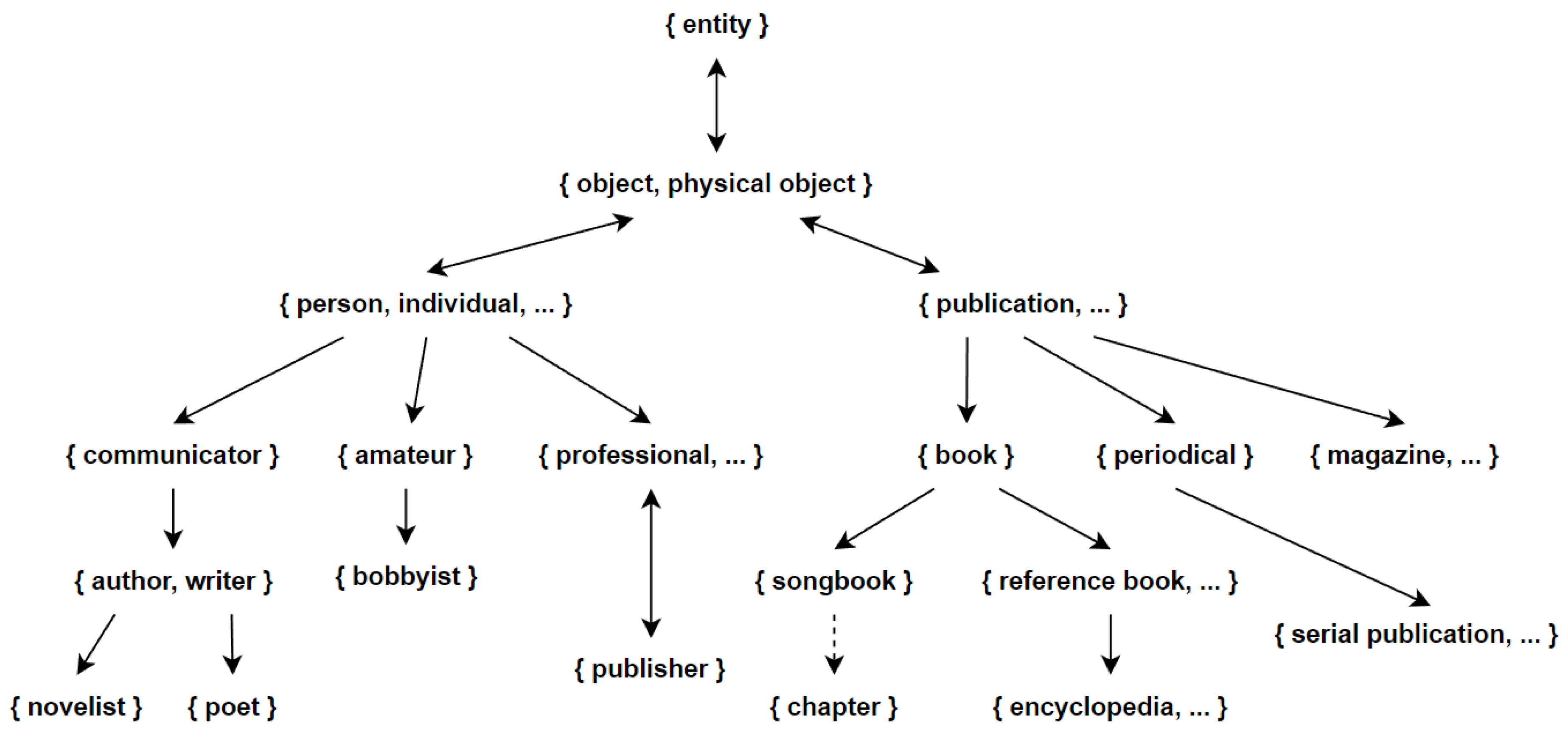

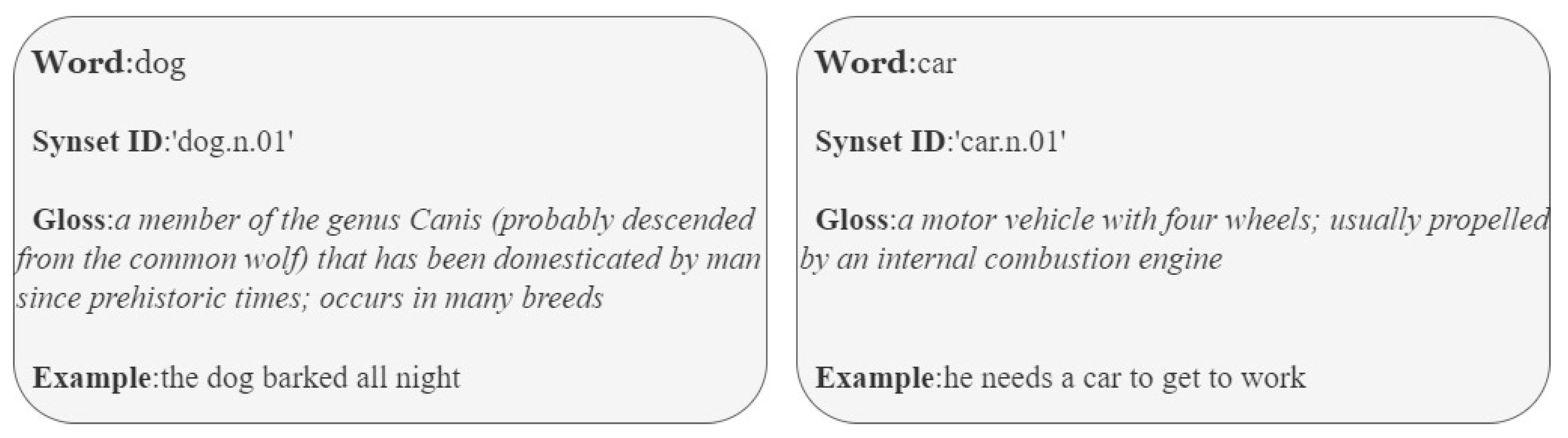

3.2. External Knowledge Base: WordNet

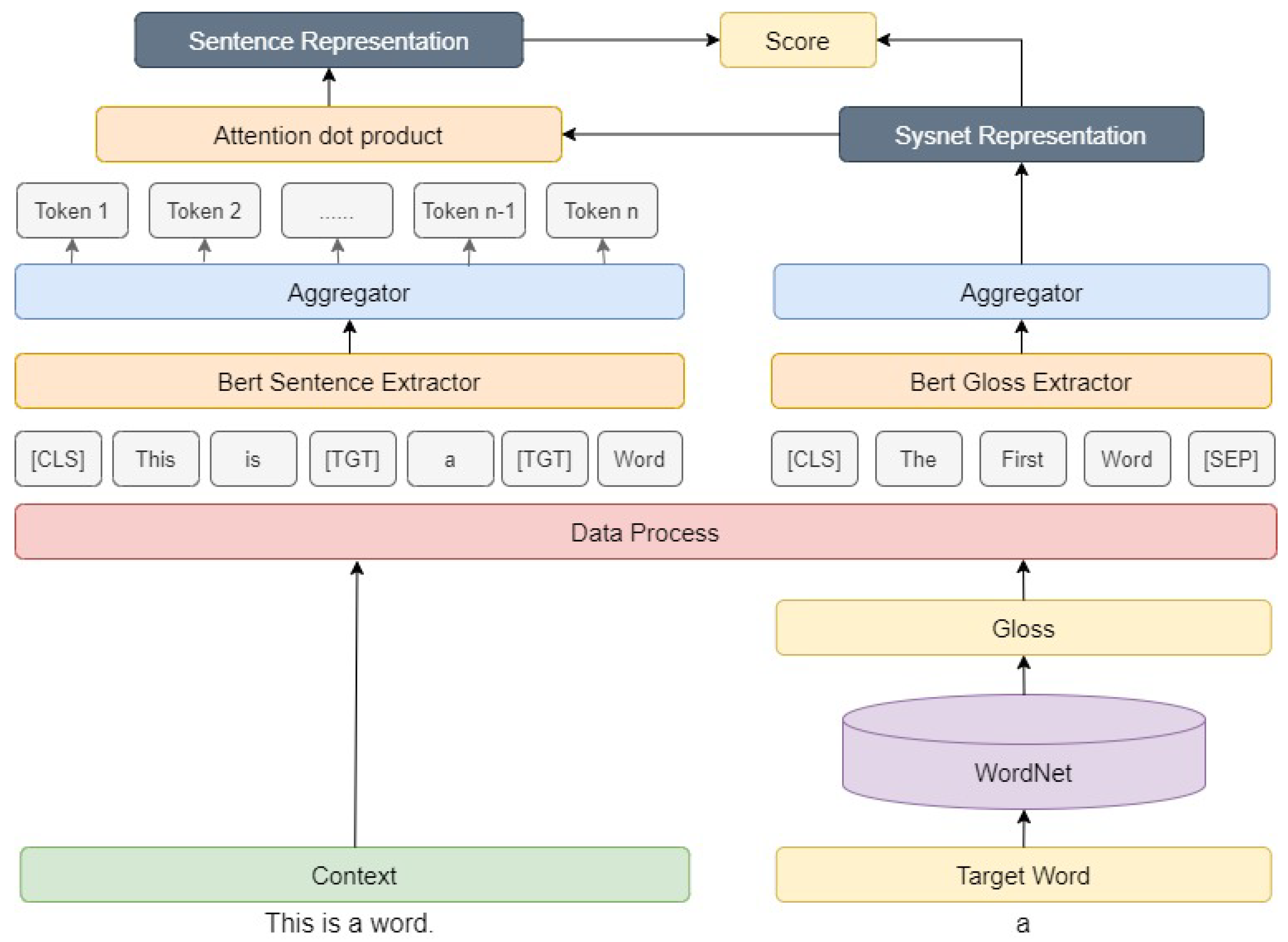

3.3. Proposed Model

3.4. Data Preprocessing

3.5. Extraction of Semantic Information

3.5.1. Extraction of Gloss Semantic Information

3.5.2. Extraction of Target Sentence Semantic Information

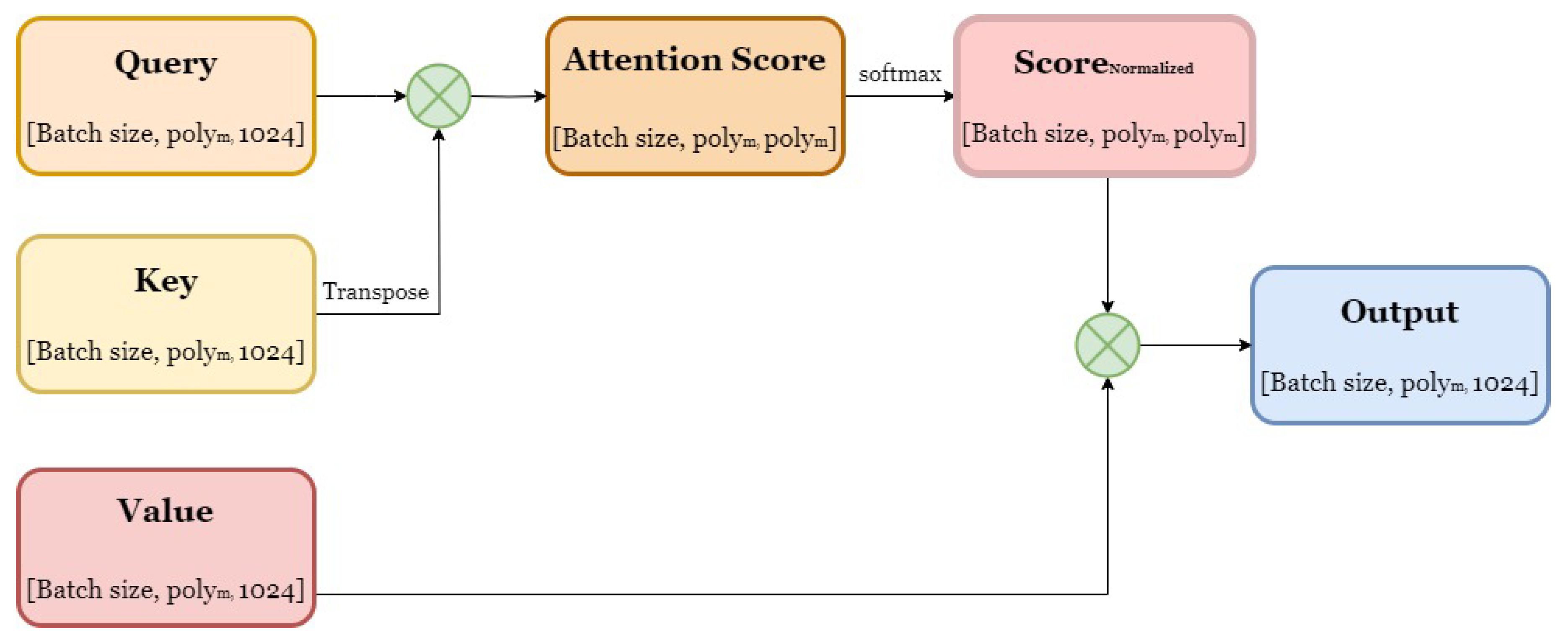

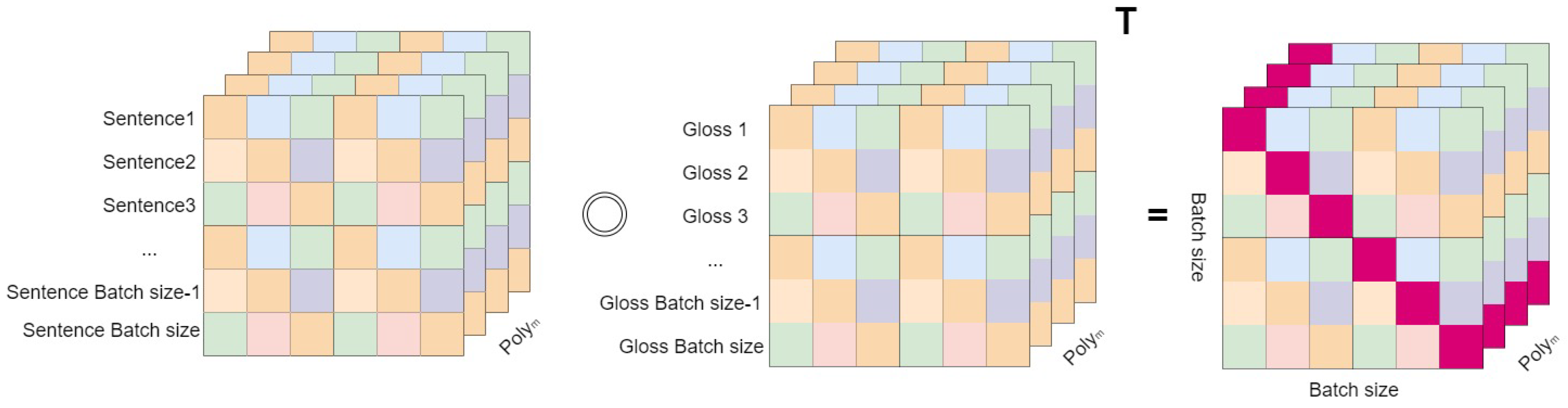

3.6. Dot-Product Attention
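
The exact attention formulation of the proposed model is not reproduced in this text. As a generic reference point, the standard scaled dot-product attention from the Transformer computes softmax(QK^T / sqrt(d_k)) V. The pure-Python sketch below implements that standard form for small lists of vectors. The `scale` flag (to switch to plain dot-product scores) and the toy matrices are assumptions for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)  # subtract the max so exp() cannot overflow
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(queries, keys, values, scale=True):
    """Return (outputs, weights) for dot-product attention.

    queries: n_q x d_k, keys: n_k x d_k, values: n_k x d_v (lists of lists).
    With scale=True the scores are divided by sqrt(d_k), as in the Transformer.
    """
    d_k = len(keys[0])
    d_v = len(values[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) for k in keys]
        if scale:
            scores = [s / math.sqrt(d_k) for s in scores]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted sum of the value vectors.
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values)) for j in range(d_v)])
    return outputs, weights


outputs, weights = dot_product_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 2.0], [3.0, 4.0]],
)
print(weights[0], outputs[0])  # the first key, aligned with the query, gets the larger weight
```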

3.7. Proposed Loss Function and Output Layer

4. Experiments and Performance Evaluation

| Item | Specification |
|---|---|
| GPU | NVIDIA RTX 2080 Ti |
| CPU | Intel(R) Xeon(R) W-2133 CPU @ 3.60GHz |
| System | Windows 10 |
| GPU memory | 24 GB |
| Python version | 3.10.1 |
| Transformers version | 4.38.0 |

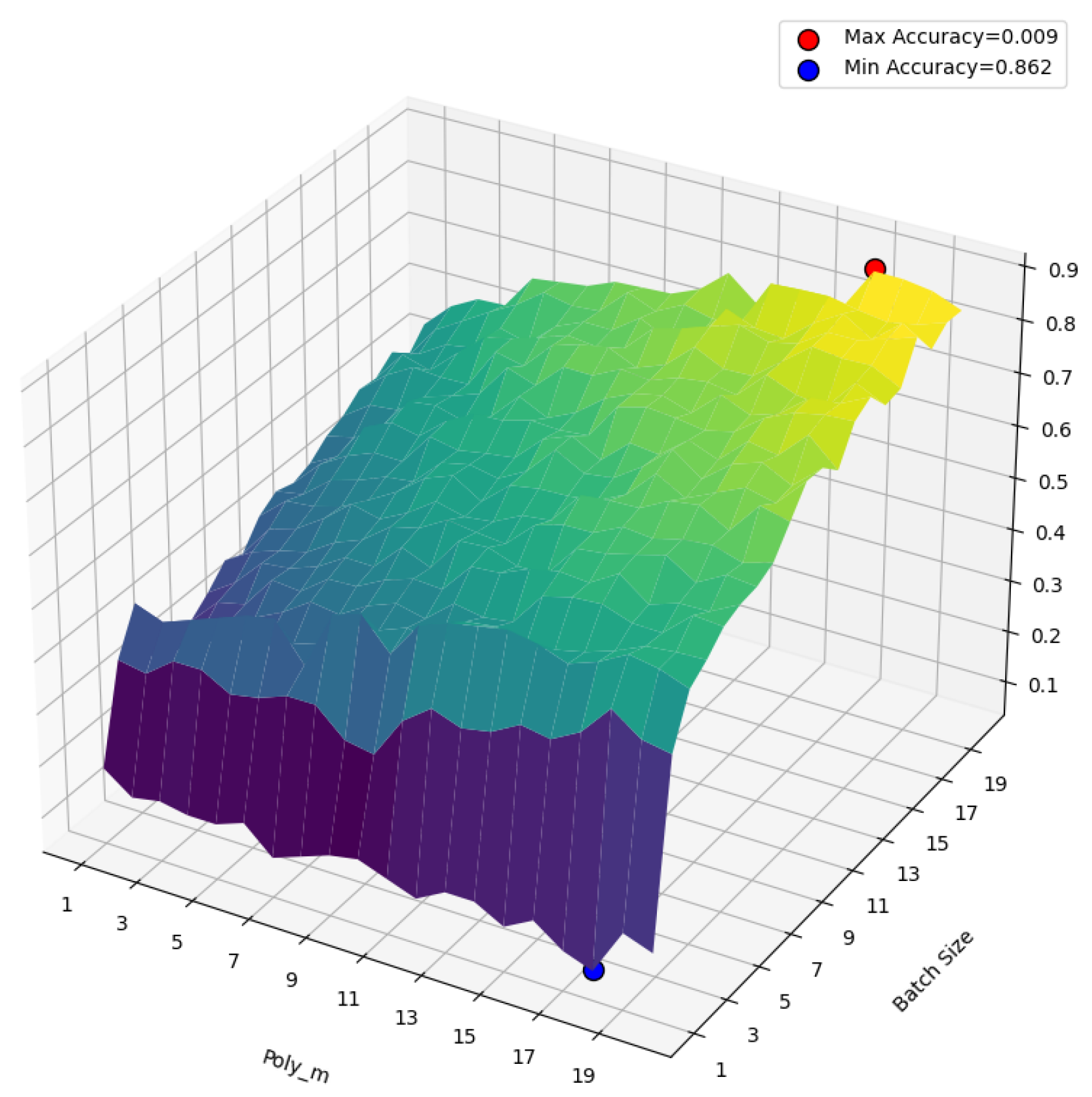

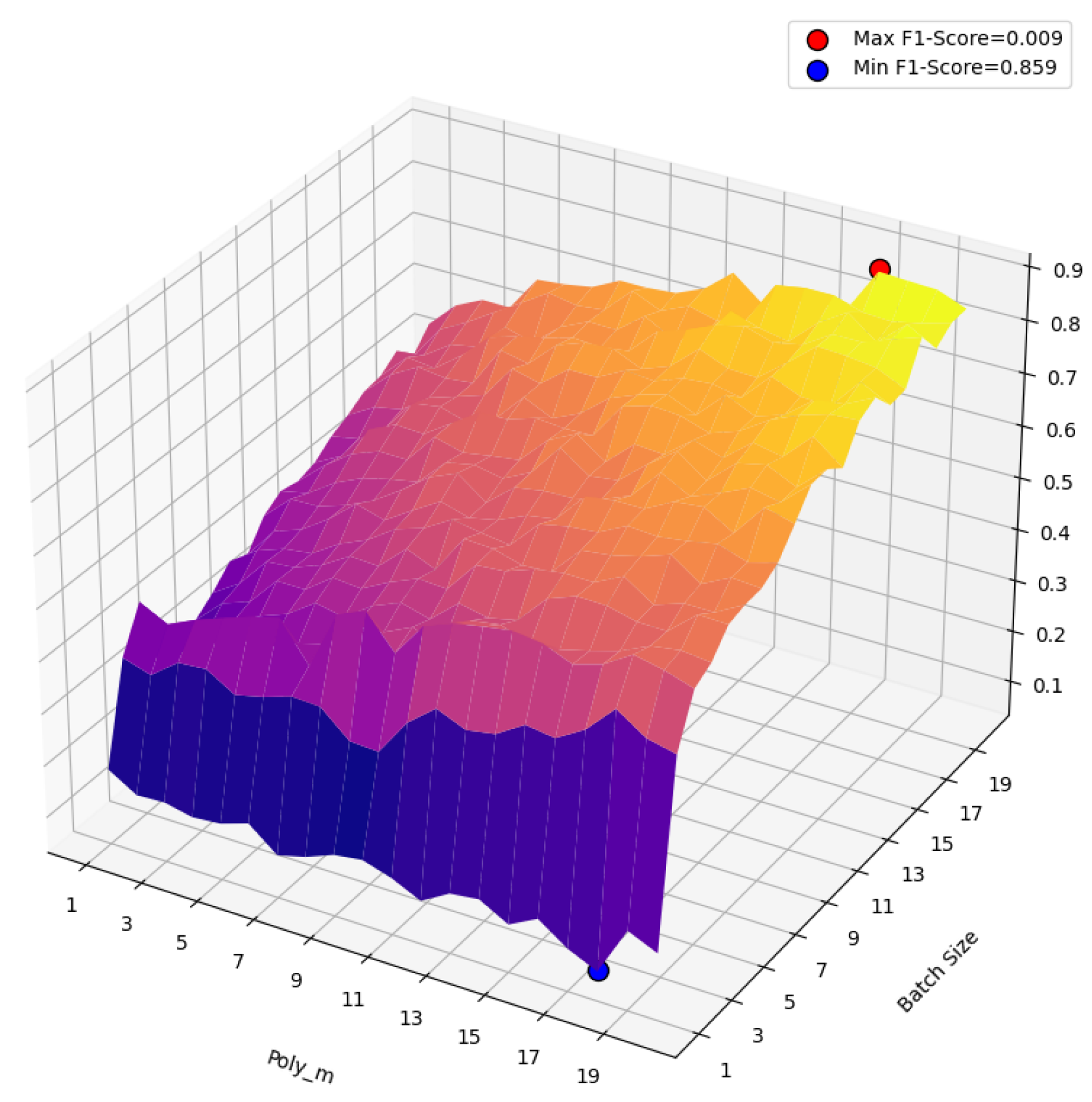

4.1. Performance Evaluation and Optimization
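
WSD systems are conventionally scored as follows (Navigli, 2009; Sokolova et al., 2006):

- precision = correct / attempted
- recall = correct / all instances
- F1 = the harmonic mean of precision and recall

When a system answers every instance, all three equal accuracy. The sketch below is a generic implementation of that convention and not necessarily the exact scorer behind the reported results. The sense keys are borrowed from Appendix A purely as sample strings. The "wrong" prediction and the abstention are hypothetical.

```python
def wsd_scores(gold, predicted):
    """Precision, recall and F1 for word sense disambiguation.

    gold: dict instance_id -> gold sense key.
    predicted: dict instance_id -> predicted sense key; instances the system
    abstains on are simply absent.
    """
    attempted = [i for i in predicted if i in gold]
    correct = sum(1 for i in attempted if predicted[i] == gold[i])
    precision = correct / len(attempted) if attempted else 0.0
    recall = correct / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = {
    "d291.s073.t002": "gull%2:32:00::",
    "d291.s100.t003": "plug%2:35:01::",
    "l000.s005.t002": "woman%1:18:00::",
}
predicted = {
    "d291.s073.t002": "gull%2:32:00::",  # correct
    "d291.s100.t003": "plug%2:35:00::",  # hypothetical wrong answer
}  # l000.s005.t002 is left unanswered
print(wsd_scores(gold, predicted))  # precision 0.5, recall 1/3, F1 about 0.4
```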

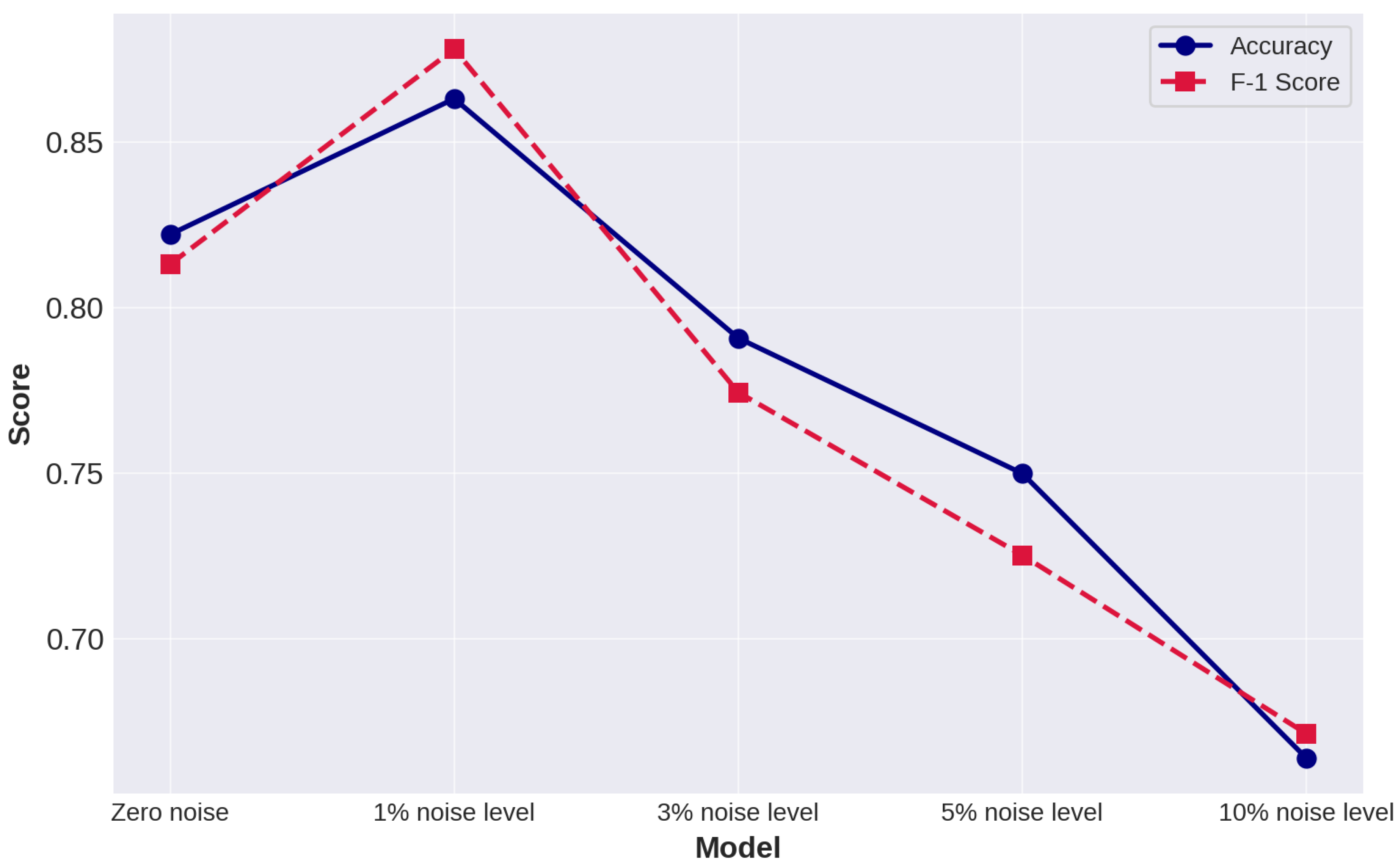

4.2. Sensitivity Analysis

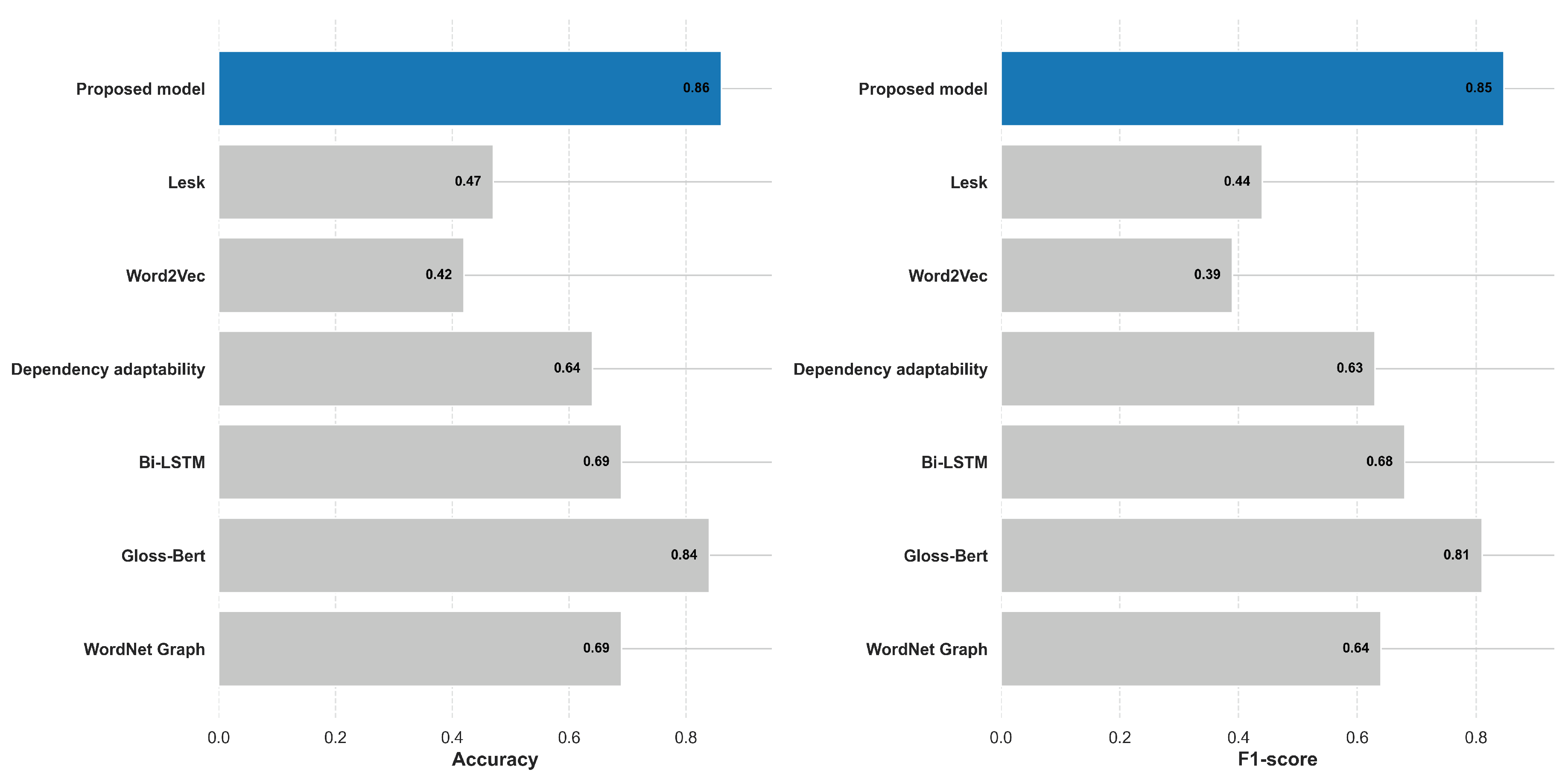

4.3. Performance Evaluation and Comparison of the Optimized Model

5. Conclusions

Appendix A

- Context sentence: The principle is commendable but we suspect that in the practice somebody is going to get gulled .
  Target word: gulled
  Data ID in SemCor: d291.s073.t002
  WSD result: gull%2:32:00::
  Gloss of true sense: fool or hoax

- Context sentence: Since 1953 California has led the nation in enacting guarantees that public business shall be publicly conducted , but not until this year did the lawmakers in Sacramento plug the remaining loopholes in the Brown Act .
  Target word: plug
  Data ID in SemCor: d291.s100.t003
  WSD result: plug%2:35:01::
  Gloss of true sense: fill or close tightly with or as if with a plug

- Context sentence: In the tower , five men and women pull rhythmically on ropes attached to the same five bells that first sounded here in 1614 .
  Target word: woman
  Data ID in SemCor: l000.s005.t002
  WSD result: woman%1:18:00::
  Gloss of true sense: an adult female person (as opposed to a man)

- Context sentence: They belong to a group of 15 ringers – including two octogenarians and four youngsters in training – who drive every Sunday from church to church in a sometimes-exhausting effort to keep the bells sounding in the many belfries of East Anglia .
  Target word: drive
  Data ID in SemCor: l000.s010.t008
  WSD result: drive%2:38:00::
  Gloss of true sense: move by being propelled by a force

- Context sentence: In a well-known detective-story involving church bells , English novelist Dorothy L. Sayers described ringing as a “ passion that finds its satisfaction in mathematical completeness and mechanical perfection . ”
  Target word: church
  Data ID in SemCor: d000.s030.t003
  WSD result: church%1:06:00::
  Gloss of true sense: a place for public (especially Christian) worship

- Context sentence: It is a passion that usually stays in the tower, however .
  Target word: tower
  Data ID in SemCor: d000.s033.t003
  WSD result: tower%1:06:00::
  Gloss of true sense: a structure taller than its diameter; can stand alone or be attached to a larger building

- Context sentence: Scientists say the discovery of these genes in recent months is painting a new and startling picture of how cancer develops .
  Target word: cancer
  Data ID in SemCor: d001.s001.t009
  WSD result: cancer%1:26:00::
  Gloss of true sense: any malignant growth or tumor caused by abnormal and uncontrolled cell division; it may spread to other parts of the body through the lymphatic system or the blood stream

- Context sentence: The newly identified genes differ from a family of genes discovered in the early 1980s called oncogenes .
  Target word: genes
  Data ID in SemCor: d001.s011.t002
  WSD result: gene%1:08:00::
  Gloss of true sense: (genetics) a segment of DNA that is involved in producing a polypeptide chain; it can include regions preceding and following the coding DNA as well as introns between the exons; it is considered a unit of heredity

- Context sentence: Because of the isolation of the retinoblastoma tumor-suppressor gene , it became possible last January to find out what threat the Quinlan baby faced .
  Target word: became
  Data ID in SemCor: d001.s021.t003
  WSD result: become%2:30:00::
  Gloss of true sense: enter or assume a certain state or condition

- Context sentence: “ All this may not be obvious to the public , which is concerned about advances in treatment , but I am convinced this basic research will begin showing results there soon . ”
  Target word: obvious
  Data ID in SemCor: d001.s027.t000
  WSD result: obvious%3:00:00::
  Gloss of true sense: easily perceived by the senses or grasped by the mind

- Context sentence: The story of tumor-suppressor genes goes back to the 1970s , when a pediatrician named Alfred G. Knudson Jr. proposed that retinoblastoma stemmed from two separate genetic defects .
  Target word: genetic
  Data ID in SemCor: d001.s037.t010
  WSD result: genetic%3:01:02::
  Gloss of true sense: of or relating to the science of genetics

- Context sentence: The result is a generation of young people whose ignorance and intellectual incompetence is matched only by their good opinion of themselves .
  Target word: ignorance
  Data ID in SemCor: d002.s010.t004
  WSD result: ignorance%1:09:00::
  Gloss of true sense: the lack of knowledge or education

- Context sentence: Already two major pharmaceutical companies , the Squibb unit of Bristol-Myers Squibb Co. and Hoffmann-La Roche Inc. , are collaborating with gene hunters to turn the anticipated cascade of discoveries into predictive tests and , maybe , new therapies .
  Target word: predictive
  Data ID in SemCor: d001.s090.t011
  WSD result: predictive%5:00:00:prophetic:00
  Gloss of true sense: of or relating to prediction; having value for making predictions
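
The WSD results above are WordNet sense keys of the form `lemma%ss_type:lex_filenum:lex_id:head_word:head_id`. The `ss_type` field is 1 (noun), 2 (verb), 3 (adjective), 4 (adverb) or 5 (adjective satellite). The head fields are filled only for satellites, as in `predictive%5:00:00:prophetic:00`. The parser below is a small illustrative helper whose name and return type are hypothetical. With NLTK, a key can instead be resolved to a WordNet lemma via `wordnet.lemma_from_key(key)`.

```python
from typing import NamedTuple

# Synset type codes used in WordNet sense keys.
SS_TYPES = {1: "noun", 2: "verb", 3: "adjective", 4: "adverb", 5: "adjective satellite"}


class SenseKey(NamedTuple):
    lemma: str
    pos: str
    lex_filenum: int
    lex_id: int
    head_word: str  # empty unless pos is "adjective satellite"
    head_id: str    # empty unless pos is "adjective satellite"


def parse_sense_key(key: str) -> SenseKey:
    """Split a WordNet sense key such as 'gull%2:32:00::' into its fields."""
    lemma, lex_sense = key.split("%", 1)
    ss_type, lex_filenum, lex_id, head_word, head_id = lex_sense.split(":")
    return SenseKey(
        lemma=lemma,
        pos=SS_TYPES[int(ss_type)],
        lex_filenum=int(lex_filenum),
        lex_id=int(lex_id),
        head_word=head_word,
        head_id=head_id,
    )


print(parse_sense_key("gull%2:32:00::"))
# SenseKey(lemma='gull', pos='verb', lex_filenum=32, lex_id=0, head_word='', head_id='')
```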

References

- Tanenhaus M K, Trueswell J C. Sentence comprehension [J]. 1995.

- Bedny M, Hulbert J C, Thompson-Schill S L. Understanding words in context: The role of Broca's area in word comprehension [J]. Brain Research, 2007, 1146: 101-114.

- Zhang B, Haddow B, Birch A. Prompting large language model for machine translation: A case study [C] // Proceedings of the 40th International Conference on Machine Learning (ICML'23), Vol. 202. JMLR.org, 2023, Article 1722: 41092-41110.

- Sagduyu Y E, Ulukus S, Yener A. Task-oriented communications for nextG: End-to-end deep learning and AI security aspects [J]. IEEE Wireless Communications, 2023, 30(3): 52-60.

- Alzubaidi L, Bai J, Al-Sabaawi A, et al. A survey on deep learning tools dealing with data scarcity: Definitions, challenges, solutions, tips, and applications [J]. Journal of Big Data, 2023, 10(1): 46.

- Yi K, Zhang Q, Cao L, et al. A survey on deep learning based time series analysis with frequency transformation [J]. CoRR, abs/2302.02173, 2023.

- Navigli R. Word sense disambiguation: A survey [J]. ACM Computing Surveys (CSUR), 2009, 41(2): 1-69.

- Loureiro D, Rezaee K, Pilehvar M T, Camacho-Collados J. Language models and word sense disambiguation: An overview and analysis. 2020.

- Han X, Zhang Z, Ding N, et al. Pre-trained models: Past, present and future [J]. AI Open, 2021, 2: 225-250.

- Devlin J, Chang M W, Lee K, Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding [C] // Proceedings of NAACL-HLT. 2019: 4171-4186.

- Fellbaum C. WordNet [M] // Theory and Applications of Ontology: Computer Applications. Dordrecht: Springer Netherlands, 2010: 231-243.

- Miller G A. WordNet: A lexical database for English [J]. Communications of the ACM, 1995, 38(11): 39-41.

- Morato J, Marzal M A, Lloréns J, et al. WordNet applications [C] // Proceedings of GWC. 2004: 20-23.

- Humeau S, Shuster K, Lachaux M, Weston J. Poly-encoders: Architectures and pre-training strategies for fast and accurate multi-sentence scoring [C] // International Conference on Learning Representations. 2020.

- Samhith K, Tilak S A, Panda G. Word sense disambiguation using WordNet lexical categories [C] // 2016 International Conference on Signal Processing, Communication, Power and Embedded System (SCOPES). IEEE, 2016: 1664-1666.

- Sun S, Luo C, Chen J. A review of natural language processing techniques for opinion mining systems [J]. Information Fusion, 2017, 36: 10-25.

- Bharadiya J. A comprehensive survey of deep learning techniques natural language processing [J]. European Journal of Technology, 2023, 7(1): 58-66.

- Wu T, He S, Liu J, et al. A brief overview of ChatGPT: The history, status quo and potential future development [J]. IEEE/CAA Journal of Automatica Sinica, 2023, 10(5): 1122-1136.

- Zhou J, Ke P, Qiu X, et al. ChatGPT: Potential, prospects, and limitations [J]. Frontiers of Information Technology & Electronic Engineering, 2023: 1-6.

- Hadi M U, Qureshi R, Shah A, et al. A survey on large language models: Applications, challenges, limitations, and practical usage [J]. Authorea Preprints, 2023.

- Samsi S, Zhao D, McDonald J, et al. From words to watts: Benchmarking the energy costs of large language model inference [C] // 2023 IEEE High Performance Extreme Computing Conference (HPEC). IEEE, 2023: 1-9.

- Savelka J, Ashley K D. The unreasonable effectiveness of large language models in zero-shot semantic annotation of legal texts [J]. Frontiers in Artificial Intelligence, 2023, 6.

- Lesk M. Automatic sense disambiguation using machine readable dictionaries: How to tell a pine cone from an ice cream cone [C] // Proceedings of the 5th Annual International Conference on Systems Documentation. 1986: 24-26.

- Orkphol K, Yang W. Word sense disambiguation using cosine similarity collaborates with Word2vec and WordNet [J]. Future Internet, 2019, 11(5): 114.

- Robertson S. Understanding inverse document frequency: On theoretical arguments for IDF [J]. Journal of Documentation, 2004, 60(5): 503-520.

- Lu W, Huang H. Knowledge automatic acquisition approach for word sense disambiguation based on dependency adaptability [J]. Journal of Software, 2013, 24(10): 2300-2311.

- Kågebäck M, Salomonsson H. Word sense disambiguation using a bidirectional LSTM [C] // Proceedings of the 5th Workshop on Cognitive Aspects of the Lexicon (CogALex-V). Osaka, Japan: The COLING 2016 Organizing Committee, 2016: 51-56.

- Huang L, Sun C, Qiu X, Huang X. GlossBERT: BERT for word sense disambiguation with gloss knowledge [C] // Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Association for Computational Linguistics, 2019: 3507-3512.

- Mizuki S, Okazaki N. Semantic specialization for knowledge-based word sense disambiguation [C] // Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics. Dubrovnik, Croatia: Association for Computational Linguistics, 2023: 3457-3470.

- Bilal M, Almazroi A A. Effectiveness of fine-tuned BERT model in classification of helpful and unhelpful online customer reviews [J]. Electronic Commerce Research, 2023, 23(4): 2737-2757.

- De Luca E W. A corpus for evaluating semantic multilingual web retrieval systems: The sense folder corpus [J]. argument, 2010, 48: 5.

- Zhou C, Li Q, Li C, Yu J, Liu Y, Wang G, … Sun L. A comprehensive survey on pretrained foundation models: A history from BERT to ChatGPT. 2023.

- Kanade A, Maniatis P, Balakrishnan G, et al. Learning and evaluating contextual embedding of source code [C] // International Conference on Machine Learning. PMLR, 2020: 5110-5121.

- Mehta S, Koncel-Kedziorski R, Rastegari M, Hajishirzi H. DeFINE: Deep factorized input token embeddings for neural sequence modeling [C] // International Conference on Learning Representations. 2020.

- van der Goot R, Müller-Eberstein M, Plank B. Frustratingly easy performance improvements for low-resource setups: A tale on BERT and segment embeddings [C] // Proceedings of the Thirteenth Language Resources and Evaluation Conference. 2022: 1418-1427.

- Wang Y A, Chen Y N. What do position embeddings learn? An empirical study of pre-trained language model positional encoding [C] // Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP). Online: Association for Computational Linguistics, 2020: 6840-6849.

- Lazar K, Saret B, Yehudai A, Horowitz W, Wasserman N, Stanovsky G. Filling the gaps in ancient Akkadian texts: A masked language modelling approach [C] // Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing. Online and Punta Cana, Dominican Republic: Association for Computational Linguistics, 2021: 4682-4691.

- Sun Y, Zheng Y, Hao C, Qiu H. NSP-BERT: A prompt-based few-shot learner through an original pre-training task--next sentence prediction [C] // Proceedings of the 29th International Conference on Computational Linguistics. Gyeongju, Republic of Korea: International Committee on Computational Linguistics, 2022: 3233-3250.

- Lee K, Choi G, Choi C. Use all tokens method to improve semantic relationship learning [J]. Expert Systems with Applications, 2023, 233: 120911.

- Wu L. [CLS] token is all you need for zero-shot semantic segmentation.

- Zhu X, Yang X, Huang Y, et al. Measuring similarity and relatedness using multiple semantic relations in WordNet [J]. Knowledge and Information Systems, 2020, 62: 1539-1569.

- Moldovan D, Novischi A. Word sense disambiguation of WordNet glosses [J]. Computer Speech & Language, 2004, 18(3): 301-317.

- Ercan G, Haziyev F. Synset expansion on translation graph for automatic wordnet construction [J]. Information Processing & Management, 2019, 56(1): 130-150.

- Niu Z, Zhong G, Yu H. A review on the attention mechanism of deep learning [J]. Neurocomputing, 2021, 452: 48-62.

- Namazifar M, Hazarika D, Hakkani-Tür D. Role of bias terms in dot-product attention [C] // ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023: 1-5.

- Sokolova M, Japkowicz N, Szpakowicz S. Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation [C] // Australasian Joint Conference on Artificial Intelligence. Berlin, Heidelberg: Springer Berlin Heidelberg, 2006: 1015-1021.

- Tortorelli D A, Michaleris P. Design sensitivity analysis: Overview and review [J]. Inverse Problems in Engineering, 1994, 1(1): 71-105.

- Ghosh A, Lan A. Contrastive learning improves model robustness under label noise [C] // Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021: 2703-2708.

- Sauter T, Lobashov M. End-to-end communication architecture for smart grids [J]. IEEE Transactions on Industrial Electronics, 2010, 58(4): 1218-1228.

- Aoudia F A, Hoydis J. Model-free training of end-to-end communication systems [J]. IEEE Journal on Selected Areas in Communications, 2019, 37(11): 2503-2516.

- Sowa J F. Semantic networks [J]. Encyclopedia of Artificial Intelligence, 1992, 2: 1493-1511.

- Voorhees E M. Using WordNet to disambiguate word senses for text retrieval [C] // Proceedings of ACM-SIGIR'93. Pittsburgh, PA, USA, 1993: 171-179.

- Faruqui M, Tsvetkov Y, Rastogi P, Dyer C. Problems with evaluation of word embeddings using word similarity tasks [C] // Proceedings of the 1st Workshop on Evaluating Vector-Space Representations for NLP. Berlin, Germany: Association for Computational Linguistics, 2016: 30-35.

- Corley C, Mihalcea R. Measuring the semantic similarity of texts [C] // Proceedings of the ACL Workshop on Empirical Modeling of Semantic Equivalence and Entailment. Ann Arbor, MI, 2005: 13-18.

| Context | Label |
|---|---|
| 1. [CLS] Your research ... [SEP] systematic investigatio... [SEP] | 1 |
| 2. [CLS] Your research ... [SEP] a search for knowledge [SEP] | 0 |
| 3. [CLS] Your research ... [SEP] inquire into [SEP] | 0 |
| 4. [CLS] Your research ... [SEP] attempt to find out in a ... [SEP] | 0 |
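
The table above follows the GlossBERT-style input. The context and one candidate gloss form the sentence pair `[CLS] context [SEP] gloss [SEP]`, labelled 1 for the correct sense and 0 otherwise. The helper below builds such pairs as plain strings. The function name is hypothetical. The example uses paraphrased glosses for *bank* rather than the truncated ones in the table.

```python
def build_context_gloss_pairs(context, glosses, gold_index):
    """Return one (text, label) pair per candidate gloss; label is 1 only for the gold sense."""
    return [
        (f"[CLS] {context} [SEP] {gloss} [SEP]", int(i == gold_index))
        for i, gloss in enumerate(glosses)
    ]


pairs = build_context_gloss_pairs(
    "He sat on the bank of the river .",
    [
        "sloping land (especially the slope beside a body of water)",
        "a financial institution that accepts deposits",
    ],
    gold_index=0,
)
for text, label in pairs:
    print(label, text)
```

In practice, Hugging Face Transformers can build these inputs directly. Passing the context and gloss as a text pair to a BERT tokenizer, as in `tokenizer(context, gloss)`, inserts `[CLS]` and `[SEP]` and sets the segment ids automatically. The explicit string form above is mainly useful for inspecting the data.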

| Context | Gloss |
|---|---|
| In some instances a seventh question can be [TGT] added [TGT] : | state or say further |
| The latter [TGT] is [TGT] what concerns us all . | be identical to |
| Each family line can be [TGT] considered [TGT] a substructure . | look at attentively |
| This tax was [TGT] discontinued [TGT] in 1936 . | put an end to an activity |
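
In the examples above, the target word is wrapped in `[TGT]` markers inside the context. The following is a minimal sketch of that marking step. It assumes a pre-tokenized (space-separated) sentence and a known target token index, as with SemCor instances. The function name is hypothetical.

```python
def mark_target(tokens, target_index, marker="[TGT]"):
    """Wrap tokens[target_index] in marker tokens and return the joined sentence."""
    if not 0 <= target_index < len(tokens):
        raise IndexError("target_index out of range")
    marked = tokens[:target_index] + [marker, tokens[target_index], marker] + tokens[target_index + 1:]
    return " ".join(marked)


sentence = "This tax was discontinued in 1936 ."
print(mark_target(sentence.split(), 3))
# This tax was [TGT] discontinued [TGT] in 1936 .
```

If the marked text is fed to a pretrained BERT tokenizer, `[TGT]` generally has to be registered as a special token so it is not split into sub-words. With Hugging Face Transformers this is done via `tokenizer.add_special_tokens({"additional_special_tokens": ["[TGT]"]})`, followed by resizing the model's token embeddings.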

| Parameters | Value |
|---|---|
| 19 | |
| 19 | |
| Noise level | 0.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).