1. Introduction

The objective of this paper is to contribute to three challenges in different disciplines: (1) Earth observation and data analysis, (2) climatic and cryospheric change, and (3) machine learning (ML).

Challenge 1. Harnessing the data revolution in Earth observation from space. Observations of our rapidly changing Earth are largely carried out from space. The collection of Earth observation data from satellites has advanced rapidly, with increasingly large and detailed data sets becoming available for scientific investigations [1]. The data revolution has created both new opportunities and new challenges for science, because extracting information on complex geophysical processes from large, high-resolution data sets is becoming increasingly difficult (a problem summarized as “Harnessing the Data Revolution” by the U.S. National Science Foundation [2]). In turn, this phenomenon has created a cyberinfrastructure problem. There is a disconnect between the revolutionary increase in satellite image data on the one hand and the development of numerical Earth system models on the other, which are employed to assess global climatic changes and their manifestations in warming and sea-level rise (SLR) [3,4,5,6,7,8,9,10,11,12]. The result is a bottleneck that grows with the data revolution. The improved spatio-temporal resolution of the new satellite data sheds light on subprocesses that are not easily incorporated into physical-process models, which makes it hard to integrate these observations into the models.

In this paper, we will introduce an approach that integrates machine learning and physical knowledge into a physically-driven neural network, whose application will facilitate derivation of physical process understanding from high-resolution satellite data. Results include parameterized information in the form of thematic maps (time series of segmented satellite imagery) that can inform modeling as well as lend themselves to direct geophysical interpretation and discovery.

Challenge 2. Glacial acceleration and sea-level-rise assessment. We address a problem of climatic and cryospheric change: the phenomenon of glacial acceleration. The Intergovernmental Panel on Climate Change (IPCC) identified glacial acceleration in its 2013 Fifth Assessment Report (AR5) as one of two main sources of uncertainty in SLR assessment (the other source is atmospheric) [13]. The most recent report, IPCC AR6, published in 2021, does not present a solution. Instead, it elevates the urgency of understanding glacial acceleration by declaring it a “deep uncertainty” in SLR assessment [3]. The different types of accelerating glaciers include surge-type glaciers, tidewater glaciers, fjord glaciers (isbræ) and ice streams [14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30]. The frequency of acceleration may be intrinsic to the glacier type, and accelerations may be quasi-periodic or one-time events. An acceleration may be initiated by the internal dynamics of the glacier or forced externally. For instance, it may be induced by warming ocean water at the front of the glacier or controlled by a combination of several factors [31,32,33,34]. Spatial acceleration may be due to subglacial (bed) topography [33] or caused by a dynamic event. All types of acceleration typically lead to the formation of crevasse fields. Surging is the type of acceleration that has received the least research. The complexity of ice flow during surging defies many classic data analysis methods, rendering most cyberinfrastructures incapable of modeling this geophysical process.

In this paper, we focus on an exemplary analysis of glacial acceleration during the surge of an Arctic glacier system, the Negribreen Glacier System (NGS). We classify crevasse patterns as indicators of the drastic and rapid dynamic changes that occur during a surge. The surge has led to a mass transfer from the glacier to the ocean on the order of 0.5-1% of global annual SLR in just a few months during the height of the surge [35,36]. Because a surge causes sudden mass transfer from the cryosphere to the ocean, it introduces a catastrophic type of uncertainty into SLR estimation (the term “catastrophic” refers to continuous changes that lead to sudden effects). If we are to reconcile SLR assessment, we need to understand surge processes.

Challenge 3. Integration of physically constrained classification and modern “Deep Learning” approaches in satellite image classification. The surge is captured in time series of high-resolution satellite image data, which motivates an ML-based classification. Deep convolutional neural network (CNN) architectures are considered to provide state-of-the-art performance on standard image classification benchmarks such as the ImageNet dataset [37,38,39,40,41]. However, two problems exist. First, deeper networks improve performance only up to a point. Beyond that point, increasing the network depth results in increasingly worse performance due to the vanishing gradient problem [42]. Second, and more challenging for applications in the cryospheric sciences, no published labeled training data sets exist for classifying ice-surface features such as crevasses (see [43]). The role of crevasse types in identifying deformation types, which are directly related to glacial acceleration, will be described in section 3. A main task is thus the creation of such labeled data sets, which are required for training a neural network (NN). For CNNs, the problem is exacerbated by the fact that very large amounts of training data (on the order of hundreds of thousands of samples) are needed.
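The vanishing-gradient problem mentioned above can be illustrated with a minimal NumPy sketch. The sketch assumes plain fully connected layers with sigmoid activations, which is not the architecture of any network cited here. Because the sigmoid derivative never exceeds 0.25, each layer the gradient passes through tends to shrink it. As a result, the gradient that reaches the input decays roughly geometrically with depth. Layer width, weight scale and random seed are arbitrary assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def input_gradient_norm(depth, width=16, seed=0):
    """Push a random input through `depth` fully connected sigmoid layers, then
    backpropagate a unit gradient and return the norm that reaches the input."""
    rng = np.random.default_rng(seed)
    # Weight scale 1/sqrt(width) (an assumption) keeps W @ h roughly norm-preserving.
    weights = [rng.normal(0.0, 1.0 / np.sqrt(width), (width, width)) for _ in range(depth)]
    h = rng.normal(size=width)
    activations = []
    for W in weights:                        # forward pass
        h = sigmoid(W @ h)
        activations.append(h)
    grad = np.ones(width)                    # dLoss/d(output), set to ones for illustration
    for W, a in zip(reversed(weights), reversed(activations)):
        grad = W.T @ (grad * a * (1.0 - a))  # chain rule; sigmoid'(z) = a(1 - a) <= 0.25
    return float(np.linalg.norm(grad))

for depth in (2, 5, 10, 20):
    print(depth, input_gradient_norm(depth))
```

The printed norms fall by many orders of magnitude between 2 and 20 layers. This shrinkage is what residual connections (section 2.4) are designed to counteract.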

We have previously developed a physically constrained ML approach, the connectionist-geostatistical classification method [18,44,45,46]. The connectionist-geostatistical method uses a two-tiered approach. The first step is a physically informed spatial statistical analysis, carried out in a discrete mathematics framework. The output of the geostatistical step provides the input for the NN, activating the neurons of the input layer. To carry out the actual classification, a connectionist approach is selected. This approach can utilize a multi-layer perceptron with backpropagation of errors (MLP-BP), or simply an MLP. The MLP has proven to be a robust and functional architecture for this type of classification and already provided an efficient solution 20 years ago [44]. To train the connectionist-geostatistical classification, a small data set suffices, of a size that an expert can reasonably derive [44]. Such a data set is on the order of several hundred labeled video scenes or small subimages of a satellite image. However, several developments warrant investigation of modern “Deep Learning” architectures to facilitate fast and efficient processing. These include advances in Earth observation, increasing data resolution and data set size, and advances in computer hardware and processing speed.
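The two-tiered idea can be sketched in NumPy under several assumptions. In tier 1, directional experimental vario functions of a subimage are computed and rescaled to [0, 1]. In tier 2, that vector activates the input layer of an MLP. The direction set, lag range, min-max rescaling, hidden-layer size, number of classes and untrained random weights are all hypothetical choices for illustration, not the published configuration. The vario function is written as the standard experimental variogram estimator.

```python
import numpy as np

def directional_vario(img, max_lag, direction):
    """Experimental vario function of a 2-D array along one grid direction:
    gamma(h) = 1/(2 N(h)) * sum over the N(h) pixel pairs of (z(x) - z(x + h))^2,
    for lag steps h = 1..max_lag. `direction` is a (row_step, col_step) pair
    with non-negative entries."""
    img = np.asarray(img, dtype=float)
    dy, dx = direction
    rows, cols = img.shape
    gamma = []
    for h in range(1, max_lag + 1):
        sy, sx = dy * h, dx * h
        head = img[: rows - sy, : cols - sx]   # z(x)
        tail = img[sy:, sx:]                    # z(x + h)
        gamma.append(0.5 * np.mean((head - tail) ** 2))
    return np.array(gamma)

# Hypothetical direction set: along rows, along columns, and one diagonal.
DIRECTIONS = [(0, 1), (1, 0), (1, 1)]

def vario_features(subimage, max_lag=10):
    """Tier 1: concatenate directional vario functions and rescale them to [0, 1]
    so that they can activate the input neurons of the MLP."""
    feats = np.concatenate([directional_vario(subimage, max_lag, d) for d in DIRECTIONS])
    span = feats.max() - feats.min()
    return (feats - feats.min()) / span if span > 0 else np.zeros_like(feats)

def mlp_forward(x, W1, b1, W2, b2):
    """Tier 2: one sigmoid hidden layer and a softmax output over classes."""
    hidden = 1.0 / (1.0 + np.exp(-(W1 @ x + b1)))
    logits = W2 @ hidden + b2
    expd = np.exp(logits - logits.max())
    return expd / expd.sum()

# Usage with random (untrained) weights and a random 64 x 64 subimage.
rng = np.random.default_rng(1)
x = vario_features(rng.random((64, 64)))        # 3 directions x 10 lags = 30 inputs
n_hidden, n_classes = 12, 4                      # hypothetical sizes
W1, b1 = rng.normal(0, 0.1, (n_hidden, x.size)), np.zeros(n_hidden)
W2, b2 = rng.normal(0, 0.1, (n_classes, n_hidden)), np.zeros(n_classes)
probs = mlp_forward(x, W1, b1, W2, b2)
```

The input layer has a fixed size set by the number of directions and lags, not by the subimage size. This is one reason a small, expert-labeled training set can suffice.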

The salient difference in the effectiveness of the two approaches lies less in the NN architecture (MLP versus CNN) than in the approaches themselves. The connectionist-geostatistical classification is physically informed: physical knowledge informs our approach to geostatistics. The CNN, in contrast, relies on its much larger number of degrees of freedom to determine the classes. CNNs can be trained supervised or unsupervised [47].

In this paper, we will investigate the trade-offs between a physically constrained NN and a CNN. We will also introduce a first approach to leverage the advantages of both ML methods in an integrated image classification system. We propose a solution to natural science problems that combines and integrates physically constrained neural networks and modern ML methods. To this end, we will demonstrate that a physically constrained NN can help create a labeled training data set large enough to train a CNN. We emphasize that physical knowledge needs to be leveraged in designing an ML approach if that approach is expected to provide solutions for the physical sciences and advance knowledge there.
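One common way to let a classifier trained on a small expert-labeled set generate a larger training set is confidence-thresholded pseudo-labeling. In this technique, only samples the classifier is confident about are kept as new labeled examples. The sketch below is a generic illustration under that assumption. The function name and the threshold of 0.9 are hypothetical, and the procedure used in this paper may differ.

```python
import numpy as np

def select_pseudo_labels(probs, threshold=0.9):
    """Return indices and labels of subimages whose top class probability,
    as assigned by a physically constrained classifier, reaches `threshold`."""
    probs = np.asarray(probs, dtype=float)
    confidence = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    keep = np.flatnonzero(confidence >= threshold)
    return keep, labels[keep]

# Hypothetical classifier output for three subimages and two classes.
probs = [[0.95, 0.05], [0.60, 0.40], [0.10, 0.90]]
keep, labels = select_pseudo_labels(probs)
```

Here subimages 0 and 2 are kept with labels 0 and 1, and the ambiguous subimage 1 is discarded. Raising the threshold trades training-set size for label reliability.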

2. Background on Neural Networks, Especially in the Geosciences and in (Satellite) Image Classification

In this section, we give a brief summary of the state of the art of ML in the geosciences, as well as ML applied to satellite image classification or analysis. Most works fall into one of several categories, addressed in the following subsections.

2.1. General References: Classic Papers, Review Papers and Books

While neural networks have seen a sudden rise in public attention in recent years, the first research dates back to neuropsychology at the end of the 19th century [48,49]. Rosenblatt [50] introduced the perceptron, and the first deep learning perceptron appeared in 1967 [51]. Research on neural networks stalled in the early 1970s, mostly due to limitations in computation [52]. Foundational research on CNNs, and thus on Deep Learning, dates back to the 1960s [40,51,53,54]. Important concepts that mark steps in the development of NNs include backpropagation of errors and connectionism. Backpropagation of errors is an application of Leibniz's chain rule (from 1673) to networks of differentiable nodes, and it has become a standard in optimizing MLPs [55]. In designing the connectionist-geostatistical classification approach in the 1990s, we applied an MLP with backpropagation of errors, using the Stuttgart Neural Network Simulator (SNNS) [44,56]. The term connectionism refers to an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks [57,58].
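Backpropagation of errors, that is, the chain rule applied layer by layer, can be sketched for a three-layer MLP with an input layer, one hidden layer and an output layer. This is the layout of the original connectionist-geostatistical classification. The minimal NumPy sketch below does not reproduce the SNNS setup of [44]. The XOR toy data, tanh hidden units, sigmoid output with cross-entropy loss, learning rate and epoch count are all assumptions.

```python
import numpy as np

def train_mlp_bp(X, y, n_hidden=8, lr=0.5, epochs=5000, seed=0):
    """Three-layer perceptron (input, one hidden, output) trained with
    backpropagation of errors; returns the weights and the loss history."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0, (X.shape[1], n_hidden)); b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 1.0, (n_hidden, 1)); b2 = np.zeros(1)
    y = y.reshape(-1, 1).astype(float)
    eps = 1e-12
    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = np.tanh(X @ W1 + b1)
        p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
        losses.append(float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))))
        # Backward pass: apply the chain rule from the output back to the input.
        d_out = (p - y) / len(X)                  # dL/dz at the output (sigmoid + cross-entropy)
        dW2, db2 = h.T @ d_out, d_out.sum(axis=0)
        d_hid = (d_out @ W2.T) * (1.0 - h ** 2)   # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
        dW1, db1 = X.T @ d_hid, d_hid.sum(axis=0)
        # Gradient-descent update.
        W1 -= lr * dW1; b1 -= lr * db1
        W2 -= lr * dW2; b2 -= lr * db2
    return (W1, b1, W2, b2), losses

# Usage on the XOR problem, which a single-layer perceptron cannot solve.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0])
params, losses = train_mlp_bp(X, y)
```

Each backward line mirrors one forward line. This one-to-one mapping is what makes backpropagation an application of the chain rule, not a separate learning principle.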

A standard reference for Deep Learning is the book by Goodfellow and others [59]. A good general reference related to several topics of this paper is the book “Deep learning for the Earth Sciences: A comprehensive approach to remote sensing, climate science and geosciences” [47].

In their review of remote sensing image classification methods, the authors of [60] focus on applications of CNNs for extracting semantic features from image data. Rawat and Wang [38] present a review of deep convolutional networks for image classification, and Garcia-Garcia and others present a survey of deep learning techniques for image and video semantic segmentation [61]. A review of ML methods for classification of remotely sensed imagery, with applications to sea-ice classification, is given in [62]. A review of hyperspectral image (HSI) classification using CNNs is presented in [63].

2.2. Classic Applications of NNs in the Geosciences

Prediction and assessment of sea-ice conditions in the Arctic, based on satellite remote sensing, has been an important tool for ocean navigation. Synthetic Aperture Radar (SAR) data lend themselves well to sea-ice observations, because the radar signal penetrates cloud layers and fog. These are frequent in Arctic atmospheric conditions and obscure optical satellite image data. A short review of ML methods for classification of remotely sensed imagery, with applications to sea-ice classification, is given in [62]. Research on sea-ice classification based on SAR data goes back to the 1990s [64,65,66]. These early methods typically distinguish a small number of sea-ice types, such as 3 or 4. Most methods use multivariate statistics of pixel values in different channels, for example [67,68,69]. The approach of [67] is innovative in that it bases image segmentation on gradients in the original multivariate statistical parameters, using an edge-detection method. Early applications of NNs include [70,71]. Karvonen's work [70] was a milestone in state-of-the-art statistical techniques for sea-ice classification, with applications to the seasonal ice cover in the Baltic Sea; it notes that understanding physical processes remains an open problem. The authors of [71] introduce an interesting concept that combines a number of statistical parameters with an NN. Recent publications that utilize sea-ice classification include [72,73,74,75].

Some neural networks that address pattern recognition problems achieved a degree of popularity. Examples are self-organizing maps [76], a form of unsupervised classification, and “Learning Vector Quantization”, a supervised NN approach [77]. However, they were found to be outperformed by MLPs with backpropagation of errors (MLP-BP) for image analysis of repeated structural patterns [44]. An overview of pattern recognition using NNs is given in [78].

2.3. Spectral Versus Spatial Classification

Most image classification methods are based on spectral or multi-spectral classification, i.e., they utilize the fact that an image is composed of several spectral bands [60]. The connectionist-geostatistical classification method utilized in this paper is a form of spatial classification. It is based on the fact that repeating spatial structures of crevasse fields lead to characteristic types of vario functions [44,45]. In [62], we compare statistical and geostatistical classification methods to explore the potential of combined methods for sea-ice classification.

Vario functions are a formulation of the variogram in discrete mathematics [79]. Variograms have been employed in satellite image characterization by the authors of [80]. They explore first- and second-order modeled histograms and variograms to characterize landscape spatial structures from remote-sensing imagery (SPOT-HRV NDVI data). They conclude that the method has the potential to distinguish effects of anthropogenic landscape-forming processes from those of environmental and ecological processes, although they note that the method can be improved. In contrast, the connectionist-geostatistical method in the form used in this paper employs experimental vario functions directly to initialize the input neurons of an NN.
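For reference, the standard experimental variogram (semivariogram), which the vario function discretizes, can be written as follows for a lag vector h. Here N(h) is the number of data pairs separated by h, and z is the image value (for example, pixel intensity). This is the textbook estimator, stated as an assumption; the exact vario-function variants defined in [79] may differ. Evaluating γ at a fixed set of lags h_1, ..., h_m, such as several step sizes in several directions, yields a vector v of fixed length. This is the kind of input that can initialize the input neurons of an NN.

```latex
\gamma(\mathbf{h}) = \frac{1}{2N(\mathbf{h})} \sum_{i=1}^{N(\mathbf{h})}
  \left[ z(\mathbf{x}_i) - z(\mathbf{x}_i + \mathbf{h}) \right]^2,
\qquad
\mathbf{v} = \bigl( \gamma(\mathbf{h}_1), \gamma(\mathbf{h}_2), \ldots, \gamma(\mathbf{h}_m) \bigr)
```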

Most applications of variograms in satellite image analysis are estimations (kriging) or spatial or temporal analyses of satellite data, not classifications. Examples include [81,82], specifically of SAR data. The differences between geostatistical estimation/interpolation and extrapolation, characterization, and classification are explained in [45].

2.4. Computer Science Developments of ML Methods for Image Processing and Classification. CNNs.

Recent advances in NN research, especially for applications to image analysis, processing and classification, have been led by computer scientists. In approximately the last 10-15 years, Deep Learning methods have dominated. Within the field of Deep Learning, CNNs are preeminent [47]. Deep Learning summarizes ML approaches that involve NNs with large numbers of internal layers (for example, ResNet-1001 has 1001 layers [83,84]). Overviews of these methods are given in [47,60]. In contrast, the MLP used in the original (2001) connectionist-geostatistical classification has three layers: an input layer, an internal (hidden) layer and an output layer [44].

Widely used types of CNNs include the following (described largely following [60], with some updated references). (1) AlexNet is a CNN with five convolutional layers and two fully connected layers, first evaluated on the benchmark data set ImageNet [39,40]; AlexNet won the so-called ImageNet challenge in 2012 [41]. (2) In Network-in-Network (NiN) [85], an MLP is added to each convolutional layer, replacing a simple linear convolutional layer, and an averaging method called global average pooling is applied to counteract overfitting. (3) VGG-Net [86] is a 19-layer network with small (3x3) convolutional kernels. (4) GoogLeNet [87] is a 22-layer network. (5) ResNet [41,83,84] is a family of so-called residual networks with depths of up to 1001 layers. (6) DenseNet [88] is an NN type that uses cross-layer connections to improve network structure. (7) MS-CapsNet [89] is a multi-scale capsule network. ML methods, from multi-spectral statistical methods to CNNs, can be trained supervised or unsupervised [47].
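Global average pooling, as used in NiN, reduces each feature map to a single number by averaging over all spatial positions. Because the operation has no trainable parameters, it cannot overfit. The NumPy sketch below assumes a channels-first (channels, height, width) layout; the 7 x 7 map size is arbitrary.

```python
import numpy as np

def global_average_pooling(feature_maps):
    """Average each channel of a (channels, height, width) array over all spatial
    positions, giving one value per channel and no trainable parameters."""
    return np.asarray(feature_maps, dtype=float).mean(axis=(1, 2))

maps = np.stack([np.full((7, 7), c) for c in (1.0, 2.0, 3.0)])
pooled = global_average_pooling(maps)
```

In NiN, the last layer produces one feature map per class, so the pooled values serve directly as class scores. By comparison, a fully connected layer mapping a 512 x 7 x 7 feature stack to 1000 classes would need 512 x 49 x 1000 = 25,088,000 weights.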

The work in this paper uses a form of ResNet, because ResNets have been found to excel at image classification problems. ResNet principles and architectures will therefore be described in more detail in section 8. Applications of CNNs in image classification are numerous (see, for example, [37,38,40]).
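The core idea of a ResNet is the identity shortcut. Each block learns a residual F(x) that is added to its input, so a block with F close to zero simply passes its input through. The gradient also has a direct path through the addition. The NumPy sketch below illustrates this with a basic (post-activation) residual unit. The pre-activation variant used for the 1001-layer networks instead moves the activation inside F. Using dense matrices in place of convolutions and omitting batch normalization are simplifications of this sketch, not features of ResNet.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, W1, W2):
    """Basic residual unit y = relu(x + F(x)) with F(x) = W2 @ relu(W1 @ x).
    Dense matrices stand in for the 3x3 convolutions, and batch normalization
    is omitted, to keep the sketch short."""
    return relu(x + W2 @ relu(W1 @ x))

def plain_block(x, W1, W2):
    """The same layers without the identity shortcut."""
    return relu(W2 @ relu(W1 @ x))

x = np.array([0.5, 1.0, 2.0])
Z = np.zeros((3, 3))               # blocks whose residual branch has learned nothing
res, plain = x.copy(), x.copy()
for _ in range(100):               # stack 100 blocks of each kind
    res = residual_block(res, Z, Z)
    plain = plain_block(plain, Z, Z)
```

Because x is non-negative, the residual stack returns it unchanged after 100 blocks, whereas the plain stack collapses it to zero. Adding depth therefore cannot make a residual network worse at representing the identity.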

2.5. Identified Needs for Advancing Remote-Sensing-Data Classification Using ML Methods, in General and in the Geosciences

In this paper, we will address some of the shortcomings or challenges associated with applications of CNNs in image classification identified in [60]:

- lack of sufficient training data (see also [43,90,91]);
- the need for remote-sensing-specific CNN architectures;
- the time-efficiency of training CNNs for image classification; and
- a need for high-level CNN-based applications in remote-sensing image classification.

The first three challenges concern technical aspects of NN development, and our work will address all three. Most interesting to us is the observation, made in [60], that most current remote-sensing image ML applications resemble those in computer vision, whereas identification of semantically complex information is largely missing in state-of-the-art research. This resonates with the authors' observation that many modern CNNs are constructed for the same type of simple applications that were tackled with image processing methods several decades ago. For example, the hyper-deep ResNet-1001 [83,84] is derived for multiframe video satellite image super-resolution processing. It is then applied to the problem of differencing aircraft presence and absence, which had already been analyzed decades earlier. Another application to moving object detection is described in [92]. Note that ResNets use very small convolutional kernels, which matches the fact that many image denoising or sharpening techniques of the 20th century used 3x3, 5x5 or 7x7 kernels [44]. It appears that modern ML methods often perform applications similar to those of older methods, only faster, at higher resolution, or for more modern observations, e.g., satellite videography. In our paper, we aim to create an approach that allows us to understand a specific, complex geophysical (cryospheric) phenomenon.

In part, the lack of actual conceptual advances or physical process understanding in the Earth sciences resulting from ML applications to image classification is tied to one fact. ML research is based on a relatively small number of labeled training data sets (an example is ImageNet [40]).

Physically driven NNs fall into the category termed “high-level (C)NN-based applications” in [60], that is, the classification of geophysically complex information. An example is the crevasse classification for the surge problem in this paper. Identifying and classifying complex information in imagery requires large subimages or large moving windows (which are not the same) [44] and, not least, the creation of labeled training data for cryospheric applications. Along similar lines, the review of Earth science applications in [93] highlights NN structures that include data analysis modules from fields other than ML (see subsections 2.5 and 2.6). However, only a small number of such approaches are listed, and none in the cryospheric sciences. Our work falls into this category.

2.6. Recent Applications of NNs in Geosciences

Dominant application fields include land cover/land use (urban areas, farmlands, roads, water bodies), biogeosciences, and military applications, in particular change detection of airplanes present or absent at terminals (see, for example, [37,60,90,94,95,96,97]). NNs and other ML methods are increasingly finding applications in the geosciences. Reviews are found in [62,98].

Examples of papers that apply ML structures include the following. Neural networks have been utilized in studies of vegetation canopy height, using ICESat-2 and Landsat data [99,100]. In a case study of a forest in Texas, the authors of [100] investigate the potential of using a Deep Neural Net (DNN) or a Random Forest (RF) model for above-ground biomass assessment based on ICESat-2 and Landsat data. They find similar performance for the DNN and the RF. The authors of [101] explore applications of NNs for the analysis of atmospheric data from ICESat-2, treated like image data. Common to these studies is that they are case studies, which investigate the applicability of previously published types of NNs to satellite data analysis. Other applications include forest canopy height determination from ICESat-2 and Landsat data [102], disaster detection and monitoring (flood detection) using Random Forests [103], and geological image classification using CNNs [104].

In summary, recent applications of ML in the geosciences fall into two categories: (1) computer scientists taking summative approaches to geoscience data classification, and (2) geoscientists exploring applications of existing, previously published ML approaches to image analysis. Notable exceptions include feature-augmented neural nets for satellite image classification, an approach that augments data sets with handcrafted feature data sets (see, for example, [105]). Another exception is a new strand of methods that aim to integrate ML and physics (see the next section).

2.7. Approaches Aimed at Integrating Physical Sciences and ML

Most relevant for the work in this paper is a class of approaches that are aimed at integrating physical sciences and ML, by either using physical knowledge in ML or by using ML to improve physical models.

Exemplary approaches that include physics in ML have been termed “physics-aware ML” [106]. They are based on the concept that ML approaches in the geosciences ought to respect the elementary laws of physics. Under this label, challenges in the interplay of physics and ML, more so than solutions, have been identified that may help advance Earth system knowledge. These include encoding differential equations from data, constraining data-driven models with physics priors and dependence constraints, improving parameterizations, emulating physical models, and blending data-driven and process-based models. The authors of [107] propose an approach termed “Geoscience-aware deep learning” (GADL), which includes geoscience features in Deep Learning models. This is similar to the concept of including handcrafted features in CNN-based satellite image classification suggested in [105]. Other authors recognize the need for collaborative efforts in the fields of geoscience and ML (e.g., [108]).

Physically guided neural networks (PGNNs) leverage scientific knowledge, physical models and observational data in a neural network to make better predictions [109]. Physical consistency is used as a learning objective to allow generalization of the learned network. PGNNs have been used to model complex physical systems that either lack required data constraints or incur large computational costs. Such systems are found in fluid dynamics problems [110] and power flow analysis [111]. These include applications of ML methods to the determination of numerical modeling parameters.

In a recent overview of ML in the Earth sciences and the physical sciences in general, the authors of [93] emphasize that advancing knowledge in the sciences with the help of ML methods requires the development of novel NN approaches. Examples of methods that include non-ML physical data analysis modules in the NN operations flow/architecture stem from oceanography (sea-surface temperature patterns [112]) and from biological applications [113].

3. Glaciology Background

3.1. Importance of Surging

Glacier surging is an important type of glacial acceleration, with surge-type glaciers found in many, but not all, geographic regions around the world. However, the phenomenon remains poorly understood. This is due to a relative paucity of comprehensive observational data and a lack of model application to actual, complex ice systems [14,15,16,17,18]. A surge-type glacier experiences a quasi-periodic cycle between a long quiescent phase of normal flow and gradual retreat, and a short surge phase. During the surge phase, the glacier typically accelerates to 10-200 times its quiescent speed, with heavy crevassing throughout the ice system.

The recent surge of the Negribreen Glacier System (NGS), an Arctic glacier system located in eastern Spitsbergen, Svalbard, provides a rare opportunity to study a surge in a large and complex system [35,114,115]. In 2016, the NGS began to surge, with acceleration and heavy crevassing within 10 km of the terminus [35,36,115,116]. The largest surge speeds, around 22 m/day and equivalent to 200 times the glacier's quiescent flow velocity, occurred at the height of the acceleration phase in July 2017 [117].
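As a back-of-the-envelope check of the ratio stated above, a peak speed of about 22 m/day at 200 times the quiescent speed implies the following quiescent flow speed. This is an order-of-magnitude estimate derived only from the two numbers in the text, not an independently measured quiescent velocity.

```latex
v_{\mathrm{q}} \approx \frac{22\ \mathrm{m\,day^{-1}}}{200}
  = 0.11\ \mathrm{m\,day^{-1}}
  \approx 0.11 \times 365\ \mathrm{m\,yr^{-1}}
  \approx 40\ \mathrm{m\,yr^{-1}}
```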

Negribreen last surged in 1935/36 [114], which indicates that the quasi-cycle of the surge in the NGS is approximately 80 years. From a methodological point of view, it is worth noting that there had been no opportunity for modern data analysis and study of the Negribreen surge process before the current surge. This example shows how the relative paucity of surges limits our ability to study them. It also shows that the Negribreen surge has provided a unique opportunity to advance several branches of science, mathematics and engineering [117,118]. Relevant to the study in this paper, the NGS has provided, for an Arctic glacier system, a unique collection of ice-surface structures and crevasse types in close proximity. This collection enabled the ML work reported here.

3.2. The Surge in the NGS

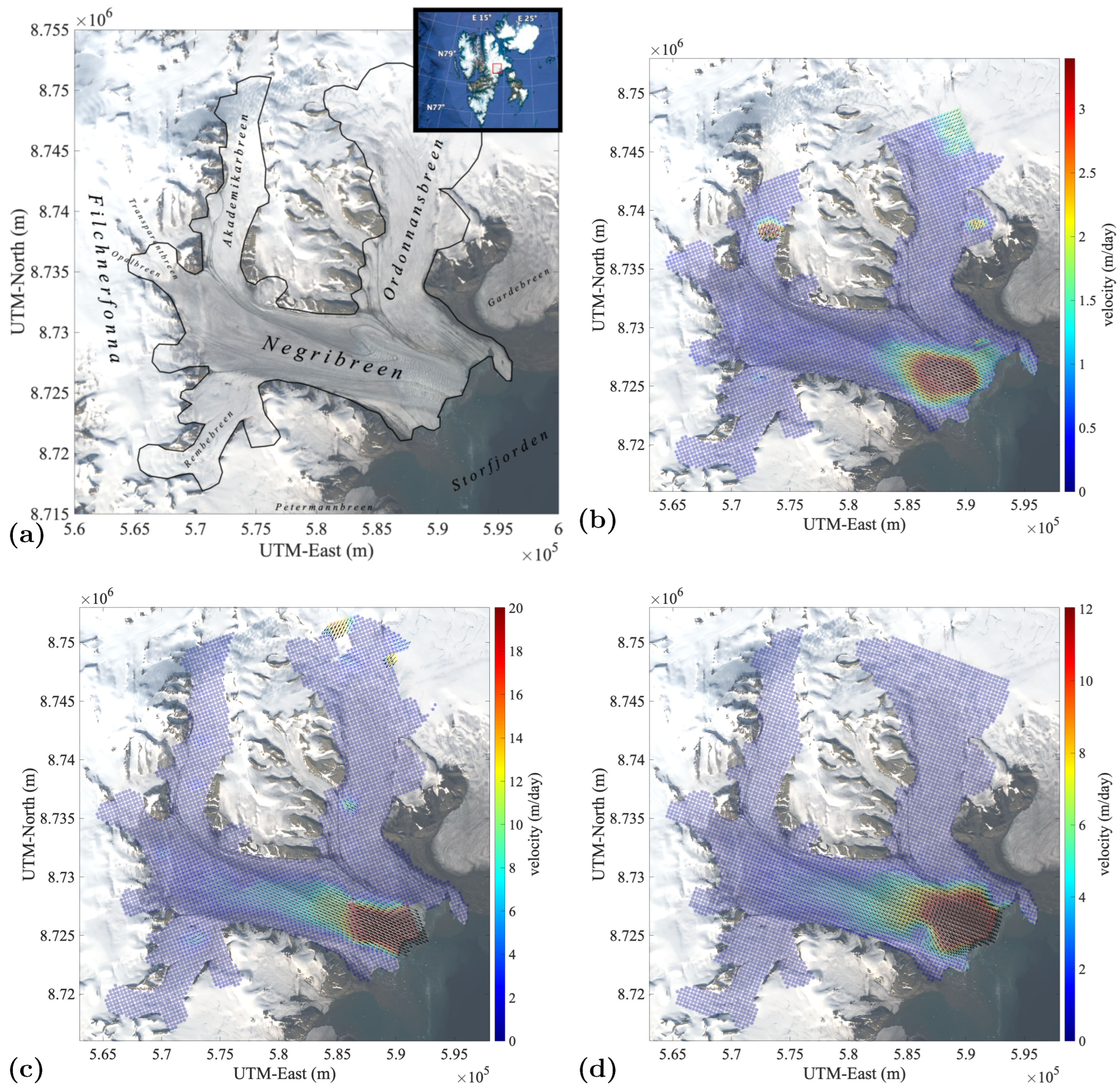

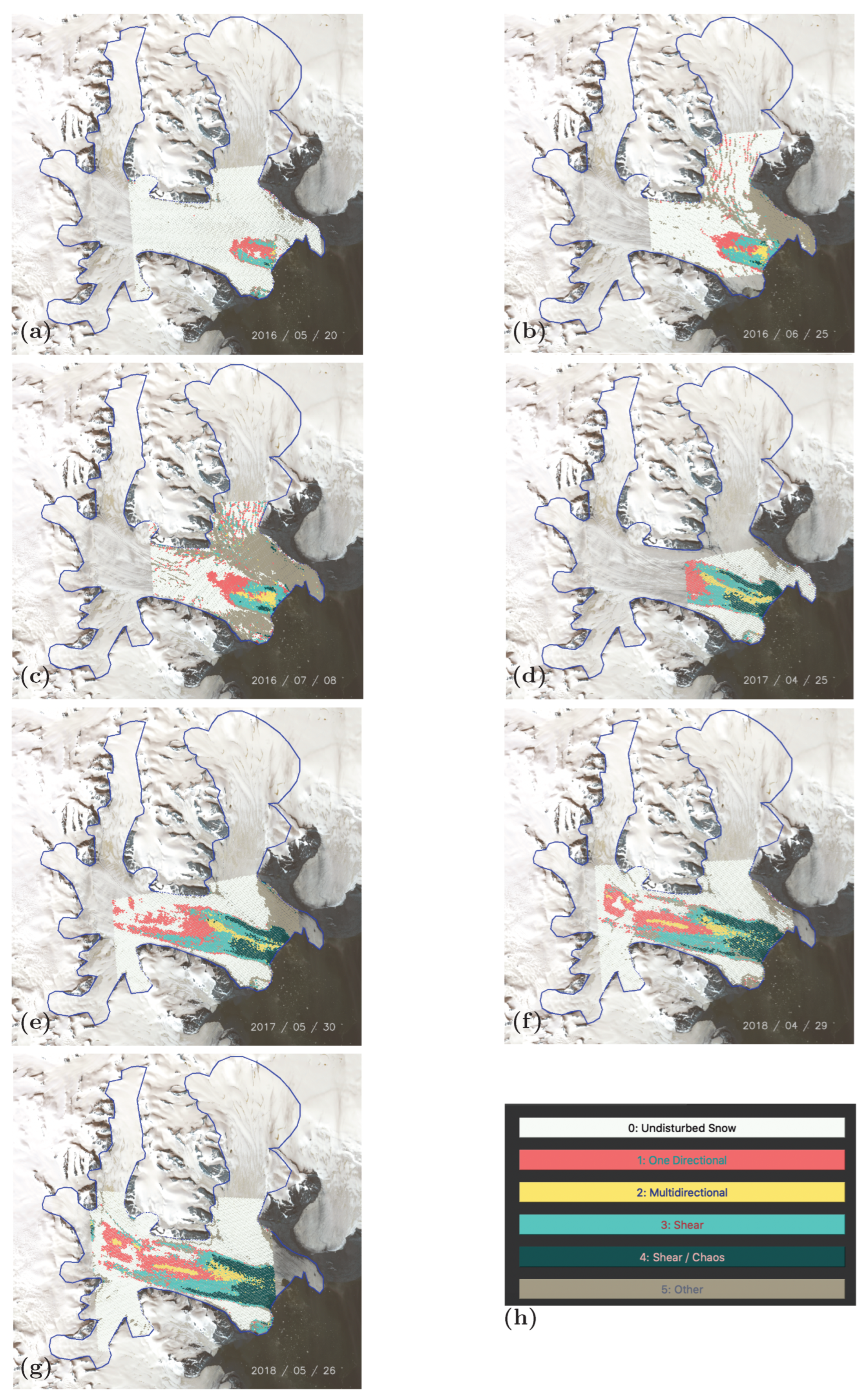

Negribreen is located on Spitsbergen in Svalbard, Norway. In 2019, its calving front was at approximately (78.57°N, 19.083°E), roughly 1270 km south of the North Pole. Negribreen receives most of its inflowing ice from the accumulation zone above the glacier to the west, called Filchnerfønna, and its northern part, the Lomonosovfønna. This ice arrives through Transparentbreen, Opalbreen and the Negribreen ice falls. The NGS, as defined by the blue contour in Figure 1a, has an ice extent of approximately 500 km². The main glacial trunk, referred to simply as Negribreen, is fed by several major tributaries: Rembebreen to the south and, to the north, Akademikarbreen and Ordonnansbreen. Rembebreen and Petermannbreen (southwest of Negribreen) flow out of a southern part of Filchnerfønna. Ordonnansbreen does not flow out of an ice cap; its source areas are mountain cirques. The area of the NGS is classically referenced as 1180 km² [114]. This figure is based on the extent of the glacier system at a time when Petermannbreen and Gardebreen (east of Ordonnansbreen) and their tributaries were still connected to Negribreen.

The NGS is a polythermal glacier, consisting of ice at and below the pressure melting point. It is also a marine-terminating (tidewater) glacier, with ice calving into Storfjorden and the Arctic Ocean. Like other tidewater glacier surges in Svalbard (e.g., [24,115,119]), Negribreen began accelerating near the terminus after a collapse near the glacier front [36]. Surge effects, such as heavy crevassing and elevated velocities, then propagated upglacier until the end of 2020. At that point, they reached the NGS boundary with Filchnerfønna, 30 km upglacier from the terminus [36]. Mean ice speeds remain significantly elevated in 2023 relative to quiescent speeds, with a maximum of 4 m/day near the calving front, although ice speeds have been decreasing steadily since the peak in 2017 (see Figure 1c). High velocities, large-scale crevassing and enhanced calving during the surge have led to rapid disintegration of the system and large mass loss [36]. The surge has thus contributed a significant amount to annual sea-level rise during the surge years. Examples of surge crevasses are shown in aerial photographs in [36,117].

3.3. The Crevasse-Centered Approach

Because analysis of crevasse patterns plays a central role in the physical part of our ML approach, we briefly summarize two topics: the role of crevassing in glacial acceleration, and the use of the crevasse concept in data analysis and modeling. The central idea of the crevasse-centered approach is that dynamic signatures of fast-moving ice and glacial acceleration are imprinted in the ice in the form of crevasses. Consequently, the deformation history of a glacier can be reconstructed through analysis of crevasse patterns. Structural geologic principles provide links between dynamics, kinematics and deformation. These links can be physically formalized and quantified using continuum mechanics and simulated in numerical models [20,120,121,122,123,124,125].

Crevasses can be characterized using generalized spatial surface roughness. This mathematical approach utilizes parameters derived from spatial statistical functions to capture spatial properties of a surface [45]. Roughness-based characterization applies to both crevassed and non-crevassed ice surfaces and thus allows mapping of an entire glacier. The approach of combining structural geology and mathematical roughness analysis to derive deformation characteristics in fast-moving glaciers is described in theory in [126]. It has been applied to map deformation provinces in surging and continuously fast-moving glaciers throughout the cryosphere [16,44,45,127,128,129,130]. Applications of other approaches to structural glaciology have been reported in [131,132,133]. These studies have shown that observations of crevasse patterns and surface roughness can be used as a source of geophysical or glaciological information.
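To make the link between spatial statistical functions and roughness parameters concrete, the sketch below reads simple parameters off the vario function of a 1-D surface profile. A regularly spaced crevasse pattern produces a vario function that rises to a first maximum near half the crevasse spacing. It then drops to a minimum (the “hole effect”) near the spacing itself. The specific parameters, the function names and the synthetic sine profile are hypothetical illustrations, not the roughness parameters defined in [45].

```python
import numpy as np

def vario_1d(profile, max_lag):
    """Experimental vario function of a 1-D surface profile for lags 1..max_lag."""
    z = np.asarray(profile, dtype=float)
    return np.array([0.5 * np.mean((z[:-h] - z[h:]) ** 2) for h in range(1, max_lag + 1)])

def roughness_parameters(profile, max_lag=20):
    """Hypothetical roughness parameters read off the vario function:
    the lag of its first local maximum (about half the dominant spacing), the lag
    of the next local minimum (about the spacing), and the ratio of that maximum
    to the lag-1 value. Returns None if no first maximum is found."""
    g = vario_1d(profile, max_lag)
    lags = np.arange(1, max_lag + 1)
    i_max = next((i for i in range(1, len(g) - 1) if g[i - 1] < g[i] >= g[i + 1]), None)
    if i_max is None:
        return None
    i_min = next((i for i in range(i_max + 1, len(g) - 1)
                  if g[i - 1] > g[i] <= g[i + 1]), None)
    return {
        "half_spacing_lag": int(lags[i_max]),
        "spacing_lag": None if i_min is None else int(lags[i_min]),
        "max_over_lag1": float(g[i_max] / g[0]) if g[0] > 0 else float("inf"),
    }

# Synthetic profile with a crevasse-like undulation every 8 samples.
x = np.arange(256)
params = roughness_parameters(np.sin(2 * np.pi * x / 8))
```

For this profile, the first maximum falls at lag 4 and the hole-effect minimum at lag 8, recovering the imposed spacing. A flat, uncrevassed profile yields no maximum and returns None.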

Furthermore, crevasse-based geophysical information obtained from remote sensing observations, such as satellite imagery, can be utilized in numerical models. The authors of [20] use Landsat-7 imagery of Bering Glacier, Alaska, during its peak surge phase in 2011 to derive crevasse locations, based on roughness characterization, and crevasse orientations. The crevasse orientations are also modeled by simulating the stress regime in a 3D, full-Stokes finite element model. Differences between observed and modeled crevasse characteristics are minimized by optimizing important surge-model parameters such as the basal friction coefficient. This method is extended in [134] to include other sources of model-data comparison, such as surface velocity. This extension allows the optimization of additional model parameters, such as those related to ice rheology.

Ice velocity observations are commonly used to constrain unknown model parameters (e.g., [135]). However, during a surge the large-scale nonlinear dynamics complicate velocity determination [20,136], particularly on the short time scales relevant to a surge. Therefore, crevasse observations are our most reliable source of dynamical information during peak surge activity. They can be used to derive and optimize basal sliding laws for modeling a surge phase [137].

Crevasse classes, like those derived in the present paper, offer a more sophisticated picture of a glacier’s dynamic and structural state compared to simple crevasse-location and crevasse-orientation characterization. With more detailed geophysical information from crevasse classification, we expect to provide better constraints for a numerical model allowing more optimal parameterization, better error correction for input data sets such as bed topography, and ultimately more realistic simulation of glacial acceleration and its resulting effects on SLR and the evolving cryosphere.

4. Summary of the Approach

4.1. Objectives, Summary of Approach, Classification and Analysis Steps

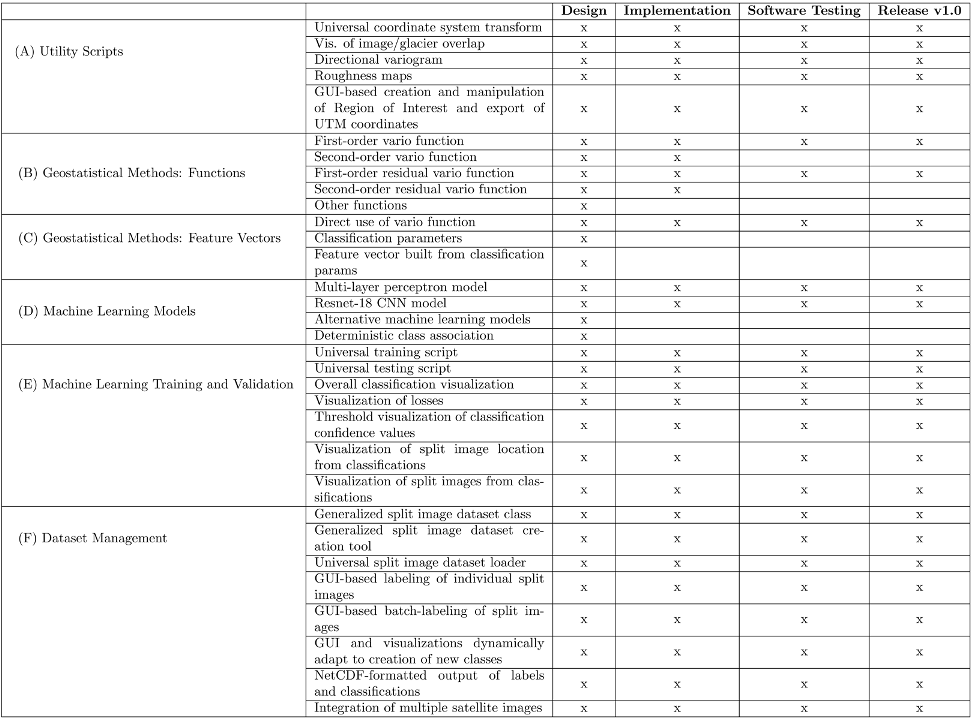

The main objective of this paper is the exploration of the trade-offs between a physically constrained NN and a CNN ("deep learning") for a specific, but generalizable, problem in the geosciences: the classification of crevasse types that form during the surge of an Arctic glacier system, the Negribreen Glacier System (NGS), Svalbard, to derive objective information about the evolution of the surge. To achieve this objective, we create a software system, termed GEOCLASS-image, that facilitates classification of surface features from high-resolution satellite imagery and other imagery, perform testing and quality assessment (Q/A) of the software system, and release it as the core of an associated cyberinfrastructure.

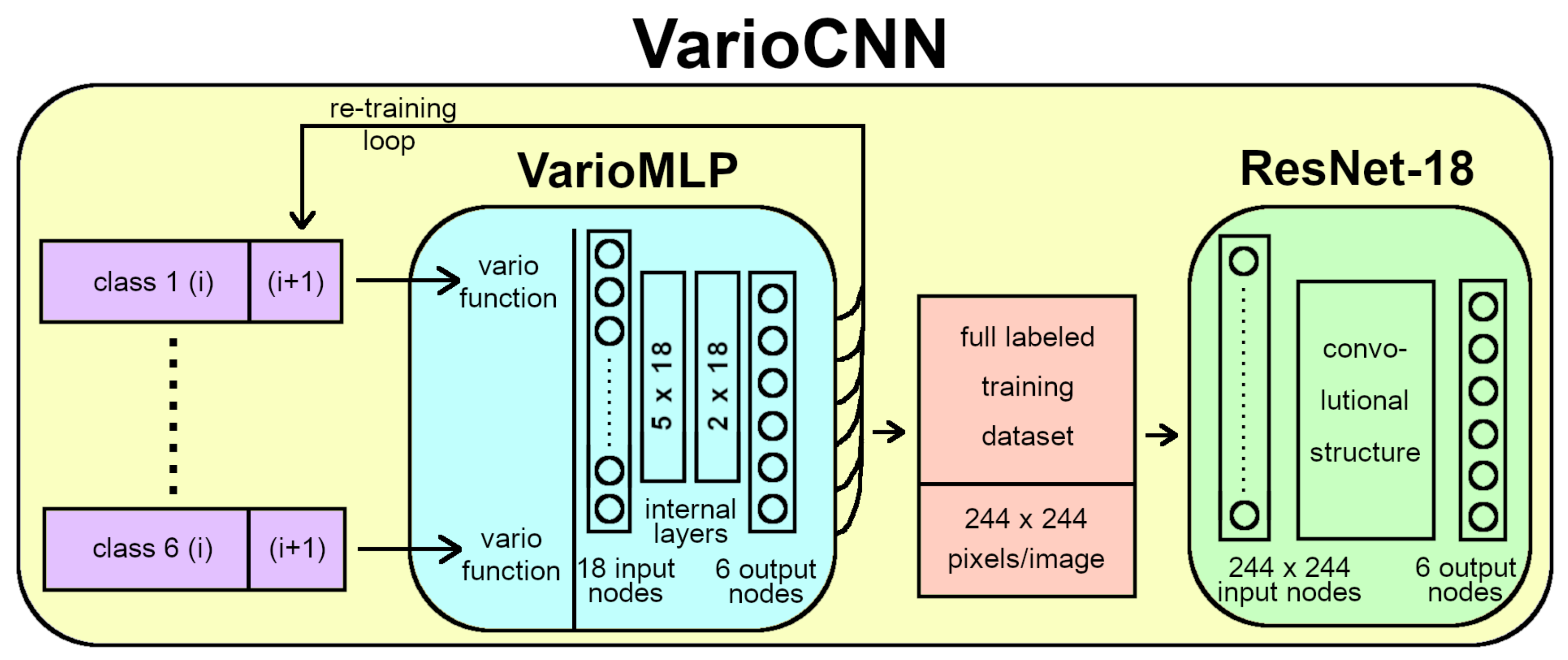

Based on the results of the two trade-off studies, we derive an example of an ML approach that combines the advantages of a physically constrained, classic NN with those of a CNN, thereby creating a physically constrained NN with a combined architecture, which will be termed VarioCNN. The final VarioCNN is applied to a time series of WorldView images to derive information on the evolution of the surge in an Arctic glacier system, the Negribreen Glacier System.

The combined NN, VarioCNN, will be applied to a time series of WorldView-1 and WorldView-2 images, collected in 2016–2018 during the acceleration and mature stages of the surge in the NGS. Each image will be analyzed individually and provides one element of a time series of thematic maps of crevasse provinces. The goal is to derive geophysical information on the evolution of the surge during these core stages. Specifically, we aim to create a classification of crevasse patterns as they relate to deformation types that occur as a result of ice-dynamic processes. Crevasses are manifestations of the local strain state of the ice. Occurrence of fresh crevassing indicates the expansion of the surge, and as the surge progresses, new types of crevasse patterns form. The time series of crevasse maps will be interpreted geophysically. Lastly, we provide a description of the GEOCLASS-image software system.

In summary, the work in this paper builds on the following three ideas:

- (1) Employ geostatistical parameters as a mathematical formulation for physically informed extraction of complex information from imagery
- (2) Utilize different NN types as connectionist association structures: MLPs and CNNs
- (3) Compare and then combine the NNs into a three-tiered approach: geostatistical-connectionist with MLP and CNN

4.2. Approach Steps

Objectives of the work in this paper are the following:

- (1) Create a software system that
  - (1.1) encompasses the main principles of the connectionist-geostatistical classification method,
  - (1.2) is sufficiently tested, robust and quality-assessed to form the centerpiece of a community software for image classification in the geosciences and beyond,
  - (1.3) has a user-friendly GUI that supports all steps from image manipulation and selection of training data through classification,
  - (1.4) facilitates training and classification of several crevasse types,
  - (1.5) allows analysis of different types of satellite imagery,
  - (1.6) includes utility tools for cartographic projections and other image manipulations,
  - (1.7) includes several neural network types, including multi-layer perceptrons and convolutional neural networks, and
  - (1.8) is open to generalization to more architecture types;
- (2) Explore the trade-offs between a physically constrained NN and a CNN for a specific, but generalizable, problem in the geosciences: the classification of crevasse types that form during a glacier surge;
- (3) Create an example of an ML approach that combines the advantages of a physically constrained, classic NN with those of a CNN, thereby creating a physically constrained NN with a combined architecture; and
- (4) Apply the resultant NN to a time series of WorldView images to derive information on the evolution of the surge in an Arctic glacier system, the Negribreen Glacier System.

4.3. Terminology

We use the following terms to distinguish ML approaches and NNs in this paper; they are explained further in sections (6), (7), (8) and (9).

- (1) The connectionist-geostatistical classification method [44] is the original approach that combines a physically driven geostatistical analysis of an input data set and a neural network into an ML approach. As described in [45], the geostatistical analysis or characterization can take several different forms; in any case, the output of the geostatistical analysis is used as input for the neural network. Examples of geostatistical analysis include (a) the experimental variogram, a discrete function, and (b) values of geostatistical characterization parameters. The neural network type applied in most of our studies is a form of multi-layer perceptron (MLP) with back-propagation of errors [44,45,46,62] (see section (6)).
- (2) The acronym VarioMLP is used for the connectionist-geostatistical NN type applied in this paper; it employs a four-directional experimental vario function to activate the input neurons of an MLP with back-propagation of errors (see section (6)).
- (3) The term Convolutional Neural Network (CNN) stands for a specific class of neural networks that realize the concept of "deep learning" [47,59].
- (4) ResNet-18 is the acronym for the specific CNN used in this paper [41,83] (see section (8)).
- (5) The acronym VarioCNN will be used for the combined new method that integrates VarioMLP and ResNet-18 into a unique, physically constrained ML approach (see section (9)).
- (6) Specific architectures of an NN are identified by adding information in square brackets. For example, VarioMLP[18, 4, (5, 2)] identifies a VarioMLP where 18 is the number of steps in the vario function (for each direction) and 4 the number of directions of vario-function calculations, yielding 72 nodes in the input layer, and (5, 2) are the factors in the number of nodes of the hidden layers; here an MLP with two hidden layers is used, where the first layer includes 72 × 5 nodes and the second layer 72 × 2 nodes (see section (7)).

  More generally, VarioMLP$[n_s, n_d, (f_1, \ldots, f_k)]$ identifies a VarioMLP where $n_s$ is the number of steps in the vario function (for each of $n_d$ directions) and $f_i$ is the factor in the number of nodes in hidden layer $i$; here an MLP with $k$ hidden layers is used, where layer $i$ has $f_i \cdot n_s \cdot n_d$ nodes for $i = 1, \ldots, k$ (see section (7)).
- (7) GEOCLASS-image is the software system utilized to create the neural networks and labeled data sets referred to in this paper and to carry out the classifications of crevasse types during the surge of the NGS, Svalbard [138].
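The bracket notation of item (6) maps directly onto layer sizes. The following Python sketch computes them for a fully connected VarioMLP; the function names are hypothetical, the six-node output layer assumes the four crevasse classes plus the two additional classes of section (7.2), and the parameter count assumes full connectivity with one bias per node.

```python
def vario_mlp_layer_sizes(n_steps, n_directions, hidden_factors, n_classes):
    """Node counts per layer for VarioMLP[n_steps, n_directions, hidden_factors].

    Hypothetical helper: the input layer has n_steps * n_directions nodes,
    hidden layer i has hidden_factors[i] times as many nodes, and the output
    layer has one node per surface class.
    """
    n_input = n_steps * n_directions
    hidden = [factor * n_input for factor in hidden_factors]
    return [n_input, *hidden, n_classes]


def n_parameters(layer_sizes):
    """Weights plus biases of a fully connected network (an assumption)."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


sizes = vario_mlp_layer_sizes(18, 4, (5, 2), n_classes=6)
print(sizes)                # [72, 360, 144, 6]
print(n_parameters(sizes))  # 79134
```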

5. Approach Component: Image Classification and Data Sources

5.1. Image Classification Challenges and Approaches

The data analysis challenge is a type of image classification, more specifically, image segmentation. Different types of image classification are the following: (1) each image is associated with a class; (2) features are extracted from images (an often-analyzed example is the detection of moving features between consecutive images, e.g., planes at terminals [84]); (3) image classification is applied to videography, i.e., time series of images (e.g., [44]) or satellite videography [84]; and (4) a single image is segmented into areas of several different classes, resulting in thematic maps. Early applications in the geosciences, such as sea-ice classification and land-cover classification, fall into this category. Typically, the classification is applied as a moving-window operator (i.e., to subimages, which can overlap). From a classification standpoint, all of these image arrangements can be treated the same way, with different data handling utilities. Challenges in this context lie in the specifics of the observational data, which may include remotely sensed imagery from any tier of observation (satellite, airborne, subaerial, ground), in the specific spatial and spectral resolution of the sensor, in signal-background separation, and in other characteristics of the imagery. The problem treated in this study is a combination of (4) and (1), applied to a time series of satellite image data of the glacier surface. The classification will be applied to each image individually (i.e., without providing information on the previous image).
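A moving-window operator of the kind described above can be sketched as a generator of pixel bounds. In this Python sketch, the function name and the stride parameters are illustrative assumptions rather than the GEOCLASS-image interface; a stride smaller than the window size yields overlapping subimages, and windows that would cross the image edge are skipped.

```python
from typing import Iterator, Tuple


def split_windows(n_rows: int, n_cols: int, win_rows: int, win_cols: int,
                  stride_rows: int, stride_cols: int
                  ) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (row0, col0, row1, col1) pixel bounds of moving-window subimages.

    Windows lie fully inside the image; a stride smaller than the window
    size gives overlapping subimages, a stride equal to it tiles the image.
    """
    for r in range(0, n_rows - win_rows + 1, stride_rows):
        for c in range(0, n_cols - win_cols + 1, stride_cols):
            yield r, c, r + win_rows, c + win_cols


tiles = list(split_windows(1000, 1000, 224, 224, 224, 224))
overlapping = list(split_windows(1000, 1000, 224, 224, 112, 112))
print(len(tiles), len(overlapping))  # 16 49
print(tiles[-1])                     # (672, 672, 896, 896)
```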

Because a surge in an Arctic glacier extends over several years, typically 7-10 [

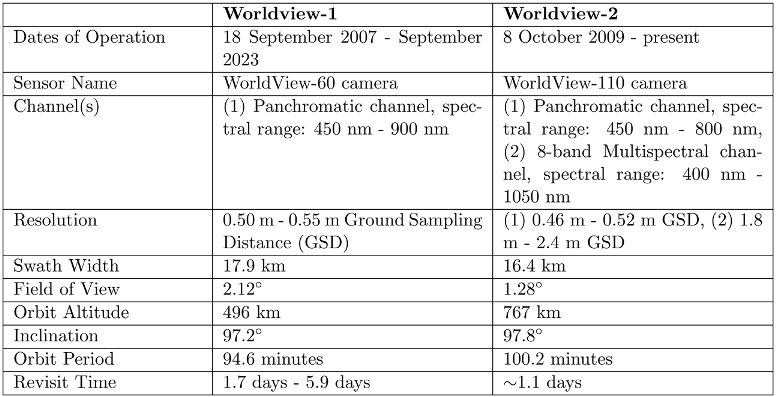

139], data from several different satellite sources need to be integrated in an analysis. Here, we utilize Maxar (formerly DigitalGlobe) data from the WorldView-1 and WorldView-2 satellites. Both satellites carry high-resolution multispectral optical image sensors, but with different resolutions and spectral channels (see,

Table 1). Thus, a specific challenge lies in the identification of subimages for training that work for both satellite data types.

In order to facilitate application of our classification system GEOCLASS-image to data from different sources, a large range of data handling utility modules is included (see the software description). The software is designed to be generalizable to several data types, both (a) for different studies that each use a single data type and (b) for integrating data from several sources into one classification.

The classification will be trained using a set of labeled images. A challenge in a spatially based classification, but also in any image classification that uses subimages (or split images), is the selection of a subimage size that is large enough to include several repetitions of the crevasse pattern, but also small enough to be sufficiently homogeneous to be assigned to a single class.

5.2. Data Sources and Processing

The analysis in this paper utilizes Maxar WorldView-1 and WorldView-2 optical satellite image data. WorldView-1, WorldView-2 and WorldView-3 data are a widely used type of commercial satellite imagery [140]; hence, the classification approach described in this paper is relevant to large parts of the Earth science community. For example, an Arctic-wide mosaic and DEM have been created from WorldView data [141]. [142] use a random forest classification applied to WorldView-2 imagery to identify tree species in the forests of Austria at high resolution. WorldView data are used heavily as the data source for classifications by the land-cover/land-use and vegetation ecology communities (e.g., [94,95,97,142,143,144,145,146,147,148,149]).

The WorldView-1, -2 and -3 satellites, owned and operated by Maxar (formerly DigitalGlobe), provide submeter optical imagery of much of the cryosphere [141], including all of Negribreen. WorldView-1 carries a single high-resolution optical imager called the WorldView 60 camera, which has a single panchromatic channel with a spectral range of 0.45–0.90 μm. WorldView 60 is a pushbroom sensor operating in a swath of 17.9 km with 0.5 m resolution at nadir, degrading to 0.55 m resolution at 20° off nadir (see Table 1). WorldView-2 also carries a single high-resolution optical imager, called the WorldView 110 camera, but has two operational channels. The first is a panchromatic channel with a spectral range of 0.45–0.80 μm, while the second is an 8-band multispectral channel ranging from 0.4 to 1.05 μm. WorldView 110 is also a pushbroom sensor, with a swath width of 16.4 km and 0.46 m resolution at nadir, degrading to 0.52 m resolution off nadir. A full comparison of the WorldView-1 and WorldView-2 specifications is given in Table 1.

In this analysis, we utilize panchromatic imagery from WorldView-1 (launched 18 September 2007, decommissioned September 2023 [150]) and WorldView-2 (launched 8 October 2009, operational as of 2024 [151]) to analyze the NGS surge from its start in 2016 through 2019. Data from the panchromatic channel will be utilized for the classification approaches in this paper, because it has the highest spatial resolution for each satellite (0.45 m pixel size for WorldView-1 data and 0.42 m for WorldView-2 data) and thus retains the most information on spatial properties of the ice surface. While we will not employ data from the other spectral channels, we have described statistical and geostatistical image classification approaches for multispectral data elsewhere [62].

The VarioMLP has also been applied to classify Negribreen crevasse provinces based on Planet SkySat data [35,117] (for a data description, see [152,153]). Other commonly used satellite imagers include Landsat [154] and Sentinel-2 [155].

Processing. Images were selected w.r.t spatial coverage, temporal coverage and lack of obfuscation. The GEOCLASS-image system includes a tool for evaluation of the area of overlap of a given WorldView image with the area of interest, as outlined by the polygon encompassing the NGS region (

Figure 1a). Only images with a 50% or more overlap with the NGS area of interest were used for analysis. To avoid obfuscation, (1) images with a high percentage cloud cover over the area were avoided, as were images with a deep snow cover on the glacier, which would obliterate the crevasse patterns. Thus winter images were rejected. Applying these criteria, 11 high-quality images from spring and summer 2016-2018 were selected from several hundred WorldView data sets (

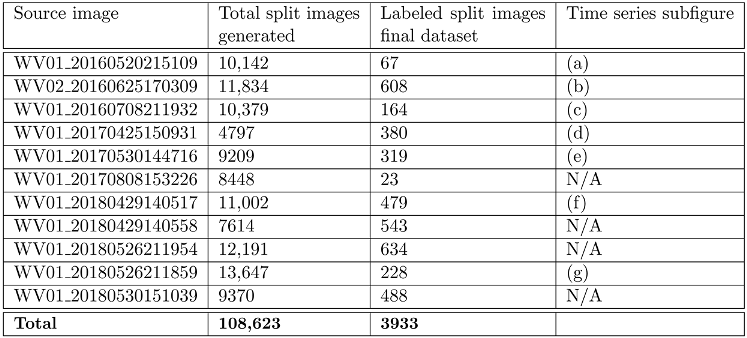

Table 2). All 11 images were used for creation of the labeled training data sets of split images, whereas 7 are used in the time-series analysis (leaving out images that are too close in time to other images already in the time series).
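The 50% overlap criterion can be evaluated with elementary polygon geometry. The following Python sketch is not the GEOCLASS-image tool; it assumes that the image footprint is a convex polygon (e.g., the four corners of a scene) given in the same projected coordinates as the area-of-interest polygon, and that overlap is measured as the fraction of the area of interest covered by the image. It clips the area of interest against the footprint with the Sutherland-Hodgman algorithm and compares areas with the shoelace formula; the coordinates in the usage example are made up.

```python
def signed_area(poly):
    """Shoelace formula; positive for counter-clockwise vertex order."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        s += x1 * y2 - x2 * y1
    return s / 2.0


def clip_polygon(subject, clip):
    """Sutherland-Hodgman: clip `subject` against a convex CCW polygon `clip`."""
    def inside(p, a, b):
        # True if p lies left of, or on, the directed edge a -> b.
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):
        # Intersection of segment p-q with the line through a and b.
        (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p, q, a, b
        den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
        t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / den
        return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))

    output = list(subject)
    for a, b in zip(clip, clip[1:] + clip[:1]):
        if not output:
            break
        polygon, output = output, []
        prev = polygon[-1]
        for cur in polygon:
            if inside(cur, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, cur, a, b))
                output.append(cur)
            elif inside(prev, a, b):
                output.append(intersect(prev, cur, a, b))
            prev = cur
    return output


def overlap_fraction(aoi, footprint):
    """Fraction of the area of interest covered by a convex image footprint."""
    if signed_area(footprint) < 0:
        footprint = footprint[::-1]  # make the clip polygon counter-clockwise
    covered = clip_polygon(aoi, footprint)
    return abs(signed_area(covered)) / abs(signed_area(aoi))


aoi = [(0, 0), (10, 0), (10, 10), (0, 10)]
footprint = [(5, -5), (15, -5), (15, 15), (5, 15)]  # covers the eastern half
frac = overlap_fraction(aoi, footprint)
print(frac, frac >= 0.5)  # 0.5 True
```

In a production workflow, a GIS geometry library would typically handle non-convex footprints and coordinate reference systems.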

The pixel intensity of the source geotiff images was normalized to the 95th percentile and compressed to an 8-bit range. This was done because, although the WorldView-1 and WorldView-2 sensors allow for 11-bit depth, only a small number of pixels in each image actually reached those intensity values, the rest being several orders of magnitude below. Normalizing to an 8-bit range made data processing during training and testing much faster and more efficient, and thresholding the highest-intensity pixels made the resulting geotiff images, and the split images extracted from them, much easier for the human eye to view, a crucial component of the labeling process.
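The percentile normalization can be written in a few lines of NumPy. This is a sketch under the assumption that intensities are non-negative and scaled linearly from zero, so that the 95th percentile maps to 255 and brighter pixels are clipped; the text does not specify the scaling beyond that, and the function name is hypothetical.

```python
import numpy as np


def normalize_to_8bit(image, percentile=95.0):
    """Linearly scale so that `percentile` maps to 255; clip brighter pixels.

    Assumes non-negative intensities (e.g., 11-bit values in 0..2047).
    """
    image = np.asarray(image, dtype=np.float64)
    ref = np.percentile(image, percentile)
    if ref <= 0:  # blank image: nothing to scale
        return np.zeros(image.shape, dtype=np.uint8)
    scaled = np.clip(image / ref * 255.0, 0.0, 255.0)
    return np.rint(scaled).astype(np.uint8)


out = normalize_to_8bit(np.arange(100))
print(out.dtype, out.min(), out.max())  # uint8 0 255
```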

Custom software was written to extract the coordinates, in both pixel space and UTM space, of each split image within a given set of geotiff images that falls entirely within the NGS area of interest. These coordinates, along with fields for a class label, class prediction, confidence, and an enumerator referencing the source geotiff image, were stored in a large table, together with metadata containing the file paths, the affine transforms to convert between pixel and UTM space, and the class enumerations. Thus, this pipeline produces a standardized and efficient split-image data set format, which can be utilized by the classification model, visualization tools, labeling tools and utility tools to reproducibly extract the split images from their corresponding source images at runtime. For the 11 source geotiff images selected (Table 2), a total of 108,623 split images were extracted using this pipeline; a breakdown of the number of split images from each source geotiff is also given in Table 2.
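One row of such a split-image table might look as follows. The class and field names and the example coefficients are hypothetical; the six coefficients follow the common convention x = a·col + b·row + c, y = d·col + e·row + f (used, for example, by the `affine` package on which rasterio builds), and the example assumes a north-up image with 0.5 m pixels and a made-up UTM origin.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Affine:
    """Pixel-to-UTM transform: x = a*col + b*row + c, y = d*col + e*row + f."""
    a: float
    b: float
    c: float
    d: float
    e: float
    f: float

    def pixel_to_utm(self, col: float, row: float) -> Tuple[float, float]:
        return (self.a * col + self.b * row + self.c,
                self.d * col + self.e * row + self.f)


@dataclass
class SplitImageRecord:
    """One row of a hypothetical split-image table."""
    source_index: int                   # enumerator of the source geotiff
    row0: int                           # pixel bounds: upper-left corner ...
    col0: int
    row1: int                           # ... and lower-right corner (exclusive)
    col1: int
    label: Optional[int] = None         # class assigned by the glaciologist
    prediction: Optional[int] = None    # class predicted by the NN
    confidence: Optional[float] = None  # maximum softmax probability

    def utm_bounds(self, transform: Affine) -> Tuple[float, float, float, float]:
        """UTM coordinates of the upper-left and lower-right corners."""
        x_ul, y_ul = transform.pixel_to_utm(self.col0, self.row0)
        x_lr, y_lr = transform.pixel_to_utm(self.col1, self.row1)
        return x_ul, y_ul, x_lr, y_lr


transform = Affine(0.5, 0.0, 500000.0, 0.0, -0.5, 8700000.0)
record = SplitImageRecord(source_index=0, row0=0, col0=0, row1=224, col1=224)
print(record.utm_bounds(transform))  # (500000.0, 8700000.0, 500112.0, 8699888.0)
```

Storing only bounds and an index, rather than pixel data, is what allows split images to be re-extracted reproducibly at runtime.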

6. The Connectionist-Geostatistical Classification Method

The connectionist-geostatistical classification method [44,45] integrates and interleaves physical knowledge, spatial statistical analysis and computational components at several levels. The approach includes the following concepts:

- (1) The idea of using spatial classification to extract features from image data
- (2) The idea of using geostatistical parameters to pre-process the imagery
- (3) The vario function and residual vario function
- (4) Creation of input data to activate the input-layer neurons of the NN
- (5) The feed-forward multi-layer perceptron with back-propagation of errors

The idea of the connectionist-geostatistical classification method is to utilize geostatistical parameters to pre-process the input image data, thereby reducing the complexity of the NN required to identify spatial structures that are surface signatures of cryospheric processes. The relationship between glacial acceleration, crevassing and the resultant spatial structures reflected in imagery has been explained in section (3.3) and in more detail in [45]. In this section, we describe the mathematical and computational ingredients of this approach in the form employed in VarioMLP, the type of connectionist-geostatistical classification utilized as a component of VarioCNN.

6.1. Geostatistical Processing of the Input Image Data

6.1.1. Spatial Homogeneity

Depending on the type of image classification problem at hand, an input image can be a video frame, a photograph, a subset of a video frame or photograph, or a subimage of a large image such as a satellite image (termed split-image here). Split-images are created from satellite images by a moving-window process. The goal is to associate each split-image with a surface class, here a crevasse class, using the classification method. Because the entire classification is a moving-window operation applied to a satellite image, it yields a segmentation of the area of the satellite image into crevasse classes, in other words, a thematic map of structural glaciologic provinces. Similarly, a time-dependent segmentation of a video stream of a glacier will result in a mapping of surface classes.

To allow for characterization and classification of the spatial structures captured, the optimal size of a subimage (split-image) is determined as follows: a feature type needs to repeat approximately three times in the split-image, and the split-image needs to be spatially homogeneous with respect to surface structure, here, crevasse type. These two criteria will not be met exactly across an entire glacier region; thus, a split-image size needs to be selected that meets the criteria sufficiently often to make the classification operational. For experiments with only VarioMLP, split-images of 201 pixels by 268 pixels were used, which follow the (3-4-5) rectangle convention and are approximately 92 m by 123 m for WorldView-1 data. An additional constraint is that the CNN structure (ResNet-18) requires input images of 224 by 224 pixels. For WorldView data and Negribreen surge crevasses, this requirement can be met; however, it limits the generalizability of the approach. The entire training was rerun for the combined architecture of VarioCNN using square images.

6.1.2. Vario Functions

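In order to characterize the spatial surface structure in a given area, recorded in an image or subimage, we calculate vario functions, defined as follows:

$$\gamma(h) = \frac{1}{2n}\sum_{i=1}^{n}\left[z(x_i) - z(x_i + h)\right]^2 \tag{1}$$

for pairs of points $x_i, x_i + h \in D$, where $D$ is a region in $\mathbb{R}^1$ (case of profile data) or $\mathbb{R}^2$ (case of image data), $z(x)$ is the data value (here, the image intensity) at location $x$, and $n$ is the number of pairs separated by $h$; the distance value $h$ is also termed "lag". The function $\gamma$ is called the first-order vario function. This function always exists and has a finite value.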

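The residual vario function is often more useful to analyze roughness in situations where a regional trend or a local drift underlies the data. Using the mean difference

$$\bar{d}(h) = \frac{1}{n}\sum_{i=1}^{n}\left[z(x_i) - z(x_i + h)\right] \tag{2}$$

the residual vario function is defined as:

$$\gamma_{\text{res}}(h) = \frac{1}{2n}\sum_{i=1}^{n}\left[\left(z(x_i) - z(x_i + h)\right) - \bar{d}(h)\right]^2 \tag{3}$$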

First-order vario functions are formally equivalent to the variograms of geostatistics, but are introduced in a discrete mathematics framework that facilitates easy numerical implementation as well as generalization to higher order [45].

The variogram is defined for a data set that may be considered a realization of a spatial random function satisfying the intrinsic hypothesis (see Matheron [156,157]), for which generalization to higher order is difficult because of the statistical assumptions that need to be met. Equation (1) corresponds to the statistical second-order moment and Equation (2) to the first-order moment. Residual vario functions work best for data that contain an underlying trend. The second-order vario function and residual vario function can also be used (see Table 3). Numerical outputs of the first-order vario function have been used in the original connectionist-geostatistical classification in [44]; they correspond to the experimental variogram values.
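For a regularly sampled profile, the first-order vario function of Equation (1) and a residual vario function can be computed directly. In this Python sketch, the lag h is an integer number of samples, the function names are hypothetical, and the residual form used here (the mean difference at lag h is subtracted before squaring) is an assumption about the exact definition.

```python
def _differences(z, h):
    """Differences z(x_i) - z(x_i + h) for all pairs separated by lag h (in samples)."""
    if not 0 < h < len(z):
        raise ValueError("lag must satisfy 0 < h < len(z)")
    return [z[i] - z[i + h] for i in range(len(z) - h)]


def vario(z, h):
    """First-order vario function: half the mean squared difference at lag h."""
    d = _differences(z, h)
    return sum(di * di for di in d) / (2 * len(d))


def residual_vario(z, h):
    """Residual vario function (assumed form): the mean difference at lag h,
    which reflects a linear trend, is removed before squaring."""
    d = _differences(z, h)
    mean_d = sum(d) / len(d)
    return sum((di - mean_d) ** 2 for di in d) / (2 * len(d))


alternating = [0, 1, 0, 1, 0, 1]
print(vario(alternating, 1), vario(alternating, 2))  # 0.5 0.0

ramp = [0, 2, 4, 6, 8, 10]  # pure linear trend
print(vario(ramp, 2), residual_vario(ramp, 2))  # 8.0 0.0
```

The ramp shows why the residual form helps when a trend underlies the data: the plain vario function grows with the trend, whereas the residual version does not.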

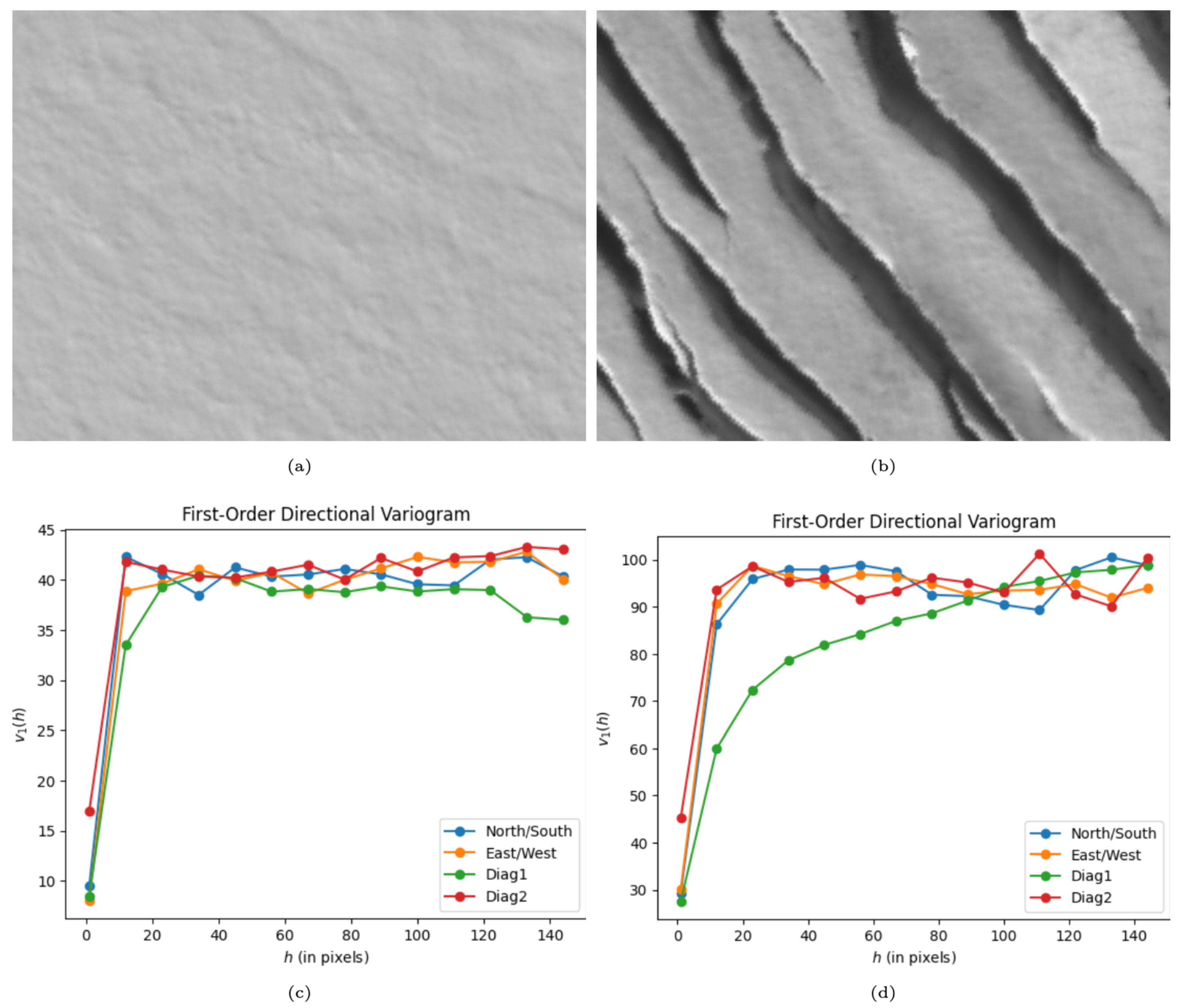

In VarioMLP, first-order vario functions are calculated by sampling along four directions in each image, parallel to the sides and along the two diagonals (see Figure 2). An efficient sampling algorithm makes use of the matrix structure of the 2D image. The sampling algorithm in [44] utilizes images of relative sizes (3-4-5), where 3 and 4 are the relative lengths of the split-image sides and 5 that of the diagonal (in a 3-4-5 rectangle, the points $(3j, 4j)$ on the diagonal are pixel positions at integer distances $5j$). However, this convention cannot be transferred to training of the combined architecture, which requires square images.

The discretization of the vario function is determined by the lag value $h$ in pixels. In the final implementation of the algorithm, the lag value is determined such that 18 lag steps exhaust 80% of the image size (224 pixels). The values $\gamma(h_j)$ become the activation values for the input layer of the MLP for lag values $h_j$, $j = 1, \ldots, n_s$; in our final structure, $n_s = 18$. Accounting for the 4 directions of directional vario functions calculated for each split image, we have a matrix of input values

$$\Gamma = \left(\gamma_d(h_j)\right)_{d = 1, \ldots, n_d;\ j = 1, \ldots, n_s},$$

where $\gamma_d$ denotes the vario function in direction $d$. With $n_d = 4$ for the number of directions, the number of input values is $n_s \cdot n_d = 18 \cdot 4 = 72$.
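The four-directional input vector can be sketched with NumPy for a square split image. The direction vectors, the function names and the rounding of the lag to whole pixels are assumptions; note that on a square pixel grid, a diagonal offset of k pixels covers √2 times the ground distance of an axis-parallel offset of k pixels.

```python
import numpy as np

# Pixel step vectors (row, col): along rows, along columns, and the two
# diagonals. This choice of directions is an assumption for square images.
DIRECTIONS = ((0, 1), (1, 0), (1, 1), (1, -1))


def directional_vario(img, step, lag_px, n_lags):
    """First-order vario function of a 2D image along one direction.

    For lag k, all pixel pairs offset by k * lag_px * step are compared;
    the result is half the mean squared intensity difference.
    """
    img = np.asarray(img, dtype=np.float64)
    rows, cols = img.shape
    values = np.empty(n_lags)
    for k in range(1, n_lags + 1):
        dr, dc = k * lag_px * step[0], k * lag_px * step[1]
        # Index ranges for which both pixels of a pair lie inside the image.
        r0, r1 = max(0, -dr), rows - max(0, dr)
        c0, c1 = max(0, -dc), cols - max(0, dc)
        if r1 <= r0 or c1 <= c0:
            raise ValueError("lag exceeds image size")
        a = img[r0:r1, c0:c1]
        b = img[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
        values[k - 1] = 0.5 * np.mean((a - b) ** 2)
    return values


def vario_features(img, n_lags=18, coverage=0.8):
    """Concatenate directional vario functions into one input vector.

    The lag is chosen so that n_lags steps span about `coverage` of the
    image size, e.g. round(0.8 * 224 / 18) = 10 pixels for a 224 x 224 image.
    """
    lag_px = max(1, round(coverage * min(np.shape(img)) / n_lags))
    return np.concatenate(
        [directional_vario(img, s, lag_px, n_lags) for s in DIRECTIONS])


rng = np.random.default_rng(0)
features = vario_features(rng.random((224, 224)))
print(features.shape)  # (72,)
```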

6.2. NN Architecture: Multi-Layer Perceptron (MLP)

The NN structure of the connectionist-geostatistical classification is a multi-layer perceptron with back-propagation of errors (MLP-BP or simply MLP). The MLP has one input node per vario-function value, in the final VarioMLP structure 72 input nodes, accounting for 18 lag steps and 4 directions. MLPs have been found to be useful NN types for the solution of this type of classification problem.

The number of nodes (neurons) in the output layer has to equal the number of surface classes, here crevasse classes. In our experiments, this number is 6: the four crevasse classes plus one class for undisturbed snow/ice and one rest class for "other" surfaces (see section (7.2)). Larger numbers of crevasse classes have been used, ranging up to 18; in [46], we describe a classification with up to 13 crevasse classes.

This leaves the number and size of internal, hidden layers as variables of the NN architecture that will be determined experimentally (see section (7.7.2)). The original work in [

44] uses a single hidden layer, in fully connected or partly connected architectures. Here we experiment with two or three internal layers.
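A forward pass through VarioMLP[18, 4, (5, 2)] can be sketched in NumPy. The ReLU activations in the hidden layers, the He-style random initialization and the six output classes are assumptions for illustration; the text fixes only the layer structure, and training by back-propagation is not shown.

```python
import numpy as np


def init_mlp(layer_sizes, seed=0):
    """Random weights and zero biases for a fully connected MLP."""
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        params.append((weights, np.zeros(n_out)))
    return params


def mlp_forward(params, x):
    """Class probabilities for a batch of input vectors x (shape: batch x 72)."""
    h = np.asarray(x, dtype=np.float64)
    for weights, bias in params[:-1]:
        h = np.maximum(0.0, h @ weights + bias)   # hidden layers, ReLU
    weights, bias = params[-1]
    logits = h @ weights + bias
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)   # softmax over classes


params = init_mlp([72, 360, 144, 6])
probs = mlp_forward(params, np.random.default_rng(1).random((5, 72)))
print(probs.shape)  # (5, 6)
```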

7. Image Labeling and Training Approach (for VarioMLP and ResNet-18)

7.1. Training Approach

The training approach reflects the goal of creating a physically constrained NN by combining knowledge of glaciological processes and Earth observation technology with ML methods at every step. In the last section, we saw that the selection of training-image sizes is controlled by a requirement of spatial homogeneity, by constraints associated with the spatial resolution of the satellite imagery, and by the spacing of crevasses on the glacier surface, which results from the glacial movement and acceleration that we aim to analyze. Training is supervised; as such, it is an optimization problem for the model's internal parameters.

7.2. Crevasse Classes

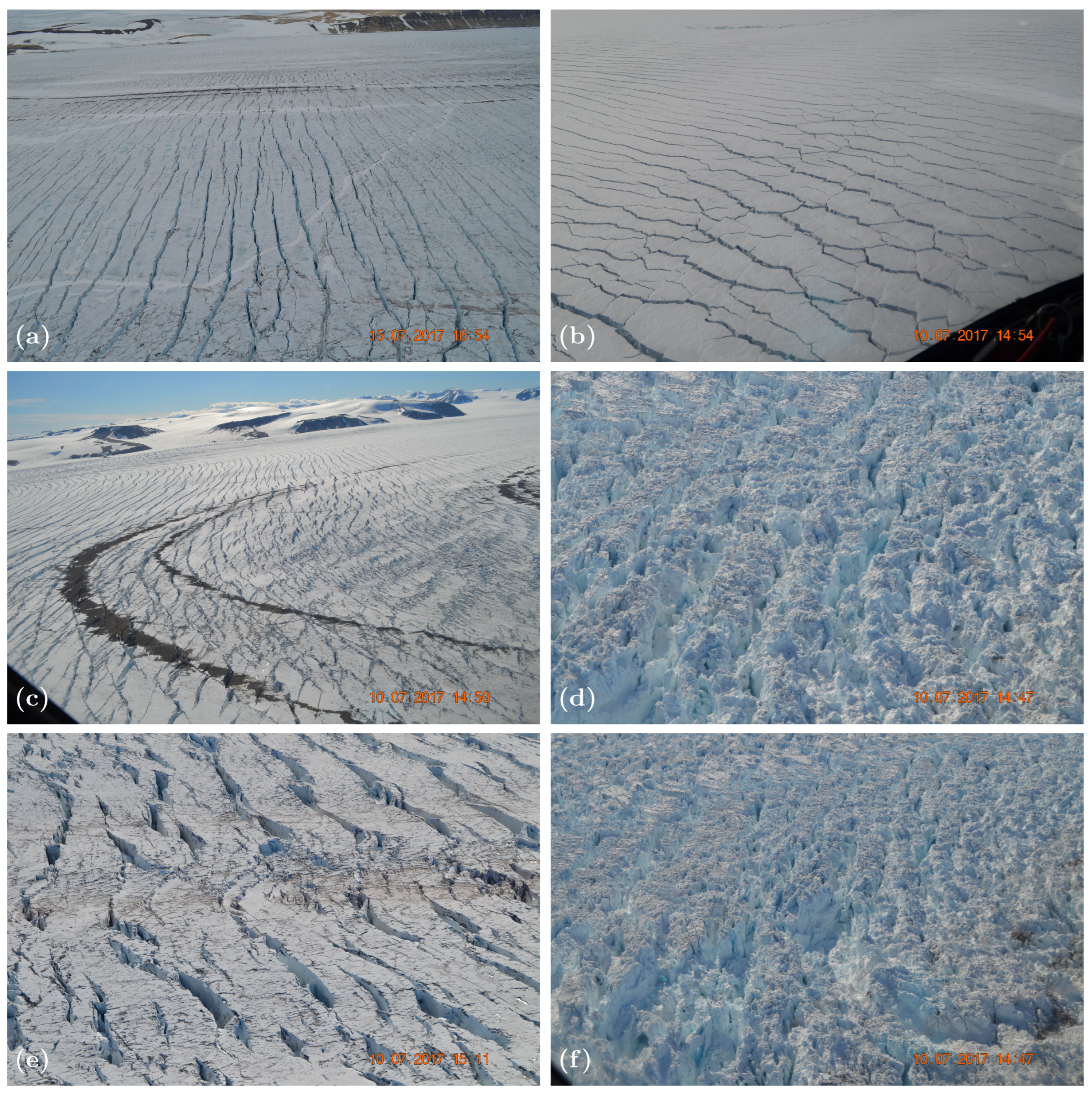

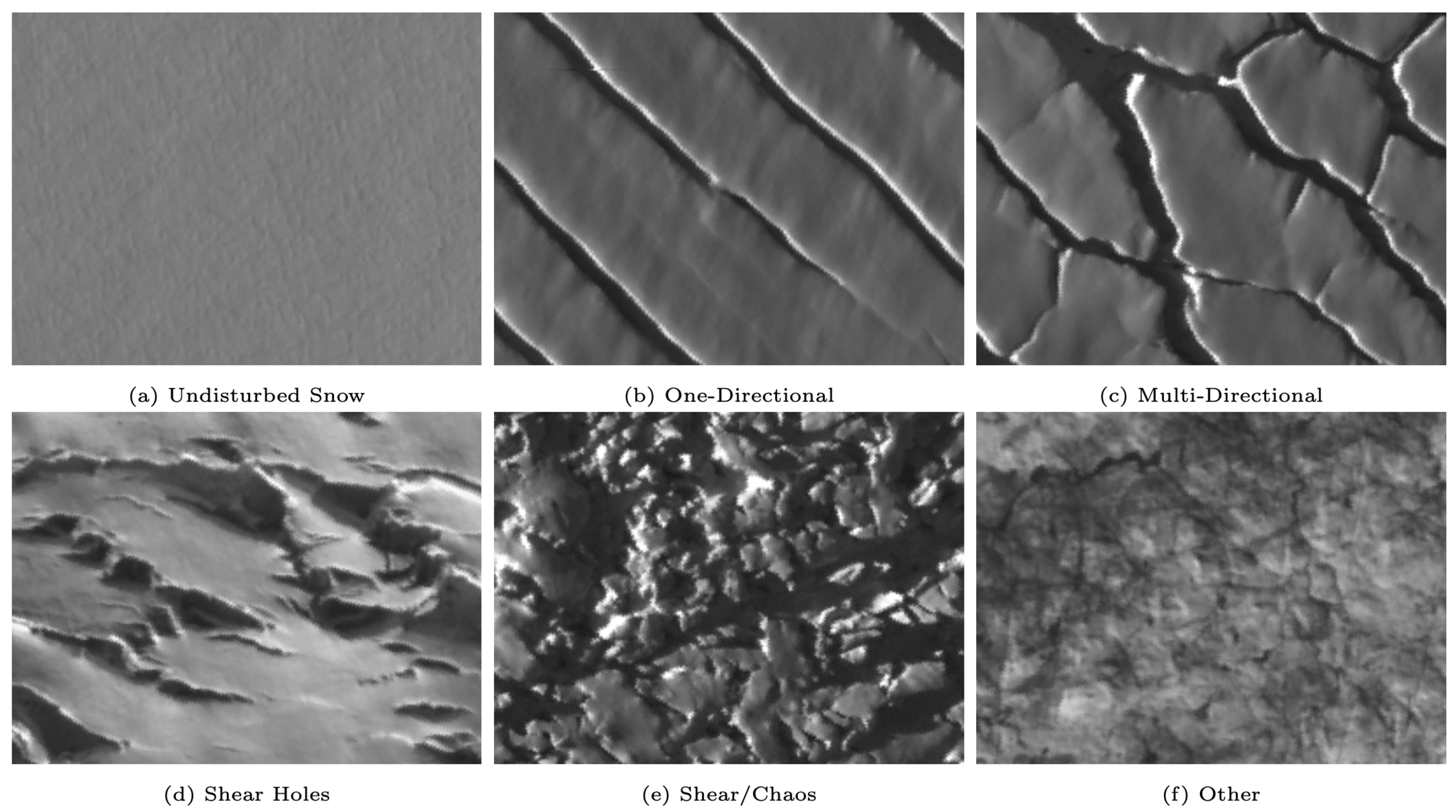

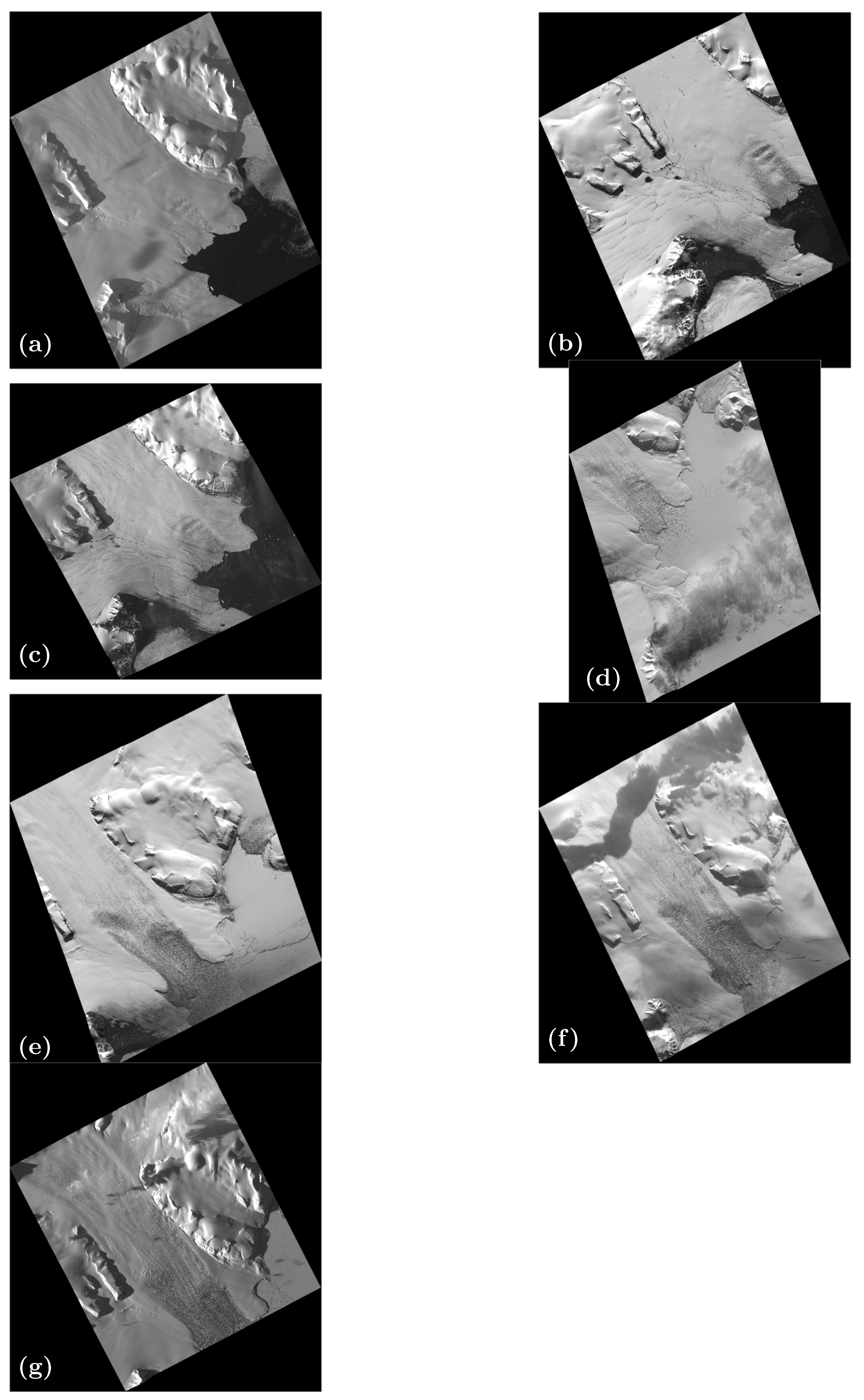

Crevasse classes are selected by an expert, based on structural glaciology (section (3.3)). Because a main objective of this paper is the integration of a physically constrained NN and a CNN, we utilize only four basic crevasse classes: (a) one-directional crevasses, (b) multi-directional crevasses, (c) shear crevasses, and (d) chaos crevasses, or shear-chaos crevasses. The crevasse types associated with these classes are illustrated in Figure 3. Crevasse types (a), (b) and (c) are associated with basic deformation matrices [126]: the one-directional crevasse type results from an extension in one direction (Figure 3a). The multi-directional (including two-directional) crevasse type results from a deformation with more than one stress axis (Figure 3b); it can also result from two deformation processes that affect the ice in sequence. The shear crevasse type results from shear, a deformation type that typically occurs where fast-moving ice borders slow-moving ice. In the case of a surge, the ice of one glacier (Negribreen) accelerates, while the ice of an adjacent glacier (e.g., Ordonnansbreen) continues to flow at normal, much slower speeds (Figure 3c). Depending on the spatial and temporal velocity gradient, shear crevasses can take different appearances (Figure 3c and Figure 3d). Transportation, weathering and the interaction of several deformation processes can lead to complex ice-surface and near-surface structures in which the signatures of individual processes can no longer be distinguished; these are summarized as the "chaos" crevasse class (Figure 3d). In some areas, the signature of shear deformation is still evident in the chaos crevasse fields (Figure 3f), but separation in an image classification process may be too difficult; thus, the class is summarized as chaos/shear-chaos. Two additional classes need to be added to each classification, one for undisturbed snow/ice and a rest class for "other" surfaces, which can include moraines, rock avalanches, subimages that include both snow/ice and rock surfaces, and indiscernible images, to limit misclassification of the better-defined four crevasse classes. A rendering of representative examples of split images, subselected from WorldView satellite imagery, is seen in Figure 4. The images have a size of 201 (= 3 · 67) pixels by 268 (= 4 · 67) pixels, i.e., they follow the (3-4-5) size rule.

7.3. Image Labeling

A second main objective of this paper is the derivation of a labeled training data set for the problem of crevasse classification from satellite imagery. With this objective, we address the problem that application of ML in the geosciences, and specifically the cryospheric sciences, has been hampered by the lack of labeled training data sets, as identified by authors working in the field (e.g., [43,90,91]) and described in more detail in section (2).

To initiate the training, sets of split-images for each class are identified and selected by the structural glaciologist. In our experiments, we found that several tens of example images per class are sufficient for an initial training run of VarioMLP.

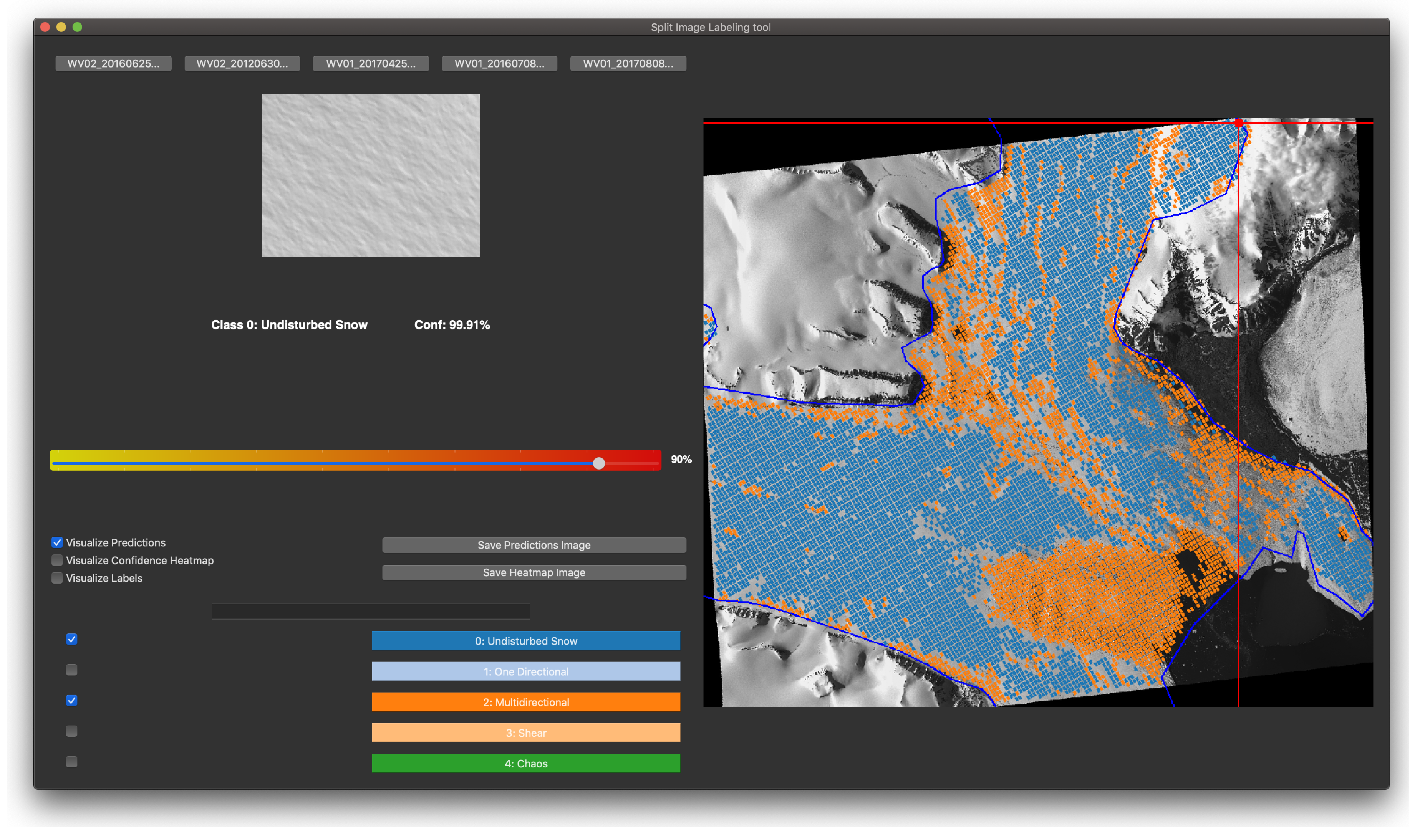

Technically, image labeling is carried out using the Split Image Explorer Tool, visualized in Figure 5 and described in more detail in [138]. Individual split images can be selected from the WorldView image (optionally within an outlined polygonal area of interest that contains the glacier area), viewed enlarged at the top left, and associated with a class. The association can be (1) performed initially by the glaciologist, (2) displayed as the result of the NN classification, or (3) overwritten (accepted or rejected) in a control pass in the training loop (see section (7.6)). A slider in the middle of the left side of the explorer tool allows confidence to be applied as a visualization filter (only images classified with a confidence level exceeding the user-selected confidence threshold are displayed in color).

7.4. Data Handling and Feature Engineering

Feature engineering is the design of the input for the neural network. For robust results, it is important that the identification of a crevasse type is independent of the orientation and view angle of the satellite relative to features on the ground. Directional bias is removed by calculating vario functions in several different directions for each split-image.

Prior to extraction of split-images, the satellite image needs to be oriented in a geographic or rectangular projection framework that facilitates output of the final classification in the form of a thematic map of crevasse provinces. Raw satellite imagery is typically collected along orbits and constrained by the view angle of the observatory, which is fixed for some imagers but adjustable or sweeping for most (including WorldView). To map larger areas from single or multiple satellite images, utility functions for image projection and mosaicking are implemented as part of the GEOCLASS-image system. For illustration, the reader may compare the different sizes and orientations of the input imagery shown in Figure 6.

Data from the panchromatic channel of WorldView-1 and WorldView-2 are utilized, because the classification principle is a spatial classification. In the more common form of multivariate statistical classification, data from several spectral channels are used. Our study combines imagery from two different image systems, WorldView-1 and WorldView-2, which result in imagery of somewhat different pixel size and resolution (0.45 m for WorldView-1 and 0.42 m for WorldView-2, see section (5.2)). A utility function in GEOCLASS-image facilitates simultaneous analysis and classification of imagery from both satellite types.

Application of the vario function to typical images from the classes of (1) undisturbed snow and ice surfaces and (2) one-directional crevasses, seen in Figure 2, illustrates how the NN can separate these classes based on the vario function values for different directions and distances. First, the maximum of the resultant vario function values is much lower for undisturbed surfaces than for crevassed surfaces (compare the vertical axes in Figure 2c and Figure 2d). Second, anisotropic behavior of the set of directional vario functions is typical for one-directional crevasses (Figure 2b,d): the vario function in the direction near-parallel to the crevasses does not reach the sill (green in Figure 2d), whereas the other three directional vario functions exhibit a typical wavy pattern resulting from washed-out cross-correlation, with a spacing that depends on the angle between the crevasse orientation and the direction of calculation.

7.5. Criteria for Evaluation of Training Success

We use the term intrinsic criteria for quantitative, computational criteria (cross-entropy measure of training loss, confidence of the classification result, co-occurrence matrix) and the term extrinsic criteria for glaciological criteria, which are typically based on airborne field observations of the glacier system during the surge and on additional expert knowledge of the evolution of crevasse types during a surge [16,18,20,127,128,158]. The application of extrinsic criteria is best explained in an applied example of image labeling and in the geophysical interpretation (see sections (7.6) and (11)).

7.5.1. Softmax Function

A softmax function is used to convert the NN output layer to a probability distribution over the possible classes. Each output node is assigned a value between 0 and 1 ($\sigma(\mathbf{z})_i = e^{z_i} / \sum_{j=1}^{n} e^{z_j}$, where $z_i$ is the value of output node $i$ and $n$ is the number of classes), with all outputs summing to 1, so that they can be interpreted as probabilities. The class with the largest probability is selected as the NN's final classification of a given input, and the confidence of the classification result is equal to that probability, i.e., the maximum of the softmax function. The loss function associated with the softmax function is the cross-entropy loss, which is used for training purposes (see section 7.5.2). The softmax function is commonly used in CNNs [40,41] due to its simplicity and probabilistic interpretation.
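Prediction and confidence can be sketched in plain Python. The class names and output values below are hypothetical; subtracting the maximum output before exponentiating does not change the result but avoids overflow.

```python
import math


def softmax(z):
    """Map output-layer values to probabilities that sum to 1."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def classify(z, class_names):
    """Return the most probable class and its probability (the confidence)."""
    p = softmax(z)
    best = max(range(len(p)), key=p.__getitem__)
    return class_names[best], p[best]


classes = ["one-directional", "multi-directional", "shear",
           "chaos/shear-chaos", "undisturbed", "other"]
label, confidence = classify([0.2, 3.1, 0.5, -1.0, 0.0, 0.3], classes)
print(label, round(confidence, 3))  # multi-directional, followed by its probability
```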

7.5.2. Cross Entropy

Training an MLP is an iterative optimization of the model’s internal parameters. At each iteration, VarioMLP predicts the class of each training example and uses the cross-entropy loss function to quantify the difference between the predicted values and the training labels. Entropy was first introduced in [159] to quantify the level of uncertainty of a random variable $X$ with possible outcomes $x_1, \dots, x_n$ according to

$$H(X) = -\sum_{i=1}^{n} p(x_i) \log p(x_i),$$

where $p(x_i)$ is the probability of outcome $x_i$ for $i = 1, \dots, n$, and $n$ is the number of classes. For VarioMLP, the outcomes are the crevasse classes and the probabilities are those which the model assigns to each output neuron. The DDA-MLP uses the cross-entropy loss as its loss criterion, calculated as

$$L_{\mathrm{CE}} = -\sum_{i=1}^{n} t_i \log p_i,$$

where $n$ is the number of classes, $t_i$ is the truth label for class $i$, and $p_i$ is the model-predicted probability for class $i$. The optimization problem is then for the model to learn an internal parameter set which minimizes this loss function. To accomplish this, the DDA-MLP employs stochastic gradient descent (SGD) via the Adam algorithm for first-order gradient-based optimization introduced in [160]. During training, backpropagation, as defined in [161], computes the gradient of the loss layer by layer, starting from the output layer and moving backward towards the input layer. The Adam algorithm only requires first-order gradients; it maintains running estimates of the first and second moments of the gradient, uses them to adapt the effective learning rate of each parameter, and moves each parameter opposite to its bias-corrected first-moment estimate, scaled by the learning-rate hyperparameter and divided by the square root of the second-moment estimate [160]. Application of cross-entropy loss for training of deep NNs is described in [162].
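The two loss definitions and the Adam update described above can be sketched in plain Python as below. This is an illustrative sketch, not the VarioMLP training code: the function names are hypothetical, the natural logarithm is assumed, and the Adam hyperparameters (`lr`, `beta1`, `beta2`, `eps`) are the defaults recommended in [160], not values reported for VarioMLP.

```python
import math
from typing import List, Sequence, Tuple

def entropy(p: Sequence[float]) -> float:
    """Entropy H(X) = -sum_i p(x_i) log p(x_i), natural log; terms with p = 0 contribute 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(t: Sequence[float], p: Sequence[float], eps: float = 1e-12) -> float:
    """Cross-entropy loss L = -sum_i t_i log p_i; eps guards against log(0)."""
    return -sum(ti * math.log(max(pi, eps)) for ti, pi in zip(t, p))

def adam_step(theta: List[float], grad: List[float], m: List[float], v: List[float],
              t: int, lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update for a list of scalar parameters; t is the 1-based step count."""
    # Running estimates of the first and second moments of the gradient.
    m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]
    v = [beta2 * vi + (1 - beta2) * g * g for vi, g in zip(v, grad)]
    # Bias correction for the zero-initialised moment estimates.
    m_hat = [mi / (1 - beta1 ** t) for mi in m]
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    # Move each parameter against m_hat, scaled per parameter by 1 / sqrt(v_hat).
    theta = [th - lr * mh / (math.sqrt(vh) + eps)
             for th, mh, vh in zip(theta, m_hat, v_hat)]
    return theta, m, v

# One-hot truth label for class 2 of six classes and a hypothetical prediction.
truth = [0, 0, 1, 0, 0, 0]
pred = [0.05, 0.05, 0.80, 0.05, 0.03, 0.02]
print(round(cross_entropy(truth, pred), 4))  # -log(0.8), about 0.2231
```

With a one-hot truth label, the cross-entropy reduces to the negative logarithm of the probability assigned to the correct class, so a confident correct prediction yields a small loss.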

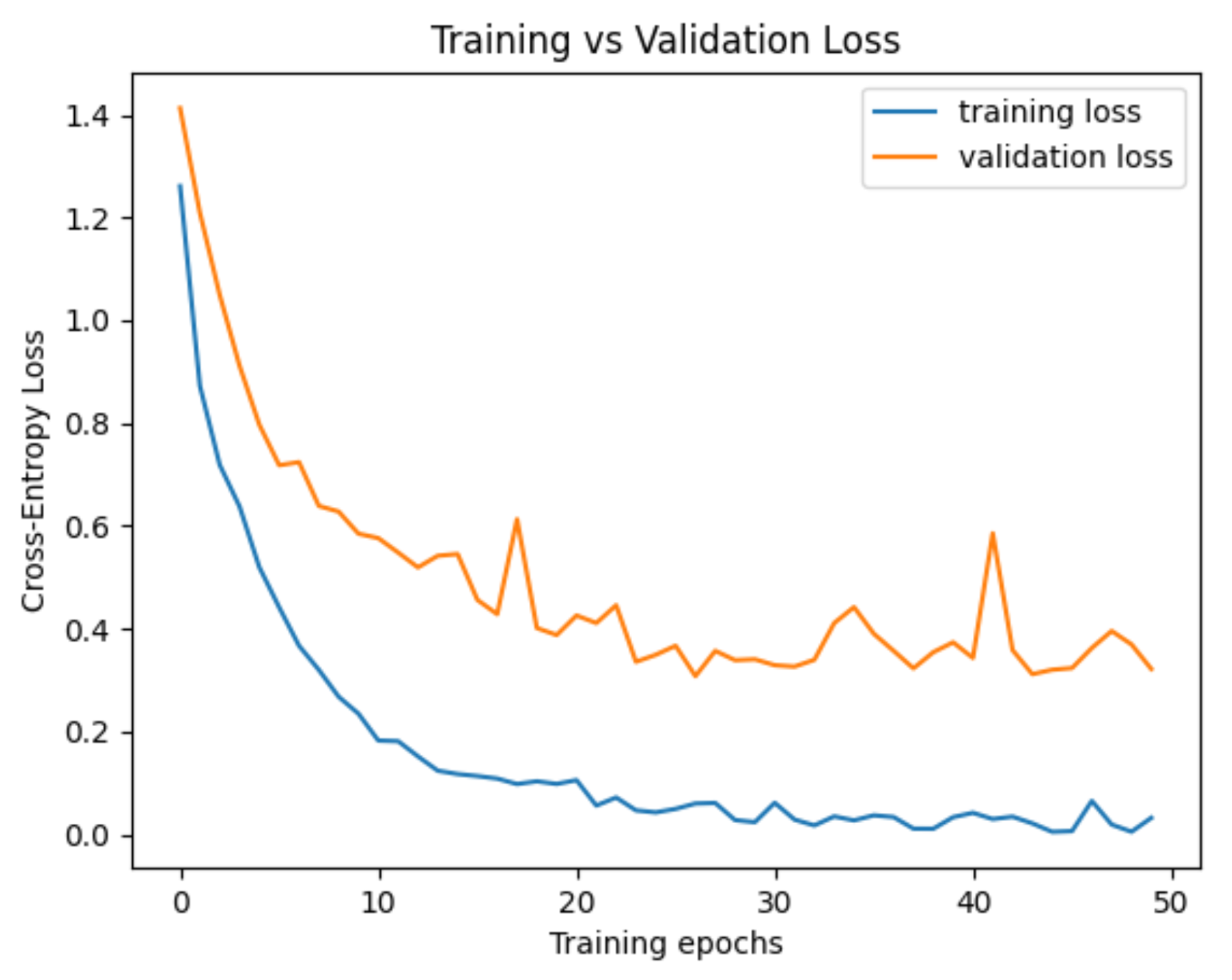

Cross-entropy loss is used to identify functional training runs and to reject faulty ones. For example, overfitting in a test run of the model is illustrated in Figure 7.
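One simple way to flag overfitting of this kind is to look for the point after which validation loss stays above its minimum while training loss keeps falling. The sketch below is a hypothetical heuristic (the function name and the `patience` parameter are assumptions), not the criterion used by the authors.

```python
from typing import Sequence

def overfitting_onset(train_loss: Sequence[float], val_loss: Sequence[float],
                      patience: int = 3) -> int:
    """Return the epoch (0-based) with the lowest validation loss if, for at least
    `patience` later epochs, validation loss stays above that minimum while training
    loss stays below its value at that epoch; otherwise return -1."""
    best = min(range(len(val_loss)), key=lambda e: val_loss[e])
    later = range(best + 1, len(val_loss))
    diverging = (len(later) >= patience
                 and all(val_loss[e] > val_loss[best] for e in later)
                 and all(train_loss[e] < train_loss[best] for e in later))
    return best if diverging else -1

# Hypothetical loss curves: validation loss turns upward after epoch 2.
train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val = [1.1, 0.9, 0.7, 0.75, 0.8, 0.9, 1.0]
print(overfitting_onset(train, val))  # 2
```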

7.5.3. Confidence

Classification confidence is a measure of the probability that the assignment of an input image to a class is correct. Confidence approaches have been discussed in [163]. We use confidence to accept or reject classified crevasse images for inclusion in the training data set, applying a threshold of 90% confidence. The Split-Image Explorer Tool allows user-selected confidence values.
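A minimal sketch of the 90% acceptance rule, assuming each classified split image is represented as a hypothetical `(image_id, class_name, confidence)` tuple; the `threshold` argument stands in for a user-selected value.

```python
from typing import Dict, List, Sequence, Tuple

def accept_by_confidence(predictions: Sequence[Tuple[str, str, float]],
                         threshold: float = 0.9) -> Dict[str, List[str]]:
    """Group image IDs by predicted class, keeping only predictions whose
    confidence (maximum softmax probability) is at least `threshold`."""
    accepted: Dict[str, List[str]] = {}
    for image_id, class_name, confidence in predictions:
        if confidence >= threshold:
            accepted.setdefault(class_name, []).append(image_id)
    return accepted

# Hypothetical predictions; class names are placeholders.
preds = [("img_001", "class_a", 0.97), ("img_002", "class_b", 0.62),
         ("img_003", "class_a", 0.90), ("img_004", "class_c", 0.95)]
print(accept_by_confidence(preds))
```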

7.5.4. Other Training Hyperparameters

Overall, the training and feedback-loop experiments were repeated several times with different parameters and variations of the classification models. The split of the training data into actual training images and validation images was held constant at 80% (training) and 20% (validation) for all experiments training VarioMLP and ResNet-18. This means that however many labeled training images existed for a given run, 80% were randomly selected at runtime for the actual training process, and 20% were reserved to evaluate model performance after each epoch. It is important to separate the training and validation datasets, because a sufficiently complex model can simply memorize the training dataset, and only evaluation on images it did not see during training reveals whether it generalizes. Each training run was carried out for a maximum of 50 epochs. For each epoch that resulted in a new best validation loss, a checkpoint of the classification model was saved for further evaluation. For all training experiments, cross-entropy loss was used with the Adam optimizer as the method for gradient descent (section 7.5.2).
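The 80/20 split and best-validation-loss checkpointing described above can be sketched as follows. The helper names, the fixed random seed and the `run_epoch` callback (which would train one epoch and return the validation loss) are assumptions for illustration; with 3,933 labeled images this split yields 786 validation images, the count reported for the lag-step experiment in section 7.7.1.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")

def split_train_val(items: Sequence[T], train_frac: float = 0.8,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Randomly split labeled items into disjoint training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = int((1 - train_frac) * len(shuffled))
    return shuffled[n_val:], shuffled[:n_val]

def train_with_checkpoints(run_epoch: Callable[[int], float],
                           max_epochs: int = 50) -> List[Tuple[int, float]]:
    """Run up to `max_epochs` epochs; record (epoch, val_loss) whenever a new best
    validation loss is reached (where a real run would save model weights)."""
    checkpoints: List[Tuple[int, float]] = []
    best = float("inf")
    for epoch in range(max_epochs):
        val_loss = run_epoch(epoch)
        if val_loss < best:
            best = val_loss
            checkpoints.append((epoch, val_loss))
    return checkpoints

train, val = split_train_val(range(3933))
print(len(train), len(val))  # 3147 786
```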

7.6. Interleave of Split Image Labeling with the Training Process: The Feedback Loop

Following the creation of an initial set of expert-labeled training data, a VarioMLP is trained. The resultant network can be applied directly to classify an entire satellite image. However, to derive a large data set of labeled training images, an iterative approach to split-image labeling and VarioMLP training is taken. The goal is to create a data set that is large enough to train a CNN, which in turn can be expected to facilitate rapid classification of many satellite images for similar problems, i.e., a higher level of generalization of the task of crevasse classification.

The iterative approach is implemented as a feedback loop in VarioMLP, executed as a mix of computational criteria and expert interaction and interleaved with the training process of VarioMLP as follows (see Figure 8). The initial data set is considered the first-order data set and is used to train the NN. Validation loss and training loss are evaluated as quantified by the cross-entropy measure (see section 7.5.2). The result is a trained VarioMLP architecture.

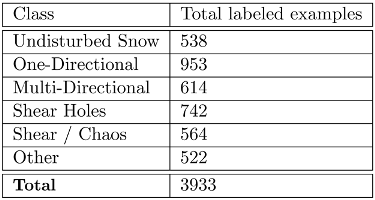

The VarioMLP, with its first-approximation final structure, is then applied to classify the entire set of split images from a given satellite image (all split images inside the polygon that outlines the NGS). Each split image is assigned to a class and written to that class's directory. Next, only split images with a classification confidence of at least 0.9 are retained in the crevasse class directories. Then the glaciology expert quickly views all new images in each class (i.e., any images that were not part of the original labeled data set) and rejects images that are misclassified. This review requires only a fraction of the expert time needed to label thousands of split images from scratch. The VarioMLP is then retrained, using the larger set of labeled data as training data. By repeating the feedback loop, a labeled data set of 3933 split images was obtained in a reasonable amount of time. This final labeled data set includes between 522 and 953 images per crevasse class, with a distribution given in Table 4. This distribution is relatively even, so class imbalance is unlikely to be a significant source of inaccuracy in model training.
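The feedback loop can be summarized in the following sketch. It is not the GEOCLASS-image implementation: `train`, `classify` and `expert_accepts` are hypothetical callbacks standing in for VarioMLP training, classification of all split images, and the expert's visual check, and the default number of iterations is an assumption (the text does not state how many were run).

```python
from typing import Callable, Dict, List, Tuple

# (split_image_id, predicted_class, confidence)
Prediction = Tuple[str, str, float]

def feedback_loop(labeled: Dict[str, str],
                  all_images: List[str],
                  train: Callable[[Dict[str, str]], object],
                  classify: Callable[[object, List[str]], List[Prediction]],
                  expert_accepts: Callable[[str, str], bool],
                  n_iterations: int = 3,
                  threshold: float = 0.9) -> Dict[str, str]:
    """Grow a labeled set {image_id: class} by alternating training, classification
    of all split images, confidence filtering and expert review of new images."""
    labeled = dict(labeled)  # do not modify the caller's initial labels
    for _ in range(n_iterations):
        model = train(labeled)  # retrain on the current (growing) labeled set
        for image_id, cls, conf in classify(model, all_images):
            if image_id in labeled or conf < threshold:
                continue  # keep only new images with confidence >= threshold
            if expert_accepts(image_id, cls):  # expert rejects misclassifications
                labeled[image_id] = cls
    return labeled

# Toy run with stub callbacks: image "c" is not confident enough, "d" is rejected.
initial = {"a": "c1"}
fixed = [("a", "c1", 0.99), ("b", "c2", 0.95), ("c", "c3", 0.50), ("d", "c1", 0.92)]
result = feedback_loop(initial, ["a", "b", "c", "d"],
                       train=lambda lab: None,
                       classify=lambda model, imgs: fixed,
                       expert_accepts=lambda image_id, cls: image_id != "d")
print(result)
```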

In this exemplary application, the expert who selected the initial data set was a glaciologist experienced in structural glaciology, especially in the observation of glacier surfaces during surges (the lead author of the paper), whereas in later iterations the sorting of images was performed by a computer science student. This indicates that the sorting procedure becomes faster and simpler as training goes through successive iterations. To simplify the process, only four main crevasse classes, plus an undisturbed class and a rest (chaos) class, were chosen for this study.

To ensure that the labeled training data set applies generally to a range of previously unseen WorldView data sets from the NGS and other regions of surge glaciers, as well as to the analysis and classification of data from WorldView-1 and WorldView-2, split images were sourced from 11 different WorldView data sets collected over the NGS in 2016, 2017 and 2018. This resulted in a total of 108,623 split images. The distribution of split images in the final 3933-image data set per WorldView source file is given in Table 2.

At this point, we have achieved two results: (1) the derivation of a labeled training data set, and (2) the VarioMLP together with the feedback loop, usable either as a standalone NN or as a component in a physically constrained CNN, the VarioCNN.

In the next sections, we describe ResNet-18, the CNN component selected for VarioCNN, its training and its comparison to VarioMLP, and finally the design of the combined classification system, VarioCNN, and of the classification software system, GEOCLASS-image. Experiments with VarioCNN using GEOCLASS-image are rounded off by the geophysical application and interpretation of the evolution of crevasse provinces during the surge in the NGS.

7.7. Determination of VarioMLP Hyperparameters

The VarioMLP architecture includes hyperparameters which can be optimized to tune the model for testing performance and generalization. Both the Directional Variogram and the Multi-Layer Perceptron steps of the VarioMLP architecture have hyperparameters, which affect training and testing in different ways. Input image size and resolution have already been discussed in section (6.1.1), as they are constrained by the observation technology, the surface signatures and the assumption of spatial homogeneity. To optimize the architecture of VarioMLP, experiments were carried out to determine the optimal number of lag steps in the vario function and the shape and number of the internal layers. In both series of experiments, cross-entropy loss was used as the measure for assessing training quality and network performance.

7.7.1. Number of Vario Function Steps

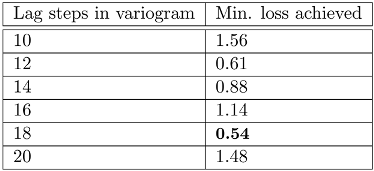

The optimal number of lag steps in the directional variogram depends on the input image size in pixels. If too few lag steps are used, the directional variogram may not provide sufficiently different characteristics for a given set of surface types to allow reliable classification. If too many lag steps are used, the characteristics provided by the directional variogram can be polluted by noise and by small-scale features that are present in multiple surface types, and these characteristics may bury the salient features of each surface type needed for classification. During training, the lag step parameter was tested at values of 10, 12, 14, 16, 18 and 20 (Table 5). In this experiment, the hidden layer shape was fixed at [5, 2] and the final validation data set included 786 images (20% of the final 3,933-image labeled data set). The best performance was achieved with a lag step value of 18. It is interesting to note that performance does not vary monotonically with the number of lag steps used in the variogram phase. Rather, the model seems to perform relatively well with values of 12, 14 and 18, and relatively poorly with values of 10, 16 and 20.
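To illustrate what the lag-step parameter controls, the sketch below computes a standard empirical directional semivariogram, $\gamma(h) = \frac{1}{2N(h)}\sum \left(z(\mathbf{x} + h\mathbf{d}) - z(\mathbf{x})\right)^2$, for lags $h = 1, \dots,$ `n_lags` along an integer pixel direction $\mathbf{d}$, where $N(h)$ is the number of pixel pairs at that lag. This is an assumption-laden stand-in: the exact vario function, the set of directions and any normalization used in VarioMLP are defined elsewhere in the paper and may differ.

```python
from typing import List, Sequence, Tuple

def directional_variogram(img: Sequence[Sequence[float]],
                          direction: Tuple[int, int],
                          n_lags: int) -> List[float]:
    """Empirical semivariogram gamma(h) for h = 1..n_lags along the pixel
    direction (drow, dcol); returns NaN for lags with no valid pixel pairs."""
    rows, cols = len(img), len(img[0])
    dr, dc = direction
    gammas = []
    for h in range(1, n_lags + 1):
        total, count = 0.0, 0
        for r in range(rows):
            for c in range(cols):
                r2, c2 = r + h * dr, c + h * dc
                if 0 <= r2 < rows and 0 <= c2 < cols:  # pair stays inside the image
                    diff = img[r2][c2] - img[r][c]
                    total += diff * diff
                    count += 1
        gammas.append(total / (2 * count) if count else float("nan"))
    return gammas

# Hypothetical 8x8 image of vertical stripes (alternating columns of 0 and 1).
img = [[c % 2 for c in range(8)] for _ in range(8)]
print(directional_variogram(img, (0, 1), 4))  # across the stripes
print(directional_variogram(img, (1, 0), 4))  # along the stripes
```

Each additional lag step adds one value per direction to the concatenated variogram output that forms the MLP input, so the lag-step count also sets the size of the MLP input layer.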

7.7.2. Hidden Layer Structure in the MLP

The number of hidden layers in the MLP step of the VarioMLP architecture is a function of the size of the input layer, as well as the size of the training dataset. If the number of hidden layers is too large relative to the input layer size, then the model becomes unnecessarily complex and thus more susceptible to overfitting. Too few hidden layers produce the opposite problem - the model lacks the complexity necessary to capture the full variance of the dataset and suffers from underfitting. This is an example of what is commonly referred to in machine learning as the bias-variance tradeoff [

164,

165,

166]. Choosing a perfect model size and depth becomes increasingly difficult for problems where there is no existing reference dataset of labeled training examples, since as the size of the training dataset increases so too does the optimal fully-connected model size. However this relationship is nearly impossible to calculate, so trial-based estimation is necessary. To reduce the scope of this optimization during training, the shape of the hidden layers of MLP architecture were limited to being exact multiples of the input layer size. An MLP model denoted as [5, 10, 2] refers to a model with 3 fully-connected hidden layers, which contain 5, 10 and 2 times as many nodes as the input layer respectively. During training, model architectures of [2, 2], [5, 2], [5, 5, 2], [10, 5, 2], and [10, 10, 2] were tested (

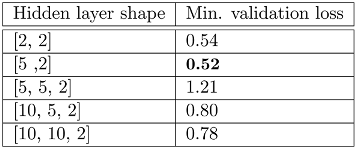

Table 6). For each test run, the lag steps for the variogram stage were fixed at 18. The best performing hidden layer shape was [5, 2]. For networks both wider and deeper than this, the performance significantly decreased. This is likely due to the fact that for the relatively small amount of information at the input layer (the concatenated output of the variogram stage), larger networks simply converge on memorizing the training dataset. This is another example of the bias-variance tradeoff at play, the network must not be overly complex for the scope of the input data.
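Because the hidden layers are expressed as multiples of the input size, the layer widths and the number of trainable weights follow directly from the bracket notation. The sketch below assumes, purely for illustration, an input size of 72 (for example 18 lag steps in four directions; the actual number of directions is not stated here) and six output classes (four crevasse classes plus the undisturbed and rest classes).

```python
from typing import List, Sequence

def mlp_layer_sizes(input_size: int, multipliers: Sequence[int],
                    n_classes: int) -> List[int]:
    """Widths [input, m1*input, m2*input, ..., n_classes] for the [m1, m2, ...] notation."""
    return [input_size] + [m * input_size for m in multipliers] + [n_classes]

def n_parameters(sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))

INPUT_SIZE, N_CLASSES = 72, 6  # hypothetical values, see lead-in
for shape in ([2, 2], [5, 2], [5, 5, 2], [10, 5, 2], [10, 10, 2]):
    sizes = mlp_layer_sizes(INPUT_SIZE, shape, N_CLASSES)
    print(shape, sizes, n_parameters(sizes))
```

Under these assumptions the [10, 10, 2] network has several times as many parameters as [5, 2], which is consistent with the observation that the larger variants tend to memorize the training data.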

8. ResNet-18

8.1. Description of the CNN ResNet-18

The term ResNet refers to a family of convolutional neural networks based on residual learning, with depths of up to 1001 layers [41,83,84]. ResNet-18, the ResNet variant with the fewest layers in common use today (e.g., [167,168,169]), is the ResNet type used for the studies in this paper. Notably, labeled training data sets exist in the medical sciences for image feature detection (e.g., of brain tumors or Alzheimer's disease) [167], which is not the case for image classification in geoscience studies.
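The "18" in ResNet-18 counts its weighted layers. The arithmetic below follows the standard ResNet-18 configuration of the original ResNet paper [41]: one stem convolution, four stages of two basic residual blocks with two 3 × 3 convolutions each, and one fully connected layer; by convention, the 1 × 1 projection shortcuts are not counted. It is a worked check, not a model definition.

```python
from typing import Sequence

def weighted_layers(blocks_per_stage: Sequence[int], convs_per_block: int,
                    stem_convs: int = 1, fc_layers: int = 1) -> int:
    """Count convolutional and fully connected layers (projection shortcuts excluded)."""
    return stem_convs + sum(blocks_per_stage) * convs_per_block + fc_layers

print(weighted_layers([2, 2, 2, 2], 2))  # ResNet-18 -> 18
print(weighted_layers([3, 4, 6, 3], 2))  # ResNet-34 -> 34
```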

8.1.1. Mathematical Principles of ResNets

The following mathematical description of deep residual networks (ResNets) is summarized from [83], a foundational paper on ResNets. ResNets consist of many stacked so-called “Residual Units”. The defining equations of a ResNet are the following two:

$$\mathbf{y}_l = h(\mathbf{x}_l) + \mathcal{F}(\mathbf{x}_l, \mathcal{W}_l),$$

$$\mathbf{x}_{l+1} = f(\mathbf{y}_l),$$

where $\mathbf{x}_l$ and $\mathbf{x}_{l+1}$ are the input and output of the $l$-th unit, $h$ is the shortcut mapping, $f$ is the activation function applied after the addition, $\mathcal{F}$ is a residual function, and $\mathcal{W}_l = \{W_{l,k} \mid 1 \le k \le K\}$ denotes the set of weights (and biases) associated with the $l$-th residual unit, which may itself consist of $K$ layers ($K = 2$ or $K = 3$ are typical values). The residual units form the building blocks of the modularized architecture that characterizes a ResNet. In [41], only $h(\mathbf{x}_l) = \mathbf{x}_l$ is an identity mapping and $f$ is a ReLU function, whereas in [83], both $h(\mathbf{x}_l) = \mathbf{x}_l$ and $f(\mathbf{y}_l) = \mathbf{y}_l$ are identity mappings. The work in [83] shows that when both of these are identity mappings, the signal can be directly propagated from one unit to any other unit; this finding leads to the definition of skip connections. The identity mapping $h(\mathbf{x}_l) = \mathbf{x}_l$ (already introduced in their first paper, [41]) achieves the fastest error reduction and lowest training loss among a number of model variants studied in [83]. The use of the second identity mapping, $f(\mathbf{y}_l) = \mathbf{y}_l$, is a new interpretation of the activation functions (which can be, for example, ReLU, the function used in our ResNet-18 model) as so-called pre-activation of the weight layers, as opposed to the hitherto prevailing view of post-activation. This paper [