Submitted:

06 March 2024

Posted:

08 March 2024


Abstract

Keywords:

1. Introduction

2. Research Question

3. Literature Review

3.1. ML Methods for SEE Review

3.2. EL Methods for SEE Review

4. Background

4.1. Machine Learning (ML)

- Supervised Learning: Every dataset used in ML consists of instances (examples), each represented by a set of features. These features may be "continuous" or "binary/categorical". Labeled instances are those paired with the correct output or response. Once the dataset (training and testing data) is labeled, supervised machine learning techniques can be applied: they use labeled past and present data to analyze and forecast [47]. Supervised learning comprises classification and regression. In classification, the output space is a set of classes [47]. In regression, the output space is a set of continuous values; regression methods use statistical approaches to estimate the relationship between the output and the factors that affect it [47].

- Unsupervised Learning: Used in place of supervised learning when the instances are not labeled. Unsupervised algorithms examine the unlabeled data to create a function that explains its patterns [48]. These methods do not identify outputs very accurately. Nonetheless, they are useful for discovering and describing latent patterns in the data. Unsupervised learning includes association and clustering [48].

4.2. Algorithms

4.2.1. K-nearest neighbors (KNN)

4.2.2. Decision Tree (DT)

4.2.3. Naive Bayes (NB)

4.3. Ensemble Learning (EL)

- Averaging methods: Several estimators are built and their forecasts are averaged. Because averaging lowers the variance, the combined estimator is claimed to outperform an individual estimator on average. Examples include bagging and random forests [51].

- Boosting methods: In contrast to averaging methods, these techniques build the individual estimators one after the other, each aiming to reduce the bias of the ensemble. The goal is to combine several weak estimators into an effective ensemble. Examples include AdaBoost and gradient tree boosting [51].

- Voting methods: A voting classifier is an ML model that is trained on an ensemble of several models and predicts the output class that receives the highest combined support from those models [51].
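As a minimal sketch of the averaging and voting ideas above (boosting is left out because it requires sequential training), the snippet below combines forecasts that are assumed to come from already trained estimators. The function names and the sample forecasts are illustrative assumptions, not values from this study.

```python
from collections import Counter
from statistics import mean


def average_ensemble(predictions):
    """Average the numeric forecasts of several estimators, sample by sample."""
    return [mean(sample) for sample in zip(*predictions)]


def majority_vote(predictions):
    """Return, for each sample, the class predicted by the most estimators."""
    return [Counter(sample).most_common(1)[0][0] for sample in zip(*predictions)]


# Hypothetical effort forecasts (person-months) from three estimators for two projects.
effort_forecasts = [[10.0, 40.0], [14.0, 46.0], [12.0, 43.0]]
averaged = average_ensemble(effort_forecasts)

# Hypothetical class labels from three classifiers for the same two projects.
class_forecasts = [["low", "high"], ["low", "low"], ["high", "high"]]
voted = majority_vote(class_forecasts)

print(averaged)  # [12.0, 43.0]
print(voted)     # ['low', 'high']
```

Each inner list holds one estimator's output for all projects, so `zip(*predictions)` regroups the values per project before they are combined.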

4.3.1. Bagging

4.3.2. Random Forest (RF)

4.3.3. AdaBoosting

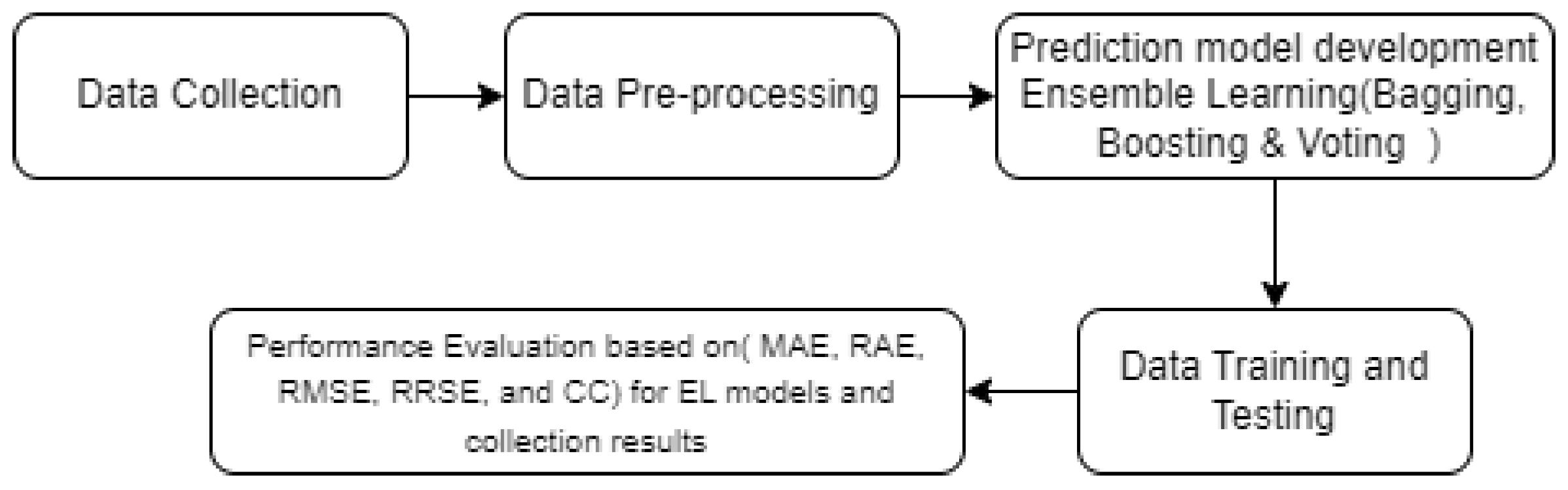

5. Methodology

6. Dataset

6.1. Dataset Collection

6.2. Datasets Description

6.2.1. COCOMO81

6.2.2. COCOMONasa I

6.2.3. COCOMONasa II

6.2.4. KITCHENHAM

6.2.5. DESHARNAIS

6.2.6. DESHARNAIS_1_1

6.2.7. MAXWELL

6.2.8. BELADY

6.2.9. BOEHM

6.2.10. CHINA

6.2.11. ALBRECHT

6.3. Merge Dataset

- Merge COCOMO81, COCOMONasa I, and COCOMONasa II to produce a new Month-COCOMO (M-COCOMO) dataset containing 216 projects with 17 features.

- Merge China, Maxwell, Desharnais, Desharnais_1_1, Albrecht, Kitchenham, Belady, and Boehm to produce a new hours phase dataset (HPD) containing 988 projects with seven features.
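One way to picture such a merge is a stack-then-impute procedure: rows from all sources are stacked over the union of their features, and the resulting gaps are filled afterwards. The sketch below is an illustration under that assumption. The helper names and the miniature datasets are hypothetical, and the column-mean fill is a simple stand-in rather than the imputation technique used in this study.

```python
def stack_datasets(*datasets):
    """Stack row lists vertically over the union of their feature names.

    Features that a source dataset does not have are set to None.
    """
    features = []
    for rows in datasets:
        for row in rows:
            for name in row:
                if name not in features:
                    features.append(name)
    stacked = [
        {name: row.get(name) for name in features}
        for rows in datasets
        for row in rows
    ]
    return features, stacked


def impute_column_means(features, rows):
    """Fill None entries with the column mean (stand-in for a real imputer)."""
    for name in features:
        observed = [row[name] for row in rows if row[name] is not None]
        fill = sum(observed) / len(observed)
        for row in rows:
            if row[name] is None:
                row[name] = fill
    return rows


# Hypothetical miniature datasets with partly overlapping features.
dataset_a = [{"loc": 10.0, "effort": 100.0}, {"loc": 20.0, "effort": 300.0}]
dataset_b = [{"loc": 30.0, "duration": 6.0, "effort": 500.0}]

features, merged = stack_datasets(dataset_a, dataset_b)
merged = impute_column_means(features, merged)
```

After the call, `merged` holds three rows with the same three features, and the `duration` gaps in the rows from `dataset_a` are filled with the only observed value.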

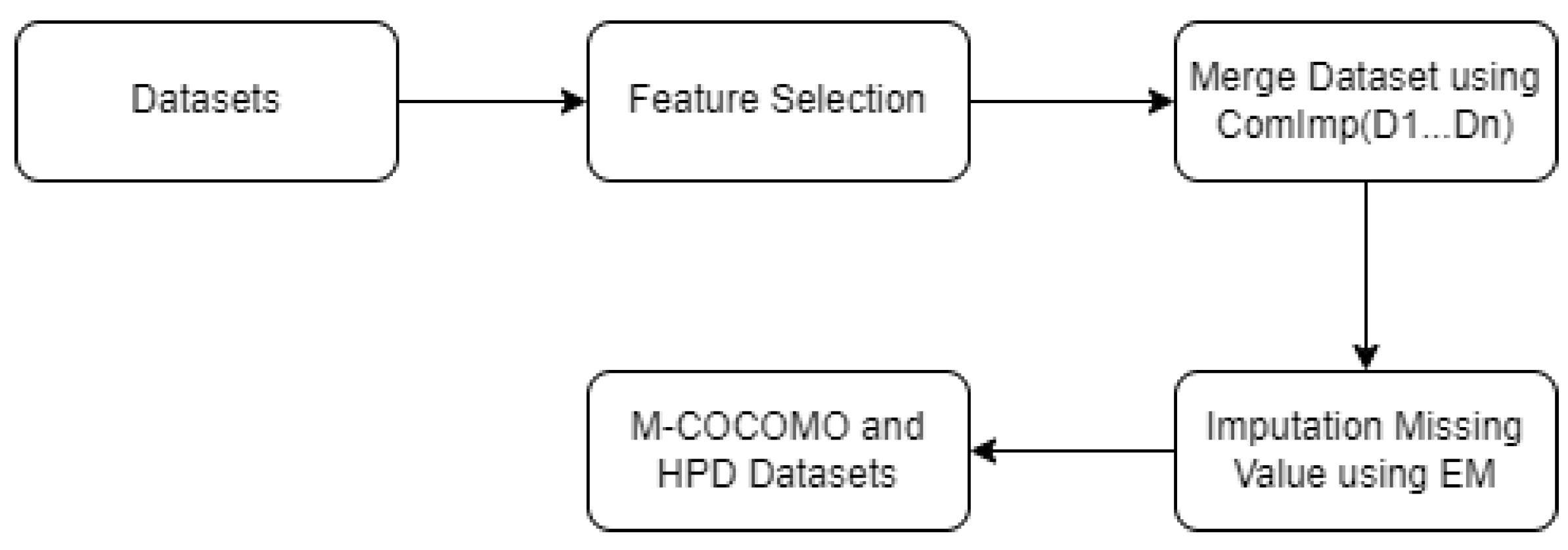

6.3.1. ComImp (Combine Datasets Based on Imputation)

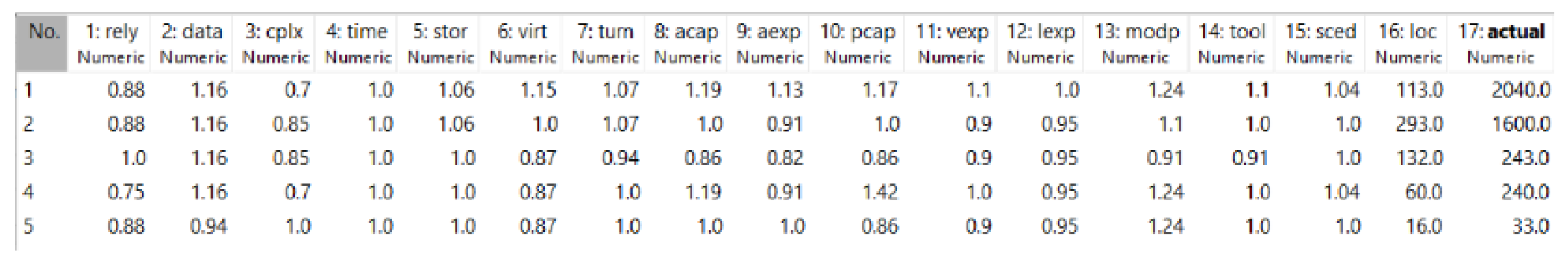

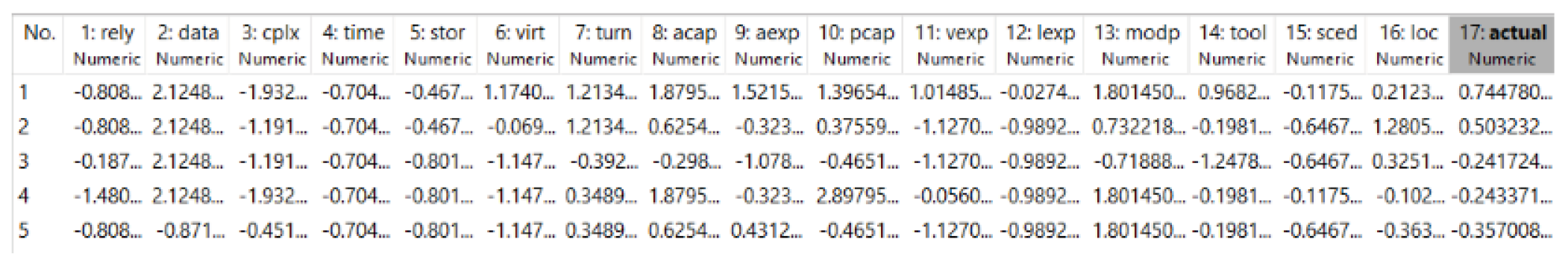

- M-COCOMO: The M-COCOMO dataset was frequently used to validate different EE methods. It contains 216 software projects, each described by five features and the corresponding effort. Actual effort is measured in "person-months", the time required for a single person to complete a project. No missing values remain after merging these datasets. Table 13 provides a full description of the M-COCOMO repository.

- HPD: The HPD dataset contains twelve features used to predict software effort and 988 software projects in total. Actual effort is measured in "person-hours", the time required for a single person to complete a project. Merging these datasets leaves missing values. The HPD dataset is fully described in Table 14.

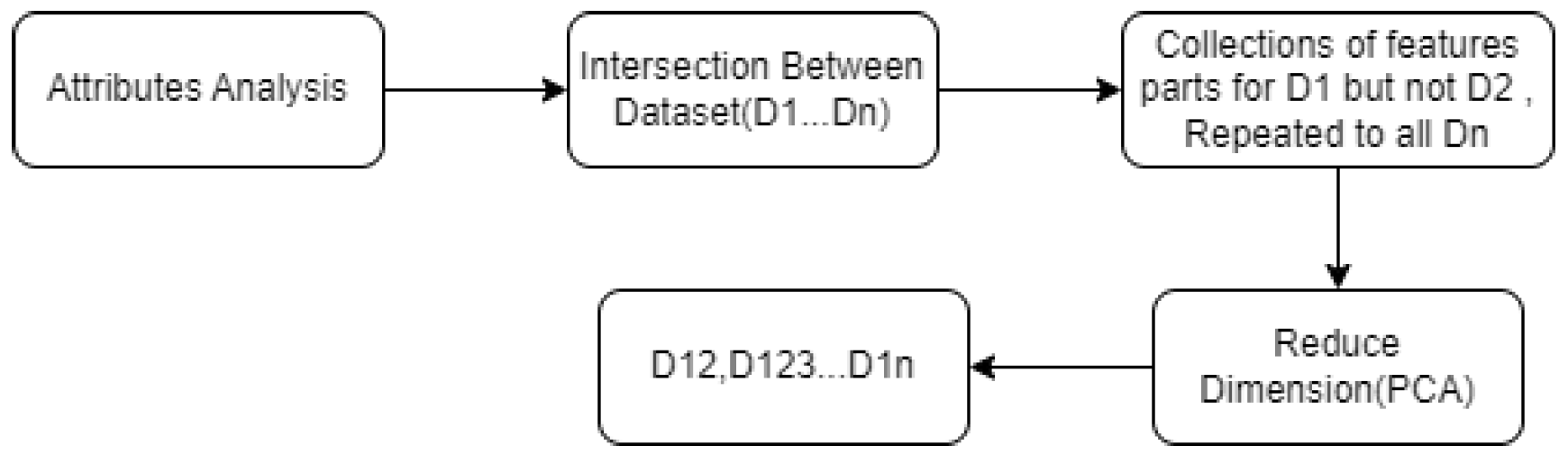

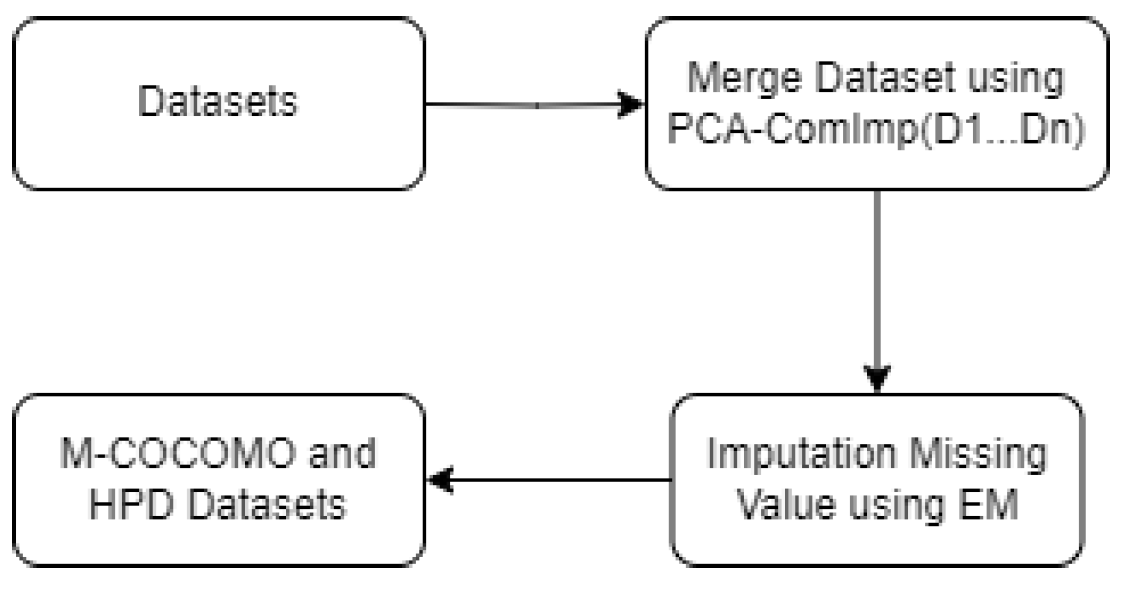

6.3.2. ComImp that utilizes Principal Component Analysis (PCA-ComImp)

- Attribute analysis: The set of features (data columns) of each data source is identified. This shows which information each attribute carries and which attributes must be included when the datasets are stacked vertically.

- Find the intersection of the feature sets of the two datasets to obtain the set of shared features.

- Then find, for each dataset, the set of features that belong to it but not to the other dataset.

- Reduce the dimension of these dataset-specific features using PCA.

- Finally, produce the transformed datasets.

- Next, as in ComImp, we stacked the datasets together to produce HPD and imputed the missing values.

- In this research, missing values were imputed using the expectation-maximization (EM) technique.

- M-COCOMO: The M-COCOMO dataset was frequently used to validate different EE methods. It contains 216 software projects, each described by 17 features and the corresponding effort. Actual effort is measured in "person-months", the time required for a single person to complete a project. No missing values remain after merging these datasets. Table 15 gives a detailed description of the M-COCOMO repository.

- HPD: The HPD dataset contains seven features used to predict software effort and 988 software projects in total. Actual effort is measured in "person-hours", the time required for a single person to complete a project. Merging these datasets leaves missing values. The HPD dataset is fully described in Table 16.
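The attribute-analysis steps of PCA-ComImp listed above can be sketched with plain list operations. This is only an illustration of how shared and dataset-specific features might be separated; the function name and the column names are assumptions made for the example, and the PCA reduction itself is not shown.

```python
def analyze_features(features_a, features_b):
    """Split two feature lists into shared and dataset-specific parts.

    The original column order is preserved in every returned list.
    """
    shared = [f for f in features_a if f in features_b]
    only_a = [f for f in features_a if f not in features_b]
    only_b = [f for f in features_b if f not in features_a]
    return shared, only_a, only_b


# Illustrative column names for two hypothetical source datasets.
features_a = ["TeamExp", "ManagerExp", "Transactions", "Effort"]
features_b = ["Duration", "Transactions", "Effort"]

shared, only_a, only_b = analyze_features(features_a, features_b)

print(shared)  # ['Transactions', 'Effort']
print(only_a)  # ['TeamExp', 'ManagerExp']
print(only_b)  # ['Duration']
```

Only the dataset-specific groups (`only_a`, `only_b`) would be candidates for dimension reduction; the shared features are kept as they are.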

7. Data Pre-Processing

7.1. Feature Selection (FS)

7.2. Standardization of Features (SF)

7.3. Imputation of Missing Values

8. Performance Evaluation

8.1. Mean Absolute Error (MAE)

8.2. Root Mean Squared Error (RMSE)

8.3. Relative Absolute Error (RAE)

8.4. Root Relative Squared Error (RRSE)

8.5. Correlation Coefficient (CC)
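The five measures listed in this section can be computed as in the sketch below. It assumes the commonly used definitions: RAE and RRSE compare the model with a baseline that always predicts the mean of the actual values, and CC is the Pearson correlation between actual and predicted effort. The function names and the sample values are illustrative, and RAE and RRSE are returned as fractions rather than percentages.

```python
from math import sqrt


def _mean(values):
    return sum(values) / len(values)


def mae(actual, predicted):
    """Mean absolute error."""
    return _mean([abs(a - p) for a, p in zip(actual, predicted)])


def rmse(actual, predicted):
    """Root mean squared error."""
    return sqrt(_mean([(a - p) ** 2 for a, p in zip(actual, predicted)]))


def rae(actual, predicted):
    """Relative absolute error against the mean-of-actuals baseline."""
    baseline = _mean(actual)
    return (sum(abs(a - p) for a, p in zip(actual, predicted))
            / sum(abs(a - baseline) for a in actual))


def rrse(actual, predicted):
    """Root relative squared error against the mean-of-actuals baseline."""
    baseline = _mean(actual)
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted))
                / sum((a - baseline) ** 2 for a in actual))


def cc(actual, predicted):
    """Pearson correlation coefficient between actual and predicted values."""
    mean_a, mean_p = _mean(actual), _mean(predicted)
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / sqrt(var_a * var_p)


# Hypothetical actual and predicted efforts for three projects.
actual = [10.0, 20.0, 30.0]
predicted = [12.0, 18.0, 33.0]
scores = {
    "MAE": mae(actual, predicted),
    "RMSE": rmse(actual, predicted),
    "RAE": rae(actual, predicted),
    "RRSE": rrse(actual, predicted),
    "CC": cc(actual, predicted),
}
```

Lower MAE, RMSE, RAE, and RRSE indicate better estimates, while a CC closer to 1 indicates stronger agreement between predicted and actual effort.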

9. Results & Discussion

- M-COCOMO contains 216 projects obtained by merging COCOMO81, COCOMONasa I, and COCOMONasa II with two merging methods (ComImp and PCA-ComImp).

- HPD contains 988 projects obtained by merging Albrecht, China, Desharnais, Desharnais_1_1, Maxwell, Kitchenham, Boehm, and Belady with two merging methods (ComImp and PCA-ComImp).

10. Conclusions and Future Work

| Papers | Dataset | Algorithm (ML & EL) | Evaluation measures | Result | Discussion |
|---|---|---|---|---|---|
| B. Marapelli [1] | | | | The results show that, compared with KNN on the COCOMONASA2, COCOMONASA, and COCOMO81 datasets, the LR model is a good estimator because it produces a higher CC and lower RRSE, RAE, RMSE, and MAE values. | LR: its accuracy depends on the quality of the data, and it might overlook complicated, non-linear relationships. KNN can be computationally expensive on large datasets and requires careful selection of the distance measure and the k value. |
| B. Turhan et al. [2] | | | | Across the three datasets, RBF performed the best. The experimental results show that the COCOMO model is effective for EE on the two datasets, NASA and USC. | To enhance the model, the metrics obtained from those models may be modified, adjusted, or extended with additional metrics. |
| P. Pospieszny et al. [3] | | EL combined: | | MLP, SVM, and GLM were combined for EE, and the combined model outperforms the single models. The EL models, developed for estimating effort and duration early in a project’s lifetime, are highly accurate compared with the methods used in other studies and can be implemented in real-world settings. | The variability of the ISBSG dataset results from its being sourced from numerous initiatives and organizations. Another factor is the high number of missing values, which, combined with heterogeneity, can make preparing data and creating ML models difficult. |
| Singal et al. [4] | | | | The DE technique used less memory and had lower computational complexity. It offered better values for the cost factors, which greatly improved EE. | The researchers used only MMRE as the fitness function; alternative measures could be considered to increase accuracy. In addition, only one model, DE, was used for EE. |
| P. Rijwani et al. [5] | | | | An ANN based on a multi-layered feed-forward (MLF) network trained with the back propagation method was used. The MLF-ANN offered greater prediction accuracy. | Gathering and maintaining high-quality data is essential, because data quality and quantity directly affect the performance of the model. Additionally, training and validating ANNs requires expertise and computational resources. Also, not enough datasets were used. |
| Z. Abdelali et al. [6] | | | | Three commonly used accuracy metrics, Pred(0.25), MMRE, and MdMRE, were applied to determine which approaches were the most accurate. Overall, the RF model outperforms the RT model, especially on COCOMO and ISBSG R8. | RF is robust to overfitting and noise in the data, making it suitable for real-world software projects where data quality can vary. |
| M. Hammad et al. [7] | | | | SVM offers the highest forecasting accuracy compared with the other methods because it has the lowest MAE values; its lowest MAE value was 2.6. | Configuring hyperparameters for ML algorithms, such as selecting the right architecture for an ANN, can be time-consuming. Not enough data were used (73 projects), and other evaluation measures should be used. |
| M. Kumar et al. [8] | | | | With all 12 features, LR outperformed MLP and RF in estimation accuracy. RSE, RMSE, and MAE were used as performance metrics. With only seven selected features of the Desharnais dataset, LR again gave more accurate estimates than MLP and RF. | The dataset used is not sufficient to judge the best model, and no evidence is provided for how the proposed approach performs on additional datasets. |
| S. Elyassami et al. [9] | | | | ANN with HL and SVM with AK produce more accurate results than ANN with two HL and SVM with LK, respectively. | The choice of kernel function can significantly affect SVM’s performance, and selecting the right kernel for SEE can be challenging. Suitable for small datasets and complicated architecture. |
| S. Elyassami et al. [10] | | | | Based on Pred(25), the NBC technique was found to be as accurate in prediction as the SWR technique. | Data preprocessing is required to address potential outliers and missing values in the ISBSG dataset. These techniques are computationally expensive and work only on linear problems; they cannot tackle non-linear problems. Other evaluation measures should be used. |
| I. F., da Silva [11] | | | | For this dataset, the ANN outperformed LR. Because the ANN is not restricted to a linear function, it can perform better on data that are not linear. | The dataset used is not sufficient to judge the best model, and no evidence is provided for how the proposed approach performs on additional datasets. Other evaluation measures should be used. |
| Benala et al. [12] | | | | DBSCAN/UKW FLANN produces more accurate estimates than FLANN, SVR, RBF, and CART. | Clustering algorithms have parameters that need to be set, and their effectiveness can be influenced by the quality of the data. |
| Leal et al. [13] | | | | WNNLR outperforms SVR, Bagging, and NNLR in terms of MMRE and the prediction rate. | Weight assignments in WNNLR, the choice of distance metric, feature scaling, and other hyperparameters must be carefully tuned for optimal performance. Not enough data were used (73 projects). |
| F. Gravino et al. [14] | | | | GP outperformed CBR and MSWR in terms of MMRE, MdMRE, and the prediction rate. | GP can be computationally expensive and may require substantial computational resources. |
| Nassif et al. [15] | | | | On every evaluation measure, DTF clearly outperforms DT and MLR. The DTF model is statistically significant at the 95% confidence level (p value less than 0.05). | DT forests can help mitigate overfitting to some extent, but finding the right balance between complexity and accuracy is a challenge. DTF may produce less interpretable results, making it challenging to explain the reasoning behind predictions to stakeholders. |
| Dave et al. [16] | | | | MMRE indicates that FFNN outperforms RBFNN as an estimating model. However, evaluating these models using the Modified MMRE and RSD shows that the RBFNN model is more accurate at EE. This demonstrates that MMRE is an unreliable evaluation criterion and does not always identify the best estimating model. | The dataset used is not sufficient to judge the best model, and no evidence is provided for how the proposed approach performs on additional datasets. |
| Attarzadeh et al. [17] | | | | Compared with the COCOMO II model, the proposed model improves accuracy by 17.1%. | Suitable for small datasets and complicated architecture. |
| Hidmi et al. [18] | | EL combined: | | The results show that a single strategy used alone achieves, at best, an acceptable accuracy of 85%. When the classifiers are combined, however, accuracy reaches 91.35% on the Desharnais dataset and 85.48% on the Maxwell dataset. Combining two methods therefore improves estimation accuracy. | KNN might require a careful choice of distance metric and k value. Other ML algorithms and other datasets should be used to improve accuracy, and other evaluation measures should be used. |
| Hosni et al. [19] | | EL combined: | | There is no single best EL, since the performance of the suggested ensemble varies by dataset. | KNN might require a careful choice of distance metric and k value. Other ML algorithms and other datasets should be used to improve accuracy, and other evaluation measures should be used. |
| Shukla et al. [20] | | | | AdaBoost-MLPNN achieves an R-squared of 82.213%, the highest of all models, whereas MLPNN scores 78.33%. | The dataset used is not sufficient to judge the best model, and no evidence is provided for how the proposed approach performs on additional datasets. |
| Elish et al. [21] | | EL combined: | | The results confirm that individual models are unreliable because their performance is inconsistent and unstable across datasets. Conversely, the EL model offers more reliable performance than the individual models. | Ensemble averaging combines the predictive power of different algorithms, resulting in more accurate EE and duration estimates. |

Author Contributions

Funding

Conflicts of Interest

References

- Marapelli, B. Software Development Effort Duration and Cost Estimation using Linear Regression and K-Nearest Neighbors Machine Learning Algorithms. International Journal of Innovative Technology and Exploring Engineering 2019, 9(2), 1043–1047.

- Baskeles, B.; Turhan, B.; Bener, A. Software effort estimation using machine learning methods. In 2007 22nd International Symposium on Computer and Information Sciences; IEEE, November 2007; pp. 1–6.

- Pospieszny, P.; Czarnacka-Chrobot, B.; Kobylinski, A. An effective approach for software project effort and duration estimation with machine learning algorithms. Journal of Systems and Software 2018, 137, 184–196.

- Singal, P.; Kumari, A. C.; Sharma, P. Estimation of software development effort: A Differential Evolution Approach. Procedia Computer Science 2020, 167, 2643–2652.

- Rijwani, P.; Jain, S. Enhanced Software Effort Estimation Using Multi-Layered Feed Forward Artificial Neural Network Technique. Procedia Computer Science 2016, 89, 307–312.

- Abdelali, Z.; Mustapha, H.; Abdelwahed, N. Investigating the use of random forest in software effort estimation. Procedia Computer Science 2019, 148, 343–352.

- Hammad, M.; Alqaddoumi, A. Features-level software effort estimation using machine learning algorithms. In 2018 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT); IEEE, 2018.

- Kumar, M.; Singh, A. Comparative Analysis on Prediction of Software Effort Estimation Using Machine Learning Techniques. SSRN Electronic Journal 2020.

- Prabhakar; Dutta, M. Prediction of Software Effort Using Artificial Neural Network and Support Vector Machine. International Journal of Advanced Research in Computer Science and Software Engineering 2013, 3(3).

- Elyassami, S. Investigating Effort Prediction of Software Projects on the ISBSG Dataset. International Journal of Artificial Intelligence & Applications 2012, 3(2), 121–132.

- Tronto, I. F. de Barcelos; da Silva, J. D. S.; Sant’Anna, N. Comparison of artificial neural network and regression models in software effort estimation. In 2007 International Joint Conference on Neural Networks; IEEE, 2007.

- Benala, T. R.; Dehuri, S.; Mall, R.; ChinnaBabu, K. Software Effort Prediction Using Unsupervised Learning (Clustering) and Functional Link Artificial Neural Network. In World Congress on Information and Technology (WCIT), 2012.

- Leal, L. Q.; Fagundes, R. AA; de Souza, R. MCR; Moura, H. P.; Gusmão, C. MG. Nearest-neighborhood linear regression in an application with software effort estimation. In 2009 IEEE International Conference on Systems, Man and Cybernetics; IEEE, 2009.

- Ferrucci, F.; Gravino, C.; Oliveto, R.; Sarro, F. Genetic programming for effort estimation: An analysis of the impact of different fitness functions. In 2nd International Symposium on Search-Based Software Engineering; IEEE, 2010.

- Nassif, A. B.; Capretz, L. F.; Ho, D. A Comparison Between Decision Trees and Decision Tree Forest Models for Software Development Effort Estimation. In International Conference on Communications and Information Technology (ICCIT), 2013.

- Dave, V. S.; Dutta, K. Neural network-based software effort estimation & evaluation criterion MMRE. In 2011 2nd International Conference on Computer and Communication Technology (ICCCT-2011); IEEE, 2011.

- Attarzadeh, I.; Ow, S. H. Proposing a new software cost estimation model based on artificial neural networks. In 2010 2nd International Conference on Computer Engineering and Technology; IEEE, 2010.

- Hidmi, O.; Sakar, B. E. Software development effort estimation using ensemble machine learning. Int. J. Comput. Commun. Instrum. Eng. 2017, 4(1), 143–147.

- Hosni, M.; Idri, A.; Nassif, A. B.; Abran, A. Heterogeneous ensembles for software development effort estimation. In 2016 3rd International Conference on Soft Computing & Machine Intelligence (ISCMI); IEEE, 2016.

- Shukla, S.; Kumar, S.; Bal, P. R. Analyzing effect of ensemble models on multi-layer perceptron network for software effort estimation. In 2019 IEEE World Congress on Services (SERVICES); vol. 2642, pp. 386–387, 2019.

- Elish, M. O. Assessment of voting ensemble for estimating software development effort. In 2013 IEEE Symposium on Computational Intelligence and Data Mining (CIDM); IEEE, 2013, pp. 316–321.

- Jorgensen, M. What We Do and Don’t Know about Software Development Effort Estimation. IEEE Software 2014, 31(2), 37–40.

- Kaur, T. A Review on Cost Estimation Models for Effort Estimation. Int. J. Sci. Eng. Res. 2015, 6(5), 179–183.

- Huang, Y.; Li, F.; Xie, M. An empirical analysis of data preprocessing for machine learning-based software cost estimation. Information and Software Technology 2015, 67, 108–127.

- Dolado, J. J.; Fernandez, L. Genetic programming, neural networks and linear regression in software project estimation. In Proceedings of International Conference on Software Process Improvement, Research, Education and Training; 1998; pp. 157–171.

- Abdelali, Z.; Mustapha, H.; Abdelwahed, N. Investigating the use of random forest in software effort estimation. Procedia Computer Science 2019, 148, 343–352.

- Shon, T.; Moon, J. A hybrid machine learning approach to network anomaly detection. Information Sciences 2007, 177, 3799–3821.

- Kumar, P. S.; Behera, H. S.; Nayak, J.; Naik, B. A pragmatic ensemble learning approach for effective software effort estimation. Innovations in Systems and Software Engineering 2022, 18(2), 283–299.

- Denard, S.; Ertas, A.; Mengel, S.; Ekwaro-Osire, S. Development cycle modeling: Resource estimation. Applied Sciences 2020, 10(14), 5013.

- Grimstad, S.; Jørgensen, M. Inconsistency of expert judgment-based estimates of software development effort. Journal of Systems and Software 2007, 80(11), 1770–1777.

- López-Martín, C.; Yáñez-Márquez, C.; Gutiérrez-Tornés, A. Predictive accuracy comparison of fuzzy models for software development effort of small programs. Journal of Systems and Software 2008, 81(6), 949–960.

- Tronto, I. F. de Barcelos; da Silva, J. D. S.; Sant’Anna, N. An investigation of artificial neural networks based prediction systems in software project management. Journal of Systems and Software 2008, 81(3), 356–367.

- Albrecht, A. J.; Gaffney, J. E. Software function, source lines of code, and development effort prediction: A software science validation. IEEE Transactions on Software Engineering 1983, 6, 639–648.

- Braga, P. L.; Oliveira, A. L. I.; Ribeiro, G. H. T.; Meira, S. R. L. Bagging predictors for estimation of software project effort. In 2007 International Joint Conference on Neural Networks; IEEE, 2007, pp. 1595–1600.

- Başkeleş, B.; Turhan, B.; Bener, A. Software Effort Estimation Using Machine Learning Methods. In 2007 22nd International Symposium on Computer & Information Sciences; 2007.

- Garcia-Diaz, N.; Lopez-Martin, C.; Chavoya, A. A comparative study of two fuzzy logic models for software development effort estimation. Procedia Technology 2013, 7, 305–314.

- Heiat, A. Comparison of artificial neural network and regression models for estimating software development effort. Information and Software Technology 2002, 44(15), 911–922.

- Pendharkar, P. C.; Subramanian, G. H.; Rodger, J. A. A probabilistic model for predicting software development effort. IEEE Transactions on Software Engineering 2005, 31(7), 615–624.

- Chiu, N. H.; Huang, S. J. The adjusted analogy-based software effort estimation based on similarity distances. Journal of Systems and Software 2007, 80(4), 628–640.

- Burgess, C. J.; Lefley, M. Can genetic programming improve software effort estimation? A comparative evaluation. Information and Software Technology 2001, 43(14), 863–873.

- Oliveira, A. L. Estimation of software project effort with support vector regression. Neurocomputing 2006, 69(13-15), 1749–1753.

- Idri, A.; Abran, A.; Khoshgoftaar, T. M. Estimating software project effort by analogy based on linguistic values. In Proceedings Eighth IEEE Symposium on Software Metrics; June 2002; pp. 21–30.

- Kocaguneli, E.; Kultur, Y.; Bener, A. Combining multiple learners induced on multiple datasets for software effort prediction. In International Symposium on Software Reliability Engineering (ISSRE); November 2009.

- Pandey, P. Analysis of the Techniques for Software Cost Estimation. In 2013 Third International Conference on Advanced Computing and Communication Technologies (ACCT); Rohtak, India, 2013.

- Başkeleş, B.; Turhan, B.; Bener, A. Software Effort Estimation Using Machine Learning Methods. In 2007 22nd International Symposium on Computer & Information Sciences; 2007.

- Lv, H.; Tang, H. Machine learning methods and their application research. In 2011 2nd International Symposium on Intelligence Information Processing and Trusted Computing; IEEE, 2011, pp. 108–110.

- Kotsiantis, S. B.; Zaharakis, I.; Pintelas, P. Supervised machine learning: A review of classification techniques. Emerging Artificial Intelligence Applications in Computer Engineering 2007, 160(1), 3–24.

- Saravanan, R.; Sujatha, P. A state of art techniques on machine learning algorithms: A perspective of supervised learning approaches in data classification. In 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS); IEEE, 2018, pp. 945–949.

- Hosni, M.; Idri, A.; Abran, A.; Nassif, A. B. On the value of parameter tuning in heterogeneous ensembles effort estimation. Soft Computing 2018, 22, 5977–6010.

- Wu, J.; Gao, S. Software Productivity Estimation by Regression and Naive-Bayes Classifier-An Empirical Research. In International Conference on Promotion of Information Technology (ICPIT 2016); August 2016; pp. 20–24.

- Brown, G.; Wyatt, J. L.; Tino, P. Managing diversity in regression ensembles. Journal of Machine Learning Research 2005, 6, 1621–1650.

- Visalakshi, S.; Radha, V. A literature review of feature selection techniques and applications: Review of feature selection in data mining. In 2014 IEEE International Conference on Computational Intelligence and Computing Research; IEEE, 2014, pp. 1–6.

- Rana, P.; St-Onge, B.; Prieur, J. F.; Budei, B. C.; Tolvanen, A.; Tokola, T. Effect of feature standardization on reducing the requirements of field samples for individual tree species classification using ALS data. ISPRS Journal of Photogrammetry and Remote Sensing 2022, 184, 189–202.

- Van Buuren, S.; Groothuis-Oudshoorn, K. Mice: Multivariate imputation by chained equations in R. Journal of Statistical Software 2011, 45, 1–67.

- Stekhoven, D. J.; Bühlmann, P. MissForest—non-parametric missing value imputation for mixed-type data. Bioinformatics 2012, 28(1), 112–118.

- Abd Elmegaly, A. A. Evaluation of Expectation Maximization and Full Information Maximum Likelihood as Handling Techniques for Missing Data. 2022.

- Aljuaid, T.; Sasi, S. Proper imputation techniques for missing values in data sets. In 2016 International Conference on Data Science and Engineering (ICDSE); IEEE, 2016, pp. 1–5.

- Sayyad Shirabad, J.; Menzies, T. J. The PROMISE Repository of Software Engineering Databases. School of Information Technology and Engineering, University of Ottawa, Canada, 2005.

- Nguyen, T.; Khadka, R.; Phan, N.; Yazidi, A.; Halvorsen, P.; Riegler, M. A. Combining datasets to improve model fitting. In 2023 International Joint Conference on Neural Networks (IJCNN); IEEE, 2023, pp. 1–9.

| Dataset name | Source repository | Number of features | Number of projects | Effort unit (output feature) | Reference |
|---|---|---|---|---|---|
| COCOMO81 | Promise | 17 | 63 | Person-months | [1,4,6,11,12,14] |
| COCOMO NASA 1 | Promise | 17 | 63 | Person-months | [1,2,4,16,19] |
| COCOMO NASA 2 | Promise | 24 | 93 | Person-months | [1,5,17] |
| Maxwell | GitHub | 27 | 62 | Person-hours | [20] |
| Desharnais | GitHub | 9 | 81 | Person-hours | [8,14,15,18,20,23] |
| Desharnais-1-1 | GitHub | 12 | 81 | Person-hours | [8] |
| China | GitHub | 16 | 499 | Person-hours | [9] |
| Albrecht | GitHub | 8 | 24 | Person-hours | [4,19,21] |
| Belady | GitHub | 2 | 32 | Person-hours | [25] |
| Boehm | GitHub | 2 | 62 | Person-hours | [25] |
| Kitchenham | GitHub | 4 | 145 | Person-hours | [24] |

| COCOMO81 Features | Description | FS | Data Type |
|---|---|---|---|
| Rely | Describes the required reliability of the application. | | Numeric |
| Data | Describes the size of the database. | ✓ | Numeric |
| IntComplx | Describes the process complexity. | | Numeric |
| Time | Describes the central processing unit (CPU) time constraint. | ✓ | Numeric |
| Stor | Describes the main memory constraint. | ✓ | Numeric |
| Virt | Describes the machine volatility. | | Numeric |
| Turn | Describes the turnaround time. | | Numeric |
| Acap | Describes the analysts’ capability. | | Numeric |
| aexp | Describes the application experience. | | Numeric |
| Pcap | Describes the programmers’ capability. | | Numeric |
| vepx | Describes the virtual machine experience. | | Numeric |
| lexp | Describes the programming language experience. | | Numeric |
| Modp | Describes the use of modern programming practices. | | Numeric |
| Tool | Describes the use of software tools. | ✓ | Numeric |
| Sced | Describes the schedule constraint. | | Numeric |
| loc | Describes the number of lines of source code. | ✓ | Numeric |
| Effort | Describes the actual effort in "person-months". | ✓ | Numeric |

| COCOMO Nasa-I Features | Description | FS | Data Type |
|---|---|---|---|
| Rely | Describes the required reliability of the application. | | Numeric |
| Data | Describes the size of the database. | ✓ | Numeric |
| IntComplx | Describes the process complexity. | | Numeric |
| Time | Describes the central processing unit (CPU) time constraint. | ✓ | Numeric |
| Stor | Describes the main memory constraint. | ✓ | Numeric |
| Virt | Describes the machine volatility. | | Numeric |
| Turn | Describes the turnaround time. | | Numeric |
| Acap | Describes the analysts’ capability. | | Numeric |
| aexp | Describes the application experience. | | Numeric |
| Pcap | Describes the programmers’ capability. | | Numeric |
| vepx | Describes the virtual machine experience. | | Numeric |
| lexp | Describes the programming language experience. | | Numeric |
| Modp | Describes the use of modern programming practices. | | Numeric |
| Tool | Describes the use of software tools. | ✓ | Numeric |
| Sced | Describes the schedule constraint. | | Numeric |
| loc | Describes the number of lines of source code. | ✓ | Numeric |
| Effort | Describes the actual effort in "person-months". | ✓ | Numeric |

| COCOMO Nasa-II Features | Description | FS | Data Type |
|---|---|---|---|
| id | Describes the project ID. | | Numeric |
| ProjectNam | Describes the project name. | | Ordinal |
| mode | Describes the development mode. | | Ordinal |
| year | Describes the year of development. | | Numeric |
| cat2 | Describes the category of application. | | Ordinal |
| center | Describes which NASA center. | | Ordinal |
| forg | Describes the flight or ground system. | | Ordinal |
| Rely | Describes the required reliability of the application. | | Numeric |
| Data | Describes the size of the database. | ✓ | Numeric |
| IntComplx | Describes the process complexity. | | Numeric |
| Time | Describes the central processing unit (CPU) time constraint. | ✓ | Numeric |
| Stor | Describes the main memory constraint. | ✓ | Numeric |
| Virt | Describes the machine volatility. | | Numeric |
| Turn | Describes the turnaround time. | | Numeric |
| Acap | Describes the analysts’ capability. | | Numeric |
| aexp | Describes the application experience. | | Numeric |
| Pcap | Describes the programmers’ capability. | | Numeric |
| vepx | Describes the virtual machine experience. | | Numeric |
| lexp | Describes the programming language experience. | | Numeric |
| Modp | Describes the use of modern programming practices. | | Numeric |
| Tool | Describes the use of software tools. | ✓ | Numeric |
| Sced | Describes the schedule constraint. | | Numeric |
| loc | Describes the number of lines of source code. | ✓ | Numeric |
| Effort | Describes the actual effort in "person-months". | ✓ | Numeric |

| KITCHENHAM Features | Description | FS | Data Type |
|---|---|---|---|
| Actual. duration | Describes the project duration. | | Numeric |
| Adjusted function points | Describes the adjusted function point count. | | Numeric |
| First. Estimate | Describes the first estimate for the project. | ✓ | Numeric |
| Actual. effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| DESHARNAIS Features | Description | FS | Data Type |
|---|---|---|---|
| TeamExp | Describes the project team’s experience. | | Numeric |
| ManagerExp | Describes the project manager’s experience. | | Numeric |
| YearEnd | Describes the year in which the project ended. | | Numeric |
| Transactions | Describes the number of transactions processed. | ✓ | Numeric |
| Entities | Describes the number of entities in the system’s data model. | | Numeric |
| PointsAdjust | Describes the adjusted function points. | ✓ | Numeric |
| Envergure | Describes the environment for the project. | | Numeric |
| Language | Describes the programming language used for the project. | | Ordinal |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| DESHARNAIS_1_1 Features | Description | FS | Data Type |
|---|---|---|---|
| ID | Describes the project ID. | | Numeric |
| TeamExp | Describes the project team’s experience. | | Numeric |
| ManagerExp | Describes the project manager’s experience. | | Numeric |
| YearEnd | Describes the year in which the project ended. | | Numeric |
| Transactions | Describes the number of transactions processed. | ✓ | Numeric |
| Entities | Describes the number of entities in the system’s data model. | | Numeric |
| PointsAdjust | Describes the adjusted function points. | ✓ | Numeric |
| PointsnonAdjust | Describes the unadjusted function points (Transactions and Entities). | ✓ | Numeric |
| Length | Describes the actual project schedule in months. | | Numeric |
| Language | Describes the programming language used for the project. | | Ordinal |
| Adjustment | Describes the function point adjustment factor for complexity. | | Numeric |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| MAXWELL Features | Description | FS | Data Type |
|---|---|---|---|
| Size | Describes the application size. | ✓ | Numeric |
| Duration | Describes the project duration. | ✓ | Numeric |
| Time | Describes the time taken. | | Numeric |
| Year | Describes the year of development. | | Numeric |
| app | Describes the application type. | | Numeric |
| har | Describes the required hardware platform. | | Numeric |
| dba | Describes the project’s database. | | Numeric |
| ifc | Describes the user interface. | | Numeric |
| Source | Describes the conditions under which the software was developed. | | Numeric |
| nlan | Describes the number of languages used. | | Numeric |
| telonuse | Describes whether Telon was used for the project. | | Numeric |
| T01 | Describes the customer participation. | | Numeric |
| T02 | Describes the adequacy of the development environment. | | Numeric |
| T03 | Describes the staff availability. | | Numeric |
| T04 | Describes the standards used. | | Numeric |
| T05 | Describes the methods used. | | Numeric |
| T06 | Describes the tools used. | | Numeric |
| T07 | Describes the logical complexity of the software. | | Numeric |
| T08 | Describes the requirements volatility. | | Numeric |
| T09 | Describes the quality requirements. | | Numeric |
| T10 | Describes the efficiency requirements. | | Numeric |
| T11 | Describes the installation requirements. | | Numeric |
| T12 | Describes the staff’s analysis skills. | | Numeric |
| T13 | Describes the staff’s application knowledge. | | Numeric |
| T14 | Describes the staff’s tool skills. | | Numeric |
| T15 | Describes the staff’s team skills. | ✓ | Numeric |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| BELADY Features | Description | FS | Data Type |
|---|---|---|---|
| Size | Describes the application size. | ✓ | Numeric |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| BOEHM Features | Description | FS | Data Type |
|---|---|---|---|
| Size | Describes the application size. | ✓ | Numeric |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| CHINA Features | Description | FS | Data Type |
|---|---|---|---|
| AFP | Describes the adjusted function points. | | Numeric |
| Input | Describes the function points of input. | | Numeric |
| Output | Describes the function points of output. | | Numeric |
| Inquiry | Describes the function points of external inquiries. | | Numeric |
| Files | Describes the function points of internal logical files. | | Numeric |
| Interface | Describes the function points of added external interfaces. | | Numeric |
| Added | Describes the function points of added functions. | | Numeric |
| Changed | Describes the function points of changed functions. | | Numeric |
| Resource | Describes the team type for the project. | ✓ | Numeric |
| Duration | Describes the project duration. | | Numeric |
| PDR_AFP | Describes the productivity delivery rate (adjusted function points). | | Numeric |
| PDR_UFP | Describes the productivity delivery rate (unadjusted function points). | | Numeric |
| NPDR_AFP | Describes the normalized productivity delivery rate (adjusted function points). | | Numeric |
| NPDU_UFP | Describes the normalized productivity delivery rate (unadjusted function points). | | Numeric |
| N-Effort | Describes the normalized effort. | ✓ | Numeric |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| ALBRECHT Features | Description | FS | Data Type |
|---|---|---|---|
| Input | Describes the number of inputs a program must handle. | | Numeric |
| Output | Describes the number of outputs a program generates. | ✓ | Numeric |
| Inquiry | Describes the number of queries an application must respond to. | ✓ | Numeric |
| Files | Describes the number of files the program reads from or writes to. | | Numeric |
| FPAdj | Describes the function point adjustment factor. | | Numeric |
| RawFPcounts | Describes the raw function point count. | ✓ | Numeric |
| AdjFP | Describes the adjusted function points derived from the raw function points. | ✓ | Numeric |
| Effort | Describes the actual effort in person-hours. | ✓ | Numeric |

| M-COCOMO Features | Description | Data Type |
|---|---|---|
| Data | Describes the database size. | Numeric |
| Time | Describes the CPU time constraint. | Numeric |
| Stor | Describes the main memory constraint. | Numeric |
| Tool | Describes the use of software tools. | Numeric |
| loc | Describes the number of lines of source code. | Numeric |
| Effort | Describes the actual effort in person-months. | Numeric |

| HPD Features | Description | Data Type |
|---|---|---|
| Resource | Describes the team type for the project. | Numeric |
| Output | Describes the number of outputs a program generates. | Numeric |
| Enquiry | Describes the number of queries an application must respond to. | Numeric |
| Team Exp | Describes the team experience in years. | Numeric |
| First Estimate | Describes the first estimate for the project. | Numeric |
| AFP | Describes the adjusted function points. | Numeric |
| Non-AFP | Describes the unadjusted function points. | Numeric |
| Transaction | Describes the number of transactions processed. | Numeric |
| Size | Describes the application size. | Numeric |
| Duration | Describes the project duration. | Numeric |
| N-effort | Describes the normalized effort. | Numeric |
| Effort | Describes the actual effort in person-hours. | Numeric |

| M-COCOMO Features | Description | Data Type |
|---|---|---|
| Rely | Describes the required software reliability. | Numeric |
| Data | Describes the database size. | Numeric |
| IntComplx | Describes the process complexity. | Numeric |
| Time | Describes the central processing unit (CPU) time constraint. | Numeric |
| Stor | Describes the main memory constraint. | Numeric |
| Virt | Describes the machine volatility. | Numeric |
| Turn | Describes the turnaround time. | Numeric |
| Acap | Describes the analysts’ capability. | Numeric |
| aexp | Describes the team’s application experience. | Numeric |
| Pcap | Describes the programmers’ capability. | Numeric |
| vepx | Describes the team’s virtual machine experience. | Numeric |
| lexp | Describes the team’s programming language experience. | Numeric |
| Modp | Describes the use of modern programming practices. | Numeric |
| Tool | Describes the use of software tools. | Numeric |
| Sced | Describes the schedule constraint. | Numeric |
| loc | Describes the number of lines of source code. | Numeric |
| Effort | Describes the actual effort in person-months. | Numeric |

| HPD Features | Description | Data Type |
|---|---|---|
| Input | Describes the number of inputs a program must handle. | Numeric |
| Output | Describes the number of outputs a program generates. | Numeric |
| File | Describes the number of files the program reads from or writes to. | Numeric |
| First Estimate | Describes the first estimate for the project. | Numeric |
| AFP | Describes the adjusted function points. | Numeric |
| N-effort | Describes the normalized effort. | Numeric |
| Effort | Describes the actual effort in person-hours. | Numeric |
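
The merged M-COCOMO and HPD tables above list features drawn from several source datasets. As a rough illustration of how a merged table of this kind could be assembled, the sketch below stacks rows over the union of column names and fills the resulting gaps with column means. Everything in it is an assumption made for illustration: the function names, the toy values, and the mean-imputation step are hypothetical and are not taken from the paper, whose actual imputation procedure may differ.

```python
from statistics import mean


def stack_with_union(datasets):
    """Stack rows from several datasets over the union of their column names."""
    columns = []
    for rows in datasets:
        for row in rows:
            for name in row:
                if name not in columns:
                    columns.append(name)  # keep first-seen column order
    # A feature absent from a source dataset becomes None (missing).
    stacked = [{name: row.get(name) for name in columns}
               for rows in datasets for row in rows]
    return columns, stacked


def impute_column_means(columns, rows):
    """Fill each missing value with the mean of the observed values in its column."""
    for name in columns:
        observed = [row[name] for row in rows if row[name] is not None]
        if not observed:
            continue  # no observed value to derive a fill from
        fill = mean(observed)
        for row in rows:
            if row[name] is None:
                row[name] = fill
    return rows


# Toy values invented for illustration only; they are not taken from any dataset.
size_effort_rows = [{"Size": 10.0, "Effort": 100.0}, {"Size": 20.0, "Effort": 300.0}]
output_effort_rows = [{"Output": 5.0, "Effort": 200.0}]

columns, merged = stack_with_union([size_effort_rows, output_effort_rows])
merged = impute_column_means(columns, merged)
print(columns)
print(merged[2])
```

Mean imputation is used here only because it is the simplest choice to read; any other imputer could be swapped into `impute_column_means` without changing the stacking step.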

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0158 | 0.0671 | 53.2419 % | 56.3547 % | 0.9578 |
| AdaBoost | 0.0159 | 0.0589 | 46.9494 % | 48.5212 % | 0.9998 |
| Voting | 0.0143 | 0.0565 | 44.3188 % | 44.5415 % | 0.9998 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0214 | 0.0819 | 53.6069 % | 58.0754 % | 0.9759 |
| AdaBoost | 0.0039 | 0.0356 | 9.7092 % | 25.2217 % | 0.9996 |
| Voting | 0.0168 | 0.0640 | 42.0561 % | 45.3716 % | 0.9995 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0143 | 0.0661 | 53.8579 % | 57.3016 % | 0.9578 |
| AdaBoost | 0.0013 | 0.0228 | 5.5397 % | 19.7348 % | 0.9998 |
| Voting | 0.0109 | 0.0502 | 40.9738 % | 43.5082 % | 0.9998 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0131 | 0.0615 | 53.5125 % | 55.7224 % | 0.9179 |
| AdaBoost | 0.009 | 0.0419 | 36.7875 % | 37.9658 % | 0.9999 |
| Voting | 0.0115 | 0.0519 | 47.0259 % | 47.0276 % | 0.9999 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0094 | 0.0454 | 36.6104 % | 40.0959 % | 0.9164 |
| AdaBoost | 0.0094 | 0.0421 | 36.7417 % | 37.1824 % | 0.9999 |
| Voting | 0.0102 | 0.0463 | 41.9686 % | 41.9719 % | 0.9999 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0347 | 0.102 | 55.6538 % | 57.7707 % | 0.8922 |
| AdaBoost | 0.0023 | 0.0313 | 3.6618 % | 17.7461 % | 0.9996 |
| Voting | 0.0305 | 0.0878 | 48.8484 % | 49.7202 % | 0.9996 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0182 | 0.0771 | 56.4441 % | 60.7297 % | 0.8685 |
| AdaBoost | 0.00643 | 0.0488 | 19.7554 % | 38.4640 % | 0.9989 |
| Voting | 0.0176 | 0.0727 | 54.4594 % | 57.2534 % | 0.9989 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0076 | 0.0467 | 53.5633 % | 55.4589 % | 0.9936 |
| AdaBoost | 0.0003 | 0.0101 | 1.9578 % | 12.0124 % | 0.9999 |
| Voting | 0.0071 | 0.0423 | 49.8529 % | 50.2115 % | 0.9999 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0172 | 0.0705 | 54.0836 % | 55.9919 % | 0.9615 |
| AdaBoost | 0.0118 | 0.0481 | 37.2787 % | 38.1860 % | 0.9999 |
| Voting | 0.0140 | 0.0556 | 44.0882 % | 44.1649 % | 0.9999 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0429 | 0.1116 | 53.7217 % | 55.6905 % | 0.9892 |
| AdaBoost | 0.0299 | 0.0764 | 37.3913 % | 38.2528 % | 0.9999 |
| Voting | 0.0202 | 0.0505 | 25.2501 % | 25.2845 % | 0.9999 |

| Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|
| Bagging | 0.0015 | 0.0183 | 36.1662 % | 40.3380 % | 0.9938 |
| AdaBoost | 0.0001 | 0.0051 | 1.4717 % | 11.1515 % | 0.9998 |
| Voting | 0.0021 | 0.0229 | 49.9593 % | 50.2752 % | 0.9998 |

| Method | Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|---|
| ComImp | Bagging | 0.0066 | 0.0443 | 43.5009 % | 49.6498 % | 0.9320 |
| ComImp | AdaBoost | 0.0009 | 0.0183 | 6.1038 % | 20.948 % | 0.9999 |
| ComImp | Voting | 0.0057 | 0.0357 | 37.3736 % | 40.9089 % | 0.9999 |
| PCA-ComImp | Bagging | 0.0069 | 0.0429 | 45.1777 % | 49.1607 % | 0.9359 |
| PCA-ComImp | AdaBoost | 0.0002 | 0.0085 | 1.0243 % | 9.7135 % | 0.9999 |
| PCA-ComImp | Voting | 0.0038 | 0.0276 | 24.801 % | 31.697 % | 0.9999 |

| Method | Model | MAE | RMSE | RAE | RRSE | CC |
|---|---|---|---|---|---|---|
| ComImp | Bagging | 0.0008 | 0.0135 | 36.2509 % | 39.5167 % | 0.9978 |
| ComImp | AdaBoost | 0.0122 | 0.0494 | 37.2849 % | 38.5657 % | 0.9997 |
| ComImp | Voting | 0.0004 | 0.0054 | 15.6635 % | 15.6637 % | 0.9999 |
| PCA-ComImp | Bagging | 0.0008 | 0.0135 | 36.0581 % | 39.5376 % | 0.9972 |
| PCA-ComImp | AdaBoost | 0.0001 | 0.0002 | 1.5617 % | 10.1143 % | 0.9999 |
| PCA-ComImp | Voting | 0.0008 | 0.0114 | 32.986 % | 33.1954 % | 0.9999 |
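
The result tables above report MAE, RMSE, RAE, RRSE, and CC for each ensemble. The following sketch shows one common way to compute these five measures. It assumes the usual definitions, in which RAE and RRSE are normalized by the error of a baseline that always predicts the mean of the actual values, and CC is the Pearson correlation between actual and predicted values. The function name and the sample values are hypothetical and are not taken from the paper.

```python
import math


def regression_metrics(actual, predicted):
    """Return MAE, RMSE, RAE (%), RRSE (%), and Pearson CC for two equal-length lists."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n

    abs_err = [abs(p - a) for a, p in zip(actual, predicted)]
    sq_err = [(p - a) ** 2 for a, p in zip(actual, predicted)]

    # Errors of the baseline that always predicts the mean of the actual values.
    base_abs = sum(abs(a - mean_a) for a in actual)
    base_sq = sum((a - mean_a) ** 2 for a in actual)

    pred_sq = sum((p - mean_p) ** 2 for p in predicted)
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))

    return {
        "MAE": sum(abs_err) / n,
        "RMSE": math.sqrt(sum(sq_err) / n),
        "RAE": 100.0 * sum(abs_err) / base_abs,
        "RRSE": 100.0 * math.sqrt(sum(sq_err) / base_sq),
        "CC": cov / math.sqrt(base_sq * pred_sq),
    }


# Hypothetical values: every prediction is off by the same constant.
actual = [1.0, 2.0, 3.0, 4.0]
shifted = [a + 1.0 for a in actual]
metrics = regression_metrics(actual, shifted)
print(metrics)
```

In this example the constant offset leaves CC at 1 while RAE reaches 100%, which illustrates that CC measures only linear association and should be read alongside the error columns rather than on its own.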

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).