1. Introduction

1.1. Modelling Human Emotions

A mathematical model of human emotion processing in group settings, focusing on how individuals receive and react to emotions arising from events, was developed in [1]. It models emotions using a one-dimensional random walk or Wiener process and represents them by fixed probability distributions. The author demonstrates that, when individuals form a group, the distribution of emotions can also be represented by a fixed distribution, providing insights into emotional responses and behaviours within social dynamics.

The works [1,2,3,4,5,6,7] investigated the mathematical modelling of human emotions and decision-making processes within group dynamics. They highlight the application of decision field theory to understanding how individuals make decisions, and the use of mathematical models in psychology and other fields to study dynamic events and decision-making processes. The research in [1] aims to provide insights into human behaviours and emotional responses within social contexts through mathematical analysis and modelling. While mathematical models have been applied in experimental psychology and Bayesian modelling, there appears to be a gap in integrating mathematical methods with the study of human emotions and behaviours, which points to a potential area for further research in psychology.

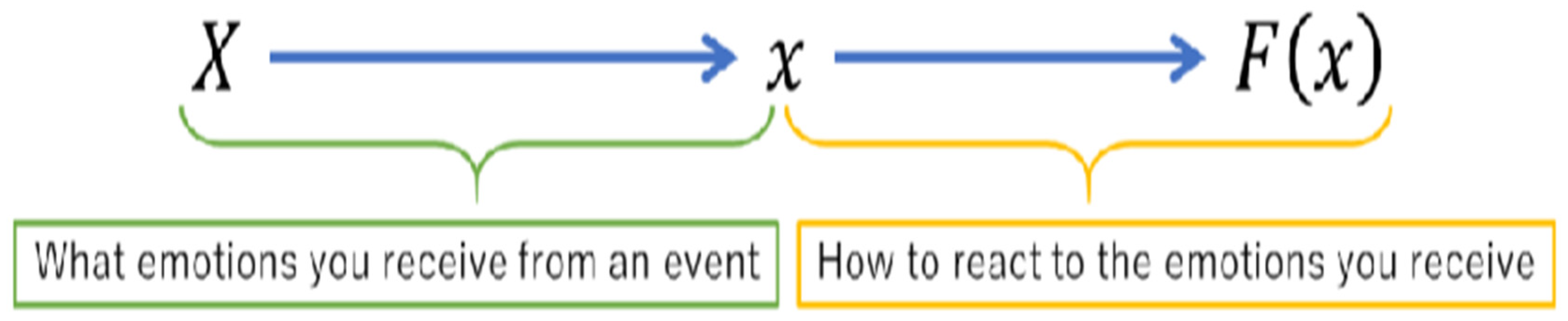

Consider the hypothetical scenario of [1], in which an event triggers an emotion, which then leads to a reaction. This relationship is illustrated in Figure 1, which shows how events, emotions, and reactions are interconnected. The author of [1] suggested that individuals determine whether an event produces positive or negative emotions and adjust their emotional state towards a stable, neutral state (zero emotion) through a series of reactions and emotional responses, as illustrated by Figure 1 (cf. [1]).

In the context of personal emotion processing [1], individuals determine whether events elicit positive or negative emotions and whether they lead to large or small emotional or behavioural responses. The process starts from a random emotion value and repeatedly adds +1 or -1, aiming for emotional balance at 0, which represents stability and emotionlessness. This one-dimensional random-walk model suggests that emotions are processed by successively adding or subtracting unit values, with the mean emotion value being 0 and the variance increasing with the number of processing iterations.

In other words, emotion processing can be likened to a one-dimensional random walk in which positive and negative unit emotions each occur with probability 1/2 and are summed to obtain the total emotion. In this scenario, the mean (expected value) of the emotion is 0, indicating a balance between positive and negative emotions, while the variance measures the spread of emotions experienced during the process. This perspective provides a mathematical framework for understanding how emotions may fluctuate and balance out over time.
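The stated mean and variance can be checked with a minimal simulation, assuming independent +1/-1 steps with probability 1/2 each and a hypothetical starting value of 0. After n steps the sample mean should stay near 0 and the sample variance should be close to n. The function name and walker counts are illustrative choices.

```python
import numpy as np

def simulate_emotion_walk(n_steps: int, n_walkers: int, seed: int = 0) -> np.ndarray:
    """Emotion value of each walker after n_steps symmetric +1/-1 increments, starting from 0."""
    rng = np.random.default_rng(seed)
    # Each increment is +1 or -1 with probability 1/2 (int8 keeps memory small).
    steps = rng.choice(np.array([-1, 1], dtype=np.int8), size=(n_walkers, n_steps))
    # Summing the increments gives the total emotion; NumPy widens int8 sums to the default int.
    return steps.sum(axis=1)

for n in (10, 100, 1000):
    final = simulate_emotion_walk(n, n_walkers=5_000)
    # Theory for the symmetric walk: mean 0, variance n.
    print(f"n={n:4d}  mean={final.mean():+.3f}  variance={final.var():.1f}")
```

Because every step has unit size, the variance grows by exactly 1 per step in expectation, which is why it tracks the number of processing iterations.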

Thus, an individual's reactions are determined by the events and emotions they experience [1]; once events and emotions are established, reactions follow as if looked up in a dictionary. Individuals cannot influence events or the type of emotions they receive, but they can influence their reactions through the initial emotional value assigned to an event. The magnitude of an emotion also shapes the corresponding reaction: strong positive emotions lead to favourable reactions, and strong negative emotions lead to unfavourable ones.

The model of [1] relates a stochastic differential equation to the Fokker–Planck equation governing the probability density function of a random-walk process. It introduces a Wiener process, represented by the equation:

where 𝛽 is a positive real number and the driving noise is a standard Brownian motion. The derived equations describe mathematically how the probability density function changes over time.

Thus, the model of [1] relates the change in emotions over time to a one-dimensional random walk. It introduces equations describing how emotions change per unit time and shows how these changes can be represented by partial differential equations, highlighting the role of 𝛽 in the model.
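As an illustration only, suppose the Wiener process takes the common form $dx = \beta\,dW(t)$ with $x(0)=0$; this form is an assumption, since the exact equation of [1] is not reproduced here. The emotion PDF is then Gaussian with mean 0 and variance $\beta^2 t$, and it should satisfy the Fokker–Planck (diffusion) equation $\partial p/\partial t = (\beta^2/2)\,\partial^2 p/\partial x^2$. The sketch below checks this numerically with central finite differences; `wiener_pdf` and `fokker_planck_residual` are hypothetical helper names.

```python
import numpy as np

def wiener_pdf(x: np.ndarray, t: float, beta: float) -> np.ndarray:
    """Gaussian PDF of x(t) = beta * W(t) with x(0) = 0: mean 0, variance beta**2 * t (assumed form)."""
    var = beta**2 * t
    return np.exp(-x**2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)

def fokker_planck_residual(t: float, beta: float, dx: float = 1e-3, dt: float = 1e-6) -> float:
    """Max |dp/dt - (beta**2 / 2) d2p/dx2| on a grid, using central differences in t and x."""
    x = np.arange(-5.0, 5.0, dx)
    dp_dt = (wiener_pdf(x, t + dt, beta) - wiener_pdf(x, t - dt, beta)) / (2.0 * dt)
    p = wiener_pdf(x, t, beta)
    d2p_dx2 = (p[2:] - 2.0 * p[1:-1] + p[:-2]) / dx**2
    # Drop the two end points of dp_dt so both arrays refer to the same interior grid.
    return float(np.max(np.abs(dp_dt[1:-1] - 0.5 * beta**2 * d2p_dx2)))

print(fokker_planck_residual(t=1.0, beta=0.8))  # small, limited by finite-difference error
```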

The time-dependent probability distribution of emotions during emotional processing describes how emotions evolve over time during the processing phase and gives the average distribution of emotions as the process unfolds. Following the mathematical treatment in [1], it reads:

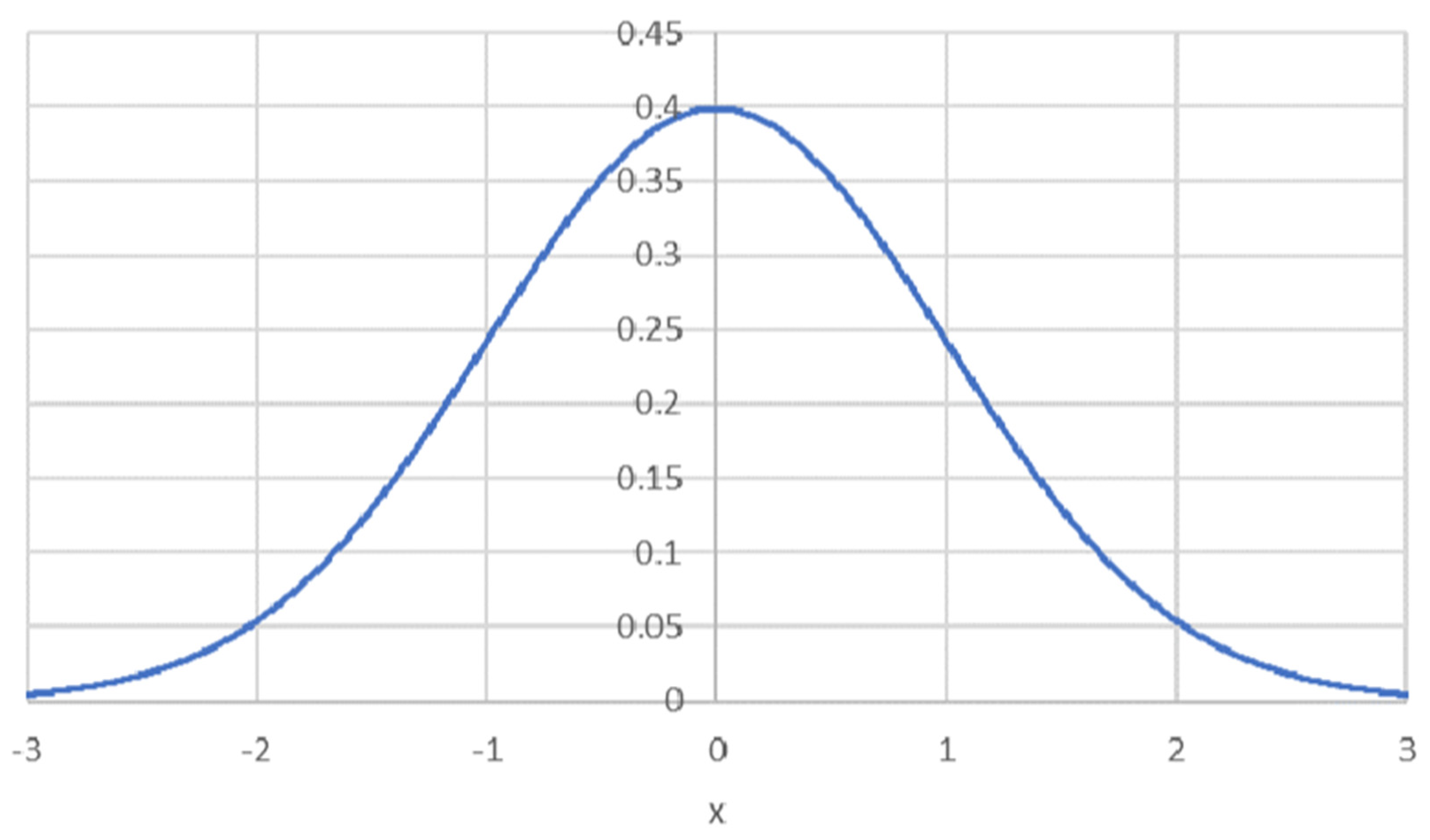

When individuals are grouped together [1], the emotion processing of the group is viewed as the average of the individual emotions. Accordingly, the probability density function (PDF) of the group's emotions reads:

which describes how emotions are distributed within the group. This approach simplifies the understanding of how emotions are processed collectively within a group, as visualized by Figure 2 (cf. [1]).
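The averaging rule can be sketched under two assumptions: the $N$ members are independent, and each member's emotion at time $t$ is Gaussian with mean 0 and variance $\beta^2 t$ (as for a Wiener process of the assumed form $x_i = \beta W_i(t)$). The group emotion is then the sample mean, whose variance is $\beta^2 t/N$, so averaging narrows the group distribution as $N$ grows. The function name and parameter values are illustrative.

```python
import numpy as np

def group_emotion_samples(n_members: int, t: float, beta: float,
                          n_groups: int, seed: int = 0) -> np.ndarray:
    """Sample the group emotion (mean of the members' emotions) at time t.

    Assumption: each member's emotion is beta * W(t), i.e. Gaussian with mean 0
    and variance beta**2 * t, independently of the other members.
    """
    rng = np.random.default_rng(seed)
    individual = rng.normal(0.0, beta * np.sqrt(t), size=(n_groups, n_members))
    return individual.mean(axis=1)  # group emotion = average of individual emotions

beta, t = 1.0, 4.0
for n_members in (1, 4, 16):
    g = group_emotion_samples(n_members, t, beta, n_groups=50_000)
    predicted = beta**2 * t / n_members
    print(f"N={n_members:2d}  empirical var={g.var():.3f}  predicted={predicted:.3f}")
```

Under these assumptions the group PDF is again Gaussian, which is consistent with the statement that the group can be described by a fixed distribution of the same type as the individual one.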

The author of [1] highlighted that individuals and groups share a common equation for expressing emotions, emphasizing the role of understanding social cues in preventing bullying and moral harassment. The same work [1] also suggested strategies for individuals to adapt to their surroundings, manage their reactions, and handle challenging situations effectively.

1.2. Information Length Theory

Shannon entropy is not the best descriptor of the statistical variations of a time series [8], which has strongly motivated the use of other information-theoretic measures, including Fisher information [9,10,11], differential entropy [12], the Kullback–Leibler divergence (KLD) [13], and information length (IL) [14,15,16]. The appeal of the IL metric lies in the fact that time-dependent PDFs make it possible to trace the evolution of a time series and to measure its variability [8]. Furthermore, [17,18,19,20,21,22,23] examine the computation of IL for linear autonomous stochastic processes. This broadens the scope of application, enabling IL to be used to predict abrupt events and facilitating its application in different engineering contexts.

1.3. IL as a Concept

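Mathematically speaking, if $x$ is an $n$th-order stochastic variable and $p(x,t)$ is a time-dependent PDF of $x$, then the Information Length $\mathcal{L}(t)$ corresponding to its evolution from the initial time $0$ to the final time $t$ reads:

$$\mathcal{L}(t)=\int_{0}^{t}\frac{dt_{1}}{\tau(t_{1})}=\int_{0}^{t}\sqrt{\mathcal{E}(t_{1})}\,dt_{1},\qquad \mathcal{E}(t)=\frac{1}{\tau^{2}(t)}=\int\frac{1}{p(x,t)}\left[\frac{\partial p(x,t)}{\partial t}\right]^{2}dx,$$

provided that $1/\tau(t)=\sqrt{\mathcal{E}(t)}$ serves as the root-mean-squared fluctuating energy rate.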

Looking more closely at (3), $\tau(t)$ serves as a dynamic time unit: it gives the correlation time over which changes in $p(x,t)$ take place [16]. Furthermore, $\tau(t)$ defines the time unit of the statistical space. Accordingly, $1/\tau(t)=\sqrt{\mathcal{E}(t)}$ quantifies the information velocity [18].
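To make the definition concrete, the following sketch evaluates $\mathcal{E}(t)=\int (\partial_t p)^2/p\,dx$ by finite differences and quadrature, and then $\mathcal{L}(t)=\int_0^t \sqrt{\mathcal{E}(t_1)}\,dt_1$ by the trapezoidal rule. As a test case it assumes a hypothetical Gaussian of fixed width $\sigma$ whose mean moves at constant speed $v$; then $\mathcal{E}=v^2/\sigma^2$ and $\mathcal{L}(t)=vt/\sigma$, i.e. the IL counts how many widths the PDF has travelled. The helper names are illustrative and not taken from the cited works.

```python
import numpy as np

def information_rate(pdf, x: np.ndarray, t: float, dt: float = 1e-5) -> float:
    """E(t) = integral of (dp/dt)^2 / p over x, with dp/dt from a central difference."""
    p = pdf(x, t)
    dp_dt = (pdf(x, t + dt) - pdf(x, t - dt)) / (2.0 * dt)
    mask = p > 1e-300  # skip points where p underflows to 0, avoiding division by zero
    dx = x[1] - x[0]
    return float(np.sum(dp_dt[mask] ** 2 / p[mask]) * dx)

def information_length(pdf, x: np.ndarray, t_final: float, n_t: int = 401) -> float:
    """L(t) = integral from 0 to t of sqrt(E(t1)) dt1, by the trapezoidal rule."""
    ts = np.linspace(0.0, t_final, n_t)
    rate = np.sqrt([information_rate(pdf, x, t) for t in ts])  # information velocity 1/tau
    return float(np.sum(0.5 * (rate[1:] + rate[:-1]) * np.diff(ts)))

def moving_gaussian(x, t, speed=0.5, sigma=0.2):
    """Hypothetical test PDF: fixed width sigma, mean moving at constant speed."""
    mu = speed * t
    return np.exp(-(x - mu) ** 2 / (2 * sigma**2)) / (np.sqrt(2 * np.pi) * sigma)

x = np.linspace(-3.0, 5.0, 8001)
print(information_length(moving_gaussian, x, t_final=2.0))  # analytic value: speed * t / sigma = 5
```

Because $\sqrt{\mathcal{E}}$ is constant in this test case, the time integration is exact and the remaining error comes only from the spatial quadrature and the finite-difference step.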

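To understand the meaning of $\mathcal{E}$, it is helpful to compute it for a mathematical model of a physical process. Consider the first-order stochastic process described by the Langevin equation

$$\frac{dx}{dt}=f(x,t)+\xi(t),$$

where $x$ is a random variable, $f(x,t)$ is a deterministic force, and $\xi$ is a short-correlated random force satisfying

$$\langle \xi(t)\rangle=0,\qquad \langle \xi(t)\,\xi(t')\rangle=2D\,\delta(t-t'),$$

where $D$ is the amplitude (temperature) of the random force $\xi$.

It should be noted that equation (7) is widely used to describe the motion of a particle in a harmonic potential, for which

$$f(x,t)=-\gamma x,\qquad \gamma>0.$$

Following [23,24], for a Gaussian PDF one obtains

$$\mathcal{E}(t)=\frac{1}{\sigma^{2}(t)}\left(\frac{d\mu}{dt}\right)^{2}+\frac{1}{2\sigma^{4}(t)}\left(\frac{d\sigma^{2}}{dt}\right)^{2},$$

provided that $\mu(t)=\langle x\rangle$ and $\sigma^{2}(t)=\langle (x-\langle x\rangle)^{2}\rangle$ denote the mean and variance of $x$.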

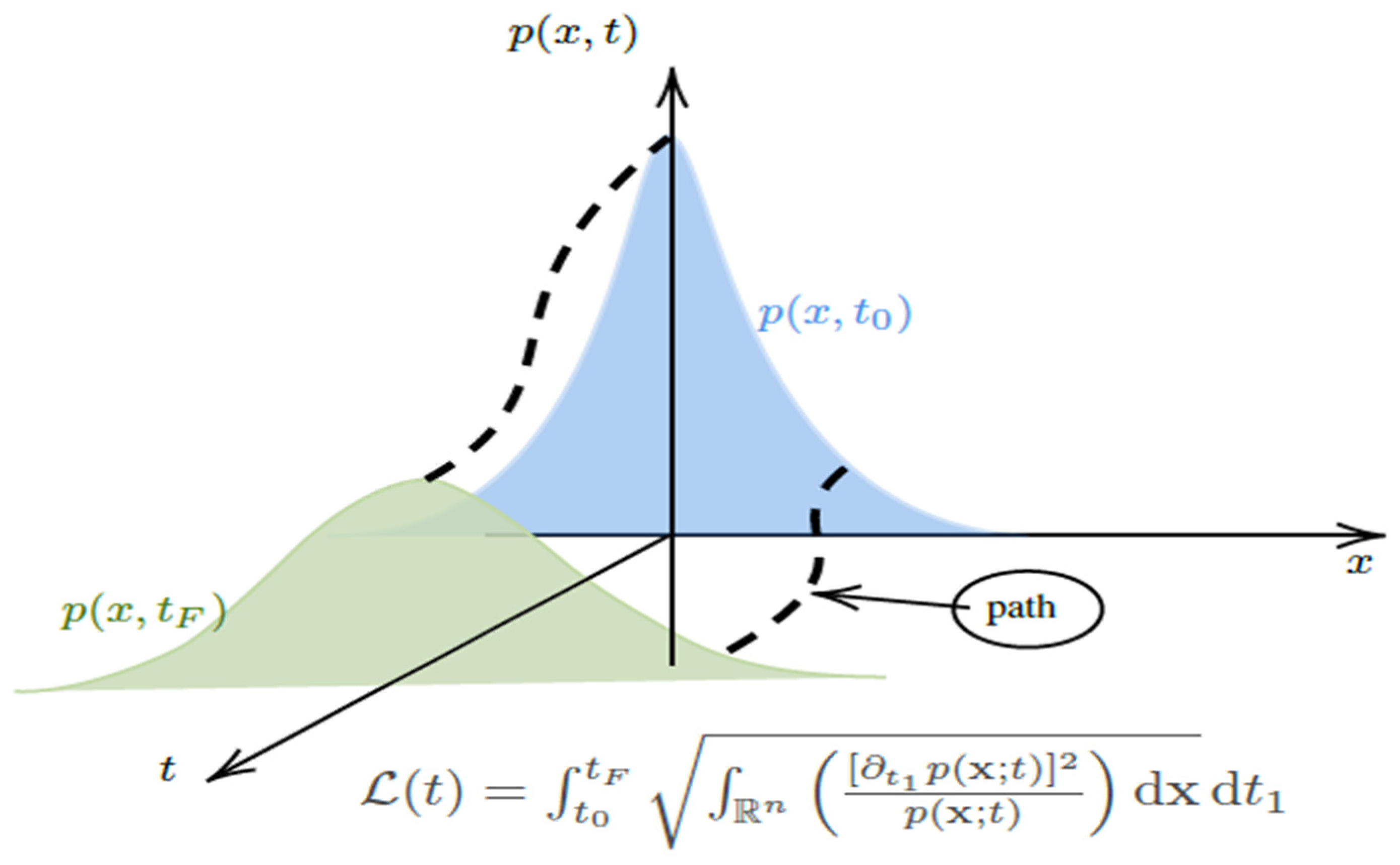

Considering equation (5), it is clear that $\mathcal{E}$ depends on the changes in both the mean and the variance governed by the dynamics of equation (4). This can be portrayed as a path in a three-dimensional space, as in Figure 3 (cf. [8]), along which the information velocity $\sqrt{\mathcal{E}}$ varies as the system evolves from the initial PDF $p(x,0)$ to the final PDF $p(x,t)$. In this sense, $\sqrt{\mathcal{E}}$ acts as a speed limit that bounds how fast observables can change relative to their statistical fluctuations [16].
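The Gaussian expression $\mathcal{E}=(d\mu/dt)^2/\sigma^2+(d\sigma^2/dt)^2/(2\sigma^4)$ can be checked against the defining integral $\int(\partial_t p)^2/p\,dx$. The sketch below assumes the harmonic force $f=-\gamma x$ and a Gaussian initial PDF, for which the mean and variance evolve as $\mu(t)=\mu_0e^{-\gamma t}$ and $\sigma^2(t)=D/\gamma+(\sigma_0^2-D/\gamma)e^{-2\gamma t}$. Parameter values and function names are illustrative assumptions.

```python
import numpy as np

def ou_moments(t, mu0, var0, gamma, D):
    """Mean and variance for dx/dt = -gamma*x + xi, <xi(t) xi(t')> = 2D delta(t - t')."""
    mu = mu0 * np.exp(-gamma * t)
    var = D / gamma + (var0 - D / gamma) * np.exp(-2.0 * gamma * t)
    return mu, var

def rate_closed_form(t, mu0, var0, gamma, D):
    """E(t) = (dmu/dt)^2 / var + (dvar/dt)^2 / (2 var^2) for a Gaussian PDF."""
    mu, var = ou_moments(t, mu0, var0, gamma, D)
    dmu = -gamma * mu                    # from dmu/dt = -gamma * mu
    dvar = -2.0 * gamma * var + 2.0 * D  # from dvar/dt = -2 gamma var + 2D
    return dmu**2 / var + dvar**2 / (2.0 * var**2)

def rate_by_quadrature(t, mu0, var0, gamma, D, dt=1e-5):
    """E(t) = integral of (dp/dt)^2 / p over x, on a grid with a central difference in t."""
    def pdf(x, s):
        mu, var = ou_moments(s, mu0, var0, gamma, D)
        return np.exp(-(x - mu) ** 2 / (2.0 * var)) / np.sqrt(2.0 * np.pi * var)
    x = np.linspace(-10.0, 10.0, 20001)
    p = pdf(x, t)
    dp_dt = (pdf(x, t + dt) - pdf(x, t - dt)) / (2.0 * dt)
    return float(np.sum(dp_dt**2 / p) * (x[1] - x[0]))

params = dict(mu0=2.0, var0=0.1, gamma=1.0, D=0.5)
for t in (0.1, 0.5, 1.0):
    print(t, rate_closed_form(t, **params), rate_by_quadrature(t, **params))
```

Both the mean term and the variance term contribute here, since the initial variance differs from the equilibrium value $D/\gamma$.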

Essentially, differential entropy [12] may fail to reveal the temporal statistical fluctuations that occur during an evolution. This is due to its lack of locality: differential entropy essentially measures the difference between two given PDFs while ignoring all intermediate states [17]. In other words, it reflects only the net change over the overall evolution of the underlying system. In contrast, the IL $\mathcal{L}$ accounts for all local changes that take place along the system's evolution [14,17]. Moreover, IL has been described as a powerful metric that links geometry and stochasticity, and as a useful tool for representing attractor structure [20,21]. For instance, the asymptotic value $\mathcal{L}_\infty$ grows linearly with the distance between the mean of the initial PDF and the stable equilibrium point [15,24]. More significantly, this linear relation is lost when any other information metric is used [17,23].
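The linear dependence on the initial mean can be illustrated with a minimal sketch for the harmonic case, assuming the initial PDF is Gaussian with mean $y_0$ and the equilibrium variance $D/\gamma$, so that the variance stays fixed and only the mean relaxes as $y_0e^{-\gamma t}$. Then $\sqrt{\mathcal{E}(t)}=\gamma^{3/2}y_0e^{-\gamma t}/\sqrt{D}$ and $\mathcal{L}_\infty=y_0\sqrt{\gamma/D}$, which is linear in $y_0$, whereas the KL divergence between the initial and the equilibrium PDF, $\gamma y_0^2/(2D)$, is quadratic. The parameter values are illustrative, and a finite horizon `t_max` stands in for $t\to\infty$.

```python
import numpy as np

def ou_information_length(y0, gamma, D, t_max=20.0, n_t=20001):
    """Numerical L(t_max) when the mean relaxes as y0*exp(-gamma t) at fixed variance D/gamma."""
    t = np.linspace(0.0, t_max, n_t)
    var = D / gamma                            # equilibrium variance, held fixed (assumption)
    dmu_dt = -gamma * y0 * np.exp(-gamma * t)  # time derivative of the mean
    rate = np.abs(dmu_dt) / np.sqrt(var)       # sqrt(E(t)) = |dmu/dt| / sigma
    return float(np.sum(0.5 * (rate[1:] + rate[:-1]) * np.diff(t)))

def kl_to_equilibrium(y0, gamma, D):
    """KL divergence between N(y0, D/gamma) and the equilibrium N(0, D/gamma)."""
    return y0**2 / (2.0 * D / gamma)

gamma, D = 1.0, 0.5
for y0 in (0.5, 1.0, 2.0, 4.0):
    print(f"y0={y0:3.1f}  L_inf={ou_information_length(y0, gamma, D):.4f}  "
          f"y0*sqrt(gamma/D)={y0 * np.sqrt(gamma / D):.4f}  "
          f"KL={kl_to_equilibrium(y0, gamma, D):.4f}")
```

Doubling $y_0$ doubles $\mathcal{L}_\infty$ but quadruples the KL divergence, which is one concrete way to see that the linear relation is specific to IL.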

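Preliminary Theorem (PT). Let $f$ be a function that is defined and differentiable on an open interval $(c,d)$. If $f'(x)<0$ for all $x\in(c,d)$, then $f$ is decreasing on $(c,d)$; if $f'(x)>0$ for all $x\in(c,d)$, then $f$ is increasing on $(c,d)$.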

The remainder of this paper is organised as follows. Section 1 presents a brief introduction. Section 2 establishes the key findings. Section 3 gives concluding remarks, open problems, and directions for future research.

2. The Upper and Lower Bounds of the Data Information Length of Human Emotions (HEs)

This section is concerned with calculating the IL of HEs.

2.1. Key Findings

Theorem 1.

The IL of HEs satisfies the following inequality:

Proof. We have

Consequently,

It is well known that:

Let

. Then,

Using (15) and carrying out lengthy algebraic manipulations yields

Using (16),

On the other hand,

Combining (4), (18), and (19), one gets

By the mean value theorem for definite integrals,

Notably,

guarantees that

=

is real; otherwise,

would be complex-valued, which is not admissible.

Theorem 2.

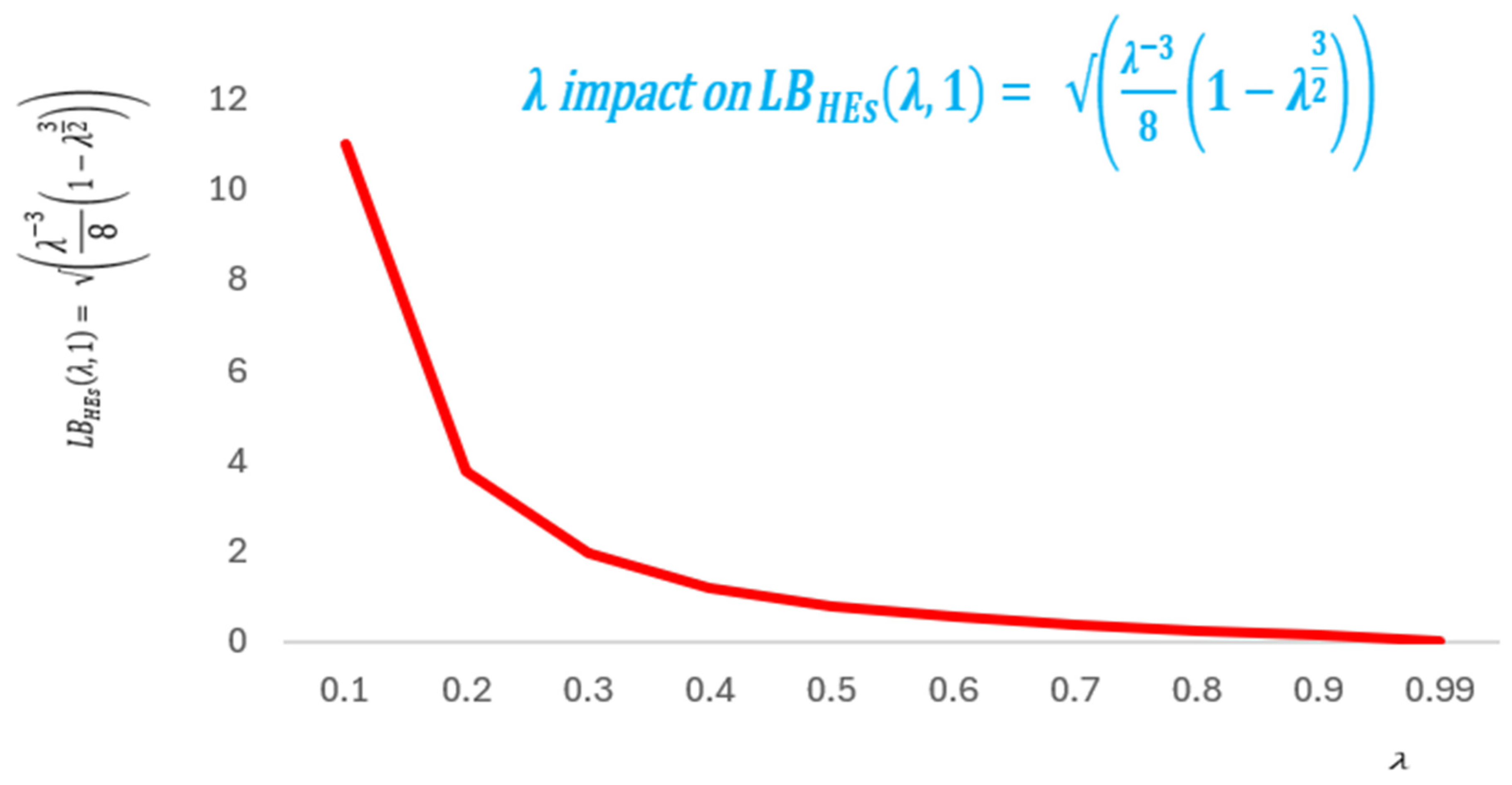

(cf. Theorem 1) satisfy:

(i) is monotonically decreasing in

(ii) is monotonically decreasing in

(iii) is monotonically increasing in time.

(iv) is monotonically increasing in time.

Proof.

(i) We have

, which holds for all

Combining (11) and (21), (i) follows.

, which implies (ii).

(iii) and (iv) are straightforward.

2.2. Numerical Experiments

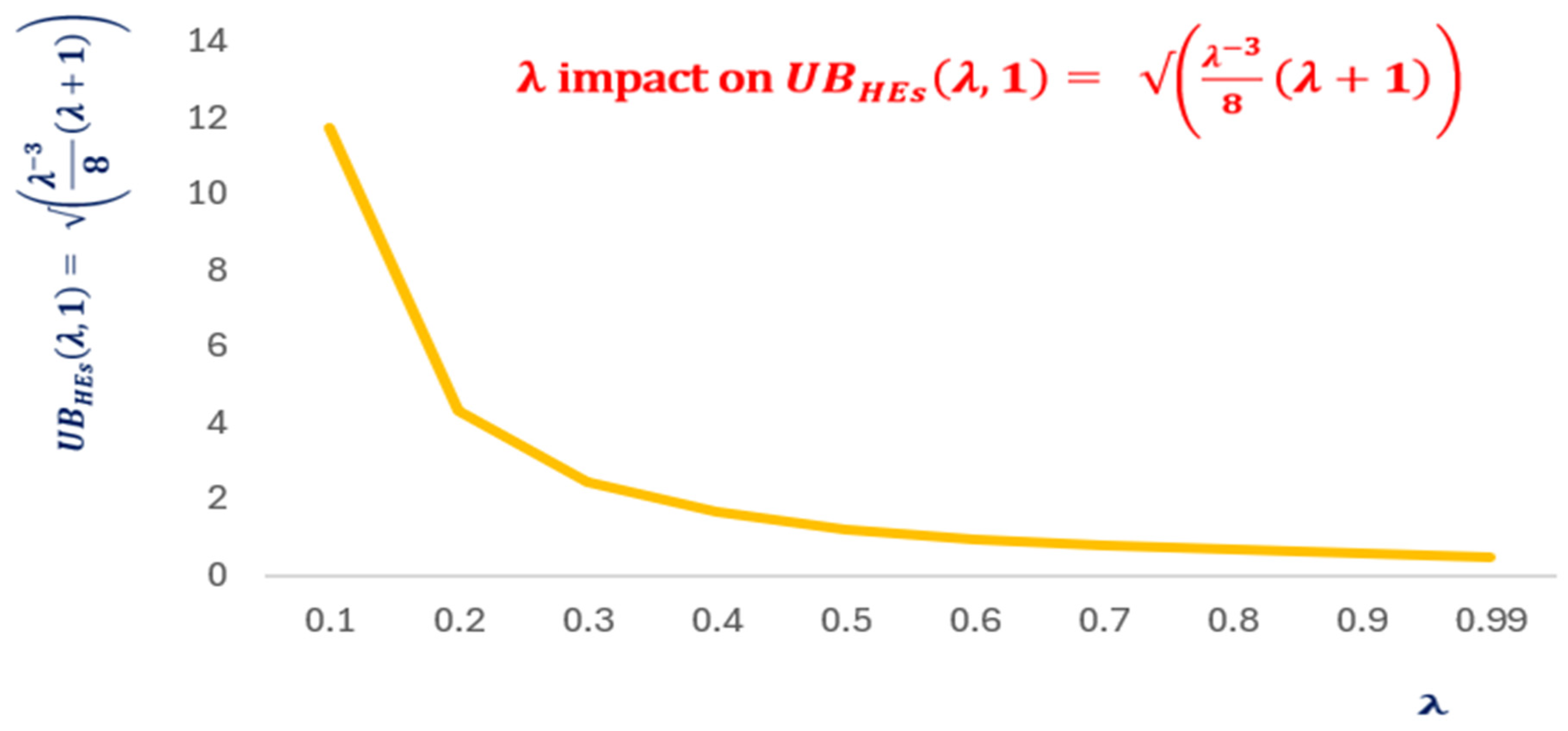

Figure 4 depicts the decreasability of

in

, which validates the analytic findings.

Figure 5 illustrates the decreasability of

in

, which validates the analytic findings.

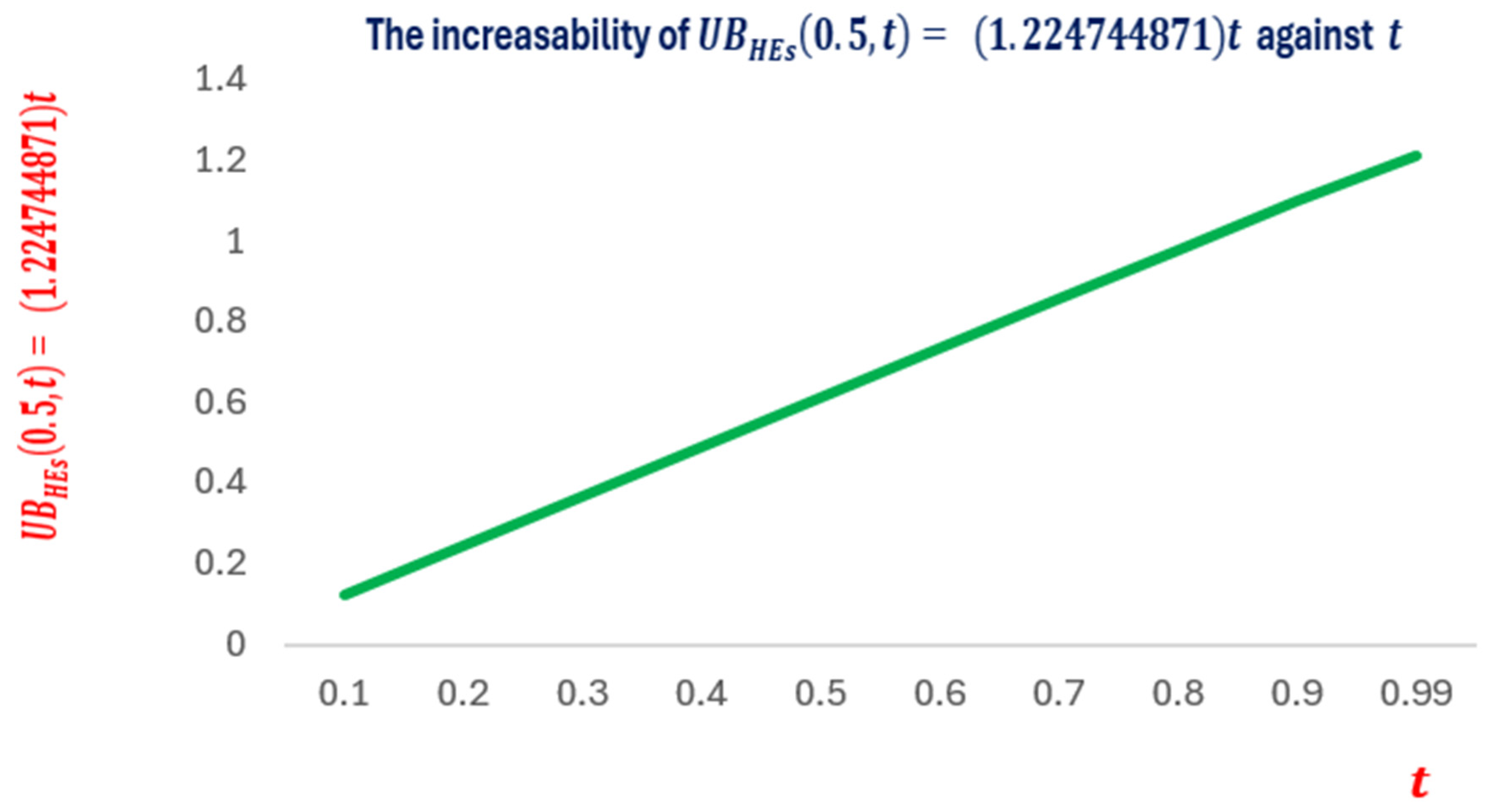

The numerical findings match the analytic results as shown by

Figure 6.

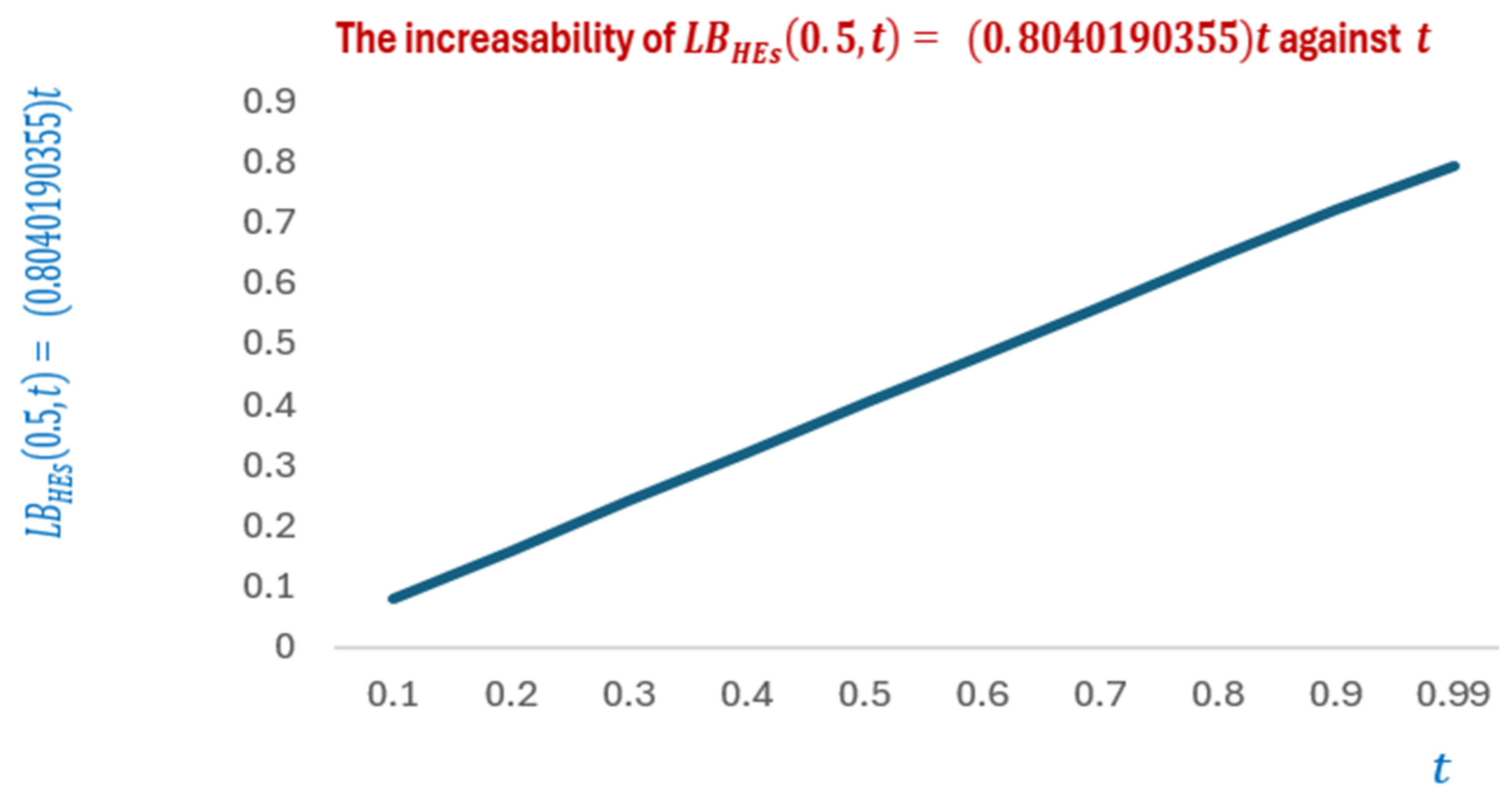

Figure 7 visualizes how

increases as times increases.

3. Concluding Remarks, Open Problems, and Future Research

This paper contributes to establishing an Information Length theory of HEs. The derivations start from an integral formula for the information length of HEs. Because a closed-form evaluation of this integral is difficult, upper and lower bounds on it were derived instead.

Notably, both bounds are parameter-dependent. Moreover, these analytic findings were validated numerically.

Here are some emerging open problems:

Is it mathematically feasible to find the exact analytic form of the IL of HEs (cf. (18)), rather than only its upper and lower bounds? This problem is still open.

Looking at (18), can other strict upper and lower bounds for the IL of HEs be found?

Future research directions include attempting to solve the proposed open problems and extending information data length theory to other fields of human knowledge, such as engineering and physics.

References

- Yabu, T. (2020). Mathematical approach to the psychological aspects of human. psyarxiv.com.

- Beck, J. S. (2020). Cognitive Behavior Therapy, Third Edition: Basics and Beyond. The Guilford Press.

- Bolger, N., Zee, K. S., Rossignac-Milon, M., & Hassin, R. R. (2019). Causal processes in psychology are heterogeneous. Journal of Experimental Psychology: General, 148(4). [CrossRef]

- Burigana, L., & Vicovaro, M. (2020). Algebraic aspects of Bayesian modelling in psychology. Journal of Mathematical Psychology, 94. [CrossRef]

- Cox, G. E., & Criss, A. H. (2020). Similarity leads to correlated processing: A dynamic model of encoding and recognition of episodic associations. Psychological Review, 127(5). [CrossRef]

- Hancock, T. O. (2019). Travel behaviour modelling at the interface between econometrics and mathematical psychology. PhD thesis, University of Leeds.

- Hancock, T. O., Hess, S., & Choudhury, C. F. (2019). Theoretical considerations for a dynamic model for dynamic data: Bridging choice modelling with mathematical psychology. International Choice Modelling Conference 2019.

- Chamorro, H. R., et al. (2020). Information length quantification and forecasting of power systems kinetic energy. IEEE Transactions on Power Systems. [CrossRef]

- Mageed, I. A., Yin, X., Liu, Y., & Zhang, Q. (2023, August). ℤ(a,b) of the Stable Five-Dimensional M/G/1 Queue Manifold Formalism's Info-Geometric Structure with Potential Info-Geometric Applications to Human Computer Collaborations and Digital Twins. In 2023 28th International Conference on Automation and Computing (ICAC) (pp. 1-6). IEEE. [CrossRef]

- Mageed, I. A., Zhang, Q., Akinci, T. C., Yilmaz, M., & Sidhu, M. S. (2022, October). Towards Abel Prize: The Generalized Brownian Motion Manifold's Fisher Information Matrix With Info-Geometric Applications to Energy Works. In 2022 Global Energy Conference (GEC) (pp. 379-384). IEEE. [CrossRef]

- Mageed, I. A., Zhou, Y., Liu, Y., & Zhang, Q. (2023, August). Towards a Revolutionary Info-Geometric Control Theory with Potential Applications of Fokker Planck Kolmogorov (FPK) Equation to System Control, Modelling and Simulation. In 2023 28th International Conference on Automation and Computing (ICAC) (pp. 1-6). IEEE. [CrossRef]

- Mageed, I. A., & Kouvatsos, D. D. (2021, February). The Impact of Information Geometry on the Analysis of the Stable M/G/1 Queue Manifold. In ICORES (pp. 153-160).

- Van Erven, T., & Harremoës, P. (2014). Rényi divergence and Kullback-Leibler divergence. IEEE Transactions on Information Theory, 60(7), 3797-3820. [CrossRef]

- Mageed, I. A. (2023). A Unified Information Data Length (IDL) Theoretic Approach to Information-Theoretic Pathway Model Queueing Theory (QT) with Rényi Entropic Applications to Fuzzy Logic. In 2023 International Conference on Computer and Applications (ICCA), Cairo, Egypt (pp. 1-6). [CrossRef]

- Kim, E., & Hollerbach, R. (2017). Signature of nonlinear damping in geometric structure of a nonequilibrium process. Physical Review E, 95(2), 022137. [CrossRef]

- Nicholson, S. B., et al. (2020). Time–information uncertainty relations in thermodynamics. Nature Physics, 16(12), 1211-1215. [CrossRef]

- Heseltine, J., & Kim, E. (2019). Comparing information metrics for a coupled Ornstein–Uhlenbeck process. Entropy, 21(8), 775. [CrossRef]

- Kim, E. (2021). Information geometry and non-equilibrium thermodynamic relations in the over-damped stochastic processes. Journal of Statistical Mechanics: Theory and Experiment, 2021(9), 093406. [CrossRef]

- Kim, E., & Guel-Cortez, A.-J. (2021). Causal information rate. Entropy, 23(8), 1087. [CrossRef]

- Guel-Cortez, A.-J., & Kim, E. (2020). Information length analysis of linear autonomous stochastic processes. Entropy, 22(11). [CrossRef]

- Guel-Cortez, A.-J., & Kim, E. (2021). Information geometric theory in the prediction of abrupt changes in system dynamics. Entropy, 23(6), 694. [CrossRef]

- Heseltine, J., & Kim, E. (2016). Novel mapping in non-equilibrium stochastic processes. Journal of Physics A: Mathematical and Theoretical, 49(17), 175002. [CrossRef]

- Kim, E. (2016). Geometric structure and geodesic in a solvable model of non-equilibrium process. Physical Review E, 93(6), 062127. Entropy, 20(8), 550. [CrossRef]

- Mageed, I. A., & Zhang, Q. (2023). The Rényian-Tsallisian Formalisms of the Stable M/G/1 Queue with Heavy Tails Entropian Threshold Theorems for the Squared Coefficient of Variation. Electronic Journal of Computer Science and Information Technology, 9(1), 7-14.

- Sales, L. L., Silva, J. A., Bento, E. P., Souza, H. T., Farias, A. D., & Lavor, O. P. (2018). An alternative method for solving the Gaussian integral. arXiv preprint arXiv:1811.03957. [CrossRef]


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).