1. Introduction

One of the most interesting innovative views in contemporary physics treats infinitesimals and infinities as mathematical abstractions rather than real entities. This conviction has already produced important results, such as loop quantum gravity [1, 2] and non-commutative string theories [3, 4].

In this study, we aim to demonstrate that adopting this perspective can provide fresh insights into longstanding issues in physics.

Starting from the well-founded hypothesis that spacetime is discrete rather than continuous, we illustrate the feasibility of drawing an analogy between our universe and a computerized N-body simulation.

Our goal is to present a sturdy framework of reasoning, which will be subsequently utilized to attain a more profound comprehension of our reality. This framework is founded on the premise that anyone endeavoring to create a computer simulation resembling our Universe will inevitably confront the same challenges as the entity responsible for constructing the Universe itself.

The underlying hope is that, by tackling these challenges, we may unearth insights into the reasons behind the functioning of the Universe. This rests on the notion that there may be only one viable way to construct something so extensive and intricate with high efficiency. The essence of the current undertaking is aligned with the dictum of Feynman: “What I cannot create, I do not understand.” Here, this principle is embraced in the affirmative: “What I can create, I can comprehend.”

One of the primary challenges in achieving this objective is the need for a physical theory that comprehensively describes reality, capable of portraying the N-body evolution across the entire physical scale, spanning from the microscopic quantum level to the macroscopic classical realm.

Regarding this matter, established physics falls short in providing a comprehensive and internally consistent theoretical foundation [5, 6, 7, 8, 9, 10]. Numerous problematic aspects persist to this day, including the challenge posed by the probabilistic interpretation assigned to the wavefunction in quantum mechanics. Other persistent issues are the impossibility of assuming a well-defined concept of pre-existing reality before measurement and of ensuring local relativistic causality.

Quantum theory, despite its well-defined mathematical apparatus, remains incomplete with respect to its foundational postulates. Specifically, the measurement process is not explained within the framework of quantum mechanics. This requires acceptance of its probabilistic foundations regardless of the validity of the principle of causality.

This conflict is famously articulated through the objection posed by the EPR paradox. The EPR paradox, as detailed in a renowned paper [5], is rooted in the incompleteness of quantum mechanics concerning the indeterminacy of the wavefunction collapse and measurement outcomes. These fundamental aspects do not find a clear placement within a comprehensive theoretical framework.

The endeavor to formulate a theory encompassing the probabilistic nature of quantum mechanics within a unified theoretical framework can be traced back to the research of Nelson [6] and has persisted over time. However, Nelson’s hypotheses ultimately fell short due to the imposition of a specific stochastic derivative with time inversion symmetry, which limits their generality. Furthermore, the outcomes of Nelson’s theory do not fully align with those of quantum mechanics concerning the incompatibility of simultaneous measurements of conjugate variables, as illustrated by von Neumann’s proof [7] of the impossibility of reproducing quantum mechanics with theories based on underlying classical probabilistic variables.

Moreover, the overarching goal of incorporating the probabilistic nature of quantum mechanics while ensuring its reversibility through “hidden variables” in local classical theories was conclusively proven to be impossible by Bell [8]. Nevertheless, Bohm’s non-local hidden variable theory [11] has met with some success. It endeavors to restore the determinism of quantum mechanics by introducing the concept of a pilot wave. The fundamental idea is that, in addition to the particles themselves, there exists a “guidance” or influence from the pilot wave function that dictates the behavior of the particles. Although this pilot wave function is not directly observable, it does affect the measurement probabilities of the particles.

A more sophisticated model is based on the Feynman path integral representation [12] of quantum mechanics. Here, as shown by Kleinert [13], quantum mechanics can be conceptualized as an imaginary-time stochastic process. These imaginary-time quantum fluctuations differ from the more commonly known real-time fluctuations of classical stochastic dynamics. They result in the “reversible” evolution of the probability wave (wavefunction), which shows pseudo-diffusion behavior of the mass density.

The distinguishing characteristic of quantum pseudo-diffusion is the inability to define a positive diffusion coefficient. This directly stems from the reversible nature of quantum evolution, which, within a spatially distributed system, may demonstrate local entropy reduction over specific spatial domains. However, this occurs within the framework of an overall reversible deterministic evolution with a net entropy variation of zero [14].

This aspect is clarified by the Madelung quantum hydrodynamic model [15, 16, 17], which is perfectly equivalent to the Schrödinger description while being a specific subset of the Bohm theory [18]. In this model, quantum entanglement is introduced through the influence of the so-called quantum potential.

Recently, with the emergence of evidence pointing to dark energy manifested as a gravitational background noise (GBN), whether originating from relics or the dynamics of bodies in general relativity, the author demonstrated that the quantum hydrodynamic representation provides a means to describe self-fluctuating dynamics within a system without necessitating the introduction of an external environment [19]. The noise generated by spacetime curvature ripples can be integrated into Madelung’s quantum hydrodynamic description by utilizing the foundational assumption of relativity, permitting the consideration of the energy associated with spacetime curvature as virtual mass.

The resulting stochastic quantum hydrodynamic model (SQHM) avoids introducing divergent results that contradict established theories such as decoherence [9] and the Copenhagen foundation of quantum mechanics; instead, it enriches and complements our understanding of these theories. It indicates that in the presence of noise, quantum entanglement and coherence can be maintained on a microscopic scale much smaller than the De Broglie length and the range of action of the quantum potential. On a scale with a characteristic length much larger than the distance over which quantum entanglement operates, classical physics naturally emerges [19].

While the Bohm theory attributes the indeterminacy of the measurement process to the undeterminable pilot wave, the SQHM attributes its unpredictable probabilistic nature to the fluctuating gravitational background. Furthermore, it is possible to demonstrate a direct correspondence between the Bohm non-local hidden variable approach developed by Santilli in IsoRedShift Mechanics [20] and the SQHM. This correspondence reveals that the origin of the hidden variable is nothing but the perturbative effect of the fluctuating gravitational background on quantum mechanics [21].

The stochastic quantum hydrodynamic model (SQHM), adept at describing physics across various length scales, from the microscopic quantum to the classical macroscopic [19], offers the potential to formulate a comprehensive simulation analogy to the N-body evolution within the discrete spacetime of the Universe.

The work is organized as follows:

Introduction to the Stochastic Quantum Hydrodynamic Model (SQHM);

Quantum to classical transition and the emerging classical mechanics on large size systems;

The measurement process in the quantum stochastic theory: the role of the finite range of non-local quantum potential interactions;

Minimum measurement uncertainty in spacetime with fluctuating background and finite speed of light;

Minimum discrete length interval in 4D spacetime;

Dynamics of Wavefunction Collapse;

Evolution of the mass density distribution of quantum superposition of states in spacetime with GBN;

EPR Paradox and Pre-existing Reality from the standpoint of the SQHM;

The computer simulation analogy for the N-body problem;

How the Universe computes the next state: the unraveling of the meaning of time;

The Free Will;

The Universal “Pasta Maker” and the Actual Time in 4D spacetime;

Discussion and future Developments;

Extending Free Will;

Best Future States Problem Solving Emergent from the Darwinian Principle of Evolution;

How consciousness captures the dynamics of reality;

The spontaneous appearance of gravity in a discrete spacetime simulation;

The classical General Relativity limit problem in Quantum Loop Gravity and Causal Dynamical Triangulation.

2. The Quantum Stochastic Hydrodynamic Model

The Madelung quantum hydrodynamic representation transforms the Schrödinger equation [15, 16, 17]

for the complex wave function

, into two equations of real variable: the conservation equation for the mass density

and the motion equation for the momentum

,

where

and where
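For orientation, the Madelung decomposition described above can be sketched in its standard textbook form. The notation below (polar form of the wave function with phase S, mass m, external potential V, and quantum potential V_qu) is an assumption based on the conventional presentation and may differ from the symbols and numbering of the model's own equations.

```latex
% Standard Madelung form (textbook notation, assumed here)
\begin{align}
  i\hbar\,\partial_t \psi &= -\frac{\hbar^2}{2m}\nabla^2\psi + V\psi,
  \qquad \psi = |\psi|\, e^{iS/\hbar}, \\
  \partial_t |\psi|^2 + \nabla\cdot\!\left(|\psi|^2\,\frac{\nabla S}{m}\right) &= 0, \\
  \partial_t S + \frac{|\nabla S|^2}{2m} + V + V_{qu} &= 0,
  \qquad V_{qu} = -\frac{\hbar^2}{2m}\,\frac{\nabla^2 |\psi|}{|\psi|}.
\end{align}
```

Taking the gradient of the last line gives the motion equation for the momentum \(p = \nabla S\), driven by the force \(-\nabla (V + V_{qu})\).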

By treating the energy content of the gravitational background noise (GBN) as virtual mass density, the quantum potential generates a stochastic force with correlation function shape

generalizing the Madelung hydrodynamic analogy into a quantum-stochastic problem. As shown in [19], the SQHM is defined on the following assumptions:

1. The virtual mass density fluctuations generated by the GBN are described by the wave function with density ;

2. The associated energy density (of the spacetime background fluctuations) is proportional to ;

3. The virtual mass is defined by the identity

4. The virtual mass is assumed to not interact with the mass of the physical system (since the gravitational interaction is sufficiently weak to be disregarded).

In this case, the wave function of the overall system

reads

Moreover, since the energy density

of the GBN is quite small, the virtual mass density

is assumed to be much smaller than the body mass density

(usually considered in physical problems). Therefore, considering the virtual mass

much smaller than the mass of the system and assuming in equation (4) that

, the overall quantum potential can be expressed as follows:

Expression (6) allows us to derive the shape

of the correlation function of the quantum potential fluctuations that reads [19, 22]:

where

is the De Broglie length. The expression (7-8) reveals that uncorrelated mass density fluctuations on increasingly shorter distances with respect to

are gradually suppressed by the quantum potential. This suppression enables the realization of conventional quantum mechanics, representing the zero-noise “deterministic” limit of the Stochastic Quantum Hydrodynamics Model (SQHM), for systems with a physical length much smaller than the De Broglie length

.
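To give a sense of scale for the De Broglie length that controls this suppression, the following sketch evaluates the standard thermal de Broglie wavelength h / sqrt(2π m k_B T). This textbook expression is an assumption: the definition adopted in [19] may differ by a numerical factor, and the function name and the sample 4He values are illustrative only.

```python
import math

# CODATA constants (SI units)
PLANCK_H = 6.62607015e-34          # J s
BOLTZMANN_K = 1.380649e-23         # J / K
ATOMIC_MASS_UNIT = 1.66053907e-27  # kg


def thermal_de_broglie_length(mass_kg: float, temperature_k: float) -> float:
    """Standard thermal de Broglie wavelength (assumed definition), in metres."""
    if mass_kg <= 0 or temperature_k <= 0:
        raise ValueError("mass and temperature must be positive")
    return PLANCK_H / math.sqrt(
        2.0 * math.pi * mass_kg * BOLTZMANN_K * temperature_k
    )


# Illustrative values: a 4He atom (about 4.0026 u) at a few temperatures.
helium4_mass = 4.0026 * ATOMIC_MASS_UNIT
for temperature in (300.0, 4.2, 2.17):
    length = thermal_de_broglie_length(helium4_mass, temperature)
    print(f"T = {temperature:7.2f} K  ->  lambda = {length * 1e10:.2f} angstrom")
```

The length scales as T^(-1/2) and m^(-1/2), so cooling a system or using lighter particles enlarges the region over which the zero-noise quantum description applies.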

In the presence of GBN, accounted for as virtual mass fluctuations, the mass distribution density (MDD) becomes a stochastic function, such as

, that we can ideally pose

, where

is the fluctuating part, and

is the regular part. All these variables are connected by the limiting condition

Furthermore, the characteristics of the Madelung quantum potential, which fluctuates in the presence of stochastic noise, can be derived by assuming that it is composed of the regular part

(to be defined) plus the fluctuating part

, such as

where the stochastic part of the quantum potential

leads to the force noise

leading to the stochastic motion equation

Moreover, the regular part

for microscopic systems (

), without loss of generality, can be rearranged as

leading to the motion equation

where

is the probability mass density function (PMD) associated with the stochastic process (12) [23] that, in the deterministic limit, obeys the condition

.

For the sufficiently general case to be of practical interest, the correlation function of noise

can be assumed to be Gaussian with null correlation time, isotropic in space and independent among different coordinates, taking the form

with

Furthermore, given that for microscopic systems (i.e.,

)

it follows that

and the motion described by equation (12) takes on the stochastic form of the Markovian process [19]

where

where

is a non-zero pure number.
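A Markovian process driven by Gaussian, delta-correlated noise of this kind can be integrated numerically with the Euler-Maruyama scheme. The sketch below is a generic one-dimensional Langevin integrator, not equation (19) itself: the quantum potential force is omitted, and the function name, the friction coefficient `kappa`, and the noise amplitude sqrt(2 kappa kT / m) (chosen to satisfy the classical fluctuation-dissipation relation) are assumptions made for illustration.

```python
import math
import random
from typing import Callable, List, Tuple


def simulate_langevin(
    force: Callable[[float], float],
    mass: float,
    kappa: float,
    kT: float,
    dt: float,
    n_steps: int,
    n_particles: int,
    seed: int = 0,
) -> Tuple[List[float], List[float]]:
    """Euler-Maruyama steps for dv = (F(q)/m - kappa*v) dt + sigma dW, dq = v dt."""
    rng = random.Random(seed)
    # Assumed noise amplitude (classical fluctuation-dissipation relation).
    sigma = math.sqrt(2.0 * kappa * kT / mass)
    sqrt_dt = math.sqrt(dt)
    positions = [0.0] * n_particles
    velocities = [0.0] * n_particles
    for _ in range(n_steps):
        for i in range(n_particles):
            drift = force(positions[i]) / mass - kappa * velocities[i]
            # Gaussian increment with zero correlation time (white noise).
            velocities[i] += drift * dt + sigma * sqrt_dt * rng.gauss(0.0, 1.0)
            positions[i] += velocities[i] * dt
    return positions, velocities


# Free particle (no deterministic force): the velocity variance should relax
# towards kT / mass after a time much longer than 1 / kappa.
_, final_velocities = simulate_langevin(
    force=lambda q: 0.0, mass=1.0, kappa=1.0, kT=1.0,
    dt=0.02, n_steps=500, n_particles=200, seed=42,
)
mean_square_velocity = sum(v * v for v in final_velocities) / len(final_velocities)
print(f"<v^2> = {mean_square_velocity:.3f} (target kT/m = 1.0)")
```

Passing a non-zero `force` (for instance the gradient of a model potential) reuses the same integrator; adding a quantum potential term would require tracking the density as well, which this sketch does not attempt.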

In this case,

is the probability mass density function determined by the probability transition function (PTF)

[23] through the relation

where

obeys the Smoluchowski conservation equation for the Markov process (19)

Therefore, for the complex field

the quantum-stochastic hydrodynamic system of equations reads:

In the context of (23-26),

does not denote the quantum wavefunction; rather, it represents the generalized quantum-stochastic probability wave. With the exception of some unphysical singular cases, this probability wave adheres to the limit.

It is worth noting that the SQHM equations (23-26) show that the gravitational dark energy leads to a self-fluctuating system where the noise is an intrinsic property of the spacetime dynamical geometry that does not require the presence of an environment.

The agreement between the SQHM and the well-established quantum theory outputs can be additionally validated by applying it to mesoscale systems (

). In this scenario, the SQHM reveals that

adheres to the generalized Langevin-Schrödinger equation, which, for time-independent systems, is expressed as follows

which, by using (??), can be rearranged as:

Moreover, by introducing, close to the zero noise, the semiempirical parameter

, defined by the relation [19 and references therein]

and characterizing the ability of the system to dissipate, the realization of quantum mechanics is ensured by the condition

. On this ansatz, (29) reads as

where

accounts for the compressibility of the mass density distribution

as a consequence of dissipation [19].

In ref. [19] it is shown that, for highly dissipative quantum systems with

equation (32) converges to the Langevin-Schrödinger equation.

2.1. Emerging Classical Mechanics on Large Size Systems

When manually nullifying the quantum potential in the equations of motion for quantum hydrodynamics (1-3), the classical equation of motion emerges [17]. However, despite the apparent validity of this claim, such an operation is not mathematically sound, as it alters the essential characteristics of the quantum hydrodynamic equations. Specifically, this action eliminates the stationary configurations, i.e., the eigenstates, because it nullifies the balancing force of the quantum potential against the Hamiltonian force [24], which establishes their stationary mass density distribution. Consequently, even a small quantum potential cannot be disregarded in conventional quantum mechanics as described by the zero-noise ‘deterministic’ quantum hydrodynamic model (2-4).

Conversely, in the stochastic generalization, it is possible to correctly neglect the quantum potential in (19, 4.7) when its force is much smaller than the force noise

such as

that by (4.7) leads to condition

and hence, in a coarse-grained description with elemental cell side

, to

where

is the physical length of the system.

It is worth noting that, despite the noise

having a zero mean, the mean of the fluctuations in the quantum potential, denoted as

, is not null. This non-null mean contributes to the dissipative force

in equation (6.22). Consequently, the stochastic sequence of noise inputs disrupts the coherent evolution of the quantum superposition of states, causing them to converge to a stationary mass density distribution with

. Moreover, by observing that the stochastic noise

grows with the size of the system, for macroscopic systems (i.e.,

), condition (33) is satisfied if

In order to achieve a large-scale description completely free from quantum correlations for any finite values of the physical length

of the system, a more rigorous requirement must be imposed, such as

Hence, recognizing that for linear systems

it readily follows that these systems are incapable of generating the macroscopic classical phase. In general, as the Hamiltonian potential strengthens, the wave function localization increases, and the quantum potential behavior at infinity becomes more prominent.

This is demonstrable by considering the MDD

where

is a polynomial of order

k, and it becomes evident that a finite range of quantum potential interaction is achieved for

.

Hence, linear systems, characterized by k = 0, exhibit an infinite range of quantum potential action.
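The link between the large-distance decay of the wave function and the range of the quantum potential can be illustrated with a toy amplitude. The sketch assumes a one-dimensional amplitude |ψ| = exp(-q^n) with a single-power exponent n (a simplification of the polynomial form in the text, and not necessarily the same parameter as k there), in units where ħ²/2m = 1; the function names are hypothetical.

```python
def quantum_potential(q: float, n: float) -> float:
    """V_qu = -(|psi|'' / |psi|) for |psi| = exp(-q**n), q > 0, hbar^2/(2m) = 1."""
    return -(n * n * q ** (2 * n - 2) - n * (n - 1) * q ** (n - 2))


def quantum_force(q: float, n: float) -> float:
    """Quantum force -dV_qu/dq for the same toy amplitude and units."""
    return (n * n * (2 * n - 2) * q ** (2 * n - 3)
            - n * (n - 1) * (n - 2) * q ** (n - 3))


# Compare a Gaussian amplitude (n = 2) with a more slowly decaying one (n = 1.2).
for exponent in (2.0, 1.2):
    for distance in (1.0, 10.0, 100.0):
        force = quantum_force(distance, exponent)
        print(f"n = {exponent:3.1f}  q = {distance:6.1f}  force = {force: .4e}")
```

In this toy form the leading term of the force scales as q^(2n-3): it grows with distance for a Gaussian amplitude (n = 2) and decays for n < 3/2, which is the qualitative distinction between an unbounded and a finite range of quantum potential action.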

On the other hand, for instance, for gas phases with particles that interact by the Lennard-Jones potential, whose long-distance wave function reads [25]

the quantum potential reads

leading to the quantum force

that, by (33, 37), can lead to large-scale classical behavior [19] in a sufficiently rarefied phase.

It is interesting to note that in (41), the quantum potential is at the basis of the hard sphere potential of the “pseudo potential Hamiltonian model” of the Gross-Pitaevskii equation [26, 27], where

is the boson-boson s-wave scattering length.

To fulfill condition (37), it is sufficient to require that

so that it is possible to define the quantum potential range of interaction

as [19]

Relation (44) provides a measure of the physical length associated with quantum non-local interactions.

It is worth mentioning that quantum non-local interactions extend up to a distance of the order of the larger of the De Broglie length and the quantum potential range of interaction. Below the De Broglie length, even a feeble quantum potential emerges because of the damping of the noise; above it, but below the quantum potential range of interaction, the quantum potential is strong enough to overcome the fluctuations. The quantum non-local effects can be extended by increasing the De Broglie length, as a consequence of lowering the temperature or the mass of the bodies (see § 2.3), while the quantum potential range of interaction grows by strengthening the Hamiltonian potential. In the latter case, for instance, larger values of this range can be obtained by extending the linear range of Hamiltonian interaction between particles (see § 2.2).

As a direct consequence of equation (39), when examining phenomena at intermolecular distances where the interaction is linear, the behavior exhibits quantum characteristics (e.g., X-ray diffraction). However, when observing macroscopic properties that involve the non-linear behavior of the Lennard-Jones potential, which decreases to zero at infinity (as in the case of low-frequency acoustic waves with wavelengths much larger than the linear range of the interatomic force), there is a transition to classical behavior, since the range of interaction of the quantum potential is finite (see § 5.1).

2.2. The Lindemann Constant for Quantum Lattice-to-Classical Fluid Transition

For a system of Lennard-Jones interacting particles, the quantum potential range of interaction

reads

where

(with

) represents the distance up to which the interatomic force is approximately linear, and

denotes the atomic equilibrium distance.

Experimental validation of the physical significance of the quantum potential length of interaction is evident during the quantum-to-classical transition in a crystalline solid at its melting point. This transition occurs as the system shifts from a quantum lattice to a fluid amorphous classical phase.

Assuming that, within the quantum lattice, the atomic wave function (around the equilibrium distance ) extends over a distance smaller than the quantum coherence length, it can be inferred that at the melting point, its variance is equal to

Based on these assumptions, the Lindemann constant

defined as [28]

can be expressed as

and it can be theoretically calculated, as

that, being typically

and

, leads to

A more precise assessment, utilizing the potential well approximation for molecular interaction [29, 30], results in

, and yields a value

for the Lindemann constant consistent with measured values, falling within the range of 0.2 to 0.25 [28].

2.3. The Fluid-Superfluid 4He Transition

Given that the De Broglie distance

is temperature-dependent, its impact on the fluid-superfluid transition in monomolecular liquids at extremely low temperatures, as observed in 4He, can be identified. The approach to this scenario is elaborated in reference [30], where, for the 4He-4He interaction, the potential well is assumed to be

In this context, represents the Lennard-Jones potential depth, denotes the mean 4He -4He inter-atomic distance where .

Ideally, at the superfluid transition, the De Broglie length attains approximately the mean 4He-4He atomic distance. However, the induction of the superfluid 4He-4He state occurs as soon as the De Broglie length overlaps with the 4He-4He wavefunctions within the potential depth. Therefore, we observe the gradual increase of 4He superfluid concentration within the interval

For

, there is no superfluid 4He. For

100% of 4He is in the superfluid state. Therefore, given that

When the superfluid/normal 4He density ratio is 50%, it follows that the temperature

, for the 4He mass of

, is given by

which is in good agreement with the experimental data from reference [31], which is approximately

.

On the other hand, given that for

, all pairs of 4He enter the quantum state, the superfluid ratio of 100% is attained at the temperature

also consistent with the experimental data from reference [31], which is approximately

.

Moreover, by employing the superfluid ratio of 38% at the λ-point of 4He, such that

, the transition temperature

is determined as follows

in good agreement with the measured superfluid transition temperature of

.

As a final remark, it is worth noting that there are two ways to establish quantum macroscopic behavior. One approach involves lowering the temperature, effectively increasing the de Broglie length. The second approach is to enhance the strength of the Hamiltonian interaction among the particles within the system.

Regarding the latter, it is important to highlight that the limited strength of the Hamiltonian interaction over long distances is the key factor allowing classical behavior to manifest. When examining systems governed by a quadratic or stronger Hamiltonian potential, the range of interaction associated with the quantum potential becomes infinite, as illustrated in equation (68). Consequently, achieving a classical phase becomes unattainable, regardless of the system’s size.

In this particular scenario, we exclusively observe the complete manifestation of classical behavior on a macroscopic scale within systems featuring interactions that are sufficiently weak, weaker even than linear interactions, which are classically chaotic. In this case, the quantum potential lacks the ability to exert its nonlocal influence over extensive distances.

Therefore, classical mechanics emerges as a decoherent outcome of quantum mechanics when a fluctuating spacetime background is involved.

2.4. Measurement Process and the Finite Range of Nonlocal Quantum Potential Interactions

Throughout the course of measurement, there exists the possibility of a conventional quantum interaction between the sensing component within the experimental setup and the system under examination. This interaction concludes when the measuring apparatus is relocated to a considerable distance from the system. Within the SQHM framework, this relocation is imperative and must surpass specified distances and .

Following this relocation, the measuring apparatus takes charge of interpreting and managing the “interaction output.” This typically involves a classical, irreversible process characterized by a distinct temporal progression, culminating in the determination of the macroscopic measurement result.

Consequently, the phenomenon of decoherence assumes a pivotal role in the measurement process. Decoherence facilitates the establishment of a large-scale classical framework, ensuring authentic quantum isolation between the measuring apparatus and the system, both before and after the measurement event.

This quantum-isolated state, both at the initial and final stages, holds paramount significance in determining the temporal duration of the measurement and in amassing statistical data through a series of independent repeated measurements.

It is crucial to underscore that, within the confines of the SQHM, merely relocating the measured system to an infinite distance before and after the measurement, as commonly practiced, falls short in guaranteeing the independence of the system and the measuring apparatus if either or is met. Therefore, the existence of a macroscopic classical reality remains indispensable for the execution of the measurement process.

2.5. Minimum Measurement Uncertainty of Quantum Systems in Fluctuating Spacetime Background

Any quantum theory aiming to elucidate the evolution of a physical system across various scales, at any order of magnitude, must inherently address the transition from quantum mechanical properties to the emergent classical behavior observed at larger magnitudes. The fundamental disparities between the two descriptions are encapsulated by the minimum uncertainty principle in quantum mechanics, signifying the inherent incompatibility of concurrently measuring conjugate variables, and the finite speed of propagation of interactions and information in local classical relativistic mechanics.

Should a system fully adhere to the conventional quantum mechanics within a physical length , smaller than , where its subparts lack individual identities, the independent observer, that wants to gain information about the system, needs to maintain a separation distance bigger than both before and after the process.

Therefore, due to the finite speed of propagation of interactions and information, the process cannot be executed in a time frame shorter than

Furthermore, considering the Gaussian noise (20) with the diffusion coefficient proportional to

, we find that the mean value of energy fluctuation is

per degree of freedom. As a result, a nonrelativistic (

) scalar structureless particle, with mass m, exhibits an energy variance

of

from which it follows that

It is noteworthy that the product remains constant, as the increase in energy variance with the square root of precisely offsets the corresponding decrease in the minimum acquisition time . This outcome holds true when establishing the uncertainty relations between the position and momentum of a particle with mass m.

If we acquire information about the spatial position of a particle with precision

, we effectively exclude the space beyond this distance from the quantum non-local interaction of the particle, and consequently

the variance

of its relativistic momentum

due to the fluctuations reads

and the uncertainty relation reads

Equating (62) to the uncertainty value, such as

or

It follows that represents the physical length below which quantum entanglement is fully effective, and it signifies the deterministic limit of the SQHM, specifically the realization of quantum mechanics.

With regard to the minimum uncertainty of quantum mechanics, attainable from the minimum indeterminacy (59, 62) in the limit of

(

), in the non-relativistic limit (

), it follows that

and therefore that

That constitutes the minimum uncertainty in quantum mechanics, obtained as the deterministic limit of the SQHM.

It is worth noting that, owing to the finite speed of light, the SQHM extends the uncertainty relations to all conjugate variables of 4D spacetime. In conventional quantum mechanics, deriving the energy-time uncertainty is not possible because the time operator is not defined.

Furthermore, it is interesting to note that in the relativistic limit of quantum mechanics (

and

), influenced by the finite speed of light, the minimum acquisition time of information in the quantum limit is expressed as follows

The result (71) indicates that performing a measurement in a fully deterministic quantum mechanical global system is not feasible, as its duration would be infinite.

Given that non-locality is restricted to domains with physical lengths on the order of , and information about a quantum system cannot be transmitted faster than the speed of light (violating the uncertainty principle otherwise), local realism is established within the coarse-grained macroscopic physics where domains of order of reduce to a point.

The paradox of “spooky action at a distance” is confined to microscopic distances (smaller than ), where quantum mechanics is described in the low-velocity limit, assuming and . This leads to the apparent instantaneous transmission of interaction over a distance.

It is also noteworthy that in the presence of noise, the measured indeterminacy undergoes a relativistic correction, as expressed by

, resulting in the minimum uncertainty in a quantum system subject to gravitational background noise (

):

and

This can become significant for light particles (with ), but in quantum mechanics, at , the uncertainty relations remain unchanged.

2.6. Minimum Discrete Interval of Spacetime

Within the framework of the SQHM, incorporating the measurement uncertainty in a fluctuating quantum system and the speed of light as the maximum attainable velocity

it follows that the uncertainty relations

lead to

and, consequently, to

where

is the Compton length.

Identity (76) reveals that the maximum concentration of the mass of a body is within an elemental volume with a side length equal to half of its Compton wavelength.

This result holds significant implications for black hole (BH) formation. To form a BH, all the mass must be contained within the gravitational radius

, giving rise to the relationship:

which further leads to the condition:

indicating that the BH mass cannot be smaller than

.
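The bound on the black hole mass can be checked numerically. The sketch below assumes the Schwarzschild form 2Gm/c² for the gravitational radius and the reduced Compton length ħ/(mc), and requires the gravitational radius to be at least half the Compton length; these explicit forms and the function names are assumptions made for illustration.

```python
import math

# CODATA constants (SI units)
HBAR = 1.054571817e-34   # J s
G_NEWTON = 6.67430e-11   # m^3 kg^-1 s^-2
C_LIGHT = 2.99792458e8   # m / s


def gravitational_radius(mass_kg: float) -> float:
    """Schwarzschild radius 2 G m / c^2 (assumed form of the gravitational radius)."""
    return 2.0 * G_NEWTON * mass_kg / C_LIGHT ** 2


def half_compton_length(mass_kg: float) -> float:
    """Half of the reduced Compton length hbar / (m c) (assumed convention)."""
    return HBAR / (2.0 * mass_kg * C_LIGHT)


def minimum_black_hole_mass() -> float:
    """Mass at which 2 G m / c^2 equals hbar / (2 m c): m = sqrt(hbar c / (4 G))."""
    return math.sqrt(HBAR * C_LIGHT / (4.0 * G_NEWTON))


planck_mass = math.sqrt(HBAR * C_LIGHT / G_NEWTON)
m_min = minimum_black_hole_mass()
print(f"Planck mass        : {planck_mass:.4e} kg")
print(f"minimum BH mass    : {m_min:.4e} kg")
print(f"ratio to Planck    : {m_min / planck_mass:.3f}")
```

Under these assumptions the bound comes out as half the Planck mass; a different convention for the Compton length would change the numerical prefactor but not the Planck scale of the result.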

The validity of the result (76) is substantiated by the gravitational effects produced by the quantum mass distribution within spacetime [32, 33]. This demonstration elucidates that when mass density is condensed into a sphere with a diameter equal to half the Compton length, it engenders a quantum potential force that precisely counters the compressive gravitational force within a black hole.

Considering the Planck mass black hole as the lightest configuration, with its mass compressed within a sphere of half the Compton wavelength, it logically follows that black holes with masses greater than

[

19] exhibit their mass compressed into a sphere of smaller diameter. Consequently, given the significance of the elemental volume as the volume inside which content is uniformly distributed, taking the Planck length as the size of the smallest discrete elemental volume of spacetime is not sustainable. It would make it impossible to compress the mass of large black holes within a sphere with a diameter of half the Compton length, consequently preventing the achievement of gravitational equilibrium [

33].

This result is significant because the Planck length, taken as a minimum length, forms the basis of both loop quantum gravity and non-commutative string theories. The former theory relies on the postulate that there exists an absolute limitation on length measurements in quantum gravity.

While, in principle, it is correct to steer clear of the unrealizable concepts of the infinite and the infinitesimal, the fundamental arguments of these theories assume that pinpointing a particle within a sphere of Planck-length radius requires an energy greater than the Planck mass, which shields whatever occurs within the corresponding Schwarzschild radius. Consequently, the Planck length is taken to represent the smallest ‘quanta’ of space and time. This assumption conflicts with the fact that any existing black hole compresses its mass into a nucleus smaller than the Planck length [

33].

This compression is only feasible if spacetime discretization allows elemental cells of smaller volume, thereby distinguishing between the minimum measurable distance and the minimum discrete element of distance in the spacetime lattice. In the simulation analogy, the maximum grid density is equivalent to the elemental cell of the spacetime. Additionally, the vacuum in the collapsed branched phase envisioned by pure quantum gravity cannot occupy a spacetime volume smaller than one elemental cell.

Finally, it is worth noting that the current theory leads to the assumption that the elemental discrete spacetime distance corresponds to the Compton length of the maximum possible mass, which is the energy/mass of the Universe. Consequently, we have a criterion to rationalize the mass of the Universe—why it is not higher than its value—being intricately linked to the minimum length of the discrete spacetime element. If the pre-big-bang black hole (PBBH) has been generated by a fluctuation anomaly in an elemental cell of spacetime, it could not have a mass/energy content smaller than that which the universe possesses.

2.6.1. Dynamics of Wavefunction Collapse

The Markov process (17) can be described by the Smoluchowski equation for the Markov probability transition function (PTF) [

23]

where the PTF

is the probability that in time interval τ is transferred to point q.

The conservation of the PMD shows that the PTF displaces the PMD according to the rule [

23]

Generally, for the quantum case, Equation (79) cannot be reduced to a Fokker–Planck equation (FPE). The functional dependence of

on

, and on the PTF

, produces non-Gaussian terms [

19].

Nonetheless, if, at the initial time,

is stationary (e.g., quantum eigenstate) and close to the long-time final stationary distribution

, it is possible to assume that the quantum potential is constant in time as a Hamilton potential following the approximation

Since the quantum potential is then independent of the time evolution of the mass density, the stationary long-time solutions

can be approximately described by the Fokker–Planck equation

where

leading to the final equilibrium of the stationary quantum configuration

In ref. [

19] the stationary states of a harmonic oscillator obeying (84) are shown. The results show that the quantum eigenstates are stable and maintain their shape (with a small change in their variance) when subject to fluctuations.

It is worth mentioning that in (84) and does not represent the fluctuating quantum mass density but is the probability mass density (PMD) of it.

2.6.2. Evolution of the PMD of Superposition of States Submitted to Stochastic Noise

The quantum evolution of non-stationary superpositions of states (not considering fast kinetics and jumps) involves the integration of Equation (17), which reads

By utilizing both the Smoluchowski Equation (85) and the associated conservation Equation (80) for the PMD

, it is possible to integrate (85) by using its second-order discrete expansion

where

where

is a Gaussian random variable with zero mean and unit variance whose probability function

, for

, reads as

where the midpoint approximation has been introduced

and where

and

are the solutions of the deterministic problem

As shown in ref. [

19], the PTF

can be achieved after successive steps of approximation and reads as

and the PMD at the

-th instant reads as

leading to the velocity field

Moreover, the continuous limit of the PTF gives

where

.

The resolution of the recursive Expression (98) offers the advantage of being applicable to nonlinear systems that are challenging to handle using conventional approaches [

34,

35,

36,

37].
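The idea of integrating a stochastic equation of motion in discrete time steps driven by zero-mean, unit-variance Gaussian numbers can be illustrated on a much simpler stand-in. The sketch below is not the second-order midpoint scheme of ref. [19]: it is a plain first-order Euler–Maruyama step applied to a classical overdamped Langevin equation in a harmonic potential. All names and parameter values (`stiffness`, `diffusion`, `dt`, the ensemble size) are hypothetical and chosen only for illustration.

```python
import random


def euler_maruyama_ensemble(n_walkers=400, n_steps=1000, dt=0.01,
                            stiffness=1.0, diffusion=0.5, seed=1):
    """Integrate dq = -stiffness * q * dt + sqrt(2 * diffusion) * dW.

    Each step draws a Gaussian number with zero mean and unit variance
    and scales it by sqrt(2 * diffusion * dt).
    """
    rng = random.Random(seed)
    noise_scale = (2.0 * diffusion * dt) ** 0.5
    positions = [2.0] * n_walkers  # all walkers start away from equilibrium
    for _ in range(n_steps):
        positions = [
            q - stiffness * q * dt + noise_scale * rng.gauss(0.0, 1.0)
            for q in positions
        ]
    return positions


def variance(samples):
    """Population variance of a list of numbers."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)


if __name__ == "__main__":
    final = euler_maruyama_ensemble()
    # For this linear drift the stationary variance is diffusion / stiffness.
    print("sample variance:", round(variance(final), 3))
    print("expected value :", 0.5 / 1.0)
```

In this toy case the ensemble forgets its initial condition and relaxes to a Gaussian stationary distribution; the quantum case discussed above differs precisely because the quantum potential makes the drift depend on the evolving density.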

2.6.3. General Features of Relaxation of the Quantum Superposition of States

The classical Brownian process admits the stationary long-time solution

where

, leading to solution [

13]

As far as (98) is concerned, its solution cannot be expressed in a closed form, unlike (99), because it is contingent on the particular relaxation path the system follows toward the steady state. This path is significantly influenced by the initial conditions, namely the MDD as well as , and, consequently, by the initial time at which the quantum superposition of states is subjected to fluctuations.

In addition, from (86), we can see that depends on the exact sequence of inputs of stochastic noise, since, in classically chaotic systems, very small differences can lead to significant divergences of the trajectories in a short time. Therefore, in principle, different stationary configurations (analogues of quantum eigenstates) can be reached even when starting from identical superpositions of states. Consequently, in classically chaotic systems, Born’s rule can also be applied to the measurement of a single quantum state.

Even if , it is worth noting that, to have finite quantum lengths and (necessary to have the quantum stochastic dynamics) and a quantum-decoupled (classical) environment or measuring apparatus, the nonlinearity of the overall system (system–environment) is necessary: quantum decoherence, leading to the decay of superposition states, is significantly promoted by the widespread classical chaotic behavior observed in real systems.

On the other hand, a perfect linear universal system would maintain quantum correlations on a global scale and would never allow quantum decoupling between the system and the experimental apparatus performing the measure (see § 5). It should be noted that even the quantum decoupling of the system from the environment would be impossible, as quantum systems function as a unified whole. Merely assuming the existence of separate systems and environments subtly introduces the classical condition into the nature of the overall supersystem.

Furthermore, given that the relationship (19) (see Equations A31, A38 in ref. [19]) is valid only in the leading order of approximation of (i.e., during a slow relaxation process with small amplitude fluctuations), in instances of large fluctuations occurring on a timescale much longer than the relaxation period of , transitions may occur to that are not captured by (98), potentially leading from a stationary eigenstate to a general superposition of states.

In this case, the system will relax again toward another stationary state. Expression (96) describes the relaxation process occurring in the time interval between two large fluctuations rather than the system evolution toward a statistical mixture. Due to the extended timescales associated with these jumping processes, a system comprising a significant number of particles (or independent subsystems) undergoes a gradual relaxation towards a statistical mixture. The statistical distribution of this mixture is dictated by the temperature-dependent behavior of the diffusion coefficient.

2.7. EPR Paradox and Pre-Existing Reality

The SQHM highlights that quantum theory, despite its well-defined reversible deterministic theoretical framework, remains incomplete with respect to its foundational postulates. Specifically, the SQHM underscores that the measurement process is not explicated within the deterministic “Hamiltonian” framework of standard quantum mechanics. Instead, it manifests as a phenomenon comprehensively described within the framework of a quantum stochastic generalized approach.

The SQHM reveals that quantum mechanics represents the deterministic (zero noise) limit of a broader quantum-stochastic theory induced by spacetime gravitational background fluctuations.

From this standpoint, the zero-noise quantum mechanics defines the deterministic evolution of the “probabilistic wave” of the system. Moreover, the SQHM suggests that the term “probabilistic” is inaccurately introduced, arising from the inherent probabilistic nature of the measurement process, as the standard quantum mechanics itself cannot fully describe its output. Given the capacity of the SQHM to describe both wavefunction decay and the measurement process, thereby achieving a comprehensive quantum theory, the term “state wave” is a more appropriate substitute for the expression “probabilistic wave”. The SQHM theory reinstates the principle of determinism into quantum theory, emphasizing that it delineates the deterministic evolution of the “state wave” of the system. It elucidates the probabilistic outcomes as a consequence of the fluctuating gravitational background.

Furthermore, it is noteworthy to observe that the SQHM addresses the lingering question of preexisting reality before measurement. In contrast, the Copenhagen interpretation posits that only the measurement process allows the system to decay into a stable eigenstate, establishing a persistent reality over time. Consequently, it remains indeterminate within this framework whether a persistent reality exists prior to measurement.

On this point, the SQHM introduces a simple and natural innovation, showing that the world is capable of self-decaying through macroscopic-scale decoherence, wherein only the stable macroscopic eigenstates persist. These states, being stable with respect to fluctuations, establish an enduring reality that exists prior to measurement.

Regarding the EPR paradox, the SQHM demonstrates that, in a perfectly deterministic (coherent) quantum universe, it is not feasible to achieve complete decoupling between the subparts of the system, namely the measuring apparatus and the measured system, and to carry out the measurement in a finite time interval. Instead, this condition can only be realized within a large-size classical supersystem (a quantum system in a 4D spacetime with a fluctuating background), where the quantum entanglement, due to the quantum potential, extends up to a finite distance [

19]. Under these circumstances, the SQHM shows that it is possible to restore local relativistic causality (see § 2.5).

If the Lennard-Jones interparticle potential yields a sufficiently weak force, resulting in a microscopic range of quantum non-local interaction and a large-scale classical phase, photons, as demonstrated in reference [

19], maintain their quantum behavior at the macroscopic level due to their infinite quantum potential range of interaction. Consequently, they represent the optimal particles for conducting experiments aimed at demonstrating the characteristics of quantum entanglement over a distance.

In order to clearly describe the standpoint of the SQHM on this argument, we can analyze the outcome of an experiment with two entangled photons traveling in opposite directions in the state

where

and

are vertical and horizontal polarizations, respectively, and

is a constant phase coefficient.

Photons “one” and “two” impact polarizers (Alice) and (Bob) with polarization axes positioned at angles and relative to the horizontal axis, respectively. For our purpose, we can assume .

The probability that photon “two” also passes through Bob’s polarizer is .
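The pass probabilities for such an experiment can be sketched numerically. The explicit state is not reproduced in the text above, so the code below assumes the commonly used polarization-entangled form (|H,H⟩ + e^{iφ}|V,V⟩)/√2 and models each polarizer as a projection onto cos(angle)|H⟩ + sin(angle)|V⟩. The state, the function names, and the parameter names `alpha`, `beta`, and `phase` are all assumptions made for illustration.

```python
import cmath
import math


def joint_pass_probability(alpha, beta, phase=0.0):
    """Probability that both photons pass polarizers at angles alpha and beta.

    Assumes the state (|H,H> + exp(i*phase) |V,V>) / sqrt(2).
    """
    amplitude = (
        math.cos(alpha) * math.cos(beta)
        + cmath.exp(1j * phase) * math.sin(alpha) * math.sin(beta)
    ) / math.sqrt(2.0)
    return abs(amplitude) ** 2


def conditional_pass_probability(alpha, beta, phase=0.0):
    """Probability that photon two passes, given that photon one passed.

    For the assumed state, photon one passes with probability 1/2
    whatever the angle alpha.
    """
    return joint_pass_probability(alpha, beta, phase) / 0.5


if __name__ == "__main__":
    for degrees in (0, 30, 45, 60, 90):
        beta = math.radians(degrees)
        p = conditional_pass_probability(0.0, beta)
        print(f"angle difference {degrees:2d} deg -> P = {p:.3f}")
```

With zero phase the conditional probability reduces to the squared cosine of the angle difference between the two polarizers, the familiar Malus-type law.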

As is widely held in the quantum physics community, when photon “one” passes through polarizer with its axes at an angle of , the state of photon “two” instantaneously collapses to a linear polarized state at the same angle , resulting in the combined state .

In the context of the SQHM, which is able to describe the kinetics of the wavefunction collapse, the collapse is not instantaneous; following the Copenhagen standpoint, one must rigorously assert that the state of photon “two” is not defined before its measurement at the polarizer .

Therefore, after photon “one” passes through polarizer , from the standpoint of SQHM, we have to assume that the combined state is , where the state represents the state of photon “two” in the interaction with the residual quantum potential field generated by photon “one” at polarizer . When the photons travel in opposite directions, the spatial extension of the field of photon two is twice that crossed by photon one before its absorption. In this regard, it is noteworthy that the quantum potential is not proportional to the intensity of the field. Instead, it is proportional to its second derivative. Therefore, a minor perturbation in the field with a high frequency at the tail of photon two (during the absorption of photon one) can give rise to a significant quantum potential field .

When the residual part of the two entangled photons

also passes through Bob’s polarizer, it makes the transition

with probability

. Owing to its spatial extension and the finite speed of light, the duration of the absorption of photon two (wavefunction decay and measurement) is just the time necessary to transfer the information about the measurement of photon one to the place where photon two is measured. A possible experiment is proposed in ref. [

19].

Summarizing, the SQHM reveals the following key points:

The SQHM posits that quantum mechanics represents the deterministic limit of a broader quantum stochastic theory;

Classical reality emerges at the macroscopic level, persisting as a preexisting reality before measurement;

The measurement process is feasible in a classical macroscopic world, because we can have really quantum decoupled and independent systems, namely the system and the measuring apparatus;

Determinism is acknowledged within standard quantum mechanics under the condition of zero GBN;

Locality is achieved at the macroscopic scale, where quantum non-local domains condense to punctual domains.

Determinism is recovered in quantum mechanics, which represents the zero-noise limit of the SQHM. The probabilistic nature of quantum measurement is introduced by the GBN;

The maximum light speed of the propagation of information and the local relativistic causality align with quantum uncertainty;

The SQHM addresses the GBN as playing the role of the hidden variable in the Bohm non-local hidden variable theory: the Bohm theory ascribes the indeterminacy of the measurement process to the unpredictable pilot wave, whereas the Stochastic Quantum Hydrodynamics attributes its probabilistic nature to the fluctuating gravitational background. This background is challenging to determine due to its predominantly early-generation nature during the Big Bang, characterized by the weak force of gravity without electromagnetic interaction. In the context of Santilli’s non-local hidden variable approach in IsoRedShift Mechanics, it is possible to demonstrate the direct correspondence between the non-local hidden variable and the GBN. Furthermore, it must be noted that the consequent probabilistic nature of the wavefunction decay, and of the measurement outcome, is also compounded by the inherently chaotic nature of the classical law of motion and the randomness of the GBN, further contributing to the indeterminacy of measurement outcomes.

2.8. The SQHM and the Objective-Collapse Theories

The SQHM fits well within the so-called Objective Collapse Theories [

38,

39,

40,

41]. In collapse theories, the Schrödinger equation is augmented with additional nonlinear and stochastic terms, referred to as spontaneous collapses, that serve to localize the wave function in space. The resulting dynamics ensures that, for microscopic isolated systems, the impact of these new terms is negligible, leading to the recovery of usual quantum properties with only minute deviations.

An inherent amplification mechanism operates to strengthen the collapse in macroscopic systems comprising numerous particles, overpowering the influence of quantum dynamics. Consequently, the wave function for these systems is consistently well-localized in space, behaving practically like a point in motion following Newton’s laws.

In this context, collapse models offer a comprehensive depiction of both microscopic and macroscopic systems, circumventing the conceptual challenges linked to measurements in quantum theory. Prominent examples of such theories include the Ghirardi–Rimini–Weber model [

38], the continuous spontaneous localization model [

39] and the Diósi–Penrose model [

40,

41].

While the SQHM aligns well with existing Objective-Collapse models, it introduces an innovative approach that effectively addresses critical aspects within this class of theories. One notable achievement is the resolution of the ‘tails’ problem by incorporating the quantum potential length of interaction, in addition to the De Broglie length. Beyond this interaction range, the quantum potential cannot maintain coherent Schrödinger quantum behavior and wavefunction tails.

The SQHM also highlights that there is no need for an external environment, demonstrating that the quantum stochastic behavior responsible for wave-function collapse can be an intrinsic property of the system in a spacetime with fluctuating metrics due to the gravitational background. Furthermore, situated within the framework of relativistic quantum mechanics, which aligns seamlessly with the finite speed of light and information transmission, the SQHM establishes a clear connection between the uncertainty principle and the invariance of light speed.

The theory also derives, within a fluctuating quantum system, the indeterminacy relation between energy and time (an aspect not expressible in conventional quantum mechanics), providing insights into measurement processes that cannot be completed within a finite time interval in a truly quantum global system. Notably, the theory finds support in the confirmation of the Lindemann constant for the melting point of solid lattices and the transition of He4 from fluid to superfluid states. Additionally, it proposes a potential explanation for the measurement of entangled photons through an Earth-Moon-Mars experiment [

19].

3. Simulation Analogy: Complexity in Achieving Future States

The discrete spacetime structure that follows from the finite speed of light together with the quantum uncertainty (???) allows the implementation of a discrete simulation of the universe’s evolution.

In this case, the programmer of such a universal simulation has to face the following problems:

One key argument revolves around the inherent challenge of any computer simulation, namely the finite nature of computer resources. The capacity to represent or store information is confined to a specific number of bits. Similarly, the availability of Floating-point Operations Per Second (FLOPS) is limited. Regardless of efforts, achieving a truly “continuous” simulated reality in the mathematical sense becomes unattainable due to these constraints. In a computer-simulated universe, the existence of infinitesimals and infinities is precluded, necessitating quantization, which involves defining discrete cells in spacetime.

The speed of light must be finite. Another common issue in computer simulations arises from the inherent limitation of computing power in terms of the speed of executing calculations. Objects within the simulation cannot surpass a certain speed, as doing so would render the simulation unstable and compromise its coherence. No propagating process can travel at infinite speed, as this would require an unlimited amount of computational power. Therefore, in a discretized representation, the maximum velocity of any moving object or propagating process must conform to a predefined minimum single-operation calculation time. In the simulation analogy, this constraint motivates the finite speed of light (c).

Discretization must be dynamic. The use of fixed-size discrete grids is clearly a huge waste of computational resources in spacetime regions where there are no bodies and nothing to calculate (there, a single large cell can be used to save computational resources). On the one hand, the need to increase the size of the simulation requires lowering the resolution; on the other hand, it is possible to achieve better resolution within smaller domains of the simulation. This dichotomy is already familiar to those creating vast computerized cosmological simulations [

42]. This problem is tackled by varying the mass quantization grid resolution as a function of the local mass density and other parameters, leading to the so-called Automatic Tree Refinement (ATR). A similar approach, the Adaptive Moving Mesh Method [

43], would vary the size of the cells of the quantized mass grid locally, as a function of the kinetic energy density, while at the same time varying the size of the local discrete time step, which should be kept per cell as a fourth parameter of space, in order to better distribute the computational power where it is needed the most. By doing so, the grid would become distorted, with different local sizes. In a 4D simulation this effect would also involve time, which would be perceived as flowing differently in different parts of the simulation: faster in regions of space with higher local kinetic energy density, and slower where it is lower (additional consequences are reported and discussed in Section 3.3).
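The resource argument behind a dynamic discretization can be sketched with a toy one-dimensional refinement. The code below is a minimal illustration, not the ATR or Adaptive Moving Mesh algorithms cited above: it bisects a cell only while it holds more than a chosen number of particles and ties a local time step to the cell size through a maximum speed. The function names and the parameters `max_per_cell`, `min_size`, and `max_speed` are hypothetical.

```python
def refine(lo, hi, particles, max_per_cell=2, min_size=1e-3):
    """Recursively bisect [lo, hi) until each cell holds few particles.

    Returns a list of (lo, hi, particle_count) tuples, one per final cell.
    """
    inside = [x for x in particles if lo <= x < hi]
    if len(inside) <= max_per_cell or (hi - lo) <= min_size:
        return [(lo, hi, len(inside))]
    mid = 0.5 * (lo + hi)
    return (refine(lo, mid, inside, max_per_cell, min_size)
            + refine(mid, hi, inside, max_per_cell, min_size))


def local_time_step(cell, max_speed=1.0):
    """Time step chosen so that nothing crosses more than one cell per step."""
    lo, hi, _count = cell
    return (hi - lo) / max_speed


if __name__ == "__main__":
    # Five particles clustered near the origin and one far away.
    particles = [0.01, 0.02, 0.03, 0.04, 0.05, 0.9]
    for cell in refine(0.0, 1.0, particles):
        lo, hi, count = cell
        print(f"[{lo:.6f}, {hi:.6f})  particles={count}  dt={local_time_step(cell):.6f}")
```

In this toy setting the nearly empty half of the domain is covered by a single large cell with a long time step, while the crowded region is split into small cells with short time steps, which is the trade-off described in the list above.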

In principle, there are two instruments or methods for computing the future states of a system. One involves utilizing a classical apparatus composed of conventional computer bits. Unlike Qbits, these classical bits cannot create, maintain, or utilize the superposition of their states, rendering them classical machines. On the other hand, quantum computation employs a quantum system of Qbits and utilizes the quantum law of evolution for calculations.

However, the capabilities of the classical and quantum approaches to predict the future state of a system differ. This distinction becomes evident when considering the calculation of the evolution of a many-body system. In the classical approach, computer bits must compute the position and interactions of each body at every calculation step. This becomes increasingly challenging (and less precise) due to the chaotic nature of classical evolution. In principle, classical N-body simulations are straightforward, as they primarily entail integrating the 6N ordinary differential equations that describe particle motions. However, in practice, the number of particles, N, is often exceptionally large (of the order of millions, or even ten billion as in the Millennium simulation [

43]). Moreover, the computational expense becomes prohibitive due to the quadratic increase

in the number of particle-particle interactions that need to be computed. Consequently, direct integration of the differential equations requires a rapidly growing amount of calculation and data storage resources for large-scale simulations.
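The quadratic growth of the pairwise workload can be made concrete with a minimal direct-summation sketch. It assumes Newtonian gravity with a small softening length to avoid division by zero; the function names, the unit gravitational constant, and the softening value are illustrative assumptions, not part of any cited simulation code.

```python
def pair_count(n):
    """Number of distinct particle pairs among n particles."""
    return n * (n - 1) // 2


def direct_sum_accelerations(positions, masses, g_const=1.0, softening=1e-3):
    """Accelerations from direct pairwise summation in three dimensions.

    Returns (accelerations, number_of_pairs_evaluated).
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    pairs = 0
    for i in range(n):
        for j in range(i + 1, n):
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = sum(c * c for c in d) + softening ** 2
            inv_r3 = r2 ** -1.5
            for k in range(3):
                # Equal and opposite forces on the two bodies of the pair.
                acc[i][k] += g_const * masses[j] * d[k] * inv_r3
                acc[j][k] -= g_const * masses[i] * d[k] * inv_r3
            pairs += 1
    return acc, pairs


if __name__ == "__main__":
    for n in (10, 1_000, 1_000_000):
        print(f"N = {n:>9,d}  ->  pairs per step = {pair_count(n):,d}")
```

Doubling the number of particles roughly quadruples the number of pair evaluations per step, which is why large cosmological codes replace direct summation with tree or mesh approximations.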

On the other hand, quantum evolution does not require defining the state of each particle at every step. It addresses the evolution of the global wave of superposition of states for all particles. Eventually, when needed or when decoherence is induced or spontaneously occurs, the classical state of each particle at a specific instant is obtained through the wavefunction decay (from this standpoint, “calculated” is the analogue of “measured”). This represents a form of optimization: sacrificing the knowledge of the classical state at each step, but being content with knowing the classical state of each particle at discrete time instants (only once every large number of calculation steps). This approach allows for a quicker computation of the future state of reality with a smaller use of resources. Moreover, since the length of quantum coherence is finite, the groups of entangled particles undergoing a common wavefunction decay are of smaller, finite size, further simplifying the algorithm of the simulation.

The advantage of quantum computation over classical computation can be metaphorically demonstrated by addressing the challenge of finding the global minimum. When using classical methods such as steepest descent or similar approaches, the pursuit of the global minimum, as in the determination of prime numbers, results in an exponential increase in the calculation time as the maximum value of the prime numbers rises.

In contrast, employing the quantum method allows us to identify the global minimum in linear or, at least, polynomial time. This can be loosely conceptualized as follows: in the classical case, it is akin to having a ball fall into each hole to find a minimum, and then the values of each individual minimum must be compared with all possible minima before determining the overall minimum. The quantum method involves using an infinite number of balls, spanning the entire energy spectrum. Consequently, at each barrier between two minima (thanks to quantum tunneling), some of the balls can explore the next minimum almost simultaneously. This simultaneous exploration (quantum computing) significantly shortens the time needed to probe the entire set of minima, after which wavefunction decay allows the outcome of the process to be measured (or detected).
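The classical half of this metaphor can be sketched in a few lines. The example below covers only the "ball falling into a hole" picture, using plain gradient descent on an arbitrary double-well function; it does not simulate tunneling or any quantum algorithm. The test function, the step size, and the function names are hypothetical choices for illustration.

```python
def f(x):
    """Toy landscape with one local and one global minimum."""
    return x ** 4 - 3.0 * x ** 2 + x


def df(x):
    """Derivative of the toy landscape."""
    return 4.0 * x ** 3 - 6.0 * x + 1.0


def descend(x0, rate=0.01, n_steps=2000):
    """Plain gradient descent: the ball rolls into the nearest hole."""
    x = x0
    for _ in range(n_steps):
        x -= rate * df(x)
    return x


def multi_start_minimum(starts):
    """Drop one ball per starting point, then compare the minima found."""
    candidates = [descend(x0) for x0 in starts]
    return min(candidates, key=f)


if __name__ == "__main__":
    right = descend(2.0)    # ends in the shallower, local minimum
    left = descend(-2.0)    # ends in the deeper, global minimum
    print(f"start  2.0 -> x = {right:.4f}, f = {f(right):.4f}")
    print(f"start -2.0 -> x = {left:.4f}, f = {f(left):.4f}")
    print(f"best of both starts: x = {multi_start_minimum([2.0, -2.0]):.4f}")
```

A single descent only ever reports the hole it started above, so finding the overall minimum requires one run per basin followed by a comparison; this per-basin cost is what the tunneling picture in the text is meant to avoid.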

If we aim to create a simulation on a scale comparable to the vastness of the Universe, we must find a way to address the many-body problem. Currently, solving this problem remains an open challenge in the field of Computer Science. However, Quantum Mechanics appears to be a promising candidate for making the many-body problem manageable. This is achieved through the utilization of the Entanglement process, which encodes coherent particles and their interaction outcomes as a wavefunction. The wavefunction evolves without being explicitly solved and, when coherence diminishes, the wavefunction collapse calculates (and thereby determines) the essential classical properties of the system given by the underlying physics at discrete time steps.

This sheds light on the reason why physical properties remain undefined until measured; from the standpoint of the simulation analogy, it is a direct consequence of the quantum optimization algorithm, where properties are computed only when necessary. Moreover, the combination of the coherent quantum evolution with the wavefunction collapse has been proven to constitute a Turing-complete computational process, as evidenced by its application in Quantum Computing for performing computations.

An even more intriguing aspect of the possibility that reality can be virtualized as a computer simulation is the existence of an algorithm capable of solving the intractable many-body problem, challenging classical algorithms. Consequently, the entire class of problems characterized by a phenomenological representation, describable by quantum physics, can be rendered tractable through the application of quantum computing. However, it’s worth noting that very abstract mathematical problems, such as the ‘lattice problem’ [

44], may still remain intractable. Currently, the most well-known successful examples of quantum computing include Shor’s algorithm [

45] for prime factorization and Grover’s algorithm [

46] for inverting ‘black box functions.’
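To make the "black box" search concrete, the amplitude bookkeeping of Grover's algorithm can be followed with an ordinary classical simulation on a small list of real amplitudes. The sketch below uses the standard oracle sign flip followed by inversion about the mean; it only tracks the mathematics and provides no speed-up on a classical machine. The function name and arguments are illustrative.

```python
import math


def grover_success_probability(n_items, marked, iterations):
    """Probability of measuring the marked item after Grover iterations.

    The state is stored as a list of real amplitudes, starting from the
    uniform superposition over n_items basis states.
    """
    amp = [1.0 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        amp[marked] = -amp[marked]             # oracle: flip the marked sign
        mean = sum(amp) / n_items              # diffusion step:
        amp = [2.0 * mean - a for a in amp]    # inversion about the mean
    return amp[marked] ** 2


if __name__ == "__main__":
    n_items = 8
    # About (pi / 4) * sqrt(N) iterations maximize the success probability.
    best = int(math.floor(math.pi / 4.0 * math.sqrt(n_items)))
    for k in range(best + 2):
        p = grover_success_probability(n_items, marked=5, iterations=k)
        print(f"iterations = {k}: P(marked) = {p:.4f}")
```

For eight items the success probability rises from 1/8 to about 0.945 after two iterations and then decreases again, so the number of oracle calls scales with the square root of the list size rather than with the size itself.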

For classical computation, no polynomial-time algorithm is known for factoring an integer into primes, whereas quantum computation solves this problem in polynomial time with Shor’s algorithm. However, not all problems in NP (nondeterministic polynomial time) that are considered classically intractable can be reduced to polynomial-time problems by utilizing quantum computation. This implies that quantum computing does not simplify all problems but only a certain limited class.

The possibility of regarding the universe many-body problem as a computer simulation requires that the NP problem of the N-body evolution be tractable. In such a scenario, it becomes theoretically feasible to utilize universe-like particle simulations for solving NP problems by embedding the problem within specific assigned particle behavior. This concept implies that the Laws of Physics are not inherently given but are rather formulated to represent the solution of specific problems.

To clarify further: if various instances of universe-like particle simulations were employed to tackle distinct problems, each instance would exhibit different Laws of Physics governing the behavior of its particles. This perspective opens up the opportunity to explore the purpose of the Universe and inquire about the underlying problem it seeks to solve.

In essence, it prompts the question: What is the fundamental problem that the Universe is attempting to address?

3.1. How the Universe Computes the Next State: the Unraveling of the Meaning of Time and Free Will

To analyze the universal simulation that reproduces the evolution with the characteristics of the SQHM, we work at this stage in flat space, so that gravity is excluded except for the gravitational background noise that generates quantum decoherence, and consider the local evolution in a cell of spacetime of the order of a few De Broglie lengths or quantum coherence lengths

[

19]. After a certain characteristic time, the superposition of states, evolving according to the equation of motion (19), decays into one of its eigenstates, a stable state that survives fluctuations and therefore constitutes a measurable state lasting over time. We can define it as reality since, owing to its stability, it gives the same result even after repeated measurements. Moreover, given the macroscopic decoherence, the local domains in different places are quantum-disentangled from each other; therefore, their decays to stable eigenstates cannot happen simultaneously. Due to the perceived randomness of the GBN, this process can be assumed to be stochastically distributed in space, leading to a fractal classical reality in spacetime that is thus locally quantum but globally classical.

Furthermore, after an interval of time much longer than the wavefunction decay time, each domain is perturbed by a large fluctuation that makes it jump to a quantum superposition, which again evolves following the quantum law of evolution for a while before a new wavefunction collapse, and so on.

From the standpoint of the SQHM, the universal computation method exploits the quantum evolution for a while and then, through decoherence, derives the classical N-body state at certain discrete instants by wavefunction collapse, exactly as a universal quantum computer would. It then proceeds to the next step by computing the evolution of the quantum entangled wavefunction, avoiding the repeated classical calculation of the state of the N bodies and deriving it only when the quantum state decays into the classical one (as in a measurement).

In practice, the universe realizes a sort of computational optimization to speed up the derivation of its future state by utilizing a Qbit-like quantum computation.

3.1.1. The Free Will

Following the pigeonhole principle, which states that any computer that is a subsystem of a larger one cannot handle the same information (thus cannot produce a greater power of calculation in terms of speed and precision) as the larger one, and considering the inevitable information loss due to compression, we can infer that a human-made computer, even utilizing a vast system of Q-bits, cannot be faster and more accurate than the universal quantum computer.

Therefore, the temporal horizon for predicting future states before they happen is necessarily limited from inside reality. Consequently, among the many possible future states, we can determine or choose the future outcome only within a certain temporal horizon, so free will is limited. Moreover, since the decision about which state of reality we want to realize is not connected to events preceding a certain interval of time (4D disentanglement), we can also say that such a decision is not predetermined.

Nevertheless, beyond the fact that the will is free but limited, the present analysis brings out an additional aspect of the concept of free will: whether many possible states of reality exist in future scenarios, providing us with a genuine opportunity to choose which of them to attain.

In this context, within the deterministic quantum evolution framework, or even in classical scenarios, with precisely defined initial conditions in 4D spacetime, such a possibility is effectively prohibited since the future states are predetermined. Time in this context does not flow but merely serves as a “coordinate” of the 4D spacetime where reality is depicted, losing the significance it holds in real life.

In the absence of GBN, knowing precisely the initial condition of the universe at the initial instant of the Big Bang and the laws of physics, it is possible to predict the future of the universe.

This is because, unless noise is introduced into the simulation, the basic quantum laws of physics are deterministic.

Actually, in the context of stochastic quantum evolution, the random nature of the GBN plays an important role in shaping the future states of the universe. From the standpoint of the simulation analogy, the nature of the GBN presents important informational aspects.

The randomness introduced by this noise renders the simulation inherently unpredictable to an internal observer. Even if the internal observer employs the identical algorithm as the simulation to forecast future states, the lack of access to the same noise source results in rapid divergence of their predictions. This is due to the critical influence of each individual fluctuation on the wavefunction decay (see section ??). In other words, to the internal observer, the future would be encrypted by such noise. Furthermore, if the noise used in the simulation analogy were pseudo-random with enough unpredictability, only whoever possesses the seed would in fact be able to predict the future or invert the arrow of time. Even if the noise is pseudo-random, the problem of deriving the encryption key can be practically intractable. Therefore, in the presence of GBN, the future outcome of the computation is “encrypted” by the randomness of the GBN.
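The role of the seed can be illustrated with a toy model. The sketch below is only an analogy: it replaces the stochastic quantum dynamics with a chaotic logistic map (an assumption made purely for illustration) and adds a tiny seeded fluctuation at each step. The function name `simulate`, the noise amplitude, and the seed values are hypothetical.

```python
import random

def simulate(seed, steps=80, x0=0.2, noise=1e-9):
    """Iterate a chaotic map, adding a tiny seeded fluctuation at each step."""
    rng = random.Random(seed)               # the seed plays the role of the hidden key
    x = x0
    trajectory = [x]
    for _ in range(steps):
        x = 4.0 * x * (1.0 - x)             # deterministic update (logistic map, toy stand-in)
        x += noise * (rng.random() - 0.5)   # small noise term standing in for the GBN
        x = min(max(x, 0.0), 1.0)           # keep the state inside [0, 1]
        trajectory.append(x)
    return trajectory

reality = simulate(seed=12345)       # the "actual" evolution
with_key = simulate(seed=12345)      # observer who holds the same seed
without_key = simulate(seed=54321)   # observer with the same algorithm but another seed

# Largest disagreement between the real evolution and the seedless forecast.
gap = max(abs(a - b) for a, b in zip(reality, without_key))
print("same seed reproduces the evolution:", reality == with_key)
print("largest deviation without the seed:", gap)
```

With the same seed the forecast coincides with the evolution step by step, whereas a different seed yields a forecast that agrees only for the first few tens of steps, even though the fluctuations are of relative size 1e-9.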

Moreover, if the simulation makes use of a pseudo-random routine to generate the GBN and the noise appears truly random from inside the reality, it follows that the seed “encoding GBN” is kept outside the simulated reality and is unreachable to us. In this case we are facing an instance of a “one-time pad”, effectively equivalent to deletion, which is proven unbreakable. Therefore, in principle, the simulation could effectively conceal information about the key used to encrypt the GBN in a manner that remains unrecoverable.
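For readers unfamiliar with the one-time pad, a minimal sketch follows. It assumes a truly random key as long as the message, which is the condition under which the unbreakability proof holds; a key stretched from a short seed would be a stream cipher and would not strictly inherit that proof. The messages and the helper name `xor_bytes` are hypothetical.

```python
import secrets

def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each message byte with the matching key byte."""
    if len(key) != len(data):
        raise ValueError("a one-time pad key must be as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"future state"
key = secrets.token_bytes(len(message))   # random key, used once, kept "outside"
ciphertext = xor_bytes(message, key)
recovered = xor_bytes(ciphertext, key)    # applying the same key again decrypts

# Without the key, any other message of the same length is equally consistent
# with the ciphertext: there is always some key that would "decrypt" to it.
decoy = b"another past"
decoy_key = xor_bytes(ciphertext, decoy)
decoy_plaintext = xor_bytes(ciphertext, decoy_key)
```

The last three lines show why the ciphertext alone carries no information about the message: every candidate plaintext of the same length corresponds to some key.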

From this perspective, the renowned Einstein quote, “God does not play dice with the universe,” can be aptly interpreted. In this context, it implies that the programmer of the universal simulation does not engage in randomness, as everything is predetermined for him. However, from within the reality, we remain unable to ascertain the seed of the noise, and the noise manifests itself as genuinely random. Furthermore, even if from inside the reality we were able to detect the pseudo-random nature of the GBN, given its high level of randomness the challenge of deciphering the key would remain insurmountable [47] and the encryption key practically irretrievable.

Thus, we would never be able to trace back to the encryption key and completely reproduce the outcomes of the simulation, even knowing the initial state and all the laws of physics perfectly, since the simulated evolution depends on the form of each single fluctuation.

This universal behavior emphasizes the concept of ‘free will’ as a constrained capability, unable to access information beyond a specific temporal horizon. Furthermore, the simulation analogy delves deeper into this idea, portraying free will as a faculty originating in macroscopic classical (living) systems characterized by fractal dimensions in spacetime. Consequently, free will lacks perfect definition in our consciousness. Nonetheless, through the exercise of our free will, we can impact the forthcoming macroscopic state, albeit with a certain imprecision and ambiguity in our intentions, yet not predetermined by preceding states of reality beyond a specific interval of time.

3.2. The Universal “Pasta Maker” and the Actual Time in 4D spacetime

Working with a discrete spacetime offers advantages that are already supported by lattice gauge theory [48]. This theory demonstrates that in such a scenario, the path integral becomes finite-dimensional and can be assessed using stochastic simulation techniques, such as the Monte Carlo method.
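As a minimal illustration of how a discretized path integral can be sampled stochastically, the sketch below applies the Metropolis algorithm to a one-dimensional harmonic oscillator on a periodic Euclidean time lattice. This is a far simpler system than a gauge theory and is chosen here only as an assumption for illustration; the function name, the lattice spacing `a`, and all parameter values are hypothetical, with units in which ħ = 1.

```python
import math
import random

def metropolis_x2(n_sites=32, a=0.5, mass=1.0, omega=1.0,
                  sweeps=4000, burn_in=500, step=1.0, seed=1):
    """Estimate <x^2> for a lattice harmonic oscillator by Metropolis sampling."""
    rng = random.Random(seed)
    x = [0.0] * n_sites                     # one path: x at each time slice

    def local_action(i, xi):
        # Terms of the Euclidean action that depend on site i (periodic lattice).
        left = x[(i - 1) % n_sites]
        right = x[(i + 1) % n_sites]
        kinetic = mass * ((xi - left) ** 2 + (right - xi) ** 2) / (2.0 * a)
        potential = 0.5 * a * mass * omega ** 2 * xi ** 2
        return kinetic + potential

    total, count = 0.0, 0
    for sweep in range(sweeps):
        for i in range(n_sites):
            proposal = x[i] + step * (2.0 * rng.random() - 1.0)
            delta = local_action(i, proposal) - local_action(i, x[i])
            # Accept with probability min(1, exp(-delta)).
            if delta <= 0.0 or rng.random() < math.exp(-delta):
                x[i] = proposal
        if sweep >= burn_in:                # discard the initial thermalization sweeps
            total += sum(v * v for v in x) / n_sites
            count += 1
    return total / count

x2 = metropolis_x2()
print("Monte Carlo estimate of <x^2>:", x2)
```

Because the lattice has a finite number of sites, the integral over paths is an ordinary finite-dimensional integral, and the estimate should lie close to the continuum ground-state value 1/(2mω) = 0.5, up to discretization and statistical errors.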

In our scenario, the fundamental assumption is that the optimization procedure for universal computation has the capability to generate the evolution of reality. This hypothesis suggests that the universe evolves quantum mechanics in polynomial time, efficiently solving the many-body problem and transitioning it from NP to P. In this context, quantum computers, employing Q-bits with wavefunction decay that both produces and effectively computes the result, utilize a method inherent to the physical reality itself.

From a global spacetime perspective, aside from the collapses in each local domain, it is important to acknowledge a second fluctuation-induced effect. Larger fluctuations taking place over extended time intervals can induce a jumping process in the wavefunction configuration, leading to a generic superposition of states. This prompts a restart in its evolution following quantum laws. As a result, after each local wavefunction decay, a quantum resynchronization phenomenon occurs, propelling the progression towards the realization of the next local classical state of the universe.

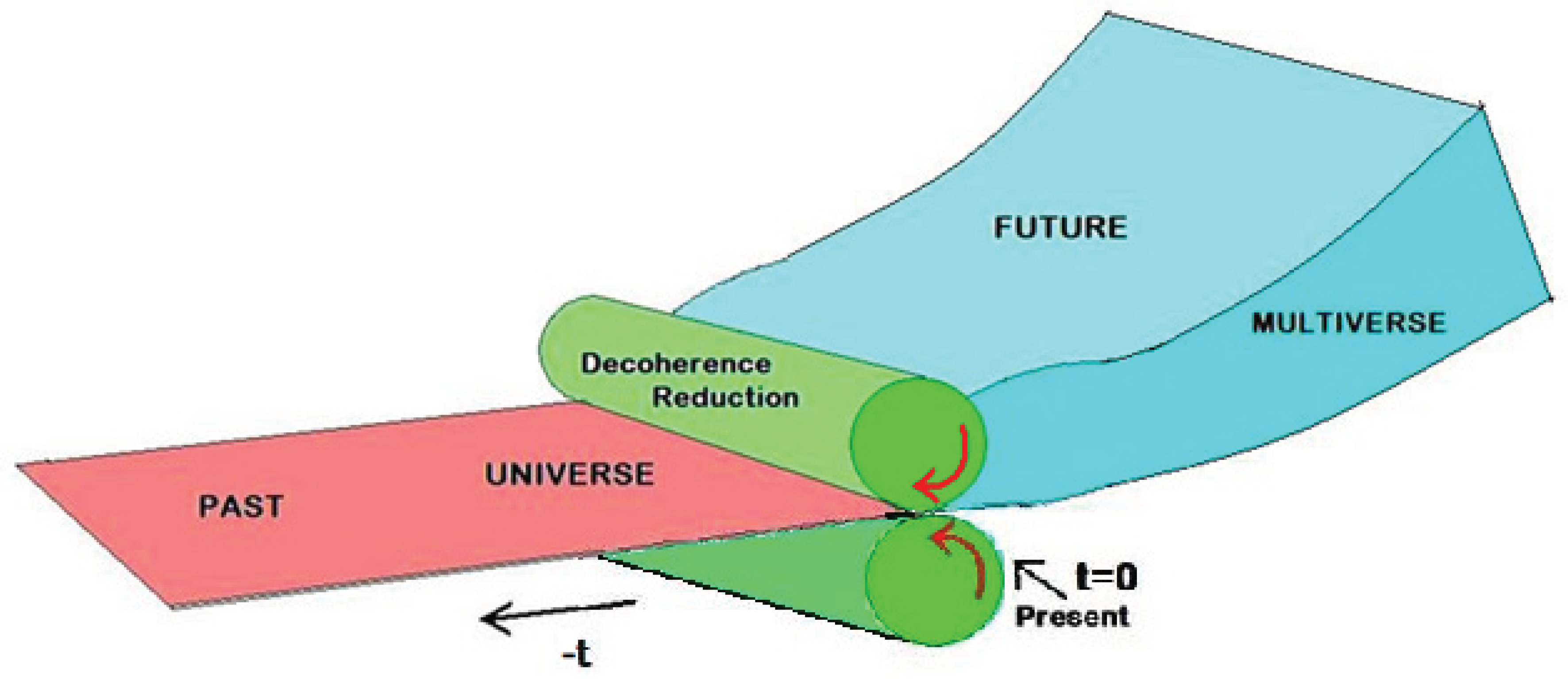

Furthermore, with quantum synchronization, at the onset of the subsequent moment, the array of potential quantum states (in terms of superposition) encompasses multiple classical states of realization. Consequently, in the current moment, the future states form a quantum multiverse where each individual classical state is potentially attainable depending on events (such as the chain of wave-function decay processes) occurring beforehand. As the present unfolds, marked by the quantum decoherence process leading to the attainment of a classical state, the past is generated, ultimately resulting in the realization of the singular (fractal) classical reality: the Universe.

Moreover, if all possible configurations of the realizable universe exist in the future (extending past our ability to determine or foresee over a finite temporal extent), the past is comprised of fixed events (universe) that we are aware of but unable to alter.

In this context, we can metaphorically illustrate spacetime and its irreversible universal evolution as an enormous pasta maker. In this analogy, the future multiverse is represented by a blob of unshaped flour dough, inflated because it contains all possible states. This dough, extending up to the surface of the present, is then pressed into a thin pasta sheet, representing the quantum superposition decay to the classical state realizing the universe.

Figure 1. The Universal “Pasta-Maker”.

The 4D surface boundary between the future multiverse and the past universe marks the instant of present time. At this point, the irreversible process of decoherence occurs, entailing the computation or reduction to the present classical state. This specific moment defines the current time of reality, a concept that cannot be precisely located within the framework of relativistic spacetime.

3.3. Quantum and Gravity

Until now, we haven’t adequately discussed how gravity arises from the discrete nature of the universal ‘calculation.’ Nevertheless, it’s interesting to provide some insights into the issue because, viewed through this perspective, gravity naturally emerges as quantized.

Considering the universe as an extensive quantum computer operating on a predetermined spatiotemporal grid doesn’t yet represent the most optimized simulation. In fact, the fixed dimensions of the elemental grid haven’t been considered in the optimization of the simulation. This becomes apparent when we realize that maintaining constant elemental cell dimensions leads to a significant dispersion of computational resources in spacetime regions devoid of bodies or any need for calculation. In such regions, we could simply allocate one large cell, thereby conserving computational resources.

This perspective aligns with a numerical algorithm employed in numerical analysis known as adaptive mesh refinement (AMR). This technique dynamically adjusts the accuracy of a solution within specific sensitive or turbulent regions during the calculation of a simulation. In numerical solutions, computations often occur on predetermined, quantified grids, such as those in the Cartesian plane, forming the computational grid or ‘mesh.’ However, many issues in numerical analysis do not demand uniform precision across the entire computational grid as, for instance, used for graph plotting or computational simulation. Instead, these issues would benefit from selectively refining the grid density only in regions where enhanced precision is required.
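The refinement idea can be sketched in one dimension. The example below is not the Berger-Oliger-Colella algorithm itself, only a simplified recursive bisection under assumed choices: a hypothetical density profile with a narrow peak near x = 0.3, a midpoint estimate of the content of each cell, and arbitrary values for the threshold and the minimum cell width.

```python
import math

def density(x):
    """Hypothetical density: a narrow peak near x = 0.3 over an almost empty background."""
    return 0.01 + 50.0 * math.exp(-((x - 0.3) / 0.02) ** 2)

def refine(cells, rho, threshold, min_width):
    """Recursively bisect every cell whose estimated content exceeds the threshold."""
    refined = []
    for left, right in cells:
        width = right - left
        mid = 0.5 * (left + right)
        content = rho(mid) * width          # midpoint estimate of the content of the cell
        if content > threshold and width / 2 >= min_width:
            halves = [(left, mid), (mid, right)]
            refined.extend(refine(halves, rho, threshold, min_width))
        else:
            refined.append((left, right))   # coarse resolution is sufficient here
    return refined

coarse = [(i / 8, (i + 1) / 8) for i in range(8)]    # uniform starting grid on [0, 1]
cells = refine(coarse, density, threshold=0.05, min_width=1 / 256)
widths = [right - left for left, right in cells]
print(len(cells), "cells; finest width", min(widths), "; coarsest width", max(widths))
```

Cells far from the peak keep their original width, while cells around x = 0.3 are subdivided down to the minimum width, so resolution is spent only where the density requires it.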

The local adaptive mesh refinement (AMR) creates a dynamic programming environment enabling the adjustment of numerical computation precision according to the specific requirements of a computation problem, particularly in areas of multidimensional graphs that demand precision. This method allows for lower levels of precision and resolution in other regions of the multidimensional graphs. The credit for this dynamic technique of adapting computation precision to specific requirements goes to Marsha Berger, Joseph Oliger, and Phillip Colella [49, 50], who developed an algorithm for dynamic gridding known as AMR. The application of AMR has subsequently proven to be widely beneficial and has been utilized in the investigation of turbulence problems in hydrodynamics, as well as the exploration of large-scale structures in astrophysics, exemplified by its use in the Bolshoi Cosmological Simulation [51].

An intriguing variation of Adaptive Mesh Refinement is the Adaptive Moving Mesh Method proposed by Weizhang Huang and Robert Russell [52]. This method employs an r-adaptive (relocation adaptive) strategy to achieve outcomes akin to those of Adaptive Mesh Refinement. Upon reflection, an r-adaptive strategy, grounded in local energy density as a parameter, bears resemblance to the workings of curved space-time in our Universe.

Conceivably, a more sophisticated cosmological simulation could leverage an advanced iteration of the Adaptive Moving Mesh Method algorithm. This iteration would involve relocating space grid cells and adjusting the local delta time for each cell. By moving cells from regions of lower energy density to those of higher energy density at the system’s speed of light and scaling the local delta time accordingly, the resultant grid would appear distorted and exhibit behavior analogous to curved space-time in General Relativity.

Furthermore, as cell relocation induces a distortion in the grid mesh, updating the grid at the speed of light, as opposed to simultaneous updating, would disperse computations across various time frames. In this scenario, gravity, time dilation, and gravitational waves would spontaneously manifest within the simulated universe, mirroring their emergence from curved space-time in our Universe.
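The relocation strategy can be illustrated with a static one-dimensional sketch based on the equidistribution principle on which r-adaptive methods build: a fixed number of nodes is placed so that every cell carries the same integral of a monitor function. This is not the full moving-mesh method of [52]. The energy density profile, the monitor function, the signal speed `c`, and the rule that the local time step is proportional to the cell width are all assumptions made for illustration.

```python
import bisect
import math

def energy_density(x):
    """Hypothetical energy density: a single concentration centred at x = 0.5."""
    return 20.0 * math.exp(-((x - 0.5) / 0.05) ** 2)

def monitor(x):
    """Monitor function: larger values attract more grid nodes."""
    return 1.0 + energy_density(x)

def equidistribute(mon, n_cells, a=0.0, b=1.0, samples=2000):
    """Place n_cells + 1 nodes so that each cell holds an equal share of the integral of mon."""
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    cumulative = [0.0]
    for i in range(samples):                 # trapezoid rule on a fine reference grid
        h = xs[i + 1] - xs[i]
        cumulative.append(cumulative[-1] + 0.5 * h * (mon(xs[i]) + mon(xs[i + 1])))
    total = cumulative[-1]
    nodes = [a]
    for k in range(1, n_cells):
        target = total * k / n_cells
        j = bisect.bisect_left(cumulative, target)   # first index with cumulative[j] >= target
        frac = (target - cumulative[j - 1]) / (cumulative[j] - cumulative[j - 1])
        nodes.append(xs[j - 1] + frac * (xs[j] - xs[j - 1]))
    nodes.append(b)
    return nodes

nodes = equidistribute(monitor, n_cells=20)
widths = [nodes[i + 1] - nodes[i] for i in range(len(nodes) - 1)]
c = 1.0                                     # assumed signal speed of the simulated system
local_dt = [w / c for w in widths]          # assumed rule: time step scales with cell width
print("narrowest cell:", min(widths), " widest cell:", max(widths))
```

In this toy setting the nodes crowd around the energy concentration, and the cells there receive proportionally shorter local time steps, which loosely mimics the clock-rate differences discussed above.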

A criterion for reducing the grid step is to provide a more detailed description in regions with higher mass density (more particles or higher energy density) and with a higher amplitude of induced quantum potential fluctuations, which reduces the De Broglie length of quantum coherence.

This point of view finds an example in the Lagrangian approach, as outlined in ref. [