Submitted: 12 March 2024

Posted: 14 March 2024

Abstract

Keywords:

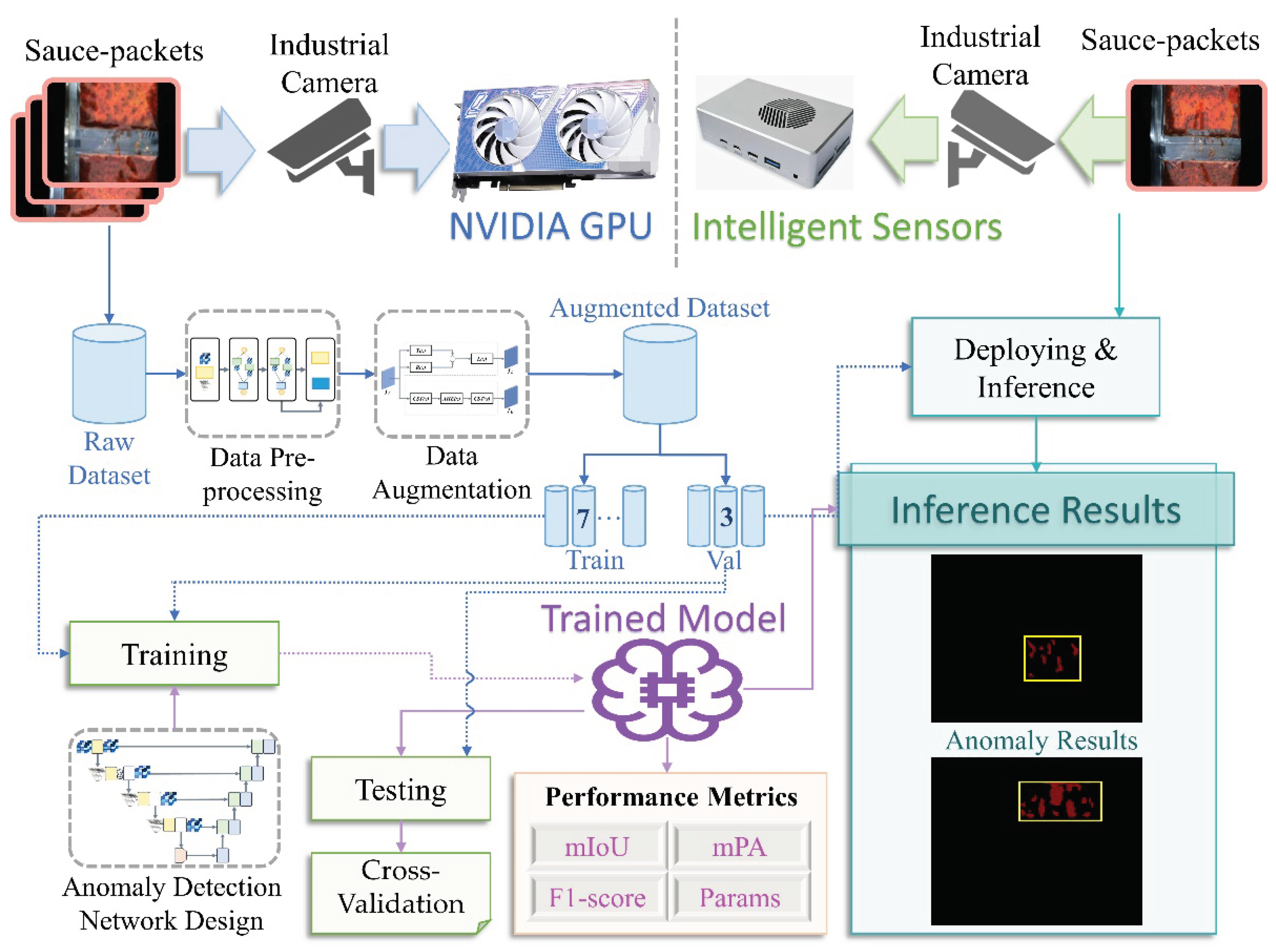

1. Introduction

2. Materials and Methods

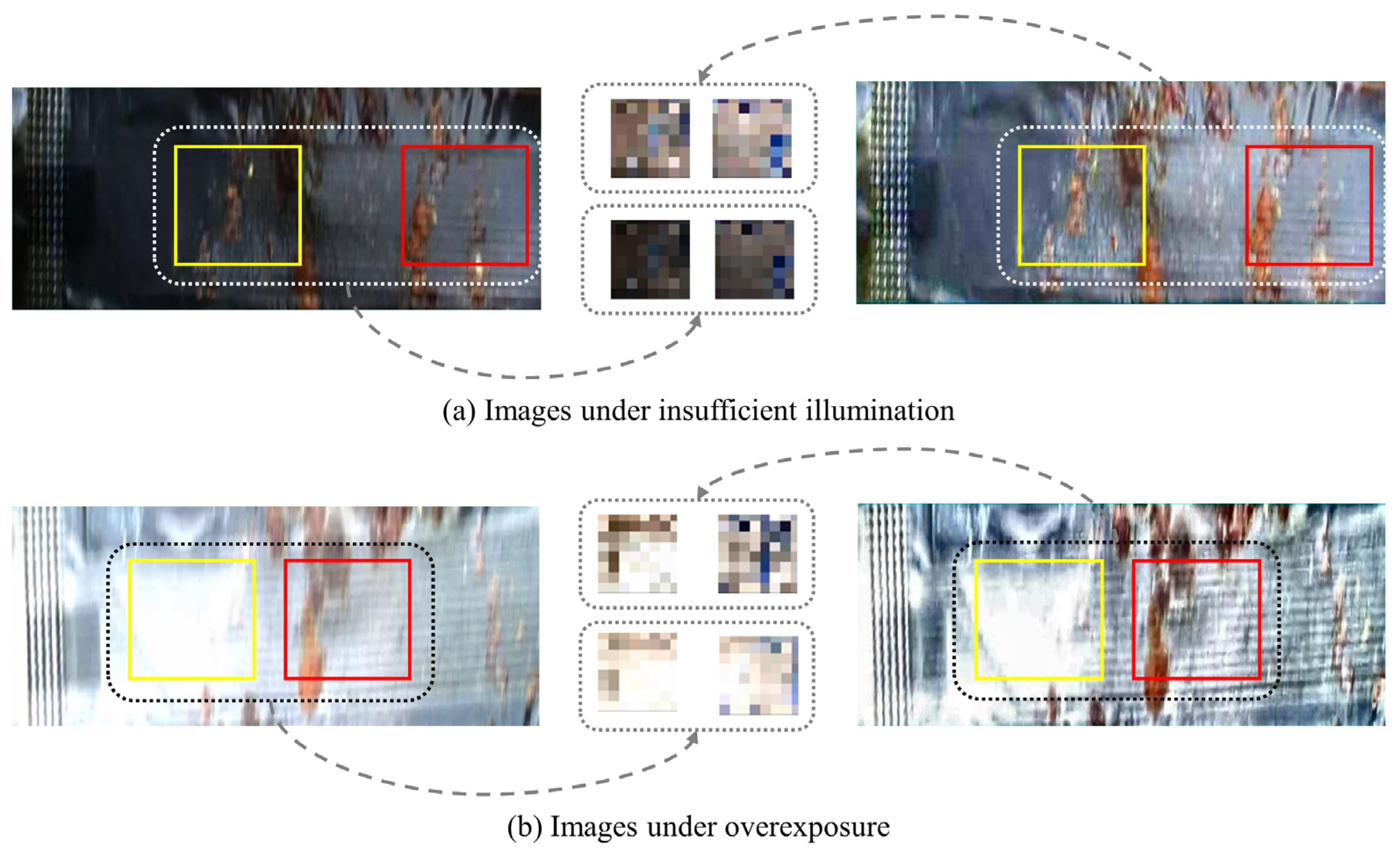

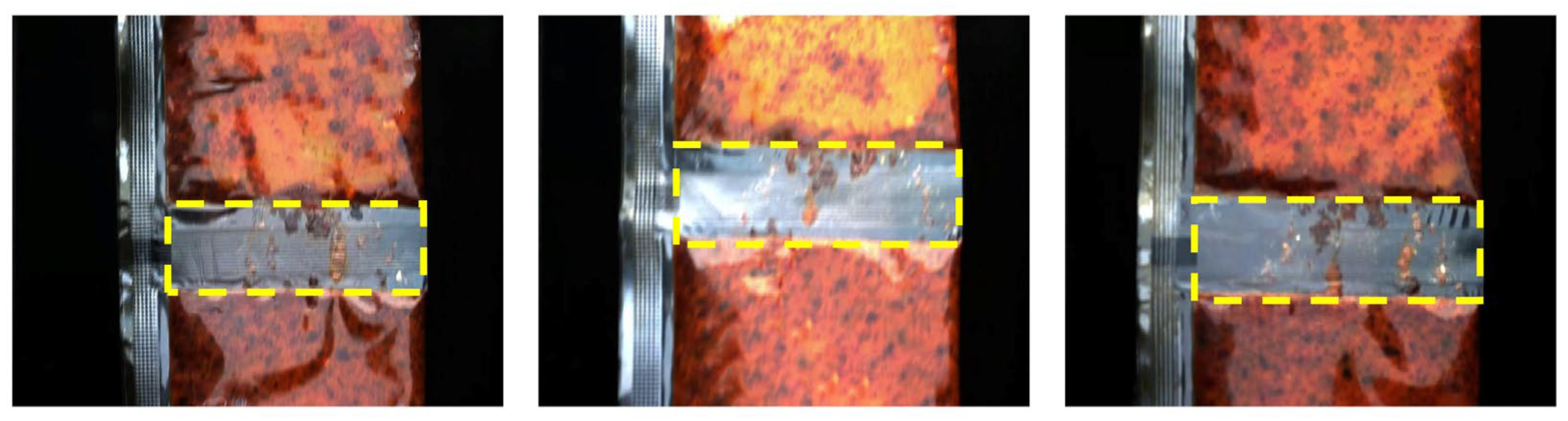

2.1. Uneven-Light Image Enhancement for Illumination-Aware Region Enhancement

U = -0.169R - 0.331G + 0.5B + 128

V = 0.5R - 0.419G - 0.081B + 128
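The two chrominance equations above can be applied per pixel as in the following minimal sketch. The luminance line is an assumption: the Y equation does not appear in the text here, so the usual BT.601 weights (0.299, 0.587, 0.114) are used for illustration. The function name `rgb_to_yuv` and the final rounding and clipping to 8 bits are also illustrative choices, not taken from the paper.

```python
import numpy as np


def rgb_to_yuv(rgb):
    """Convert an (..., 3) RGB array with values in 0..255 to 8-bit YUV."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]

    # ASSUMPTION: Y is not given in the text; standard BT.601 luma weights.
    y = 0.299 * r + 0.587 * g + 0.114 * b
    # U and V follow the two equations stated in this section.
    u = -0.169 * r - 0.331 * g + 0.5 * b + 128.0
    v = 0.5 * r - 0.419 * g - 0.081 * b + 128.0

    yuv = np.stack([y, u, v], axis=-1)
    # Round and clip so the result fits an 8-bit image (illustrative choice).
    return np.clip(np.rint(yuv), 0, 255).astype(np.uint8)
```

For any gray pixel (R = G = B) the U and V coefficients sum to zero, so both chrominance channels sit at the 128 offset and only Y carries the brightness. This is why an illumination-oriented enhancement would typically operate on the Y channel alone.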

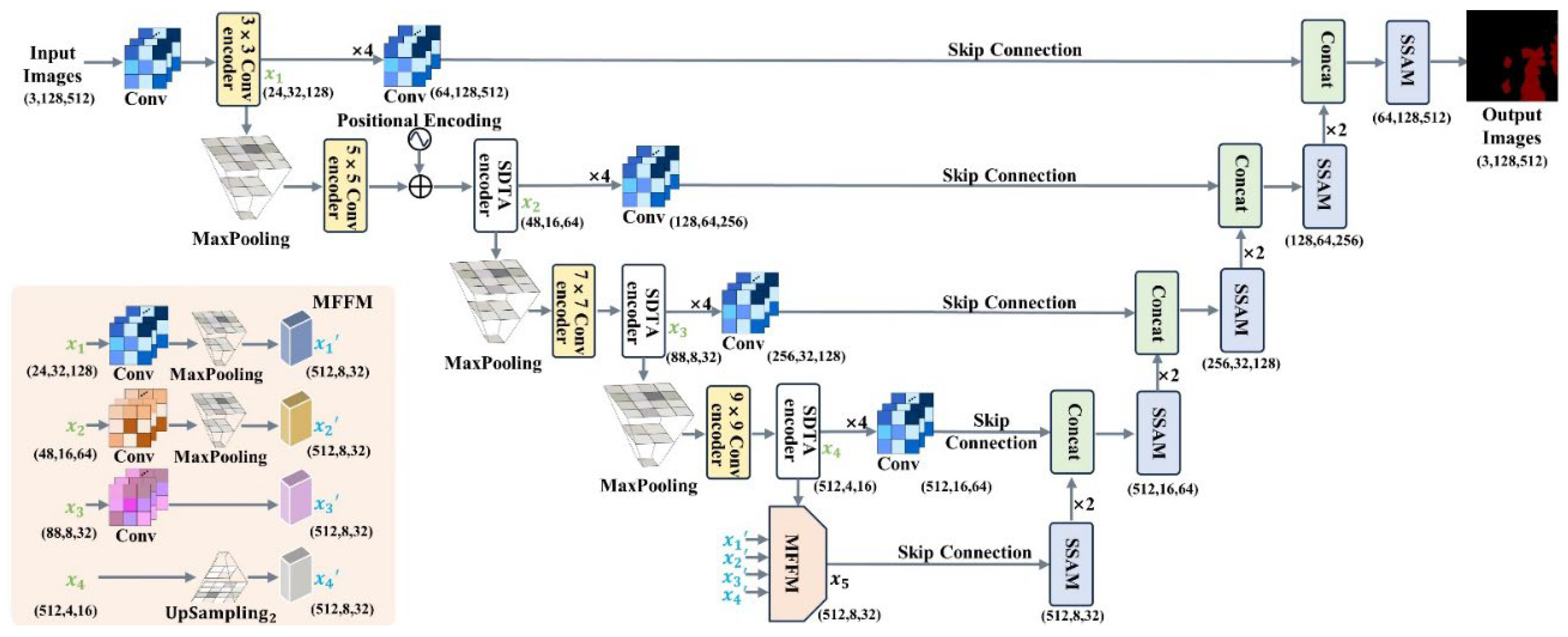

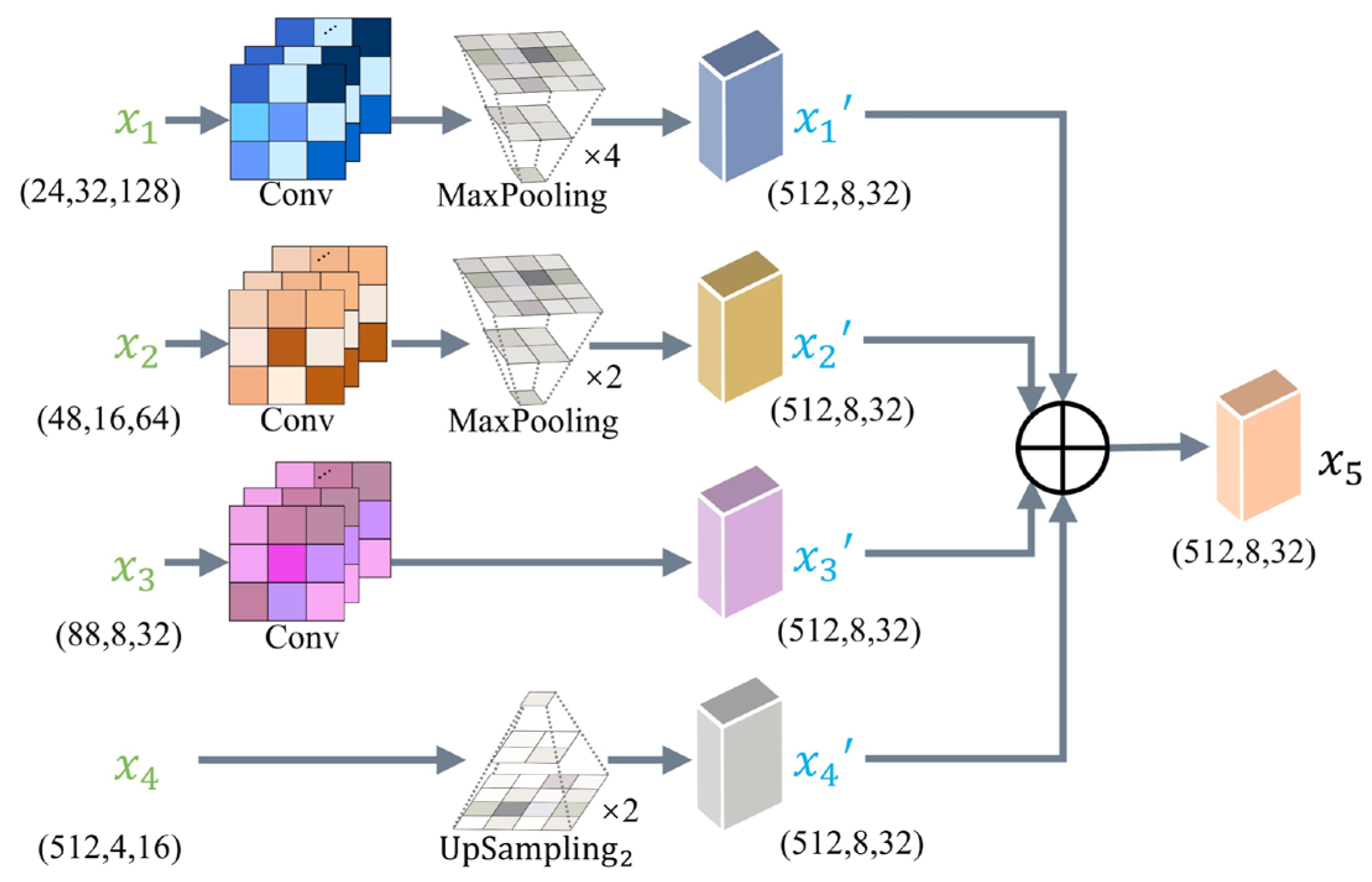

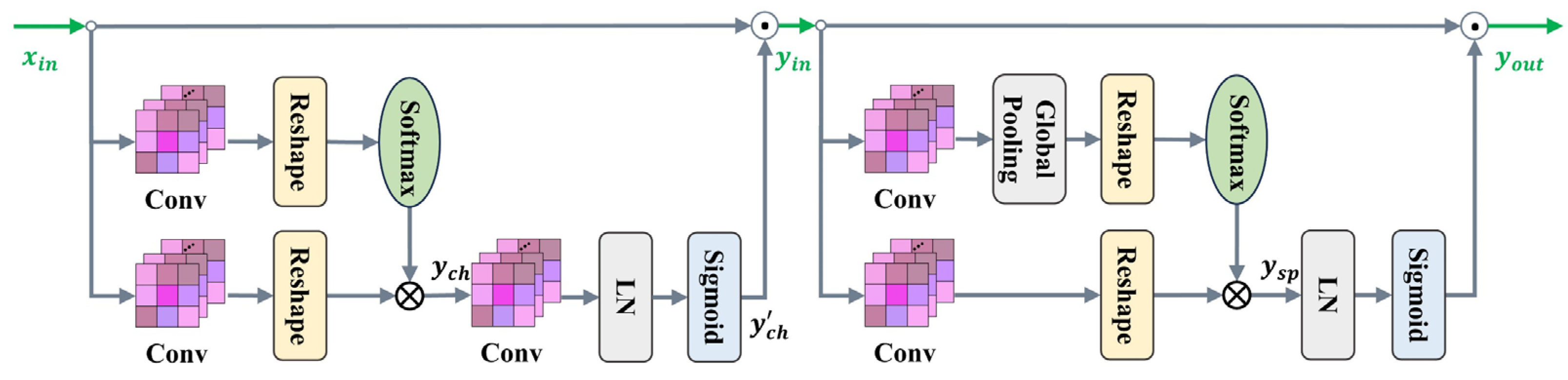

2.2. ISLS Network Details for Leakage Segmentation

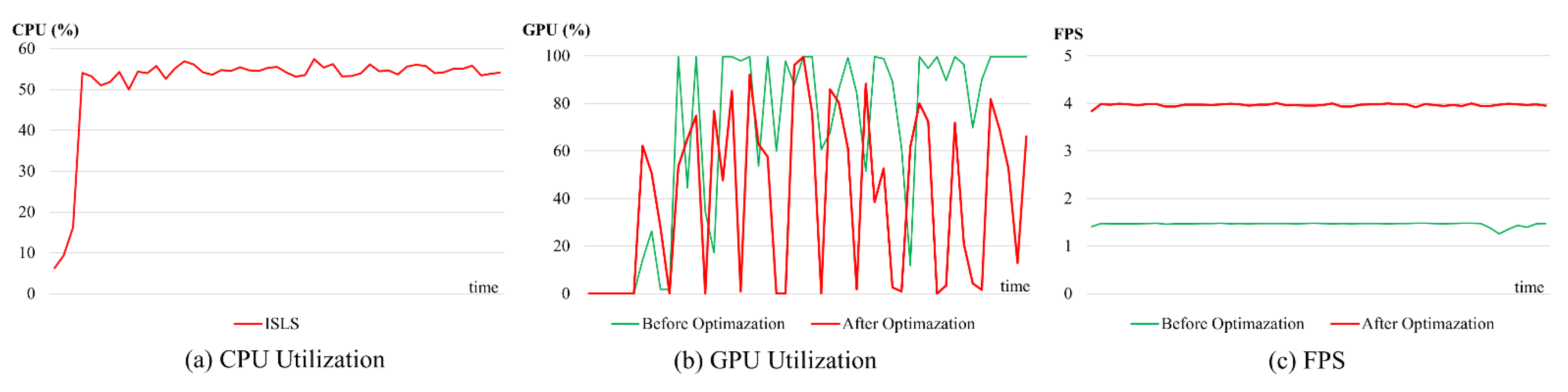

3. Experiments and Results

3.1. Dataset and Experiment Setting

3.2. Evaluation Indexes
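The result tables report mIoU, mPA, and F1-score. The sketch below shows one common way to compute these from a confusion matrix. It is a sketch under stated assumptions, not necessarily the exact definitions used by the authors: per-class pixel accuracy is taken as per-class recall, and all three scores are macro-averaged over classes. The function names `confusion_matrix` and `segmentation_scores` are hypothetical.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Rows index the ground-truth class, columns the predicted class."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    idx = target * num_classes + pred
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def segmentation_scores(cm):
    """Macro-averaged IoU, pixel accuracy, and F1 (assumed definitions)."""
    cm = cm.astype(np.float64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class c, but labeled otherwise
    fn = cm.sum(axis=1) - tp  # labeled class c, but predicted otherwise

    # Denominators are integer counts, so a floor of 1 only guards empty classes.
    iou = tp / np.maximum(tp + fp + fn, 1)
    pa = tp / np.maximum(tp + fn, 1)          # per-class pixel accuracy (recall)
    precision = tp / np.maximum(tp + fp, 1)
    f1 = 2 * precision * pa / np.maximum(precision + pa, 1e-12)

    return {"mIoU": iou.mean(), "mPA": pa.mean(), "F1": f1.mean()}
```

A usage example on a four-pixel mask would be `segmentation_scores(confusion_matrix([0, 1, 1, 1], [0, 0, 1, 1], 2))`, where the first argument is the prediction and the second the ground truth.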

3.3. Experiment Analysis of ULIE

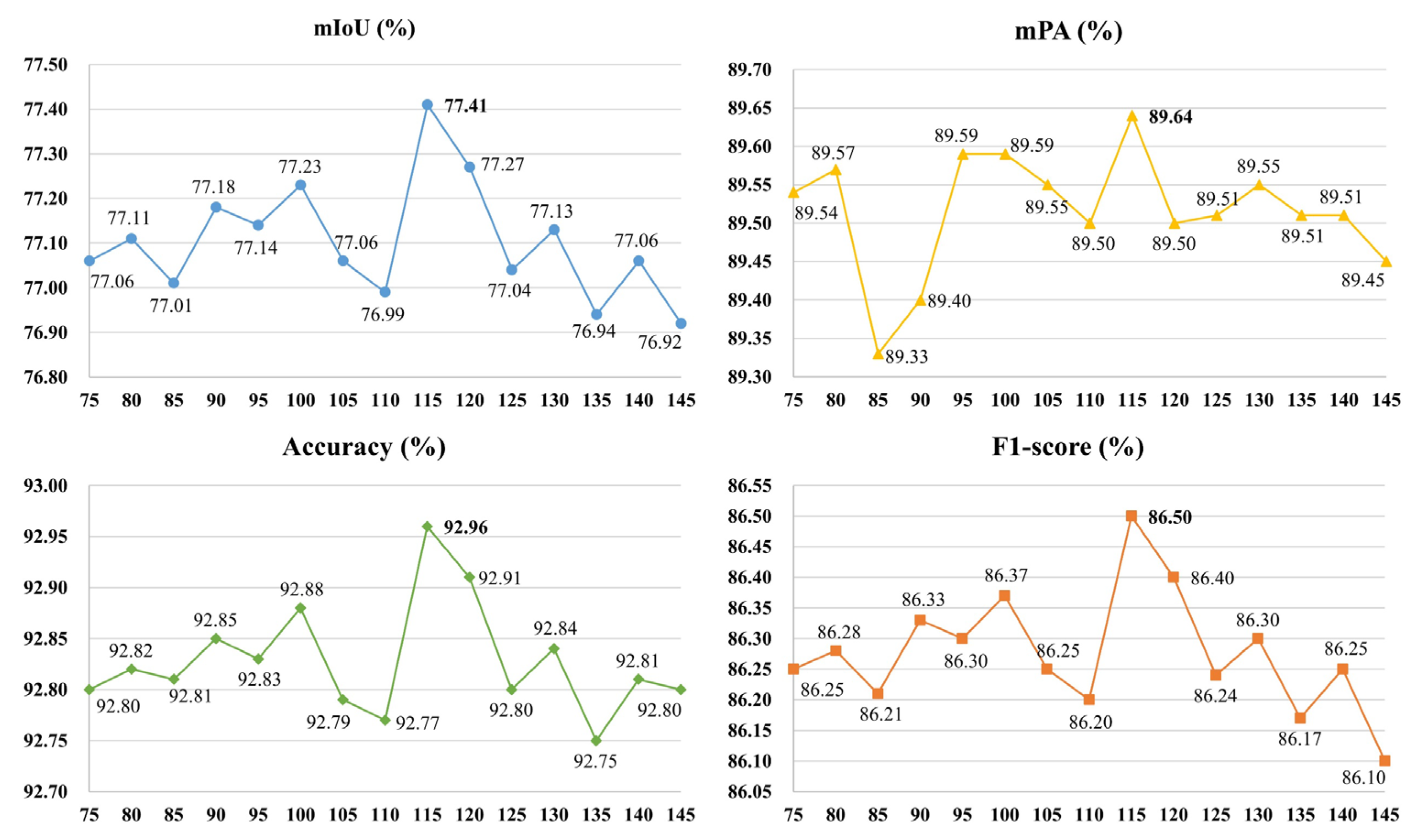

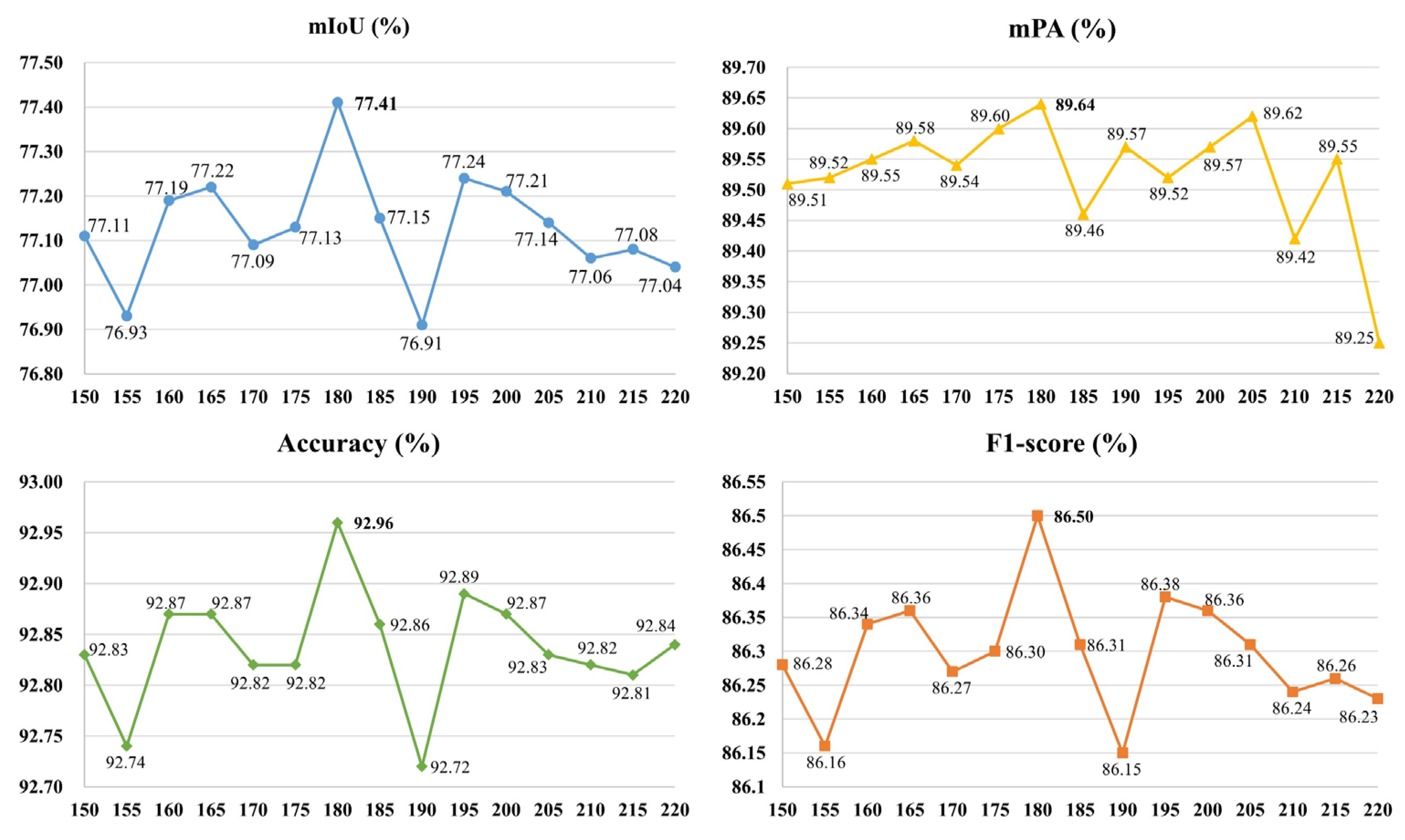

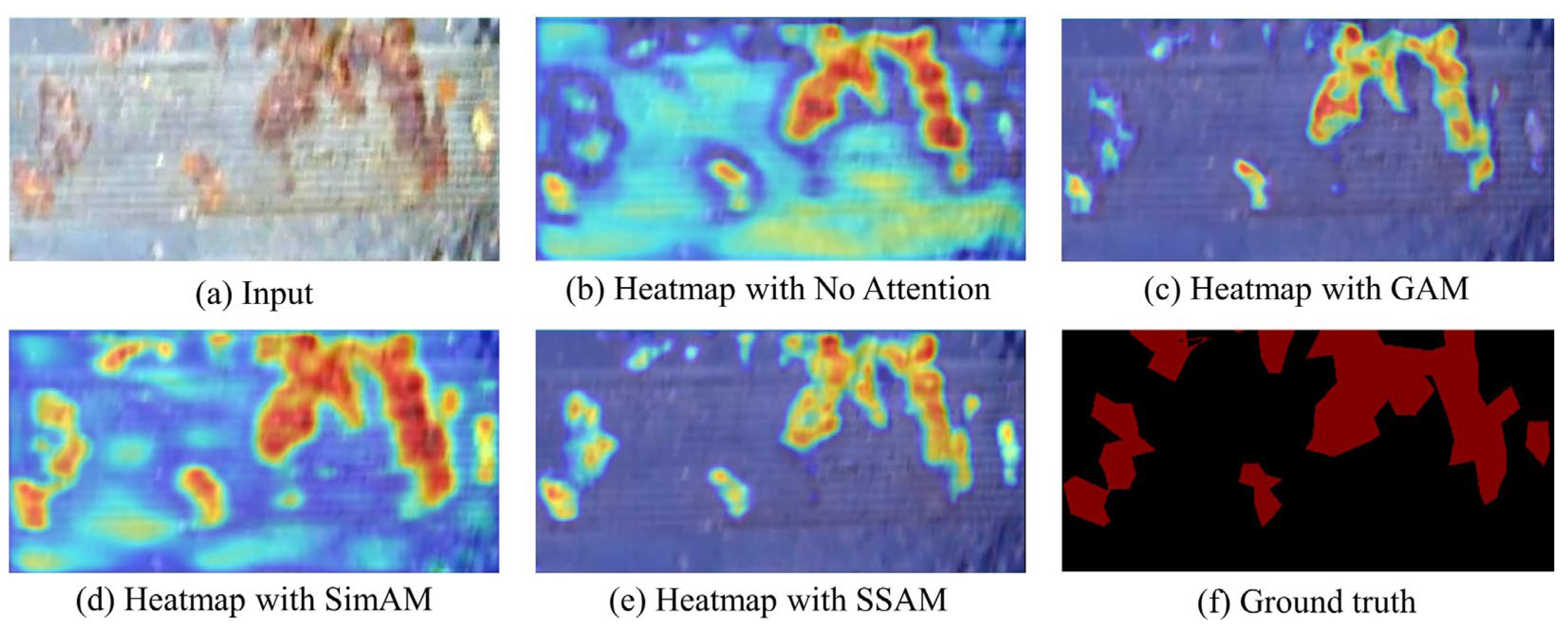

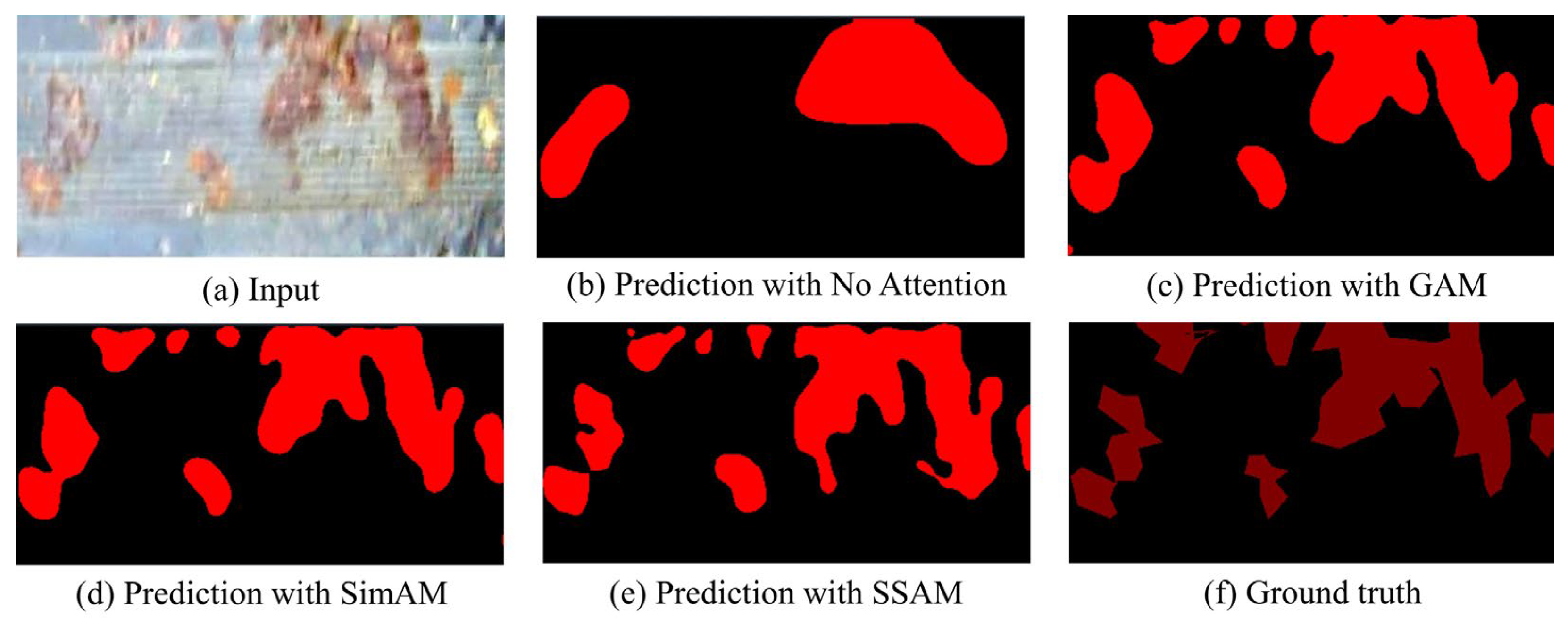

3.4. Analysis of Ablation Study

3.5. Comparison with Other Segmentation Methods

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhu, Y.; Xu, Z.; Lin, Y.; Chen, D.; Ai, Z.; Zhang, H. A Multi-Source Data Fusion Network for Wood Surface Broken Defect Segmentation. Sensors 2024, 24, 1635.

- Liu, S.; Li, Z.; Wang, G.; Qiu, X.; Liu, T.; Cao, J.; Zhang, D. Spectral–Spatial Feature Fusion for Hyperspectral Anomaly Detection. Sensors 2024, 24, 1652.

- Wu, Q.; Li, H.; Tian, C.; et al. AEKD: Unsupervised auto-encoder knowledge distillation for industrial anomaly detection[J]. Journal of Manufacturing Systems, 2024, 73, 159–169.

- He, X.; Kong, D.; Yang, G.; et al. Hybrid neural network-based surrogate model for fast prediction of hydrogen leak consequences in hydrogen refueling station[J]. International Journal of Hydrogen Energy, 2024, 59, 187–198.

- Rai, S.N.; Cermelli, F.; Fontanel, D.; et al. Unmasking anomalies in road-scene segmentation[C]. International Conference on Computer Vision. 2023, 4037–4046.

- Li, J.; Lu, Y.; Lu, R. Detection of early decay in navel oranges by structured-illumination reflectance imaging combined with image enhancement and segmentation[J]. Postharvest Biology and Technology, 2023, 196, 112162.

- Fulir, J.; Bosnar, L.; Hagen, H.; et al. Synthetic Data for Defect Segmentation on Complex Metal Surfaces[C]. Computer Vision and Pattern Recognition. 2023, 4423–4433.

- Gertsvolf, D.; Horvat, M.; Aslam, D.; et al. A U-net convolutional neural network deep learning model application for identification of energy loss in infrared thermographic images[J]. Applied Energy, 2024, 360, 122696.

- Qi, S.; Alajarmeh, O.; Shelley, T.; et al. Fibre waviness characterisation and modelling by Filtered Canny Misalignment Analysis[J]. Composite Structures, 2023, 307, 116666.

- Sharma, A.K.; Nandal, A.; Dhaka, A.; et al. HOG transformation based feature extraction framework in modified Resnet50 model for brain tumor detection[J]. Biomedical Signal Processing and Control, 2023, 84, 104737.

- Liu, H.; Jia, X.; Su, C.; et al. Tire appearance defect detection method via combining HOG and LBP features[J]. Frontiers in Physics, 2023, 10, 1099261.

- Ma, B.; Zhu, W.; Wang, Y.; et al. The defect detection of personalized print based on template matching[C]. IEEE International Conference on Unmanned Systems. 2017: 266-271.

- Wang, J.; Sun, K.; Cheng, T.; et al. Deep high-resolution representation learning for visual recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 43, 3349–3364.

- Yu, C.; Gao, C.; Wang, J.; et al. Bisenet v2: Bilateral network with guided aggregation for real-time semantic segmentation[J]. International Journal of Computer Vision, 2021, 129, 3051–3068.

- Xie, E.; Wang, W.; Yu, Z.; et al. SegFormer: Simple and efficient design for semantic segmentation with transformers[J]. Advances in Neural Information Processing Systems, 2021, 34, 12077–12090.

- Cao, H.; Wang, Y.; Chen, J.; et al. Swin-unet: Unet-like pure transformer for medical image segmentation[C]. European Conference on Computer Vision. 2022, 205–218.

- Zhang, H.; Xu, D.; Cheng, D.; et al. An Improved Lightweight Yolo-Fastest V2 for Engineering Vehicle Recognition Fusing Location Enhancement and Adaptive Label Assignment[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2023, 16, 2450–2461.

- Liu, S.; Mei, C.; You, S.; et al. A Lightweight and Efficient Infrared Pedestrian Semantic Segmentation Method[J]. IEICE Transactions on Information and Systems, 2023, 106, 1564–1571.

- Pu, T.; Wang, S.; Peng, Z.; et al. VEDA: Uneven-light image enhancement via a vision-based exploratory data analysis model[J]. arXiv preprint 2023, arXiv:2305.16072.

- Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation[J]. IEEE Transactions on Image Processing, 2016, 26, 982–993.

- Sun, Y.; Zhao, Z.; Jiang, D.; et al. Low-illumination image enhancement algorithm based on improved multi-scale Retinex and ABC algorithm optimization[J]. Frontiers in Bioengineering and Biotechnology, 2022, 10, 865820.

- Xu, J.; Hou, Y.; Ren, D.; et al. Star: A structure and texture aware retinex model[J]. IEEE Transactions on Image Processing, 2020, 29, 5022–5037.

- Hussein, R.R.; Hamodi, Y.I.; Rooa, A.S. Retinex theory for color image enhancement: A systematic review[J]. International Journal of Electrical and Computer Engineering, 2019, 9, 5560.

- Wu, W.; Weng, J.; Zhang, P.; et al. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement[C]. Computer Vision and Pattern Recognition. 2022: 5901-5910.

- Yang, J.; Bhattacharya, K. Augmented Lagrangian digital image correlation[J]. Experimental Mechanics, 2019, 59, 187–205.

- Yahya, A.A.; Tan, J.; Su, B.; et al. BM3D image denoising algorithm based on an adaptive filtering[J]. Multimedia Tools and Applications, 2020, 79, 20391–20427.

- Wen, X.; Pan, Z.; Hu, Y.; et al. Generative adversarial learning in YUV color space for thin cloud removal on satellite imagery[J]. Remote Sensing, 2021, 13, 1079.

- Jha, M.; Bhandari, A.K. Camera response based nighttime image enhancement using concurrent reflectance[J]. IEEE Transactions on Instrumentation and Measurement, 2022, 71, 1–11.

- Liu, J.; Zhou, X.; Wan, Z.; et al. Multi-Scale FPGA-Based Infrared Image Enhancement by Using RGF and CLAHE[J]. Sensors, 2023, 23, 8101.

- Shah, A.; Bangash, J.I.; Khan, A.W.; et al. Comparative analysis of median filter and its variants for removal of impulse noise from gray scale images[J]. Journal of King Saud University-Computer and Information Sciences, 2022, 34, 505–519.

- Maaz, M.; Shaker, A.; Cholakkal, H.; et al. Edgenext: Efficiently amalgamated cnn-transformer architecture for mobile vision applications[C]. European Conference on Computer Vision. 2022: 3-20.

- Jiang, Z.; Dong, Z.; Wang, L.; et al. Method for diagnosis of acute lymphoblastic leukemia based on ViT-CNN ensemble model[J]. Computational Intelligence and Neuroscience, 2021.

- Vaswani, A.; Shazeer, N.; Parmar, N.; et al. Attention is all you need[J]. Advances in Neural Information Processing Systems, 2017, 30.

- Zamora Esquivel, J.; Cruz Vargas, A.; Lopez Meyer, P.; et al. Adaptive convolutional kernels[C]. International Conference on Computer Vision Workshop. 2019: 1998-2005.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection[C]. Computer Vision and Pattern Recognition. 2017: 2117-2125.

- Wang, J.; Chen, Y.; Dong, Z.; et al. Improved YOLOv5 network for real-time multi-scale traffic sign detection[J]. Neural Computing and Applications, 2023, 35, 7853–7865.

- He, K.; Zhang, X.; Ren, S.; et al. Deep residual learning for image recognition[C]. Computer Vision and Pattern Recognition. 2016: 770-778.

- Jadon, S. A survey of loss functions for semantic segmentation[C]. Computational Intelligence in Bioinformatics and Computational Biology. 2020: 1-7.

- Lin, T.Y.; Goyal, P.; Girshick, R.; et al. Focal loss for dense object detection[C]. International Conference on Computer Vision. 2017: 2980-2988.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions[J]. arXiv preprint arXiv:2112.05561, 2021.

- Yang, L.; Zhang, R.Y.; Li, L.; et al. Simam: A simple, parameter-free attention module for convolutional neural networks[C]. International Conference on Machine Learning. 2021: 11863-11874.

- Liu, H.; Liu, F.; Fan, X.; et al. Polarized self-attention: Towards high-quality pixel-wise regression[J]. arXiv preprint arXiv:2107.00782, 2021.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; et al. Grad-cam: Visual explanations from deep networks via gradient-based localization[C]. International Conference on Computer Vision. 2017: 618-626.

- Sun, X.; Shi, H. Towards Better Structured Pruning Saliency by Reorganizing Convolution[C]. Winter Conference on Applications of Computer Vision. 2024: 2204-2214.

- Zhao, H.; Shi, J.; Qi, X.; et al. Pyramid scene parsing network[C]. Computer Vision and Pattern Recognition. 2017: 2881-2890.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; et al. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs[J]. Transactions on Pattern Analysis and Machine Intelligence, 2017, 40, 834–848.

- Basulto-Lantsova, A.; Padilla-Medina, J.A.; Perez-Pinal, F.J.; et al. Performance comparative of OpenCV Template Matching method on Jetson TX2 and Jetson Nano developer kits[C]. Annual Computing and Communication Workshop and Conference. 2020: 0812-0816.

- Ouyang, Z.; Xue, L.; Ding, F.; et al. An algorithm for extracting similar segments of moving target trajectories based on shape matching[J]. Engineering Applications of Artificial Intelligence, 2024, 127, 107243.

- Chen, Y.; Ge, P.; Wang, G.; et al. An overview of intelligent image segmentation using active contour models[J]. Intell. Robot., 2023, 3, 23–55.

- Maturkar, P.A.; Gaikwad, M.A. Comparative analysis of texture segmentation using RP, PCA and Live wire for Arial images[M]. Recent Advances in Material, Manufacturing, and Machine Learning. 2023: 590-598.

- Cheng, Z.; Zou, C.; Dong, J. Outlier detection using isolation forest and local outlier factor[C]. Research in Adaptive and Convergent Systems. 2019: 161-168.

| Methods | ULIE | FPN [35] | AF-FPN [36] | MFFM | mIoU (%) | mPA (%) | F1-score (%) | Params (M) |
|---|---|---|---|---|---|---|---|---|
| Baseline | | | | | 75.6 | 85.6 | 85.2 | 11.333 |
| +ULIE | ✔ | | | | 77.4 | 89.6 | 86.5 | 11.333 |
| +FPN | | ✔ | | | 77.3 | 85.2 | 86.3 | 18.906 |
| +AF-FPN | | | ✔ | | 75.3 | 83.6 | 84.8 | 18.908 |
| +MFFM | | | | ✔ | 78.7 | 87.9 | 87.3 | 11.564 |
| +ULIE +FPN | ✔ | ✔ | | | 78.5 | 86.7 | 87.2 | 11.906 |
| +ULIE +AF-FPN | ✔ | | ✔ | | 75.9 | 84.3 | 85.3 | 11.908 |
| +ULIE +MFFM | ✔ | | | ✔ | 79.2 | 89.1 | 87.7 | 11.564 |

| Methods | GAM [40] | SimAM [41] | SSAM [42] | mIoU (%) | mPA (%) | F1-score (%) | Params (M) |
|---|---|---|---|---|---|---|---|
| BUM | | | | 79.2 | 89.1 | 87.7 | 11.564 |
| +GAM | ✔ | | | 78.4 | 89.2 | 87.7 | 20.273 |
| +SimAM | | ✔ | | 77.1 | 86.6 | 86.2 | 11.564 |
| +SSAM | | | ✔ | 80.8 | 90.1 | 88.8 | 12.266 |

| Methods | mIoU (%) | mPA (%) | F1-score (%) | Params (M) |
|---|---|---|---|---|
| HRNet [13] | 77.7 | 83.0 | 85.5 | 9.637 |
| BiseNetv2 [14] | 75.5 | 79.1 | 85.6 | 5.191 |
| SegFormer [15] | 76.5 | 80.8 | 85.1 | 3.715 |
| PSPNet [45] | 63.4 | 67.6 | 75.4 | 46.707 |
| DeepLabv3 [46] | 78.4 | 83.6 | 86.2 | 54.709 |
| LIEPNet [18] | 79.9 | 89.2 | 87.5 | 3.271 |
| ISLS (Ours) | 80.8 | 90.1 | 88.8 | 12.266 |

| Methods | mIoU (%) | mPA (%) | F1-score (%) |
|---|---|---|---|
| Template Matching [47] | 40.9 | 59.8 | 54.0 |
| Canny Edge Segmentation [48] | 32.5 | 44.5 | 43.6 |
| Contour Segmentation [49] | 32.5 | 44.5 | 43.6 |
| PCA Segmentation [50] | 36.6 | 58.6 | 50.4 |
| iForest Segmentation [51] | 48.0 | 59.1 | 58.8 |
| ISLS (Ours) | 80.8 | 90.1 | 88.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).