1. Introduction

Clouds are a critical element of the Earth's atmospheric system. They affect the energy balance of the Earth's atmosphere and atmospheric circulations at all scales [3,4]. Accurate detection of the three-dimensional (3D) structure of clouds is essential for improving the simulation of cloud precipitation processes, for diabatic data assimilation, and for studying cloud-radiation interaction and its impact on climate [5]. Currently, many observing systems, including active and passive satellite remote sensing, radiosondes, ground-based remote sensing, and aircraft measurements, are used to obtain cloud information. Nevertheless, all existing platforms have significant limitations.

Weather and cloud radars are the most important instruments for detecting the 3D structure of clouds. However, most radars are ground-based and their coverage is limited, especially over remote mountainous areas and the oceans. Geostationary satellites provide continuous observation of large-scale horizontal cloud distributions over the oceans, but because of their high altitude, the observation is typically done with passive remote sensing, which is mostly limited to cloud-top properties. Several polar-orbiting satellites are equipped with active remote sensing instruments (including radar). For example, the W-band (94 GHz) millimeter-wave Cloud Profiling Radar (CPR), carried on the CloudSat satellite, provides global detection of 2D vertical cloud structures [6]. The GPM (Global Precipitation Measurement) mission and the FY-3G satellite are equipped with the Dual-frequency Precipitation Radar (DPR) and the Precipitation Measurement Radar (PMR), respectively, which detect 3D precipitation structures [7]. Nevertheless, these radars usually have very narrow swath widths (100–250 km) and low horizontal resolution, and they cannot provide continuous observations of the same geographical area. Therefore, it is highly desirable to develop new methods, such as those that retrieve clouds from satellite passive remote sensing, to obtain large-scale 3D cloud structures over the oceans.

Several researchers have explored satellite observations to obtain 3D cloud information. Barker et al. (2011) used a radiation-similarity approach based on thermal infrared and visible channel data to estimate cloud ceilings. This approach relates donor pixels (from the active sensor data) to recipient pixels in the surrounding regions [8]. Miller et al. (2014) analyzed the statistical relationships between cloud types and cloud water content profiles and used detailed local cloud-profile information from active sensors to approximate properties of the surrounding regional cloud field. Since satellite passive observing systems provide very limited information about clouds in the vertical dimension, the technique can only be applied to the uppermost cloud layer observed [9]. Noh et al. (2022) developed a statistical Cloud Base Height (CBH) estimation method to support the construction of 3D clouds for aviation applications [10]. These studies offer only limited capabilities for retrieving 3D clouds, especially over the broad ocean areas [11].

Modern deep learning technologies provide new capabilities to retrieve clouds from the satellite passive remote sensing. Several works employed deep learning algorithms to study cloud properties [

12,

13,

14,

15]. With deep learning technologies, it is possible to establish a relationship between the CloudSat CPR 2D cloud vertical profiles and the corresponding MODIS L2 cloud products so that 3D clouds can be retrieved in the full MODIS granules. Generative Adversarial Networks (GANs) and their variant Conditional Generative Adversarial Networks (CGANs) have been proven to be very encouraging tools for establishing such a desired relationship [

16,

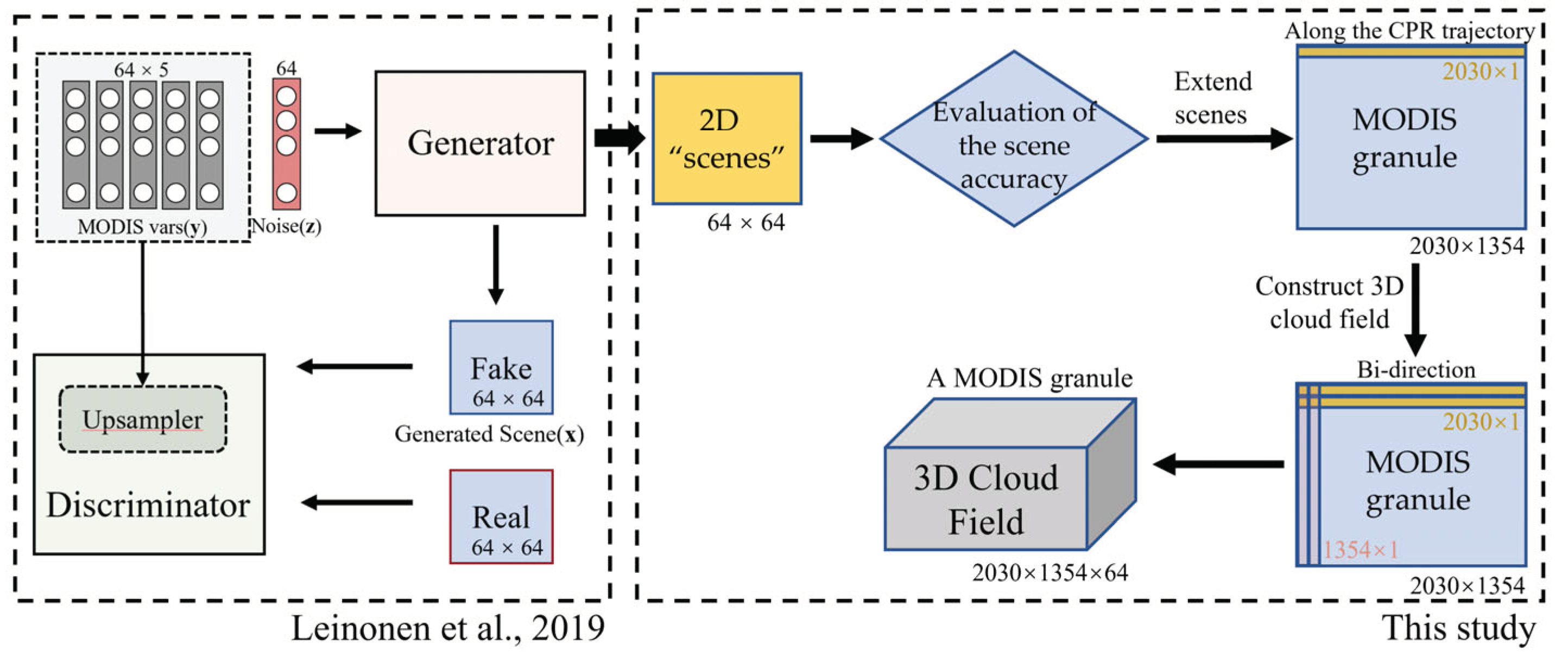

17]. By setting up a CGAN model, Leinonen et al. (2019) [

1] retrieved CloudSat CPR equivalent 2D cloud profiles (64×64 scenes, with a horizontal length of 70 km and a vertical height of 15 km) [

1]. Wang et al. (2023) expanded the dataset in Leinonen et al.’s study [

1] and conducted a systematic assessment of Leinonen's CGAN model for different cloud types and geographical regions. Their study showed that the CGAN model presents great capabilities for retrieving clouds with structured patterns, significant thickness, and high reflectivity, such as deep convective clouds and nimbostratus [

18].

This study aims to extend the work of Leinonen et al. (2019) [1] and Wang et al. (2023) [18] by post-processing their 64 × 64-pixel cloud scenes to produce seamless 3D clouds of 2030 × 1354 × 64 pixels for the MODIS granules. Firstly, we analyze the error characteristics of the cloud scenes retrieved by Leinonen et al.'s CGAN. Secondly, a bi-directional Ensemble Bin Probabilistic Fusion technique based on CGAN (CGAN-BEBPF) is developed to improve the accuracy of the CGAN model generation and to achieve a probabilistic fusion of the CGAN-retrieved 2D cloud radar reflectivity factors, producing seamless 3D cloud radar reflectivity fields for full MODIS granules with a horizontal resolution of 1 km and a vertical resolution of 240 m. The capability of CGAN-BEBPF is demonstrated by reconstructing the 3D cloud fields of a near-shore convective system and a typhoon, and comparing them with ground-based weather radar observations.

The structure of this paper is as follows. The next section introduces the data sources, Leinonen et al.'s CGAN-based MODIS 2D vertical scene retrieval technique, the weather case selection for this study, and the evaluation criteria for the 3D cloud retrievals. The third section describes the bi-directional Ensemble Bin Probabilistic Fusion (BEBPF) technique and an evaluation using CloudSat CPR data. In the fourth section, the 3D cloud structures retrieved for typhoon Chaba-2022 and a multi-cell convective system are compared with ground-based radar observations. Finally, Section 5 summarizes the research results and provides future perspectives.

2. Data and Methodology

2.1. CloudSat and MODIS Datasets

The data used in this study are from the CloudSat (CPR) and Aqua (MODIS) satellites, similar to those used for training the CGAN cloud scene retrieval model by Leinonen et al. (2019) [1] and Wang et al. (2023) [18]. CloudSat is in a sun-synchronous orbit at an altitude of 705 km. It is equipped with the Cloud Profiling Radar (CPR), a 94 GHz millimeter-wave (W-band) radar that has significantly higher sensitivity to clouds than standard ground-based weather radars [6]. The 2B-GEOPROF product from CloudSat [19,20] provides the radar reflectivity observed by the CPR. The Aqua satellite carries the Moderate Resolution Imaging Spectroradiometer (MODIS) [21,22], which has 36 spectral bands covering the visible to the thermal infrared regions and achieves full global coverage within a 2-day period [23]. MODIS enables the detection and characterization of cloud boundaries and cloud radiative properties on a global scale [24,25]. Since both Aqua and CloudSat are part of the A-Train constellation, the two satellites fly in close proximity, with a time separation of less than one minute. Therefore, the cloud observations from the MODIS instrument on Aqua and the CPR instrument on CloudSat are considered spatiotemporally consistent. Note that MODIS provides updated data twice a day, from daytime and nighttime observations.

The CGAN model developed by Leinonen et al. (2019) [1] retrieves the CloudSat CPR reflectivity from the cloud top pressure, cloud water path, cloud optical thickness, effective particle radius, and cloud mask provided by the MODIS MOD06-AUX cloud product [26]. The dataset comprises global oceanic data from 2010 to 2017 and is used to train, validate, and test the CGAN model. A vertical cross-section of 64 × 64 pixels, defined as a “scene” by Leinonen et al. (2019) [1], is generated individually along the CloudSat CPR track. A scene therefore covers approximately 70 km horizontally and 15 km in height. The MODIS cloud products are interpolated to match the CloudSat CPR reflectivity, constituting the training samples for the CGAN model.

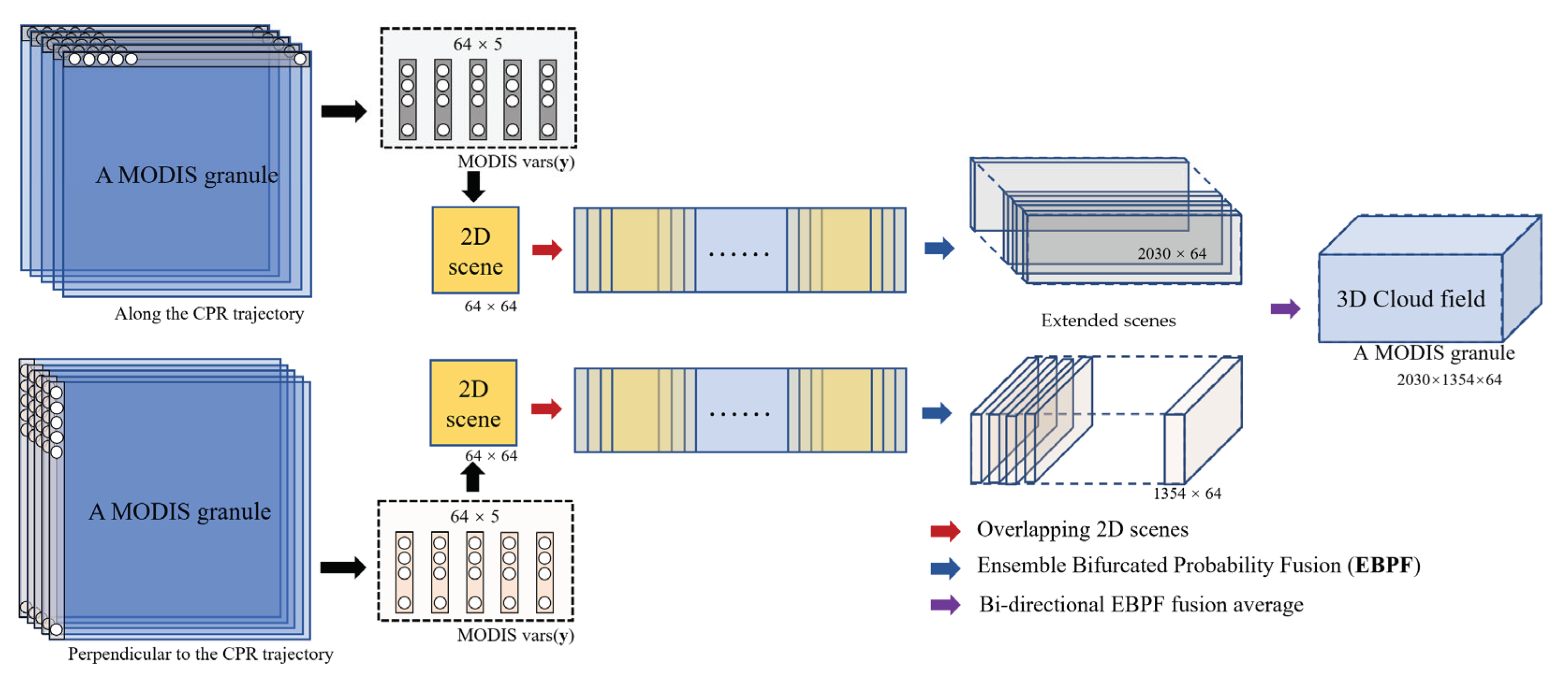

2.2. Requirements for Retrieving 3D Cloud Fields for the MODIS Granules

The CGAN-based MODIS cloud “scene” retrieval model developed by Leinonen et al. (2019) [1] only retrieves cloud radar reflectivity scenes of 64 × 64 pixels from one-dimensional (64-pixel) MODIS cloud observations along the CPR track. A MODIS granule is a segment of a MODIS swath containing 2030 × 1354 pixels (Figure 1) and covering about 2030 km along the orbit and 2330 km across it [2,27]. To construct 3D cloud fields for MODIS granules, we need to consider the retrieval accuracy of the scenes and the discontinuity between neighboring scenes. We also need to deal with the discontinuity in the direction normal to the CPR track. In this study, we developed a series of algorithms to construct seamless 3D cloud fields. Firstly, we evaluate the characteristics of the CGAN-generated scenes. Then, we design an ensemble and blending method to extend the CGAN scenes to the full MODIS granule width. Finally, we deal with the other direction (normal to the CPR track) and obtain the final 3D cloud output. The whole process is referred to as Bi-directional Ensemble Binning Probability Fusion based on CGAN (CGAN-BEBPF). The pathway of CGAN-BEBPF is described in Figure 1. The algorithms in each step are discussed in detail in Section 3.

2.3. Case Selection

In February 2018, the CloudSat satellite performed an orbit-lowering maneuver and left the A-Train constellation. Therefore, since 2018, the CGAN-retrieved MODIS cloud scenes no longer have matched CPR observations. To validate the 3D cloud fields retrieved with CGAN-BEBPF, two groups of weather cases were selected. The first group includes six cases from 2014 to 2017, which are used to evaluate the results of CGAN-BEBPF by comparing them with the CPR observations. The second group includes typhoon Chaba (South China, 2 July 2022) and a multi-cell convective system (South China, 24 August 2022), both occurring in the near-shore seas along the south coast of China. The coastal ground-based S-band weather radars observe out to about 200 km over the sea and thus provide reflectivity data for verifying the 3D cloud fields retrieved for these two cases. The ground-based radar data used for verification are from the SWAN radar mosaic provided by the Meteorological Centre of the China Meteorological Administration.

Table 1 describes the cases in the two groups. Note that the Group 1 cases are also used as a reference in designing EBPF value-selection strategies (to be detailed later).

2.4. Verification Metrics

To evaluate the 3D cloud field retrievals, the Threat Score (TS) [28,29] is calculated using the binary confusion matrix proposed by Finley et al. (1884) [30] (Table 2), as verified against the CPR observations. The evaluation is done pixel by pixel and case by case.

TS is computed as

$$\mathrm{TS} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN} + \mathrm{FP}} \qquad (1)$$

where, for a given radar reflectivity factor threshold K, TP is the number of occurrences where both the observation and the retrieval are greater than or equal to K; FN is the number of occurrences where the observation is greater than or equal to K while the retrieval is less than K; FP is the number of occurrences where the observation is less than K while the retrieval is greater than or equal to K; and TN is the number of occurrences where both the observation and the retrieval are less than K. The test thresholds selected in this study are -22 dBZ, -15 dBZ, -10 dBZ, -5 dBZ, 0 dBZ, 5 dBZ, and 10 dBZ.

To calculate the accuracy of the cloud mask determined by the ensemble members in Section 3.3, the Accuracy score against the CPR observations is calculated as follows:

$$\mathrm{Accuracy} = \frac{\mathrm{TP} + \mathrm{TN}}{\mathrm{TP} + \mathrm{FN} + \mathrm{FP} + \mathrm{TN}} \qquad (2)$$

This metric reflects the accuracy of the ensemble cloud mask in distinguishing cloudy and clear pixels.
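As an illustration of the two verification metrics, the following minimal sketch counts the four confusion-matrix categories for a threshold K and evaluates the standard definitions TS = TP / (TP + FN + FP) and Accuracy = (TP + TN) / (TP + FN + FP + TN). The function names and the six sample reflectivity values are hypothetical and are not taken from the study's code or data.

```python
from typing import Sequence, Tuple


def confusion_counts(obs: Sequence[float], ret: Sequence[float], k: float) -> Tuple[int, int, int, int]:
    """Count (TP, FN, FP, TN) for a reflectivity threshold k in dBZ."""
    tp = fn = fp = tn = 0
    for o, r in zip(obs, ret):
        if o >= k and r >= k:
            tp += 1          # observed and retrieved both reach the threshold
        elif o >= k:
            fn += 1          # observed but not retrieved
        elif r >= k:
            fp += 1          # retrieved but not observed
        else:
            tn += 1          # neither reaches the threshold
    return tp, fn, fp, tn


def threat_score(obs: Sequence[float], ret: Sequence[float], k: float) -> float:
    tp, fn, fp, _ = confusion_counts(obs, ret, k)
    denom = tp + fn + fp
    return tp / denom if denom else float("nan")


def accuracy(obs: Sequence[float], ret: Sequence[float], k: float) -> float:
    tp, fn, fp, tn = confusion_counts(obs, ret, k)
    total = tp + fn + fp + tn
    return (tp + tn) / total if total else float("nan")


# Thresholds listed in the text; the pixel values below are made up.
THRESHOLDS_DBZ = (-22, -15, -10, -5, 0, 5, 10)
obs = [-30.0, -20.0, -8.0, 3.0, 12.0, 15.0]
ret = [-25.0, -12.0, -18.0, 6.0, 4.0, 11.0]
for k in THRESHOLDS_DBZ:
    print(k, threat_score(obs, ret, k), accuracy(obs, ret, k))
```

TS ignores TN, so it is not inflated by the large number of clear pixels, whereas Accuracy counts correct clear pixels as well, which suits the cloudy/clear mask evaluation.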

3. Bi-Directional Ensemble Binning Probability Fusion (BEBPF)

3.1. Errors Distribution of the CGAN Scene Retrievals

Leinonen et al.'s CGAN model retrieves 2D vertical cross-sections of cloud radar reflectivity (64 × 64 pixels), i.e., scenes. The simplest way to reconstruct 3D cloud fields over MODIS granules is therefore to run the CGAN model by sliding the scenes throughout the MODIS granule and then combine the individual output scenes. However, such a method leads to significant discontinuities at the junctions of the scenes, which can be caused by the uncertainties of the CGAN model. Thus, to fuse the scenes seamlessly, one must consider the accuracy of the CGAN-retrieved scenes and the consistency between neighboring scenes, especially in their lateral boundary zones. One way to cope with the problem is to use sliding windows to generate overlapping scenes and then take an average. However, this causes an artificial smoothing (weakening) of cloud core intensities.

We overcome the problem in three steps. Firstly, we evaluate the error properties of the scenes; secondly, based on the error properties of the scenes, we develop an ensemble-based probabilistic blending scheme to fuse the scenes along the CloudSat track, seamlessly; and lastly, we work out a similar method to process the scenes in the direction normal to the CloudSat track.

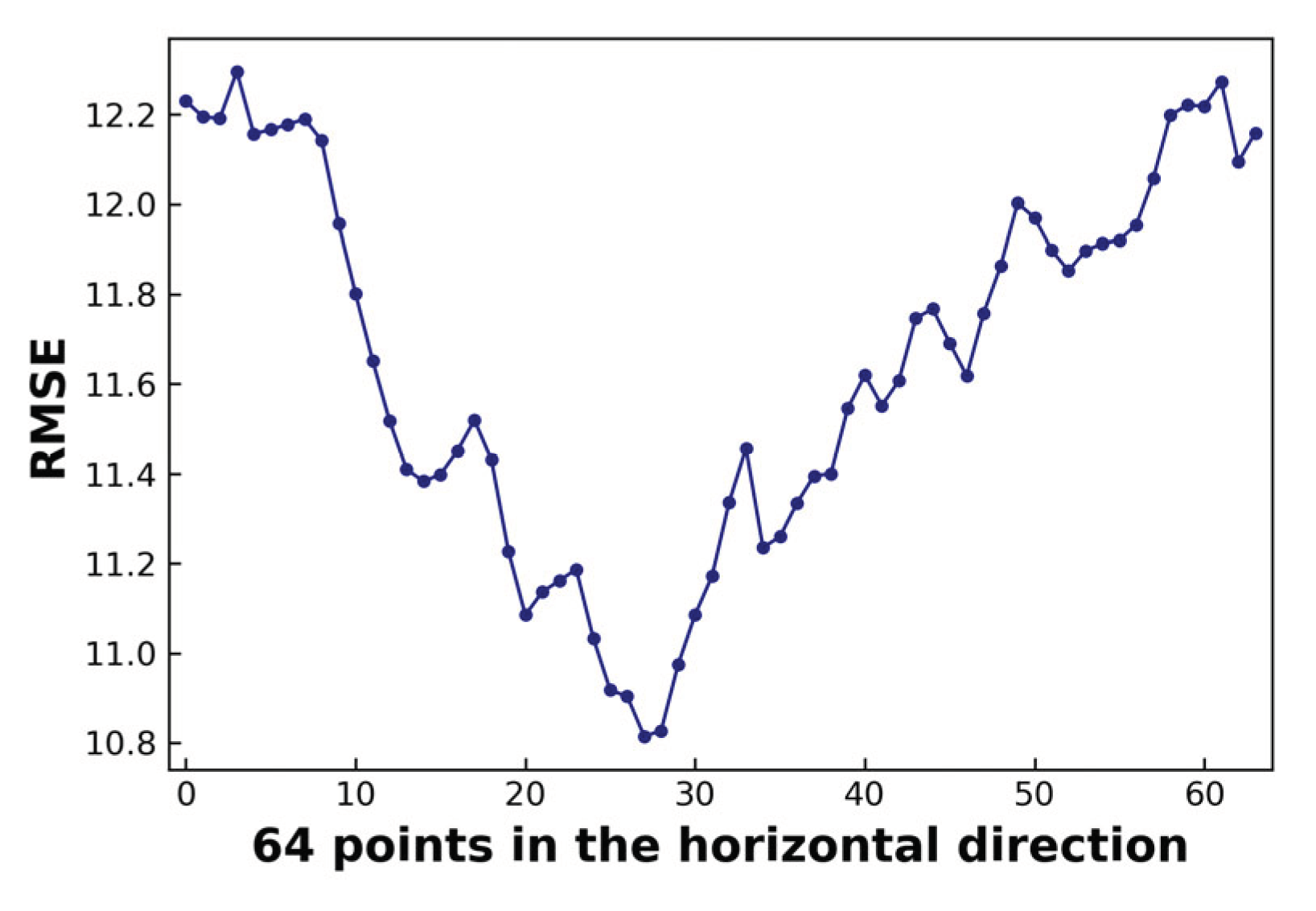

The root mean squared error (RMSE) of the CGAN-retrieved scenes is calculated for a total of 7180 samples in the test dataset. The error is averaged over the 64 vertical layers and shown in Figure 2. There are significant horizontal variations in the RMSE of the CGAN-generated scenes. Larger errors exist in the lateral boundary zones, and the interior errors also appear to oscillate. This suggests that the CGAN generation is influenced by the boundaries: pixels near the boundary have less information available to infer the labels, and thus the retrieval error increases toward the boundary of the scenes.
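The horizontal error profile described here can be sketched as follows. This is a minimal illustration, assuming that squared errors are pooled over all scenes and all vertical levels before taking the square root; the exact averaging order used in the study is not stated. The function name and the toy 2-level, 3-column scenes are hypothetical.

```python
import math


def column_rmse(retrieved, observed):
    """RMSE for each horizontal column, pooled over scenes and vertical levels.

    Both inputs are nested lists indexed as [scene][level][column] (dBZ).
    """
    n_cols = len(retrieved[0][0])
    sq_sum = [0.0] * n_cols
    n_rows = 0  # number of (scene, level) rows contributing to each column
    for ret_scene, obs_scene in zip(retrieved, observed):
        for ret_row, obs_row in zip(ret_scene, obs_scene):
            n_rows += 1
            for j in range(n_cols):
                sq_sum[j] += (ret_row[j] - obs_row[j]) ** 2
    return [math.sqrt(s / n_rows) for s in sq_sum]


# Toy data: two scenes, two levels, three columns; errors are largest in column 0.
observed = [[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
            [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
retrieved = [[[2.0, 0.0, 1.0], [-2.0, 0.0, -1.0]],
             [[2.0, 0.0, 1.0], [2.0, 0.0, -1.0]]]
print(column_rmse(retrieved, observed))
```

For real 64 × 64 scenes the returned list would have 64 entries, one per horizontal position, which is the quantity plotted against scene column.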

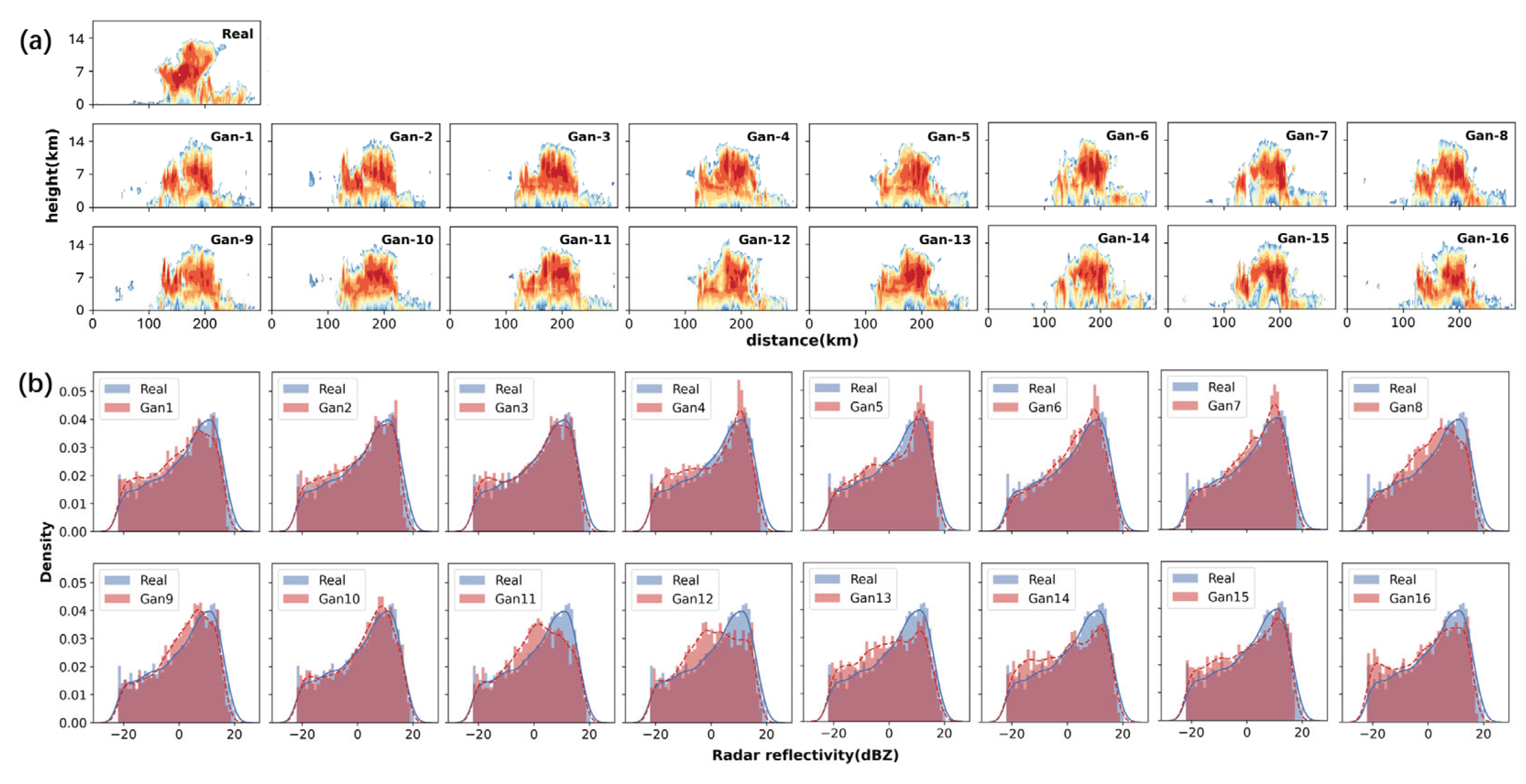

3.2. Ensembles of the CGAN-Retrieved Scenes

Based on the characteristics of the CGAN-retrieved scene errors, an overlapping scene generation method (i.e., scenes with small spatial shifts) is designed to provide uncertainty information for the CGAN retrievals. After several tests, we chose to retrieve CGAN scenes by sliding the scene window at four-pixel intervals. This results in 16 retrievals for each pixel, which form a 16-member ensemble. To check how well the ensemble represents the CGAN retrieval uncertainties, Figure 3a displays the 16 ensemble members for a mesoscale convective system together with the CPR observations. Although all retrievals appear similar to the CPR observation overall, the locations and intensities of their convective cores differ significantly. This is more obvious when comparing the radar reflectivity distributions of the retrievals and the CPR observations (Figure 3b). There are significant differences between the individual shifted scenes at all radar reflectivity intensities.
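The ensemble size follows from the window geometry: a 64-pixel-wide scene shifted in 4-pixel steps covers each interior pixel 64 / 4 = 16 times. The sketch below checks this by counting window coverage along a track. The function names and the 256-pixel track length are illustrative assumptions, and the treatment of pixels near the ends of the track, which receive fewer members in this simple scheme, is not specified in the text.

```python
SCENE_WIDTH = 64  # scene width in pixels
STRIDE = 4        # shift between consecutive scenes in pixels


def window_starts(track_length, width=SCENE_WIDTH, stride=STRIDE):
    """Start indices of all full-width scenes along a track."""
    return list(range(0, track_length - width + 1, stride))


def members_per_pixel(track_length, width=SCENE_WIDTH, stride=STRIDE):
    """Number of overlapping scenes (ensemble members) covering each pixel."""
    counts = [0] * track_length
    for start in window_starts(track_length, width, stride):
        for i in range(start, start + width):
            counts[i] += 1
    return counts


counts = members_per_pixel(256)
print(len(window_starts(256)))  # number of scenes generated
print(max(counts), counts[128])  # interior pixels are covered 16 times
print(counts[:8])                # coverage ramps up from the track edge
```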

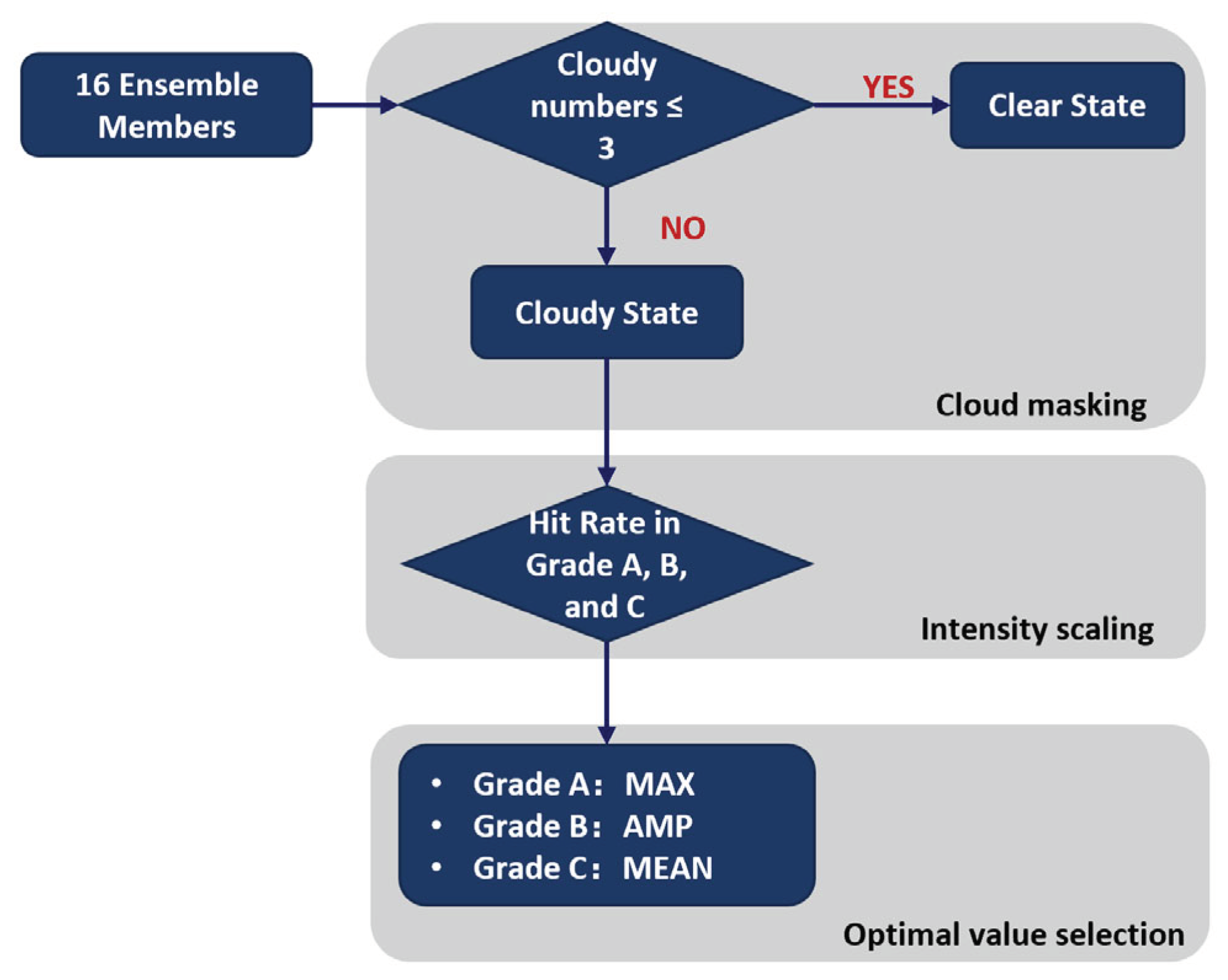

3.3. Ensemble Binning Probability Fusion (EBPF)

Based on the CGAN-retrieved ensemble, an Ensemble Binning Probability Fusion (EBPF) technique is designed to determine the blending weights of the CGAN retrievals (ensemble members) at each pixel according to the ensemble probability distributions. The EBPF technique comprises three algorithms (Figure 4): cloud masking, intensity scaling, and optimal value selection. The Group 1 weather cases in Table 1 are used to determine the weight parameters in these three algorithms. Note that the EBPF technique only blends the CGAN-retrieved 2D scenes along the horizontal direction of the scene ensemble; it does not by itself retrieve 3D cloud fields.

-

(1)

Cloud masking

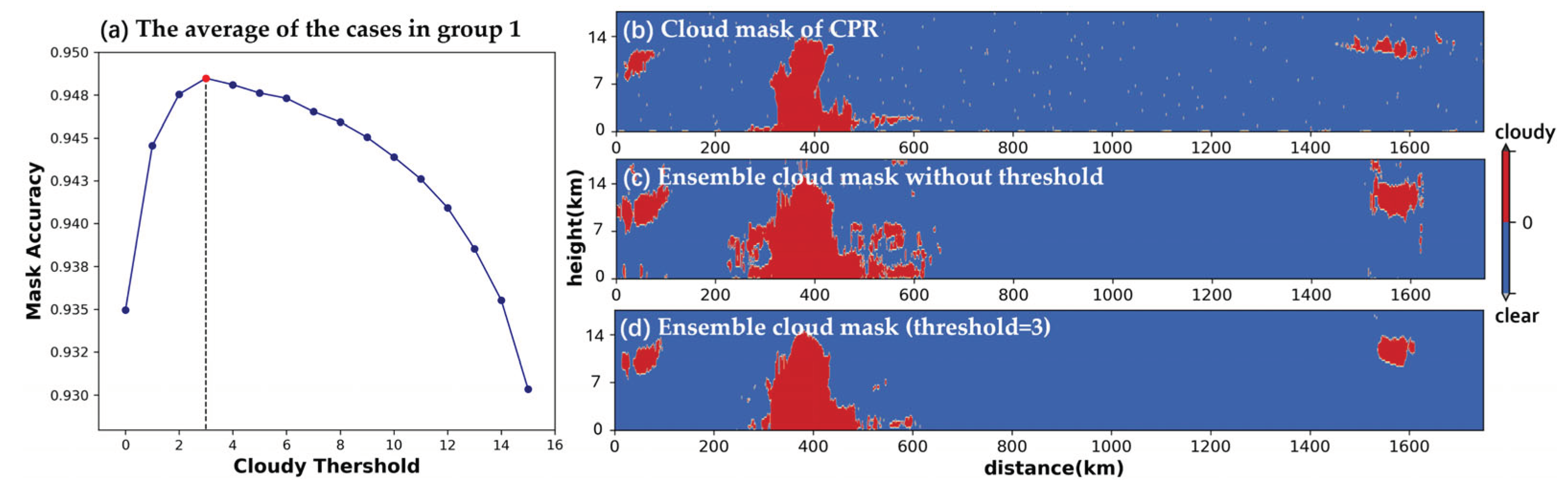

As a first step, we classify if the target pixel is cloudy or clear based on the ensemble members. Since the ensemble contains probabilistic information for cloudy or clear for each pixel, for a given pixel, the classification for cloudy or clear can be determined by the probability distribution of the ensemble members. To do so, we defined the cloudy probability threshold in terms of the number of ensemble members to establish the cloud mask. To determine an optimal threshold, we run the cloud masking with thresholds of 0 – 15 members for all six Group 1 cases in

Table 1, respectively. Specifically, for example, given a pixel, a threshold of 2 means that if over 2 members of the ensemble say “cloudy” we define the pixel as cloudy. Then, the computed result based on each threshold is verified against the CPR observations. The Accuracy scores (Eq. 2) are computed for different thresholds over all six cases are shown in

Figure 5a. Apparently, a threshold of 3 is optimal, yielding the highest accuracy of 94.85%.

Figures 5b, c, and d show the result for the case on 4 December 2017 (

Table 1). Apparently, the ensemble cloud masks determined with threshold 3 are quite consistent with the CPR observations. Therefore, threshold 3 is selected.

-

(2)

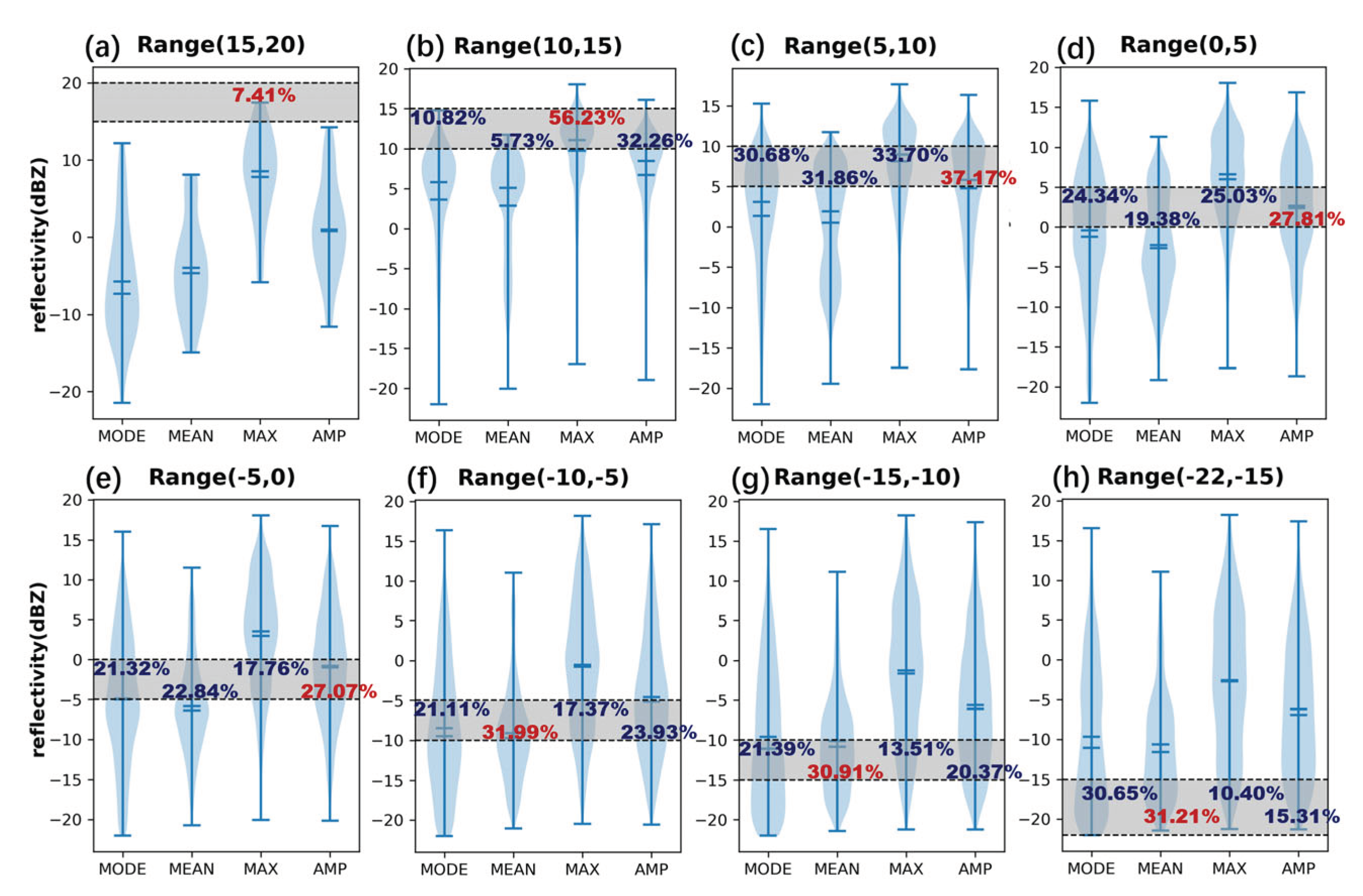

Intensity Scaling and Processing

In considering that the accuracy of the CGAN-retrieved clouds changes with the radar reflectivity intensity, we scale the retrieved radar reflectivity to different grades and optimize the value for each grade. The CPR data and ensemble retrievals of six cases listed in

Table 1 are used as a basis to determine the scaling and value optimization. Since the main research object of this section is the cloud clusters with severe convective systems, and the CPR radar reflectivity below -22 dBZ is predominantly clutter (often observed in clear areas) that does not affect the cloud cores, we set the reflectivity below -22 dBZ to no-cloud. For the CPR radar reflectivity factors ranging from -22 dBZ to 20 dBZ, we divide them into 8 bins, at intervals of 5 dBZ (referred to as bins 1 to 8). The ensemble mode (MODE), which indicates the most values that appear in the ensemble members, the mean (MEAN), and the maximum reflectivity factor (MAX) for each of the 8 CPR radar reflectivity bins are calculated. In addition, we also calculated the mean of MODE and MEAN, abbreviated as “avg_max_prob (AMP)”. The verification results against the CPR observations are shown in

Figure 6.

Figure 6 shows that only a small subset of ensemble members has high radar reflectivity at the rain cores due to the uncertainty in the CGAN retrievals. Apparently, the ensemble mean tends to dilute the intensity of the rain cores. Thus, for the high radar reflectivity range [10 to 20 dBZ], named as intensity Grade A, the maximum value among the 16 ensemble members is retained. For the median radar reflectivity range [-5 to 10 dBZ], named Grade B, since the Mode value often leads to an underestimation for cloud clusters with severe convective systems, and the AMP result performs better, we select the AMP result. Finally, for the low radar reflectivity range [-22 to -5 dBZ], named Grade C, the mean value of the ensemble members is chosen.
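The cloud masking and grade-dependent value selection can be sketched for a single pixel as follows. This is only an illustration under several assumptions that the text does not specify: a member counts as “cloudy” when its reflectivity is at least -22 dBZ; statistics are computed over the cloudy members only; MODE is taken on values rounded to 1 dBZ, with ties averaged; and the grade of a pixel is decided from its AMP value. All function and constant names are hypothetical.

```python
from statistics import mean, multimode

CLOUD_FLOOR_DBZ = -22.0  # assumed per-member "cloudy" criterion
VOTE_THRESHOLD = 3       # cloudy if more than 3 members say "cloudy"


def is_cloudy(members, vote_threshold=VOTE_THRESHOLD):
    """Ensemble vote: True if more than vote_threshold members are cloudy."""
    votes = sum(1 for v in members if v >= CLOUD_FLOOR_DBZ)
    return votes > vote_threshold


def ensemble_stats(values):
    """MODE, MEAN, MAX and AMP (mean of MODE and MEAN) of member values in dBZ."""
    rounded = [round(v) for v in values]  # assumed 1-dBZ binning for the mode
    mode = mean(multimode(rounded))       # ties are averaged (assumption)
    avg = mean(values)
    return {"MODE": mode, "MEAN": avg, "MAX": max(values), "AMP": 0.5 * (mode + avg)}


def grade_of(dbz):
    """Grade A: >= 10 dBZ, Grade B: -5 to 10 dBZ, Grade C: below -5 dBZ."""
    if dbz >= 10.0:
        return "A"
    if dbz >= -5.0:
        return "B"
    return "C"


def fuse_pixel(members, reference="AMP"):
    """Return (grade, fused dBZ) for one pixel, or (None, None) if clear."""
    if not is_cloudy(members):
        return None, None
    cloudy_values = [v for v in members if v >= CLOUD_FLOOR_DBZ]
    stats = ensemble_stats(cloudy_values)
    grade = grade_of(stats[reference])  # assumed: grade decided from AMP
    statistic = {"A": "MAX", "B": "AMP", "C": "MEAN"}[grade]
    return grade, stats[statistic]


# Three made-up 16-member pixels: a strong core, a medium echo, and a clear pixel.
core = [12.0, 12.2, 11.8, 14.0, 16.0, 9.0] + [-40.0] * 10
medium = [-3.0, -3.0, 1.0, 5.0] + [-40.0] * 12
clear = [-10.0, -12.0, -15.0] + [-40.0] * 13
for pixel in (core, medium, clear):
    print(fuse_pixel(pixel))
```

Changing `reference` to another statistic (for example `"MAX"`) changes which pixels fall into each grade, so this choice would need to be matched to the authors' actual rule before any quantitative use.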

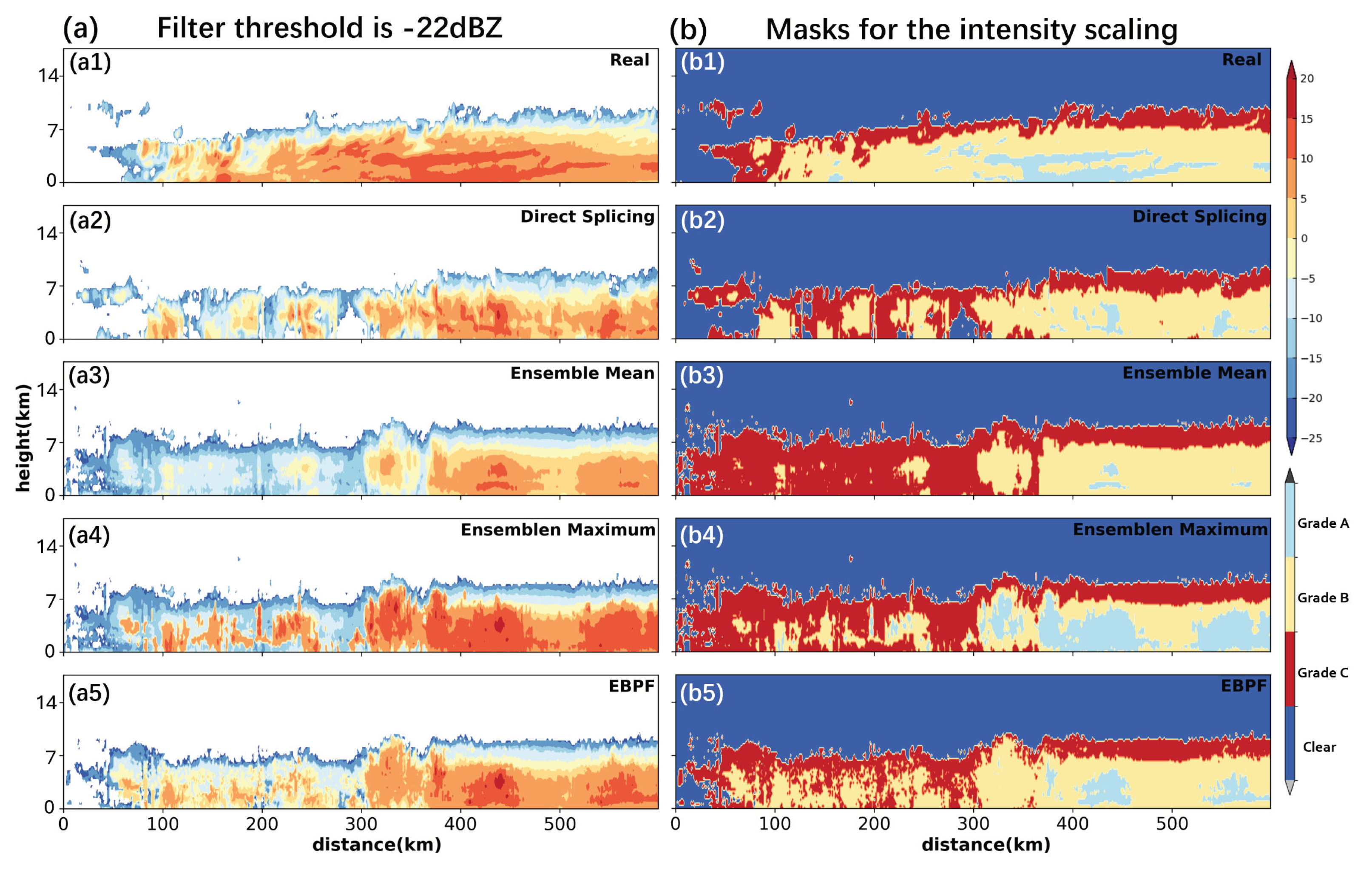

3.4. Evaluation of EBPF

To demonstrate the advantages of EBPF, the reconstruction by EBPF is compared with Direct Splicing of the CGAN-retrieved scenes, Ensemble Mean, and Ensemble Maximum for a nimbostratus case. Ensemble Maximum takes the highest value among the ensemble members if any member exceeds 5 dBZ; otherwise, the ensemble mean is taken.
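For reference, the two ensemble baselines can be written per pixel as in the short sketch below. The function names and sample values are hypothetical, and the mean is taken arithmetically in dBZ, which is an assumption.

```python
from statistics import mean

MAX_TRIGGER_DBZ = 5.0  # use the maximum if any member exceeds this value


def ensemble_mean(values):
    """Baseline 1: arithmetic mean of the member reflectivities (dBZ)."""
    return mean(values)


def ensemble_maximum(values, trigger=MAX_TRIGGER_DBZ):
    """Baseline 2: highest member if any member exceeds the trigger, else the mean."""
    peak = max(values)
    return peak if peak > trigger else mean(values)


core = [-4.0, 0.0, 2.0, 14.0]  # one member sees a strong core
weak = [-4.0, 0.0, 4.0]        # no member exceeds 5 dBZ
print(ensemble_mean(core), ensemble_maximum(core))
print(ensemble_mean(weak), ensemble_maximum(weak))
```

The first sample shows the contrast between the two baselines: the mean pulls the 14 dBZ core down to 3 dBZ, while the maximum rule lets a single member set the pixel value.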

The results are presented in Figure 7. Compared with the CPR observations (Figure 7a1,b1), Direct Splicing results in serious discontinuity (Figure 7a2,b2); Ensemble Mean smooths out the intense core and leads to an underestimation (Figure 7a3,b3), while Ensemble Maximum causes an overestimation of the cloud intensity (Figure 7a4,b4). EBPF (Figure 7a5,b5) produces the best result. It preserves the mid to high reflectivity (Grades A and B), which is more consistent with the CPR observations.

The TS scores of the EBPF retrievals over all six cases in Group 1 (Table 1) are given in Table 3. For comparison, the TS scores of the four experiments are computed against the CPR observations for different thresholds. In general, EBPF obtains the highest TS scores across the thresholds, especially for the intense rain cores (a threshold of 10 dBZ). This suggests that EBPF not only ensures good coverage in the low reflectivity region but also retains the high reflectivity cores.

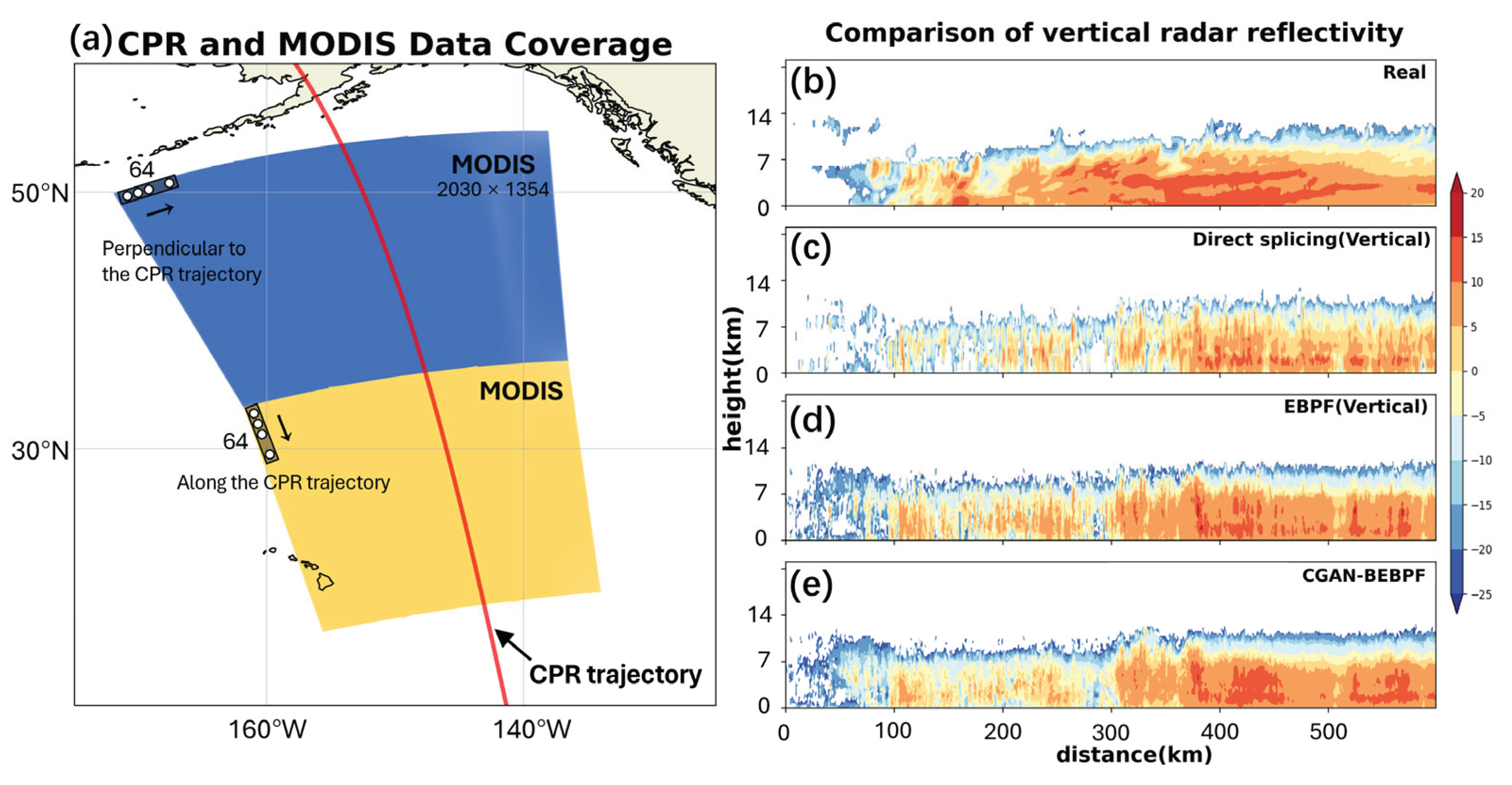

3.5. Bi-Directional EBPF 3D Cloud Retrieving (BEBPF)

Although EBPF extends the CGAN cloud scene retrievals of Leinonen et al. (2019) [1] along the CPR track direction with continuous and improved cloud vertical profiles (cross-sections), to obtain 3D cloud fields we must consider whether these vertical cross-sections can be directly spliced in the direction normal to the CPR trajectory. Three algorithms are examined: (a) simply splicing the Leinonen et al. [1] CGAN scenes retrieved in the direction normal to the CPR track (Direct Splicing, Figure 8a); (b) performing EBPF in that direction; and (c) combining the EBPF results in both directions, i.e., bi-directional EBPF (CGAN-BEBPF).

The results of a case study using the above three methods are shown in Figure 8c, d, and e, and compared with the CPR observation (Real, Figure 8b). Direct Splicing produces evident discontinuity (Figure 8c), EBPF generates better results (Figure 8d), and CGAN-BEBPF brings about further improvements (Figure 8e). Therefore, CGAN-BEBPF is selected for generating MODIS 3D cloud fields.

Figure 9 presents the flowchart for constructing a 3D cloud field with CGAN-BEBPF for a MODIS granule. EBPF is first applied along the CPR trajectory. Next, EBPF is applied along the direction normal to the CPR trajectory. Finally, the results of these two steps are averaged to generate the final 3D cloud field.

4. Case Studies

To demonstrate the capability of CGAN-BEBPF, the 3D cloud fields for Typhoon Chaba and a complex convective system, both of which occurred in the coastal regions of the South China Sea, are retrieved and compared with the ground-based radar observations. The descriptions of these two cases are given in Table 1. Ground-based radar data were obtained from the SWAN radar mosaic of the Meteorological Centre of the China Meteorological Administration, and the effective particle radius data from MODIS were plotted for comparison.

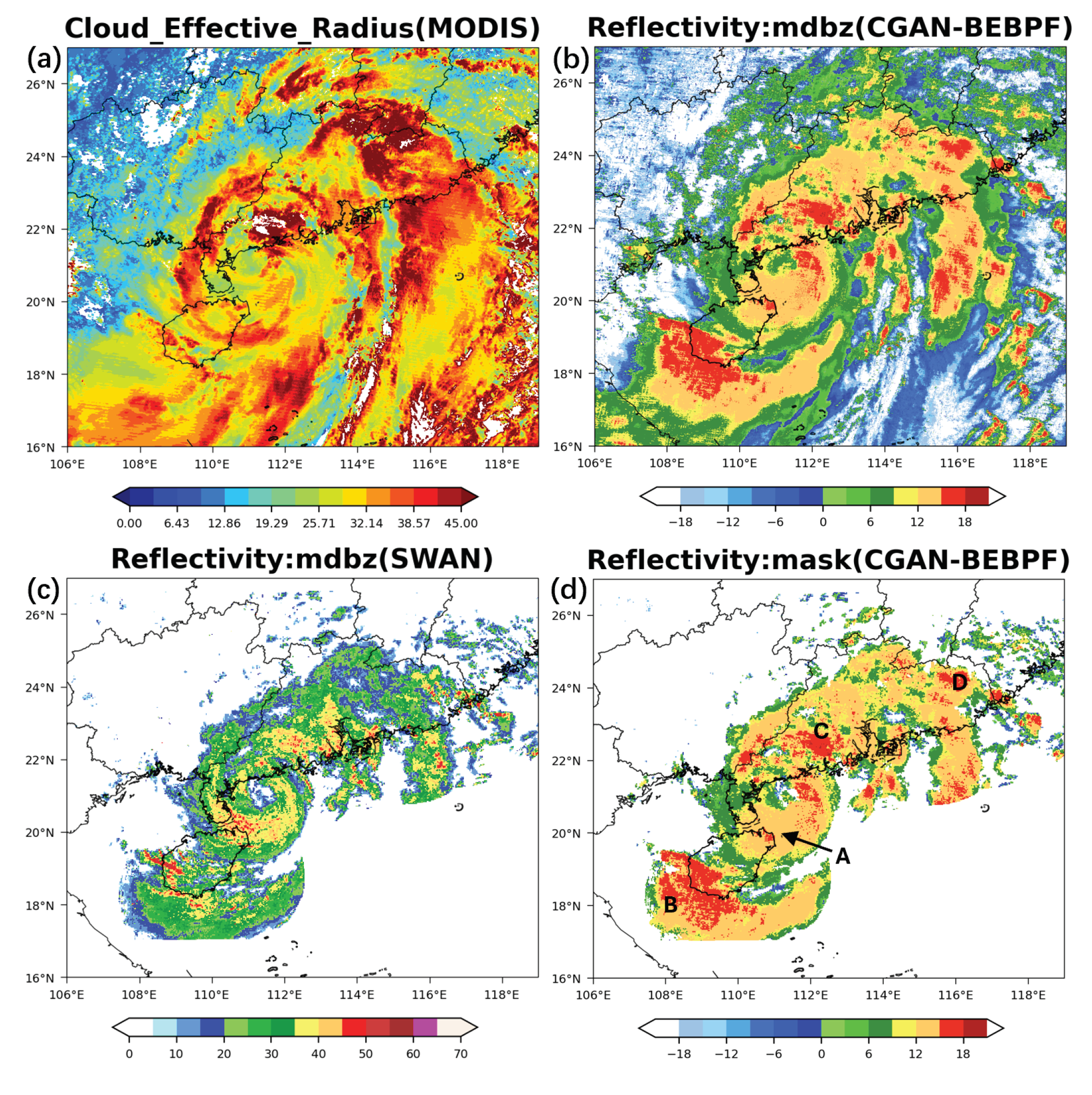

4.1. Typhoon Chaba

Typhoon Chaba was observed by MODIS at 02:50 UTC on 2 July 2022. Figure 10 presents the 3D cloud radar reflectivity retrieved with CGAN-BEBPF and a comparison with the MODIS effective particle radius and the SWAN reflectivity mosaic. The CGAN-BEBPF-retrieved 3D reflectivity (Figure 10b) is highly consistent with the MODIS effective particle radius observations (Figure 10a) in both cloud distribution and rainband structure. Figure 10b and 11c show that CGAN-BEBPF retrieves the weak-intensity cloud areas that were missed by SWAN, because the CPR is more sensitive to small cloud droplets than ground-based radar. To verify the detailed structures of the typhoon rainbands, we mask the 3D cloud fields with the ground-based radar reflectivity measurements, and the result is shown in Figure 10d. In general, the rainband structure of the 3D retrieval agrees well with the ground-based radar observation. The 3D cloud retrievals accurately capture the typhoon eyewall (labeled “A” in Figure 10d) and the spiral rainbands (“B”, “C”, and “D”).

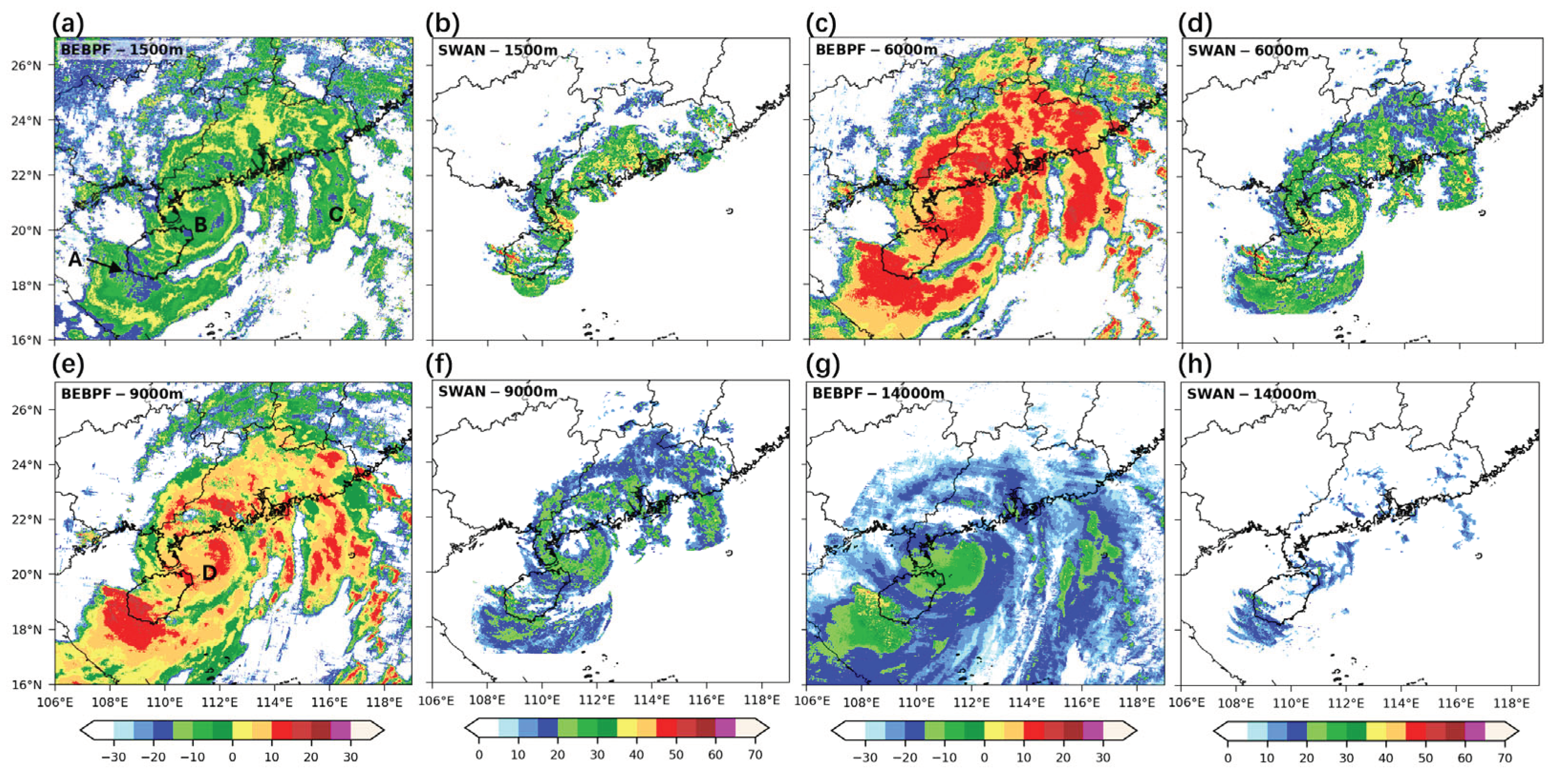

The cross-sections of the 3D cloud fields at heights of 1500 m, 6000 m, 9000 m, and 14000 m are compared with ground-based radar observations at the same heights (Figure 11). Several important points can be drawn. (a) In a deep middle layer (Figure 11c, d and Figure 11e, f), the CGAN-BEBPF-retrieved cloud fields are largely consistent with the ground-based radar measurements. The retrieval evidently broadens the rainbands observed by the ground-based radars because of its ability to retrieve the weak cloud regions. (b) In the upper layer (Figure 11g, h), CGAN-BEBPF recovers the important cloud structures of ice crystals and snow particles that are partly or completely missed by the ground-based radars. (c) CGAN-BEBPF retrieves the eyewall and rainbands observed by the ground-based radars, as well as the weak-intensity cloud boundaries around these rainbands (Figure 11a), but it significantly underestimates the intensity of the rainband cores. This is related to an intrinsic shortcoming of the CloudSat CPR (W-band), which cannot properly detect lower-level strong precipitation cores because of severe signal attenuation by a deep layer of intense cloud particles. As a result, the rainbands retrieved by CGAN-BEBPF feature hollow weak-echo zones in the rain cores. Three of them are labeled in Figure 11a as “A”, “B”, and “C”.

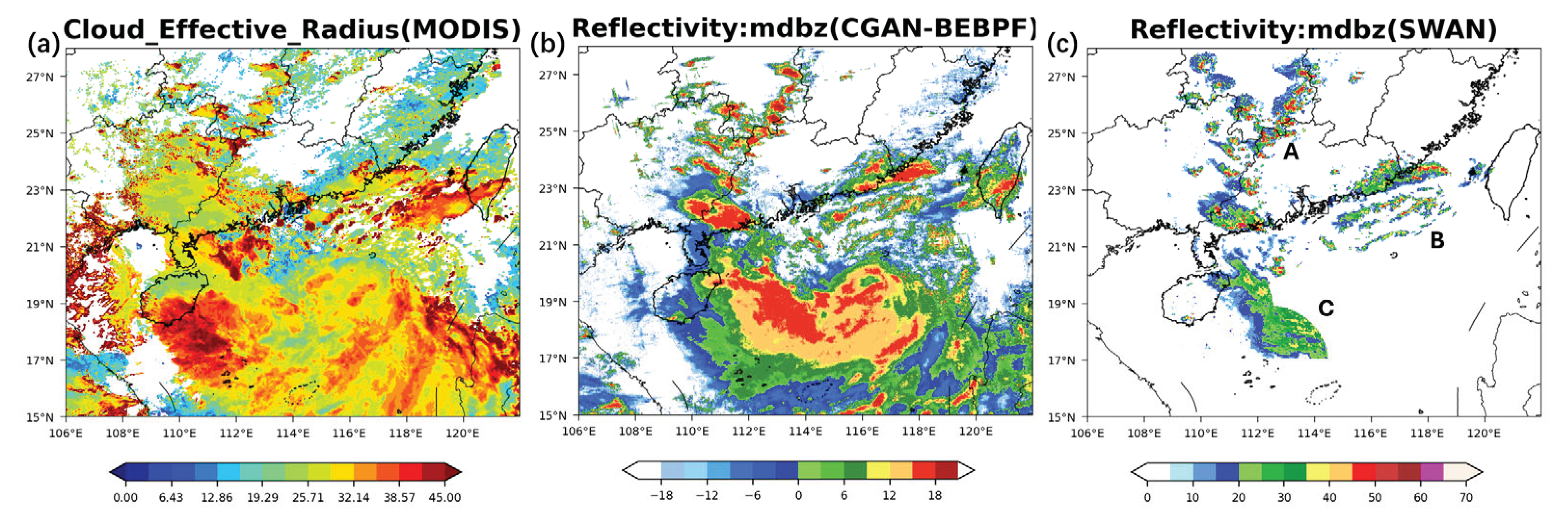

4.2. A Multi-Cell Convective System

Figure 12 shows the CGAN-BEBPF 3D cloud retrieval and the ground-based radar observations of an intense convective cloud cluster associated with the tropical storm in South China at 06:00 UTC on 24 August 2022. The MODIS effective particle radius is also presented for comparison (Figure 12a). CGAN-BEBPF retrieves 3D cloud fields (Figure 12b) that are consistent with the MODIS effective particle radius observations (Figure 12a) in both cloud distribution and structure. It successfully recovers the wavy rainbands near the coast (labeled “B”) and the major oceanic cloud mass (labeled “C”), which is beyond the detection range of the ground-based radars (Figure 12c). It is worth noting that, although the CGAN is trained with data over the oceans, CGAN-BEBPF accurately retrieves the lines of strong cellular convection over land (labeled “A”) in terms of both location and intensity. This suggests that the model has some capability for retrieving 3D cloud fields over land.

5. Conclusions and Discussion

This work extends the CGAN-based MODIS cloud retrieval work of Leinonen et al. (2019) [1] to generate seamless 3D cloud radar reflectivity for the whole MODIS visible channel granule. A Bi-directional Ensemble Binning Probability Fusion (BEBPF) technique is proposed to automate the 3D cloud radar reflectivity generation based on the CGAN model. CGAN-BEBPF enhances the accuracy of the original CGAN retrievals and enables a seamless fusion of the 64 × 64 (70 km horizontal × 15 km vertical) cloud vertical profiles (scenes) to generate 3D cloud fields for the MODIS granules. CGAN-BEBPF is applied to retrieve the 3D cloud structure of a typhoon and a multi-cell convective system, and the results are compared with ground-based radar observations. The results demonstrate that CGAN-BEBPF can retrieve the 3D clouds and rainbands of typhoons and severe convection with good accuracy and reliability. The main conclusions are summarized below.

- (1) Statistical verification of the 7180 2D cloud scenes (vertical cross-sections of cloud radar reflectivity) generated by the CGAN model of Leinonen et al. (2019) [1] reveals discontinuity between neighboring scenes, internal uncertainties, and an increase in error toward the lateral boundaries. Running the model for overlapping scenes with a small shift in the grid changes the retrieval results significantly.

- (2) A Bi-directional Ensemble Binning Probability Fusion (BEBPF) technique is introduced to overcome the issues of the Leinonen et al. CGAN model and generate seamless 3D cloud fields for the MODIS granules, termed CGAN-BEBPF. CGAN-BEBPF improves the accuracy of the Leinonen et al. (2019) [1] CGAN model retrievals (scenes) and achieves a seamless fusion of the scenes by generating overlapping scenes and pixel-wise ensembles and making use of the ensemble probability information. CGAN-BEBPF has three components: cloud masking, intensity scaling, and optimal value selection. It provides superior coverage of the low reflectivity areas and preserves high reflectivity in the cloud cores, significantly outperforming the direct splicing and simple ensemble mean methods.

- (3) CGAN-BEBPF is applied to retrieve the 3D cloud structure of typhoon Chaba and a multi-cell convective system. A comparison of the retrieved 3D cloud fields with the ground-based radar observations shows that CGAN-BEBPF is capable of retrieving the structure and locations of the rainbands and convective cells of the typhoon and severe convection, as well as the weak ice and snow clouds in the upper layer of deep convective systems, which are mostly missed by ground-based radars. Furthermore, CGAN-BEBPF can retrieve weak clouds around rainbands, producing broader 3D rainbands than those observed by ground-based radars.

- (4) Because of the signal attenuation of the CloudSat CPR (W-band), CGAN-BEBPF underestimates the radar reflectivity in the lowest 2-3 km precipitation layer of deep convective cores and has difficulty resolving sharp small-scale core structures.

Overall, CGAN-BEBPF exhibits an outstanding capability of generating high-resolution (~1 km horizontally and 240 m vertically) 3D cloud fields over the 2330 km-wide MODIS granules over the oceans, and thus can help fill some gaps in modern cloud measurements. The CGAN-BEBPF package has been run in near-real time at Nanjing University of Information Science and Technology to generate 3D cloud fields in the South China Sea and Western Pacific coastal region, providing valuable information for the forecasting of typhoons and severe convection. We are currently working to extend this capability to retrieve 3D cloud fields using Himawari-8/9 AHI and FY-4 AGRI geostationary satellite observations. In addition, the possibility of using ground-based radar reflectivity mosaics as labels for the deep learning model is under exploration. This could provide more accurate 3D precipitation reflectivity retrievals that can be assimilated into numerical weather prediction models to improve typhoon and severe convection prediction.

Author Contributions

Conceptualization, Y.L.; methodology, Y.Q. and F.W.; software, Y.Q. and F.W.; validation, Y.L., H.F. and Y.Z.; formal analysis, Y.Q. and H.F.; investigation, Y.Q. and Y.L.; resources, Y.L. and F.W.; data curation, F.W. and Y.Z.; writing—original draft preparation, Y.Q.; writing—review and editing, Y.L., H.F. and J.D.; visualization, Y.Q.; supervision, Y.L.; project administration, Y.L.; funding acquisition, Y.L. and J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the NSFC-CMA Joint Research (Grant #U2342222) and the National Key R&D Program of China (Grant 2023YFC3007600).

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Leinonen, J.; Guillaume, A.; Yuan, T. Reconstruction of Cloud Vertical Structure With a Generative Adversarial Network. Geophys. Res. Lett. 2019, 46, 7035–7044.

- Remer, L.A.; Tanré, D.; Kaufman, Y.J.; et al. Algorithm for remote sensing of tropospheric aerosol from MODIS: Collection 005. National Aeronautics and Space Administration, 2006, 1490.

- Li, Z.; Barker, H.W.; Moreau, L. The variable effect of clouds on atmospheric absorption of solar radiation. Nature 1995, 376, 486–490.

- Stephens, G.L. Cloud Feedbacks in the Climate System: A Critical Review. J. Clim. 2005, 18, 237–273.

- Randall, D.; Khairoutdinov, M.; Arakawa, A.; et al. Breaking the cloud parameterization deadlock. Bull. Am. Meteorol. Soc. 2003, 84, 1547–1564.

- Stephens, G.L.; Vane, D.G.; Boain, R.J.; et al. The CloudSat mission and the A-Train: A new dimension of space-based observations of clouds and precipitation. Bull. Am. Meteorol. Soc. 2002, 83, 1771–1790.

- Huffman, G.J.; Bolvin, D.T.; Braithwaite, D.; et al. NASA global precipitation measurement (GPM) integrated multi-satellite retrievals for GPM (IMERG). Algorithm theoretical basis document (ATBD) version, 2015, 4, 30.

- Barker, H.W.; Jerg, M.P.; Wehr, T.; et al. A 3D cloud-construction algorithm for the EarthCARE satellite mission. Q. J. R. Meteorol. Soc. 2011, 137, 1042–1058.

- Miller, S.D.; Forsythe, J.M.; Partain, P.T.; Haynes, J.M.; Bankert, R.L.; Sengupta, M.; Mitrescu, C.; Hawkins, J.D.; Haar, T.H.V. Estimating Three-Dimensional Cloud Structure via Statistically Blended Satellite Observations. J. Appl. Meteorol. Clim. 2014, 53, 437–455.

- Noh, Y.-J.; Haynes, J.M.; Miller, S.D.; Seaman, C.J.; Heidinger, A.K.; Weinrich, J.; Kulie, M.S.; Niznik, M.; Daub, B.J. A Framework for Satellite-Based 3D Cloud Data: An Overview of the VIIRS Cloud Base Height Retrieval and User Engagement for Aviation Applications. Remote Sens. 2022, 14, 5524.

- Dubovik, O.; Schuster, G.L.; Xu, F.; Hu, Y.; Bösch, H.; Landgraf, J.; Li, Z. Grand Challenges in Satellite Remote Sensing. Front. Remote Sens. 2021, 2.

- Jeppesen, J.H.; Jacobsen, R.H.; Inceoglu, F.; Toftegaard, T.S. A cloud detection algorithm for satellite imagery based on deep learning. Remote Sens. Environ. 2019, 229, 247–259.

- Lee, Y.; Kummerow, C.D.; Ebert-Uphoff, I. Applying machine learning methods to detect convection using Geostationary Operational Environmental Satellite-16 (GOES-16) advanced baseline imager (ABI) data. Atmos. Meas. Tech. 2021, 14, 2699–2716.

- Pritt, M.; Chern, G. Satellite image classification with deep learning. In 2017 IEEE Applied Imagery Pattern Recognition Workshop (AIPR); IEEE, 2017; pp. 1–7.

- Chen, S.; Wang, H.; Xu, F.; Jin, Y.-Q. Target Classification Using the Deep Convolutional Networks for SAR Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4806–4817.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; et al. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Wang, F.; Liu, Y.; Zhou, Y.; Sun, R.; Duan, J.; Li, Y.; Ding, Q.; Wang, H. Retrieving Vertical Cloud Radar Reflectivity from MODIS Cloud Products with CGAN: An Evaluation for Different Cloud Types and Latitudes. Remote Sens. 2023, 15, 816.

- Marchand, R.; Mace, G.G.; Ackerman, T.; Stephens, G. Hydrometeor Detection Using Cloudsat—An Earth-Orbiting 94-GHz Cloud Radar. J. Atmos. Ocean. Technol. 2008, 25, 519–533.

- Stephens, G.L.; Vane, D.G.; Tanelli, S.; Im, E.; Durden, S.; Rokey, M.; Reinke, D.; Partain, P.; Mace, G.G.; Austin, R.; et al. CloudSat mission: Performance and early science after the first year of operation. J. Geophys. Res. Atmos. 2008, 113.

- Parkinson, C. Aqua: An Earth-Observing Satellite mission to examine water and other climate variables. IEEE Trans. Geosci. Remote Sens. 2003, 41, 173–183.

- Platnick, S.; King, M.D.; Ackerman, S.A.; Menzel, W.P.; Baum, B.A.; Riédi, J.C.; Frey, R.A. The MODIS cloud products: Algorithms and examples from Terra. IEEE Trans. Geosci. Remote Sens. 2003, 41, 459–473.

- Barnes, W.; Xiong, X.; Salomonson, V. Status of Terra MODIS and Aqua MODIS. Adv. Space Res. 2003, 32, 2099–2106.

- Kotarba, A.Z. Calibration of global MODIS cloud amount using CALIOP cloud profiles. Atmos. Meas. Tech. 2020, 13, 4995–5012.

- Kang, L.; Marchand, R.; Smith, W. Evaluation of MODIS and Himawari-8 Low Clouds Retrievals Over the Southern Ocean With In Situ Measurements From the SOCRATES Campaign. Earth Space Sci. 2021, 8.

- Cronk, H.; Partain, P. CloudSat MOD06-AUX auxiliary data process description and interface control document. National Aeronautics and Space Administration Earth System Science Pathfinder Mission, 2018.

- Barnes, W.; Pagano, T.; Salomonson, V. Prelaunch characteristics of the Moderate Resolution Imaging Spectroradiometer (MODIS) on EOS-AM1. IEEE Trans. Geosci. Remote Sens. 1998, 36, 1088–1100.

- Wilson, J.W.; Crook, N.A.; Mueller, C.K.; et al. Nowcasting thunderstorms: A status report. Bull. Am. Meteorol. Soc. 1998, 79, 2079–2100.

- Casati, B.; Wilson, L.J.; Stephenson, D.B.; Nurmi, P.; Ghelli, A.; Pocernich, M.; Damrath, U.; Ebert, E.E.; Brown, B.G.; Mason, S. Forecast verification: Current status and future directions. Meteorol. Appl. 2008, 15, 3–18.

- Finley, J.P. Tornado predictions. American Meteorological Journal. A Monthly Review of Meteorology and Allied Branches of Study (1884-1896), 1884, 1, 85.

Figure 1.

The work plan for constructing a 3D cloud field from a MODIS granule based on the CGAN model.

Figure 2.

The distribution of the vertically averaged RMSE calculated for the 7180 test samples.

Figure 3.

A case of a strong convective system with a horizontal extent of about 200 km, valid at 15:45 UTC, 4 December 2017 (from Group 1 in Table 1). The segment extends from 27°32’37” S, 26°17’34” W to 24°39’37” S, 27°00’30” W. (a) The radar reflectivity factors of the CPR observation (Real) and those for 16 ensemble members generated by shifting every 4 pixels; (b) The radar reflectivity factor spectral distribution of the 16 CGAN-retrieved ensemble members (Violet) and the CPR observations (Blue). The 16 ensemble members, differing by 4 pixels each, are labeled as Gan1-16.
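
The shifting scheme in this caption can be illustrated with a minimal sketch. It assumes, hypothetically, that each CGAN scene is 64 pixels wide along the track, so that a 4-pixel shift places an interior pixel in 64 / 4 = 16 overlapping scenes; the scene width and the function names `window_starts` and `coverage` are illustrative and are not taken from the CGAN-BEBPF package.

```python
import numpy as np

def window_starts(n_pixels, width=64, stride=4):
    """Start indices of all scenes of `width` pixels, shifted by `stride`."""
    return list(range(0, n_pixels - width + 1, stride))

def coverage(n_pixels, width=64, stride=4):
    """Number of shifted scenes (ensemble members) that cover each pixel."""
    cov = np.zeros(n_pixels, dtype=int)
    for start in window_starts(n_pixels, width, stride):
        cov[start:start + width] += 1
    return cov

cov = coverage(256)
print(cov[128], cov[0])  # interior pixel vs. first pixel of the track
```

Under these assumptions, pixels near the ends of the track are covered by fewer scenes than interior pixels, so the ensemble size is not uniform along the track.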

Figure 4.

The workflow of EBPF with its three algorithms: ensemble cloud masking, ensemble intensity scaling, and ensemble optimal value selection.

Figure 5.

(a) The Accuracy score for the ensemble cloud masking scheme with different probability thresholds over the six cases in Group 1 (Table 1). Results for the case at 15:45 UTC, 4 December 2017: (b) the cloud mask of the CPR observations; (c) the ensemble cloud mask obtained without a cloudy-probability threshold; (d) the ensemble cloud mask with a cloudy-probability (number of members) threshold of 3.
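
A minimal sketch of a vote-count cloud mask of the kind described in this caption is given below. It assumes that a member counts as cloudy where its reflectivity is at least -22 dBZ (the lowest threshold used in Table 3) and that missing echoes are stored as NaN; the function name `ensemble_cloud_mask` and both defaults are illustrative assumptions rather than the package's actual interface.

```python
import numpy as np

def ensemble_cloud_mask(members, cloud_dbz=-22.0, min_members=3):
    """Return a boolean cloud mask from an ensemble of reflectivity fields.

    members: array of shape (n_members, height, width) in dBZ, NaN = no echo.
    A pixel is cloudy if at least `min_members` members reach `cloud_dbz`.
    """
    members = np.asarray(members, dtype=float)
    # Treat missing values as "no echo" so they never vote cloudy.
    filled = np.where(np.isnan(members), -np.inf, members)
    votes = (filled >= cloud_dbz).sum(axis=0)
    return votes >= min_members

# Four members, one height level, three pixels.
members = np.full((4, 1, 3), np.nan)
members[:3, 0, 0] = 0.0   # three members see cloud at pixel 0
members[:2, 0, 1] = 5.0   # only two members see cloud at pixel 1
mask = ensemble_cloud_mask(members)
print(mask)
```

Setting `min_members=1` in this sketch would mark a pixel as cloudy if any member is cloudy, which is one plausible reading of the mask "without a threshold" in panel (c).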

Figure 6.

The violin plots of the ensemble-blending reflectivity with four methods: the reflectivity mode (MODE), the mean reflectivity (MEAN), the maximum reflectivity (MAX), and avg_max_prob (AMP), for 8 radar reflectivity bins (a–h). The shaded area between the black dashed lines in (a–h) represents the target grades. The percentage indicates the hit rate on the target CPR bin.
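
To make three of the blending methods concrete, the following sketch computes MEAN, MAX, and MODE for the ensemble values at a single pixel. Several choices here are assumptions: the mean is taken directly in dBZ, the mode is the center of the most populated bin, and `bin_edges` are hypothetical edges rather than the eight bins used in the figure. AMP is left out because its definition is not given in this section.

```python
import numpy as np

def blend_pixel(values, bin_edges):
    """Blend ensemble reflectivities (dBZ) at one pixel with three methods."""
    v = np.asarray(values, dtype=float)
    v = v[~np.isnan(v)]  # drop members with no echo
    if v.size == 0:
        return {"MEAN": np.nan, "MAX": np.nan, "MODE": np.nan}
    counts, edges = np.histogram(v, bins=bin_edges)
    k = int(np.argmax(counts))              # most populated bin
    mode = 0.5 * (edges[k] + edges[k + 1])  # report its center
    return {"MEAN": float(v.mean()), "MAX": float(v.max()), "MODE": float(mode)}

edges = [-25, -15, -5, 5, 15, 25]  # hypothetical 10 dB bins
result = blend_pixel([-10, -8, -9, 12, np.nan], edges)
print(result)
```

In this toy pixel, one strong member pulls MEAN and MAX upward while MODE stays in the bin where most members agree, which is the kind of difference the violin plots compare.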

Figure 7.

(a) Cloud radar reflectivity of the CPR observations (a1) vs. the retrievals with Direct Splicing (a2), Ensemble Mean (a3), Ensemble Maximum (a4), and EBPF (a5); (b) Masks for the intensity Grade A (10 dBZ to 20 dBZ), B (-5 dBZ to 10 dBZ), and C (-22 dBZ to -5 dBZ) derived from the cloud radar reflectivity in (a1-a5). The case is a nimbostratus intercepted at 23:10 UTC, 31 December 2014 (Table 1). The segment is from 46°56’35” N, 151°16’20” W to 52°41’39” N, 153°42’5” W.

Figure 8.

(a) Schematic diagram showing CGAN cloud retrieving in the directions along and normal to the CPR track; (b) CPR observed cloud vertical profile (cross-section); (c) splicing the CGAN scene retrievals in the direction normal to the CPR track; (d) splicing the EBPF retrievals in the direction normal to the CPR track; and (e) the CGAN-BEBPF retrieval. The case is a nimbostratus intercepted at 23:10 UTC, 31 December 2014 (Table 1). The segment is from 46°56’35” N, 151°16’20” W to 52°41’39” N, 153°42’5” W.

Figure 9.

Flowchart for constructing a 3D cloud field for a MODIS granule using CGAN-BEBPF.

Figure 10.

3D cloud fields and observations of Typhoon Chaba (South China, 02:50 UTC on 2 July 2022). (a) The MODIS effective particle radius; (b) The composite radar reflectivity of the CGAN-BEBPF 3D cloud fields; (c) The composite radar reflectivity of the ground-based radar observation (SWAN); (d) The composite radar reflectivity of the CGAN-BEBPF 3D cloud fields masked with the cloud areas of the ground-based radar observations.

Figure 11.

The comparison of the retrieved MODIS 3D cloud fields with ground-based radar observations of Typhoon Chaba (South China, 02:50 UTC on 2 July 2022), at altitudes of 1500 m, 6000 m, 9000 m, and 14000 m. BEBPF denotes the 3D cloud fields retrieved from MODIS by CGAN-BEBPF, while SWAN denotes the ground-based radar observations.

Figure 12.

A multi-convective cell system (South China, 06:00 UTC on 24 August 2022). (a) The MODIS effective particle radius; (b) The composite radar reflectivity of the CGAN-BEBPF 3D cloud fields; (c) The composite radar reflectivity of the ground-based radar (SWAN).

Table 1.

Description of the weather cases in this study. They are divided into two groups. The first group has matched CPR observations, while the second has no matched CPR observations. All times are in UTC.

| Group | Time (UTC) | Region or system |
| --- | --- | --- |
| Group 1 | 23:10, 31 December 2014 | Western Pacific |
| Group 1 | 14:10, 31 March 2014 | Atlantic Ocean |
| Group 1 | 23:45, 31 March 2015 | Western Pacific |
| Group 1 | 16:40, 20 October 2016 | Atlantic Ocean |
| Group 1 | 04:00, 30 July 2016 | Eastern Pacific |
| Group 1 | 15:45, 4 December 2017 | Atlantic Ocean |
| Group 2 | 02:50, 2 July 2022 | Typhoon Chaba |
| Group 2 | 06:00, 24 August 2022 | A complex convective system |

Table 2.

The binary confusion matrix.

| | Predictions (Positive) | Predictions (Negative) |
| --- | --- | --- |
| Observation (Positive) | True Positive (TP) | False Negative (FN) |
| Observation (Negative) | False Positive (FP) | True Negative (TN) |
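
As an illustration of how the entries of this matrix feed the verification scores, the sketch below builds the four counts by thresholding retrieved and observed reflectivity and then computes Accuracy and the threat score (TS). It assumes the standard definitions, Accuracy = (TP + TN) / (TP + FN + FP + TN) and TS = TP / (TP + FN + FP), and treats NaN as "no echo"; the function names are illustrative.

```python
import numpy as np

def confusion_counts(pred_dbz, obs_dbz, threshold):
    """Count TP, FN, FP, TN for the event 'reflectivity >= threshold'."""
    pred = np.asarray(pred_dbz, dtype=float)
    obs = np.asarray(obs_dbz, dtype=float)
    # Missing values are treated as below any threshold.
    pred_evt = np.where(np.isnan(pred), -np.inf, pred) >= threshold
    obs_evt = np.where(np.isnan(obs), -np.inf, obs) >= threshold
    tp = int(np.sum(pred_evt & obs_evt))
    fn = int(np.sum(~pred_evt & obs_evt))
    fp = int(np.sum(pred_evt & ~obs_evt))
    tn = int(np.sum(~pred_evt & ~obs_evt))
    return tp, fn, fp, tn

def accuracy(tp, fn, fp, tn):
    total = tp + fn + fp + tn
    return (tp + tn) / total if total else float("nan")

def threat_score(tp, fn, fp):
    denom = tp + fn + fp
    return tp / denom if denom else float("nan")

obs = [5.0, -30.0, 12.0, np.nan, 0.0]
pred = [6.0, 1.0, -30.0, np.nan, -30.0]
tp, fn, fp, tn = confusion_counts(pred, obs, threshold=0.0)
print(tp, fn, fp, tn, accuracy(tp, fn, fp, tn), threat_score(tp, fn, fp))
```

Because TS ignores true negatives, it is not inflated by the large clear-sky portion of a scene, which makes it a stricter score than Accuracy at high reflectivity thresholds.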

Table 3.

Average TS scores at different reflectivity thresholds for all six cases in Group 1 (Table 1). The thresholds are -22 dBZ, -15 dBZ, -10 dBZ, -5 dBZ, 0 dBZ, 5 dBZ, and 10 dBZ.

| | -22 dBZ | -15 dBZ | -10 dBZ | -5 dBZ | 0 dBZ | 5 dBZ | 10 dBZ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Direct Splicing | 0.57 | 0.59 | 0.59 | 0.56 | 0.49 | 0.39 | 0.26 |
| Ensemble Mean | 0.56 | 0.61 | 0.61 | 0.57 | 0.52 | 0.41 | 0.20 |
| Ensemble Maximum | 0.56 | 0.61 | 0.61 | 0.57 | 0.52 | 0.42 | 0.33 |
| EBPF | 0.60 | 0.62 | 0.64 | 0.58 | 0.52 | 0.44 | 0.37 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).