3.1. First Iteration of the Balzi Rossi VR Experience

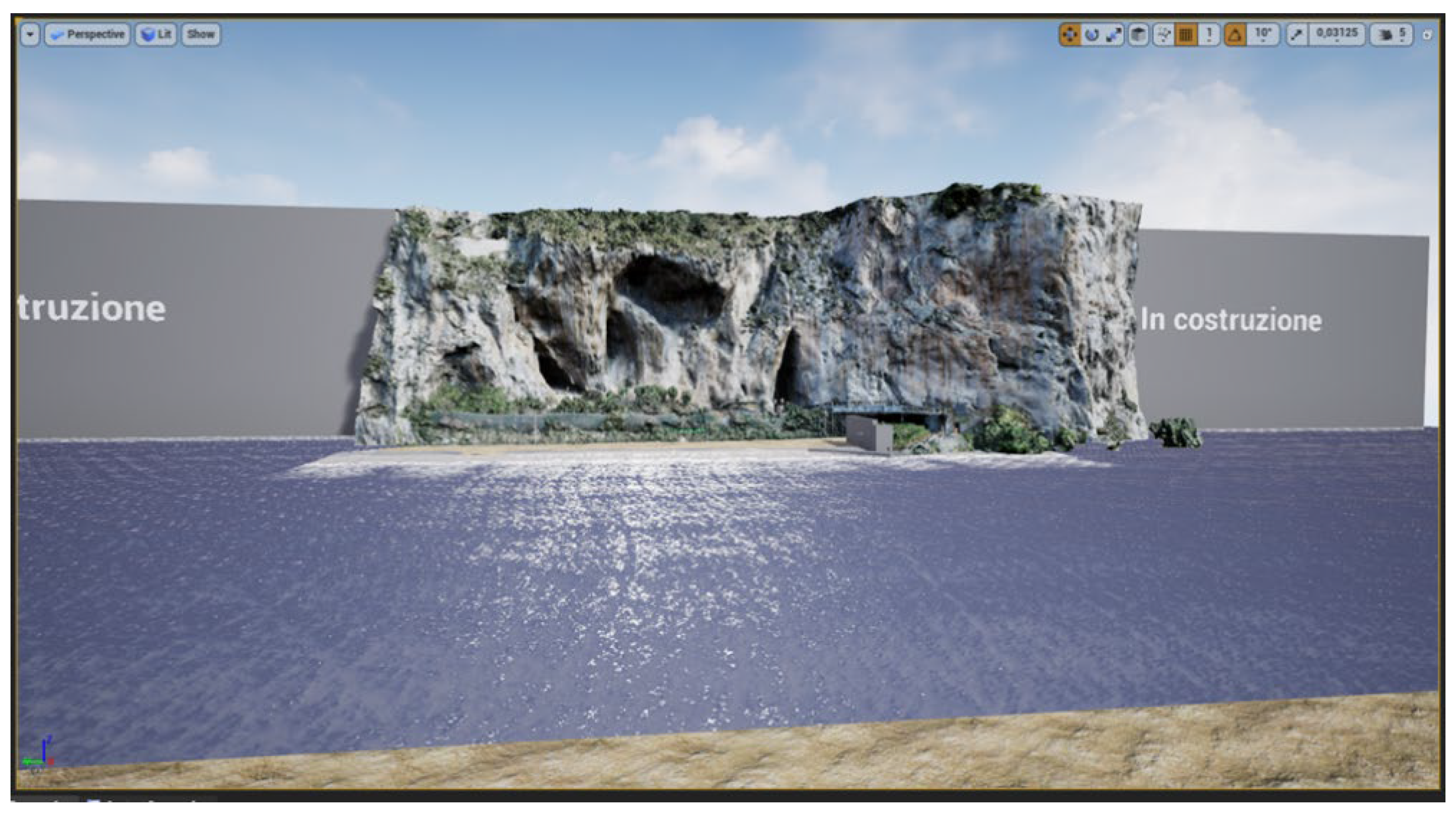

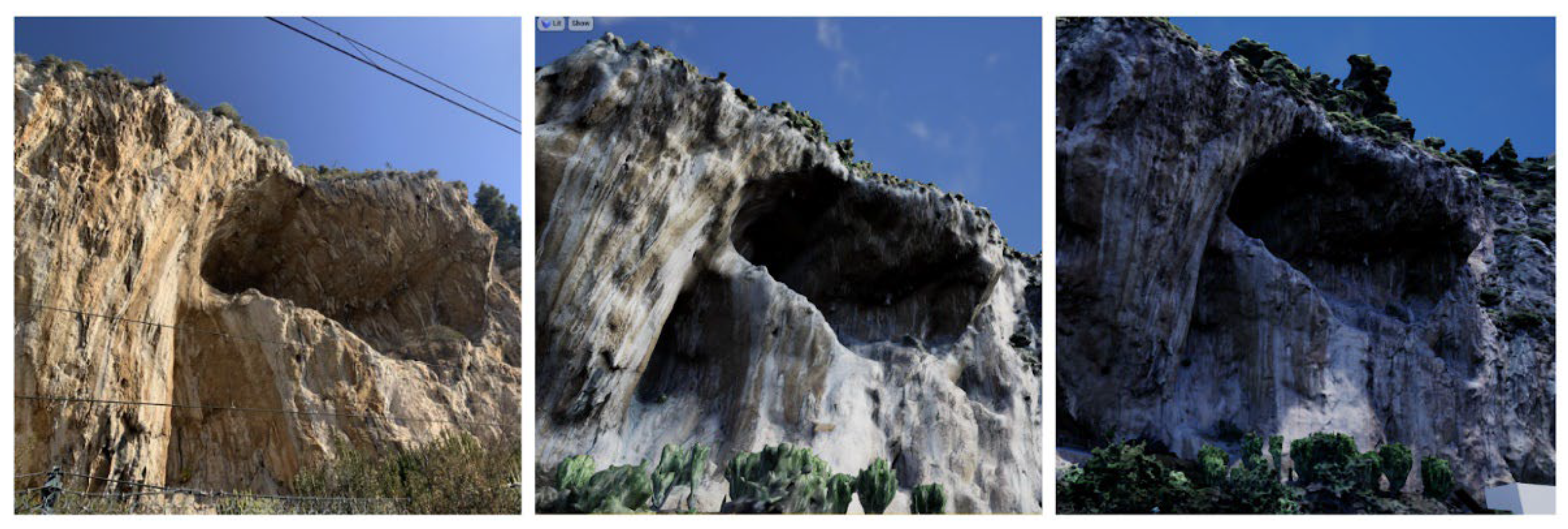

The first version of the Balzi Rossi VR Experience (Figure 1) was developed using Unreal Engine 4. The first step was to create a 3D reconstruction of the site using photogrammetry. To do this, 955 photographs of the site were taken from different angles using drones. These photographs were then processed with the RealityCapture (RC) software, which generated a cloud of about 3 billion 3D points. The cloud included the “Caviglione” cave and the “Florestano” cave, where some of the most interesting rock engravings are located, for a total of about 10,800 m².

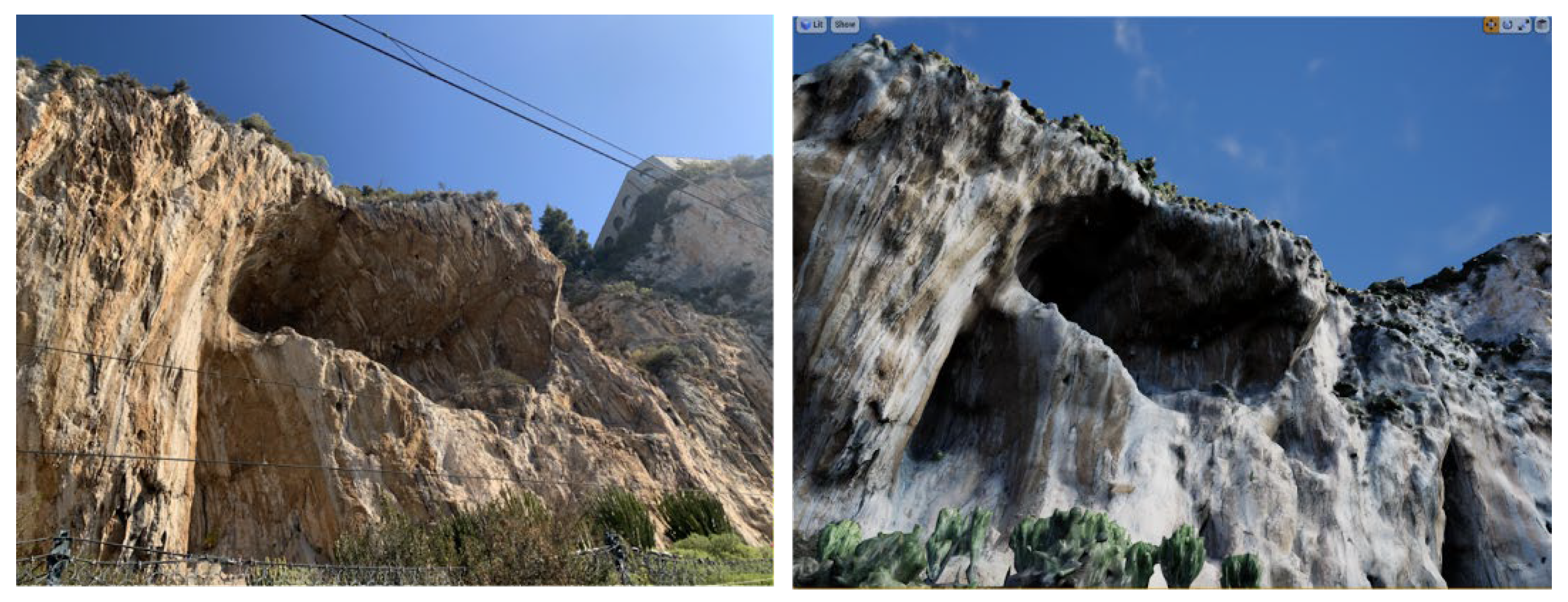

The point cloud was then converted into a polygonal mesh of about 250 million triangles. Because of the limits of Unreal Engine 4 and of the hardware at our disposal, the mesh then had to be decimated to 250,000 triangles. The loss of geometric resolution was compensated by high-definition texturing, resulting in a model of sufficient quality for the purposes of the project at this stage (Figure 2).
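To give a sense of scale for this decimation, the following minimal Python sketch works through the arithmetic using only the figures quoted above. Treating the 10,800 m² figure as the surface covered by the mesh is an assumption made for illustration; the function names are hypothetical.

```python
# Figures quoted in the text.
FULL_MESH_TRIANGLES = 250_000_000
REDUCED_MESH_TRIANGLES = 250_000
SITE_AREA_M2 = 10_800  # assumption: used here as the surface covered by the mesh


def reduction_factor(before: int, after: int) -> float:
    """How many original triangles each remaining triangle stands in for."""
    return before / after


def triangles_per_m2(triangles: int, area_m2: float) -> float:
    """Average triangle density over the reconstructed area."""
    return triangles / area_m2


if __name__ == "__main__":
    factor = reduction_factor(FULL_MESH_TRIANGLES, REDUCED_MESH_TRIANGLES)
    print(f"reduction factor: {factor:.0f}x")
    for label, count in (("full", FULL_MESH_TRIANGLES), ("reduced", REDUCED_MESH_TRIANGLES)):
        print(f"{label} mesh: {triangles_per_m2(count, SITE_AREA_M2):.1f} triangles per m2")
```

Under these assumptions the reduced mesh keeps one triangle in a thousand, which is why the texture has to carry most of the visible detail.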

We started from the Unreal Engine 4.27 VR Template, which by default already implements basic interaction, a teleport locomotion system, forward rendering, and MSAA anti-aliasing, all of which are particularly relevant for VR [11].

The 3D model was then imported into the template project. However, the model was still too complex to be displayed in real time, so it was divided into 25 separate pieces. Once the model was imported, a level was created in which to place it. A number of assets were then added to the level, including an atmospheric system and a landscape simulating a beach, to evoke an environment similar to the Balzi Rossi area, even though at this stage only the cliff was a faithful reproduction of the real landscape.

The base area of the level was composed of 24 square planes with 100 m sides, forming an area of 600 × 400 m on which the various sections of the level were built. After modeling the beach, a flying platform was added about 200 m from the shore, which served as a starting base and a panoramic viewpoint.

To build the sea, we used the free asset “WaterMaterial” from the Epic Games store, a collection of assets for crafting rivers, lakes, and seas. By combining various meshes from this package, an expanse of seawater spanning approximately 800 m was created, large enough that the edge of the water cannot be seen in virtual reality. Among the materials made available, “M_Ocean_Cheaper” was chosen because it is less complex and has less impact on the overall performance of the experience. To obtain a consistent-looking sea (in spite of the material’s name), the material relies on vertex painting, used here to vary the wave motion between the water in contact with the beach and the water farther from the shore.
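The base area described above (24 planes with 100 m sides covering 600 × 400 m) implies a 6 × 4 arrangement. The short Python sketch below makes that arithmetic explicit; the function names and the choice of origin are hypothetical and do not come from the project.

```python
from typing import List, Tuple

PLANE_SIZE_M = 100.0
COLUMNS, ROWS = 6, 4  # assumption: the 6 x 4 layout implied by 24 planes and 600 x 400 m


def plane_centres(columns: int, rows: int, size_m: float) -> List[Tuple[float, float]]:
    """Centre of every plane, with the grid origin at one corner of the base area."""
    return [((c + 0.5) * size_m, (r + 0.5) * size_m)
            for r in range(rows) for c in range(columns)]


def footprint(columns: int, rows: int, size_m: float) -> Tuple[float, float]:
    """Overall width and depth covered by the grid, in metres."""
    return (columns * size_m, rows * size_m)


if __name__ == "__main__":
    centres = plane_centres(COLUMNS, ROWS, PLANE_SIZE_M)
    print(len(centres), "planes covering", footprint(COLUMNS, ROWS, PLANE_SIZE_M), "m")
```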

The next step was to make the map navigable. To do this, a series of navigation volumes (NavMeshBoundsVolumes) were positioned, which outlined the areas where teleportation was allowed. Volumes were also positioned to prevent teleportation in areas where players were not supposed to go. The cliff’s collisions were then created using simplified collision volumes to allow for better performance.
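The combination of volumes that allow teleportation and volumes that forbid it can be illustrated with an engine-independent sketch. The Python code below is not Unreal's navigation system: it models both kinds of volume as axis-aligned boxes, and every name in it (`Box`, `can_teleport`) is hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned volume given by its minimum and maximum corners."""
    minimum: Point
    maximum: Point

    def contains(self, point: Point) -> bool:
        return all(lo <= value <= hi
                   for lo, value, hi in zip(self.minimum, point, self.maximum))


def can_teleport(target: Point, allowed: Iterable[Box], blocked: Iterable[Box]) -> bool:
    """A target is valid if it lies in an allowed volume and in no blocking volume."""
    if any(volume.contains(target) for volume in blocked):
        return False
    return any(volume.contains(target) for volume in allowed)


if __name__ == "__main__":
    beach = Box((0.0, 0.0, 0.0), (600.0, 400.0, 5.0))
    fragile_area = Box((100.0, 100.0, 0.0), (150.0, 150.0, 5.0))
    print(can_teleport((50.0, 50.0, 1.0), [beach], [fragile_area]))
    print(can_teleport((120.0, 120.0, 1.0), [beach], [fragile_area]))
```

Blocking volumes take priority in this sketch, so an excluded area stays unreachable even when it sits inside a navigable one.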

A menu was then added, which allowed two actions: “Restart” to return to the initial level and “Real life” to close the simulation.

One of the objectives of this project is to highlight the rock art of the archaeological site and show it in a completely new way. In fact, due to the passage of centuries and the cleaning of the sediments present in the caves, some engravings are now in a high position compared to the ground (about 5 m high), making it difficult to observe them up close.

Using very high-definition images taken in a photography session with controlled, uniform light, decals were created. Decals are a type of material that projects properties such as color, roughness, and normals onto other meshes in the scene, conforming to the shape of the surface to which they are applied. They are commonly used to add details such as dirt, damage, or graffiti to the underlying surfaces; in this case they emulate the appearance of the engravings while following the shape of the mesh.

The decals were positioned to match as closely as possible the locations of the engravings on the real site. To improve the view of the engravings, a ladder with a platform was placed to reach their height. Finally, to further improve the experience, signs were added to highlight the engravings, which by their nature are difficult to distinguish.

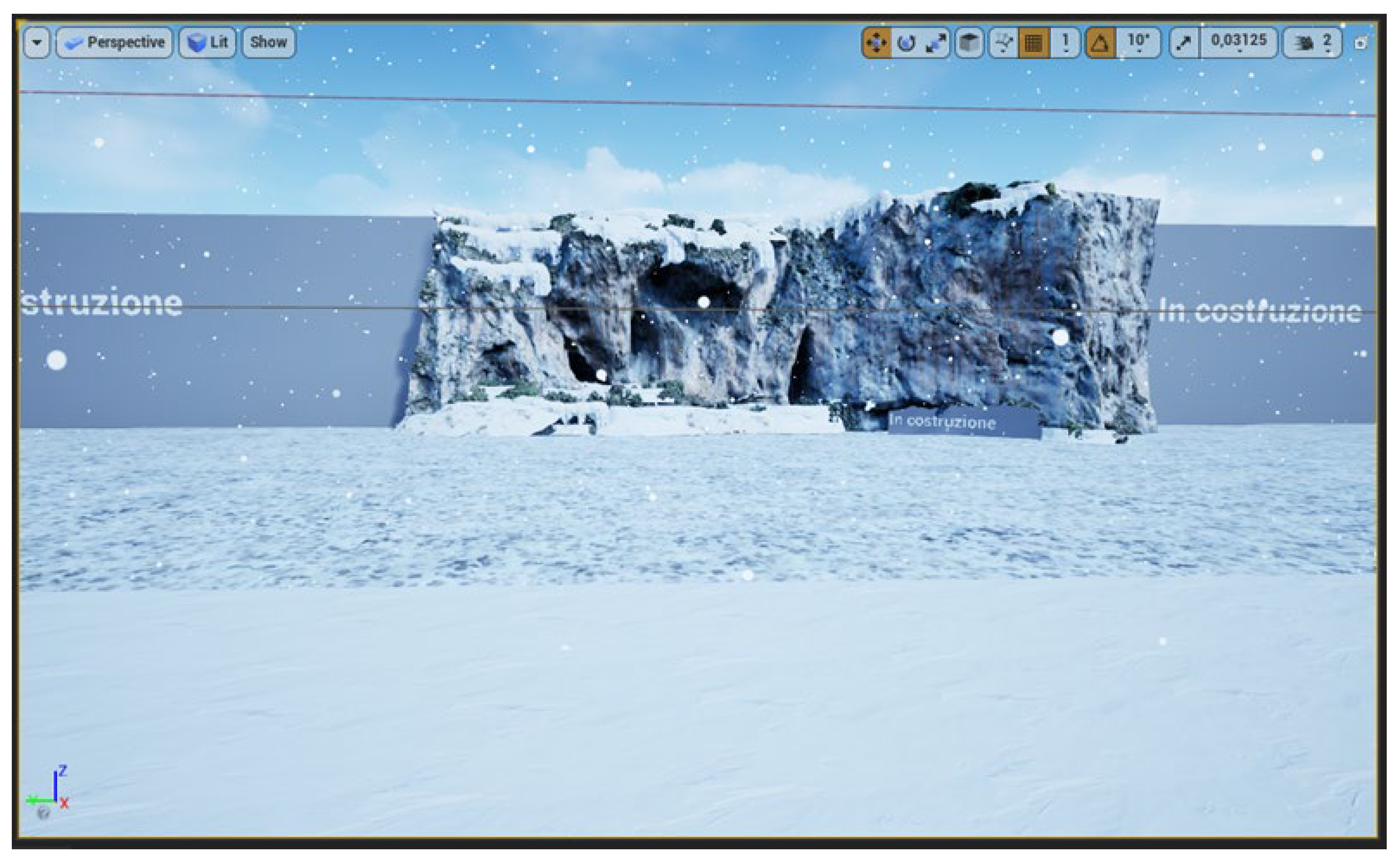

Based on this first level, two additional levels were created: the first consisted of a reconstruction of the Balzi Rossi cliff at the end of the Würm glaciation, about 12,000 years ago (Figure 3); the second consisted of an embellished but less archaeologically accurate version, aimed at showing how the demo could be improved.

The Würm glaciation was the last glaciation before the current interglacial period. During the glaciation, the sea level was much lower than today and the coastline lay about 2 km from its present position, creating a large plain in front of the cliff. Furthermore, the landscape that is now coastal-Mediterranean was at the time more similar to Alpine or Nordic landscapes. For the reconstruction, we moved the sea back by 200 m from its original position, to convey the shift of the coastline without weighing down the demo too much. In addition, the textures were modified to simulate a more glacial landscape, and all the human constructions visible on the cliff were removed.

The embellished version was created as a close copy of the original, with some changes that made it more appealing to look at. The placeholder cubes were replaced by rock models, the connection between the beach and the path was replaced by a series of rocks, many areas where the vegetation looked extremely artificial were removed, and the path to follow was built as a stone bridge.

All levels are accessible through interactions from the observation platform.

The work lasted more than a month and a half in total and it was presented on February 10, 2022 as part of the commemoration for the centenary of the death of Prince Albert I.

3.2. Second Iteration of the Balzi Rossi VR Experience

In February 2023, following the release of Unreal Engine 5 and its new features, we decided to develop a second iteration of the application, with the aim of surpassing the visual quality and fidelity of the first. A major limitation of the first version was the need to split the cliff model and to limit its polygon count to 250,000 in order to import it into the game engine and obtain adequate performance in virtual reality. Unreal Engine 5 opened new ways to improve the Balzi Rossi experience, notably through Nanite, which enabled a significant improvement in the quality of the cliff model and in other aspects of the VR application.

Nanite is the virtualized geometry system of Unreal Engine 5, which greatly increases the engine’s capacity to render intricate and detailed 3D environments [12]. It lets creators build highly detailed assets without the conventional polygon budget limitations. Nanite renders very large amounts of geometric detail by grouping triangles into clusters and by streaming and processing only the detail that is visible at any given time. This allows developers to use high-resolution source art composed of millions, or even billions, of polygons directly in the engine and to obtain film-quality visuals in real time. Despite this level of detail, Nanite maintains efficient performance by scaling geometry according to the camera’s perspective and processing only essential data. The system can potentially replace the traditional Levels of Detail (LOD) approach to performance optimization. Additionally, Nanite allows for real-time, dynamic changes in lighting, shadows, and other environmental variables, maintaining high fidelity without depending on precomputed data.
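The idea of scaling geometry relative to the camera can be illustrated with a deliberately simplified sketch. The Python code below is not Nanite's algorithm: it assumes a list of detail levels, each with a known geometric error in metres, and picks the coarsest level whose error projects to at most one pixel. The function names, the error values, the field of view, and the screen height are all hypothetical.

```python
import math
from typing import Sequence


def projected_error_px(error_m: float, distance_m: float,
                       fov_y_deg: float = 90.0, screen_height_px: int = 2000) -> float:
    """Approximate on-screen size, in pixels, of a geometric error seen at a distance."""
    scale = screen_height_px / (2.0 * math.tan(math.radians(fov_y_deg) / 2.0))
    return error_m / distance_m * scale


def pick_level(level_errors_m: Sequence[float], distance_m: float,
               max_error_px: float = 1.0) -> int:
    """Index of the coarsest level whose projected error stays within max_error_px.

    level_errors_m is ordered from finest (index 0) to coarsest. If even the
    finest level exceeds the threshold, index 0 is returned.
    """
    chosen = 0
    for index, error_m in enumerate(level_errors_m):
        if projected_error_px(error_m, distance_m) <= max_error_px:
            chosen = index
    return chosen


if __name__ == "__main__":
    errors = [0.001, 0.004, 0.016, 0.064]  # hypothetical error per level, in metres
    for distance in (2.0, 20.0, 100.0):
        print(f"{distance:>6.1f} m -> level {pick_level(errors, distance)}")
```

In this sketch, nearby geometry keeps the finest level while distant geometry falls back to coarser ones, so the cost follows what is visible on screen rather than the size of the source mesh.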

To use Nanite, a transition from forward to deferred rendering was necessary, as forward rendering does not support Nanite [13]. Although the lighting pass is computationally more expensive in deferred rendering than in forward rendering, the use of Nanite still brought significant performance gains. Given Nanite’s rendering efficiency and the resulting savings in graphics resources, we decided to increase the polygon count of the cliff model to obtain a better visual result. The photogrammetric processing was repeated in RealityCapture, initially yielding a cliff model of 60 million triangles. Subsequent trials showed that, beyond a certain polygon count, additional triangles had only a marginal effect on the rendered image. Consequently, a 5-million-polygon model was exported from RC and imported into the engine. This model was markedly more detailed than the previous 250,000-polygon version and significantly more photorealistic.

During the first import attempt, a problem in converting the mesh into Nanite clusters caused Unreal to crash. After several rescaling trials in external programs, it became evident that the error stemmed from the very large size of the mesh. Scaling the model down by a factor of 100 allowed it to be imported into the engine without problems. As is common for 3D models generated by photogrammetry, the imported mesh appeared ‘dirty’, with numerous unwanted parts. For the cleanup, Unreal’s internal modeling tools were used, which handle ordinary mesh editing operations well.

Nanite’s real-time streaming ensures fluid rendering despite the high polygon count and saves significant hardware resources in rendering the cliff. This gave more freedom in level design, leaving a larger computational budget for distinctive landscape elements. A primary limitation of the first version of the Balzi Rossi experience was the landscape: nearly all hardware resources were consumed by rendering the cliff, leaving a scant budget for level design. As a result, the coastal strip, including museum buildings, plants, rocks, and other natural features, was completely absent. For the construction of the new level, free assets from Quixel Megascans and the Epic Games Marketplace were used.

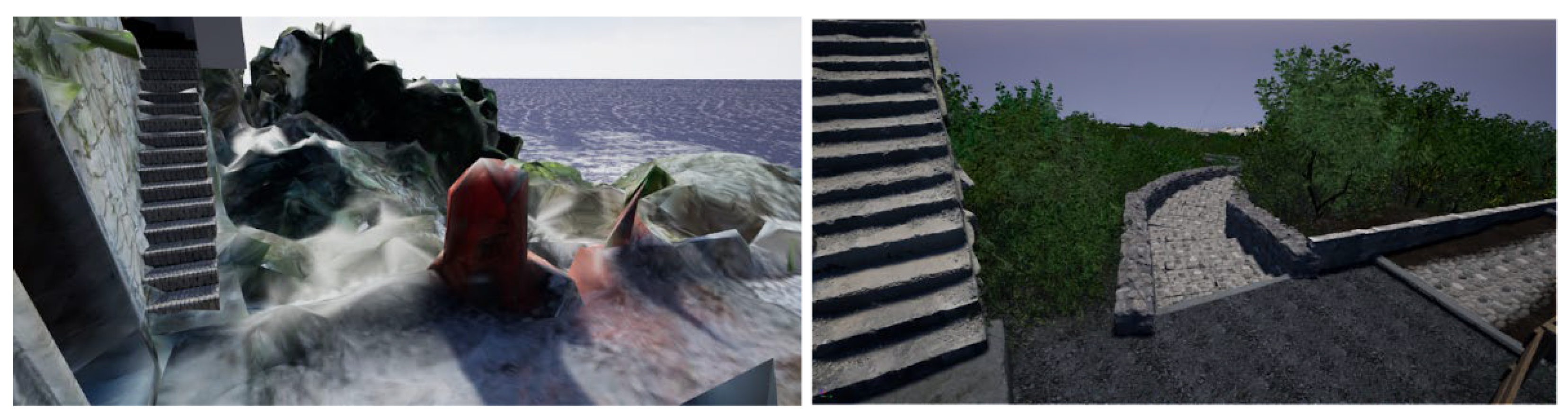

For the coastal part, the game map was modeled using Unreal’s Landscape mode to achieve shapes, colors, and hues close to reality. In the landscape material, a function that blends two different textures was used to minimize visible tiling. For the two staircases, the walkway crossing the railway, and the small stone utility shed (Figure 4), the meshes and their materials were modified, again using the modeling tools available in Unreal.

Additionally, for a more visually seamless integration of the meshes into the landscape, vertex paint and runtime virtual texturing tools were employed. Streaming Virtual Texturing (SVT), an alternative method in Unreal Engine 5 for streaming textures from disk, offers several advantages and some disadvantages compared to the traditional MIP-based method [14]. The latter performs an offline analysis of material UV usage and decides at runtime which MIP levels of a texture to load, based on visibility and on the distance of the object from the observer. This can be limiting because the streaming data considered are the MIP levels of the native-resolution textures. With high-resolution textures, loading a higher-resolution MIP level can significantly affect performance and memory use. Additionally, the CPU makes decisions on MIP texture streaming using object visibility and culling settings. Here, visibility is more conservative, meaning the system is more likely to load an object to avoid sudden appearances (popping) as the player moves. Therefore, even if only a small part of the object is visible, the entire object is considered visible, and the loaded object includes any associated textures that might be needed for streaming.

In contrast, the SVT system transmits only the parts of a texture that are actually visible to the user. This is achieved by dividing all MIP levels into small, fixed-size sections (tiles) whose visibility from the observer’s perspective is determined by the GPU; consequently, when Unreal considers an object visible, the GPU is instructed to load the required tiles into a GPU memory cache. Regardless of texture size, only the fixed-size tiles that are actually visible are considered; the GPU calculates their visibility using the z-buffer, ensuring that texture streaming occurs only for visible parts relevant to certain pixels on the screen. Runtime virtual textures (RVT), used in the Balzi Rossi level to better integrate meshes with the landscape, operate similarly to SVT but differ in some specifics [15]: here, the texture texels are generated in real time by the GPU, not precalculated and stored on disk as in SVT. RVT is designed for textures that need to be generated on demand, such as procedural textures or landscape materials with multiple layers.
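The difference between loading a whole MIP level and loading only the visible tiles can be illustrated with a small calculation. The Python sketch below is not the engine's implementation: the 128-texel tile size, the 8192-texel texture, and the function names are assumptions chosen for illustration, and visibility is reduced to a rectangle in UV space.

```python
import math
from typing import List, Tuple

TILE_SIZE = 128  # assumption: illustrative tile edge length, in texels


def tiles_per_side(texture_size: int, mip: int, tile_size: int = TILE_SIZE) -> int:
    """Number of tiles along one edge of a given MIP level."""
    mip_size = max(texture_size >> mip, 1)
    return math.ceil(mip_size / tile_size)


def tiles_in_mip(texture_size: int, mip: int, tile_size: int = TILE_SIZE) -> int:
    """Total number of tiles in a given MIP level of a square texture."""
    return tiles_per_side(texture_size, mip, tile_size) ** 2


def visible_tiles(uv_min: Tuple[float, float], uv_max: Tuple[float, float],
                  texture_size: int, mip: int,
                  tile_size: int = TILE_SIZE) -> List[Tuple[int, int]]:
    """Tiles of one MIP level touched by a visible rectangle in UV space ([0, 1] range)."""
    mip_size = max(texture_size >> mip, 1)
    per_side = tiles_per_side(texture_size, mip, tile_size)

    def span(lo: float, hi: float) -> range:
        first = min(int(lo * mip_size) // tile_size, per_side - 1)
        last = min((math.ceil(hi * mip_size) - 1) // tile_size, per_side - 1)
        return range(first, max(first, last) + 1)

    return [(x, y) for y in span(uv_min[1], uv_max[1]) for x in span(uv_min[0], uv_max[0])]


if __name__ == "__main__":
    needed = visible_tiles((0.0, 0.0), (0.25, 0.25), texture_size=8192, mip=0)
    print(len(needed), "of", tiles_in_mip(8192, 0), "tiles requested")
```

With these assumed values, a surface that shows one sixteenth of the texture requests one sixteenth of the tiles of that MIP level instead of the whole level.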

For the insertion of plants and rocks (Figure 4), Unreal’s Foliage mode was used. This ensures better asset management and, notably, all copies of a mesh placed in the level are handled as instances of a single mesh, which is cheaper to render than placing each mesh individually from the content browser. To achieve a minimum of 60 fps, extensive work on LOD optimization and mesh simplification was necessary. Instead of using 4 or 5 LOD levels, only two were employed: a level 0 with a mesh not exceeding 2,500 to 3,000 triangles, and a level 1 with a billboard image always oriented towards the observer’s camera. Additionally, the resolution of lightmaps was reduced from 8 to 4, and dynamic shadows were deactivated, retaining only static ones.
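The effect of the two-level LOD scheme on the triangle budget can be sketched as follows. This Python example is illustrative only: it switches level by distance, whereas the engine's own selection criterion is not described here, and the switch distance, the two-triangle billboard, and the function names are assumptions.

```python
from typing import Iterable

LOD0_TRIANGLES = 3000     # upper bound quoted in the text for level 0
BILLBOARD_TRIANGLES = 2   # assumption: a billboard drawn as a single quad
SWITCH_DISTANCE_M = 30.0  # hypothetical distance at which level 1 takes over


def select_lod(distance_m: float, switch_distance_m: float = SWITCH_DISTANCE_M) -> int:
    """Return 0 (full mesh) near the observer and 1 (billboard) farther away."""
    return 0 if distance_m < switch_distance_m else 1


def scene_triangles(distances_m: Iterable[float]) -> int:
    """Total triangles drawn for a set of foliage instances at the given distances."""
    per_level = {0: LOD0_TRIANGLES, 1: BILLBOARD_TRIANGLES}
    return sum(per_level[select_lod(d)] for d in distances_m)


if __name__ == "__main__":
    instances = [5.0, 10.0, 80.0, 200.0]
    print(scene_triangles(instances), "triangles with two LODs")
    print(len(instances) * LOD0_TRIANGLES, "triangles if every instance used level 0")
```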

Regarding lighting, Lumen and any form of global illumination were disabled to maintain acceptable performance: Lumen, a new feature of Unreal Engine 5 capable of generating real-time light reflections using ray tracing, is too resource-intensive for VR rendering. As detailed in the official Unreal documentation [16], to generate consistent shadows with Nanite-controlled meshes, these must work in combination with light sources set to “movable.” Consequently, the level’s directional light and skylight were configured this way. Planning for a single fixed light source, the sun, and a minimal quantity of dynamic elements, the adopted approach was precomputed lighting, where non-real-time lighting is precalculated.

As previously mentioned, for the Balzi Rossi experience, a target of no less than 70 fps was set to ensure a smooth, enjoyable view and prevent motion sickness [17]. After adding and optimizing all landscape elements, setting LODs, and employing Nanite, a problem with fps, and particularly with frame time, was encountered: the average hovered around 60 fps, but frame time was highly unstable, with frequent and noticeable stuttering. After trying various solutions, we concluded that temporal upscaling was appropriate. Temporal upscalers are algorithms that analyze data from previous and current frames, together with motion vectors, to produce an image at a higher resolution than the one rendered. They share the same general goal but differ in how they achieve it. Both Unreal’s proprietary system, TSR, and AMD’s better-known FSR essentially combine information across frames to generate higher-resolution images. In contrast, Nvidia’s DLSS (Deep Learning Super Sampling) uses deep learning to upscale frames. Specifically, DLSS employs a neural network pre-trained on thousands of images to learn how to upscale images correctly [18]. The efficiency of DLSS is further boosted by running on dedicated AI processing units on the GPU called Tensor Cores. As recently reported [19], neither Unreal’s TSR nor AMD’s FSR is comparable to DLSS, which can achieve substantial performance boosts while preserving excellent image quality. For these reasons, DLSS was implemented in the Balzi Rossi project, yielding a stable frame rate in all situations.
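The distinction drawn above between average fps and frame-time stability can be made concrete with a short calculation. The Python sketch below uses two invented frame-time traces, not measurements from the project, to show that the same average frame rate can hide very different behaviour; the function name and the 60 fps budget used in the example are assumptions.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def frame_stats(frame_times_ms: Sequence[float], target_fps: float) -> Dict[str, float]:
    """Summarise a frame-time trace against a per-frame budget of 1000 / target_fps ms."""
    budget_ms = 1000.0 / target_fps
    return {
        "average_fps": 1000.0 / mean(frame_times_ms),
        "frame_time_stdev_ms": pstdev(frame_times_ms),
        "worst_frame_ms": max(frame_times_ms),
        "frames_over_budget": sum(1 for t in frame_times_ms if t > budget_ms),
    }


if __name__ == "__main__":
    stable = [16.0] * 10                  # hypothetical trace: every frame takes 16 ms
    unstable = [10.0] * 8 + [40.0] * 2    # hypothetical trace: mostly fast, two long stalls
    for name, trace in (("stable", stable), ("unstable", unstable)):
        print(name, frame_stats(trace, target_fps=60.0))
```

Both traces average the same frame rate, but only the second contains frames that miss the budget, which is the kind of stutter a headset user perceives.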

Following the remarkable results achieved by adopting Nanite and DLSS (Figure 5), we decided to rework the Würm glaciation level for the second iteration. During the Würm period, the sea level was significantly lower, and the coastline is estimated to have been about 2 km from the cliffs. To create a map of this extent while maintaining a stable frame rate, the World Partition (WP) feature of Unreal Engine 5 was employed. WP is an automatic data management and distance-based level streaming system that offers a comprehensive solution for managing large worlds. Unlike the previous approach of dividing large levels into sublevels, WP stores the world in a single persistent level divided into grid cells and automatically streams these cells based on their distance from a streaming source [20].
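Distance-based streaming over a grid can be illustrated independently of the engine. The Python sketch below is not World Partition's implementation: it assumes square cells on a 2D grid and a circular loading range around a single streaming source, and the cell size, range values, and function name are hypothetical.

```python
import math
from typing import Set, Tuple

Cell = Tuple[int, int]


def cells_to_load(source_xy: Tuple[float, float], cell_size_m: float,
                  loading_range_m: float) -> Set[Cell]:
    """Grid cells whose bounds come within loading_range_m of the streaming source."""
    sx, sy = source_xy
    lo_x = math.floor((sx - loading_range_m) / cell_size_m)
    hi_x = math.floor((sx + loading_range_m) / cell_size_m)
    lo_y = math.floor((sy - loading_range_m) / cell_size_m)
    hi_y = math.floor((sy + loading_range_m) / cell_size_m)

    loaded: Set[Cell] = set()
    for cx in range(lo_x, hi_x + 1):
        for cy in range(lo_y, hi_y + 1):
            # Closest point of the cell to the source, found by clamping to the cell bounds.
            nearest_x = min(max(sx, cx * cell_size_m), (cx + 1) * cell_size_m)
            nearest_y = min(max(sy, cy * cell_size_m), (cy + 1) * cell_size_m)
            if math.hypot(nearest_x - sx, nearest_y - sy) <= loading_range_m:
                loaded.add((cx, cy))
    return loaded


if __name__ == "__main__":
    player = (128.0, 128.0)  # hypothetical streaming source position, in metres
    for loading_range in (100.0, 150.0, 200.0):
        cells = cells_to_load(player, cell_size_m=256.0, loading_range_m=loading_range)
        print(f"range {loading_range:.0f} m -> {len(cells)} cells loaded")
```

As the source moves, recomputing this set and comparing it with the previous one gives the cells to load and unload, so memory use depends on the loading range rather than on the 2 km extent of the map.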

For the cliffs, various modifications were made to the mesh material to provide winter characteristics such as snow and less saturated colors. These included a slight desaturation of the albedo texture, obtained by altering brightness, RGB values, and saturation, and the application of snow on the cliffs using the VertexNormalWS node in combination with snow textures. This approach preserved the original albedo texture of the cliffs while applying the snow texture only to the parts of the mesh whose normals point up along the z-axis. The intensity with which snow is applied beyond a certain degree of inclination is controlled using the Lerp node, which performs a linear interpolation between two known values (A and B) using an alpha value ranging from 0 to 1.
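The snow blend described above amounts to a linear interpolation driven by the vertical component of the surface normal. The Python sketch below reproduces that logic outside the material editor; the threshold and sharpness parameters and the function names are hypothetical stand-ins for whatever values were tuned in the actual material.

```python
from typing import Tuple

Color = Tuple[float, float, float]


def lerp(a: float, b: float, alpha: float) -> float:
    """Linear interpolation: returns a when alpha is 0 and b when alpha is 1."""
    return a + alpha * (b - a)


def clamp01(value: float) -> float:
    return max(0.0, min(1.0, value))


def snow_alpha(normal_z: float, threshold: float = 0.5, sharpness: float = 4.0) -> float:
    """Snow coverage from the z component of a unit world-space normal.

    Surfaces facing up (normal_z near 1) receive full snow; steeper surfaces
    below the threshold receive none. Both parameters are illustrative.
    """
    return clamp01((normal_z - threshold) * sharpness)


def shade(albedo: Color, snow: Color, normal_z: float) -> Color:
    """Blend the cliff albedo towards the snow color according to the slope."""
    alpha = snow_alpha(normal_z)
    return tuple(lerp(a, s, alpha) for a, s in zip(albedo, snow))


if __name__ == "__main__":
    rock, white = (0.4, 0.3, 0.2), (1.0, 1.0, 1.0)
    for nz in (0.0, 0.625, 1.0):
        print(nz, shade(rock, white, nz))
```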

To recreate a winter environment (Figure 6) with ongoing snowfall, the Exponential Height Fog and Sky Atmosphere components were configured accordingly. The former creates fog that is denser at lower altitudes and thinner at higher ones, and manages two colors: one for the hemisphere facing the main directional light and one for the opposite side. The Sky Atmosphere component simulates the scattering of light through the atmosphere, considering factors such as sky color and directional light, and includes Mie scattering and Rayleigh scattering effects for dynamic sky simulation [21].
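The height dependence of the fog can be illustrated with the usual exponential fall-off model. The Python sketch below uses a common textbook form and is not taken from the engine's source; the parameter names and values are assumptions chosen for illustration.

```python
import math


def fog_density(height_m: float, base_density: float,
                height_falloff: float, fog_height_m: float) -> float:
    """Fog density that decays exponentially with height above a reference level."""
    return base_density * math.exp(-height_falloff * (height_m - fog_height_m))


if __name__ == "__main__":
    # Hypothetical settings: reference level at 0 m, density 0.02, fall-off 0.2 per metre.
    for height in (0.0, 5.0, 10.0, 50.0):
        density = fog_density(height, base_density=0.02, height_falloff=0.2, fog_height_m=0.0)
        print(f"{height:>5.1f} m -> density {density:.6f}")
```

A larger fall-off value concentrates the fog near the ground, which suits a plain seen from a raised viewpoint such as the observation platform.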

For the snowflakes, a Niagara system with modified textures was created to simulate real snow instead of simple white circles. This particle system was attached to the VR pawn, so the emitter creates particles locally, maintaining good performance and graphic rendering. The same optimizations applied to the foliage in the main level were used for the forest, but with fewer elements, allowing the retention of dynamic shadows.
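Attaching the emitter to the pawn means that particles only ever exist in a small volume around the user. The Python sketch below illustrates that idea outside Niagara: it spawns positions in a box centred on the pawn, and the box dimensions, the particle count, and the function name are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def spawn_snowflakes(pawn_position: Vec3, count: int,
                     half_extent_m: float = 10.0, height_m: float = 8.0,
                     rng: Optional[random.Random] = None) -> List[Vec3]:
    """Spawn positions in a box centred horizontally on the pawn and extending above it."""
    rng = rng or random.Random()
    px, py, pz = pawn_position
    return [
        (px + rng.uniform(-half_extent_m, half_extent_m),
         py + rng.uniform(-half_extent_m, half_extent_m),
         pz + rng.uniform(0.0, height_m))
        for _ in range(count)
    ]


if __name__ == "__main__":
    flakes = spawn_snowflakes((100.0, 50.0, 2.0), count=5, rng=random.Random(1))
    for flake in flakes:
        print(tuple(round(c, 2) for c in flake))
```

Because the box follows the pawn, the particle count stays constant wherever the user walks on the 2 km plain.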

The movement system was enhanced in the latest version. Initially, the basic system provided by Unreal was used, allowing movement through teleportation to minimize motion sickness. In the current iteration, this system was completely redeveloped to add smooth, continuous movement using the analog sticks, offering users greater freedom of movement and exploration despite the potential increase in motion sickness. This was accomplished by converting the VR player pawn into a player character, a more robust structure that prevents passing through objects. Moreover, a tutorial was introduced to teach users how to use both movement methods, a feature not present in the previous version.

To assess performance during landscape construction and DLSS adoption, Unreal Insights was used. This tool provides highly detailed, frame-by-frame data on draw calls, CPU, GPU, and the resources used by each scene element. Another essential VR debugging tool was the Oculus Debug Tool, which provides an overlay in the HMD containing basic in-game performance information. It was used to monitor fps and hardware usage in the executable of the experience, where Unreal’s tools are difficult to use.

Another major improvement over the first version was making the experience multilingual, with tutorials and infographics available in Italian, English, and French. These three languages were chosen as a first step, since the Balzi Rossi archaeological site is on the border between France and Italy, but more languages may be added in the future.
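The stick-driven continuous movement described in this section can be sketched as a mapping from stick input and head orientation to a ground velocity. The Python code below is an illustration under stated assumptions, not the project's implementation: it assumes an Unreal-style convention (X forward and Y right at zero yaw), and the dead zone, maximum speed, and function name are hypothetical.

```python
import math
from typing import Tuple


def stick_to_velocity(stick_x: float, stick_y: float, head_yaw_deg: float,
                      max_speed_mps: float = 2.0, dead_zone: float = 0.15) -> Tuple[float, float]:
    """Convert analog stick input into a world-space ground velocity.

    stick_x is right/left and stick_y is forward/back, both in [-1, 1].
    Input inside the dead zone is ignored; beyond it, speed ramps up to max_speed_mps.
    """
    magnitude = math.hypot(stick_x, stick_y)
    if magnitude < dead_zone:
        return (0.0, 0.0)

    # Unit direction in the user's local frame, and speed rescaled past the dead zone.
    local_right, local_forward = stick_x / magnitude, stick_y / magnitude
    speed = (min(magnitude, 1.0) - dead_zone) / (1.0 - dead_zone) * max_speed_mps

    # Rotate the local direction by the head yaw so that "forward" follows the gaze.
    yaw = math.radians(head_yaw_deg)
    world_x = (math.cos(yaw) * local_forward - math.sin(yaw) * local_right) * speed
    world_y = (math.sin(yaw) * local_forward + math.cos(yaw) * local_right) * speed
    return (world_x, world_y)


if __name__ == "__main__":
    print(stick_to_velocity(0.0, 1.0, head_yaw_deg=0.0))
    print(stick_to_velocity(0.0, 1.0, head_yaw_deg=90.0))
    print(stick_to_velocity(0.05, 0.05, head_yaw_deg=0.0))
```

Capping the speed and ignoring small stick deflections are common ways to keep continuous locomotion comfortable, which matters given the motion sickness trade-off noted above.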