Submitted:

22 March 2024

Posted:

26 March 2024

Abstract

Keywords:

1. Introduction

- The volume of observational data available from satellites, in-situ scientific measurements, and, in the future, internet-of-things devices is growing at tremendous speed. Even now, only a small percentage of the available data is used in modern data assimilation systems (DASs). The problems with assimilating new data in DASs range from processing time that grows with the amount of data while computational resources remain limited, to the need for new approaches to assimilating new types of data [4,5].

- The increasing requirements for the accuracy and forecast horizon of numerical weather/climate modeling systems make these systems ever more complex, because horizontal and vertical resolutions increase and the complexity of model physics increases with them. Thus, global and regional modeling activities consume a tremendous amount of computing resources, which presents a significant challenge despite growing computing capabilities. Model ensemble systems already face a shortage of computational resources that limits the resolution and/or the number of ensemble members in these systems [5].

- Model physics is the most computationally demanding part of numerical weather/climate modeling systems. As model resolution increases, many subgrid physical processes that are currently parameterized become resolved processes and should be treated accordingly. However, the nature of these processes is not always understood well enough to describe them from first principles. Also, as model resolution increases, the scales of the subgrid processes that still have to be parameterized become smaller and smaller. Parameterizations of such processes often become more time-consuming and sometimes less accurate because the underlying physical principles may not be fully understood [4,5].

- Current numerical weather/climate modeling systems (NWCMSs) produce forecasts with steadily improving accuracy. A major part of these improvements is due to the increase in supercomputing power that has enabled higher model resolution, better physics/chemistry/biology description, and more comprehensive data assimilation [5]. Yet, the “demise of the ‘laws’ of Dennard and Moore” [6,7] indicates that this progress is unlikely to continue as the required computing power increases. Moore’s law drove the economics of computing by stating that every 18 months, the number of transistors on a chip would double at approximately equal cost. However, the cost per transistor has started to grow with the latest chip generations, indicating an end to this law. Because of these limitations, results produced by NWCMSs still contain errors of various natures, and the post-processing (PP) correction of model output errors becomes even more important [8]. Post-processing systems currently used in operational NWP practice, such as Model Output Statistics (MOS) [9], are based on linear techniques (linear regressions). However, because optimal corrections of model outputs are nonlinear, many millions of linear regressions are introduced in MOS [10,11] to correct biases of even regional fields, making such systems cumbersome and resource-consuming.
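The contrast between a linear, MOS-style correction and a nonlinear one can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the data are synthetic, the error model (a bias plus a quadratic term) is invented, and the helper names `fit_correction` and `rmse` are hypothetical. This is not the operational MOS procedure, only a single-predictor regression in its spirit.

```python
import numpy as np

def fit_correction(raw, observed, degree):
    """Least-squares polynomial that maps a raw forecast to the observation.

    degree=1 is a single linear regression (MOS-like);
    degree=2 adds one nonlinear term for comparison.
    """
    return np.polyfit(raw, observed, degree)

def rmse(a, b):
    """Root-mean-square difference between two arrays."""
    return float(np.sqrt(np.mean((a - b) ** 2)))

# Synthetic example (assumption): raw model temperature in deg C and an
# "observation" that differs from it by a bias, a quadratic term, and noise.
rng = np.random.default_rng(0)
raw = rng.uniform(-10.0, 30.0, size=500)
observed = raw - 1.5 - 0.01 * raw**2 + rng.normal(0.0, 0.5, size=500)

linear = fit_correction(raw, observed, degree=1)
quadratic = fit_correction(raw, observed, degree=2)

rmse_raw = rmse(raw, observed)
rmse_linear = rmse(np.polyval(linear, raw), observed)
rmse_quadratic = rmse(np.polyval(quadratic, raw), observed)

print(f"raw: {rmse_raw:.3f}  linear: {rmse_linear:.3f}  quadratic: {rmse_quadratic:.3f}")
```

On data like these the linear fit removes the mean bias but leaves the curved part of the error in the residual, which is one way to read the remark that many separate linear regressions are needed when the optimal correction is nonlinear.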

2. ML for NWCMSs Background

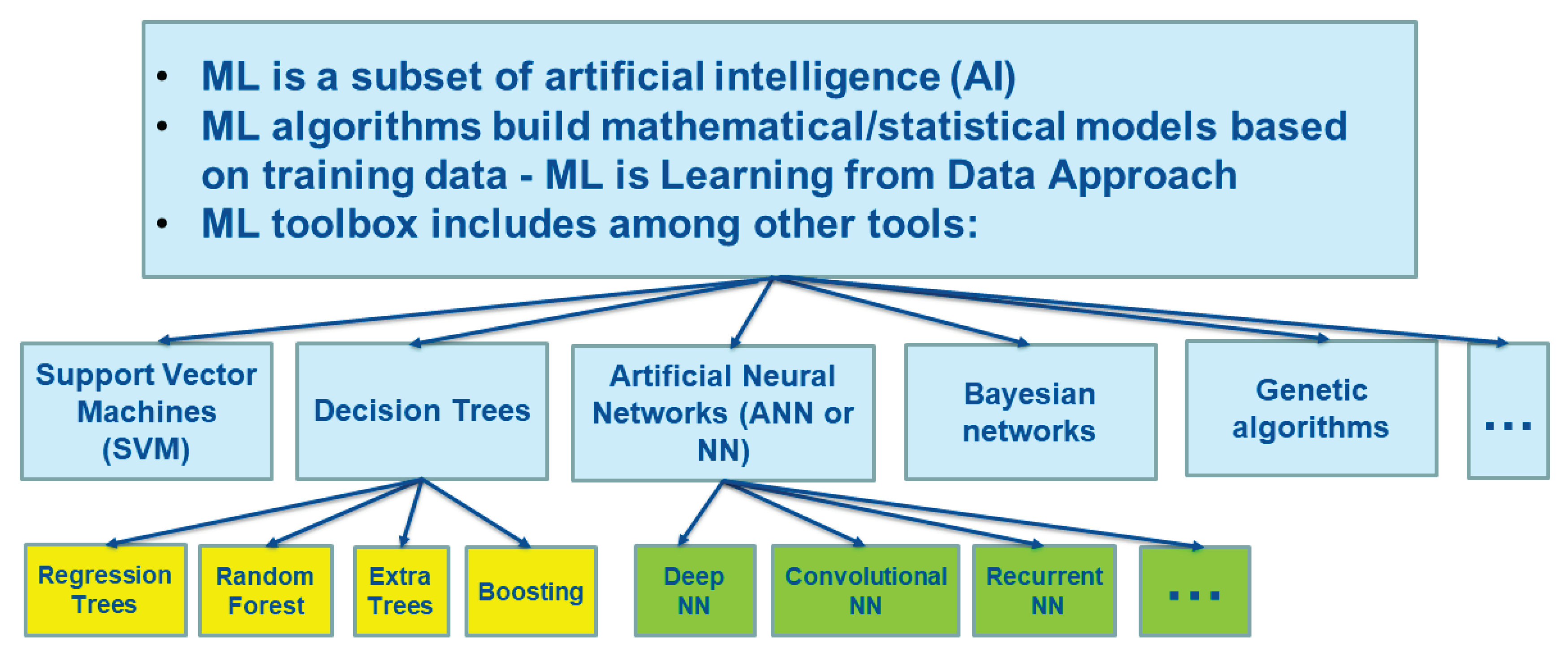

2.1. ML Tools

- Many NWCMS applications, from a mathematical point of view, may be considered as a mapping, M, that is, a relationship between two vectors or two sets of parameters X and Y: $Y = M(X)$, $X \in \mathbb{R}^n$, $Y \in \mathbb{R}^m$, where n and m are the dimensionalities of vectors X and Y, respectively.

- ML provides an all-purpose nonlinear fitting capability. Neural networks (NNs), the major ML tool used in applications, are “universal approximators” [26] for complex multidimensional nonlinear mappings [27,28,29,30,31]. Such tools can be used, and have already been used, to develop a large variety of applications for NWCMSs.
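As an illustration of an NN used as an emulator of a mapping from an n-dimensional input X to an m-dimensional output Y, the following is a minimal sketch of a one-hidden-layer network trained by plain gradient descent. The toy target mapping, the layer size, the learning rate, and the function names (`init_mlp`, `forward`, `mse`, `train`) are all assumptions chosen for the example and are not taken from any NWCMS application.

```python
import numpy as np

def init_mlp(n, k, m, rng):
    """Random weights for an n -> k -> m network with one tanh hidden layer."""
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n), (n, k)), "b1": np.zeros(k),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(k), (k, m)), "b2": np.zeros(m),
    }

def forward(p, X):
    """Return the network output and the hidden activations."""
    H = np.tanh(X @ p["W1"] + p["b1"])
    return H @ p["W2"] + p["b2"], H

def mse(p, X, Y):
    """Mean squared error over all samples and output components."""
    return float(np.mean((forward(p, X)[0] - Y) ** 2))

def train(p, X, Y, lr=0.1, steps=2000):
    """Full-batch gradient descent on the MSE; updates p in place."""
    for _ in range(steps):
        Y_hat, H = forward(p, X)
        dY = 2.0 * (Y_hat - Y) / Y.size        # d(MSE)/d(output)
        dZ = (dY @ p["W2"].T) * (1.0 - H**2)   # back through tanh
        grads = {
            "W2": H.T @ dY, "b2": dY.sum(axis=0),
            "W1": X.T @ dZ, "b1": dZ.sum(axis=0),
        }
        for key in p:
            p[key] -= lr * grads[key]
    return p

# Toy mapping (assumption) with n = 2 inputs and m = 2 outputs.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(400, 2))
Y = np.column_stack([np.sin(X[:, 0]) * X[:, 1], X[:, 0] ** 2 - X[:, 1]])

params = init_mlp(n=2, k=16, m=2, rng=rng)
initial_mse = mse(params, X, Y)
train(params, X, Y)
final_mse = mse(params, X, Y)
print(f"MSE before training: {initial_mse:.4f}, after: {final_mse:.4f}")
```

In practice an emulator would be trained on input/output pairs generated by the original scheme and validated on independent data; the sketch only shows the fitting step.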

2.2. ML for NWCMS Specifics

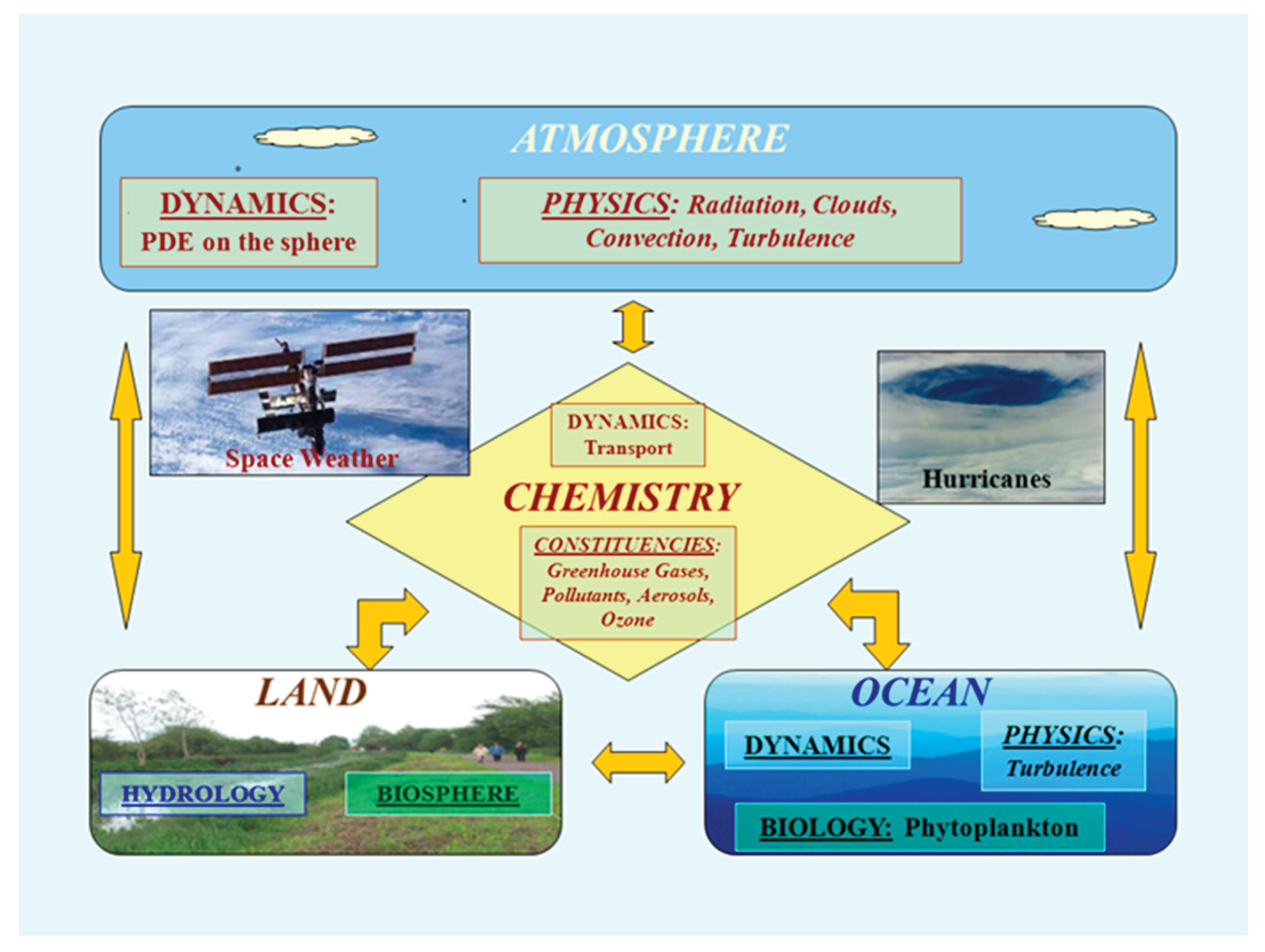

3. Climate and Weather Systems

3.1. Systems and Subsystems

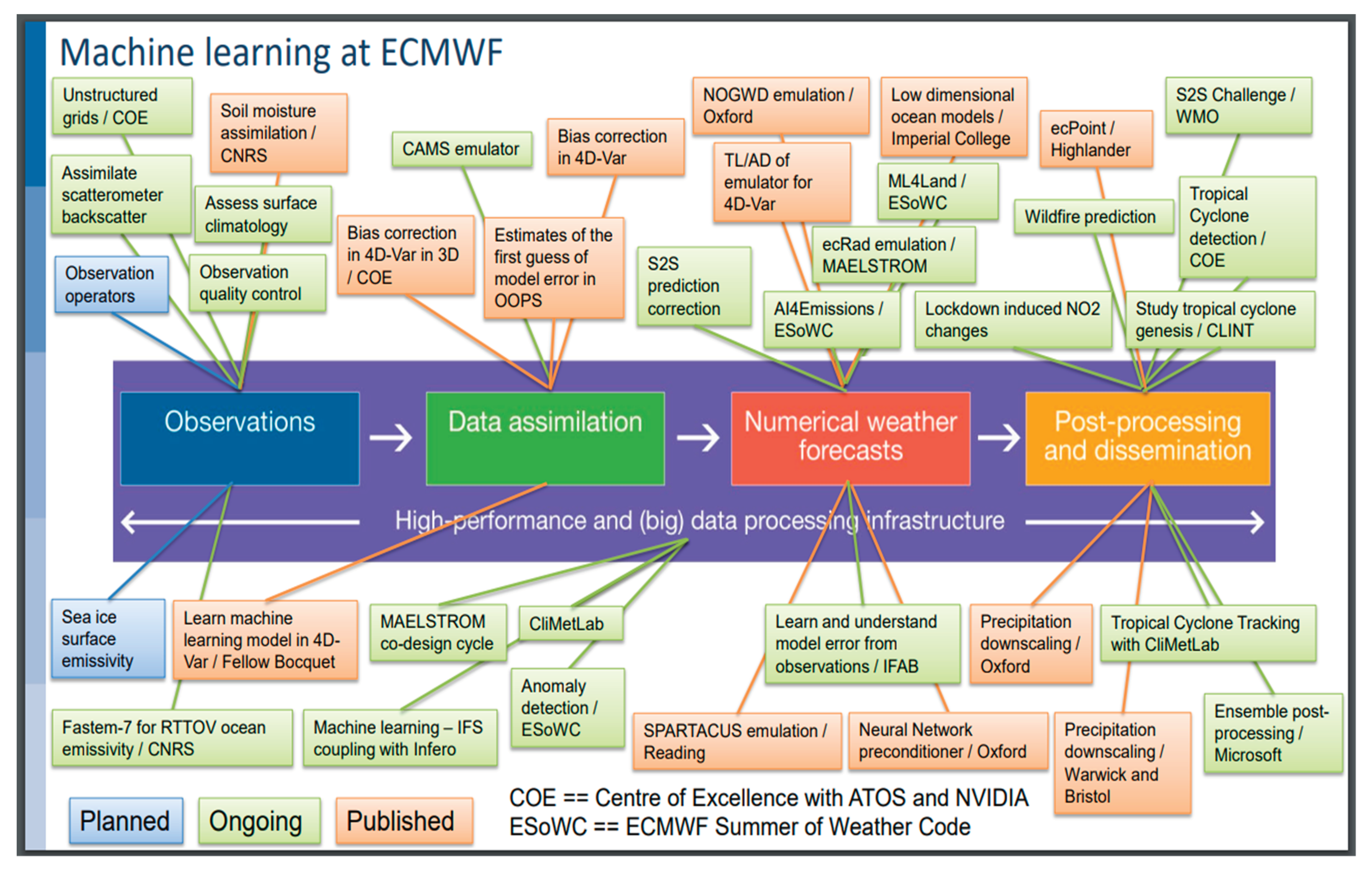

3.2. ML for NWCMS and Its Subsystems

4. Hybridization of ML with Traditional Numerical Modeling

4.1. ML for Data Assimilation

4.1.1. Fast ML Forward Models for Direct Assimilation of Satellite Measurements

4.1.2. Fast ML Observation Operators

4.1.3. Fast ML Models and Adjoints

4.1.4. Data Pre-Processing and Quality Control

4.2. ML for Model Physics

4.2.1. Fast ML Radiation

4.2.2. Fast and Better ML Microphysics

4.2.3. New ML Parameterizations

4.2.4. ML Full Physics

4.2.5. ML Weather and Climate Models

4.2.6. ML Stochastic Physics

4.2.7. ML Model Chemistry

4.3. ML for Post-Processing

5. Conclusions

References

- Meadows, D.H. Thinking in Systems: A Primer. Chelsea Green Publishing Co., Vermont, USA, 2008.

- Uccellini, L. W.; Spinrad, R. W.; Koch, D. M.; McLean, C. N.; Lapenta, W. M. EPIC as a Catalyst for NOAA’s Future Earth Prediction System. BAMS 2022, 103, E2246–E2264. [Google Scholar] [CrossRef]

- Zhu, Y.; Fu, B.; Guan, H.; Sinsky, E.; Yang, B. The Development of UFS Coupled GEFS for Subseasonal and Seasonal Forecasts. NOAA’s 47th Climate Diagnostics and Prediction Workshop Special Issue 2022, pp. 88. [CrossRef]

- Boukabara, S.-A.; Krasnopolsky, V.; Stewart, J. Q.; Maddy, E. S.; Shahroudi, N.; Hoffman, R. N. Leveraging modern artificial intelligence for remote sensing and NWP: Benefits and challenges. BAMS 2019, 100(12), ES473–ES491. [Google Scholar] [CrossRef]

- Bauer, P.; Thorpe, A.; Brunet, G. The quiet revolution of numerical weather prediction. Nature 2015, 525, 47–55. [Google Scholar] [CrossRef] [PubMed]

- Bauer, P.; Dueben, P.D.; Hoefler, T.; et al. The digital revolution of Earth-system science. Nat Comput Sci 2021, 1, 104–113. [Google Scholar] [CrossRef] [PubMed]

- Khan, H.N.; Hounshell, D.A.; Fuchs, E.R.H. Science and research policy at the end of Moore’s law. Nat Electron 2018, 1, 14–21. [Google Scholar] [CrossRef]

- Haupt, S. E.; et al. Towards implementing artificial intelligence post-processing in weather and climate: proposed actions from the Oxford 2019 workshop. Phil. Trans. R. Soc. A 2021, 379, 20200091. [CrossRef]

- Carter, G.M.; Dallavalle, J.P.; Glahn, H.R. Statistical forecasts based on the National Meteorological Center's numerical weather prediction system. Weather Forecast. 1989, 4, 401–412. [Google Scholar] [CrossRef]

- Gneiting, T.; Raftery, A.E.; Westveld, A.H.; Goldman, T. Calibrated probabilistic forecasting using ensemble model output statistics and minimum CRPS estimation. Mon. Wea. Rev. 2005, 133, 1098–1118. [Google Scholar] [CrossRef]

- Wilks, D.S.; Hamill, T.M. Comparison of ensemble-MOS methods using GFS reforecasts. Mon. Wea. Rev. 2007, 135, 2379–2390. [Google Scholar] [CrossRef]

- Christensen, H.; Zanna, L. Parametrization in Weather and Climate Models. Oxford Research Encyclopedias 2022. [Google Scholar] [CrossRef]

- Dueben, P.; Bauer, P.; Adams, S. Deep Learning to Improve Weather Predictions. In Deep Learning for the Earth Sciences: A Comprehensive Approach to Remote Sensing, Climate Science, and Geosciences; Eds.: Camps-Valls, G., Tuia, D., Zhu, X.X., Reichstein, M.; John Wiley & Sons Ltd., 2021. [CrossRef]

- Hsieh, W.W. Introduction to environmental data science. Cambridge University Press, Cambridge, USA, 2023; ISBN: 978-1-107-06555-0.

- Krasnopolsky, V. The Application of Neural Networks in the Earth System Sciences. Neural Network Emulations for Complex Multidimensional Mappings; Atmospheric and Oceanic Science Library. (Vol. 46). Springer, Dordrecht, Heidelberg, New York, London, 2013. [CrossRef]

- Dueben, P. D.; Bauer, P. Challenges and design choices for global weather and climate models based on machine learning. Geosci. Model Dev. 2018, 11(10), 3999–4009. [Google Scholar] [CrossRef]

- Bishop, C. M. Pattern Recognition and Machine Learning; Springer, New York. ISBN 0-387-31073-8, 2006.

- Cherkassky, V.; Mulier, F. Learning from Data: Concepts, Theory, and Methods; Wiley, USA, Hoboken, NJ, 1998.

- Chevallier, F.; Morcrette, J.-J.; Chéruy, F.; Scott, N. A. Use of a neural-network-based longwave radiative transfer scheme in the ECMWF atmospheric model. Quarterly Journal of the Royal Meteorological Society 2000, 126, 761–776. [Google Scholar]

- Krasnopolsky, V. M.; Fox-Rabinovitz, M. S.; Chalikov, D. V. New Approach to Calculation of Atmospheric Model Physics: Accurate and Fast Neural Network Emulation of Long Wave Radiation in a Climate Model. Monthly Weather Review 2005, 133, 1370–1383. [Google Scholar] [CrossRef]

- Rasp, S.; Pritchard, M. S.; Gentine, P. Deep learning to represent subgrid processes in climate models. PNAS 2018.

- Brenowitz, N. D.; et al. Emulating Fast Processes in Climate Models. arXiv:2211.10774 [physics.ao-ph] 2022. [CrossRef]

- Geer, A.J. Learning Earth System Models from Observations: Machine Learning or Data Assimilation? Phil. Trans. R. Soc. A 2020, 379, 20200089. [Google Scholar] [CrossRef]

- Belochitski, A.; Binev, P.; DeVore, R.; Fox-Rabinovitz, M.; Krasnopolsky, V.; Lamby, P. Tree Approximation of the Long Wave Radiation Parameterization in the NCAR CAM Global Climate Model. Journal of Computational and Applied Mathematics 2011, 236, 447–460. [Google Scholar] [CrossRef]

- O’Gorman, P. A.; Dwyer, J. G. Using machine learning to parameterize moist convection: potential for modeling of climate, climate change and extreme events. Journal of Advances in Modeling Earth Systems 2018. [CrossRef]

- Hornik, K. Approximation Capabilities of Multilayer Feedforward Network. Neural Networks 1991, 4, 251–257. [Google Scholar] [CrossRef]

- Vapnik, V. N. The nature of statistical learning theory; Springer, New York, USA, 1995, p. 189.

- Vapnik, V. N.; Kotz, S. Estimation of Dependences Based on Empirical Data (Information Science and Statistics); Springer, USA, New York, 2006.

- Vapnik, V. N. Complete Statistical Theory of Learning, Automation and Remote Control 2019, 80(11), 1949–1975.

- Cybenko, G. Approximation by superposition of sigmoidal functions. Mathematics of Control, Signals, and Systems 1989, 2, 303–314. [Google Scholar]

- Funahashi, K. On the approximate realization of continuous mappings by neural networks. Neural Networks 1989, 2, 183–192. [Google Scholar] [CrossRef]

- Chen, T.; Chen, H. Universal approximation to nonlinear operators by neural networks with arbitrary activation function and its application to dynamical systems. Neural Networks 1995, 6, 911–917. [Google Scholar] [CrossRef] [PubMed]

- Attali, J.-G.; Pagès, G. Approximations of functions by a multilayer perceptron: A new approach. Neural Networks 1997, 6, 1069–1081. [Google Scholar] [CrossRef] [PubMed]

- Breiman, L. Random forests. Machine Learning 2001, 45, 5–32.

- Rudin, C.; Chen, Ch.; Chen, Zh.; Huang, H.; Semenova, L.; Zhong, Ch. Interpretable machine learning: Fundamental principles and 10 grand challenges. Statist. Surv. 2022, 16, 1–85. [Google Scholar] [CrossRef]

- Ahl, V.; Allen, T.F.H. Hierarchy Theory: A Vision, Vocabulary, and Epistemology; Columbia University Press, USA, New York, 1996.

- Salthe, S.N. Evolving Hierarchical Systems Their Structure and Representation; Columbia University Press, USA, New York, 1985.

- Krasnopolsky, V.; Belochitski, A. Using Machine Learning for Model Physics: An Overview. arXiv 2022. [Google Scholar]

- Campos, R.M.; Krasnopolsky, V. ; Alves, J-H.; Penny, S. G. Improving NCEP’s global-scale wave ensemble averages using neural networks. Ocean Modelling. [CrossRef]

- Düben, P.; et al. Machine learning at ECMWF: A roadmap for the next 10 years. ECMWF Technical Memorandum 2021, 878. https://www.ecmwf.int/sites/default/files/elibrary/2021/19877-machine-learningecmwf-roadmap-next-10-years.pdf.

- Krasnopolsky, V. M.; Fox-Rabinovitz, M. S. Complex Hybrid Models Combining Deterministic and Machine Learning Components for Numerical Climate Modeling and Weather Prediction. Neural Networks 2006, 19, 122–134. [Google Scholar] [CrossRef]

- Brenowitz, N. D.; Bretherton, C. S. Prognostic Validation of a Neural Network Unified Physics Parameterization. Geophysical Research Letters 2018, 45, 6289–6298. [CrossRef]

- Dong, R.; Leng, H.; Zhao, J.; Song, J.; Liang, S. A Framework for Four-Dimensional Variational Data Assimilation Based on Machine Learning. Entropy 2022, 24, 264. [Google Scholar] [CrossRef]

- Bauer, P.; Geer, A. J.; Lopez, Ph.; Salmond, D. Direct 4D-Var assimilation of all-sky radiances. Part I: Implementation. Quart. J. Roy. Meteor. Soc. 2010, 136, 1868–1885. [CrossRef]

- Mellor, G. L.; Ezer, T. A Gulf Stream model and an altimetry assimilation scheme. JGR 1991, 96C, 8779–8795. [CrossRef]

- Guinehut, S.; Le Traon, P.Y.; Larnicol, G.; Philipps, S. Combining Argo and remote-sensing data to estimate the ocean three-dimensional temperature fields—a first approach based on simulated observations. Journal of Marine Systems 2004, 46, 85–98. [Google Scholar] [CrossRef]

- Krasnopolsky, V.; Nadiga, S.; Mehra, A.; Bayler, E. Adjusting Neural Network to a Particular Problem: Neural Network-based Empirical Biological Model for Chlorophyll Concentration in the Upper Ocean. Applied Computational Intelligence and Soft Computing 2018, 2018, Article ID 7057363, 10 pp. [CrossRef]

- Cheng, S.; Chen, J.; Anastasiou, C. Generalized Latent Assimilation in Heterogeneous Reduced Spaces with Machine Learning Surrogate Models. J Sci Comput 2023, 94, 11. [Google Scholar] [CrossRef]

- Hatfield, S.; Chantry, M.; Dueben, P.; Lopez, P.; Geer, A.; Palmer, T. Building Tangent-Linear and Adjoint Models for Data Assimilation With Neural Networks. Journal of Advances in Modeling Earth Systems 2021, 13. [Google Scholar] [CrossRef]

- Maulik, R.; Rao, V.; Wang, J.; Mengaldo, G.; Constantinescu, E.; Lusch, B.; Balaprakash, P.; Foster, I.; Kotamarthi, R. Efficient high-dimensional variational data assimilation with machine-learned reduced-order models. Geosci. Model Dev. 2022, 15, 3433–3445. [Google Scholar] [CrossRef]

- Krasnopolsky, V.M. Reducing Uncertainties in Neural Network Jacobians and Improving Accuracy of Neural Network Emulations with NN Ensemble Approaches. Neural Networks. [CrossRef]

- Geer, A. J.; et al. All-sky satellite data assimilation at operational weather forecasting centres. Quart. J. Roy. Meteor. Soc. 2017, 144, 1191–1217. [Google Scholar] [CrossRef]

- Krasnopolsky, V. M.; Fox-Rabinovitz, M. S.; Belochitski, A. Decadal climate simulations using accurate and fast neural network emulation of full, long- and short-wave, radiation. Monthly Weather Review 2008, 136, 3683–3695. [Google Scholar] [CrossRef]

- Krasnopolsky, V. M.; Fox-Rabinovitz, M. S.; Hou, Y. T.; Lord, S. J.; Belochitski, A. A. Accurate and Fast Neural Network Emulations of Model Radiation for the NCEP Coupled Climate Forecast System: Climate Simulations and Seasonal Predictions. Monthly Weather Review 2010, 138, 1822–1842. [CrossRef]

- Krasnopolsky, V.; Belochitski, A.; Hou, Y.-T.; Lord, S.; Yang, F. Accurate and Fast Neural Network Emulations of Long and Short Wave Radiation for the NCEP Global Forecast System Model. NCEP Office Note 2012, 471. http://www.lib.ncep.noaa.gov/ncepofficenotes/files/on471.pdf.

- Pal, A.; Mahajan, S.; Norman, M. R. Using deep neural networks as cost-effective surrogate models for Super-Parameterized E3SM radiative transfer. Geophysical Research Letters 2019, 46, 6069–6079. [Google Scholar] [CrossRef]

- Roh, S.; Song, H.-J. Evaluation of neural network emulations for radiation parameterization in cloud resolving model. Geophysical Research Letters 2020, 47, e2020GL089444. [Google Scholar] [CrossRef]

- Ukkonen, P.; Pincus, R.; Hogan, R. J.; Nielsen, K. P.; Kaas, E. Accelerating radiation computations for dynamical models with targeted machine learning and code optimization. Journal of Advances in Modeling Earth Systems 2020, 12, e2020MS002226. [Google Scholar] [CrossRef]

- Lagerquist, R.; Turner, D.; Ebert-Uphoff, I.; Stewart, J.; Hagerty, V. Using deep learning to emulate and accelerate a radiative-transfer model. Journal of Atmospheric and Oceanic Technology 2021. [Google Scholar] [CrossRef]

- Belochitski, A.; Krasnopolsky, V. Robustness of neural network emulations of radiative transfer parameterizations in a state-of-the-art general circulation model. Geosci. Model Dev. 2021, 14, 7425–7437. [Google Scholar] [CrossRef]

- Khain, A.; Rosenfeld, D.; Pokrovsky, A. Aerosol impact on the dynamics and microphysics of deep convective clouds. Quarterly Journal of the Royal Meteorological Society 2005, 131, 2639–2663.

- Thompson, G.; Field, P. R.; Rasmussen, R. M.; Hall, W. D. Explicit Forecasts of Winter Precipitation Using an Improved Bulk Microphysics Scheme. Part II: Implementation of a New Snow Parameterization. Monthly Weather Review 2008, 136, 5095–5115.

- Krasnopolsky, V.; Middlecoff, J.; Beck, J.; Geresdi, I.; Toth, Z. A Neural Network Emulator for Microphysics Schemes. In Proceedings of the AMS annual meeting, US, Seattle, January 22–26, 2017. https://ams.confex.com/ams/97Annual/webprogram/Paper310969.html.

- Jensen, A.; Weeks, C.; Xu, M.; Landolt, S.; Korolev, A.; Wolde, M.; DiVito, S. The prediction of supercooled large drops by a microphysics and a machine-learning model for the ICICLE field campaign. Weather and Forecasting 2023. [Google Scholar] [CrossRef]

- Krasnopolsky, V. M.; Fox-Rabinovitz, M. S.; Belochitski, A. A. Using Ensemble of Neural Networks to Learn Stochastic Convection Parameterization for Climate and Numerical Weather Prediction Models from Data Simulated by Cloud Resolving Model. Advances in Artificial Neural Systems 2013, Article ID 485913, 13 pages. [CrossRef]

- Schneider, T.; Lan, S.; Stuart, A.; Teixeira, J. Earth System Modeling 2.0: A Blueprint for Models That Learn From Observations and Targeted High-Resolution Simulations. Geophysical Research Letters 2017, 44, 12,396–12,417. [CrossRef]

- Gentine, P.; Pritchard, M.; Rasp, S.; Reinaudi, G.; Yacalis, G. Could Machine Learning Break the Convection Parameterization Deadlock? Geophysical Research Letters 2018, 45, 5742–5751. [Google Scholar] [CrossRef]

- Pal, A. Deep Learning Emulation of Subgrid-Scale Processes in Turbulent Shear Flows. Geophysical Research Letters 2020, 47, e2020GL087005. [CrossRef]

- Wang, Le-Yi.; Tan, Zhe-Min. Deep Learning Parameterization of the Tropical Cyclone Boundary Layer. Journal of Advances in Modeling Earth Systems 2023, 15, Issue 1. [CrossRef]

- Krasnopolsky, V. M.; Lord, S. J.; Moorthi, S.; Spindler, T. How to Deal with Inhomogeneous Outputs and High Dimensionality of Neural Network Emulations of Model Physics in Numerical Climate and Weather Prediction Models. Proceedings of International Joint Conference on Neural Networks, Atlanta, Georgia, USA, 2009, June 14-19; pp. 1668–1673.

- Wang, X.; Han, Y.; Xue, W.; Yang, G.; Zhang, G. J. Stable climate simulations using a realistic general circulation model with neural network parameterizations for atmospheric moist physics and radiation processes. Geosci. Model Dev. 2022, 15, 3923–3940. [Google Scholar] [CrossRef]

- Scher, S. Toward data-driven weather and climate forecasting: Approximating a simple general circulation model with deep learning. Geophysical Research Letters, 12. [CrossRef]

- Scher, S.; Messori, G. Weather and climate forecasting with neural networks: using GCMs with different complexity as study-ground. Geosci. Model Dev. Discuss. 2019. [CrossRef]

- Yik, W.; Silva, S.J.; Geiss, A.; Watson-Parris, D. Exploring Randomly Wired Neural Networks for Climate Model Emulation. arXiv:2212.03369v2 [physics.ao-ph] 2022. https://arxiv.org/pdf/2212.03369.pdf.

- Pawar, S.; San, O. Equation-Free Surrogate Modeling of Geophysical Flows at the Intersection of Machine Learning and Data Assimilation. Journal of Advances in Modeling Earth Systems 2022, 14, Issue 11. [CrossRef]

- Schultz, M.G.; Betancourt, C.; Gong, B.; Kleinert, F.; Langguth, M.; Leufen, L.H.; Mozaffari, A.; Stadtler, S. Can deep learning beat numerical weather prediction? Phil. Trans. R. Soc. A 2021, 379, 20200097. [CrossRef]

- Bi, K.; Xie, L.; Zhang, H.; et al. Accurate medium-range global weather forecasting with 3D neural networks. Nature 2023, 619, 533–538. [Google Scholar] [CrossRef]

- Kochkov, D. Neural general circulation models. arXiv 2023, arXiv:2311.07222.

- Krasnopolsky, V. M.; Fox-Rabinovitz, M. S.; Belochitski, A. Using neural network emulations of model physics in numerical model ensembles. Paper presented at 2008 IEEE International Joint Conference on Neural Networks, Hong Kong, China (2008 IEEE World Congress on Computational Intelligence). [Google Scholar] [CrossRef]

- Kelp, M. M.; Tessum, C. W.; Marshall, J. D. Orders-of-magnitude speedup in atmospheric chemistry modeling through neural network-based emulation. arXiv 2018. [Google Scholar]

- Schreck, J. S.; Becker, C.; Gagne, D. J.; Lawrence, K.; Wang, S.; Mouchel-Vallon, C.; et al. Neural network emulation of the formation of organic aerosols based on the explicit GECKO-A chemistry model. Journal of Advances in Modeling Earth Systems 2022, 14, e2021MS002974. [Google Scholar] [CrossRef]

- Sharma, H.; Shrivastava, M.; Singh, B. Physics informed deep neural network embedded in a chemical transport model for the Amazon rainforest. Climate and Atmospheric Science 2023, 6, 28. [Google Scholar] [CrossRef]

- Geiss, A.; Ma, P.-L.; Singh, B.; Hardin, J. C. Emulating aerosol optics with randomly generated neural networks. Geosci. Model Dev. 2023, 16, 2355–2370. [Google Scholar] [CrossRef]

- et al. Statistical Postprocessing for Weather Forecasts: Review, Challenges, and Avenues in a Big Data World. BAMS 2021, E681. [Google Scholar] [CrossRef]

- Klein, W. H.; Lewis, B. M.; Enger, I. Objective prediction of five-day mean temperatures during winter. J. Meteor. 1959, 16, 672–682. [Google Scholar] [CrossRef]

- Glahn, H.R.; Lowry, D.A. The use of model output statistics (MOS) in objective weather forecasting. J. Appl. Meteor. 1972, 11, 1203–1211. [Google Scholar] [CrossRef]

- Hemri, S.; Scheuerer, M.; Pappenberger, F.; Bogner, K.; Haiden, T. Trends in the predictive performance of raw ensemble weather forecasts. Geophys. Res. Lett. 2014, 41, 9197–9205. [Google Scholar] [CrossRef]

- Grönquist, P.; et al. Deep learning for post-processing ensemble weather forecasts. Phil. Trans. R. Soc. A 2021, 379. [Google Scholar] [CrossRef]

- Bouallègue, Z. B.; Cooper, F.; Chantry, M.; Düben, P.; Bechtold, P.; Sandu, I. Statistical Modelling of 2m Temperature and 10m Wind Speed Forecast Errors. Monthly Weather Review 2023, 151, 897–911. [Google Scholar] [CrossRef]

- Rojas-Campos, R.; Wittenbrink, M.; Nieters, P.; Schaffernicht, E. J.; Keller, J. D.; Pipa, G. Postprocessing of NWP Precipitation Forecasts Using Deep Learning. Weather and Forecasting 2023, 38, 487–497. [Google Scholar] [CrossRef]

- Benáček, P.; Farda, A.; Štěpánek, P. Postprocessing of Ensemble Weather Forecast Using Decision Tree–Based Probabilistic Forecasting Methods. Weather and Forecasting 2023, 38, 69–82. [Google Scholar] [CrossRef]

- Krasnopolsky, V.; Lin, Y. A Neural Network Nonlinear Multimodel Ensemble to Improve Precipitation Forecasts over Continental US. Advances in Meteorology 2012, Article ID 649450, 11 pages. [CrossRef]

- Wang, T.; Zhang, Y.; Zhi, X.; Ji, Y. Multi-Model Ensemble Forecasts of Surface Air Temperatures in Henan Province Based on Machine Learning. Atmosphere 2023, 14, 520. [Google Scholar] [CrossRef]

- Acharya, N.; Hall, K. A machine learning approach for probabilistic multi-model ensemble predictions of Indian summer monsoon rainfall. MAUSAM 2023, 74, 421–428. https://mausamjournal.imd.gov.in/index.php/MAUSAM/article/view/5997.

- Rodrigues, E.R.; Oliveira, I.; Cunha, R.; Netto, M. DeepDownscale: A deep learning strategy for high-resolution weather forecast. In Proceedings of the 2018 IEEE 14th International Conference on e-Science (e-Science), Amsterdam, The Netherlands, 29 October–1 November 2018; pp. 415–422. [Google Scholar]

- Li, L. Geographically weighted machine learning and downscaling for high-resolution spatiotemporal estimations of wind speed. Remote Sens. 2019, 11, 1378. [Google Scholar] [CrossRef]

- Höhlein, K.; Kern, M.; Hewson, T.; Westermann, R. A comparative study of convolutional neural network models for wind field downscaling. Meteorol. Appl. 2020, 27, e1961. [Google Scholar] [CrossRef]

- Sekiyama, T.T. Statistical Downscaling of Temperature Distributions from the Synoptic Scale to the Mesoscale Using Deep Convolutional Neural Networks. arXiv 2020, arXiv:2007.10839.

- Sebbar, B.-e.; Khabba, S.; Merlin, O.; Simonneaux, V.; Hachimi, C.E.; Kharrou, M.H.; Chehbouni, A. Machine-Learning-Based Downscaling of Hourly ERA5-Land Air Temperature over Mountainous Regions. Atmosphere 2023, 14, 610. [Google Scholar] [CrossRef]

- Agrawal, S.; Carver, R.; Gazen, C.; Maddy, E.; Krasnopolsky, V.; Bromberg, C.; Ontiveros, Z.; Russell, T.; Hickey, J.; Boukabara, S. A Machine Learning Outlook: Post-processing of Global Medium-range Forecasts. arXiv 2023, arXiv:2303.16301. [Google Scholar] [CrossRef]

- Krasnopolsky, V.M.; Fox-Rabinovitz, M.S.; Tolman, H.L.; Belochitski, A. Neural network approach for robust and fast calculation of physical processes in numerical environmental models: Compound parameterization with a quality control of larger errors. Neural Networks 2008, 21, 535–543. [Google Scholar] [CrossRef] [PubMed]

- Poggio, T.; Banburski, A.; Liao, Q. Theoretical issues in deep networks. PNAS 2020, 117(48), 30039–30045. [CrossRef]

- Thompson, N.C.; Greenewald, K.; Lee, K.; Manso, G.F. The Computational Limits of Deep Learning. arXiv 2020, arXiv:2007.05558. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).