Submitted: 17 September 2024

Posted: 21 September 2024


Abstract

Keywords:

1. Introduction

2. Structure for Knowledge

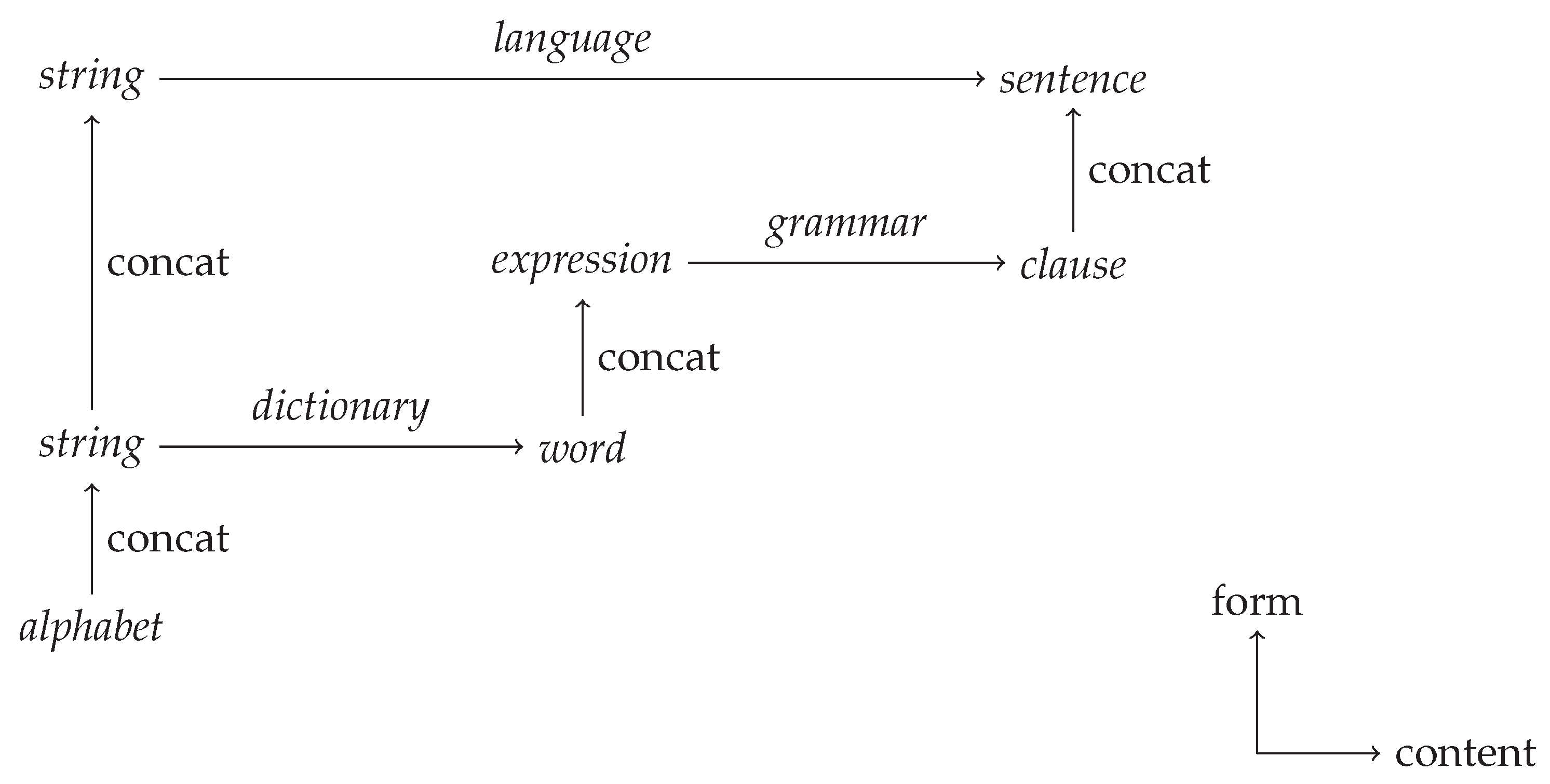

2.1. Language and Communication

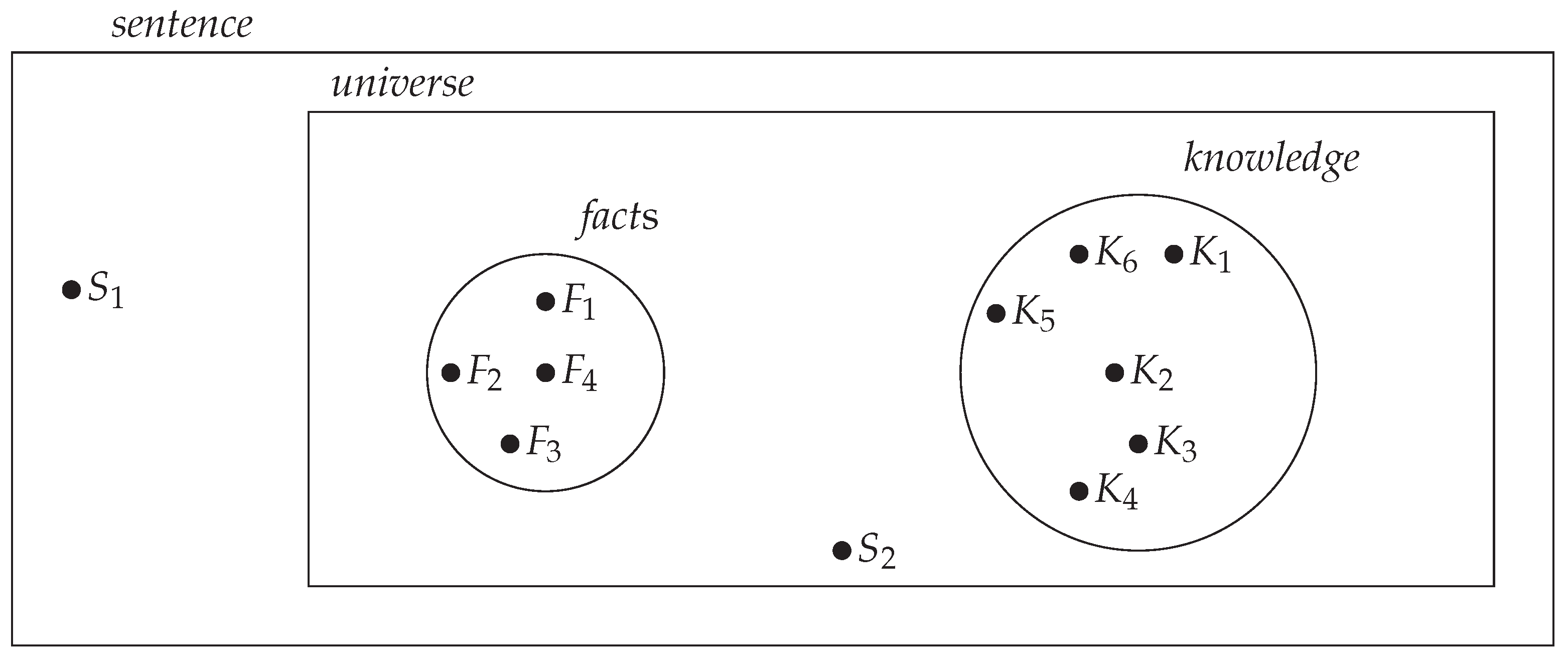

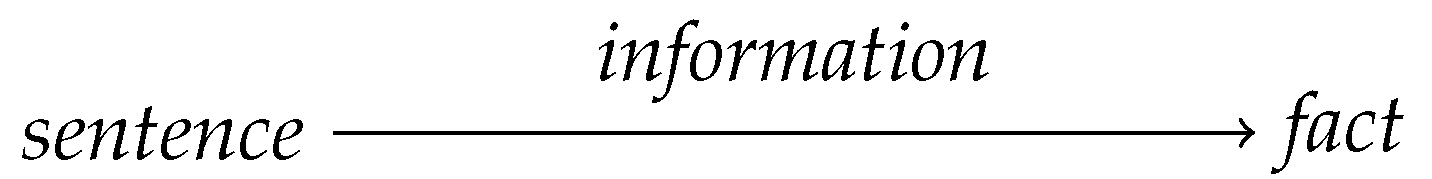

2.2. Fact

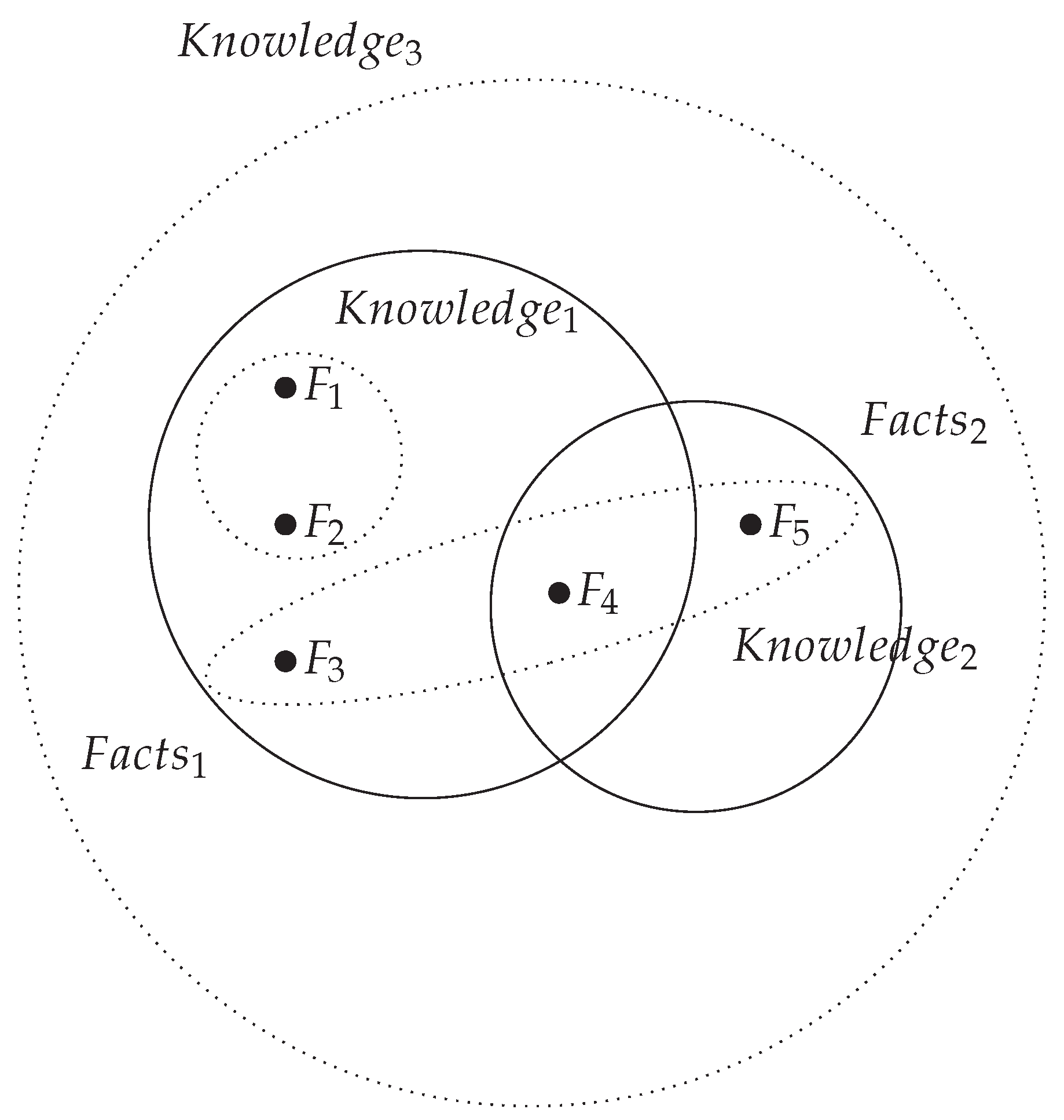

2.3. Knowledge

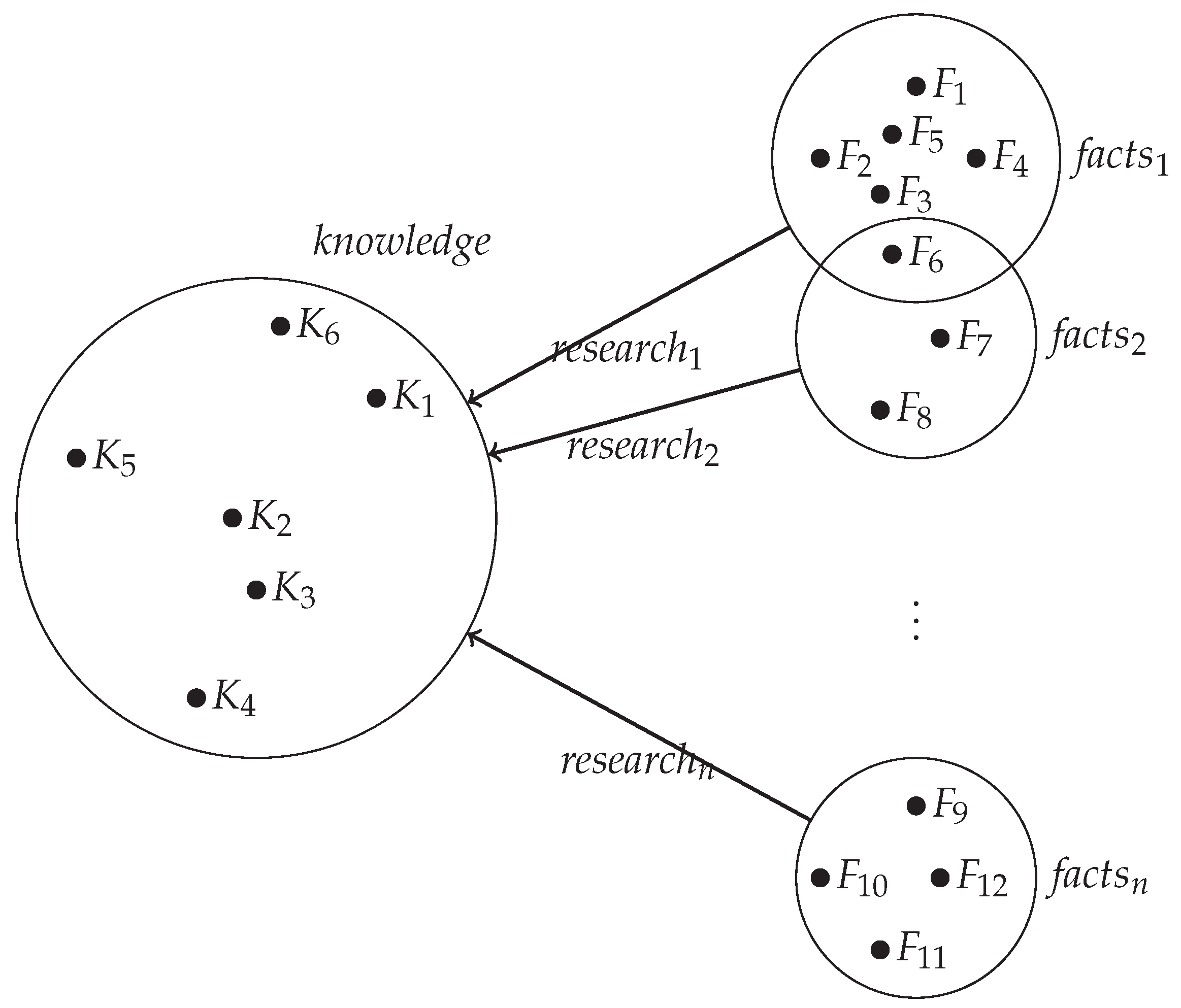

2.4. Research

2.4.1. Scientific Research

3. Requirements for Knowledge

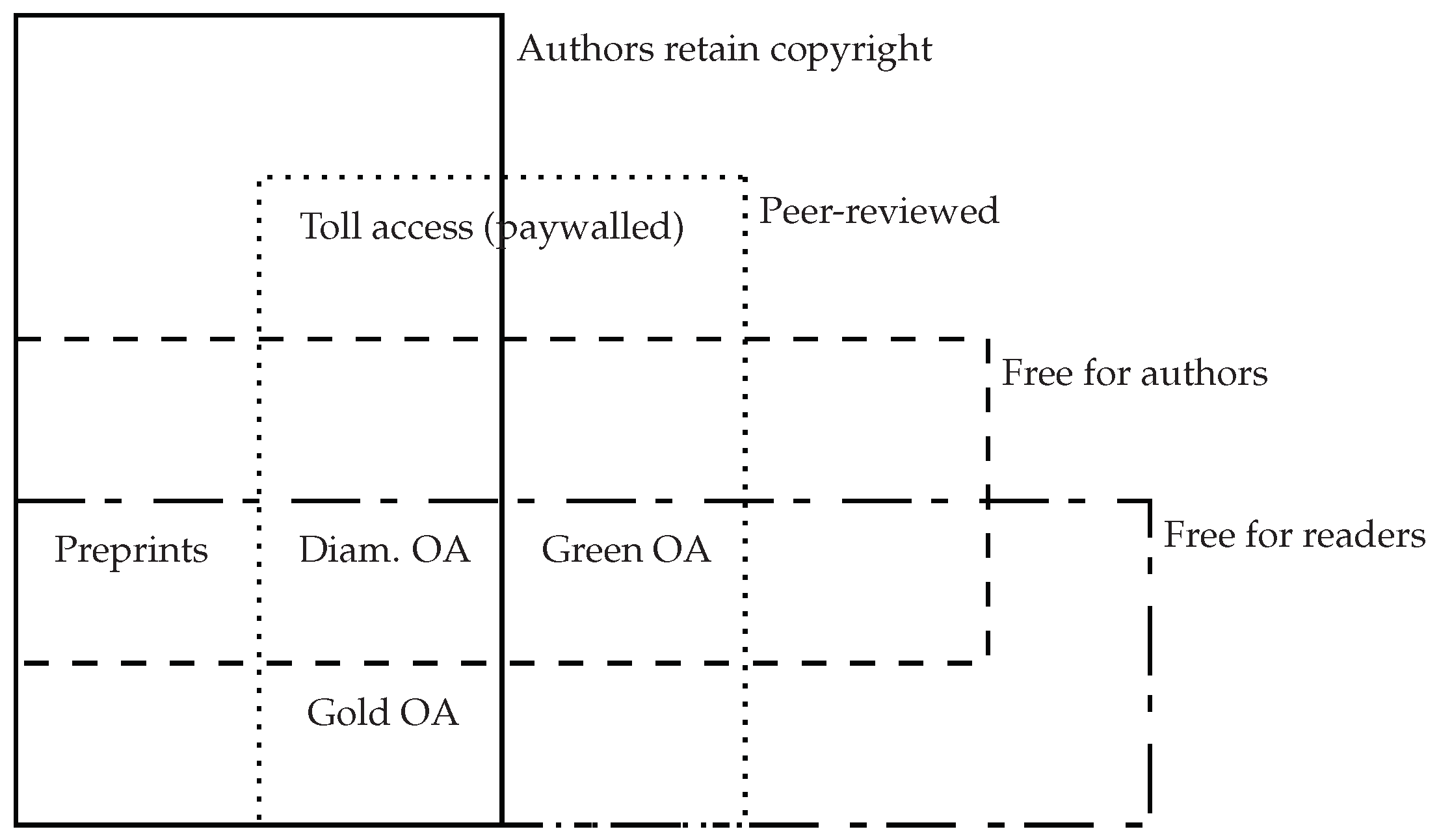

3.1. Open

3.2. Free

3.3. Tidy

4. Review of Knowledge and Cognition

4.1. Crucial Experiments

4.2. Acquisition

5. Knowmatic

5.1. Connecting Knowledge

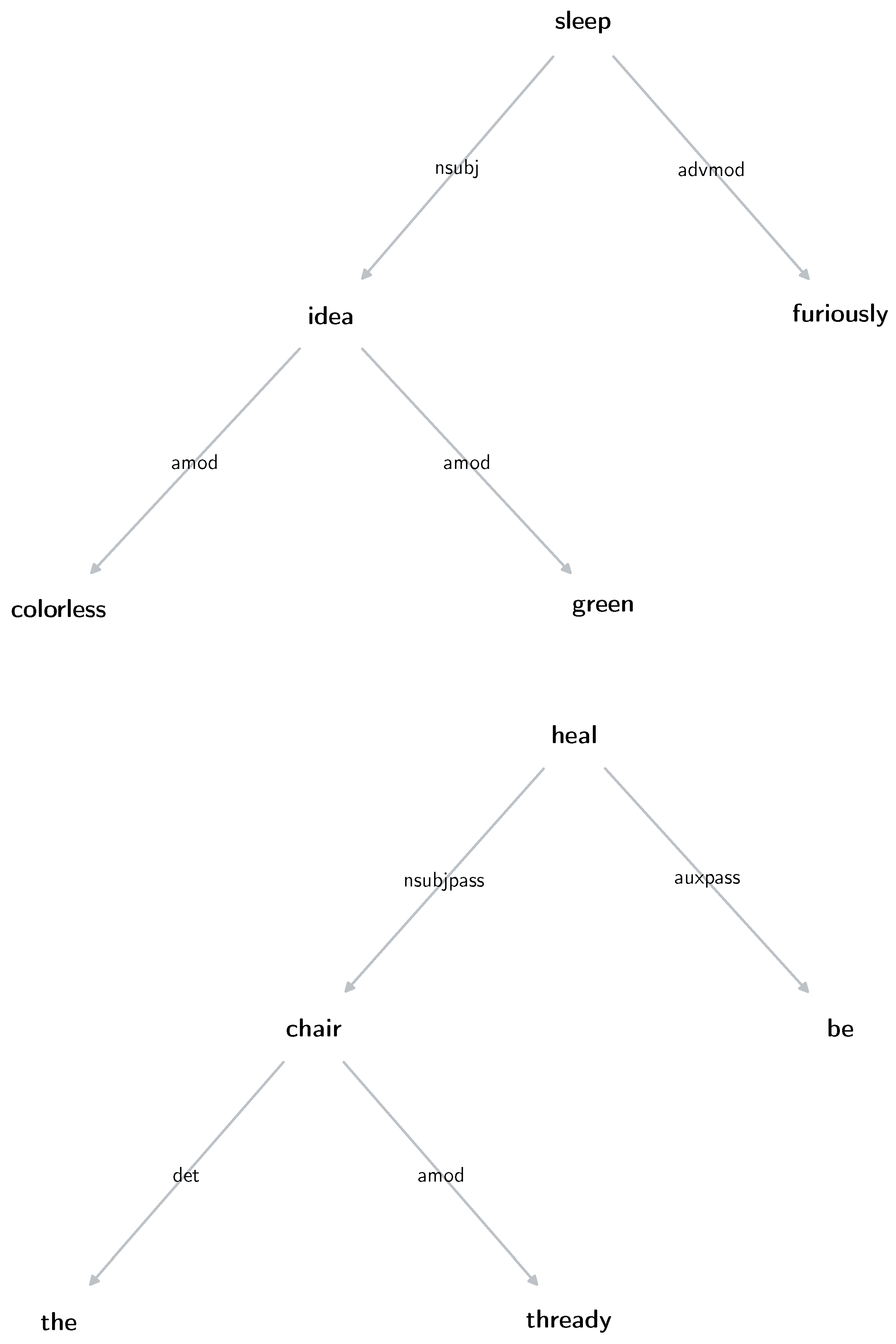

- is self-evident since therefore it has no further structure other than the concatenation of the symbols e, l, p and s that compose it. The process of destructuring could continue as far as the level of the alphabet rather than stopping at the dictionary, but here there is no point in going further. , and are self-evident for the same reason.

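One way to picture the destructuring described above, where a text is broken into dictionary entries and, if desired, each entry into the alphabet symbols whose concatenation composes it, is the minimal Python sketch below. It is an illustration under assumptions not stated in the source (whitespace-separated words, a dictionary given as a plain set of strings, single-character symbols), and the function names `destructure` and `is_concatenation_of` are hypothetical.

```python
from __future__ import annotations

# Hypothetical sketch of the destructuring step. Assumptions not taken from
# the source: words are separated by whitespace, the dictionary is a plain
# set of strings, and the alphabet is a set of single-character symbols.


def destructure(text: str, dictionary: set[str], to_alphabet: bool = False) -> list:
    """Split `text` into dictionary entries.

    By default the process stops at the dictionary level and each entry is
    kept whole. With to_alphabet=True, each entry is further split into the
    sequence of symbols whose concatenation composes it.
    """
    parts = []
    for word in text.split():
        if word not in dictionary:
            raise ValueError(f"{word!r} is not in the dictionary")
        parts.append(list(word) if to_alphabet else word)
    return parts


def is_concatenation_of(word: str, alphabet: set[str]) -> bool:
    """True if `word` is a non-empty concatenation of symbols from `alphabet`."""
    return len(word) > 0 and all(symbol in alphabet for symbol in word)


if __name__ == "__main__":
    lexicon = {"the", "cat", "sleeps"}  # illustrative dictionary
    print(destructure("the cat sleeps", lexicon))
    # ['the', 'cat', 'sleeps']
    print(destructure("the cat", lexicon, to_alphabet=True))
    # [['t', 'h', 'e'], ['c', 'a', 't']]
```

Stopping at the dictionary level is the default, mirroring the choice in the text not to continue down to the alphabet; the `to_alphabet` flag only shows that the same procedure can be pushed one level further.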

- advmod: adverbial modifier. Adverb modifying a verb, adjective, or adverb.

- amod: adjectival modifier. Adjective modifying a noun.

- auxpass: passive auxiliary verb. Auxiliary used in passive voice.

- det: determiner. Introduces a noun and provides reference information.

- nsubj: nominal subject. Noun or pronoun performing the action of the verb.

- nsubjpass: nominal subject in passive voice. Subject of a verb in the passive voice.
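To make these labels concrete, the Python sketch below stores two short sentences as labelled arcs from a head word to its dependent and queries them. The parses are annotated by hand for illustration (they are not the output of any particular parser), and the names `Arc`, `dependents` and `subject` are hypothetical. The `subject` query shows why `nsubj` and `nsubjpass` are kept distinct: both identify the subject of a verb, but the label also records the voice.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Arc:
    head: str       # governing word
    label: str      # dependency relation, e.g. "nsubj" or "amod"
    dependent: str  # word that modifies or completes the head


# Hand-annotated (hypothetical) parse of "The small plane was quickly repaired."
PASSIVE = [
    Arc("plane", "det", "The"),
    Arc("plane", "amod", "small"),
    Arc("repaired", "nsubjpass", "plane"),
    Arc("repaired", "auxpass", "was"),
    Arc("repaired", "advmod", "quickly"),
]

# Hand-annotated (hypothetical) parse of "The pilot lands carefully."
ACTIVE = [
    Arc("pilot", "det", "The"),
    Arc("lands", "nsubj", "pilot"),
    Arc("lands", "advmod", "carefully"),
]


def dependents(arcs: list[Arc], head: str) -> dict[str, list[str]]:
    """Group the dependents of `head` by relation label."""
    grouped: dict[str, list[str]] = {}
    for arc in arcs:
        if arc.head == head:
            grouped.setdefault(arc.label, []).append(arc.dependent)
    return grouped


def subject(arcs: list[Arc], verb: str) -> Optional[str]:
    """Return the subject of `verb`, active (nsubj) or passive (nsubjpass)."""
    deps = dependents(arcs, verb)
    for label in ("nsubj", "nsubjpass"):
        if label in deps:
            return deps[label][0]
    return None


if __name__ == "__main__":
    print(subject(PASSIVE, "repaired"))  # plane
    print(dependents(PASSIVE, "plane"))  # {'det': ['The'], 'amod': ['small']}
```

Keying dependents by label keeps the structure close to the list of relations above; a word with several modifiers of the same kind (for example two adjectives) simply gets a longer list under one label.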

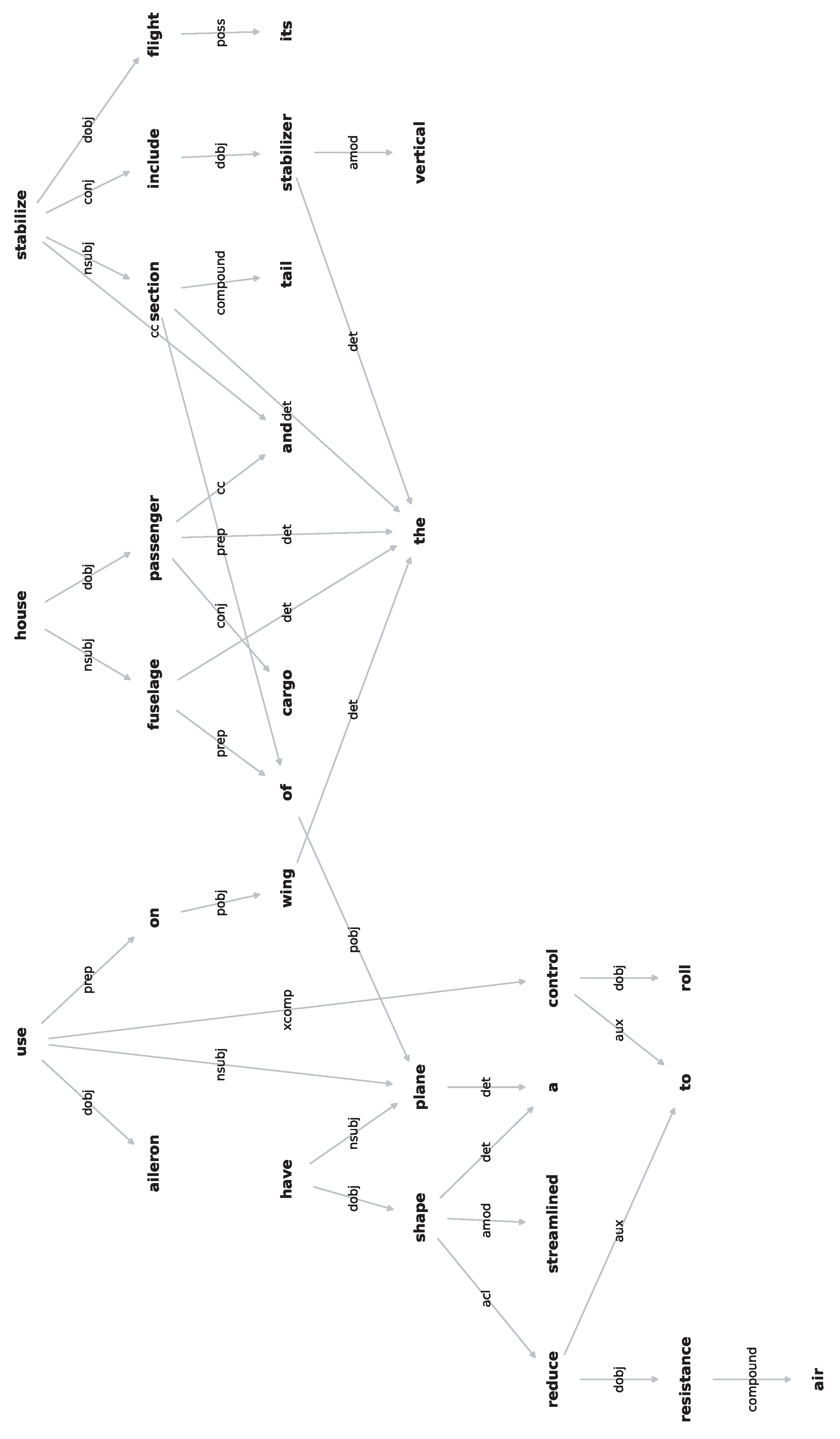

5.2. Creation of the Knowmatic Machine

- resistance
- cargo
- includes the vertical stabilizer

6. Future Research

References

1. Machuca, D.; Reed, B. Skepticism: from antiquity to the present; Bloomsbury Publishing, 2018.

2. Russell, B. The problems of philosophy; OUP Oxford, 2001.

3. Gettier, E.L. Is justified true belief knowledge? Analysis 1963, 23, 121–123.

4. Merriam-Webster Dictionary, 2022. Available online: https://www.merriam-webster.com.

5. Cambridge Dictionary, 2022. Available online: https://dictionary.cambridge.org.

6. Collins Dictionary, 2022. Available online: https://www.collinsdictionary.com.

7. Encyclopædia Britannica, 2022. Available online: https://www.britannica.com.

8. The Stanford Encyclopedia of Philosophy; The Metaphysics Research Lab, 2022. Available online: https://plato.stanford.edu/.

9. Internet Encyclopedia of Philosophy, 2022. Available online: https://iep.utm.edu/.

10. Ware, M.; Mabe, M. The STM report: An overview of scientific and scholarly journal publishing; International Association of Scientific, Technical and Medical Publishers, 2015.

11. White, K. Publication Output by Country, Region, or Economy and Scientific Field. 2021. Available online: https://ncses.nsf.gov/pubs/nsb20214/publication-output-by-country-region-or-economy-and-scientific-field (accessed on 19 February 2024).

12. Meho, L.I. The rise and rise of citation analysis. Physics World 2007, 20, 32.

13. Wang, Y. On cognitive informatics. In Proceedings of the First IEEE International Conference on Cognitive Informatics; IEEE, 2002; pp. 34–42.

14. Ranzoli, C. Dizionario di scienze filosofiche; U. Hoepli, 1926.

15. Schmidt, N. Review of Saggi Sulla Teoria della Conoscenza. Saggio Secondo Filosofia della Metafisica. Parte prima: La Causa Efficiente. The Philosophical Review 1907, 16, 91–94.

16. Heath, T.L.; et al. The thirteen books of Euclid’s Elements; Courier Corporation, 1956.

17. Hockett, C.F. The origin of speech. Scientific American 1960, 203, 88–97.

18. Hannon, M. Knowledge, concept of. Routledge Encyclopedia of Philosophy 2021. Available online: https://www.rep.routledge.com/articles/thematic/knowledge-concept-of/v-2.

19. Hetherington, S. Knowledge. Internet Encyclopedia of Philosophy 2021. Available online: https://iep.utm.edu/knowledg/.

20. Ichikawa, J.J.; Steup, M. The Analysis of Knowledge. In The Stanford Encyclopedia of Philosophy, Summer 2018 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University, 2018. Available online: https://plato.stanford.edu/entries/knowledge-analysis/.

21. Lehrer, K. Theory of Knowledge, 2nd ed.; Taylor & Francis, 2018. Available online: https://books.google.it/books?id=ScJKDwAAQBAJ.

22. Cyras, K.; Badrinath, R.; Mohalik, S.K.; Mujumdar, A.; Nikou, A.; Previti, A.; Sundararajan, V.; Feljan, A.V. Machine reasoning explainability. arXiv 2020, arXiv:2009.00418.

23. Bird, A. Thomas Kuhn. In The Stanford Encyclopedia of Philosophy, Spring 2022 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University, 2022.

24. Aufrecht, M. Reichenbach falls-and rises? Reconstructing the discovery/justification distinction. International Studies in the Philosophy of Science 2017, 31, 151–176.

25. Foster, J.G.; Rzhetsky, A.; Evans, J.A. Tradition and innovation in scientists’ research strategies. American Sociological Review 2015, 80, 875–908.

26. Andresen, J.T.; Carter, P.M. Languages in the world: How history, culture, and politics shape language; John Wiley & Sons, 2016.

27. Hoff, E. How social contexts support and shape language development. Developmental Review 2006, 26, 55–88.

28. Gibson, E.; Futrell, R.; Piantadosi, S.T.; Dautriche, I.; Mahowald, K.; Bergen, L.; Levy, R. How efficiency shapes human language. Trends in Cognitive Sciences 2019, 23, 389–407.

29. Piantadosi, S.T.; Tily, H.; Gibson, E. Word lengths are optimized for efficient communication. Proceedings of the National Academy of Sciences 2011, 108, 3526–3529.

30. Florio, M. La privatizzazione della conoscenza: Tre proposte contro i nuovi oligopoli; Gius. Laterza & Figli Spa, 2021.

31. Caso, R.; et al. Open Data, ricerca scientifica e privatizzazione della conoscenza; 2022.

32. Costello, E. Bronze, free, or fourrée: An open access commentary. Science Editing 2019, 6, 69–72.

33. Farquharson, J. Diamond open access venn diagram [en SVG]; 2022. Available online: https://figshare.com/articles/figure/Diamond_open_access_venn_diagram_en_SVG_/21598179.

34. Bondy, J.A.; Murty, U.S.R. Graph theory with applications; Macmillan: London, 1976; Vol. 290.

35. Alston, W.P. Concepts of epistemic justification. The Monist 1985, 68, 57–89.

36. Chisholm, R.M. Theory of knowledge; Prentice-Hall: Englewood Cliffs, NJ, 1989.

37. Chisholm, R. Probability in the Theory of Knowledge. In Knowledge and Skepticism; Routledge, 2019; pp. 119–130.

38. Kant, I.; Meiklejohn, J.M.D.; Abbott, T.K.; Meredith, J.C. Critique of pure reason; JM Dent: London, 1934.

39. Quine, W.V.O.; Ullian, J.S. The web of belief; Random House: New York, 1978.

40. Goodman, N.; Douglas, M.; Hull, D.L. How classification works: Nelson Goodman among the social sciences; 1992.

41. Hanson, N.R. Observation and Explanation: A guide to Philosophy of Science. In What I Do Not Believe, and Other Essays; 2020; pp. 81–121.

42. Popper, K. The logic of scientific discovery; Routledge, 2005.

43. Kuhn, T.S. The structure of scientific revolutions; University of Chicago Press: Chicago, 1997.

44. Kuhn, T.S.; et al. The essential tension: Tradition and innovation in scientific research. In The third University of Utah research conference on the identification of scientific talent; University of Utah Press: Salt Lake City, 1959; pp. 225–239.

45. Kuhn, T.S. Objectivity, value judgment, and theory choice. In Arguing about science; Routledge, 2012; pp. 74–86.

46. Preston, J. Paul Feyerabend. In The Stanford Encyclopedia of Philosophy, Fall 2020 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University, 2020.

47. Feyerabend, P. Against method: Outline of an anarchistic theory of knowledge; Verso Books, 2020.

48. Bogen, J. Theory and observation in science; 2009.

49. Leibniz, G.W. The Monadology (1714). In Gottfried Wilhelm Leibniz: Philosophical Papers and Letters; Dordrecht, 1969.

50. Achinstein, P. Crucial experiments; 1998.

51. El’yashevich, M.A. Niels Bohr’s development of the quantum theory of the atom and the correspondence principle (his 1912–1923 work in atomic physics and its significance). Soviet Physics Uspekhi 1985, 28, 879.

52. Swenson, R. A grand unified theory for the unification of physics, life, information and cognition (mind). Philosophical Transactions of the Royal Society A 2023, 381, 20220277.

53. Saffran, J.R.; Aslin, R.N.; Newport, E.L. Statistical learning by 8-month-old infants. Science 1996, 274, 1926–1928.

54. Saffran, J.R.; Johnson, E.K.; Aslin, R.N.; Newport, E.L. Statistical learning of tone sequences by human infants and adults. Cognition 1999, 70, 27–52.

55. Aslin, R.; Slemmer, J.; Kirkham, N.; Johnson, S. Statistical learning of visual shape sequences. Presented at the Meeting of the Society for Research in Child Development, Minneapolis, MN, 2001.

56. Bonatti, L.L.; Peña, M.; Nespor, M.; Mehler, J. Linguistic constraints on statistical computations: The role of consonants and vowels in continuous speech processing. Psychological Science 2005, 16, 451–459.

57. Fiser, J.; Aslin, R.N. Statistical learning of new visual feature combinations by infants. Proceedings of the National Academy of Sciences 2002, 99, 15822–15826.

58. Peña, M.; Bonatti, L.L.; Nespor, M.; Mehler, J. Signal-driven computations in speech processing. Science 2002, 298, 604–607.

59. Fodor, J.D. Learning to parse? Journal of Psycholinguistic Research 1998, 27, 285–319.

60. Fodor, J.A.; Bever, T.G. The psychological reality of linguistic segments. Journal of Verbal Learning and Verbal Behavior 1965, 4, 414–420.

61. Garrett, M.; Bever, T.; Fodor, J. The active use of grammar in speech perception. Perception & Psychophysics 1966, 1, 30–32.

62. Jarvella, R.J. Syntactic processing of connected speech. Journal of Verbal Learning and Verbal Behavior 1971, 10, 409–416.

63. Wanner, E. On remembering, forgetting, and understanding sentences: A study of the deep structure hypothesis; Walter de Gruyter GmbH & Co KG, 2019.

64. De Vincenzi, M. Syntactic parsing strategies in Italian: The minimal chain principle; Springer Science & Business Media, 1991.

65. Frazier, L.; Fodor, J.D. The sausage machine: A new two-stage parsing model. Cognition 1978, 6, 291–325.

66. Gibson, E.; Pearlmutter, N.; Canseco-Gonzalez, E.; Hickok, G. Cross-linguistic attachment preferences: Evidence from English and Spanish; 1996.

67. Clifton, C., Jr.; Frazier, L. Comprehending sentences with long-distance dependencies. In Linguistic structure in language processing; Springer, 1989; pp. 273–317.

68. Frazier, L. Theories of sentence processing; 1987.

69. Felser, C.; Marinis, T.; Clahsen, H. Children’s processing of ambiguous sentences: A study of relative clause attachment. Language Acquisition 2003, 11, 127–163.

70. Altmann, G.; Steedman, M. Interaction with context during human sentence processing. Cognition 1988, 30, 191–238.

71. Traxler, M.J. Plausibility and subcategorization preference in children’s processing of temporarily ambiguous sentences: Evidence from self-paced reading. The Quarterly Journal of Experimental Psychology: Section A 2002, 55, 75–96.

72. Gurevich, Y. What is an algorithm? In International Conference on Current Trends in Theory and Practice of Computer Science; Springer, 2012; pp. 31–42.

73. De Mol, L. Turing machines; 2018.

74. von Foerster, H.; Fiche, B. Communication amongst automata. The American Journal of Psychiatry 1962.

75. Morin, E. La Nature de la Nature; Seuil: Paris, 1992.

76. Morin, E. La Vie de la Vie; Seuil: Paris, 1992.

77. Morin, E. La Connaissance de la Connaissance; Seuil: Paris, 1986.

78. Morin, E. Les Idées: leur habitat, leur vie, leurs mœurs, leur organisation; Seuil: Paris, 1991.

79. Morin, E. L’Humanité de l’Humanité; Seuil: Paris, 2001.

80. Morin, E. Éthique; Seuil: Paris, 2004.

81. Haken, H. Synergetics: An approach to self-organization. In Self-organizing systems: The emergence of order; 1987; pp. 417–434.

82. Andreucci, D.; Herrero, M.A.; Velazquez, J.J. On the growth of filamentary structures in planar media. Mathematical Methods in the Applied Sciences 2004, 27, 1935–1968.

83. Willshaw, D.J.; von der Malsburg, C. How patterned neural connections can be set up by self-organization. Proceedings of the Royal Society of London. Series B. Biological Sciences 1976, 194, 431–445.

84. Aarts, E.; Korst, J. Simulated annealing and Boltzmann machines: a stochastic approach to combinatorial optimization and neural computing; John Wiley & Sons, Inc., 1989.

85. Willems, J.L. Stability theory of dynamical systems; 1970.

86. Marsden, J.E.; McCracken, M. The Hopf bifurcation and its applications; Springer Science & Business Media, 2012.

87. Holden, A.V. Chaos; Princeton University Press, 2014.

88. Degn, H.; Holden, A.V.; Olsen, L.F. Chaos in biological systems; Springer Science & Business Media, 2013.

89. Heath-Carpentier, A. The challenge of complexity: Essays by Edgar Morin; Liverpool University Press, 2022.

90. Hopcroft, J.E.; Ullman, J.D. Formal languages and their relation to automata; Addison-Wesley Longman Publishing Co., Inc., 1969.

91. Lewis, H.R.; Papadimitriou, C.H. Elements of the Theory of Computation. ACM SIGACT News 1998, 29, 62–78.

92. Boole, G. An investigation of the laws of thought: on which are founded the mathematical theories of logic and probabilities; Walton and Maberly, 1854.

93. Jackendoff, R.S. Semantic structures; MIT Press, 1992; Vol. 18.

94. Talmy, L. Toward a cognitive semantics, volume 1: Concept structuring systems; MIT Press, 2003.

95. Dowty, D. Thematic proto-roles and argument selection. Language 1991, 67, 547–619.

96. Langacker, R.W. Foundations of cognitive grammar: Volume I: Theoretical prerequisites; Stanford University Press, 1987.

97. Chomsky, N. Three models for the description of language. IRE Transactions on Information Theory 1956, 2, 113–124.

98. Chomsky, N. The logical structure of linguistic theory; 1975.

| Note | Text |
| --- | --- |
| 1 | We chose the term “acquiring knowledge” to avoid the debate over “a priori” versus “a posteriori” knowledge. In either case, from the subject’s point of view, knowledge, whether a priori or a posteriori, is acquired at the end of some process. For a priori knowledge, that process can be understood as the birth of the subject itself. |
| 2 | |
| 3 | The reference is explicitly to Leibniz’s Monadology and to the relationship between the monad and the universe: “Thus, although each created monad represents the entire universe [...]. And since this body expresses the whole universe through the connection of all matter in the plenum, the soul also represents the entire universe by representing this body, which belongs to it in a particular manner” (translation by the author). |
| 4 | Meaning that is related to the form. |
| 5 | These concepts find a parallel in the following sections, where knowledge is structured symmetrically to the acquisition process investigated here. |
| 6 | For the exact formal definition of an algorithm, we refer to Gurevich’s conference paper presented at the International Conference on Current Trends in Theory and Practice of Computer Science [72]. |
| 7 | The row vector and 0 the null relation such that for every . |
| 8 | Let be the length of the alphabet, . |
| 9 | is equivalent to , but we preferred to use the cardinality (a scalar quantity) because it allows us to introduce the definition and measurement of information. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).