1. Introduction

Planning and performing mobile robot tasks in the presence of human operators who share the same workspace requires a high level of stability and safety. Research on navigation, obstacle avoidance, adaptation, and collaboration between robots and human agents has been widely reported [1,2]. Multiple target tracking for robots using higher control levels in a control hierarchy is discussed in [3,4]. A human-friendly interaction between robots and humans can be obtained by human-like sensor systems [5]. The trajectory-crossing problem of robots and humans [6] and its fuzzy solutions [7] play a prominent role in robot navigation. The motivations for a fuzzy solution of the intersection problem are manifold. One is the uncertain measurement of the position and orientation of the human agent, which makes the use of a fuzzy signal and adequate fuzzy processing a natural choice [8,9]. Another is the need to reduce the computing effort when complex calculations must be performed within a very short time interval. System uncertainties and observation noise lead to uncertainties in the intersection estimates. This paper deals with the one-robot one-human trajectory-crossing problem, where small uncertainties in position and orientation may lead to large uncertainties at the intersection points. Position and orientation of human and robot are nonlinearly coupled but can be linearized; in the following, the analysis considers the linear part of the nonlinear system for small variations at the input [10]. We describe the "direct task", in which the parameters of the input distribution are transformed into the output distribution parameters, and we also solve the "inverse task", in which the input parameters are calculated for given output distribution parameters. In this paper, two methods are outlined:

1. The statistical linearization, which linearizes the nonlinearity around the operating area at the intersection. Means and standard deviations of the input parameters (positions, orientations) are transformed through the linearized nonlinear system to obtain means and standard deviations of the output parameters (position of the intersection).

2. The sigma-point transformation, which calculates the so-called sigma-points of the input distribution from the mean and covariance of the input. The sigma-points are propagated directly through the nonlinear system [11,12,13] to obtain the mean and covariance of the output and, from these, the standard deviations of the output (position of the intersection). The advantage of the sigma-point transformation is that it captures the first- and second-order statistics of a random variable, whereas statistical linearization approximates a random variable only to first order.

The paper is organized as follows. In Section 2, the general intersection problem and its analytical solution are described. Section 3 deals with the transformation of Gaussian distributions for a 2-input 2-output system and for a 6-input 2-output system, together with the corresponding inverse and fuzzy solutions. In Section 4, the sigma-point approach with its inverse and fuzzy solutions is addressed. Section 5 presents simulations of the statistical linearization and the sigma-point transformation to show the quality of the input-output conversion of the distributions and the impact of different resolutions of the fuzzy approximations on the accuracy of the random intersection variable. Finally, Section 6 concludes the paper with a discussion and comparison of the two approaches.

2. Computation of Intersections

The problem can be stated as follows:

Robot and human agent move in a common area according to their tasks or intentions. To avoid collisions, possible intersections of the paths of the agents should be predicted for both trajectory planning and on-line interactions. To accomplish this, positions, orientations and intended movements of robot and human should be estimated as accurately as needed.

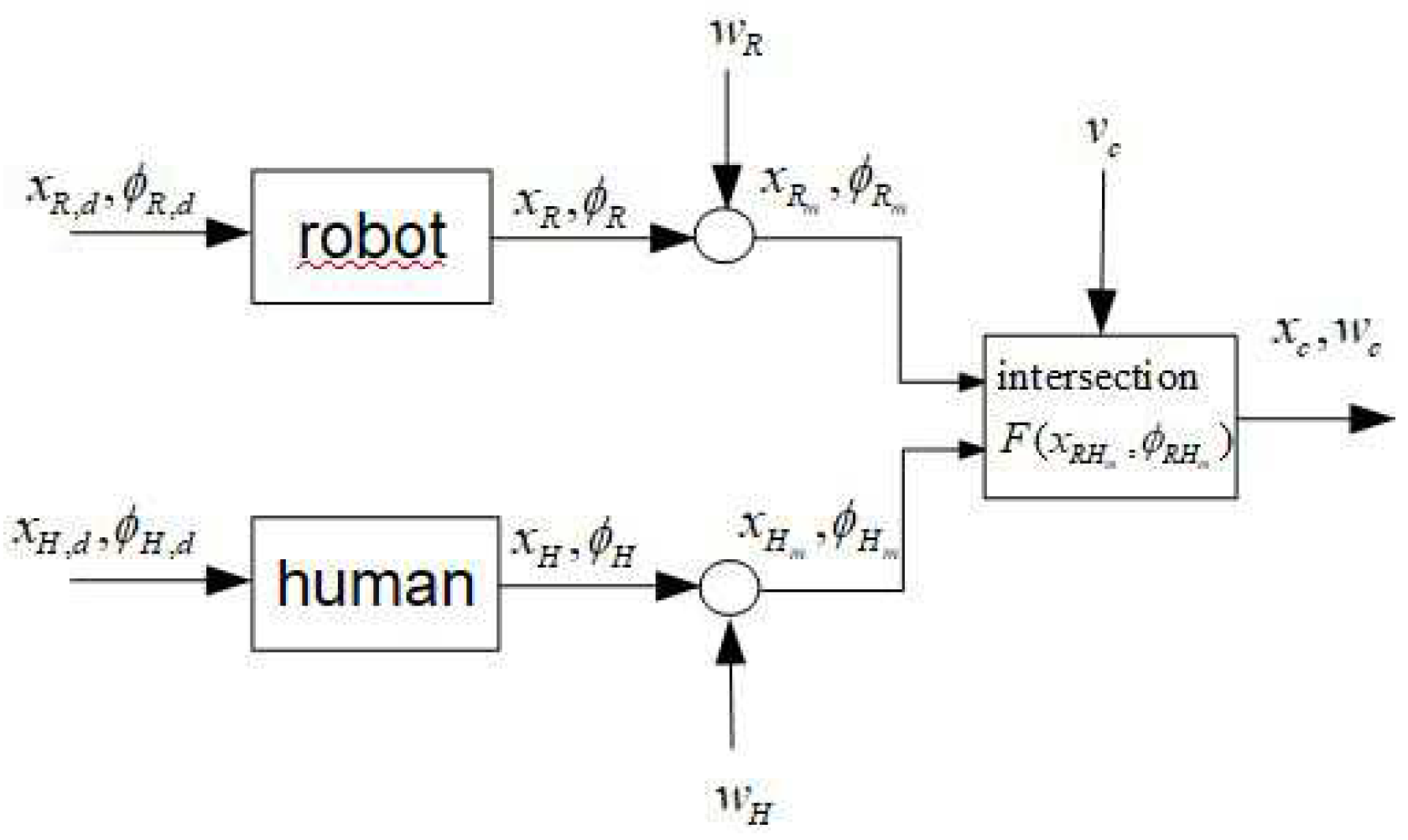

In this context, uncertainties and noise in the random variables position and orientation of robot and human have a great impact on the calculation of the expected intersection position. The intersection, itself a random variable, is calculated as the crossing point of the extended orientation or velocity vectors of robot and human, which may change during motion depending on the task and the current interaction. The task is to calculate the intersection and its uncertainty given known uncertainties of the acting agents, robot and human.

The system noise of robot and human can be obtained from experiments. The noise of the "virtual" intersection is composed of the nonlinearly transformed noise of robot and human and some additional noise that may come from uncertainties of the nonlinear computation of the intersection position (see Figure 1). In the following, the geometrical relations are described, as well as fuzzy approximations and nonlinear transformations of the random variables involved.

2.1. Geometrical Relations

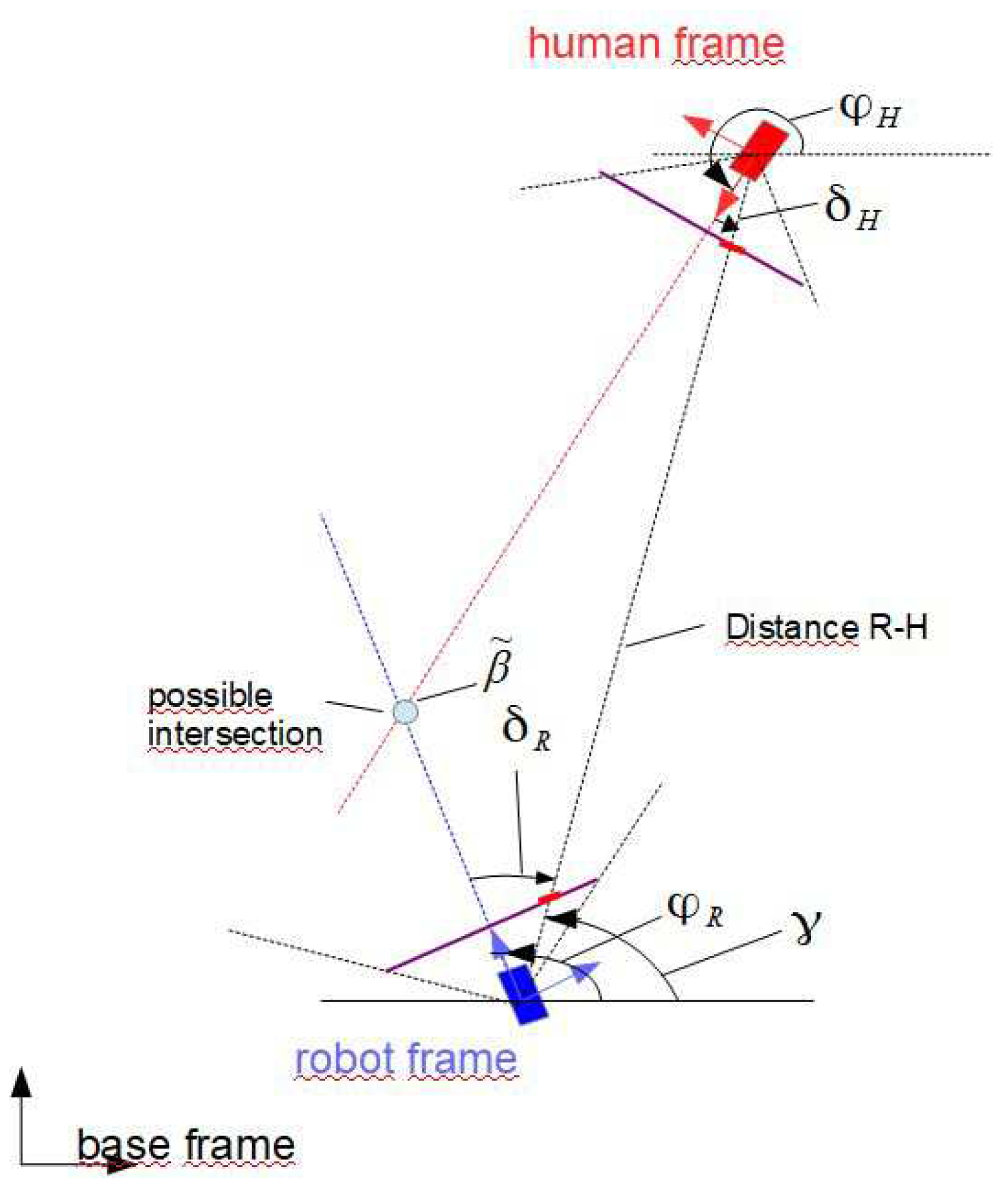

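Let the intersection of the two linear trajectories of human and robot in a plane be described by the following relations (see Figure 2), in which the positions of human and robot and their orientation angles appear as parameters. The orientation angles are positive angles measured counterclockwise from the y axis, so the angle between the two trajectories at the intersection follows from the difference of the two orientation angles. The positions, the orientation angles, the distance between human and robot, and the angle at the intersection are assumed to be measurable. If one of them is not directly measurable, it can be computed from the others using the geometry of Figure 2.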

The coordinates of the intersection are computed in a straightforward manner by [7]

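After rearranging (4), we observe that the intersection position is linear in the position coordinates of human and robot, where the matrix multiplying the position vector depends nonlinearly on the orientation angles (see (5) and (6)). This notation is advantageous for further computations such as the fuzzification of the intersection problem and the transformation of error distributions.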
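The linear-in-position structure can be illustrated numerically. The following Python/NumPy sketch is not the authors' implementation: it assumes that an agent with orientation φ moves along the direction (−sin φ, cos φ), which corresponds to measuring φ counterclockwise from the y axis, and the function names and example positions are hypothetical. It builds a 2×4 matrix that depends only on the two orientation angles and multiplies it by the stacked position vector (x_H, y_H, x_R, y_R).

```python
import numpy as np

def intersection_matrix(phi_h, phi_r):
    """Return the 2x4 matrix A with (x_c, y_c) = A @ (x_h, y_h, x_r, y_r).

    Assumption: orientations are measured counterclockwise from the y axis,
    so an agent with angle phi moves along (-sin(phi), cos(phi)) and every
    point q on its path satisfies cos(phi)*(q_x - p_x) + sin(phi)*(q_y - p_y) = 0.
    """
    d = np.sin(phi_r - phi_h)  # determinant of the 2x2 system of path equations
    if abs(d) < 1e-12:
        raise ValueError("parallel trajectories: no unique intersection")
    sh, ch = np.sin(phi_h), np.cos(phi_h)
    sr, cr = np.sin(phi_r), np.cos(phi_r)
    return np.array([
        [sr * ch, sr * sh, -sh * cr, -sh * sr],
        [-cr * ch, -cr * sh, ch * cr, ch * sr],
    ]) / d

def intersection(p_h, phi_h, p_r, phi_r):
    """Intersection point as a linear map of the stacked positions."""
    p = np.concatenate([p_h, p_r]).astype(float)
    return intersection_matrix(phi_h, phi_r) @ p

# Human at the origin heading along +y, robot at (4, 2) heading along -x:
# the paths are the lines x = 0 and y = 2, so the result is close to (0, 2).
print(intersection(np.array([0.0, 0.0]), 0.0, np.array([4.0, 2.0]), np.pi / 2))
```

The matrix is singular when the trajectories are parallel (sin(φ_R − φ_H) = 0), which is why the sketch raises an error in that case.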

2.2. Computation of Intersections - Fuzzy Approach

The fuzzy solution presented in the following is a combination of classical analytical (crisp) methods and rule based methods in the sense of a Takagi-Sugeno fuzzy rule base.

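In the following, we introduce a fuzzy rule-based approximation of (5) with n² fuzzy rules, where n is the number of fuzzy terms for each of the two orientation angles of human and robot. Each rule combines one fuzzy term of the human orientation with one fuzzy term of the robot orientation. The rule outputs are combined into the result (7) by weighting them with normalized membership functions of the two angles, i.e., membership functions whose values lie between 0 and 1 and sum to one.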

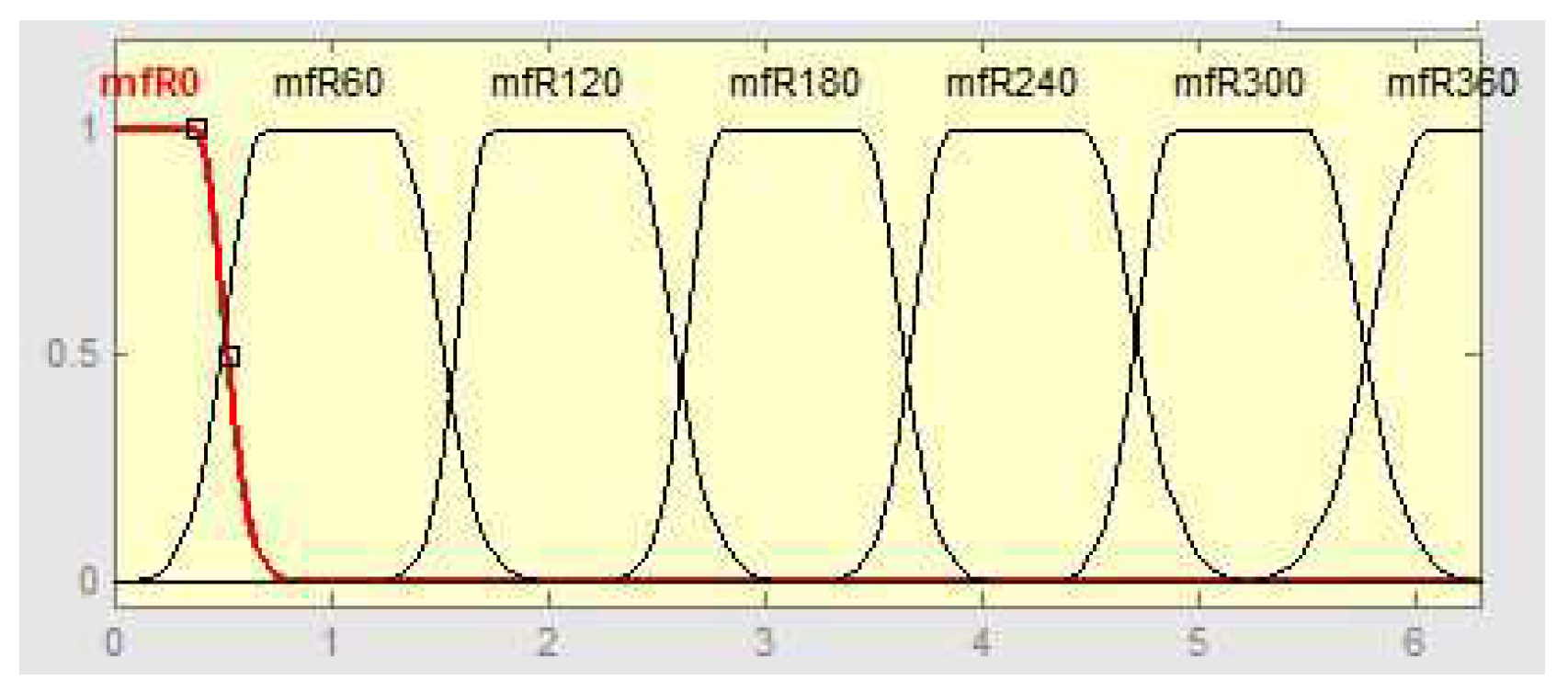

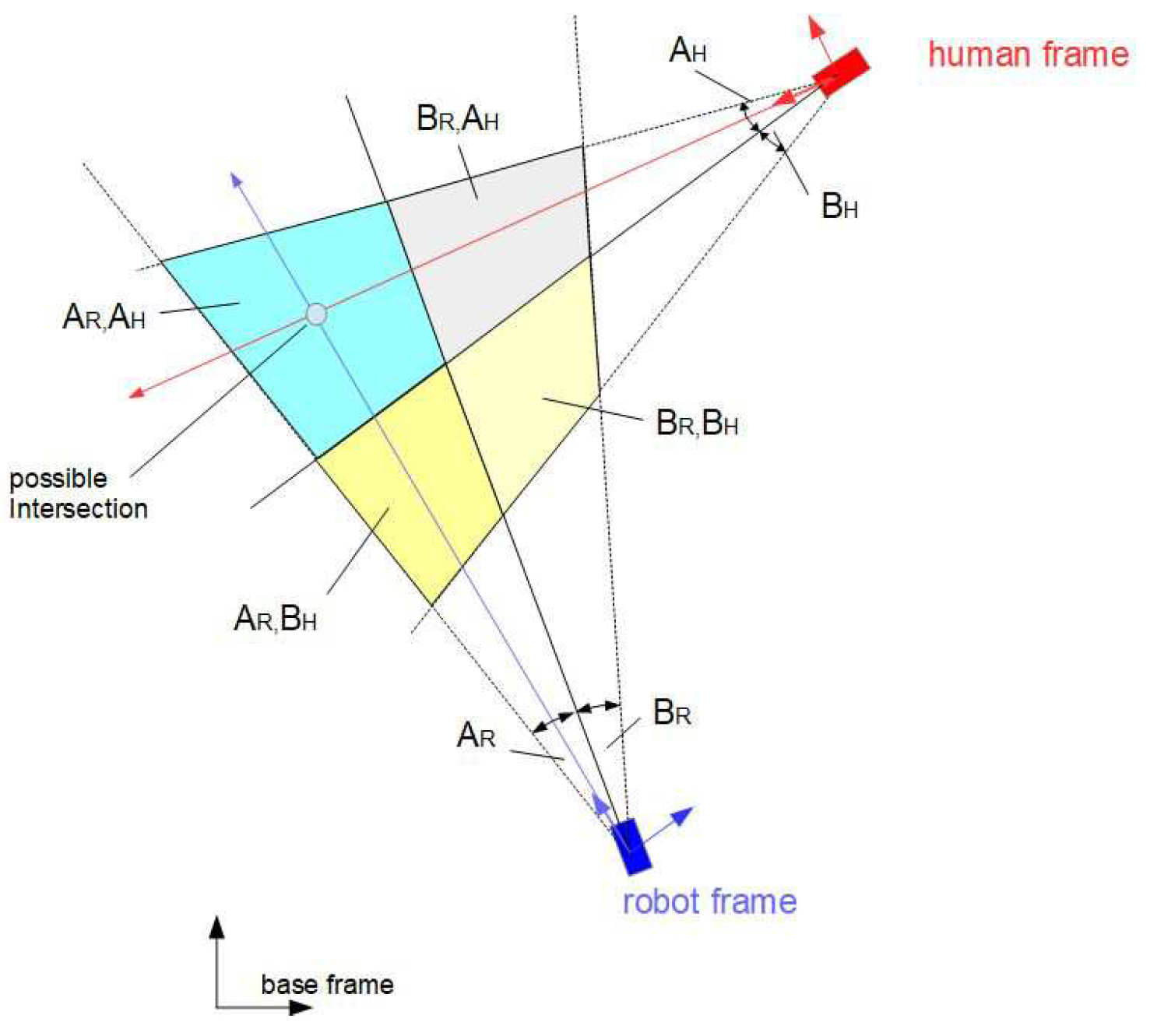

Let the universes of discourse for the two orientation angles span a full turn of 360°. Furthermore, let these universes of discourse be divided into n partitions (for example, 6 partitions of 60°), which leads to n² (for example, 36) fuzzy rules. The corresponding membership functions are shown in Figure 3. It turns out that this resolution leads to a poor fuzzy approximation. The approximation quality can be improved by increasing the number of fuzzy sets, which, however, results in a quadratic increase in the number of fuzzy rules. To avoid an "explosion" of the number of fuzzy rules computed in one time step, a set of sub-areas, each covering a small number of rules, is defined. Based on the measured orientation angles of human and robot, the appropriate sub-area is selected together with its corresponding set of rules (see Figure 4 for an example sub-area). With this, the number of rules activated at one time step of the calculation is low, although the total number of rules can be high. Abrupt changes may occur at the borderlines between sub-areas; they can be avoided by overlapping the sub-areas.
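The rule-based approximation can be sketched as follows. This is an illustrative Python/NumPy sketch under stated assumptions, not the authors' rule base: it uses triangular membership functions on a uniform partition of each orientation angle (so only the two neighbouring terms of each angle fire and the weights are normalized automatically), product weights for the rule activation, and the exact matrix evaluated at the rule centers as rule consequent. The heading convention (−sin φ, cos φ), the positions and the query angles are hypothetical. Because only the four rules of the cell containing the current angles fire, the sketch also mirrors the idea of evaluating a small set of local rules per time step.

```python
import numpy as np

def intersection_matrix(phi_h, phi_r):
    """Exact 2x4 matrix A with (x_c, y_c) = A @ (x_h, y_h, x_r, y_r).

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    d = np.sin(phi_r - phi_h)
    if abs(d) < 1e-9:
        raise ValueError("parallel trajectories at a rule operating point")
    sh, ch, sr, cr = np.sin(phi_h), np.cos(phi_h), np.sin(phi_r), np.cos(phi_r)
    return np.array([[sr * ch, sr * sh, -sh * cr, -sh * sr],
                     [-cr * ch, -cr * sh, ch * cr, ch * sr]]) / d

def active_terms(phi, sector):
    """The two triangular fuzzy terms of a uniform partition that fire for phi.

    Returns [(center, membership), (center, membership)]; memberships sum to one.
    """
    k = np.floor(phi / sector)
    mu_right = phi / sector - k
    return [(k * sector, 1.0 - mu_right), ((k + 1) * sector, mu_right)]

def fuzzy_intersection(p, phi_h, phi_r, sector):
    """TS-style blend of rule matrices A(c_h, c_r) with product weights.

    In an application the rule matrices would be tabulated offline; here they
    are evaluated on the fly to keep the sketch short.
    """
    A = np.zeros((2, 4))
    for c_h, w_h in active_terms(phi_h, sector):
        for c_r, w_r in active_terms(phi_r, sector):
            if w_h * w_r > 0.0:
                A += w_h * w_r * intersection_matrix(c_h, c_r)
    return A @ p

p = np.array([0.0, 0.0, 4.0, 3.0])  # hypothetical (x_h, y_h, x_r, y_r) in m
queries = [(a, b) for a in (0.13, 0.41, 0.77) for b in (1.63, 1.91, 2.27)]

def max_deviation(sector_deg):
    """Largest distance between fuzzy and exact intersection over the queries."""
    sector = np.deg2rad(sector_deg)
    return max(np.linalg.norm(fuzzy_intersection(p, a, b, sector)
                              - intersection_matrix(a, b) @ p) for a, b in queries)

for deg in (30, 10, 2):
    print(f"sector {deg:2d} deg: max deviation {max_deviation(deg):.4f} m")
```

Finer sectors reduce the deviation roughly quadratically, at the price of more rules in total.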

2.3. Differential Approach

Robots and human agents usually change their positions, orientations, and velocities, which requires a differential approach in addition to the exact solution (4). Moreover, the analysis of uncertainty and noise at the intersection, caused by noise on the positions and orientations of robot and human, requires a differential strategy. Differentiating (4) yields a differential relation between small changes of the positions and orientation angles and the resulting change of the intersection position. The following sections deal with the accuracy of the computed intersection in the case of noisy orientation information (see Figure 5).
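The differential relation can be checked numerically. The Python/NumPy sketch below approximates the Jacobian of the intersection with respect to the six inputs (φ_H, φ_R, x_H, y_H, x_R, y_R) by central differences; it is a numerical stand-in, not the analytical derivative of the paper. The heading convention (−sin φ, cos φ) and the operating point are assumptions. The columns belonging to the positions reproduce the angle-dependent matrix of the linear form, and the first-order prediction is compared with the exact intersection for a small deviation.

```python
import numpy as np

def intersection(z):
    """z = (phi_h, phi_r, x_h, y_h, x_r, y_r) -> (x_c, y_c).

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    phi_h, phi_r, x_h, y_h, x_r, y_r = z
    b_h = np.cos(phi_h) * x_h + np.sin(phi_h) * y_h
    b_r = np.cos(phi_r) * x_r + np.sin(phi_r) * y_r
    d = np.sin(phi_r - phi_h)
    return np.array([(np.sin(phi_r) * b_h - np.sin(phi_h) * b_r) / d,
                     (np.cos(phi_h) * b_r - np.cos(phi_r) * b_h) / d])

def jacobian(f, z, eps=1e-6):
    """Central-difference Jacobian of f at z (one column per input)."""
    z = np.asarray(z, dtype=float)
    cols = []
    for k in range(z.size):
        dz = np.zeros_like(z)
        dz[k] = eps
        cols.append((f(z + dz) - f(z - dz)) / (2.0 * eps))
    return np.column_stack(cols)

z0 = np.array([0.3, 1.9, 0.0, 0.0, 4.0, 3.0])          # hypothetical operating point
J = jacobian(intersection, z0)                          # 2x6 differential relation
dz = np.array([0.01, -0.02, 0.05, 0.0, -0.03, 0.02])    # small input deviation
linear = intersection(z0) + J @ dz                      # first-order prediction
exact = intersection(z0 + dz)
print("first-order error:", np.abs(linear - exact).max())
```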

3. Transformation of Gaussian Distributions

3.1. General Assumptions

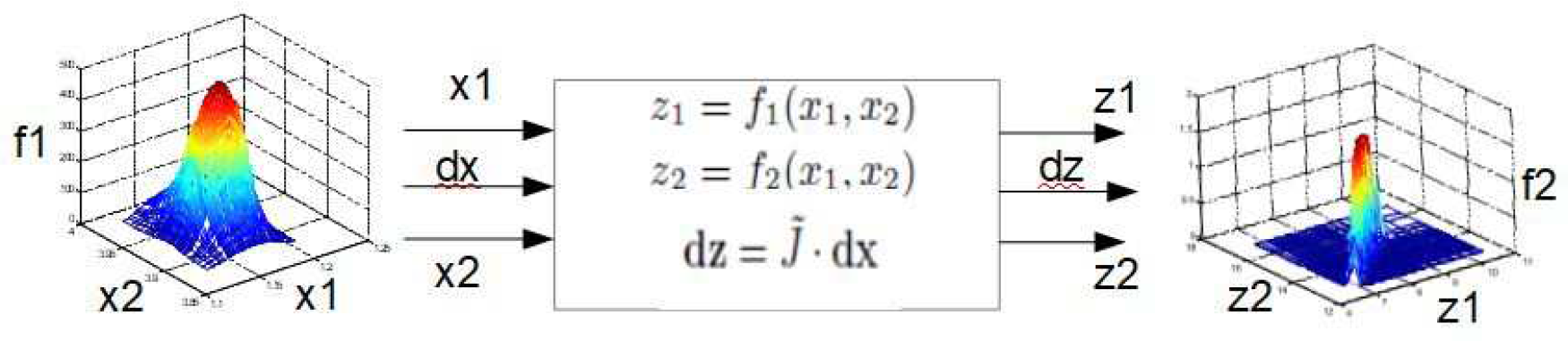

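Consider a nonlinear system

$$\mathbf{y} = F(\mathbf{x}) \tag{9}$$

where the random variables $\mathbf{x} = (x_1, x_2)^T$ denote the input, $\mathbf{y} = (y_1, y_2)^T$ the output, and F denotes a nonlinear transformation. The distribution of the uncorrelated, Gaussian distributed components $x_1$ and $x_2$ is described by

$$p(x_1, x_2) = \frac{1}{2\pi\sigma_{x_1}\sigma_{x_2}} \exp\left(-\frac{1}{2}\left[\frac{(x_1-\mu_{x_1})^2}{\sigma_{x_1}^2} + \frac{(x_2-\mu_{x_2})^2}{\sigma_{x_2}^2}\right]\right) \tag{10}$$

where $\mu_{x_1}$, $\mu_{x_2}$ are the means and $\sigma_{x_1}$, $\sigma_{x_2}$ the standard deviations of $x_1$ and $x_2$.

The goal is: given the nonlinear transformation (9) and the distribution (10), compute the output signals $y_1$ and $y_2$ and their distributions, together with their standard deviations and the correlation coefficient. Linear systems transform Gaussian distributions such that the output signals are also Gaussian distributed. This does not hold for nonlinear systems, but if the input standard deviations are small enough, a local linear transfer function can be built for which the outputs are approximately Gaussian distributed. Supposing that the input standard deviations are small with respect to the nonlinearity of F, the output distribution can be written as

$$p(y_1, y_2) = \frac{1}{2\pi\sigma_{y_1}\sigma_{y_2}\sqrt{1-\rho^2}} \exp\left(-\frac{1}{2(1-\rho^2)}\left[\frac{(y_1-\mu_{y_1})^2}{\sigma_{y_1}^2} - \frac{2\rho\,(y_1-\mu_{y_1})(y_2-\mu_{y_2})}{\sigma_{y_1}\sigma_{y_2}} + \frac{(y_2-\mu_{y_2})^2}{\sigma_{y_2}^2}\right]\right) \tag{11}$$

where $\mu_{y_1}$, $\mu_{y_2}$ are the output means, $\sigma_{y_1}$, $\sigma_{y_2}$ the output standard deviations, and $\rho$ the correlation coefficient.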

3.2. Statistical Linearization, Two Inputs-Two Outputs

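Let the nonlinear transformation F be described by two smooth transfer functions (see the block diagram in Figure 6), one for each output, each depending on both inputs. Linearization of (12) around the operating point yields a linear relation (13) between small input and output deviations, with the matrix (14) of partial derivatives of the two transfer functions with respect to the two inputs.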

Output Distribution

To obtain the density (11) of the output signal, we invert (14) and substitute the entries of the inverted matrix into (10). The entries used in this substitution are thus the result of inverting the matrix of partial derivatives. From this substitution we get (18). The exponent of (18) is then rewritten and rearranged, and a comparison of the exponent in (21) with the exponent in (11) yields the standard deviations of the two outputs and their correlation coefficient.

The result is: if the parameters of the input distribution and the transfer function are known, then the output distribution parameters can be computed in a straightforward manner.
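For uncorrelated inputs, first-order propagation gives the output covariance J diag(σ_x1², σ_x2²) Jᵀ, whose diagonal yields the two output variances and whose off-diagonal entry yields ρ; we assume this standard result is what the exponent comparison above amounts to. The Python/NumPy sketch below evaluates it with a finite-difference Jacobian and compares it with Monte Carlo samples ("measured"). The heading convention (−sin φ, cos φ), the positions, the mean angles and the standard deviations are hypothetical.

```python
import numpy as np

P_H = np.array([0.0, 0.0])   # hypothetical human position (m), assumed noise-free
P_R = np.array([4.0, 3.0])   # hypothetical robot position (m), assumed noise-free

def intersection(phi_h, phi_r):
    """Intersection (x_c, y_c); phi_h and phi_r may be arrays of samples.

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    b_h = np.cos(phi_h) * P_H[0] + np.sin(phi_h) * P_H[1]
    b_r = np.cos(phi_r) * P_R[0] + np.sin(phi_r) * P_R[1]
    d = np.sin(phi_r - phi_h)
    return np.stack([(np.sin(phi_r) * b_h - np.sin(phi_h) * b_r) / d,
                     (np.cos(phi_h) * b_r - np.cos(phi_r) * b_h) / d], axis=-1)

def linearized_output_stats(mu, sigma, eps=1e-6):
    """Output stds and correlation from Sigma_y = J diag(sigma^2) J^T."""
    J = np.empty((2, 2))
    for k in range(2):
        dx = np.zeros(2)
        dx[k] = eps
        J[:, k] = (intersection(*(mu + dx)) - intersection(*(mu - dx))) / (2 * eps)
    cov_y = J @ np.diag(sigma**2) @ J.T
    s = np.sqrt(np.diag(cov_y))
    return s, cov_y[0, 1] / (s[0] * s[1])

mu = np.array([0.3, 1.9])        # mean orientations (rad), hypothetical
sigma = np.array([0.02, 0.02])   # orientation stds (rad), hypothetical
s_lin, rho_lin = linearized_output_stats(mu, sigma)

# "Measured" values: Monte Carlo samples pushed through the exact geometry.
rng = np.random.default_rng(0)
phi = mu[:, None] + sigma[:, None] * rng.standard_normal((2, 200_000))
samples = intersection(phi[0], phi[1])
s_mc, rho_mc = samples.std(axis=0), np.corrcoef(samples.T)[0, 1]
print("computed:", s_lin, rho_lin)
print("measured:", s_mc, rho_mc)
```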

Fuzzy Solution

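To save computing costs in real time, we create a TS fuzzy model represented by the rules (25), where the premises are fuzzy terms for the inputs and the consequents are the output parameters (standard deviations and correlation coefficient) computed at predefined values of the two inputs. The rule outputs are combined with normalized weighting functions (26).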

Inverse Solution

The previous paragraphs discussed the direct transformation task: given the distribution parameters of the input variables, find the corresponding output parameters. However, it may also be useful to solve the inverse task: given the output parameters (standard deviations, correlation coefficient), find the corresponding input parameters. The solution of the inverse task is similar to that of the direct task. The starting points are equations (10) and (11), which describe the distributions of the inputs and outputs, respectively. We substitute (13) into (10), rename the resulting exponent, and discuss it in the form (27). Comparing (27) with the exponent of the input density (10), we find that the mixed term in (27) must be zero, because the inputs are uncorrelated. From this condition we obtain the correlation coefficient and, with it, the standard deviations of the inputs. The detailed development can be found in [14].
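For the linearized two-input case, the inverse task can be sketched as follows (Python/NumPy; a sketch, not the derivation of [14]). With the linearization matrix J and M = J⁻¹, the input covariance is M Σ_y Mᵀ; requiring its mixed (off-diagonal) term to vanish gives one linear equation for the output correlation coefficient, after which the input standard deviations follow from the diagonal. The heading convention (−sin φ, cos φ), the positions, the angles and the standard deviations are hypothetical. Not every pair of requested output standard deviations is reachable with uncorrelated inputs, so the sketch rejects results with |ρ| ≥ 1 or non-positive variances.

```python
import numpy as np

def jacobian_angles(phi_h, phi_r, p_h, p_r, eps=1e-6):
    """Central-difference Jacobian of (x_c, y_c) w.r.t. (phi_h, phi_r).

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)),
    so its path is the line cos(phi)*x + sin(phi)*y = const through its position.
    """
    def f(a, b):
        n = np.array([[np.cos(a), np.sin(a)], [np.cos(b), np.sin(b)]])
        return np.linalg.solve(n, np.array([n[0] @ p_h, n[1] @ p_r]))
    return np.column_stack([
        (f(phi_h + eps, phi_r) - f(phi_h - eps, phi_r)) / (2 * eps),
        (f(phi_h, phi_r + eps) - f(phi_h, phi_r - eps)) / (2 * eps),
    ])

def inverse_stats(J, s_y):
    """Input stds of uncorrelated inputs that produce the output stds s_y."""
    M = np.linalg.inv(J)
    s1, s2 = s_y
    # Mixed term of M Sigma_y M^T must vanish (uncorrelated inputs) -> solve for rho.
    num = M[0, 0] * M[1, 0] * s1**2 + M[0, 1] * M[1, 1] * s2**2
    den = (M[0, 0] * M[1, 1] + M[0, 1] * M[1, 0]) * s1 * s2
    rho = -num / den
    cov_y = np.array([[s1**2, rho * s1 * s2], [rho * s1 * s2, s2**2]])
    var_x = np.diag(M @ cov_y @ M.T)
    if abs(rho) >= 1.0 or np.any(var_x <= 0.0):
        raise ValueError("no uncorrelated input distribution yields these output stds")
    return np.sqrt(var_x), rho

# Round trip with hypothetical values: direct task, then inverse task.
J = jacobian_angles(0.3, 1.9, np.array([0.0, 0.0]), np.array([4.0, 3.0]))
s_x_true = np.array([0.02, 0.03])                  # input stds (rad)
cov_y = J @ np.diag(s_x_true**2) @ J.T             # direct task (linearized)
s_y = np.sqrt(np.diag(cov_y))
s_x, rho = inverse_stats(J, s_y)                   # inverse task
print(s_x, rho)
```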

3.3. Six Inputs - Two Outputs

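Consider again the nonlinear system (9). In the previous subsections, we assumed the positions of human and robot not to be corrupted by noise. If the positions are taken into account as random variables as well, the number of inputs becomes six: the input vector consists of the two orientation angles and the four position coordinates of human and robot, while the output vector still consists of the two intersection coordinates.

Furthermore, let the uncorrelated, Gaussian distributed inputs $x_1, \dots, x_6$ be described by the 6-dimensional density

$$p(\mathbf{x}) = \frac{1}{(2\pi)^{3}\,|\Sigma_x|^{1/2}} \exp\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_x)^T \Sigma_x^{-1} (\mathbf{x}-\boldsymbol{\mu}_x)\right)$$

where $\boldsymbol{\mu}_x$ is the mean vector and $\Sigma_x = \mathrm{diag}(\sigma_{x_1}^2, \dots, \sigma_{x_6}^2)$ the covariance matrix.

According to (11), the output density is again a two-dimensional Gaussian with output standard deviations $\sigma_{y_1}$, $\sigma_{y_2}$ and correlation coefficient $\rho$. After some calculations [15], we find expressions for $\sigma_{y_1}$, $\sigma_{y_2}$ and $\rho$ that are the counterpart of the two-input case (24).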
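For the six-input case, the same first-order propagation applies with a 2×6 Jacobian. The Python/NumPy sketch below compares the linearized 2×2 output covariance J Σ_x Jᵀ with a Monte Carlo estimate; it illustrates the standard first-order result rather than transcribing the paper's closed-form expressions. The heading convention (−sin φ, cos φ), the means and the standard deviations are hypothetical.

```python
import numpy as np

def intersection(z):
    """z = (phi_h, phi_r, x_h, y_h, x_r, y_r); entries may be sample arrays.

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    phi_h, phi_r, x_h, y_h, x_r, y_r = z
    b_h = np.cos(phi_h) * x_h + np.sin(phi_h) * y_h
    b_r = np.cos(phi_r) * x_r + np.sin(phi_r) * y_r
    d = np.sin(phi_r - phi_h)
    return np.stack([(np.sin(phi_r) * b_h - np.sin(phi_h) * b_r) / d,
                     (np.cos(phi_h) * b_r - np.cos(phi_r) * b_h) / d])

def linearized_output_cov(mu, sigma, eps=1e-6):
    """First-order output covariance J diag(sigma^2) J^T with a 2x6 Jacobian."""
    J = np.column_stack([(intersection(mu + e) - intersection(mu - e)) / (2 * eps)
                         for e in np.eye(mu.size) * eps])
    return J @ np.diag(sigma**2) @ J.T

mu = np.array([0.3, 1.9, 0.0, 0.0, 4.0, 3.0])            # hypothetical means (rad, m)
sigma = np.array([0.02, 0.02, 0.05, 0.05, 0.05, 0.05])   # hypothetical stds (rad, m)
cov_lin = linearized_output_cov(mu, sigma)

rng = np.random.default_rng(1)
samples = mu[:, None] + sigma[:, None] * rng.standard_normal((6, 200_000))
cov_mc = np.cov(intersection(samples))

for name, cov in (("computed", cov_lin), ("measured", cov_mc)):
    s = np.sqrt(np.diag(cov))
    print(name, "std:", s, "rho:", cov[0, 1] / (s[0] * s[1]))
```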

Inverse Solution

An inverse solution cannot be computed uniquely because the 6-input 2-output system is underdetermined. Therefore, the variances of the positions and orientations of robot and human, or of two robots (input), cannot be uniquely inferred from required variances at the intersection position (output).

Fuzzy Approach

The steps of the fuzzy approach are very similar to those of the 2-input case:

- define operating points,

- compute the output standard deviations and the correlation coefficient at these operating points from (33),

- formulate fuzzy rules according to (25) and (26).

The number n of rules is computed as follows: with k fuzzy terms per input and m inputs, a complete rule base contains n = k^m rules.

This number of rules is unacceptably high. To limit n to an adequate number, one has to limit the number of inputs and/or fuzzy terms by identifying the most influential variables, either heuristically or systematically [16]. This, however, is beyond the scope of this paper.
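The growth of the rule base can be made concrete with a short calculation, assuming a complete grid rule base (one rule per combination of fuzzy terms) and, hypothetically, six terms per input as in the 60° sector example of Section 2.2:

```python
def full_rule_count(n_terms: int, n_inputs: int) -> int:
    """Rules in a complete grid rule base: one rule per combination of input terms."""
    return n_terms ** n_inputs

# Hypothetical: six fuzzy terms per input.
for n_inputs in (2, 6):
    print(f"{n_inputs} inputs -> {full_rule_count(6, n_inputs)} rules")
# 2 inputs -> 36 rules
# 6 inputs -> 46656 rules
```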

4. Sigma-Point-Transformation

In the following, the estimation of the standard deviations of possible intersection coordinates of trajectories for both robot/robot and human/robot combinations by means of the sigma-point technique is discussed. The method is based on the unscented Kalman filter (UKF) technique, with the difference that the intersections cannot be measured directly but can only be predicted/computed. Nevertheless, it is possible to compute the variance of predicted events, such as possible collisions or planned rendezvous situations, by directly propagating statistical parameters, the sigma-points, through the nonlinear geometrical relation that results from the crossing of two trajectories. For the special case considered here, let the input vector consist of the two orientation angles and the output vector of the two intersection coordinates. The nonlinear relation between input and output is given by (34).

For the discrete case, we obtain a state equation containing the system noise and an equation for the measured output containing the measurement noise and the output nonlinearity. Furthermore, the state at time k is described by its mean and covariance matrix, starting from an initial state with known mean.

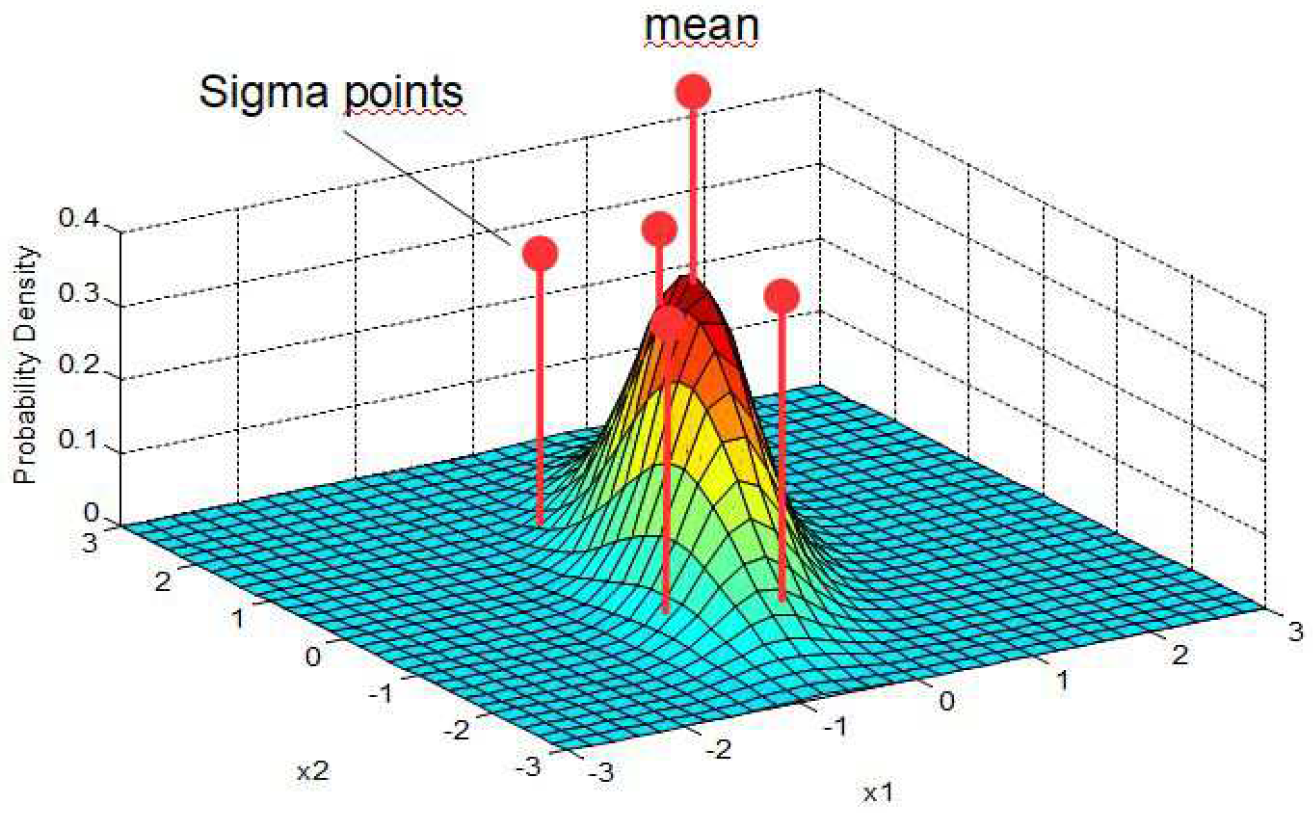

Selection of Sigma-Points

Sigma-points are a deterministically selected set of sample points that represents a given error distribution of a random variable. They lie symmetrically around the mean along the directions given by the columns of a matrix square root of the covariance matrix; for a diagonal covariance matrix, these directions are its principal axes. The height of each sigma-point (see Figure 7) represents its relative weight, which is used in the following selection procedure.

Let the set of sigma-points consist of 2n+1 points, where n is the dimension of the state space.

The sigma-points are selected according to (38), subject to a condition involving two scaling factors, for which usual choices are given in the UKF literature [11,12,13]. Each sigma-point offset is a row/column of the matrix square root of the scaled covariance matrix. The square root S of a matrix P is the solution of P = S S^T, which is obtained by Cholesky factorization.
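The selection step can be sketched in Python/NumPy. The sketch uses the widely used scaled unscented transformation with parameters alpha, beta and kappa and the defaults alpha = 10⁻³, beta = 2, kappa = 0 that are common in the UKF literature; the values used in this paper cannot be recovered from the text, so these should be treated as assumptions. The Cholesky factor S satisfies S Sᵀ = (n + λ)P, and its columns give the offsets of the 2n + 1 sigma-points.

```python
import numpy as np

def sigma_points(mean, cov, alpha=1e-3, beta=2.0, kappa=0.0):
    """Scaled sigma-point set: 2n+1 points (rows) with mean and covariance weights.

    lam = alpha^2 (n + kappa) - n; the Cholesky factor S satisfies
    S @ S.T = (n + lam) * cov, and its columns are the sigma-point offsets.
    """
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    n = mean.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * cov)        # lower-triangular square root
    X = np.vstack([mean, mean + S.T, mean - S.T])  # chi_0, chi_1..chi_n, chi_n+1..chi_2n
    wm = np.full(2 * n + 1, 0.5 / (n + lam))       # weights of the outer points
    wc = wm.copy()
    wm[0] = lam / (n + lam)                        # central weight for the mean
    wc[0] = wm[0] + 1.0 - alpha**2 + beta          # central weight for the covariance
    return X, wm, wc

# The weighted sigma-points reproduce the mean and covariance they were built from.
mean = np.array([0.3, 1.9])                        # hypothetical orientations (rad)
P = np.array([[0.02**2, 0.0], [0.0, 0.03**2]])
X, wm, wc = sigma_points(mean, P)
dX = X - wm @ X
print(wm @ X, (wc[:, None] * dX).T @ dX)
```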

Model Forecast Step

To continue with the UKF, the next step is the model forecast. Here, the sigma-points are propagated through the nonlinear process model, where the superscript f denotes "forecast". From these transformed and forecast sigma-points, the mean and covariance of the forecast state are obtained.

Measurement update step

In this step, the sigma-points are propagated through the nonlinear observation model, from which we obtain the mean and covariance of the output (innovation covariance) and the cross-covariance.

Data Assimilation Step

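In this step, called data assimilation, the forecast information is combined with the new information from the output, from which we obtain the posterior state estimate by means of the Kalman filter gain. The gain is given by the cross-covariance multiplied by the inverse of the innovation covariance, and the posterior covariance is updated accordingly.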
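The measurement update and data assimilation steps can be sketched as follows (Python/NumPy). The sketch assumes additive measurement noise with covariance R and uses the common scaled sigma-point weights; the function names are hypothetical and this is not the MATLAB implementation of [17]. For a linear observation model the result coincides with the ordinary Kalman filter update, which serves as a consistency check.

```python
import numpy as np

def sigma_points(mean, cov, alpha=1e-3, beta=2.0, kappa=0.0):
    """Scaled sigma-points (rows) with mean weights wm and covariance weights wc."""
    n = mean.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * cov)
    X = np.vstack([mean, mean + S.T, mean - S.T])
    wm = np.full(2 * n + 1, 0.5 / (n + lam))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + 1.0 - alpha**2 + beta
    return X, wm, wc

def ukf_update(x_f, P_f, y, h, R):
    """Measurement update and data assimilation (additive measurement noise R)."""
    X, wm, wc = sigma_points(x_f, P_f)
    Y = np.array([h(x) for x in X])         # sigma-points through observation model
    y_hat = wm @ Y                          # predicted output mean
    dX, dY = X - x_f, Y - y_hat
    P_yy = (wc[:, None] * dY).T @ dY + R    # innovation covariance
    P_xy = (wc[:, None] * dX).T @ dY        # cross-covariance
    K = P_xy @ np.linalg.inv(P_yy)          # Kalman gain
    return x_f + K @ (y - y_hat), P_f - K @ P_yy @ K.T

# Consistency check with a linear observation model (hypothetical numbers).
H = np.array([[1.0, 0.5], [0.0, 2.0]])
x_f, P_f = np.array([0.3, 1.9]), np.diag([0.02**2, 0.03**2])
R, y = np.diag([0.01**2, 0.01**2]), np.array([1.3, 3.7])
x_post, P_post = ukf_update(x_f, P_f, y, lambda x: H @ x, R)

S_kf = H @ P_f @ H.T + R                    # ordinary Kalman filter for comparison
K_kf = P_f @ H.T @ np.linalg.inv(S_kf)
print(np.allclose(x_post, x_f + K_kf @ (y - H @ x_f)),
      np.allclose(P_post, P_f - K_kf @ S_kf @ K_kf.T))
```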

Usually it is sufficient to compute the mean and variance of the output of the nonlinear static system (34). In this case, the computation can stop at eq. (42): it suffices to calculate the transformed sigma-points and to compute the corresponding output means and variances from (41) and (42). For this purpose, it is enough to substitute the input covariance matrix into (38) instead of the state covariance matrix. One advantage of the sigma-point approach over statistical linearization is its easy scalability to multi-dimensional random variables.
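When only the static transformation is needed, the sigma-points are simply pushed through the intersection geometry and recombined. The Python/NumPy sketch below does this for the two-input case and compares the resulting standard deviations with a Monte Carlo estimate ("measured"). It is not the implementation of [17]; the heading convention (−sin φ, cos φ), all numbers, and the choice alpha = 1, beta = 2, kappa = 0 (which places the outer sigma-points at √2 standard deviations and avoids large negative weights) are assumptions.

```python
import numpy as np

P_H, P_R = np.array([0.0, 0.0]), np.array([4.0, 3.0])   # hypothetical positions (m)

def intersection(phi):
    """phi = (phi_h, phi_r) -> (x_c, y_c); each component may be a sample array.

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    phi_h, phi_r = phi
    b_h = np.cos(phi_h) * P_H[0] + np.sin(phi_h) * P_H[1]
    b_r = np.cos(phi_r) * P_R[0] + np.sin(phi_r) * P_R[1]
    d = np.sin(phi_r - phi_h)
    return np.stack([(np.sin(phi_r) * b_h - np.sin(phi_h) * b_r) / d,
                     (np.cos(phi_h) * b_r - np.cos(phi_r) * b_h) / d])

def sigma_points(mean, cov, alpha, beta=2.0, kappa=0.0):
    n = mean.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * cov)
    X = np.vstack([mean, mean + S.T, mean - S.T])
    wm = np.full(2 * n + 1, 0.5 / (n + lam))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + 1.0 - alpha**2 + beta
    return X, wm, wc

def unscented_transform(f, mean, cov, alpha=1.0):
    """Propagate sigma-points through the static map f; return output mean and covariance."""
    X, wm, wc = sigma_points(mean, cov, alpha)
    Y = np.array([f(x) for x in X])
    y_mean = wm @ Y
    dY = Y - y_mean
    return y_mean, (wc[:, None] * dY).T @ dY

mu, sigma = np.array([0.3, 1.9]), np.array([0.02, 0.02])   # hypothetical (rad)
y_mean, P_y = unscented_transform(intersection, mu, np.diag(sigma**2))

rng = np.random.default_rng(2)
samples = intersection(mu[:, None] + sigma[:, None] * rng.standard_normal((2, 200_000)))
print("computed std:", np.sqrt(np.diag(P_y)))
print("measured std:", samples.std(axis=1))
```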

For the intersection problem there are 2 cases:

1. 2 inputs, 2 outputs (2 orientation angles, 2 crossing coordinates)

2. 6 inputs, 2 outputs (2 orientation angles and 4 position coordinates, 2 crossing coordinates)

For the statistical linearization (method 1), the step from the 2-input 2-output case to the (6,2) case is computationally more costly than for the sigma-point approach (method 2) (see eqs. (20)–(24) versus eqs. (37) and (40)–(42)).

Sigma-Points - Fuzzy Solutions

In order to lower the computing effort, TS fuzzy interpolation can be applied, as shown in the following. In the 2-dimensional problem, the input sigma-points are propagated through a nonlinear function. For the special case of the intersection, the input sigma-points consist of the two orientation angles, and their propagation through the nonlinear function leads to output sigma-points consisting of the two intersection coordinates. According to (5), this special nonlinear function is the product of a matrix that depends nonlinearly on the orientation angles (6) and the position vector.

The fuzzification aims at this matrix. Applied to the sigma-points, we get a TS fuzzy model described by rules whose premises are fuzzy terms for the orientation angles and whose consequents are matrices computed at predefined values of the two angles. This set of rules yields a weighted sum of the rule matrices, with normalized weighting functions whose values lie between 0 and 1 and sum to one. The advantage of this approach is that the rule matrices can be computed offline. The mean and covariance matrix of the output are then calculated from the fuzzy-propagated sigma-points, and the variances of the intersection coordinates are obtained from this covariance matrix.

Inverse Solution

The inverse solution for the sigma-point approach is much easier to obtain than that for the statistical linearization method. Starting from eq. (34), we build the inverse function, provided that it exists. Then the output covariance matrix is defined in correspondence to the required variances of the intersection coordinates. The next steps correspond to equations (34)–(42). The position vector is assumed to be known. The inversion of the nonlinear function requires its linearization and a starting point to obtain stable convergence to the inverse. The result is the mean and the covariance at the input. A reliable inversion is only possible for the 2-input 2-output case.
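A sketch of the inverse sigma-point solution for the 2-input 2-output case follows (Python/NumPy). As in the text, the positions are assumed known and the nonlinear map is inverted by linearizing it around a starting point, here with Newton's method and a finite-difference Jacobian; the sigma-points of the required output distribution are then propagated through this numerical inverse. The heading convention (−sin φ, cos φ), the nominal angles, the sigma-point parameters (alpha = 1, beta = 2, kappa = 0) and the function names are hypothetical, and the required output statistics are generated by a forward pass so that the round trip can be checked.

```python
import numpy as np

P_H, P_R = np.array([0.0, 0.0]), np.array([4.0, 3.0])   # known positions (hypothetical)

def f(phi):
    """Intersection (x_c, y_c) of the paths for phi = (phi_h, phi_r).

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    n = np.array([[np.cos(phi[0]), np.sin(phi[0])], [np.cos(phi[1]), np.sin(phi[1])]])
    return np.linalg.solve(n, np.array([n[0] @ P_H, n[1] @ P_R]))

def f_inv(y, phi_start, tol=1e-12, max_iter=50):
    """Invert f by Newton's method, linearizing f around the current estimate."""
    phi = np.array(phi_start, dtype=float)
    for _ in range(max_iter):
        J = np.column_stack([(f(phi + e) - f(phi - e)) / 2e-7 for e in np.eye(2) * 1e-7])
        step = np.linalg.solve(J, f(phi) - y)
        phi -= step
        if np.linalg.norm(step) < tol:
            break
    return phi

def unscented_transform(g, mean, cov, alpha=1.0, beta=2.0, kappa=0.0):
    """Scaled unscented transform of (mean, cov) through the map g."""
    n = mean.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * cov)
    X = np.vstack([mean, mean + S.T, mean - S.T])
    wm = np.full(2 * n + 1, 0.5 / (n + lam))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + 1.0 - alpha**2 + beta
    Y = np.array([g(x) for x in X])
    y_mean = wm @ Y
    dY = Y - y_mean
    return y_mean, (wc[:, None] * dY).T @ dY

# Required output statistics, here generated by a forward pass for a round trip.
phi_nominal, sigma_phi = np.array([0.3, 1.9]), np.array([0.02, 0.03])
y_mean, P_y = unscented_transform(f, phi_nominal, np.diag(sigma_phi**2))

# Inverse: output sigma-points through f_inv, with Newton started at phi_nominal.
phi_mean, P_phi = unscented_transform(lambda y: f_inv(y, phi_nominal), y_mean, P_y)
print(phi_mean, np.sqrt(np.diag(P_phi)))
```

Newton's method only converges reliably for outputs near the starting point, which is consistent with the remark that a suitable starting point is needed.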

6-Inputs 2-Outputs

This case works exactly like the 2-input 2-output case along eqs. (34)–(42), because the computation of the sigma-points (38)–(40) and their propagation through the nonlinearity automatically account for the input and output dimensions.
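The dimension independence can be illustrated by running the same unscented transform with all six inputs (two orientation angles and four position coordinates). In the Python/NumPy sketch below, only the input mean and covariance differ from a two-input call; the number of sigma-points (2n + 1 = 13) follows from the input dimension. The heading convention (−sin φ, cos φ), the values, and alpha = 1, beta = 2, kappa = 0 are assumptions, and the Monte Carlo covariance plays the role of the measured value.

```python
import numpy as np

def intersection(z):
    """z = (phi_h, phi_r, x_h, y_h, x_r, y_r); entries may be sample arrays.

    Assumption: an agent with angle phi moves along (-sin(phi), cos(phi)).
    """
    phi_h, phi_r, x_h, y_h, x_r, y_r = z
    b_h = np.cos(phi_h) * x_h + np.sin(phi_h) * y_h
    b_r = np.cos(phi_r) * x_r + np.sin(phi_r) * y_r
    d = np.sin(phi_r - phi_h)
    return np.stack([(np.sin(phi_r) * b_h - np.sin(phi_h) * b_r) / d,
                     (np.cos(phi_h) * b_r - np.cos(phi_r) * b_h) / d])

def unscented_transform(f, mean, cov, alpha=1.0, beta=2.0, kappa=0.0):
    """Scaled unscented transform; the input dimension n is read from mean."""
    n = mean.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * cov)
    X = np.vstack([mean, mean + S.T, mean - S.T])          # 2n + 1 sigma-points
    wm = np.full(2 * n + 1, 0.5 / (n + lam))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + 1.0 - alpha**2 + beta
    Y = np.array([f(x) for x in X])
    y_mean = wm @ Y
    dY = Y - y_mean
    return y_mean, (wc[:, None] * dY).T @ dY

mu = np.array([0.3, 1.9, 0.0, 0.0, 4.0, 3.0])            # hypothetical means (rad, m)
sigma = np.array([0.02, 0.02, 0.05, 0.05, 0.05, 0.05])   # hypothetical stds (rad, m)
y_mean, P_y = unscented_transform(intersection, mu, np.diag(sigma**2))

rng = np.random.default_rng(3)
P_mc = np.cov(intersection(mu[:, None] + sigma[:, None] * rng.standard_normal((6, 200_000))))
print("computed:\n", P_y, "\nmeasured:\n", P_mc)
```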

5. Simulation Results

The following simulations show the uncertainties of predicted intersections obtained with statistical linearization and with the sigma-point transformation. For comparison, identical parameters are used for both methods (see Figure 2). The positions (in m) and orientations of robot and human are fixed and are corrupted by Gaussian noise with prescribed standard deviations (std) for the orientations (in rad) and the positions.

Statistical linearization

Table 1 shows a comparison of the non-fuzzy method with the fuzzy approach using sectors of different sizes of the unit circle for the orientations of robot and human. The notation in Table 2 distinguishes between computed std and measured std. As expected, higher resolutions lead to a better match between the fuzzy and the analytical approach. Furthermore, the match between measured and calculated values depends on the form of the membership functions (MFs). For example, for low input standard deviations (0.02 rad), Gaussian membership functions show a better match, whereas higher input standard deviations (0.05 rad ≈ 2.9°) require Gaussian bell-shaped membership functions; this is due to their different smoothing effects (see columns 4 and 5 in Table 2).

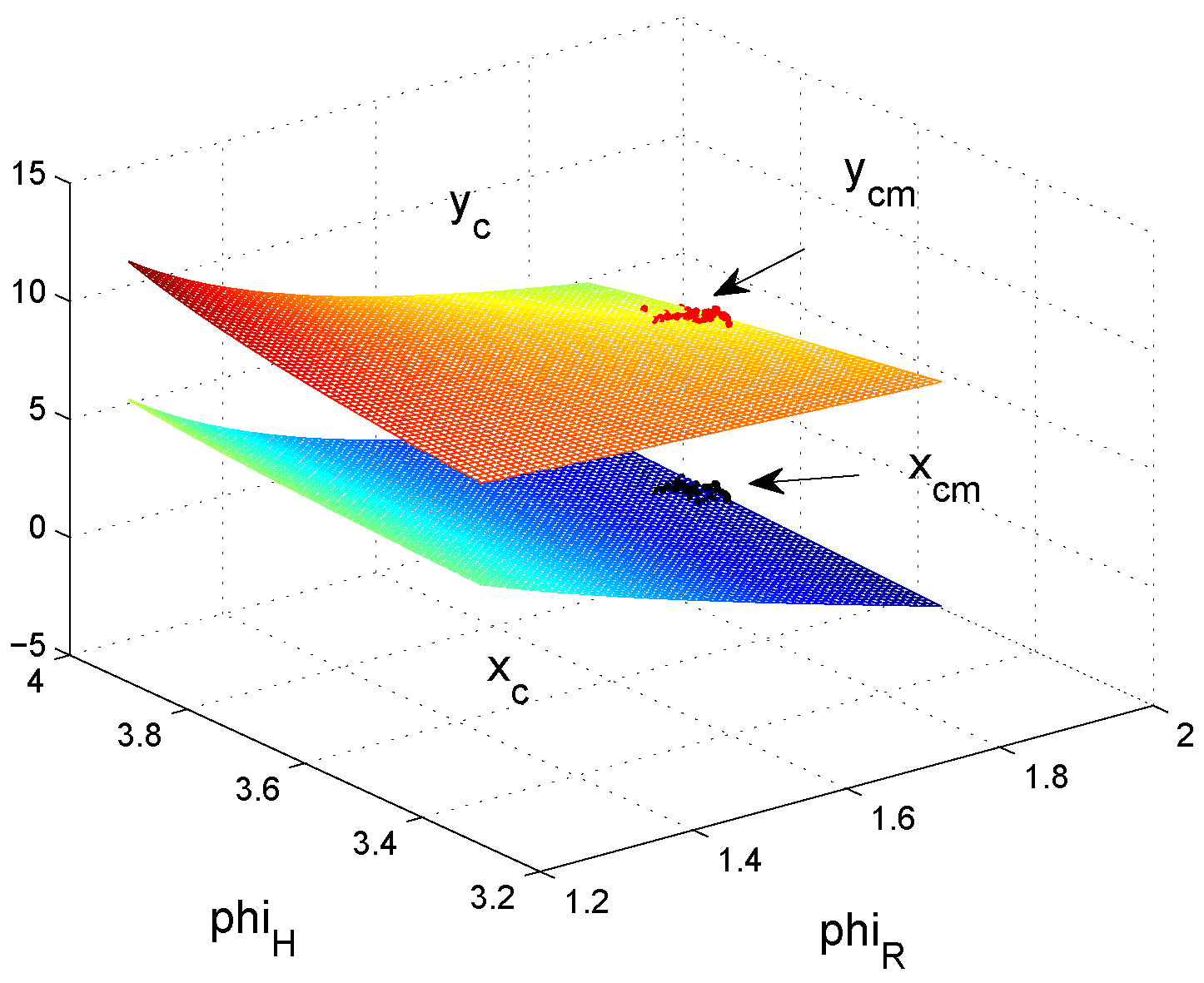

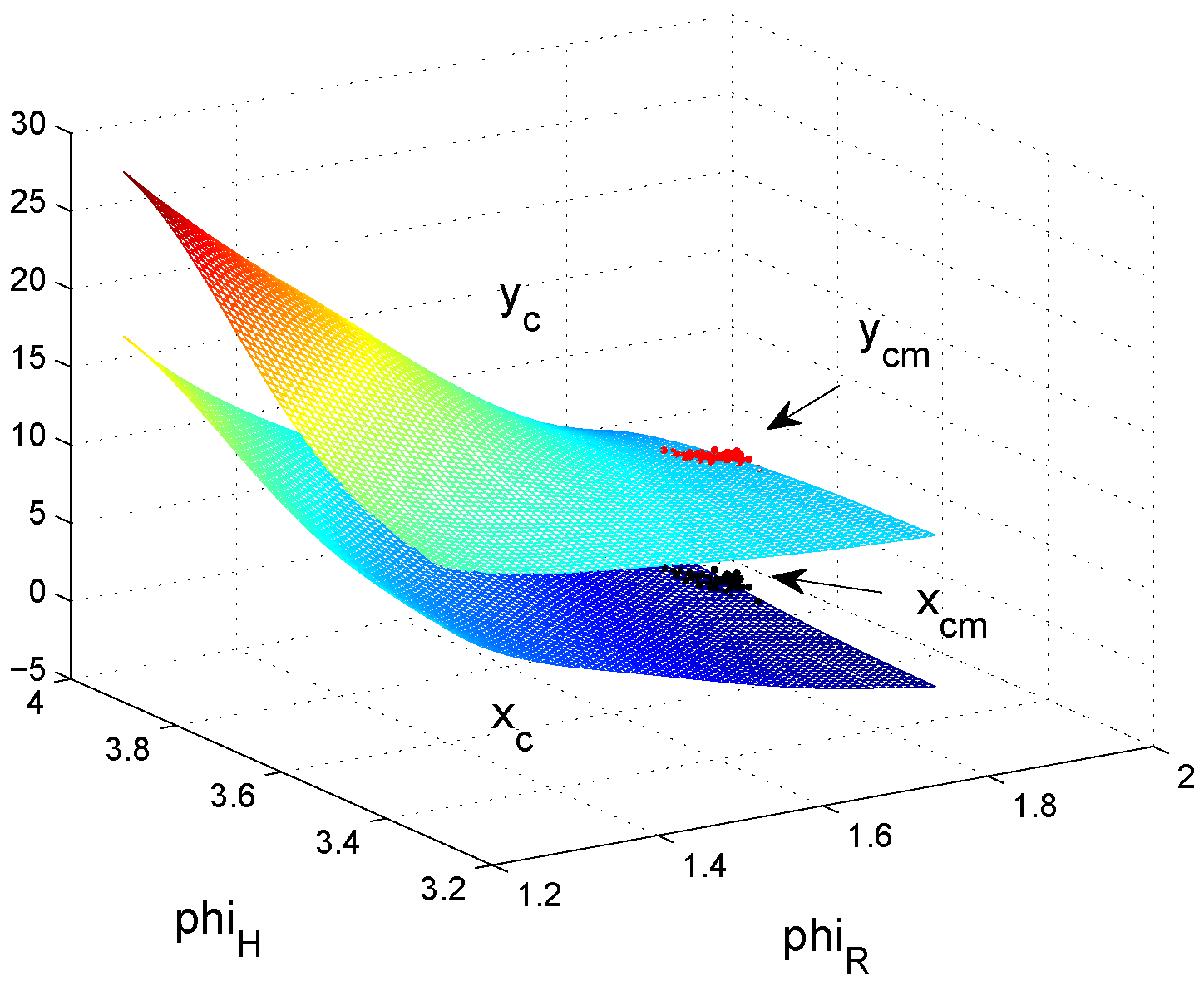

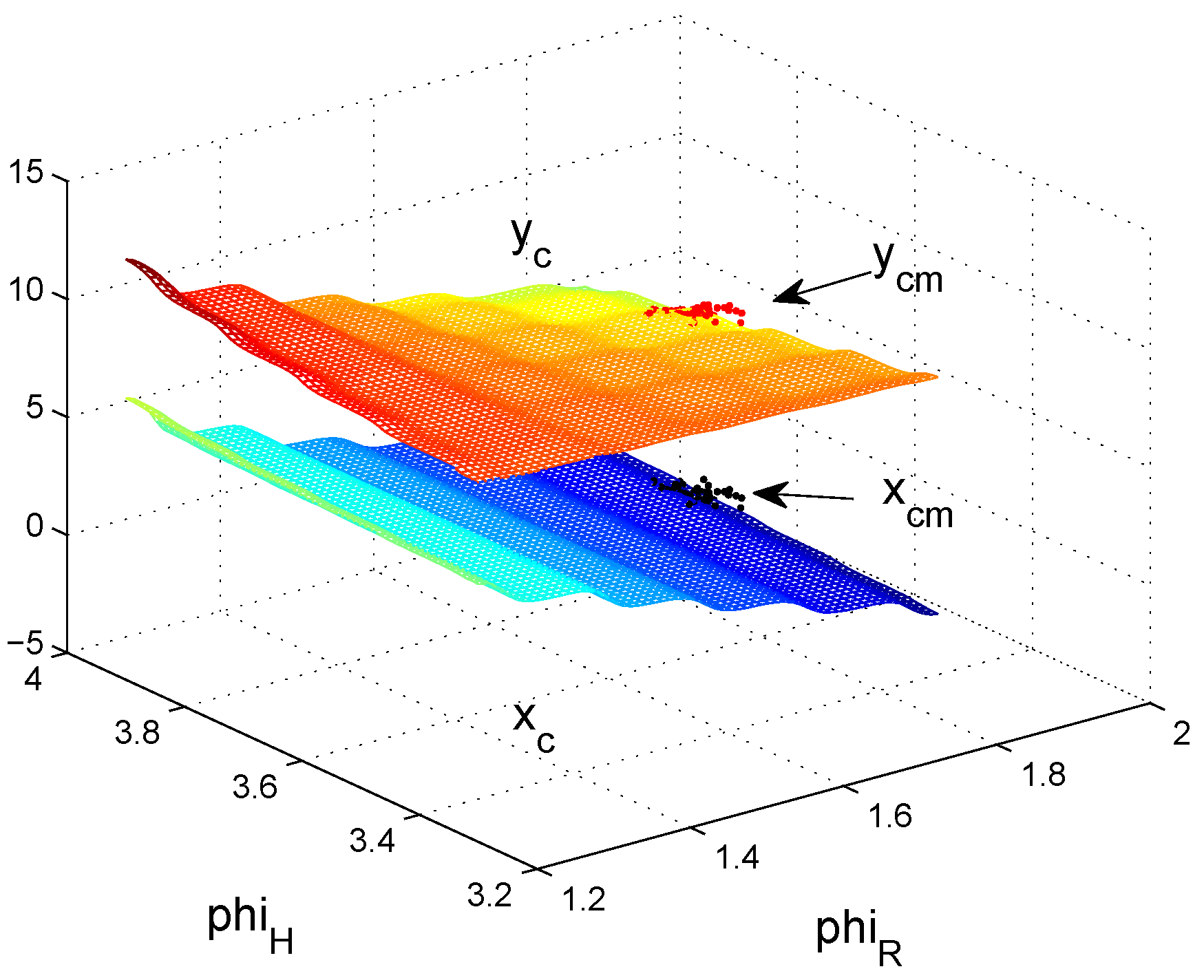

Figure 8, Figure 9 and Figure 10 compare control surfaces with the corresponding measurements of the intersection (black and red dots). Figure 8 shows the control surfaces of the two intersection coordinates for the non-fuzzy case (4). Control surfaces of the fuzzy approximations (7) for two different sector sizes are shown in Figure 9 and Figure 10. The coarser resolution (Figure 9) shows a very large deviation from the non-fuzzy approach (Figure 8), which decreases for the finer resolution (Figure 10). This explains the large differences between measured and computed standard deviations and correlation coefficients, in particular for coarse sector sizes.

Sigma-Point method

2-inputs - 2-outputs:

The simulation of the sigma-point method is based on a MATLAB implementation of an unscented Kalman filter [17]. The first example deals with the 2-input 2-output case, in which only the orientations are taken into account, while the disturbances of the positions of robot and human are not part of the sigma-point calculation. The computed and measured covariances show a very good match, and the same holds for the standard deviations. A comparison with the statistical linearization shows a good match as well (see Table 2, rows 1 and 2).

A view at the sigma-points presents the following results:

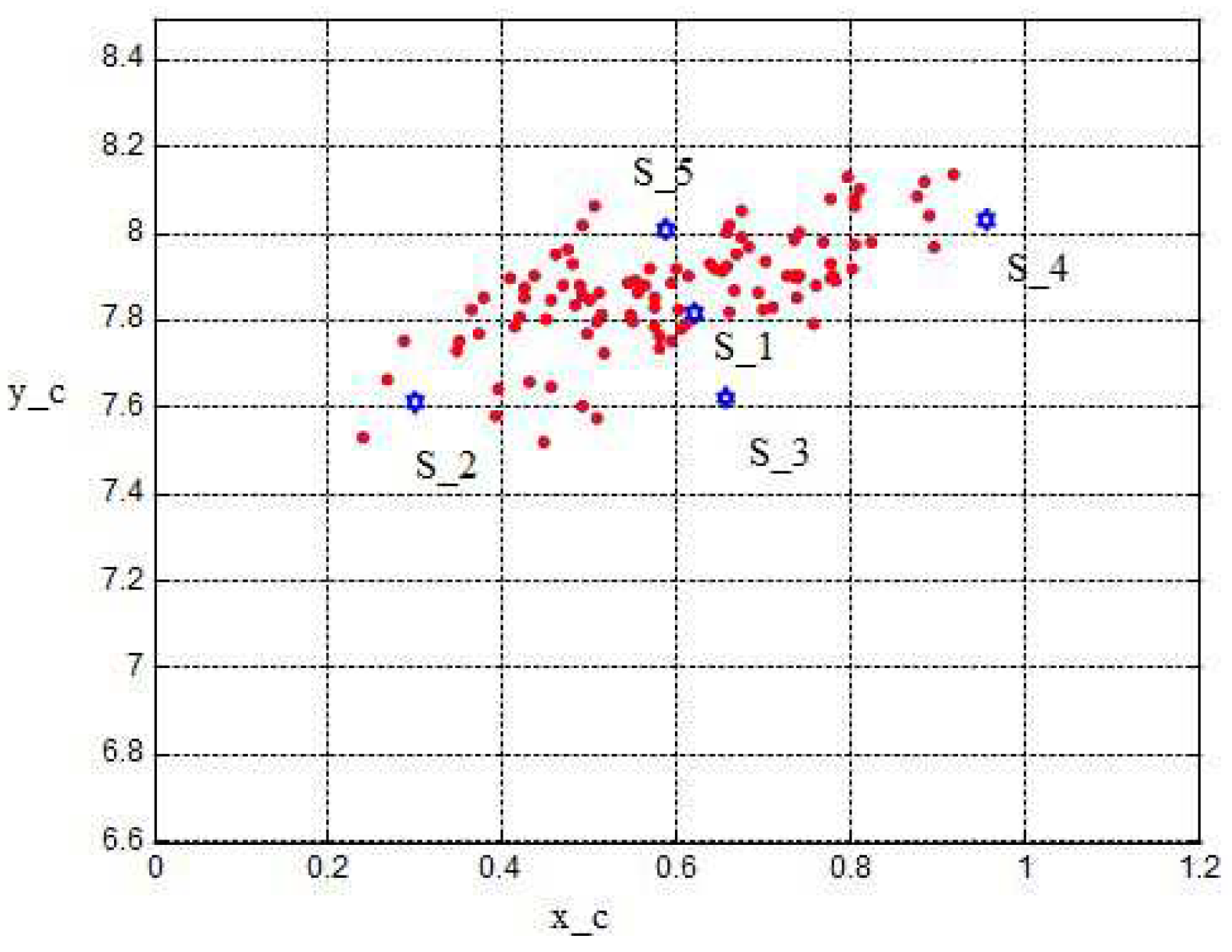

Figure 11 shows the two-dimensional distribution of the orientation angles

,

and the corresponding sigma-points

where

denotes the mean value.

Figure 12 shows the two-dimensional distribution of the intersection coordinates

with the sigma-points

.

denotes the mean value and

are distributed in such a way that the

are transformed into

,

. From both figures an optimal selection of both

and

can be observed which results in a good match of the computed and measured standard deviations

.

6-inputs - 2-outputs:

The 6-input 2-output example shows that additionally considering the four input position coordinates, with their position standard deviations, leads to similar results, both between computed and measured covariances and between the sigma-point method and statistical linearization. Table 2 shows the corresponding computed and measured values of the covariance submatrix for the output positions only.

2-inputs 2-outputs, direct and inverse solution

The next example shows the computation of the direct and the inverse case. In the direct case, we again obtain similar computed and measured covariances and, with these, standard deviations. The inverse solution yields values close to the original inputs (orientations of human and robot) (see Table 2). Simulations of the fuzzy versions showed the same agreement and are therefore omitted here.

2-inputs 2-outputs, Moving robot/human

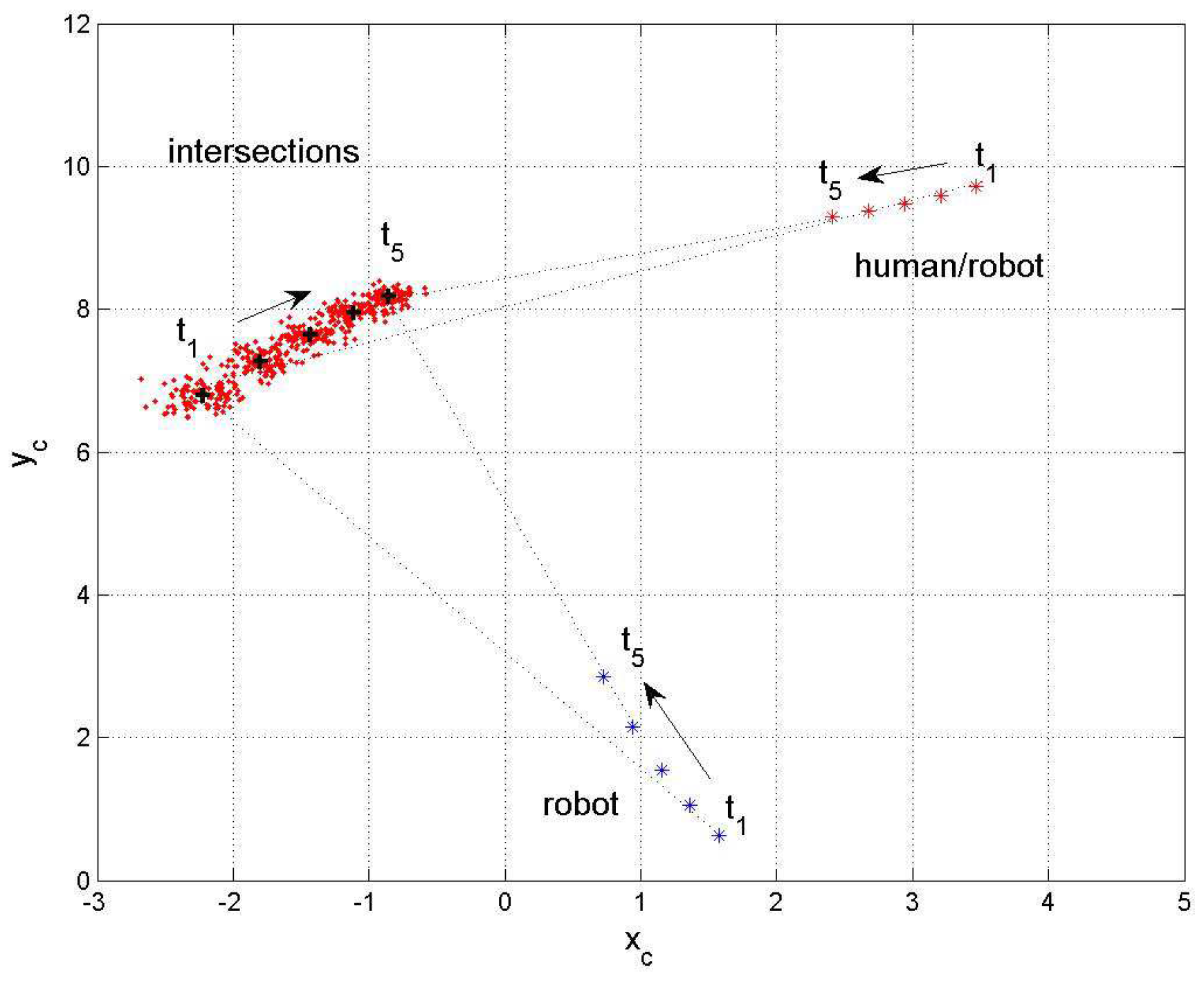

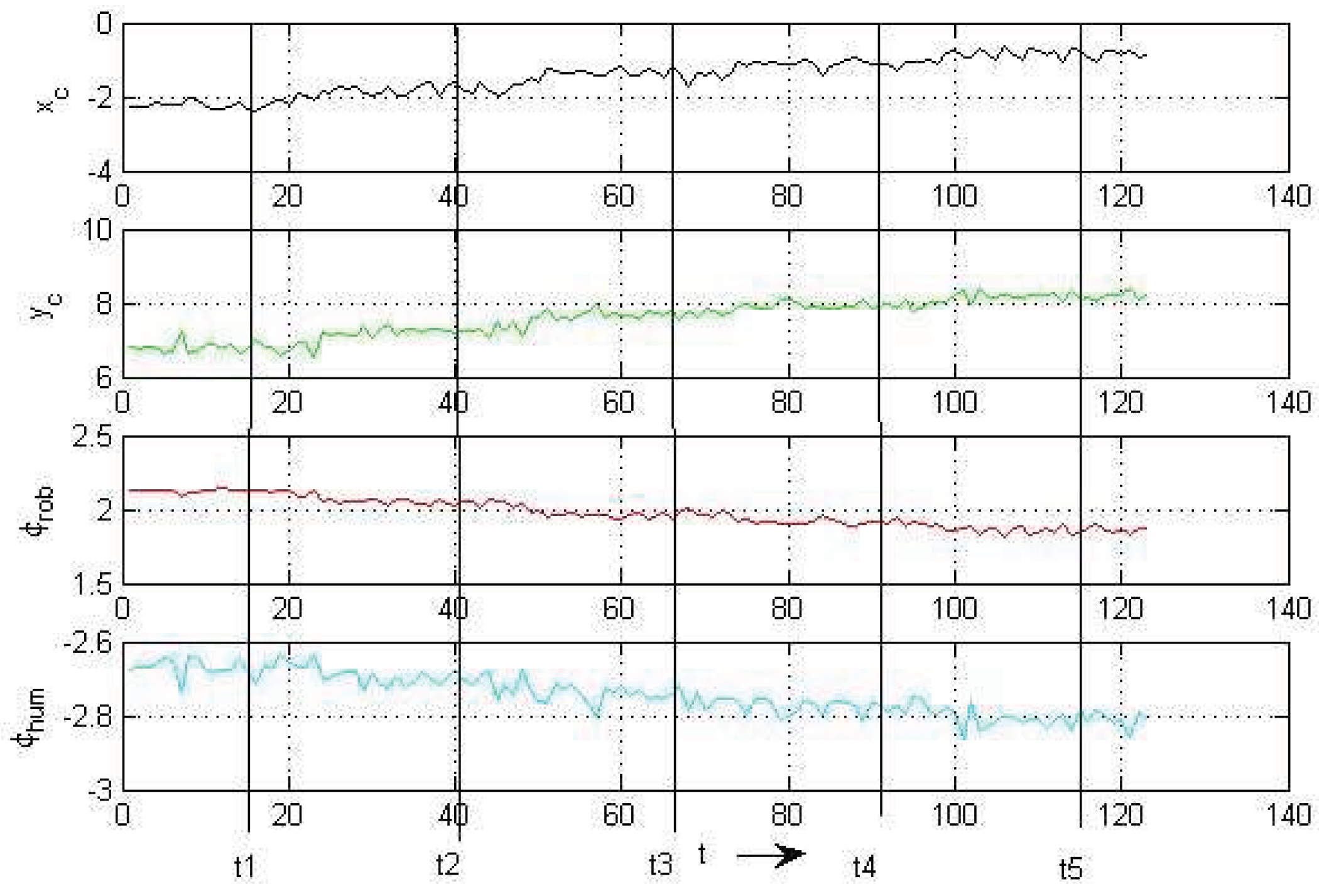

The next example deals with robot and human in motion. Figure 13 shows the positions and orientations of robot and human at selected time steps and the development of the corresponding intersections. Figure 14 shows the corresponding time plot. The selected time steps are 1 s apart, which corresponds to 25 time steps of 0.04 s each. Robot and human start at given initial positions (in m) with given initial velocities, where k denotes the time step. The x components of the velocities of robot and human stay constant during the whole simulation, whereas the y components change by constant factors. The orientation angles start from initial values and change every second according to the direction of motion.

Both plots show the expected decrease of the output standard deviations as the distances of robot and human to the respective intersection decrease, and a good match between computed and measured values (see Table 3). With the information about the robot's distance from the expected intersection and the standard deviation at that intersection, it becomes possible to plan either an avoidance strategy or a mutual cooperation between robot and human.

6. Summary and Conclusions

This work addresses the prediction of encounter situations of mobile robots and human agents in shared areas by analyzing planned/intended trajectories in the presence of uncertainties and system and observation noise. In this context, the problem of trajectory intersections under system uncertainties and Gaussian noise in the position and orientation of the agents involved is discussed. The problem is addressed by two methods: the statistical linearization of distributions and the sigma-point transformation of distribution parameters. Positions and orientations of robot and human are corrupted by Gaussian noise represented by the parameters mean and standard deviation. The goal is to calculate the mean and standard deviation at the intersection via the nonlinear relation between the positions/orientations of robot and human on the one hand and the position of the intersection of their intended trajectories on the other hand.

This analysis is realized by statistical linearization of the nonlinear relation between the statistics of robot and human (input) and the statistics of the intersection (output). The results are the mean and standard deviation of the intersection as functions of the mean and standard deviation of the positions and orientations of robot and human. This work is first carried out for 2-input 2-output relations (2 orientations of robot/human, 2 intersection coordinates) and then for 6-input 2-output relations (2 orientations and 4 position coordinates of robot/human, 2 intersection coordinates). These cases were extended to fuzzy versions by different Takagi-Sugeno (TS) fuzzy approximations and compared with the non-fuzzy case. With a sufficiently fine resolution, the approximation is as accurate as the original non-fuzzy version. An inverse solution is derived for the 2-input 2-output case, but not for the 6-input 2-output case, because of the underdetermined nature of its differential input-output relation.

The sigma-point transformation propagates distribution parameters, the sigma-points, directly through nonlinearities. The transformed sigma-points are then converted into the distribution parameters mean and covariance matrix. The sigma-point transformation is closely connected to the unscented Kalman filter, which is used in the example of robot and human in motion. The special feature of this example is a computed virtual system output ("observation"), the intersection of two intended trajectories, whose uncertainty is the sum of the transformed position/orientation noise and the computational uncertainty from the fuzzy approximation. Overall, the computed and measured covariances show a very good match, and the comparison with statistical linearization shows good agreement as well. Both the sigma-point transformation and the differential statistical linearization scale linearly to more than two variables; however, the computing costs of the differential approach are still higher than those of the sigma-point approach. In summary, predicting the accuracy of human and robot trajectory intersections with the methods presented in this work improves the performance of human-robot collaboration and human safety. In future work, these methods can be used for robot-human scenarios in factory workshops and for robots working in complex environments, such as rescue operations in cooperation with human operators.

Author Contributions

R.P. developed the methods and implemented the simulation programs. A.L. conceived the project and gave advice and support.

Funding

This work has received funding from the European Union's Horizon 2020 research and innovation programme under grant agreement No 101017274 (DARKO).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Khatib, O. Real-time obstacle avoidance for manipulators and mobile robots. In Proceedings of the IEEE International Conference on Robotics and Automation, St. Louis, MO, USA, 1985; pp. 500–505.

- Firl, J. Probabilistic Maneuver Recognition in Traffic Scenarios. Doctoral Dissertation, KIT Karlsruhe, Karlsruhe, Germany, 2014.

- Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Zhao, X.; Kim, T. Multiple Object Tracking: A Literature Review. arXiv 2014, arXiv:1409.7618; pp. 1–18.

- Chen, J.; Wang, C.; Chou, C. Multiple target tracking in occlusion area with interacting object models in urban environments. Robot. Auton. Syst. 2018, 103, 68–82.

- Kassner, M.; Patera, W.; Bulling, A. Pupil: An open source platform for pervasive eye tracking and mobile gaze-based interaction. In Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing; ACM, 2014; pp. 1151–1160.

- Bruce, J.; Wawer, J.; Vaughan, R. Human-Robot Rendezvous by Co-operative Trajectory Signals. 2015; pp. 1–2.

- Palm, R.; Lilienthal, A. Fuzzy logic and control in Human-Robot Systems: Geometrical and kinematic considerations. In Proceedings of the WCCI 2018: 2018 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE); IEEE, 2018; pp. 827–834.

- Palm, R.; Driankov, D. Fuzzy inputs. Fuzzy Sets Syst. (Special Issue on Modern Fuzzy Control) 1994, 315–335.

- Foulloy, L.; Galichet, S. Fuzzy control with fuzzy inputs. IEEE Trans. Fuzzy Syst. 2003, 11, 437–449.

- Banelli, P. Non-Linear Transformations of Gaussians and Gaussian-Mixtures with Implications on Estimation and Information Theory. IEEE Trans. Inf. Theory 2013.

- Julier, S.; Uhlmann, J.K. Unscented Filtering and Nonlinear Estimation. Proc. IEEE 2004, 92, 401–422.

- van der Merwe, R.; Wan, E.; Julier, S.J. Sigma-Point Kalman Filters for Nonlinear Estimation and Sensor-Fusion: Applications to Integrated Navigation. In Proceedings of the AIAA Guidance, Navigation and Control Conference, Providence, RI, USA, August 2004.

- Terejanu, G.A. Unscented Kalman Filter Tutorial; Department of Computer Science and Engineering, University at Buffalo, 2011.

- Palm, R.; Lilienthal, A. Uncertainty and Fuzzy Modeling in Human-Robot Navigation. In Proceedings of the 11th International Joint Conference on Computational Intelligence (IJCCI 2019), Vienna, Austria, 2019; pp. 296–305.

- Palm, R.; Lilienthal, A. Fuzzy Geometric Approach to Collision Estimation Under Gaussian Noise in Human-Robot Interaction. In IJCCI 2019; Studies in Computational Intelligence, Vol. 922; Springer: Cham, Switzerland, 2021; pp. 191–221.

- Schaefer, J.; Strimmer, K. A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat. Appl. Genet. Mol. Biol. 2005, 4, Article 32.

- Cao, Y. Learning the Unscented Kalman Filter. MATLAB Central File Exchange, 2021. Available online: https://www.mathworks.com/matlabcentral/fileexchange/18217-learning-the-unscented-kalman-filter


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).