Submitted: 29 March 2024

Posted: 01 April 2024

Abstract

Keywords:

1. Introduction

2. Materials and Methods

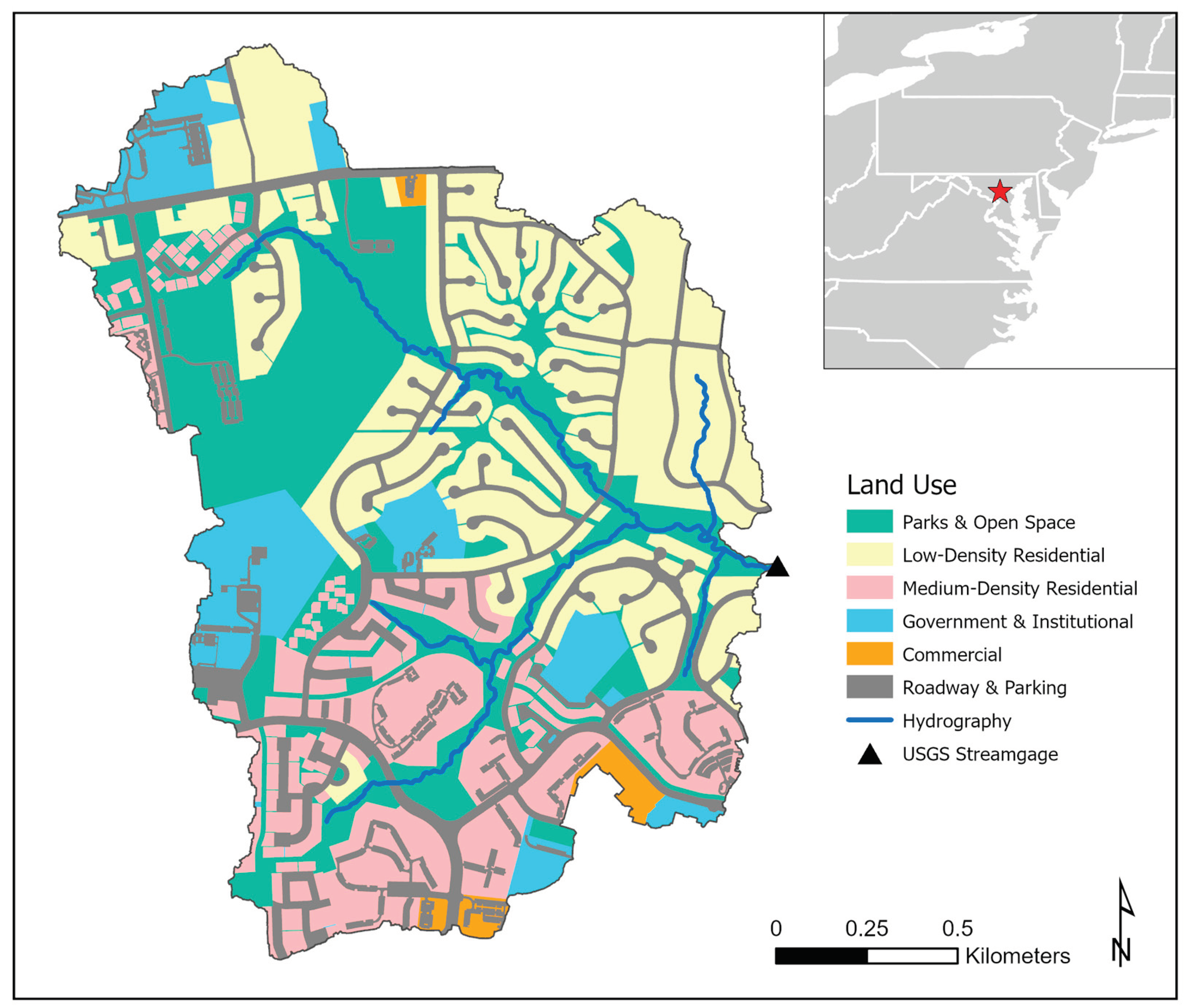

2.1. Study Area

2.2. Hydrologic Model Development and Calibration

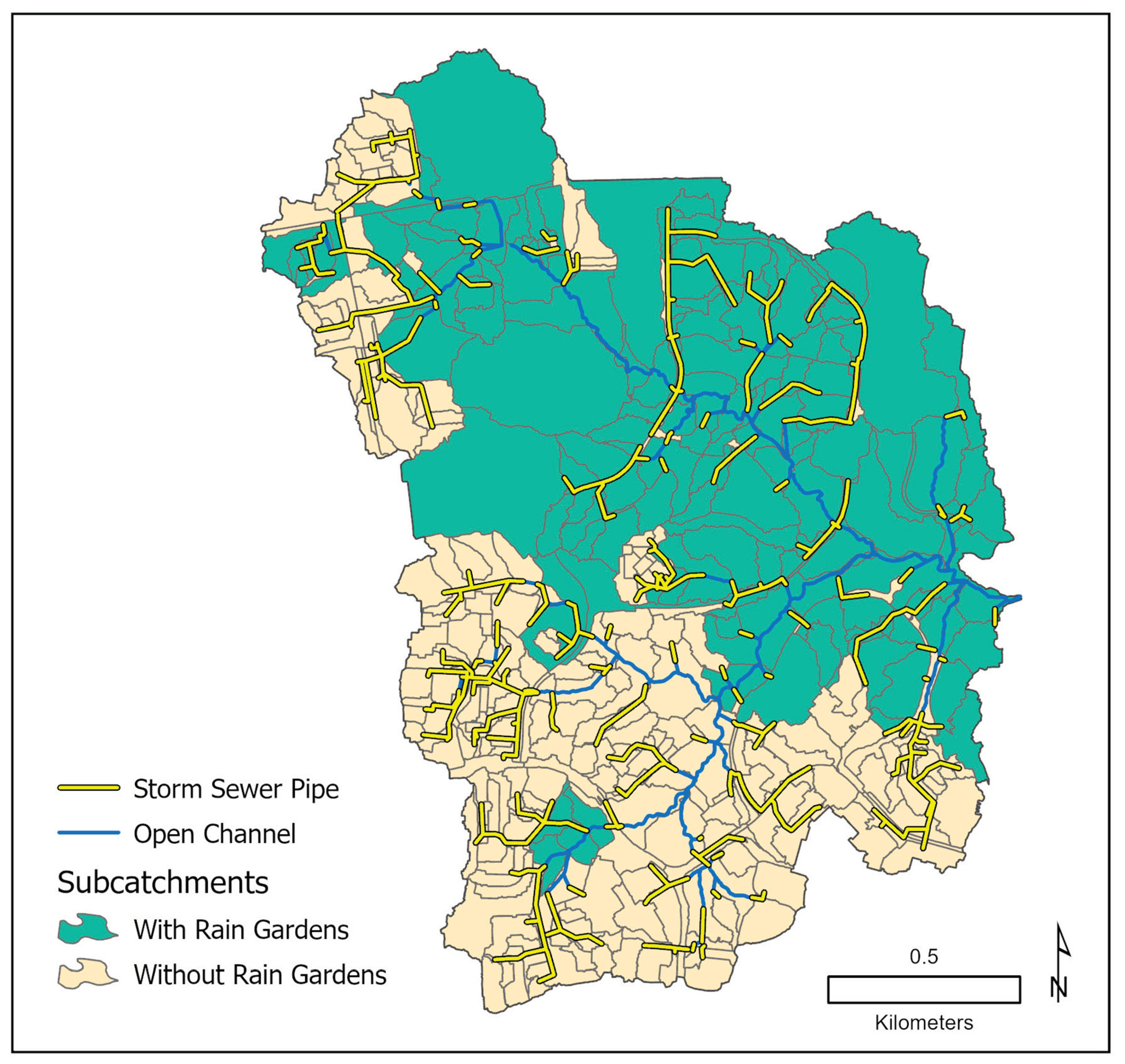

2.3. Rain Garden Scenario Simulations

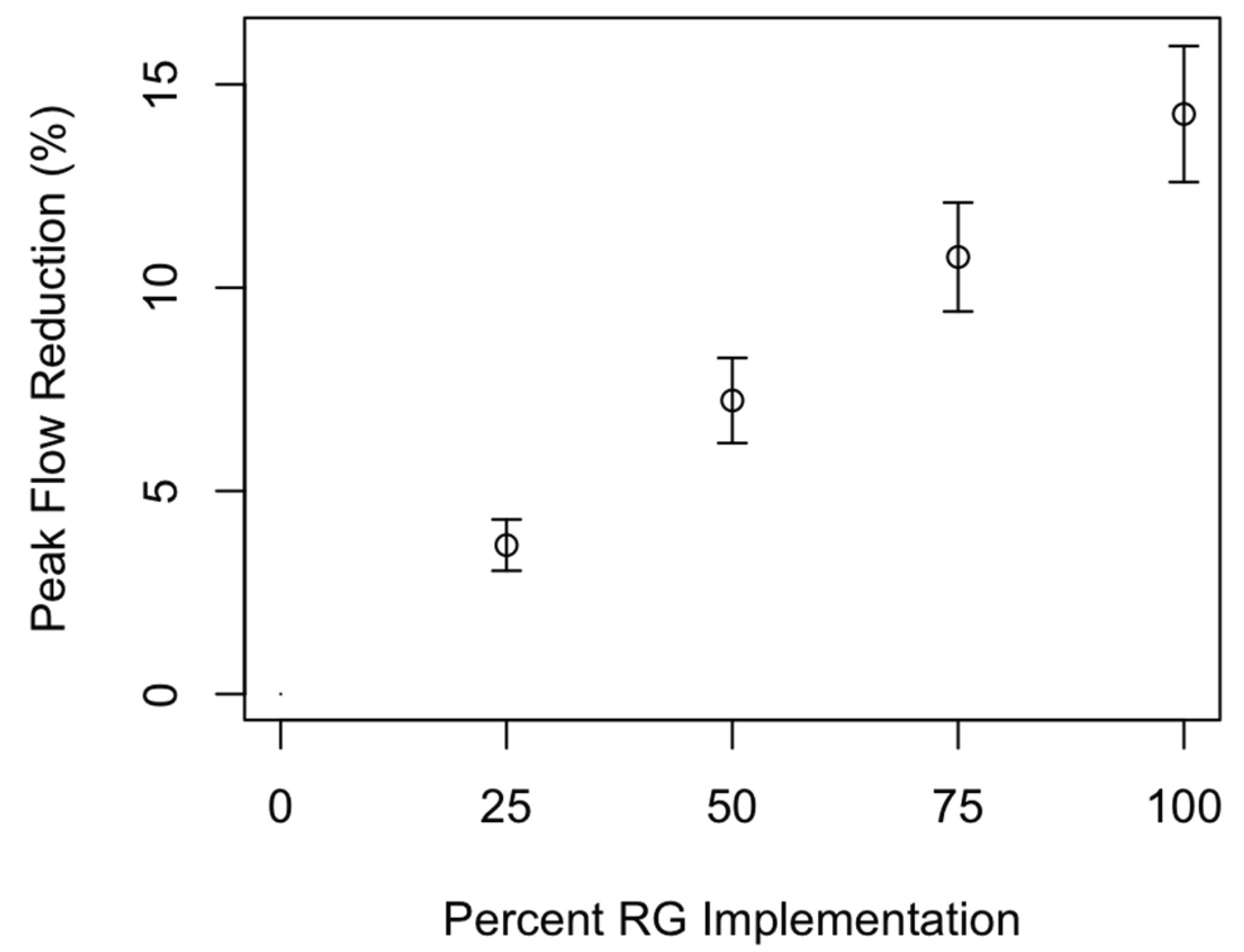

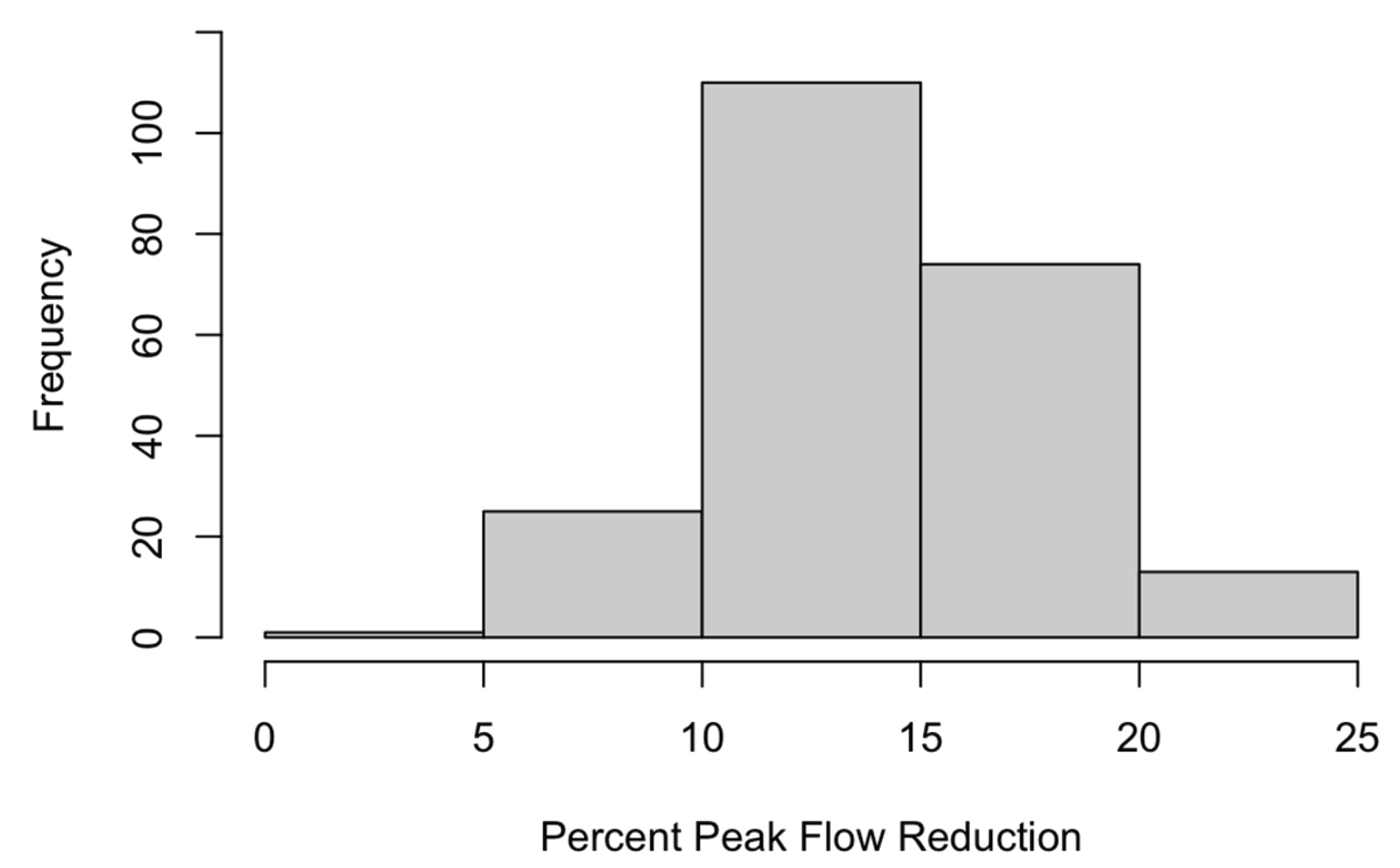

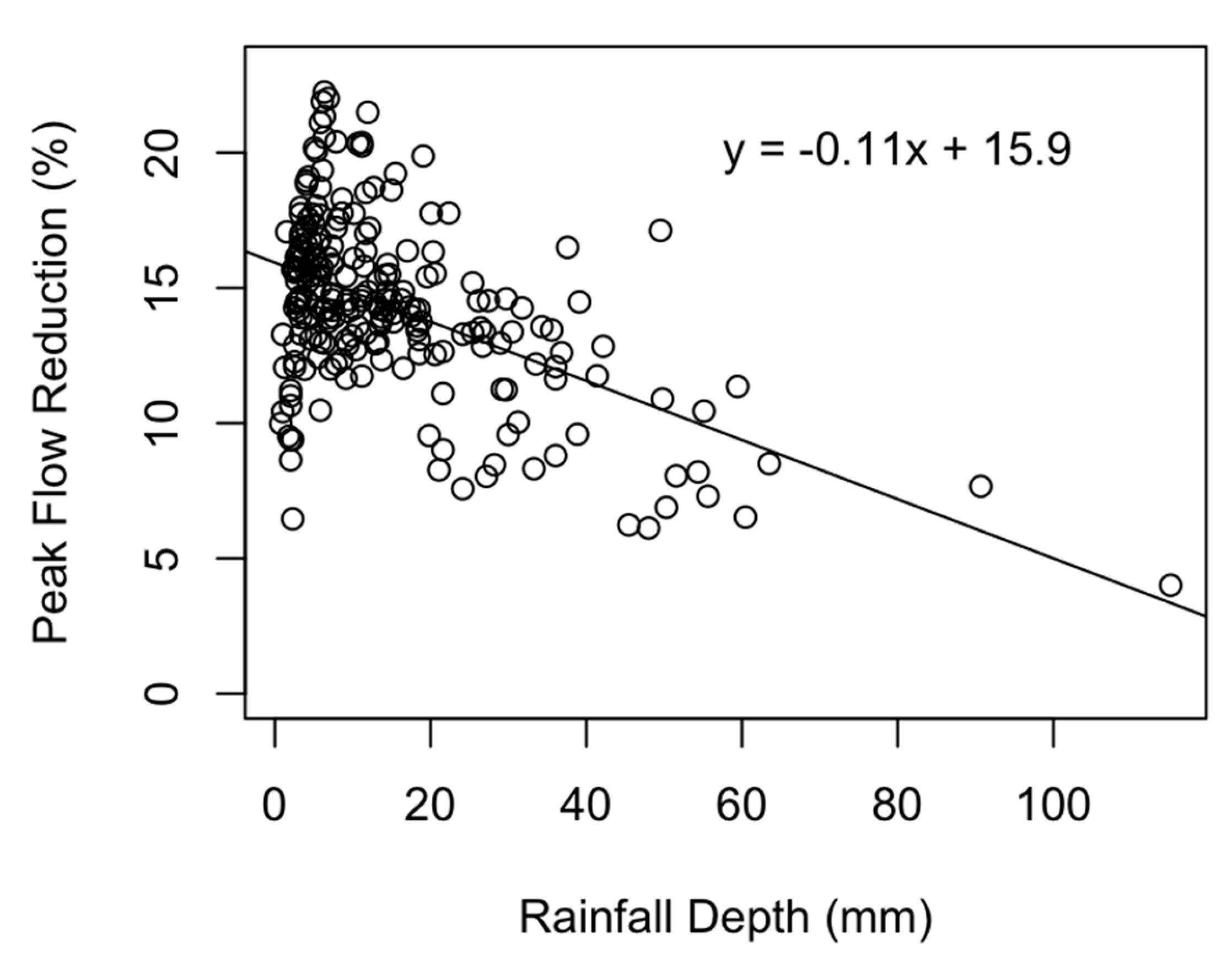

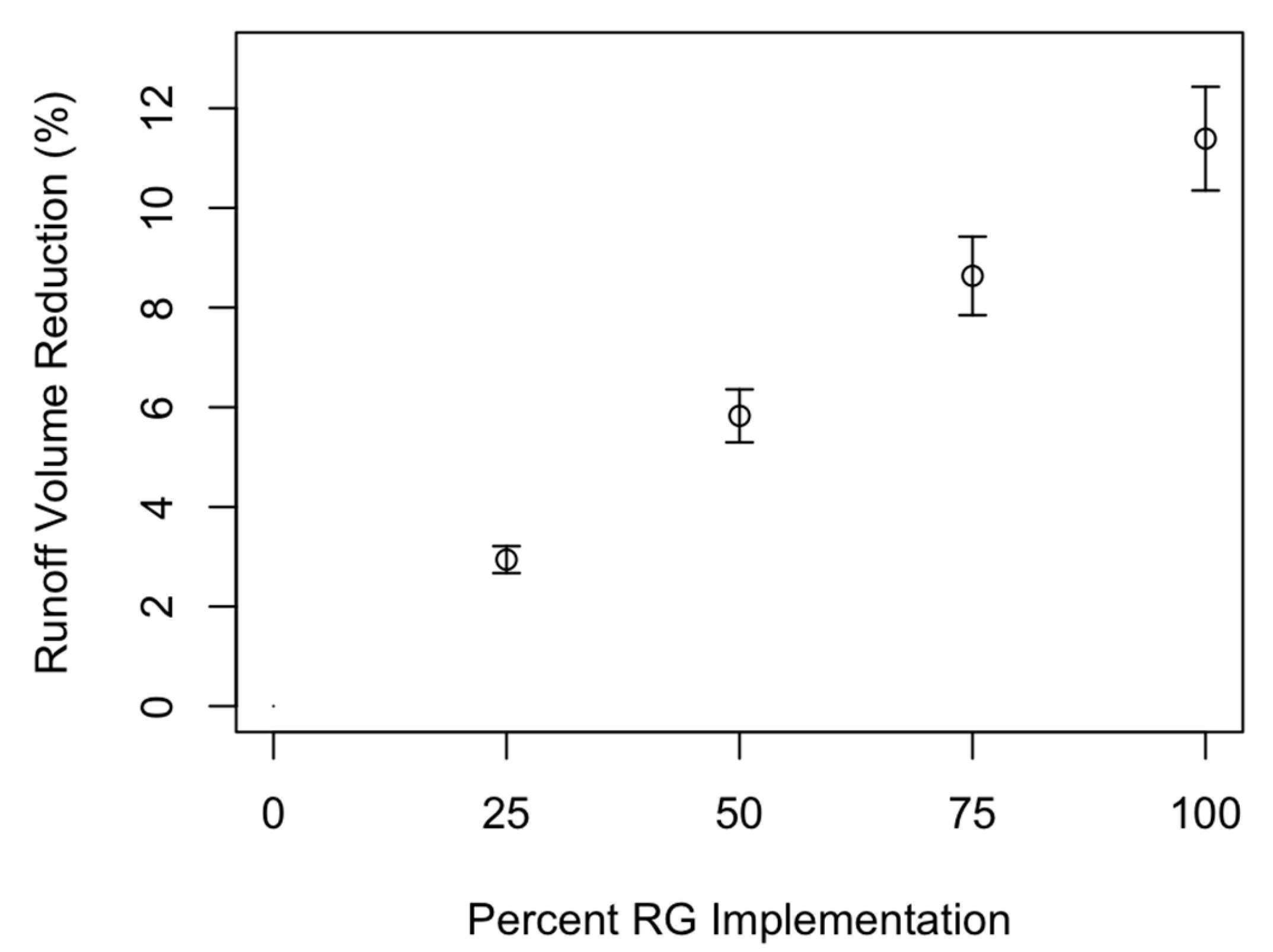

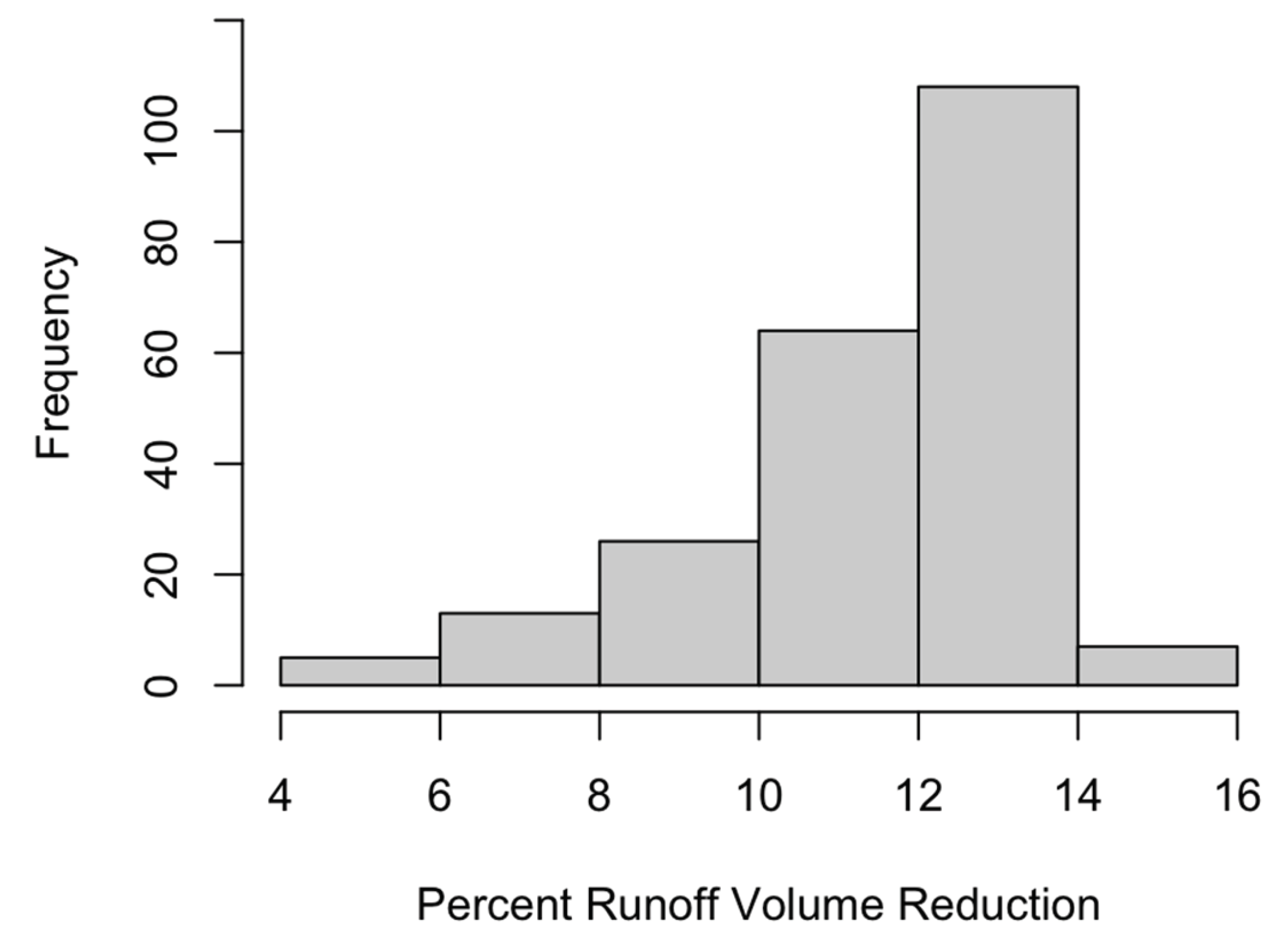

3. Results

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hughes, R.M.; Dunham, S.; Maas-Hebner, K.G.; Yeakley, J.A.; Schreck, C.; Harte, M.; Molina, N.; Shock, C.C.; Kaczynski, V.W.; Schaeffer, J. A Review of Urban Water Body Challenges and Approaches: (1) Rehabilitation and Remediation. Fisheries 2014, 39, 18–29.

- Walsh, C.J.; Roy, A.H.; Feminella, J.W.; Cottingham, P.D.; Groffman, P.M.; Morgan, R.P. The Urban Stream Syndrome: Current Knowledge and the Search for a Cure. J. North Am. Benthol. Soc. 2005, 24, 706–723.

- Walsh, C.J.; Fletcher, T.D.; Burns, M.J. Urban Stormwater Runoff: A New Class of Environmental Flow Problem. PLoS ONE 2012, 7, e45814.

- Leopold, L. Hydrology for Urban Land Planning – A Guidebook on the Hydrologic Effects of Urban Land Use; U.S. Geological Survey: Washington, D.C., 1968.

- Paul, M.J.; Meyer, J.L. Streams in the Urban Landscape. Annu. Rev. Ecol. Syst. 2001, 32, 333–365.

- Ahiablame, L.M.; Engel, B.A.; Chaubey, I. Effectiveness of Low Impact Development Practices: Literature Review and Suggestions for Future Research. Water Air Soil Pollut. 2012, 223, 4253–4273.

- Dietz, M.E. Low Impact Development Practices: A Review of Current Research and Recommendations for Future Directions. Water Air Soil Pollut. 2007, 186, 351–363.

- U.S. Environmental Protection Agency (USEPA) What Is Green Infrastructure? Available online: https://www.epa.gov/green-infrastructure/what-green-infrastructure (accessed on 16 March 2024).

- Anderson, A.R.; Franti, T.G.; Shelton, D.P. Hydrologic Evaluation of Residential Rain Gardens Using a Stormwater Runoff Simulator. Trans. ASABE 2018, 61, 495–508.

- Davis, A.P. Field Performance of Bioretention: Hydrology Impacts. J. Hydrol. Eng. 2008, 13, 90–95.

- Shuster, W.; Darner, R.; Schifman, L.; Herrmann, D. Factors Contributing to the Hydrologic Effectiveness of a Rain Garden Network (Cincinnati OH USA). Infrastructures 2017, 2, 11.

- Tu, M.-C.; Traver, R. Water Table Fluctuation from Green Infrastructure Sidewalk Planters in Philadelphia. J. Irrig. Drain. Eng. 2019, 145, 05018008.

- Golden, H.E.; Hoghooghi, N. Green Infrastructure and Its Catchment-Scale Effects: An Emerging Science. Wiley Interdiscip. Rev. Water 2018, 5, e1254.

- Jefferson, A.J.; Bhaskar, A.S.; Hopkins, K.G.; Fanelli, R.; Avellaneda, P.M.; McMillan, S.K. Stormwater Management Network Effectiveness and Implications for Urban Watershed Function: A Critical Review. Hydrol. Process. 2017, 31, 4056–4080.

- Li, C.; Fletcher, T.D.; Duncan, H.P.; Burns, M.J. Can Stormwater Control Measures Restore Altered Urban Flow Regimes at the Catchment Scale? J. Hydrol. 2017, 549, 631–653.

- Jennings, A.A.; Berger, M.A.; Hale, J.D. Hydraulic and Hydrologic Performance of Residential Rain Gardens. J. Environ. Eng. 2015, 141, 04015033.

- Bell, C.D.; Wolfand, J.M.; Panos, C.L.; Bhaskar, A.S.; Gilliom, R.L.; Hogue, T.S.; Hopkins, K.G.; Jefferson, A.J. Stormwater Control Impacts on Runoff Volume and Peak Flow: A Meta-analysis of Watershed Modelling Studies. Hydrol. Process. 2020, 34, 3134–3152.

- Hopkins, K.G.; Bhaskar, A.S.; Woznicki, S.A.; Fanelli, R.M. Changes in Event-based Streamflow Magnitude and Timing after Suburban Development with Infiltration-based Stormwater Management. Hydrol. Process. 2020, 34, 387–403.

- Jarden, K.M.; Jefferson, A.J.; Grieser, J.M. Assessing the Effects of Catchment-Scale Urban Green Infrastructure Retrofits on Hydrograph Characteristics. Hydrol. Process. 2016, 30, 1536–1550.

- Loperfido, J.V.; Noe, G.B.; Jarnagin, S.T.; Hogan, D.M. Effects of Distributed and Centralized Stormwater Best Management Practices and Land Cover on Urban Stream Hydrology at the Catchment Scale. J. Hydrol. 2014, 519, 2584–2595.

- Pennino, M.J.; McDonald, R.I.; Jaffe, P.R. Watershed-Scale Impacts of Stormwater Green Infrastructure on Hydrology, Nutrient Fluxes, and Combined Sewer Overflows in the Mid-Atlantic Region. Sci. Total Environ. 2016, 565, 1044–1053.

- Rhea, L.; Jarnagin, T.; Hogan, D.; Loperfido, J.V.; Shuster, W. Effects of Urbanization and Stormwater Control Measures on Streamflows in the Vicinity of Clarksburg, Maryland, USA: Effects on Streamflows. Hydrol. Process. 2015, 29, 4413–4426.

- Shuster, W.; Rhea, L. Catchment-Scale Hydrologic Implications of Parcel-Level Stormwater Management (Ohio USA). J. Hydrol. 2013, 485, 177–187.

- Woznicki, S.A.; Hondula, K.L.; Jarnagin, S.T. Effectiveness of Landscape-Based Green Infrastructure for Stormwater Management in Suburban Catchments. Hydrol. Process. 2018, 32, 2346–2361.

- Avellaneda, P.M.; Jefferson, A.J.; Grieser, J.M.; Bush, S.A. Simulation of the Cumulative Hydrological Response to Green Infrastructure. Water Resour. Res. 2017, 53, 3087–3101.

- Rezaei, A.R.; Ismail, Z.; Niksokhan, M.H.; Dayarian, M.A.; Ramli, A.H.; Shirazi, S.M. A Quantity–Quality Model to Assess the Effects of Source Control Stormwater Management on Hydrology and Water Quality at the Catchment Scale. Water 2019, 11, 1415.

- Avellaneda, P.M.; Jefferson, A.J. Sensitivity of Streamflow Metrics to Infiltration-Based Stormwater Management Networks. Water Resour. Res. 2020, 56.

- Boening-Ulman, K.M.; Winston, R.J.; Wituszynski, D.M.; Smith, J.S.; Andrew Tirpak, R.; Martin, J.F. Hydrologic Impacts of Sewershed-Scale Green Infrastructure Retrofits: Outcomes of a Four-Year Paired Watershed Monitoring Study. J. Hydrol. 2022, 611, 128014.

- Feng, M.; Jung, K.; Li, F.; Li, H.; Kim, J.-C. Evaluation of the Main Function of Low Impact Development Based on Rainfall Events. Water 2020, 12, 17.

- Hood, M.J.; Clausen, J.C.; Warner, G.S. Comparison of Stormwater Lag Times for Low Impact and Traditional Residential Development. J. Am. Water Resour. Assoc. 2007, 43, 1036–1046.

- Lammers, R.W.; Miller, L.; Bledsoe, B.P. Effects of Design and Climate on Bioretention Effectiveness for Watershed-Scale Hydrologic Benefits. J. Sustain. Water Built Environ. 2022, 8, 04022011.

- Ahiablame, L.; Shakya, R. Modeling Flood Reduction Effects of Low Impact Development at a Watershed Scale. J. Environ. Manag. 2016, 171, 81–91.

- Epps, T.H.; Hathaway, J.M. Using Spatially-Identified Effective Impervious Area to Target Green Infrastructure Retrofits: A Modeling Study in Knoxville, TN. J. Hydrol. 2019, 575, 442–453.

- Di Vittorio, D.; Ahiablame, L. Spatial Translation and Scaling Up of Low Impact Development Designs in an Urban Watershed. J. Water Manag. Model. 2015.

- Fan, G.; Lin, R.; Wei, Z.; Xiao, Y.; Shangguan, H.; Song, Y. Effects of Low Impact Development on the Stormwater Runoff and Pollution Control. Sci. Total Environ. 2022, 805, 150404.

- Liang, C.-Y.; You, G.J.-Y.; Lee, H.-Y. Investigating the Effectiveness and Optimal Spatial Arrangement of Low-Impact Development Facilities. J. Hydrol. 2019, 577, 124008.

- Samouei, S.; Özger, M. Evaluating the Performance of Low Impact Development Practices in Urban Runoff Mitigation through Distributed and Combined Implementation. J. Hydroinformatics 2020, 22, 1506–1520.

- Wadhwa, A.; Pavan Kumar, K. Selection of Best Stormwater Management Alternative Based on Storm Control Measures (SCM) Efficiency Indices. Water Policy 2020, 22, 702–715.

- Zhang, L.; Ye, Z.; Shibata, S. Assessment of Rain Garden Effects for the Management of Urban Storm Runoff in Japan. Sustainability 2020, 12, 9982.

- Li, J.; Zhang, B.; Li, Y.; Li, H. Simulation of Rain Garden Effects in Urbanized Area Based on Mike Flood. Water 2018, 10, 860.

- Guo, X.; Du, P.; Zhao, D.; Li, M. Modelling Low Impact Development in Watersheds Using the Storm Water Management Model. Urban Water J. 2019, 16, 146–155.

- Hopkins, K.G.; Woznicki, S.A.; Williams, B.M.; Stillwell, C.C.; Naibert, E.; Metes, M.J.; Jones, D.K.; Hogan, D.M.; Hall, N.C.; Fanelli, R.M.; et al. Lessons Learned from 20 y of Monitoring Suburban Development with Distributed Stormwater Management in Clarksburg, Maryland, USA. Freshw. Sci. 2022, 000–000.

- Page, J.L.; Winston, R.J.; Mayes, D.B.; Perrin, C.A.; Hunt, W.F. Retrofitting Residential Streets with Stormwater Control Measures over Sandy Soils for Water Quality Improvement at the Catchment Scale. J. Environ. Eng. 2015, 141, 04014076.

- Tchintcharauli-Harrison, M.B.; Santelmann, M.V.; Greydanus, H.; Shehab, O.; Wright, M. Role of Neighborhood Design in Reducing Impacts of Development and Climate Change, West Sherwood, OR. Front. Water 2022, 3, 757420.

- Parker, K.; Horowitz, J.M.; Brown, A.; Fry, R.; Cohn, D.; Igielnik, R. Demographic and Economic Trends in Urban, Suburban and Rural Communities. Pew Research Center’s Social & Demographic Trends Project 2018.

- United Nations, Department of Economic and Social Affairs, Population Division (UN DESA) World Urbanization Prospects: The 2018 Revision; New York, 2019.

- IPCC Climate Change 2023: Synthesis Report; Contribution of Working Groups I, II and III to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change [Core Writing Team, H. Lee and J. Romero (eds.)]; IPCC: Geneva, Switzerland, 2023.

- Howard County GIS Division Howard County, Maryland, Geologic Formations. Available online: https://data.howardcountymd.gov/mapgallery/misc/Geology22x34countywide.pdf (accessed on 21 March 2024).

- U.S. Climate Data Weather Averages Baltimore-BWI Arpt, Maryland. Available online: https://www.usclimatedata.com/climate/baltimore-bwi-arpt/maryland/united-states/usmd0019 (accessed on 22 February 2024).

- National Water Information System (NWIS) USGS Current Conditions for USGS 01593370 L PAX RIV TRIB ABOVE WILDE LAKE AT COLUMBIA, MD. Available online: https://waterdata.usgs.gov/nwis/uv?site_no=01593370 (accessed on 22 February 2024).

- Center for Watershed Protection and Tetra Tech, Inc. Centennial and Wilde Lake Watershed Restoration Plan; 2005. Available online: https://www.howardcountymd.gov/sites/default/files/media/2015-12/Section1_Introduction.pdf (accessed on 22 February 2024).

- Rossman, L.A. Storm Water Management Model User’s Manual Version 5.1; U.S. Environmental Protection Agency, 2015.

- CHI Computational Hydraulics International (CHI). Available online: https://www.chiwater.com/Home (accessed on 4 February 2024).

- Rossman, L.A.; Huber, W. Storm Water Management Model Reference Manual Volume I – Hydrology (Revised); U.S. Environmental Protection Agency: Cincinnati, OH, 2016.

- ESRI Desktop GIS Software | Mapping Analytics | ArcGIS Pro. Available online: https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview (accessed on 4 February 2024).

- U.S. Geological Survey (USGS) The National Map Downloader. Available online: https://apps.nationalmap.gov/downloader/ (accessed on 26 March 2024).

- U.S. Geological Survey (USGS) EarthExplorer. Available online: https://earthexplorer.usgs.gov/ (accessed on 26 March 2024).

- Maryland Geographic Information Office MD iMAP Portal. Available online: https://imap.maryland.gov/ (accessed on 26 March 2024).

- Howard County GIS Division Howard County Maryland Data Download and Viewer. Available online: https://data.howardcountymd.gov/ (accessed on 26 March 2024).

- U.S. Department of Agriculture Natural Resources Conservation Service Web Soil Survey. Available online: https://websoilsurvey.nrcs.usda.gov/app/WebSoilSurvey.aspx (accessed on 26 March 2024).

- Wijetunge, R. Storm Drain Infrastructure Data (Howard County Government Stormwater Management Division), personal communication, 22 June 2022.

- Baltimore County Dept. of Public Works Baltimore County Rain Gauge Data. Available online: https://drive.google.com/drive/folders/1ZP_kHl_bdiL6JMYuXUDT_5GXTzwq2KHM (accessed on 26 March 2024).

- Poltilove, D. Weather Data, personal communication, 9 September 2023.

- Green, W.; Ampt, G. Studies on Soil Physics, 1. The Flow of Air and Water Through Soils. J. Agric. Sci. 1911, 4, 11–24.

- Hargreaves, G.; Samani, Z. Reference Crop Evapotranspiration from Temperature. Appl. Eng. Agric. 1985, 1, 96–99.

- Nash, J.E.; Sutcliffe, J.V. River Flow Forecasting through Conceptual Models Part I — A Discussion of Principles. J. Hydrol. 1970, 10, 282–290.

- Ladson, A. Hydrology: An Australian Introduction; Oxford University Press Australia, 2008; ISBN 978-0-19-555358-1.

- Moriasi, D.N.; Gitau, M.; Pai, N.; Daggupati, P. Hydrologic and Water Quality Models: Performance Measures and Evaluation Criteria. Trans. ASABE 2015, 58, 1763–1785.

- Rossman, L.A.; Huber, W. Storm Water Management Model Reference Manual Volume III – Water Quality; U.S. Environmental Protection Agency: Cincinnati, OH, 2016; p. 127.

- Hoghooghi, N.; Golden, H.; Bledsoe, B.; Barnhart, B.; Brookes, A.; Djang, K.; Halama, J.; McKane, R.; Nietch, C.; Pettus, P. Cumulative Effects of Low Impact Development on Watershed Hydrology in a Mixed Land-Cover System. Water 2018, 10, 991.

- Palla, A.; Gnecco, I. Hydrologic Modeling of Low Impact Development Systems at the Urban Catchment Scale. J. Hydrol. 2015, 528, 361–368.

- Li, J.; Li, Y.; Li, Y. SWMM-Based Evaluation of the Effect of Rain Gardens on Urbanized Areas. Environ. Earth Sci. 2016, 75, 17.

- Salvadore, E.; Bronders, J.; Batelaan, O. Hydrological Modelling of Urbanized Catchments: A Review and Future Directions. J. Hydrol. 2015, 529, 62–81.

| Dataset or Layer Name | Description | Source |
|---|---|---|
| Digital Elevation Model (DEM) | 1 meter DEM, dated 2018 | USGS 3DEP Program, via USGS National Map Downloader [56] |
| Orthoimagery | High-resolution (6-inch) aerial photography, dated 2014 and 2020 | USGS Earth Explorer [57] and MD iMAP Portal [58] |
| Impervious Cover | Dated 2020 | Howard County Maryland Data Download and Viewer [59] |
| Land Use | Dated 2024 | Howard County Maryland Data Download and Viewer [59] |
| Forest Cover | “Soft canopy” dated 2018 | Howard County Maryland Data Download and Viewer [59] |
| Soil Data | Characteristics for top 2 meters of soils | Natural Resources Conservation Service (NRCS) Soil Survey Geographic Database (SSURGO) [60] |
| Storm Sewer Infrastructure | Geodatabase containing inlet, manhole, pipe, and outfall locations | Howard County Stormwater Management Division [61] |
| Rainfall | 5-minute interval rainfall data | Baltimore County Rain Gauge Network [62] |
| Temperature | Daily maximum and minimum temperature | Observation station operated by a Community Collaborative Rain, Hail, and Snow Network (CoCoRaHS) observer [63] |
| Discharge | 1-minute interval discharge at the catchment outlet | USGS streamgage 01593370, via the National Water Information System (NWIS) [50] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).