1. Introduction

Human Activity Recognition (HAR) has emerged as a transformative technology enabling the continuous monitoring of both static and dynamic behaviors in diverse environmental conditions [1]. Its applications extend across industries, sports, and healthcare [2]. With respect to the healthcare sector, continuous HAR monitoring presents an opportunity to obtain ecologically valid information about a patient's condition in everyday environments and facilitates the registration of rare and fluctuating events that are often missed in stationary short-term clinical assessments [3]. This technology holds significant potential for identifying diseases in their pre-clinical stages, monitoring disease progression or evaluating the effects of interventions, and detecting critical events such as falls [4,5,6,7,8].

The technical landscape of HAR in healthcare applications predominantly relies on wearable motion sensors, primarily accelerometers, often augmented with gyroscopes or magnetometers. The small size and low energy consumption of these sensors allow them to be placed either directly on different body parts or to be integrated in wearable devices (e.g., smartwatches). The placement location on the body is strategically chosen, so that the motion profile captured by the sensor can identify and distinguish various forms of activities as effectively as possible. Common choices for mounting include the lower back [9], thighs [8,10], feet (often integrated into shoes) [11], and wrist (often integrated into smartwatches or fitness trackers) [12], balancing unobtrusiveness and seamless integration into daily life.

In this study, we explore an alternative approach by utilizing an ear-worn sensor for HAR [13,14]. The ear, in contrast to the lower extremities or the trunk, has several unique advantages as a site for attaching an HAR device. The head, housing crucial sensory peripheries for vision, audition, and balance, remains exceptionally stable during various movements [15,16], providing a reliable locus for low-noise identification and differentiation of different bodily activities. Additionally, the ear is a location where users, particularly in the elderly population, often already wear assistive devices like hearing aids or eyeglass frames that could be readily combined with a miniature motion sensor [17]. Finally, beyond motion, the ear is an ideal location for monitoring a person's physical and health status, as optical in-ear sensors can reliably capture vital signs such as heart rate, blood pressure, body temperature, and oxygen saturation [18]. Therefore, the ear stands out as a promising candidate site for wearing a single integrative sensing device, facilitating comprehensive continuous monitoring of a person's activity and health status in everyday life.

The primary objective of this study was to investigate the potential of an ear-worn motion sensor, integrated into an in-ear vital signs monitor, to classify various human activities. In a large group of healthy individuals, daily activities such as lying, sitting, standing, walking, running, and stair walking were recorded and labeled in a free-living environment. To achieve a classification of these activities, we employed machine learning algorithms, adopting a two-fold strategy: shallow machine learning models utilizing interpretable features or parametrizations of movement (e.g., movement amplitude and variance) and state-of-the-art deep learning algorithms specifically designed for HAR. This dual-pronged approach aims to explore the interpretability of features and the robust classification capabilities offered by deep learning in the context of inertial-sensor-based HAR.

2. Materials and Methods

2.1. Participants

Fifty healthy individuals, between 20 and 47 years old (age: 29.4 ± 6.5 years; height: 1.73 ± 0.10 m; weight: 70.8 ± 15.8 kg; 25 females), participated in the study. All participants signed written informed consent prior to inclusion and were screened for any neurological or orthopedic conditions that would influence either balance or locomotion.

2.2. Ear-Worn Motion Sensor

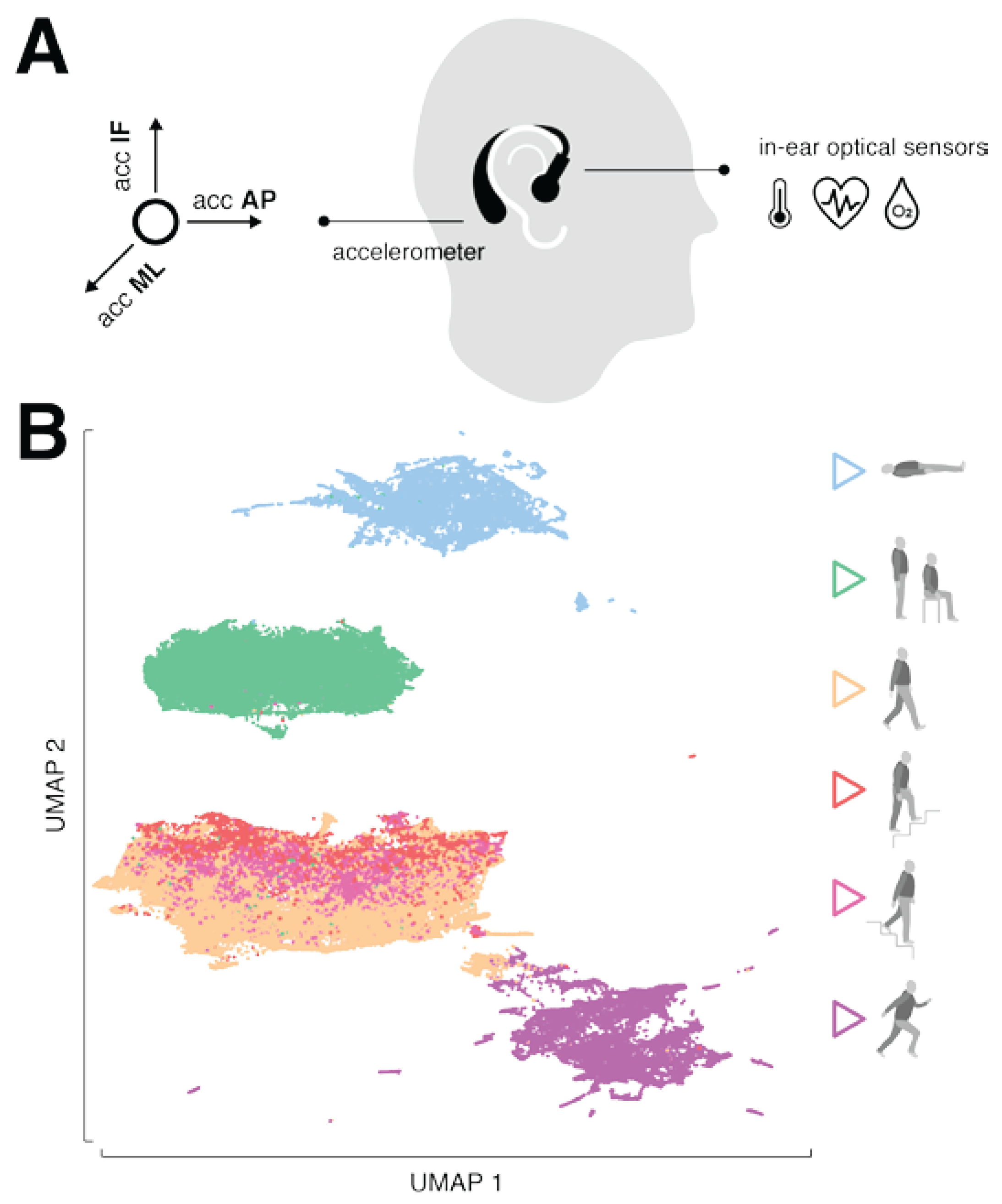

The motion sensor consisted of a triaxial accelerometer (range: ±16 g; accuracy: 0.0002 g; sampling rate: 100 Hz), which is integrated into a commercial, wearable in-ear vital signs monitor (c-med° alpha; size: 55.2 mm × 58.6 mm × 10.0 mm; weight: 7 g; Cosinuss GmbH, Munich, Germany). The vital signs monitor consists of a silicone ear-plug that is in contact with the outer ear canal skin and contains an infrared thermometer for recording body temperature and an optical sensor for measuring pulse rate and blood oxygen saturation. The ear-plug is connected to an ear-piece hooked around the ear conch, in which the motion sensor is located (Figure 1A). The wearable device transmits acquired motion and vital signals in real time via Bluetooth Low Energy to a gateway that subsequently streams this information to the cosinuss° Health server. The server platform can be accessed via a smartphone application to monitor the acquired signals in real time and to add real-time annotations (activity labels) to the recording (see ground truth annotation in Section 2.3, Experimental Procedures).

2.3. Experimental Procedures

The experiments were conducted in the university research building and outdoors (urban environment). Initially, each participant was briefed on the experimental procedures. Subsequently, two ear-worn sensors were attached to the left and right ears with the aim of training an activity classification algorithm that works independently of the attachment side.

The focus of activity classification was on so-called low-level activities, characterized by a sequence of body movements and postures, typically lasting a few seconds to several minutes [19]. The recorded activity forms included lying, sitting, standing, walking, ascending or descending stairs, and running (Figure 1B). To make the activity classification robust to everyday variations, participants were encouraged to perform the activities as naturally as possible. For example, lying also included turning or restlessly lying in bed, standing involved tapping in place or chatting, and walking was performed at slow, comfortable, or fast speeds. The activities lying and stair climbing were exclusively recorded indoors, while the other activities were recorded both indoors and outdoors. Each participant performed all activities multiple times in a pseudorandomized order. The average recording duration was approximately 30 minutes per participant.

An experimenter accompanied the participant throughout the entire experiment and instructed them on when to end one activity and start a new one. The experimenter simultaneously performed ground truth annotation (activity type) by annotating the real-time sensor time series with the respective activity label via a smartphone application. The activity label was assigned for the period shortly after the onset of the activity until its termination. Transitions between activities or brief interruptions in the experimental procedure did not receive a label.

In preliminary analyses, it was observed that the activity classes of sitting and standing were fundamentally indistinguishable, which is expected, as the head maintains the same orientation during both activities. Therefore, the two classes were combined for further investigations, resulting in a total of six activity classes: lying, sitting/standing, walking, ascending stairs, descending stairs, and running.
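The argument that sitting and standing share the same head orientation can be illustrated with a small sketch. It assumes that a static window is dominated by gravity and that the sensor columns are ordered AP, SI, ML; both are assumptions made here for illustration, as are the function name and the synthetic data. Under these assumptions, the tilt of the SI axis relative to gravity is nearly identical for an upright head whether the person sits or stands, whereas it changes markedly when lying:

```python
import numpy as np

def tilt_from_vertical_deg(window):
    """Angle (degrees) between the SI axis and the mean acceleration vector.

    window: (n_samples, 3) array in units of g, columns AP, SI, ML (assumed).
    """
    g = window.mean(axis=0)  # static component, approximately gravity
    cos_angle = g[1] / np.linalg.norm(g)
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

rng = np.random.default_rng(0)

def synthetic_window(gravity_direction):
    """2 s at 100 Hz: constant gravity plus small sensor noise (toy data)."""
    return np.asarray(gravity_direction) + rng.normal(scale=0.02, size=(200, 3))

tilt_sitting = tilt_from_vertical_deg(synthetic_window([0.0, 1.0, 0.0]))
tilt_standing = tilt_from_vertical_deg(synthetic_window([0.0, 1.0, 0.0]))
tilt_lying = tilt_from_vertical_deg(synthetic_window([1.0, 0.0, 0.0]))
```

In this toy setting the two upright postures yield almost the same tilt, so orientation alone cannot separate them, which is consistent with merging them into one class.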

2.4. Classification Models

2.4.1. Data Segmentation

The acquired three-dimensional motion time series (anterior-posterior dimension: AP; superior-inferior dimension: SI; medio-lateral dimension: ML) were segmented using the sliding window technique with non-overlapping windows of various sizes (0.5, 1, and 2 s) to determine an optimal configuration. Only recording sequences with an activity label were used, and it was ensured that each recording segment contained a single activity label.
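The segmentation step can be sketched as follows. This is a minimal illustration rather than the implementation used in the study: the function name, the convention of marking unlabeled samples with `-1`, the integer activity codes, and the fixed window grid starting at the first sample are all assumptions.

```python
import numpy as np

def segment_windows(signal, labels, fs=100, window_s=2.0):
    """Cut a (n_samples, 3) recording into non-overlapping windows.

    Windows containing unlabeled samples (label < 0, an assumed convention)
    or more than one activity label are discarded.
    """
    size = int(round(fs * window_s))
    windows, window_labels = [], []
    for start in range(0, len(signal) - size + 1, size):
        chunk = labels[start:start + size]
        if chunk[0] >= 0 and np.all(chunk == chunk[0]):
            windows.append(signal[start:start + size])
            window_labels.append(chunk[0])
    if not windows:
        return np.empty((0, size, signal.shape[1])), np.empty(0, dtype=int)
    return np.stack(windows), np.array(window_labels)

# Toy recording: 10 s at 100 Hz with two labeled periods and an unlabeled gap.
rng = np.random.default_rng(0)
acc = rng.normal(size=(1000, 3))
lab = np.full(1000, -1)
lab[0:450] = 2      # hypothetical code for walking
lab[500:1000] = 0   # hypothetical code for lying
X, y = segment_windows(acc, lab, fs=100, window_s=2.0)
```

With 2 s windows, the window spanning samples 400 to 599 covers the transition and is dropped, leaving four single-label segments.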

2.4.2. Shallow Learning Models

After segmentation, a set of time-domain and frequency-domain features established for time series analysis of physiological signals was computed per segment. These included the mean, mean of sum of absolute values, minimum, maximum, range, sum, standard deviation, variance, root-mean-square, interquartile range, zero-crossing rate, skewness, kurtosis, signal energy, and spectral entropy, which were computed per motion axis as well as for the acceleration magnitude vector ($a_{AP}$, $a_{SI}$, $a_{ML}$, $|a|$). In addition, the Pearson and Kendall correlation coefficients were computed for every combination of motion axes, resulting in a total of 64 features.
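A possible way to compute such per-segment features is sketched below with NumPy and SciPy. The sketch covers only a subset of the listed features and does not reproduce the exact 64-feature set. The function names, the column order (AP, SI, ML), the use of the mean-removed signal for the zero-crossing rate, and the periodogram-based spectral entropy are assumptions made for illustration.

```python
import numpy as np
from scipy import signal, stats

def axis_features(x, fs=100):
    """Subset of the time- and frequency-domain features for one signal."""
    centered = x - x.mean()
    # Fraction of consecutive samples whose mean-removed values change sign.
    zcr = np.mean(np.signbit(centered[:-1]) != np.signbit(centered[1:]))
    _, psd = signal.periodogram(x, fs=fs)
    p = psd / psd.sum()
    p = p[p > 0]  # drop empty bins so the logarithm is defined
    return {
        "mean": x.mean(), "min": x.min(), "max": x.max(),
        "range": np.ptp(x), "std": x.std(), "var": x.var(),
        "rms": np.sqrt(np.mean(x ** 2)), "iqr": stats.iqr(x),
        "zcr": zcr, "skew": stats.skew(x), "kurtosis": stats.kurtosis(x),
        "energy": np.sum(x ** 2),
        "spectral_entropy": -np.sum(p * np.log2(p)),
    }

def window_features(window, fs=100):
    """window: (n_samples, 3) array with columns AP, SI, ML (assumed order)."""
    signals = {"AP": window[:, 0], "SI": window[:, 1], "ML": window[:, 2],
               "MAG": np.linalg.norm(window, axis=1)}
    feats = {}
    for name, x in signals.items():
        for key, value in axis_features(x, fs).items():
            feats[f"{name}_{key}"] = float(value)
    for a, b in [("AP", "SI"), ("AP", "ML"), ("SI", "ML")]:
        r, _ = stats.pearsonr(signals[a], signals[b])
        tau, _ = stats.kendalltau(signals[a], signals[b])
        feats[f"pearson_{a}_{b}"] = float(r)
        feats[f"kendall_{a}_{b}"] = float(tau)
    return feats

# Toy 2 s window at 100 Hz: gravity on the SI axis plus noise.
rng = np.random.default_rng(0)
window = rng.normal(scale=0.1, size=(200, 3)) + np.array([0.0, 1.0, 0.0])
feats = window_features(window)
```

Returning a dictionary keyed by axis and feature name keeps each value traceable, which matters when the most informative features are later interpreted.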

For pre-evaluation, a set of eight standard machine learning models was trained: K-Nearest Neighbors, Decision Tree, Support Vector Machine, Naive Bayes, Bagging, Random Forest, ExtraTrees, and Gradient Boosting. Input features were first normalized to zero mean and unit variance; subsequently, each model was trained using a stratified 10-fold cross-validation which ensured that data from any one participant appeared either in the training set or in the testing set, but never in both. From all combinations of model and sliding window size tested, the one with the highest accuracy was selected for further hyperparameter optimization using grid search with cross-validation. Finally, feature selection on the optimized classifier was performed using univariate statistical comparisons (i.e., the ANOVA F-value of each feature across activity classes) to identify a parsimonious set of the most informative features that still ensures high classification accuracy.

2.4.3. Deep Learning Models

Besides shallow learning models, which use pre-engineered motion features, the performance of deep learning models that automate feature extraction from raw sensor inputs was evaluated. Two deep learning models specifically designed for HAR on wearable motion signals were considered. The DeepConvLSTM architecture combines a convolutional neural network with a long short-term memory (LSTM) recurrent network and has been widely applied in the past [20]. The model employs a series of convolutional layers that learn to extract essential features from the raw motion time series, followed by LSTM layers that model their temporal dependencies. The ConvTransformer, which combines a convolutional neural network with a transformer model, is a more recent model that achieves state-of-the-art performance on many publicly available datasets [21]. The model initially uses a convolutional layer to model the local information of the motion time series, then uses a transformer to represent the temporal information of the modeled signal features, and adds an attention mechanism to determine the essential features.

The two deep learning models were trained for the same window sizes as described above. Raw motion sensor data were first normalized to zero mean and unit variance; subsequently, each model was trained using a stratified 10-fold cross-validation which ensured that data from any one participant appeared either in the training set or in the testing set, but never in both. Both models were trained to minimize the categorical cross-entropy loss using the Adam optimizer.
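For orientation, a DeepConvLSTM-style network for 2 s windows of triaxial acceleration could be written with the Keras API as sketched below. The layer configuration (four convolutional layers with 64 filters and kernel size 5, two LSTM layers with 128 units) follows commonly cited defaults for this type of architecture and is an assumption here. It is not the configuration reported for this study, and the function name is hypothetical.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

def build_deepconvlstm(window_len=200, n_channels=3, n_classes=6):
    """Convolutional feature extractor followed by stacked LSTM layers."""
    model = tf.keras.Sequential([
        layers.Input(shape=(window_len, n_channels)),
        # Convolutions along the time axis learn local motion features.
        layers.Conv1D(64, 5, activation="relu"),
        layers.Conv1D(64, 5, activation="relu"),
        layers.Conv1D(64, 5, activation="relu"),
        layers.Conv1D(64, 5, activation="relu"),
        # LSTM layers model temporal dependencies between those features.
        layers.LSTM(128, return_sequences=True),
        layers.LSTM(128),
        layers.Dense(n_classes, activation="softmax"),
    ])
    # Categorical cross-entropy expects one-hot encoded activity labels.
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model

model = build_deepconvlstm()
# Forward pass on two empty windows to check the output dimensions.
probs = model(np.zeros((2, 200, 3), dtype="float32")).numpy()
```

The input length of 200 samples corresponds to a 2 s window at the 100 Hz sampling rate; shorter windows would only change `window_len`.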

2.4.4. Performance Metrics and Implementation

The performance of the different shallow and deep learning models was evaluated primarily by the weighted F1-score, which considers both precision ($P = TP/(TP + FP)$) and recall ($R = TP/(TP + FN)$), where $TP$, $FP$, and $FN$ denote the numbers of true positives, false positives, and false negatives, while accounting for imbalances in class distribution. It is the harmonic mean of precision and recall and ranges between 1 and 0, reflecting best and worst performance, respectively:

$$F_1 = 2 \cdot \frac{P \cdot R}{P + R}$$

All analyses and models were implemented in Python 3.9 using scikit-learn 1.3 and the Keras API 2.10 with TensorFlow backend.
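To make the metric concrete, the weighted F1-score can be computed by hand as in the following sketch: the per-class harmonic mean of precision and recall is averaged with weights proportional to the number of true samples in each class. The function name and the toy labels are illustrative assumptions.

```python
import numpy as np

def weighted_f1(y_true, y_pred):
    """Support-weighted average of per-class F1-scores."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    classes, support = np.unique(y_true, return_counts=True)
    scores = []
    for c in classes:
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        # 2*TP / (2*TP + FP + FN) equals the harmonic mean of precision and recall.
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return float(np.average(scores, weights=support))

y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 2, 0]
score = weighted_f1(y_true, y_pred)  # per-class F1: 0.75, 0.80, 6/7
```

For these toy labels the per-class scores are 0.75, 0.80, and 6/7 with supports 4, 2, and 4, giving a weighted F1-score of about 0.803.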

3. Results

3.1. Dataset Characteristics

A total of 50.8 hours (left ear-worn sensors: 27.0 hours; right ear-worn sensors: 23.8 hours) of activity was recorded from the 50 participants. In six participants, sensor data was only available from one ear-worn device due to transmission or battery issues occurring during recording. The distribution of recorded activity classes and the corresponding duration statistics for single periods of activity are shown in Table 1. Recorded activities had a varying duration between 3 and 200 s. The final dataset reveals a certain imbalance between the measured activity classes, with walking dominating at 31% of all cases, and descending stairs being the least represented at only 6% of the cases. To address this imbalance between activity classes in the subsequent analyses, each classification model was trained using a stratified cross-validation, and the model performance was evaluated based on the weighted F1-score, which considers imbalances between the classes.

3.2. Shallow Learning Models

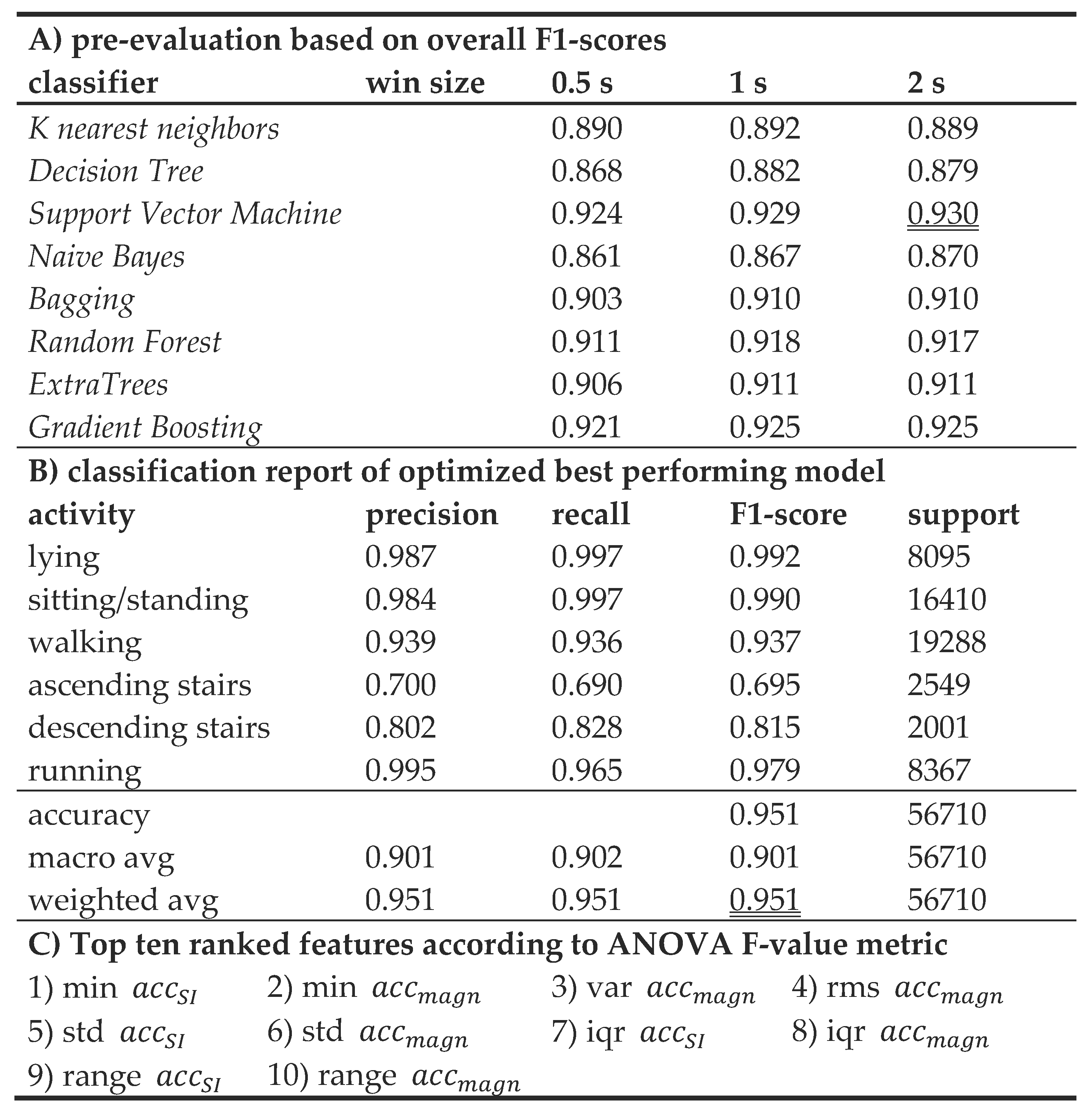

Table 2A shows the results of the pre-evaluation for various window sizes used for data segmentation and different shallow machine learning models. All models achieved better classification rates for longer window sizes. Across all examined window sizes, the Support Vector Machine performed best, achieving its highest accuracy of 93% with a window size of 2 s. Hyperparameter optimization of this classifier using grid search with cross-validation yielded a gamma value of 0.0001 and a regularization parameter C of 10,000. Table 2B presents the classification report of this optimized model, with an average classification accuracy of 95%. It almost perfectly identified and differentiated between the activities lying, sitting/standing, and running but still showed some difficulties in distinguishing between the activities walking, ascending stairs, and descending stairs.

In a final step, a feature selection was performed to obtain a parsimonious model with a concise number of parameters without significantly compromising classification accuracy. This procedure revealed that a model based on ten signal features was sufficient to yield a classification accuracy above 91% (Table 2C). The top-ranked features reflected basic time-domain characteristics (e.g., variance, range, minimum) of the up-and-down (superior-inferior) head acceleration and the acceleration magnitude ($a_{SI}$ and $|a|$).
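Univariate feature selection based on the ANOVA F-value can be sketched with scikit-learn's `SelectKBest` and `f_classif`. The data below are synthetic and the number of retained features is chosen to match the toy setup; the sketch only illustrates the ranking principle and is not the study's code.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif

# Synthetic data: 20 features, of which only the first five depend on the class.
rng = np.random.default_rng(1)
n_samples, n_features = 300, 20
y = rng.integers(0, 3, size=n_samples)
X = rng.normal(size=(n_samples, n_features))
X[:, :5] += 2.0 * y[:, None]

# Rank features by their ANOVA F-value across classes and keep the top five.
selector = SelectKBest(score_func=f_classif, k=5).fit(X, y)
selected = np.flatnonzero(selector.get_support())
X_reduced = selector.transform(X)
```

In practice the selector would be fitted on training folds only, so that the feature ranking does not benefit from test data.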

Table 2.

Outcomes from shallow learning models. (A) Pre-evaluation of different classifiers on varying window sizes used for data segmentation. The best configuration (classifier and window size) is underlined. (B) Classification report of the optimized (grid search cross-validation) best-performing configuration, i.e., support vector machine with a window size of 2 s. (C) Outcomes from feature selection performed on the optimized best-performing model.

3.3. Deep Learning Models

Both examined deep learning models showed an overall improved classification compared to the optimized best-performing shallow learning model. The increase in classification accuracy ranged from 2% to 3%. The more recent model, ConvTransformer, did not show any advantage over the DeepConvLSTM network.

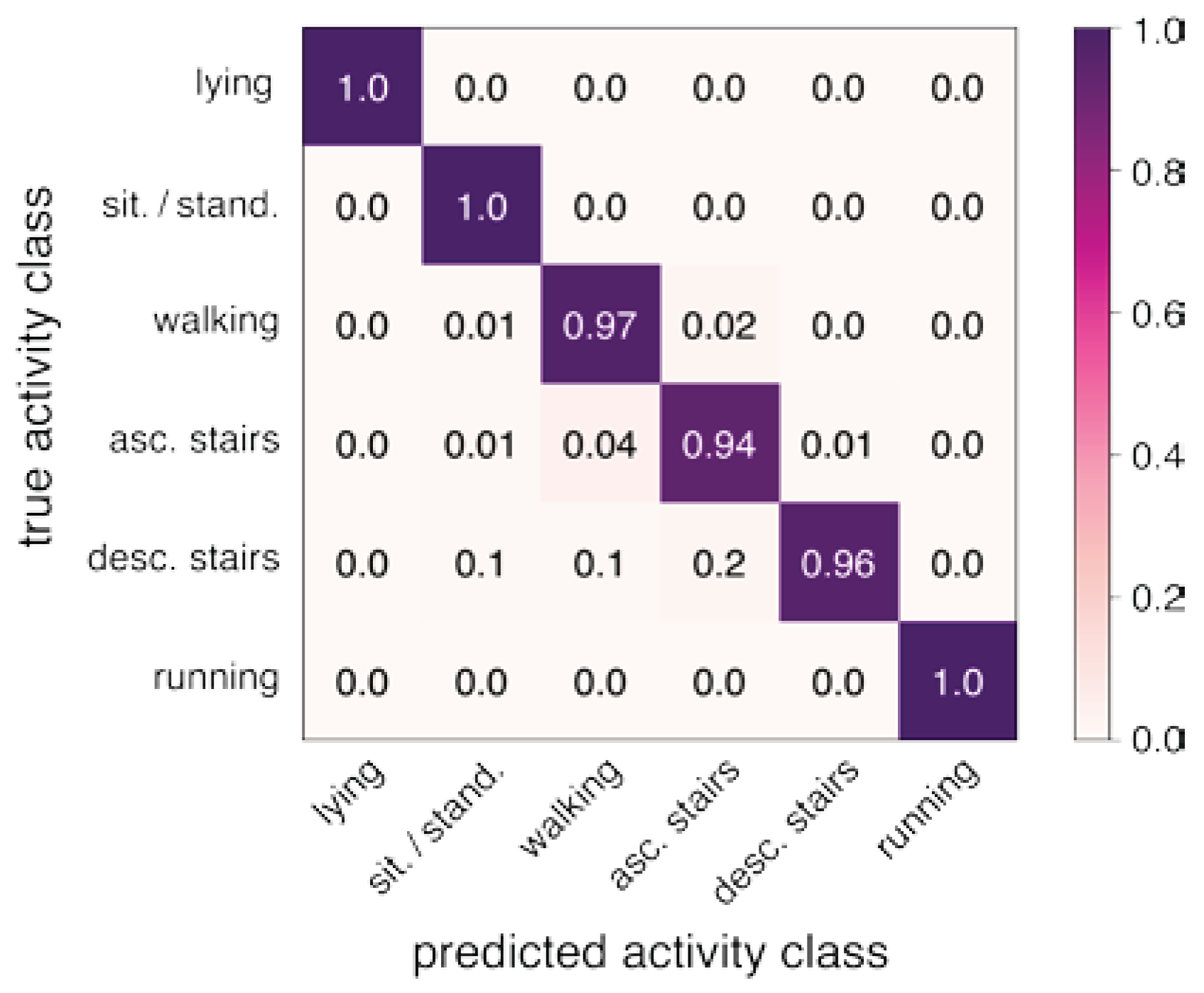

Table 3A displays the classification performance of both networks for various window sizes. Table 3B presents the classification report for the best configuration (DeepConvLSTM with a window size of 2 s), which yielded an average classification accuracy of 98%, and Figure 2 shows the corresponding confusion matrix.

4. Discussion

The primary objective of this study was to assess the efficacy of an ear-mounted motion sensor in classifying various human activities. Our findings substantiate the ear's suitability as a measurement site for an activity monitor, given its stability during diverse movements and the potential integration with commonly worn assistive devices, such as hearing aids and eyeglass frames. Moreover, the ear's capacity to host both motion and vital sign sensors positions it as a promising location for comprehensive health monitoring. The application of state-of-the-art deep learning models yielded excellent results, achieving an accuracy of 98% in classifying six common activities, which surpasses previous equivalent approaches [13,14] and is comparable to current benchmark activity classifiers using one or multiple motion sensors at the trunk or lower extremities. Furthermore, even a conventional shallow model demonstrated compelling performance using a concise set of interpretable time-domain features. Notably, the resulting classification algorithm is agnostic to which ear the sensor is worn on and robust against moderate variations in sensor orientation (e.g., due to differences in auricle anatomy), meaning no initial calibration of the sensor orientation is required.

The study incorporated a diverse cohort of participants, spanning various ages, genders, and body dimensions, engaging in activities in natural, urban environments. While the algorithm successfully classified a wide range of activities, a limitation emerged in differentiating between sitting and standing, a challenge also observed in previous trunk-mounted approaches [22]. Future enhancements could involve considering postural transitions, potentially allowing for the distinction between these two activities. Additionally, the current algorithm did not account for active (e.g., biking) or passive (e.g., riding in a car or subway) transportation, suggesting room for expansion to create a more comprehensive activity monitor.

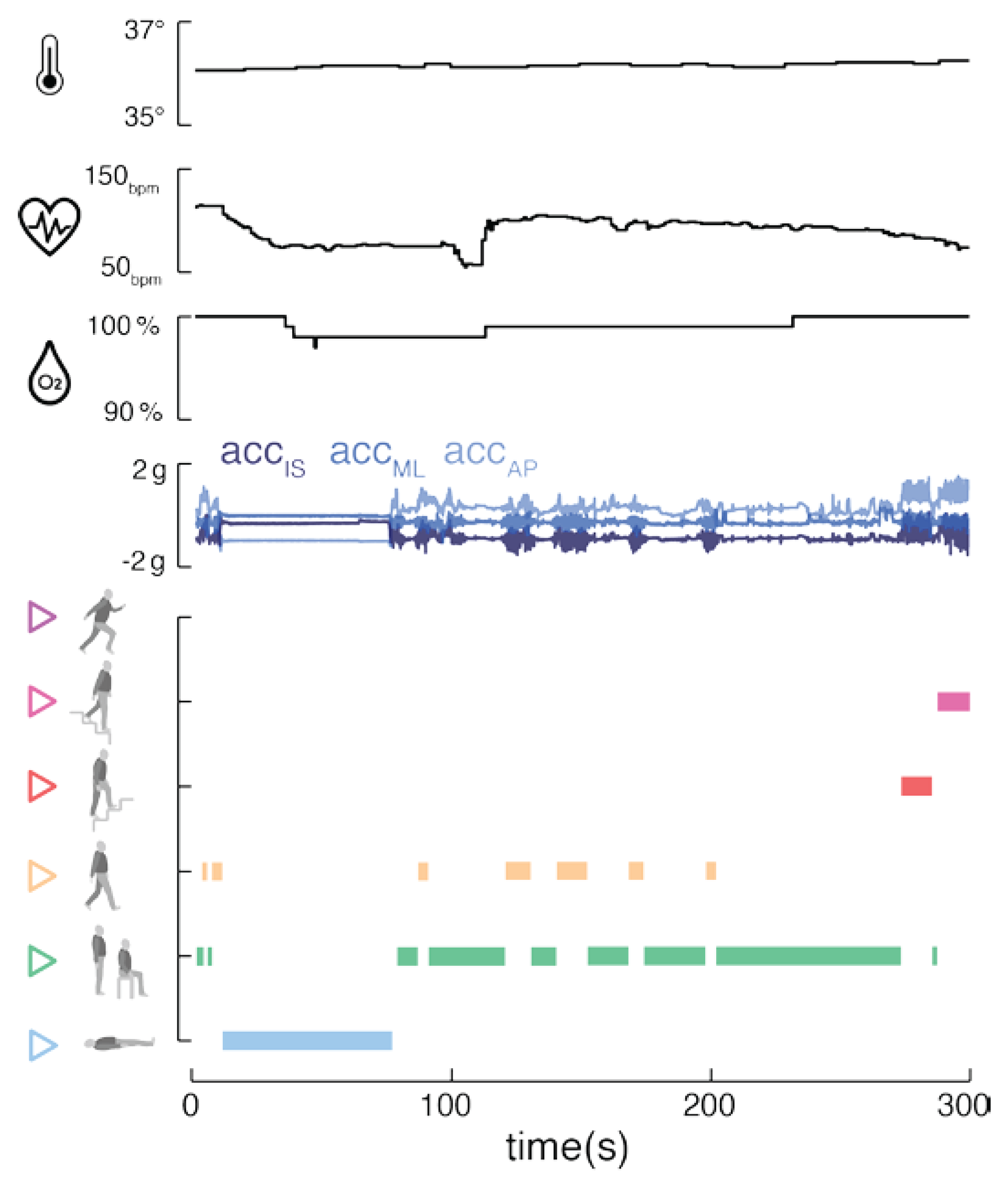

The integration of an ear-worn motion sensor with a vital signs monitor offers promising advantages (Figure 3). The parallel monitoring of bodily activity and vital signs, including temperature, pulse rate, and oxygen saturation, allows a comprehensive view of an individual's health status. This approach is particularly beneficial in tele-monitoring applications, where understanding the context and behavior is crucial for accurate feedback [23,24,25]. Correlating vital signs with specific activities aids in establishing individual baselines and identifying anomalies that may indicate health issues [26,27,28]. Long-term analysis of these correlations could provide personalized health insights, identifying individual behavioral patterns and habits that might impact health. For athletes, understanding how vital signs respond to different exercise intensities can help to optimize training regimes and prevent overtraining [29]. Finally, the motion sensor could contribute to improving the accuracy of vital sign readings by automatically detecting and addressing motion artifacts through algorithm tuning [18].

5. Conclusions

This study demonstrates the effectiveness of an ear-worn motion sensor for highly accurate classification of common sedentary and ambulatory activities. When combined with an in-ear vital signs monitor, this integrated system could enhance the accuracy of vital sign readings and enables the study of correlations between vital signs and activities. Future applications should expand the activity monitor to include activities like active and passive transportation, and efforts should be directed towards training algorithms for more detailed activity classification, such as characterizing step-to-step patterns during ambulatory activities [17]. This research lays the foundation for a comprehensive and versatile ear-centered monitoring system with potential applications in healthcare, sports, and beyond.

Author Contributions

Conceptualization, L.B., R.S. and M.W.; methodology, L.B., J.D., R.R. and M.W.; software, J.D., R.R. and M.W.; validation, L.B. and M.W.; formal analysis, L.B., J.D., R.R. and M.W.; investigation, L.B. and M.W.; resources, R.S. and M.W.; data curation, L.B. and M.W.; writing – original draft preparation, L.B. and M.W.; writing – review and editing, J.D., R.R. and R.S.; visualization, M.W.; supervision, M.W.; project administration, M.W.; funding acquisition, M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Federal Ministry for Education and Science, grant numbers 01EO1401 and 13GW0490B.

Institutional Review Board Statement

The study protocol was approved by the ethics committee of the medical faculty of the University of Munich (LMU, 22-0644) and the study was conducted in conformity with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets used and/or analysed during the current study will be available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Arshad, M.H.; Bilal, M.; Gani, A. Human Activity Recognition: Review, Taxonomy and Open Challenges. Sensors 2022, 22, 6463.

- Minh Dang L, Min K, Wang H, Jalil Piran M, Hee Lee C, Moon H. Sensor-based and vision-based human activity recognition: A comprehensive survey. Pattern Recognition 2020;108.

- Warmerdam, E.; Hausdorff, J.M.; Atrsaei, A.; Zhou, Y.; Mirelman, A.; Aminian, K.; Espay, A.J.; Hansen, C.; Evers, L.J.W.; Keller, A.; et al. Long-term unsupervised mobility assessment in movement disorders. Lancet Neurol. 2020, 19, 462–470.

- Schniepp, R.; Huppert, A.; Decker, J.; Schenkel, F.; Schlick, C.; Rasoul, A.; Dieterich, M.; Brandt, T.; Jahn, K.; Wuehr, M. Fall prediction in neurological gait disorders: differential contributions from clinical assessment, gait analysis, and daily-life mobility monitoring. J. Neurol. 2021, 268, 1–14.

- Ilg, W.; Seemann, J.; Giese, M.; Traschütz, A.; Schöls, L.; Timmann, D.; Synofzik, M. Real-life gait assessment in degenerative cerebellar ataxia. Neurology 2020, 95, e1199–e1210.

- Del Din, S.; Elshehabi, M.; Galna, B.; Hobert, M.A.; Warmerdam, E.; Suenkel, U.; Brockmann, K.; Metzger, F.; Hansen, C.; Berg, D.; et al. Gait analysis with wearables predicts conversion to Parkinson disease. Ann. Neurol. 2019, 86, 357–367.

- Rehman, R.Z.U.; Zhou, Y.; Del Din, S.; Alcock, L.; Hansen, C.; Guan, Y.; Hortobágyi, T.; Maetzler, W.; Rochester, L.; Lamoth, C.J.C. Gait Analysis with Wearables Can Accurately Classify Fallers from Non-Fallers: A Step toward Better Management of Neurological Disorders. Sensors 2020, 20, 6992.

- Wuehr, M.; Huppert, A.; Schenkel, F.; Decker, J.; Jahn, K.; Schniepp, R. Independent domains of daily mobility in patients with neurological gait disorders. J. Neurol. 2020, 267, 292–300.

- Galperin I, Hillel I, Del Din S, et al. Associations between daily-living physical activity and laboratory-based assessments of motor severity in patients with falls and Parkinson's disease. Parkinsonism Relat Disord 2019;62:85-90.

- Lord, S.; Chastin, S.F.M.; McInnes, L.; Little, L.; Briggs, P.; Rochester, L. Exploring patterns of daily physical and sedentary behaviour in community-dwelling older adults. J. Am. Geriatr. Soc. 2011, 40, 205–210.

- Sazonov, E.; Hegde, N.; Browning, R.C.; Melanson, E.L.; Sazonova, N.A. Posture and Activity Recognition and Energy Expenditure Estimation in a Wearable Platform. IEEE J. Biomed. Heal. Informatics 2015, 19, 1339–1346.

- Hegde, N.; Bries, M.; Swibas, T.; Melanson, E.; Sazonov, E. Automatic Recognition of Activities of Daily Living Utilizing Insole-Based and Wrist-Worn Wearable Sensors. IEEE J. Biomed. Heal. Informatics 2017, 22, 979–988.

- Min C, Mathur A, Kawsar F. Exploring audio and kinetic sensing on earable devices. Proceedings of the 4th ACM Workshop on Wearable Systems and Applications. Munich, Germany: Association for Computing Machinery, 2018: 5–10.

- Atallah, L.; Lo, B.; King, R.; Yang, G.-Z. Sensor Positioning for Activity Recognition Using Wearable Accelerometers. IEEE Trans. Biomed. Circuits Syst. 2011, 5, 320–329.

- Kavanagh, J.J.; Morrison, S.; Barrett, R.S. Coordination of head and trunk accelerations during walking. Eur. J. Appl. Physiol. 2005, 94, 468–475.

- Winter DA, Ruder GK, MacKinnon CD. Control of Balance of Upper Body During Gait. In: Winters JM, Woo SLY, eds. Multiple Muscle Systems: Biomechanics and Movement Organization. New York, NY: Springer New York, 1990: 534-41.

- Seifer, A.-K.; Dorschky, E.; Küderle, A.; Moradi, H.; Hannemann, R.; Eskofier, B.M. EarGait: Estimation of Temporal Gait Parameters from Hearing Aid Integrated Inertial Sensors. Sensors 2023, 23, 6565.

- Röddiger T, Clarke C, Breitling P, et al. Sensing with Earables. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 2022;6:1-57.

- Zhang M, Sawchuk AA. USC-HAD. Proceedings of the 2012 ACM Conference on Ubiquitous Computing 2012: 1036-43.

- Ordóñez, F.J.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

- Zhang, Z.; Wang, W.; An, A.; Qin, Y.; Yang, F. A human activity recognition method using wearable sensors based on convtransformer model. Evol. Syst. 2023, 14, 939–955.

- Lugade, V.; Fortune, E.; Morrow, M.; Kaufman, K. Validity of using tri-axial accelerometers to measure human movement – Part I: Posture and movement detection. Med Eng. Phys. 2014, 36, 169–176.

- Ivascu T, Negru V. Activity-Aware Vital Sign Monitoring Based on a Multi-Agent Architecture. Sensors (Basel) 2021;21.

- Wang, Z.; Yang, Z.; Dong, T. A Review of Wearable Technologies for Elderly Care that Can Accurately Track Indoor Position, Recognize Physical Activities and Monitor Vital Signs in Real Time. Sensors 2017, 17, 341.

- Starliper, N.; Mohammadzadeh, F.; Songkakul, T.; Hernandez, M.; Bozkurt, A.; Lobaton, E. Activity-Aware Wearable System for Power-Efficient Prediction of Physiological Responses. Sensors 2019, 19, 441.

- Wu K, Chen EH, Hao X, et al. Adaptable Action-Aware Vital Models for Personalized Intelligent Patient Monitoring. 2022 International Conference on Robotics and Automation (ICRA); 23-27 May 2022: 826-32.

- Lokare, N.; Zhong, B.; Lobaton, E. Activity-Aware Physiological Response Prediction Using Wearable Sensors. Inventions 2017, 2, 32.

- Sun F-T, Kuo C, Cheng H-T, Buthpitiya S, Collins P, Griss M. Activity-Aware Mental Stress Detection Using Physiological Sensors. 2012; Berlin, Heidelberg: Springer Berlin Heidelberg: 211-30.

- Seshadri, D.R.; Li, R.T.; Voos, J.E.; Rowbottom, J.R.; Alfes, C.M.; Zorman, C.A.; Drummond, C.K. Wearable sensors for monitoring the internal and external workload of the athlete. npj Digit. Med. 2019, 2, 1–18.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).