Submitted: 02 April 2024

Posted: 03 April 2024

Abstract

Keywords:

Author Summary

- Two integrated dynamic models are built: one of the C. elegans whole-brain network and one of the robot’s motion dynamics;

- Real-time communication between the BNN model and the robot’s dynamic model is achieved through suitable encoding and decoding algorithms, enabling the two models to operate together;

- The BNN model is also coupled to the experimental robot prototype so that the two work cooperatively;

- Mechanisms for controlling the robot with the BNN model are designed and shown to be effective in numerical and experimental tests, covering ‘foraging’ behavior control and locomotion control.

1. Introduction

2. Materials and Methods

2.1. Dynamic Simulation of C. elegans’ BNN

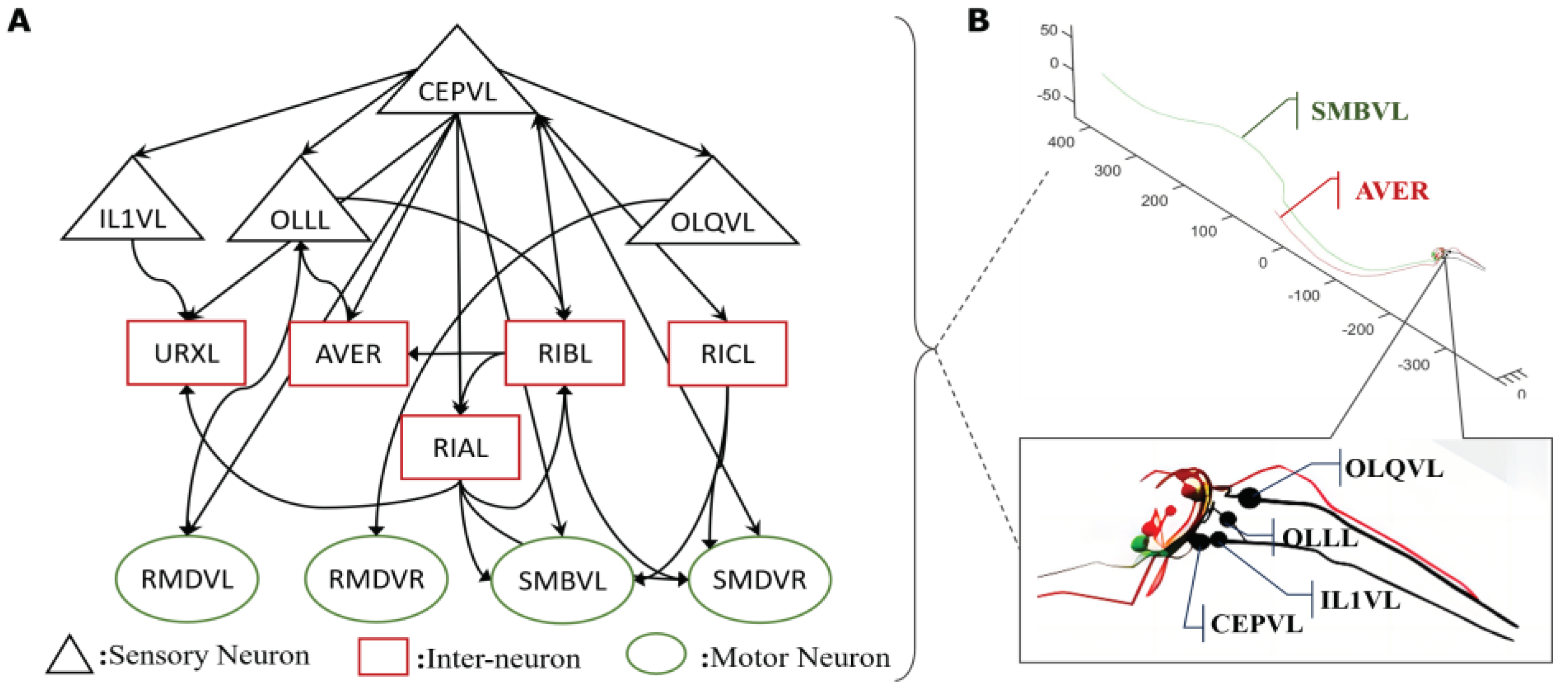

2.1.1. The Whole Brain Structure of C. elegans

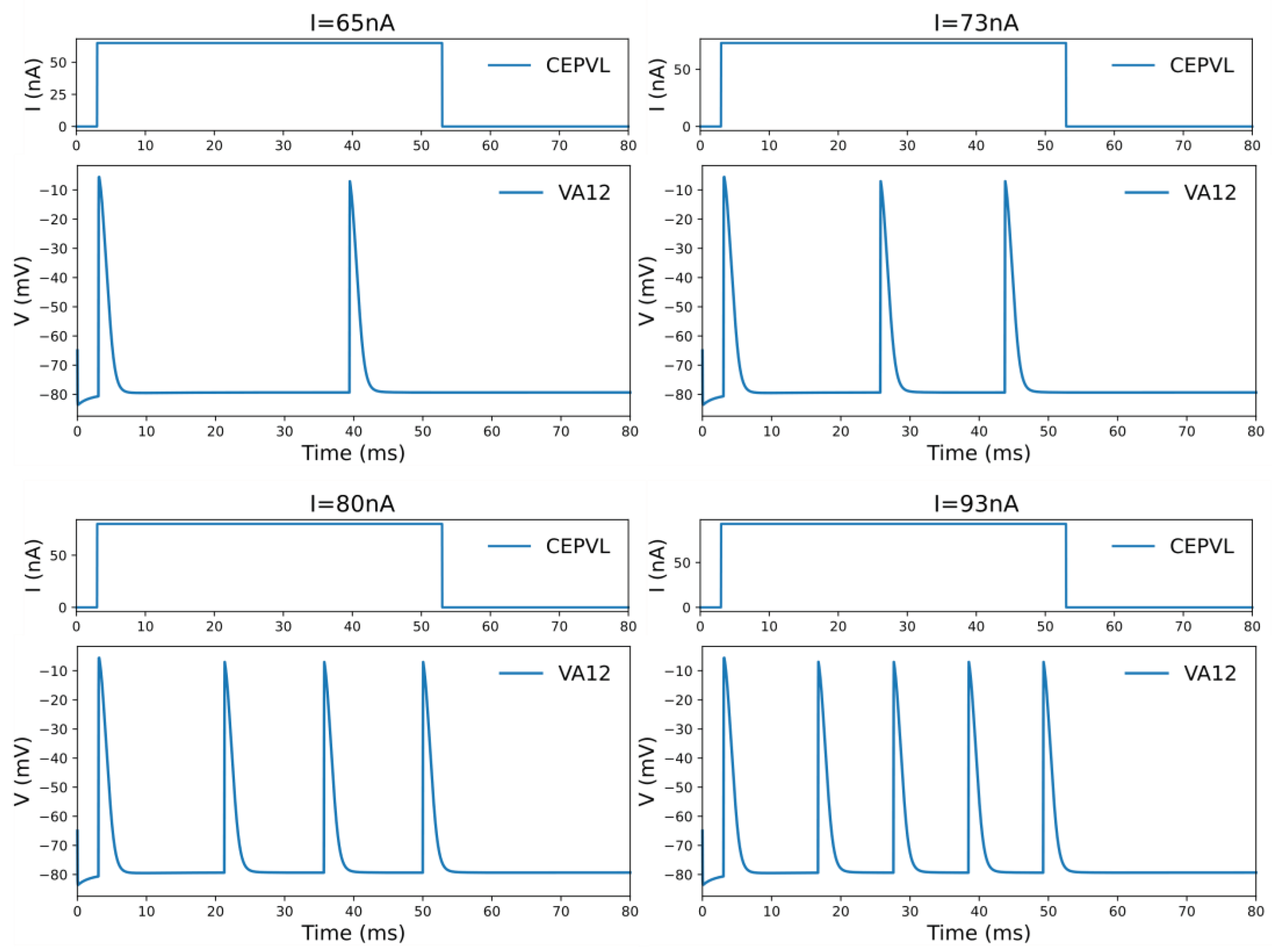

2.1.2. Modelling the Neural Network of C. elegans

2.1.3. Control Circuit Identification

2.2. Robotic Platforms

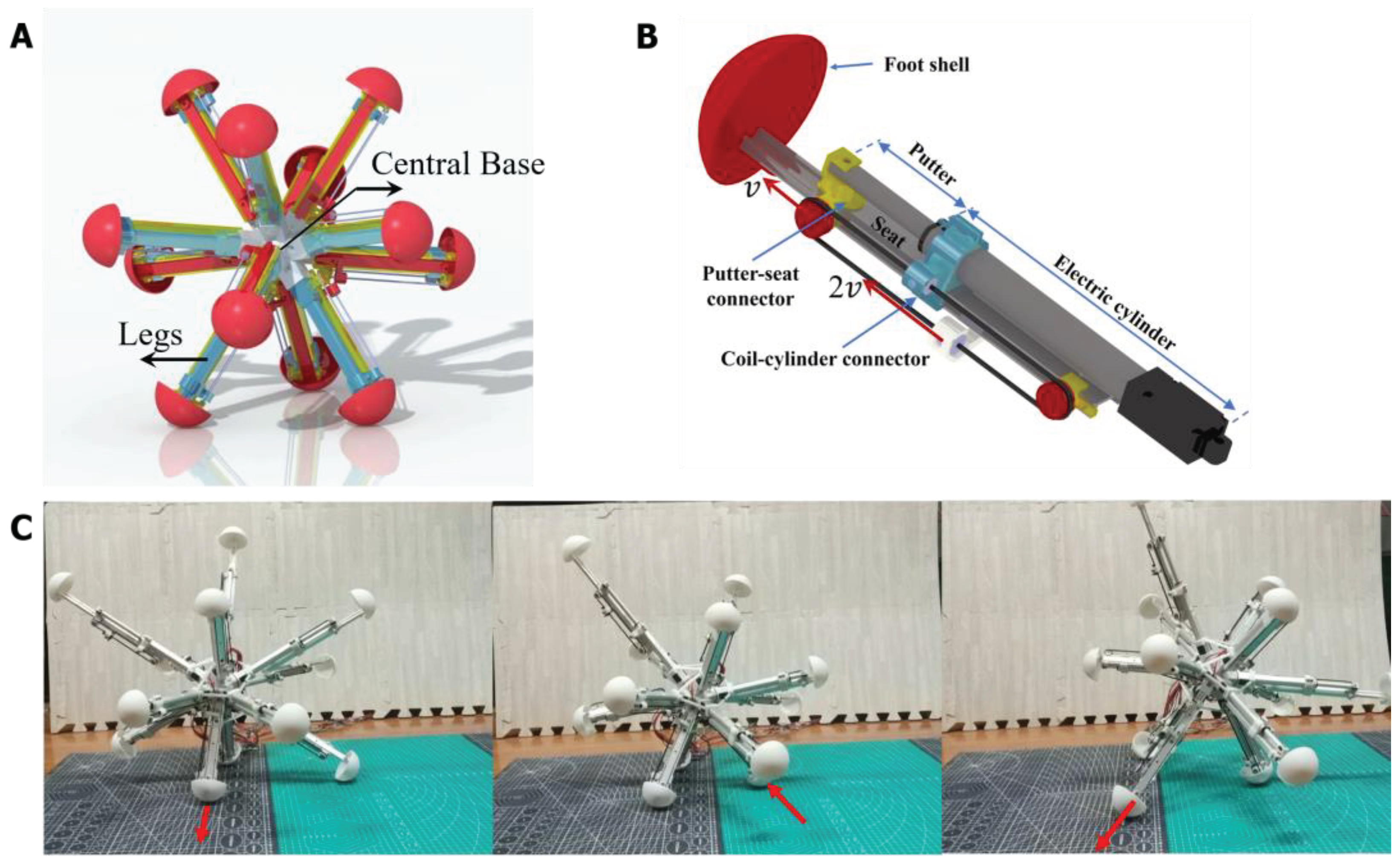

2.2.1. 12-legged Radial-Skeleton Robot

2.2.2. Kinetic Model of Robot for Simulation

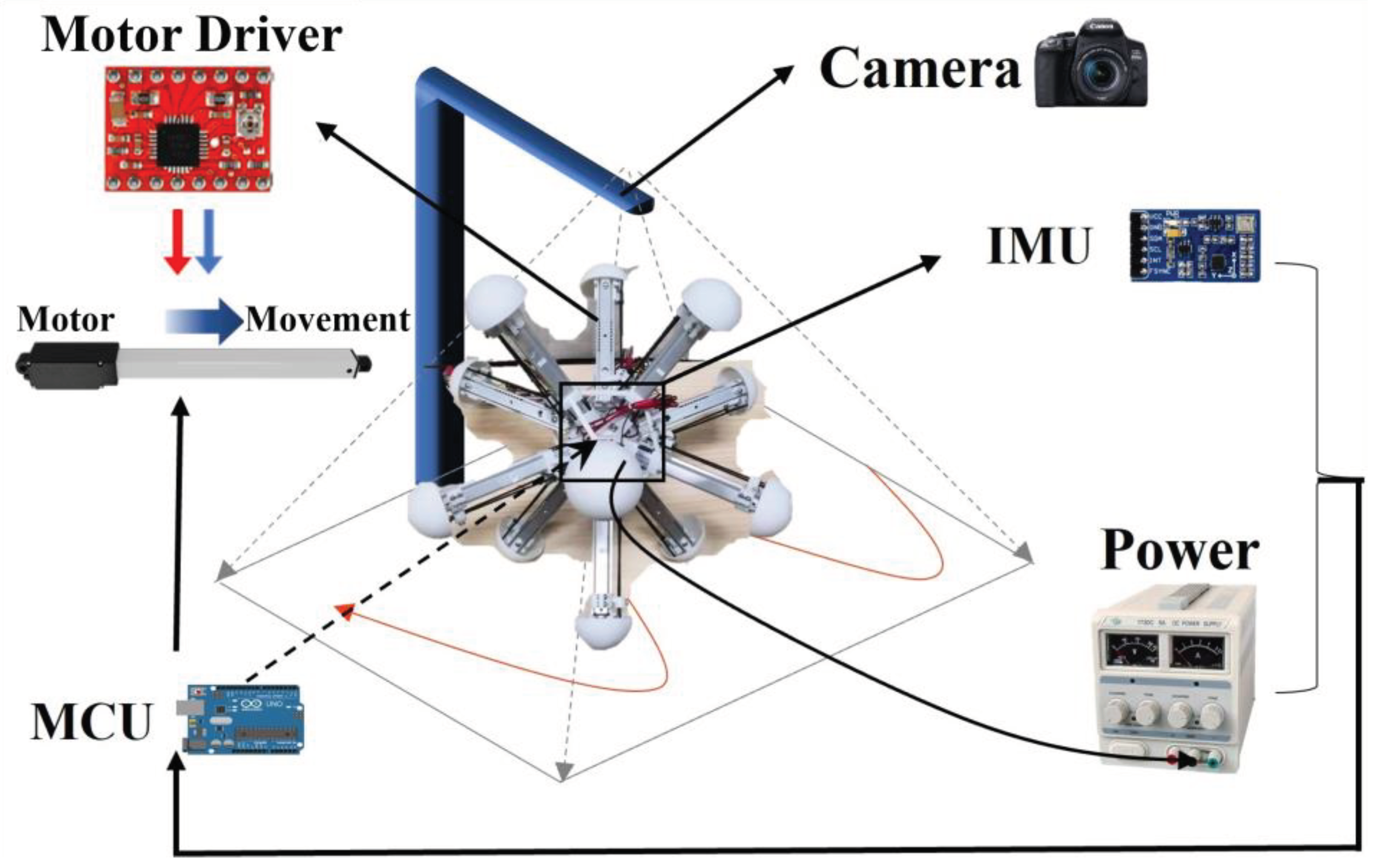

2.2.3. Construction of the Experiment Platform

3. Results

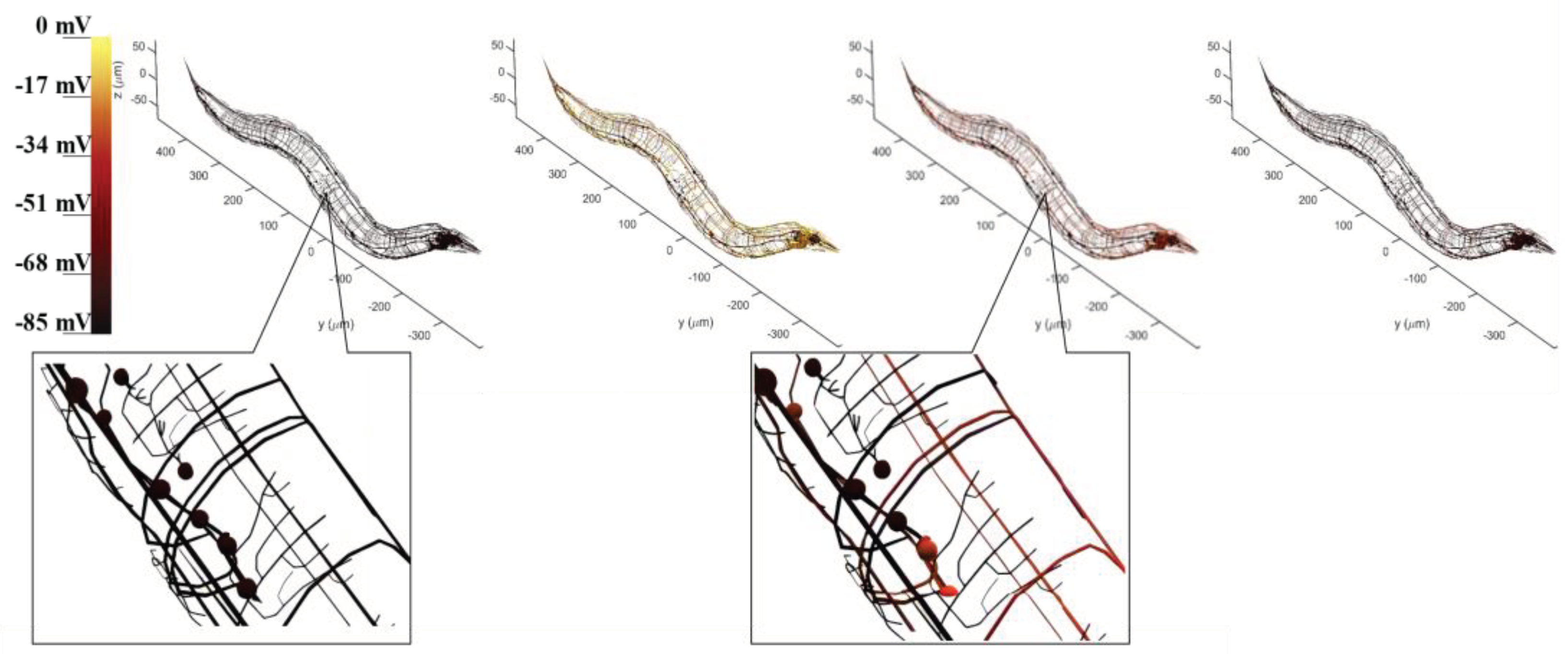

3.1. Visualization of the Whole-Brain BNN Model

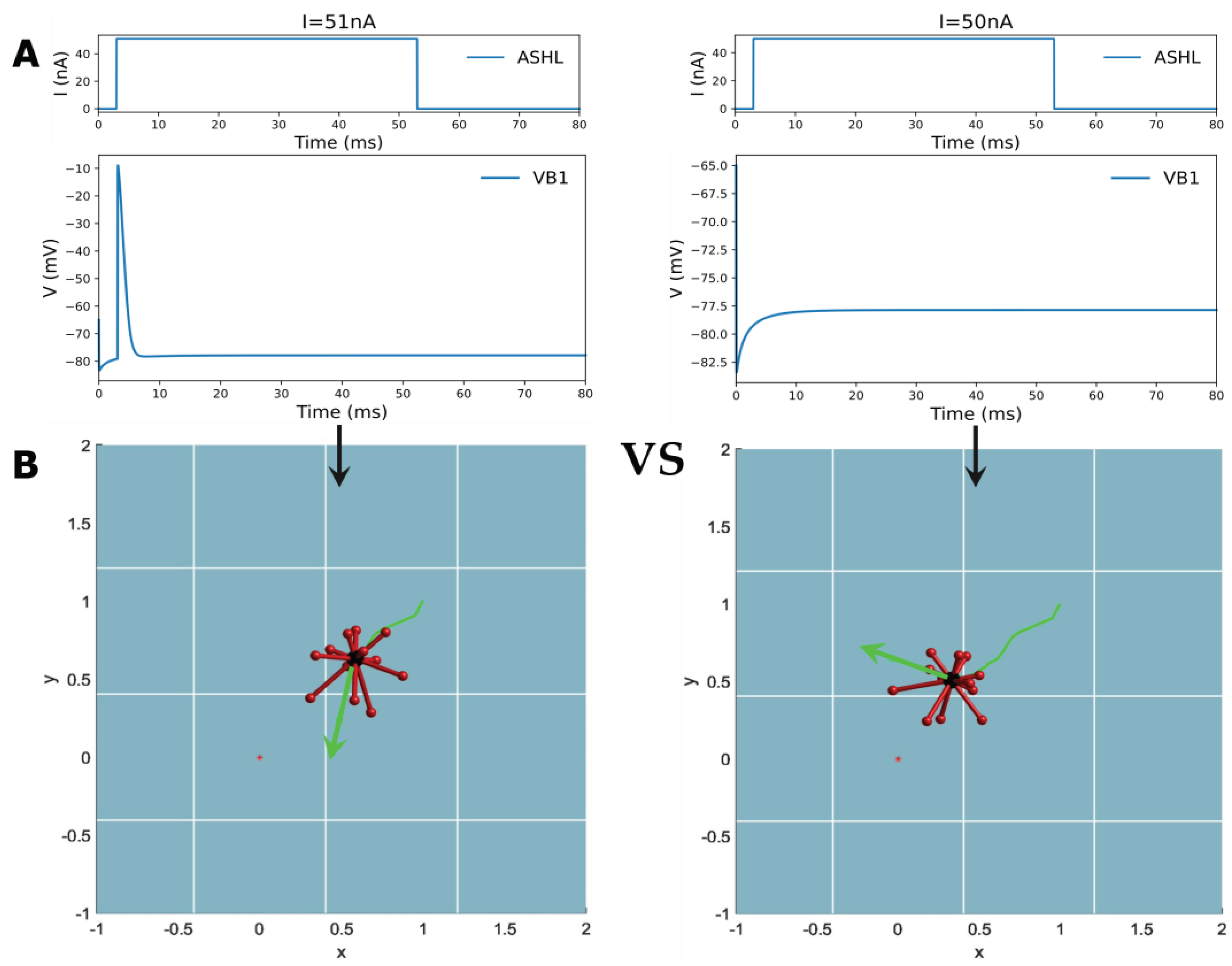

3.2. BNN Model Control of Robot Orientation

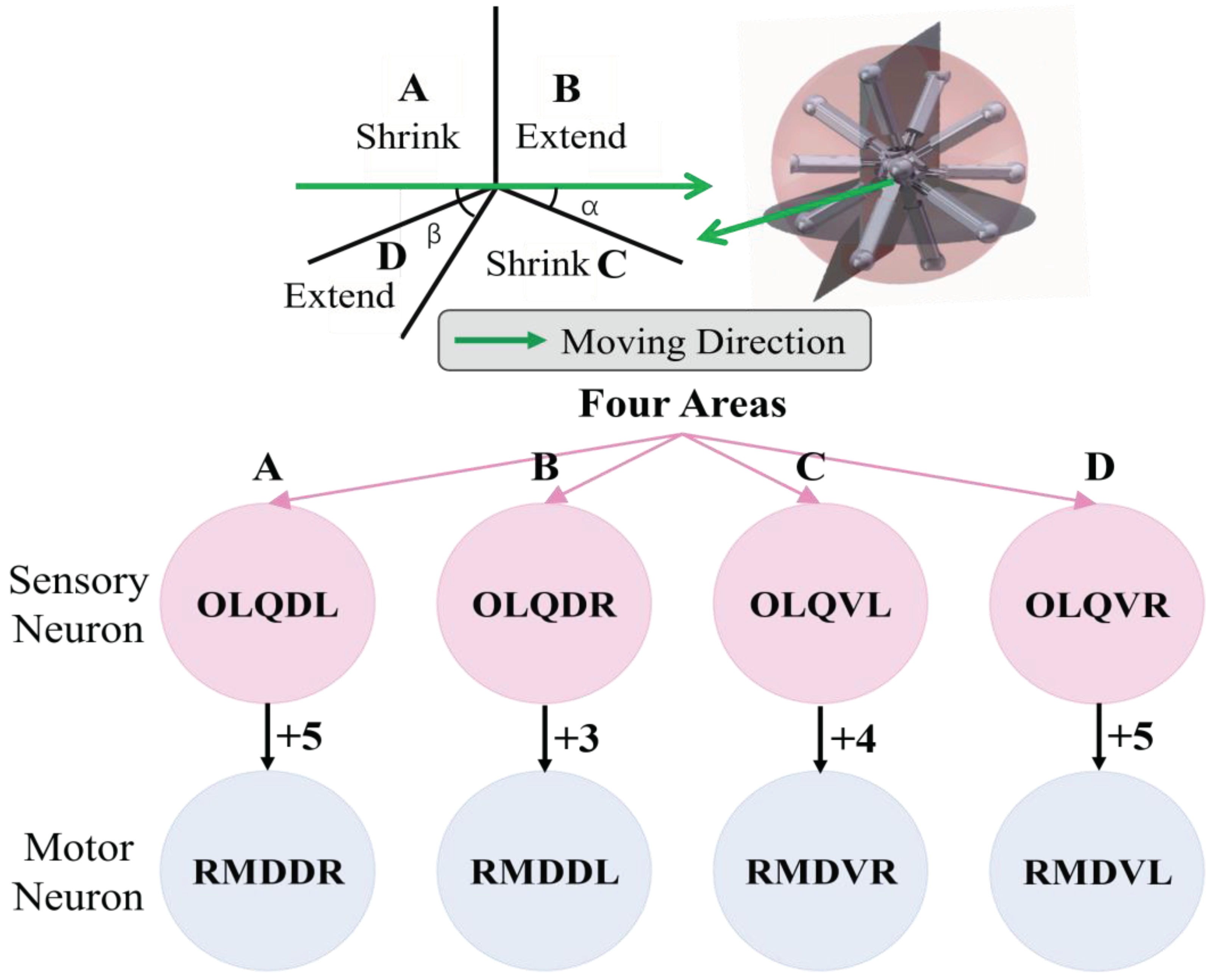

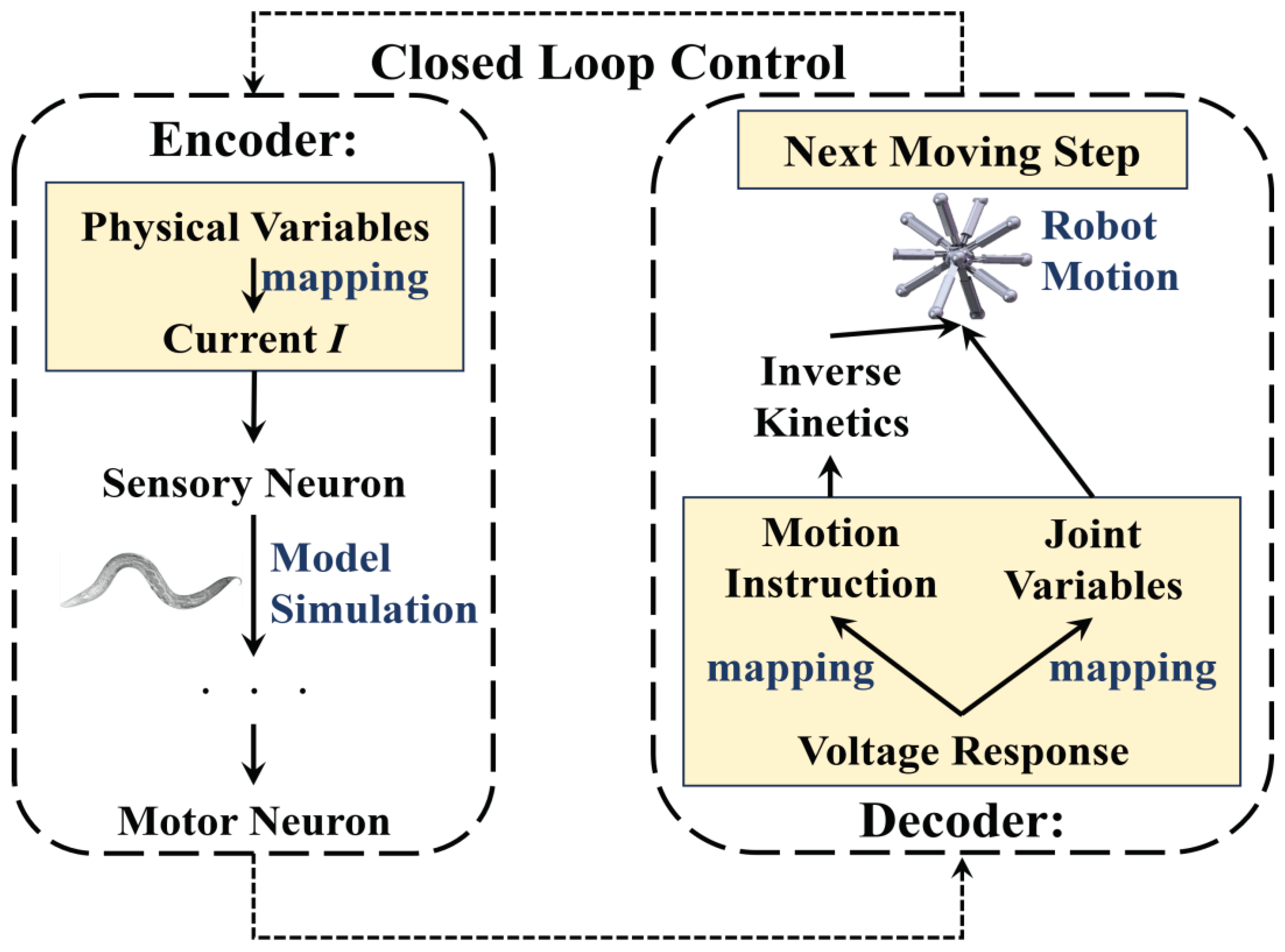

3.2.1. Mechanism for BNN to Control Robot

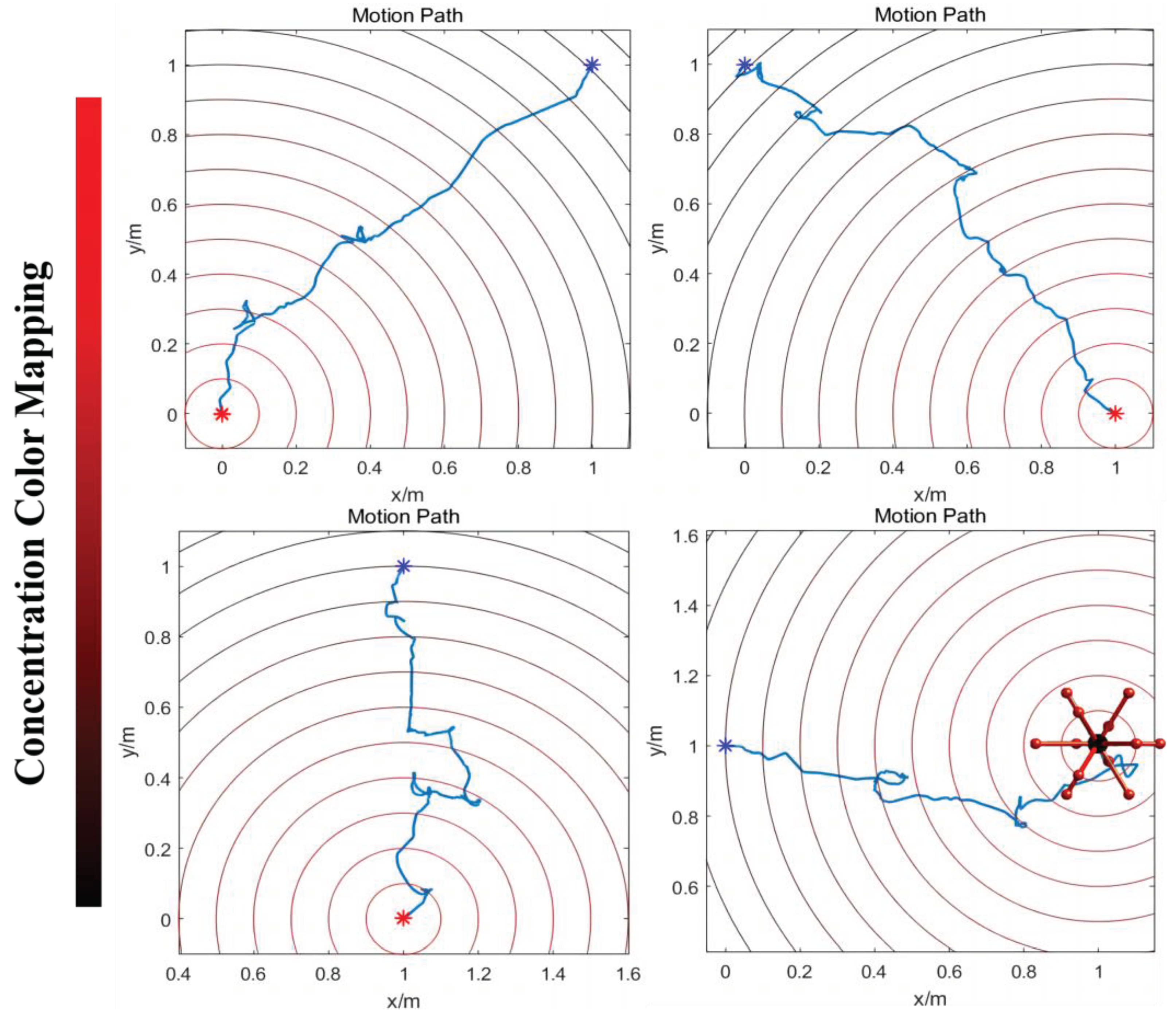

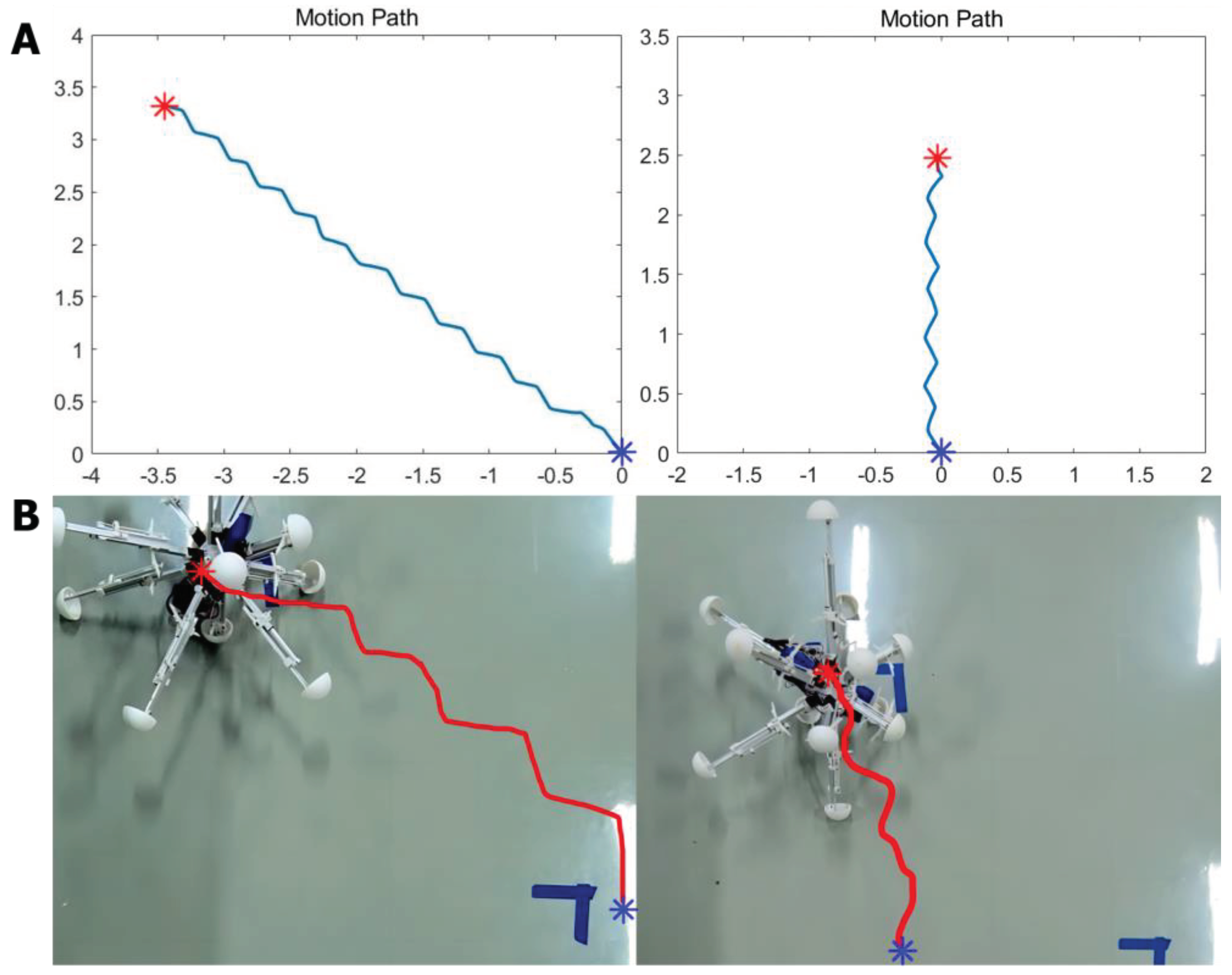

3.2.2. Innate ‘Foraging’ Behavior Control

3.2.3. Omnidirectional Locomotion Control

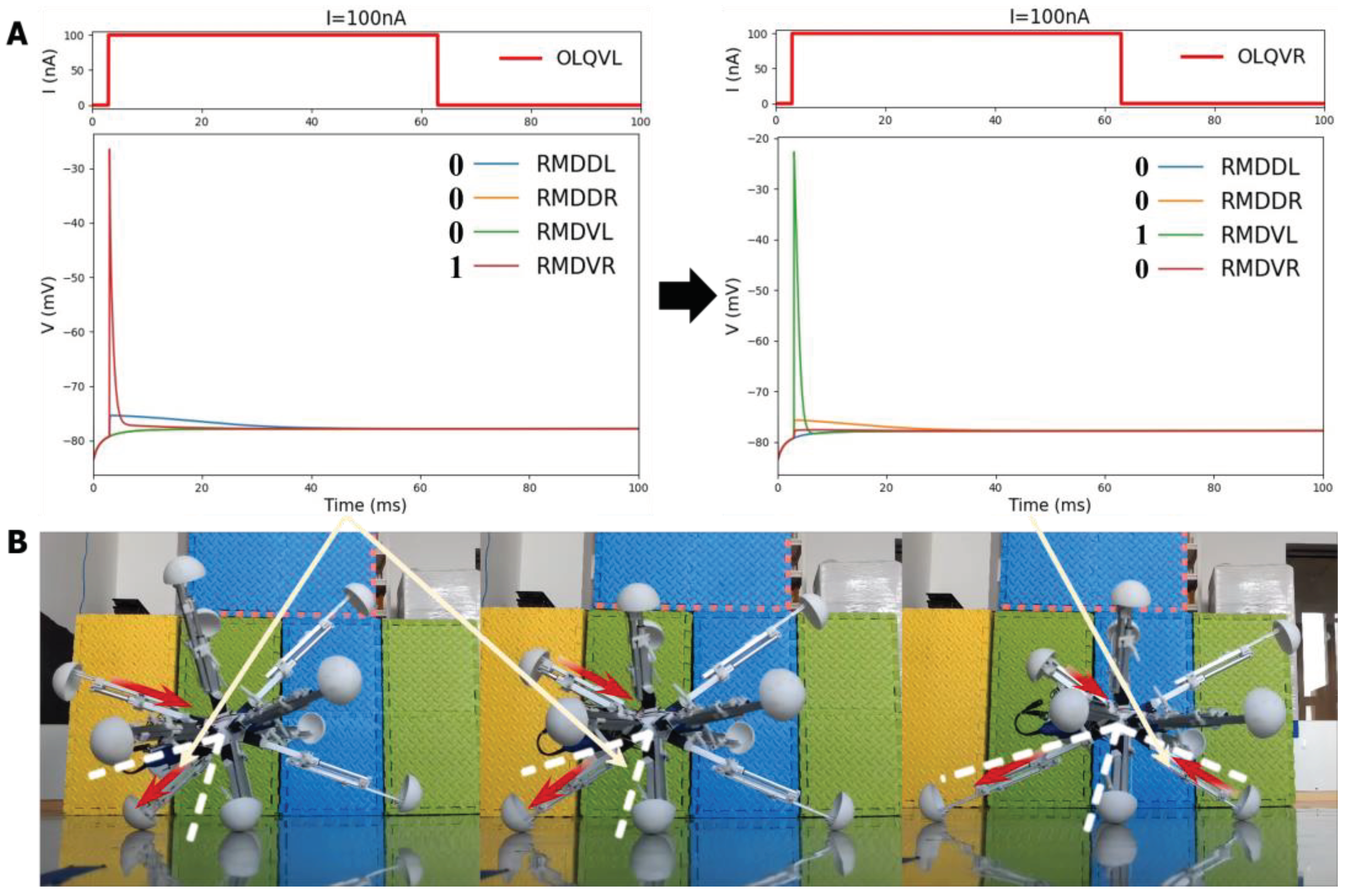

3.3. Experiment Validation of BNN Control

4. Discussion

4.1. Contributions and Limitations

4.2. Result Analysis

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Parameters | Values |
|---|---|
| | 3.1 |
| | 0.289 nS |
| | -75 mV |
| | 55 mV |
| | -84 mV |
| | 45 mV |

| Sensory Neuron (Mechanosensory) | Motor Neurons | Stimulus Current Range (nA) |
|---|---|---|
| ASHL | DA, DB, VA, VB, VD, SMB, SMD, RMB, RMD | 60- (One action potential only) |
| ADEL | DA, DB, VA, VB, VD | 59-64 (Bifurcation Range) |
| ADER | DA, DB, VA, VB, VD | 59-68 (Bifurcation Range) |
| CEPDL | DA, DB, VA, VB, VD, SMB, SMD, RMB, RMD | 64-111 (Bifurcation Range) |
| CEPDR | DA, DB, VA, VB, VD, SMB, SMD, RMB, RMD | 64-91 (Bifurcation Range) |
| CEPVL | DA, DB, VA, VB, VD, SMB, SMD, RMB, RMD | 64-111 (Bifurcation Range) |
| CEPVR | DA, DB, VA, VB, VD, SMB, SMD, RMB, RMD | 64-85 (Bifurcation Range) |
| PDEL | DA, DB, VA, VB, SMDVL | 60-190 (Bifurcation Range) |
| PDER | DA, DB, VA, VB, VD, SMB, SMD, RMB, RMD | 60-88 (Bifurcation Range) |
| OLQDL | RMDDR | 91- (One action potential only) |
| OLQDR | RMDDL | 91- (One action potential only) |
| OLQVL | RMDVR | 91- (One action potential only) |
| OLQVR | RMDVL | 94- (One action potential only) |
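For readers who want to work with these ranges programmatically, the table above can be held in a small lookup structure. The following is only a minimal sketch, not the authors' implementation: the names `StimulusRange`, `STIMULUS_RANGES`, and `in_listed_range` are hypothetical, an open-ended entry such as "60-" is assumed to mean "60 nA and above", and the range endpoints are assumed to be inclusive.

```python
from typing import NamedTuple, Optional


class StimulusRange(NamedTuple):
    """One row of the table (hypothetical container, not from the paper)."""
    low_nA: float
    high_nA: Optional[float]  # None: the table lists only a lower bound, e.g. "60-"
    response: str             # response type as labelled in the table


# Values copied from the table; the open-ended reading of "60-" is an assumption.
STIMULUS_RANGES = {
    "ASHL": StimulusRange(60, None, "one action potential only"),
    "ADEL": StimulusRange(59, 64, "bifurcation range"),
    "ADER": StimulusRange(59, 68, "bifurcation range"),
    "CEPDL": StimulusRange(64, 111, "bifurcation range"),
    "CEPDR": StimulusRange(64, 91, "bifurcation range"),
    "CEPVL": StimulusRange(64, 111, "bifurcation range"),
    "CEPVR": StimulusRange(64, 85, "bifurcation range"),
    "PDEL": StimulusRange(60, 190, "bifurcation range"),
    "PDER": StimulusRange(60, 88, "bifurcation range"),
    "OLQDL": StimulusRange(91, None, "one action potential only"),
    "OLQDR": StimulusRange(91, None, "one action potential only"),
    "OLQVL": StimulusRange(91, None, "one action potential only"),
    "OLQVR": StimulusRange(94, None, "one action potential only"),
}


def in_listed_range(neuron: str, current_nA: float) -> bool:
    """Return True if current_nA lies in the range listed for this sensory neuron.

    Endpoints are treated as inclusive (an assumption); a missing upper
    bound is treated as unbounded (also an assumption).
    """
    r = STIMULUS_RANGES[neuron]
    if current_nA < r.low_nA:
        return False
    return r.high_nA is None or current_nA <= r.high_nA
```

A call such as `in_listed_range("ADEL", 60)` checks a candidate stimulus current against the listed range before it is passed to a simulation; the check says nothing about the resulting neural dynamics, only whether the value falls inside the tabulated interval.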

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).