Submitted: 03 April 2024

Posted: 03 April 2024


Abstract

Keywords:

1. Introduction

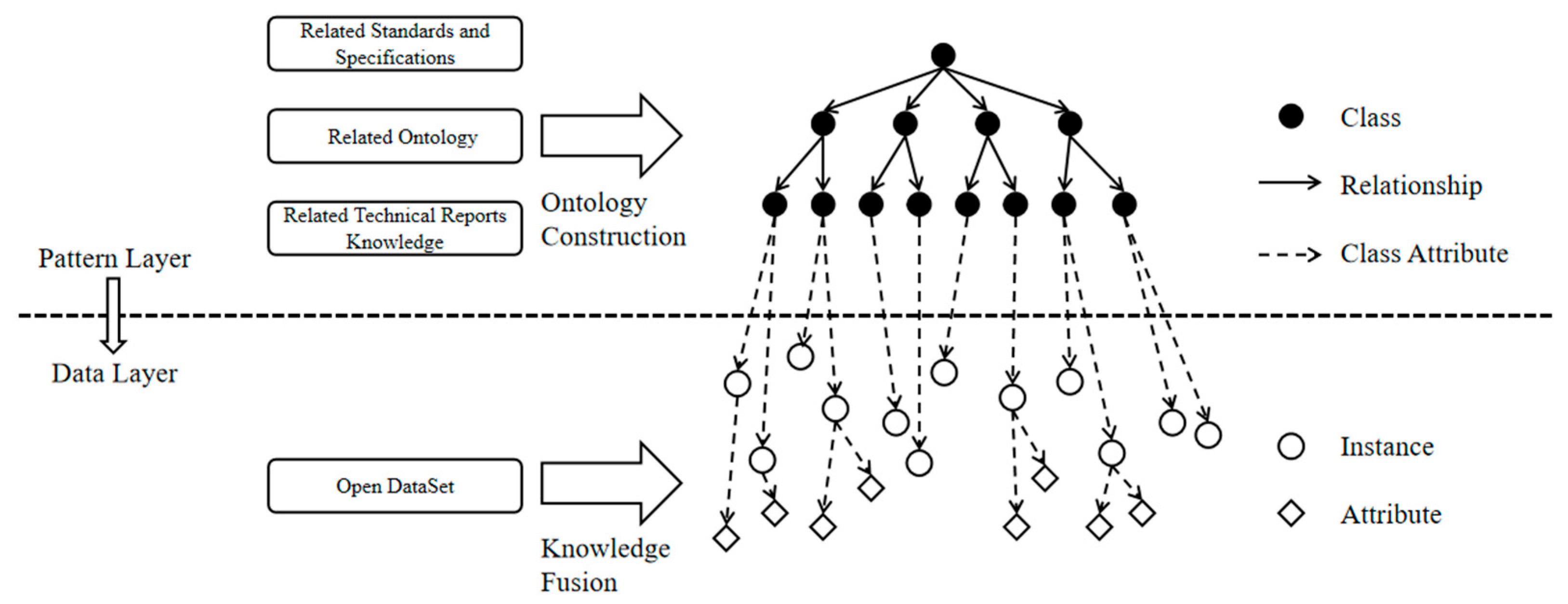

2. Knowledge Graph

3. Methods

3.1. Knowledge Acquisition

3.1.1. Domain Concepts

3.1.2. Domain Ontology

3.1.3. Domain Data

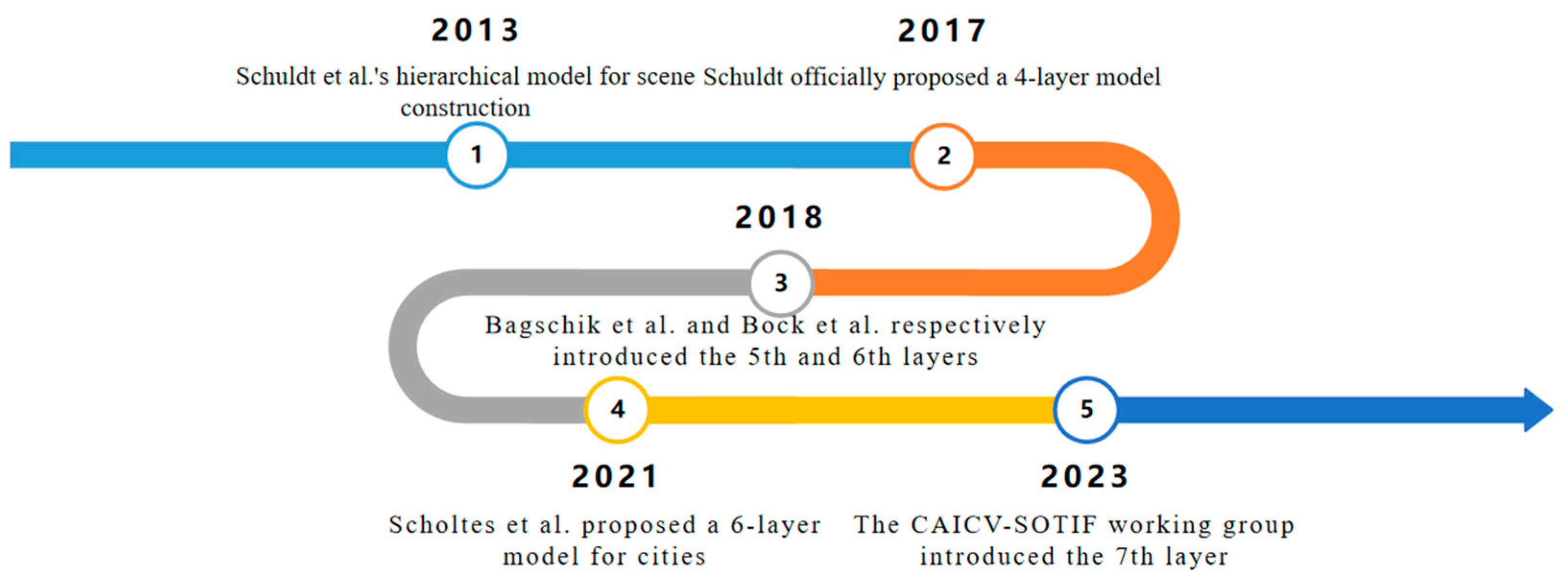

3.2. Construction of Ontology for Driving Scenarios

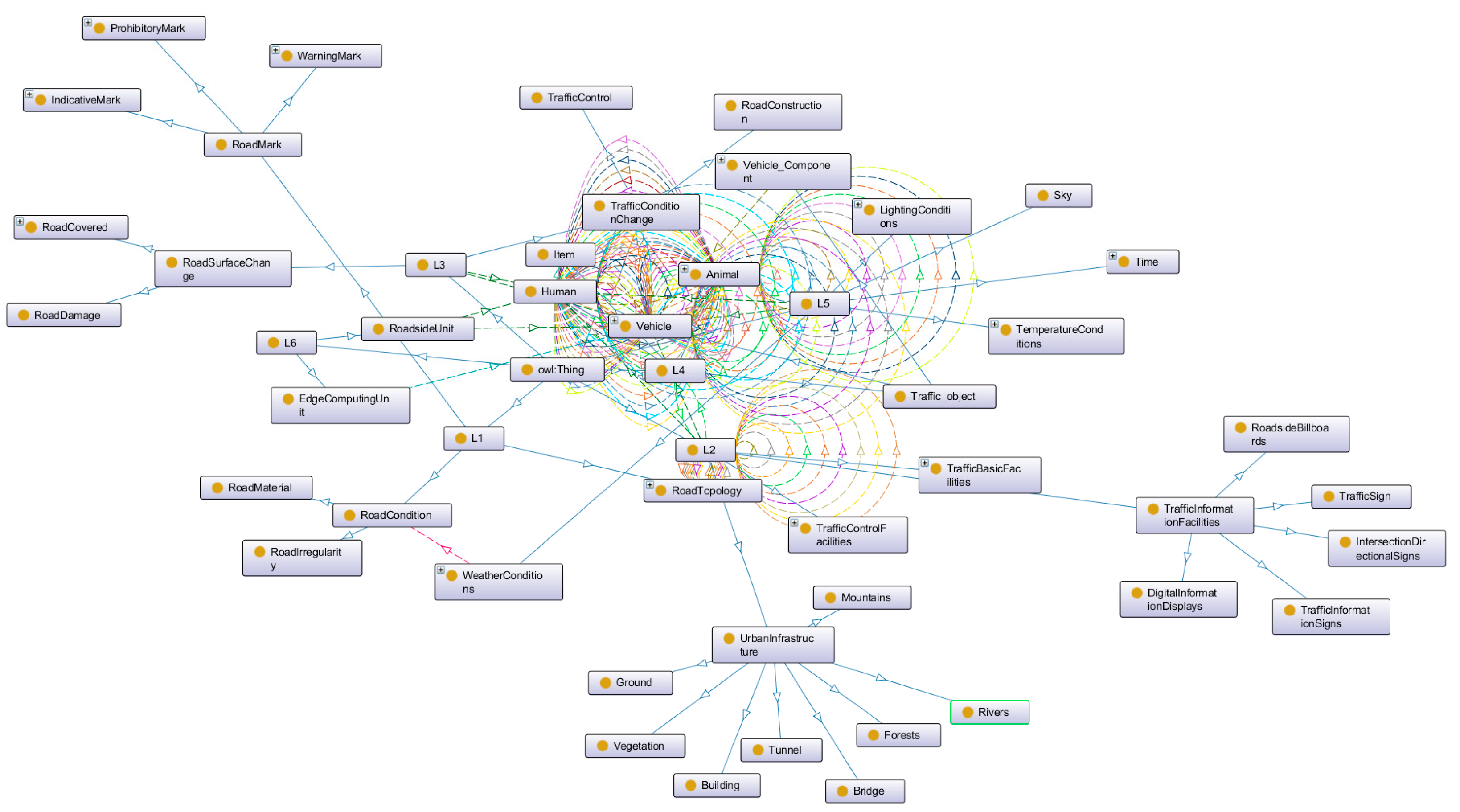

3.2.1. Definition of Classes and Their Hierarchical Structure

3.2.2. Definition of Class Attributes

3.2.3. Definition of Class Relationships

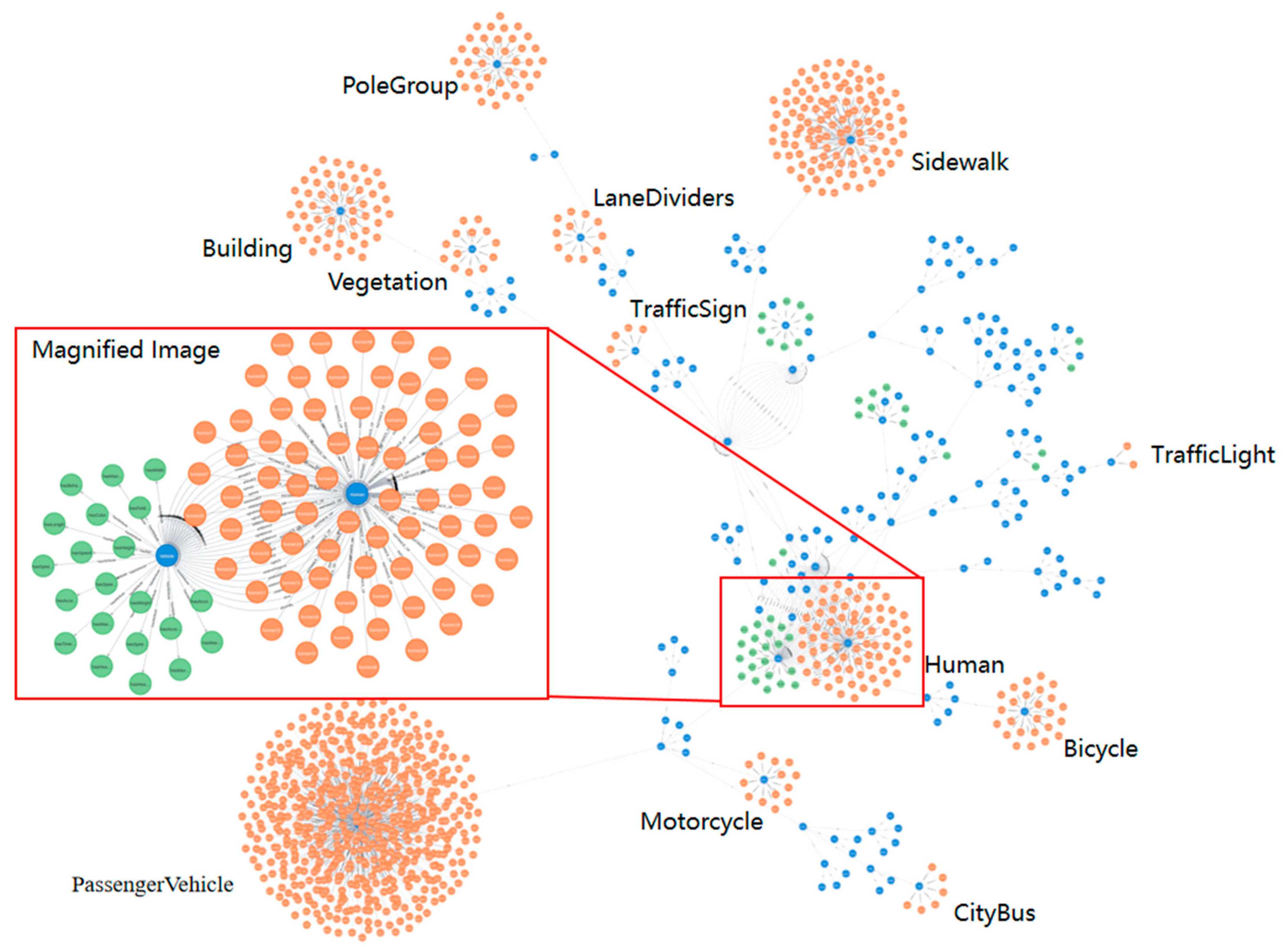

3.3. Knowledge Extraction and Fusion

3.3.1. Data Extraction

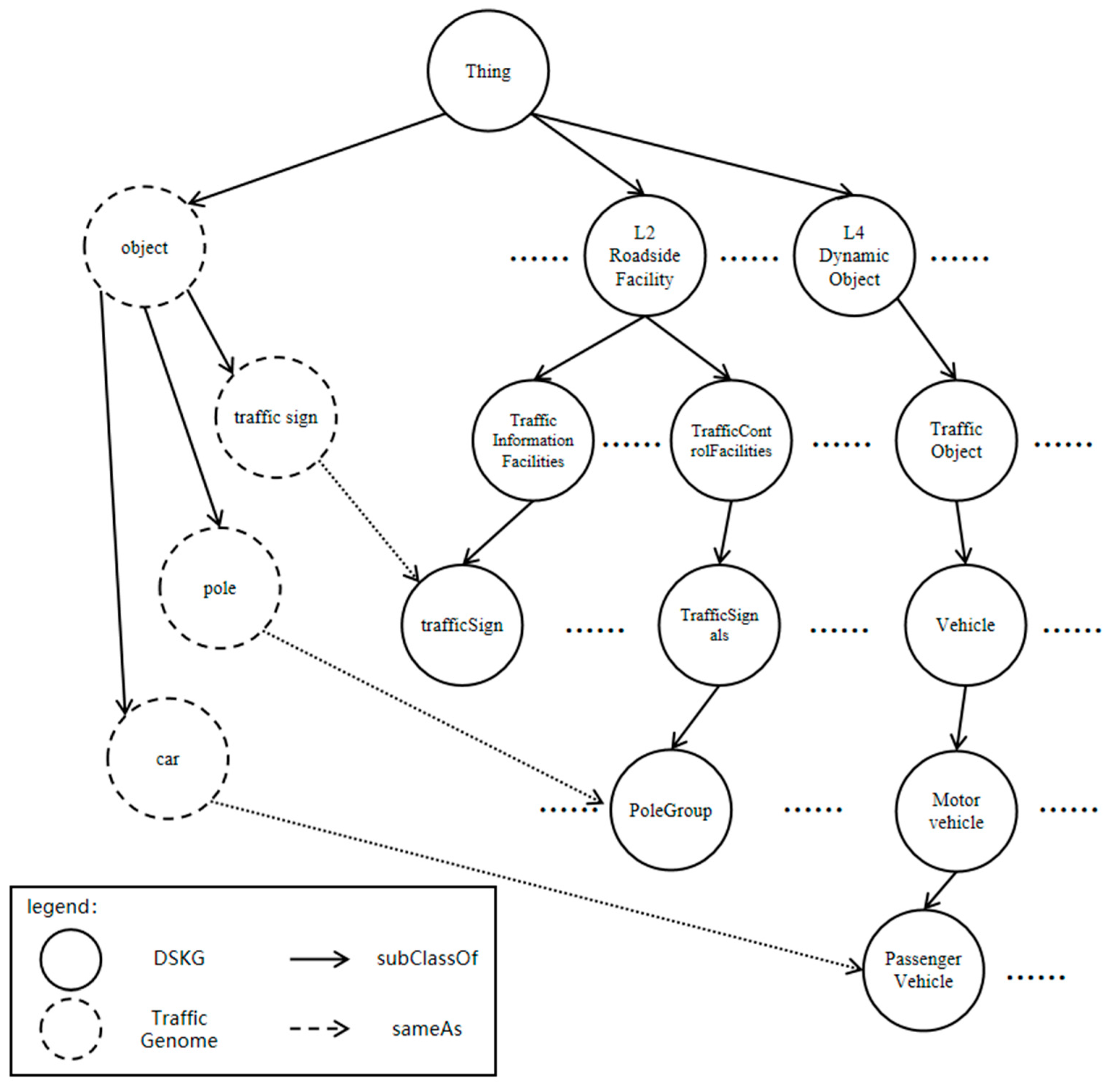

3.3.2. Knowledge Fusion

3.4. Knowledge Inference Models Based on Representation Learning

3.4.1. Model Definition

3.4.2. Model Training and Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- 1. ISO 21448:2022 Road vehicles - Safety of the intended functionality. Available online: https://www.iso.org/obp/ui#iso:std:iso:21448:ed-1:v1:en (accessed on 28 March 2024).

- 2. Wickramarachchi R, Henson C, Sheth A. CLUE-AD: a context-based method for labeling unobserved entities in autonomous driving data. Proceedings of the AAAI Conference on Artificial Intelligence 2023, 37, 16491–16493.

- 3. Singhal A. Introducing the knowledge graph: things, not strings. Available online: https://blog.google/products/search/introducing-knowledge-graph-things-not/ (accessed on 28 March 2024).

- 4. Geyer S, Baltzer M, Franz B, et al. Concept and development of a unified ontology for generating test and use-case catalogues for assisted and automated vehicle guidance. IET Intelligent Transport Systems 2014, 8, 183–189.

- 5. Schuldt F, Saust F, Lichte B, et al. Effiziente systematische Testgenerierung für Fahrerassistenzsysteme in virtuellen Umgebungen. Automatisierungssysteme, Assistenzsysteme und Eingebettete Systeme Für Transportmittel. 2013, 4, 1–7.

- 6. Schuldt F. Ein Beitrag für den methodischen Test von automatisierten Fahrfunktionen mit Hilfe von virtuellen Umgebungen. Doctoral dissertation, Technische Universität Braunschweig, Braunschweig, 2017.

- 7. Audi G, Volkswagen AG, et al. The PEGASUS Method. Available online: https://www.pegasusprojekt.de/en/pegasus-method (accessed on 28 March 2024).

- 8. Bagschik G, Menzel T, Maurer M. Ontology based scene creation for the development of automated vehicles. IEEE Intelligent Vehicles Symposium 2018, 1813–1820.

- 9. Bock J, Krajewski R, Eckstein L, et al. Data basis for scenario-based validation of HAD on highways. Aachen Colloquium Automobile and Engine Technology 2018, 8–10.

- 10. Bagschik G, Menzel T, Körner C, et al. Wissensbasierte szenariengenerierung für betriebsszenarien auf deutschen autobahnen. Workshop Fahrerassistenzsysteme und automatisiertes Fahren 2018, 12, 12.

- 11. Weber H, Bock J, Klimke J, et al. A framework for definition of logical scenarios for safety assurance of automated driving. Traffic Injury Prevention 2019, 20, 65–70.

- 12. Scholtes M, Westhofen L, Turner LR, et al. 6-layer model for a structured description and categorization of urban traffic and environment. IEEE Access 2021, 9, 59131–59147.

- 13. Report on Advanced Technology Research of Functional Safety for Intelligent Connected Vehicles. Available online: http://www.caicv.org.cn/index.php/material?cid=38 (accessed on 28 March 2024).

- 14. Bagschik G, Menzel T, Maurer M. Ontology based scene creation for the development of automated vehicles. IEEE Intelligent Vehicles Symposium 2018, 1813–1820.

- 15. Menzel T, Bagschik G, Isensee L, et al. From functional to logical scenarios: Detailing a keyword-based scenario description for execution in a simulation environment. IEEE Intelligent Vehicles Symposium. 2019, 2383–2390.

- 16. Tahir Z, Alexander R. Intersection focused situation coverage-based verification and validation framework for autonomous vehicles implemented in Carla. International Conference on Modelling and Simulation for Autonomous Systems 2021, 191–212.

- 17. Herrmann M, Witt C, Lake L, et al. Using ontologies for dataset engineering in automotive AI applications. Design, Automation & Test in Europe Conference & Exhibition 2022, 526–531.

- 18. ASAM OpenXOntology. Available online: https://www.asam.net/standards/asam-openxontology/ (accessed on 28 March 2024).

- 19. Bogdoll D, Guneshka S, Zöllner JM. One ontology to rule them all: Corner case scenarios for autonomous driving. European Conference on Computer Vision 2022, 409–425.

- 20. Westhofen L, Neurohr C, Butz M, et al. Using ontologies for the formalization and recognition of criticality for automated driving. IEEE Open Journal of Intelligent Transportation Systems 2022, 519–538.

- 21. Geiger A, Lenz P, Stiller C, et al. Vision meets robotics: The kitti dataset. The International Journal of Robotics Research 2013, 32, 1231–1237.

- 22. Yu F, Chen H, Wang X, et al. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2020, 2636–2645.

- 23. Caesar H, Bankiti V, Lang AH, et al. nuscenes: A multimodal dataset for autonomous driving. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2020, 11621–11631.

- 24. Sun P, Kretzschmar H, Dotiwalla X, et al. Scalability in perception for autonomous driving: Waymo open dataset. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition 2020, 2446–2454.

- 25. Geyer J, Kassahun Y, Mahmudi M, et al. A2d2: Audi autonomous driving dataset. arXiv preprint. 2020; arXiv:2004.06320.

- 26. Xiao P, Shao Z, Hao S, et al. Pandaset: Advanced sensor suite dataset for autonomous driving. IEEE International Intelligent Transportation Systems Conference 2021, 3095–3101.

- 27. Zhang Z, Zhang C, Niu Z, et al. Geneannotator: A semi-automatic annotation tool for visual scene graph. arXiv preprint, 2021; arXiv:2109.02226.

- 28. Cordts M, Omran M, Ramos S, et al. The cityscapes dataset for semantic urban scene understanding. Proceedings of the IEEE conference on computer vision and pattern recognition 2016, 3213–3223.

- 29. Jia M, Zhang Y, Pan T, et al. Ontology Modeling of Marine Environmental Disaster Chain for Internet Information Extraction: A Case Study on Typhoon Disaster. J. Geo-Inf. Sci 2020, 22, 2289–303.

- 30. Ghosh SS, Chatterjee SK. A knowledge organization framework for influencing tourism-centered place-making. Journal of Documentation 2022, 78, 157–176.

| Authors | Year | Scene category | Open status |
|---|---|---|---|
| Bagschik et al. | 2018 | Highway | - |
| Menzel et al. | 2019 | Highway | - |
| Tahir et al. | 2022 | Urban | - |
| Herrmann et al. | 2022 | Urban | - |
| ASAM | 2022 | Full scene | √ |
| Bogdoll et al. | 2022 | Full scene | √ |
| Westhofen et al. | 2022 | Urban | √ |

| Dataset | Year | Number of Concept Classes | Number of Attributes or Relationships |
|---|---|---|---|
| KITTI | 2013 | 5 | - |
| BDD100K | 2018 | 40 | - |
| NuScenes | 2019 | 23 | 5 |
| A2D2 | 2019 | 52 | - |
| Waymo | 2020 | 4 | - |
| PandaSet | 2020 | 37 | 13 |
| Traffic Genome | 2021 | 34 | 51 |

| Classes | Attributes |
|---|---|
| Lane | Length, width, direction, type, speed limit, etc. |
| Traffic markings | Type, color, width, length, shape, maintenance status, etc. |
| Vehicle | Type, brand, color, driving status, etc. |
| Person | Age group, gender, activity status, etc. |
| Object | Type, size, color, material, shape, mobility, etc. |

| Relationship Category | Meaning | Examples |
|---|---|---|
| Spatial Relationship | Describes the topological, directional, and metric relationships between concept classes. | The position dependency between lanes, road irregularities, and roadside facilities. |
| | | The positional relationship between the driving direction of the vehicle and dynamic objects such as vehicles and pedestrians. |
| | | The relative distance between the vehicle and roadside facilities, dynamic objects, and other elements. |
| Temporal Relationship | Describes the geometric topology information of time points or timelines between concept classes. | Whether the vehicle passes through the intersection during the green light phase of the traffic signal. |
| | | Changes in environmental conditions over time during vehicle travel. |
| | | The duration of vehicle parking in temporary parking areas. |
| Semantic Relationship | Describes the traffic connections between concept classes to express accessibility or restrictions, subject to constraints of time and space. | The restricted access rules for the tidal lane at different times. |
| | | The relationship between people and vehicles in terms of driving or being driven. |
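The three relationship categories in the table above could be stored as typed triples. The following is a minimal sketch only: the `Triple` class, the `group_by_category` helper, and every entity and relation name (`ego_vehicle`, `is_left_of`, `passes_during`, and so on) are hypothetical illustrations, not identifiers taken from the paper's ontology.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """A (head, relation, tail) fact tagged with its relationship category."""
    head: str
    relation: str
    tail: str
    category: str  # "spatial", "temporal", or "semantic"


# Hypothetical example facts, one per category from the table.
triples = [
    Triple("ego_vehicle", "is_left_of", "pedestrian_1", "spatial"),
    Triple("ego_vehicle", "passes_during", "green_phase", "temporal"),
    Triple("person_1", "drives", "ego_vehicle", "semantic"),
]


def group_by_category(items):
    """Group plain (head, relation, tail) tuples by relationship category."""
    grouped = defaultdict(list)
    for t in items:
        grouped[t.category].append((t.head, t.relation, t.tail))
    return dict(grouped)


grouped = group_by_category(triples)
```

Keeping the category on each triple makes it possible to filter or count facts per relationship type before they are passed to a downstream model.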

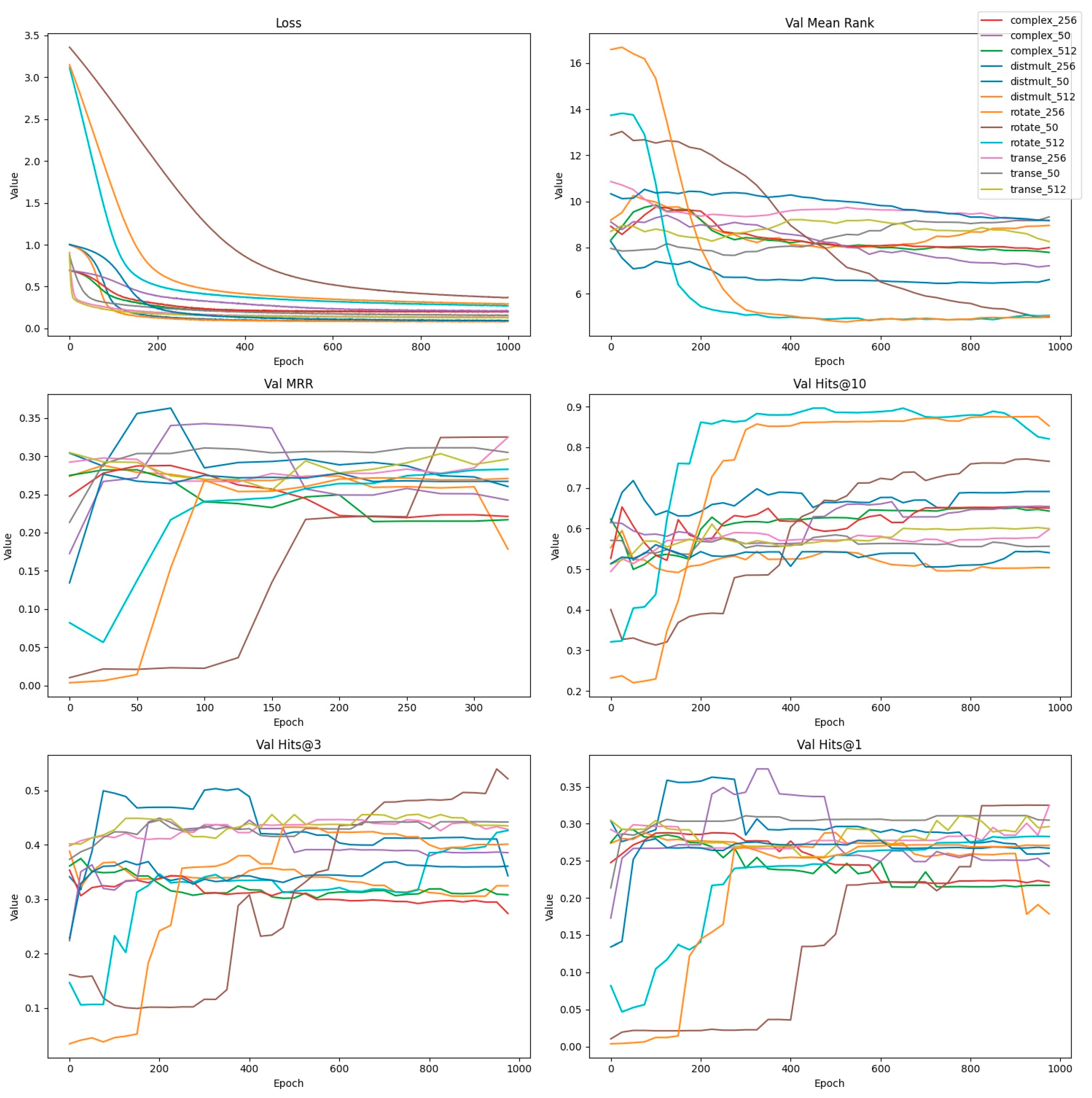

| Model | Learning Rate | Hidden Layer Size | Margin | Batch Size |
|---|---|---|---|---|
| TransE | 0.001 | 50/256/512 | 0.9 | 20000 |
| ComplEx | 0.001 | 50/256/512 | None | 20000 |
| DistMult | 0.001 | 50/256/512 | 0.9 | 20000 |
| RotatE | 0.001 | 50/256/512 | 1.0 | 20000 |
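To illustrate what the margin hyperparameter in the table controls, the sketch below shows the commonly used TransE formulation: a triple is scored by the distance between `head + relation` and `tail`, and a margin ranking loss pushes true triples to score lower than corrupted ones. This is an assumption about the general technique, not the paper's training code; the function names and the 3-dimensional toy embeddings are hypothetical, and only the margin value 0.9 comes from the table.

```python
import math


def transe_score(head, relation, tail):
    """L2 distance ||h + r - t||; a lower value means a more plausible triple."""
    return math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


def margin_ranking_loss(pos_score, neg_score, margin=0.9):
    """Hinge loss max(0, margin + d(positive) - d(negative))."""
    return max(0.0, margin + pos_score - neg_score)


# Toy embeddings (hypothetical values, not learned from any dataset).
ego_vehicle = [0.1, 0.2, 0.0]
drives_on = [0.3, -0.1, 0.5]
lane = [0.4, 0.1, 0.5]          # close to ego_vehicle + drives_on
pedestrian = [-0.6, 0.9, -0.2]  # corrupted tail, far from ego_vehicle + drives_on

pos = transe_score(ego_vehicle, drives_on, lane)
neg = transe_score(ego_vehicle, drives_on, pedestrian)
loss = margin_ranking_loss(pos, neg, margin=0.9)
```

In this toy case the corrupted triple already lies farther away than the margin requires, so the loss is zero and no update would be needed for this pair.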

| Model (Optimal Hidden Layer Size) | MR | MRR | Hits@10 | Hits@3 | Hits@1 |
|---|---|---|---|---|---|
| TransE (256) | 9.18 | 41.67% | 59.79% | 42.82% | 32.47% |
| ComplEx (50) | 8.51 | 42.01% | 56.43% | 42.98% | 33.77% |
| DistMult (256) | 7.01 | 46.09% | 65.91% | 46.92% | 36.29% |
| RotatE (50) | 4.99 | 45.68% | 76.54% | 52.17% | 32.51% |
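For readers unfamiliar with the column headers, the sketch below shows how MR, MRR, and Hits@k are conventionally computed from the rank that a model assigns to the correct entity in each test triple. It follows the standard definitions of these metrics; the function name and the example ranks are hypothetical and do not reproduce the paper's evaluation data.

```python
def ranking_metrics(ranks, ks=(1, 3, 10)):
    """Compute mean rank, mean reciprocal rank, and Hits@k from 1-based ranks."""
    if not ranks:
        raise ValueError("ranks must not be empty")
    n = len(ranks)
    metrics = {
        "MR": sum(ranks) / n,                      # lower is better
        "MRR": sum(1.0 / r for r in ranks) / n,    # higher is better
    }
    for k in ks:
        # Fraction of test triples whose correct entity is ranked in the top k.
        metrics[f"Hits@{k}"] = sum(1 for r in ranks if r <= k) / n
    return metrics


# Hypothetical ranks of the correct entity for five test triples.
example_ranks = [1, 2, 4, 10, 20]
result = ranking_metrics(example_ranks)
```

MR is sensitive to a few badly ranked triples, whereas MRR and Hits@k emphasize the top of the ranking, which is why a model can lead on one metric and trail on another.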

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).