1. Introduction

Idaho is one of the top five producers of specialty crops in the USA. Specialty crops are defined as fruits and vegetables, tree nuts, dried fruits, and horticulture and nursery crops, including floriculture [1]. Specialty crop growers in Idaho, especially those growing fruits and vegetables, have been affected by labor shortages. To help fill the labor gaps, farmers use the H-2A Temporary Agricultural Program [2]. The H-2A program allows farmers to hire workers from other countries, especially for seasonal operations like harvesting, which is very labor intensive. However, the USDA announced that farmers who hire H-2A laborers in 2024 will be paying higher wages [3]. This wage increase will put more strain on the already rising costs of fruit and vegetable production. The H-2A program may be a solution, but it is a short-term one. Long-term solutions are needed, and one of them is robotics.

Robotics has been successfully integrated into industries such as automotive manufacturing and home building [4]. Integrating robots into a manufacturing process is not an easy task; however, it is facilitated by the structured environment inherent in a manufacturing plant [5]. For example, cars are assembled on an assembly line with the help of conveyors and supporting jigs and fixtures [6]. This means that the parts are always in the same location at known times. In addition, the work areas have adequate artificial lighting to help with visibility. Inserting a robot into an assembly line is therefore easier than inserting a robot into an orchard.

There have been numerous studies on the use of robots in orchards [7]. This research started about four decades ago with a black-and-white camera and a color filter used to recognize the apples on a tree [8,9]. Since then, different types of cameras, such as color video cameras, thermal cameras [10], and multispectral cameras [11], have been used to recognize the fruits on a tree. Fruit recognition is one of the most challenging aspects of robotic harvesting, along with picking the fruit successfully without damaging it [12,13]. Recognizing the fruit is the first step for a successful harvest, which is why most of the research has been devoted to fruit recognition [14]. The unstructured nature of the orchard and the variability of the lighting conditions make recognition a major challenge. Picking the fruit without damaging it is the second critical part of a successful harvest. The robot should have an arm with sufficient degrees of freedom to maneuver the end effector towards the fruit, and when the robot reaches the fruit, the end effector should remove it without damaging either the fruit or the tree.

The Robotics Vision Lab of Northwest Nazarene University has developed a robotic platform for studying these challenges. The robot, called Orchard roBot (OrBot), has been tested for fruit harvesting, specifically of apples. The robot is composed of a robotic manipulator with an end effector and a vision system that combines color and infrared to locate the fruits [15]. It has been tested in a commercial orchard with a success rate of about 85% and a speed of about 12 seconds per fruit. Compared to human pickers, this is slow. However, given the labor shortage, even a slow robot can help. One proposed idea is to have the robot work at night while human pickers are resting; the pickers can then harvest the remaining fruit during the day. This is similar to a strawberry harvesting robot [16] that works slowly at night and harvests only certain fruits, after which the human workers continue picking in the morning. The main objective of this study is therefore to investigate the feasibility of nighttime harvesting using OrBot. Specifically, the objectives of this study are:

To identify lighting conditions suitable for nighttime harvesting, and

To evaluate the image processing algorithm for fruit recognition.

2. Materials and Methods

2.1. Robotic Systems Overview

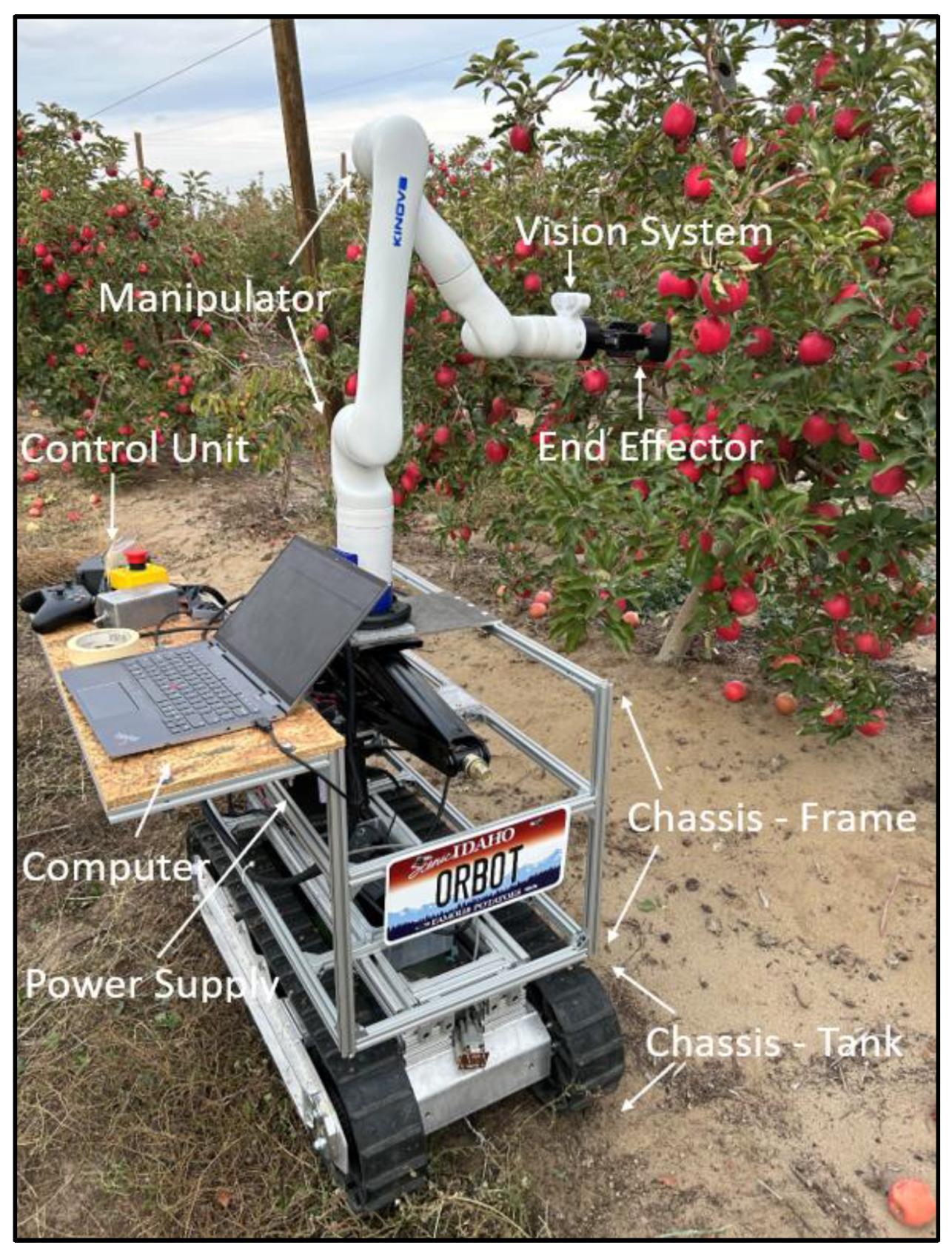

The OrBot harvesting system, seen in Figure 1, is composed of the following: a manipulator, a vision system, an end effector, a personal computer, a control unit, a power supply, and a motorized chassis.

The manipulator is a third-generation Kinova robotic arm [17]. This arm has six degrees of freedom and a reach of 902 mm. It has a full-range continuous payload of 2 kg, which easily exceeds the weight of an apple, and a maximum Cartesian translation speed of 500 mm/s. The average power consumption of the arm is 36 W. These characteristics make this manipulator suitable for fruit harvesting.

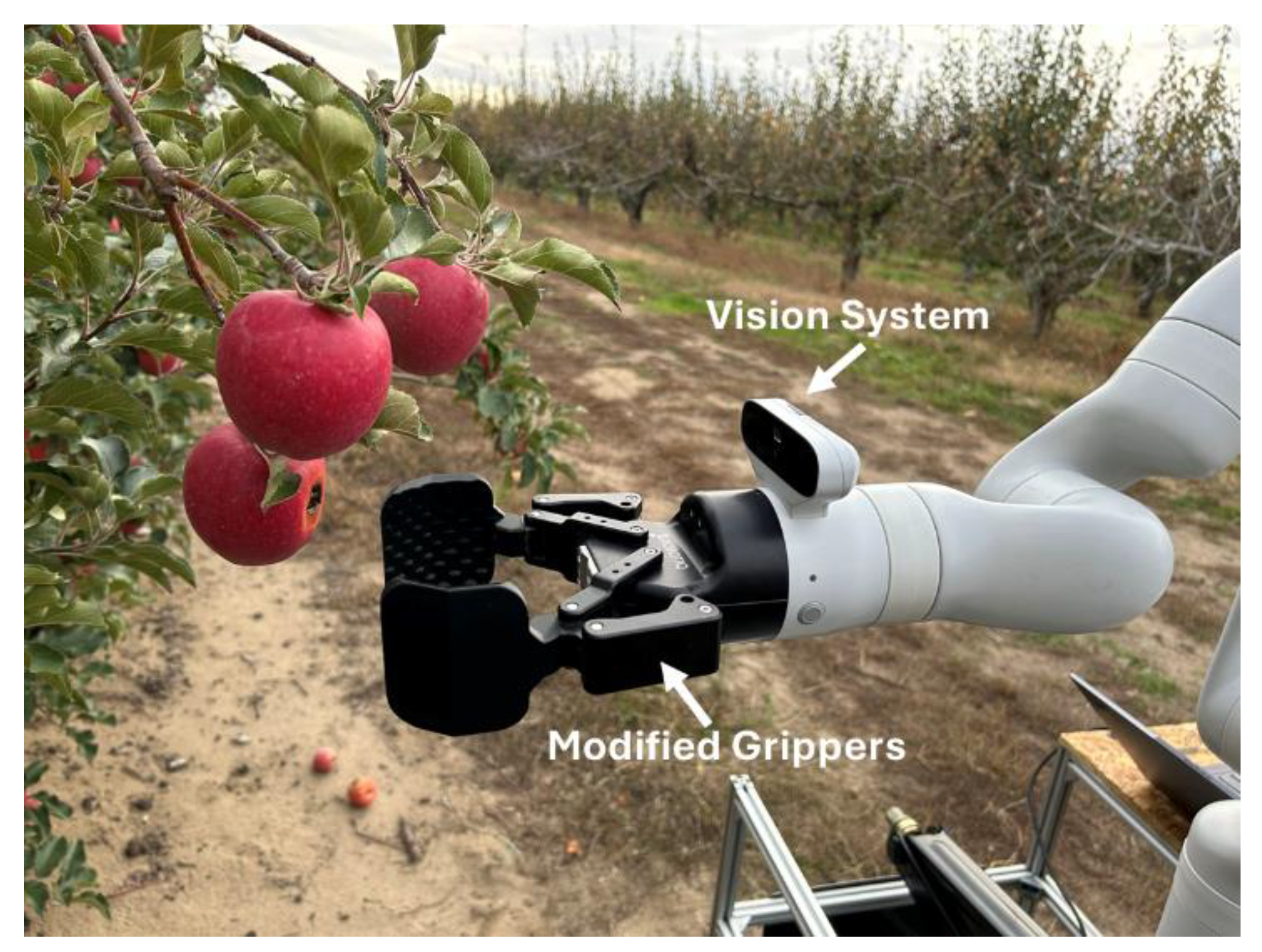

The vision system comprises a color sensor and a depth sensor. The color sensor (Omnivision OV5640) has a resolution of 1920 × 1080 pixels and a field of view of 65 degrees. The depth sensor (Intel RealSense Depth Module D410), which combines stereo vision and active IR sensing, has a resolution of 480 × 270 pixels and a field of view of 72 degrees. The color sensor is used to detect the location of the fruit, while the depth sensor is used to estimate the distance of the fruit from the end effector.
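As a rough illustration of how a detected pixel location and a depth reading could be combined into a position relative to the camera, the sketch below applies a pinhole camera model. It assumes that the 65-degree figure is the horizontal field of view, that the optical center lies at the image center, and that the depth reading has already been matched to the color pixel. The function names are hypothetical, and this is not the OrBot implementation.

```python
import math


def focal_length_px(width_px, hfov_deg):
    """Focal length in pixels for an ideal pinhole camera (assumed model)."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def pixel_to_camera_xyz(u, v, depth_m, width_px=1920, height_px=1080, hfov_deg=65.0):
    """Convert a pixel (u, v) and a depth reading into camera-frame coordinates.

    x grows to the right of the image center, y grows downward,
    and z is the depth along the optical axis.
    """
    f = focal_length_px(width_px, hfov_deg)
    cx, cy = width_px / 2.0, height_px / 2.0  # assumed optical center
    x = (u - cx) * depth_m / f
    y = (v - cy) * depth_m / f
    return x, y, depth_m


# A fruit detected at the image center lies on the optical axis.
print(pixel_to_camera_xyz(960, 540, 0.8))  # (0.0, 0.0, 0.8)
```

A real system would use calibrated intrinsics for each sensor rather than values derived from the nominal field of view.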

The end effector is a standard two-finger gripper with a stroke of 85 mm and a grip force of 20-235 N, which is adjusted depending on the target fruit. The stock fingers were replaced with a custom set of 3D-printed curved pads. These pads have a textured grip surface on the inside of the plate, which allows for a more secure grasp of the fruit during harvest. In addition, the gripper is controlled by a modified bang-bang controller [18] to minimize damage to the fruit. The bang-bang controller detects the presence of resistance as the gripper closes, so it can handle fruits of different sizes.
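The idea of closing until resistance is detected can be sketched as follows. This is a simplified simulation, not the controller from [18]: the step size, the resistance threshold, and the `simulated_fruit` sensor model are all hypothetical, chosen only to show why the same on/off rule adapts to fruits of different sizes.

```python
def close_until_contact(read_resistance, stroke_mm=85.0, step_mm=1.0, threshold=1.0):
    """Close the gripper in fixed steps and stop as soon as resistance is sensed.

    read_resistance is a callable that returns a resistance reading for the
    current finger opening (in mm). Returns the opening at which closing stopped.
    """
    opening = stroke_mm
    while opening > 0.0:
        if read_resistance(opening) >= threshold:
            return opening  # contact detected: switch the drive off
        opening = max(0.0, opening - step_mm)  # no contact: keep closing
    return 0.0  # fully closed without touching anything


def simulated_fruit(diameter_mm):
    """Hypothetical sensor model: resistance appears once the fingers touch the fruit."""
    def read(opening_mm):
        if opening_mm > diameter_mm:
            return 0.0
        return 1.0 + (diameter_mm - opening_mm)
    return read


print(close_until_contact(simulated_fruit(70.0)))  # 70.0
print(close_until_contact(simulated_fruit(55.5)))  # 55.0
```

Because the stopping condition depends on sensed resistance rather than on a preset opening, no fruit diameter has to be known in advance.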

The personal laptop computer is responsible for controlling the manipulator, end effector, and vision system of OrBot. It receives data from the motor encoders and the vision system and uses this data to adjust the position of the manipulator and end effector. The manipulator is controlled using Kinova Kortex, a software platform developed and supplied by the manufacturer [19]. Kortex comes with Application Programming Interface (API) tools to allow for customized control. The control programs for the manipulator and for the vision system were developed using a combination of the Kinova Kortex API, MATLAB, the Image Processing Toolbox, and the Image Acquisition Toolbox [20].

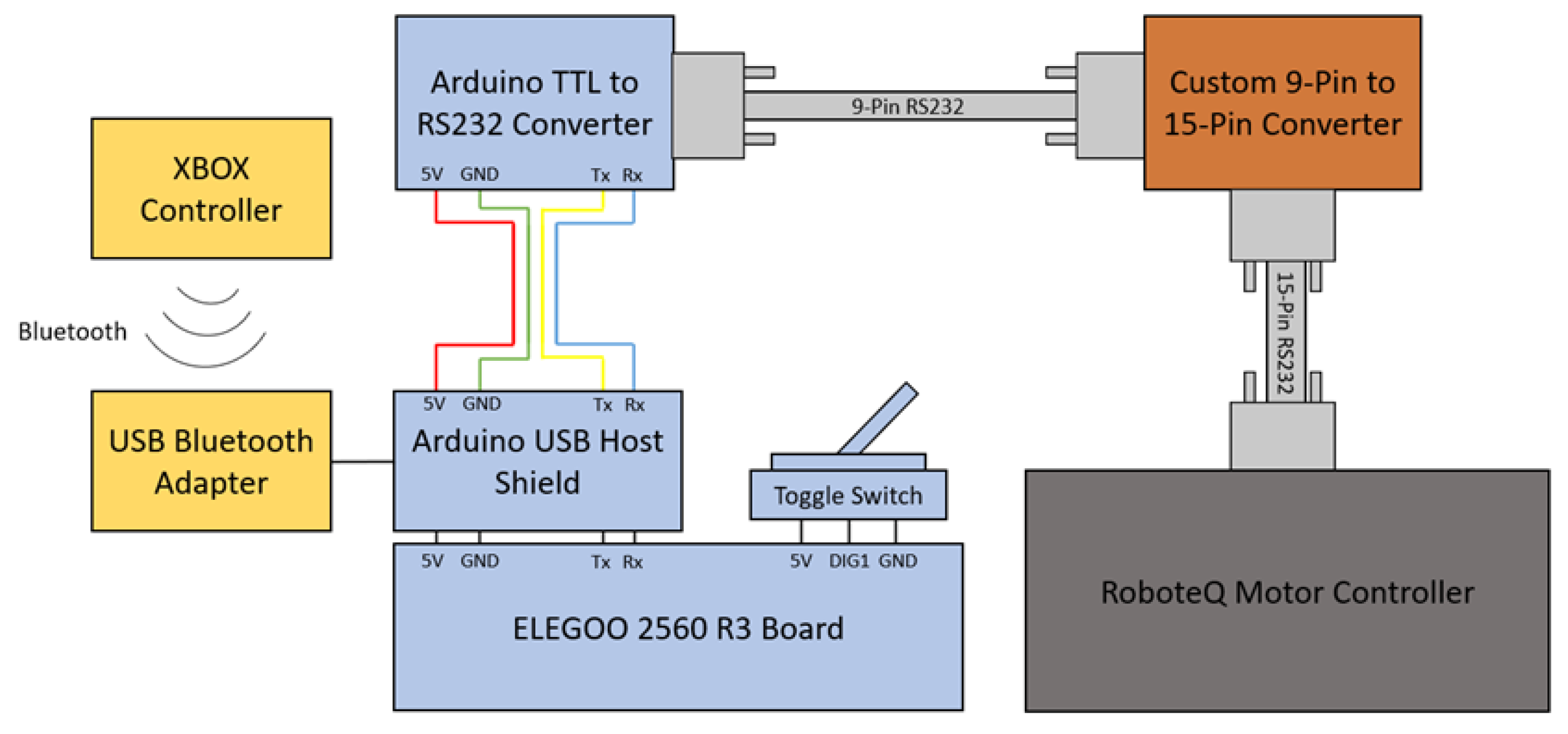

The main control unit of OrBot, seen in Figure 3, consists of the following: an ELEGOO MEGA 2560 R3 board, a USB Host Shield, a toggle switch, and a TTL to RS232 converter. The main control unit is responsible for communication between, and control of, the motor controller within the tank chassis and the laptop computer. Programmed using the Arduino IDE, it coordinates the entire robot system by sending signals over serial communication to the laptop computer and to the motor controller. The main control unit can be set to either autonomous or driver-control mode with the toggle switch. In driver-control mode, the laptop computer puts the manipulator into idle mode and the operator can drive OrBot manually with an Xbox controller. This allows the operator to relocate OrBot without needing to restart the MATLAB program. In autonomous mode, the Xbox controller is disabled, and the laptop computer continuously loops through the harvesting process until the robot is put into driver-control mode.
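The two operating modes can be summarized as a small decision function. The sketch below is written in Python purely for illustration (the actual unit is programmed with the Arduino IDE and talks to MATLAB); the names `Mode` and `control_step` and the returned dictionary layout are assumptions, not part of the OrBot software.

```python
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    DRIVER_CONTROL = "driver-control"


def control_step(toggle_is_autonomous, gamepad_command):
    """Decide what the manipulator and the chassis do for one control cycle."""
    if toggle_is_autonomous:
        # Gamepad input is ignored; the laptop keeps running the harvest loop.
        return {"mode": Mode.AUTONOMOUS, "manipulator": "harvest", "drive": None}
    # Manipulator idles; the operator's gamepad command is passed to the chassis.
    return {"mode": Mode.DRIVER_CONTROL, "manipulator": "idle", "drive": gamepad_command}


print(control_step(True, "forward")["drive"])   # None
print(control_step(False, "forward")["drive"])  # forward
```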

The entire system is mounted on a new chassis, which enables OrBot to move within the orchard. This chassis consists of two main sections: the frame and the tank. The frame is constructed from 80/20 aluminum bars, which provide an easily customizable and stable platform.

The frame was fabricated and assembled at the university machine shop. It includes several features aimed at improving ease of use in the field, including a scissor jack and a plywood side table. The scissor jack allows the manipulator to be raised in order to reach apples that would otherwise be out of reach. The plywood side table provides a central location for all operator controls, which include the emergency stop for the manipulator, the emergency stop for the tank chassis, the main control unit, and the personal computer. In addition to these features, the frame also carries OrBot’s power supply, a portable power station with a capacity of 540 Wh.

2.2. Image Processing Procedure

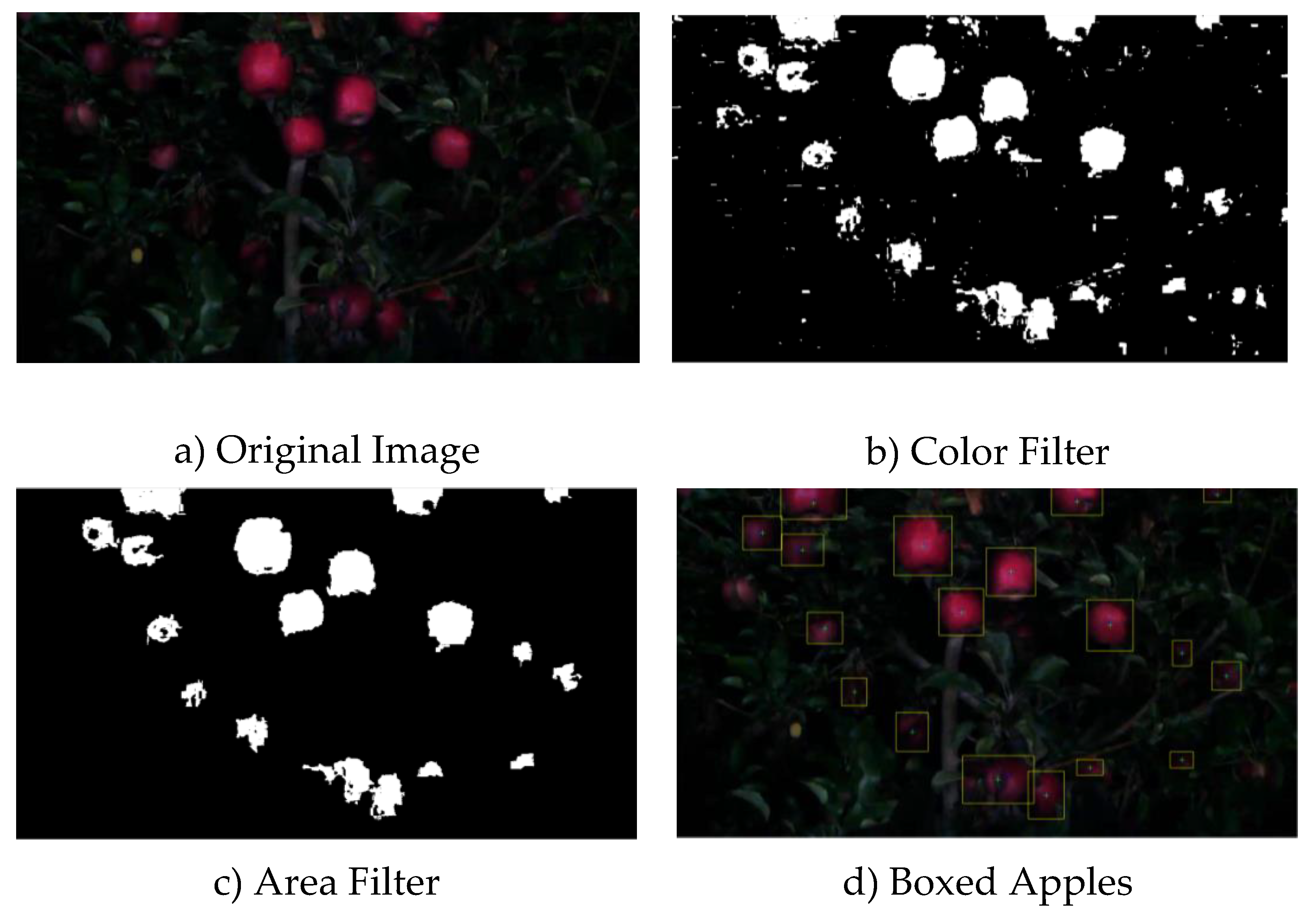

The apples were detected through image processing. The first step is image capture, where an image is taken with the vision system. This image (Original Image) is saved, and a discriminant function is applied to segment the image. The discriminant function, d, is expressed in terms of the chromaticity coordinates r and g, which are calculated from the RGB values of the image pixels [21]. In the segmentation process, the discriminant function is calculated for each pixel; if it is greater than zero, the pixel is classified as a fruit pixel, otherwise as a background pixel. The result is a binary image.
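The per-pixel thresholding step can be sketched as follows. The chromaticity coordinates use the standard normalization r = R/(R+G+B) and g = G/(R+G+B). The linear form of the discriminant and the coefficients `a`, `b`, and `c` are placeholders chosen for illustration only; they are not the function or values used in this study. Plain Python lists are used to keep the sketch dependency-free.

```python
def chromaticity(pixel):
    """Return the normalized chromaticity coordinates (r, g) of an (R, G, B) pixel."""
    red, green, blue = pixel
    total = red + green + blue
    if total == 0:
        return 0.0, 0.0  # black pixel: avoid division by zero
    return red / total, green / total


def segment(image, a=1.0, b=-1.0, c=-0.1):
    """Classify each pixel as fruit (1) or background (0).

    Uses a hypothetical linear discriminant d = a*r + b*g + c and marks a
    pixel as fruit when d > 0. The coefficients are illustrative only.
    """
    binary = []
    for row in image:
        out_row = []
        for pixel in row:
            r, g = chromaticity(pixel)
            d = a * r + b * g + c
            out_row.append(1 if d > 0 else 0)
        binary.append(out_row)
    return binary


# Two reddish pixels, one green pixel, and one black pixel.
image = [[(200, 30, 30), (40, 160, 40)],
         [(0, 0, 0), (180, 60, 50)]]
print(segment(image))  # [[1, 0], [0, 1]]
```

In practice the coefficients would be fitted to labeled fruit and background pixels collected under the intended lighting conditions.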

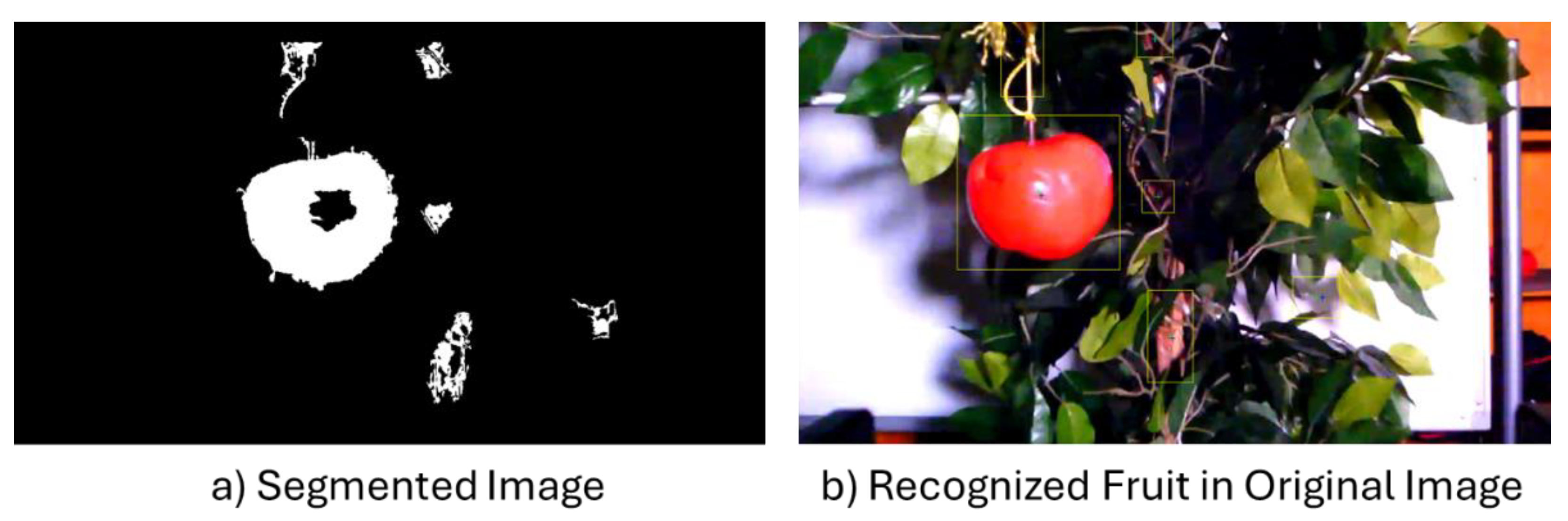

This binary image (Color Filtered) is then passed through an area filter. White pixels that are in contact with one another are grouped into forms, each with an area equal to its pixel count. These areas are then inspected to determine whether they are too small to be an apple. If the area of a form does not meet the minimum area required to be part of an apple, all of the pixels within the form are set to 0. This results in a “cleaner” image with less noise, in which only the larger forms remain.
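A minimal sketch of such an area filter is shown below. It groups touching white pixels with a breadth-first flood fill and erases groups below a minimum area. The use of 8-connectivity (diagonal neighbors count as touching) and the `min_area` value in the example are assumptions; the study does not state either.

```python
from collections import deque

# 8-connectivity: horizontal, vertical, and diagonal neighbors (assumed).
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def connected_regions(binary):
    """Return a list of regions, each a list of (row, col) white-pixel coordinates."""
    rows = len(binary)
    cols = len(binary[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r0 in range(rows):
        for c0 in range(cols):
            if binary[r0][c0] != 1 or seen[r0][c0]:
                continue
            seen[r0][c0] = True
            queue = deque([(r0, c0)])
            region = []
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for dr, dc in NEIGHBORS:
                    nr, nc = r + dr, c + dc
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if inside and binary[nr][nc] == 1 and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            regions.append(region)
    return regions


def area_filter(binary, min_area):
    """Keep only regions with at least min_area pixels; set all other pixels to 0."""
    cleaned = [[0] * len(row) for row in binary]
    for region in connected_regions(binary):
        if len(region) >= min_area:
            for r, c in region:
                cleaned[r][c] = 1
    return cleaned


binary = [[1, 1, 0, 0],
          [1, 1, 0, 1],
          [0, 0, 0, 0]]
print(area_filter(binary, min_area=3))  # [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]]
```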

Features were then extracted from the third image (Area Filtered). These features include area, position, height, and width, and they were used to apply bounding boxes to the recognized apples. Each form that met the size requirement has a bounding box placed around its edges, resulting in the fourth and final image (Boxed Apples). Each step in the image processing procedure can be seen in Figure 4.
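To make the extracted features concrete, the sketch below computes area, position, height, width, and a bounding box from the pixel coordinates of one form. It assumes that "position" means the centroid of the form and that the box is reported as (top, left, bottom, right) in pixel indices; both conventions are illustrative choices, and `region_features` is a hypothetical name.

```python
def region_features(pixels):
    """Compute simple shape features for one form.

    pixels is a non-empty list of (row, col) coordinates belonging to the form.
    """
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    top, bottom = min(rows), max(rows)
    left, right = min(cols), max(cols)
    return {
        "area": len(pixels),                                    # pixel count
        "position": (sum(rows) / len(rows), sum(cols) / len(cols)),  # centroid
        "height": bottom - top + 1,
        "width": right - left + 1,
        "box": (top, left, bottom, right),                      # inclusive corners
    }


form = [(1, 1), (1, 2), (2, 1), (2, 2), (3, 2)]
features = region_features(form)
print(features["area"], features["height"], features["width"], features["box"])
# 5 3 2 (1, 1, 3, 2)
```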

2.3. Selection of Artificial Lighting Parameters

In order to enable the current vision system of OrBot to function at night, an artificial light source was needed. The NEEWER P600-2.4G photography light was chosen for its lightweight frame and its light customization options. These features allowed the light stands to be easily repositioned in the field and provided a wide range of settings for the produced light.

Before this light could be used in the field, however, the optimal color and intensity had to be determined. The color of the photography light, known as the temperature, ranges from 3200 K to 5600 K. The intensity, known as the power, ranges from 0% to 100%. The optimal combination of these two settings is the one that produces the least noise during image processing. The data collection was performed in the lab in a simulated nighttime environment. This environment was created by covering the windows, which removed all natural sources of light from the room and left the photography light as the only source of illumination.

The data was collected by saving an image of a plastic apple hung in a fake, decorative tree that was illuminated by the photography light at different combinations of light settings. The image was then processed through the same method seen in

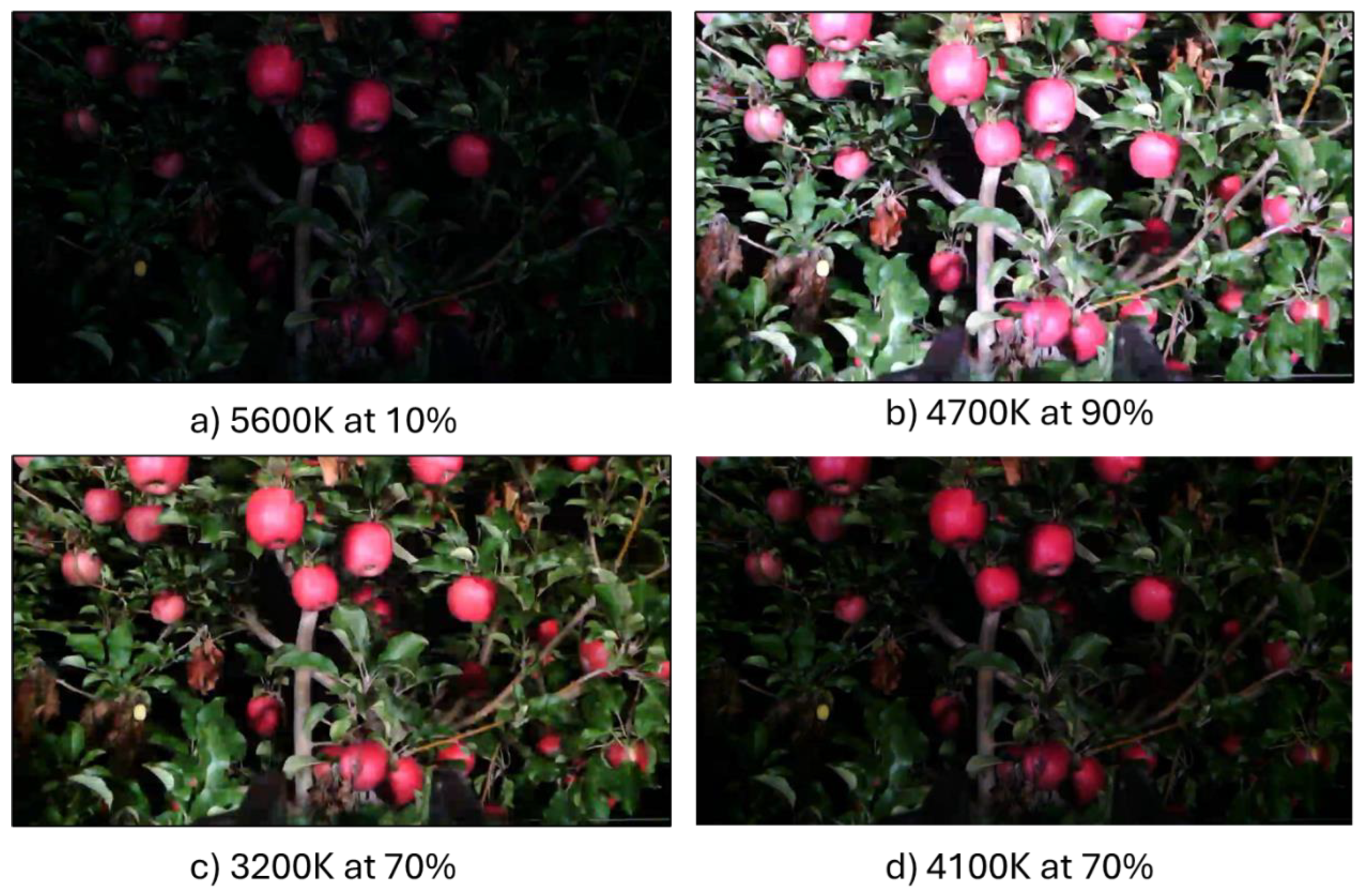

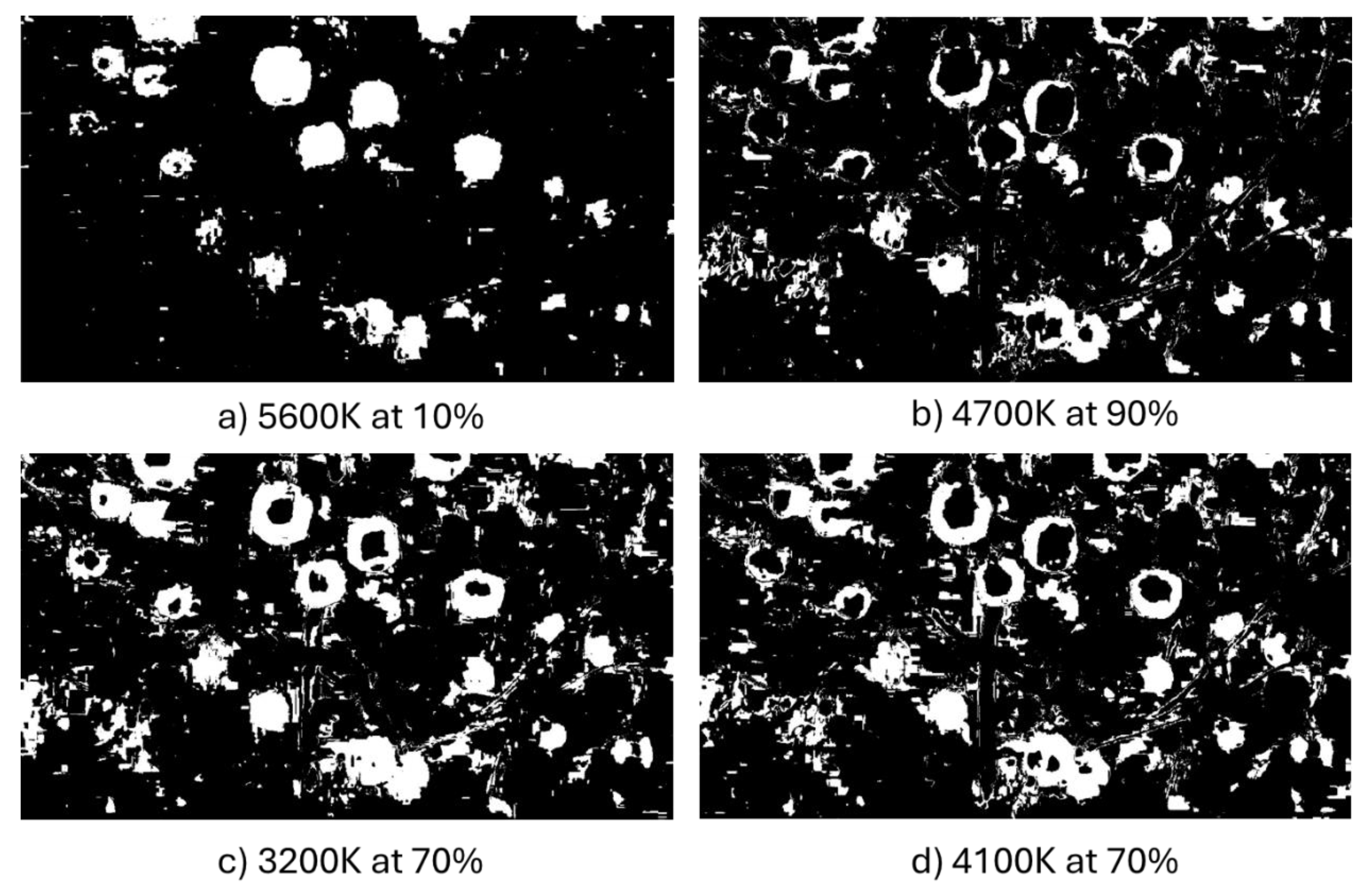

Section 2.2, which provided a value for white pixel count and total boxed area. The noise level present in each image was then found by subtracting the number of white pixels in a particular image from the total area of the bounding boxes present within the image. This process was repeated according to the following procedure: The power setting was increased by 20% for each datapoint, which resulted in power levels of 10%, 30%, 50%, 70%, and 90% for each temperature setting. At the same time, the temperature setting was increased by 300K every five datapoints, starting at 3200K (cool) and ending at 5600K (warm). This resulted in a dataset of nine different temperature levels, with each of these having five different power levels, which resulted in a total datapoint set of forty-five different combinations. This process was then repeated in the orchard using real nighttime conditions, which resulted in two sets of forty-five datapoints. A sample of four images acqui is shown below in

Figure 5. These are the unmodified images that are captured by the camera, which result in the processed images corresponding to each as seen in

Figure 6.
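The noise metric defined in this section can be written as a short function. The sketch assumes the white pixels are counted on the processed binary image and that each bounding box is given as inclusive (top, left, bottom, right) pixel indices; `noise_level` is a hypothetical name.

```python
def noise_level(binary, boxes):
    """Noise level = total area of the bounding boxes - number of white pixels."""
    white_pixels = sum(sum(row) for row in binary)
    boxed_area = sum((bottom - top + 1) * (right - left + 1)
                     for top, left, bottom, right in boxes)
    return boxed_area - white_pixels


# One 3 x 3 box that contains six white pixels.
binary = [[0, 1, 1],
          [1, 1, 1],
          [0, 1, 0]]
print(noise_level(binary, [(0, 0, 2, 2)]))  # 3
```

Under this definition, a compact blob that fills its box scores low, while scattered fragments that inflate the boxed area relative to the white pixel count score high.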

2.4. Evaluation of Nighttime Harvesting

In order to test the existing harvesting process under nighttime conditions, two of the NEEWER P600-2.4G photography lights were used. As seen in Figure 4, the lights were placed on either side of the robot, roughly three feet away, which minimizes the shadows produced.

The collection of the harvesting efficiency data was streamlined by disabling the gripper functionality. This made the process less time consuming, since the robot only needed to move to the fruit, position the fruit inside the customized gripper, and then move back to the starting position to signify a successful harvest. Because no fruit was actually removed, the same fruits could be tested in both the daytime and nighttime tests.

The following process was performed to collect the harvesting data. The robot started in autonomous mode and began by “harvesting” the closest apple (the one with the largest size within the field of view). A black piece of construction paper was then taped to this apple in order to prevent it from being harvested again. Since this apple was no longer visible, the next closest apple was harvested. This process continued until all apples within the robot’s field of view were harvested. The robot was then placed into driver-control mode and driven to the next location in the orchard row.
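The target selection rule described here (largest apparent size first, then mask and repeat) can be sketched as a simple loop. The candidate dictionaries, their `id` and `area` fields, and the pixel areas in the example are hypothetical; removing a candidate from the list stands in for taping black paper over the apple.

```python
def harvest_order(candidates):
    """Return the order in which detected apples would be visited.

    Each candidate is a dict with an 'id' and an 'area' in pixels. The apple
    with the largest area is treated as the closest one.
    """
    remaining = list(candidates)
    order = []
    while remaining:
        target = max(remaining, key=lambda apple: apple["area"])
        order.append(target["id"])
        remaining.remove(target)  # masked apple is no longer detected
    return order


detected = [{"id": "a", "area": 1200},
            {"id": "b", "area": 3400},
            {"id": "c", "area": 2100}]
print(harvest_order(detected))  # ['b', 'c', 'a']
```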

This harvesting procedure was repeated both during the day and the night, resulting in fifty daytime trials and fifty nighttime trials. Each of these trials was either considered a success or a failure. If the robot successfully recognized and moved to the correct position in front of the apple, then the trial was considered a success. If the robot failed to locate the apple properly and moved to the incorrect position, then the trial was considered a failure.

3. Results

3.1. Suitable Artificial Lighting Parameters

The processes described in

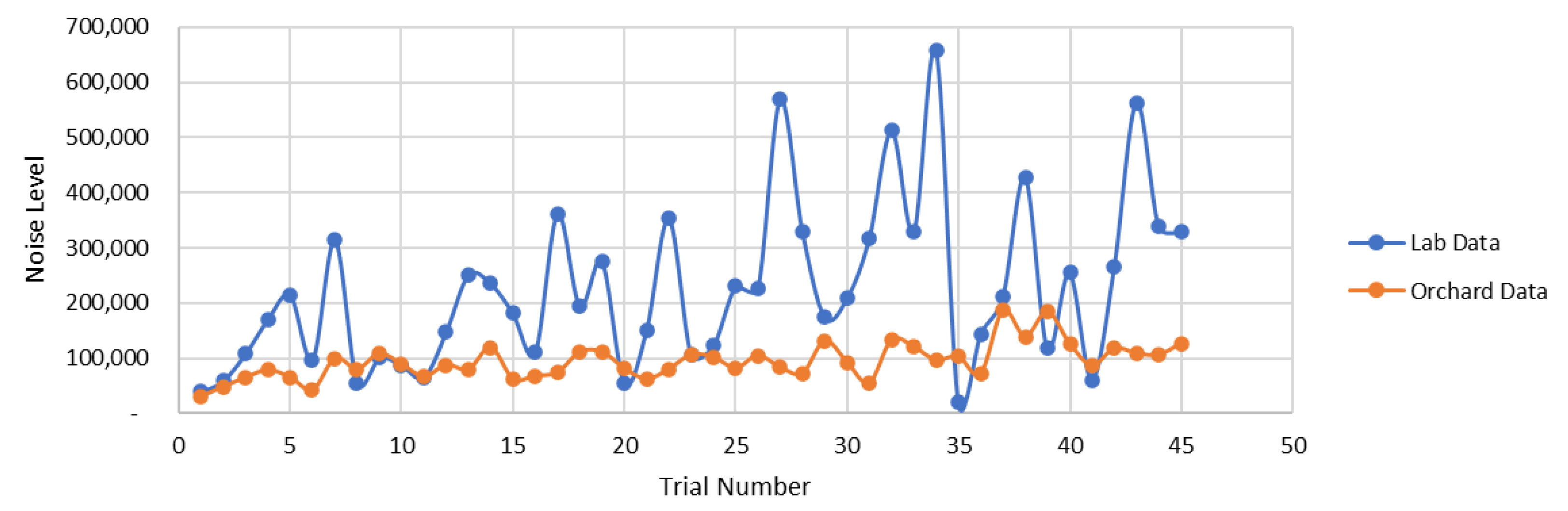

Section 2.3 were performed both in the lab and in the orchard. The two separate sets of data that these produced were analyzed in conjunction with one another in order to produce a higher level of confidence in the selected combination of light parameters. The results of the artificial lighting parameter selection can be seen in

Figure 7. The y-axis displays the “Noise Level”, which was found by subtracting the total number of white pixels in a given image from the sum of the boxed areas in the image. The x-axis displays the trial number, with the power and temperature settings distributed as seen in

Section 2.3. For example, the data for Trial 1 through Trial 5 was collected using a temperature value of 3200K, while each trial within this set of five was collected with power settings of 10%, 30%, 50%, 70%, and 90%.
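The mapping from trial number to light settings can be sketched as below. It assumes the ordering implied by the text, namely that power cycles through its five levels before the temperature steps up by 300 K; `trial_settings` is a hypothetical helper.

```python
TEMPERATURES_K = list(range(3200, 5601, 300))  # nine levels, 3200 K to 5600 K
POWERS_PCT = [10, 30, 50, 70, 90]              # five levels per temperature


def trial_settings(trial):
    """Return (temperature in K, power in %) for a 1-based trial number."""
    total = len(TEMPERATURES_K) * len(POWERS_PCT)
    if not 1 <= trial <= total:
        raise ValueError(f"trial must be between 1 and {total}")
    temp_index, power_index = divmod(trial - 1, len(POWERS_PCT))
    return TEMPERATURES_K[temp_index], POWERS_PCT[power_index]


print(trial_settings(1))   # (3200, 10)
print(trial_settings(5))   # (3200, 90)
print(trial_settings(45))  # (5600, 90)
```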

The lab data (seen in blue) generates a significantly higher level of noise than what is seen in the orchard data (seen in orange). This is likely due to the fact that plastic apples and plastic trees were used in the lab, which would have a much different level of light reflection than real leaves and real apples.

Of the forty-five combinations of lighting parameters, the one that generated the least noise was Trial 35 (3800 K at 90% power). Upon closer inspection of the image taken at this datapoint, however, it becomes clear that this is not the true lowest noise. As seen in Figure 8, a “hole” is present in the center of the apple in the final processed image. This was caused by the bright reflection of the light at the center of the apple, which resulted from the higher intensity. This specular reflection, common for spherical fruits, caused the segmentation process to miss the pixels in this area, which resulted in a hollowed apple shape [22]. The same effect was observed during the daytime when sunlight strikes a spherical object directly. The resulting noise level was inaccurate, and Trial 35 was therefore considered an outlier.

3.2. Evaluation of Fruit Harvesting

In order to quantify the efficiency of the harvesting process, the robot was taken to the orchard and the harvesting process was run a total of one hundred times: fifty trials under daytime conditions and fifty trials under nighttime conditions.

Based on the data collected, the harvesting efficiency of OrBot is 88% in daytime conditions and 94% in nighttime conditions. These values are the proportion of successful trials in each set of fifty. The higher harvesting efficiency at night can be attributed to the lighting conditions present during operation. During the day, there is a large amount of diffused light present from the sun. This diffused light causes shadows to form around the apples and trees, which results in suboptimal image quality during the image processing procedure. These shadows, however, are not present during the night. Since there is no diffused lighting at night, there is a much greater level of control over the lighting conditions and therefore over the shadows present in the harvesting area.
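The reported percentages follow directly from the trial counts. In the sketch below, the counts of 44 and 47 successes are not stated in the text; they are the values implied by 88% and 94% of fifty trials, and `success_rate` is a hypothetical helper.

```python
def success_rate(outcomes):
    """Percentage of trials marked as successful (True)."""
    return 100.0 * sum(outcomes) / len(outcomes)


# Counts implied by the reported rates over fifty trials each (assumed).
daytime = [True] * 44 + [False] * 6
nighttime = [True] * 47 + [False] * 3

print(success_rate(daytime))    # 88.0
print(success_rate(nighttime))  # 94.0
```

With fifty trials per condition, the six-point gap corresponds to three additional successful trials at night.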

The two photography lights are able to reduce the presence of shadows significantly, allowing for a higher rate of successful harvesting than was achieved during the day. These results further support the selected light parameters that were discussed previously in Section 3.1.

4. Discussion

As previously mentioned, the two main factors that hinder the commercialization of a fruit harvesting robot are slow harvesting speed and low harvesting efficiency. One idea that has been proposed is to have the robot operate at night, with human pickers continuing the harvesting operation during the daytime. Even if the robot is slow, it can still help fill the gap left by the labor shortage [23]. It is therefore important to study the harvesting capability of the robot during nighttime operation.

One of the requirements for nighttime harvesting is artificial lighting, which provides illumination to the scene. The advantage of artificial lighting in this case is that the lighting intensity and direction can be controlled [24]. In daytime harvesting, by contrast, the lighting depends on the sun, its position, and cloud cover, and these parameters are much more difficult to control. Variable lighting is one of the reasons some researchers have developed image processing algorithms that can adapt to this variability [25]. However, if the lighting can be controlled, then there is no need to adapt the image processing.

To use artificial lighting, the type of lighting and its parameters must be identified. Some researchers have used incandescent, fluorescent, and LED lighting [25]. One apple harvesting test used incandescent lighting, and another used LED lighting [27]. In this study, we selected LED lighting with control of the color temperature and intensity. To determine the parameters for nighttime harvesting, the noise of the images at different lighting conditions was measured. One study showed that noise is one of the challenges encountered when using artificial lighting [26]. The noise brought about by artificial lighting was generally characterized as Gaussian with a salt-and-pepper component. A simple area and shape filtering approach can be used to remove this type of noise, and this approach was used in this study. However, if the image processing can be facilitated at the hardware level, the image processing itself becomes simpler [28]. In other words, if the noise can be minimized by selecting the lighting parameters, less image processing is needed to remove it. The results of our tests showed that a color temperature of 5600 K and an intensity of 10% had the lowest noise. This lighting condition was used for the nighttime harvesting tests, which were compared with the daytime harvesting tests.

The nighttime harvesting had a success rate of 94%, compared to a daytime success rate of 88%. One of the main reasons for the improved success rate at night is the ability to control the lighting conditions. In daytime harvesting, which uses the sun as the light source, the lighting condition and direction keep changing. With artificial lighting at night, the scene is always front lit and the color temperature and intensity are constant. In addition, the depth sensor of the robot is not affected by the artificial lights in the way it is by direct sunlight. Unlike in the daytime, we did not encounter any distance estimation issues at night. These harvesting results also support the effectiveness of the selected artificial lighting parameters.

The next step for the Robotics Vision Lab will be to develop an autonomous harvesting process. The process will start with the robot at one end of a tree row. The robot will then move forward while searching for fruit. When a fruit is found, the robot will stop and perform the harvesting operation. Once the fruit is harvested, the robot will continue its forward motion, searching for another fruit, until it reaches the end of the row. It will then move back to the starting point, still searching for fruit as it goes. The reason is that as fruits are removed in the previous pass, new fruits become visible to the robot. This process will be tested during both daytime and nighttime. As OrBot is already mounted on a platform that can be autonomously navigated, this harvesting sequence can be readily implemented.

5. Conclusions

A harvesting robot called OrBot was developed to harvest fruits. OrBot is composed of a 6-DOF manipulator with a customized fruit gripper, a color sensor, a depth sensor, a personal computer, and a motorized chassis. OrBot was evaluated for nighttime harvesting. It was found that LED lights with a color temperature of 5600 K and 10% intensity provided suitable artificial illumination for robotic harvesting. These light parameters yielded the lowest noise in the image. When OrBot was tested in a commercial orchard using twenty Pink Lady apple trees, the robot had a harvesting success rate of 94% at night, compared to 88% during the day. The use of artificial lighting at night improved fruit recognition, and the depth estimation was not affected by the artificial lighting.

Author Contributions

Conceptualization, D.M.B.; methodology, D.M.B., J.W. and E.B.; software, J.W., E.B. and D.M.B.; validation, J.W., D.M.B. and E.B.; formal analysis, D.M.B., J.W. and E.B.; investigation, J.W. and E.B.; resources, D.M.B.; data curation, J.W. and D.M.B.; writing - original draft preparation, J.W. and D.M.B.; writing - review and editing, D.M.B., J.W. and E.B.; supervision, D.M.B.; project administration, D.M.B.; funding acquisition, D.M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This project was supported in part by the Idaho State Department of Agriculture through the Specialty Crop Block Grant Program.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors would like to acknowledge the support of Symms Fruit Ranch for allowing the use of OrBot in their commercial orchards.

Conflicts of Interest

The authors declare no conflict of interest.

References

- What is a Specialty Crop? Available online: https://www.ams.usda.gov/services/grants/scbgp/specialty-crop (accessed on 30 March 2024).

- Made in Idaho: Fruitland packing company going strong. Available online: https://www.kivitv.com/news/made-in-idaho-fruitland-packing-company-going-strong (accessed on 30 March 2024).

- Farmers and Ranchers Will Pay More for H-2A Labor in 2024. Available online: https://www.fels.net/1/labor-safety/30-labor/779-farmers-and-ranchers-will-pay-more-for-h-2a-labor-in-2024.html (accessed on 30 March 2024).

- Day, Chia-Peng. "Robotics in industry—their role in intelligent manufacturing." Engineering 4.4 (2018): 440-445. [CrossRef]

- Li, Ming, Andrija Milojević, and Heikki Handroos. "Robotics in manufacturing—The past and the present." Technical, Economic and Societal Effects of Manufacturing 4.0: Automation, Adaption and Manufacturing in Finland and Beyond (2020): 85-95.

- Bartoš, Michal, et al. "An overview of robot applications in automotive industry." Transportation Research Procedia 55 (2021): 837-844. [CrossRef]

- Lytridis, C.; Kaburlasos, V.G.; Pachidis, T.; Manios, M.; Vrochidou, E.; Kalampokas, T.; Chatzistamatis, S. An Overview of Cooperative Robotics in Agriculture. Agronomy 2021, 11, 1818. [CrossRef]

- Y. Sarig, Robotics of Fruit Harvesting: A State-of-the-art Review, Journal of Agricultural Engineering Research, Volume 54, Issue 4, 1993, Pages 265-280. [CrossRef]

- Harrell, R. C., D. C. Slaughter, and Phillip D. Adsit. "A fruit-tracking system for robotic harvesting." Machine Vision and Applications 2 (1989): 69-80. [CrossRef]

- Bulanon, D. M., T. F. Burks, and V. Alchanatis. "Study on temporal variation in citrus canopy using thermal imaging for citrus fruit detection." Biosystems Engineering 101.2 (2008): 161-171. [CrossRef]

- Bulanon, Duke M., Thomas F. Burks, and Victor Alchanatis. "A multispectral imaging analysis for enhancing citrus fruit detection." Environmental Control in Biology 48.2 (2010): 81-91. [CrossRef]

- Kang, H.; Zhou, H.; Wang, X.; Chen, C. Real-Time Fruit Recognition and Grasping Estimation for Robotic Apple Harvesting. Sensors 2020, 20, 5670. [CrossRef]

- Vrochidou, E.; Tsakalidou, V.N.; Kalathas, I.; Gkrimpizis, T.; Pachidis, T.; Kaburlasos, V.G. An Overview of End Effectors in Agricultural Robotic Harvesting Systems. Agriculture 2022, 12, 1240. [CrossRef]

- Li, Y.; Feng, Q.; Li, T.; Xie, F.; Liu, C.; Xiong, Z. Advance of Target Visual Information Acquisition Technology for Fresh Fruit Robotic Harvesting: A Review. Agronomy 2022, 12, 1336. [CrossRef]

- Bulanon, D.M.; Burr, C.; DeVlieg, M.; Braddock, T.; Allen, B. Development of a Visual Servo System for Robotic Fruit Harvesting. AgriEngineering 2021, 3, 840-852. [CrossRef]

- Hayashi, S., Shigematsu, K., Yamamoto, S., Kobayashi, K., Kohno, Y., Kamata, J., & Kurita, M. (2010). Evaluation of a strawberry-harvesting robot in a field test. Biosystems engineering, 105(2), 160-171. [CrossRef]

- GEN3 ROBOTS, IMAGINE THE POSSIBILITIES. Available online: https://www.kinovarobotics.com/product/gen3-robots (accessed on 30 March 2024).

- Compher, I., & Bulanon, D. M. (2023, April). Improving The Performance of OrBot The Fruit Picking Robot. In 2023 IEEE International Opportunity Research Scholars Symposium (ORSS) (pp. 78-81). IEEE.

- Kinovarobotics/kortex. Available online: https://github.com/Kinovarobotics/kortex (accessed on 30 March 2024).

- Image Processing Toolbox. Available online: https://www.mathworks.com/products/image-processing.html (accessed on 30 March 2024).

- M.W.Hannan, T.F.Burks, D.M.Bulanon. “A Machine Vision Algorithm for Orange Fruit Detection”. Agricultural Engineering International: the CIGR Ejournal. Manuscript 1281. Vol. XI. December, 2009.

- Hao, J.; Zhao, Y.; Peng, Q. A Specular Highlight Removal Algorithm for Quality Inspection of Fresh Fruits. Remote Sens. 2022, 14, 3215. [CrossRef]

- Hayashi, S., Shigematsu, K., Yamamoto, S., Kobayashi, K., Kohno, Y., Kamata, J., & Kurita, M. (2010). Evaluation of a strawberry-harvesting robot in a field test. Biosystems engineering, 105(2), 160-171. [CrossRef]

- Longsheng, F., Bin, W., Yongjie, C., Shuai, S., Gejima, Y., & Kobayashi, T. (2015). Kiwifruit recognition at nighttime using artificial lighting based on machine vision. International Journal of Agricultural and Biological Engineering, 8(4), 52-59.

- Hou, G., Chen, H., Jiang, M., & Niu, R. (2023). An Overview of the Application of Machine Vision in Recognition and Localization of Fruit and Vegetable Harvesting Robots. Agriculture, 13(9), 1814. [CrossRef]

- Ren, J. (2024). A Reliable Night Vision Image De-Noising Based on Optimized ACO-ICA Algorithm. IAENG International Journal of Computer Science, 51(3).

- Fu, L., Majeed, Y., Zhang, X., Karkee, M., & Zhang, Q. (2020). Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosystems Engineering, 197, 245-256. [CrossRef]

- Awcock, G. J., & Thomas, R. (1995). Applied image processing (pp. 111-118). Basingstoke, UK: Macmillan.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).