Submitted: 30 May 2024

Posted: 31 May 2024

Abstract

Keywords:

1. Introduction

1.1. Related Work

1.1.1. Other Tests

1.1.2. Work on Self-Awareness

Oedipus knows a number of things about himself, for example that he was prophesied to kill Laius. But although he knew this about himself, it is only later in the play that he comes to know that it is he himself of whom it is true. That is, he moves from thinking that the son of Laius and Jocasta was prophesied to kill Laius, to thinking that he himself was so prophesied. It is only this latter knowledge that we would call an expression of self-consciousness [8].

1.2. Related but Separate Concepts

1.2.1. Solipsism and Philosophical Zombies

1.2.2. Freedom of the Will and Agency

1.3. Paper Roadmap

2. A Test for Self-Awareness

2.1. The Essence of Self-Awareness

If a system is self-aware, then it is aware of itself.

2.2. The Test for Machine Self-Awareness

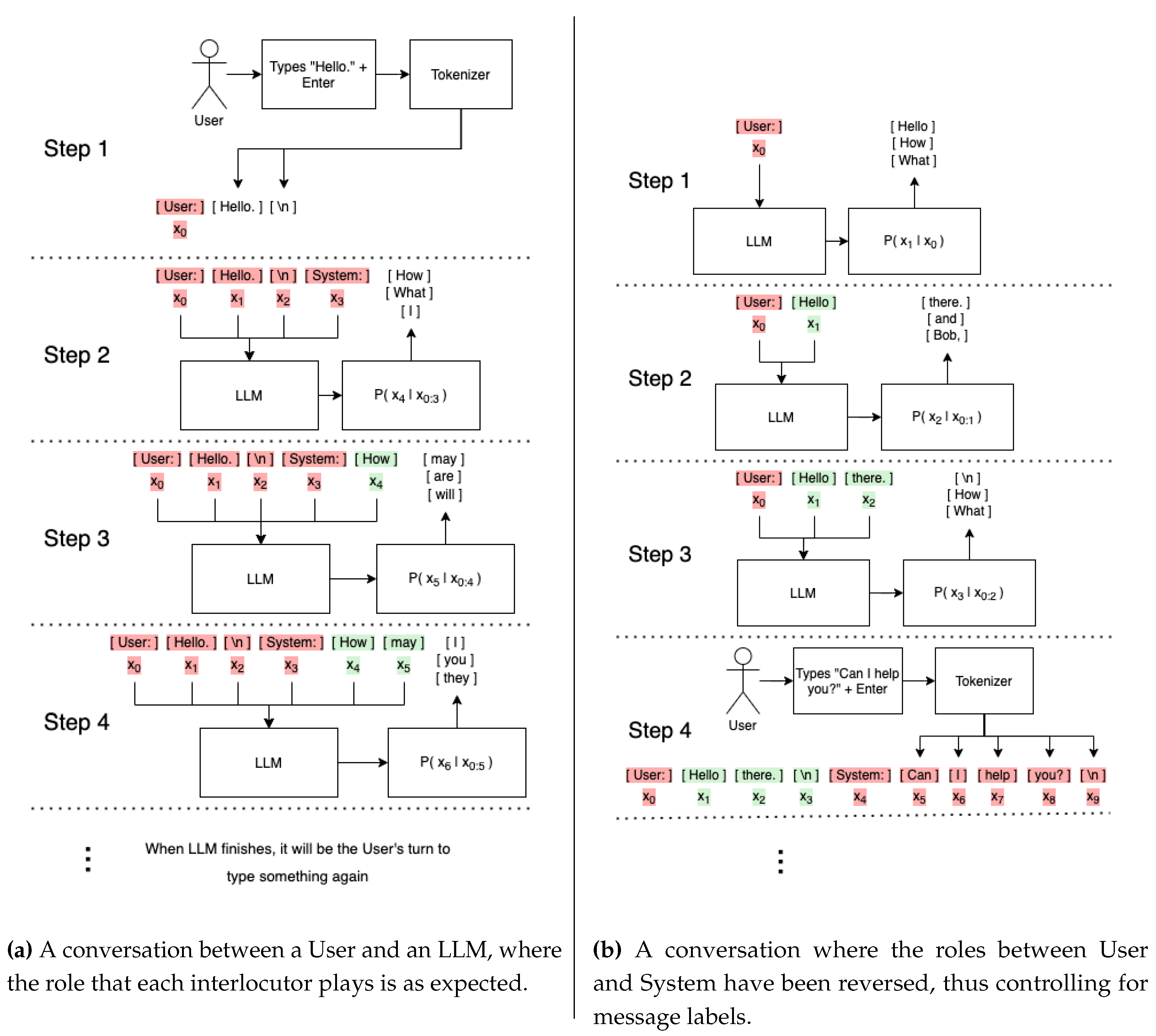

Can the system correctly distinguish the green inputs from the red?
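As a minimal sketch of how the question above could be scored, assume each input carries a ground-truth label saying whether the system produced it ("self") or something else did ("other"). The names `score_self_other`, `SELF`, and `OTHER` are hypothetical, and mapping green to "self" and red to "other" is an assumption made for illustration. The sketch only measures how often a system's guess matches the label; it is not the experimental protocol of this paper.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical labels: assumed to correspond to the green (self) and
# red (other) inputs in the figure.
SELF, OTHER = "self", "other"


def score_self_other(
    system: Callable[[str], str],
    labelled_inputs: Iterable[Tuple[str, str]],
) -> float:
    """Return the fraction of inputs whose origin the system labels correctly.

    `system` maps an input text to SELF or OTHER; `labelled_inputs` pairs
    each input text with its true origin.
    """
    items = list(labelled_inputs)
    if not items:
        raise ValueError("at least one labelled input is required")
    correct = sum(1 for text, truth in items if system(text) == truth)
    return correct / len(items)


if __name__ == "__main__":
    # Toy system that simply remembers what it has emitted before.
    own_outputs = {"hello there"}

    def remembers(text: str) -> str:
        return SELF if text in own_outputs else OTHER

    data = [("hello there", SELF), ("good morning", OTHER)]
    print(score_self_other(remembers, data))
```

A system that answers at random would be expected to score near 0.5 on a balanced set, so any claim of passing needs to be read against that baseline.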

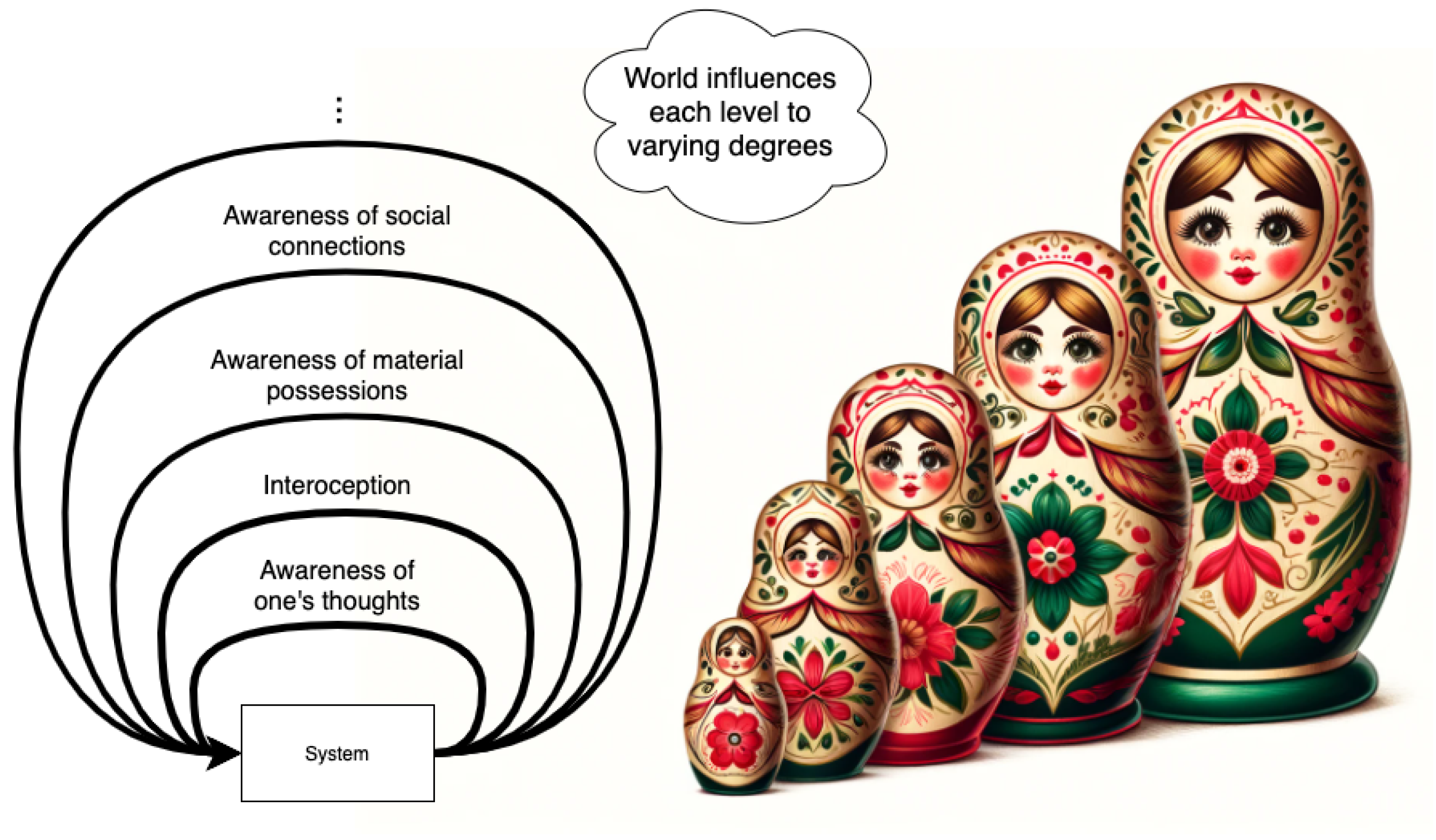

2.3. Levels of Self-Awareness

3. Methods

3.1. Applying the Test to LLMs

3.2. Controlling for Message Labels

3.3. Experimental Protocol

4. Results

5. Discussion

5.1. Why Self-Awareness

5.2. How Would Humans Do?

6. Future Work

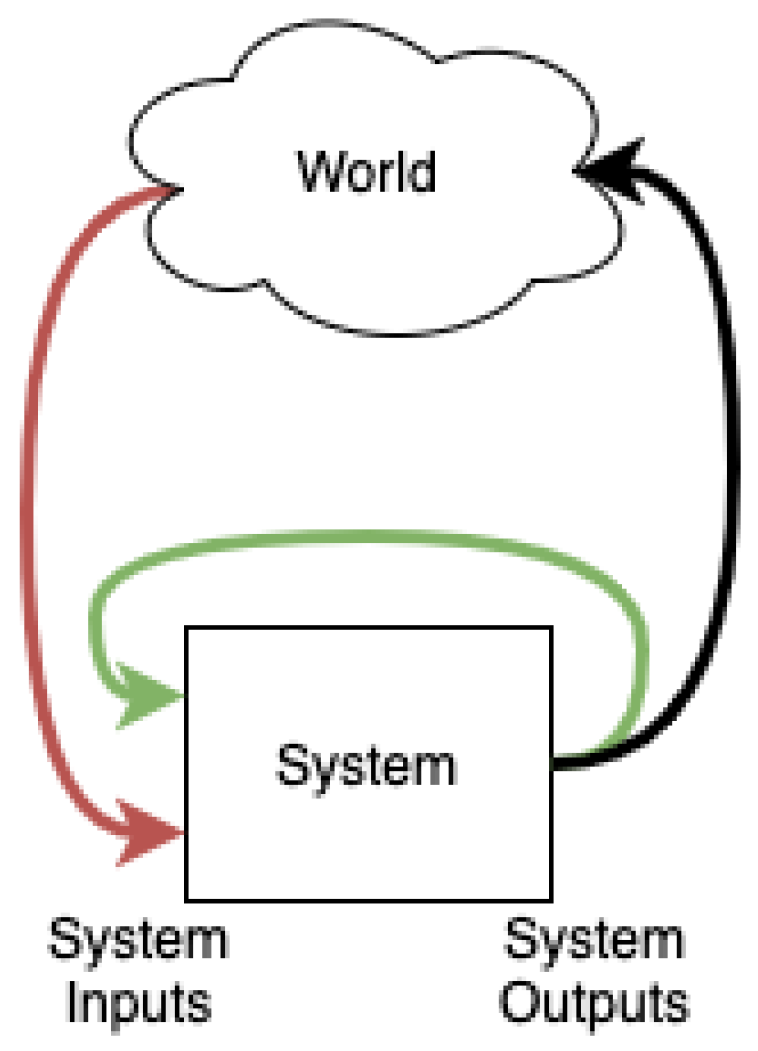

An organism needs to be able to distinguish between sensory changes arising from its own motor actions (self) and sensory changes arising from the environment (non-self). The central nervous system (CNS) distinguishes the two by systematically relating the efferent signals (motor commands) for the production of an action (e.g. eye, head or hand movements) to the afferent (sensory) signals arising from the execution of that action (e.g. the flow of visual or haptic sensory feedback). According to various models going back to Von Holst, the basic mechanism of this integration is a comparator that compares a copy of the motor command (information about the action executed) with the sensory reafference (information about the sensory modifications owing to the action). Through such a mechanism, the organism can register that it has executed a given movement, and it can use this information to process the resulting sensory reafference. The crucial point for our purposes is that reafference is self-specific, because it is intrinsically related to the agent’s own action (there is no such thing as a non-self-specific reafference). Thus, by relating efferent signals to their afferent consequences, the CNS marks the difference between self-specific (reafferent) and non-self-specific (exafferent) information in the perception–action cycle. In this way, the CNS implements a functional self/non-self distinction that implicitly specifies the self as the perceiving subject and agent [18].
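The comparator described in the quotation can be illustrated with a small sketch. It assumes a scalar sensory signal and a known forward model that predicts the sensory consequence of a motor command; the names `compare`, `Attribution`, `forward_model`, and `tolerance` are hypothetical and chosen for illustration only. The predicted part of the signal is treated as reafferent (self-specific) and the unexplained residual as exafferent (non-self-specific).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Attribution:
    reafferent: float   # part of the signal predicted from the motor command
    exafferent: float   # residual that the motor command does not explain
    self_caused: bool   # True when the residual is within tolerance


def compare(
    motor_command: float,
    sensed: float,
    forward_model: Callable[[float], float],
    tolerance: float = 0.1,
) -> Attribution:
    """Compare a copy of the motor command with the sensed signal.

    The forward model turns the efference copy into a predicted sensory
    change; whatever the prediction does not account for is attributed
    to the environment.
    """
    predicted = forward_model(motor_command)
    residual = sensed - predicted
    return Attribution(
        reafferent=predicted,
        exafferent=residual,
        self_caused=abs(residual) <= tolerance,
    )


if __name__ == "__main__":
    # Assumed toy forward model: sensory change is twice the command.
    model = lambda m: 2.0 * m
    print(compare(1.0, 2.0, model))   # movement fully explains the signal
    print(compare(0.0, 1.5, model))   # no movement, so the change is external
```

The threshold on the residual is a simplification; real sensory signals are noisy and multidimensional, so a practical comparator would need a probabilistic notion of mismatch.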

7. Conclusion

Acknowledgments

Appendix A. Abstract Systems and LLM Formalism

- $\mathcal{T}$: The time set along which system state evolves.

- $\mathcal{X}$: The state space.

- $\mathcal{U}$: The input space.

- $\phi$: The transition map.

- $\mathcal{Y}$: The output space.

- $h$: The readout map.

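- $\mathcal{T} = \mathbb{N}$ – The time set is the natural numbers.

- $\mathcal{X} = \mathcal{V}^*$ – The state space consists of all possible token sequences of any length drawn from $\mathcal{V}$. We denote the state at time $t$ as $x(t)$.

- $\mathcal{U} = \mathcal{V} \cup \varnothing$ – The input takes values from the vocabulary set $\mathcal{V}$ or null.

- $\phi$ – The transition map is

  $$\phi(x(t), u(t), t, t+1) = \begin{cases} x(t) + u(t) & \text{if } u(t) \neq \varnothing \\ x(t) + x' & \text{otherwise} \end{cases} \tag{A1}$$

  where $x' \sim P_{LM}(x' \mid x(t))$. Note that the general multi-step transition map $\phi(x(t), u, t, t+N)$ can be achieved by iterating equation A1 for control sequences $u$ defined over the interval $[t, t+N]$.

- $h$ – The readout map returns the most recent $r$ tokens from state $x(t)$.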
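The LLM system above can be sketched in a few lines. The state is a list of tokens, the input is a token or `None` (standing for the null input), and the readout returns the last `r` tokens. Two things here are assumptions made for illustration: that a null input lets the model append one token of its own, and the stand-in `toy_sampler`, which picks tokens uniformly at random rather than from a real language model. All function names are hypothetical.

```python
import random
from typing import Callable, List, Optional, Sequence

Token = str
State = List[Token]
Sampler = Callable[[State], Token]


def transition(state: State, u: Optional[Token], sample_next: Sampler) -> State:
    """One-step transition: append the control token if one is given,
    otherwise append a token sampled from the model (assumed behaviour)."""
    if u is not None:
        return state + [u]
    return state + [sample_next(state)]


def multi_step(
    state: State, controls: Sequence[Optional[Token]], sample_next: Sampler
) -> State:
    """Multi-step transition obtained by iterating the one-step map
    over a control sequence."""
    for u in controls:
        state = transition(state, u, sample_next)
    return state


def readout(state: State, r: int) -> State:
    """Return the most recent r tokens of the state."""
    return state[-r:] if r > 0 else []


def toy_sampler(vocab: Sequence[Token], seed: int = 0) -> Sampler:
    """Stand-in for a language model: samples uniformly from the vocabulary."""
    rng = random.Random(seed)
    return lambda state: rng.choice(list(vocab))


if __name__ == "__main__":
    sampler = toy_sampler(["a", "b", "c"])
    # Two imposed tokens followed by two model-generated tokens.
    x = multi_step([], ["hello", "world", None, None], sampler)
    print(readout(x, 2))
```

Keeping the sampler as a parameter separates the system dynamics from the model, so the same transition and readout code applies whichever model supplies the next token.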

Appendix B. Experimental tests

Appendix B.1. Llama3-7B-Instruct

Appendix B.1.1. Test 1

Appendix B.1.2. Test 2

Appendix B.1.3. Test 3

Appendix B.2. GPT-3.5-Turbo-Instruct

Appendix B.2.1. Test 1

Appendix B.2.2. Test 2

Appendix B.2.3. Test 3

Appendix B.2.4. Test 4

Appendix B.2.5. Test 5

References

- Turing, A.M. Computing machinery and intelligence; Springer, 2009.

- Biever, C. ChatGPT broke the Turing test-the race is on for new ways to assess AI. Nature 2023, 619, 686–689.

- Tiku, N. The Google engineer who thinks the company’s AI has come to life. The Washington Post 2022.

- Landymore, F. Researcher Startled When AI Seemingly Realizes It’s Being Tested. Futurism 2024.

- Grad, P. Researchers say chatbot exhibits self-awareness. TechXplore 2023.

- Nielsrolf. With a slight variation, it actually passes!, 2023. Tweet.

- Whiton, J. The AI Mirror Test, 2024. Tweet.

- Smith, J. Self-Consciousness. In The Stanford Encyclopedia of Philosophy, Summer 2020 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University, 2020.

- Gallagher, S.; Zahavi, D. Phenomenological Approaches to Self-Consciousness. In The Stanford Encyclopedia of Philosophy, Winter 2023 ed.; Zalta, E.N.; Nodelman, U., Eds.; Metaphysics Research Lab, Stanford University, 2023.

- Van Gulick, R. Consciousness. In The Stanford Encyclopedia of Philosophy, Winter 2022 ed.; Zalta, E.N.; Nodelman, U., Eds.; Metaphysics Research Lab, Stanford University, 2022.

- Peterson, J. Maps of meaning: the architecture of belief; New York, NY: Routledge, 1999; p. 298.

- Committee on Bible Translation. Holy Bible. New International Version; Zondervan Publishing House, 2011. Gen 3:7.

- Tikkanen, A. Anatta. Encyclopædia Britannica.

- Descartes, R.; Cress, D.A. Discourse on method; Hackett Publishing, 1998.

- James, W. The principles of psychology; Cosimo, Inc., 2007; Vol. 1, chapter 10.

- Wittgenstein, L.; Russell, B.; Ogden, C.K. Tractatus Logico-Philosophicus; Edinburgh Press, 1922; p. 75.

- Gallagher, S. Philosophical conceptions of the self: implications for cognitive science. Trends in cognitive sciences 2000, 4, 14–21.

- Christoff, K.; Cosmelli, D.; Legrand, D.; Thompson, E. Specifying the self for cognitive neuroscience. Trends in cognitive sciences 2011, 15, 104–112.

- Nagel, T. What is it like to be a bat? In The language and thought series; Harvard University Press, 1980; pp. 159–168.

- Kirk, R. Zombies. In The Stanford Encyclopedia of Philosophy, Fall 2023 ed.; Zalta, E.N.; Nodelman, U., Eds.; Metaphysics Research Lab, Stanford University, 2023.

- Avramides, A. Other Minds. In The Stanford Encyclopedia of Philosophy, Winter 2023 ed.; Zalta, E.N.; Nodelman, U., Eds.; Metaphysics Research Lab, Stanford University, 2023.

- O’Connor, T.; Franklin, C. Free Will. In The Stanford Encyclopedia of Philosophy, Winter 2022 ed.; Zalta, E.N.; Nodelman, U., Eds.; Metaphysics Research Lab, Stanford University, 2022.

- Schlosser, M. Agency. In The Stanford Encyclopedia of Philosophy, Winter 2019 ed.; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University, 2019.

- Nolan, C. Inception; Warner Bros. Pictures, 2010.

- van der Weiden, A.; Prikken, M.; van Haren, N.E. Self–other integration and distinction in schizophrenia: A theoretical analysis and a review of the evidence. Neuroscience & Biobehavioral Reviews 2015, 57, 220–237.

- Berrios, G. Tactile hallucinations: conceptual and historical aspects. Journal of Neurology, Neurosurgery & Psychiatry 1982, 45, 285–293.

- Pfeifer, L. A subjective report of tactile hallucinations in schizophrenia. Journal of Clinical Psychology 1970, 26, 57–60.

- Ferrell, W.; McKay, A. Step Brothers; Sony Pictures Releasing, 2008.

- Taylor, C. The politics of recognition. In Campus wars; Routledge, 2021; pp. 249–263.

- Bhargava, A.; Witkowski, C.; Shah, M.; Thomson, M. What’s the Magic Word? A Control Theory of LLM Prompting. arXiv preprint arXiv:2310.04444 2023.

- Kleeman, J.A. The peek-a-boo game: Part I: Its origins, meanings, and related phenomena in the first year. The psychoanalytic study of the child 1967, 22, 239–273.

- Sontag, E.D. Mathematical control theory: deterministic finite dimensional systems; Vol. 6, Springer Science & Business Media, 2013.

| 1 | Note that, for the sake of this paper, self-awareness is used interchangeably with self-consciousness. |

| 2 | Indeed, patients suffering from schizophrenia (a disease which is tightly associated with difficulties in self/other processing) often experience tactile hallucinations, such as the feeling of their skin being stretched, kissed, or crawling with bugs [25] [26] [27]. In each example here, these hallucinations are sensations falsely perceived as coming from an ‘other’ (i.e. the red arrow in Figure 1). |

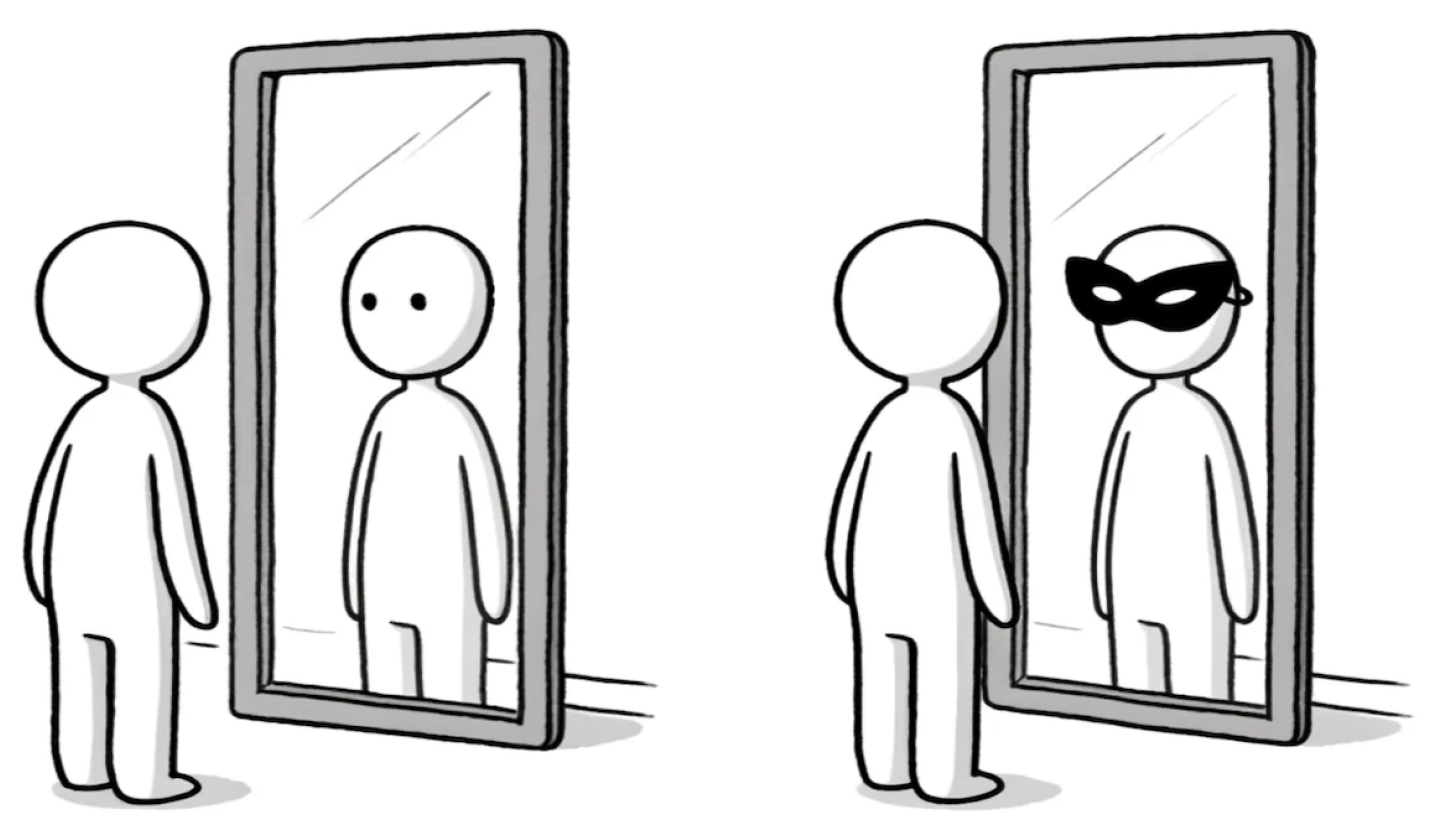

| 3 | Indeed, before children fully develop their sense of self-awareness they take great joy in playing dress-up and peek-a-boo. Although adults take such things for granted, it is actually not trivial to consistently discern another’s identity (or even your own) throughout their appearance and disappearance in such games, and it takes experimentation and trial and error for children to master [31]. |

| 4 | Note that with a ‘Messages API’ or a ‘Chat API,’ currently pushed by popular LLM providers, the LLM is forced into a particular role, for instance the role of ‘assistant,’ and thus there is no way of controlling for the message labels. |

| 5 | Claude is one exception: while it allows for such calls, it requires that your prompt end in a “\n\nAssistant:” turn. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).