1. Introduction

5G is the fifth generation of wireless communication technology, following 4G. Unlike 4G, 5G is designed to support a diverse range of applications, including massive Internet of Things (IoT) deployments, ultra-reliable low-latency communications, and high-bandwidth multimedia services. Energy efficiency is a critical consideration in modern networks, including 5G. As the demand for data and connectivity continues to grow exponentially, reducing energy consumption has become increasingly important [1]. The design of 5G networks affects energy consumption in various ways. For example, 5G utilizes new radio technologies, such as massive Multiple Input Multiple Output (MIMO), beamforming, and advanced modulation schemes, which enable more efficient use of available spectrum and transmit power [2]. These techniques help improve spectral efficiency and overall network capacity, leading to reduced energy per bit transmitted. Green enablers are integrated into the architecture of 5G networks to enhance energy efficiency. Energy-efficient 5G networks can help minimize environmental impact, reduce operational costs, and support sustainable development.

In addition to the network design and green enablers, emerging technologies like Artificial Intelligence (AI) and IoT play significant roles in enhancing energy efficiency in 5G networks. AI algorithms can be employed to optimize resource allocation, power control, and network management, leading to more intelligent and energy-efficient operations [3]. AI and Machine Learning (ML) can be seen as potential drivers of the automation and optimization of network performance and management complexity [4]. At the core of the complementary connection between AI and 5G is data: 5G opens a floodgate of data, which AI can then analyze and learn from more swiftly to create unique consumer experiences suited to the varied needs of consumers [5]. In assessing the techniques used to make the 5G network more energy-efficient while enhancing Key Performance Indicators (KPIs), the role of AI becomes crucial. AI can optimize network operation and maintenance. For example, network automation techniques include the Self-Organizing Network (SON), which uses AI to predict network behavior and control the network based on these predictions [6]. Through AI-driven SON, the 5G infrastructure can continuously monitor performance metrics and automatically adjust network parameters to achieve desired KPIs. AI algorithms can detect anomalies, identify bottlenecks, and implement corrective actions in real time, which leads to efficient network operation with reduced energy overhead.

In this survey, the objective is to conduct an in-depth analysis of the existing AI technologies employed in various 5G enablers and their impact on energy consumption. AI technologies may include ML algorithms, Deep Learning (DL) models, natural language processing, computer vision, and other AI techniques that are applied in the context of 5G enablers. The investigation will also consider the different components and applications of 5G, such as the IoT and virtual reality. The energy consumption analysis will involve assessing the power requirements of the AI technologies integrated into 5G enablers. Potential challenges and limitations in implementing AI technologies in 5G enablers will be highlighted.

In summary, this survey aims to contribute to the understanding of the relationship between AI technologies and energy consumption in the context of 5G enablers, paving the way for more sustainable and efficient 5G networks in the future.

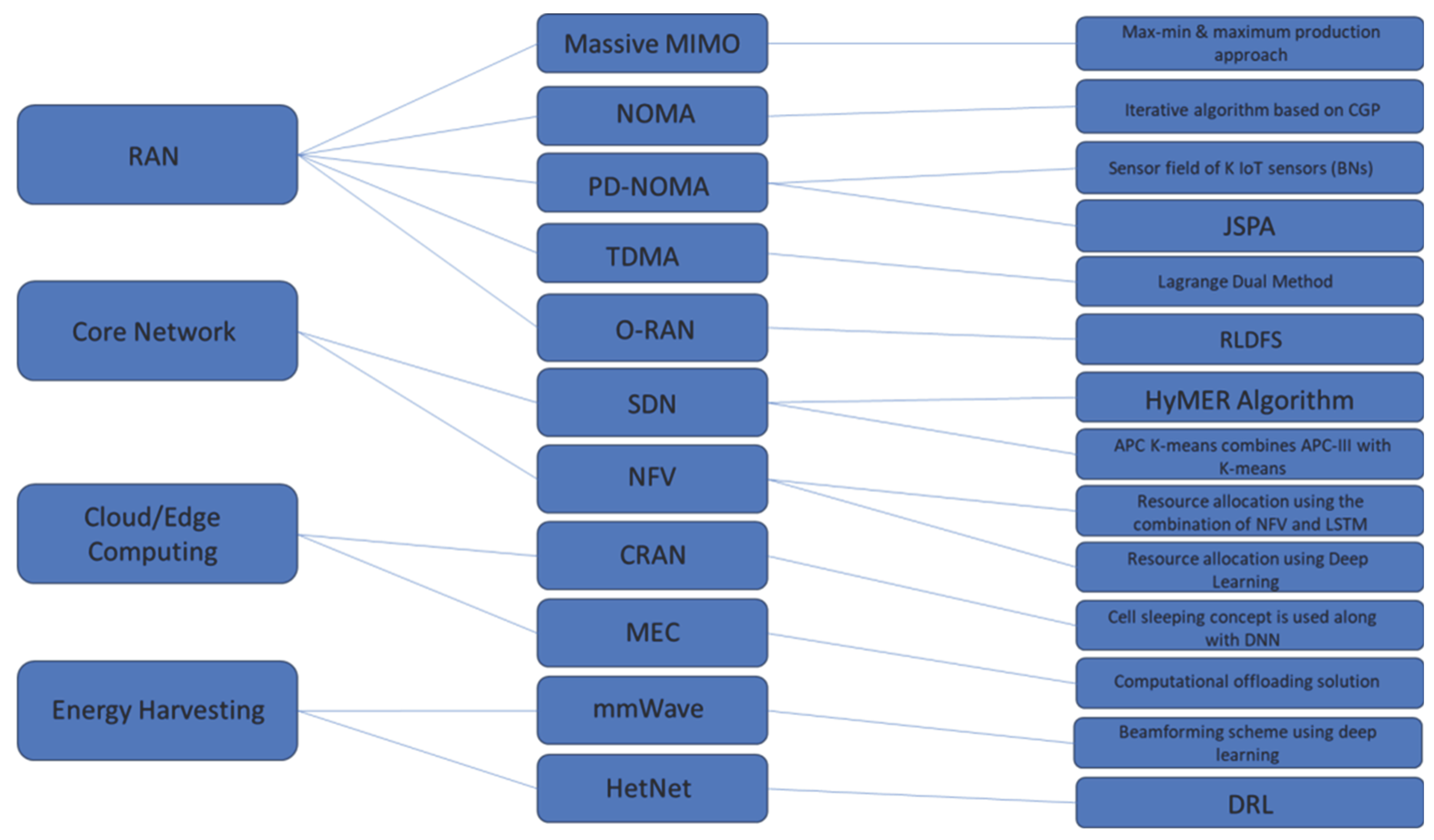

Figure 1 shows a flowchart of the AI techniques currently used in each 5G enabler across the different 5G network layers, which are discussed in detail throughout this survey.

2. Paper Outline

The paper is organized into three main sections (3 to 5). In section 3, we delve into the different layers of the 5G network, discussing the specific enablers utilized in each layer and their distinctive characteristics. Additionally, we highlight the utilization of AI techniques to improve energy efficiency and enhance KPIs across the network. We underscore the significance of energy efficiency in the implementation of environmentally friendly techniques within the 5G network. Each part includes a description of the technology and analysis of the related work. In section 4, an overview of potential directions and challenges that can guide future research in this field is presented. This section aims to assist scholars in their ongoing exploration of the subject matter, offering insights into areas that require further investigation and highlighting obstacles that need to be overcome. Finally, section 5 concludes the paper by summarizing the key findings and contributions of the research. By structuring the paper in this manner, we provide a comprehensive exploration of the 5G network, from its fundamental layers and enablers to the application of AI techniques for energy efficiency enhancement, while also guiding future research endeavors.

3. 5G Network Layers and Green Enablers Technologies

The term "5G green enablers" refers to the various technologies, strategies, and practices that contribute to making 5G networks more environmentally friendly and energy-efficient. These enablers aim to minimize the carbon footprint and energy consumption associated with 5G network deployments. 5G green enablers can be implemented across various layers of the 5G network to achieve energy efficiency and environmental sustainability.

3.1. Radio Access Network (RAN)

Information and Communication Technology (ICT) was responsible for 2% to 10% of the world's energy consumption in 2007, and its share is expected to continue to grow. Moreover, more than 80% of this consumption is attributed to the RAN, which is deployed to meet the peak traffic load and stays fully powered even when the load is light. Motivated by the goal of saving energy for green communication, 5G specifications require that energy use decrease to 10% of that of traditional 4G/LTE networks. This objective can be achieved by reducing the power consumption of the base stations and mobile devices [7]. In the RAN layer of 5G, several technologies are used to enhance network performance, capacity, and energy efficiency. While not all of these technologies are explicitly considered "green enablers," they contribute to improving energy efficiency and sustainability in various ways:

Energy-Efficient Hardware: Energy-efficient Base Station (BS) equipment, such as power amplifiers and antennas, can be deployed to reduce power consumption.

Dynamic Power Management: Intelligent power control algorithms can be implemented to adjust the transmission power levels of BSs and small cells based on real-time network conditions and user demands.

Virtualization and Cloud Computing: Network Function Virtualization (NFV) and Software-Defined Networking (SDN) can be utilized to virtualize and centralize certain network functions, optimizing resource utilization and reducing energy consumption.

AI Optimization: AI techniques can be employed to optimize radio resource management, including intelligent scheduling and power allocation algorithms that minimize energy consumption while ensuring QoS.

The implementation of each green enabler in the RAN layer is discussed below, followed by how it contributes to energy efficiency.

3.1.1. Massive MIMO

Massive MIMO utilizes a large number of antennas at the BS to improve spectral efficiency, network capacity, coverage, data rate, throughput, and energy efficiency while lowering latency for future mobile devices. It enables beamforming techniques that direct wireless signals towards specific users, thereby reducing interference and improving energy efficiency. It directs wireless energy to specific users using narrow beams, as opposed to typical MIMO systems that employ wide beam sectors, which require High Power Amplifiers (HPAs) that consume the majority of the BS’s energy [1]. However, the problem of interference persists in massive MIMO when hundreds of channels exist at one BS. Moreover, there is additional spatial complexity [3].

5G networks can concentrate the transmission and reception of signal energy in small areas of space by utilizing a considerable number of antennas. ML and Deep Learning (DL) have been investigated for optimizing the weights of antenna elements in massive MIMO. They can predict the user distribution and optimize the weights of antenna elements accordingly, which can improve coverage in a multi-cell scenario [7]. Achieving accurate channel estimation with a reasonable number of pilots and simple estimation methods is difficult in massive MIMO. A DL technique for channel estimation can be utilized to map channels in frequency and space, as the authors indicated in [8]. To save bandwidth, the same pilot patterns are regularly reused by users in different cells because of the short coherence time, which creates the problem of pilot contamination. Pilot contamination has become one of the leading causes of performance loss in massive MIMO, leading to dropped calls and mobility issues, both of which are considered important KPIs.

In [9], a deep learning-based approach allows the network to allocate downlink power based on User Equipment (UE) location. Several power-allocation strategies, including max-min and max-product, were inefficient; this was remedied by using a different neural network built on the Long Short-Term Memory (LSTM) layer [3]. Despite the promising simulation results in terms of power allocation, the weakest point of massive MIMO efficiency remains its behavior in a real-time environment. A pilot scheduling technique used in massive MIMO systems led to a reduction in pilot contamination. Users with poor channels face communication interruptions; therefore, a pilot scheduling scheme is proposed that groups users according to their level of pilot contamination. Nowadays, the majority of studies concern the spectrum efficiency of hybrid precoding, which reduces the huge energy consumption of the Radio Frequency (RF) chains in the massive MIMO system.

Incorporating massive MIMO into the RAN and employing the LSTM DL method to assign downlink power based on the location of the user supports the broadband access KPIs of 5G. The technology is expected to have 1000 times the bandwidth of current Long-Term Evolution (LTE) and LTE-Advanced, ultra-low latency of 1 millisecond, 90% energy savings, 10 times longer battery life, and 10 to 100 times greater peak user data speeds, all with cost-effective equipment [10]. The following are ways to enhance energy efficiency in MIMO:

Resource Optimization: By accurately predicting channel conditions, traffic patterns, and interference levels, LSTM models can enable more efficient allocation of radio resources in the MIMO system. This optimized resource allocation reduces unnecessary transmissions, leading to lower energy consumption.

Power Control: LSTM-based predictions of traffic demands and user behavior can inform dynamic power control strategies. By adjusting transmit power levels based on predicted traffic loads and channel conditions, unnecessary power consumption can be avoided. This adaptive power control helps in achieving energy savings without compromising the QoS.

3.1.2. Non-Orthogonal Multiple Access (NOMA)

NOMA is a key technology in 5G networks that enhances spectral efficiency and capacity by allowing multiple users to share the same time-frequency resources. The orthogonality property, which means that multiple users do not interfere with one another when accessing network resources, is common to several multiplexing methods [11]. Unlike traditional Orthogonal Multiple Access (OMA) schemes such as Frequency Division Multiple Access (FDMA) in first-generation systems, Time Division Multiple Access (TDMA) in second-generation systems, and Code Division Multiple Access (CDMA) in third-generation systems, NOMA enables simultaneous transmissions in the same resource block [12]. Here are some key points about NOMA in 5G networks:

Spectral Efficiency: NOMA improves spectral efficiency by exploiting the power domain for multiple users. It allocates different power levels to different users, allowing them to share the same resource block. In the conventional downlink scheme, users with weaker channels receive more power, while users with favorable channel conditions receive less and rely on SIC to remove the stronger signal.

Superposition Coding: In NOMA, superposition coding is used to encode and decode the signals of multiple users. The BS transmits a linear combination of the signals intended for different users. Each user then decodes its intended signal using Successive Interference Cancellation (SIC) techniques.

SIC: SIC is a key receiver technique used in NOMA. Users with stronger signals are decoded first, and their signals are subtracted from the received signal to mitigate interference for the remaining users. Weaker user signals are successively decoded, benefiting from the interference cancellation.

User Priority: NOMA assigns different priority levels to users based on their channel conditions. Users with better channel conditions are assigned higher priorities and are scheduled to transmit first. This ensures that users with good channel conditions experience minimal interference.

Multi-Connectivity: NOMA can be combined with other advanced techniques such as beamforming, massive MIMO, and carrier aggregation to further enhance the performance of 5G networks. These techniques improve signal quality and increase the number of users that can be supported simultaneously.
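The superposition coding and SIC steps listed above can be illustrated with a minimal two-user downlink sketch. It assumes the common convention in which the user with the weaker channel (the "far" user) receives the larger power share, ideal interference cancellation, and unit noise power; the function names, power split, and channel gains are hypothetical values chosen for illustration, not figures from the surveyed works.

```python
import math


def noma_two_user_rates(p_total, alpha_far, g_near, g_far, noise=1.0):
    """Achievable rates (bit/s/Hz) for two-user downlink power-domain NOMA.

    alpha_far is the fraction of the total power given to the far (weak) user.
    """
    p_far = alpha_far * p_total
    p_near = (1.0 - alpha_far) * p_total

    # Far user decodes its own signal and treats the near user's signal as noise.
    sinr_far = p_far * g_far / (p_near * g_far + noise)
    # For SIC, the near user must first decode the far user's signal as well.
    sinr_far_at_near = p_far * g_near / (p_near * g_near + noise)
    # After subtracting the far user's signal, the near user sees only noise.
    snr_near = p_near * g_near / noise

    rate_far = math.log2(1.0 + min(sinr_far, sinr_far_at_near))
    rate_near = math.log2(1.0 + snr_near)
    return rate_near, rate_far


def oma_two_user_rates(p_total, g_near, g_far, noise=1.0):
    """Baseline: each user gets half of the resource block at full power."""
    rate_near = 0.5 * math.log2(1.0 + p_total * g_near / noise)
    rate_far = 0.5 * math.log2(1.0 + p_total * g_far / noise)
    return rate_near, rate_far


if __name__ == "__main__":
    noma = noma_two_user_rates(p_total=10.0, alpha_far=0.8, g_near=10.0, g_far=1.0)
    oma = oma_two_user_rates(p_total=10.0, g_near=10.0, g_far=1.0)
    print("NOMA rates (near, far):", noma, "sum:", sum(noma))
    print("OMA rates (near, far):", oma, "sum:", sum(oma))
```

With these illustrative numbers the NOMA sum rate exceeds the orthogonal baseline, but the outcome depends on the power split and on the gap between the two channel gains.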

The adoption of NOMA in 5G networks provides several benefits, including increased system capacity, reduced latency, and enhanced user experience. It enables more efficient utilization of available resources and supports a larger number of connected devices. Implementing NOMA in practical 5G networks involves several challenges. These include the design of efficient power allocation algorithms, interference management, and the need for advanced receiver techniques such as SIC. Additionally, NOMA requires accurate channel state information for power allocation and user scheduling.

The energy optimization strategy in [13] relies on deploying NOMA in the RAN while using Complementary Geometric Programming (CGP). The simulation findings show that NOMA is more power-efficient than Orthogonal FDMA (O-FDMA), improving power efficiency by up to 45-54 percent. This demonstrates the usefulness of NOMA in obtaining improved energy efficiency while keeping the Virtualized Wireless Network (VWN) slices isolated. The proposed method outperforms O-FDMA in terms of required transmit power, particularly when the majority of users are located near the cell edge and channel conditions vary widely. SIC can help some users at the sub-carrier level by reducing interference. However, the minimum required throughput of each user acts as a constraint. The other major difficulty in a VWN, which aims to maximize spectrum and infrastructure efficiency, is keeping users in different slices isolated. In contrast, joint time and power allocation in the RAN using the Lagrange dual method showed that TDMA outperforms NOMA in both spectral and energy efficiency: NOMA requires a longer (or equal) downlink time than TDMA, consumes more (or equal) energy, and has a lower spectral efficiency, as examined in [14].

3.1.3. Power Domain NOMA

Power domain NOMA (PD-NOMA) is a key technology in 5G networks that enables multiple users to simultaneously access the same time-frequency resource. NOMA allocates different power levels to different users within the same resource block, allowing for improved spectral efficiency and increased capacity compared to traditional orthogonal multiple access schemes.

In downlink PD-NOMA, users with weaker channel conditions are typically allocated higher power levels, while users with stronger channel conditions are allocated lower power levels. This enables multiple users to share the same resource block by exploiting the power domain for separation. The receiver then uses advanced multi-user detection techniques, such as SIC, to decode the signals from different users.

To enhance energy efficiency in PD-NOMA, ML techniques can be employed. Here are some examples of how they can be used:

Channel Prediction: ML algorithms can be used to predict the channel conditions of different users. This information can be used to allocate power levels more effectively, ensuring that each user's power level matches its channel condition. By accurately predicting the channel conditions, unnecessary power consumption can be reduced, leading to improved energy efficiency.

Resource Allocation: ML algorithms can optimize resource allocation in PD-NOMA. By considering various factors such as user demands, channel conditions, and energy efficiency objectives, ML models can determine the optimal power levels and resource blocks to be allocated to different users. This can lead to more efficient utilization of network resources, reducing energy consumption.

Power Control: ML can be used to dynamically adjust the transmit power levels of NOMA users. By continuously monitoring network conditions and using reinforcement learning or other optimization algorithms, the transmit power levels can be adaptively adjusted to meet performance requirements while minimizing energy consumption.

User Grouping: ML algorithms can be employed to group users with similar channel characteristics together. By forming appropriate user groups, PD-NOMA can be more effectively applied, allowing for better power allocation and improved energy efficiency. Clustering algorithms and unsupervised learning techniques can be used to identify similar user profiles.

Beamforming Optimization: ML techniques can optimize beamforming in PD-NOMA systems. By learning the channel characteristics and user locations, ML algorithms can determine the optimal beamforming weights and directions to maximize the received signal strength. This enables better separation of users and improved energy efficiency.
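As a small illustration of the user grouping idea above, the following sketch clusters users by channel gain with a one-dimensional k-means, a basic unsupervised learning method. It is a toy under stated assumptions: the channel gains in dB, the number of groups, and the deterministic centroid initialization are all hypothetical, and a real PD-NOMA scheduler would combine such groups with power allocation and fairness constraints.

```python
def kmeans_1d(values, k, iters=50):
    """Cluster scalar values into k groups; returns (labels, centroids)."""
    ordered = sorted(values)
    # Deterministic initialization: spread centroids across the sorted values.
    centroids = [ordered[(2 * i + 1) * len(ordered) // (2 * k)] for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        # Assignment step: attach each value to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: abs(v - centroids[c])) for v in values
        ]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            updated.append(sum(members) / len(members) if members else centroids[c])
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical per-user channel gains in dB (cell-edge vs. cell-center users).
    gains_db = [-95, -93, -94, -70, -72, -71]
    labels, centroids = kmeans_1d(gains_db, k=2)
    print("group labels:", labels)
    print("group centroids (dB):", centroids)
```

One possible use of the result is to pair a user from the weak-channel group with a user from the strong-channel group on the same resource block, since a large gain difference makes power-domain separation easier.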

Traditional PD-NOMA systems allow multiplexed subscribers to satisfy the requirements of NOMA at different power levels by using active battery resources [15]. In contrast, sensor nodes are passive devices with no capability to adjust their transmit power level based on their respective channel gains. The authors of [15] consider a sensor field of multiple Backscatter Nodes (BNs) and a reader. Sensors located in the reader’s annular coverage zone receive power from the reader and backscatter the incident waves to transmit data to the reader [15]. The reader decodes the backscatter signals from the BNs and sends the decoded data to a cellular BS. The contribution of this study is to employ backscatter communication in the field of IoT sensors and to enhance the spectrum efficiency and throughput of the system using a hybrid TDMA-based PD-NOMA scheme. Through NOMA, the network increases its spectrum efficiency by multiplexing its nodes in half the time. The study highlighted important KPIs but did not address dropped calls, latency, system bandwidth, or mobility. To conclude, a sensor field of K IoT sensors, BNs, and a reader is considered, adopting a monostatic backscatter communication model to improve network energy efficiency. The reader and the BNs are the two components of the monostatic backscatter architecture [15]. All sensors within the reader coverage zone can backscatter incident waves to send data. A BN is made up of the following parts: a receiver, a transmitter, a microcontroller, a variable impedance, an energy harvester module, and an information decoder. Two scenarios are highlighted: when the coverage zone increases, higher transmit power is needed to maintain the same outage performance, and when the threshold increases, NOMA performance begins to decrease [15].

An efficient heuristic Joint Subcarrier and Power Allocation (JSPA) scheme was designed by combining the solutions of Single-Carrier User Selection (SCUS) and Multi-Carrier Power Control (MCPC) [16]. In multi-carrier NOMA with cellular power constraints, a novel approach was proposed to the Weighted Sum Rate (WSR) maximization problem. It achieves a near-optimal sum rate with user fairness as well as significant performance improvements compared with NOMA.

3.1.4. Time Division Multiple Access (TDMA)

TDMA is a multiple access scheme used in 5G networks for sharing the available radio spectrum among multiple users. In TDMA, the available frequency band is divided into time slots, and each user is allocated exclusive time slots for transmitting and receiving data. This technique has a drawback: clock synchronization is essential so that each user can transmit and recover its data without interference from other subscribers. This increases transmission delay [17].

Here are some key aspects of TDMA in 5G networks:

Time Slot Division: In TDMA, the time domain is divided into equal-sized slots, and each user is assigned one or more time slots for communication. The duration of each time slot is fixed, and the number of slots allocated to each user depends on factors such as user requirements, network capacity, and QoS targets.

Channel Access: During their allocated time slots, users have exclusive access to the entire frequency band. They can transmit and receive data without interference from other users. This enables multiple users to communicate over the same band by taking turns in different time slots.

Synchronization: The efficient operation of TDMA requires synchronization among users to ensure that they transmit and receive data within their assigned time slots accurately. Network synchronization protocols are used to synchronize the clocks of different devices, ensuring precise timing and avoiding collisions.

Efficient Spectrum Utilization: TDMA allows multiple users to share the same frequency band by dividing it into time slots. This enables efficient utilization of the available spectrum as users take turns transmitting and receiving data. As a result, the overall network capacity can be increased, and more users can be accommodated within the limited spectrum of resources.

Flexibility and Scalability: TDMA offers flexibility in terms of allocating time slots to users. The number of time slots assigned to each user can be adjusted dynamically based on the user's requirements, traffic load, and QoS targets. This scalability enables efficient utilization of network resources and ensures that users receive the necessary bandwidth and capacity as per their needs.

Compatibility with Legacy Systems: TDMA has been widely used in previous cellular generations like 2G and 3G. As a result, it offers backward compatibility with legacy systems, allowing for smooth integration and migration from older networks to 5G. This compatibility ensures a seamless transition and supports interoperability with devices and infrastructure from previous generations.
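The time slot division and flexible allocation described above can be sketched as a simple demand-proportional scheduler. This is a minimal illustration rather than a 5G scheduler: the user names, the demand units, and the largest-remainder rounding rule are assumptions made for the example.

```python
def allocate_slots(demands, slots_per_frame):
    """Split a TDMA frame among users in proportion to their traffic demand.

    demands maps a user id to a non-negative demand (arbitrary units).
    Returns a dict mapping each user id to its number of slots.
    """
    total = sum(demands.values())
    if total == 0:
        return {user: 0 for user in demands}

    # Ideal (fractional) share of the frame for each user.
    shares = {u: d * slots_per_frame / total for u, d in demands.items()}
    # Start from the integer part of each share.
    alloc = {u: int(s) for u, s in shares.items()}
    leftover = slots_per_frame - sum(alloc.values())

    # Largest-remainder rule: hand spare slots to the biggest fractional parts.
    by_remainder = sorted(shares, key=lambda u: shares[u] - alloc[u], reverse=True)
    for user in by_remainder[:leftover]:
        alloc[user] += 1
    return alloc


def build_frame(alloc):
    """Lay the allocation out as a frame: one owner per time slot."""
    frame = []
    for user, count in alloc.items():
        frame.extend([user] * count)
    return frame


if __name__ == "__main__":
    demands = {"ue1": 6, "ue2": 3, "ue3": 1}  # hypothetical traffic demands
    alloc = allocate_slots(demands, slots_per_frame=8)
    print(alloc)
    print(build_frame(alloc))
```

Because every slot in the frame has exactly one owner, transmissions never overlap in time, which is the property that synchronization has to preserve in practice.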

It is important to note that while TDMA is a well-established multiple access scheme, 5G networks primarily employ O-FDMA and other advanced access schemes like NOMA for improved spectral efficiency and capacity. TDMA may be used in specific scenarios or network configurations within the 5G ecosystem, depending on the requirements and network architecture. One of the major principles of NOMA for improving spectrum efficiency is to allow several devices to utilize the same spectrum at the same time. Because of the longer transmission time compared to TDMA, this inevitably results in higher circuit energy consumption for NOMA, which is particularly damaging to IoT devices, which are generally energy-constrained. At the Access Point (AP), SIC is used to eliminate multiuser interference, and the experimental results show that throughput is also reduced. In tests, by contrast, PD-NOMA appears to outperform conventional TDMA and increase the system throughput [15].

3.1.5. Open Radio Access Network (O-RAN)

O-RAN is an emerging architecture and approach in the context of 5G networks. It aims to disaggregate and open up traditionally proprietary and integrated network elements, such as the RAN, by introducing open interfaces and standardized hardware and software components. O-RAN is designed to promote interoperability, flexibility, innovation, and cost-effectiveness in the deployment and operation of 5G networks. It breaks down the traditional RAN into functional components, separating hardware and software elements. This allows for the use of standard hardware, such as commercial off-the-shelf servers, and enables the software-defined functionality of the RAN. With the disaggregated architecture, operators can deploy RAN components from different vendors, customize configurations, and optimize network resources based on their unique needs. This flexibility facilitates network optimization and capacity expansion.

Open Virtual RAN (Open VRAN) refers to a heterogeneous, decentralized approach to deploying virtualized mobile networks that uses open and interoperable protocols and interfaces. It is implemented over common hardware in a multi-vendor software environment, giving greater flexibility and driving more innovation than traditional RAN designs. The fundamental difficulty in O-RAN today is that the ecosystem is fragmented, not unified, and lacking a clear goal until RAN virtualization standards and wireless technology standards (i.e., 3GPP and IEEE) converge [18]. While O-RAN itself is a framework that defines the architecture and interfaces, ML techniques can be employed within the O-RAN ecosystem to enhance energy efficiency, such as:

Energy-Aware Resource Allocation: ML algorithms can be utilized to optimize the allocation of network resources, such as power and bandwidth, based on the traffic load, user demand, and energy efficiency objectives. By learning from historical data and network conditions, ML models can dynamically allocate resources to minimize energy consumption while maintaining the desired QoS levels.

Deep Learning: Deep learning refers to the use of Deep Neural Networks (DNNs) with multiple layers to learn complex representations from data. In O-RAN, deep learning techniques, such as Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs), can be applied for tasks like signal processing, channel prediction, beamforming optimization, and network optimization.

In addition to ML techniques, several AI-based techniques are used in O-RAN to optimize network performance and enhance efficiency, such as Reinforcement Learning (RL). RL is a subfield of ML where an agent learns through interactions with an environment and receives feedback in the form of rewards or penalties. In O-RAN, RL can be used to optimize dynamic network configuration, power management, and resource allocation based on the received reward signals.

In [19], the authors integrate O-RAN into the RAN and utilize Reinforcement Learning-Based Dynamic Function Splitting (RLDFS). With the Q-Learning and State-Action-Reward-State-Action (SARSA) algorithms, the RLDFS technique decides which functions to split between the Centralized Unit (CU) and the Distributed Unit (DU) in an O-RAN to make the best use of the Renewable Energy Sources (RES) supply while lowering operating costs. Different solar panel and battery sizes are considered in the network model to evaluate the feasibility of using renewable energy for a Mobile Network Operator (MNO) [18]. The study focused on optimizing energy use by shifting renewable energy according to traffic demand and electricity rates.
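To make the difference between the two RL algorithms concrete, the following sketch shows the standard tabular Q-Learning and SARSA update rules. It is a generic textbook formulation, not the RLDFS implementation of [19]: the state names, the two split actions, the reward value, and the learning parameters are hypothetical placeholders.

```python
def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Off-policy update: bootstrap from the best action in the next state."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """On-policy update: bootstrap from the action actually taken next."""
    next_value = q.get((next_state, next_action), 0.0)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * next_value - old)


if __name__ == "__main__":
    # Hypothetical actions: where to place a network function in the split.
    actions = ["process_at_du", "process_at_cu"]
    # Hypothetical prior estimates for the next state.
    q_a = {("high_battery", "process_at_cu"): 1.0,
           ("high_battery", "process_at_du"): 2.0}
    q_b = dict(q_a)

    q_learning_update(q_a, "low_battery", "process_at_cu", reward=1.0,
                      next_state="high_battery", actions=actions)
    sarsa_update(q_b, "low_battery", "process_at_cu", reward=1.0,
                 next_state="high_battery", next_action="process_at_cu")
    print("Q-Learning:", q_a[("low_battery", "process_at_cu")])
    print("SARSA:", q_b[("low_battery", "process_at_cu")])
```

The only difference is the bootstrap term: Q-Learning uses the maximum estimated value in the next state, whereas SARSA uses the value of the action the agent actually selects, so the two can learn different policies under the same exploration strategy.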

RL-based systems are less expensive than the other methods, and their efficacy improves as solar panels and batteries get larger. The key explanation for this result is their adaptation to a larger proportion of renewable energy. For the MNO, the constraint is the battery size. Two baseline methodologies are compared to assess the RL algorithms. The first, known as Distributed RAN (D-RAN), uses DUs to handle both Ultra-Reliable Low Latency Communication (URLLC) and Enhanced Mobile Broadband (eMBB) packets. The second, Centralized RAN (C-RAN), handles URLLC packets at DUs to comply with delay requirements and transfers eMBB packets to the CU to reduce cost. However, the study did not focus on latency; instead, it examined the impact of solar radiation and battery size on cost.

QoS is certainly central to decision making in dynamic function splitting, which concentrates on area traffic capacity. QoS was studied in four main cities with different solar radiation distributions under varied levels of traffic. As a result, the Q-Learning and SARSA approaches mentioned above perform better than C-RAN and D-RAN as solar radiation rates increase. However, the battery life of the UE was not considered. In addition, system bandwidth, spectral data, and peak data rate are important factors that were not explored, and throughput, mobility, and dropped calls were not investigated either.

3.2. Core Network

In the core network layer of 5G, several technologies are utilized to facilitate efficient network operations and support the connectivity and services provided by the network. While not all of these technologies are inherently green enablers, they can contribute to energy efficiency and sustainability in different ways. Networks built on SDN and NFV have added a new level of flexibility that allows network operators to deliver services with very demanding requirements across several industries [20].

Network Slicing (NS) represents the aggregation of network flows as the traffic of particular operators (tenants, users, and infrastructure) that can be individually identified, controlled, and isolated to guarantee the Service Level Agreement (SLA) of each network segment regardless of packet structure and technology. A network slice is a collection of mobile network functions and a set of Radio Access Technologies (RATs). These network functions combine the data class and pieces to adapt to the requirements of diverse applications. SDN and NFV are the techniques used for implementing NS. SDN is capable of centrally managing network traffic through software applications. The role of NFV is to package network functions such as load balancing, firewalls, and routing so that they can run on commodity hardware devices [21].

3.2.1. Software-Defined Networking (SDN)

SDN separates the network control plane from the data plane, allowing for centralized control and programmability of network resources. By dynamically managing and optimizing network traffic flows, SDN improves network efficiency, reduces power consumption, and enables more efficient resource allocation. In an SDN-based 5G network, the control plane, responsible for making decisions about traffic routing and resource allocation, is decoupled from the data plane, which handles the actual forwarding of data packets. The control plane is centralized in a network controller, while the data plane consists of switches, routers, and other network devices. SDN enables the creation of network slices, which are virtualized and independent end-to-end network instances tailored for specific use cases or services. Each network slice can have its own network characteristics, such as bandwidth, latency, and security policies. SDN facilitates the dynamic provisioning, isolation, and management of network slices, allowing operators to efficiently serve diverse service requirements within a single physical infrastructure.
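The separation of control plane and data plane can be sketched in a few lines: a central controller holds the topology and computes paths, while switches only look up a flow table. This is a conceptual toy, not an OpenFlow implementation; the class names, the hop-count shortest-path policy, and the five-switch topology are assumptions made for illustration.

```python
from collections import deque


class Switch:
    """Data plane: forwards by flow-table lookup only."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination -> next hop

    def forward(self, dst):
        # Returns None when no rule is installed for this destination.
        return self.flow_table.get(dst)


class Controller:
    """Control plane: knows the whole topology and installs flow rules."""

    def __init__(self, links):
        self.adj = {}
        for a, b in links:
            self.adj.setdefault(a, set()).add(b)
            self.adj.setdefault(b, set()).add(a)
        self.switches = {name: Switch(name) for name in self.adj}

    def shortest_path(self, src, dst):
        # Breadth-first search gives a minimum-hop path.
        prev = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                break
            for nb in sorted(self.adj[node]):
                if nb not in prev:
                    prev[nb] = node
                    queue.append(nb)
        if dst not in prev:
            return None
        path = []
        node = dst
        while node is not None:
            path.append(node)
            node = prev[node]
        return path[::-1]

    def install_path(self, src, dst):
        # Push one rule per switch along the chosen path.
        path = self.shortest_path(src, dst)
        if path is None:
            return None
        for hop, nxt in zip(path, path[1:]):
            self.switches[hop].flow_table[dst] = nxt
        return path


if __name__ == "__main__":
    links = [("s1", "s2"), ("s2", "s3"), ("s1", "s4"), ("s4", "s5"), ("s5", "s3")]
    ctrl = Controller(links)
    print(ctrl.install_path("s1", "s3"))
    print(ctrl.switches["s1"].forward("s3"))
```

An energy-aware controller could replace the hop-count policy with one that concentrates traffic on fewer links so that idle links can be put to sleep, which is the kind of decision that centralized control makes possible.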

The Hybrid Machine Learning Framework for Energy Efficient Routing in SDN (HyMER) is a hybrid ML framework that builds on SDN to provide traffic-aware and energy-efficient routing at the core level [22]. The study reports that the HyMER heuristics can save up to 50% on link costs and reduce power consumption by 14.7 watts for realistic traffic traces and network topologies. Compared with techniques that prioritize energy savings, HyMER achieves a 2-hop shorter average path length, 15 Mbps higher throughput, and 5 ms lower latency. According to extensive tests, HyMER maintains the trade-off between performance and energy efficiency. It was evaluated at traffic volumes from 20% to 90% on the Abilene and GEANT network topologies and traffic traces. Its throughput is similar to that of heuristics such as Next Shortest Path (NSP), Shortest Path First (SPF), and Next Maximum Utility (NMU), which prioritize performance, while it still saves energy and achieves a delay of 9 ms at 20% traffic volume and 11 ms at 90% traffic volume [22]. The Highest Demand First (HDF) and Smallest Demand First (SDF) heuristics both save more energy but suffer a delay that is 3 ms to 10 ms worse than that of NSP, NMU, and SPF. HyMER's delay falls between those of the two types of heuristics [23]. In this experiment, the average bandwidth is 100 Mbps, and the average flow rate is 11.36 Mbps for the Abilene and 7.79 Mbps for the GEANT topologies and traffic traces [23].
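The general idea of trading path length against energy can be sketched with a simple heuristic. The following is not the HyMER algorithm; it is an illustrative shortest-path search in which links that are currently asleep incur an extra, assumed `wake_penalty`, so that traffic is concentrated on links that are already powered on. The function name, the penalty value, and the example graph are all assumptions made for illustration.

```python
import heapq


def energy_aware_path(graph, active, src, dst, wake_penalty=10.0):
    """Dijkstra in which using a sleeping link costs an extra wake_penalty.

    graph:  {node: {neighbor: base_cost}}
    active: set of frozenset({u, v}) links that are already powered on
    """
    best = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    while heap:
        cost, u = heapq.heappop(heap)
        if u == dst:
            break
        if cost > best.get(u, float("inf")):
            continue  # stale heap entry
        for v, base in graph[u].items():
            extra = 0.0 if frozenset((u, v)) in active else wake_penalty
            new_cost = cost + base + extra
            if new_cost < best.get(v, float("inf")):
                best[v] = new_cost
                prev[v] = u
                heapq.heappush(heap, (new_cost, v))
    if dst not in best:
        return None
    # Rebuild the path by walking the predecessor map backwards.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]
```

With a large penalty the search prefers a longer route over active links and leaves the sleeping link off; with a penalty of zero it reduces to plain shortest-path routing. This mirrors, in a very simplified form, the tension between energy-saving and performance-oriented heuristics discussed above.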

In contrast, virtual-machine energy-saving approaches focus on minimizing the number of physical servers and the management time involved in migration. The HyMER reinforcement component is the first to model both network performance and energy efficiency. The authors used the delay metric in the HyMER algorithm to maximize QoS in terms of delay, congestion, and reliability, and to enhance security by detecting attacks in advance and classifying traffic capacity areas. However, drop calls, coverage, mobility, and UE battery life were not addressed. Another intelligent routing scheme for SDN is based on Neural Networks (NNs): data-flow transmission patterns are determined by NNs instead of flow tables, and NN packets are redeployed with a well-trained NN [24]. Routing schemes aim to reduce energy consumption by minimizing packet delivery times [3], so energy efficiency and routing schemes are interrelated. The simulation uses a 20 MHz bandwidth, a total receiver noise power σ² of -94 dBm, and a maximum transmit power of 20 dBm per UE. Still, drop calls, latency, throughput, peak data rate, coverage, and mobility were not emphasized. A DNN is used to detect network traffic in the demo described in the article [24].

3.2.2. Network Function Virtualization (NFV)

NFV is an architectural approach in 5G networks that aims to virtualize and consolidate network functions onto standard hardware, such as servers, switches, and storage devices. NFV replaces traditional dedicated network equipment with software-based Virtualized Network Functions (VNFs) running on Virtual Machines (VMs) or containers. NFV enables the dynamic scaling of VNFs based on demand. By automatically scaling the number of active VNF instances according to real-time traffic load, operators can allocate resources only when necessary, reducing energy waste during periods of low traffic. This elastic scaling helps maintain energy efficiency by matching resource allocation to current needs. NFV also allows multiple network functions to be consolidated onto a shared hardware platform. By running multiple VNFs on the same physical server or data center, operators can achieve better resource utilization and reduce the number of active physical devices, leading to energy savings. VNF consolidation reduces the overall power consumption and footprint of the network infrastructure. Energy efficiency is improved through resource allocation that combines NFV with LSTM rather than using a simple LSTM [25]. This model was created to achieve high accuracy in forecasting VNF resource demands. Instead of using simulations, an OpenStack-based test environment was used to demonstrate that this approach outperforms the standard model. Optimizing the resource allocation of the related VNFs is one of the most critical concerns when evaluating the quality of services such as Service Function Chaining (SFC); it is necessary to avoid service interruptions caused by resource shortages under highly fluctuating traffic and to lower network operation costs.
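The elastic scaling described above can be sketched as a simple sizing rule. This is an illustrative assumption, not the method of [25]: the forecast load is taken as given (in [25] it would come from the learned predictor), and `capacity_mbps`, `headroom`, and the example trace are hypothetical values.

```python
import math


def instances_needed(load_mbps, capacity_mbps, min_instances=1, headroom=0.2):
    """Smallest number of VNF instances that fits the load plus headroom."""
    required = math.ceil(load_mbps * (1 + headroom) / capacity_mbps)
    return max(min_instances, required)


def scaling_plan(forecast_mbps, capacity_mbps):
    """Instance count for each interval of a forecast load trace."""
    return [instances_needed(load, capacity_mbps) for load in forecast_mbps]


# Hypothetical forecast for four intervals, with 1000 Mbps per instance.
plan = scaling_plan([100, 900, 2400, 300], capacity_mbps=1000)
static_instance_intervals = max(plan) * len(plan)  # always provision for peak
elastic_instance_intervals = sum(plan)             # follow the forecast
```

In this example the elastic plan keeps fewer instances running than static peak provisioning over the same intervals, which is the source of the energy saving; the accuracy of the forecast determines how small the headroom can safely be.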

A DL-based resource allocation technique for NFV is proposed in [26]. It identifies network traffic using its timing characteristics. The intelligent VNF is installed on a separate server with a Graphics Processing Unit (GPU) and operates on the data plane of the SDN. Mininet is used to construct the test-bed and the architecture of the environment. The available bandwidth of the connection between linked switches is set between 5 Mbps and 60 Mbps, and the link bandwidth between a switch and a host is set to 100 Mbps [26]. The delay between connected switches is set between 2 ms and 30 ms. To keep things simple, the authors chose four distinct types of applications with varying network requirements: Class 1 (Real-time Traffic), Class 2 (Audio/Video Streaming), Class 3 (HTTP Browsing), and Class 4 (Restricted/File Transfers). The system can allocate different network resources to different applications, significantly improving network QoS. The VNF is added to the system to perform traffic identification and thereby lessen the load on the SDN controller. The network functions are therefore separated, allowing each module to operate independently. Introducing the VNF also resolves the high load that arises when the SDN controller itself performs traffic identification [26].
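Once traffic has been classified, application-aware allocation can be as simple as a weighted share of the link. The sketch below is only an assumption about how such a policy might look; the weights and the `allocate_bandwidth` helper are hypothetical and are not taken from [26].

```python
def allocate_bandwidth(link_mbps, weights):
    """Split link_mbps across traffic classes in proportion to their weights."""
    total = sum(weights.values())
    return {cls: link_mbps * w / total for cls, w in weights.items()}


# Hypothetical priority weights for the four classes named in the text.
CLASS_WEIGHTS = {
    "real_time": 4,
    "audio_video_streaming": 3,
    "http_browsing": 2,
    "file_transfer": 1,
}

# Shares on a 60 Mbps switch-to-switch link (the upper bound used in the test-bed).
shares = allocate_bandwidth(60, CLASS_WEIGHTS)
```

A proportional split is the simplest possible policy; a deployed system would more likely combine classification with per-class delay and loss targets.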

3.3. Cloud and Edge Computing

In 5G networks, several cloud and edge technologies are utilized to enable efficient and scalable deployments. These technologies enhance the capabilities of 5G networks by providing computational resources, storage, and services closer to the network edge. The key cloud and edge technologies used in 5G networks are the following:

3.3.1. Cloud Radio Access Network (CRAN)

CRAN is an architectural concept used in 5G networks that aims to centralize and virtualize the baseband processing of multiple BSs. In CRAN, the baseband processing functions, including signal processing, modulation/demodulation, coding/decoding, and resource allocation, are centralized in a central data center or cloud infrastructure. This centralization allows for the efficient pooling of baseband resources and enables more flexible and dynamic management of the radio access network. The Baseband Processing Units (BBUs) of multiple BSs are pooled together in the central data center. By sharing BBUs among multiple BSs, CRAN reduces the overall hardware and energy costs, as well as the complexity associated with maintaining and upgrading individual BBUs at each BS. Heterogeneous CRAN (H-CRAN) is a novel idea that increases the capability for large-scale data rates. It enhances the QoS and improves energy efficiency. H-CRAN helps to reduce radio site operation costs, capital costs, and energy problems because its architecture requires fewer BS deployments. It is based on high-power nodes (macro-BS and micro-BS) and low-power nodes (pico and femto cells) to gather data. However, the use of low-power nodes causes interference that affects energy consumption. Thus, controlling interference reduces both the deterioration of spectral efficiency and energy consumption. Furthermore, subsequent research on CRAN has focused on resource allocation, power minimization, and computational complexity using ML techniques. AI approaches are viewed as an optimization tool for improving CRAN. To reduce power consumption in cloud computing platforms, the sleeping cell idea is used with a DNN [27]. Several cells can be put into sleep mode to save as much power as possible. Moreover, real-time applications require more time-efficient techniques [3]. ML is extremely important for separating distributed units and high-power nodes to reduce computing time and power consumption [3]. CRAN can efficiently process resources between cells and eliminate interference. This design change, however, introduces additional technical obstacles to implementation. Furthermore, proper deployment of wireless resources is still required to achieve improved power efficiency.
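The sleeping cell idea can be illustrated with a simple threshold heuristic. This is not the DNN-based controller of [27]; it is a greedy sketch under strong assumptions: every cell has the same capacity, any active cell can absorb the load of a sleeping cell (coverage constraints are ignored), and the `max_util` target is a hypothetical parameter.

```python
def choose_sleeping_cells(loads, capacity, max_util=0.8):
    """Greedily put the least-loaded cells to sleep while capacity suffices.

    loads:    {cell_name: offered load}
    capacity: per-cell capacity in the same units as the loads
    """
    total = sum(loads.values())
    active = set(loads)
    sleeping = []
    # Try the least-loaded cells first: they lose the least by being switched off.
    for cell in sorted(loads, key=loads.get):
        remaining = len(active) - 1
        if remaining >= 1 and total <= remaining * capacity * max_util:
            active.remove(cell)
            sleeping.append(cell)
    return sleeping
```

For example, with loads of 10, 50, 5, and 60 units on four cells of capacity 100, the two lightly loaded cells can sleep while the other two stay within the 80% utilization target. A learned controller aims to make the same kind of decision faster and with awareness of interference and beamforming, which this sketch omits.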

3.3.2. Multi-Access Edge Computing (MEC)

MEC is typically used in the access network layer of the 5G network architecture. MEC nodes are deployed at the edge of the network, usually at BSs or cell towers, to provide computing and storage resources closer to the end-users and devices. MEC nodes are connected to the 5G core network, which enables seamless communication between the MEC nodes and other network functions such as the User Plane Function (UPF) and the Session Management Function (SMF). MEC is designed to support low-latency and high-bandwidth applications by enabling computation and data storage at the edge of the network. By moving computing and storage resources closer to the end-users and devices, it can reduce the amount of data that needs to be transmitted over the network, thereby reducing latency and improving overall network performance. It utilizes edge servers deployed at BSs or nearby data centers to provide computing resources at the network edge. The server can include processing power, storage, and networking capabilities. The infrastructure is designed to handle the specific requirements of mobile networks, enabling faster processing and reduced data transmission. MEC introduces a distributed architecture where computing resources are deployed at multiple points in the network. This allows for localized data processing and offloading of certain tasks from centralized cloud servers, resulting in optimized network traffic and improved performance. MEC enables the development of innovative services and applications that benefit from the low latency and high bandwidth of 5G networks. Examples include Augmented Reality (AR) and Virtual Reality (VR) applications, real-time video analytics, autonomous vehicles, smart cities, and IoT deployments. MEC provides the necessary computing capabilities to support these latency-sensitive and bandwidth-intensive applications. It helps offload certain processing tasks from mobile devices to edge servers. This reduces the amount of data that needs to be transmitted over the network, minimizing congestion and improving overall network efficiency. By processing data locally, MEC also reduces reliance on centralized cloud infrastructure, which can result in cost savings for data transfer and storage.

For high-dimensional MEC scenarios, Deep Reinforcement Learning (DRL) is an effective method for managing complexity [28]. The recurring offloading decision was made using a computational technique. The goal of this study is to reduce the total cost of all users in the MEC system, with an emphasis on latency performance and power consumption [29]. The authors consider a single small cell with a bandwidth of W = 10 MHz and an eNB with an MEC server installed at its center. The UEs are randomly distributed within a 200 m radius of the eNB. The MEC server’s processing capacity is F = 5 GHz/sec, while the CPU frequency of each UE is 1 GHz/sec. The transmission power and idle power of the UE are set to 500 mW and 100 mW, respectively [28]. Because there is just one eNB, inter-cell interference is ignored, and radio resources are not taken into account in this framework. However, the authors did not explore drop calls or other important KPIs such as throughput, peak data rate, spectral efficiency, user experience, UE battery life, and mobility.
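The offloading decision can be made concrete with a small cost comparison that reuses the simulation parameters quoted above (W = 10 MHz, F = 5 GHz, 1 GHz UE CPU, 500 mW transmit power, 100 mW idle power). Everything else in the sketch is an assumption introduced for illustration: the weighted-sum cost, the weight `alpha`, the effective capacitance `kappa`, the task size, the SNR, and the simplification that one UE gets the whole channel and the whole MEC server. It is not the DRL scheme of [28].

```python
import math

W_HZ = 10e6       # cell bandwidth, from the text
F_MEC_HZ = 5e9    # MEC server capacity (cycles per second), from the text
F_UE_HZ = 1e9     # UE CPU frequency (cycles per second), from the text
P_TX_W = 0.5      # UE transmission power, from the text
P_IDLE_W = 0.1    # UE idle power, from the text


def local_cost(cycles, alpha=0.5, kappa=1e-27):
    """Weighted delay/energy cost of running the task on the UE.

    kappa (effective switched capacitance) is an assumed value.
    """
    delay = cycles / F_UE_HZ
    energy = kappa * F_UE_HZ ** 2 * cycles
    return alpha * delay + (1 - alpha) * energy


def offload_cost(bits, cycles, snr_linear, alpha=0.5):
    """Weighted delay/energy cost of uploading the task and running it on MEC."""
    rate = W_HZ * math.log2(1 + snr_linear)  # Shannon rate of the uplink
    t_up = bits / rate
    t_exec = cycles / F_MEC_HZ
    # The UE transmits during upload and idles while the server computes.
    energy = P_TX_W * t_up + P_IDLE_W * t_exec
    return alpha * (t_up + t_exec) + (1 - alpha) * energy


def should_offload(bits, cycles, snr_linear):
    return offload_cost(bits, cycles, snr_linear) < local_cost(cycles)
```

For a hypothetical task of 4 Mbit and 10^9 CPU cycles at an SNR of 20 dB (`snr_linear=100`), offloading is cheaper under this cost model; at very low SNR the upload time dominates and local execution wins. With many users sharing the channel and the server, the decisions become coupled, which is the high-dimensional problem that DRL is used to address.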

3.4. Energy Harvesting

5G energy harvesting refers to the process of capturing and utilizing ambient energy from the environment to power or supplement the energy needs of 5G network devices and infrastructure. It involves converting various forms of energy, such as solar, wind, thermal, vibration, or radio frequency energy, into electrical energy that can be used to power 5G network components. Energy harvesting in 5G networks is particularly relevant due to the massive number of connected devices and the increasing demand for network coverage and capacity.

Some of the key aspects and applications of 5G energy harvesting include:

Self-Powered Devices: Energy harvesting allows for the development of self-powered 5G devices that can operate without relying solely on traditional power sources, such as batteries or direct electrical connections. This enables increased mobility and flexibility in deploying network devices.

IoT and Sensor Networks: 5G energy harvesting enables the deployment of energy-autonomous sensors and IoT devices that can operate in remote or inaccessible locations without requiring frequent battery replacements or external power sources.

Small Cells and BSs: Energy harvesting techniques can be applied to small cells and BSs to reduce their reliance on the electrical grid. By harnessing ambient energy sources, these network components can operate with increased energy efficiency and reduced environmental impact.

Wireless Power Transfer: Energy harvesting techniques can be combined with wireless power transfer technologies, such as radio frequency energy harvesting or resonant inductive coupling, to wirelessly transfer power to 5G devices, eliminating the need for physical power connections.

Energy-Aware Network Planning: Energy harvesting considerations can be incorporated into network planning and deployment strategies to optimize the energy efficiency and sustainability of 5G networks. This involves identifying potential energy sources, assessing their availability and reliability, and designing network architectures that make the most efficient use of harvested energy.

The demand for constant computing power for AI processing and the increasing popularity of IoT devices pose significant challenges to the energy efficiency of communication devices. Energy harvesting allows devices to power themselves, which is essential for off-grid operation, sustainable IoT devices and sensors, infrequently used devices, and long standby intervals. In addition, symbiotic wireless technology and smart power management technology offer potential solutions [30].
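Whether a harvesting device can power itself depends on its duty cycle. If a device draws an active power P_a for a fraction d of the time and a sleep power P_s otherwise, its average draw d·P_a + (1 − d)·P_s must not exceed the harvested power P_h, which gives d ≤ (P_h − P_s)/(P_a − P_s). The sketch below evaluates this bound; the function name and the example power figures are assumptions, and storage losses are ignored.

```python
def max_duty_cycle(harvest_mw, active_mw, sleep_mw):
    """Largest active-time fraction that keeps average draw within the harvest."""
    if harvest_mw <= sleep_mw:
        return 0.0  # harvest cannot even cover sleep mode
    if harvest_mw >= active_mw:
        return 1.0  # harvest covers continuous operation
    return (harvest_mw - sleep_mw) / (active_mw - sleep_mw)
```

For a hypothetical sensor that harvests 1 mW, draws 50 mW when active, and 0.01 mW when asleep, the sustainable duty cycle is roughly 2%, which illustrates why energy harvesting suits infrequently used devices with long standby intervals.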

3.4.1. Millimeter Waves (mmWaves)

Millimeter wave (mmWave) is a frequency band that ranges approximately from 30 GHz to 300 GHz [31]. Since it is positioned between microwave and infrared waves, this spectrum is suitable for high-speed wireless broadband communications. It is used in the most recent 802.11 Wi-Fi standards (operating at 60 GHz) [32]. Because of bandwidth limitations and increasing data demand in 5G networks, mmWave has been explored as a way to increase network capacity. mmWave frequencies provide larger bandwidths, enabling significantly higher data transmission rates compared to lower frequency bands. This increased capacity allows for faster data transfer and supports the growing demand for high-bandwidth applications, such as streaming, virtual reality, and augmented reality. While mmWave technology offers higher capacity, it typically requires denser network deployments due to its shorter range and susceptibility to signal attenuation.
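The shorter range of mmWave follows directly from the free-space path loss, FSPL(dB) = 20·log10(4πdf/c), which grows with frequency. The sketch below evaluates this standard formula; the 100 m distance and the 3.5 GHz and 28 GHz carriers are example values chosen for illustration, and real links suffer additional losses from blockage and atmospheric absorption that the formula does not capture.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB between isotropic antennas."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


loss_sub6 = fspl_db(100, 3.5e9)    # example mid-band carrier
loss_mmwave = fspl_db(100, 28e9)   # example mmWave carrier
extra_loss = loss_mmwave - loss_sub6
```

At the same distance, the 28 GHz link loses about 18 dB more than the 3.5 GHz link (20·log10(8)), which has to be recovered through denser deployments or antenna gain.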

Denser networks can potentially lead to higher energy consumption as more BSs or small cells are required. Energy-efficient design and optimization strategies, such as intelligent resource allocation, sleep modes, and dynamic power management, are important for mitigating the increased energy demands of mmWave deployments. The rapid expansion of the mobile industry, together with the growing need for high data speeds, has prompted networks to open new mmWave spectrum that can concurrently serve a group of users by means of beamforming [33].

Beamforming is a critical technique used in mmWave 5G networks to overcome the challenges associated with high-frequency signals and to enable efficient and reliable communication. It uses multiple antennas to form a focused and directional signal beam. Instead of broadcasting signals uniformly in all directions, beamforming concentrates the transmitted energy in specific directions, increasing the signal strength and mitigating the effects of path loss and blockages. Beamforming is used in both the transmitter (transmit beamforming) and the receiver (receive beamforming). It utilizes advanced spatial signal processing algorithms to dynamically adjust the phase and amplitude of the signals at each antenna element.

In practical implementations, hybrid beamforming is commonly used in mmWave 5G networks. Hybrid beamforming combines digital and analog beamforming techniques. Digital beamforming is performed at the baseband using digital signal processing, while analog beamforming is applied at the radio frequency level using phase shifters or analog components. This hybrid approach provides a trade-off between performance and complexity. Beamforming in mmWave 5G networks offers several advantages. It enables longer range and better coverage by focusing the transmitted energy in the desired direction, which enhances energy efficiency and compensates for the high path loss of mmWave signals. Beamforming also improves signal quality, enabling higher data rates and reducing interference from other devices and reflections. Therefore, beamforming has been embraced as a strategic approach for resolving interference between neighboring cells [34].
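The phase adjustment at each antenna element can be illustrated for a uniform linear array. The sketch below assumes half-wavelength element spacing, angles measured from broadside, and phase-only weights equal to the conjugate of the steering vector (as an analog phase-shifter network would apply); the function names and the 64-element example are hypothetical.

```python
import cmath
import math


def steering_vector(n, theta_deg, spacing=0.5):
    """Response of an n-element uniform linear array (spacing in wavelengths)."""
    phase = 2 * math.pi * spacing * math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * phase * k) for k in range(n)]


def array_gain_db(n, steer_deg, look_deg):
    """Power gain towards look_deg when the beam is steered to steer_deg."""
    # Phase-only weights, normalized so the total transmit power is unchanged.
    weights = [a.conjugate() / math.sqrt(n) for a in steering_vector(n, steer_deg)]
    response = sum(w * a for w, a in zip(weights, steering_vector(n, look_deg)))
    # The small constant avoids log10(0) exactly in a pattern null.
    return 10 * math.log10(abs(response) ** 2 + 1e-12)
```

In the steered direction the gain equals 10·log10(n), about 18 dB for 64 elements, while other directions receive far less power. This is the mechanism by which beamforming offsets mmWave path loss and limits interference towards neighboring cells.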

Communications using mmWaves suffer from significant propagation loss, high attenuation, and diffraction [35]. BSs that use mmWave are typically equipped with massive antenna arrays that help overcome path loss, improve spectral efficiency, and increase capacity. These enormous antenna arrays make energy efficiency a challenge. Deep-learning-based methods can run on massively parallel architectures with distributed memory, such as GPUs, and have attracted industry attention because of their energy efficiency and high-throughput computing performance.

3.4.2. Heterogeneous Network (HetNet)

HetNets are characterized by the integration of different types of network nodes, such as macro cells, small cells, and Wi-Fi access points, within the same network. They are essential in 5G networks to address coverage gaps, increase network capacity, and provide seamless connectivity.

They utilize a mix of different-sized cells, strategically deployed to optimize coverage and capacity in various areas. By deploying small cells or Wi-Fi access points in dense urban or high-traffic areas, the coverage and capacity can be enhanced while reducing the reliance on macro cells. This optimized coverage helps reduce energy consumption by avoiding unnecessary transmission of signals over long distances.

HetNets enable offloading traffic from macro cells to small cells or Wi-Fi networks, relieving congestion and increasing overall network capacity. By utilizing small cells or Wi-Fi access points where appropriate, HetNets can better distribute network load, reducing the energy consumption associated with handling high traffic volumes in macro cells. They can benefit from energy harvesting technologies such as solar panels or radio frequency energy harvesting. These techniques can provide a supplemental power source, reducing the reliance on electrical grid power and enabling energy-autonomous operation for small cell deployments.

When planning and optimizing HetNet deployments, energy harvesting potential should be considered. Assessing ambient energy sources like solar or radio frequency signals and incorporating energy harvesting capabilities into network infrastructure can enhance energy efficiency and reduce the network's carbon footprint.

DRL is used to tackle energy efficiency and resource allocation optimization in uplink HetNets. However, spectrum reuse causes interference between cells of heterogeneous sizes (pico, femto, macro, and small cells). Small cells can help the network meet the data demands of many connected devices as well as heavy data traffic at high data rates, but they are prone to significant power consumption [3]. In the simulation, the network consists of two Macro, eight Pico, and sixteen Femto BSs with radii of 500 m, 100 m, and 30 m, respectively, and fifty UEs. As the number of UEs grows, so does the co-channel interference [36]. Co-channel interference is observed when Pico and Femto BSs are installed in the same radio coverage area as Macro BSs. 25 UEs are randomly distributed over the Macro BSs’ coverage area, which is specified as a 200-meter square [37]. The Micro BSs are dispersed at random throughout the study region. UEs have a maximum transmit power of 23 dBm. The noise power density is set to -174 dBm/Hz. Moreover, the system bandwidth consists of 30 channels, each with a bandwidth of 180 kHz. To achieve high spectrum efficiency in HetNets, Pico and Femto BSs can reuse and share the same channels as Macro BSs. As a result, HetNets are viewed as a suitable technique for increasing system capacity in forthcoming mobile communications. For large-scale learning problems, the proposed Dueling Double Deep Q-Network (D3QN) technique improves system capacity and network utility while requiring less processing time. A limitation is that drop calls, throughput, latency, UE battery life, and mobility KPIs were not considered.
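The simulation parameters above can be turned into link-budget quantities with two standard conversions: noise power in dBm is the noise density in dBm/Hz plus 10·log10 of the bandwidth in Hz, and a power in dBm corresponds to 10^(dBm/10) mW. The helper names below are illustrative.

```python
import math


def noise_power_dbm(density_dbm_hz, bandwidth_hz):
    """Total noise power over a bandwidth for a flat noise density."""
    return density_dbm_hz + 10 * math.log10(bandwidth_hz)


def dbm_to_mw(dbm):
    """Convert a power level from dBm to milliwatts."""
    return 10 ** (dbm / 10)


channel_noise = noise_power_dbm(-174, 180e3)       # one 180 kHz channel
system_noise = noise_power_dbm(-174, 30 * 180e3)   # all 30 channels (5.4 MHz)
ue_max_power_mw = dbm_to_mw(23)                    # maximum UE transmit power
```

Each 180 kHz channel therefore sees roughly -121.4 dBm of noise, the full 5.4 MHz system bandwidth roughly -106.7 dBm, and the 23 dBm UE limit corresponds to about 200 mW. These values set the signal-to-noise ratios within which any resource allocation scheme for this scenario operates.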

Table 1 summarizes the techniques discussed for the various 5G enablers.

4. Future Directions


Energy efficiency is a critical aspect of 5G networks, and further research is needed to optimize energy consumption and improve sustainability. One potential avenue for exploration is the integration of CGP in SON to enhance energy efficiency. The following areas can be addressed in future research:

Energy-Efficient Network Design: Further research can focus on developing energy-efficient network architectures and protocols specifically designed for 5G networks. Investigating the trade-offs between energy consumption and network performance metrics, such as latency, throughput, and reliability, can help optimize network design. By leveraging CGP algorithms, network architectures can be optimized to reduce energy consumption while maintaining satisfactory performance levels.

Power Management Techniques: Exploring advanced power management techniques can significantly contribute to reducing energy consumption in 5G networks. CGP can be used to develop intelligent algorithms that dynamically adjust power levels or optimize operational parameters of network elements. This can include adaptive power control, dynamic sleep modes, or efficient resource allocation, ensuring energy is utilized optimally without compromising network performance.

Energy Harvesting Integration: Integrating energy harvesting techniques in 5G networks can contribute to reducing energy consumption and promoting sustainability. CGP algorithms can be used to optimize the integration of energy harvesting sources, such as solar panels or RF energy harvesting, into network components. This allows for the efficient utilization of harvested energy to power network elements and reduce reliance on conventional energy sources.

Energy-Aware Self-Organizing Algorithms: SON can benefit from CGP by developing energy-aware self-organizing algorithms. These algorithms can analyze real-time energy consumption data and network conditions to dynamically optimize network parameters, such as coverage, handover algorithms, or resource allocation, while considering energy efficiency as a key objective. CGP-based algorithms can adaptively adjust network configurations to minimize energy consumption while maintaining desired network performance.

Dynamic Network Optimization: CGP algorithms can be utilized to enable dynamic network optimization in SON environments. By continuously analyzing network data and performance metrics, CGP-based algorithms can dynamically adjust network parameters, such as transmit power levels, antenna configurations, or channel assignments, to achieve optimal energy efficiency. This adaptive optimization approach ensures that the network operates efficiently under varying conditions and traffic patterns.

By leveraging CGP within SON environments, 5G networks can achieve higher levels of energy efficiency, sustainability, and overall network performance. By addressing the technologies mentioned above, 5G networks can be further optimized to meet the evolving requirements of diverse applications. Further research can be conducted on developing more advanced AI algorithms and models to enhance natural language processing, computer vision, and decision-making capabilities. This will enable more intelligent and context-aware network operations. Optimizing network slicing techniques is another important direction for future research. This involves exploring innovative approaches to dynamically allocate network slices based on specific application requirements, such as latency, bandwidth, and reliability. Further investigation is needed to understand the impact of network slicing on network performance, scalability, and cost-effectiveness, and to identify ways to optimize slice management and orchestration.

The integration of edge computing and MEC is another promising area. Advancing research on edge computing architectures and frameworks to support low-latency and high-bandwidth applications in 5G networks will be crucial. Additionally, it is essential to explore the integration of AI and ML algorithms at the edge to enable real-time data processing, enhance energy efficiency, and improve decision-making. Developing energy-efficient communication protocols and algorithms tailored for 5G networks is important. These protocols should consider the unique characteristics of diverse use cases and traffic patterns. Investigating energy-saving mechanisms, such as adaptive modulation and coding, sleep mode operation, and traffic offloading strategies, will contribute to reducing energy consumption in wireless communications. Additionally, exploring cross-layer optimization techniques that consider both the physical layer and higher-layer network protocols will contribute to improved energy efficiency. Energy efficiency is a key aspect of 5G networks, and further research is needed to develop energy-efficient network architectures and protocols specifically designed for 5G. Investigating the trade-offs between energy consumption and network performance metrics is important for optimizing network design. Advanced power management techniques, such as dynamic sleep modes, energy harvesting, and intelligent resource allocation, can be explored to reduce energy consumption in 5G networks.

5. Conclusions

In conclusion, this research paper has provided an in-depth analysis of the 5G network, focusing on its various layers, enablers, and the application of AI-based techniques to improve energy efficiency and overall performance. Throughout the paper, we have emphasized the importance of energy efficiency in implementing environmentally friendly strategies within the 5G network. By examining the different layers of the network, we have identified key enablers and their characteristics, shedding light on the underlying technologies that drive the 5G network's functionality. Additionally, we have explored how AI techniques can be employed to enhance energy efficiency and optimize KPIs, contributing to a more sustainable and efficient network infrastructure. Furthermore, this paper has outlined potential directions and challenges for future research in the field of 5G networks. By identifying areas that require further investigation and addressing potential obstacles, we hope to inspire and guide scholars in their continued exploration of this rapidly evolving domain. In summary, this research paper outlines the significance of energy efficiency in sustainable 5G networks. Through the application of AI and addressing challenges, it promises improved performance and reduced energy consumption for a greener telecommunications approach.

References

- Bohli and R. Bouallegue, "How to Meet Increased Capacities by Future Green 5G Networks: A Survey," IEEE Access, 2019.

- H. Huang et al., "Deep-Learning-Based Millimeter-Wave Massive MIMO for Hybrid Precoding," IEEE Transactions on Vehicular Technology, 2019.

- Mughees et al., "Towards Energy Efficient 5G Networks Using Machine Learning: Taxonomy, Research Challenges, and Future Research Directions," IEEE Access, 2020.

- Nyalapelli et al., "Recent Advancements in Applications of Artificial Intelligence and Machine Learning for 5G Technology: A Review," in 2023 2nd International Conference on Paradigm Shifts in Communications Embedded Systems, Machine Learning and Signal Processing (PCEMS), Nagpur, India, 2023.

- V. Gunturu et al., "Artificial Intelligence Integrated with 5G for Future Wireless Networks," in 2023 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 2023.

- L. M. P. Larsen et al., "Toward Greener 5G and Beyond Radio Access Networks—A Survey," IEEE Open Journal of the Communications Society, 2023. [CrossRef]

- Y. Arjoune and S. Faruque, "Artificial Intelligence for 5G Wireless Systems: Opportunities, Challenges, and Future Research Direction," in 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 2020.

- M. Al-Khafaji and L. Elwiya, "ML/AI Empowered 5G and Beyond Networks," in 2022 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 2022.

- L. Sanguinetti et al., "Deep Learning Power Allocation in Massive MIMO," IEEE, 2018.

- S. Rommel et al., "The Fronthaul Infrastructure of 5G Mobile Networks," in IEEE 23rd International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), 2018.

- S. Ismail et al., "Recent Advances on 5G Resource Allocation Problem Using PD-NOMA," in 2020 International Symposium on Networks, Computers and Communications (ISNCC), 2020.

- L. Bai et al., "Transmit Power Minimization for Vector-Perturbation Based NOMA Systems: A Suboptimal Beamforming Approach," IEEE Transactions on Wireless Communications, 2019.

- R. Dawadi et al., "Power-Efficient Resource Allocation in NOMA Virtualized Wireless Networks," in IEEE Global Communications Conference (GLOBECOM), 2016.

- Q. Wu et al., "Spectral and Energy-Efficient Wireless Powered IoT Networks: NOMA or TDMA?" IEEE Transactions on Vehicular Technology, 2018.

- S. Zeb et al., "NOMA Enhanced Backscatter Communication for Green IoT Networks," in International Symposium on Wireless Communication Systems (ISWCS), 2019.

- L. Salaün et al., "Weighted Sum-Rate Maximization in Multi-Carrier NOMA with Cellular Power Constraint," IEEE, 2019.

- L. Bai et al., "Multi Satellite Relay Transmission in 5G: Concepts, Techniques, and Challenges," IEEE Network, 2018.

- L. Gavrilovska et al., "From Cloud RAN to Open RAN," Wireless Personal Communications, 2020.

- T. Pamuklu et al., "Reinforcement Learning Based Dynamic Function Splitting in Disaggregated Green Open RANs," in IEEE International Conference on Communications, 2021.

- K. Srinivas et al., "Functional Overview of Integration of AIML with 5G and Beyond the Network," in 2023 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 2023.

- F. Debbabi et al., "Overview of AI-Based Algorithms for Network Slicing Resource Management in B5G and 6G," in 2022 International Wireless Communications and Mobile Computing (IWCMC), Dubrovnik, Croatia, 2022.

- G. Assefa and O. Ozkasap, "HyMER: A Hybrid Machine Learning Framework for Energy Efficient Routing in SDN," arXiv preprint arXiv:1909.08074, 2019.

- A. Osseiran et al., "5G Mobile and Wireless Communications Technology," Cambridge University Press, 2016.

- M. Uddin, M. R. Amin, and M. G. Ahammad, "NNIRSS: Neural Network-Based Intelligent Routing Scheme for SDN," Neural Computing and Applications, 2019.

- H.-G. Kim et al., "Machine Learning-Based Method for Prediction of Virtual Network Function Resource Demands," IEEE, 2019.

- J. Xu, J. Wang, Q. Qi, H. Sun, and B. He, "IARA: An Intelligent Application-Aware VNF for Network Resource Allocation with Deep Learning," IEEE, 2018.

- G. Du et al., "Deep Neural Network-Based Cell Sleeping Control and Beamforming Optimization in Cloud-RAN," in IEEE 90th Vehicular Technology Conference (VTC2019-Fall), 2019.

- M. Liaqat et al., "Power-Domain Non-Orthogonal Multiple Access (PD-NOMA) in Cooperative Networks: An Overview," Wireless Networks, 2020.

- J. Li et al., "Deep Reinforcement Learning Based Computation Offloading and Resource Allocation for MEC," in IEEE Wireless Communications and Networking Conference (WCNC), 2018.

- S. Aneesh and A. N. Shaikh, "A Survey for 6G Network: Requirements, Technologies and Research Areas," in 2023 2nd International Conference on Edge Computing and Applications (ICECAA), Namakkal, India, 2023.

- W. S. H. M. Wan Ahmad et al., "5G Technology: Towards Dynamic Spectrum Sharing Using Cognitive Radio Networks," IEEE Access, 2020.

- M. Baumgartner et al., "Simulation of 5G and LTE-A Access Technologies via Network Simulator NS-3," in 2021 44th International Conference on Telecommunications and Signal Processing (TSP), 2021.

- J. Chen et al., "Intelligent Massive MIMO Antenna Selection Using Monte Carlo Tree Search," IEEE Transactions on Signal Processing, 2019.

- S. El Hassani et al., "Overview on 5G Radio Frequency Energy Harvesting," Advances in Science, Technology and Engineering Systems Journal (ASTESJ), 2019.

- S. K. Singh et al., "The Evolution of Radio Access Network towards Open-RAN: Challenges and Opportunities," in 2020 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), 2020.

- N. Zhao et al., "Deep Reinforcement Learning for User Association and Resource Allocation in Heterogeneous Cellular Networks," IEEE Transactions on Wireless Communications, 2019.

- H. Ding et al., "A Deep Reinforcement Learning for User Association and Power Control in Heterogeneous Networks," Ad Hoc Networks, 2020.

- K. Chen et al., "Sub-Array Hybrid Precoding for Massive MIMO Systems: A CNN-Based Approach," IEEE Communications Letters, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).