Submitted: 07 April 2024

Posted: 08 April 2024


Abstract

Keywords:

1. Introduction

2. Related Work

3. Method of AdvSentiNet

3.1. Framework

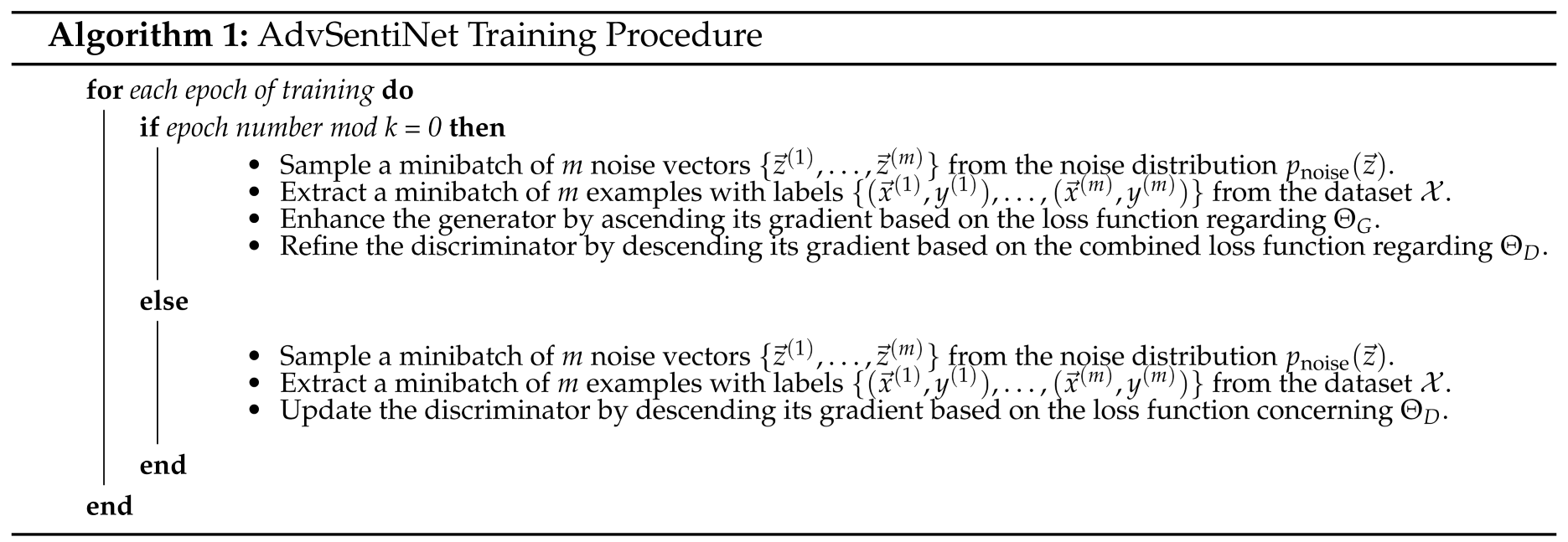

3.2. Implementation and Training

4. Experiments

4.1. Datasets and Preprocessing

4.2. Implementation

4.2.1. Learning Rate and Momentum Optimization

4.2.2. Regularization and Dropout Strategies

4.2.3. Hyperparameter Optimization Procedure

4.3. Experimental Results

4.4. In-depth Performance Evaluation

5. Concluding Remarks and Future Directions


| | SemEval 2015 | | | | SemEval 2016 | | | |
|---|---|---|---|---|---|---|---|---|
| | Negative | Neutral | Positive | Total | Negative | Neutral | Positive | Total |
| Train Data | 3.2% | 24.4% | 72.4% | 1278 | 26.0% | 3.8% | 70.2% | 1879 |
| Test Data | 5.3% | 41.0% | 53.7% | 597 | 20.8% | 4.9% | 74.3% | 650 |
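The class shares in the dataset statistics table above can be converted back into approximate per-class counts, and the largest test-set share is the accuracy that a trivial majority-class predictor would obtain. The following Python sketch is a hypothetical helper for that sanity check; the names `DATASET_STATS`, `approx_counts`, and `majority_baseline` are illustrative and not part of AdvSentiNet, and the counts are only approximate because the table's percentages are rounded to one decimal.

```python
"""Sanity checks on the dataset statistics table (hypothetical helper, not AdvSentiNet code)."""

# Class shares (%) and totals copied verbatim from the dataset statistics table.
DATASET_STATS = {
    ("SemEval 2015", "train"): ({"negative": 3.2, "neutral": 24.4, "positive": 72.4}, 1278),
    ("SemEval 2015", "test"): ({"negative": 5.3, "neutral": 41.0, "positive": 53.7}, 597),
    ("SemEval 2016", "train"): ({"negative": 26.0, "neutral": 3.8, "positive": 70.2}, 1879),
    ("SemEval 2016", "test"): ({"negative": 20.8, "neutral": 4.9, "positive": 74.3}, 650),
}


def approx_counts(shares, total):
    """Turn rounded percentage shares into approximate per-class counts."""
    return {label: round(total * pct / 100) for label, pct in shares.items()}


def majority_baseline(shares):
    """Return the most frequent class and the accuracy of always predicting it."""
    label = max(shares, key=shares.get)
    return label, shares[label] / 100


if __name__ == "__main__":
    for (dataset, split), (shares, total) in DATASET_STATS.items():
        label, acc = majority_baseline(shares)
        print(f"{dataset} {split}: {approx_counts(shares, total)} | majority={label} ({acc:.1%})")
```

Read this way, always predicting *positive* would already score about 53.7% on the SemEval 2015 test set and 74.3% on the SemEval 2016 test set, which is a useful floor when interpreting the percentages in the results tables.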

| Hyperparameter | Explored Range | Optimized Value (SemEval 2015) | Optimized Value (SemEval 2016) |
|---|---|---|---|
| | [0.007, 0.01, 0.02, 0.03, 0.05, 0.09] | 0.02 | 0.03 |
| | [0.7, 0.8, 0.9] | 0.9 | 0.7 |
| | [0.1, 0.15, 0.2, 0.4] | 0.1 | 0.15 |
| | [0.4, 0.6, 0.8, 1.6] | 0.4 | 0.6 |
| k | [3, 4, 5] | 3 | 3 |
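The explored ranges in the hyperparameter table define a discrete search space. The Python sketch below enumerates that space as an exhaustive grid, which makes its size explicit; it is an illustration under assumptions, not the paper's tuning procedure, which is not reproduced here. Only `k` is named in the table, so `hp1` to `hp4` are hypothetical placeholder names for the unlabeled rows, and `evaluate` stands for a user-supplied validation routine.

```python
"""Grid view of the explored hyperparameter ranges (illustrative sketch only)."""
import itertools
from typing import Callable, Dict, Iterator

Config = Dict[str, float]

# Explored values copied from the hyperparameter table. Only "k" is named there;
# hp1..hp4 are hypothetical placeholders for the unlabeled rows.
SEARCH_SPACE = {
    "hp1": [0.007, 0.01, 0.02, 0.03, 0.05, 0.09],
    "hp2": [0.7, 0.8, 0.9],
    "hp3": [0.1, 0.15, 0.2, 0.4],
    "hp4": [0.4, 0.6, 0.8, 1.6],
    "k": [3, 4, 5],
}

# Optimized values reported in the table for each dataset.
OPTIMIZED = {
    "SemEval 2015": {"hp1": 0.02, "hp2": 0.9, "hp3": 0.1, "hp4": 0.4, "k": 3},
    "SemEval 2016": {"hp1": 0.03, "hp2": 0.7, "hp3": 0.15, "hp4": 0.6, "k": 3},
}


def grid(space) -> Iterator[Config]:
    """Yield every configuration in the Cartesian product of the explored values."""
    names = list(space)
    for values in itertools.product(*(space[n] for n in names)):
        yield dict(zip(names, values))


def grid_size(space) -> int:
    """Number of configurations an exhaustive grid would have to train."""
    size = 1
    for values in space.values():
        size *= len(values)
    return size


def in_space(config: Config, space) -> bool:
    """Check that every value of a configuration was among the explored values."""
    return all(config[name] in values for name, values in space.items())


def grid_search(space, evaluate: Callable[[Config], float]) -> Config:
    """Return the configuration with the highest score.

    `evaluate` is hypothetical: it should train a model with the given
    configuration and return a validation metric (higher is better).
    """
    return max(grid(space), key=evaluate)
```

Here `grid_size(SEARCH_SPACE)` is 6 × 3 × 4 × 4 × 3 = 864, so an exhaustive grid would need 864 training runs per dataset. That cost is the usual motivation for sequential model-based alternatives such as the Tree-structured Parzen Estimator, which sample the same space adaptively instead of exhaustively. Both reported optimal configurations lie inside the explored space, as `in_space` confirms.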

| | | SemEval 2015 | | SemEval 2016 | |
|---|---|---|---|---|---|
| | | In-sample | Out-of-sample | In-sample | Out-of-sample |
| With Ontology | AdvSentiNet | 89.7% | 82.5% | 91.5% | 87.3% |
| | Previous Version | 88.8% | 81.7% | 91.0% | 84.4% |
| Without Ontology | AdvSentiNet | 96.6% | 82.2% | 96.2% | 88.2% |
| | Previous Version | 94.9% | 80.7% | 95.1% | 80.6% |
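The results table supports two simple derived quantities: the out-of-sample (beyond-sample) gain of AdvSentiNet over the previous version, and the gap between its in-sample (within-sample) and out-of-sample scores, a rough indicator of overfitting. The Python sketch below computes both from the reported percentages; the helper names are illustrative, and all differences are in percentage points.

```python
"""Derived quantities from the in-sample / out-of-sample results table (illustrative sketch)."""

# (in-sample %, out-of-sample %) copied from the results table.
RESULTS = {
    ("With Ontology", "SemEval 2015"): {"AdvSentiNet": (89.7, 82.5), "Previous Version": (88.8, 81.7)},
    ("With Ontology", "SemEval 2016"): {"AdvSentiNet": (91.5, 87.3), "Previous Version": (91.0, 84.4)},
    ("Without Ontology", "SemEval 2015"): {"AdvSentiNet": (96.6, 82.2), "Previous Version": (94.9, 80.7)},
    ("Without Ontology", "SemEval 2016"): {"AdvSentiNet": (96.2, 88.2), "Previous Version": (95.1, 80.6)},
}


def out_of_sample_gain(setting):
    """Out-of-sample improvement of AdvSentiNet over the previous version, in points."""
    models = RESULTS[setting]
    return round(models["AdvSentiNet"][1] - models["Previous Version"][1], 1)


def generalization_gap(setting, model="AdvSentiNet"):
    """In-sample minus out-of-sample score for one model, in points."""
    in_sample, out_of_sample = RESULTS[setting][model]
    return round(in_sample - out_of_sample, 1)


for setting in RESULTS:
    print(setting, "gain:", out_of_sample_gain(setting), "gap:", generalization_gap(setting))
```

For example, without the ontology the out-of-sample gain is 1.5 points on SemEval 2015 and 7.6 points on SemEval 2016, while AdvSentiNet's in-sample/out-of-sample gap is largest (14.4 points) on SemEval 2015 without the ontology.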

| SemEval 2015 | | SemEval 2016 | |
|---|---|---|---|
| AdvSentiNet (w/o ontology) | 82.2% | AdvSentiNet (w/o ontology) | 88.2% |
| Previous Iteration | 81.7% | Leading Competitor | 88.1% |
| Other Notable Model | 81.7% | Previous Iteration | 87.0% |
| Another Model | 81.3% | Another Model | 85.8% |
| Yet Another Model | 81.2% | Yet Another Model | 85.6% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).