Submitted: 07 April 2024

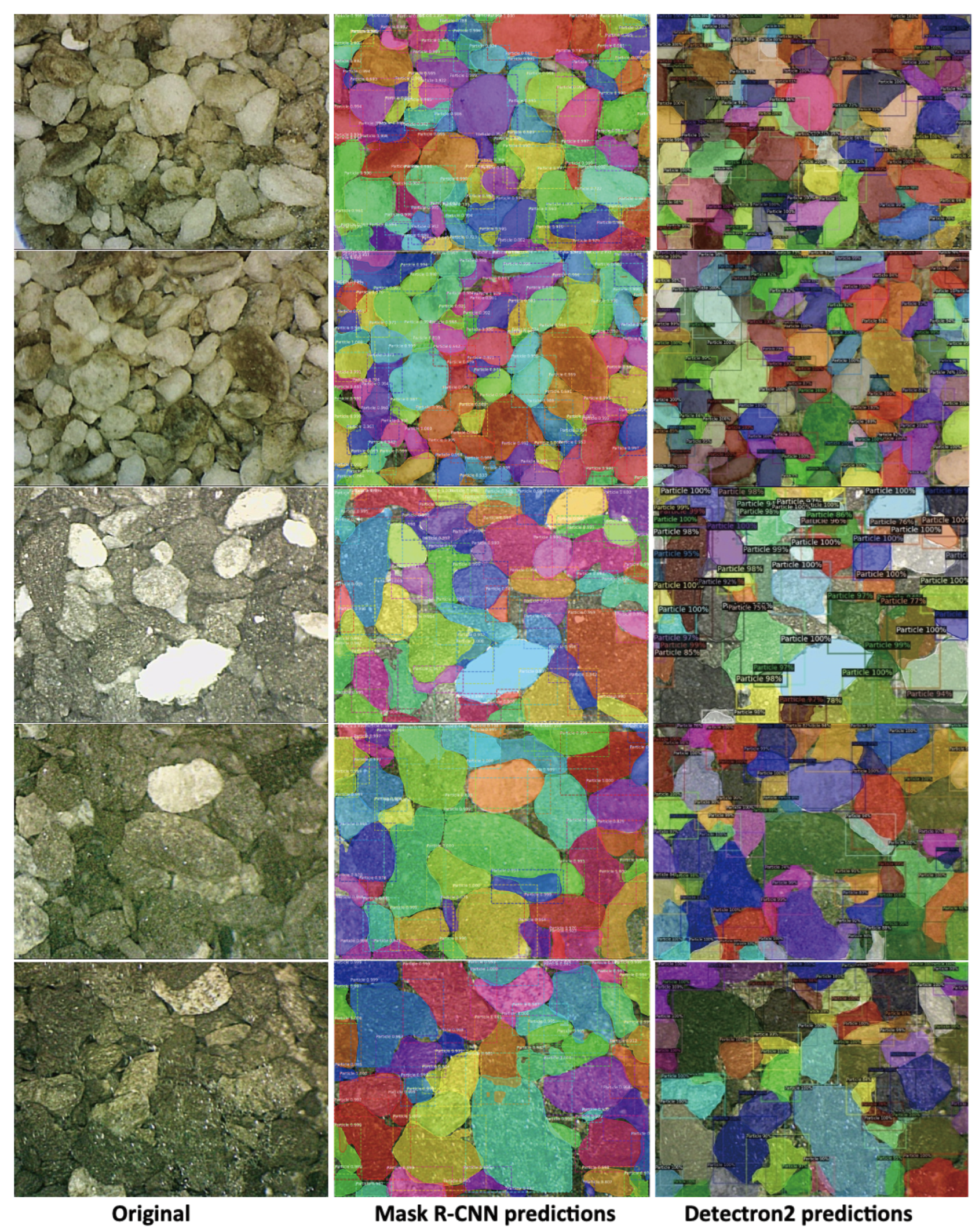

Posted: 09 April 2024


Abstract

Keywords:

1. Introduction

2. Methodology

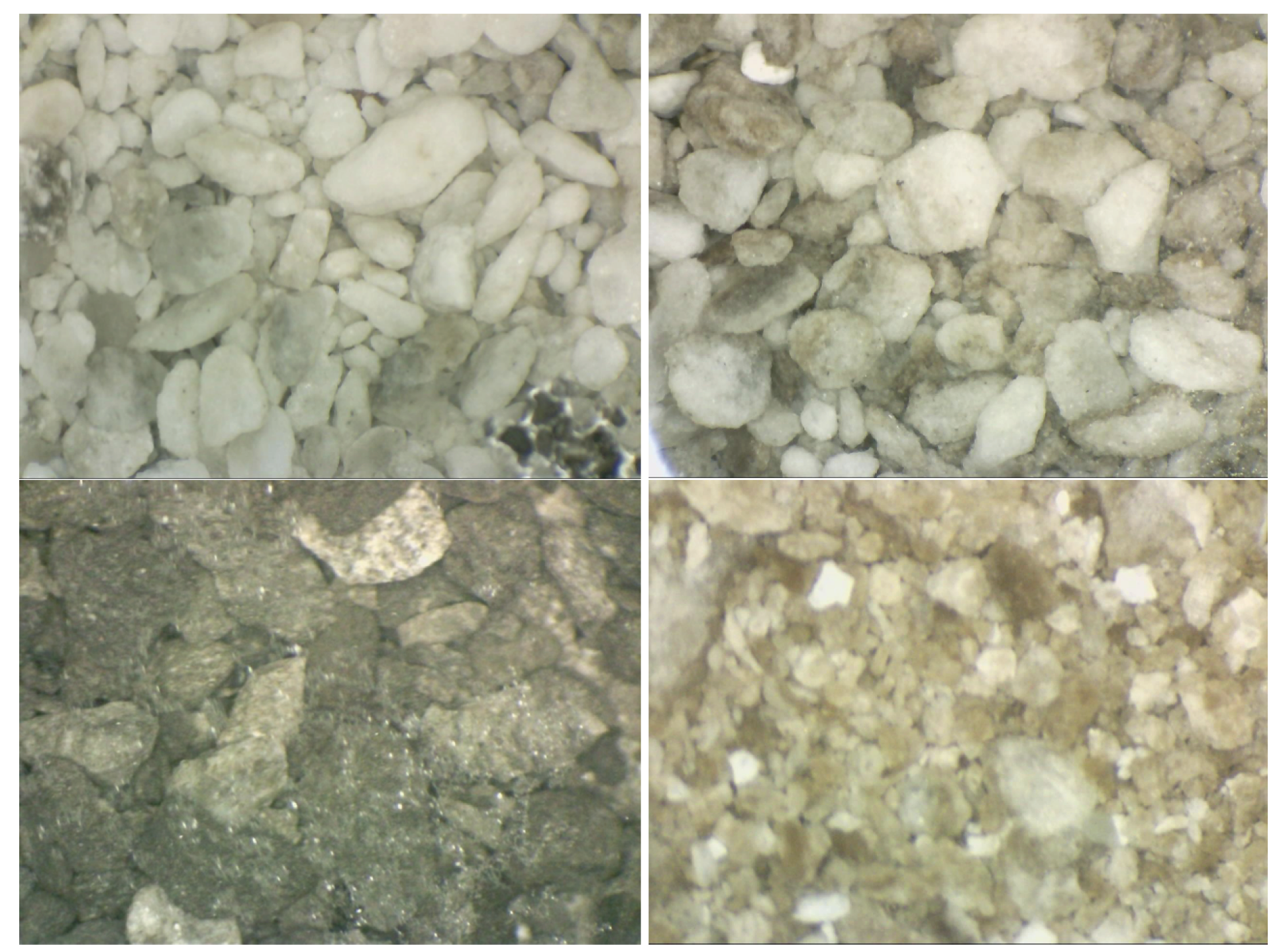

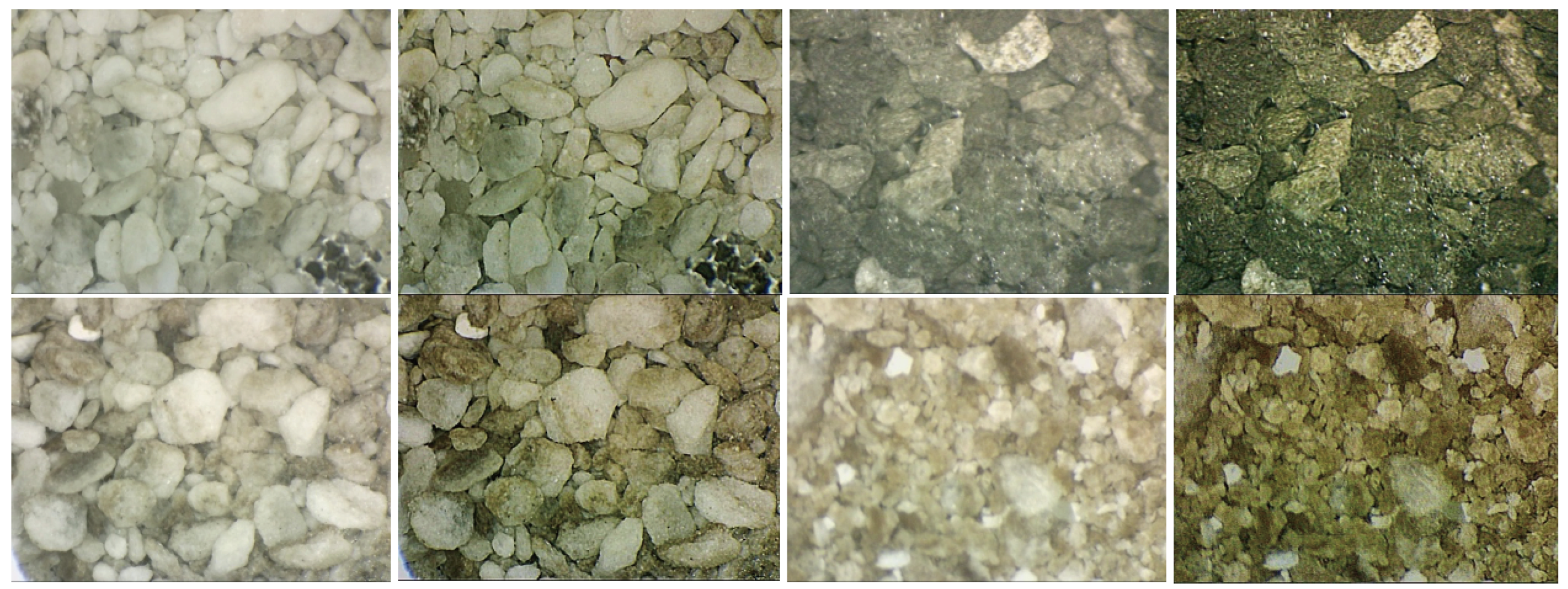

2.1. Data Collection

- Blurriness: Several images had low resolution, which reduced their clarity.

- Indistinct boundaries: Particle boundaries and edges were difficult to distinguish in the images.

- Limited data size: The dataset was small, comprising only 69 images.

2.2. Data Quality Enhancement and Preprocessing

2.2.1. Image Denoising

2.2.2. Exposure Correction

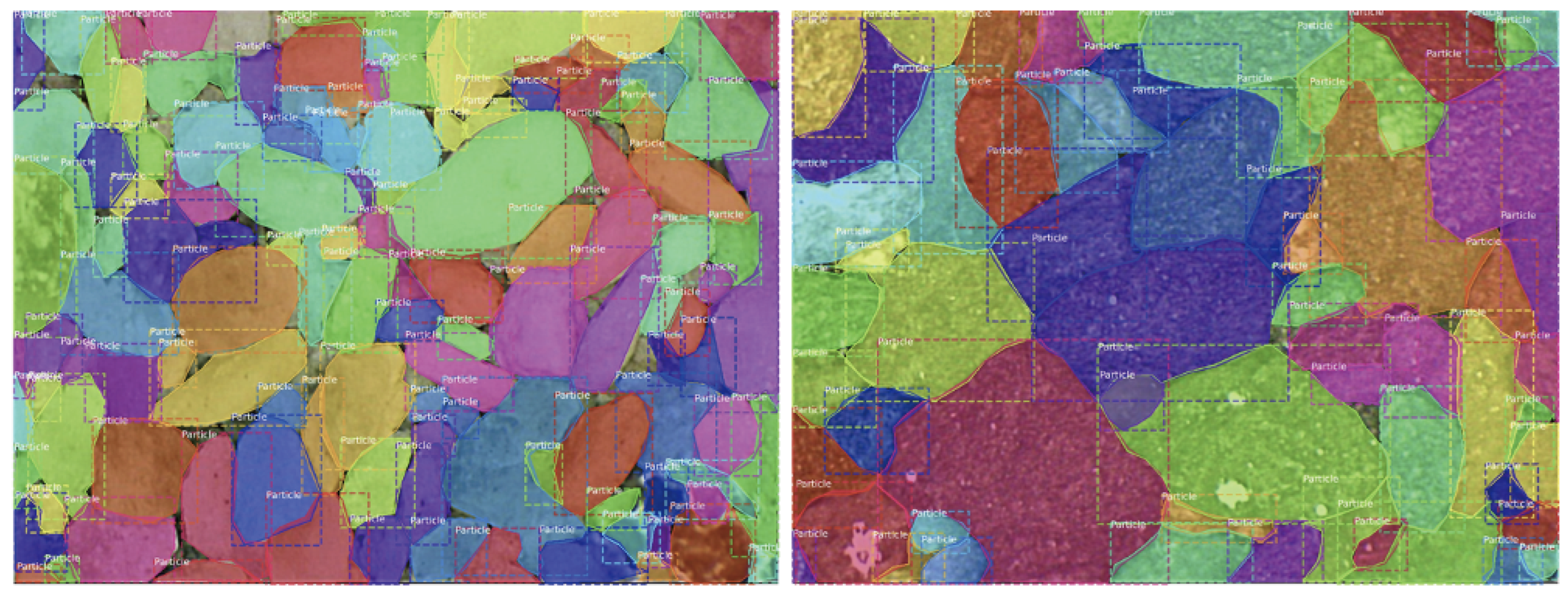

2.3. Data Annotation

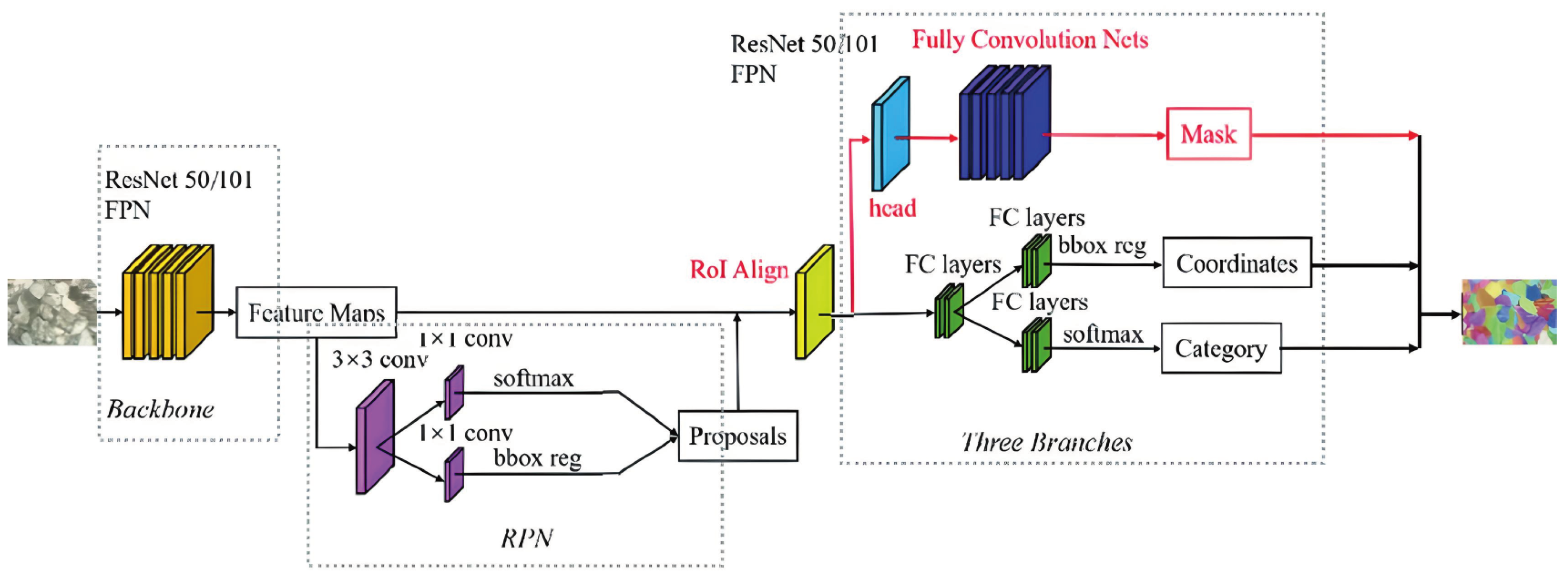

2.4. Mask R-CNN

2.5. Detectron2

3. Implementation Details

3.1. Evaluation Metrics

3.2. Transfer Learning

3.3. Training the Mask R-CNN Model

- Phase 1: Training the network heads for 40 epochs with an increased learning rate of 0.006 (base learning rate × 2)

- Phase 2: Fine-tuning layers 3+ (ResNet stage 3 and above) for 120 epochs

- Phase 3: Fine-tuning layers 4+ (ResNet stage 4 and above) for 160 epochs

- Phase 4: Fine-tuning all layers for 200 epochs with a reduced learning rate of 0.0003 (base learning rate / 10)
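The four-phase schedule can be written down as a small configuration table, which also makes the learning-rate arithmetic explicit: both 0.006 (× 2) and 0.0003 (/ 10) imply a base learning rate of 0.003. This is a minimal sketch under stated assumptions, not the authors' training script. The text gives no learning rate for Phases 2 and 3, so the base rate is assumed there. The `"heads"`, `"3+"`, `"4+"` and `"all"` labels are assumed to follow the layer-selection strings of the Matterport Mask R-CNN code base; the `Phase` class and `SCHEDULE` list are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass

# Base learning rate implied by the text: 0.006 is "learning rate * 2" and
# 0.0003 is "learning rate / 10", so both point to a base value of 0.003.
BASE_LR = 0.003


@dataclass(frozen=True)
class Phase:
    """Hypothetical record describing one training phase."""
    name: str
    layers: str       # trainable part of the network in this phase
    epochs: int       # epoch figure as reported in the text
    lr_scale: float   # multiplier applied to BASE_LR

    @property
    def learning_rate(self) -> float:
        return BASE_LR * self.lr_scale


# ASSUMPTION: Phases 2 and 3 use the base rate (the text does not say).
# ASSUMPTION: the layer labels mirror Matterport Mask R-CNN's `layers` strings.
SCHEDULE = [
    Phase("Phase 1", "heads", 40, 2.0),
    Phase("Phase 2", "3+", 120, 1.0),
    Phase("Phase 3", "4+", 160, 1.0),
    Phase("Phase 4", "all", 200, 0.1),
]

if __name__ == "__main__":
    for phase in SCHEDULE:
        print(f"{phase.name}: layers={phase.layers}, "
              f"epochs={phase.epochs}, lr={phase.learning_rate:g}")
```

If the Matterport library was indeed used, each phase would correspond to one `model.train(...)` call with `learning_rate`, `epochs` and `layers` arguments. In that library `epochs` is the cumulative epoch at which training stops rather than the number of additional epochs. The text alone does not indicate whether the figures above are per-phase durations or cumulative targets.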

3.4. Training the Detectron2 Model

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Models | AP | AP50 | AP75 |
|---|---|---|---|
| Mask R-CNN: ResNet101 | 27.5 | 55.5 | 24.8 |
| Detectron2: ResNet50 | 19.8 | 42.8 | 17.9 |
| Detectron2: ResNet101 | 15.5 | 34.1 | 13.3 |
| Detectron2: ResNeXt101-32x8d | 18.6 | 38.9 | 15.9 |
| Detectron2: ResNeXt101-LSJ | 17.3 | 36.9 | 15.6 |
| Detectron2: Cascade R-CNN | 17.5 | 38 | 13.8 |
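The AP, AP50 and AP75 columns presumably follow the COCO convention: AP50 and AP75 are average precision at a single mask-IoU threshold of 0.50 and 0.75, and AP is the mean over the ten thresholds 0.50, 0.55, ..., 0.95. The text names the metrics but not the evaluation tool, so this is an assumption. The sketch below illustrates how the three numbers relate. It simplifies the COCO evaluation: one image, one class, masks stored as sets of pixel coordinates, and no crowd regions or per-image detection limits. All function names are hypothetical.

```python
from typing import List, Sequence, Set, Tuple

Pixel = Tuple[int, int]
Mask = Set[Pixel]                 # binary mask stored as its foreground pixels
Prediction = Tuple[float, Mask]   # (confidence score, predicted mask)


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two binary masks."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def average_precision(preds: Sequence[Prediction], gts: Sequence[Mask],
                      iou_thr: float) -> float:
    """AP at one IoU threshold for a single image and class (simplified)."""
    if not gts:
        raise ValueError("AP is undefined without ground-truth instances")
    matched = [False] * len(gts)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    # Greedy matching: higher-scoring predictions claim ground truths first.
    for _, mask in sorted(preds, key=lambda p: p[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if not matched[j]:
                iou = mask_iou(mask, gt)
                if iou > best_iou:
                    best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts))
    # Replace each precision by the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sample precision at 101 recall levels 0.00, 0.01, ..., 1.00 (COCO-style).
    total = 0.0
    for k in range(101):
        r = k / 100
        total += next((p for p, rec in zip(precisions, recalls) if rec >= r), 0.0)
    return total / 101


def coco_style_ap(preds: Sequence[Prediction], gts: Sequence[Mask]) -> float:
    """AP averaged over the IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [(50 + 5 * i) / 100 for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)


def square(r0: int, c0: int, size: int) -> Mask:
    """Toy helper: a size x size square mask with top-left corner (r0, c0)."""
    return {(r, c) for r in range(r0, r0 + size) for c in range(c0, c0 + size)}


if __name__ == "__main__":
    gts = [square(0, 0, 10), square(20, 20, 10)]
    preds = [
        (0.9, square(0, 0, 10)),    # exact match, IoU = 1.0
        (0.8, square(20, 22, 10)),  # shifted by 2 px, IoU = 80/120
        (0.7, square(50, 50, 5)),   # overlaps nothing: false positive
    ]
    print("AP50:", average_precision(preds, gts, 0.50))
    print("AP75:", average_precision(preds, gts, 0.75))
    print("AP:  ", coco_style_ap(preds, gts))
```

In the toy example the shifted prediction (IoU ≈ 0.67) counts as a true positive at 0.50 but not at 0.75. This gives AP50 = 1.0, AP75 = 51/101 ≈ 0.505 and AP = 71/101 ≈ 0.703. It shows why AP75, and AP averaged over the stricter thresholds, fall well below AP50 when predicted particle boundaries are only approximately aligned with the annotations.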

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).