Submitted: 08 April 2024

Posted: 09 April 2024

Abstract

Keywords:

1. Introduction

1.1. Literature Review

1.2. Contributions

- Novel Method for Integrating Control and Deep Learning: The proposed hybrid intelligent control method for adaptive MG optimisation integrates deep learning algorithms with basic rule-based control approaches. It combines the learning capacity of deep neural networks with the interpretability of rule-based logic to provide a complete solution for optimising adaptive MG operations.

- Enhanced Flexibility (Adaptability) and Intelligence: The hybrid intelligent control method greatly increases the flexibility and intelligence of MG control systems. Using deep learning algorithms such as GRU, LSTM, and RNN, the system can learn complex patterns and correlations from past data, allowing for more informed and dynamic control decisions in real time.

- Improved Efficiency and Performance: Integrating deep learning techniques into the proposed method enhances the effectiveness and performance of MG operations. By optimising the EMS, the system increases the utilisation of RESs, reduces peak demand, and improves overall system stability and resilience, thereby maximising the environmental advantages of MG deployment.

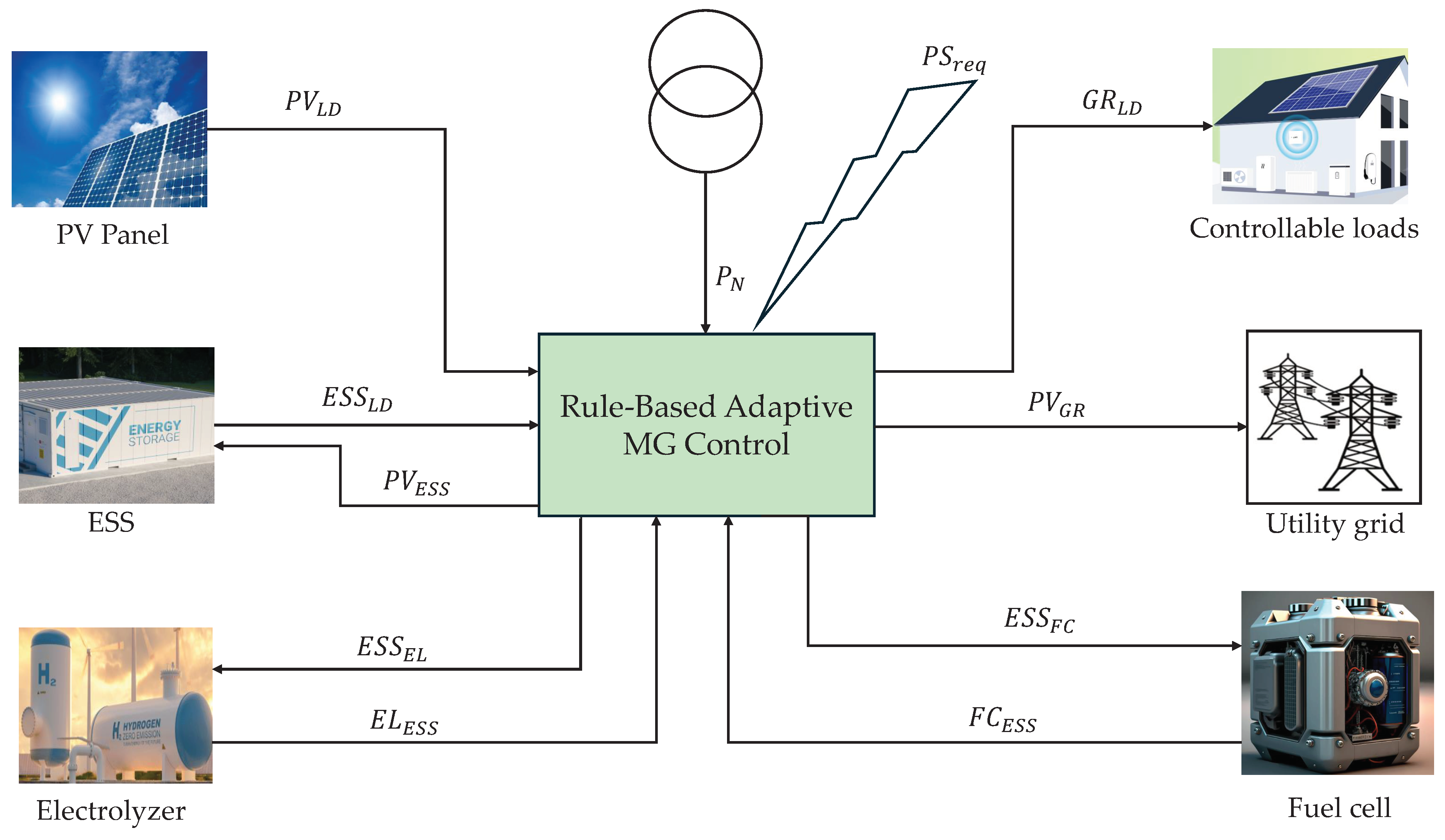

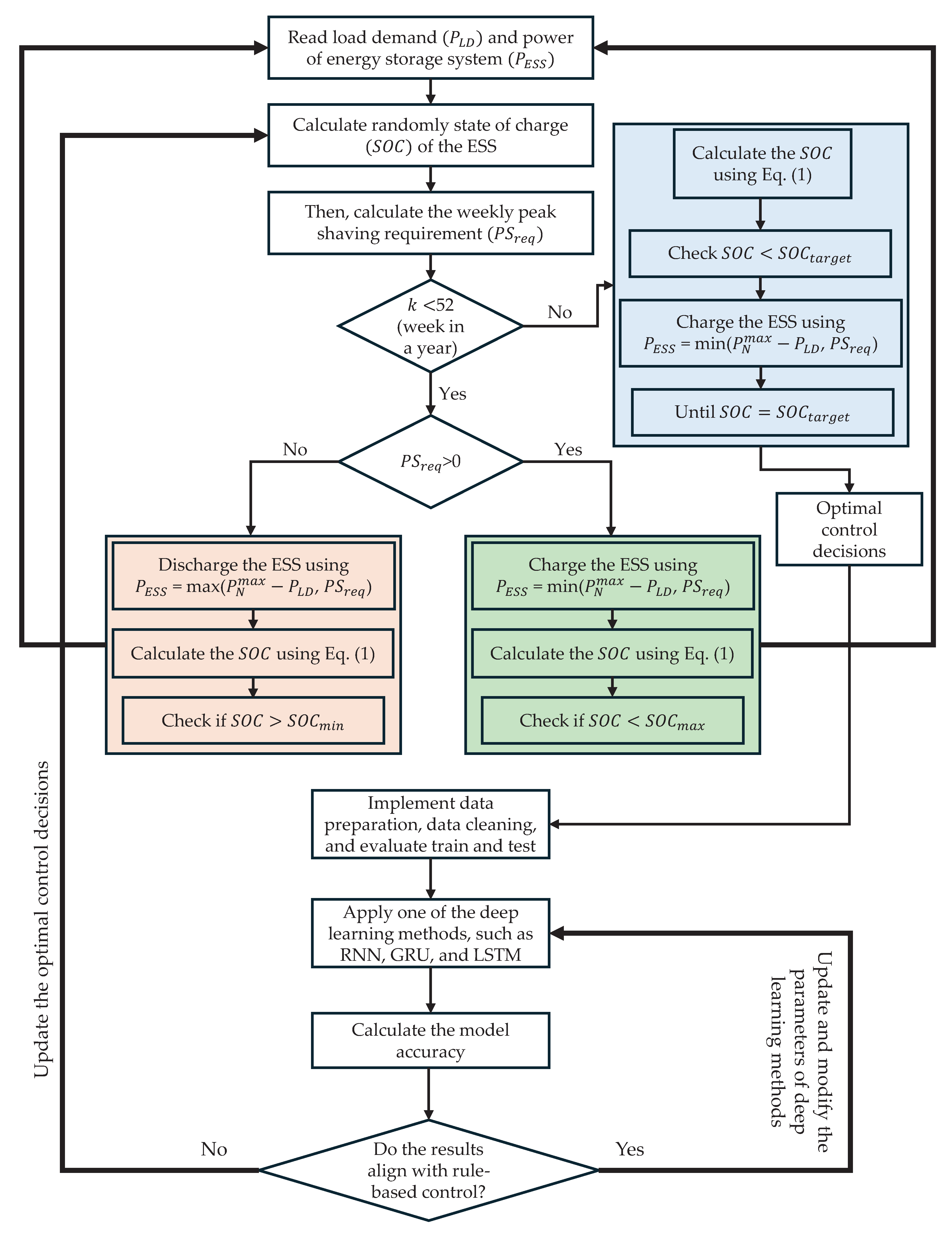

2. Materials and Methods

2.1. Rule-Based Control

- The power imported from the utility grid is minimized.

- The usage of the ESS is penalized to prevent it from being charged from the utility grid.

- Exporting energy to the utility grid is encouraged.

- Charging: The constraint can be rewritten as:

- Discharging: The constraint can be rewritten as:
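As a rough illustration of how the three priorities and the charging and discharging cases above can fit together, the following is a minimal sketch of one rule-based dispatch step. It assumes a conventional state-of-charge update (the SoC rises by η_c·P·Δt/C when charging and falls by P·Δt/(η_d·C) when discharging). The class, function, and parameter names and all numeric defaults are illustrative assumptions, not values or formulations taken from this study.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    # All values are illustrative assumptions, not parameters from the paper.
    capacity_kwh: float = 10.0   # C, battery capacity
    soc: float = 0.5             # state of charge as a fraction of C
    soc_min: float = 0.2
    soc_max: float = 0.9
    eta_charge: float = 0.95     # charging efficiency
    eta_discharge: float = 0.95  # discharging efficiency


def rule_based_step(pv_kw, load_kw, bat, dt_h=1.0):
    """Dispatch one time step: PV serves the load first, surplus charges the
    battery, any remaining surplus is exported; deficits use the battery
    first and the grid last."""
    pv_to_ld = min(pv_kw, load_kw)
    surplus = pv_kw - pv_to_ld
    deficit = load_kw - pv_to_ld

    # Charging: limited by the headroom up to soc_max (charged from PV only,
    # never from the grid).
    headroom_kw = (bat.soc_max - bat.soc) * bat.capacity_kwh / (bat.eta_charge * dt_h)
    pv_to_bat = min(surplus, max(headroom_kw, 0.0))
    pv_to_gr = surplus - pv_to_bat

    # Discharging: limited by the energy available above soc_min.
    available_kw = (bat.soc - bat.soc_min) * bat.capacity_kwh * bat.eta_discharge / dt_h
    bat_to_ld = min(deficit, max(available_kw, 0.0))
    gr_to_ld = deficit - bat_to_ld

    # Assumed standard SoC update for the two cases.
    bat.soc += bat.eta_charge * pv_to_bat * dt_h / bat.capacity_kwh
    bat.soc -= bat_to_ld * dt_h / (bat.eta_discharge * bat.capacity_kwh)

    return {"pv_to_ld": pv_to_ld, "pv_to_bat": pv_to_bat, "pv_to_gr": pv_to_gr,
            "bat_to_ld": bat_to_ld, "gr_to_ld": gr_to_ld}
```

In this sketch the grid is only a last resort for the load and the battery is never charged from it, which is one simple way to reflect the stated priorities; a cost-based formulation would express the same preferences through penalty weights instead.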

2.2. General Formulations of Deep Learning Techniques

2.2.1. Long Short Term Memory

2.2.2. Gated Recurrent Unit

2.3. Integration of Rule-Based Control with Deep Learning Techniques

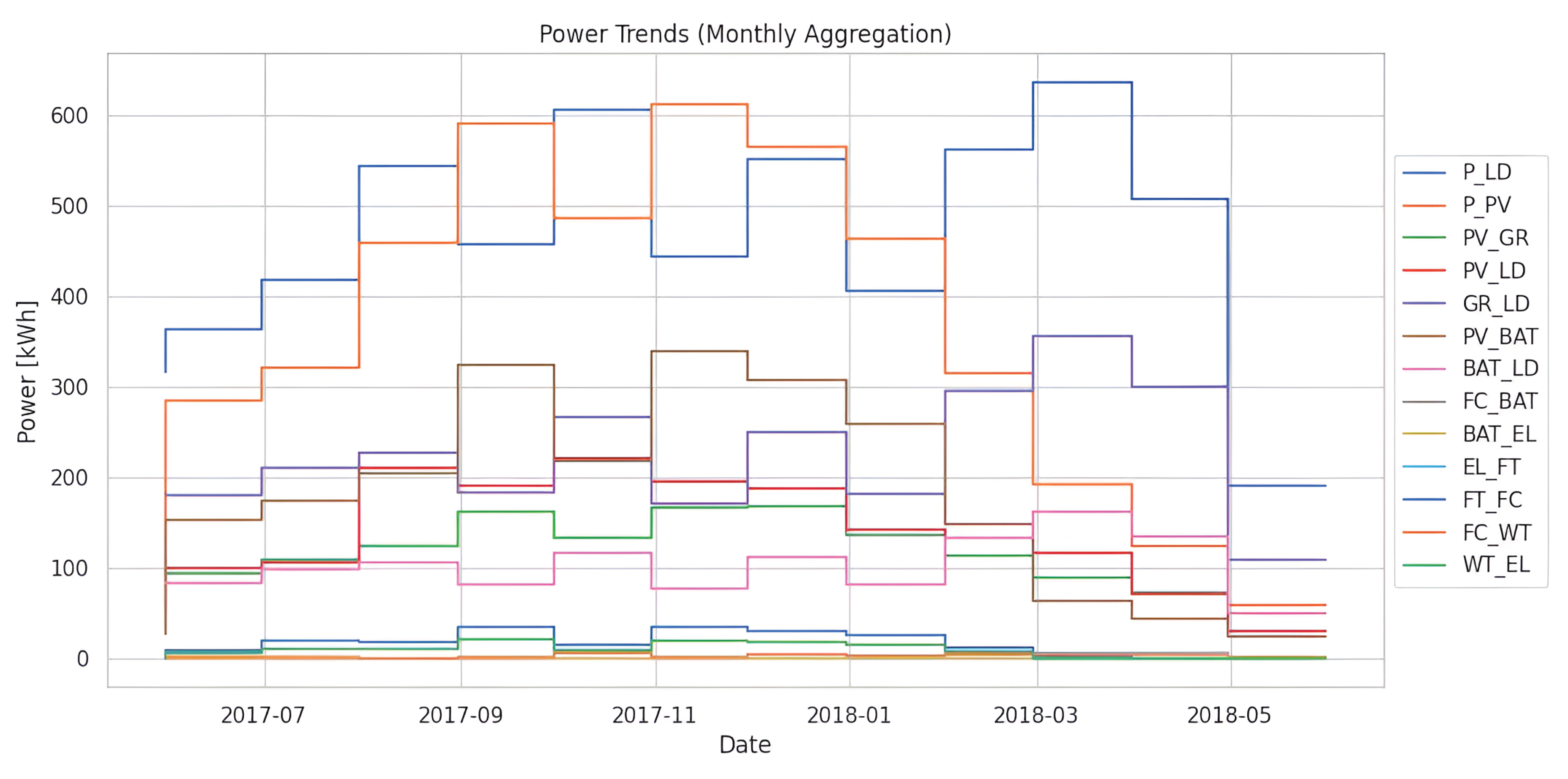

2.4. Dataset Preprocessing

- Accumulated: The column is the total need of the smart building; hence, the sum of the columns , , and fulfils it. The photovoltaic energy source distributes the power to the columns , , and .

- Additional elements: The power needs in these columns are at negligible levels since , , , and require a small amount of power for ignition.

- Main elements: Since the presented smart building system mainly circulates power within LD, PV, GR, and BAT, the corresponding columns are considered the main elements.

- Significant changes in power quantities are found across different time periods. For example, on May 31, 2017, total power usage was 316.75 kWh, with PV generation accounting for 83.65 kWh, power from PV to the grid for 44.65 kWh, and power from PV to the load for 49.90 kWh. In contrast, on March 31, 2018, overall power consumption increased to 635.77 kWh, accompanied by changes in PV generation (192.31 kWh) and other power distribution components.

- Seasonal variations are evident in the dataset, with distinct trends observed across different months. For example, during the summer months, such as June and July 2017, both power consumption and generation peaked, indicating higher energy demand and increased solar irradiance. Conversely, in winter months, such as December 2017, power consumption remained relatively stable, while PV generation decreased due to reduced daylight hours.

- Figure 4 underscores the role of RESs in power generation. For instance, on April 30, 2018, the PV contributed significantly to overall power generation, with PV generation reaching 124.53 kWh and the flow from the WT to the EL at 0.33 kWh. These assets are crucial in reducing dependency on conventional grid power and mitigating environmental impact.

- ESSs, particularly batteries, facilitate efficient power management within the smart building. Notably, while certain power components such as , , , , , and are essential for energy transfer and system operation, their individual contributions to overall power consumption and generation are minimal. For instance, on May 31, 2017, , , , , , and collectively accounted for less than 1 kWh of power transfer.
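The relationship described for the accumulated columns suggests a simple consistency check on each record: the load should equal the sum of the flows into it, and the PV output should equal the sum of the flows leaving the PV. The sketch below assumes, based on the nomenclature, that these flows are PV-to-LD, GR-to-LD, and BAT-to-LD for the load, and PV-to-LD, PV-to-BAT, and PV-to-GR for the PV. The column names, the tolerance, and the sample record are hypothetical.

```python
# Hypothetical column names; the original dataset's symbols may differ.
LOAD_INFLOWS = ("pv_to_ld", "gr_to_ld", "bat_to_ld")
PV_OUTFLOWS = ("pv_to_ld", "pv_to_bat", "pv_to_gr")


def balance_residuals(row):
    """Return (load residual, PV residual) in kWh for one record."""
    load_res = row["ld"] - sum(row[c] for c in LOAD_INFLOWS)
    pv_res = row["pv"] - sum(row[c] for c in PV_OUTFLOWS)
    return load_res, pv_res


def is_consistent(row, tol_kwh=0.01):
    """True when both accumulated columns match their component flows."""
    return all(abs(r) <= tol_kwh for r in balance_residuals(row))


# Made-up record used only to exercise the check.
row = {"ld": 12.0, "pv": 9.0, "pv_to_ld": 5.0, "gr_to_ld": 4.0,
       "bat_to_ld": 3.0, "pv_to_bat": 2.5, "pv_to_gr": 1.5}
print(is_consistent(row))
```

Records that fail such a check could be flagged during preprocessing before they reach model training.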

2.5. Model and Hyperparameter Search

2.5.1. RNN-Based Architectures:

2.5.2. Optimizers:

2.5.3. Learning Rate Schedulers:

2.6. Implementation Details

3. Results and Discussions

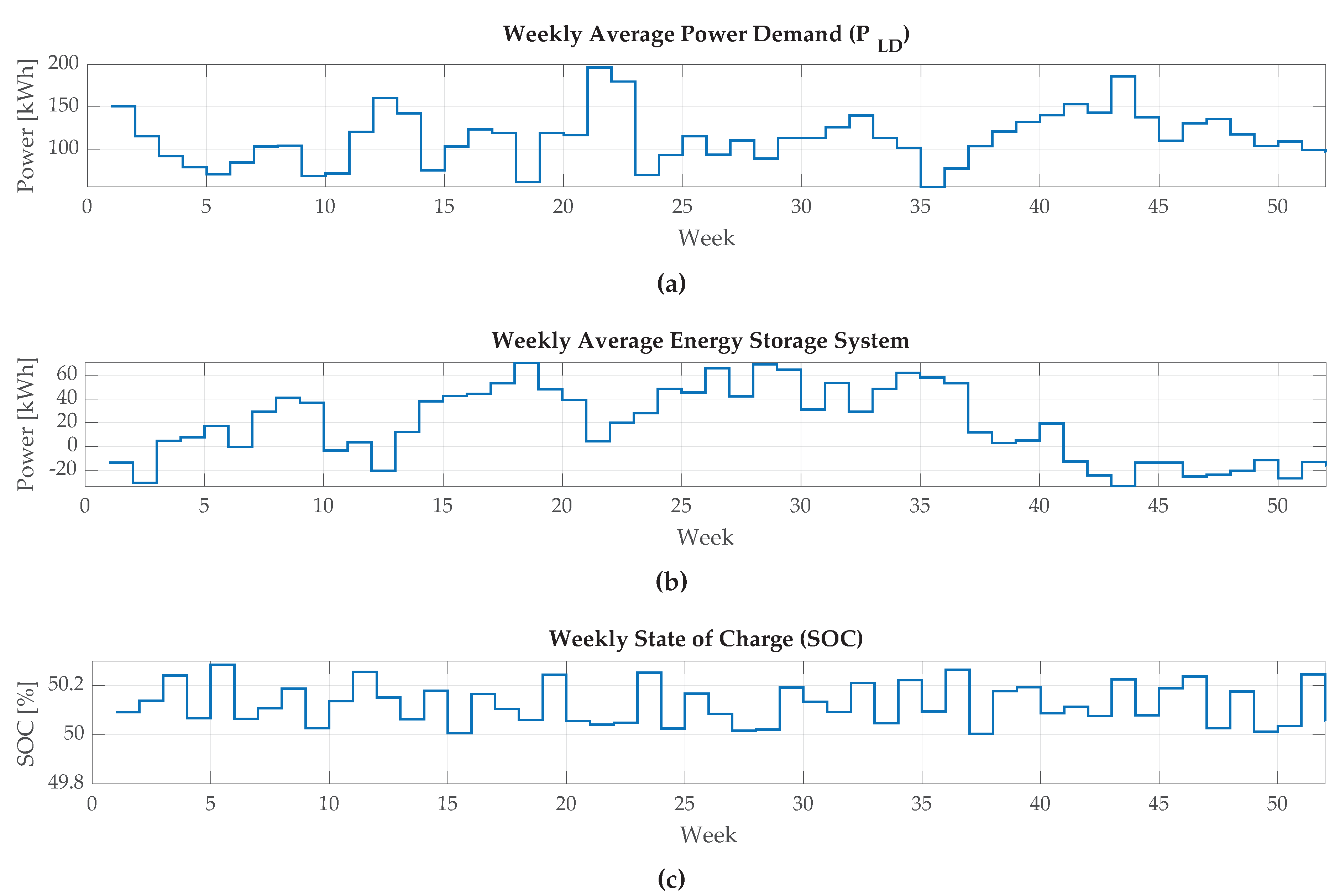

3.1. The Results of the Rule-Based Control

3.2. The Results of the Deep Learning Methods

- Optimizer: The experiments showed that no single optimizer can be considered better than the others. Although SGD appears less often than the others among the reported results, it is still a suitable candidate.

- Learning Rate Scheduler: The constant learning rate schedule dominates the results.

- Depth of the Architecture: For the dataset used, relatively shallow models give better results, regardless of the recurrent layer type.
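The three dimensions discussed above (optimizer, learning rate schedule, and architecture depth) can be pictured as a configuration grid. The sketch below enumerates such a grid using only values that appear in the reported results table; whether the study explored exactly this grid, or every combination in it, is an assumption. Function and variable names are illustrative.

```python
from itertools import product

OPTIMIZERS = ("adam", "rmsprop", "sgd")
LAYER_TYPES = ("sRNN", "LSTM", "GRU")
UNITS = (5, 10, 15, 50)
DEPTHS = (1, 2, 3)
# Only the constant schedule appears in the reported results; other schedules
# were compared in the study but are not named here, so they are omitted.
LR_SCHEDULES = ("constant",)


def arch_label(layer, units, depth):
    """Build a label such as 'GRU(15) + GRU(15)' for a stacked model."""
    return " + ".join(f"{layer}({units})" for _ in range(depth))


def search_space():
    """Yield one configuration per combination of the grid dimensions."""
    for opt, sch, layer, units, depth in product(
            OPTIMIZERS, LR_SCHEDULES, LAYER_TYPES, UNITS, DEPTHS):
        yield {"optimizer": opt, "lr_schedule": sch,
               "arch": arch_label(layer, units, depth)}


configs = list(search_space())
print(len(configs))  # 3 * 1 * 3 * 4 * 3 = 108 combinations
```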

3.2.1. Threats to Validity

- L1 Infinite search space: Several factors limit the RNN-based model training process.

- L2 Obtained results: This study is not a benchmarking of various models.

- L3 Single power consumption dataset: This study uses only data from a single smart building.

- L4 Single expert for model training: Although the search space has been discussed collaboratively, a single expert conducted the experimental designs of RNN-based models.

3.3. The Results of the Hybrid Intelligent Control

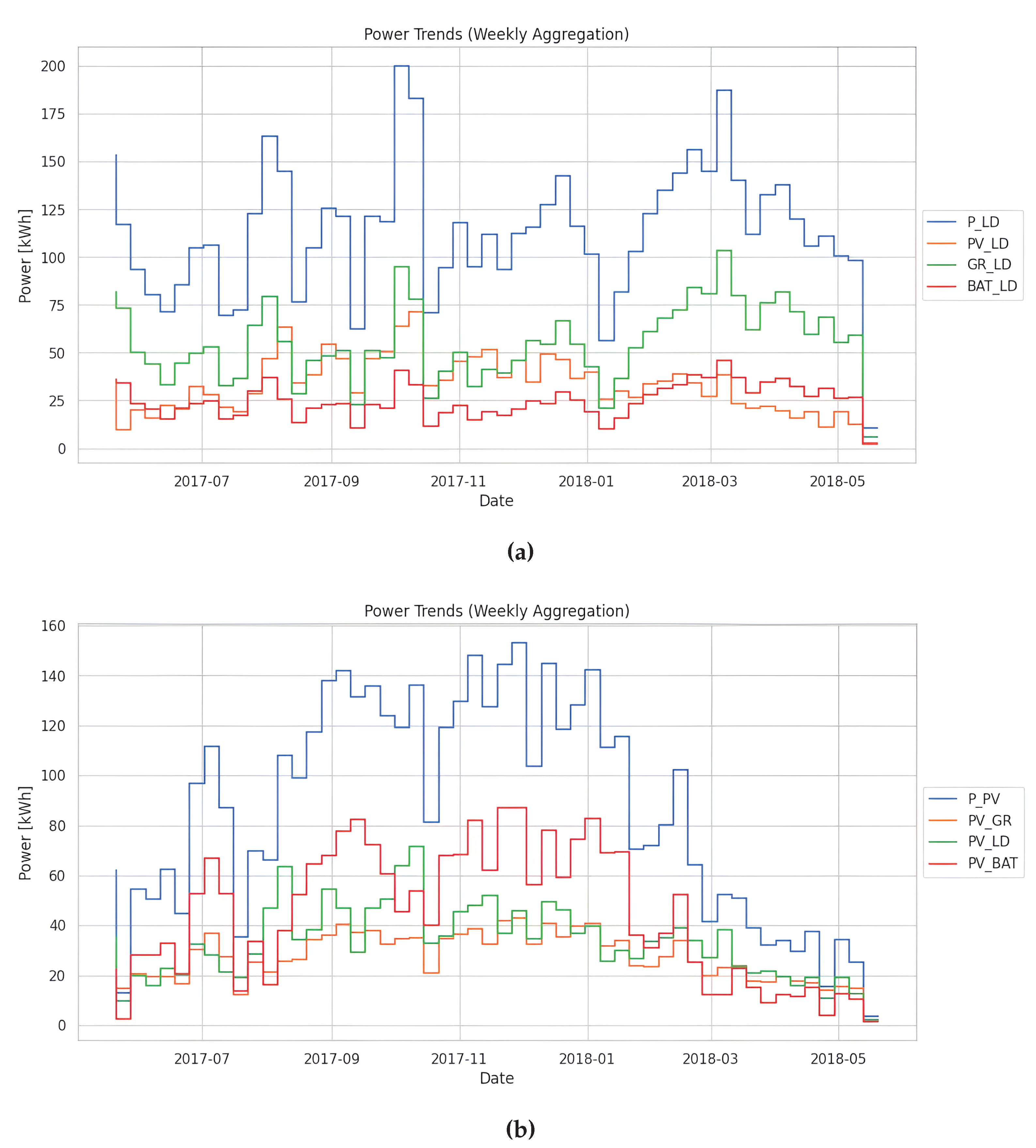

- The weekly variations in power attributes reveal distinct patterns over time. By integrating rule-based control strategies, such as scheduling power generation and consumption based on predicted demand, the system optimizes energy utilization while minimizing wastage. For example, on June 4, 2017, and exhibited lower values than in previous weeks, indicating potential energy savings through load shifting or demand response mechanisms (see Figure 6a).

- Deep learning techniques enhance the system’s predictive capabilities, enabling accurate forecasting of power generation and consumption patterns. Through RNNs or LSTM models, the system can adapt to dynamic changes in energy demand and supply, optimizing decision-making processes in real time. For instance, as shown in Figure 6b, on August 13, 2017, the system accurately predicted an increase in power consumption, allowing proactive adjustments to grid interactions and energy storage. Deep learning models use features like and to estimate power generation and consumption trends, resulting in more accurate decision-making. On June 4, 2017, the incorporation of data allowed the system to predict increasing PV generation and proactively modify energy distribution and storage.

- Integrating battery systems, directed by rule-based control and informed by deep learning predictions, is critical for optimising energy storage and distribution. Figure 6 shows that the system can intelligently manage battery charging and discharging cycles by considering and data in conjunction with other variables, such as and . The system decreases grid dependency and peak load demand by strategically charging and discharging batteries in response to predicted demand and generation. On July 30, 2017, data showed effective use of battery capacity to balance changes in and .

- The combination of basic rule-based control and deep learning provides synergistic benefits for energy management. Rule-based algorithms give deterministic guidance for system operation, while deep learning models improve adaptability and responsiveness to changing environmental conditions. By combining the benefits of both techniques, the system improves energy efficiency, cost savings, and environmental sustainability.

- Our proposed solution is scalable and adaptable to various energy conditions and building environments. The system can accommodate changing energy demands, renewable energy sources, and grid interactions, whether deployed in a residential, commercial, or industrial scenario. Furthermore, continual learning and refinement of the deep learning models support robustness and resilience to changing energy conditions.
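The interplay described above, where a learned forecast informs deterministic rules, can be sketched in a few lines. In the example below a moving average stands in for the trained recurrent model, and the rule set, thresholds, and action names are hypothetical. It illustrates only the general pattern of forecast-then-rule, not the controller used in this study.

```python
def forecast_next(history, window=3):
    """Stand-in for a trained recurrent model: mean of the last `window` values."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def hybrid_decision(pv_hist, load_hist, soc, soc_min=0.2, soc_max=0.9):
    """Pick a battery or grid action from the forecast net power and the SoC."""
    net_kw = forecast_next(pv_hist) - forecast_next(load_hist)
    if net_kw > 0 and soc < soc_max:
        return "charge_from_pv"      # expected surplus and room in the battery
    if net_kw > 0:
        return "export_to_grid"      # expected surplus but battery is full
    if net_kw < 0 and soc > soc_min:
        return "discharge_to_load"   # expected deficit and usable stored energy
    if net_kw < 0:
        return "import_from_grid"    # expected deficit and battery depleted
    return "idle"
```

Swapping `forecast_next` for a trained GRU or LSTM predictor would leave the rule layer unchanged, which is the main appeal of keeping the two parts separate.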

4. Conclusions

- The choice of optimizer and learning rate scheduler has a significant impact on model performance, with the constant learning rate scheduler consistently outperforming other schedules.

- Shallow RNN architectures with relatively few hidden states yield better results than deeper architectures.

- No single recurrent layer type (e.g., Simple RNN, LSTM, GRU) emerges as superior, suggesting that the choice of architecture should be tailored to the specific characteristics of the dataset and modelling task.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BAT | Battery |
| CS | Cuckoo Search |
| EL | Electrolyzer |
| EMS | Energy Management System |
| ESS | Energy Storage System |
| FC | Fuel Cell |
| FT | Fuel Tank |
| GR | Grid |
| GRU | Gated Recurrent Unit |
| GOA | Grasshopper Optimization Algorithm |
| HRES | Hybrid Renewable Energy System |
| LD | Load |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MG | Microgrid |
| MSE | Mean Squared Error |
| MPC | Model Predictive Control |
| MS | Master-Slave |
| PD | Primal-Dual |
| PSO | Particle Swarm Optimization |
| PV | Photovoltaic |
| RES | Renewable Energy Sources |
| RNN | Recurrent Neural Network |
| S-MPC | Switched Model Predictive Control |
| sRNN | Simple Recurrent Neural Network |
| TLBO | Teaching-Learning-Based Optimization |
| WT | Water Tank |

| Symbol | Description |
|---|---|
| | Power flow from the BAT to the LD |
| | The maximum power flow from the BAT to the LD |
| | Power flow from the BAT to the EL |
| C | Battery capacity |
| | Internal memory cell at time step k |
| | Power flow from the EL to the FT |
| | Forget gate at time step k |
| | Power flow from the FC to the BAT |
| | Power flow from the FC to the WT |
| | Power flow from the FT to the FC |
| | Power flow from the GR to the LD |
| | The maximum power flow from the GR to the LD |
| | Hidden state at time step k |
| | Cost function |
| | Control horizon |
| | The nominal capacity of the ESS |
| | Load demand |
| | Power generated from the PV |
| | The power obtained from the upstream network |
| | The network operator’s set boundary |
| | Power flow from the PV to the BAT |
| | The maximum power flow from the PV to the BAT |
| | Power flow from the PV to the GR |
| | The maximum power flow from the PV to the GR |
| | Power flow from the PV to the LD |
| | The maximum power flow from the PV to the LD |
| | State of charge |
| | The state of charge of the battery |
| | The minimum state of charge of the battery |
| | The maximum state of charge of the battery |
| | Power flow from the WT to the EL |
| | State vector at time step k |
| | Output vector at time step k |
| | Input vector at time step k |
| | Charging efficiency of the battery |
| | Discharging efficiency of the battery |


| 1 | Github Link: REDACTED UNTIL PUBLISH |

| Data Format | Column |

| Accumulated | , |

| Main elements | , , , , |

| Additional elements | , , , , , |

| Optimizer | LR Scheduler | Batch Size | Architecture | Test R² | Test MSE | Test MAE |
|---|---|---|---|---|---|---|
| adam | constant | 7 | GRU(50) | 0.999809 | 0.000002 | 0.000831 |
| adam | constant | 7 | GRU(15) | 0.999780 | 0.000003 | 0.001037 |
| adam | constant | 7 | LSTM(50) | 0.999731 | 0.000003 | 0.000798 |
| adam | constant | 7 | sRNN(50) | 0.999468 | 0.000006 | 0.001499 |
| rmsprop | constant | 7 | GRU(50) | 0.999466 | 0.000055 | 0.001366 |
| rmsprop | constant | 7 | sRNN(50) | 0.999457 | 0.000005 | 0.001246 |
| rmsprop | constant | 7 | GRU(15) | 0.999223 | 0.000012 | 0.001820 |
| adam | constant | 7 | LSTM(15) | 0.999041 | 0.000010 | 0.001433 |
| rmsprop | constant | 7 | GRU(15) + GRU(15) | 0.998331 | 0.000022 | 0.002302 |
| rmsprop | constant | 7 | LSTM(10) | 0.997685 | 0.000026 | 0.002008 |
| rmsprop | constant | 7 | GRU(50) + GRU(50) + GRU(50) | 0.997464 | 0.000034 | 0.003154 |
| rmsprop | constant | 7 | LSTM(50) + LSTM(50) + LSTM(50) | 0.993648 | 0.000071 | 0.002782 |
| adam | constant | 7 | LSTM(15) + LSTM(15) + LSTM(15) | 0.989356 | 0.000117 | 0.002773 |
| rmsprop | constant | 7 | LSTM(15) + LSTM(15) + LSTM(15) | 0.983175 | 0.000175 | 0.004793 |
| adam | constant | 7 | LSTM(10) + LSTM(10) | 0.982457 | 0.000190 | 0.002483 |
| rmsprop | constant | 7 | LSTM(5) + LSTM(5) | 0.975035 | 0.000278 | 0.005464 |
| sgd | constant | 7 | LSTM(10) | 0.833051 | 0.001675 | 0.019741 |
| sgd | constant | 7 | LSTM(5) | 0.824882 | 0.001812 | 0.017543 |
| adam | constant | 7 | sRNN(5) | 0.797054 | 0.002377 | 0.017024 |
| rmsprop | constant | 7 | GRU(5) | 0.780668 | 0.002561 | 0.007525 |
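For readers less familiar with the three test metrics in the table, the sketch below computes them under their standard definitions (MSE as the mean squared residual, MAE as the mean absolute residual, and R² as one minus the ratio of residual to total sum of squares). It is assumed, not confirmed by the text, that the reported values follow these definitions; the sample values are made up.

```python
def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up targets and predictions used only to exercise the functions.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(mse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))
```

Because MSE and MAE depend on the scale of the target, values such as those in the table are only comparable across models evaluated on the same normalised data.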

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).