1. Introduction

Wind energy is an environmentally friendly and economically viable form of renewable energy. According to the Global Wind Energy Council (GWEC) Global Wind Report 2023, annual global onshore wind installations are projected to exceed 100 GW for the first time in 2024 [1]. However, wind power data exhibit significant randomness and non-stationarity, which substantially affects the stable operation of power systems. Accurate wind power prediction is therefore crucial [2,3,4].

Current wind power forecasting methods fall mainly into three categories: physical models, statistical models, and artificial intelligence models [5]. Physical models incorporate geographical information and are essentially based on Numerical Weather Prediction (NWP) [6] and high-precision wind farm simulation. This approach has been widely applied in countries such as Spain, Denmark, and Germany; examples include the SOWIE model developed by Eurowind in Germany [7] and an NWP-based wind sequence correction algorithm proposed by Wang et al. [8]. These methods use large amounts of data to produce accurate and reliable wind power predictions. However, because of the large data volume, physical models are computationally slow and inefficient, and harsh wind farm conditions make reliable data collection difficult. Statistical methods, by contrast, do not consider external conditions such as geography or electrical factors; they exploit the relationships within historical wind power data to improve prediction efficiency [9]. Classical models such as the Moving Average (MA) [10] and the Autoregressive Integrated Moving Average (ARIMA) [11] model linear relationships in the data, so their prediction accuracy is low when the data contain complex nonlinear patterns.

Compared with these approaches, artificial intelligence models have shown increasingly strong performance in wind power prediction. Methods such as Support Vector Regression (SVR) [12], Extreme Learning Machine (ELM) [13], and Gated Recurrent Unit (GRU) [14] have achieved notable results in wind power prediction research. However, because individual models commonly produce considerable prediction errors, hybrid prediction models have been widely adopted in recent years. Current hybrid models mainly involve three aspects: data preprocessing, parameter tuning with optimization algorithms, and prediction with single or combined models. Zhang et al. used the Wavelet Transform (WT) to denoise the data and an Improved Atomic Search Optimization (IASO) algorithm to optimize the parameters of a Regularized Extreme Learning Machine (RELM) [15]; ablation experiments confirmed the accuracy of this model. Wang et al. optimized the input weights of ELM using genetic algorithms [16], whereas Zhai, Ma, and Tan used the Artificial Fish Swarm Algorithm and the Salp Swarm Algorithm to optimize the initial input weights and thresholds of ELM [17,18]. The results indicate that these models achieve high prediction accuracy. In recent years, deep learning models such as Long Short-Term Memory (LSTM) [19,20], Temporal Convolutional Network (TCN) [21], and Bidirectional Gated Recurrent Unit (BiGRU) [22] have been adopted in wind power prediction, and these models and their variants have become principal tools in the field. W. Wang et al. proposed a prediction method that fuses TCN with the Light Gradient Boosting Machine (LightGBM) [23]. Chi et al. integrated an attention mechanism into a BiGRU-TCN hybrid model and used wavelet-denoised (WT) raw data for prediction [24]; their experimental results confirm the model's strong predictive capability. Researchers currently tend to favor hybrid models built on intelligent optimization algorithms. Ding et al. proposed a wind power prediction model that optimizes ELM with the Whale Optimization Algorithm (WOA) [25]. Ye et al. preprocessed raw data with clustering algorithms and optimized ELM parameters with a Genetic Algorithm (GA) for short-term wind speed prediction [26]. Zhang et al. used the sparrow search algorithm (SSA) to optimize a TCN-BiGRU model and applied Variational Mode Decomposition (VMD) to decompose the data, thereby mitigating the non-stationarity of wind power data [27]. Ablation experiments showed that this model achieved higher prediction accuracy than the variant without SSA-based parameter optimization. These studies indicate that hybrid models with algorithmic parameter optimization can further improve prediction accuracy.

Because wind power data are inherently random and non-stationary, data preprocessing is needed to reduce prediction errors effectively. To this end, researchers have proposed models based on signal decomposition. This approach uses a decomposition algorithm to split the original wind power data into several more regular sub-modes, each of which is then predicted independently. Empirical evidence shows that this substantially reduces prediction errors. For instance, Gao et al. [28] combined Empirical Mode Decomposition (EMD) with GRU for prediction. However, EMD suffers from notable problems such as mode mixing when applied to intermittent signals. In response, researchers proposed adding white noise, uniformly distributed across the time-frequency space, to EMD, yielding Ensemble Empirical Mode Decomposition (EEMD) and Complementary Ensemble Empirical Mode Decomposition (CEEMD). These techniques have been widely adopted in forecasting. For instance, Wang et al. [29] used EEMD to decompose photovoltaic generation data into high-, mid-, and low-frequency components, predicted each component individually, and summed the predictions to obtain the final forecast. Torres et al. [30] argued that with an insufficient number of ensemble trials, the added white noise is not fully cancelled and spurious modes can appear. They therefore proposed Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (CEEMDAN), which effectively reduces the impact of residual white noise. To further address residual noise and spurious modes, Colominas et al. [31] introduced Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (ICEEMDAN), which reconstructs components more accurately and is better suited to nonlinear signal analysis. For instance, Mohaned L B et al. [32] proposed a tool wear detection method based on spectral decomposition and ICEEMDAN mode energy, and their experiments showed improved detection precision with this decomposition method.

In summary, several limitations persist in wind power prediction. First, prevalent approaches apply a single-scale prediction model to all decomposed sub-modes, overlooking the distinct characteristics of sub-modes at different frequencies. Second, parameter tuning of current artificial intelligence prediction methods is difficult and incurs high trial-and-error costs. Third, some conventional optimization algorithms have insufficient search capability and slow convergence. This paper therefore combines data decomposition with multiple models and the Dung Beetle Optimizer (DBO) [33] to address these shortcomings in sub-mode prediction, parameter optimization, and single-scale modeling. Specifically, ICEEMDAN decomposes the original wind power sequence into multiple sub-modes. All sub-sequences are then reconstructed into several new components using Permutation Entropy (PE), and the high-, mid-, and low-frequency components are determined by their PE values. Next, LSSVR, RELM, and MHA-BiGRU models are built to predict the high-, mid-, and low-frequency components, respectively, with the MHA-BiGRU model optimized by the DBO algorithm. Finally, the predictions for the three frequency bands are summed to obtain the final prediction.

The main contributions of this paper are as follows:

(1) A multiscale hybrid wind power prediction model combining ICEEMDAN, PE, LSSVR, RELM, and MHA-BiGRU is proposed. ICEEMDAN is used to decompose the original wind power sequence; compared with EMD, EEMD, and CEEMDAN, it more effectively suppresses mode mixing and residual noise and therefore better handles the nonlinearity and non-stationarity of wind power sequences.

(2) PE, which is robust to noise and computationally cheap, is used to calculate an entropy value reflecting the complexity of each sub-mode, and the sub-modes are regrouped according to these entropy values into high-, medium-, and low-frequency components. This further reduces the non-stationarity of wind power data and improves the quality of the model inputs.

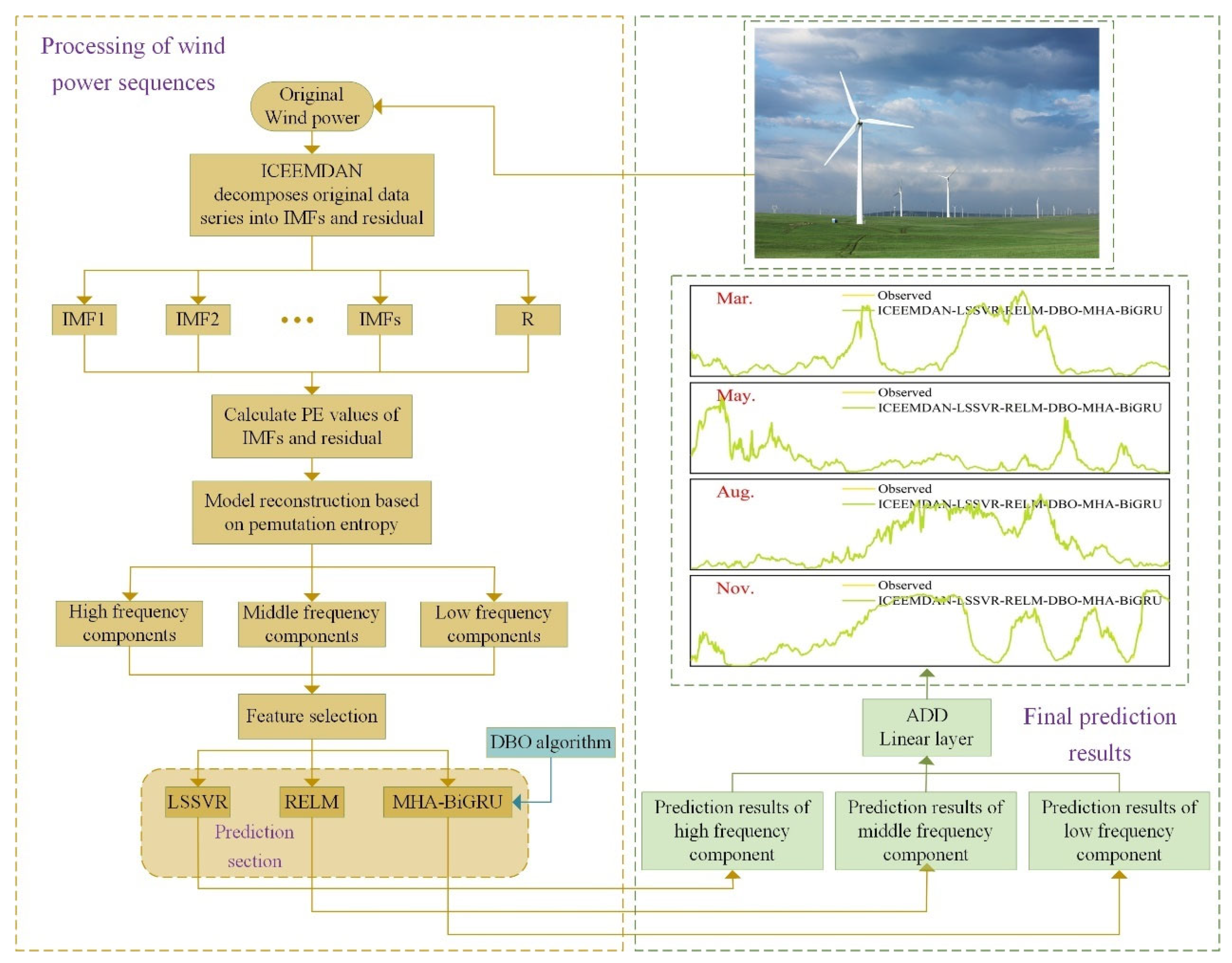

Figure 1. Flowchart of the proposed ICEEMDAN-LSSVR-RELM-DBO-MHA-BiGRU model.


(3) Exploiting the strengths of different models at different frequencies, the high-, medium-, and low-frequency components obtained through decomposition are fed into the LSSVR, RELM, and MHA-BiGRU models, respectively. This achieves targeted multiscale hybrid prediction and overcomes the limited accuracy of a single model.

(4) The multi-head attention mechanism captures strong correlations within the data; combined with BiGRU, it captures the information in the sequence more comprehensively and accurately, alleviates the information overload that arises because BiGRU cannot process time steps in parallel, and thus improves prediction accuracy. Because manual tuning of the many parameters of the MHA-BiGRU model used for the low-frequency sequence is limited and largely blind, the DBO algorithm is used to optimize the learning rate, the number of BiGRU neurons, the number of attention heads, the number of filters, and the regularization parameter, further improving prediction accuracy.

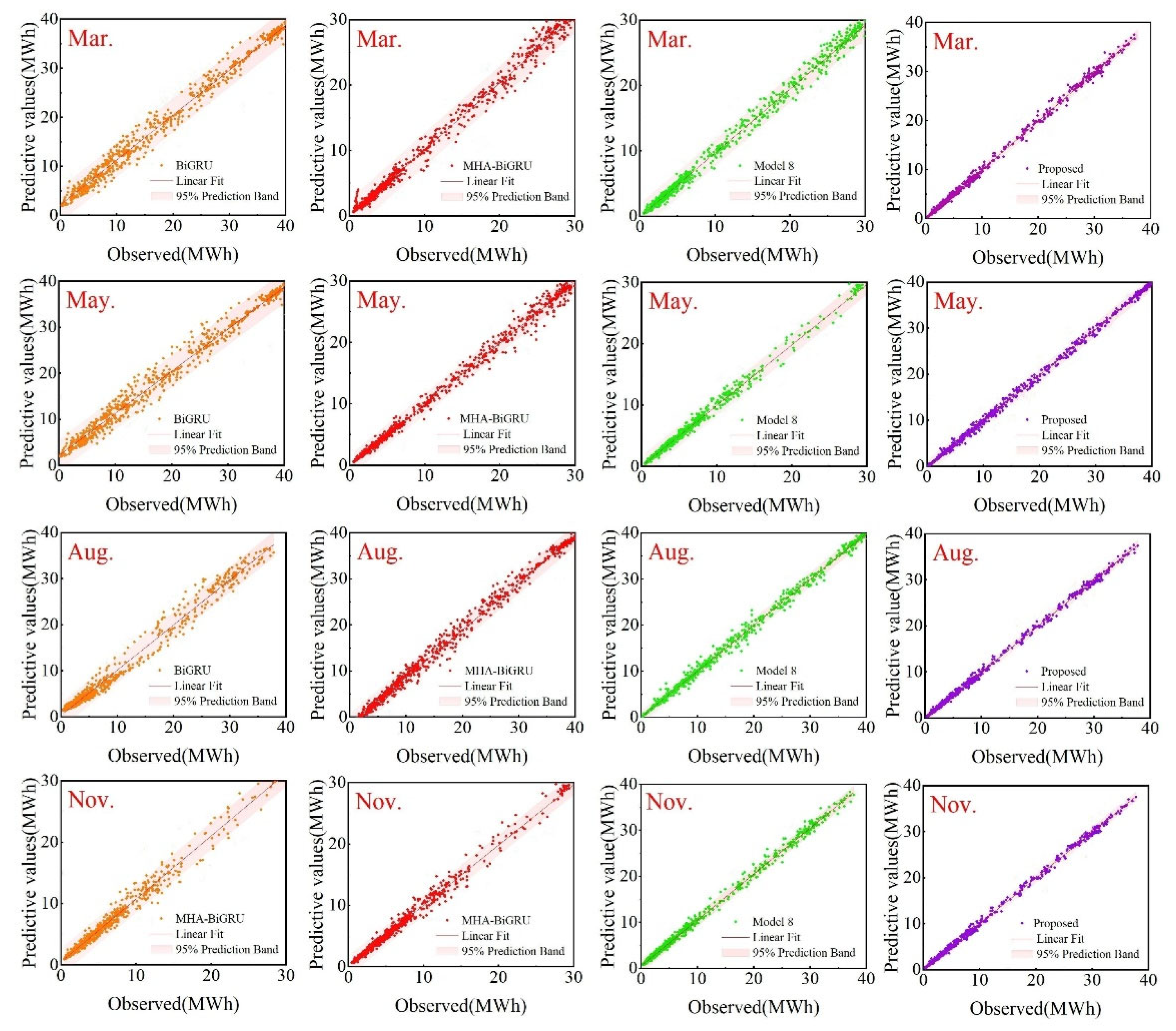

(5) Multiple groups of ablation experiments are conducted to verify the superiority of the proposed model in terms of evaluation metrics, error analysis, and linear fitting. The model is compared with 8 benchmark models and validated on data sets from 4 different seasons. The results show that the proposed model improves wind power prediction accuracy.

2. Methods

2.1. Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise

Empirical mode decomposition (EMD), proposed by Huang et al. in 1998, is a signal processing method suited to nonlinear and non-stationary processes. However, the modes it produces often suffer from significant mode mixing. Wu et al. subsequently proposed Ensemble Empirical Mode Decomposition (EEMD) to address this issue. EEMD adds zero-mean white noise to the signal; because the noise is distributed uniformly across the entire time-frequency space, it masks the signal's inherent noise and alleviates the mode mixing of EMD. Nevertheless, when the ensemble size (the number of noise realizations averaged) in EEMD is insufficient, the residual white noise leads to reconstruction errors.

To overcome the limitations of EMD and EEMD, Torres et al. proposed Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (CEEMDAN). Instead of adding raw white noise directly, CEEMDAN adds, at each decomposition stage, the IMF components obtained by applying EMD to white noise (auxiliary noise), thereby reducing the influence of residual white noise.

However, CEEMDAN still cannot fully eliminate residual noise and spurious modes. Colominas et al. therefore improved CEEMDAN and introduced Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (ICEEMDAN). The core of this method is to use the k-th IMF of EMD-decomposed white noise as the auxiliary noise at the k-th stage and to estimate each residue as the average local mean of the noise-added signals. Through repeated noise addition and decomposition, ICEEMDAN copes better with the randomness and non-stationarity of the data, improves the stability and reliability of the decomposition, and reduces the residual noise in the reconstruction. The computational process is as follows:

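1. Based on the original signal $s$, construct $I$ new sequences $s^{(i)} = s + \beta_0 E_1(w^{(i)})$ by adding $I$ realizations of white noise $w^{(i)}$ $(i = 1, \dots, I)$ to $s$, and average their local means to obtain the first residue $r_1$:

$$ r_1 = \left\langle M\left(s^{(i)}\right) \right\rangle = \frac{1}{I} \sum_{i=1}^{I} M\left(s + \beta_0 E_1\left(w^{(i)}\right)\right) $$

where $E_k(\cdot)$ represents the operator that returns the $k$-th mode component generated by EMD decomposition, $M(\cdot)$ represents the local mean of a signal computed by the EMD algorithm, and $\langle \cdot \rangle$ represents the average over all $I$ realizations.

2. Calculate the first mode component $d_1 = s - r_1$, then iterate for $k = 2, 3, \dots$ to obtain the $k$-th residue $r_k$ and mode component $d_k$:

$$ r_k = \left\langle M\left(r_{k-1} + \beta_{k-1} E_k\left(w^{(i)}\right)\right) \right\rangle, \qquad d_k = r_{k-1} - r_k $$

where $\beta_k$ can be expressed as:

$$ \beta_0 = \varepsilon_0 \frac{\operatorname{std}(s)}{\operatorname{std}\left(E_1\left(w^{(i)}\right)\right)}, \qquad \beta_k = \varepsilon_0 \operatorname{std}(r_k), \quad k \ge 1 $$

and $\varepsilon_0$ is a user-set noise amplitude coefficient.

3. Repeat step 2 until the calculation is complete, obtaining all mode components and the final residue.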
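The recursion above can be illustrated with a minimal, dependency-free Python sketch. It is not a faithful EMD implementation: the local-mean operator $M(\cdot)$ uses linear rather than cubic-spline envelopes, $E_k(\cdot)$ uses a fixed number of sifting passes instead of a stopping criterion, and the helper names (`local_mean`, `emd_mode`, `iceemdan`) and default values (`trials`, `eps0`, `sifts`) are illustrative assumptions. What it does reproduce is the residue bookkeeping: because each mode is a difference of consecutive residues, the modes plus the final residue always sum back to the input.

```python
import random
import statistics


def local_mean(x):
    """M(x): mean of upper/lower envelopes. ASSUMPTION: envelopes are linear
    interpolations through local extrema plus endpoints (real EMD uses cubic splines)."""
    n = len(x)
    maxima = [0] + [i for i in range(1, n - 1) if x[i - 1] < x[i] >= x[i + 1]] + [n - 1]
    minima = [0] + [i for i in range(1, n - 1) if x[i - 1] > x[i] <= x[i + 1]] + [n - 1]

    def envelope(knots):
        env, j = [], 0
        for t in range(n):
            while j < len(knots) - 2 and knots[j + 1] < t:
                j += 1
            a, b = knots[j], knots[j + 1]
            w = 0.0 if b == a else (t - a) / (b - a)
            env.append(x[a] + w * (x[b] - x[a]))
        return env

    return [(u + l) / 2 for u, l in zip(envelope(maxima), envelope(minima))]


def emd_mode(x, k, sifts=8):
    """E_k(x): k-th mode of x, using a fixed number of sifting passes per mode."""
    residue = list(x)
    for _ in range(k):
        h = list(residue)
        for _ in range(sifts):
            h = [a - m for a, m in zip(h, local_mean(h))]
        residue = [a - b for a, b in zip(residue, h)]
    return h


def iceemdan(s, num_modes=3, trials=10, eps0=0.2, seed=0):
    rng = random.Random(seed)
    n = len(s)
    noises = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(trials)]
    noise_modes = [[emd_mode(w, k) for k in range(1, num_modes + 1)] for w in noises]

    def avg_local_mean(base, k, betas):
        # <M(base + beta_i * E_k(w^(i)))>, averaged over the noise realizations
        acc = [0.0] * n
        for modes, beta in zip(noise_modes, betas):
            m = local_mean([b + beta * e for b, e in zip(base, modes[k - 1])])
            acc = [a + v for a, v in zip(acc, m)]
        return [a / trials for a in acc]

    sd_s = statistics.pstdev(s)
    beta0 = [eps0 * sd_s / statistics.pstdev(m[0]) for m in noise_modes]
    r = avg_local_mean(s, 1, beta0)                      # r_1
    modes = [[a - b for a, b in zip(s, r)]]              # d_1 = s - r_1
    for k in range(2, num_modes + 1):
        beta = eps0 * statistics.pstdev(r)               # beta_{k-1} = eps0 * std(r_{k-1})
        r_next = avg_local_mean(r, k, [beta] * trials)   # r_k
        modes.append([a - b for a, b in zip(r, r_next)]) # d_k = r_{k-1} - r_k
        r = r_next
    return modes, r
```

For real data, a maintained EMD library would replace the simplified operators; the exact-reconstruction property shown here does not depend on how $M(\cdot)$ is computed.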

2.2. Permutation Entropy

The permutation entropy (PE) algorithm, introduced by Bandt and Pompe, characterizes the complexity of a time series. It assesses the irregularity of a series by examining the ordinal (permutation) patterns of its subsequences: a higher entropy value indicates a more complex series, and a lower value a more regular one. The algorithm is computed as follows:

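1. Consider a time series $X = \{x(1), x(2), \dots, x(N)\}$.

2. Perform phase space reconstruction on the time series with embedding dimension $m$ and time delay $\tau$, giving the reconstruction matrix $Z$:

$$ Z = \begin{bmatrix} Z(1) \\ Z(2) \\ \vdots \\ Z(q) \end{bmatrix}, \qquad Z(j) = \{x(j),\, x(j+\tau),\, \dots,\, x(j+(m-1)\tau)\} $$

where $j = 1, 2, \dots, q$ and $q = N - (m-1)\tau$.

3. Sort the elements of each row $Z(j)$ in ascending order and record the order (permutation pattern) of its elements. Calculate the probability of occurrence of each distinct pattern to obtain $P_1, P_2, \dots, P_k$.

4. The permutation entropy of time series $X$ is defined as:

$$ H_p(m) = -\sum_{j=1}^{k} P_j \ln P_j $$

where $P_j$ represents the probability of the $j$-th permutation pattern of element size relationships in the reconstruction matrix $Z$, $m$ is the embedding dimension, $k \le m!$ is the number of distinct permutation patterns, and $q$ is the total number of rows (reconstructed vectors) in $Z$.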
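A short Python sketch of steps 1 to 4 is given below, assuming that ties within a window are ordered by position (one common convention). The optional `normalize` flag, which divides by $\ln(m!)$ to map the entropy into $[0, 1]$, is an addition not described in the text; the function name and the defaults `m=3`, `tau=1` are illustrative, since the values used in this study are not stated here.

```python
import math
from collections import Counter


def permutation_entropy(x, m=3, tau=1, normalize=False):
    """Permutation entropy H_p(m) of sequence x with embedding dimension m and delay tau."""
    q = len(x) - (m - 1) * tau                         # number of rows Z(j)
    counts = Counter()
    for j in range(q):
        row = [x[j + i * tau] for i in range(m)]       # Z(j) = {x(j), x(j+tau), ...}
        # Ordinal pattern: positions sorted by value (stable sort orders ties by position)
        counts[tuple(sorted(range(m), key=lambda i: row[i]))] += 1
    h = -sum((c / q) * math.log(c / q) for c in counts.values())
    return h / math.log(math.factorial(m)) if normalize else h
```

For example, a strictly increasing series has a single ordinal pattern and therefore zero entropy, while the alternating series 0, 1, 0, 1, ... with $m = 3$ produces two equally likely patterns and an entropy of $\ln 2$.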

2.3. Bidirectional Gated Recurrent Unit

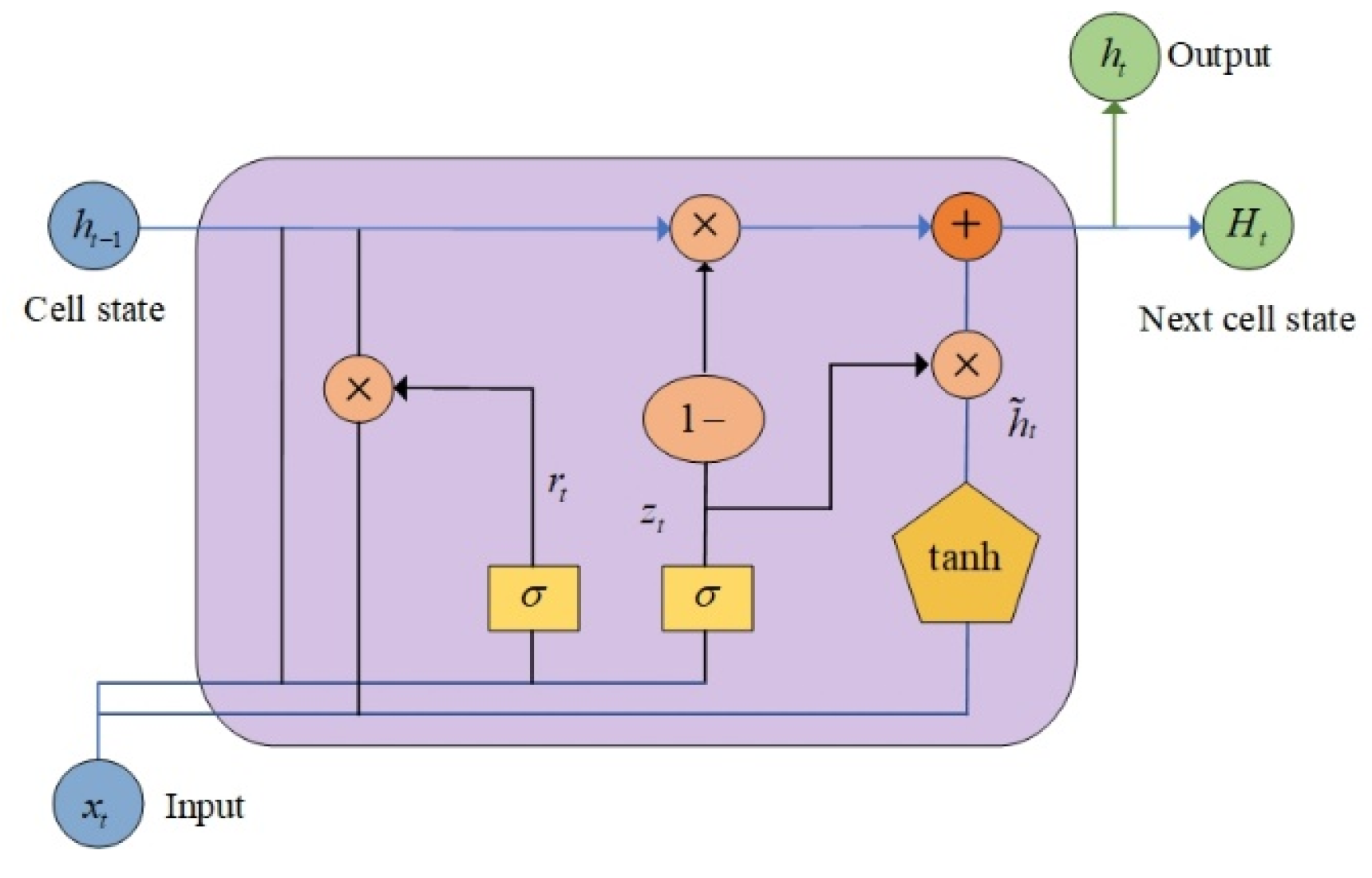

The Gated Recurrent Unit (GRU) is a simplified variant of the Long Short-Term Memory (LSTM) network within the family of recurrent neural networks. It is designed to capture long-term dependencies in sequential data with fewer parameters than LSTM, which lowers computational cost. Its core idea is to merge LSTM's forget and input gates into a single update gate; by controlling information flow and state updates with fewer gates, it reduces the parameter count and computational overhead. The model architecture is shown in Figure 2, and its computations are as follows:

$$ z_t = \sigma\left(W_z \cdot [h_{t-1}, x_t]\right) $$

$$ r_t = \sigma\left(W_r \cdot [h_{t-1}, x_t]\right) $$

$$ \tilde{h}_t = \tanh\left(W \cdot [r_t \odot h_{t-1}, x_t]\right) $$

$$ h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t $$

where $z_t$ represents the update gate; $r_t$, $x_t$, $\tilde{h}_t$, and $h_t$ represent the reset gate, the input at the current time step, the candidate hidden state (new cell vector), and the hidden state vector, respectively; $W_z$, $W_r$, and $W$ represent the weight matrices; $\sigma$ is the sigmoid function; and $\odot$ denotes element-wise multiplication.

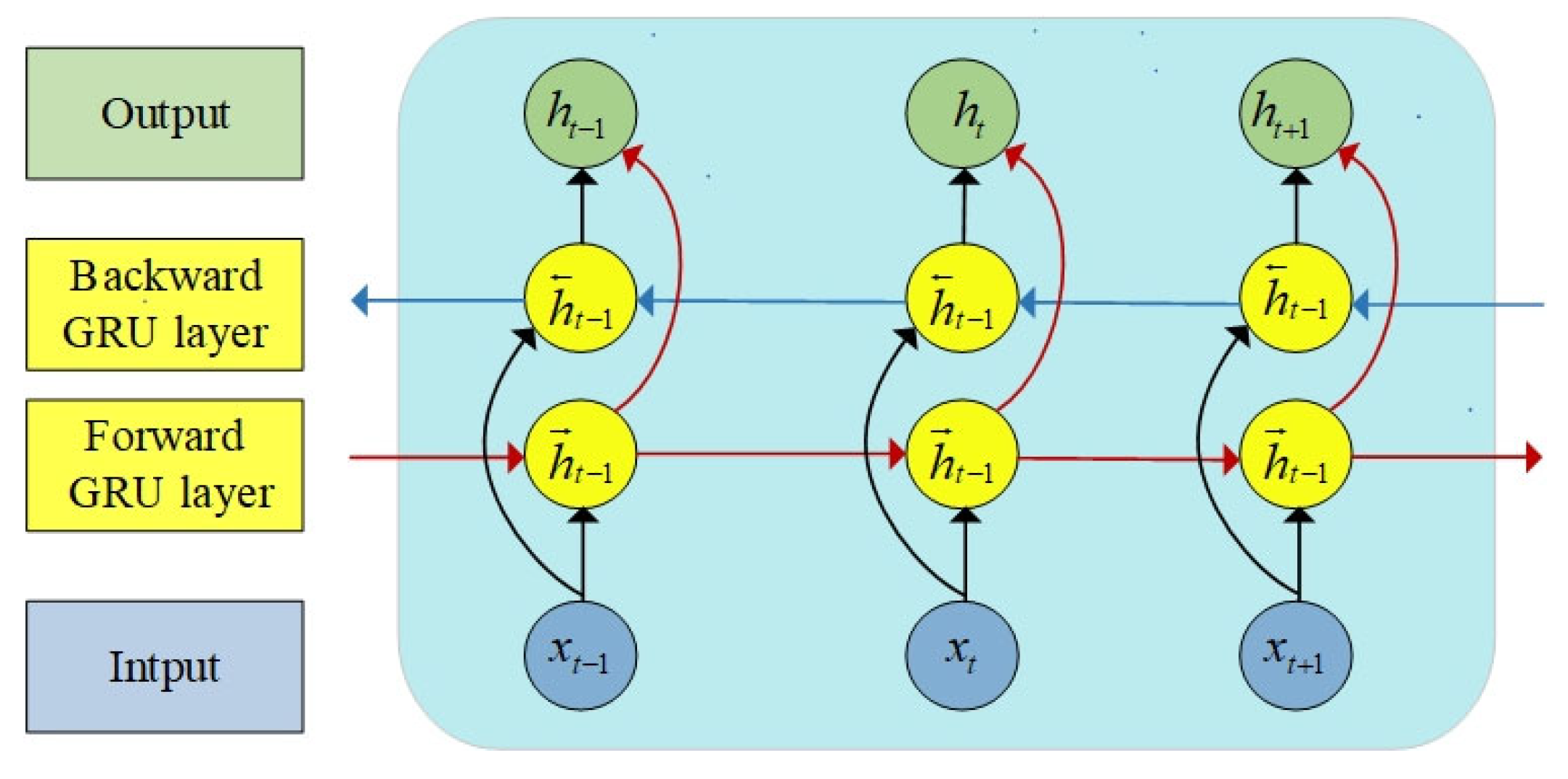

Because wind power data have strong temporal characteristics, information at both the previous time step ($t-1$) and the next time step ($t+1$) within the input window affects the prediction at time $t$ during model training. A unidirectional GRU therefore cannot fully exploit the information in wind power sequences. The Bidirectional Gated Recurrent Unit (BiGRU) addresses this limitation by processing the input sequence in both the forward and backward directions, which improves data utilization compared with GRU and enhances prediction accuracy. Composed of two GRUs running in opposite directions, BiGRU captures bidirectional dependencies in sequential data and can therefore adapt to more complex sequence patterns. The network structure is shown in Figure 3.
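The GRU equations and the bidirectional arrangement can be sketched in plain Python. This is an untrained illustration under stated assumptions: weights are random, bias terms are omitted to match the equations above, the hidden size is arbitrary, and `GRUCell` and `bigru` are hypothetical names, not the authors' implementation. The backward GRU reads the window in reverse, so its state at position $t$ summarizes steps $t$ through the end of the window, and the two states are concatenated at each step.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(w, v):
    return [sum(a * b for a, b in zip(row, v)) for row in w]


class GRUCell:
    """One GRU cell following z_t, r_t, h~_t, h_t above (no bias terms)."""

    def __init__(self, input_size, hidden_size, rng):
        cols = hidden_size + input_size              # acts on the concatenation [h_{t-1}, x_t]
        init = lambda: [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(hidden_size)]
        self.w_z, self.w_r, self.w = init(), init(), init()

    def step(self, h_prev, x_t):
        hx = h_prev + x_t                                    # [h_{t-1}, x_t]
        z = [sigmoid(v) for v in matvec(self.w_z, hx)]       # update gate
        r = [sigmoid(v) for v in matvec(self.w_r, hx)]       # reset gate
        rh = [ri * hi for ri, hi in zip(r, h_prev)] + x_t    # [r_t * h_{t-1}, x_t]
        h_tilde = [math.tanh(v) for v in matvec(self.w, rh)] # candidate state
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_tilde)]


def bigru(seq, hidden_size=4, seed=0):
    """Run forward and backward GRUs over seq; concatenate their states per time step."""
    rng = random.Random(seed)
    fwd = GRUCell(len(seq[0]), hidden_size, rng)
    bwd = GRUCell(len(seq[0]), hidden_size, rng)
    h, forward_states = [0.0] * hidden_size, []
    for x_t in seq:
        h = fwd.step(h, x_t)
        forward_states.append(h)
    h, backward_states = [0.0] * hidden_size, []
    for x_t in reversed(seq):
        h = bwd.step(h, x_t)
        backward_states.append(h)
    backward_states.reverse()                        # align backward states with time index
    return [f + b for f, b in zip(forward_states, backward_states)]
```

In practice a deep learning framework's bidirectional GRU layer would be used; the sketch only makes visible that the output at the first position already depends on the last input of the window through the backward pass.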

2.4. Multi-Head Attention

The Attention Mechanism (AM) is a computational method for allocating limited information-processing resources efficiently: it concentrates on the most relevant information and thereby mitigates information overload. In AM, the input information is represented by key vectors (Keys) and value vectors (Values), while the target information is represented by query vectors (Queries). The weight of each value vector is determined by the similarity between the query vector and the corresponding key vector, and the final attention value is computed as the weighted sum of the value vectors:

$$ \operatorname{Attention}(Q, K, V) = \sum_{i} W_i V_i, \qquad W_i = \operatorname{softmax}\big(\operatorname{sim}(Q, K_i)\big) $$

where $\operatorname{Attention}(Q, K, V)$ is the attention value, $Q$ represents the query vector, $K$ represents the key vector in the key-value pairs, $V$ represents the value vector in the key-value pairs, $W$ represents the weight corresponding to $V$, $\operatorname{sim}(\cdot)$ measures the query-key similarity, and $\operatorname{softmax}(\cdot)$ is the weight transformation function.

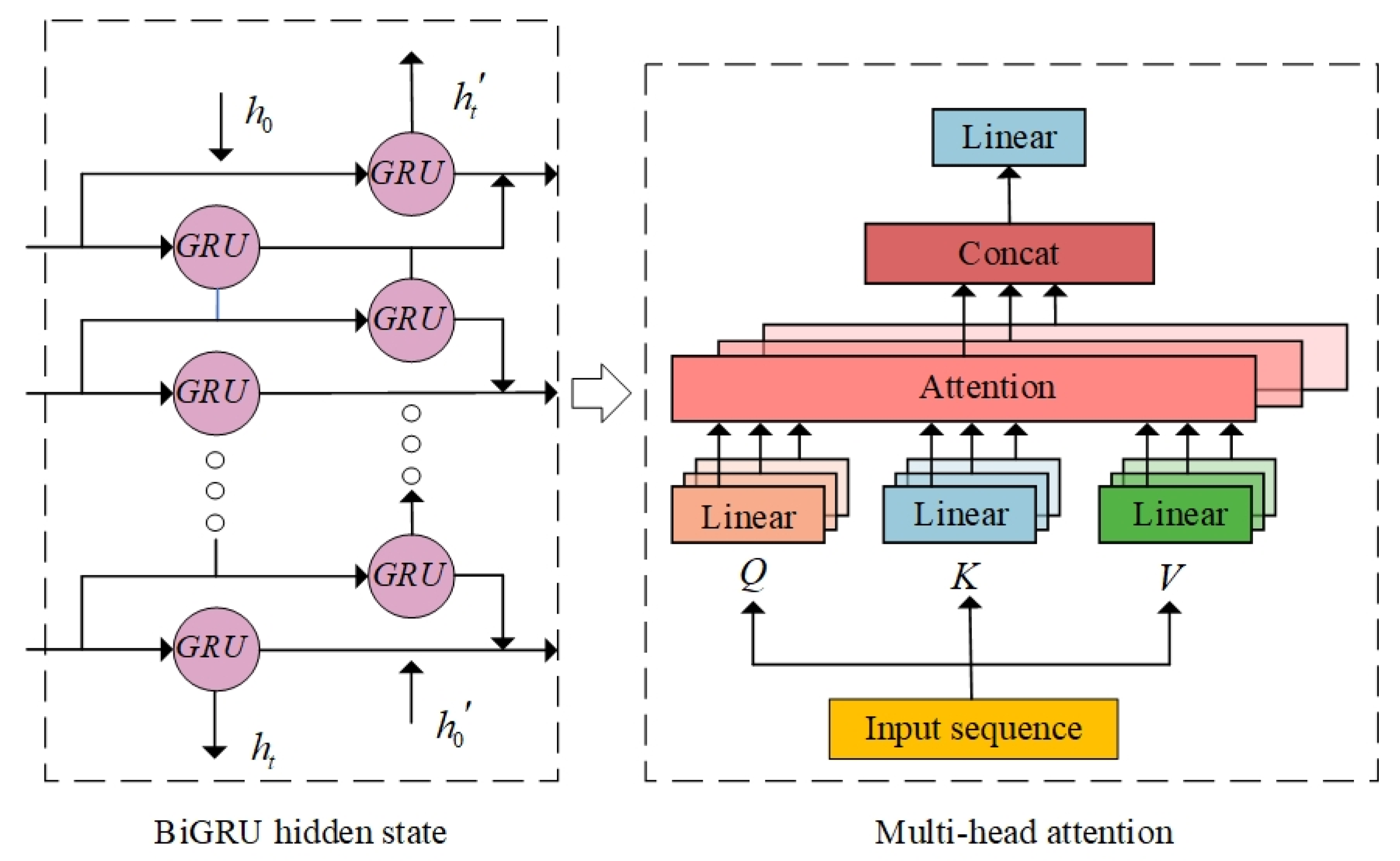

The Multi-head Attention mechanism originates from the Transformer [34] model. Its core principle is to map the query, key, and value vectors into multiple subspaces through distinct linear transformations and then calculate scaled dot-product attention in each subspace:

$$ \operatorname{Attention}(Q, K, V) = \operatorname{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V $$

where $d_k$ is the dimensionality of the keys; dividing by $\sqrt{d_k}$ keeps the dot products from growing so large that the softmax saturates and its gradients vanish.

Specifically, the vectors $Q$, $K$, and $V$ of dimensionality $d$ are projected into vectors of dimensionality $d/n$ using different weight matrices $W_i^Q$, $W_i^K$, and $W_i^V$. Here, $n$ denotes the number of parallel attention layers (heads), and each projected set of vectors is fed into its corresponding attention layer. Finally, the outputs of all heads are concatenated and fused by a linear layer.

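As shown in the Multi-head Attention section of Figure 4, our model uses the multi-head attention mechanism to capture features at diverse temporal scales in the time series when predicting wind power sequences. The computation is:

$$ \operatorname{MultiHead}(Q, K, V) = \operatorname{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_n)\, W^O $$

where $\mathrm{head}_i = \operatorname{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$, $W_i^Q \in \mathbb{R}^{d \times d/n}$, $W_i^K \in \mathbb{R}^{d \times d/n}$, $W_i^V \in \mathbb{R}^{d \times d/n}$, and $W^O \in \mathbb{R}^{d \times d}$.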
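The scaled dot-product and multi-head formulas can be checked with a small pure-Python sketch. Assumptions: self-attention with $Q = K = V = X$ (as when the BiGRU output sequence is fed to the attention layer), randomly initialized projection matrices in place of trained ones, and $d$ divisible by $n$. The function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    out = []
    for row in m:
        mx = max(row)                                  # subtract max for numerical stability
        e = [math.exp(v - mx) for v in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def multi_head_self_attention(x, n_heads, seed=0):
    """MultiHead(X, X, X) with random W_i^Q, W_i^K, W_i^V (d x d/n) and W^O (d x d)."""
    rng = random.Random(seed)
    d = len(x[0])
    d_head = d // n_heads                              # d/n per head
    rand = lambda r, c: [[rng.gauss(0, 1 / math.sqrt(r)) for _ in range(c)] for _ in range(r)]
    heads = []
    for _ in range(n_heads):
        wq, wk, wv = rand(d, d_head), rand(d, d_head), rand(d, d_head)
        heads.append(attention(matmul(x, wq), matmul(x, wk), matmul(x, wv)))
    concat = [sum((h[t] for h in heads), []) for t in range(len(x))]  # Concat(head_1..head_n)
    return matmul(concat, rand(n_heads * d_head, d))                  # times W^O
```

When all keys are identical, every value receives the same weight and the output is the plain average of the values, which is a quick sanity check of the softmax weighting.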

The multi-head attention architecture helps exploit the complexity of the input sequence and capture long-range dependencies, thereby enhancing prediction accuracy. In this study, the model is trained with Mean Squared Error (MSE) as the loss function and the Adam optimizer for parameter updates.

2.5. MHA-BiGRU

Although BiGRU performs well on wind power sequences, its recurrent computation must proceed step by step and cannot be parallelized, which leads to information overload and reduced computational efficiency on large datasets. To address this limitation, this study combines BiGRU with the Multi-head Attention mechanism (MHA), as shown in Figure 4. In this architecture, the hidden-state sequence output by the BiGRU layer serves as the input to the MHA network; it is projected into Q, K, and V, which are fed into each head to compute attention values. The outputs of all heads are then concatenated and weighted through a linear connection layer to form the output sequence.

2.6. DBO-MHA-BiGRU

The Dung Beetle Optimizer (DBO), proposed by Xue and Shen in 2022, is a swarm intelligence optimization algorithm. It solves optimization problems by simulating five behaviors of dung beetles: ball rolling, dancing, foraging, stealing, and reproduction. In this study, DBO is used to optimize the hyperparameters of the MHA-BiGRU model, including the learning rate, the number of BiGRU neurons, the number of attention heads, the filter count, and the regularization parameter, with the objective of minimizing the training loss:

$$ (h^{*}, f^{*}, l^{*}, \eta^{*}, \lambda^{*}) = \arg\min_{h,\, f,\, l,\, \eta,\, \lambda} \; \mathrm{train\_loss}(h, f, l, \eta, \lambda) $$

where $h$, $f$, $l$, $\eta$, and $\lambda$ represent the number of attention heads, the filter count, the number of MHA-BiGRU model layers, the learning rate, and the regularization parameter, respectively, and train_loss denotes the loss function during training.
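A simplified sketch of how DBO can search a continuous box is shown below. It follows the structure described above (ball rolling with occasional dancing, reproduction around the current best, foraging around the global best, and stealing) with a greedy update of each beetle's best position. The coefficients (0.3, 0.1, 0.5), the 0.9 rolling probability, and the population split (6/6/7/11 for 30 beetles) are assumptions based on commonly used defaults, not values stated in this paper, and `dbo_minimize` is a hypothetical helper.

```python
import math
import random


def dbo_minimize(f, lb, ub, pop=30, iters=200, seed=0):
    """Simplified Dung Beetle Optimizer sketch; minimizes f over the box [lb, ub]."""
    rng = random.Random(seed)
    clip = lambda x, lo, hi: [min(max(v, l), h) for v, l, h in zip(x, lo, hi)]

    def region(center, R):
        # Shrinking box [center*(1-R), center*(1+R)] intersected with [lb, ub]
        lo = [max(min(c * (1 - R), c * (1 + R)), l) for c, l in zip(center, lb)]
        hi = [min(max(c * (1 - R), c * (1 + R)), h) for c, h in zip(center, ub)]
        return lo, hi

    pbest = [[rng.uniform(l, h) for l, h in zip(lb, ub)] for _ in range(pop)]
    pfit = [f(p) for p in pbest]
    prev = [list(p) for p in pbest]                       # positions at t-1
    n_roll, n_brood, n_forage = pop // 5, pop // 5, round(pop * 7 / 30)
    g = min(range(pop), key=pfit.__getitem__)
    best, best_fit = list(pbest[g]), pfit[g]

    for t in range(iters):
        R = 1 - t / iters
        worst = pbest[max(range(pop), key=pfit.__getitem__)]
        local = pbest[min(range(pop), key=pfit.__getitem__)]  # best of current population
        new = []
        for i, p in enumerate(pbest):
            if i < n_roll:                                    # ball rolling / dancing
                if rng.random() < 0.9:
                    a = 1 if rng.random() > 0.1 else -1
                    cand = [pi + 0.3 * abs(pi - wi) + a * 0.1 * qi
                            for pi, wi, qi in zip(p, worst, prev[i])]
                else:
                    theta = rng.uniform(0, math.pi)
                    cand = [pi + math.tan(theta) * abs(pi - qi) for pi, qi in zip(p, prev[i])]
            elif i < n_roll + n_brood:                        # reproduction near local best
                lo, hi = region(local, R)
                cand = clip([c + rng.random() * (pi - l) + rng.random() * (pi - h)
                             for c, pi, l, h in zip(local, p, lo, hi)], lo, hi)
            elif i < n_roll + n_brood + n_forage:             # foraging near global best
                lo, hi = region(best, R)
                cand = [pi + rng.gauss(0, 1) * (pi - l) + rng.random() * (pi - h)
                        for pi, l, h in zip(p, lo, hi)]
            else:                                             # stealing from the global best
                cand = [b + 0.5 * rng.gauss(0, 1) * (abs(pi - c) + abs(pi - b))
                        for pi, c, b in zip(p, local, best)]
            new.append(clip(cand, lb, ub))

        prev = [list(p) for p in pbest]
        for i, cand in enumerate(new):                        # greedy personal-best update
            fc = f(cand)
            if fc < pfit[i]:
                pbest[i], pfit[i] = cand, fc
                if fc < best_fit:
                    best, best_fit = list(cand), fc
    return best, best_fit
```

To tune MHA-BiGRU, `f` would train the network for a candidate vector (rounding integer-valued entries such as the number of heads) and return train_loss; that objective is expensive and not shown. A cheap sanity check is the sphere function $f(v) = \sum_i v_i^2$, whose minimum is 0 at the origin.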

2.7. Least Squares Support Vector Regression

Least Squares Support Vector Regression (LSSVR), a least-squares formulation of the support vector machine introduced by Suykens and Vandewalle in 1999 on the basis of Vapnik's SVM theory, is a statistical learning method known for fast training, good generalization, and a strong ability to fit nonlinear functions, and it performs particularly well on high-frequency signals. Its core idea is to replace the inequality constraints of SVR with equality constraints and a squared-error loss, which turns the convex quadratic programming problem into a system of linear equations. As a result, LSSVR is simpler and faster to train than standard SVR.

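Suppose there is a training set $\{(x_i, y_i)\}_{i=1}^{N}$, where $x_i \in \mathbb{R}^{d}$ represents the input and $y_i \in \mathbb{R}$ represents the output. The model is obtained from the following optimization problem and its solution:

$$ \min_{w, b, e} \; J(w, e) = \frac{1}{2} w^{\top} w + \frac{\gamma}{2} \sum_{i=1}^{N} e_i^{2} \quad \text{s.t.} \quad y_i = w^{\top} \varphi(x_i) + b + e_i, \; i = 1, \dots, N $$

$$ \begin{bmatrix} 0 & \mathbf{1}^{\top} \\ \mathbf{1} & \Omega + \gamma^{-1} I \end{bmatrix} \begin{bmatrix} b \\ \alpha \end{bmatrix} = \begin{bmatrix} 0 \\ y \end{bmatrix}, \qquad \Omega_{ij} = K(x_i, x_j) $$

$$ f(x) = \sum_{i=1}^{N} \alpha_i K(x, x_i) + b $$

In the equations, $\gamma$ is the regularization parameter, $e_i$ is the fitting error, $w$ represents the weight vector, $\varphi(\cdot)$ denotes the nonlinear mapping from the input space to the high-dimensional feature space, $\alpha_i$ is the Lagrange multiplier, $K(x, x_i) = \varphi(x)^{\top}\varphi(x_i)$ is the kernel function, and $b$ is the bias term.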
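The linear-system view of LSSVR can be sketched in a few lines of Python, assuming an RBF kernel (the kernel is not named in the text) and illustrative values of $\gamma$ and $\sigma$. `lssvr_fit` builds the bordered matrix above and solves it by Gaussian elimination; `lssvr_predict` evaluates $f(x)$. All names are hypothetical.

```python
import math


def rbf(a, b, sigma):
    """Gaussian (RBF) kernel K(a, b); the kernel choice is an assumption."""
    return math.exp(-sum((x - y) ** 2 for x, y in zip(a, b)) / (2 * sigma ** 2))


def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= factor * M[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][j] * z[j] for j in range(r + 1, n))) / M[r][r]
    return z


def lssvr_fit(X, y, gamma=10.0, sigma=0.5):
    """Build [[0, 1^T], [1, Omega + I/gamma]] [b; alpha] = [0; y] and solve it."""
    n = len(X)
    A = [[0.0] + [1.0] * n]
    for i in range(n):
        A.append([1.0] + [rbf(X[i], X[j], sigma) + (1.0 / gamma if i == j else 0.0)
                          for j in range(n)])
    z = solve(A, [0.0] + list(y))
    return z[1:], z[0]                                  # alpha, b


def lssvr_predict(x, X, alpha, b, sigma=0.5):
    """f(x) = sum_i alpha_i K(x, x_i) + b"""
    return sum(a * rbf(x, xi, sigma) for a, xi in zip(alpha, X)) + b
```

Because the second block row of the system reads $b + \sum_j \Omega_{ij}\alpha_j + \alpha_i/\gamma = y_i$, the training residual at each sample equals $\alpha_i/\gamma$, and the first row forces $\sum_i \alpha_i = 0$; both make convenient correctness checks.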

2.8. Regularized Extreme Learning Machine

Extreme Learning Machine (ELM) is a machine learning algorithm proposed by Professor Huang of Nanyang Technological University in 2004. It is a single-hidden-layer feedforward neural network whose input weights and hidden biases are randomly assigned and kept fixed, so training reduces to a least-squares problem whose minimum-norm solution is given by the Moore-Penrose (M-P) generalized inverse of the hidden-layer output matrix. Consequently, ELM has few trainable parameters and trains quickly, and it handles medium-frequency sequence information well.

Suppose we have a sample set $\{(x_j, t_j)\}_{j=1}^{N}$, where $x_j$ denotes the model input and $t_j$ the model output. An ELM network with $L$ hidden layer nodes can then be defined as:

$$ \sum_{i=1}^{L} \beta_i \, g(w_i \cdot x_j + b_i) = o_j, \quad j = 1, \dots, N $$

In the equation, $g(\cdot)$ represents the activation function, $w_i$ denotes the connection weights between the input layer and the $i$-th hidden unit, $\beta_i$ signifies the output weights between the $i$-th hidden unit and the output layer, $b_i$ stands for the bias of the $i$-th hidden unit, and $o_j$ represents the network output. To minimize the output error, the output weights are obtained from $H\beta = T$ as $\beta = H^{\dagger} T$, where $H$ denotes the hidden layer output matrix, $H^{\dagger}$ its Moore-Penrose generalized inverse, and $T$ the expected output.

To improve generalization, RELM introduces a regularization parameter $C$ when solving for $\beta$, which avoids the numerical instability of computing the pseudo-inverse of $H$ directly. Using regularized least squares, RELM computes the output weights as:

$$ \beta = \left(H^{\top} H + \frac{I}{C}\right)^{-1} H^{\top} T $$
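A minimal RELM sketch in Python, under stated assumptions: a sigmoid activation, input weights and biases drawn uniformly from $[-1, 1]$, a single output, and the closed-form solution $\beta = (H^{\top}H + I/C)^{-1}H^{\top}T$ computed by solving the corresponding linear system rather than forming an explicit inverse. Function names and defaults are hypothetical.

```python
import math
import random


def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= factor * M[c][j]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][j] * z[j] for j in range(r + 1, n))) / M[r][r]
    return z


def hidden_layer(X, W, bias):
    """H[j][i] = g(w_i . x_j + b_i) with a sigmoid activation g."""
    return [[1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(wi, xj)) + bi)))
             for wi, bi in zip(W, bias)] for xj in X]


def relm_fit(X, T, L=10, C=100.0, seed=0):
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in X[0]] for _ in range(L)]   # random input weights (fixed)
    bias = [rng.uniform(-1, 1) for _ in range(L)]                 # random hidden biases (fixed)
    H = hidden_layer(X, W, bias)
    N = len(X)
    # Regularized normal equations: (H^T H + I/C) beta = H^T T
    A = [[sum(H[j][p] * H[j][q] for j in range(N)) + (1.0 / C if p == q else 0.0)
          for q in range(L)] for p in range(L)]
    rhs = [sum(H[j][p] * T[j] for j in range(N)) for p in range(L)]
    return W, bias, solve(A, rhs)


def relm_predict(X, W, bias, beta):
    """o_j = sum_i beta_i g(w_i . x_j + b_i)"""
    return [sum(h * b for h, b in zip(row, beta)) for row in hidden_layer(X, W, bias)]
```

Only $\beta$ is learned; the random hidden layer is reused unchanged at prediction time, which is why the training cost reduces to one $L \times L$ linear solve.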

2.9. Composition of the Proposed Model

Drawing on the methods above, this study introduces a multi-scale hybrid wind power prediction model that integrates ICEEMDAN signal decomposition, permutation entropy (PE) reconstruction, and LSSVR-RELM-MHA-BiGRU prediction, with model parameters optimized by the DBO algorithm. The overall workflow is shown in Figure 1, and the steps are detailed as follows:

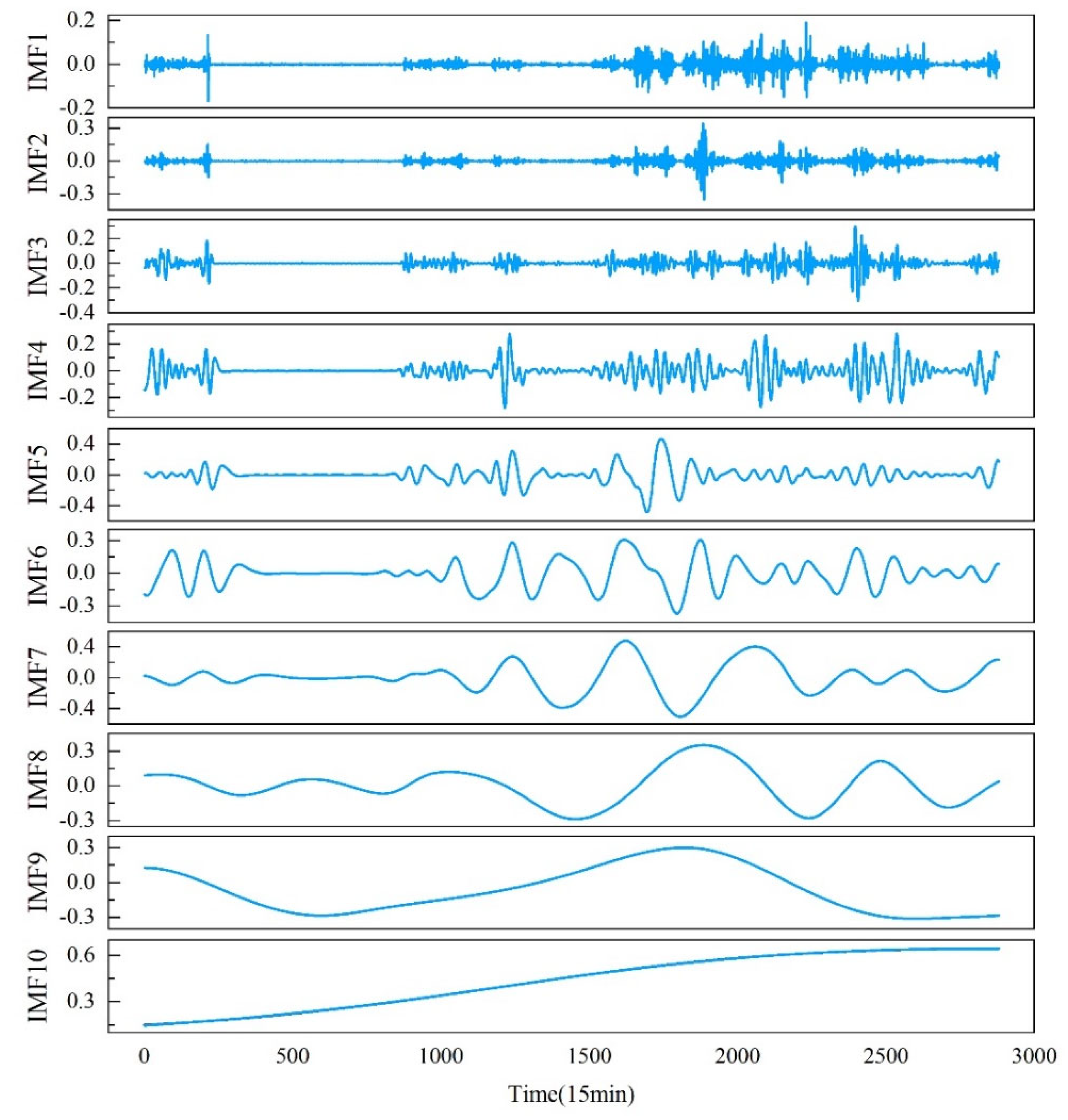

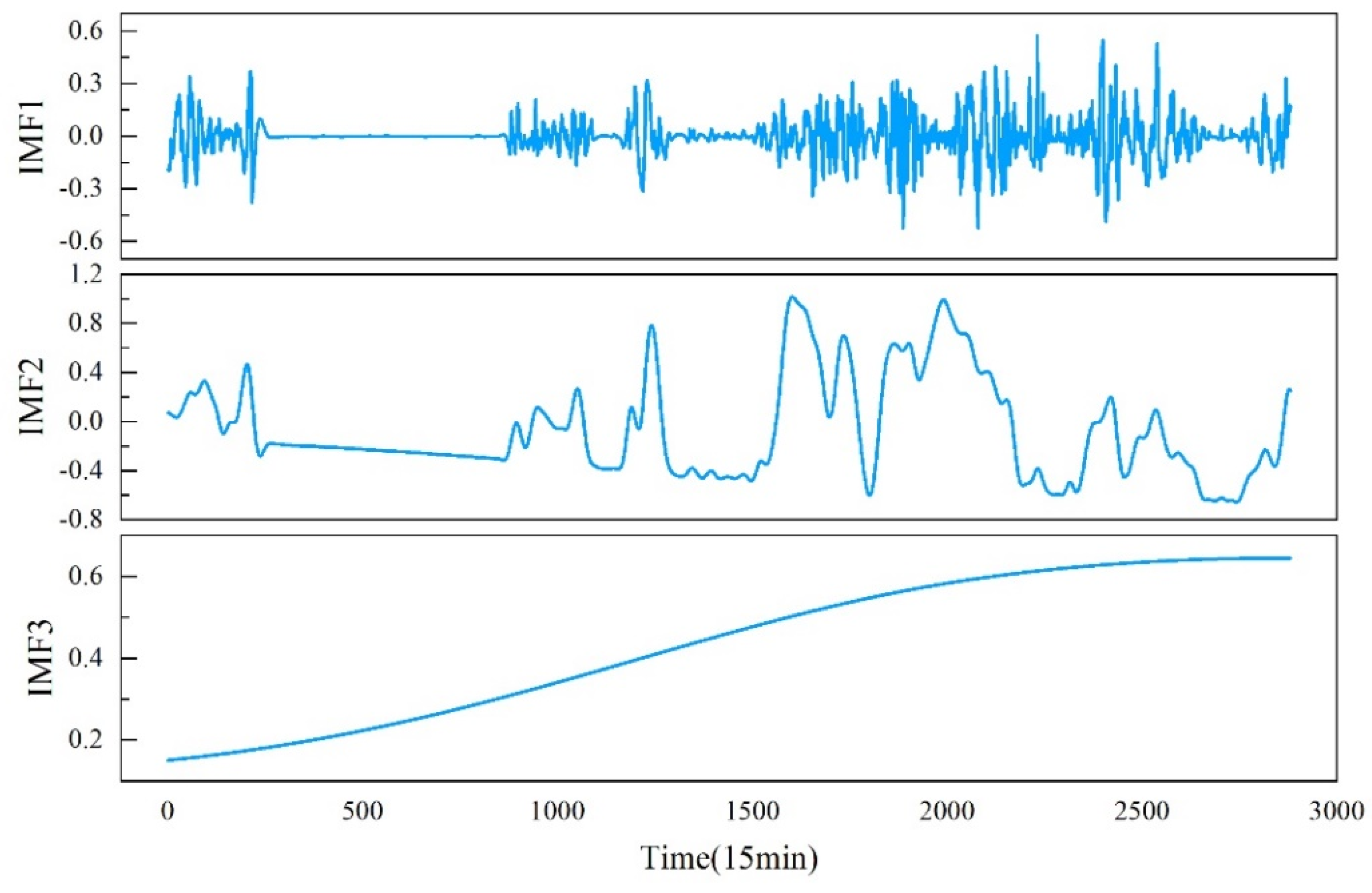

Step 1: ICEEMDAN decomposes the original wind power data into multiple Intrinsic Mode Function (IMF) components and a residual R. Using permutation entropy (PE), all IMF components are reconstructed to reduce computational complexity. Subsequently, the reconstructed components are categorized into high-frequency, medium-frequency, and low-frequency components based on their PE values.

Step 2: The DBO optimization algorithm is applied to optimize the hyperparameters of the MHA-BiGRU model, and the optimized model is used to predict the reconstructed low-frequency component.

Step 3: The high-, medium-, and low-frequency components are fed into the LSSVR, RELM, and MHA-BiGRU models, respectively, and a prediction is obtained for each component.

Step 4: The predictions for the high, medium, and low-frequency IMF components are aggregated to obtain the final prediction result.
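Steps 1 to 4 can be wired together as in the sketch below. It assumes the IMF components and their PE values have already been computed, and it splits the components into three groups of roughly equal size by PE rank (highest PE = high frequency); the grouping thresholds used in this study are not stated here, so this rule is a hypothetical stand-in. The three predictors are placeholders for the LSSVR, RELM, and DBO-optimized MHA-BiGRU models, each taking a series and returning a one-step-ahead forecast.

```python
def group_by_pe(components, pe_values):
    """Sum components into [high, medium, low] groups ranked by PE (highest PE first)."""
    order = sorted(range(len(components)), key=lambda i: pe_values[i], reverse=True)
    n, length = len(order), len(components[0])
    cuts = [0, n // 3, 2 * n // 3, n]          # hypothetical equal-size split; needs n >= 3
    groups = []
    for lo, hi in zip(cuts, cuts[1:]):
        members = order[lo:hi]
        groups.append([sum(components[i][t] for i in members) for t in range(length)])
    return groups


def hybrid_forecast(components, pe_values, predictors):
    """Route each frequency group to its predictor and sum the forecasts (Step 4)."""
    high, medium, low = group_by_pe(components, pe_values)
    return sum(predict(series) for predict, series in zip(predictors, (high, medium, low)))


# Placeholder predictor (persistence: last value); stands in for LSSVR / RELM / MHA-BiGRU.
def persistence(series):
    return series[-1]
```

Because the grouped series are sums of components, and the components sum to the original signal, a persistence predictor in all three slots reproduces the last observed value of the original series, which is a useful smoke test for the wiring before the real models are plugged in.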

5. Conclusions

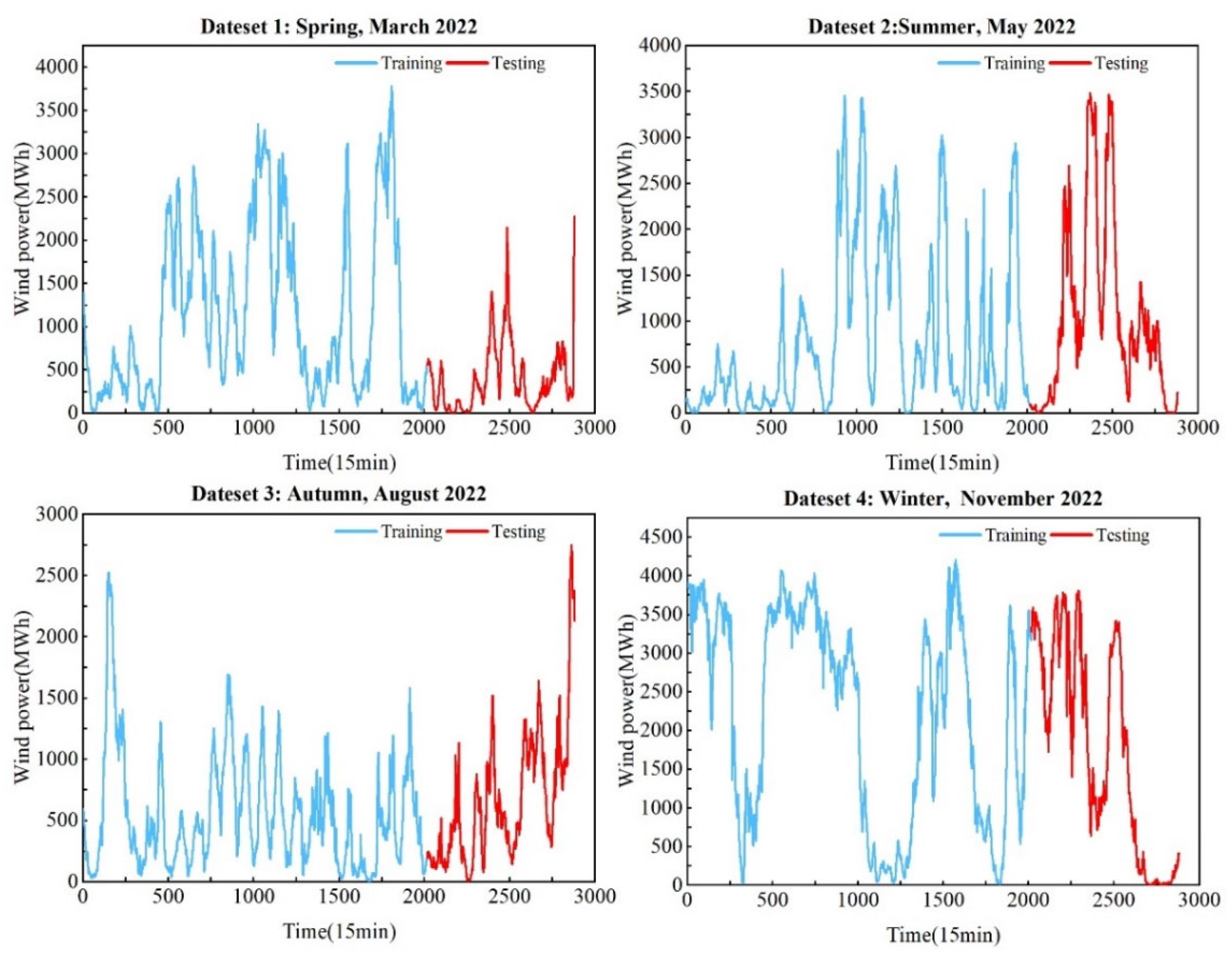

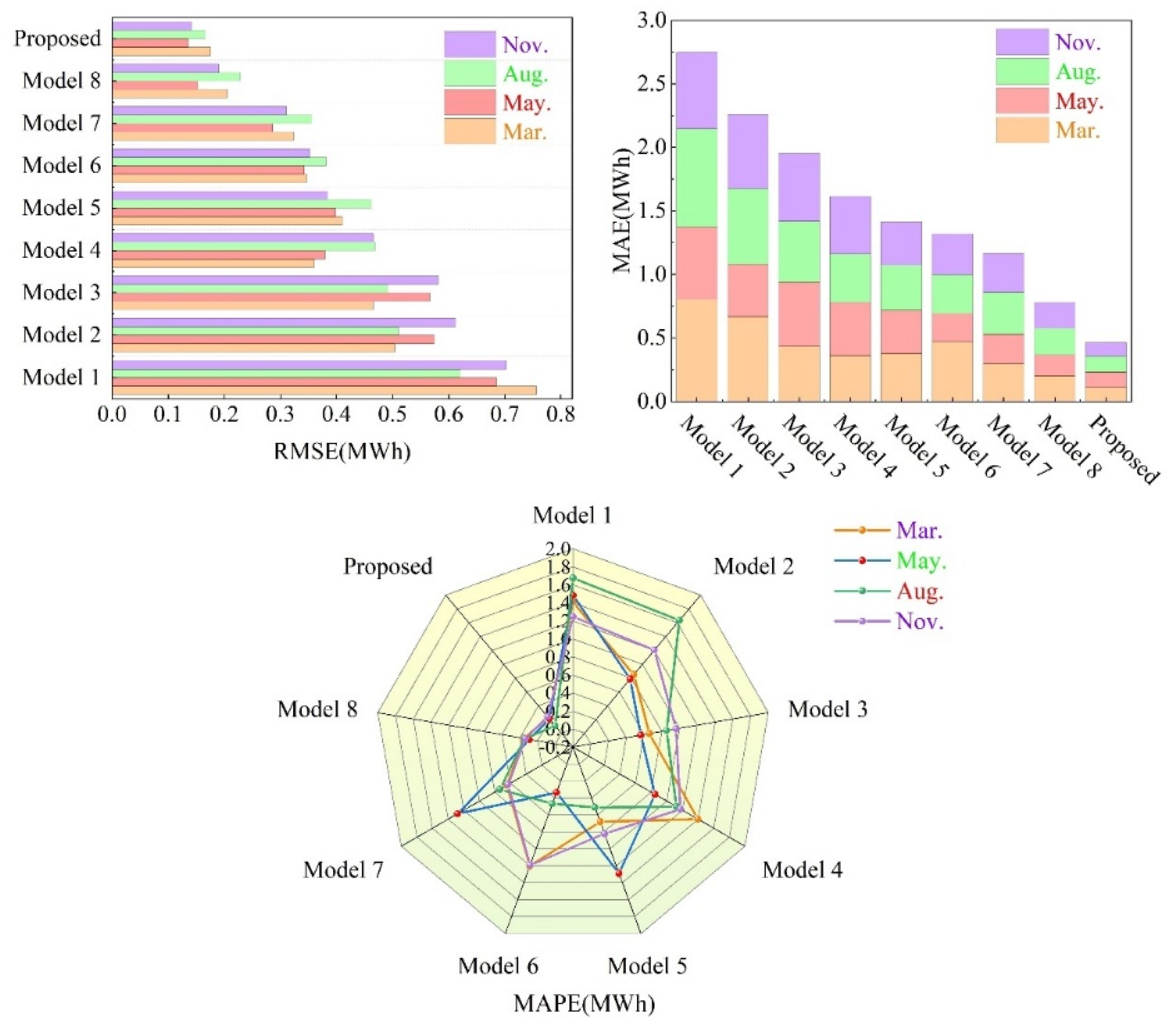

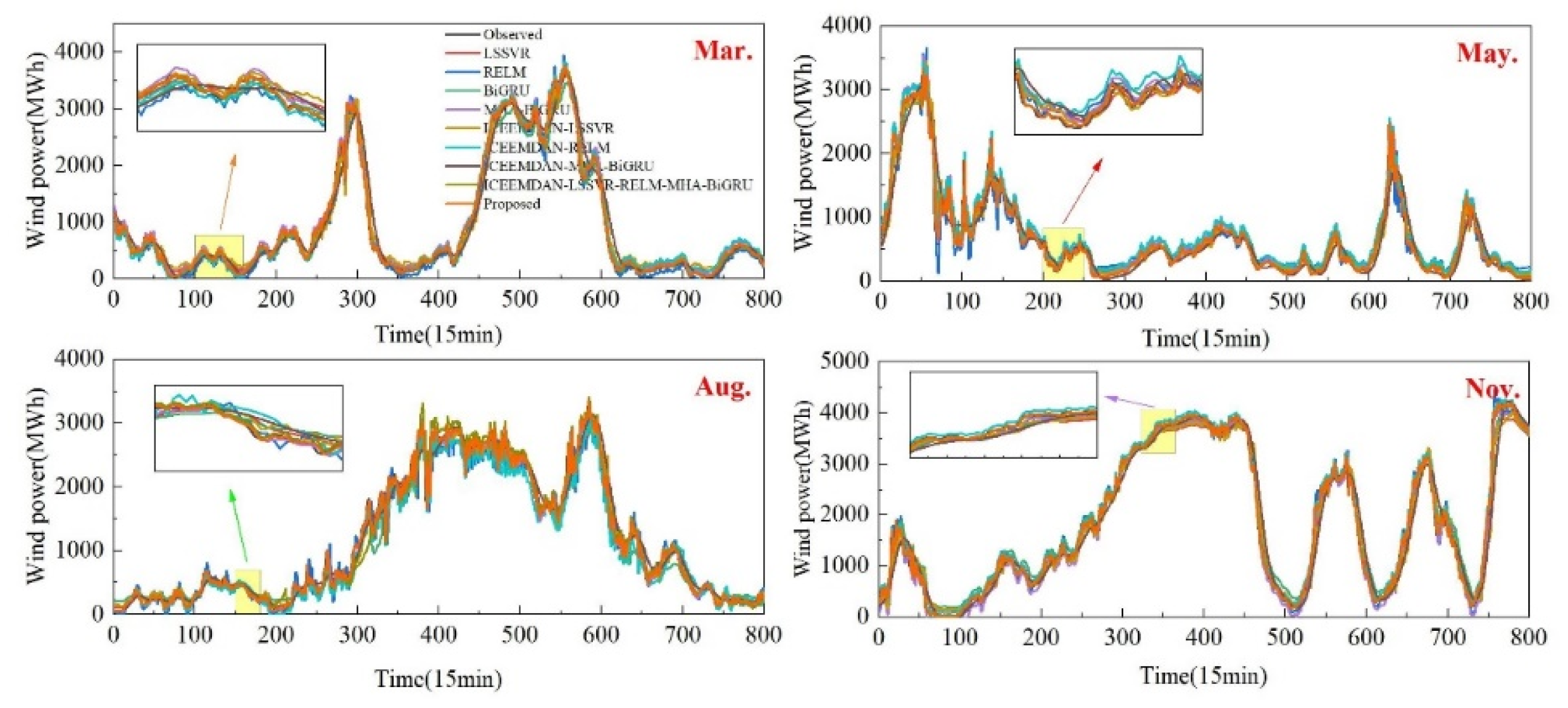

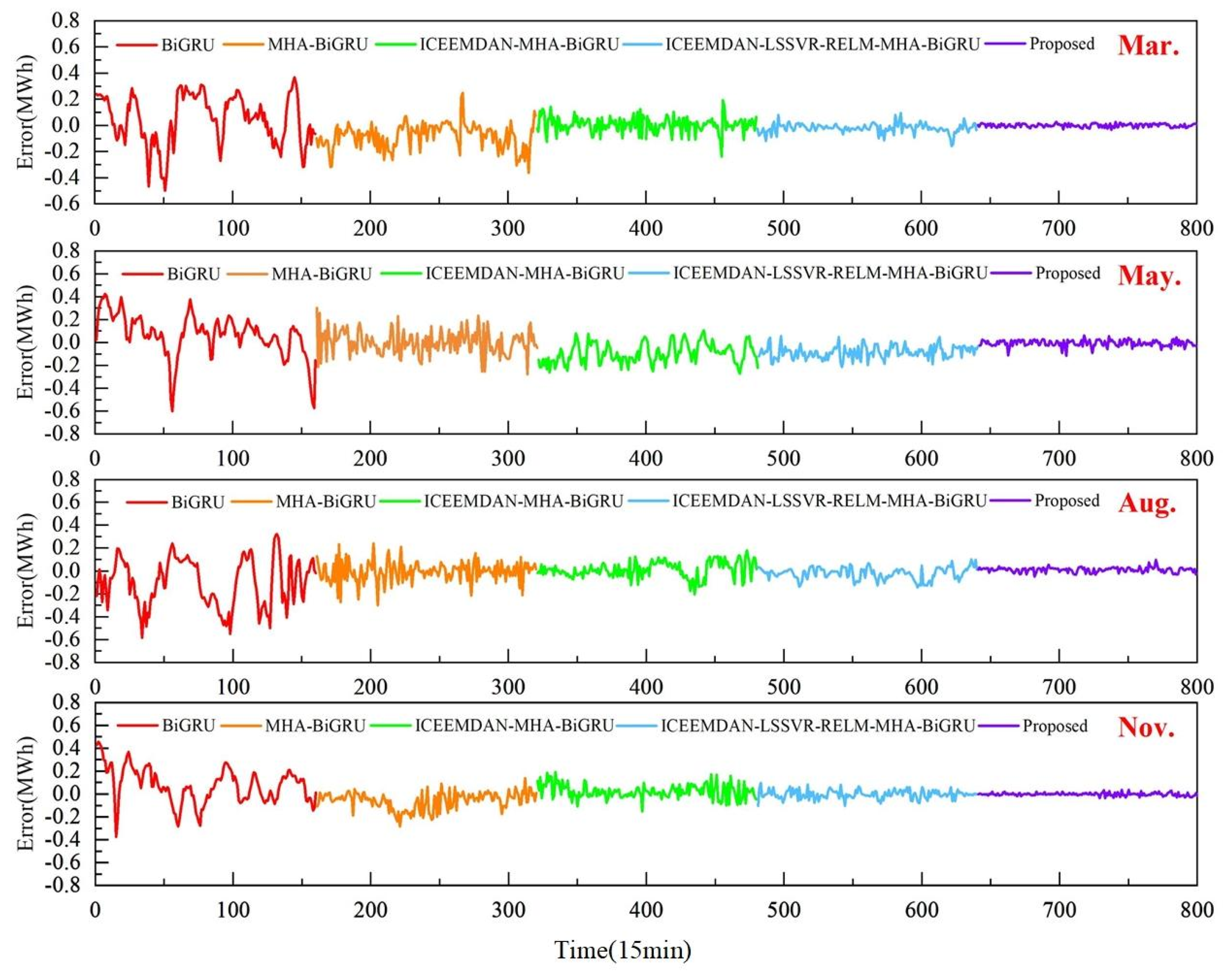

This study proposes a novel multiscale wind power prediction model that integrates ICEEMDAN, PE, LSSVR, RELM, Multi-head Attention, and BiGRU. Using four months of wind power data published by Elia, the Belgian transmission system operator, the model's predictive performance is assessed and validated through several analyses, including error metrics, relative error plots, and linear regression. Comparative ablation experiments are conducted against LSSVR, RELM, BiGRU, MHA-BiGRU, ICEEMDAN-MHA-BiGRU, and ICEEMDAN-LSSVR-RELM-MHA-BiGRU. The key findings are as follows:

1. The multiscale model, which integrates LSSVR, RELM, and BiGRU methods, outperforms single-scale prediction models in terms of predictive accuracy.

2. Compared with the ICEEMDAN-MHA-BiGRU model, the proposed ICEEMDAN-LSSVR-RELM-DBO-MHA-BiGRU model fits the wind power sequences of all four data sets more accurately, which shows that decomposing wind power sequences into high-, medium-, and low-frequency components improves predictive accuracy.

3. Employing permutation entropy to reconstruct sub-components of decomposed sequences into multiple frequency bands and utilizing multiscale models to predict each frequency band contributes significantly to improved predictive performance.

4. The MHA-BiGRU model optimized with the DBO algorithm achieves better predictive performance than the model without DBO optimization, indicating that introducing an optimization algorithm can further improve wind power prediction accuracy.