1. Introduction

In the digital age, the landscape of the contemporary world is increasingly shaped by technological advancements, where threats in the cyber realm pose significant challenges to enterprises. Recognizing these threats necessitates a nuanced understanding of cybersecurity culture, the development of robust cyber–risk management strategies, and the adoption of a proactive, collaborative approach tailored to each organization’s unique context [

1,

2]. In response, this paper introduces a novel, adaptable cybersecurity risk management framework designed to seamlessly integrate with the evolving threat landscape, leveraging technological progress and aligning with specific organizational needs.

The advancement of optimization techniques, driven by an expansion in scientific knowledge, has led to notable breakthroughs in various fields, including cybersecurity [

3]. Artificial intelligence (AI) plays a pivotal role in this evolution, especially through the development of bio–inspired optimization algorithms. These algorithms, inspired by natural processes, have been instrumental in enhancing cyber risk management strategies by offering innovative solutions and efficiencies [

4]. Despite their effectiveness in solving complex problems, these algorithms can encounter limitations, such as stagnation at local optima, which poses a challenge to achieving global optimization [

5]. Nevertheless, this challenge also presents an opportunity for a strategic focus on diversification within the search domain, facilitating significant improvements in cyber risk management efficacy.

Bio–inspired algorithms often struggle to achieve global optimum due to their inherent design, which tends to favor convergence towards local optima based on immediate environmental information. This can lead to premature acceptance of suboptimal solutions [

6,

7]. Addressing this issue is crucial and involves promoting a balanced approach to exploration and exploitation, encouraging the exploration of previously uncharted territories and the pursuit of untapped opportunities, thereby enhancing the identification and mitigation of cyber risks [

8].

This research proposes a cutting–edge hybrid algorithm that combines metaheuristic algorithms with reinforcement learning to efficiently search for and identify optimal solutions in global optimization tasks. This approach aims to strike a delicate balance between exploration and exploitation, gradually offering more advantageous solutions over time, while avoiding the pitfalls of premature convergence [

9]. By leveraging the strengths of bio–inspired algorithms such as Particle Swarm Optimization (PSO), Bat Algorithm (BAT), Gray Wolf Optimizer (GWO), and Orca Predator Algorithm (OPA) for initial detection, and subsequently optimizing the search process with Deep Q–Learning (DQL), this study seeks to address and overcome the challenges of exploration–exploitation balance and computational complexity, especially in high–dimensional search spaces [

10,

11].

Enhancing the methodology outlined in [

12], this paper extends the integration of bio–inspired algorithms with Deep Q–Learning to optimize the implementation of Cyber Security Operations Centers (Ciber SOC). It focuses on a comprehensive risk and requirement evaluation, the establishment of clear objectives, and the creation of a robust technological infrastructure, featuring key tools such as Security Information and Event Management (SIEM) and Network Intrusion Detection Systems (NIDS) for effective real–time monitoring and threat mitigation [

13,

14].

Structured to provide a thorough investigation, this paper is organized as follows:

Section 2 offers a detailed review of recent integrations of machine learning with metaheuristics in cybersecurity, highlighting multi–objective optimization challenges.

Section 3 delves into preliminary concepts of bio–inspired algorithms, emphasizing the principles of PSO, BAT, GWO, and OPA, alongside a formal introduction to DQL and advancements in Cyber SOC and SIEM technologies.

Section 4 outlines the development of the proposed solution, with

Section 5 detailing the experimental design methodology.

Section 6 analyzes the results, discussing the hybridization’s effectiveness in generating efficient solutions. Finally,

Section 7 concludes the study, summarizing key findings and suggesting directions for future research.

2. Related Work

The rising frequency and severity of cyberattacks underscore the essential role of cybersecurity in protecting organizational assets. Research such as the study by [

15] introduces a groundbreaking multi–objective optimization approach for cybersecurity countermeasures using Genetic Algorithms. This methodology aims to fine–tune Artificial Immune System parameters to achieve an ideal balance between minimizing risk and optimizing execution time. The robustness of this model is demonstrated through comprehensive testing across a broad spectrum of inputs, showcasing its capacity for a swift and effective cybersecurity response.

In the realm of machine learning (ML), techniques are being increasingly applied across diverse domains, including the creation of advanced machine learning models, enhancing physics simulations, and tackling complex linear programming challenges. The research conducted by [

16] delves into the significant impact of machine learning on the domain knowledge of metaheuristics, leading to enhanced problem–solving methodologies. Furthermore, the integration of machine learning with metaheuristics, as explored in studies [

17,

18], opens up promising avenues for cyber risk management, showcasing the transformative potential of ML in developing new strategies and enhancing existing cybersecurity mitigation efforts.

The synergy between advanced machine learning techniques and metaheuristics is pivotal in crafting solutions that effectively address the sophisticated and ever–evolving landscape of cyber threats. Notably, research such as [

19] emphasizes the utility of integrating Q–Learning with Particle Swarm Optimization for the resolution of combinatorial problems, marking a significant advancement over traditional PSO methodologies. This approach not only enhances solution quality but also exemplifies the effectiveness of learning–based hybridizations in the broader context of swarm intelligence algorithms, providing a novel and adaptable methodology for tackling optimization challenges.

Innovative algorithmic design further underscores the progress in optimization techniques, with the introduction of the self–adaptive virus optimization algorithm by [

20]. This novel algorithm improves upon the conventional virus optimization algorithm by minimizing the reliance on user–defined parameters, thus facilitating a broader application across various problem domains. The dynamic adaptation of its parameters significantly elevates the algorithm’s performance on benchmark functions, showcasing its superiority, particularly in scenarios where the traditional algorithm exhibited limitations. This advancement is achieved by streamlining the algorithm, reducing controllable parameters to a singular one, thereby enhancing its efficiency and versatility for continuous domain optimization challenges.

The discourse on metaheuristic algorithms for solving complex optimization problems is enriched by [

21], which addresses the manual design of these algorithms without a cohesive framework. Proposing a General Search Framework to amalgamate diverse metaheuristic strategies, this method introduces a systematic approach for the selection of algorithmic components, facilitating the automated design of sophisticated algorithms. This framework enables the development of novel, population–based algorithms through reinforcement learning, marking a pivotal step towards the automation of algorithm design supported by effective machine learning techniques.

In the domain of intrusion detection, [

22] introduces an innovative technique, metaheuristic with deep learning enabled intrusion detection system for secured smart environment (MDLIDS–SSE), which combines metaheuristics with deep learning to secure intelligent environments. Employing Z–score normalization for data preprocessing via the improved arithmetic optimization algorithm based feature selection (IAOA–FS), this method achieves high precision in intrusion classification, surpassing recent methodologies. Experimental validation underscores its potential in safeguarding smart cities, buildings, and healthcare systems, demonstrating promising results in accuracy, recall, and detection rates.

Additionally, the Q–Learning Vegetation Evolution algorithm, as presented in [

23], exemplifies the integration of Q–Learning for optimizing coverage in numerical and wireless sensor networks. This approach, featuring a mix of exploitation and exploration strategies and the use of online Q–Learning for dynamic adaptation, demonstrates significant improvements over conventional methods through rigorous testing on CEC2020 benchmark functions and real–world engineering challenges. This research contributes a sophisticated approach to solving complex optimization problems, highlighting the efficacy of hybrid strategies in the field.

In the sphere of cyber risk management, particularly from the perspective of the Ciber SOC and SIEM, research efforts focus on strategic optimization, automated responses, and adaptive methodologies to navigate the dynamic cyber threat landscape. Works such as [

12,

24] explore efficient strategies for designing network topologies and optimizing cybersecurity incident responses within SIEM systems. These studies leverage multi–objective optimization approaches and advanced machine learning models, like deep–Q neural networks, to enhance decision–making processes, showcasing significant advancements in the automation and efficiency of cybersecurity responses.

Emerging strategies in intrusion detection and network security, highlighted by [

25,

26], emphasize the integration of reinforcement learning with oversampling and undersampling algorithms, and the combination of Particle Swarm Optimization–Genetic Algorithm with LSTM-GRU of deep learning that fused the GRU (gated recurrent unit) and LSTM (long short-term memory). These approaches demonstrate a significant leap forward in detecting various types of attacks within Internet of Things (IoT) networks, showcasing the power of combining machine learning and optimization techniques for IoT security. The model’s accuracy in classifying different attack types, as tested on the CICIDS-2017 dataset, outperforms existing methods and suggests a promising direction for future research in this domain.

Furthermore, [

27] introduces a semi–supervised alert filtering scheme that leverages semi–supervised learning and clustering techniques to efficiently distinguish between false and true alerts in network security monitoring. This method’s effectiveness, as evidenced by its superior performance over traditional approaches, offers a fresh perspective on alert filtering, significantly contributing to the improvement of network security management by reducing alert fatigue.

The exploration of machine learning’s effectiveness and cost–efficiency in NIDS for small medium enterprises (SME) in the UK is presented in [

28]. This study assesses various intrusion detection and prevention devices, focusing on their ability to manage zero–day attacks and related costs. The research, conducted during the COVID–19 pandemic, investigates both commercial and open–source NIDS solutions, highlighting the balance between cost, required expertise, and the effectiveness of machine learning–enhanced NIDS in safeguarding SMEs against cyber threats.

From the perspective of Cyber SOC, [

29] addresses the increasing complexity of cyberattacks and their implications for public sector organizations. This study proposes a ’Wide–Scope CyberSOC’ model as a unique outsourced solution to enhance cybersecurity awareness and implementation across various operational domains, tackling the challenges faced by public institutions in building a skilled cybersecurity team and managing the blend of internal and external teams amidst the prevailing outsourcing trend.

Lastly, [

30] offers a comprehensive analysis of the Bio–Inspired Internet of Things, underscoring the synergy between biomimetics and advanced technologies. This research evaluates the current state of Bio–IoT, focusing on its benefits, challenges, and future potential. The integration of natural principles with IoT technology promises to create more efficient and adaptable solutions, addressing key challenges such as data security and privacy, interoperability, scalability, energy management, and data handling.

3. Preliminaries

In this study, we integrate bio–inspired algorithms with an advanced machine learning technique to tackle a complex optimization problem. Specifically, we utilize Particle Swarm Optimization, the Bat Algorithm, the Grey Wolf Optimizer and Orca Predator Algorithm, which are inspired by the intricate processes and behaviors observed in nature and among various animal species. These algorithms are improved by incorporating Reinforcement Learning through Deep Q–Learning on the search process of bio–inspired methods.

3.1. Particle Swarm Optimization

Particle Swarm Optimization is a computational method that simulates the social behavior observed in nature, such as birds flocking or fish schooling, to solve optimization problems [

31]. This technique is grounded in the concept of collective intelligence, where simple agents interact locally with one another and with their environment to produce complex global behaviors.

In PSO, a swarm of particles moves through the solution space of an optimization problem, with each particle representing a potential solution. The movement of these particles is guided by their own best–known positions in the space as well as the overall best–known positions discovered by any particle in the swarm. This mechanism encourages both individual exploration of the search space and social learning from the success of other particles. The position of each particle is updated according to Equations (

1) and (

2).

where

is the velocity of particle

i at iteration

.

w It is the weight of inertia that helps balance exploration and exploitation.

and

are coefficients representing self–confidence and social trust, respectively.

and

are random numbers between 0 and 1.

is the best known position for the particle

i and

is the best position known to the entire population. Finally,

and

represent the current position of the particle

i and the next one, respectively.

The algorithm iterates these updates, allowing particles to explore the solution space, with the aim of converging towards the global optimum. The parameters w, , and play crucial roles in the behavior of the swarm, affecting the convergence speed and the algorithm’s ability to escape local optima.

PSO is extensively employed due to its simplicity, efficiency, and versatility, enabling its application across a broad spectrum of optimization problems. Its capability to discover solutions without requiring gradient information renders it especially valuable for problems characterized by complex, nonlinear, or discontinuous objective functions.

3.2. Bat Algorithm

The Bat Algorithm is an optimization technique inspired by the echolocation behavior of bats. It simulates the natural echolocation mechanism that bats use for navigation and foraging. This algorithm captures the essence of bats’ sophisticated biological sonar systems, translating the dynamics of echolocation and flight into a computational algorithm capable of searching for global optima in complex optimization problems [

6].

In the Bat Algorithm, a population of virtual bats navigates the solution space, where each bat represents a potential solution. The bats use a combination of echolocation and a random walk to explore and exploit the solution space effectively. They adjust their echolocation parameters, such as frequency, pulse rate, and loudness, to locate prey, analogous to finding the optimal solutions in a given problem space. The algorithm employs the following equations for updating the bats’ positions and velocities:

where

is the frequency of the bat

i, ranging from

to

with

being a random number between 0 and 1.

represents the velocity of bat

i at iteration

, and

signifies the global best solution found by any bat.

denotes the position of bat

i for the next iteration.

Additionally, to model the bats’ local search and exploitation capability, a random walk is incorporated around the best solution found so far. This is achieved by modifying a bat’s position using the average loudness

A of all the bats and the pulse emission rate

r, guiding the search towards the optimum:

where

represents a new solution generated by local search around the global best position

, and

is a random number drawn from a uniform distribution. The values of

A and

r decrease and increase respectively over the course of iterations, fine–tuning the balance between exploration and exploitation based on the proximity to the prey, i.e., the optimal solution.

The Bat Algorithm’s efficiency stems from its dual approach of global search, facilitated by echolocation–inspired movement, and local search, enhanced by the random walk based on pulse rate and loudness. This combination allows the algorithm to explore vast areas of the search space while also intensively searching areas near the current best solutions.

3.3. Gray Wolf Optimizer

The Gray Wolf Optimizer is an optimization algorithm inspired by the social hierarchy and hunting behavior of gray wolves in nature. This algorithm mimics the leadership and team dynamics of wolves in packs to identify and converge on optimal solutions in multidimensional search spaces [

32]. The core concept behind GWO is the emulation of the way gray wolves organize themselves into a social hierarchy and collaborate during hunting, applying these behaviors to solve optimization problems.

In a gray wolf pack, there are four types of wolves: alpha (), beta (), delta (), and omega (), representing the leadership hierarchy. The alpha wolves lead the pack, followed by beta and delta wolves, with omega wolves being at the bottom of the hierarchy. This social structure is translated into the algorithm where the best solution is considered the alpha, the second best the beta, and the third best the delta. The rest of the candidate solutions are considered omega wolves, and they follow the lead of the alpha, beta, and delta wolves towards the prey (optimal solution).

The positions of the wolves are updated based on the positions of the alpha, beta, and delta wolves, simulating the hunting strategy and encircling of prey. The mathematical models for updating the positions of the gray wolves are given by the following equations:

where

represents the position vector of the prey (or the best solution found so far),

is the position vector of a wolf,

and

are coefficient vectors, and

t indicates the current iteration. The vectors

and

are calculated as follows:

where

linearly decreases from 2 to 0 over the course of iterations, and

,

are random vectors in

.

The hunting (optimization) is guided mainly by the alpha, beta, and delta wolves, with omega wolves following their lead. The algorithm effectively simulates the wolves’ approach and encircling of prey, exploration of the search area, and exploitation of promising solutions.

3.4. Orca Predator Algorithm

The Orca Predator Algorithm draws inspiration from the sophisticated hunting techniques of orcas, known for their strategic and cooperative behaviors [

33]. Orca societies are characterized by complex structures and collaborative efforts in predation, employing echolocation for navigation and prey detection in their aquatic environments. OPA models solutions as

n–dimensional vectors within a solution space

, mimicking these marine predators’ approaches to tracking and capturing prey.

OPA’s methodology encompasses two main phases reflective of orca predation: the chase, involving herding and encircling tactics, and the attack, focusing on the actual capture of prey. During the chase phase, the algorithm alternates between herding prey towards the surface and encircling it to limit escape opportunities, with the decision based on a parameter

p and a random number

r within

. The attack phase simulates the final assault on the prey, highlighting the importance of coordination and precision.

These equations detail the algorithm’s dynamics, modeling velocity and spatial adjustments reflective of orca hunting behaviors. represents the position of the i-th orca at time t, with denoting the optimal solution’s position. Parameters a, b, d, and e are random coefficients that influence the algorithm’s exploration and exploitation mechanisms, with F indicating the attraction force between agents.

After herding prey to the surface, orcas coordinate to finalize the hunt, using their positions and the positions of randomly chosen peers to strategize their attack. This collective behavior is encapsulated in the following equations, illustrating the algorithm’s mimicry of orca hunting techniques.

These formulations demonstrate how orcas adapt their positions based on the dynamics of their surroundings and the behaviors of their pod members, optimizing their strategies to efficiently capture prey. Through this algorithm, the intricate and collaborative nature of orca predation is leveraged as a metaphor for solving complex optimization problems, with a focus on enhancing solution accuracy and efficiency.

3.5. Reinforcement Learning

Reinforcement Learning revolves around the concept of agents operating autonomously to optimize rewards through their decisions, as outlined in comprehensive studies [

34]. These agents navigate their learning journey via a trial and error mechanism, pinpointing behaviors that accrue maximum rewards, both immediate and in the future, a hallmark trait of reinforcement Learning [

35].

During the reinforcement Learning journey, agents are in constant interaction with their surroundings, engaging with essential elements like the policy, value function, and at times, a simulated representation of the environment [

36,

37,

38,

39]. The value function assesses the potential success of the actions taken by the agent within its environment, while adjustments in the agent’s policy are influenced by the rewards received.

One pivotal reinforcement Learning method, Q–Learning, aims to define a function that evaluates the potential success of an action

in a certain state

at time

t [

40,

41]. This evaluation function, or Q function, undergoes updates as per Equation (

13):

Here, symbolizes the learning rate, and represents the discount factor, with being the reward after executing action .

Deep Reinforcement Learning (DRL) merges deep learning with reinforcement learning, tackling problems of higher complexity and dimensionality [

42]. In DRL, deep neural networks approximate the value functions or policies. Deep Q–Learning, a subset of DRL, utilizes a neural network to estimate the Q value function, reflecting the anticipated aggregate reward for a specific state action. This Q value function evolves through an iterative learning process as the agent engages with the environment and garners rewards.

Within Deep Q–Learning, the Q function is articulated as

, where

denotes the present state,

the action undertaken by the agent at time

t, and

the network’s weights [

43]. The Q function’s update mechanism is guided by Equation (

14):

Here, and indicate the subsequent state and action at time , respectively. The learning rate influences the extent of Q value function updates at each learning step. A higher facilitates rapid adjustment to environmental changes, beneficial during the learning phase’s early stages or in highly variable settings. Conversely, a lower ensures a more gradual and steady learning curve but might extend the convergence period. The discount factor prioritizes future over immediate rewards, promoting strategies focused on long–term gain. In contrast, a lower favors immediate rewards, suitable for less predictable futures or scenarios necessitating quick policy development. The reward is received post–action execution in state , with denoting the parameters of a secondary neural network that periodically synchronizes with to enhance training stability.

A hallmark of Deep Q–Learning is the incorporation of Replay Memory, a pivotal component of its learning framework [

44,

45]. Replay Memory archives the agent’s experiences as tuples

, with each tuple capturing a distinct experience involving the current state

, the executed action

, the obtained reward

, and the ensuing state

. This methodology of preserving and revisiting past experiences significantly improves the learning efficiency and efficacy, enabling the agent to draw from a broader spectrum of experiences. It also diminishes the sequential dependency of learning events, a crucial strategy for mitigating the risk of over–reliance on recent data and fostering a more expansive learning approach. Furthermore, DQL employs the mini–batch strategy for extracting experiences from Replay Memory throughout the training phase [

46]. Rather than progressing from individual experiences one by one, the algorithm opts for random selection of mini–batches of experiences. This technique of batch sampling bolsters learning stability by ensuring sample independence and optimizes computational resource utilization.

Finally, learning in DQL is governed by a loss function according to Equation (

15), which measures the discrepancy between the estimated Q and target values.

where

y is the target value, calculated by Equation (

16):

Here, is the reward received after taking action in the state , and is the discount factor, which balances the importance of short–term and long–term rewards. The formulation represents the maximum estimated value for the next state , according to the target network with parameters . is the Q value estimated by the evaluation network for the current state and action , using the current parameters . In each training step in DQL, the evaluation network receives a loss function backpropagated based on a batch of experiences randomly selected from the experience replay memory. The evaluation network’s parameter, , is then updated by minimizing the loss function through the Stochastic Gradient Descent (SGD) function. After several steps, the target network’s parameter, , is updated by assigning the latest parameter to . After a period of training, the two neural networks are trained stably.

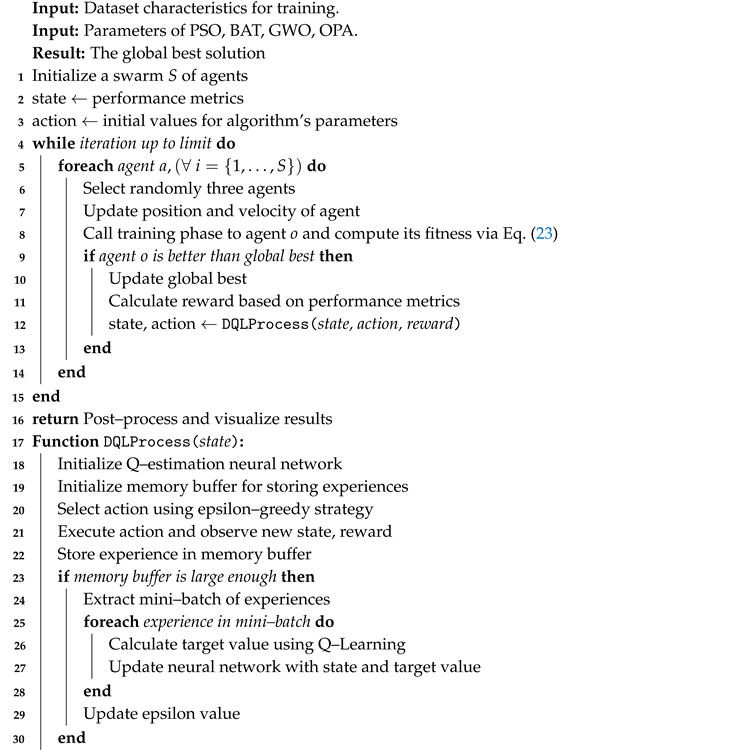

3.6. Cybersecurity Operations Centers

Recent years have seen many organizations establish Cyber SOCs in response to escalating security concerns, necessitating substantial investments in technology and complex setup processes [

47]. These centralized hubs enhance incident detection, investigation, and response capabilities by analyzing data from various sources, thereby increasing organizational situational awareness and improving security issue management [

48]. The proliferation of the Internet and its integral role in organizations brings heightened security risks, emphasizing the need for continuous monitoring and the implementation of optimization methods to tackle challenges like intrusion detection and prevention effectively [

49].

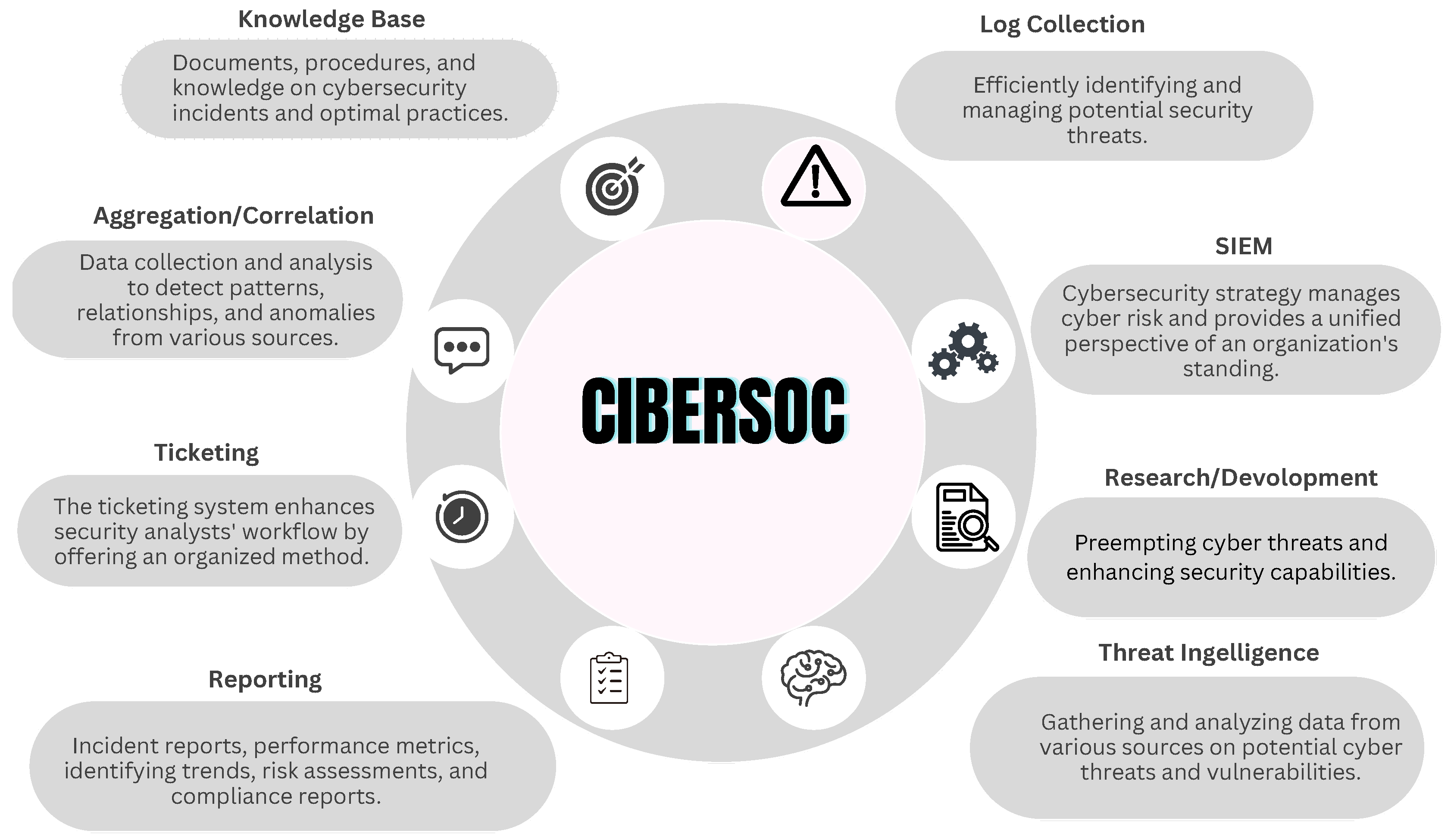

Security Information and Event Management systems have become essential for Cyber SOCs, playing a critical role in safeguarding the IT infrastructure by enhancing cyber threat detection and response, thereby improving operational efficiency and mitigating security incident impacts [

50]. Efficient allocation of centralized NIDS sensors through an SIEM system is crucial for optimizing detection coverage and operational efficiency, considering the organization’s specific security needs [

51]. This strategic approach allows for cohesive management and comprehensive security data analysis, leading to a faster and more effective response to security incidents [

52]. SIEM systems, widely deployed to manage cyber risks, have evolved into comprehensive solutions that offer broad visibility into high–risk areas, focusing on proactive mitigation strategies to reduce incident response costs and time [

53].

Figure 1 illustrates the functional characteristics of a Cyber SOC.

Today’s computer systems are universally vulnerable to cyberattacks, necessitating continuous and comprehensive security measures to mitigate risks [

54]. Modern technology infrastructures incorporate various security components, including firewalls, intrusion detection and prevention systems, and security software on devices, to fortify against threats [

55]. However, the autonomous operation of these measures requires the integration and analysis of data from different security elements for a complete threat overview, highlighting the importance of Security Information and Event Management systems [

56]. As the core of Cyber SOCs, SIEM systems aggregate data from diverse sources, enabling effective threat management and security reporting [

57].

SIEM architectures consist of key components such as source device integration, log collection, and event monitoring, with a central engine performing log analysis, filtering, and alert generation [

58,

59]. These elements work together to provide real–time insights into network activities, as depicted in

Figure 2.

NIDS sensors, often based on cost–effective Raspberry Pi units, serve as adaptable and scalable modules for network security, requiring dual Ethernet ports for effective integration into the SIEM ecosystem [

60]. This study aims to enhance the assignment and management of NIDS sensors within a centralized network via SIEM, improving the optimization of sensor deployment through the application of Deep Q–Learning to metaheuristics, advancing upon previous work [

12].

In this context, cybersecurity risk management is essential for organizations to navigate the evolving threat landscape and implement appropriate controls [

61]. It aims to balance securing networks and minimizing losses from vulnerabilities [

62], requiring continuous model updates and strategic deployment of security measures [

63]. Cyber risk management strategies, including the adoption of SIEM systems, are vital for monitoring security events and managing incidents [

62].

The optimization problem focuses on deploying NIDS sensors effectively, considering cost, benefits, and indirect costs of non–installation. This involves equations to minimize sensor costs (

18), maximize benefits (

19), and minimize indirect costs (

20), with constraints ensuring sufficient sensor coverage (

21) and network reliability (

22).

This streamlined approach extends the model to larger networks and emphasizes the importance of regular updates and expert collaboration to improve cybersecurity outcomes [

12,

64].

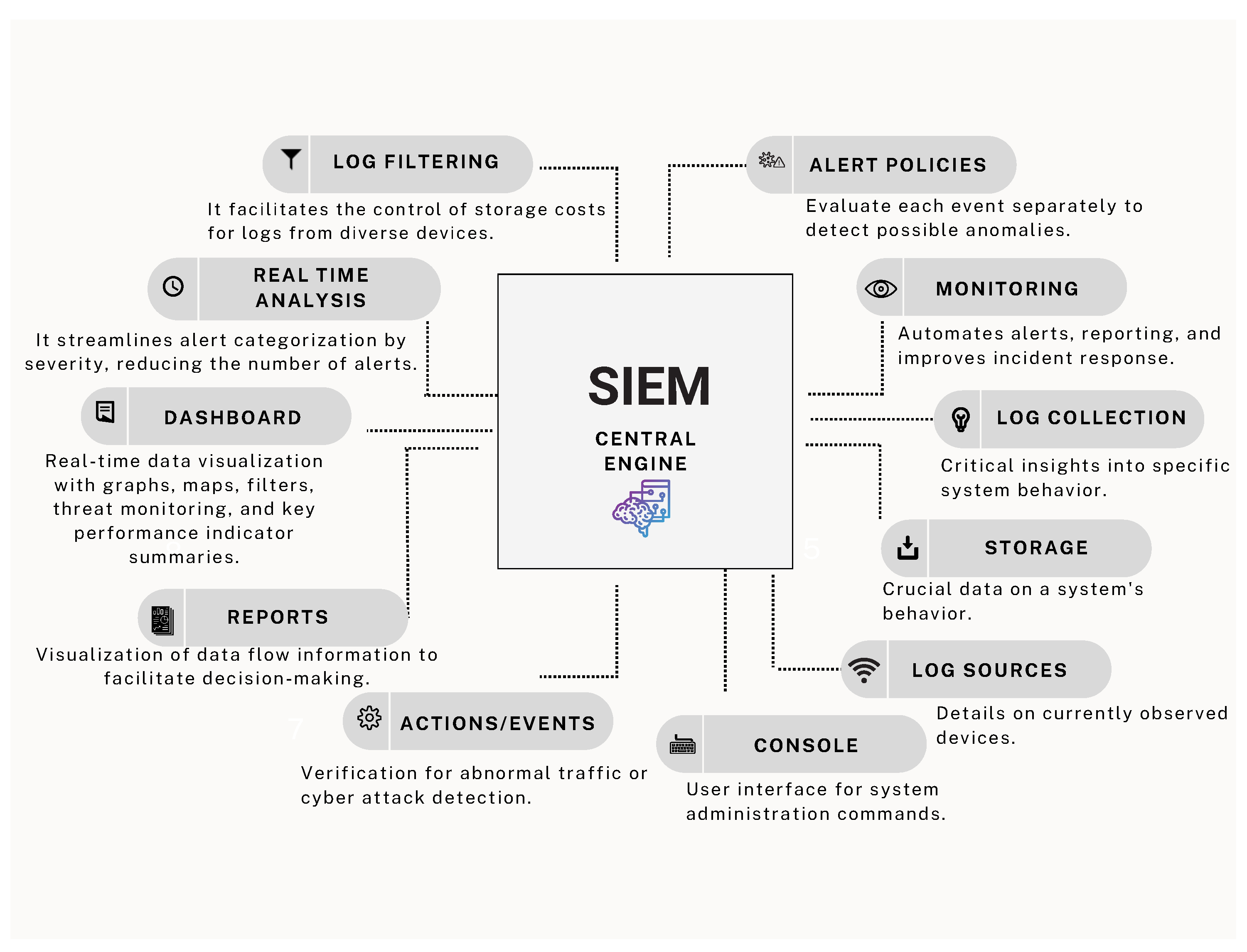

Expanding on research [

12] which optimized NIDS sensor allocation in medium–sized networks, this study extends the approach to larger networks. By analyzing a case study, this research first tackles instance zero with ten VLANs, assigning qualitative variables to each based on operational importance and failure susceptibility for strategic NIDS sensor placement. This formulation leads to an efficient allocation of NIDS sensors for the foundational instance zero, as depicted in

Figure 3. The study scales up to forty additional instances, providing a robust examination of NIDS sensor deployment strategies in varied network configurations.

4. Solution Development

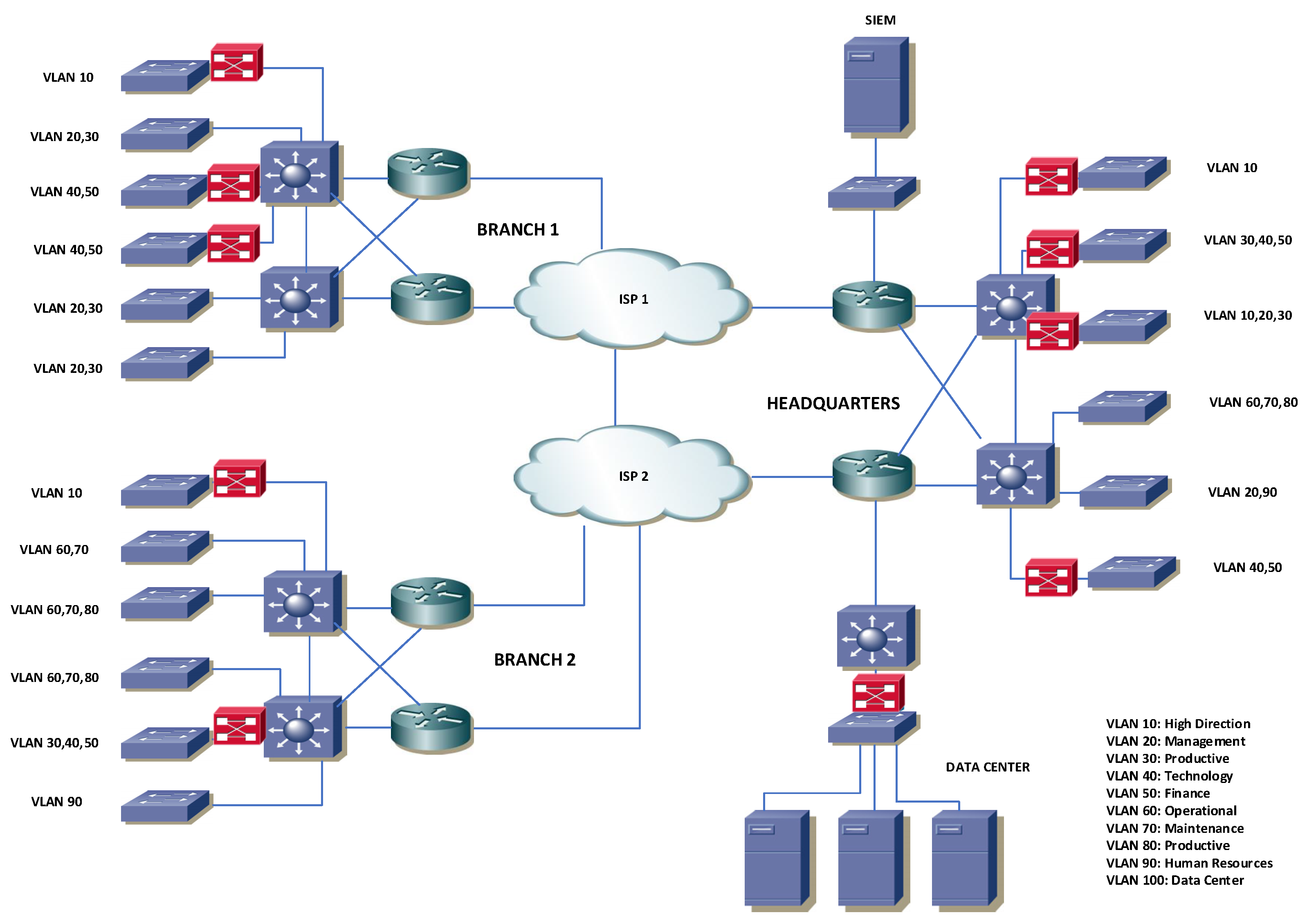

This solution advances the integration of bioinspired algorithms—Particle Swarm Optimization, Bat Algorithm, Grey Wolf Optimizer, and Orca Predator Algorithm —with Deep Q–Learning to dynamically fine–tune the parameters of these algorithms. Utilizing the collective and adaptive behaviors of PSO, BAT, GWO, and OPA alongside the capabilities of DQL to handle extensive state and action spaces, we enhance the efficiency of feature selection. Inspired by the natural strategies of their respective biological counterparts and combined with DQL’s proficiency in managing high–dimensional challenges [

33,

65], this approach innovates optimization tactics while effectively addressing complex combinatorial issues.

DQL is pivotal for shifting towards exploitation, particularly in later optimization phases. As PSO, BAT, GWO, and OPA explore the solution space, DQL focuses the exploration on the most promising regions through an epsilon–greedy policy, optimizing action selection as the algorithm progresses and learns [

66].

Each algorithm functions as a metaheuristic with agents (particles, bats, wolves, agents) representing search agents within the binary vector solution space.

DQL’s reinforcement learning strategy fine–tunes the operational parameters of these algorithms, learning from their performance outcomes to enhance exploration and exploitation balance. Through replay memory, DQL benefits from historical data, incrementally improving NIDS sensor mapping for SIEM system.

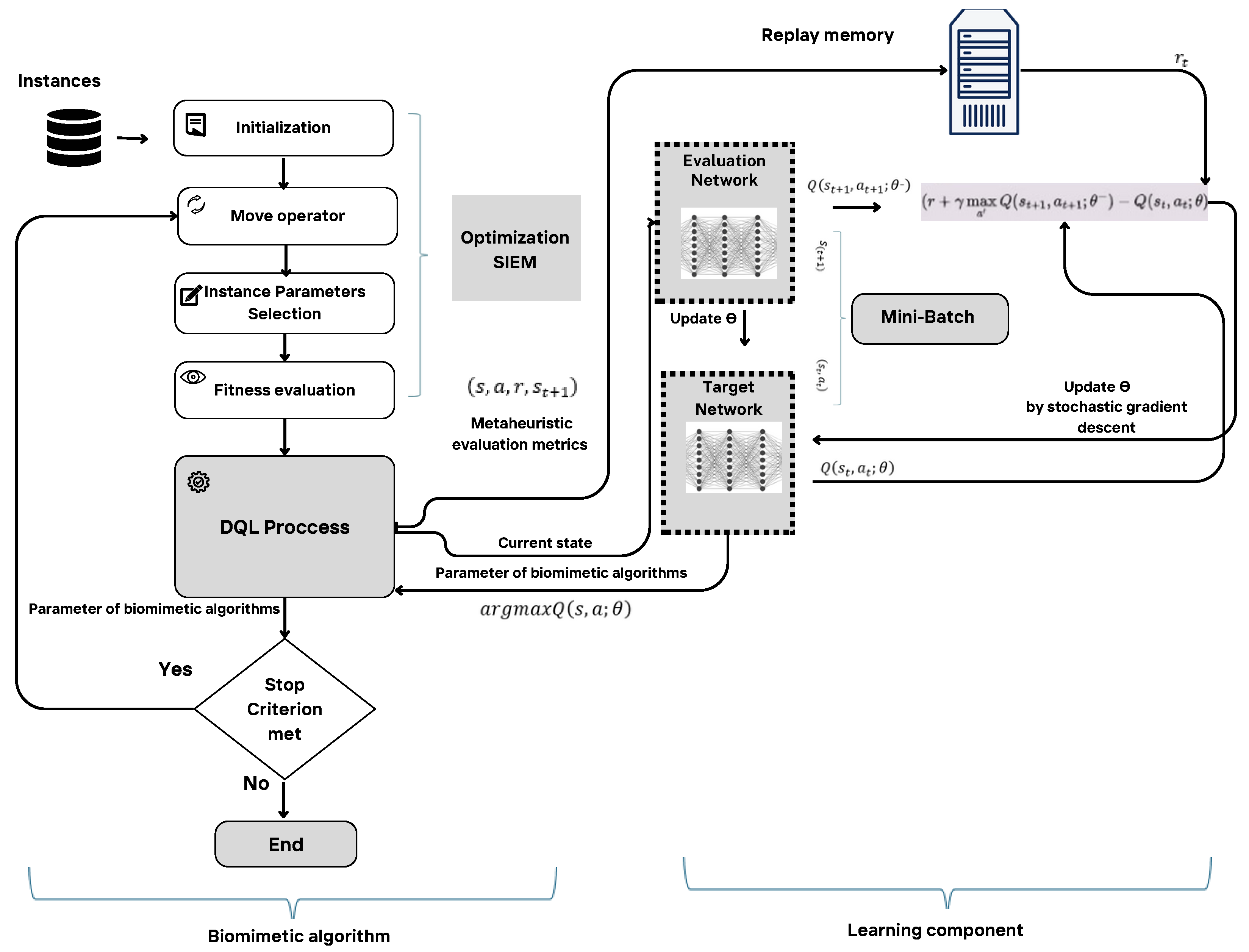

Figure 4 displays the collaborative workflow between the bioinspired algorithms and DQL, showcasing an efficient and effective optimization methodology that merges nature–inspired exploration with DQL’s adaptive learning.

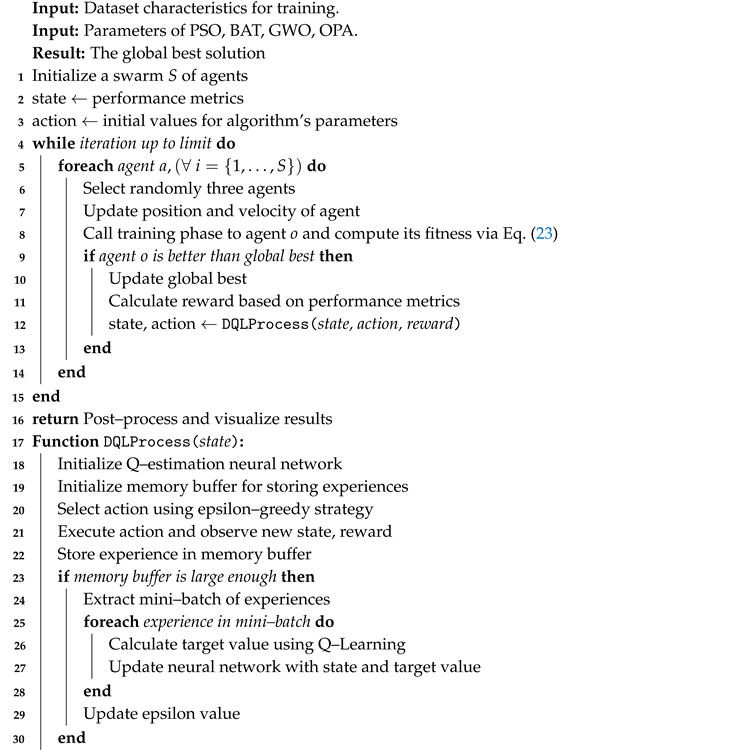

The essence of our methodology is captured in the pseudocode of Algorithm 1, beginning with dataset input and leading to the global best solution identification. This process involves initializing agents, adjusting their positions and velocities, and employing a training phase to compute fitness, followed by DQL’s refinement of exploration and exploitation strategies.

The core loop iterates until reaching a specified limit, with each agent’s position and velocity updated and fitness evaluated for refining the search strategy.

The computational complexity of our enhanced metaheuristic and DQL integration reflects the algorithm’s depth and breadth in tackling of finding efficient solutions, balancing efficiency with computational demands. Advances in computing power significantly reduce the impact of this complexity, supporting the feasibility of this comprehensive optimization approach.

|

Algorithm 1: Enhanced bioinspired optimization method. |

|

5. Experimental setup

Forty instances have been proposed for the experimental stage. These instances entail random operating parameters, which are detailed below for each instance: the number of operational VLANs, the types of sensors used, the range of sensor costs, the range of benefits associated with sensor installation in a particular VLAN, the range of indirect costs incurred when a sensor is not installed in a given VLAN, and the probability of non–operation for a given VLAN.

Additionally, it’s important to note that, as per the mathematical modeling formulation outlined earlier, one of its constraints mandates a minimum operational or uptime availability of ninety percent for the organization’s network. The specific values for each instance are provided in detail in

Table 1.

Once the solution vector has been altered, it becomes necessary to implement a binarization step for the usage of continuous metaheuristics in a binary domain [

67]. This involves comparing the Sigmoid function to a randomly uniform value

that falls within the range of 0 and 1. Subsequently, a conversion function, for instance,

, is employed as a method of discretization. In this scenario, if the statement holds true, then

. Conversely, if it doesn’t hold true, then

.

Our objective is to devise plans and offer recommendations for the trial phase, thereby demonstrating that the recommended strategy is a feasible solution for determining the location of the sensor NIDS. The time taken to solve is calculated to gauge the duration of metaheuristics required to achieve efficient solutions. We use the highest value as a critical measure to evaluate subsequent outcomes, which the Equation determines (

23).

where

represents weight of objective functions and

must be satisfied. Values of

is defined by analogous estimating.

is the single–objective function and

stores the best value met independently. Finally,

is an upper bound of minimization single–objective functions.

Following this, we employ ordinal examination to assess the adequacy of the strategy. Subsequently, we elaborate on the hardware and software utilized to duplicate computational experiments. Outcomes will be depicted through tables and graphics.

We highlight that test scenarios are developed using standard simulated networks designed to mimic the behavior and characteristics of real networks. These simulations represent the operational characteristics of networks in organizations of various sizes, from minor to medium and large. Depending on its scale and extent, defined by the number of VLANs, each VLAN consists of multiple devices, such as computers, switches, and server farms, along with their related connections. The research evaluates test networks that vary in size, starting from networks with ten VLANs, moving to networks with twenty–five VLANs, and extending to more extensive networks with up to fifty VLANs. The simulation considers limitations such as bandwidth capacity, latency, packet loss, and network congestion by replicating the test networks and considering their functional and working properties. These aspects, along with other factors, are critical in defining the uptime of each VLAN. Network availability is defined as the time or percentage during which the network remains operational and accessible, without experiencing significant downtime. For this study, it is essential that networks maintain a minimum availability of 90%, as interruptions and periods of downtime may occur due to equipment failure, network congestion or connectivity problems. Implementing proactive monitoring through the SIEM will ensure high availability on the network.

5.1. Infrastructure

Python 3.10 was used to implement the proposal. The computer used to run each test has the followings attributes: macOS 14.2.1 Darwin Kernel v23 with an Ultra M2 chipe, and 64 GB of RAM.

5.2. Methodology

Given the forty instances representing networks of various sizes and complexities, described in

Table 1, they will be used to evaluate the performance between the native and enhanced metaheuristics following the principles established in [

68]. The methodological proposal consists of an analytical comparison between the hybridization results, that is, the results obtained from the original form of the algorithm. Optimization and the results obtained with the application of Deep Q–Learning. To achieve this, we have implemented the following methodological approach.

Preparation and planning: In this phase, network instances that emulate real–world cases, from medium–sized networks to large networks, are generated, randomly covering the various operational and functional scenarios of modern networks. Subsequently, the objectives to achieve are defined as having a secure, operational, and highly available network. These are to minimize the number of NIDS sensors assigned to the network, maximize the installation benefits, and minimize the indirect costs of non–installation. Experiments are designed to systematically evaluate hybridization improvements in a controlled manner, ensuring balanced optimization of the criteria described above.

Execution and assessment: Carry out a comprehensive evaluation of both native and improved metaheuristics, analyzing the quality of the solutions obtained and the efficiency in terms of calculation and convergence characteristics. Implement comprehensive tests to perform performance comparisons with descriptive statistical methods and perform the Mann–Whitney–Wilcoxon test for comparative analysis. This method involves determining the appropriateness of each execution for each given instance.

Analysis and validation: Perform a comprehensive and in–depth analysis to understand the influence of Deep Q–Learning and the behavior of the PSO, BAT, GWO, and OPA metaheuristics in generating efficient solutions for the corresponding instances. To do this, comparative tables and graphs of the solutions generated by the native and improved metaheuristics will be built.

6. Results and Discussion

Table 2,

Table 3,

Table 4,

Table 5 and

Table 6 shows the main findings corresponding to the execution of the native metaheuristics and the metaheuristics improved with Deep Q–Learning. The tables are structured into forty sections (one per instance), each consisting of six rows that statistically describe the value of the metric corresponding to the scalarization of objectives, considering the best value obtained as the minimum value and the worst value obtained as the maximum value. The median represents the middle value, and the mean denotes the average of the results, while the standard deviation (STD) and the interquartile range (IQR) quantify the variability in the findings. Concerning columnar representation, PSO, BAT, GWO, and OPA detail results for a bio–inspired optimizer lacking a learning component. PSODQL, BATDQL, GWODQL, and OPADQL represent our enhanced version of biomimetic algorithms.

When analyzing instances one to nine, it is evident that both the native metaheuristics and the metaheuristics improved with Deep Q–Learning produce identical solutions and metrics, given the low complexity of the IT infrastructure of these first instances; however, despite generating exact values regarding the best scalarization value, instances six, eight, and nine show variations in their generation, which can be seen in the variation of the standard deviations of PSO, BAT, and BATDQL.

From instances ten to sixteen, there are slight variations in the solutions obtained by each metaheuristic, although the value of the best solution remains identical in most instances. As for the worst value generated, variations begin to develop, causing variations to start in the average, standard deviation, median, and interquartile range. In metaheuristics improved with Deep Q–Learning, specifically PSODQL, BATDQL, and OPADQL, it is verified that the standard deviation is lower compared to their corresponding native metaheuristics. This exciting finding demonstrates that the solution values are very similar to the average; in other words, there is little variability among the solution results, suggesting that the results are consistent and stable. Moreover, experiments with Deep Q–Learning metaheuristics indicate that the experiments are reliable and that random errors have a minimal impact on the outcomes.

Subsequently, in instance seventeen, a great variety is seen in the solutions generated, with PSODQL providing the best solution and OPADQL in second place, maintaining the previous finding with respect to the standard deviation.

For instance, from eighteen to twenty, there is a wide variety of solutions, highlighting PSODQL, BATDQL, and OPADQL. It is interesting to verify that BAT, GWO, and OPA, both native and improved, generate the exact value of the best solution. However, the standard deviation in the improved metaheuristics is lower than that obtained in the native metaheuristics, which reaffirms the consistency and stability of the results.

From instance twenty–one to instance thirty–two, the PSODQL, BATDQL, and OPADQL metaheuristics generate better solution values concerning their corresponding native metaheuristics, and regarding their corresponding standard deviations, it is lower concerning the native metaheuristics, standing out PSODQL performance produced the best solution values.

In instances thirty–three and thirty–four, the performance of the metaheuristics PSODQL, BATDQL, and OPADQL is maintained, highlighting the excellent performance of BATDQL in instance thirty–three and OPADQL in instance thirty–four.

Concluding with instances thirty–five to forty, we can observe that PSODQL, BATDQL, and OPADQL continue to obtain the best solution values; the standard deviation maintains a small value compared to their native counterparts. Highlighting PSODQL, which generated the best solution value.

In the application of metaheuristics with Deep Q–Learning, specifically PSODQL, BATDQL, and OPADQL, in addition to generating better solution values, observing a low standard deviation is beneficial as it indicates that the generated solutions are efficiently clustered around optimal values, thus reflecting the high precision and consistency of the results. This pattern suggests the algorithm’s notable effectiveness in identifying optimal or near–optimal solutions, with minimal variation across multiple executions, a crucial aspect for effectively resolving complex problems. Furthermore, a reduced interquartile range reaffirms the concentration of solutions around the median, decreasing data dispersion and refining the search towards regions of the solution space with high potential, which improves precision in reaching efficient solutions.

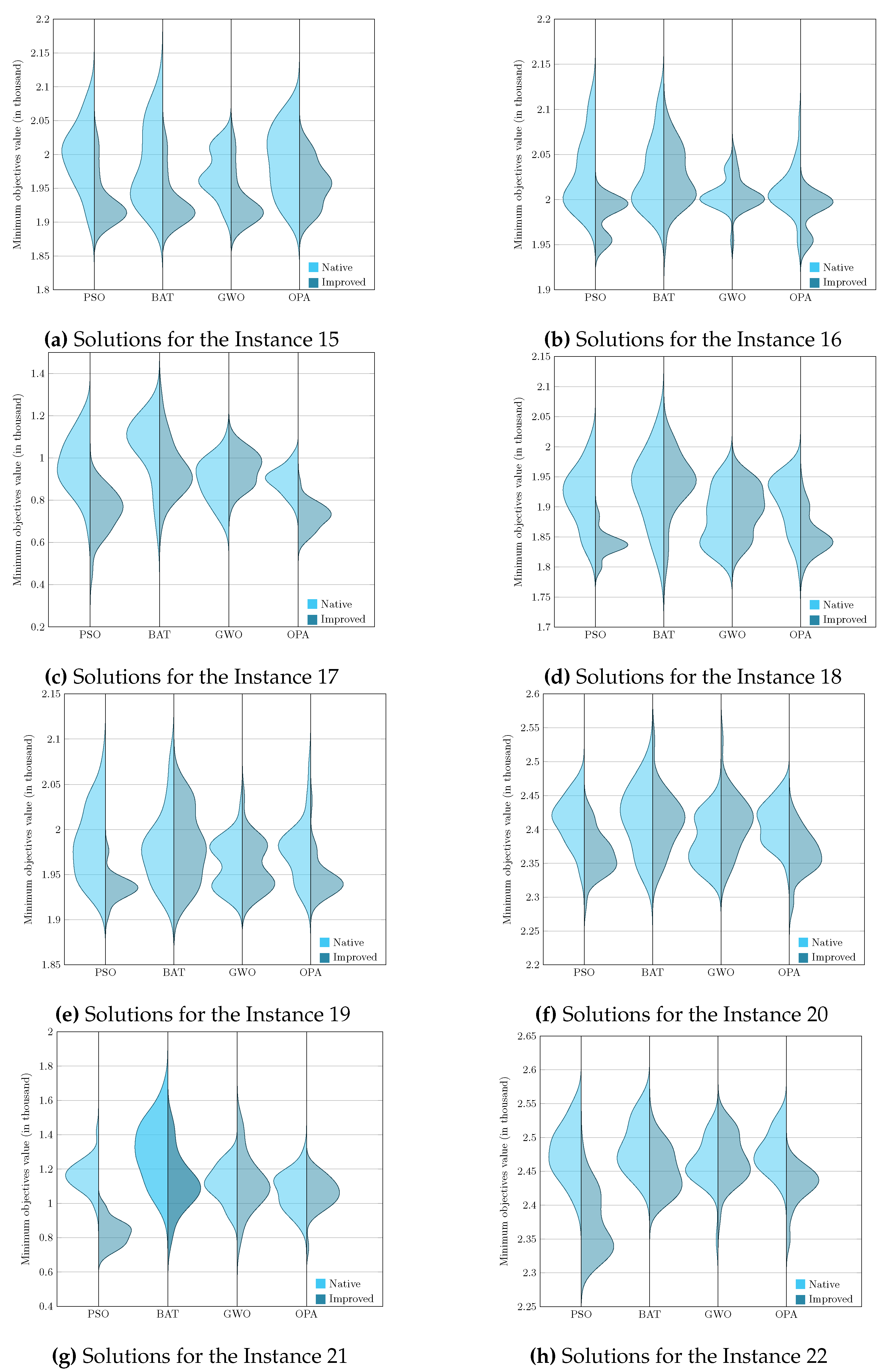

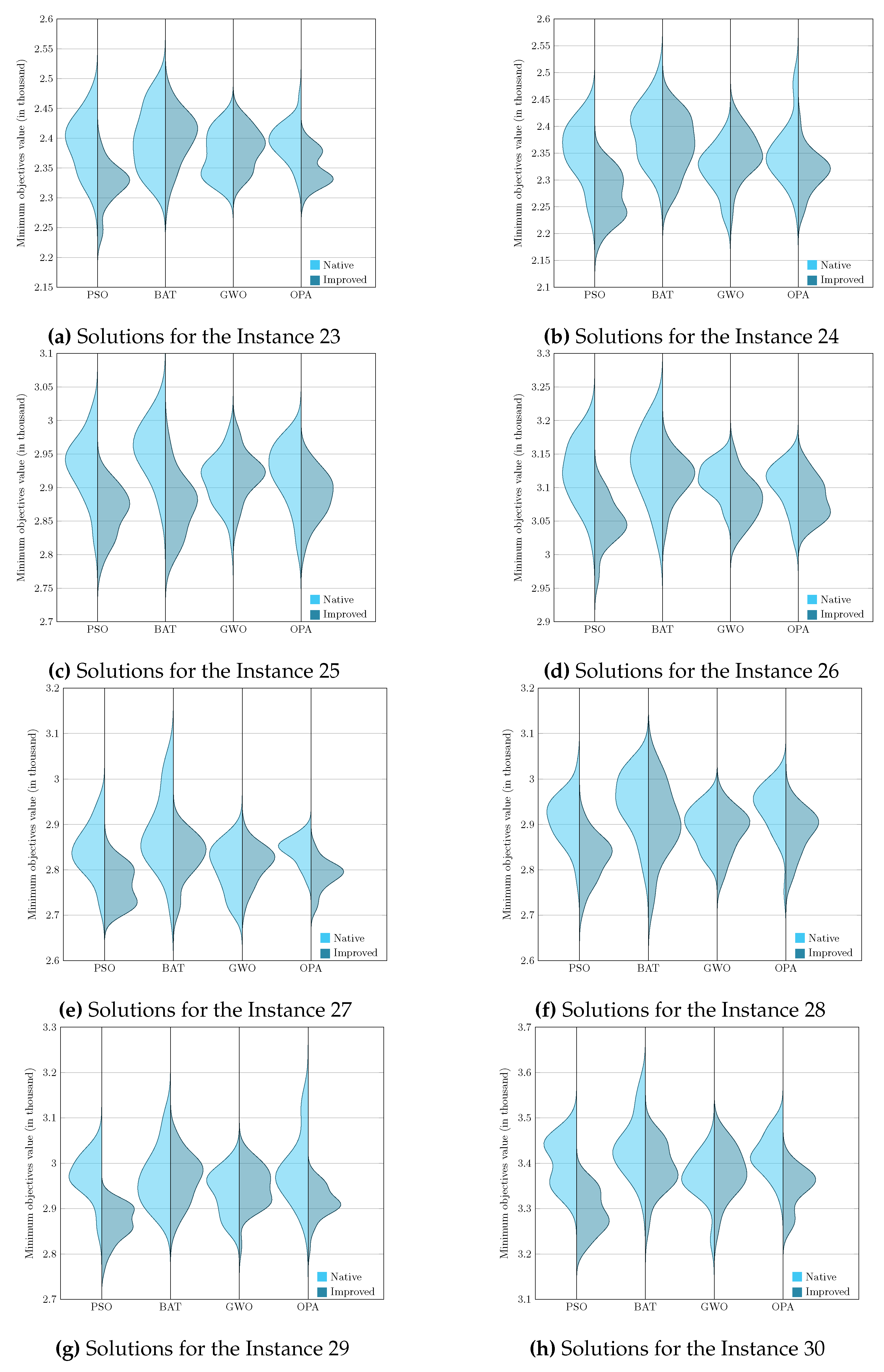

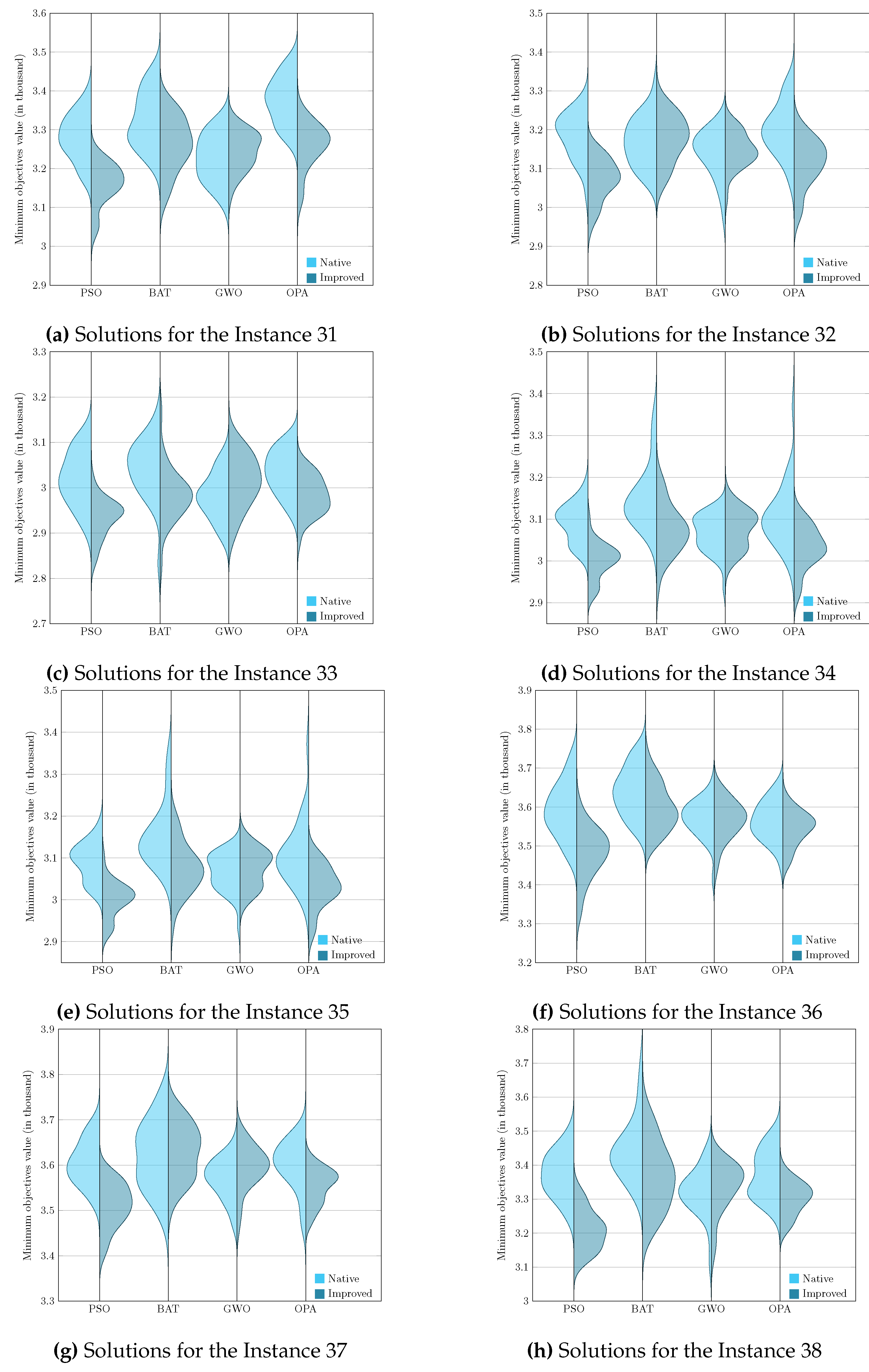

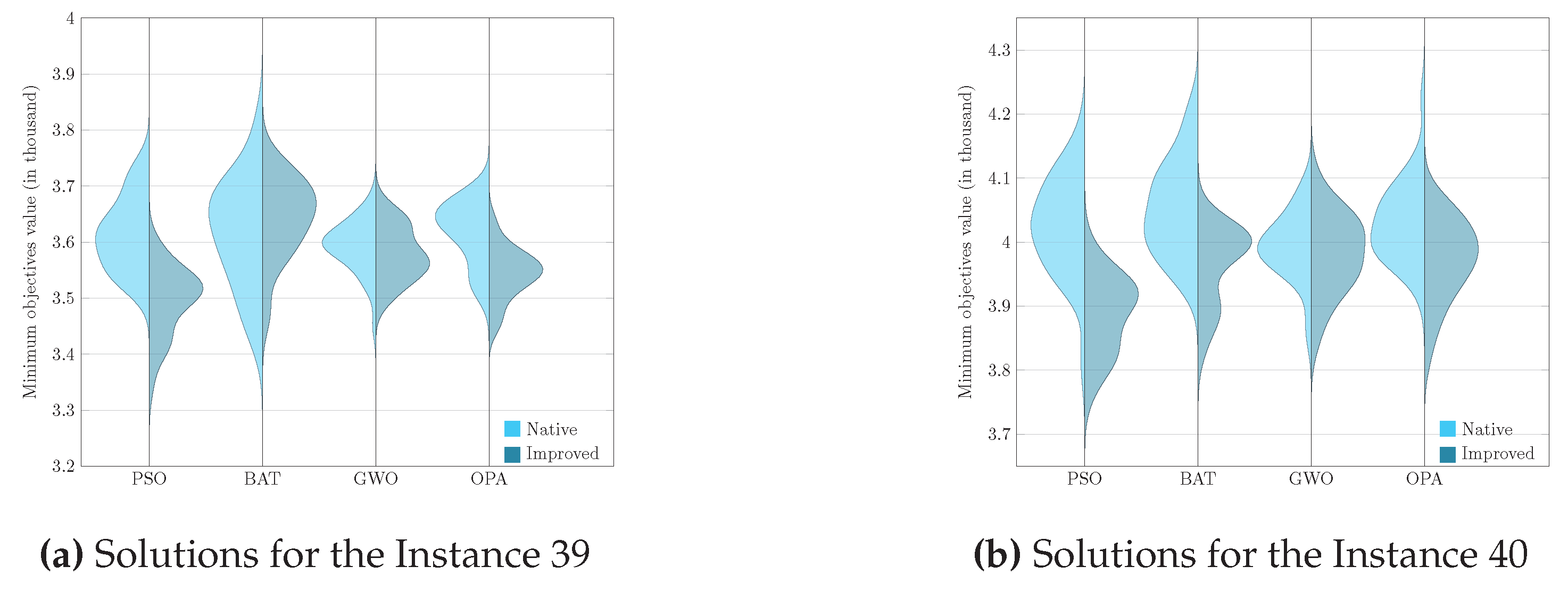

To present the results graphically, we faced the challenge of analyzing and comparing samples generated from non–parametric underlying processes, that is, processes whose data do not assume a normal distribution. Given this, it became essential to use a visualization tool such as the violin diagram, which adequately handles the non–parametric nature of the data and provides a clear and detailed view of their corresponding distributions. Visualizing these graphs allows us to consolidate the previously analyzed results, corresponding to the evaluation metric and, later in this section, the Wilcoxon–Mann–Whitney test.

Figure 5,

Figure 6,

Figure 7 and

Figure 8 enrich our comprehension of the effectiveness of biomimetic algorithms (left side) and their enhanced version (right side). These graphical illustrations reveal the data’s distribution, highlighting the learning component provides a real improvement for each optimization algorithm. The violin diagram, is an analytical tool that combines box plots and kernel density diagrams to compare data distribution between two samples, it was used to visualize the results . It shows summarized statistics, such as medians and quartiles, and the data density along its range. It helps identify and analyze significant differences between two samples, offering insights into patterns and the data structure [

69]. This way, we can appreciate that in instances fifteen and sixteen, the standard deviation is slight in the metaheuristics with DQL compared to native metaheuristics, especially PSODQL, BATDQL, and OPADQL. Furthermore, the median in PSODQL in instance fifteen is much lower than in native PSO. For instance, for instances seventeen to twenty, in addition to noting the minor standard deviation in the metaheuristics with Q Learning, the medians for PSODQL and OPADQL are significantly lower than their native counterparts. From twenty to twenty–six, the previous results for the metaheuristics with DQL are maintained, and the distributions and medians for PSODQL and OPADQL move to lower values. For instance, in twenty–seven and twenty–eight, the standard deviation is slight in the metaheuristics with DQL compared to the native metaheuristics. For instance, we can verify that the distribution and the median in PSODQL reach lower values in twenty–nine. For instance, in thirty and thirty–one, the distributions and medians in PSODQL, BATDQL, and OPADQL reach lower values. For instance, at thirty–two, both PSODQL and OPADQL distributions and medians reach lower values, and from thirty–three to forty, PSODQL, we can verify that in most cases, PSODQL, BATDQL, and OPA’s medians tend to lower values. From the above, we can confirm that the visualizations of the solutions for the instances allow us to reaffirm the findings and results of the substantial improvement of the metaheuristics with DQL compared to the native metaheuristics, highlighting PSODQL as the one that generates the best solutions throughout the experimentation phase.

It is worth mentioning that the visualization of the solutions from instances one to fourteen is impossible to graph since they mainly generate the same statistical values.

In the context of this research, the Wilcoxon–Mann–Whitney test, a non–parametric statistical test used to compare two independent samples [

70]. It was used to determine if there are significant differences in two groups of samples that may not have the same distribution, which were generated from native metaheuristics and DQL. The significance level was previously set at

p=

to conduct the test.

The results are detailed in

Table 7, describing the following findings. It is verified that from instances fifteen and sixteen, there are significant differences between the samples generated by PSODQL and native PSO, concluding that there is an improvement in the results obtained by PSODQL. For BAT and BATDQL, there are no significant differences between the samples; the same happens for GWO and GWODQL. However, for OPA and OPADQL, there is a substantial difference between the samples. However, PSODQL shows a more remarkable improvement as it has a more significant difference than OPADQL since the obtained

p-value is lower, as verified in the table. In instances seventeen, for the samples generated by PSO, there is a significant difference between the samples, resulting in a better performance of PSODQL; the same happens with BAT, resulting in a better BATDQL; for native GWO, it is better than GWODQL, and for the samples generated by OPA, there is a significant difference, resulting in a better OPADQL. In the eighteenth and nineteenth instances, it is confirmed that PSODQL is better than PSO. For BAT and BATDQL, there are no significant differences between the samples, just as for GWO and GWODQL. Moreover, OPADQL is better for OPA, as there are substantial differences between the samples. In both cases, PSODQL is better since it has the lowest

p value. In instance twenty, PSODQL and OPADQL show significant differences between their samples; however, OPADQL is better since it has the lowest

p value. In instance twenty–one, given the obtained results, PSODQL is better than native PSO, and the same applies to BAT; for GWO, native GWO is better, and for OPADQL, there are no significant differences between the samples. For this instance, PSODQL is better since it has the lowest

p value.

For instances from twenty–three to twenty–eight, significant differences are verified between the samples generated by PSO, BAT, and OPA, the result being that the samples generated by DQL will be better. For GWO, there are cases of significant differences between their samples. For the twenty–ninth instance, significant differences exist for the samples generated by PSO and OPA, resulting in better PSODQL and OPADQL. For instance, thirty to thirty–two, PSODQL, BATDQL, and OPADQL are better. For the thirty–three instances, PSODQL and OPADQL turned out to be better. For instance, thirty–four to thirty–six PSODQL, BATDQL, and OPADQL are better. For the thirty–seventh instance, PSODQL and OPADQL are better. PSODQL, BATDQL, and OPADQL were the best for the thirty–eight cases. For instance, thirty–nine and forty PSODQL and OPADQL are the best.

The central objective of this study was to evaluate the impact of integrating the Deep Q–Learning technique into traditional metaheuristics to improve their effectiveness in optimization tasks. The results demonstrate that the Deep Q–Learning enhanced versions, specifically PSODQL, BATDQL, and OPADQL, exhibited superior performance compared to their native counterparts. Notably, PSODQL stood out significantly, outperforming native PSO in one hundred percent of the cases during the experimental phase. These findings highlight the potential of reinforcement learning, through Deep Q–Learning, as an effective strategy to enhance the performance of metaheuristics in optimization problems.