1. Introduction

Non-destructive testing plays a key role in industrial quality control [

1]. X-ray imaging in particular is a highly effective non-destructive testing technique that can deliver detailed 3D images of the internal structure of products. It is therefore widely used in various industries for quality control [

2,

3,

4]. Conventional tomographic acquisition requires a full rotation around the object, limiting its applicability to off-line inspection of small to medium-sized objects. To overcome this limitation, several approaches to tomographic reconstruction from limited-angle data have been developed. These methods typically assume additional prior knowledge of the object to fill in information that is missing in the measurements. A prime example is discrete tomography, where objects consisting of a few distinct materials can be reconstructed from severely under-sampled data. In some applications, objects are wide and flat, and tomographic data can only be collected for a very limited angular range. This pushes the limits of conventional limited-angle tomography and we have to resort to another class of methods [

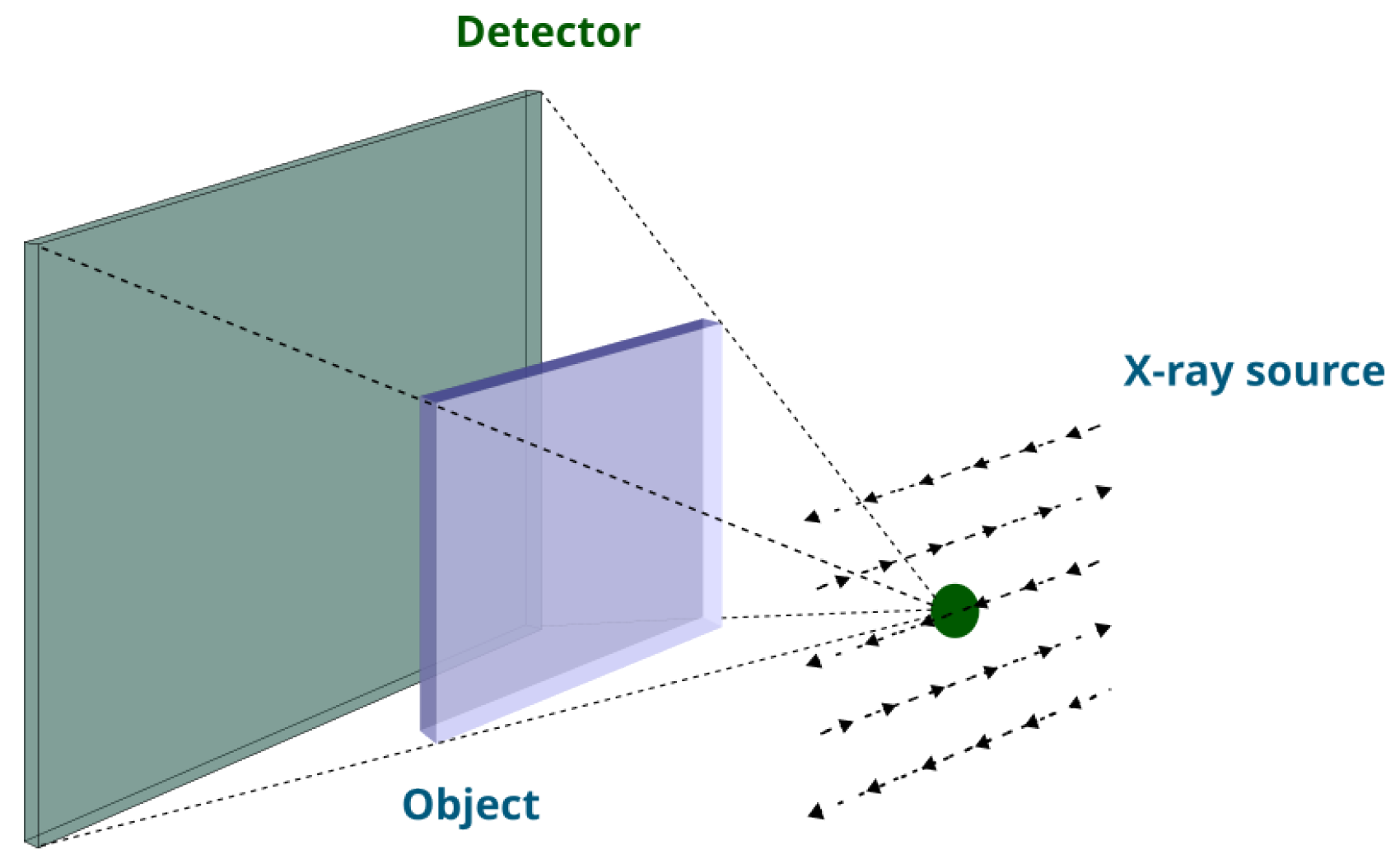

5]. Laminography offers a method to reconstruct an image of a specific slice of a flat layered object. As shown in

Figure 1, it needs a flexible source and detector that can move and collect data from several directions such that during scanning the X-ray tube is located on the same side of the flat object and the detector is on the opposite side of it [

6].

In this paper, we consider a particular setup for laminographic imaging in which X-ray projection images are acquired from N source positions on a fixed grid, while the detector is fixed on the opposite side of the object. Our aim is to optimally design acquisition protocols with K < N sources for a given set of objects. By optimally designing the setup, we can reduce imaging artifacts and potentially speed up the data-acquisition process in cases where moving the source is time-consuming (e.g., robotic-arm imaging). We pose the resulting optimal experimental design (OED) problem [7,8] as a bi-level optimization problem and solve it with derivative-based methods, which facilitates implementation in high-dimensional settings [9].

Our main contributions are the following:

- We analyze the corresponding 3D and 2D (slice-based) image reconstruction processes, present an analog of the Fourier Slice Theorem for this setup, and analyze the image artifacts present in slice images.
- We pose an experimental design problem for selecting the K most informative source positions for laminography of a class of objects, and formulate it as a bi-level optimization problem in the Bayes risk minimization framework.

The remainder of the paper is organized as follows. In Section 2, we present the forward and backward operators in the continuous domain for the depth imaging procedure. We study depth reconstruction in the discrete domain in Section 3. In Section 4, we propose a bi-level optimization problem to find a source design with K sources chosen from a fixed array of N sources. In Section 5, we propose an algorithm to solve this bi-level optimization problem. In Section 6, we show the results obtained by our method and compare them to optimal source designs obtained by exhaustive search. In Section 7, we discuss the limitations of the proposed method and directions for future work, and Section 8 concludes the paper.

1.1. Notation

Throughout the paper, scalars and vector elements are denoted by lower-case letters, vectors by lower-case boldface letters, and matrices by upper-case boldface letters. The notation $(\cdot)^\top$ stands for the transpose of either a vector or a matrix. Moreover, # denotes the cardinality (number of nonzero entries) of a vector, and $\|\cdot\|_2$ denotes the $\ell_2$-norm of a vector.

2. Forward and Backward Operators in the Continuous Domain

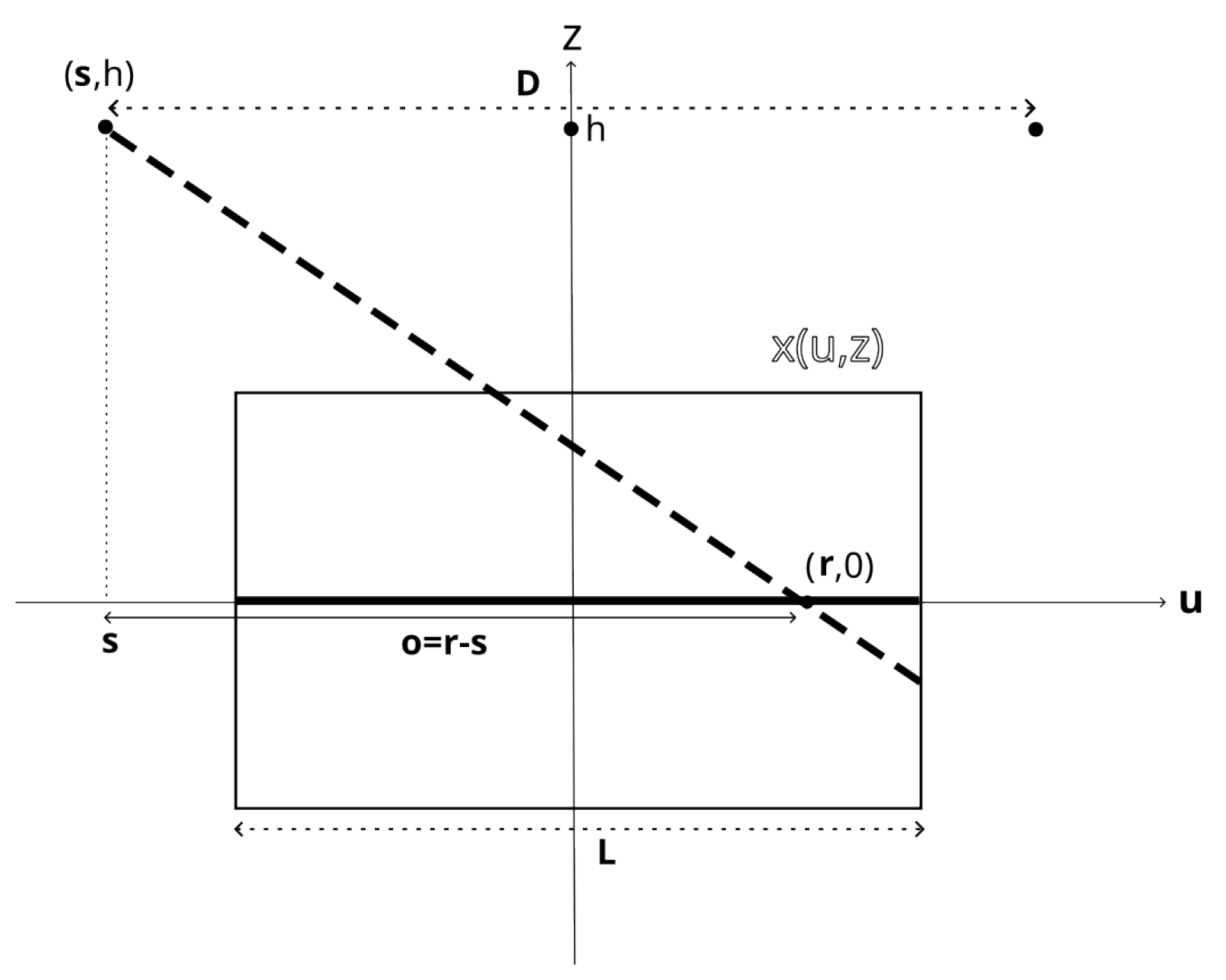

In this section, we first review the linear X-ray projection model and then introduce the forward and backward operators for the setup shown in Figure 2.

2.1. Linear Projection Model

During the X-ray imaging process, an object is exposed to a beam of X-ray photons emitted by an X-ray tube. Some of these photons are absorbed by the object, while others reach the detector. The captured measurements can be modeled using the Beer-Lambert law, which describes the exponential relationship between the intensity of the X-ray beam and the distance it travels through the object:

$$ I(\mathbf{p}, \boldsymbol{\theta}) = I_0 \exp\left(-\int_0^{\infty} x(\mathbf{p} + t\,\boldsymbol{\theta})\,\mathrm{d}t\right). $$

Here, $I(\mathbf{p}, \boldsymbol{\theta})$ denotes the number of photons that reach the detector from a starting point $\mathbf{p}$ in the direction $\boldsymbol{\theta}$, where $\|\boldsymbol{\theta}\|_2 = 1$. $I_0$ is the initial intensity of the beam (i.e., the number of photons emitted by the source), and x is the function representing the attenuation coefficient of the object. This relation is usually written as

$$ y(\mathbf{p}, \boldsymbol{\theta}) = -\log\frac{I(\mathbf{p}, \boldsymbol{\theta})}{I_0} = \int_0^{\infty} x(\mathbf{p} + t\,\boldsymbol{\theta})\,\mathrm{d}t, $$

with $y$ representing the measured data for a single ray. In the following, we present the forward and backward operators for the X-ray setup shown in Figure 2. To study the depth imaging procedure within this specific setup, we need to understand the forward and backward operators for both 2D and 3D objects.
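As a quick numerical illustration of the Beer-Lambert law and its log-transform, the sketch below simulates one ray through a homogeneous segment and checks that $-\log(I/I_0)$ returns the line integral. The attenuation values, sample spacing, and $I_0$ are hypothetical, and the line integral is approximated by a simple Riemann sum.

```python
import numpy as np

def transmitted_photons(x_samples, dt, I0):
    """Beer-Lambert law: I = I0 * exp(-line integral of x), integral approximated by a Riemann sum."""
    return I0 * np.exp(-np.sum(x_samples) * dt)

def log_data(I, I0):
    """Log-transform y = -log(I / I0), which returns the line integral along the ray."""
    return -np.log(I / I0)

# Hypothetical ray: 50 samples of attenuation 0.2 (per unit length) at spacing 0.1,
# so the exact line integral is 1.0.
x_samples = np.full(50, 0.2)
I0 = 1.0e4
I = transmitted_photons(x_samples, dt=0.1, I0=I0)
y = log_data(I, I0)
print(I, y)
```

Working with $y$ instead of $I$ is what makes the forward model linear in x, which the rest of the paper relies on.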

2.2. Forward and Backward Operators for the Slice Imaging Setup

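The proposed imaging setup is schematically depicted in Figure 2. X-rays are emitted by a source at lateral position $\mathbf{s}$ and height h. Without loss of generality, we parametrize the detector position by $\mathbf{r}$, with the detector plane at height 0. The forward operator $A$ is then given by

$$ (Ax)(\mathbf{s}, \mathbf{r}) = \int_0^h x\left(\mathbf{r} + \tfrac{z}{h}(\mathbf{s} - \mathbf{r}),\, z\right)\mathrm{d}z, \tag{3} $$

as shown in Figure 2. Here, $x(\mathbf{u}, z)$ denotes the attenuation coefficient of the object in terms of the lateral ($\mathbf{u}$) and vertical (z) coordinates. In other words, A integrates x along the ray from the source at $(\mathbf{s}, h)$ to the detector located at $(\mathbf{r}, 0)$. (The ray is parametrized by depth z; the path-length factor $\sqrt{1 + \|\mathbf{s} - \mathbf{r}\|_2^2/h^2}$ depends only on $(\mathbf{s}, \mathbf{r})$ and is assumed to be divided out of the data.)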

2.2.1. A Fourier Slice Theorem

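Defining the measurements in receiver-offset coordinates as $g(\mathbf{r}, \mathbf{d}) = y(\mathbf{r} - \mathbf{d}, \mathbf{r})$, with $y = Ax$, we find the following relation between the 2D Fourier transform of g and the 3D Fourier transform of x. Let $\mathbf{k} \in \mathbb{R}^2$ and $k_z \in \mathbb{R}$ represent the lateral and vertical spatial-frequency coordinates; then (see the derivation in Appendix A.1)

$$ \mathcal{F}_2 g(\mathbf{k}, \mathbf{d}) = \mathcal{F}_3 x\left(\mathbf{k}, \tfrac{1}{h}\,\mathbf{k}\cdot\mathbf{d}\right), $$

where $\mathcal{F}_2$ (acting on $\mathbf{r}$) and $\mathcal{F}_3$ denote the 2D and 3D Fourier transforms, respectively, and $\mathbf{d} = \mathbf{r} - \mathbf{s}$ signifies the vector difference between the detector position $\mathbf{r}$ and the source position $\mathbf{s}$.

Figure 3 depicts the sampled elements $\mathcal{F}_2 g$ (black circles) with respect to the Fourier samples of the object $\mathcal{F}_3 x$ (green squares). The sampling procedure misses a portion of the object spectrum because the values of $\mathbf{d}$ are limited, i.e., the forward operator only samples $\mathcal{F}_3 x(\mathbf{k}, k_z)$ at $k_z = \mathbf{k}\cdot\mathbf{d}/h$. Since the range of $\mathbf{d}$ is finite, this leads to a missing wedge in the frequency domain, which makes recovering x from g (or, equivalently, y) an ill-posed inverse problem.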
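To make the missing wedge concrete, the sketch below works in the 2D view of Figure 2 (a single lateral frequency k) and marks which frequency pairs $(k, k_z)$ can be reached with offsets $|d| \le d_{\max}$, using the sampling relation $k_z = k\,d/h$ above. The geometry values ($h = d_{\max} = 1$) and the frequency grid are hypothetical choices for illustration.

```python
import numpy as np

def sampled_kz(k, d, h):
    """Vertical frequencies k_z = k * d / h reached by lateral frequencies k and offsets d."""
    return np.multiply.outer(k, d) / h

def covered_by_offsets(k, kz, d_max, h):
    """True where (k, kz) is reachable with |d| <= d_max, i.e. |kz| <= |k| * d_max / h."""
    return np.abs(kz) <= np.abs(k) * d_max / h

h, d_max = 1.0, 1.0      # hypothetical geometry: offsets up to |d| = h
K, KZ = np.meshgrid(np.linspace(-1, 1, 201), np.linspace(-1, 1, 201), indexing="ij")
covered = covered_by_offsets(K, KZ, d_max, h)
print(f"fraction of the frequency square that is sampled: {covered.mean():.3f}")

# Every sampled frequency lies inside the cone; the k_z axis (k = 0, k_z != 0) never does.
k_probe = np.array([0.5, -0.25])
kz_samples = sampled_kz(k_probe, np.linspace(-d_max, d_max, 11), h)
```

With $d_{\max} = h$ roughly half of the square is covered; the uncovered double cone around the $k_z$ axis is the missing wedge, and it widens as $d_{\max}/h$ shrinks.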

2.2.2. The 2D Slice at Depth z

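To study the depth-imaging procedure, we introduce the corresponding forward operator $A_z$, which maps a 2D image at depth z, denoted by $x_z(\mathbf{u}) = x(\mathbf{u}, z)$, to the corresponding projection data (see Appendix A.2):

$$ (A_z x_z)(\mathbf{s}, \mathbf{r}) = x_z\left(\mathbf{r} + \tfrac{z}{h}(\mathbf{s} - \mathbf{r})\right). $$

The corresponding adjoint operator $A_z^*$ is given by (see Appendix A.2)

$$ (A_z^* y)(\mathbf{u}) = \left(1 - \tfrac{z}{h}\right)^{-2}\int_{\mathcal{S}} y\left(\mathbf{s}, \frac{\mathbf{u} - \frac{z}{h}\mathbf{s}}{1 - \frac{z}{h}}\right)\mathrm{d}\mathbf{s}, $$

where $\mathcal{S}$ denotes the set of source positions and $|\mathcal{S}|$ its area. We then find that $A_z^* A_z$ is given by

$$ A_z^* A_z = \frac{|\mathcal{S}|}{(1 - z/h)^2}\, I, \tag{7} $$

so that, for a limited range of $\mathbf{s}$ (finite $|\mathcal{S}|$), $A_z^* A_z$ is a multiple of the identity and hence is trivially inverted. Depth imaging of an object consisting of a single thin layer can thus be achieved by a simple back-projection. When multiple layers are present, we expect artifacts, which we study next.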
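The claim that a single thin layer is recovered by back-projection alone can be checked numerically. The sketch below uses the 2D view of Figure 2 (one lateral coordinate), replaces the integral over $\mathcal{S}$ by a sum over a few hypothetical source positions, and uses the 1D Jacobian $1/(1 - z/h)$ in place of the 2D factor $(1 - z/h)^{-2}$. The layer image, depth ratio, and source positions are illustrative assumptions.

```python
import numpy as np

def backproject(y_fn, alpha, sources, u):
    """Discrete 1D-lateral analogue of the adjoint A_z^* with alpha = z / h.

    y_fn(j, r) returns the datum of source j at detector position r.
    The integral over S becomes a sum over sources; the 1D Jacobian is 1 / (1 - alpha).
    """
    img = np.zeros_like(u, dtype=float)
    for j, s in enumerate(sources):
        r = (u - alpha * s) / (1.0 - alpha)   # detector position of the ray from s through (u, z)
        img += y_fn(j, r)
    return img / (1.0 - alpha)

x_z = lambda v: np.exp(-v ** 2) * np.cos(3 * v)        # hypothetical single thin layer
alpha = 0.4
sources = np.linspace(-1.0, 1.0, 5)
y_fn = lambda j, r: x_z(r + alpha * (sources[j] - r))  # data of the single layer, cf. A_z above

u = np.linspace(-3.0, 3.0, 121)
depth_image = backproject(y_fn, alpha, sources, u)
scale = len(sources) / (1.0 - alpha)                   # 1D counterpart of |S| / (1 - z/h)^2 in (7)
recovered = depth_image / scale
```

Every ray through $(\mathbf{u}, z)$ samples the layer at exactly $\mathbf{u}$, so dividing by the constant recovers $x_z$ exactly, as (7) predicts.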

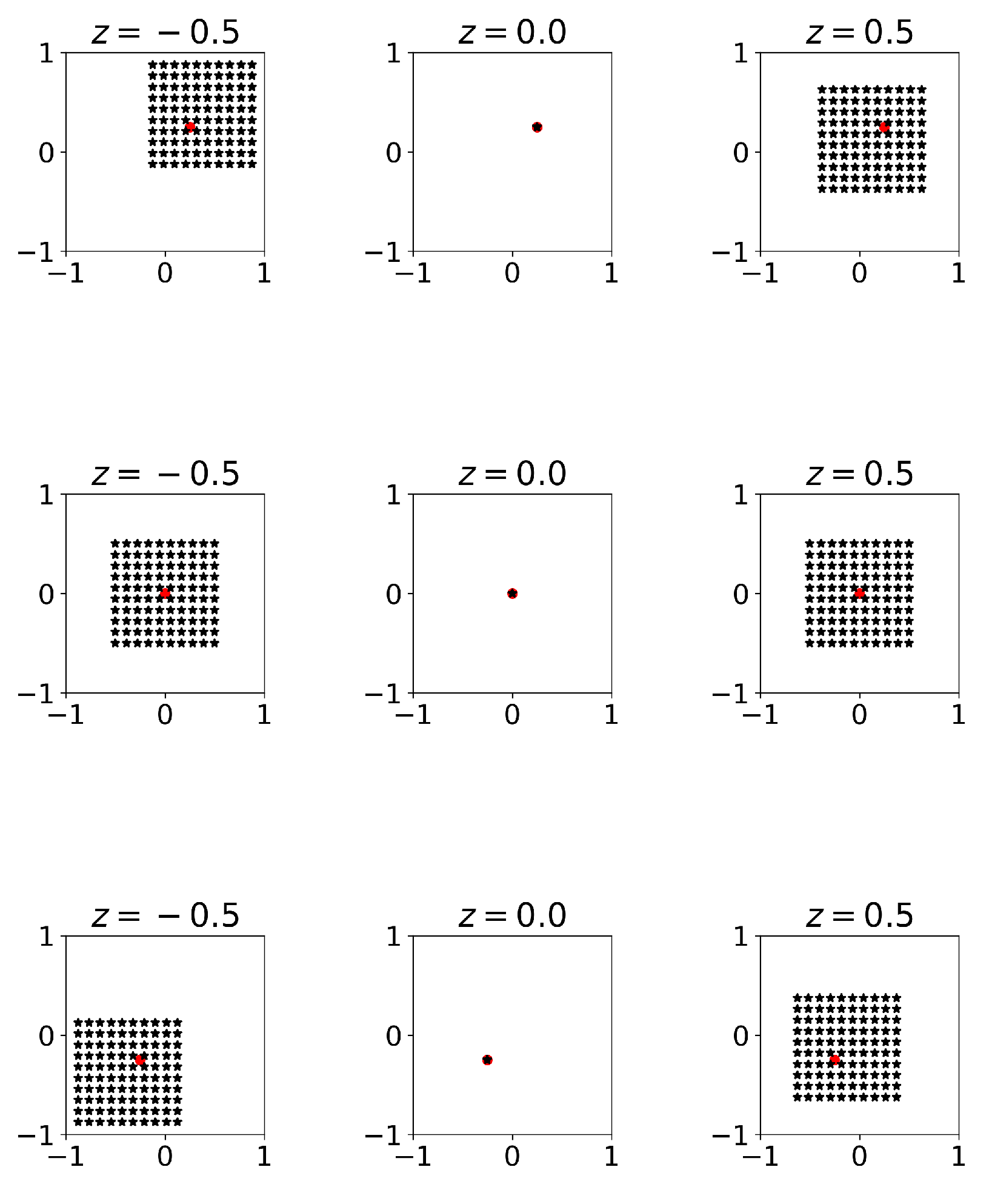

2.3. Depth Image Reconstruction

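Here, we are interested in studying the artifacts that arise when imaging a slice from measurements of a 3D layered object. In the remainder of this section, we let h = 1 for ease of notation. As argued above, we can form a depth image by back-projection, so it suffices to study the operator $A_z^* A$ (see Appendix A.3). In particular, we expect to image layered objects, which we can model as

$$ x(\mathbf{u}, z) = \sum_{\ell} x_\ell(\mathbf{u})\,\delta(z - z_\ell). $$

Using a finite number of sources $\{\mathbf{s}_j\}_{j=1}^{N}$, so that the integral over $\mathcal{S}$ becomes a sum, the depth image of the $\ell$-th layer is then given by

$$ (A_{z_\ell}^* A x)(\mathbf{u}) = \frac{1}{(1 - z_\ell)^2}\left( N\,x_\ell(\mathbf{u}) + \sum_{\ell' \neq \ell}\sum_{j=1}^{N} x_{\ell'}\left(\frac{1 - z_{\ell'}}{1 - z_\ell}\,\mathbf{u} + \frac{z_{\ell'} - z_\ell}{1 - z_\ell}\,\mathbf{s}_j\right)\right). $$

Hence, the error in the depth image of the $\ell$-th layer is a superposition of spatially averaged versions of the other layers: each other layer is rescaled, shifted by an amount proportional to $\mathbf{s}_j$, and summed over the sources. An illustration of the resulting spatial sampling patterns is shown in Figure 4.

The source design problem is now to find a set of source positions such that the unwanted contributions to the depth image are minimized. As we do not expect to find a closed-form solution to the source design problem in general, we discretize the problem and resort to numerical optimization.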
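The layered-object formula can be sanity-checked by back-projecting synthetic data and comparing against the closed form. The sketch below again uses one lateral coordinate with h = 1, so the Jacobian factor becomes $1/(1 - z_\ell)$ instead of $(1 - z_\ell)^{-2}$; the two Gaussian "wire" layers, their depths, and the four source positions are hypothetical.

```python
import numpy as np

def depth_image_direct(layers, depths, z_t, sources, u):
    """Back-project data of a layered object onto depth z_t (h = 1, one lateral coordinate).

    Data of source s at detector position r: sum over layers of x_l(r + z_l (s - r)), i.e. (3)
    applied to the layered model. Back-projection uses the 1D Jacobian 1 / (1 - z_t).
    """
    img = np.zeros_like(u, dtype=float)
    for s in sources:
        r = (u - z_t * s) / (1.0 - z_t)
        img += sum(x_l(r + z_l * (s - r)) for x_l, z_l in zip(layers, depths))
    return img / (1.0 - z_t)

def depth_image_closed_form(layers, depths, target, sources, u):
    """1D counterpart of the closed form: N copies of the target layer plus shifted, rescaled others."""
    z_t = depths[target]
    img = len(sources) * layers[target](u)
    for l, (x_l, z_l) in enumerate(zip(layers, depths)):
        if l == target:
            continue
        for s in sources:
            img = img + x_l((1 - z_l) / (1 - z_t) * u + (z_l - z_t) / (1 - z_t) * s)
    return img / (1.0 - z_t)

# Two hypothetical layers at depths 0.3 and 0.6, imaged with four sources.
layers = [lambda v: np.exp(-(v - 0.5) ** 2 / 0.05), lambda v: np.exp(-(v + 0.5) ** 2 / 0.05)]
depths = [0.3, 0.6]
sources = np.array([-0.6, -0.2, 0.2, 0.6])
u = np.linspace(-2.0, 2.0, 401)

direct = depth_image_direct(layers, depths, depths[0], sources, u)
closed = depth_image_closed_form(layers, depths, 0, sources, u)
artifact = direct - len(sources) / (1 - depths[0]) * layers[0](u)   # contribution of the other layer
```

The `artifact` array contains one shifted copy of the second layer per source, which is exactly what a source design should try to keep away from the features of interest.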

3. Discrete Problem

3.1. Forward and Back Projection

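We discretize the forward model in (3) and express it as

$$ \mathbf{y} = \mathbf{A}\mathbf{x}, \tag{10} $$

where $\mathbf{y} \in \mathbb{R}^{N N_d}$, $\mathbf{A} \in \mathbb{R}^{N N_d \times n}$, and $\mathbf{x} \in \mathbb{R}^{n}$, with $N_d$ the number of detector pixels and n the number of object voxels. Note that the number of rows of $\mathbf{A}$ is the product of the number of detector pixels and the number of sources; every block of rows corresponds to a particular X-ray source.

Let $\mathbf{A}_z$ and $\mathbf{x}_z$ denote the forward matrix and the slice object at depth z, respectively. In general, $\mathbf{A}_z^\top \mathbf{A}_z$ will not be a multiple of the identity as in the continuous setting (7), so we consider solving $\mathbf{A}_z^\top \mathbf{A}_z \mathbf{x}_z = \mathbf{A}_z^\top \mathbf{y}$. We can view this as an approximation of 3D reconstruction through the Schur complement [10]. To see this, let $\mathbf{A}_{\bar z}$ and $\mathbf{x}_{\bar z}$ represent the forward operator and the object for all pixels except the ones contained in $\mathbf{x}_z$. The linear equations for the forward projection (10) can then be expressed as

$$ \begin{bmatrix}\mathbf{A}_z & \mathbf{A}_{\bar z}\end{bmatrix}\begin{bmatrix}\mathbf{x}_z\\ \mathbf{x}_{\bar z}\end{bmatrix} = \mathbf{y}. $$

Multiplying by $\mathbf{A}^\top$ gives

$$ \begin{bmatrix}\mathbf{A}_z^\top\mathbf{A}_z & \mathbf{A}_z^\top\mathbf{A}_{\bar z}\\ \mathbf{A}_{\bar z}^\top\mathbf{A}_z & \mathbf{A}_{\bar z}^\top\mathbf{A}_{\bar z}\end{bmatrix}\begin{bmatrix}\mathbf{x}_z\\ \mathbf{x}_{\bar z}\end{bmatrix} = \begin{bmatrix}\mathbf{A}_z^\top\mathbf{y}\\ \mathbf{A}_{\bar z}^\top\mathbf{y}\end{bmatrix}. $$

Using the Schur complement (assuming $\mathbf{A}_{\bar z}^\top\mathbf{A}_{\bar z}$ is invertible), one can rewrite this in terms of $\mathbf{x}_z$ alone as

$$ \mathbf{A}_z^\top\mathbf{P}\mathbf{A}_z\,\mathbf{x}_z = \mathbf{A}_z^\top\mathbf{P}\,\mathbf{y}, $$

where

$$ \mathbf{P} = \mathbf{I} - \mathbf{A}_{\bar z}\left(\mathbf{A}_{\bar z}^\top\mathbf{A}_{\bar z}\right)^{-1}\mathbf{A}_{\bar z}^\top. $$

However, it is not computationally feasible to compute the corresponding Schur complement, and we can interpret depth imaging as neglecting the additional terms involving $\mathbf{A}_{\bar z}$ (i.e., replacing $\mathbf{P}$ by $\mathbf{I}$).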
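The Schur-complement interpretation can be verified on a small dense example. In the sketch below the matrix sizes and random entries are hypothetical stand-ins for $\mathbf{A}_z$ and $\mathbf{A}_{\bar z}$; it compares the full normal-equation solve, the reduced Schur-complement solve for the slice, and the depth-imaging shortcut that drops $\mathbf{P}$.

```python
import numpy as np

rng = np.random.default_rng(0)
n_rows, n_z, n_rest = 40, 6, 10                  # hypothetical sizes
A_z = rng.standard_normal((n_rows, n_z))          # columns of the slice at depth z
A_rest = rng.standard_normal((n_rows, n_rest))    # columns of all other pixels
x_true = rng.standard_normal(n_z + n_rest)
A = np.hstack([A_z, A_rest])
y = A @ x_true                                    # noise-free data, cf. (10)

# Full solve of the normal equations for all pixels.
x_full = np.linalg.solve(A.T @ A, A.T @ y)

# Schur-complement solve for the slice only: P projects out the range of A_rest.
P = np.eye(n_rows) - A_rest @ np.linalg.solve(A_rest.T @ A_rest, A_rest.T)
x_schur = np.linalg.solve(A_z.T @ P @ A_z, A_z.T @ P @ y)

# Depth imaging replaces P by I and thereby ignores the other pixels.
x_depth = np.linalg.solve(A_z.T @ A_z, A_z.T @ y)
```

The reduced solve reproduces the slice part of the full solution exactly, while the depth-imaging solve does not, because the cross term $\mathbf{A}_z^\top\mathbf{A}_{\bar z}\mathbf{x}_{\bar z}$ is absorbed into the slice.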

3.2. Algebraic Reconstruction

Although the operator $A_z$ is unitary (up to a scaling factor, cf. (7)), the corresponding discrete operator $\mathbf{A}_z$ generally is not. Moreover, it is desirable to stabilize the reconstruction with additional regularization. We therefore pose depth imaging as a regularized least-squares problem:

$$ \min_{\mathbf{x}_z}\ \tfrac{1}{2}\|\mathbf{A}_z\mathbf{x}_z - \mathbf{y}\|_2^2 + \tfrac{\lambda}{2}\|\mathbf{x}_z\|_2^2, $$

where $\lambda > 0$ controls the trade-off between the two terms. Although the problem is not severely ill-posed, we use $\lambda > 0$ as a safety factor to ensure the uniqueness of the reconstructed depth image. This optimization problem can be solved using iterative algorithms such as LSQR [11]. Other regularization techniques, such as total variation or sparsity-promoting regularization, introduce different penalty functions that promote desired properties in the reconstructed image.
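The regularized problem maps directly onto SciPy's LSQR, which minimizes $\|\mathbf{A}\mathbf{x} - \mathbf{b}\|_2^2 + \text{damp}^2\|\mathbf{x}\|_2^2$; setting `damp` to $\sqrt{\lambda}$ therefore gives the same minimizer as the objective above. The sparse matrix, data, and $\lambda$ below are hypothetical, and the dense closed-form Tikhonov solution is used only as a reference.

```python
import numpy as np
from scipy.sparse import csr_matrix
from scipy.sparse.linalg import lsqr

rng = np.random.default_rng(1)
# Hypothetical sparse slice matrix A_z (200 rows, 50 pixels, about 10% nonzeros) and data y.
dense = rng.standard_normal((200, 50)) * (rng.random((200, 50)) < 0.1)
A_z = csr_matrix(dense)
y = rng.standard_normal(200)
lam = 1e-2

# damp = sqrt(lam) matches (1/2)||A_z x - y||^2 + (lam/2)||x||^2 (the common factor 1/2 is irrelevant).
x_lsqr = lsqr(A_z, y, damp=np.sqrt(lam), atol=1e-12, btol=1e-12, iter_lim=10_000)[0]

# Reference: closed-form Tikhonov solution (A^T A + lam I)^{-1} A^T y.
x_ref = np.linalg.solve(dense.T @ dense + lam * np.eye(50), dense.T @ y)
```

LSQR only needs products with $\mathbf{A}_z$ and $\mathbf{A}_z^\top$, so in practice the matrix could be replaced by a forward/back-projector pair.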

4. Efficient Source Design for K Sources

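Let $\mathbf{w} \in \{0, 1\}^N$ be the design parameter, where the components of $\mathbf{w}$ determine the influence of each source in the reconstruction process. The problem of finding the optimal design parameter $\mathbf{w}$ for a fixed number of sources K in the reconstruction of a specific depth plane can be formulated as the following bi-level optimization problem:

$$ \min_{\mathbf{w},\,\gamma}\ \left\|\gamma\,\hat{\mathbf{x}}_z(\mathbf{w}) - \mathbf{x}_z^\star\right\|_2^2 \quad \text{subject to} \quad \mathbf{w} \in \{0,1\}^N,\ \ \#\mathbf{w} = K, \tag{15} $$

where $\mathbf{x}_z^\star$ represents the ground truth and the hyper-parameter $\gamma$ serves as a scaling factor, ensuring that $\gamma\hat{\mathbf{x}}_z$ is on the same scale as $\mathbf{x}_z^\star$. Also, $\hat{\mathbf{x}}_z(\mathbf{w})$ is obtained by solving

$$ \hat{\mathbf{x}}_z(\mathbf{w}) = \arg\min_{\mathbf{x}_z}\ \tfrac{1}{2}(\mathbf{A}_z\mathbf{x}_z - \mathbf{y})^\top\mathbf{W}(\mathbf{w})(\mathbf{A}_z\mathbf{x}_z - \mathbf{y}) + \tfrac{\lambda}{2}\|\mathbf{x}_z\|_2^2, \tag{16} $$

where $\mathbf{y}$ denotes the data corresponding to $\mathbf{x}^\star$ and $\mathbf{W}(\mathbf{w})$ is a linear operator that applies the weight $w_i$ to the measurements of the i-th source, i.e., $\mathbf{W}(\mathbf{w}) = \operatorname{diag}(\mathbf{w}) \otimes \mathbf{I}_{N_d}$ with $N_d$ the number of detector pixels. The optimization problem (15) minimizes the Euclidean distance between the reconstructed image (scaled by $\gamma$) and the ground truth, subject to constraints on $\mathbf{w}$ that enforce its binary nature with exactly K nonzero elements.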

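The binary constraint on $\mathbf{w}$ in (15) can be relaxed using bound constraints [12], and the bi-level optimization problem then becomes

$$ \min_{\mathbf{w}}\ f(\mathbf{w}) = \left\|\gamma^\star(\mathbf{w})\,\hat{\mathbf{x}}_z(\mathbf{w}) - \mathbf{x}_z^\star\right\|_2^2 \quad \text{subject to} \quad \mathbf{0} \le \mathbf{w} \le \mathbf{1},\ \ \mathbf{1}^\top\mathbf{w} = K, \tag{17} $$

where the optimal value of the scalar parameter $\gamma$ in (15) has been substituted, i.e.,

$$ \gamma^\star(\mathbf{w}) = \frac{\hat{\mathbf{x}}_z(\mathbf{w})^\top\mathbf{x}_z^\star}{\|\hat{\mathbf{x}}_z(\mathbf{w})\|_2^2}. $$

Mathematically speaking, this problem seeks a $\mathbf{w}$ that minimizes the Euclidean distance between $\gamma^\star\hat{\mathbf{x}}_z(\mathbf{w})$ and $\mathbf{x}_z^\star$ and lies on the intersection of the hyperplane $\mathbf{1}^\top\mathbf{w} = K$ and the hyperbox $[0, 1]^N$. Note that this relaxation is not guaranteed to produce binary solutions. In such cases, we convert the solution to binary form by setting its K largest elements to one and the remaining elements to zero.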
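Two small ingredients of the relaxed problem are easy to state in code: the optimal scale $\gamma^\star$, which is the least-squares fit of $\hat{\mathbf{x}}_z$ to $\mathbf{x}_z^\star$, and the top-K rounding of a non-binary solution. The sketch below is a minimal illustration on hypothetical toy vectors; the function names are our own.

```python
import numpy as np

def optimal_scale(x_hat, x_star):
    """gamma* = <x_hat, x_star> / ||x_hat||^2, the minimizer of ||gamma x_hat - x_star||^2 over gamma."""
    return float(x_hat @ x_star) / float(x_hat @ x_hat)

def binarize_top_k(w, K):
    """Set the K largest entries of a relaxed design to one and the remaining entries to zero."""
    w_bin = np.zeros_like(w)
    w_bin[np.argsort(w)[-K:]] = 1.0
    return w_bin

x_star = np.array([1.0, 2.0, 3.0])
x_hat = 0.5 * x_star                                         # reconstruction off by a global factor
print(optimal_scale(x_hat, x_star))                          # 2.0
print(binarize_top_k(np.array([0.9, 0.1, 0.6, 0.4]), K=2))   # [1. 0. 1. 0.]
```

Because $\gamma^\star$ absorbs any global intensity mismatch, the design is judged only on the shape of the reconstruction, not on its overall scale.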

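In practice, the ground truth $\mathbf{x}_z^\star$ is unknown; however, a set of calibration data consisting of representative images may be available. These can then be used to compute an efficient source design that minimizes the empirical Bayes risk. More precisely, for a given set of calibration data $\{\mathbf{x}_z^{\star(i)}\}_{i=1}^{M}$ with corresponding measurements $\{\mathbf{y}^{(i)}\}_{i=1}^{M}$, we can generalize (17) to

$$ \min_{\mathbf{w}}\ \frac{1}{M}\sum_{i=1}^{M}\left\|\gamma_i^\star\,\hat{\mathbf{x}}_z^{(i)}(\mathbf{w}) - \mathbf{x}_z^{\star(i)}\right\|_2^2 \quad \text{subject to} \quad \mathbf{0} \le \mathbf{w} \le \mathbf{1},\ \ \mathbf{1}^\top\mathbf{w} = K, $$

where $\hat{\mathbf{x}}_z^{(i)}(\mathbf{w})$ is the solution of (16) for the i-th calibration measurements $\mathbf{y}^{(i)}$ and $\gamma_i^\star$ is the corresponding optimal scale.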
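A minimal sketch of the empirical Bayes risk follows, using the closed-form solution of the weighted lower-level problem (16). It assumes, hypothetically, that the rows of $\mathbf{A}_z$ and of each $\mathbf{y}^{(i)}$ are grouped source by source with `n_det` rows per source, so that $\mathbf{W}(\mathbf{w})$ is a diagonal matrix with each $w_i$ repeated `n_det` times; the toy sizes and data are illustrative only.

```python
import numpy as np

def lower_level(A_z, y, w, lam, n_det):
    """Closed-form solution of (16): (A^T W A + lam I)^{-1} A^T W y.

    Assumed layout: rows grouped per source (n_det rows each), so W(w) = diag(repeat(w, n_det)).
    """
    d = np.repeat(w, n_det)
    B = A_z.T @ (d[:, None] * A_z) + lam * np.eye(A_z.shape[1])
    return np.linalg.solve(B, A_z.T @ (d * y))

def empirical_bayes_risk(w, A_z, Y, X_star, lam, n_det):
    """Average over calibration pairs of ||gamma_i* x_hat_i(w) - x_i*||^2 with gamma_i* optimal."""
    total = 0.0
    for y, x_star in zip(Y, X_star):
        x_hat = lower_level(A_z, y, w, lam, n_det)
        gamma = (x_hat @ x_star) / (x_hat @ x_hat)
        total += np.sum((gamma * x_hat - x_star) ** 2)
    return total / len(Y)

# Toy calibration set: N = 4 sources, 6 detector pixels each, 5 slice pixels, M = 3 images.
rng = np.random.default_rng(2)
N, n_det, n = 4, 6, 5
A_z = rng.standard_normal((N * n_det, n))
X_star = rng.standard_normal((3, n))
Y = X_star @ A_z.T                        # noise-free calibration measurements, one row per image
risk_all = empirical_bayes_risk(np.ones(N), A_z, Y, X_star, lam=1e-8, n_det=n_det)
risk_two = empirical_bayes_risk(np.array([1.0, 1.0, 0.0, 0.0]), A_z, Y, X_star, lam=1e-8, n_det=n_det)
```

In this toy the data contain only the slice itself, so the risk is close to zero; with real laminographic data the other layers enter $\mathbf{y}^{(i)}$ and the choice of $\mathbf{w}$ starts to matter.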

5. Implementation

To implement the Efficient K-Source Design (EKSD) method, note that the nonlinear optimization problem (17) must be solved numerically: the algorithm starts from an initial guess and refines the solution iteratively. This section discusses the solution of (17) using a projected gradient method.

5.1. Projected Gradient Method

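In general, the projected gradient (PG) method solves

$$ \min_{\mathbf{w} \in \mathcal{C}}\ f(\mathbf{w}), $$

where $f$ is a smooth function and $\mathcal{C}$ is a nonempty closed convex set. The projection onto $\mathcal{C}$ is the mapping $P_{\mathcal{C}}$ defined by

$$ P_{\mathcal{C}}(\mathbf{v}) = \arg\min_{\mathbf{w} \in \mathcal{C}}\ \|\mathbf{w} - \mathbf{v}\|_2^2. $$

The PG algorithm is defined by

$$ \mathbf{w}^{k+1} = P_{\mathcal{C}}\left(\mathbf{w}^k - t\,\nabla f(\mathbf{w}^k)\right), \tag{21} $$

where $t \in (0, 1/L]$ is the step size, L is the Lipschitz constant of $\nabla f$, and $\nabla f(\mathbf{w}^k)$ is the gradient of f at $\mathbf{w}^k$. The stopping criterion is that the Euclidean norm of the difference between consecutive design parameters, $\|\mathbf{w}^{k+1} - \mathbf{w}^k\|_2$, is less than or equal to a specified threshold $\epsilon$.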
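The iteration (21) with the stated stopping rule fits in a few lines. The sketch below is generic: `grad` and `project` are caller-supplied callables, and the toy problem (a quadratic on a box, with a hypothetical target `c`) is chosen only because its solution, `clip(c, 0, 1)`, is known in closed form.

```python
import numpy as np

def projected_gradient(grad, project, w0, step, eps=1e-10, max_iter=10_000):
    """Projected gradient iteration (21): w <- P_C(w - t * grad f(w)).

    Stops when ||w_{k+1} - w_k||_2 <= eps.
    """
    w = np.asarray(w0, dtype=float)
    for _ in range(max_iter):
        w_next = project(w - step * grad(w))
        if np.linalg.norm(w_next - w) <= eps:
            return w_next
        w = w_next
    return w

# Toy problem (hypothetical): f(w) = 0.5 * ||w - c||^2 on the box [0, 1]^3.
# grad f(w) = w - c has Lipschitz constant L = 1; the minimizer is clip(c, 0, 1).
c = np.array([1.5, -0.3, 0.4])
w_opt = projected_gradient(grad=lambda w: w - c,
                           project=lambda v: np.clip(v, 0.0, 1.0),
                           w0=np.zeros(3), step=0.5)   # t = 0.5 <= 1/L
```

For EKSD, `grad` would be the gradient of the (empirical) upper-level objective and `project` the projection onto $\{\mathbf{0} \le \mathbf{w} \le \mathbf{1},\ \mathbf{1}^\top\mathbf{w} = K\}$.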

5.2. Gradient of the Objective Function

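To solve (17) with the PG algorithm, we need the gradient of f. It reads

$$ \nabla f(\mathbf{w}) = 2\gamma^\star\,\mathbf{R}^\top\mathbf{A}_z\mathbf{B}^{-1}\left(\gamma^\star\hat{\mathbf{x}}_z - \mathbf{x}_z^\star\right), \tag{22} $$

where $\mathbf{B} = \mathbf{A}_z^\top\mathbf{W}(\mathbf{w})\mathbf{A}_z + \lambda\mathbf{I}$, and $\mathbf{R} = [\boldsymbol{\rho}_1, \ldots, \boldsymbol{\rho}_N]$ with $\boldsymbol{\rho}_i = \mathbf{E}_i(\mathbf{y} - \mathbf{A}_z\hat{\mathbf{x}}_z)$, where $\mathbf{E}_i = \partial\mathbf{W}/\partial w_i$ is the diagonal matrix that selects the rows of the i-th source (see the derivation in Appendix A.4).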
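The gradient formula is easy to get wrong by a transpose or a sign, so a finite-difference check is a useful companion. The sketch below implements $f$ and the gradient (22) for a single training pair under the same hypothetical row layout as before (rows grouped per source, `n_det` rows each) and compares against central differences on a small random problem with noisy data.

```python
import numpy as np

def objective_and_grad(w, A_z, y, x_star, lam, n_det):
    """Upper-level objective f(w) = ||gamma* x_hat(w) - x_star||^2 and its gradient (22).

    Assumed layout: rows of A_z and y grouped per source (n_det rows each), so
    W(w) = diag(repeat(w, n_det)) and E_i keeps only the rows of source i.
    """
    d = np.repeat(w, n_det)
    B = A_z.T @ (d[:, None] * A_z) + lam * np.eye(A_z.shape[1])
    x_hat = np.linalg.solve(B, A_z.T @ (d * y))       # lower-level solution (16)
    gamma = (x_hat @ x_star) / (x_hat @ x_hat)        # optimal scale gamma*
    err = gamma * x_hat - x_star
    resid = y - A_z @ x_hat
    R = np.zeros((y.size, w.size))
    for i in range(w.size):                          # column i of R is E_i (y - A_z x_hat)
        R[i * n_det:(i + 1) * n_det, i] = resid[i * n_det:(i + 1) * n_det]
    grad = 2.0 * gamma * (R.T @ (A_z @ np.linalg.solve(B, err)))
    return err @ err, grad

# Check against central finite differences on a small random problem (noisy data).
rng = np.random.default_rng(3)
N, n_det, n, lam = 4, 6, 5, 1e-2
A_z = rng.standard_normal((N * n_det, n))
x_star = rng.standard_normal(n)
y = A_z @ x_star + 0.1 * rng.standard_normal(N * n_det)
w = rng.uniform(0.2, 1.0, N)

f0, g_analytic = objective_and_grad(w, A_z, y, x_star, lam, n_det)
h = 1e-6
g_fd = np.array([(objective_and_grad(w + h * e, A_z, y, x_star, lam, n_det)[0]
                  - objective_and_grad(w - h * e, A_z, y, x_star, lam, n_det)[0]) / (2 * h)
                 for e in np.eye(N)])
```

The check passes without differentiating $\gamma^\star$ explicitly, consistent with the argument that its contribution vanishes at the optimal scale. For the empirical Bayes risk, the per-sample gradients are simply averaged.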

5.3. Projection Onto the Simplex Constraint

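Here, we investigate the projection operator $P_{\mathcal{C}}$ in (21), which maps each point onto the set $\mathcal{C} = \{\mathbf{w} \in [0, 1]^N : \mathbf{1}^\top\mathbf{w} = K\}$ (a capped simplex). It reads (see the derivation in Appendix A.5)

$$ P_{\mathcal{C}}(\mathbf{v}) = \min\left(\max\left(\mathbf{v} - \mu^\star\mathbf{1},\ \mathbf{0}\right),\ \mathbf{1}\right), $$

where the minimum and maximum are taken elementwise and $\mu^\star$ is the root of the one-dimensional equation

$$ \sum_{i=1}^{N}\min\left(\max(v_i - \mu, 0),\ 1\right) = K, $$

which is the optimality condition of the dual problem and can be solved using Newton's method.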
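The projection formula can be sketched as follows. Note that this version finds $\mu^\star$ by bisection rather than Newton's method: the left-hand side of the equation is monotone and piecewise linear, so bisection is a simple, robust substitute for illustration. The bracket endpoints follow from the fact that the sum equals N for $\mu \le \min_i v_i - 1$ and 0 for $\mu \ge \max_i v_i$.

```python
import numpy as np

def project_capped_simplex(v, K, tol=1e-12, max_iter=200):
    """Euclidean projection onto {w in [0, 1]^N : sum(w) = K}, for 0 <= K <= N.

    Uses w = clip(v - mu, 0, 1) and finds mu by bisection on the non-increasing function
    phi(mu) = sum(clip(v - mu, 0, 1)) - K (bisection is used here instead of Newton's method).
    """
    v = np.asarray(v, dtype=float)
    lo, hi = v.min() - 1.0, v.max()      # phi(lo) = N - K >= 0 and phi(hi) = -K <= 0
    for _ in range(max_iter):
        mu = 0.5 * (lo + hi)
        if np.clip(v - mu, 0.0, 1.0).sum() > K:
            lo = mu
        else:
            hi = mu
        if hi - lo < tol:
            break
    return np.clip(v - 0.5 * (lo + hi), 0.0, 1.0)

w = project_capped_simplex([0.2, 0.3, 0.9], K=1)               # no bound active: shift by mu = 0.4/3
w2 = project_capped_simplex([0.9, 0.1, 0.6, 0.4, 1.7], K=2)    # some entries hit the bounds
```

For K = 1 and inputs already in (0, 1), the result coincides with the ordinary projection onto the probability simplex.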

6. Numerical Experiments

Here, we present results obtained with the proposed method (EKSD). We evaluate its performance using the following criteria:

i: Normalized mean square error (NMSE), the error between the reconstructed image $\hat{\mathbf{x}}_z$ and the ground truth $\mathbf{x}_z^\star$, normalized by the energy of the ground truth:

$$ \mathrm{NMSE} = \frac{\|\hat{\mathbf{x}}_z - \mathbf{x}_z^\star\|_2^2}{\|\mathbf{x}_z^\star\|_2^2}. $$

ii: Structural similarity index measure (SSIM): unlike NMSE, SSIM takes into account the structural information and perceived quality of the images. It compares three aspects of the images (luminance, contrast, and structure) and computes a similarity index of at most 1, where 1 indicates perfect similarity [13].
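For reference, the NMSE criterion is a one-liner; the sketch below implements the definition above on a hypothetical toy pair. For SSIM, a standard implementation such as `skimage.metrics.structural_similarity` in scikit-image could be used instead of re-implementing it.

```python
import numpy as np

def nmse(x_rec, x_true):
    """Normalized mean square error ||x_rec - x_true||^2 / ||x_true||^2."""
    x_rec = np.asarray(x_rec, dtype=float)
    x_true = np.asarray(x_true, dtype=float)
    return np.sum((x_rec - x_true) ** 2) / np.sum(x_true ** 2)

value = nmse([1.0, 2.0, 2.0], [1.0, 2.0, 3.0])   # 1 / 14
```

Because it is normalized by the ground-truth energy, NMSE is comparable across calibration and validation images of different total intensity.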

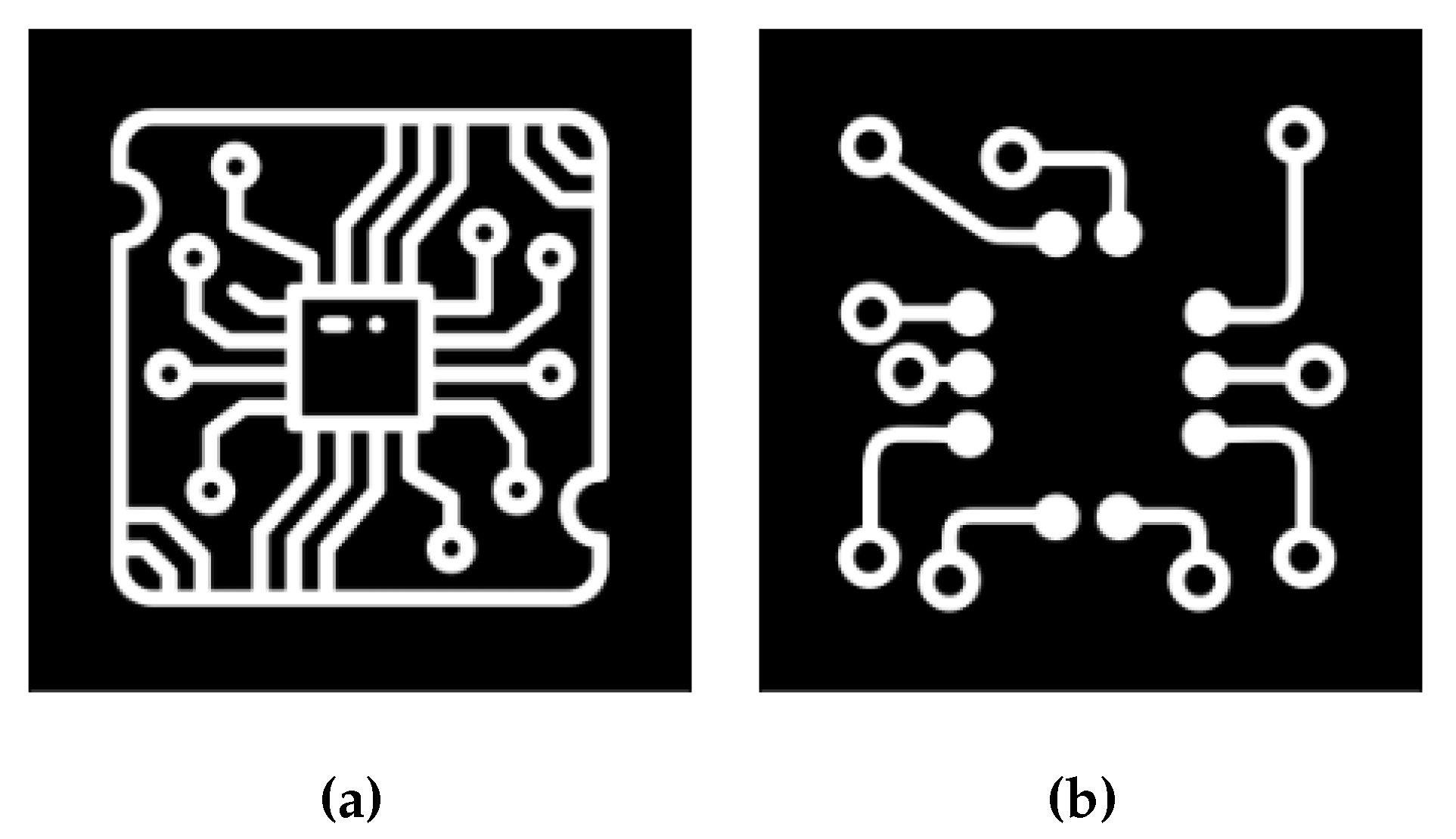

6.1. Data Set

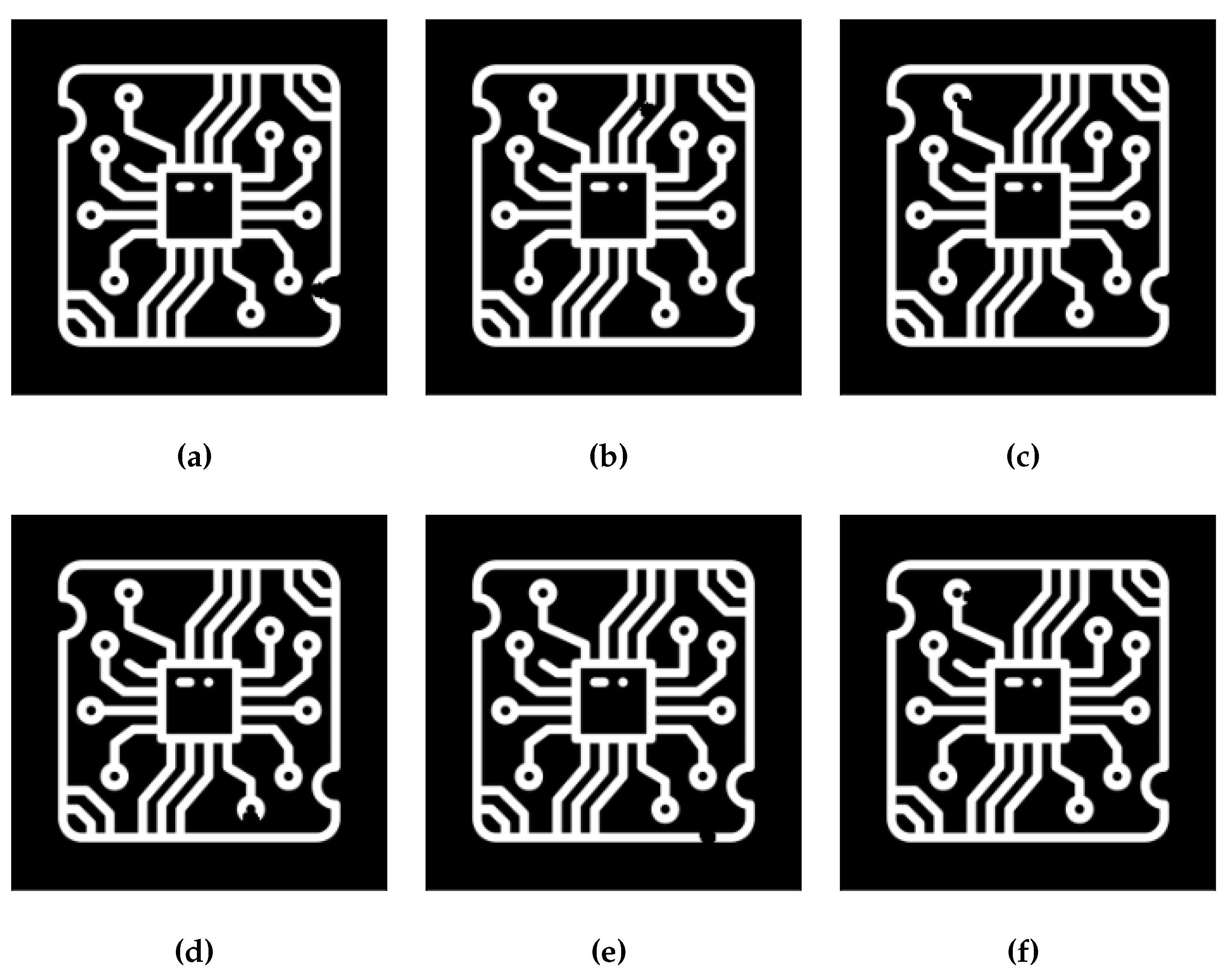

As a benchmark example, we consider a two-layer PCB of size pixels. It consists of two distinct wire designs (illustrated in Figure 5), each with a thickness of 25 pixels, separated by a gap of 20 pixels. The layer of interest is the one depicted in Figure 5a.

We create 200 defect shapes at various locations, forming a data set of defective wire designs for the bottom layer. A few samples from this data set are depicted in Figure 6. We use half of this data set for source design (calibration) and the other half for validation.

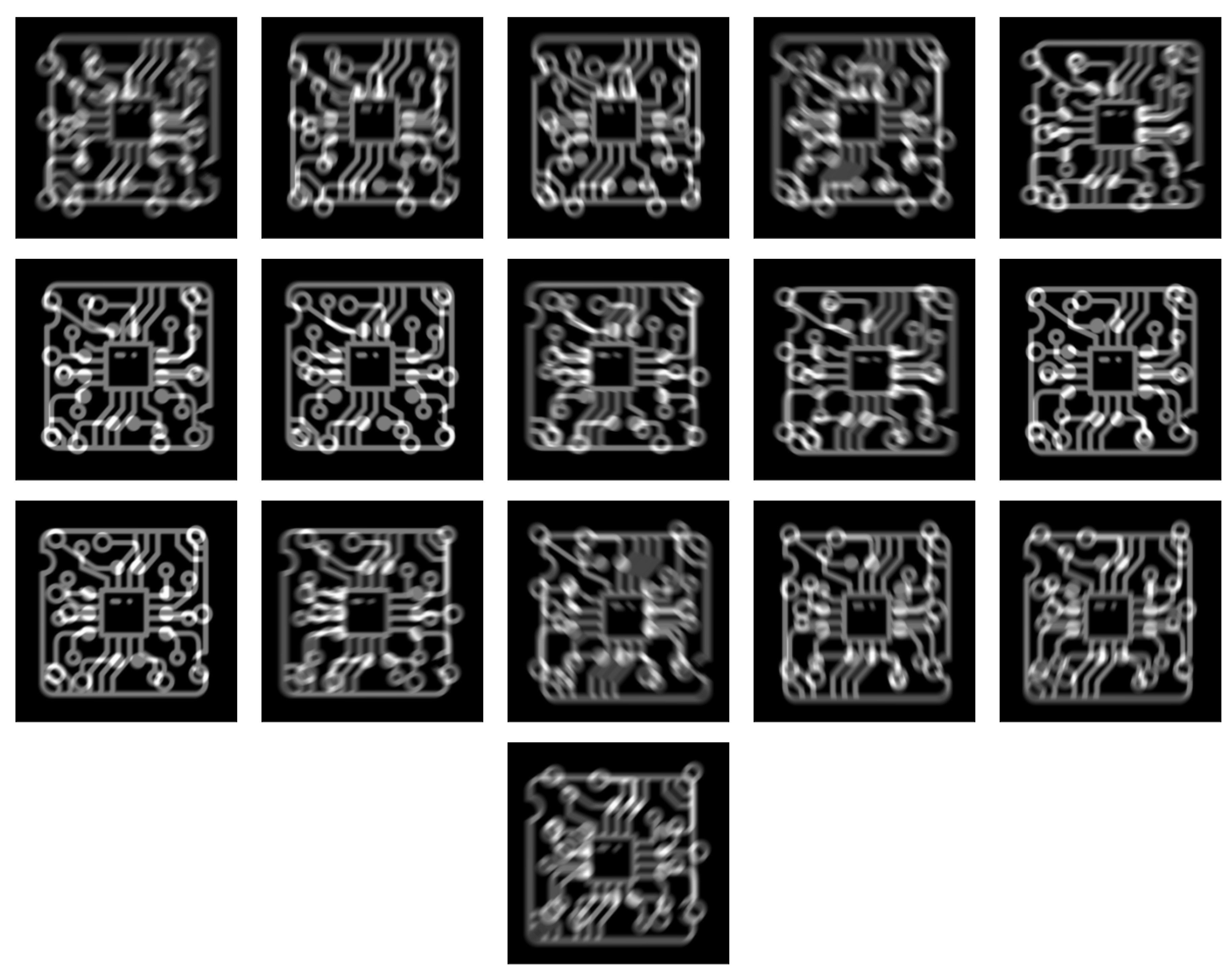

6.2. Implementation Details

We define the X-ray setup as shown in

Figure 2. The distance

h, between the center of the array and the detector, is equal to

. Here,

L represents the width of the phantom. Moreover, we consider

(discretized uniformly with 250 × 250 pixels) and

(discretized uniformly with

source positions), where

D is the fixed array length. The projection data are generated using the ASTRA toolbox [14]. For illustration, we present the projection images from all sources in the source array in Figure 7. It is challenging to inspect individual layers of the phantom with a single projection, as the overlapping layers hinder a proper investigation.

We choose a small regularization parameter λ to ensure the stability and uniqueness of the solution without significantly biasing the reconstruction. We carefully tuned the step size t to be sufficiently small, reducing the risk of overshooting during optimization. Additionally, we manually adjusted the stopping threshold ϵ so that the algorithm stops at approximately consistent convergence points. The design vector is initialized either with a vector of ones or with i.i.d. uniform random values in [0, 1]. We refer to these methods as EKSD(K) and EKSDR(K), respectively. In some experiments, we compare the results to completely random designs of K out of N source positions; we refer to this as the RANDOM method.
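The two initializations and the RANDOM baseline can be written down directly. The sketch below is illustrative: the function names and seeds are hypothetical, and NumPy's `uniform` draws from the half-open interval [0, 1). Neither starting point needs to satisfy $\mathbf{1}^\top\mathbf{w} = K$, since the projection in (21) maps every iterate back onto the constraint set.

```python
import numpy as np

def initial_designs(N, seed=None):
    """Starting points: all ones (EKSD) and i.i.d. uniform values in [0, 1) (EKSDR)."""
    rng = np.random.default_rng(seed)
    return np.ones(N), rng.uniform(0.0, 1.0, size=N)

def random_design(N, K, seed=None):
    """RANDOM baseline: a binary design with K of the N positions drawn without replacement."""
    rng = np.random.default_rng(seed)
    w = np.zeros(N)
    w[rng.choice(N, size=K, replace=False)] = 1.0
    return w

w_eksd0, w_eksdr0 = initial_designs(16, seed=0)
w_random = random_design(16, 4, seed=1)
```

Averaging the RANDOM scores over many seeds gives the mean and standard deviation reported for randomized methods.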

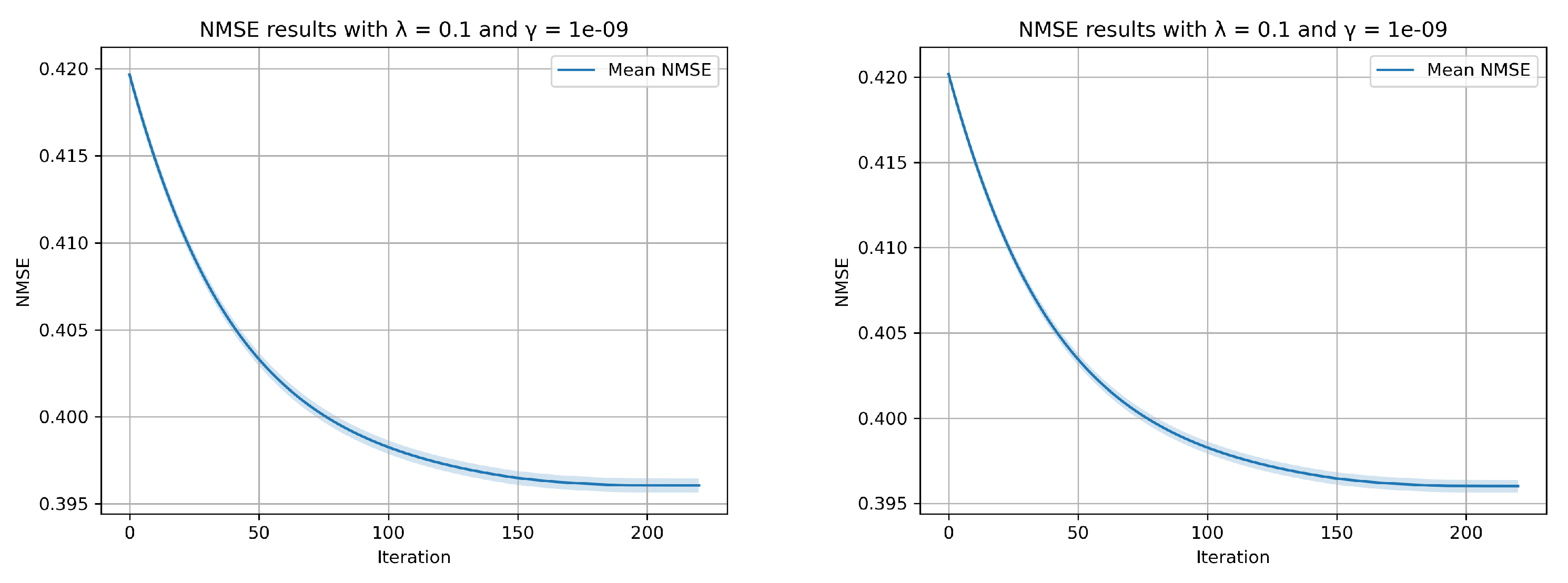

6.3. Convergence

We evaluate the convergence behavior of the EKSD algorithm across various iterations. Here, we let

,

,

. In

Figure 8, we depict the NMSE at each iteration, evaluated on the calibration (left) and validation (right) data. This figure shows that the design does not overfit the calibration data.

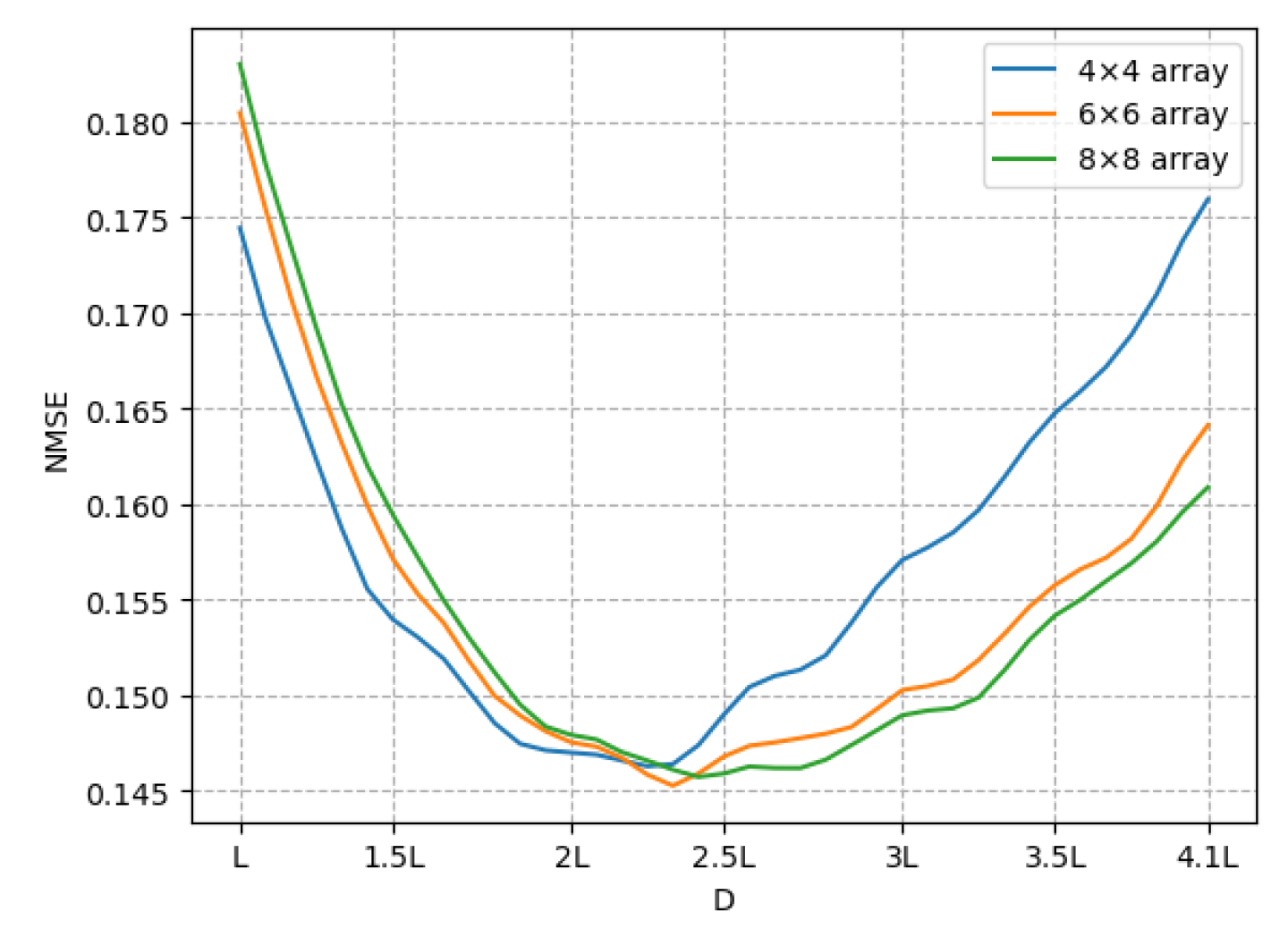

6.4. Exploring the Effects of Array Length

Here, we study the influence of the array length

D on the reconstruction error for

. To this end, we compare the average NMSE over the validation data across various array lengths, as depicted in Figure 9. The higher errors observed for both small and large array lengths indicate that certain source positions within the array contribute artifacts to the depth image, which increases the NMSE.

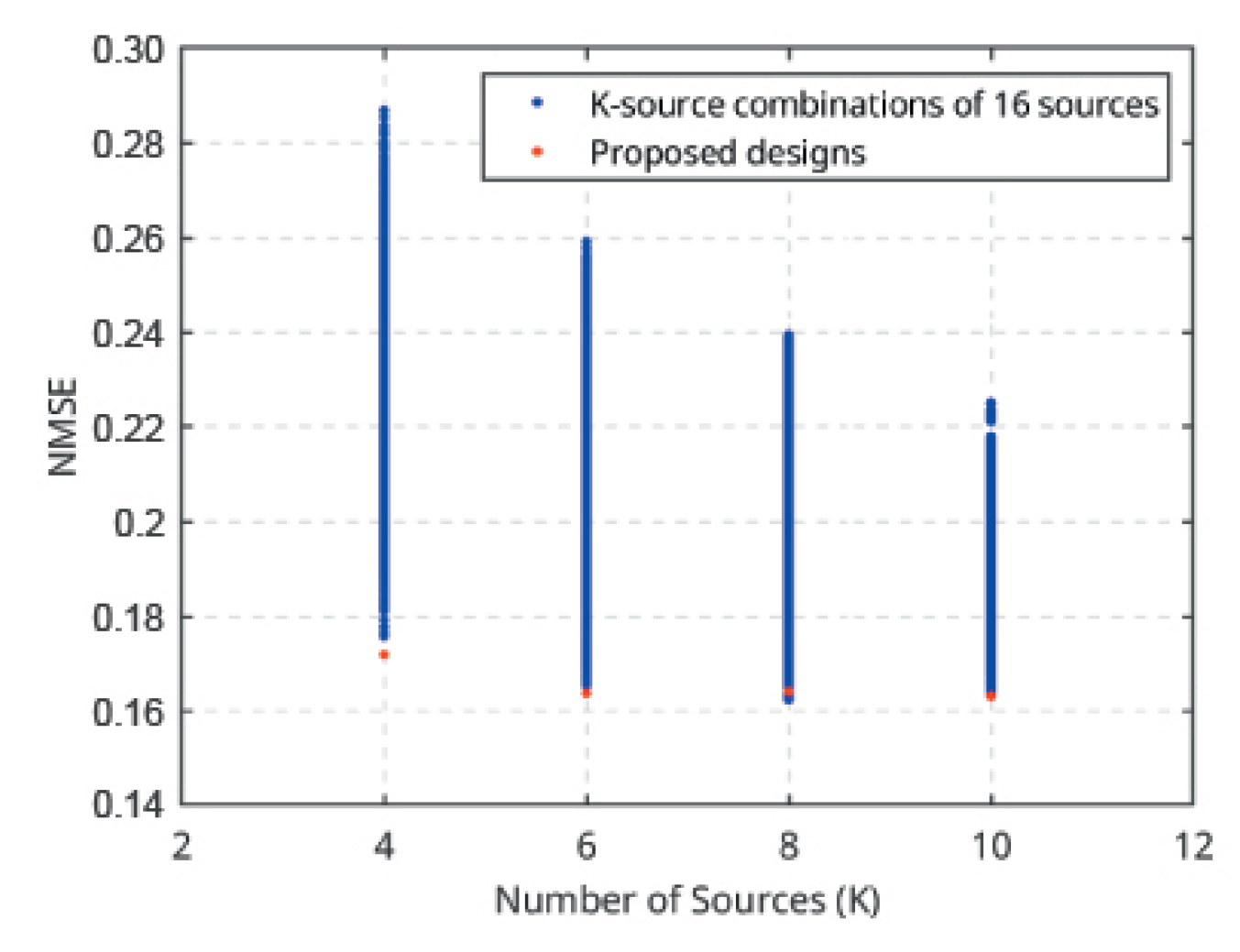

6.5. Exhaustive Search

We carried out an exhaustive search to assess the performance of EKSD. We consider N = 16 source positions with K ∈ {4, 6, 8, 10}. Figure 10 shows the average NMSE over the validation data for all possible K-source designs for each K, as well as the average NMSE achieved by the EKSD design. We see that the designs found by EKSD are nearly optimal.
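An exhaustive search over binary designs simply enumerates all $\binom{N}{K}$ subsets. The sketch below takes the risk as a caller-supplied callable (for example, the average validation NMSE), so no particular reconstruction code is assumed. The toy risk and target design in the usage lines are hypothetical; the subset counts for N = 16 show why this is feasible here but not for larger arrays.

```python
import itertools
import math
import numpy as np

def exhaustive_search(risk, N, K):
    """Evaluate risk(w) for every binary design with exactly K of N sources.

    Returns the best design, its risk, and the risks of all C(N, K) designs.
    """
    best_w, best_risk, risks = None, np.inf, []
    for idx in itertools.combinations(range(N), K):
        w = np.zeros(N)
        w[list(idx)] = 1.0
        r = risk(w)
        risks.append(r)
        if r < best_risk:
            best_w, best_risk = w, r
    return best_w, best_risk, np.array(risks)

# Search-space sizes for N = 16 and the values of K considered above.
sizes = {k: math.comb(16, k) for k in (4, 6, 8, 10)}   # {4: 1820, 6: 8008, 8: 12870, 10: 8008}

# Toy risk (hypothetical): squared distance to a fixed target design.
target = np.array([1.0, 0.0, 1.0, 0.0, 0.0, 1.0])
best_w, best_risk, all_risks = exhaustive_search(lambda w: np.sum((w - target) ** 2), N=6, K=3)
```

The array of all risks is what a plot such as Figure 10 summarizes as an error range per K.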

6.6. Source Design 1

We consider three configurations with N ∈ {16, 36, 64} at a fixed array length D, and we report the NMSE and SSIM results in Table 1. First, we show results for depth images reconstructed using all source positions within the array. Subsequently, we derive efficient source designs with K = 10 using EKSD and EKSDR and present the corresponding NMSE and SSIM results. For each value of N, we observe that EKSD and EKSDR outperform the design that uses all source positions. This improvement can be attributed to the reduction of artifacts in the images, which is likely due to the selective nature of the measurements in the EKSD and EKSDR approaches. For comparison, we also show the average NMSE and SSIM of random designs with K = 10 sources; these are significantly worse than those of all other methods.

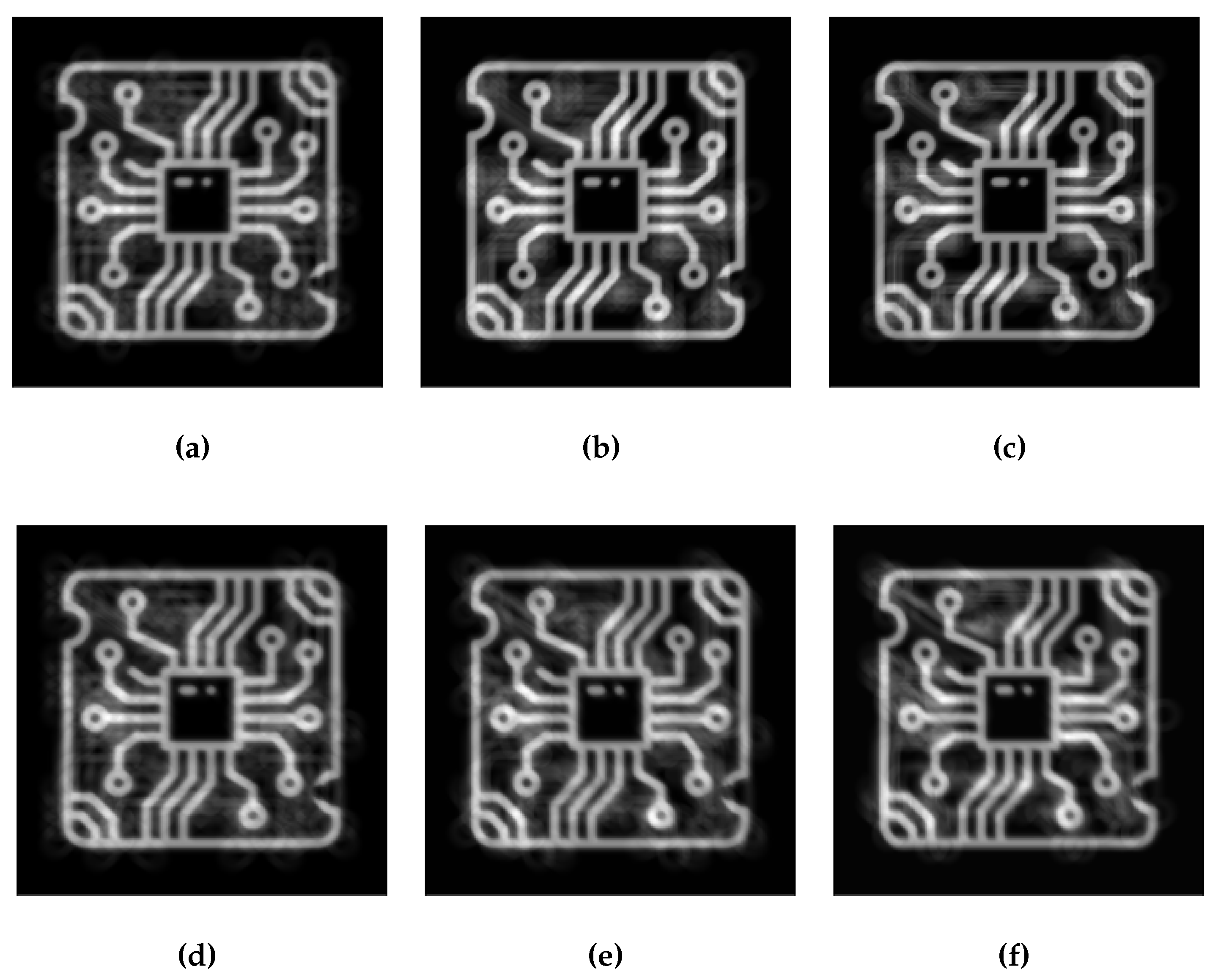

Figure 11 shows the corresponding depth images of the bottom layer, reconstructed with the source positions suggested by EKSD. As shown in Section 2.3, the artifacts in these images are caused by the superposition of features from different layers, which results from the lack of rotational acquisition in laminography. The designs proposed by EKSD clearly reduce these artifacts compared to random designs, facilitating accurate defect detection in the depth images.

6.7. Source Design 2

We repeat the previous experiment with the array length chosen optimally according to Figure 9, for different values of N. The results are summarized in Table 2. As the full designs are already near-optimal for this array length, optimizing the design with fewer source positions yields a less dramatic improvement. However, the results for the larger values of N suggest that increasing the number of positions while keeping the array length fixed, thereby reducing the distance between sources, may lead to a non-binary solution of the relaxed problem. Converting this solution into a binary vector with K = 10 nonzero elements then yields a less optimal outcome. The proposed source designs and the corresponding depth images are shown in Figure 12.

7. Discussion

In this research, we explored experimental design for a laminographic setup. Several challenges may arise when applying the proposed method in real-world settings: (i) The objective function is non-convex, so the optimization may become trapped in local minima. Diversifying the initial conditions increases the likelihood of finding better local minima or the global minimum. (ii) Our method optimizes the positions of sources within a specified grid. However, this grid may not be the most efficient set of candidate source positions. An alternative approach could optimize over a continuous domain of positions, leveraging the closed-form formulation for depth image reconstruction presented in this paper. (iii) Our investigation of the experimental design problem assumes noise-free measurements, which may not hold in real-world scenarios with noisy data. This can be addressed by incorporating noise directly into the optimization process to enhance real-world applicability.

8. Conclusion

In real-world scenarios, imaging a particular depth of a planar object can yield valuable information with less effort than a full 3D reconstruction. In a depth image, the information corresponding to the chosen layer appears sharp, while the details of other layers appear blurred. We argue that source design plays an important role in mitigating these unwanted artifacts, and we posed the experimental design problem as a bi-level optimization problem, which we solved with a gradient-based (projected gradient) method. The suggested framework can be tailored to a particular application by incorporating different regularization terms in the lower-level optimization problem or by adopting an alternative loss function in the upper-level problem that better captures the characteristics of the images of interest.

Appendix A

Appendix A.1. Forward Operator for the 3D Object

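Figure 2 presents the 2D view of the acquisition setup for a planar object $x(\mathbf{u}, z)$, where $\mathbf{u}$ is the lateral coordinate and z the depth coordinate. The line integral of x from $(\mathbf{s}, h)$ to $(\mathbf{r}, 0)$ for a fixed h can be expressed as follows (parametrized by depth, as in (3)):

$$ y(\mathbf{s},\mathbf{r}) = \int_{\mathbb{R}} x\left(\mathbf{r} - \tfrac{z}{h}(\mathbf{r} - \mathbf{s}),\, z\right)\mathrm{d}z, \tag{A1} $$

where $\mathbf{s}$ and $\mathbf{r}$ denote the source and detector positions, respectively, and the integral may be taken over $\mathbb{R}$ because x vanishes outside $0 \le z \le h$. Next, we substitute x by its representation in the Fourier domain, $\mathcal{F}_3 x$:

$$ x(\mathbf{u}, z) = \int_{\mathbb{R}^3}\mathcal{F}_3 x(\mathbf{k}, k_z)\, e^{2\pi i(\mathbf{k}\cdot\mathbf{u} + k_z z)}\,\mathrm{d}\mathbf{k}\,\mathrm{d}k_z. \tag{A2} $$

Using (A2) in (A1) yields

$$ y(\mathbf{s},\mathbf{r}) = \int_{\mathbb{R}}\int_{\mathbb{R}^3}\mathcal{F}_3 x(\mathbf{k}, k_z)\, e^{2\pi i\,\mathbf{k}\cdot\mathbf{r}}\, e^{2\pi i\left(k_z - \frac{1}{h}\mathbf{k}\cdot(\mathbf{r}-\mathbf{s})\right)z}\,\mathrm{d}\mathbf{k}\,\mathrm{d}k_z\,\mathrm{d}z. $$

Using $\int_{\mathbb{R}} e^{2\pi i a z}\,\mathrm{d}z = \delta(a)$, it follows that

$$ y(\mathbf{s},\mathbf{r}) = \int_{\mathbb{R}^3}\mathcal{F}_3 x(\mathbf{k}, k_z)\, e^{2\pi i\,\mathbf{k}\cdot\mathbf{r}}\,\delta\left(k_z - \tfrac{1}{h}\mathbf{k}\cdot(\mathbf{r}-\mathbf{s})\right)\mathrm{d}\mathbf{k}\,\mathrm{d}k_z, $$

and then

$$ y(\mathbf{s},\mathbf{r}) = \int_{\mathbb{R}^2}\mathcal{F}_3 x\left(\mathbf{k}, \tfrac{1}{h}\mathbf{k}\cdot(\mathbf{r}-\mathbf{s})\right) e^{2\pi i\,\mathbf{k}\cdot\mathbf{r}}\,\mathrm{d}\mathbf{k}. $$

Using $g(\mathbf{r},\mathbf{d}) = y(\mathbf{r}-\mathbf{d},\mathbf{r})$, i.e., $\mathbf{d} = \mathbf{r} - \mathbf{s}$, this can be written as

$$ g(\mathbf{r},\mathbf{d}) = \int_{\mathbb{R}^2}\mathcal{F}_3 x\left(\mathbf{k}, \tfrac{1}{h}\mathbf{k}\cdot\mathbf{d}\right) e^{2\pi i\,\mathbf{k}\cdot\mathbf{r}}\,\mathrm{d}\mathbf{k}, $$

which is an inverse 2D Fourier transform in $\mathbf{r}$. Hence, we can link the 3D Fourier transform of x to the 2D Fourier transform of the data as

$$ \mathcal{F}_2 g(\mathbf{k},\mathbf{d}) = \mathcal{F}_3 x\left(\mathbf{k}, \tfrac{1}{h}\mathbf{k}\cdot\mathbf{d}\right). $$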

Appendix A.2. Forward Operator for a 2D Slice on the Depth z

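We introduce $x_z$ as a slice of the object x at depth z, i.e.,

$$ x_z(\mathbf{u}) = x(\mathbf{u}, z). $$

We then define the forward operator $A_z$ as projecting a 2D object at depth z onto the detector at height 0 via

$$ (A_z x_z)(\mathbf{s},\mathbf{r}) = x_z\left(\mathbf{r} + \tfrac{z}{h}(\mathbf{s}-\mathbf{r})\right), $$

or equivalently as

$$ (A_z x_z)(\mathbf{s},\mathbf{r}) = \int_{\mathbb{R}^2} x_z(\mathbf{u}')\,\delta\left(\mathbf{u}' - \mathbf{r} - \tfrac{z}{h}(\mathbf{s}-\mathbf{r})\right)\mathrm{d}\mathbf{u}'. \tag{A9} $$

The adjoint operator $A_z^*$ can be obtained from the definition $\langle A_z x_z, y\rangle = \langle x_z, A_z^* y\rangle$. First, we have

$$ \langle A_z x_z, y\rangle = \int_{\mathcal{S}}\int_{\mathbb{R}^2} x_z\left(\mathbf{r} + \tfrac{z}{h}(\mathbf{s}-\mathbf{r})\right) y(\mathbf{s},\mathbf{r})\,\mathrm{d}\mathbf{r}\,\mathrm{d}\mathbf{s}. $$

Now let $\mathbf{u} = \left(1 - \tfrac{z}{h}\right)\mathbf{r} + \tfrac{z}{h}\mathbf{s}$; then $\mathbf{r} = \left(\mathbf{u} - \tfrac{z}{h}\mathbf{s}\right)/\left(1 - \tfrac{z}{h}\right)$ and $\mathrm{d}\mathbf{r} = \left(1 - \tfrac{z}{h}\right)^{-2}\mathrm{d}\mathbf{u}$, and it follows that

$$ \langle A_z x_z, y\rangle = \int_{\mathbb{R}^2} x_z(\mathbf{u})\left(1 - \tfrac{z}{h}\right)^{-2}\int_{\mathcal{S}} y\left(\mathbf{s}, \frac{\mathbf{u} - \frac{z}{h}\mathbf{s}}{1 - \frac{z}{h}}\right)\mathrm{d}\mathbf{s}\,\mathrm{d}\mathbf{u}. $$

We thus find that $A_z^*$ is defined as

$$ (A_z^* y)(\mathbf{u}) = \left(1 - \tfrac{z}{h}\right)^{-2}\int_{\mathcal{S}} y\left(\mathbf{s}, \frac{\mathbf{u} - \frac{z}{h}\mathbf{s}}{1 - \frac{z}{h}}\right)\mathrm{d}\mathbf{s}. \tag{A11} $$

By using (A9) in (A11), the composition $A_z^* A_z$ can be written as

$$ (A_z^* A_z x_z)(\mathbf{u}) = \left(1 - \tfrac{z}{h}\right)^{-2}\int_{\mathcal{S}} x_z(\mathbf{u})\,\mathrm{d}\mathbf{s} = \frac{|\mathcal{S}|}{(1 - z/h)^2}\,x_z(\mathbf{u}). $$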
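The adjoint identity $\langle A_z x_z, y\rangle = \langle x_z, A_z^* y\rangle$ can be checked by quadrature. The sketch below uses the 2D view of Figure 2 (one lateral coordinate), where the Jacobian of the substitution is $1/(1 - z/h)$ rather than $(1 - z/h)^{-2}$. The slice image, the data function, the depth ratio, and the grids are hypothetical; both sides use the same source nodes, and the smooth, rapidly decaying integrands make the rectangle rule very accurate.

```python
import numpy as np

alpha = 0.3                                   # z / h (hypothetical)
s = np.linspace(-1.0, 1.0, 201)               # source positions (bounded set S)
r = np.linspace(-12.0, 12.0, 4001)            # detector coordinate grid
u = np.linspace(-12.0, 12.0, 4001)            # lateral object grid
ds, dr, du = s[1] - s[0], r[1] - r[0], u[1] - u[0]

x_z = lambda t: np.exp(-t ** 2)                              # hypothetical slice image
y = lambda ss, rr: np.exp(-(rr - 0.5 * ss) ** 2 / 2.0)       # hypothetical data y(s, r)

# <A_z x_z, y>: integrate x_z(r + alpha (s - r)) y(s, r) over s and r.
S, R = np.meshgrid(s, r, indexing="ij")
lhs = np.sum(x_z(R + alpha * (S - R)) * y(S, R)) * ds * dr

# <x_z, A_z^* y>: back-project with r = (u - alpha s) / (1 - alpha) and the 1D Jacobian 1 / (1 - alpha).
S2, U = np.meshgrid(s, u, indexing="ij")
adj = np.sum(y(S2, (U - alpha * S2) / (1.0 - alpha)), axis=0) * ds / (1.0 - alpha)
rhs = np.sum(x_z(u) * adj) * du
```

Dropping the Jacobian factor makes the two inner products disagree by exactly $1/(1 - z/h)$, which is a quick way to catch that mistake in an implementation.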

Appendix A.3. Back Projection for a Depth Image

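We use $y = Ax$ with (A1) to express the depth image $A_z^* A x$ using (A11):

$$ (A_z^* A x)(\mathbf{u}) = \left(1 - \tfrac{z}{h}\right)^{-2}\int_{\mathcal{S}}\int_0^h x\left(\mathbf{r}_{\mathbf{u}} + \tfrac{z'}{h}(\mathbf{s} - \mathbf{r}_{\mathbf{u}}),\, z'\right)\mathrm{d}z'\,\mathrm{d}\mathbf{s}, \qquad \mathbf{r}_{\mathbf{u}} = \frac{\mathbf{u} - \frac{z}{h}\mathbf{s}}{1 - \frac{z}{h}}. $$

Now let h = 1. Since $\mathbf{r}_{\mathbf{u}} + z'(\mathbf{s} - \mathbf{r}_{\mathbf{u}}) = \frac{1 - z'}{1 - z}\mathbf{u} + \frac{z' - z}{1 - z}\mathbf{s}$, this can be rewritten as

$$ (A_z^* A x)(\mathbf{u}) = (1 - z)^{-2}\int_{\mathcal{S}}\int_0^1 x\left(\frac{1 - z'}{1 - z}\,\mathbf{u} + \frac{z' - z}{1 - z}\,\mathbf{s},\, z'\right)\mathrm{d}z'\,\mathrm{d}\mathbf{s}. $$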

Appendix A.4. Gradient Calculation of the Objective Function

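To find the gradient of f with respect to $\mathbf{w}$, the chain rule gives

$$ \nabla f(\mathbf{w}) = \left(\frac{\partial\hat{\mathbf{x}}_z}{\partial\mathbf{w}}\right)^{\!\top}\frac{\partial f}{\partial\hat{\mathbf{x}}_z} + \left(\frac{\partial\gamma^\star}{\partial\mathbf{w}}\right)^{\!\top}\frac{\partial f}{\partial\gamma}\bigg|_{\gamma=\gamma^\star}, $$

where the second term is equal to zero because $\gamma^\star$ is the minimizer of $\|\gamma\hat{\mathbf{x}}_z - \mathbf{x}_z^\star\|_2^2$ over $\gamma$, so that $\partial f/\partial\gamma = 0$ at $\gamma^\star$. The first term can then be written as

$$ \nabla f(\mathbf{w}) = 2\gamma^\star\left(\frac{\partial\hat{\mathbf{x}}_z}{\partial\mathbf{w}}\right)^{\!\top}\left(\gamma^\star\hat{\mathbf{x}}_z - \mathbf{x}_z^\star\right). $$

To obtain an expression for the derivative of $\hat{\mathbf{x}}_z$ with respect to $\mathbf{w}$, we exploit the fact that the lower-level optimization problem (16) has a closed-form solution:

$$ \hat{\mathbf{x}}_z(\mathbf{w}) = \left(\mathbf{A}_z^\top\mathbf{W}(\mathbf{w})\mathbf{A}_z + \lambda\mathbf{I}\right)^{-1}\mathbf{A}_z^\top\mathbf{W}(\mathbf{w})\,\mathbf{y}. \tag{A17} $$

We define $\mathbf{B}(\mathbf{w}) = \mathbf{A}_z^\top\mathbf{W}(\mathbf{w})\mathbf{A}_z + \lambda\mathbf{I}$; using $\partial\mathbf{B}^{-1}/\partial w_i = -\mathbf{B}^{-1}(\partial\mathbf{B}/\partial w_i)\mathbf{B}^{-1}$, the derivative follows as

$$ \frac{\partial\hat{\mathbf{x}}_z}{\partial w_i} = -\mathbf{B}^{-1}\frac{\partial\mathbf{B}}{\partial w_i}\mathbf{B}^{-1}\mathbf{A}_z^\top\mathbf{W}\mathbf{y} + \mathbf{B}^{-1}\mathbf{A}_z^\top\frac{\partial\mathbf{W}}{\partial w_i}\mathbf{y}. \tag{A18} $$

Recall that $\mathbf{W}(\mathbf{w}) = \sum_{i=1}^{N} w_i\mathbf{E}_i$, where $\mathbf{E}_i$ is the diagonal matrix selecting the rows of the i-th source; therefore,

$$ \frac{\partial\mathbf{W}}{\partial w_i} = \mathbf{E}_i, \qquad \frac{\partial\mathbf{B}}{\partial w_i} = \mathbf{A}_z^\top\mathbf{E}_i\mathbf{A}_z, $$

and (A18) can be rewritten as

$$ \frac{\partial\hat{\mathbf{x}}_z}{\partial w_i} = -\mathbf{B}^{-1}\mathbf{A}_z^\top\mathbf{E}_i\mathbf{A}_z\hat{\mathbf{x}}_z + \mathbf{B}^{-1}\mathbf{A}_z^\top\mathbf{E}_i\mathbf{y}, $$

where $\mathbf{B}^{-1}\mathbf{A}_z^\top\mathbf{W}\mathbf{y}$ has been replaced by $\hat{\mathbf{x}}_z$ from (A17). Denoting the residual $\mathbf{y} - \mathbf{A}_z\hat{\mathbf{x}}_z$ by $\boldsymbol{\rho}$, this becomes

$$ \frac{\partial\hat{\mathbf{x}}_z}{\partial w_i} = \mathbf{B}^{-1}\mathbf{A}_z^\top\mathbf{E}_i\boldsymbol{\rho}. $$

For simplicity, we denote $\mathbf{E}_i\boldsymbol{\rho}$ by $\boldsymbol{\rho}_i$, and it follows that

$$ \frac{\partial\hat{\mathbf{x}}_z}{\partial\mathbf{w}} = \mathbf{B}^{-1}\mathbf{A}_z^\top\mathbf{R}, \tag{A22} $$

where $\mathbf{R} = [\boldsymbol{\rho}_1, \ldots, \boldsymbol{\rho}_N]$. Substituting (A22) into the first term above and using the symmetry of $\mathbf{B}$ yields (22):

$$ \nabla f(\mathbf{w}) = 2\gamma^\star\,\mathbf{R}^\top\mathbf{A}_z\mathbf{B}^{-1}\left(\gamma^\star\hat{\mathbf{x}}_z - \mathbf{x}_z^\star\right). $$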

Appendix A.5. Projection Onto the Simplex Constraint

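Let $P_{\mathcal{C}}$ be the operator that maps each point onto the set $\mathcal{C} = \{\mathbf{w} \in \mathbb{R}^N : \mathbf{0} \le \mathbf{w} \le \mathbf{1},\ \mathbf{1}^\top\mathbf{w} = K\}$. The orthogonal projection of $\mathbf{v}$ is the unique solution of

$$ \min_{\mathbf{w}}\ \tfrac{1}{2}\|\mathbf{w} - \mathbf{v}\|_2^2 \quad \text{subject to} \quad \mathbf{0} \le \mathbf{w} \le \mathbf{1},\ \ \mathbf{1}^\top\mathbf{w} = K. \tag{A24} $$

To solve this optimization problem via its dual, we introduce the Lagrangian [15] of the equality constraint,

$$ \mathcal{L}(\mathbf{w}, \mu) = \tfrac{1}{2}\|\mathbf{w} - \mathbf{v}\|_2^2 + \mu\left(\mathbf{1}^\top\mathbf{w} - K\right), $$

where $\mu$ is a Lagrange multiplier and the bound constraints are kept explicit. The dual problem can now be written as

$$ \max_{\mu \in \mathbb{R}}\ q(\mu), \qquad q(\mu) = \min_{\mathbf{0} \le \mathbf{w} \le \mathbf{1}}\ \mathcal{L}(\mathbf{w}, \mu), \tag{A26} $$

where $q$ is the dual function, i.e., the minimum value of the Lagrangian over $\mathbf{w}$. By strong duality, the optimal values of (A24) and (A26) are equal if there exists a dual variable $\mu$ that makes (A26) a tight lower bound for (A24); this holds here because (A24) is a convex problem with linear constraints and a nonempty feasible set (for $0 \le K \le N$). The solution of the dual problem (A26) can be written as [16]

$$ \mathbf{w}^\star = \min\left(\max\left(\mathbf{v} - \mu^\star\mathbf{1},\ \mathbf{0}\right),\ \mathbf{1}\right), $$

where $\mu^\star$ is obtained by solving $q'(\mu) = 0$. Let us define the function

$$ \phi(\mu) = \sum_{i=1}^{N}\min\left(\max(v_i - \mu, 0),\ 1\right) - K, $$

which equals $q'(\mu)$, is non-increasing, and whose root can be found using Newton's method. Its derivative (where it exists) is given by

$$ \phi'(\mu) = -\#\{i : 0 < v_i - \mu < 1\}. $$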
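Newton's method on the piecewise-linear function $\phi$ can stall where the slope is zero, so the sketch below adds a safeguard that is not part of the description above: it keeps a bracket $[\mathrm{lo}, \mathrm{hi}]$ with $\phi(\mathrm{lo}) \ge 0 \ge \phi(\mathrm{hi})$ and falls back to a bisection step whenever the Newton step is undefined or leaves the bracket. The function name and test vectors are hypothetical.

```python
import numpy as np

def mu_newton(v, K, tol=1e-12, max_iter=100):
    """Root of phi(mu) = sum(clip(v - mu, 0, 1)) - K via safeguarded Newton steps.

    phi is piecewise linear and non-increasing with slope -#{i : 0 < v_i - mu < 1}.
    Safeguard (our addition): keep a bracket and bisect when the slope is zero or the
    Newton step leaves the bracket.
    """
    v = np.asarray(v, dtype=float)
    phi = lambda m: np.clip(v - m, 0.0, 1.0).sum() - K
    lo, hi = v.min() - 1.0, v.max()          # phi(lo) = N - K >= 0, phi(hi) = -K <= 0
    mu = 0.5 * (lo + hi)
    for _ in range(max_iter):
        val = phi(mu)
        if abs(val) <= tol:
            break
        if val > 0:
            lo = mu
        else:
            hi = mu
        slope = -np.count_nonzero((v - mu > 0) & (v - mu < 1))
        mu_new = mu - val / slope if slope != 0 else None
        if mu_new is None or not (lo < mu_new < hi):
            mu_new = 0.5 * (lo + hi)         # bisection fallback
        mu = mu_new
    return mu

v = np.array([0.9, 0.1, 0.6, 0.4, 1.7])
w = np.clip(v - mu_newton(v, 2), 0.0, 1.0)   # projection onto the capped simplex with K = 2
```

Within a linear piece of $\phi$ a single Newton step lands exactly on the root, so the method typically terminates after only a few iterations.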

References

- Medici, V.; Martarelli, M.; Paone, N.; Pandarese, G.; van de Kamp, W.; Verhoef, B.; Sipsas, K.; Broechler, R.; Besada, L.B.; Alexopoulos, K.; Nikolakis, N. Integration of Non-Destructive Inspection (NDI) systems for Zero-Defect Manufacturing in the Industry 4.0 era. 2023 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), 2023, pp. 439–444.

- Wang, B.; Zhong, S.; Lee, T.L.; Fancey, K.S.; Mi, J. Non-destructive testing and evaluation of composite materials/structures: A state-of-the-art review. Advances in Mechanical Engineering 2020, 12, 1687814020913761.

- Schimleck, L.; Dahlen, J.; Apiolaza, L.A.; Downes, G.; Emms, G.; Evans, R.; Moore, J.; Pâques, L.; Van den Bulcke, J.; Wang, X. Non-Destructive Evaluation Techniques and What They Tell Us about Wood Property Variation. Forests 2019, 10.

- Hassler, U.; Oeckl, S.; Bauscher, I.; et al. Inline CT methods for industrial production. International Symposium on NDT in Aerospace, 2008, pp. 123–131.

- Gondrom, S.; Zhou, J.; Maisl, M.; Reiter, H.; Kröning, M.; Arnold, W. X-ray computed laminography: an approach of computed tomography for applications with limited access. Nuclear Engineering and Design 1999, 190, 141–147.

- O’Brien, N.; Mavrogordato, M.; Boardman, R.; Sinclair, I.; Hawker, S.; Blumensath, T. Comparing cone beam laminographic system trajectories for composite NDT. Case Studies in Nondestructive Testing and Evaluation 2016, 6, 56–61.

- Haber, E.; Horesh, L.; Tenorio, L. Numerical methods for experimental design of large-scale linear ill-posed inverse problems. Inverse Problems 2008, 24, 055012.

- Haber, E.; Magnant, Z.; Lucero, C.; Tenorio, L. Numerical methods for A-optimal designs with a sparsity constraint for ill-posed inverse problems. Computational Optimization and Applications 2012, 52, 293–314.

- Ruthotto, L.; Chung, J.; Chung, M. Optimal Experimental Design for Inverse Problems with State Constraints. SIAM Journal on Scientific Computing 2018, 40, B1080–B1100.

- Zhang, F. The Schur Complement and Its Applications; Vol. 4, Springer Science & Business Media, 2006.

- Paige, C.C.; Saunders, M.A. LSQR: An algorithm for sparse linear equations and sparse least squares. ACM Transactions on Mathematical Software (TOMS) 1982, 8, 43–71.

- Nemhauser, G.; Wolsey, L. Linear Programming. In Integer and Combinatorial Optimization; John Wiley & Sons, Ltd, 1988; chapter I.2, pp. 27–49. https://onlinelibrary.wiley.com/doi/pdf/10.1002/9781118627372.ch2

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing 2004, 13, 600–612.

- van Aarle, W.; Palenstijn, W.J.; Cant, J.; Janssens, E.; Bleichrodt, F.; Dabravolski, A.; Beenhouwer, J.D.; Batenburg, K.J.; Sijbers, J. Fast and flexible X-ray tomography using the ASTRA toolbox. Opt. Express 2016, 24, 25129–25147.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press, 2004.

- Kadu, A.; van Leeuwen, T.; Batenburg, K.J. CoShaRP: a convex program for single-shot tomographic shape sensing. Inverse Problems 2021, 37, 105005.

Figure 1.

A laminographic setup designed for scanning flat objects, featuring a flexible source that moves along a raster trajectory. Note that dashed arrows specify the source trajectory.


Figure 2.

2D view of the acquisition setup. The source is located at lateral position $\mathbf{s}$ and height h; the detector is parameterized, without loss of generality, by $\mathbf{r}$. The object is characterized by x as a function of the lateral coordinate $\mathbf{u}$ and the vertical coordinate z. The ray from the source to the detector is shown by the dashed line.


Figure 3.

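Schematic depiction of the missing wedge in the Fourier-domain sampling of $\mathcal{F}_3 x$ by the measurements $\mathcal{F}_2 g$. The green squares represent $\mathcal{F}_3 x$ and the black circles represent $\mathcal{F}_2 g$, which are limited in $k_z$ by the range of $\mathbf{d}$. The limited aperture in $\mathbf{d}$ gives rise to the missing wedge.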


Figure 4.

Example of the spatial sampling patterns when imaging the middle layer (at ) of an object with layers and regularly spaced sources in at . The red circle indicates the point at which we evaluate the output.


Figure 5.

The wire designs with a size of are located at the bottom and top layers within the PCB, respectively.


Figure 6.

Various defects in different locations on the wire design.


Figure 7.

Different views from sources in a array of sources.


Figure 8.

Convergence of the EKSD algorithm applied to the calibration data set. The blue line indicates the mean values, while the black dots indicate the variance of the NMSE at each iteration.


Figure 9.

The reconstruction error for various ranges of the area occupied by the source.


Figure 10.

Range of errors over all designs with K source positions within an array of 16 positions, for K = 4, 6, 8, and 10.


Figure 11.

Depth images in a, b, c are reconstructed using 10 proposed source positions out of a total of 16, 36, and 64 positions, respectively. Conversely, the depth images in d, e, f are derived from 10 random source positions out of the same total numbers of positions.


Figure 12.

Depth images shown in a, b, c are reconstructed by 10 proposed source positions shown in d, e, f, respectively.


Table 1.

NMSE and SSIM results with the occupied array length of . For randomized methods, we present the average and standard deviation over 50 runs.


| Source design | NMSE (N) | | | SSIM (N) | | |
|---|---|---|---|---|---|---|
| ALL | | | | | | |
| EKSD | | | | | | |
| EKSDR | | | | | | |
| RANDOM | | | | | | |

Table 2.

NMSE and SSIM results with the occupied array length of . For randomized methods, we present the average and standard deviation over 50 runs.


| Source design | NMSE (N) | | | SSIM (N) | | |
|---|---|---|---|---|---|---|
| ALL | | | | | | |
| EKSD | | | | | | |
| EKSDR | | | | | | |
| RANDOM | | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).