Submitted:

15 April 2024

Posted:

16 April 2024


Abstract

Keywords:

1. Introduction

- Order Insensitivity: The self-attention mechanism in Transformers treats its inputs as an unordered set, which is problematic for time series prediction, where order matters. Positional encodings partially address this, but they may not fully capture temporal information. Some Transformer-based models tackle the problem through architectural enhancements; e.g., Autoformer [9] uses series decomposition blocks that improve the model's ability to learn intricate temporal patterns [9,13,14].

- Complexity Trade-offs: The attention mechanism in Transformers has a high computational cost for long sequences because its complexity is quadratic, $O(L^2)$, in the sequence length $L$. Sparse-attention variants such as Informer [8] reduce this to $O(L \log L)$ with the ProbSparse technique. Other models reduce the complexity to $O(L)$, e.g., FEDformer [15], which uses a Fourier-enhanced structure, and Pyraformer [16], which incorporates a pyramidal attention module with inter-scale and intra-scale connections to achieve linear complexity. These reductions in complexity come at the cost of some information loss in time series prediction.

- Noise Susceptibility: Transformers with many parameters are prone to overfitting noise, a significant issue for volatile data such as financial time series, where the actual signal is often subtle [13].

- Long-Term Dependency Challenge: Despite their theoretical potential, Transformers often struggle with the very long sequences typical of time series forecasting, largely because of training complexities and gradient dilution. For example, PatchTST [11] addresses this by splitting a time series into smaller segments that serve as input patches. However, this may cause fragmentation at the patch boundaries of the input data.

- Interpretation Challenge: The complex architecture of Transformers, with stacked self-attention and feed-forward layers, makes their decision-making hard to understand, a notable limitation in time series forecasting, where a clear rationale is crucial. LTSF-Linear [13] attempts to address this by using a simple linear network instead of a complex architecture. However, such a model may be unable to exploit the intricate multivariate relationships in the data.
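
The order-insensitivity point can be checked directly. The following minimal Python sketch implements single-head scaled dot-product attention with identity query, key, and value projections (a simplifying assumption; real Transformers learn these matrices) and no positional encoding. Shuffling the time steps merely shuffles the outputs in the same way, so attention alone carries no information about the original order.

```python
import math

def self_attention(x):
    """Single-head scaled dot-product self-attention, identity Q/K/V, no positional encoding.

    x is a list of L vectors (lists of floats); returns L output vectors.
    """
    d = len(x[0])
    out = []
    for q in x:
        # Score this query against every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of the value vectors (values = inputs here).
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out

series = [[0.1, 1.0], [0.5, -0.2], [0.9, 0.3], [-0.4, 0.7]]
perm = [2, 0, 3, 1]
shuffled = [series[i] for i in perm]

y = self_attention(series)
y_shuffled = self_attention(shuffled)

# Output k of the shuffled run equals output perm[k] of the original run.
same = all(
    all(abs(a - b) < 1e-12 for a, b in zip(y_shuffled[k], y[perm[k]]))
    for k in range(len(perm))
)
print(same)  # True
```

Adding a positional encoding to each input vector before attention breaks this symmetry, which is why Transformers rely on it for sequence order.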
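
To make the complexity figures concrete, the sketch below counts query-key dot products in one attention layer. The ProbSparse count is an illustrative assumption modeled on Informer's description: only the top $u = c \lceil \ln L \rceil$ queries are scored against all $L$ keys, with $c = 5$ chosen here for illustration, and the cost of the sampling step that selects those queries is ignored.

```python
import math

def full_attention_pairs(L):
    """Dot products for vanilla self-attention: every query scores every key."""
    return L * L

def probsparse_pairs(L, c=5):
    """Illustrative ProbSparse count: u = c * ceil(ln L) active queries, each scoring all L keys.

    The query-selection (sampling) step is not counted here.
    """
    u = c * math.ceil(math.log(L))
    return u * L

for L in (96, 336, 720, 2880):
    full, sparse = full_attention_pairs(L), probsparse_pairs(L)
    print(f"L={L:5d}  full={full:9d}  probsparse={sparse:7d}  ratio={full / sparse:6.1f}x")
```

The ratio grows roughly like $L / \ln L$, so the savings matter most for the long look-back windows used in long-term forecasting.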
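
Autoformer's series decomposition block separates a smoothed trend from a seasonal remainder. A minimal sketch of a moving-average decomposition in that spirit follows; padding by repeating the first and last values keeps the trend the same length as the input. The kernel size of 25 is an illustrative default, and the example series is synthetic.

```python
def series_decomp(x, kernel_size=25):
    """Return (trend, seasonal) for a univariate series using a centred moving average.

    kernel_size is assumed to be odd so the window is symmetric around each point.
    """
    pad = (kernel_size - 1) // 2
    # Repeat the edge values so every point has a full window.
    padded = [x[0]] * pad + list(x) + [x[-1]] * pad
    trend = [sum(padded[i:i + kernel_size]) / kernel_size for i in range(len(x))]
    seasonal = [xi - ti for xi, ti in zip(x, trend)]
    return trend, seasonal

# Synthetic series: linear trend plus a period-3 pattern that sums to zero.
pattern = [1.0, -1.0, 0.0]
x = [0.1 * t + pattern[t % 3] for t in range(30)]
trend, seasonal = series_decomp(x, kernel_size=3)
# Away from the edges the 3-point average cancels the pattern exactly,
# so trend[t] is close to 0.1 * t and seasonal[t] recovers the pattern.
```

Near the edges the repeated padding values distort the average slightly, which is one reason the kernel size is usually kept small relative to the input length.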
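
PatchTST's patching step can be sketched as follows. Following the PatchTST paper, the last value is repeated `stride` times before slicing, which yields $N = \lfloor (L - P)/S \rfloor + 2$ patches for look-back length $L$, patch length $P$, and stride $S$; with $P = 16$ and $S = 8$, a look-back of $L = 512$ gives the 64 patches of PatchTST/64. Any pattern longer than one patch is necessarily split across several patches, which is one form of the boundary fragmentation mentioned above.

```python
def make_patches(x, patch_len=16, stride=8):
    """Split a look-back window into overlapping patches in the style of PatchTST.

    `stride` copies of the last value are appended first, so the final patch
    still covers the end of the window.
    """
    padded = list(x) + [x[-1]] * stride
    starts = range(0, len(padded) - patch_len + 1, stride)
    return [padded[s:s + patch_len] for s in starts]

def num_patches(L, patch_len=16, stride=8):
    """Closed-form patch count: floor((L - P) / S) + 2."""
    return (L - patch_len) // stride + 2

for L in (336, 512):
    patches = make_patches(list(range(L)))
    print(L, len(patches), num_patches(L))
```

Each patch then becomes one input token, so the attention cost scales with the number of patches rather than with the raw look-back length.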
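
For comparison, the linear baseline of [13] maps the look-back window straight to the forecast horizon with a single weight matrix along the time axis, applied to each channel separately. The sketch below shows that forward pass; the weights are hypothetical placeholders (in practice they are learned), and the example uses weights that simply repeat the last observation. Because every channel is processed independently with shared weights, nothing in this layer can model cross-channel relationships, which is the limitation noted above.

```python
def linear_forecast(history, weights, bias):
    """Direct multi-step forecast for one channel: y_hat = W @ history + b.

    history: the last L observations.
    weights: T rows of L coefficients (W has shape T x L).
    bias:    T offsets, one per forecast step.
    """
    return [sum(w * h for w, h in zip(row, history)) + b for row, b in zip(weights, bias)]

def forecast_all_channels(channels, weights, bias):
    """Apply the same W and b to every channel independently (no cross-channel mixing)."""
    return [linear_forecast(c, weights, bias) for c in channels]

L, T = 4, 3
# Hypothetical weights that copy the last observation into every future step.
W = [[0.0] * (L - 1) + [1.0] for _ in range(T)]
b = [0.0] * T
print(forecast_all_channels([[1.0, 2.0, 3.0, 4.0], [10.0, 9.0, 8.0, 7.0]], W, b))
# → [[4.0, 4.0, 4.0], [7.0, 7.0, 7.0]]
```

Each forecast step is a readable weighted sum of past values, which is what makes such a model easy to interpret.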

2. Related Work

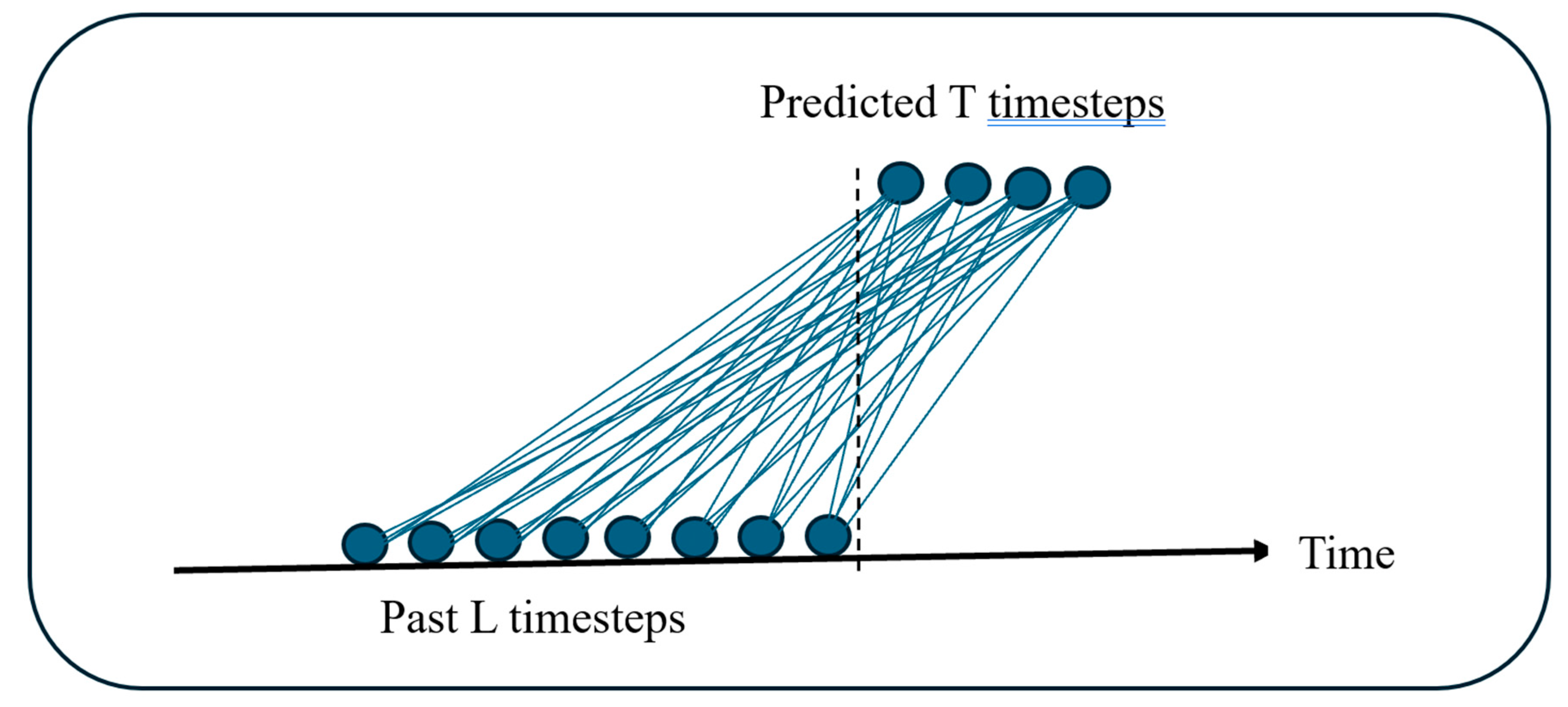

3. Methodology

3.1. Proposed Models for Time Series Forecasting

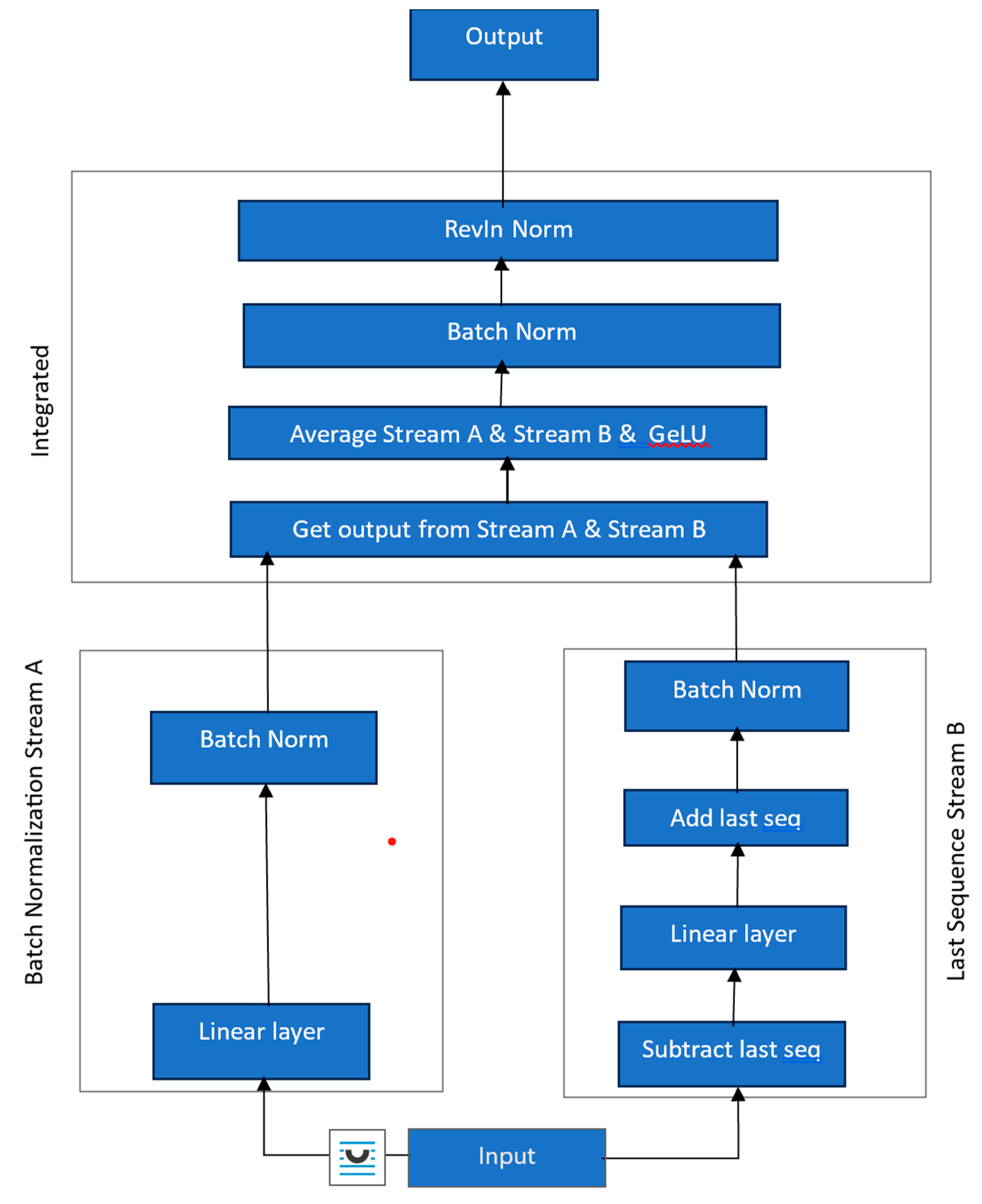

3.2. Enhanced Linear Models for Time Series Forecasting (ELM)

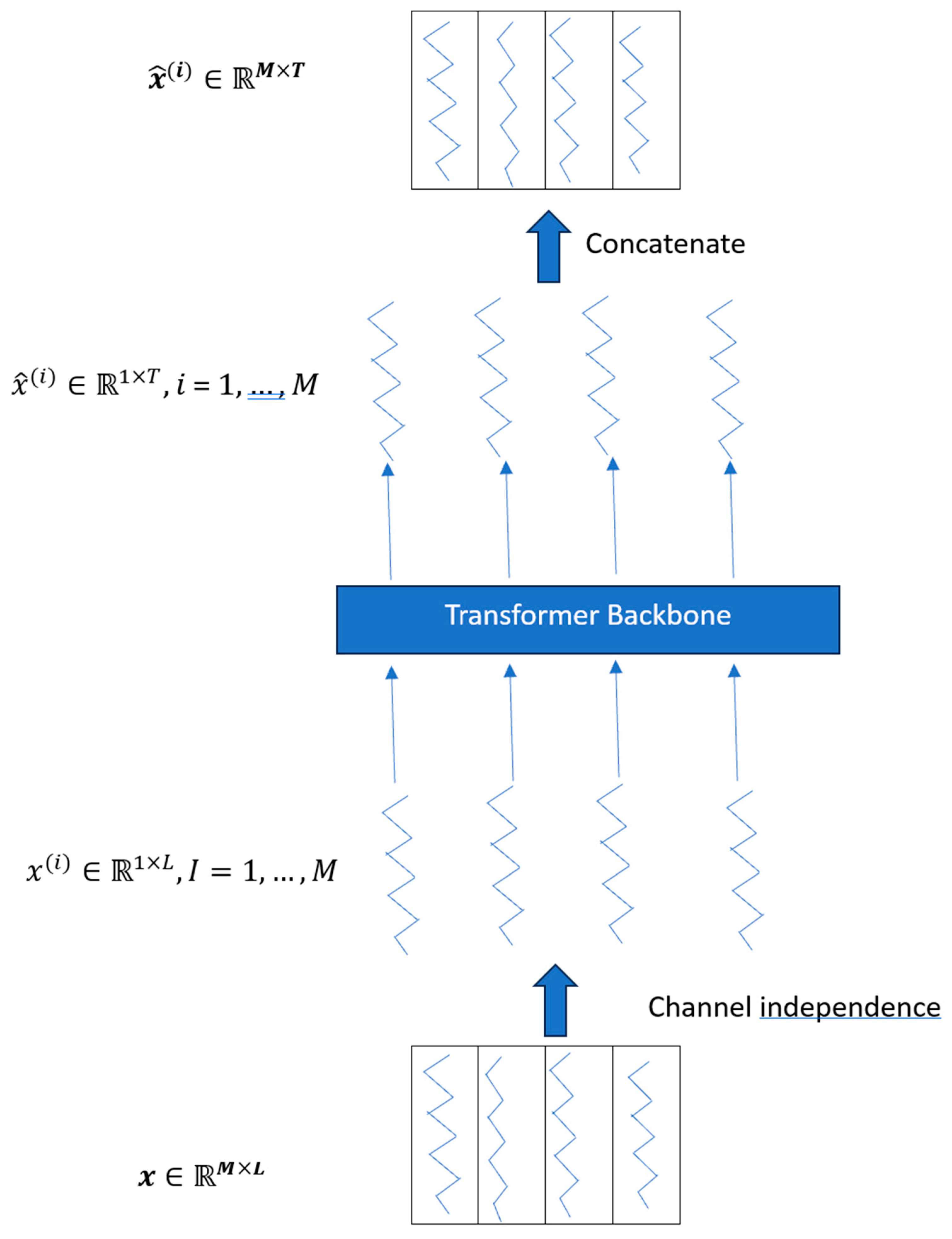

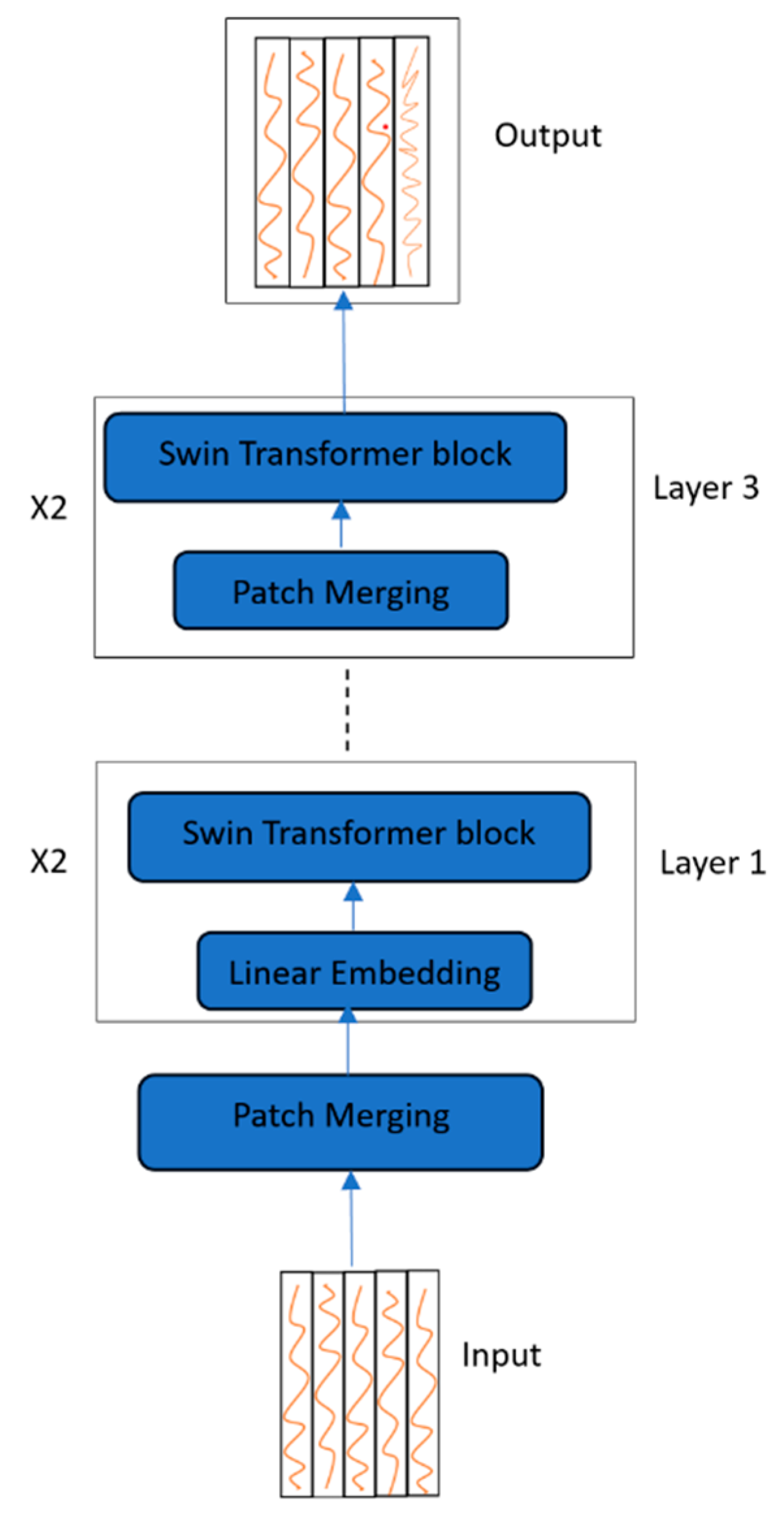

3.3. Adaptation of Vision Transformers to Time Series Forecasting

4. Results

5. Discussion

6. Conclusions

References

- G. E. Box, G. M. Jenkins, G. C. Reinsel, and G. M. Ljung, “Time series analysis: forecasting and control,” John Wiley & Sons, 2015.

- De Livera, A. M., R. J. Hyndman, and R. D. Snyder, "Forecasting time series with complex seasonal patterns using exponential smoothing," Journal of the American Statistical Association, vol. 106, no. 496, pp. 1513-1527, 2011.

- Vitor Cerqueira, Luis Torgo, and Carlos Soares, "Machine Learning vs Statistical Methods for Time Series Forecasting: Size Matters," arXiv:1909.13316v1 [stat.ML], 29 Sep 2019.

- David Salinas, Valentin Flunkert, Jan Gasthaus, Tim Januschowski, “DeepAR: Probabilistic forecasting with autoregressive recurrent networks,” International Journal of Forecasting, Volume 36, Issue 3, 2020, Pages 1181-1191, ISSN 0169-2070.

- Hansika Hewamalage, Christoph Bergmeir, Kasun Bandara, “Recurrent Neural Networks for Time Series Forecasting: Current Status and Future Directions,” International Journal of Forecasting, Volume 37, Issue 1, January–March 2021, Pages 388-427.

- Guokun Lai, Wei-Cheng Chang, Yiming Yang, Hanxiao Liu, "Modeling Long- and Short-Term Temporal Patterns with Deep Neural Networks," SIGIR '18, July 2018.

- Vaswani, Ashish, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. "Attention is all you need." Advances in neural information processing systems 30 (2017).

- Zhou, Haoyi, Shanghang Zhang, Jieqi Peng, Shuai Zhang, Jianxin Li, Hui Xiong, and Wancai Zhang. "Informer: Beyond efficient transformer for long sequence time-series forecasting." In Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 12, pp. 11106-11115. 2021.

- Wu, Haixu, Jiehui Xu, Jianmin Wang, and Mingsheng Long. "Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting," Advances in Neural Information Processing Systems 34 (2021): 22419-22430.

- Zhou, Tian, Ziqing Ma, Qingsong Wen, Xue Wang, Liang Sun, and Rong Jin. "Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting." In International Conference on Machine Learning, pp. 27268-27286. PMLR, 2022.

- Nie, Yuqi, Nam H. Nguyen, Phanwadee Sinthong, and Jayant Kalagnanam. "A Time Series is worth 64 words: Long-term forecasting with Transformers," arXiv preprint arXiv:2211.14730 (2022).

- Zhou, Haoyi, Shanghang Zhang, Jieqi Peng, Shuai Zhang, Jianxin Li, Hui Xiong, and Wancai Zhang. "Informer: Beyond efficient transformer for long sequence time-series forecasting." In Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 12, pp. 11106-11115. 2021.

- Zeng, Ailing, Muxi Chen, Lei Zhang, and Qiang Xu. "Are Transformers effective for Time Series Forecasting?" In Proceedings of the AAAI conference on artificial intelligence, vol. 37, no. 9, pp. 11121-11128. 2023.

- Li, Shiyang, Xiaoyong Jin, Yao Xuan, Xiyou Zhou, Wenhu Chen, Yu-Xiang Wang, and Xifeng Yan. "Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting." Advances in neural information processing systems 32 (2019).

- Zhou, Tian, Ziqing Ma, Qingsong Wen, Xue Wang, Liang Sun, and Rong Jin. "Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting." In International Conference on Machine Learning, pp. 27268-27286. PMLR, 2022.

- Shizhan Liu, Hang Yu, Cong Liao, Jianguo Li, Weiyao Lin, Alex X Liu, and Schahram Dustdar, “Pyraformer: Low-complexity pyramidal attention for long-range time series modeling and forecasting,” International Conference on Learning Representations, 2022.

- Chevillon, Guillaume. "Direct multi-step estimation and forecasting," Journal of Economic Surveys 21, no. 4 (2007): 746-785.

- Gu, Albert, and Tri Dao. "Mamba: Linear-time sequence modeling with selective state spaces." arXiv preprint arXiv:2312.00752 (2023).

- Liu, Yue, Yunjie Tian, Yuzhong Zhao, Hongtian Yu, Lingxi Xie, Yaowei Wang, Qixiang Ye, and Yunfan Liu. "Vmamba: Visual state space model." arXiv preprint arXiv:2401.10166 (2024).

- Michael Zhang, Khaled Saab, Michael Poli, Tri Dao, Karan Goel, and Christopher Ré, "Effectively Modeling Time Series with Simple Discrete State Spaces," arXiv:2303.09489v1 [cs.LG] 16 Mar 2023.

- Kim, Taesung, Jinhee Kim, Yunwon Tae, Cheonbok Park, Jang-Ho Choi, and Jaegul Choo. "Reversible instance normalization for accurate time-series forecasting against distribution shift," International Conference on Learning Representations, 2021.

- Liu, Ze, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. "Swin transformer: Hierarchical vision transformer using shifted windows." In Proceedings of the IEEE/CVF international conference on computer vision, pp. 10012-10022. 2021.

- Ahamed, Md Atik, and Qiang Cheng. "TimeMachine: A Time Series is Worth 4 Mambas for Long-term Forecasting." arXiv preprint arXiv:2403.09898 (2024).

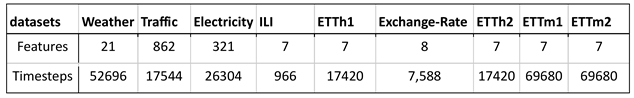

| Model | Type |
|---|---|
| FEDformer | Transformer-based |
| Autoformer | Transformer-based |
| Informer | Transformer-based |
| Pyraformer | Transformer-based |
| LogTrans | Transformer-based |
| DLinear | Non-Transformer |
| PatchTST | Transformer-based |

| Dataset | Horizon | ELM (ours) MSE | ELM (ours) MAE | PatchTST/64 MSE | PatchTST/64 MAE | DLinear MSE | DLinear MAE | FEDformer MSE | FEDformer MAE | Autoformer MSE | Autoformer MAE | Informer MSE | Informer MAE | Pyraformer MSE | Pyraformer MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Weather | 96 | 0.140 | 0.184 | 0.149 | 0.198 | 0.176 | 0.237 | 0.238 | 0.314 | 0.249 | 0.329 | 0.354 | 0.405 | 0.896 | 0.556 |
| | 192 | 0.183 | 0.226 | 0.194 | 0.241 | 0.22 | 0.282 | 0.275 | 0.329 | 0.325 | 0.37 | 0.419 | 0.434 | 0.622 | 0.624 |
| | 336 | 0.233 | 0.266 | 0.245 | 0.282 | 0.265 | 0.319 | 0.339 | 0.377 | 0.351 | 0.391 | 0.583 | 0.543 | 0.739 | 0.753 |
| | 720 | 0.306 | 0.319 | 0.314 | 0.334 | 0.323 | 0.362 | 0.389 | 0.409 | 0.415 | 0.426 | 0.916 | 0.705 | 1.004 | 0.934 |
| Traffic | 96 | 0.398 | 0.265 | 0.360 | 0.249 | 0.41 | 0.282 | 0.576 | 0.359 | 0.597 | 0.371 | 0.733 | 0.41 | 2.085 | 0.468 |
| | 192 | 0.408 | 0.269 | 0.379 | 0.256 | 0.423 | 0.287 | 0.61 | 0.38 | 0.607 | 0.382 | 0.777 | 0.435 | 0.867 | 0.467 |
| | 336 | 0.417 | 0.274 | 0.392 | 0.264 | 0.436 | 0.296 | 0.608 | 0.375 | 0.623 | 0.387 | 0.776 | 0.434 | 0.869 | 0.469 |
| | 720 | 0.456 | 0.299 | 0.432 | 0.286 | 0.466 | 0.315 | 0.621 | 0.375 | 0.639 | 0.395 | 0.827 | 0.466 | 0.881 | 0.473 |
| Electricity | 96 | 0.131 | 0.223 | 0.129 | 0.222 | 0.14 | 0.237 | 0.186 | 0.302 | 0.196 | 0.313 | 0.304 | 0.393 | 0.386 | 0.449 |
| | 192 | 0.146 | 0.236 | 0.147 | 0.240 | 0.153 | 0.249 | 0.197 | 0.311 | 0.211 | 0.324 | 0.327 | 0.417 | 0.386 | 0.443 |
| | 336 | 0.162 | 0.253 | 0.163 | 0.259 | 0.169 | 0.267 | 0.213 | 0.328 | 0.214 | 0.327 | 0.333 | 0.422 | 0.378 | 0.443 |
| | 720 | 0.200 | 0.287 | 0.197 | 0.29 | 0.203 | 0.301 | 0.233 | 0.344 | 0.236 | 0.342 | 0.351 | 0.427 | 0.376 | 0.445 |
| Illness | 24 | 1.820 | 0.809 | 1.319 | 0.754 | 2.215 | 1.081 | 2.624 | 1.095 | 2.906 | 1.182 | 4.657 | 1.449 | 1.42 | 2.012 |
| | 36 | 1.574 | 0.775 | 1.579 | 0.87 | 1.963 | 0.963 | 2.516 | 1.021 | 2.585 | 1.038 | 4.65 | 1.463 | 7.394 | 2.031 |
| | 48 | 1.564 | 0.793 | 1.553 | 0.815 | 2.13 | 1.024 | 2.505 | 1.041 | 3.024 | 1.145 | 5.004 | 1.542 | 7.551 | 2.057 |
| | 60 | 1.512 | 0.803 | 1.470 | 0.788 | 2.368 | 1.096 | 2.742 | 1.122 | 2.761 | 1.114 | 5.071 | 1.543 | 7.662 | 2.1 |
| ETTh1 | 96 | 0.362 | 0.389 | 0.370 | 0.400 | 0.375 | 0.399 | 0.376 | 0.415 | 0.435 | 0.446 | 0.941 | 0.769 | 0.664 | 0.612 |
| | 192 | 0.398 | 0.412 | 0.413 | 0.429 | 0.405 | 0.416 | 0.423 | 0.446 | 0.456 | 0.457 | 1.007 | 0.786 | 0.79 | 0.681 |
| | 336 | 0.421 | 0.427 | 0.422 | 0.440 | 0.439 | 0.443 | 0.444 | 0.462 | 0.486 | 0.487 | 1.038 | 0.784 | 0.891 | 0.738 |
| | 720 | 0.437 | 0.453 | 0.447 | 0.468 | 0.472 | 0.490 | 0.469 | 0.492 | 0.515 | 0.517 | 1.144 | 0.857 | 0.963 | 0.782 |
| ETTh2 | 96 | 0.263 | 0.331 | 0.274 | 0.337 | 0.289 | 0.353 | 0.332 | 0.374 | 0.332 | 0.368 | 1.549 | 0.952 | 0.645 | 0.597 |
| | 192 | 0.318 | 0.369 | 0.341 | 0.382 | 0.383 | 0.418 | 0.407 | 0.446 | 0.426 | 0.434 | 3.792 | 1.542 | 0.788 | 0.683 |
| | 336 | 0.348 | 0.399 | 0.329 | 0.384 | 0.448 | 0.465 | 0.4 | 0.447 | 0.477 | 0.479 | 4.215 | 1.642 | 0.907 | 0.747 |
| | 720 | 0.409 | 0.444 | 0.379 | 0.422 | 0.605 | 0.551 | 0.412 | 0.469 | 0.453 | 0.49 | 3.656 | 1.619 | 0.963 | 0.783 |
| ETTm1 | 96 | 0.291 | 0.338 | 0.293 | 0.346 | 0.299 | 0.343 | 0.326 | 0.39 | 0.51 | 0.492 | 0.626 | 0.56 | 0.543 | 0.51 |
| | 192 | 0.332 | 0.361 | 0.333 | 0.370 | 0.335 | 0.365 | 0.365 | 0.415 | 0.514 | 0.495 | 0.725 | 0.619 | 0.557 | 0.537 |
| | 336 | 0.362 | 0.377 | 0.369 | 0.392 | 0.369 | 0.386 | 0.392 | 0.425 | 0.51 | 0.492 | 1.005 | 0.741 | 0.754 | 0.655 |
| | 720 | 0.418 | 0.409 | 0.416 | 0.420 | 0.425 | 0.421 | 0.446 | 0.458 | 0.527 | 0.493 | 1.133 | 0.845 | 0.908 | 0.724 |
| ETTm2 | 96 | 0.160 | 0.246 | 0.166 | 0.256 | 0.167 | 0.260 | 0.18 | 0.271 | 0.205 | 0.293 | 0.355 | 0.462 | 0.435 | 0.507 |
| | 192 | 0.219 | 0.288 | 0.223 | 0.296 | 0.224 | 0.303 | 0.252 | 0.318 | 0.278 | 0.336 | 0.595 | 0.586 | 0.73 | 0.673 |
| | 336 | 0.271 | 0.321 | 0.274 | 0.329 | 0.281 | 0.342 | 0.324 | 0.364 | 0.343 | 0.379 | 1.27 | 0.871 | 1.201 | 0.845 |
| | 720 | 0.360 | 0.380 | 0.362 | 0.385 | 0.397 | 0.421 | 0.41 | 0.42 | 0.414 | 0.419 | 3.001 | 1.267 | 3.625 | 1.451 |
| Exchange | 96 | 0.084 | 0.201 | - | - | 0.081 | 0.203 | 0.148 | 0.278 | 0.197 | 0.323 | 0.847 | 0.752 | 0.376 | 1.105 |
| | 192 | 0.156 | 0.296 | - | - | 0.157 | 0.293 | 0.271 | 0.38 | 0.3 | 0.369 | 1.204 | 0.895 | 1.748 | 1.151 |
| | 336 | 0.266 | 0.403 | - | - | 0.305 | 0.414 | 0.46 | 0.5 | 0.509 | 0.524 | 1.672 | 1.036 | 1.874 | 1.172 |
| | 720 | 0.665 | 0.649 | - | - | 0.643 | 0.601 | 1.195 | 0.841 | 1.447 | 0.941 | 2.478 | 1.31 | 1.943 | 1.206 |
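
The tables report mean squared error (MSE) and mean absolute error (MAE); lower is better for both. A minimal sketch of the two metrics follows, assuming predictions and targets are flattened over channels and forecast steps so that every value is weighted equally. Whether the errors are computed on normalized or raw data is an assumption not settled by this section.

```python
def mse(y_true, y_pred):
    """Mean squared error over paired values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    """Mean absolute error over paired values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def flatten(windows):
    """Flatten [channel][step] values into one list."""
    return [v for channel in windows for v in channel]

targets = [[0.5, 0.7], [1.0, 1.2]]
preds = [[0.4, 0.9], [1.0, 1.0]]
print(mse(flatten(targets), flatten(preds)), mae(flatten(targets), flatten(preds)))
```

Because MSE squares each error, it penalizes large misses more heavily than MAE, so two models can rank differently under the two metrics, as PatchTST/64 and DLinear do on ETTh1 at horizon 96.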

| Dataset | Horizon | Swin Transformer (ours) MSE | Swin Transformer (ours) MAE | ELM (ours) MSE | ELM (ours) MAE | PatchTST/64 MSE | PatchTST/64 MAE | DLinear MSE | DLinear MAE | FEDformer MSE | FEDformer MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Weather | 96 | 0.173 | 0.224 | 0.140 | 0.184 | 0.149 | 0.198 | 0.176 | 0.237 | 0.238 | 0.314 |
| | 192 | 0.227 | 0.268 | 0.183 | 0.226 | 0.194 | 0.241 | 0.22 | 0.282 | 0.275 | 0.329 |
| | 336 | 0.277 | 0.305 | 0.233 | 0.266 | 0.245 | 0.282 | 0.265 | 0.319 | 0.339 | 0.377 |
| | 720 | 0.333 | 0.345 | 0.306 | 0.319 | 0.314 | 0.334 | 0.323 | 0.362 | 0.389 | 0.409 |
| Traffic | 96 | 0.621 | 0.342 | 0.398 | 0.265 | 0.360 | 0.249 | 0.41 | 0.282 | 0.576 | 0.359 |
| | 192 | 0.651 | 0.359 | 0.408 | 0.269 | 0.379 | 0.256 | 0.423 | 0.287 | 0.61 | 0.38 |
| | 336 | 0.648 | 0.353 | 0.417 | 0.274 | 0.392 | 0.264 | 0.436 | 0.296 | 0.608 | 0.375 |
| | 720 | 0.384 | 0.4509 | 0.456 | 0.299 | 0.432 | 0.286 | 0.466 | 0.315 | 0.621 | 0.375 |
| Electricity | 96 | 0.189 | 0.296 | 0.131 | 0.223 | 0.129 | 0.222 | 0.14 | 0.237 | 0.186 | 0.302 |
| | 192 | 0.191 | 0.296 | 0.146 | 0.236 | 0.147 | 0.240 | 0.153 | 0.249 | 0.197 | 0.311 |
| | 336 | 0.205 | 0.3107 | 0.162 | 0.253 | 0.163 | 0.259 | 0.169 | 0.267 | 0.213 | 0.328 |
| | 720 | 0.228 | 0.327 | 0.200 | 0.287 | 0.197 | 0.29 | 0.203 | 0.301 | 0.233 | 0.344 |
| ILI | 24 | 5.806 | 1.800 | 1.820 | 0.809 | 1.319 | 0.754 | 2.215 | 1.081 | 2.624 | 1.095 |
| | 36 | 6.931 | 1.968 | 1.574 | 0.775 | 1.579 | 0.87 | 1.963 | 0.963 | 2.516 | 1.021 |
| | 48 | 6.581 | 1.904 | 1.564 | 0.793 | 1.553 | 0.815 | 2.13 | 1.024 | 2.505 | 1.041 |
| | 60 | 6.901 | 1.968 | 1.512 | 0.803 | 1.470 | 0.788 | 2.368 | 1.096 | 2.742 | 1.122 |
| ETTh1 | 96 | 0.592 | 0.488 | 0.362 | 0.389 | 0.370 | 0.400 | 0.375 | 0.399 | 0.376 | 0.415 |
| | 192 | 0.542 | 0.514 | 0.398 | 0.412 | 0.413 | 0.429 | 0.405 | 0.416 | 0.423 | 0.446 |
| | 336 | 0.537 | 0.518 | 0.421 | 0.427 | 0.422 | 0.440 | 0.439 | 0.443 | 0.444 | 0.462 |
| | 720 | 0.614 | 0.571 | 0.437 | 0.453 | 0.447 | 0.468 | 0.472 | 0.490 | 0.469 | 0.492 |
| ETTh2 | 96 | 0.360 | 0.405 | 0.263 | 0.331 | 0.274 | 0.337 | 0.289 | 0.353 | 0.332 | 0.374 |
| | 192 | 0.386 | 0.426 | 0.318 | 0.369 | 0.341 | 0.382 | 0.383 | 0.418 | 0.407 | 0.446 |
| | 336 | 0.372 | 0.421 | 0.348 | 0.399 | 0.329 | 0.384 | 0.448 | 0.465 | 0.4 | 0.447 |
| | 720 | 0.424 | 0.454 | 0.409 | 0.444 | 0.379 | 0.422 | 0.605 | 0.551 | 0.412 | 0.469 |
| ETTm1 | 96 | 0.400 | 0.421 | 0.291 | 0.338 | 0.293 | 0.346 | 0.299 | 0.343 | 0.326 | 0.39 |
| | 192 | 0.429 | 0.443 | 0.332 | 0.361 | 0.333 | 0.370 | 0.335 | 0.365 | 0.365 | 0.415 |
| | 336 | 0.439 | 0.447 | 0.362 | 0.377 | 0.369 | 0.392 | 0.369 | 0.386 | 0.392 | 0.425 |
| | 720 | 0.477 | 0.466 | 0.418 | 0.409 | 0.416 | 0.420 | 0.425 | 0.421 | 0.446 | 0.458 |
| ETTm2 | 96 | 0.210 | 0.292 | 0.160 | 0.246 | 0.166 | 0.256 | 0.167 | 0.260 | 0.18 | 0.271 |
| | 192 | 0.264 | 0.325 | 0.219 | 0.288 | 0.223 | 0.296 | 0.224 | 0.303 | 0.252 | 0.318 |
| | 336 | 0.311 | 0.356 | 0.271 | 0.321 | 0.274 | 0.329 | 0.281 | 0.342 | 0.324 | 0.364 |
| | 720 | 0.408 | 0.412 | 0.360 | 0.380 | 0.362 | 0.385 | 0.397 | 0.421 | 0.41 | 0.42 |

| Dataset / horizon | ELM (ours) MSE | ELM (ours) MAE | SpaceTime MSE | SpaceTime MAE | DLinear MSE | DLinear MAE | FEDformer MSE | FEDformer MAE | Autoformer MSE | Autoformer MAE | TimeMachine (Mamba) MSE | TimeMachine (Mamba) MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ETTh1 720 | 0.448 | 0.463 | 0.499 | 0.48 | 0.440 | 0.453 | 0.506 | 0.507 | 0.514 | 0.512 | 0.462 | 0.475 |
| ETTh2 720 | 0.387 | 0.428 | 0.402 | 0.434 | 0.394 | 0.436 | 0.463 | 0.474 | 0.515 | 0.511 | 0.412 | 0.441 |
| ETTm1 720 | 0.415 | 0.409 | 0.408 | 0.415 | 0.433 | 0.422 | 0.543 | 0.49 | 0.671 | 0.561 | 0.430 | 0.429 |
| ETTm2 720 | 0.348 | 0.377 | 0.358 | 0.378 | 0.368 | 0.384 | 0.421 | 0.415 | 0.433 | 0.432 | 0.380 | 0.396 |
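
Differences between the strongest models are often small in absolute terms, so relative changes are a convenient way to read these tables. The helper below is an illustrative addition, not part of the reported evaluation; it computes the relative MSE reduction of one model against a baseline, using horizon-720 values copied from the table above.

```python
def relative_reduction(baseline, candidate):
    """Fractional error reduction of `candidate` relative to `baseline` (positive = better)."""
    return (baseline - candidate) / baseline

# MSE at horizon 720, taken from the table above.
elm = {"ETTh1": 0.448, "ETTh2": 0.387, "ETTm1": 0.415, "ETTm2": 0.348}
timemachine = {"ETTh1": 0.462, "ETTh2": 0.412, "ETTm1": 0.430, "ETTm2": 0.380}

for name in elm:
    pct = 100 * relative_reduction(timemachine[name], elm[name])
    print(f"{name}: {pct:.1f}% lower MSE than TimeMachine")
```

A negative value means the candidate is worse than the baseline, e.g., ELM against SpaceTime on ETTm1 (0.415 vs 0.408 MSE).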

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).