1. Introduction

Road safety is a critical issue around the world, and driver errors are the predominant factor in road accidents. In 2021, 813,169 road accidents were reported in the European Union [1]; in Poland, 21,322 and 20,936 accidents were reported in 2022 and 2023, respectively, with an alarming 90.9% attributed to the actions of drivers [1,2,3]. This underscores the urgent need to explore the root causes of these errors and implement effective preventive measures.

Strategic errors that contribute significantly to road accidents include driving in challenging conditions, operating damaged vehicles, and driving while physically or mentally compromised or intoxicated [4,5,6]. Deliberate distractions, such as mobile phone use, further compound the risks associated with these errors [7,8,9,10]. A growing area of research focuses on the discourse surrounding age limits for drivers and on the development of systems that prevent the exclusion of senior drivers from road traffic [11,12,13,14]. These discussions highlight the need for comprehensive approaches to improving road safety that consider both human factors and technological interventions [15,16,17].

In response to the imperative of road safety and recognizing the limitations of traditional methods, researchers have turned to non-invasive techniques to identify factors that may compromise a driver’s focus [18,19,20,21]. Among these techniques, electrooculography (EOG) has emerged as a promising method [22]. EOG is a non-invasive approach that monitors eye movements, providing valuable insights into a driver’s visual attention and cognitive processes during vehicle operation [23,24,25,26,27].

The primary objective of this research was to assess the potential for classifying driving styles according to the driver’s level of experience and the challenges faced during vehicle operation. To this end, EOG signals were recorded using JINS MEME ES_R smart glasses. Signals were collected while driving in various real-traffic scenarios, including different road conditions and three types of parking [28,29,30,31,32].

The significance of the study lies in its comprehensive examination of various aspects that influence driving skills. By assessing potential distractors, especially during periods of lost concentration, the research contributes to a deeper understanding of the complexities of driving behavior. A neural network was employed to classify driving styles, an approach that can capture patterns in the signals associated with different driving behaviors [28,29,30].

In this study, we seek to contribute to improving road traffic safety in Poland by unraveling the intricacies of driver behavior [33,34,35]. By leveraging technologies such as EOG and machine learning algorithms, we aim to pave the way for effective interventions and strategies that mitigate the risks associated with driver errors [36,37,38]. The findings hold implications not only for Poland but can also inform global efforts to create safer road environments [39,40,41].

1.1. Related Work

In [42], Feng et al. evaluated the association between speeding events and cognitive status among older male and female drivers, revealing the impact of cognition and gender on speeding behavior in the elderly. Various studies address the gap in empirical research on high-range speeding among older drivers, shedding light on the predictors and characteristics of such events among individuals aged 75–94 years [43,44,45]. Ludenberg et al. examined the correlation between cognitive impairments, assessed with a set of neuropsychological tests, and traffic violations among elderly drivers [46]. In [47], the authors explored the correlation between gait speed and cognitive function in older adults, acknowledging that both commonly decline with advancing age; the analysis seeks to identify potential preclinical indicators and to improve diagnostic evaluation in this population. Another study addresses a critical gap in research by investigating the relationship between the decline of cognitive and visual function and speeding behavior among older drivers, shedding light on potential self-restrictive driving patterns in response to functional limitations [48].

The study conducted by Feng et al. used a naturalistic driving approach, in which objective driving data were collected over a two-week period from 36 older drivers with suspected mild cognitive impairment and 35 older drivers without cognitive impairment. The outcome of interest was the number of speeding events, defined as traveling 5+ km/h over the posted speed limit for at least a minute. Most participants recorded no speeding events during the monitoring period, but notable differences were observed between older male and female drivers. Interestingly, suspected mild cognitive impairment was significantly associated with a higher rate of speeding events among older male drivers.

This finding suggests a possible link between cognitive decline and unsafe driving practices in this demographic [44,49,50]. In contrast, for older female drivers, no factors were significantly associated with the rate of speeding events [51,52,53]. This gender-specific variation highlights the need for targeted interventions and safety measures tailored to different demographic groups [54,55]. Although most participants did not speed during the monitoring period, the study revealed significant differences between older male and female drivers. These distinctions become important when placed in the broader context of research on experienced older drivers and provide detailed insight into the potential influence of cognitive status on speeding behavior.
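The speeding-event definition used by Feng et al. (traveling 5+ km/h over the posted limit for at least a minute) can be made concrete with a short sketch. It is a minimal illustration assuming a vehicle speed trace and a posted-limit trace sampled at a uniform rate (1 Hz by default); the function name and parameters are hypothetical and are not taken from [42].

```python
def count_speeding_events(speeds_kmh, limits_kmh, sample_period_s=1.0,
                          margin_kmh=5.0, min_duration_s=60.0):
    """Count episodes in which speed >= posted limit + margin for at least min_duration_s.

    Hypothetical helper: assumes both traces share the same uniform sampling period.
    """
    min_samples = int(round(min_duration_s / sample_period_s))
    events = 0
    run = 0  # length of the current over-limit run, in samples
    for speed, limit in zip(speeds_kmh, limits_kmh):
        if speed >= limit + margin_kmh:
            run += 1
        else:
            if run >= min_samples:
                events += 1
            run = 0
    if run >= min_samples:  # an over-limit run that lasts until the end of the trace
        events += 1
    return events


# 70 s at 58 km/h in a 50 km/h zone counts; a later 30 s burst at 60 km/h is too short.
trace = [58.0] * 70 + [50.0] * 10 + [60.0] * 30
print(count_speeding_events(trace, [50.0] * len(trace)))  # 1
```

Counting contiguous runs rather than individual samples keeps a single prolonged episode from being counted many times.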

Feng et al.’s study contributes to the broader academic discourse on older drivers through its focused examination of the relationship between cognitive impairment and the tendency to speed. Integrating its findings with existing research on older drivers provides a basis for comparative analyses of patterns and differences among drivers with diverse cognitive profiles within the older demographic group. Such observations contribute substantively to the ongoing scientific discussion on the interplay of cognitive factors and driving behavior among older people, especially those with extensive driving experience. The importance of the study lies not only in its novel insight into speeding behavior among older drivers, but also in its methodological alignment with prior research, which promotes a coherent narrative in aging-driver research. Comparison with similar studies allows a more detailed understanding of how cognitive status may affect driving performance among older adults with extensive driving experience.

Aksjonov’s work emphasizes the use of machine learning and fuzzy logic for detecting and evaluating driver distraction [56]. Similarly, Sun et al. proposed a method for online distraction detection using kinematic motion models and a multiple-model algorithm, focusing on abnormal driving states induced by distraction [57]. These studies are relevant to the broader goal of improving road safety by understanding and mitigating the factors that lead to unsafe driving. Studies on detecting distracted driving from physiological signals, such as EEG, add another dimension to the discourse.

Wang et al. proposed an EEG-based brain-computer interface to detect distracted driving, achieving an accuracy of approximately 90% in recognizing distracted and concentrated driving epochs [58]. A temporal-spatial deep learning approach to driver distraction detection based on EEG signals further extends the growing body of literature on methods for identifying distracted driving. Similarly, the actigraphy-based algorithm for evaluating sleep in older adults demonstrated by Regalia et al. showcases the potential of wearables and multi-sensor information to monitor various aspects of health and behavior [59]. Other studies demonstrate the use of smartphones, wearable sensors, and machine learning techniques to evaluate the driver’s state and behavior [60,61,62,63,64].

The literature reveals a consistent relationship between cognitive function and driving performance across age groups, with recent evidence suggesting its relevance for middle-aged drivers, an area that remains underexplored [65]. The study in [65] addressed this gap by investigating the association between overall cognitive function and driving behavior in middle-aged drivers, as well as the specific cognitive domains relevant to driving. Methodologically, 89 drivers aged 25 to 65 years underwent cognitive evaluations alongside a driving simulator task, with driving performance metrics including speeding and lane deviation. The results indicate that overall cognitive function, together with specific domains such as mental status, executive function, and memory, significantly predicts driving behavior in this cohort. These findings underscore the importance of cognitive abilities for driving across the lifespan, although their generalizability may be limited by the predominantly university-based sample, which calls for replication in more representative samples. Future research should aim to develop comprehensive models that explain driving performance across all age groups and traffic psychology domains; with the advent of autonomous vehicles, such models may also serve as a basis for detecting anomalies in vehicle operation [66,67,68].

In summary, the studies discussed above highlight the importance of driver-related factors, including behavior, cognitive status, gender, distraction, and sleep. These insights are crucial to the development of advanced driver assistance systems and policies aimed at improving road safety. The integration of real-time detection systems, as exemplified by the research reviewed here, underscores the importance of leveraging technological advances to improve road safety. Future research should continue to explore innovative methodologies and interdisciplinary approaches to fully address the complexities of driver behavior in diverse populations.

2. Materials and Methods

2.1. Data Acquisition

The research involved two groups of participants: twenty experienced drivers aged between 40 and 68 years, each with at least ten years of driving experience, and ten novice drivers aged between 18 and 46 years who were taking driving lessons at a driving school at the time of the study. All participants gave their informed consent to participate in the study. According to Article 39j of the Act on Road Transportation of the Republic of Poland and Chapter 2 of the Act on Vehicle Drivers of the Republic of Poland, candidate drivers must undergo a medical examination before starting driving lessons [69,70]. We therefore assumed that the participants had no health conditions that could directly pose a risk while driving [71].

For data acquisition, we used JINS MEME ES_R smart glasses produced by JINS, Inc. (Tokyo, Japan), advanced eyewear that extends traditional eyeglasses with additional functionality. The key features of the glasses include three-point electrooculography and a six-axis inertial measurement unit (IMU) with an accelerometer and a gyroscope [72]. Because glasses are an everyday accessory, they are well suited to research while driving: they minimize distraction for drivers regardless of their level of experience. At the same time, glasses equipped with sensors can capture significant physiological data on eye and head movements, which play a crucial role in driving and provide valuable information on individual driving styles.

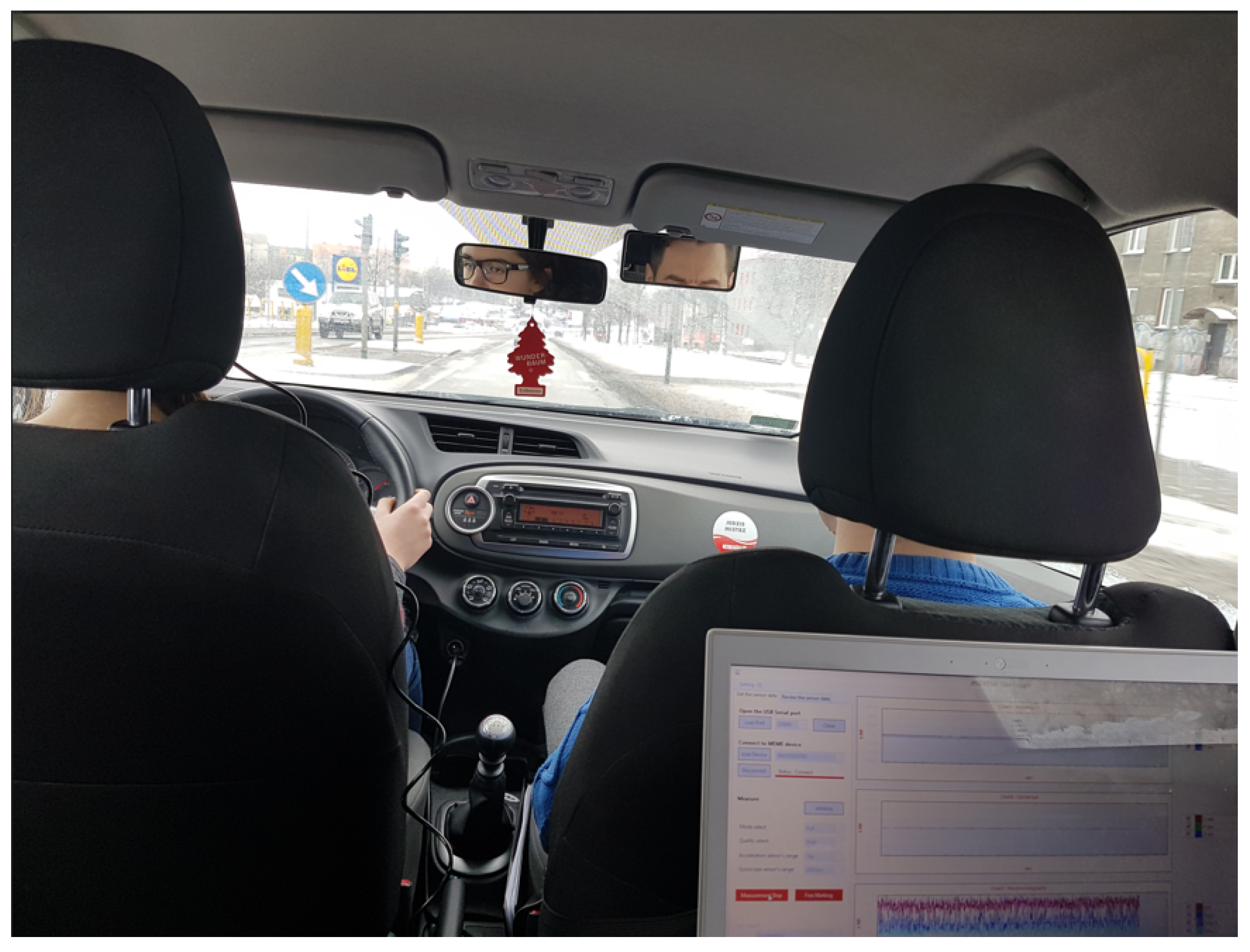

The data were recorded during the drive at a sampling frequency of 100 Hz by a third person seated in the rear seat.

Figure 1 presents the experimental setup. Each volunteer drove the same route, which included several maneuvers evaluated during the practical driving exam, such as parking, city driving, highway driving, and passing through a residential area. The route was 28.7 km long and took approximately 75 minutes to complete. A detailed description of the route can be found in [28].

The study adhered to the guidelines outlined in the Declaration of Helsinki of 1975, revised in 2013, and received approval from the Provincial Police Department in Katowice. The research protocol, sanctioned by resolution KNW/0022/KB1/18, was approved by the Bioethics Committee of the Medical University of Silesia in Katowice on 16 October 2018. To maintain confidentiality, the identities of both learner and experienced drivers were not disclosed, in accordance with agreements established with the driving school.

2.2. Pre-Processing

The pre-processing of the recorded data involved several steps to enhance the quality and relevance of the collected information. The raw data were sampled at 100 Hz and consisted of ten channels: three from the accelerometer (ACC_X, ACC_Y, ACC_Z), three from the gyroscope (GYRO_X, GYRO_Y, GYRO_Z), and four from the EOG sensor (EOG_L, EOG_R, EOG_H, EOG_V).

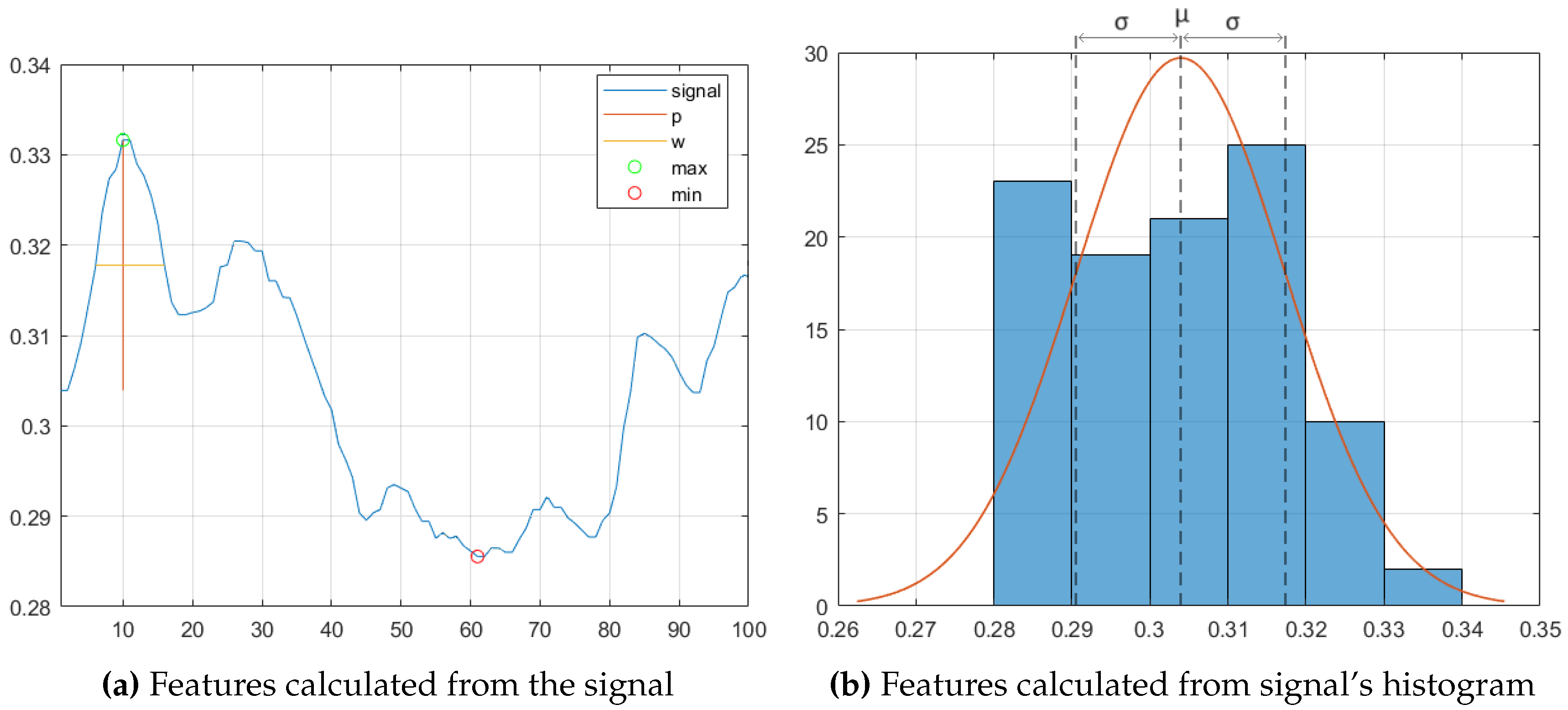

The first step of pre-processing was to apply a third-order median filter. Then, global min-max normalization was performed using the minimum and maximum values identified within the entire dataset. Subsequently, we segmented the signals into windows of 100 samples (1 s) with a 50-sample (0.5 s) stride. Next, features were calculated for each window. Based on previous studies [29,32,73], we calculated a vector of the following ten features:

mean value of a normal distribution fitted to the signal (μ),

standard deviation of a normal distribution fitted to the signal (σ),

minimum value (min),

maximum value (max),

skewness (skew),

kurtosis (kurt),

sum of the sample values (area),

entropy,

width of the widest peak (w),

prominence of the widest peak (p).

Figure 2 visualizes the determination of the aforementioned features.
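The pre-processing chain and most of the per-window features can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors’ MATLAB code: the helper names are hypothetical, the median filter zero-pads the signal ends, the normal-fit standard deviation uses the n − 1 denominator, and entropy is taken as the Shannon entropy of a 10-bin histogram, since the exact definition is not given in the text. Peak width and prominence are omitted because they require a peak finder (for example, the width and prominence outputs of MATLAB’s findpeaks). Applied to each of the ten channels, the full ten-feature set yields the 100 feature columns described below.

```python
import math
from statistics import mean, stdev


def median3(x):
    """Third-order median filter; the ends are zero-padded (assumption)."""
    padded = [0.0] + list(x) + [0.0]
    return [sorted(padded[i:i + 3])[1] for i in range(len(x))]


def minmax_scale(x, lo, hi):
    """Global min-max normalization; lo and hi come from the whole dataset, not one recording."""
    return [(v - lo) / (hi - lo) for v in x]


def sliding_windows(x, size=100, stride=50):
    """1 s windows (100 samples at 100 Hz) advanced by 0.5 s (50 samples)."""
    return [x[i:i + size] for i in range(0, len(x) - size + 1, stride)]


def window_features(w, bins=10):
    """Eight of the ten per-window features; peak width and prominence are omitted."""
    n = len(w)
    mu = mean(w)      # mean of the fitted normal distribution
    sigma = stdev(w)  # its standard deviation (n - 1 denominator, an assumption)
    m2 = sum((v - mu) ** 2 for v in w) / n
    m3 = sum((v - mu) ** 3 for v in w) / n
    m4 = sum((v - mu) ** 4 for v in w) / n
    skew = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    kurt = m4 / m2 ** 2 if m2 > 0 else 0.0  # non-excess kurtosis (3 for a normal signal)
    lo, hi = min(w), max(w)
    # Shannon entropy of a fixed-bin histogram (binning scheme is an assumption)
    counts = [0] * bins
    for v in w:
        k = int((v - lo) / (hi - lo) * bins) if hi > lo else 0
        counts[min(k, bins - 1)] += 1
    entropy = -sum(c / n * math.log2(c / n) for c in counts if c)
    return {"mu": mu, "sigma": sigma, "min": lo, "max": hi, "skew": skew,
            "kurt": kurt, "area": sum(w), "entropy": entropy}


raw = [math.sin(t / 10.0) for t in range(300)]    # synthetic 3 s channel
filtered = median3(raw)
scaled = minmax_scale(filtered, lo=-1.0, hi=1.0)  # stand-in for dataset-wide extrema
rows = [window_features(w) for w in sliding_windows(scaled)]
print(len(rows))  # 5 windows from 300 samples
```

Because consecutive windows overlap by half, each sample contributes to two feature vectors.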

To each feature vector, we assigned a class label: 1 for experienced drivers and 2 for learner drivers. These pre-processing operations resulted in a feature matrix of 61,927 rows and 100 features (ten for each of the ten signal channels), of which 37,062 feature vectors were derived from experienced drivers and 24,865 from learner drivers.

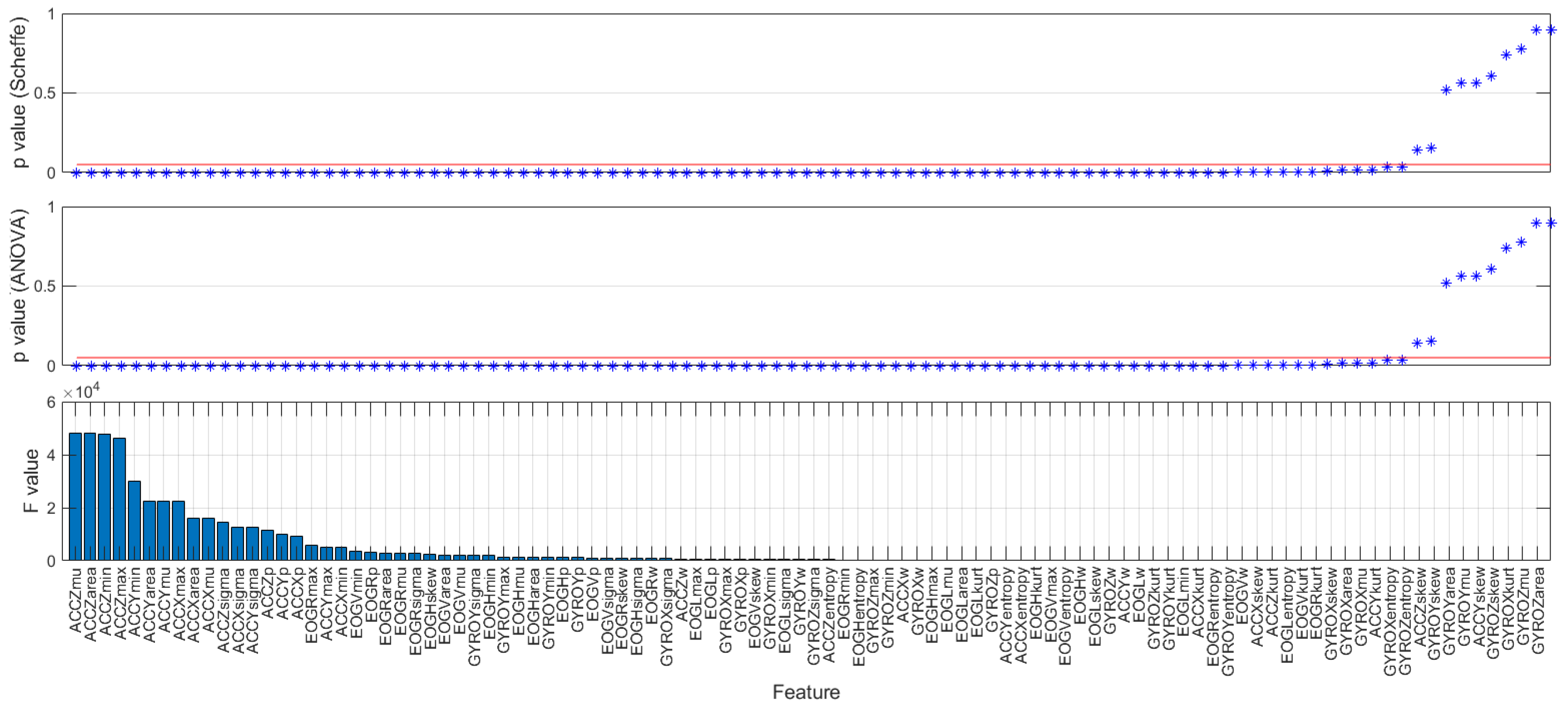

To test the importance of the calculated features, we performed a one-way analysis of variance (ANOVA). For each feature, ANOVA tests the hypothesis that the mean values of that feature are equal in the two groups (classes). In addition to ANOVA, we performed a multiple comparison analysis, i.e., pairwise comparisons of the group means using Scheffé’s procedure [74]. The results of these tests are presented in Figure 3.

The analysis revealed that ten features had p-values greater than 0.05 in both the ANOVA and the multiple comparison test, indicating no statistically significant difference between the group means. Consequently, we omitted these features from further analysis. According to the tests, the remaining features had significantly different means and could be considered for the classification problem.
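The per-feature test can be sketched as follows. With two classes, the one-way ANOVA F statistic equals the square of the pooled-variance two-sample t statistic, so for tens of thousands of rows, as here, a p-value can be approximated from the standard normal distribution. This is a minimal sketch with hypothetical helper names and toy values; the exact p-values reported in Figure 3 would come from the F distribution (and Scheffé’s procedure) in MATLAB, not from this approximation.

```python
import math


def anova_f(groups):
    """One-way ANOVA F statistic for a list of groups (each a list of feature values)."""
    values = [v for g in groups for v in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def two_group_p_value(f_stat):
    """Large-sample p-value for two groups: F = t**2, and t is close to N(0, 1) for large n."""
    return math.erfc(math.sqrt(f_stat / 2.0))


experienced = [0.52, 0.61, 0.58, 0.66, 0.55]  # hypothetical values of one feature
learners = [0.41, 0.47, 0.39, 0.50, 0.44]
print(anova_f([experienced, learners]))
```

The normal approximation is only appropriate for large samples; for the small toy groups above, only the F statistic is printed.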

2.3. Classification

Initially, 10% of the data were set aside as a test set to assess the performance of the model. The remaining data were divided into training and validation sets using a five-fold cross-validation scheme.
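The splitting scheme can be sketched as a 10% hold-out followed by five folds over the remaining rows. The text does not state whether the hold-out was stratified by class, so this sketch assumes stratification; the helper names and the random seed are hypothetical (in MATLAB, the same kind of partition is commonly built with cvpartition).

```python
import random


def stratified_holdout(labels, test_frac=0.1, seed=0):
    """Hold out test_frac of the rows of each class; returns (train_idx, test_idx)."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_test = int(test_frac * len(idx))
        test += idx[:n_test]
        train += idx[n_test:]
    return train, test


def k_folds(indices, k=5, seed=0):
    """Split indices into k (train, validation) pairs; each index is validated exactly once."""
    idx = list(indices)
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    return [([j for fold in folds[:i] + folds[i + 1:] for j in fold], folds[i])
            for i in range(k)]


labels = [1] * 37062 + [2] * 24865  # class sizes reported above
train, test = stratified_holdout(labels)
print(len(train), len(test))
for tr, va in k_folds(train):
    print(len(tr), len(va))
```

With these class sizes, roughly 6,190 rows go to the test set and each validation fold holds about 11,150 rows.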

To improve classification, we tested several feature sets: the 90, 50, 40, 30, 20, and 10 most important features according to their ANOVA F-scores. The best results were achieved with the set of 40 features. Specifically, the chosen features were:

ACC_X: μ, σ, min, max, area, p;

ACC_Y: μ, σ, min, max, area, p;

ACC_Z: μ, σ, min, max, area, p;

GYRO_Y: , min, max, p;

EOG_R: μ, σ, skew, max, area, w, p;

EOG_H: μ, σ, skew, min, area, p;

EOG_V: μ, σ, min, area, p.
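The selection procedure, discarding features with p > 0.05 and then keeping the k features with the largest F-scores for each candidate k, can be sketched as below. The feature names, scores, and p-values in the example are hypothetical, and evaluate stands for whatever validation score was used to compare subset sizes (for example, cross-validated accuracy); it is a placeholder, not a function from the study.

```python
def select_by_f_score(f_scores, p_values, k, alpha=0.05):
    """Discard features with p > alpha, then keep the k largest ANOVA F-scores."""
    kept = [name for name in f_scores if p_values[name] <= alpha]
    kept.sort(key=lambda name: f_scores[name], reverse=True)
    return kept[:k]


def best_subset_size(f_scores, p_values, sizes, evaluate):
    """Evaluate each candidate subset size with a caller-supplied scoring function."""
    scores = {k: evaluate(select_by_f_score(f_scores, p_values, k)) for k in sizes}
    return max(scores, key=scores.get), scores


# Hypothetical F-scores and p-values for four features
f_scores = {"EOG_H_mu": 410.0, "ACC_X_min": 95.0, "GYRO_Y_max": 12.0, "EOG_L_kurt": 0.4}
p_values = {"EOG_H_mu": 1e-90, "ACC_X_min": 1e-21, "GYRO_Y_max": 5e-4, "EOG_L_kurt": 0.53}
print(select_by_f_score(f_scores, p_values, 2))  # ['EOG_H_mu', 'ACC_X_min']
```

In the study, sizes would be [90, 50, 40, 30, 20, 10], and the search settled on 40 features.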

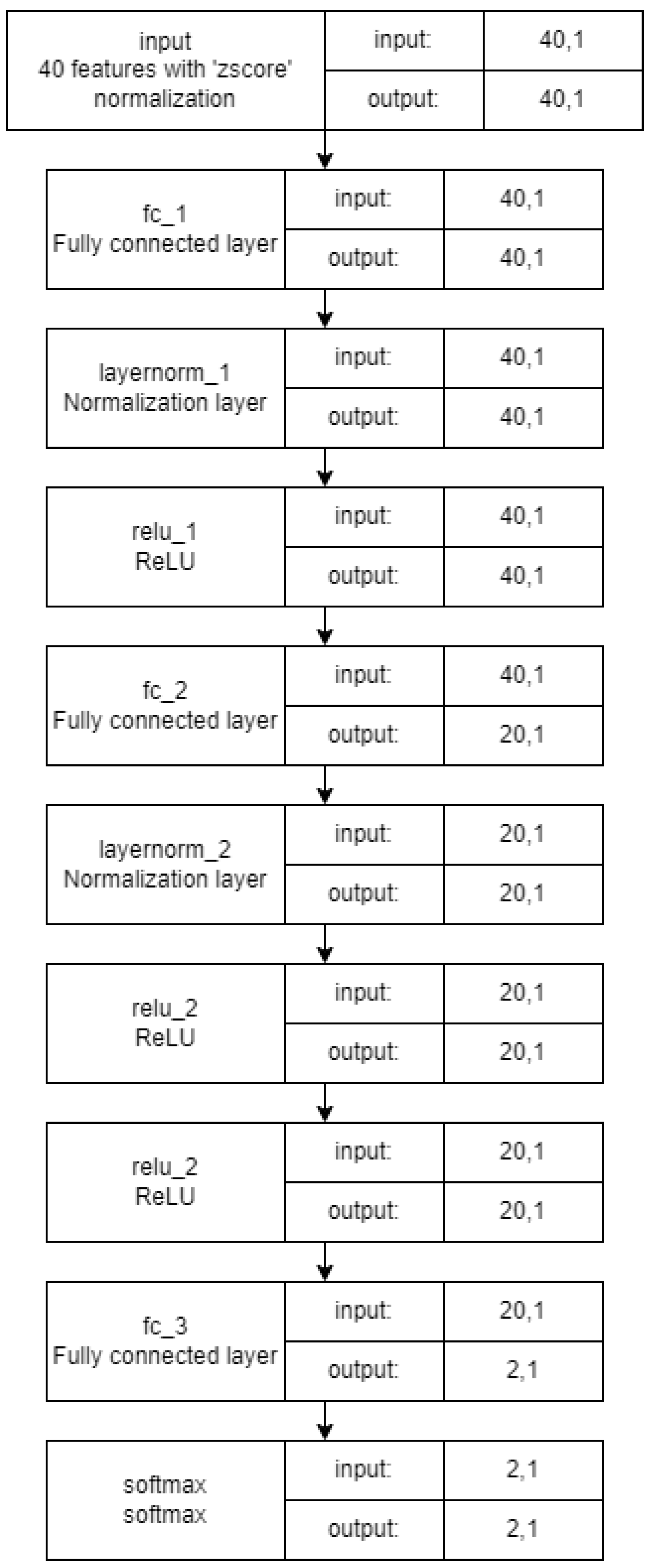

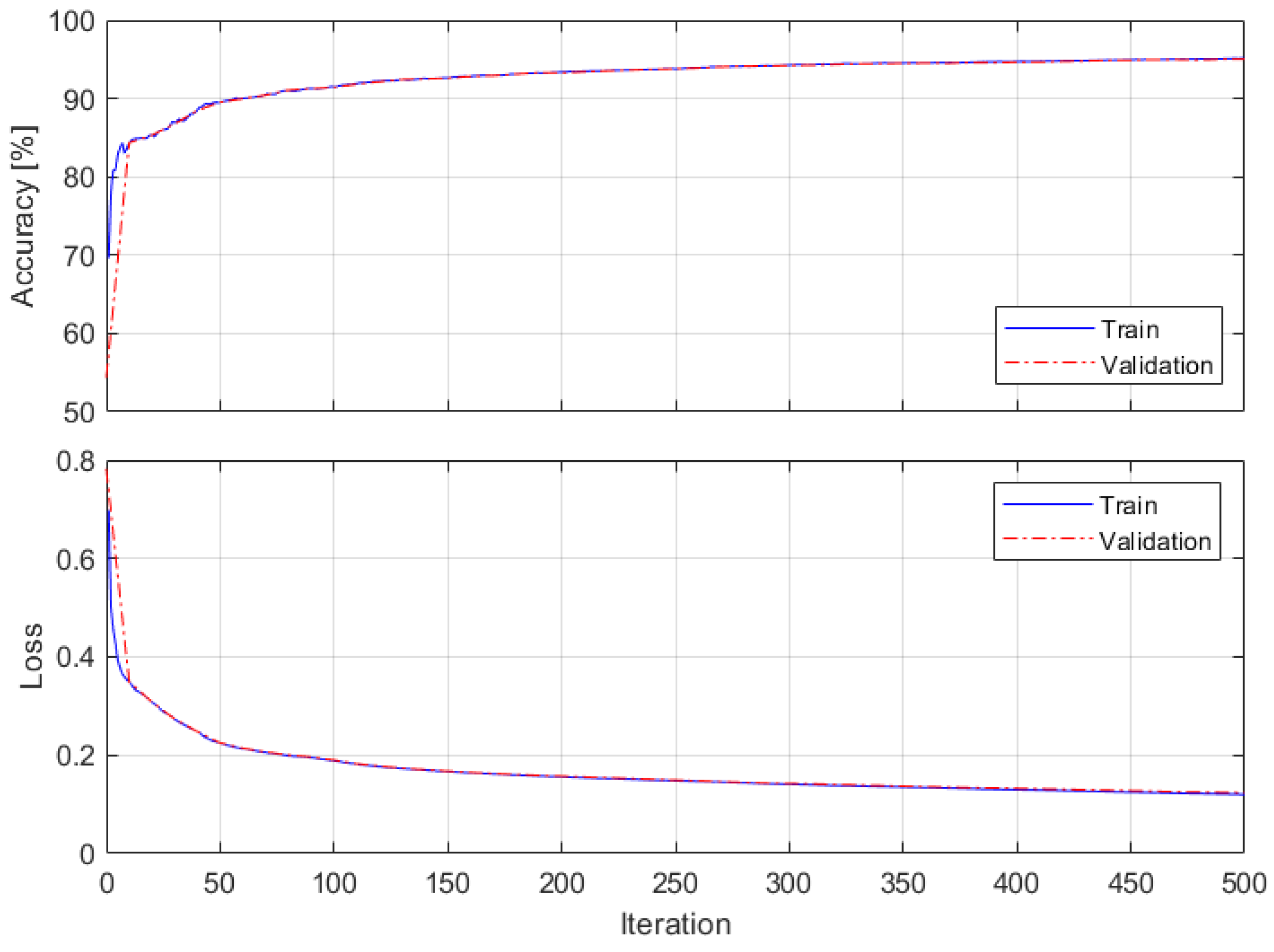

We experimented with various configurations of neural networks. The final neural network was configured as follows:

Number of fully connected layers: 3,

First layer size: 40,

Second layer size: 20,

Third layer size: 2,

Activation function: ReLU (Rectified Linear Unit),

Iteration limit: 500,

Validation frequency: 10 iterations.
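The configuration above can be read as a multilayer perceptron with 40 inputs (one per selected feature), fully connected layers of 40, 20, and 2 units, ReLU after the two hidden layers, and a softmax over the two class scores. The sketch below is a plain-Python forward pass under that reading; the Glorot-uniform initialization, the helper names, and the output order are assumptions. Under these assumptions the network has 40·40 + 40 + 40·20 + 20 + 20·2 + 2 = 2,502 trainable parameters, which is consistent with the goal of keeping computational complexity low.

```python
import math
import random

N_INPUTS = 40               # assumption: one input per selected feature
LAYER_SIZES = [40, 20, 2]   # fully connected layer sizes listed above


def init_params(n_in, sizes, seed=0):
    """Glorot-uniform weights and zero biases (initialization scheme assumed)."""
    rng = random.Random(seed)
    params = []
    for n_out in sizes:
        bound = math.sqrt(6.0 / (n_in + n_out))
        weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
        params.append((weights, [0.0] * n_out))
        n_in = n_out
    return params


def forward(params, x):
    """ReLU after each hidden layer, softmax over the two class scores at the output."""
    h = list(x)
    for i, (weights, bias) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(weights, bias)]
        h = z if i == len(params) - 1 else [max(0.0, v) for v in z]
    top = max(h)
    exps = [math.exp(v - top) for v in h]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def n_parameters(params):
    """Count all weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in params)


params = init_params(N_INPUTS, LAYER_SIZES)
print(n_parameters(params))               # 2502
print(forward(params, [0.5] * N_INPUTS))  # two class probabilities
```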

These hyperparameters were determined by iterative testing to achieve the best classification performance while keeping computational complexity low. The detailed model architecture is presented in Figure 4.

In addition, we chose the LBFGS (Limited-memory Broyden-Fletcher-Goldfarb-Shanno) solver with a cross-entropy loss function. LBFGS is a popular quasi-Newton algorithm for unconstrained optimization. It iteratively updates the parameters (weights and biases) of the model along a search direction that reduces the objective function; this direction is computed from an approximation of the inverse Hessian built from a limited history of recent parameter and gradient changes. LBFGS typically incorporates a line search procedure to choose a step size along the search direction that sufficiently decreases the objective function, which ensures that each step leads to a reduction in the objective. The algorithm processes the data in a single batch.
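The mechanics described above can be illustrated with a textbook L-BFGS sketch: the standard two-loop recursion computes the search direction from the last m parameter and gradient differences, and a backtracking line search enforces the Armijo sufficient-decrease condition. This is a generic illustration, not MATLAB’s implementation, whose line search and stopping rules may differ; the history size m = 10 and the tolerances are assumptions. In the study’s setting, f would be the cross-entropy loss over the full training batch and x the flattened weights and biases; here a toy quadratic is used instead.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lbfgs(f, grad, x0, m=10, max_iter=500, tol=1e-8):
    """Minimize f using L-BFGS with a backtracking (Armijo) line search."""
    x, g = list(x0), grad(x0)
    s_hist, y_hist = [], []  # the m most recent parameter and gradient differences
    for _ in range(max_iter):
        if math.sqrt(dot(g, g)) < tol:
            break
        # Two-loop recursion: r approximates H^-1 g without forming any matrix.
        q, alphas = list(g), []
        for s, y in zip(reversed(s_hist), reversed(y_hist)):
            rho = 1.0 / dot(y, s)
            a = rho * dot(s, q)
            q = [qi - a * yi for qi, yi in zip(q, y)]
            alphas.append((rho, a))
        gamma = dot(s_hist[-1], y_hist[-1]) / dot(y_hist[-1], y_hist[-1]) if s_hist else 1.0
        r = [gamma * qi for qi in q]
        for (s, y), (rho, a) in zip(zip(s_hist, y_hist), reversed(alphas)):
            b = rho * dot(y, r)
            r = [ri + (a - b) * si for ri, si in zip(r, s)]
        d = [-ri for ri in r]  # descent direction
        # Backtracking line search: halve the step until the Armijo condition holds.
        step, fx, slope = 1.0, f(x), dot(g, d)
        while step > 1e-12 and f([xi + step * di for xi, di in zip(x, d)]) > fx + 1e-4 * step * slope:
            step *= 0.5
        x_new = [xi + step * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [xn - xo for xn, xo in zip(x_new, x)]
        y = [gn - go for gn, go in zip(g_new, g)]
        if dot(s, y) > 1e-12:  # keep only pairs with positive curvature
            s_hist.append(s)
            y_hist.append(y)
            if len(s_hist) > m:
                s_hist.pop(0)
                y_hist.pop(0)
        x, g = x_new, g_new
    return x


def quadratic(v):
    """Toy objective with its minimum at (3, -1)."""
    return (v[0] - 3.0) ** 2 + 10.0 * (v[1] + 1.0) ** 2


def quadratic_grad(v):
    return [2.0 * (v[0] - 3.0), 20.0 * (v[1] + 1.0)]


print(lbfgs(quadratic, quadratic_grad, [0.0, 0.0]))  # approximately [3.0, -1.0]
```

Storing only m vector pairs instead of a full Hessian keeps memory linear in the number of parameters, which is why L-BFGS suits full-batch training of small networks.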

Among the configurations tested, the selected one gave the best results in distinguishing between experienced and learner drivers based on the feature matrix derived from the pre-processing stage.

Each training run lasted 3 min 44 s on average.

Figure 5 presents the learning curves averaged across training runs.

3. Results

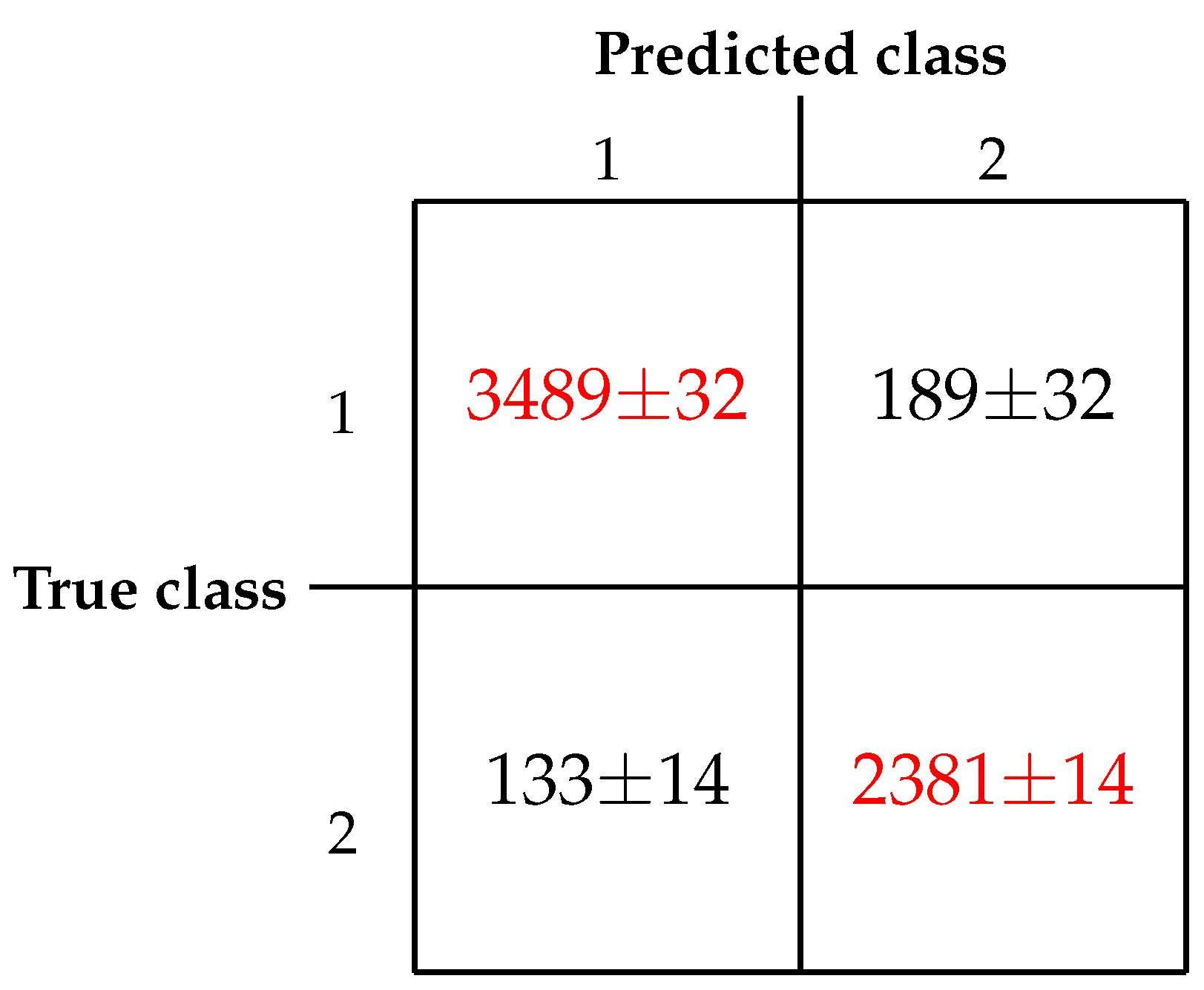

To evaluate the model’s performance, we used the previously prepared test set. The confusion matrix provides a clear visual representation of the outcomes, as illustrated in Figure 6. A confusion matrix summarizes the classification results by showing the numbers of true positive, true negative, false positive, and false negative instances, giving an overview of the model’s ability to classify instances correctly and revealing misclassifications. Here, we present the confusion matrix as the average of the values from five learning iterations ± standard deviation.

Table 1 contains the standard performance metrics derived from the confusion matrix: accuracy, precision, recall, and F1 score, which together describe how effectively the model distinguishes between experienced and learner drivers.
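The metrics in Table 1 follow directly from the four confusion-matrix counts. The sketch below treats experienced drivers (label 1) as the positive class, which is an assumption, and summarizes each metric across runs as mean ± sample standard deviation, mirroring how the confusion matrix is reported. The helper names and the confusion-matrix counts in the example are hypothetical and are not the values behind Table 1.

```python
from statistics import mean, stdev


def binary_metrics(tp, fn, fp, tn):
    """Accuracy, precision, recall and F1 from a 2x2 confusion matrix (positive class first)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


def mean_std(runs):
    """Summarize each metric over repeated runs as (mean, sample standard deviation)."""
    return {name: (mean([r[name] for r in runs]), stdev([r[name] for r in runs]))
            for name in runs[0]}


# Hypothetical confusion matrices from two runs
runs = [binary_metrics(90, 10, 5, 95), binary_metrics(88, 12, 6, 94)]
print(mean_std(runs))
```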

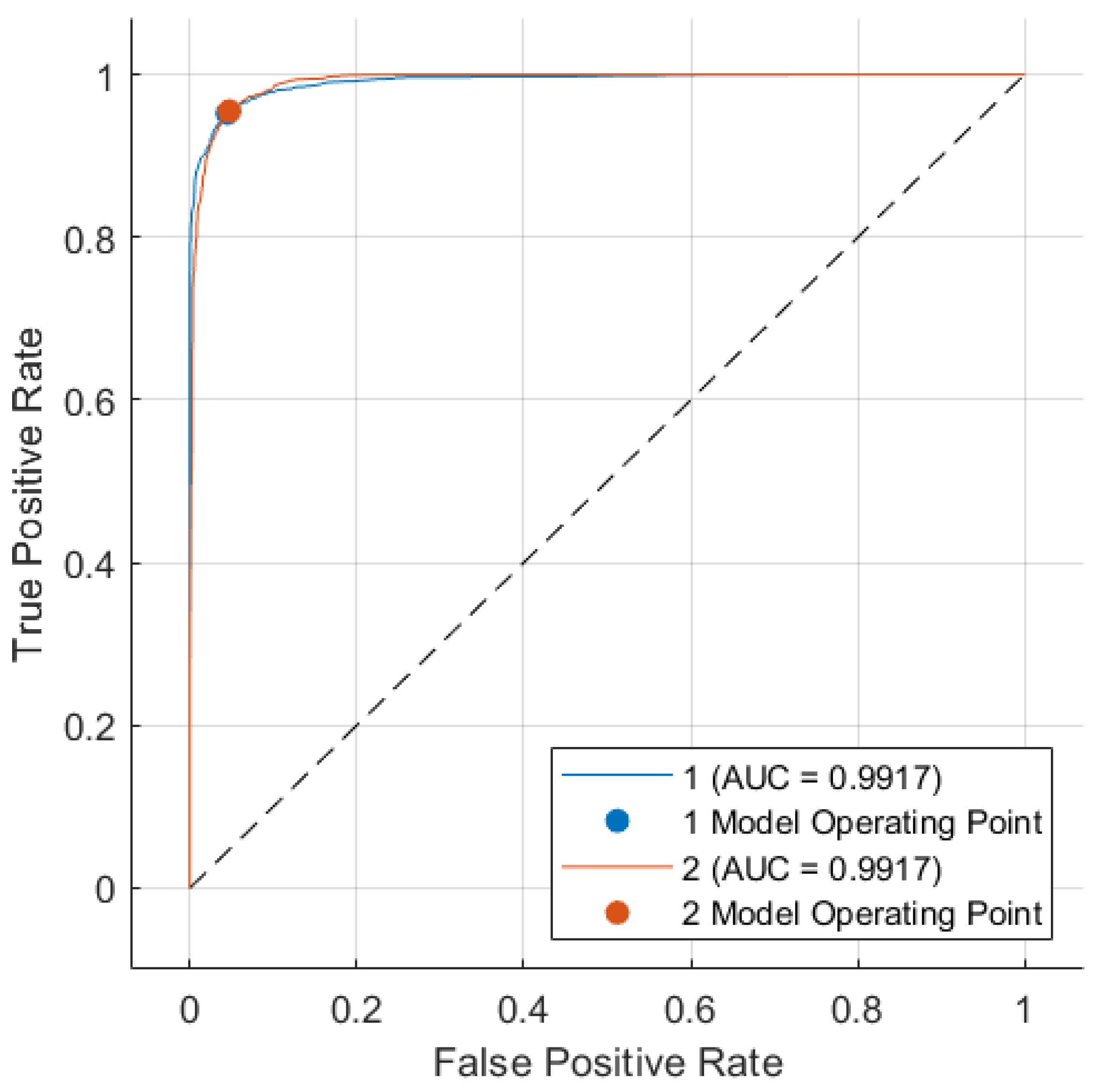

Furthermore, Figure 7 presents the receiver operating characteristic (ROC) curves along with the area under the curve (AUC) values. ROC curves illustrate the trade-off between the true positive rate and the false positive rate across classification thresholds. AUC quantifies the overall performance of the classifier, with a higher AUC indicating better discriminatory ability.
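An ROC curve and its AUC can be computed by sweeping the decision threshold over the classifier’s scores for the positive class and integrating the resulting curve with the trapezoidal rule. This is a minimal sketch with hypothetical helper names, assuming 0/1 labels with 1 marking the positive class; tied scores are processed together so that the curve does not depend on their order.

```python
def roc_curve(scores, labels):
    """ROC points (FPR, TPR) obtained by lowering the threshold through the distinct scores."""
    pos = sum(labels)
    neg = len(labels) - pos
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:  # handle tied scores together
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


# One learner window scores above one experienced window, so 3 of 4 pairs are ordered correctly.
print(auc(roc_curve([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])))  # 0.75
```

The trapezoidal AUC equals the fraction of positive-negative pairs that the classifier ranks correctly, which is why an AUC of 0.5 corresponds to random guessing.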

The combined presentation of the confusion matrix, performance metrics, and ROC curves provides a comprehensive evaluation of the model’s effectiveness in driver classification, highlighting its strengths and areas for potential refinement.

The computations were performed on a personal computer equipped with an Intel(R) Core(TM) i5-9300H CPU running at 2 GHz, 16 GB of RAM (random access memory), and a single 8 GB NVIDIA GeForce GTX 1650 GPU. Pre-processing, classification, and analyses were executed in MATLAB R2023b with the Deep Learning Toolbox. The model was trained using the trainnet function in MATLAB.

4. Discussion

The high accuracy of 94% achieved in differentiating between experienced and novice drivers through machine learning analysis of wearable sensor data recorded in real-world conditions is notable. It indicates the robustness and adaptability of the developed classification model despite the inherent challenges posed by the unpredictable nature of real-world driving environments. Achieving such accuracy highlights the potential of wearable sensor technology coupled with advanced machine learning techniques to effectively monitor and classify driver behavior.

Our results are slightly better than those reported by Chen et al. [75] for risk level recognition in distracted drivers based on video and vehicle sensor data (accuracy of 94% vs. 92%) and similar to those reported in [76] for four types of attention impairment (84.1%–100% correct detection rate vs. 94% accuracy). Our study indicates that the differences between experienced and inexperienced drivers lie in eye fixations, as reported by Falkmer and Gregersen [77], and in head movements.

It is important to acknowledge that while our study demonstrates promising results, there are limitations to consider. Despite efforts to standardize testing conditions, factors beyond our control, such as varying road conditions, traffic density, and weather conditions, may have influenced the accuracy of our classification model. Furthermore, the generalizability of our findings may be limited by the specific characteristics of the study population and the driving environment.

Moving forward, future research could explore ways to mitigate the impact of external factors on classification accuracy, such as incorporating real-time data streams and adaptive learning algorithms. Furthermore, investigating the potential integration of additional sensor modalities, such as video or audio data, could provide more comprehensive information on driver behavior and improve classification performance.

In terms of practical applications, our findings hold promise for the development of intelligent driver monitoring systems aimed at improving road safety. By accurately identifying the differences in driving behavior between experienced and novice drivers, such systems could provide valuable feedback and interventions to improve driver training programs, promote safe driving practices, and mitigate the risk of accidents.

In general, our study contributes to the growing body of research on driver classification and intelligent transportation systems, underscoring the potential of wearable sensor technology and machine learning approaches to revolutionize the way we monitor and understand driver behavior in real-world settings. Through continued research and innovation, we can strive towards creating safer and more efficient transportation systems for all road users.

5. Conclusions

For both experienced and learner drivers, smart glasses can collect data on driving behaviors and patterns. This information can be used for performance analysis, helping drivers identify areas for improvement and adopt safer driving habits. In the case of learner drivers, smart glasses can be a valuable tool during driving lessons. Instructors can use the collected data to provide targeted feedback and tailor their teaching approach based on the individual needs of the learner.

In conclusion, we achieved 94% accuracy in classifying drivers based on data from wearable sensors under real-world conditions. The unpredictability and complexity of real-world driving environments introduce numerous variables beyond our control, which makes this accuracy particularly noteworthy: despite efforts to standardize testing conditions, external factors inherent to real-road scenarios make precise driver classification challenging.

Author Contributions

Conceptualization, N.P. and R.D.; methodology, N.P., R.D., and K.D.; software, N.P.; validation, N.P., R.D., and K.D.; formal analysis, N.P. and K.D.; investigation, N.P. and S.S.; resources, R.D.; data curation, N.P. and R.D.; writing—original draft preparation, N.P., R.D., and S.S.; writing—review and editing, N.P., R.D., K.D., S.S., and M.J.; visualization, N.P. and S.S.; supervision, R.D. and E.T.; project administration, N.P. and R.D.; funding acquisition, R.D., E.T. and M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by a postdoctoral grant number 07/040/RGH22/1015 under the "Excellence Initiative – Research University" pro-quality program - rectoral postdoctoral grants.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of the Medical University of Silesia (protocol code KNW/0022/KB1/18, 16 October 2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Acknowledgments

We would like to express our gratitude to the volunteers who participated in the study, as well as to the driving school that facilitated the collection of signals from novice drivers.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ANOVA | Analysis of variance |
| AUC | Area Under the Curve |
| EOG | Electrooculography, electrooculographic |
| IMU | Inertial Measurement Unit |
| LBFGS | Limited-memory Broyden-Fletcher-Goldfarb-Shanno |
| RAM | Random access memory |
| ReLU | Rectified Linear Unit |
| ROC | Receiver Operating Characteristic |

References

- European Commission. Road accidents by NUTS 3 regions. Available at https://ec.europa.eu/eurostat/databrowser/view/tran_sf_roadnu/default/table?lang=en&category=tran.tran_sf.tran_sf_road, 2024. Accessed 19 April 2024.

- Polish Road Safety Observatory. Final data on road accidents in Poland in 2022. Available online at https://obserwatoriumbrd.pl/en/final-data-on-road-accidents-in-poland-in-2022/, 2023. Accessed 19 April 2024.

- Polish Road Safety Observatory. Accident Data (Preliminary and Final Figures). Available online at https://obserwatoriumbrd.pl/wp-content/uploads/2024/03/Accidents_Poland_2019_2023_EN_final.pdf, 2024. Accessed 19 April 2024.

- Hsiao, H.; Chang, J.; Simeonov, P. Preventing Emergency Vehicle Crashes: Status and Challenges of Human Factors Issues. Human Factors 2018, 60, 1048–1072. Publisher: SAGE Publications Inc. [CrossRef]

- Doniec, R.; Piaseczna, N.; Duraj, K.; Sieciński, S.; Irshad, M.T.; Karpiel, I.; Urzeniczok, M.; Huang, X.; Piet, A.; Nisar, M.A.; Grzegorzek, M. The detection of alcohol intoxication using electrooculography signals from smart glasses and machine learning techniques. Systems and Soft Computing 2024, 6, 200078. [CrossRef]

- Hao, H.; Li, Y.; Medina, A.; Gibbons, R.; Wang, L. Understanding crashes involving roadway objects with SHRP 2 naturalistic driving study data. Journal of safety research 2020, 73, 199–209. [CrossRef]

- Zhang, L.; Cui, B.; Yang, M.; Guo, F.; Wang, J. Effect of Using Mobile Phones on Driver’s Control Behavior Based on Naturalistic Driving Data. International Journal of Environmental Research and Public Health 2019, 16, 1464. [CrossRef]

- Ige, J.; Banstola, A.; Pilkington, P. Mobile phone use while driving: Underestimation of a global threat. Journal of transport and health 2016, 3, 4–8. [CrossRef]

- Backer-Grøndahl, A.; Sagberg, F. Driving and telephoning: Relative accident risk when using hand-held and hands-free mobile phones. Safety Science 2011, 49, 324–330. [CrossRef]

- Pöysti, L.; Rajalin, S.; Summala, H. Factors influencing the use of cellular (mobile) phone during driving and hazards while using it. Accident; analysis and prevention 2005, 37 1, 47–51. [CrossRef]

- Albert, G.; Lotan, T.; Weiss, P.; Shiftan, Y. The challenge of safe driving among elderly drivers. Healthcare Technology Letters 2017, 5, 45 – 48. [CrossRef]

- Schmeidler, K.; Fencl, I. Intelligent transportation systems for Czech ageing generation. Perspectives on Science 2016, 7, 304–311. [CrossRef]

- Lyon, C.; Mayhew, D.; Granié, M.; Robertson, R.; Vanlaar, W.; Woods-Fry, H.; Thévenet, C.; Furian, G.; Soteropoulos, A. Age and road safety performance: Focusing on elderly and young drivers. IATSS Research 2020. [CrossRef]

- Burns, P.C. Navigation and the mobility of older drivers. The journals of gerontology. Series B, Psychological sciences and social sciences 1999, 54 1, S49–55. [CrossRef]

- Martins, M.A.; Garcez, T.V. A multidimensional and multi-period analysis of safety on roads. Accident; analysis and prevention 2021, 162, 106401. [CrossRef]

- Baskov, V.; Isaeva, E. INFORMATION-DIGITAL APPROACH TO THE ASSESSMENT OF THE LEVEL OF ROAD SAFETY. World of transport and technological machines 2022. [CrossRef]

- Salmon, P.; McClure, R.; Stanton, N. Road transport in drift? Applying contemporary systems thinking to road safety. Safety Science 2012, 50, 1829–1838. [CrossRef]

- Kapitaniak, B.; Walczak, M.; Kosobudzki, M.; Jóźwiak, Z.; Bortkiewicz, A. Application of eye-tracking in the testing of drivers: A review of research. International journal of occupational medicine and environmental health 2015, 28 6, 941–54. [CrossRef]

- Mbouna, R.O.; Kong, S.; Chun, M.G. Visual Analysis of Eye State and Head Pose for Driver Alertness Monitoring. IEEE Transactions on Intelligent Transportation Systems 2013, 14, 1462–1469. [CrossRef]

- Yan, X.; Abas, A. Preliminary on Human Driver Behavior: A Review. International journal of artificial intelligence 2020, 7, 29–34. [CrossRef]

- Ramírez, J.M.; Rodríguez, M.D.; Andrade, A.G.; Castro, L.A.; Beltrán, J.; Armenta, J.S. Inferring Drivers’ Visual Focus Attention Through Head-Mounted Inertial Sensors. IEEE Access 2019, 7, 185422–185432. [CrossRef]

- Galley, N. The evaluation of the electrooculogram as a psychophysiological measuring instrument in the driver study of driver behaviour. Ergonomics 1993, 36 9, 1063–70. [CrossRef]

- Yang, L.; Ma, R.; Zhang, H.M.; Guan, W.; Jiang, S. Driving behavior recognition using EEG data from a simulated car-following experiment. Accident; analysis and prevention 2017, 116, 30–40. [CrossRef]

- Savkin, A.; Hussain, Z.M.; Abdel-Qader, I.; Abosaq, H.; Ramzan, M.; AlThobiani, F.; Abid, A.; Aamir, K.M.; Abdushkour, H.A.; Irfan, M.; Gommosani, M.E.; Ghonaim, S.M.; Shamji, V.R.; Rahman, S. Unusual Driver Behavior Detection in Videos Using Deep Learning Models. Sensors (Basel, Switzerland) 2022, 23. [CrossRef]

- Cho, H.; Yoon, S. Divide and Conquer-Based 1D CNN Human Activity Recognition Using Test Data Sharpening †. Sensors (Basel, Switzerland) 2018, 18. [CrossRef]

- Murugan, S.; Sivakumar, P.; Kavitha, C.; Harichandran, A.; Lai, W. An Electro-Oculogram (EOG) Sensor’s Ability to Detect Driver Hypovigilance Using Machine Learning. Sensors (Basel, Switzerland) 2023, 23. [CrossRef]

- Shahverdy, M.; Fathy, M.; Berangi, R.; Sabokrou, M. Driver behaviour detection using 1D convolutional neural networks. Electronics Letters 2021. [CrossRef]

- Doniec, R.J.; Sieciński, S.; Duraj, K.M.; Piaseczna, N.J.; Mocny-Pachońska, K.; Tkacz, E.J. Recognition of Drivers’ Activity Based on 1D Convolutional Neural Network. Electronics 2020, 9. [CrossRef]

- Doniec, R.; Piaseczna, N.; Li, F.; Duraj, K.; Pour, H.H.; Grzegorzek, M.; Mocny-Pachońska, K.; Tkacz, E. Classification of Roads and Types of Public Roads Using EOG Smart Glasses and an Algorithm Based on Machine Learning While Driving a Car. Electronics 2022, 11, 2960. [CrossRef]

- Doniec, R.; Konior, J.; Sieciński, S.; Piet, A.; Irshad, M.T.; Piaseczna, N.; Hasan, M.A.; Li, F.; Nisar, M.A.; Grzegorzek, M. Sensor-Based Classification of Primary and Secondary Car Driver Activities Using Convolutional Neural Networks. Sensors 2023, 23. [CrossRef]

- Doniec, R.; Sieciński, S.; Piaseczna, N.; Duraj, K.; Chwał, J.; Gawlikowski, M.; Tkacz, E. Classification of Recorded Electrooculographic Signals on Drive Activity for Assessing Four Kind of Driver Inattention by Bagged Trees Algorithm: A Pilot Study. In Lecture Notes in Networks and Systems; Springer Nature Switzerland, 2023; Vol. 746, pp. 225–236. [CrossRef]

- Piaseczna, N.; Doniec, R.; Sieciński, S.; Grzegorzek, M.; Tkacz, E. Does glucose affect our vision? A preliminary study using smart glasses. 2023 IEEE EMBS Special Topic Conference on Data Science and Engineering in Healthcare, Medicine and Biology, 2023, pp. 113–114. [CrossRef]

- Sieklicka, A.; Chądzyńska, P.; Iwanowicz, D. Analysis of the Behavior of Vehicle Drivers at Signal-Controlled Intersection Approach while Waiting for a Green Signal—A Case Study in Poland. Applied Sciences 2022. [CrossRef]

- Emerling, M.; Janik, N.; Moritz, J.; Mróz, T.; Nowik, M.; Tacica, M.; Węgrzyn, T.; Paradowska, M. Attitudes of Young People in Poland Towards Road Safety. European Journal of Management 2018, 6, 61–79. [CrossRef]

- Pędzierska, M.; Pawlak, P.; Kruszewski, M.; Jamson, S. Estimated Assessment of the Potential Impact of Driver Assistance Systems Used in Automated Vehicles on the Level of Road Safety in Poland. Transport Problems 2020, 15, 325–338. [CrossRef]

- Ye, Z.; Shi, X.; Strong, C.; Larson, R. Vehicle-based sensor technologies for winter highway operations. IET Intelligent Transport Systems 2012, 6, 336–345. [CrossRef]

- Srinivas, C.M.V.; Jaglan, S.; S, S.H.; Ghamande, M.; Trivedi, P.U.; Ramakrishnan, R. Integrating Smart Technologies for Enhanced Traffic Management and Road Safety: A Data-Driven Approach. 2023 Second International Conference on Augmented Intelligence and Sustainable Systems (ICAISS), 2023, pp. 748–754. [CrossRef]

- Dang, X.; Wang, G.; Zhou, X.; Wang, S. Evaluation of Regional Road Transport Safety Service Level With Edge Computing in Scalable Sensor and Actuator Networks. Scalable Computing: Practice and Experience 2023. [CrossRef]

- Yang, Y.; Sun, H.; Liu, T.; Huang, G.B.; Sourina, O. Driver Workload Detection in On-Road Driving Environment Using Machine Learning. In Proceedings of ELM-2014 Volume 2; Springer International Publishing, 2015; pp. 389–398. [CrossRef]

- Kaliraja, C.; Chitradevi, D.; Rajan, A. Predictive Analytics of Road Accidents Using Machine Learning. 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), 2022, pp. 1782–1786. [CrossRef]

- Megnidio-Tchoukouegno, M.; Adedeji, J. Machine Learning for Road Traffic Accident Improvement and Environmental Resource Management in the Transportation Sector. Sustainability 2023. [CrossRef]

- Feng, Y.R.; Meuleners, L.; Stevenson, M.; Heyworth, J.; Murray, K.; Fraser, M.; Maher, S. The Impact of Cognition and Gender on Speeding Behaviour in Older Drivers with and without Suspected Mild Cognitive Impairment. Dove Press, 2021. [CrossRef]

- Chevalier, A.; Coxon, K.; Rogers, K.; Chevalier, A.; Wall, J.; Brown, J.; Clarke, E.; Ivers, R.; Keay, L. Predictors of older drivers’ involvement in high-range speeding behavior. Traffic Injury Prevention 2017, 18, 124–131. [CrossRef]

- Eramudugolla, R.; Huque, M.H.; Wood, J.; Anstey, K. On-Road Behavior in Older Drivers With Mild Cognitive Impairment. Journal of the American Medical Directors Association 2020. [CrossRef]

- Kawano, N.; Iwamoto, K.; Ozaki, N. Driving-Related Risks and Mobility in Elderly Drivers with MCI. Innovation in Aging 2017, 1, 1195. [CrossRef]

- Lundberg, C.; Hakamies-Blomqvist, L.; Almkvist, O.; Johansson, K. Impairments of some cognitive functions are common in crash-involved older drivers. Accident Analysis & Prevention 1998, 30, 371–377. [CrossRef]

- Peel, N.; Alapatt, L.J.; Jones, L.; Hubbard, R. The Association Between Gait Speed and Cognitive Status in Community-Dwelling Older People: A Systematic Review and Meta-analysis. The Journals of Gerontology: Series A, Biological Sciences and Medical Sciences 2018, 74, 943–948. [CrossRef]

- Chevalier, A.; Coxon, K.; Rogers, K.; Chevalier, A.; Wall, J.; Brown, J.; Clarke, E.; Ivers, R.; Keay, L. A longitudinal investigation of the predictors of older drivers’ speeding behaviour. Accident Analysis & Prevention 2016, 93, 41–47. [CrossRef]

- Casutt, G.; Theill, N.; Martin, M.; Keller, M.; Jäncke, L. The drive-wise project: driving simulator training increases real driving performance in healthy older drivers. Frontiers in Aging Neuroscience 2014, 6. [CrossRef]

- Iverson, D.J.; Gronseth, G.S.; Reger, M.A.; Classen, S.; Dubinsky, R.M.; Rizzo, M. Practice Parameter update: Evaluation and management of driving risk in dementia. Neurology 2010, 74, 1316–1324. [CrossRef]

- Lam, L. Factors associated with fatal and injurious car crash among learner drivers in New South Wales, Australia. Accident Analysis & Prevention 2003, 35, 333–340. [CrossRef]

- Amin, S. Backpropagation – Artificial Neural Network (BP-ANN): Understanding gender characteristics of older driver accidents in West Midlands of United Kingdom. Safety Science 2020. [CrossRef]

- Classen, S.; Shechtman, O.; Awadzi, K.; Joo, Y.; Lanford, D.N. Traffic violations versus driving errors of older adults: informing clinical practice. The American Journal of Occupational Therapy 2010, 64, 233–241. [CrossRef]

- Bauer, M.J.; Adler, G.; Kuskowski, M.; Rottunda, S.J. The Influence of Age and Gender on the Driving Patterns of Older Adults. Journal of Women & Aging 2003, 15, 3–16. [CrossRef]

- Stamatiadis, N.; Deacon, J.A. Trends in highway safety: effects of an aging population on accident propensity. Accident Analysis & Prevention 1995, 27, 443–459. [CrossRef]

- Aksjonov, A.; Nedoma, P.; Vodovozov, V.; Petlenkov, E.; Herrmann, M. Detection and Evaluation of Driver Distraction Using Machine Learning and Fuzzy Logic. IEEE Transactions on Intelligent Transportation Systems 2019, 20, 2048–2059. [CrossRef]

- Sun, W.; Aguirre, M.; Jin, J.J.; Feng, F.; Rajab, S.; Saigusa, S.; Dsa, J.; Bao, S. Online distraction detection for naturalistic driving dataset using kinematic motion models and a multiple model algorithm, 2021. [CrossRef]

- Wang, Y.K.; Chen, S.A.; Lin, C.T. An EEG-based brain–computer interface for dual task driving detection. Neurocomputing 2014, 129, 85–93. [CrossRef]

- Regalia, G.; Gerboni, G.; Migliorini, M.; Lai, M.; Pham, J.; Puri, N.; Pavlova, M.K.; Picard, R.W.; Sarkis, R.A.; Onorati, F. Sleep assessment by means of a wrist actigraphy-based algorithm: agreement with polysomnography in an ambulatory study on older adults. Chronobiology International 2021, 38, 400–414. PMID: 33213222. [CrossRef]

- Chen, L.W.; Chen, H.M. Driver Behavior Monitoring and Warning With Dangerous Driving Detection Based on the Internet of Vehicles. IEEE Transactions on Intelligent Transportation Systems 2021, 22, 7232–7241. [CrossRef]

- Choi, M.; Koo, G.; Seo, M.; Kim, S.W. Wearable Device-Based System to Monitor a Driver’s Stress, Fatigue, and Drowsiness. IEEE Transactions on Instrumentation and Measurement 2018, 67, 634–645. [CrossRef]

- Li, Z.; Zhang, K.; Chen, B.; Dong, Y.; Zhang, L. Driver identification in intelligent vehicle systems using machine learning algorithms. IET Intelligent Transport Systems 2018. [CrossRef]

- Lindow, F.; Kashevnik, A. Driver Behavior Monitoring Based on Smartphone Sensor Data and Machine Learning Methods. 2019 25th Conference of Open Innovations Association (FRUCT), 2019, pp. 196–203. [CrossRef]

- Yang, C.H.; Liang, D.; Chang, C.C. A novel driver identification method using wearables. 2016 13th IEEE Annual Consumer Communications & Networking Conference (CCNC), 2016, pp. 1–5. [CrossRef]

- Ledger, S.A.; Bennett, J.M.; Chekaluk, E.; Batchelor, J.; Meco, A.D. Cognitive function and driving in middle adulthood: Does age matter? Transportation Research Part F: Traffic Psychology and Behaviour 2019. [CrossRef]

- Lee, J.; Oh, E.; Kim, S.; Sohn, E.; Lee, A. Comparisons of Cognitive Status and Driving Ability in the Elderly. Alzheimer’s & Dementia 2017, 13. [CrossRef]

- Anstey, K.; Wood, J. Chronological age and age-related cognitive deficits are associated with an increase in multiple types of driving errors in late life. Neuropsychology 2011, 25, 613–621. [CrossRef]

- Owsley, C.; Ball, K.; Sloane, M.; Roenker, D.; Bruni, J. Visual/cognitive correlates of vehicle accidents in older drivers. Psychology and Aging 1991, 6, 403–415. [CrossRef]

- Act of 6 September 2001 on Road Traffic. Journal of Laws of the Republic of Poland (Dz.U. 1997 nr 28 poz. 152). Available at: http://isap.sejm.gov.pl/isap.nsf/download.xsp/WDU20011251371/U/D20011371Lj.pdf.

- Act of 5 January 2011 on Vehicle Drivers. Journal of Laws of the Republic of Poland (Dz.U. 2011 nr 30 poz. 151). Available at: http://prawo.sejm.gov.pl/isap.nsf/DocDetails.xsp?id=WDU20110300151.

- An, B.W.; Shin, J.H.; Kim, S.Y.; Kim, J.; Ji, S.; Park, J.; Lee, Y.; Jang, J.; Park, Y.G.; Cho, E.; Jo, S.; Park, J. Smart Sensor Systems for Wearable Electronic Devices. Polymers 2017, 9. [CrossRef]

- JINS MEME. JINS MEME glasses specifications. Accessed 31 January 2024.

- Piaseczna, N.; Duraj, K.; Doniec, R.; Tkacz, E. Evaluation of Intoxication Level with EOG Analysis and Machine Learning: A Study on Driving Simulator. 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), 2023, pp. 1–4. [CrossRef]

- Scheffé, H. The Analysis of Variance, Wiley Classics Library ed.; A Wiley Publication in Mathematical Statistics; Wiley-Interscience: New York, 1999.

- Chen, H.; Liu, H.; Chen, H.; Huang, J. Towards Sustainable Safe Driving: A Multimodal Fusion Method for Risk Level Recognition in Distracted Driving Status. Sustainability 2023, 15, 9661. [CrossRef]

- Lim, S.; Yang, J.H. Driver state estimation by convolutional neural network using multimodal sensor data. Electronics Letters 2016, 52, 1495–1497. [CrossRef]

- Falkmer, T.; Gregersen, N.P. A Comparison of Eye Movement Behavior of Inexperienced and Experienced Drivers in Real Traffic Environments. Optometry and Vision Science 2005, 82, 732–739. [CrossRef]


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).