3.1. Dataset Description

The dataset used in this study comprises two distinct sets of data: recordings obtained in real-road conditions and recordings obtained in a simulated environment. Real-road data were collected from 30 subjects, including experienced and inexperienced drivers. Experienced drivers drove their own cars, while inexperienced drivers operated a specially designated and labeled vehicle under the guidance of an instructor. In both cases, the person responsible for data collection sat in the back seat. All drivers traveled an identical 28.7 km route, which included elements evaluated in the practical driving exam in Poland, specifically driving through intersections (X-shaped and circular) in each direction and parking (parallel, perpendicular and diagonal). A comprehensive description of the experimental protocol is provided in [56,57].

The simulated data were recorded using a professional driving simulator previously used at the driving school to train novice drivers. The components of the simulator stand included a computer with the Microsoft Windows 10 operating system, a steel structural framework with an adjustable chair, a set of Logitech controls (gearbox, pedals, and steering wheel), three 27-inch LED monitors designed for extended use, and dedicated software. The experimental setup for the simulation is extensively described in the work of Piaseczna et al. and Doniec et al. [11,58]. Data were recorded from 30 volunteers; sixteen subjects in this group had held a driver’s license for several years, while the rest had no or limited driving experience.

Table 1 provides a summary description of the study group; a detailed description is available as supplementary material (File S1).

In both setups, the data were captured using JINS MEME ES_R smart glasses (see Figure 1), which incorporate a 3-axis gyroscope, an accelerometer, and a three-point electrooculography (EOG) sensor [19]. The types of data that can be recorded with these smart glasses are especially valuable in driving-related research [53,59,60,61]. The selection of these glasses was based on the assumption that they do not distract the driver and are suitable for use in a car environment [11,56,57,58,62]. To compare data between real-road and simulated environments, it is ideal to record the data with the same type of equipment in both, which avoids equipment-related bias. For this reason, a traditional driving simulator was preferred over VR-based simulators in this research, as it does not exert an influence on the head and face muscles that would bias the recorded signals.

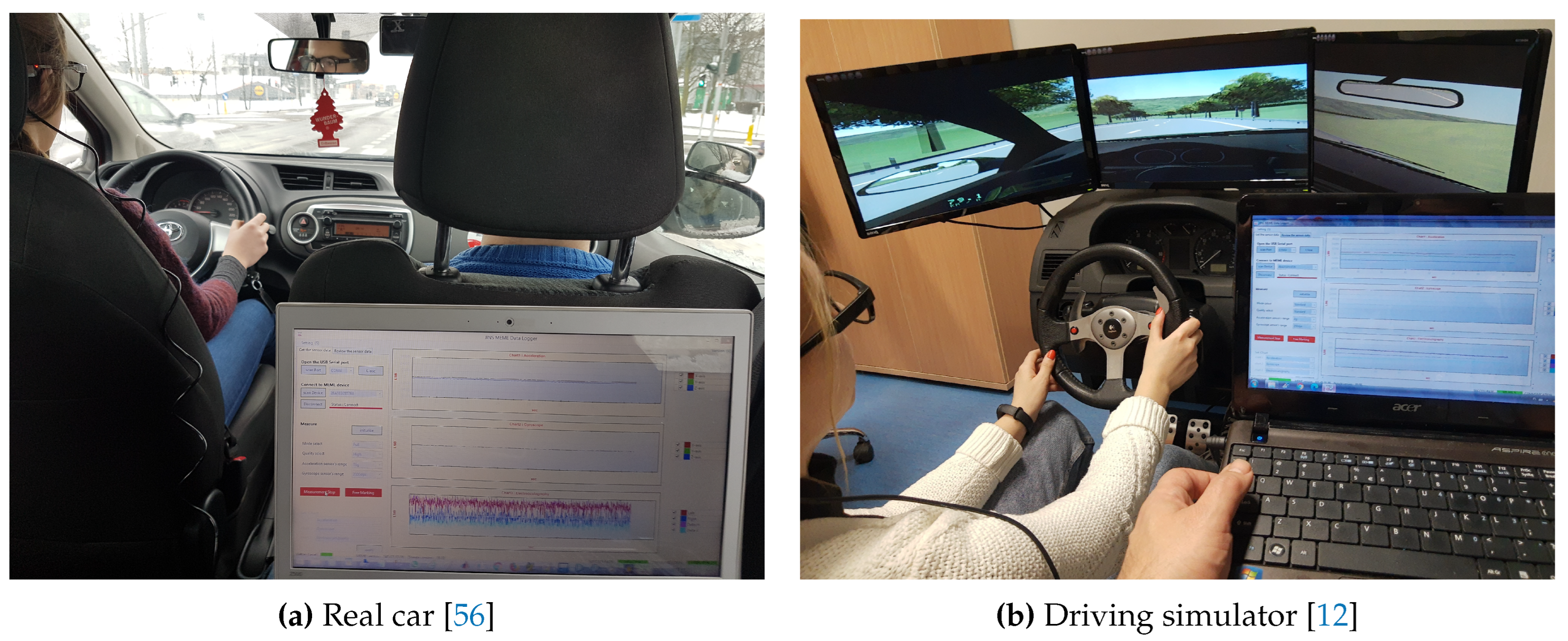

The tasks performed on the simulator mirrored those conducted in real-road conditions; that is, they included driving through different types of intersections and different types of parking. In this case, each subject performed several repetitions of each activity. Both setups are illustrated in Figure 2.

The dataset consists of 390 recordings from driving in real road conditions and 1,829 recordings from driving on the simulator. The research adhered to the principles outlined in the Declaration of Helsinki and all participants gave their informed consent before participating in the study.

3.3. Data Similarities

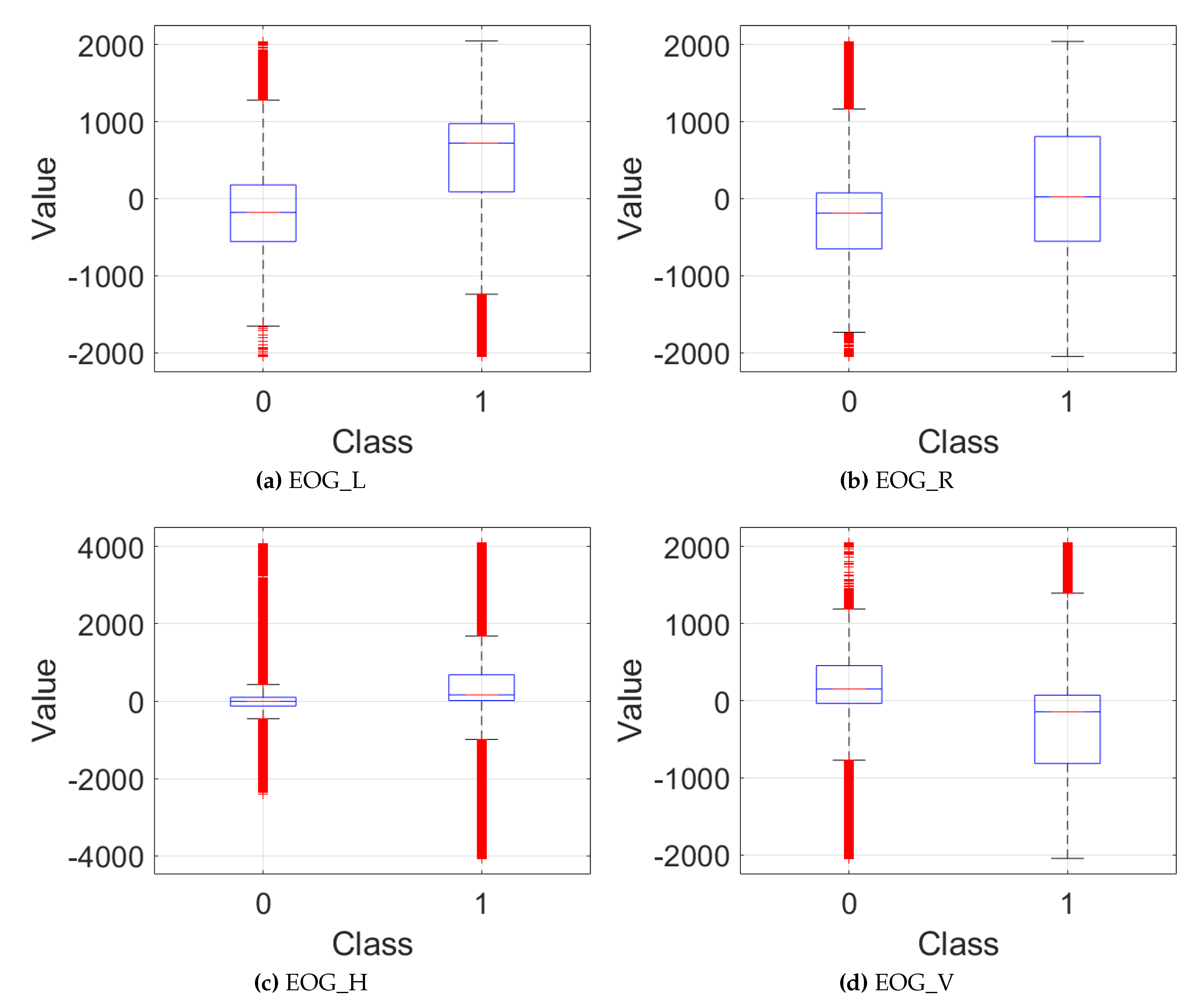

To check the similarity of the signals in the two categories, we first calculated general statistics for each signal channel in each class (see Table 2). To provide a visual summary of the central tendency, variability, and outliers of the data, we generated box charts for each channel (see Figure 3).

The analysis reveals differences in the means of the signals between the two categories. In the next step, we checked whether these differences are statistically significant by performing a one-way ANOVA and Scheffé’s method for multiple comparisons. For each test, we obtained a high F value and a p-value reported as 0.
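As an illustration of the test statistic, the following minimal sketch computes the one-way ANOVA F value as the ratio of between-group to within-group mean squares. The function name and the toy samples are hypothetical and are not taken from the study data; the source does not describe the implementation that was actually used.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of sample groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of samples around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Toy samples standing in for one channel of each class (illustrative values only).
road = [1.0, 2.0, 3.0]
simulator = [2.0, 3.0, 4.0]
f_value = one_way_anova_f([road, simulator])
```

A large F value means that the variation between class means is large relative to the variation inside each class. With very many samples, the corresponding p-value can fall below floating-point precision and be printed as 0.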

Despite the clear differences in the average values of signals belonging to different classes, high intra-class variance can also be observed, which makes the classification of a single data sample nontrivial. For this reason, we decided to analyze more specific dependencies between the signals of the two groups. For that purpose, we normalized each signal using min-max normalization to ensure that the similarity measures reflect similarities in shape and timing between the signals, rather than being influenced by differences in amplitude. Next, for each pair of signals on the corresponding channels, we computed the following parameters:

- Pearson’s correlation coefficient,
- cross-correlation coefficient,
- maximum magnitude-square coherence,
- Euclidean distance.

Each signal from one category was compared with each signal from the other category. The aforementioned parameters were computed on the signal windows, which resulted in sixteen 44,225 × 25,287 matrices (one per parameter and channel). To summarize the output and evaluate the overall similarity between the two categories, we took into account the average, maximum, and minimum values for each channel, as well as their mean value (see Table 3, Table 5, Table 7, and Table 9).
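The procedure of normalizing each window, comparing every cross-category pair, and summarizing the resulting matrix can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the toy windows, and the stand-in measure `mean_abs_difference` are hypothetical, and any of the four parameters listed earlier could be passed as `measure` instead.

```python
def min_max_normalize(signal):
    """Rescale a signal to the range [0, 1]."""
    lo, hi = min(signal), max(signal)
    if hi == lo:
        return [0.0 for _ in signal]  # constant signal carries no shape information
    return [(v - lo) / (hi - lo) for v in signal]


def pairwise_summary(group_a, group_b, measure):
    """Apply `measure` to every cross-group pair and summarize the matrix."""
    matrix = [[measure(a, b) for b in group_b] for a in group_a]
    flat = [value for row in matrix for value in row]
    return {"min": min(flat), "max": max(flat), "mean": sum(flat) / len(flat)}


def mean_abs_difference(a, b):
    """Stand-in measure used only to make this example runnable."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


# Two toy windows per category (illustrative values only).
road_windows = [min_max_normalize(w) for w in ([0, 5, 10], [2, 4, 6])]
sim_windows = [min_max_normalize(w) for w in ([10, 20, 30], [3, 2, 1])]
summary = pairwise_summary(road_windows, sim_windows, mean_abs_difference)
```

For matrices of the size reported here, a practical implementation would accumulate the minimum, maximum, and running mean instead of holding every value in memory.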

Pearson’s correlation coefficient is a measure of the linear relationship between two continuous variables. It quantifies the degree to which a change in one variable is associated with a change in another variable. The formula for the Pearson correlation coefficient $r$ between two variables $X$ and $Y$ is as follows:

$$r = \frac{\sum_{i} (X_i - \bar{X})(Y_i - \bar{Y})}{\sqrt{\sum_{i} (X_i - \bar{X})^2}\,\sqrt{\sum_{i} (Y_i - \bar{Y})^2}}$$

where:

- $X_i$ and $Y_i$ are individual data points,
- $\bar{X}$ and $\bar{Y}$ are the means of $X$ and $Y$, respectively,
- $\sum$ denotes the summation over all data points [63].
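As a minimal sketch, Pearson’s coefficient can be computed as the sum of products of mean-centered samples divided by the square root of the product of the two sums of squared deviations. The function name and the toy signals below are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / math.sqrt(var_x * var_y)


# Toy min-max normalized signals (illustrative values only).
rising = [0.0, 0.25, 0.5, 1.0]
falling = [1.0, 0.75, 0.5, 0.0]
r_same = pearson_r(rising, rising)       # identical shape
r_opposite = pearson_r(rising, falling)  # mirrored shape
```

Because the coefficient is invariant to positive linear rescaling of either signal, min-max normalization does not change its value.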

Table 3 contains a summary of the values of the Pearson coefficients.

Table 3. Pearson correlation coefficient statistics.

| Channel | Minimum | Maximum | Average |
| --- | --- | --- | --- |
| EOG_L | -0.9888 | 0.9637 | 0.0000 |
| EOG_R | -0.9757 | 0.9784 | 0.0000 |
| EOG_H | -0.9737 | 0.9629 | 0.0000 |
| EOG_V | -0.9748 | 0.9758 | 0.0000 |
| Average | -0.8504 | 0.8705 | 0.0000 |

The strength and direction of the linear relationship between two signals should be interpreted as follows:

- r close to 1 indicates a strong positive linear relationship. As one variable increases, the other variable tends to increase.
- r close to -1 indicates a strong negative linear relationship. As one variable increases, the other variable tends to decrease.
- r close to 0 indicates a weak or no linear relationship. Changes in one variable do not predict changes in the other variable.

Table 4 shows the percentage of values for which the linear correlation coefficient r is within the specified ranges.

Most signal pairs are in the range of weak linear correlation, which means that there is only a slight linear relationship between them, i.e. changes in signal from one group are not linearly associated with changes in the signal from another group. However, the relationship between the signals might be non-linear.

Cross-correlation measures the similarity of two signals or time series at different time delays. In other words, cross-correlation provides an intuitive way of measuring the similarity of signals by comparing them at different time shifts. The cross-correlation function is defined as follows:

$$R_{xy}(k) = \sum_{n} x(n)\, y^{*}(n - k)$$

where:

- $R_{xy}(k)$ is the cross-correlation function,
- $x(n)$ and $y(n)$ are the input signals,
- $y^{*}(n - k)$ is the complex conjugate of the signal $y(n)$ shifted by $k$ samples (time lag) [64,65].
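The source does not state how the cross-correlation function was reduced to a single coefficient. The sketch below assumes one common choice: the maximum over all lags of the cross-correlation, normalized by the square root of the product of the signal energies. The function names and toy signals are hypothetical, and the signals are taken to be real-valued, so the complex conjugate has no effect.

```python
import math


def cross_correlation(x, y, lag):
    """R_xy(lag) = sum over n of x[n] * y[n - lag] for real-valued signals."""
    total = 0.0
    for n in range(len(x)):
        m = n - lag
        if 0 <= m < len(y):  # samples outside the signal are treated as zero
            total += x[n] * y[m]
    return total


def max_normalized_cross_correlation(x, y):
    """Largest normalized cross-correlation value over all possible lags."""
    norm = math.sqrt(sum(v * v for v in x) * sum(v * v for v in y))
    lags = range(-(len(y) - 1), len(x))
    return max(cross_correlation(x, y, k) for k in lags) / norm


# The second signal is the first one delayed by two samples (illustrative values).
pulse = [0.0, 1.0, 0.5, 0.0, 0.0]
delayed = [0.0, 0.0, 0.0, 1.0, 0.5]
zero_lag = cross_correlation(pulse, delayed, 0)
coefficient = max_normalized_cross_correlation(pulse, delayed)
```

In this toy case the two signals do not overlap at zero lag, yet the coefficient reaches 1 once the delay is compensated, which is the property that distinguishes this measure from Pearson’s coefficient.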

The summary of the values of the cross-correlation coefficients is presented in Table 5.

Table 5. Cross-correlation coefficient statistics.

| Channel | Minimum | Maximum | Average |
| --- | --- | --- | --- |
| EOG_L | 0.2109 | 0.9959 | 0.8111 |
| EOG_R | 0.2307 | 0.9995 | 0.8088 |
| EOG_H | 0.2281 | 0.9981 | 0.8032 |
| EOG_V | 0.2800 | 0.9994 | 0.8115 |
| Average | 0.5215 | 0.9716 | 0.8087 |

The value of the cross-correlation coefficient indicates the degree of similarity between two signals, and the strength of correlation should be interpreted in the same way as in linear correlation. For this purpose, we defined cross-correlation ranges, which are as follows:

- strong correlation: 0.7 ≤ |r| ≤ 1,
- moderate correlation: 0.4 ≤ |r| < 0.7,
- weak correlation: 0.1 ≤ |r| < 0.4,
- no correlation: |r| < 0.1.
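The ranges listed above translate directly into a small binning routine that reports the percentage of coefficients falling into each range. The function names and the example coefficients are hypothetical.

```python
def correlation_strength(r):
    """Map a correlation coefficient to one of the four ranges defined in the text."""
    magnitude = abs(r)
    if magnitude >= 0.7:
        return "strong"
    if magnitude >= 0.4:
        return "moderate"
    if magnitude >= 0.1:
        return "weak"
    return "none"


def percentage_per_range(coefficients):
    """Percentage of coefficients that fall into each correlation range."""
    counts = {"strong": 0, "moderate": 0, "weak": 0, "none": 0}
    for r in coefficients:
        counts[correlation_strength(r)] += 1
    return {label: 100.0 * count / len(coefficients) for label, count in counts.items()}


# Illustrative coefficients only, not values from the study.
example = [0.95, 0.81, 0.72, 0.55, 0.21, -0.05, 0.7, 0.4]
shares = percentage_per_range(example)
```

The boundaries are inclusive on the lower side, so a coefficient of exactly 0.7 counts as strong and one of exactly 0.4 as moderate.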

Given that the analysis did not show a negative correlation and the minimum correlation for signal pairs in the data set is greater than 0.2, Table 6 summarizes the percentages of signal pairs for which the value of the cross-correlation coefficient r falls within three of the specified ranges.

Table 6. Percentage of signal pairs in specific range of cross-correlation coefficient r.

| Channel | r<0.4 | 0.4≤r<0.7 | r≥0.7 |
| --- | --- | --- | --- |
| EOG_L | 0.0086% | 7.6939% | 92.2934% |
| EOG_R | 0.0052% | 8.4710% | 91.5237% |
| EOG_H | 0.0074% | 8.9419% | 91.0506% |
| EOG_V | 0.0044% | 6.8368% | 93.1587% |
| Average | 0.0000% | 0.8886% | 99.1073% |

A strong cross-correlation suggests that the two signals have similar patterns or structures. For example, they might exhibit similar peaks and troughs at similar times. For most pairs of signals in the dataset, the value of the cross-correlation coefficient r was greater than 0.7, suggesting high similarity between the two groups of signals.

Spectral coherence is a measure of the similarity between two signals in the frequency domain. It quantifies how well the signals maintain a constant phase relationship over different frequencies. Magnitude-squared coherence ranges from 0 to 1, where 0 indicates no coherence (independence) and 1 indicates perfect coherence (dependence). Although coherence is usually represented as a function of frequency, providing insights into the frequency-dependent relationship between two signals, a single scalar value can be useful for summarizing the overall coherence between two signals; therefore, we used the maximum value of the coherence function across all frequencies. This value indicates the highest degree of linear relationship between the signals at any frequency. The maximum value of the magnitude-squared coherence $C_{xy}$ between two signals $x$ and $y$ is given by:

$$C_{xy} = \max_{f} \frac{|P_{xy}(f)|^{2}}{P_{xx}(f)\,P_{yy}(f)}$$

where $P_{xy}(f)$ is the cross power spectral density of $x$ and $y$, $P_{xx}(f)$ is the power spectral density of $x$, and $P_{yy}(f)$ is the power spectral density of $y$ [66].
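The source does not state which spectral estimator was used. The sketch below assumes Welch-style averaging over overlapping segments with a periodic Hann window and 50% overlap; the function names, the segment length, and the toy signals are hypothetical choices made only for illustration.

```python
import cmath
import math


def half_spectrum(segment):
    """Naive DFT of a real segment, keeping the non-negative frequency bins."""
    n = len(segment)
    return [
        sum(value * cmath.exp(-2j * math.pi * k * t / n) for t, value in enumerate(segment))
        for k in range(n // 2 + 1)
    ]


def max_ms_coherence(x, y, segment_length=8):
    """Maximum over frequency of |Pxy|^2 / (Pxx * Pyy), spectra summed over segments."""
    # Periodic Hann window and 50% overlap are assumptions of this sketch.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / segment_length)
              for t in range(segment_length)]
    n_bins = segment_length // 2 + 1
    pxx, pyy, pxy = [0.0] * n_bins, [0.0] * n_bins, [0j] * n_bins
    for start in range(0, len(x) - segment_length + 1, segment_length // 2):
        fx = half_spectrum([w * v for w, v in zip(window, x[start:start + segment_length])])
        fy = half_spectrum([w * v for w, v in zip(window, y[start:start + segment_length])])
        for k in range(n_bins):
            pxx[k] += abs(fx[k]) ** 2
            pyy[k] += abs(fy[k]) ** 2
            pxy[k] += fx[k] * fy[k].conjugate()
    # Scaling constants cancel in the ratio, so the raw sums are sufficient.
    return max(
        abs(pxy[k]) ** 2 / (pxx[k] * pyy[k])
        for k in range(n_bins)
        if pxx[k] > 0 and pyy[k] > 0
    )


# Toy signals (illustrative only): a scaled copy and an unrelated waveform.
signal = [math.sin(0.9 * t) + 0.3 * math.cos(2.3 * t) for t in range(32)]
scaled = [2.0 * v for v in signal]
other = [math.sin(1.7 * t) * math.cos(0.2 * t * t) for t in range(32)]
coherence_scaled = max_ms_coherence(signal, scaled)
coherence_other = max_ms_coherence(signal, other)
```

Averaging over several segments is essential: if the spectra are estimated from a single segment, the ratio equals 1 at every frequency regardless of the signals. Taking the maximum over frequency also tends to yield high values, since one well-matched frequency bin is enough.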

Table 7 presents a summary of the maximum magnitude-square coherence values between the two groups of signals.

Table 7. Maximum magnitude-square coherence statistics.

| Channel | Minimum | Maximum | Average |
| --- | --- | --- | --- |
| EOG_L | 0.0055 | 0.9999 | 0.8368 |
| EOG_R | 0.0048 | 0.9999 | 0.8354 |
| EOG_H | 0.1272 | 0.9994 | 0.8314 |
| EOG_V | 0.1332 | 0.9999 | 0.8379 |
| Average | 0.3549 | 0.9933 | 0.8354 |

The higher the magnitude-square coherence value, the greater the similarity of a pair of signals at a given frequency. Table 8 shows the percentage of signal pairs for which the maximum magnitude-square coherence C falls within specific ranges.

Table 8. Percentage of signal pairs in specific range of magnitude-square coherence value C.

| Channel | C<0.4 | 0.4≤C<0.7 | C≥0.7 |
| --- | --- | --- | --- |
| EOG_L | 0.3161% | 11.6373% | 88.0426% |
| EOG_R | 0.3014% | 11.6509% | 88.0476% |
| EOG_H | 0.1611% | 12.1719% | 87.6668% |
| EOG_V | 0.1346% | 11.1321% | 88.7332% |
| Average | 0.0001% | 5.8344% | 94.1614% |

For most signal pairs, the maximum magnitude-square coherence value is greater than 0.7, suggesting a frequency match between the two signal groups.

The Euclidean distance is a measure of the "straight-line" distance between two points in Euclidean space. When applied to signals, it quantifies the difference between two signals by treating each signal of $n$ samples as a point in an $n$-dimensional space. The Euclidean distance $d$ between two signals $x$ and $y$, each consisting of $n$ samples, is given by:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

where:

- $x_i$ and $y_i$ are the i-th samples of the signals $x$ and $y$, respectively,
- $n$ is the number of samples in each signal.
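A minimal sketch of the distance computation follows, together with the upper bound that applies to min-max normalized signals. The function name and toy signals are hypothetical, and the window length of 200 samples used for the bound is an assumption borrowed from the sequence length listed among the training parameters.

```python
import math


def euclidean_distance(x, y):
    """Straight-line distance between two signals of equal length."""
    if len(x) != len(y):
        raise ValueError("signals must have the same number of samples")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


# Toy min-max normalized signals (illustrative values only).
distance = euclidean_distance([0.0, 0.5, 1.0], [1.0, 0.5, 0.0])

# For signals confined to [0, 1], every sample difference is at most 1,
# so the distance cannot exceed sqrt(n). n = 200 is an assumed window length.
upper_bound = math.sqrt(200)
```

Under that assumption the bound is roughly 14.14, which gives a scale for judging whether a distance of a few units is small.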

A summary of the distances between the two groups of signals is presented in Table 9.

Table 9. Euclidean distance statistics.

| Channel | Minimum | Maximum | Average |
| --- | --- | --- | --- |
| EOG_L | 0.4622 | 13.3734 | 5.6198 |
| EOG_R | 0.4777 | 12.8738 | 5.5314 |
| EOG_H | 0.7980 | 12.7638 | 5.2635 |
| EOG_V | 0.5217 | 13.5402 | 5.4781 |
| Average | 1.6050 | 12.4880 | 5.4732 |

For normalized signals, a Euclidean distance of a few units can be regarded as indicating similarity.

Table 10 shows the percentage of signal pairs for which the Euclidean distance d is within specific ranges.

Table 10. Euclidean distance distribution range.

| Channel | d<4 | 4≤d<8 | 8≤d<12 | d≥12 |
| --- | --- | --- | --- | --- |
| EOG_L | 4.5726% | 90.9342% | 4.4866% | 0.0063% |
| EOG_R | 5.0649% | 91.4666% | 3.4644% | 0.0039% |
| EOG_H | 6.7816% | 92.2690% | 0.9487% | 0.0004% |
| EOG_V | 5.3111% | 91.4697% | 3.2150% | 0.0040% |
| Average | 1.2409% | 97.4667% | 1.2922% | 3.5869% |

For most pairs of signals, the Euclidean distance d is in the range of 4 - 8, suggesting a high similarity between the two groups of signals.

Taking into account that the signals show a high degree of similarity, extracting meaningful features for classification can be challenging. For this problem, we decided to incorporate neural networks that can capture complex, nonlinear relationships in the data.

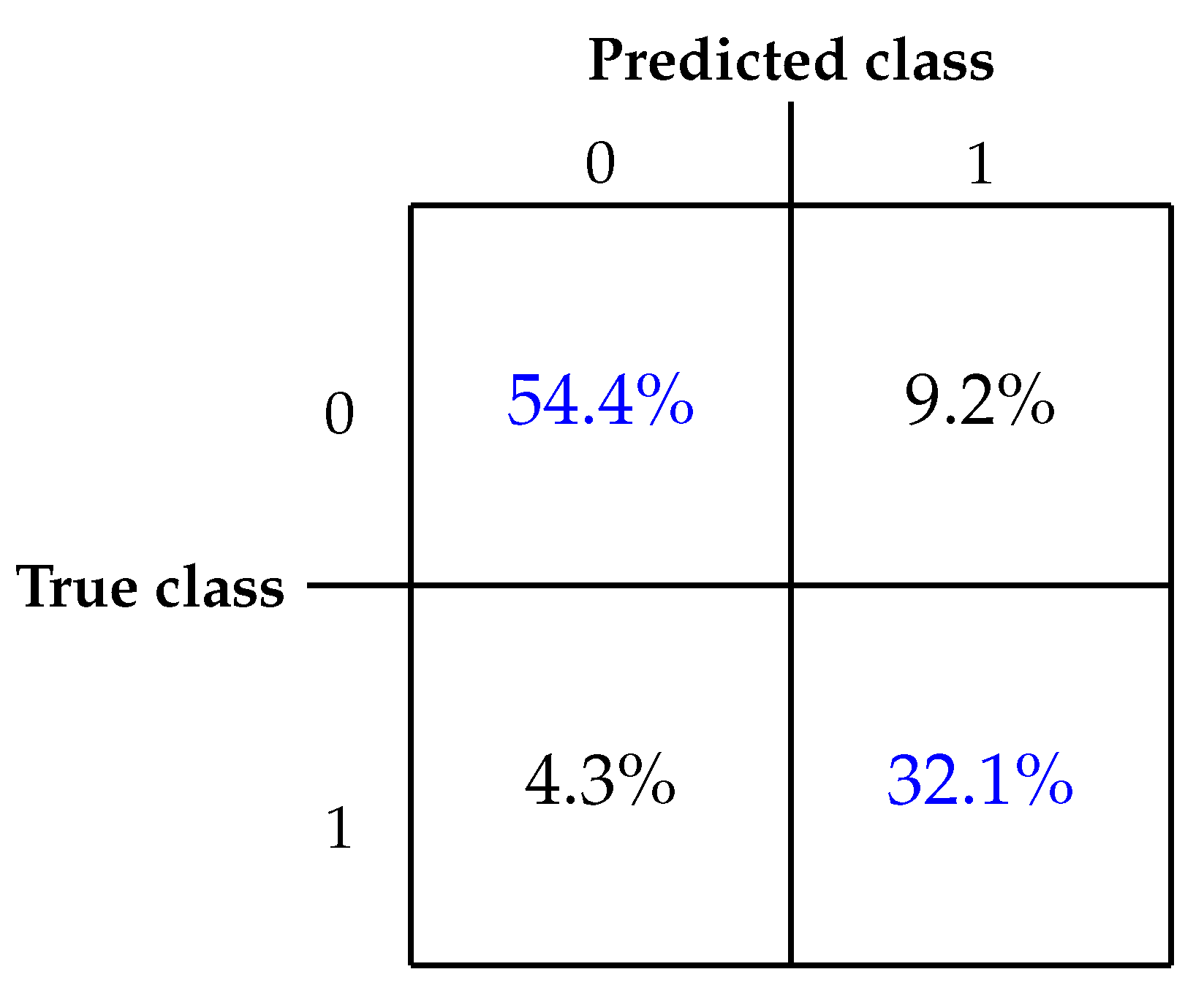

3.4. Classification

The classification task was implemented as a recurrent neural network in the MATLAB environment. The network comprises the following layers:

- Sequence Input Layer with 4 features (channels), normalized using z-score normalization,
- Bidirectional Long Short-Term Memory Layer with 100 units in both the forward and backward directions, capturing information from the past and the future, configured to output the entire sequence,
- Dropout Layer, which randomly sets half of the input units to zero at each update during training to help prevent overfitting,
- Bidirectional Long Short-Term Memory Layer with 50 units, configured to output the entire sequence,
- Dropout Layer,
- Bidirectional Long Short-Term Memory Layer with 10 units, configured to output the last time step’s hidden state,
- Dropout Layer,
- Fully Connected Layer with 2 neurons for classification,
- Softmax Layer for probability distribution calculation,
- Classification Layer for labeling, using class weights to address class imbalance.
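To give a sense of the size of this architecture, the sketch below counts its learnable parameters. It assumes standard LSTM cells (four gates, one bias vector per gate, no peephole connections) and that each bidirectional layer concatenates the forward and backward states, so that its output has twice as many features as it has units. The function names are hypothetical, and the resulting counts are derived here; they are not reported in the source.

```python
def bilstm_parameters(input_size, hidden_units):
    """Learnable parameters of a bidirectional LSTM layer (4 gates x 2 directions)."""
    # Per gate: input weights, recurrent weights, and one bias per hidden unit.
    per_direction = 4 * hidden_units * (input_size + hidden_units + 1)
    return 2 * per_direction


def fully_connected_parameters(input_size, output_size):
    """Weights plus biases of a dense layer."""
    return input_size * output_size + output_size


# Each BiLSTM layer feeds 2 * units features into the next layer.
layers = [
    ("BiLSTM-100", bilstm_parameters(4, 100)),
    ("BiLSTM-50", bilstm_parameters(2 * 100, 50)),
    ("BiLSTM-10", bilstm_parameters(2 * 50, 10)),
    ("FullyConnected-2", fully_connected_parameters(2 * 10, 2)),
]
total_parameters = sum(count for _, count in layers)
```

Dropout, softmax, and classification layers contribute no learnable parameters, so under these assumptions the network has on the order of two hundred thousand weights, most of them in the first two recurrent layers.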

Bidirectional LSTM (Long Short-Term Memory) models are commonly used for data classification tasks due to their ability to capture both past and future context. The bidirectional nature of LSTM models makes them well suited for these problems, especially when dealing with sequential data, where capturing context and long-term dependencies is essential for accurate classification [67,68,69].

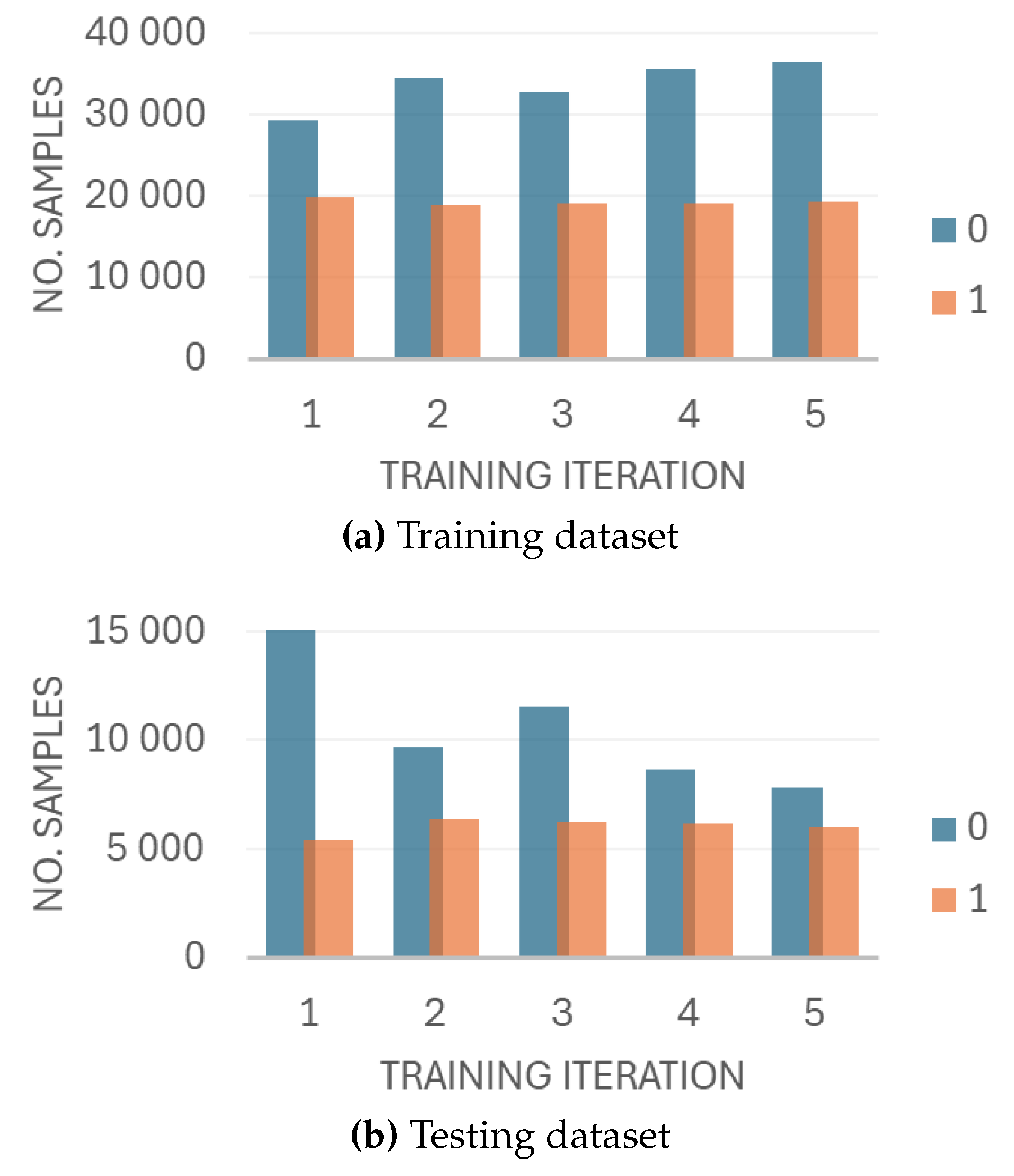

To avoid overfitting to specific train-test data partitions, we employed the k-fold cross-validation technique. For that purpose, we split the data into five subsets (folds) and performed five model training iterations. We chose five folds, as this provides a good compromise between high bias and high variance of the results [70,71,72].

In each iteration, we chose six subjects from each category to create a test dataset; this set was held out to prevent data leakage and thus to test the model’s performance on completely new data. The remaining data were used to train the model. The data distribution between the training and testing datasets in each training iteration is presented in Figure 4.

We kept the number of subjects in the test set equal across iterations so that the model’s performance could be assessed consistently in different cases. As a result, the number of samples in each iteration varies significantly.
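A subject-wise split of this kind can be sketched as follows. The source does not describe how the six test subjects per category were selected in each iteration, so this example assumes consecutive, non-overlapping blocks of subjects; the function name and subject identifiers are hypothetical.

```python
def subject_wise_folds(subjects_by_class, n_folds=5):
    """Return (train_subjects, test_subjects) pairs with equal test subjects per class."""
    all_subjects = {s for subjects in subjects_by_class.values() for s in subjects}
    folds = []
    for fold in range(n_folds):
        test_subjects = set()
        for subjects in subjects_by_class.values():
            per_fold = len(subjects) // n_folds
            # Assumed selection rule: a consecutive block of subjects per fold.
            test_subjects.update(subjects[fold * per_fold:(fold + 1) * per_fold])
        train_subjects = all_subjects - test_subjects
        folds.append((sorted(train_subjects), sorted(test_subjects)))
    return folds


# Hypothetical subject identifiers: 30 subjects per category.
subjects_by_class = {
    "road": [f"road_{i:02d}" for i in range(30)],
    "simulator": [f"sim_{i:02d}" for i in range(30)],
}
folds = subject_wise_folds(subjects_by_class)
```

Splitting by subject instead of by recording guarantees that no windows from a test subject appear in the training data, at the cost of test sets whose sample counts differ between iterations.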

Due to the class imbalance in our dataset, we decided to initialize the class weights to give higher importance to the underrepresented class (1) during the initial training phase, which can help the model learn better representations for that class. For this, using the training dataset, we first calculated the class weights based on the formula:

$$w_i = \frac{N}{C \cdot n_i}$$

where:

- $N$ is the total number of samples in the dataset,
- $n_i$ is the number of samples in class $i$,
- $C$ is the total number of classes.

Next, we normalized the class weights so that they sum to the total number of classes. This step ensures that the weights collectively represent the importance of each class relative to the others. The normalized class weight $\hat{w}_i$ for each class $i$ was calculated as follows:

$$\hat{w}_i = C \cdot \frac{w_i}{\sum_{j=1}^{C} w_j}$$

Finally, we initialized the weights in the classification layer of the neural network using the calculated normalized class weights [73].
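The two-step weighting can be illustrated with a short sketch. It assumes the common inverse-frequency form, in which the raw weight of a class is N divided by the product of C and the class size, followed by rescaling so that the weights sum to C. The function name and the sample counts are hypothetical and do not correspond to the values in Table 11.

```python
def normalized_class_weights(class_counts):
    """Inverse-frequency class weights rescaled to sum to the number of classes."""
    total = sum(class_counts.values())   # N: all samples in the training set
    n_classes = len(class_counts)        # C: number of classes
    raw = {label: total / (n_classes * count) for label, count in class_counts.items()}
    raw_sum = sum(raw.values())
    return {label: n_classes * weight / raw_sum for label, weight in raw.items()}


# Hypothetical counts: class 1 is underrepresented by a factor of three.
weights = normalized_class_weights({0: 300, 1: 100})
```

In this toy case the minority class receives a weight three times that of the majority class, mirroring the ratio of the class sizes.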

Table 11 presents the class weights in each training iteration.

The training parameters were chosen based on common practices and initial testing and were set as follows:

- Optimization algorithm: Adam,
- Mini-batch size: 1000,
- Learning rate:
  - Initial learning rate: 0.001,
  - Drop period: 5 epochs,
  - Drop factor: 0.5,
  - Schedule: Piecewise,
- Data shuffling: Every epoch,
- Sequence length: 200,
- Number of epochs: 20.

The presented configuration was designed to train a robust recurrent neural network for effective classification of the provided dataset. Adam (Adaptive Moment Estimation) is a popular optimization algorithm that combines the advantages of two other extensions of stochastic gradient descent: Adaptive Gradient Algorithm (AdaGrad) and Root Mean Square Propagation (RMSProp). The data are shuffled at the beginning of every epoch. Shuffling helps break the correlation between batches and makes the model more robust. The learning rate controls how much the model weights change in response to the estimated error each time they are updated. An initial value of 0.001 is a common starting point for many problems. The learning rate is halved every five epochs to prevent overfitting.
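The piecewise schedule described above can be written out explicitly. This is a minimal sketch assuming that epochs are counted from 1 and that the drop is applied after every completed block of five epochs; the function name is hypothetical.

```python
def piecewise_learning_rate(epoch, initial_rate=0.001, drop_factor=0.5, drop_period=5):
    """Learning rate for a 1-indexed epoch under a piecewise drop schedule."""
    completed_periods = (epoch - 1) // drop_period
    return initial_rate * drop_factor ** completed_periods


# Learning rate for each of the 20 training epochs.
schedule = [piecewise_learning_rate(epoch) for epoch in range(1, 21)]
```

Under these assumptions the rate is 0.001 for epochs 1 to 5, 0.0005 for epochs 6 to 10, 0.00025 for epochs 11 to 15, and 0.000125 for epochs 16 to 20.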

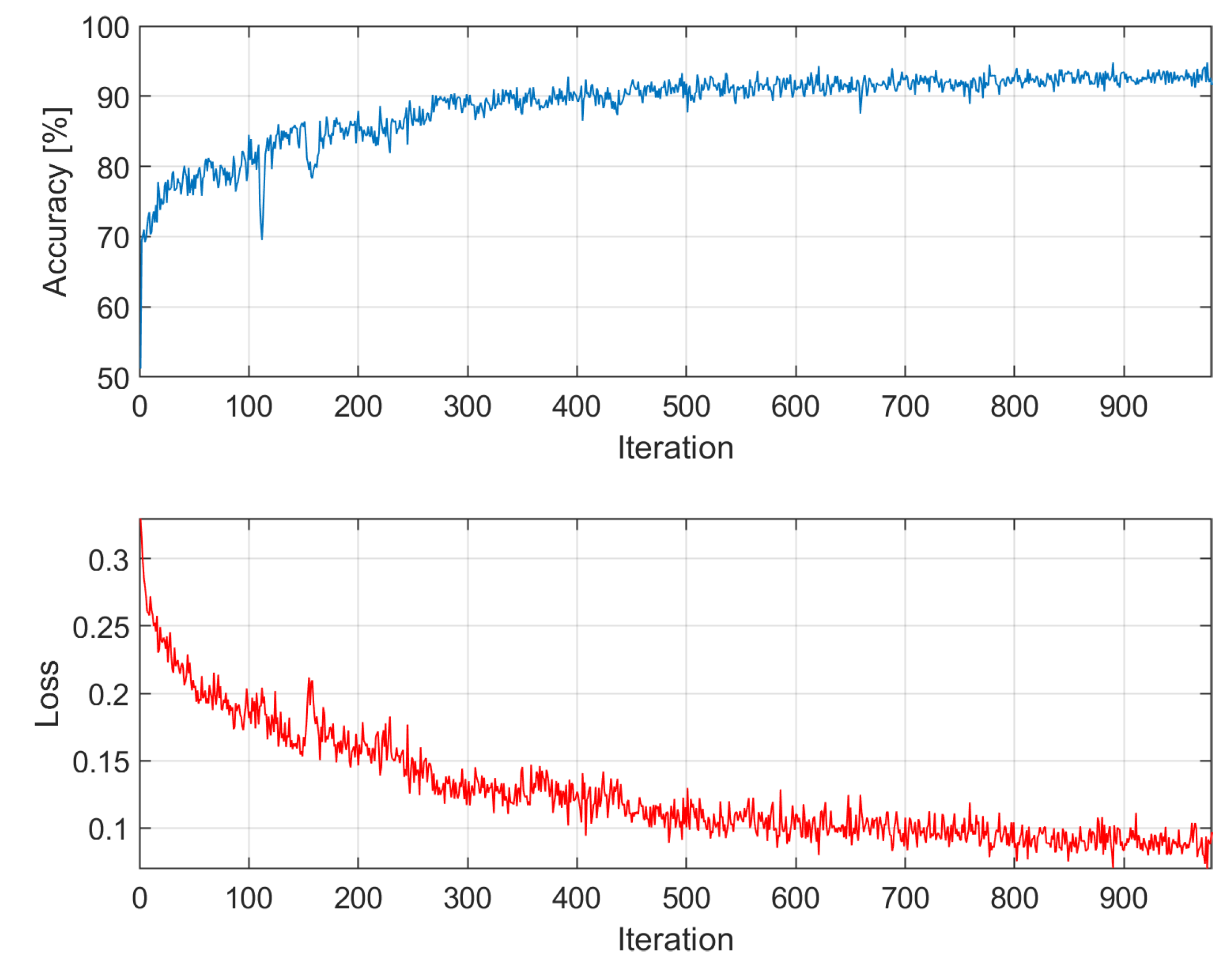

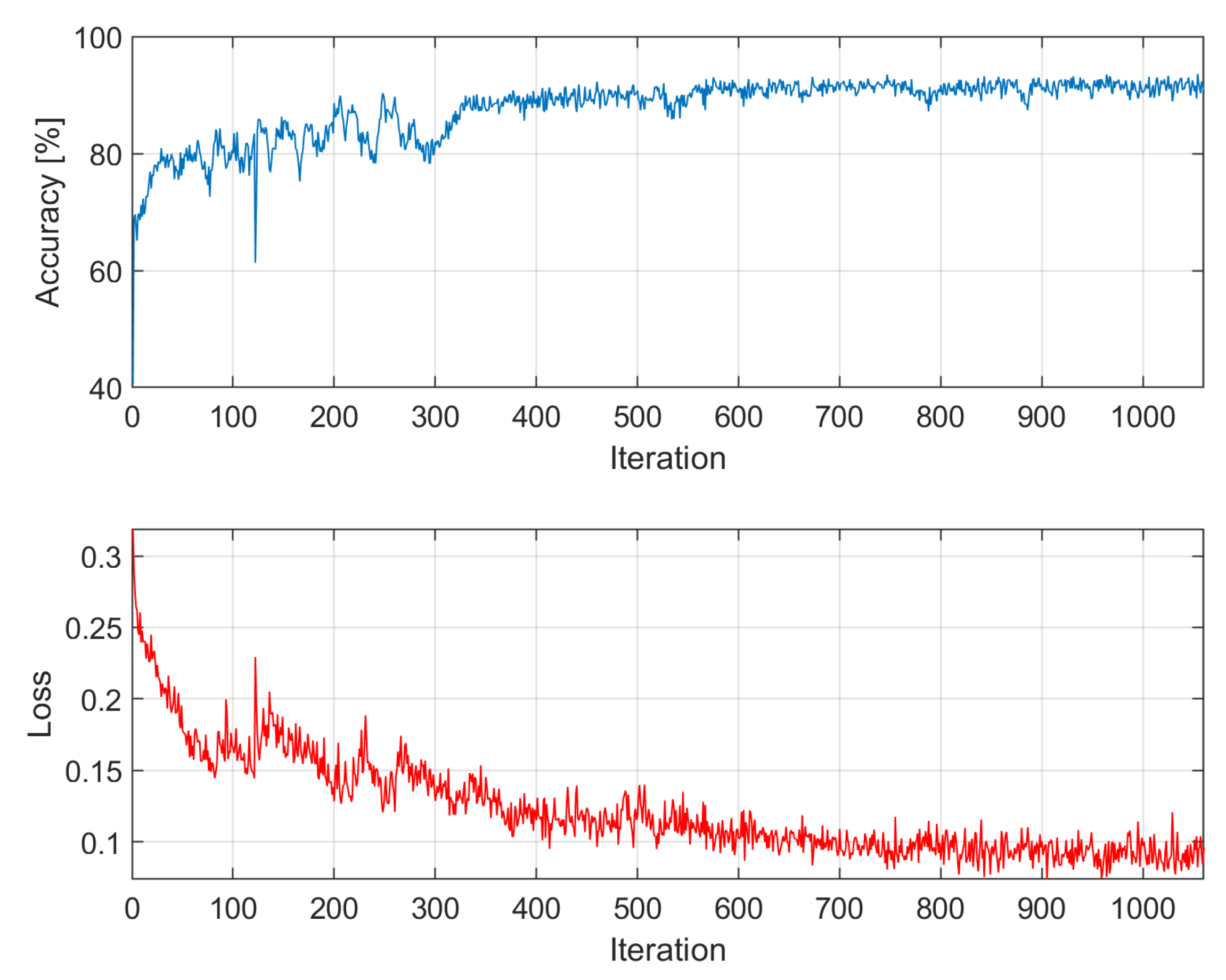

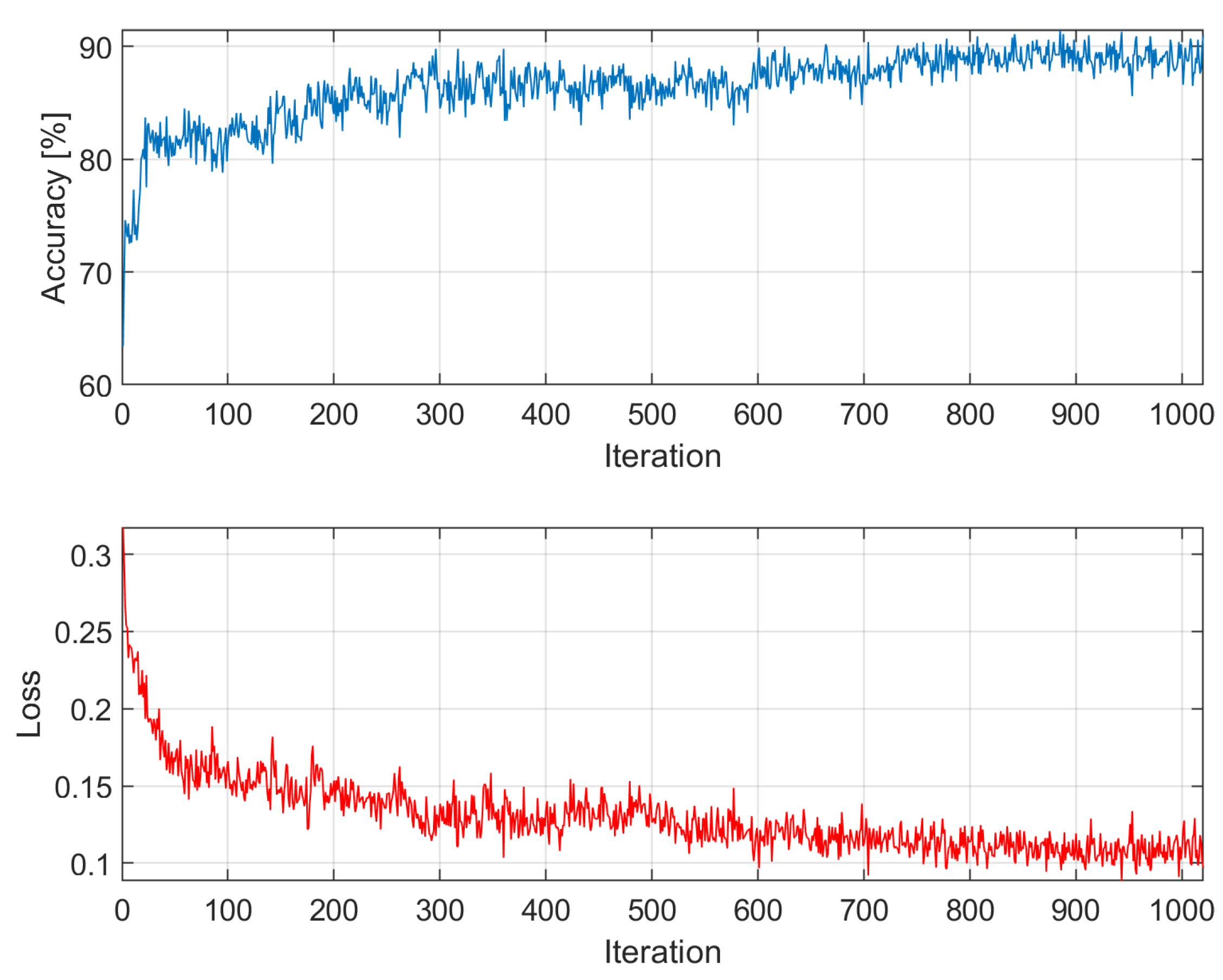

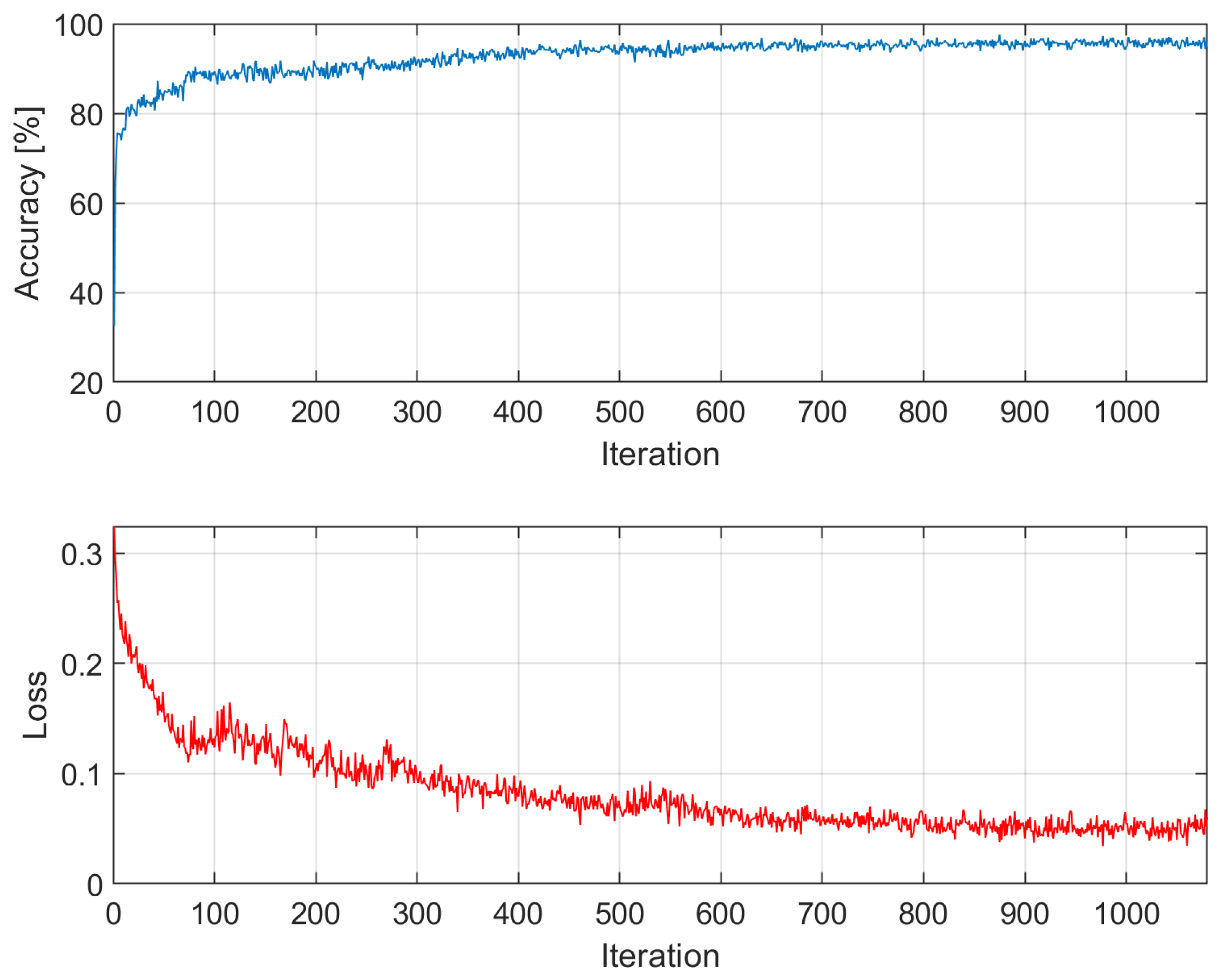

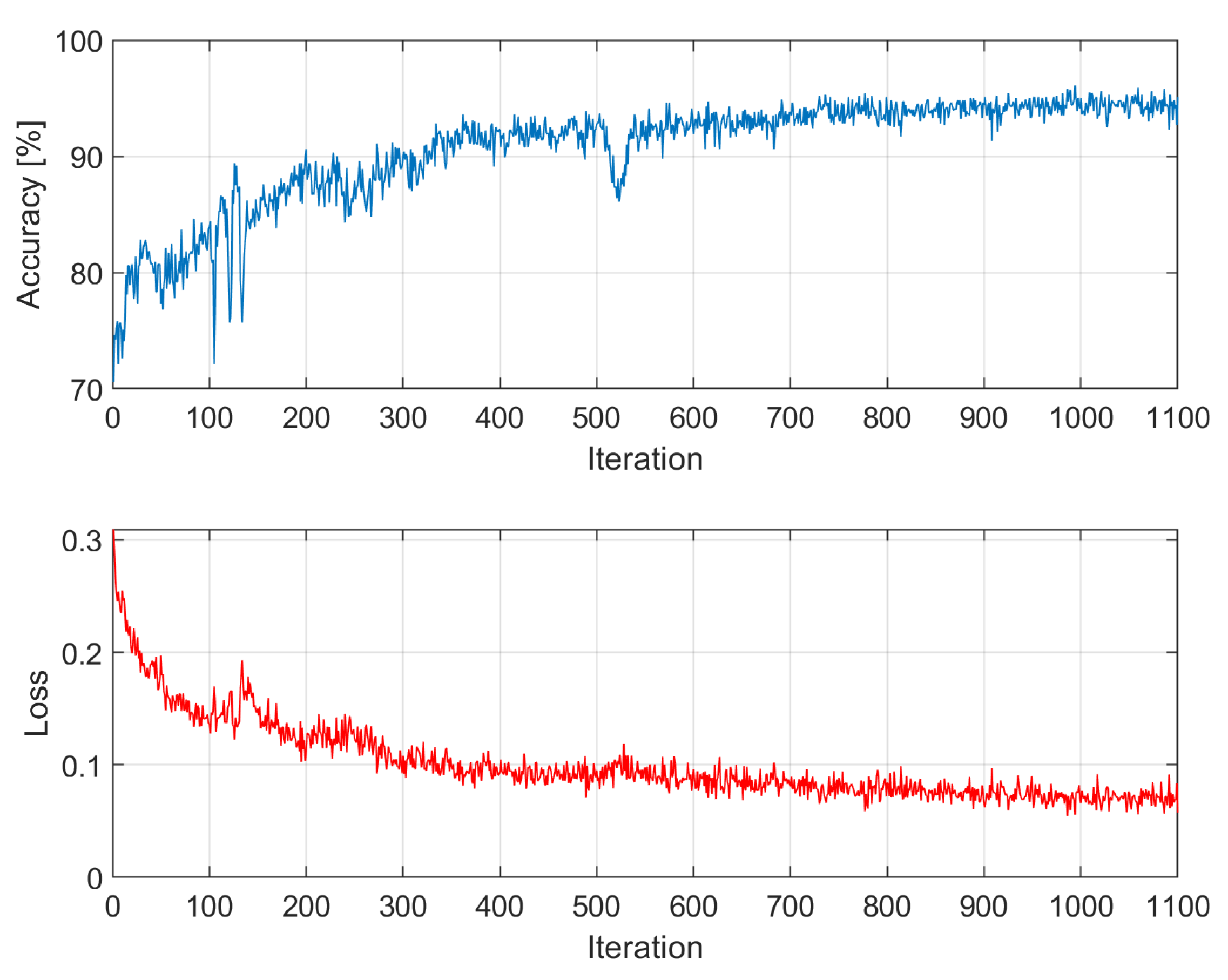

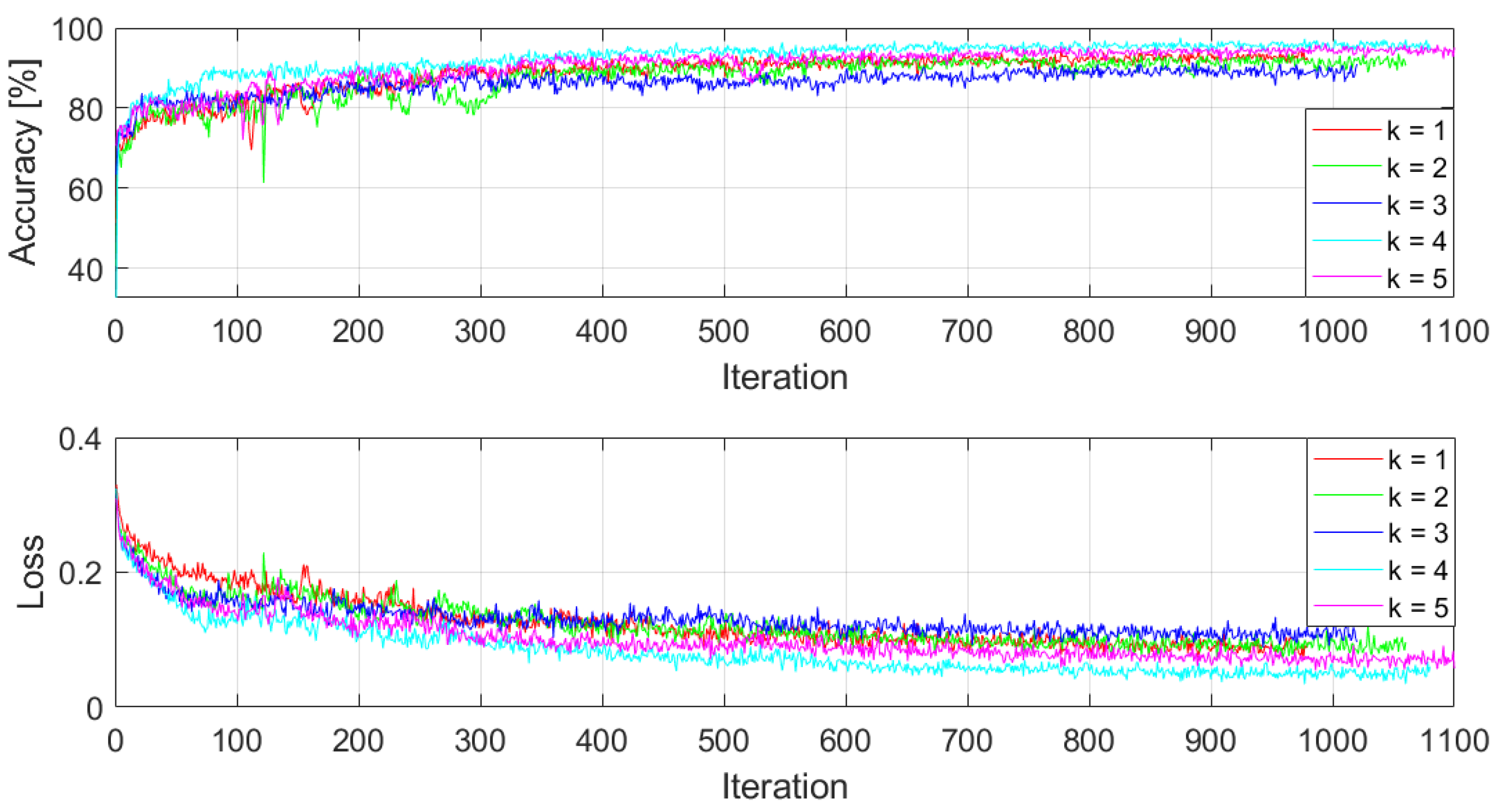

After training, the model was evaluated on the previously prepared test set. The training process is presented in Figure 5. The learning curves for each training iteration are provided as separate figures in Appendix A.

All operations were executed on a PC with an Intel(R) Core(TM) i5-9300H CPU operating at 2 GHz, 16 GB of RAM, and a single 8 GB NVIDIA GeForce GTX 1650 GPU. Pre-processing and classification tasks were performed using MATLAB 2023b software. Each training iteration lasted 19 minutes and 11 seconds on average.