Submitted: 19 April 2024

Posted: 23 April 2024

Abstract

Keywords:

1. Introduction

2. Mathematical Framework

2.1. Sparse Gaussian Process Regression Framework

2.2. Sparse Variational Gaussian Process

2.3. Model Setup

3. Results and Discussions

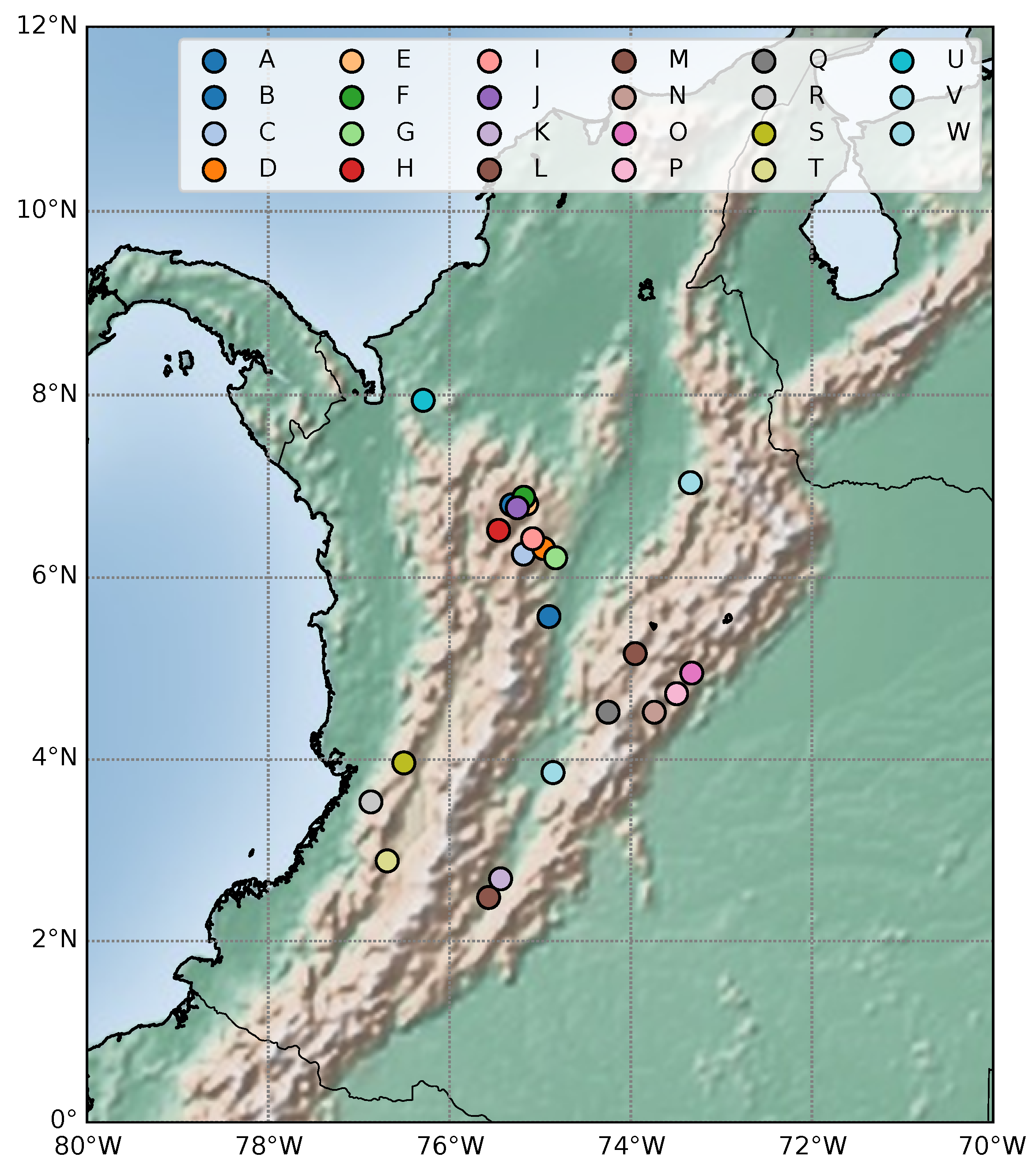

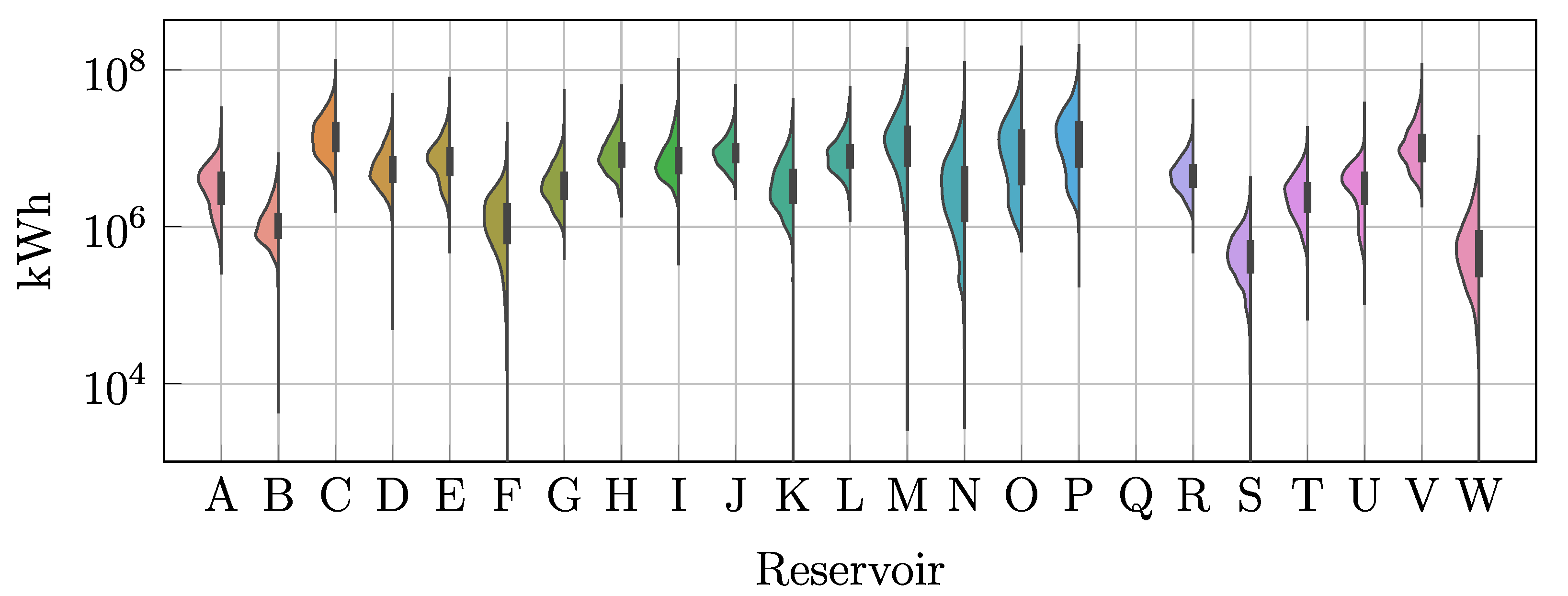

3.1. Dataset Collection

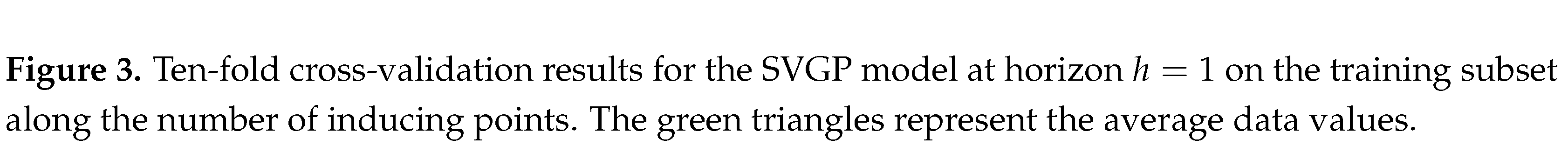

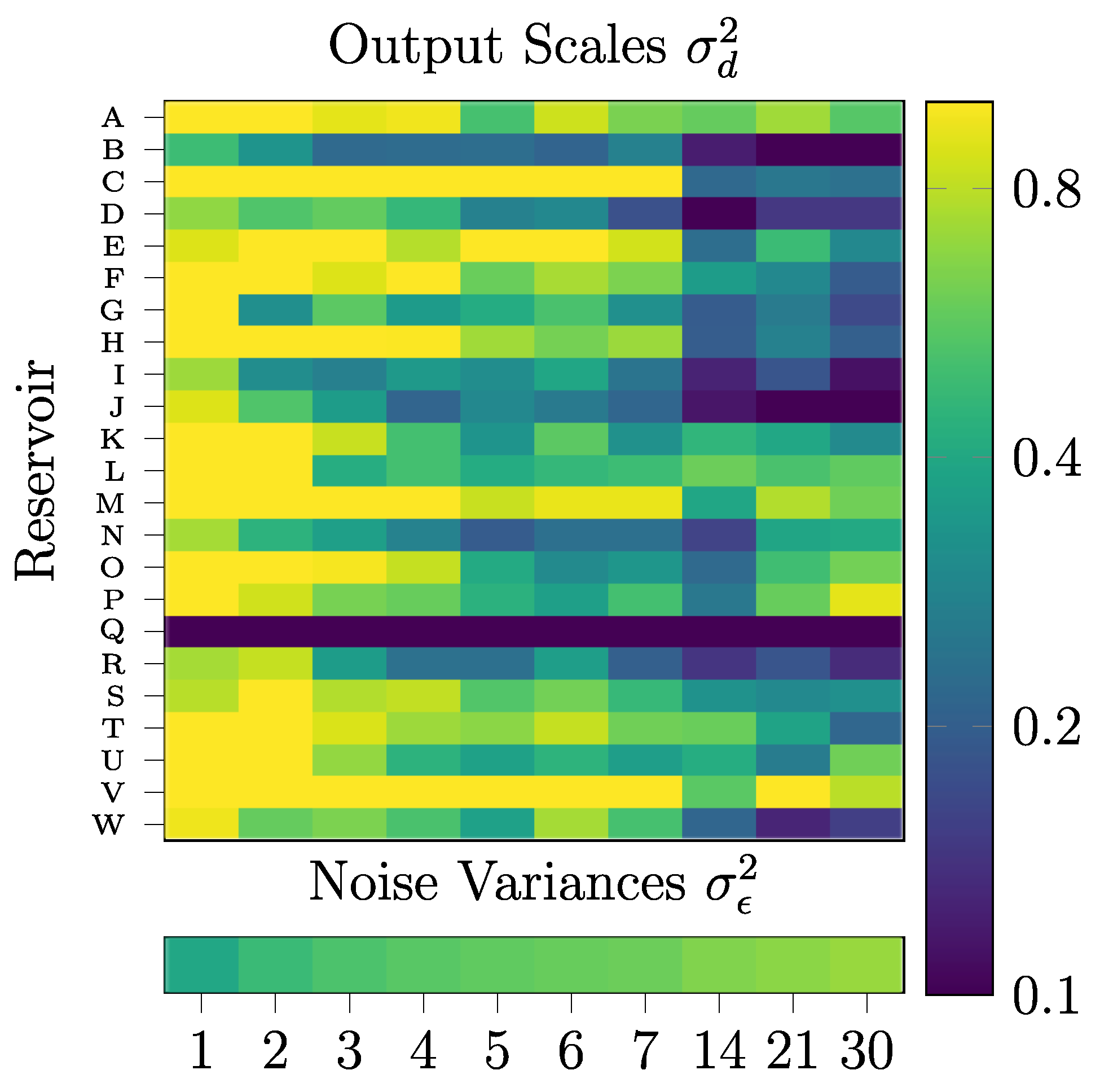

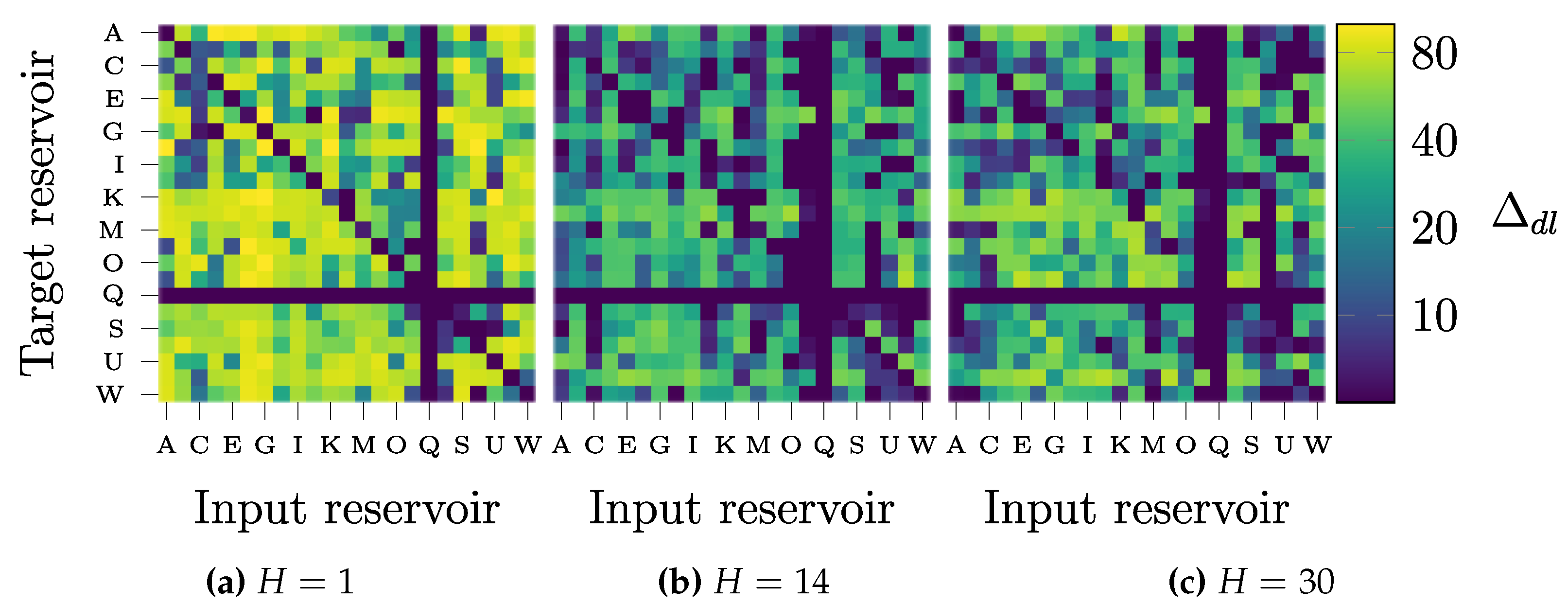

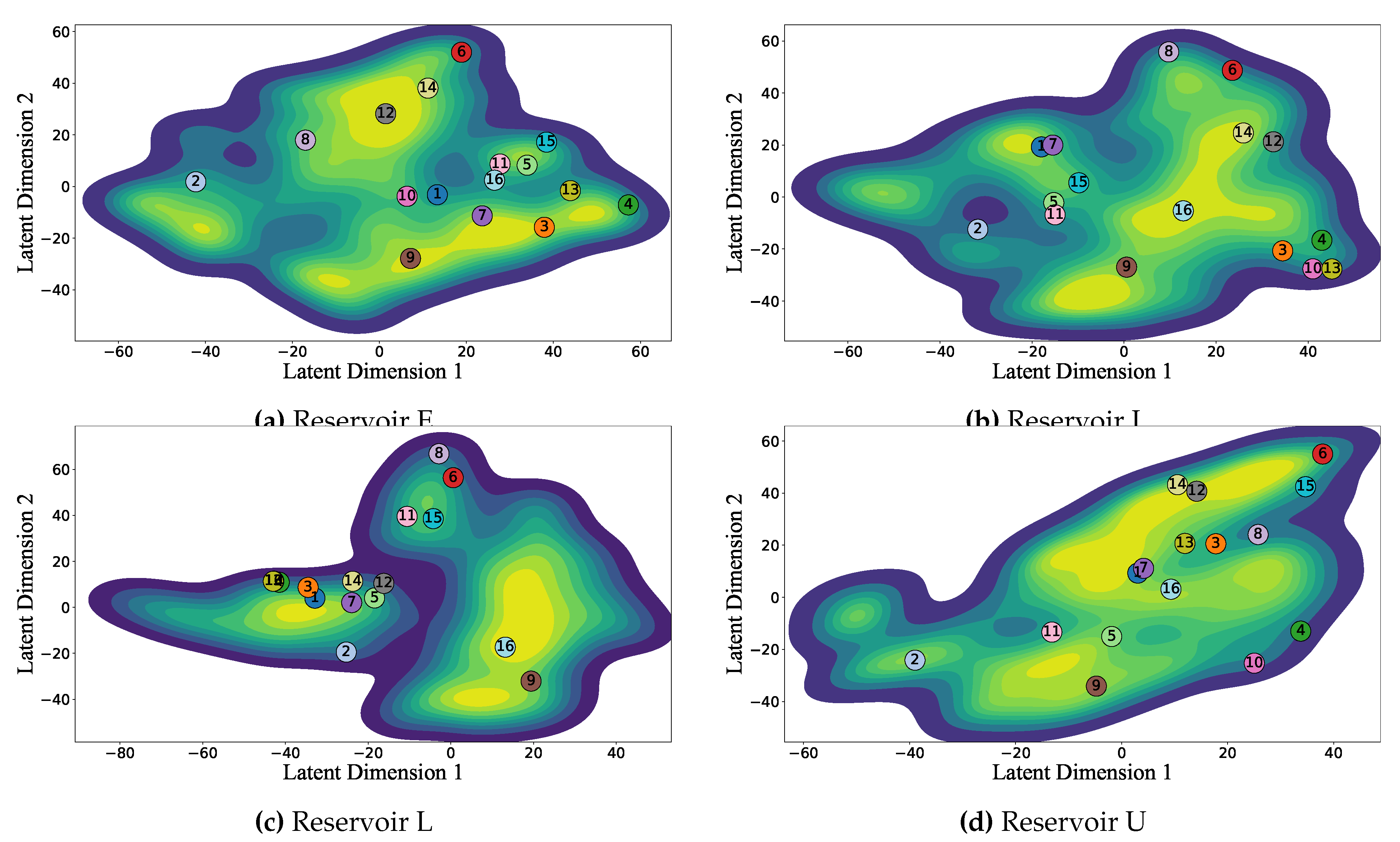

3.2. Hyperparameter Tuning and Model Interpretability

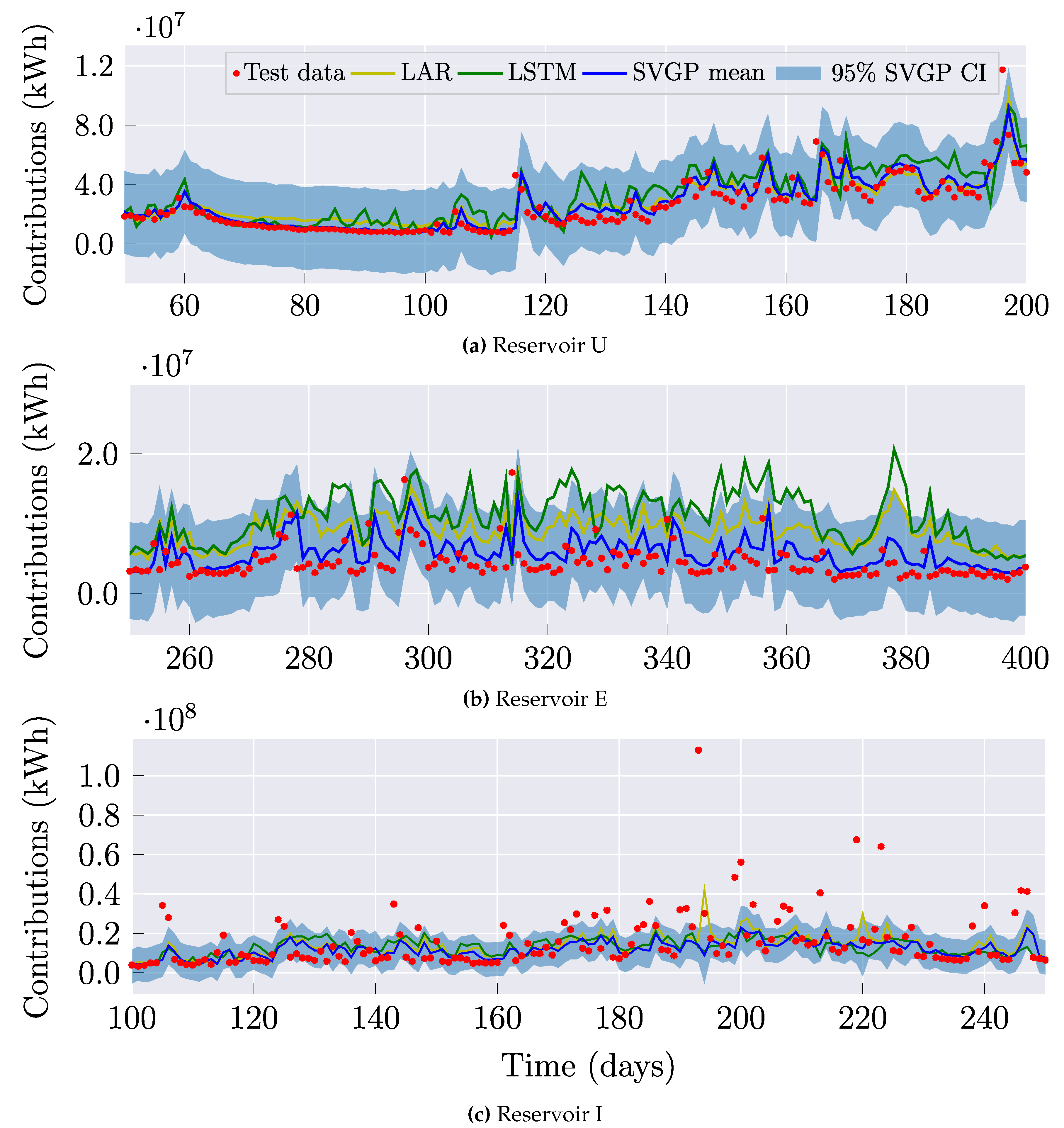

3.3. Performance Analysis

4. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).