1. Introduction

Emotions play a significant role in human interactions, serving as essential mediators in social communication systems [1]. Human expression of emotions incorporates diverse modalities, including facial expressions, speech patterns [2], and body language [3]. According to Darwin and Prodger [4], human facial expressions indicate their emotional states and intentions. Recently, automatic emotion detection through computer vision techniques has seen growing interest and application across many domains, including hospital patient care [5], neuroscience research [6], smart home technologies [7], and even cancer treatment [8,9]. This diversity has established emotion recognition as a distinct and growing research field, primarily due to its wide range of applications and its strong impact on many aspects of human life.

Emotion recognition from images mainly consists of two steps: feature extraction and classification. Facial images encompass a multitude of features, including geometric, texture, color, intensity, landmark, shape, and histogram-based features. Handcrafted techniques for feature extraction in facial images involve manual identification of landmarks for geometric features, texture analysis using methods like Local Binary Patterns (LBP) [10], and color distribution analysis through histograms. To enhance the feature extraction process, dimensionality reduction techniques such as Principal Component Analysis (PCA) [11] and t-Distributed Stochastic Neighbor Embedding (t-SNE) [12] have been employed to obtain crucial features for classification. Traditional machine learning algorithms like Support Vector Machine (SVM) [13] and Random Forest (RF) [14] were used to classify the emotions from these features. However, hand-crafted features often struggle to capture the important information required for effective face identification. Moreover, kernel-based methods frequently produce feature vectors that are excessively large, leading to overfitting of the model [15].

Deep learning, particularly Convolutional Neural Networks (CNNs) [16], is renowned for its capability to autonomously learn hierarchical features and complex patterns. However, CNNs frequently face challenges such as overfitting, which arises from limited data availability, and high computational complexity. Additionally, issues like vanishing or exploding gradients can undermine the stability of the training process [17].

Transfer learning has gained popularity in machine learning as a method for accelerating model training. It provides a framework for leveraging well-known pre-trained models such as VGG (Visual Geometry Group) [18], ResNet [19], and DenseNet121 [20], which were trained on millions of images, and it is particularly relevant for facial expression recognition (FER), which operates in the related domain of facial images.

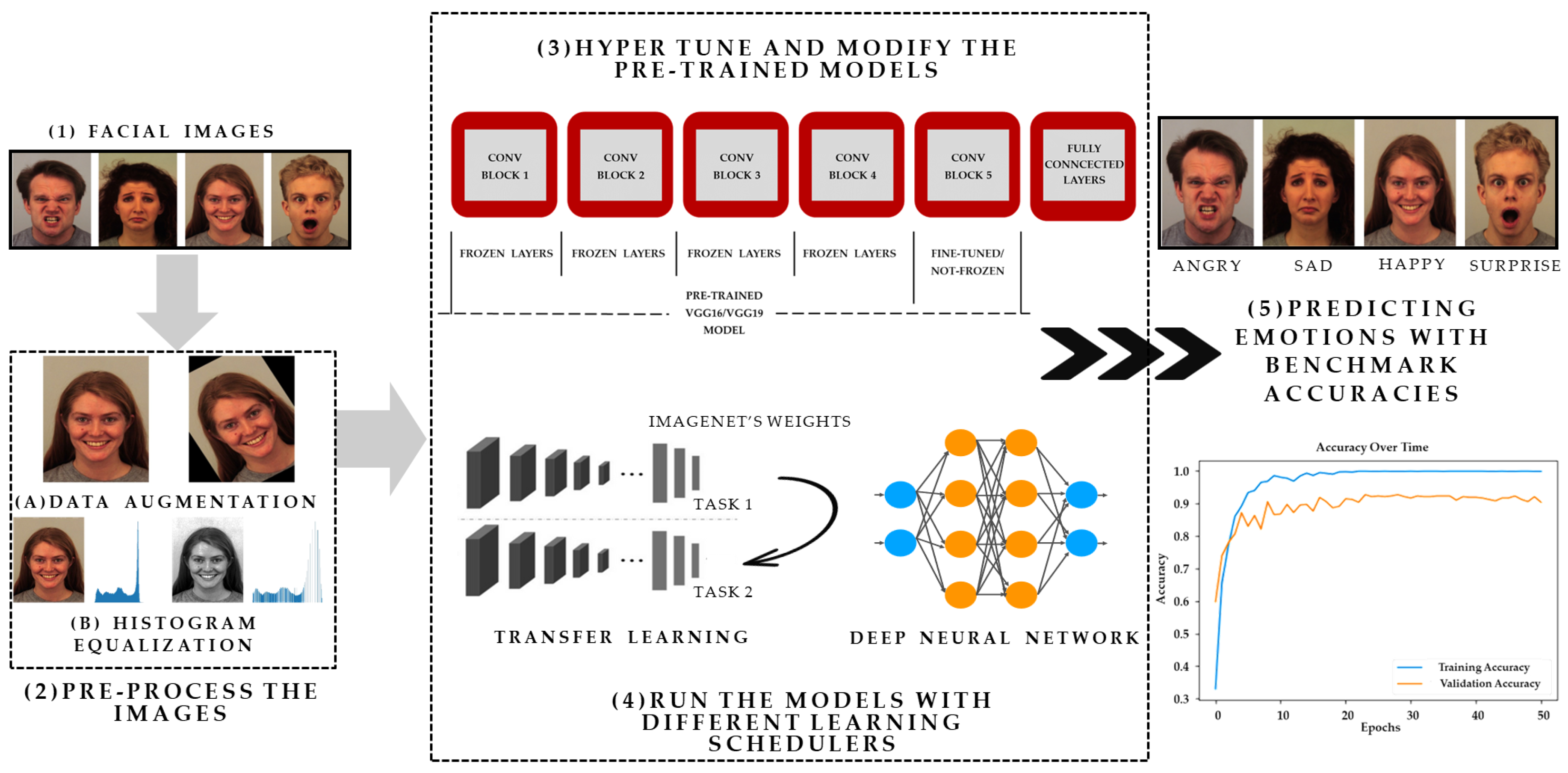

A schematic representation of the proposed framework is presented in

Figure 1, utilizing images sourced from the Karolinska Directed Emotional Faces (KDEF) [

21] dataset for illustrative purposes. As presented in

Figure 1, the first step (1) showcases facial images retrieved from the KDEF, Filtered Facial Expression Recognition 2013 (FER2013) [

22], and Cohn-Kanade (CK+) [

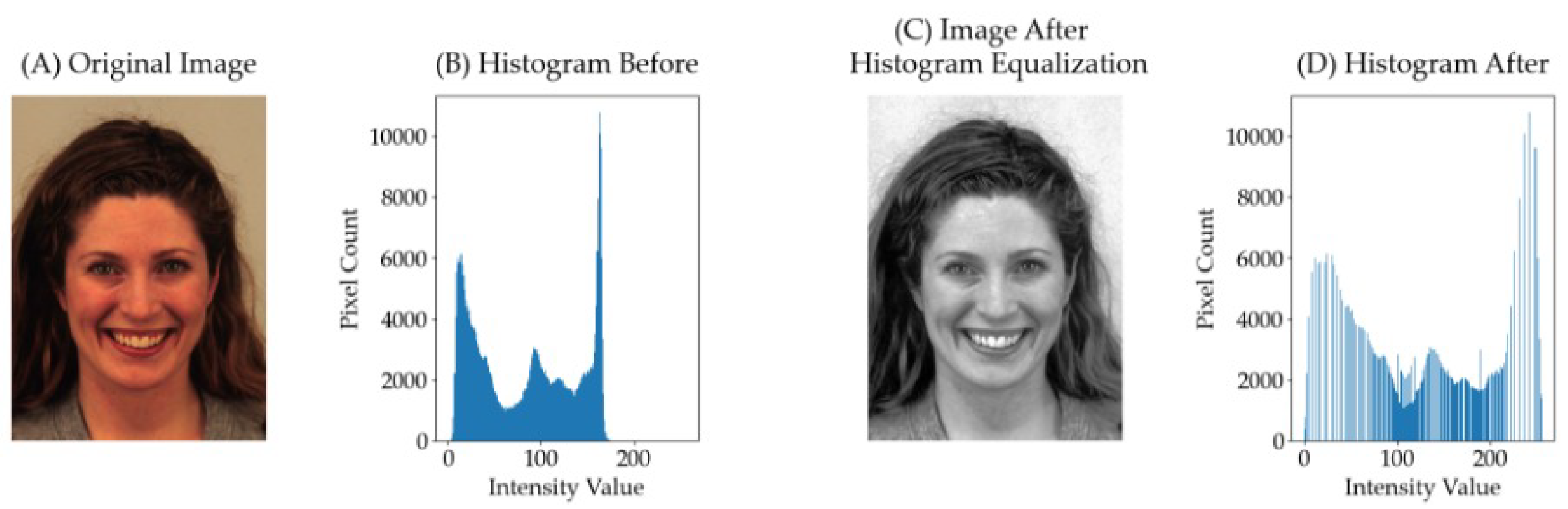

23] datasets. In the subsequent step (2), the data undergoes preprocessing, wherein data augmentation techniques such as horizontal flipping, zooming, rotating, and histogram equalization [

24] methods are applied. These techniques serve to augment the dataset, enhancing image contrast and thereby facilitating improved feature extraction. Moving forward to step (3), fine-tuning and modification of the pre-trained VGG19 and VGG16 models are undertaken. During this process, the last convolutional block of the models is kept unfrozen, while the remaining layers of the base models (pretrained VGG16, VGG19) are frozen. Additionally, the fully connected layers are then connected to these models. Moreover, diverse learning rate schedulers, including cosine annealing [

25] are implemented in the models. The evaluation phase encompasses training the models on datasets such as KDEF, Filtered FER2013, and CK+, followed by assessment using various evaluation metrics. These metrics include Accuracy, AUC-ROC [

26], AUC-PRC, and Weighted F1 score [

27], respectively.
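The preprocessing in step (2) can be illustrated with a minimal, dependency-free sketch. It assumes an 8-bit grayscale image stored as nested lists and uses the standard CDF-based remapping for histogram equalization. The function names `equalize_histogram` and `horizontal_flip` and the toy image are hypothetical, and the implementation actually used in the experiments (library, color handling) is not specified in this section.

```python
def equalize_histogram(image, levels=256):
    """Equalize a grayscale image given as a list of rows of ints in [0, levels)."""
    pixels = [p for row in image for p in row]

    # Count how often each intensity value occurs.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution function (CDF) of the intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Constant image: nothing to stretch.
        return [row[:] for row in image]

    # Map each intensity so that the output CDF is approximately linear.
    lut = [
        round((c - cdf_min) / (total - cdf_min) * (levels - 1)) if c > 0 else 0
        for c in cdf
    ]
    return [[lut[p] for p in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right (one of the augmentations listed above)."""
    return [row[::-1] for row in image]


# Hypothetical low-contrast 3x3 patch: all values lie in [100, 104].
low_contrast = [[100, 101, 102], [101, 102, 103], [102, 103, 104]]
equalized = equalize_histogram(low_contrast)
flipped = horizontal_flip(low_contrast)
```

After equalization the narrow intensity range of the toy patch is stretched over the full 0 to 255 range, which is the contrast enhancement the text refers to.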

A significant contribution of this paper is the optimization of simple pre-trained architectures such as VGG on well-known FER image datasets. Instead of opting for complex deep neural network models, this study demonstrates that careful fine-tuning can lead to better classification accuracy with simpler architectures. It also investigates the efficacy of histogram equalization and data augmentation in improving FER accuracy on three benchmark FER datasets. Additionally, through extensive experiments, this paper shows the effectiveness of different regularization techniques, callbacks, and learning rate schedulers in enhancing model performance for FER.

The subsequent sections of this paper are structured as follows: Section 2 presents a review of related literature in the field. Section 3 introduces histogram equalization and cosine annealing to aid in understanding the proposed models. Section 4 elaborates on the transfer learning-based model, datasets used, and the experiment pipeline. Section 5 presents the experimental results, while Section 6 provides a thorough discussion of these results. Finally, Section 7 concludes the paper by discussing its significance and outlining potential future work.

2. Related Works

Numerous studies conducted in recent years have focused on FER, employing various techniques. Traditional machine learning approaches have been used alongside CNN models to get important information for classifying emotions extracted from visual objects.

Xiao-Xu et al. [28] employed an ensemble approach using Wavelet Energy Feature (WEF) and Fisher’s Linear Discriminants (FLD) for feature extraction and classification of seven facial expressions (anger, disgust, fear, happiness, neutral, sadness, surprise) within the Japanese Female Facial Expression (JAFFE) dataset [29]. Dhall et al. utilized the Pyramid of Histogram of Gradients (PHOG) [30] and Local Phase Quantization (LPQ) [31] features to encode shape and appearance information. They selected keyframes via K-means clustering [32] of normalized shape vectors from Constrained Local Models (CLM) based face tracking. Emotion classification on the SSPNET [33] and GEMEP-FERA [34] datasets was conducted using SVM and Large Margin Nearest Neighbor (LMNN) [35]. Pu et al. proposed a framework employing two-fold RF classifiers to recognize Action Units (AUs) from image sequences. Facial motion measurement involved tracking Active Appearance Model (AAM) [36] facial feature points with Lucas-Kanade optical flow [37], using displacement vectors between neutral and peak expressions as motion features. These features were fed into a first-level RF for AU determination, followed by a second-level RF for facial expression classification [38]. Golzadeh et al. focused on spatio-temporal feature extraction based on tracked facial landmarks, aiming to develop an automatic emotion recognition system [39]. They employed the KDEF dataset to identify features that represent different human facial expressions, subsequently evaluating them through various classification methods. Using K-fold cross-validation, they attained up to 87% accuracy with the newly devised features and a multiclass SVM classifier. Liew et al. proposed five feature characteristics for FER and compared their performance using different classifiers and datasets (KDEF, CK+, JAFFE, and MUG [40]). Among the Gabor filter, Haar [41], LBP, and Histogram of Oriented Gradients (HOG) [42] features, HOG performed best for FER at higher image resolutions (above 48x48 pixels), averaging 80% accuracy on these datasets [43].

Researchers have found that CNN models offer the most straightforward method for classifying emotions. CNNs are well-suited for image tasks due to their ability to capture various levels of features efficiently and to recognize patterns and objects in images regardless of their positions or sizes. Thakare et al. used several classifiers such as ConvNet, RF classifiers, and Extreme Gradient Boosting (XGBoost) classifiers [44], with the CNN model ConvNet consistently yielding the highest accuracy in emotion classification [45]. Another study proposed a novel FER approach that integrates a CNN with image edge detection to bypass traditional feature extraction. This method involves normalizing facial images, extracting edges, and merging this information with features to preserve structural composition. Subsequently, implicit features are reduced using maximum pooling, followed by softmax classification for emotion recognition. Testing on the FER2013 [46] and LFW [47] datasets resulted in an average emotion detection rate of 88.56% with faster training, approximately 1.5 times quicker than comparative models [48]. Badrulhisham et al. focused on real-time FER, employing MobileNet [49] to train their model and achieving an 85% recognition accuracy for four emotions (happy, sad, surprise, disgust) on their custom dataset [50]. Puthanidam et al. proposed a hybrid approach for facial expression recognition, integrating image pre-processing steps with diverse CNN architectures to enhance accuracy and reduce training time [51]. Experimental validation across multiple databases and facial orientations yielded an accuracy of 89.58% on the KDEF dataset, 100% on the JAFFE dataset, and 71.975% on the combined dataset (KDEF + JAFFE + SFEW). These results were obtained using cross-validation techniques to minimize bias.

A significant amount of work has focused on employing transfer learning techniques with CNN models such as AlexNet [52], SqueezeNet [53], and VGG19, evaluating their efficacy on benchmark datasets including FER2013, JAFFE, KDEF, CK+, SFEW [54], and KMU-FED. VGG19 demonstrated notable performance, achieving 99.7% accuracy on the KMU-FED database and competitive results across other benchmark datasets. Specifically, VGG19 attained accuracies of 98.98% for the CK+ dataset, 92.99% for the KDEF dataset with all data variations, 91.5% for the selected KDEF FrontalView dataset, 84.38% for JAFFE, 66.58% for FER2013, and 56.02% for SFEW [55]. Additionally, some researchers have explored visual emotion recognition through social media images by employing pre-trained VGG19, ResNet50V2, and DenseNet-121 architectures as their base. Through fine-tuning and regularization techniques, these models demonstrated improved performance on Twitter images from the Crowdflower dataset, with DenseNet-121 exhibiting superior accuracies of 73%, 75%, and 89%, respectively [56]. Furthermore, Subudhiray et al. investigated dual transfer learning for facial emotion classification, experimenting with pre-trained CNN architectures including VGG16, ResNet50, Inception ResNet [57], Wide ResNet [58], and AlexNet. By combining extracted feature vectors into various pairs and inputting them into an SVM classifier, this approach showed promising results in terms of accuracy, kappa, and overall accuracy compared to state-of-the-art methods across benchmark datasets such as JAFFE, CK+, KDEF, and FER2013 [59]. Kaur et al. introduced FERFM, a novel approach using fine-tuned MobileNetV2 [60] for FER on mobile devices. In their pipeline, the pre-trained MobileNetV2 architecture is fine-tuned by eliminating the last six layers and adding dropout, max pooling, and dense layers. Using transfer learning from ImageNet, the method achieves an accuracy of 85.7% on the RGB-KDEF dataset. It surpasses VGG16 with faster processing at 43 ms per image and fewer trainable parameters, totaling 1,510,599 [61]. Another study proposed a system that employs a CNN framework using AlexNet’s features, achieving higher accuracy compared to other methods across various datasets like JAFFE, KDEF, CK+, FER2013, and AffectNet [62]. Moreover, the authors showed that it is more efficient and requires fewer device resources than other state-of-the-art deep learning models like VGG16, GoogleNet [63], and ResNet [64]. In another study, Zavarez et al. fine-tuned the VGG-Face deep CNN model, pre-trained for face recognition, and investigated the impact of a cross-database approach [65]. Their results reveal significant accuracy improvements, with average accuracies of 88.58%, 67.03%, 85.97%, and 72.55% on the CK+, MMI, RaFD, and KDEF databases, respectively.

5. Results

In this section, an in-depth discussion of the performance of the VGG models on each dataset is given, followed by an overall performance comparison on all three datasets in Table 7.

5.1. KDEF

For the KDEF dataset, the fine-tuned VGG19 model with histogram equalization achieved a commendable accuracy of 95.92%, while the fine-tuned VGG19 model without histogram equalization lagged by just 1.7 percentage points (94.22%). Similar trends were also observed in the VGG16 architectures, where histogram equalization helped the fine-tuned models achieve slightly better results. On the other hand, the models with all layers frozen and without histogram equalization demonstrated inferior performance.

However, the most significant results are highlighted in

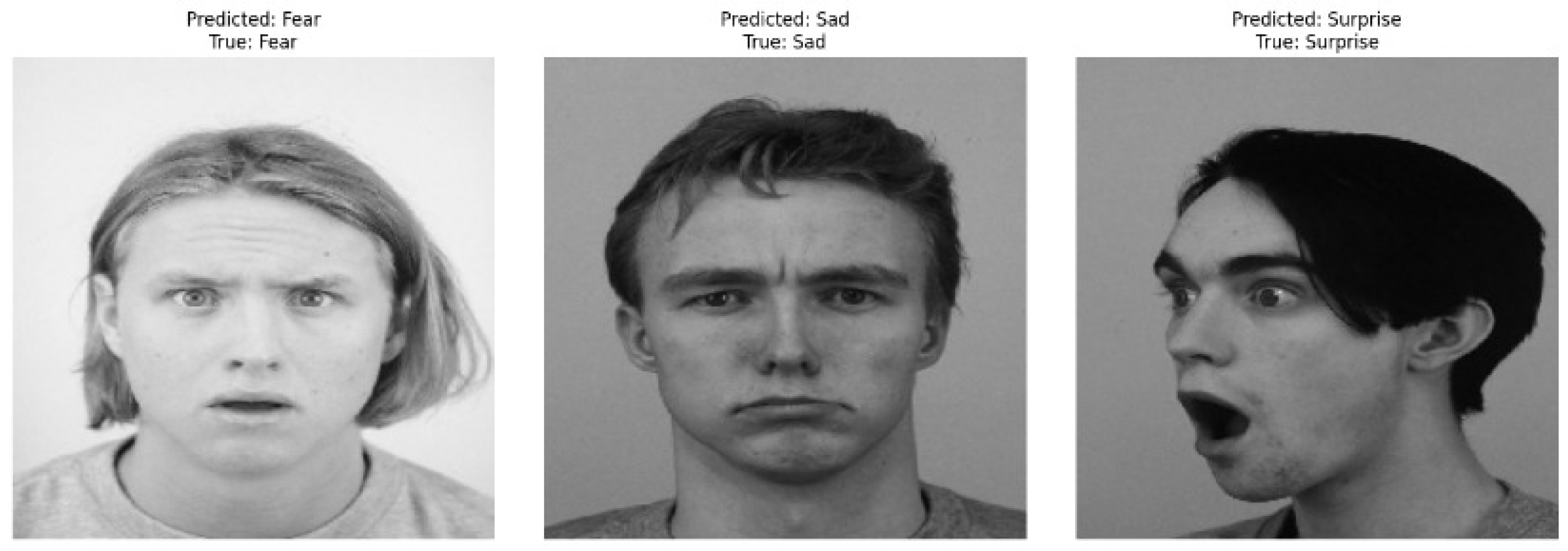

Table 5, which presents class-wise evaluation metrics for our best model, the fine-tuned VGG19 model with histogram equalization. Notably, the highest scores were observed in the happy class, with precision, recall, and F1-score all reaching 100%. Furthermore, our models performed exceptionally when predicting classes with actual emotions (

Figure 3). In both scenarios, the models with the highest accuracies showcased the correct classification of emotions.

5.2. CK+

For the CK+ dataset, our models have achieved the highest accuracies among various architectures. Specifically, the fine-tuned VGG16 and VGG19 models with histogram equalization achieve accuracies of 100% and 98.99%, respectively, while both fine-tuned models without histogram equalization reach 97.98% (Table 7). Additionally, these models exhibit strong performance in terms of the weighted F1, AUC-ROC, and AUC-PRC metrics. The VGG16 and VGG19 models whose base layers are frozen and not fine-tuned show lower accuracy, at 96.97% and 90.91%, respectively.

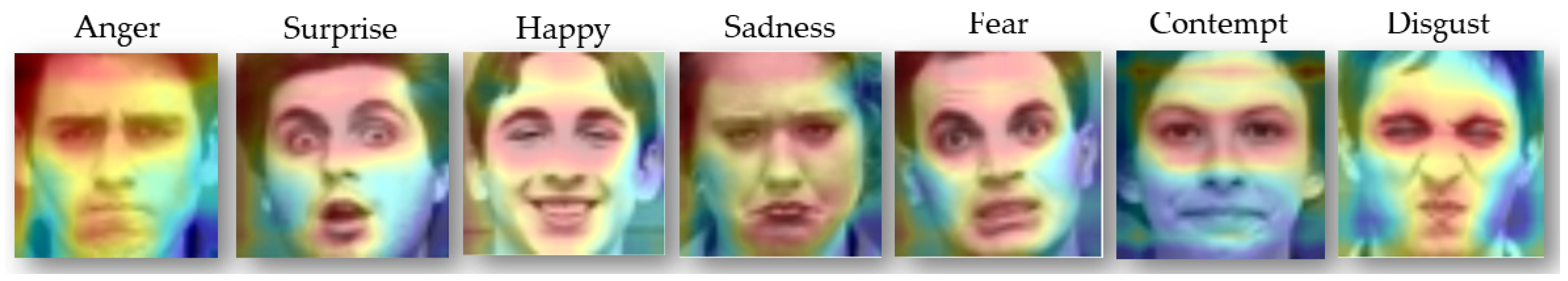

To gain insights into the inner workings of our CNN models, we have employed Grad-CAM (Gradient-weighted Class Activation Mapping) [71]. Grad-CAM is a visualization technique that highlights the regions of an input image that a CNN relies on for classification. By examining the Grad-CAM visualizations (Figure 4), we observe that our models are effectively focusing on crucial areas (red marked areas) where important features are located. This suggests that our models are making informed decisions based on relevant image features.
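The core Grad-CAM computation can be sketched without any deep learning framework. The sketch below assumes that the feature maps of the last convolutional layer and the gradients of the class score with respect to them have already been obtained. The function name `grad_cam` and the toy 2x2 inputs are hypothetical and do not reproduce the code used for Figure 4.

```python
def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations: list of K feature maps (each H x W nested lists).
    gradients:   list of K maps with d(class score)/d(activation), same shape.
    """
    height, width = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]

    for feature_map, grad in zip(activations, gradients):
        # Channel importance: global average of the gradients for this channel.
        weight = sum(sum(row) for row in grad) / (height * width)
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * feature_map[i][j]

    # ReLU keeps only regions that push the class score up.
    cam = [[max(value, 0.0) for value in row] for row in cam]

    # Scale to [0, 1] so the map can be overlaid on the input image.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Hypothetical example: channel 0 supports the class, channel 1 opposes it.
activations = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(activations, gradients)
```

In this toy case only the top-left location remains highlighted, because the second channel has a negative weight and is removed by the ReLU.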

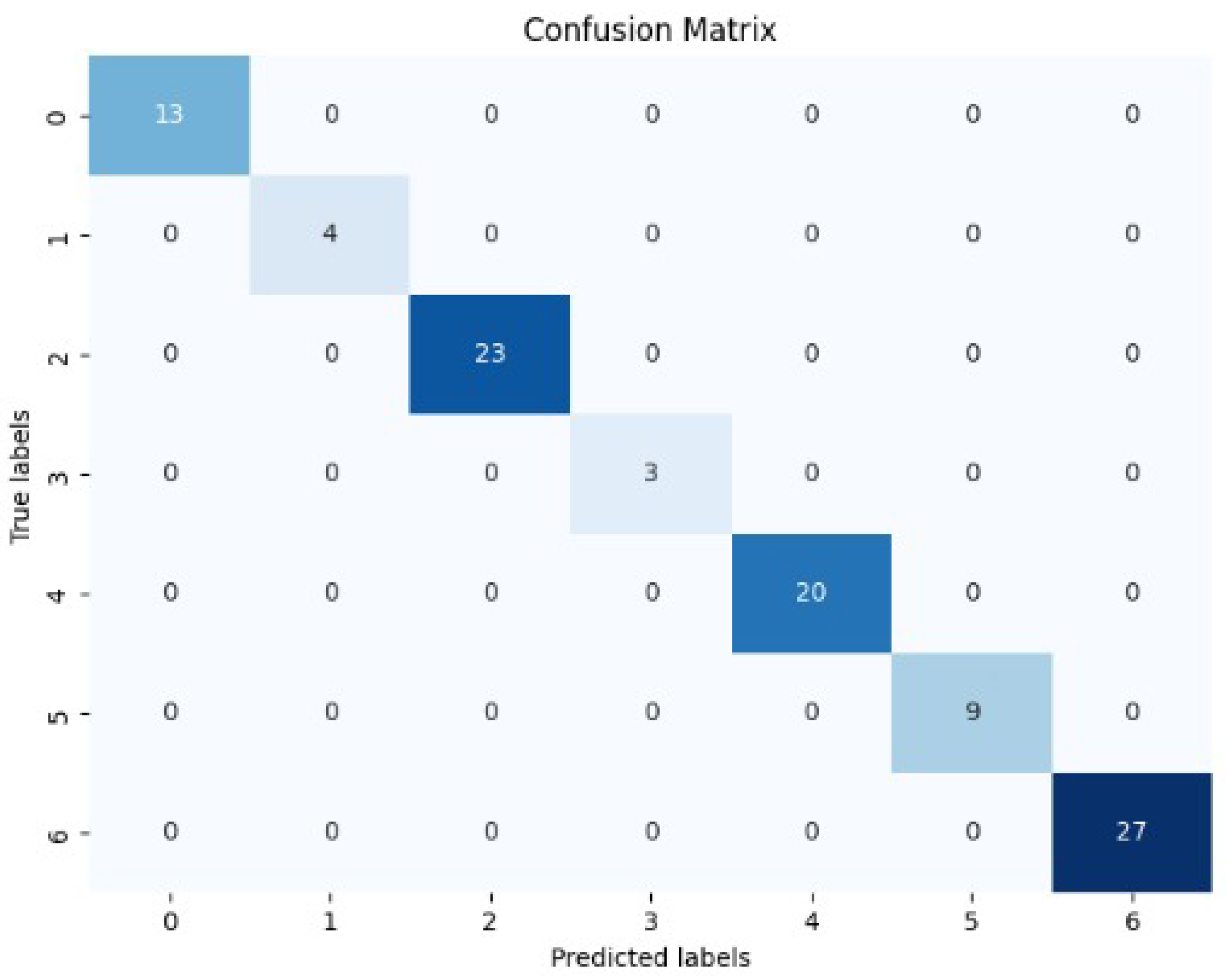

Furthermore, the superiority of our models can be seen in the confusion matrix. The confusion matrix (Figure 5) reveals a zero misclassification rate on the CK+ dataset.
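How a misclassification rate is read off a confusion matrix can be shown with a small sketch. The label lists below are hypothetical and are not the CK+ predictions. The helper names `confusion_matrix` and `misclassification_rate` are chosen for illustration only.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for true_label, predicted_label in zip(y_true, y_pred):
        matrix[true_label][predicted_label] += 1
    return matrix


def misclassification_rate(matrix):
    """Share of samples that fall outside the main diagonal."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return (total - correct) / total


# Hypothetical labels for a three-class problem.
y_true = [0, 1, 2, 2, 1, 0]
perfect = confusion_matrix(y_true, [0, 1, 2, 2, 1, 0], n_classes=3)
one_error = confusion_matrix(y_true, [0, 1, 2, 1, 1, 0], n_classes=3)

perfect_rate = misclassification_rate(perfect)
one_error_rate = misclassification_rate(one_error)
```

A zero rate, as reported for CK+, corresponds to a matrix whose off-diagonal entries are all zero.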

5.3. Filtered FER2013

In the case of the Filtered FER2013 dataset, the fine-tuned VGG16 model with histogram equalization outperforms the other models, achieving an accuracy of 69.65%. The performance difference was marginal between the fine-tuned VGG19 models with and without histogram equalization, which achieved accuracies of 69.44% and 69.06%, respectively (Table 7).

Cosine annealing contributed to this result. When we applied cosine annealing to the fine-tuned VGG16 model with histogram equalization, the model’s accuracy initially plateaued at around 67.57%. However, after reloading the model and training for an additional 30 epochs with cosine annealing, the accuracy improved to 69.65%. Restarting the cosine-annealed learning rate schedule likely allowed the model to escape local minima and explore the parameter space more effectively, ultimately leading to improved performance. The effectiveness of the models is further highlighted in Table 6, which reports per-class precision, recall, and F1-score on the FER2013 dataset.
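The learning rate schedule described above can be sketched as follows. The cycle length of 30 epochs follows the text, while the maximum and minimum learning rates are placeholder values, since the values used in the experiments are not given in this section. The function name `cosine_annealing_lr` is hypothetical.

```python
import math


def cosine_annealing_lr(epoch, cycle_length, lr_max, lr_min=0.0):
    """Learning rate at a given epoch for cosine annealing with restarts."""
    # Position inside the current cycle; the schedule restarts every cycle_length epochs.
    position = epoch % cycle_length
    cosine = math.cos(math.pi * position / cycle_length)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cosine)


# Assumed values for illustration only.
LR_MAX, LR_MIN, CYCLE = 1e-3, 1e-5, 30

# Two consecutive cycles: the initial run and the additional 30 epochs after reloading.
schedule = [cosine_annealing_lr(e, CYCLE, LR_MAX, LR_MIN) for e in range(2 * CYCLE)]
```

Within each cycle the rate decays smoothly from `LR_MAX` towards `LR_MIN`. At the start of the second cycle it jumps back to `LR_MAX`, which is the restart effect the paragraph attributes the accuracy gain to.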

Table 6. Performance of fine-tuned VGG16 model with histogram on different classes in the FER2013 dataset.

| Class | Precision | Recall | F1-score |
|---|---|---|---|
| Angry | 0.62 | 0.62 | 0.62 |
| Disgust | 0.81 | 0.71 | 0.76 |
| Fear | 0.58 | 0.51 | 0.54 |
| Happy | 0.87 | 0.88 | 0.87 |
| Neutral | 0.63 | 0.66 | 0.64 |
| Sad | 0.57 | 0.61 | 0.59 |
| Surprise | 0.81 | 0.80 | 0.80 |
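As a consistency check, the F1-scores in Table 6 can be recomputed from the reported precision and recall using the harmonic mean F1 = 2PR / (P + R). The sketch below uses only the values from the table. The variable and function names are illustrative.

```python
# (precision, recall, reported F1) per class, copied from Table 6.
TABLE_6 = {
    "Angry": (0.62, 0.62, 0.62),
    "Disgust": (0.81, 0.71, 0.76),
    "Fear": (0.58, 0.51, 0.54),
    "Happy": (0.87, 0.88, 0.87),
    "Neutral": (0.63, 0.66, 0.64),
    "Sad": (0.57, 0.61, 0.59),
    "Surprise": (0.81, 0.80, 0.80),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


recomputed = {
    name: round(f1_score(precision, recall), 2)
    for name, (precision, recall, _) in TABLE_6.items()
}
mismatches = [
    name
    for name, (_, _, reported) in TABLE_6.items()
    if abs(recomputed[name] - reported) > 1e-9
]
```

With two-decimal rounding, every recomputed value agrees with the reported F1-score, so `mismatches` is empty.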

Table 7. Performance comparison of different models on various datasets.

| Model | Dataset | Accuracy (%) | Weighted-F1 (%) | AUC-ROC (%) | AUC-PRC (%) |
|---|---|---|---|---|---|
| VGG19 with all layers frozen + No Histogram | CK+ | 90.91 | 91.00 | 99.00 | 96.00 |
| Fine-tuned VGG19 + No Histogram | CK+ | 97.98 | 98.00 | 100.00 | 99.00 |
| Fine-tuned VGG19 + Histogram | CK+ | 98.99 | 99.00 | 100.00 | 100.00 |
| VGG16 with all layers frozen + No Histogram | CK+ | 96.97 | 97.00 | 100.00 | 99.00 |
| Fine-tuned VGG16 + No Histogram | CK+ | 97.98 | 98.00 | 100.00 | 100.00 |
| Fine-tuned VGG16 + Histogram | CK+ | 100.00 | 100.00 | 100.00 | 100.00 |
| VGG19 with all layers frozen + No Histogram | KDEF | 54.76 | 53.93 | 86.65 | 59.29 |
| Fine-tuned VGG19 + No Histogram | KDEF | 94.22 | 94.20 | 99.64 | 98.25 |
| Fine-tuned VGG19 + Histogram | KDEF | 95.92 | 95.90 | 99.60 | 98.53 |
| VGG16 with all layers frozen + No Histogram | KDEF | 56.80 | 56.62 | 88.97 | 62.80 |
| Fine-tuned VGG16 + No Histogram | KDEF | 92.18 | 92.12 | 99.62 | 98.08 |
| Fine-tuned VGG16 + Histogram | KDEF | 92.86 | 92.87 | 99.69 | 98.34 |
| VGG19 with all layers frozen + No Histogram | FER2013 | 35.99 | 29.00 | 57.70 | 19.73 |
| Fine-tuned VGG19 + No Histogram | FER2013 | 69.06 | 68.57 | 80.87 | 52.26 |
| Fine-tuned VGG19 + Histogram | FER2013 | 69.44 | 68.34 | 80.20 | 51.58 |
| VGG16 with all layers frozen + No Histogram | FER2013 | 41.20 | 35.53 | 60.36 | 22.22 |
| Fine-tuned VGG16 + No Histogram | FER2013 | 68.80 | 69.29 | 81.34 | 52.37 |
| Fine-tuned VGG16 + Histogram | FER2013 | 69.65 | 69.65 | 80.75 | 51.83 |

5.4. Comparison of Methods

The comparison of methods primarily focused on the accuracy metric, as most other researchers did not employ additional metrics like AUC-ROC or AUC-PRC. The comparison of methods on the KDEF, CK+, and FER2013 datasets is presented in Table 8.

Our model demonstrates exceptional performance on the KDEF dataset compared to state-of-the-art works. The closest contender is the study by Sahoo et al., which achieved nearly 93% accuracy using a transfer learning model based on VGG19. On the CK+ dataset, our model also achieves the best reported accuracy. Although the work of Dar et al. achieved the same accuracy, their model was notably complex, with each convolutional block comprising approximately 18 layers.

However, the discussion primarily centers around the FER2013 dataset, where our model exhibits relatively good performance. We utilized the filtered FER2013 dataset from the work of Bialek et al., whose single 5-layer model achieved approximately 70.09% accuracy. When we attempted to implement the same model on the filtered FER2013 dataset, we achieved only 68.27% accuracy. Therefore, we implemented our own pipeline, achieving an accuracy of 69.65%. Moreover, despite employing a simpler model based on pre-trained VGG16, our model compares favorably with other works that utilize more complex architectures. Notably, our model takes less time because it uses fewer parameters.

6. Discussion

This section highlights key observations from our experiments and compares our models with existing works in the field. We discuss the observations that are crucial for understanding our approach and provide a thorough comparison of our models with those previously established in the literature.

The comparison between models with all layers frozen and fine-tuned models, both without histogram equalization, reveals significant differences in performance across datasets. For instance, on the KDEF dataset, the VGG19 with all layers frozen + No Histogram achieved an accuracy of 54.76%, whereas the Fine-tuned VGG19 + No Histogram achieved 94.22%. This variation underscores the limitations of freezing all layers, as it limits the model’s adaptability to the new task/domain by preserving fixed feature extraction mechanisms. Conversely, unfreezing the last five layers allows for fine-tuning, enabling the model to learn task-specific representations and enhance performance by using pre-trained weights while accommodating adjustments to suit the new task requirements.
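The freezing strategy can be illustrated with a framework-free sketch. It assumes the Keras layer naming convention for VGG (`blockN_convM`, `blockN_pool`) and assumes that the unfrozen part corresponds to block 5, which for VGG19 contains exactly five layers (four convolutions and one pooling layer). Both points are assumptions rather than details confirmed in this section. The helper names are hypothetical.

```python
def vgg_layer_names(convs_per_block):
    """List convolutional-base layer names in Keras-style VGG naming."""
    names = []
    for block, n_convs in enumerate(convs_per_block, start=1):
        names += [f"block{block}_conv{i}" for i in range(1, n_convs + 1)]
        names.append(f"block{block}_pool")
    return names


# Number of convolutional layers per block in the two architectures.
VGG16_BLOCKS = (2, 2, 3, 3, 3)
VGG19_BLOCKS = (2, 2, 4, 4, 4)


def freeze_plan(layer_names, trainable_prefix="block5"):
    """Map each layer name to True (trainable) or False (frozen)."""
    return {name: name.startswith(trainable_prefix) for name in layer_names}


plan16 = freeze_plan(vgg_layer_names(VGG16_BLOCKS))
plan19 = freeze_plan(vgg_layer_names(VGG19_BLOCKS))

unfrozen16 = [name for name, trainable in plan16.items() if trainable]
unfrozen19 = [name for name, trainable in plan19.items() if trainable]
```

In a Keras model, such a plan would typically be applied by setting each layer's `trainable` attribute before compiling. The fully frozen baseline corresponds to a plan in which every value is `False`.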

Furthermore, we compare the models’ performance with and without histogram equalization. Notably, all fine-tuned models exhibited slightly improved performance with histogram equalization. As indicated in Table 7, fine-tuned models using histogram-equalized images showed a 0.3-2% enhancement on average compared to their counterparts without histogram equalization. However, exceptions were observed in the CK+ dataset, where both fine-tuned models, with and without histogram equalization, exhibited similar performance.
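The size of the histogram equalization effect can be recomputed directly from the accuracies in Table 7. The sketch below uses only those reported values. The dictionary layout and variable names are illustrative.

```python
# Accuracy (%) of fine-tuned models from Table 7: (without, with) histogram equalization.
ACCURACY = {
    ("CK+", "VGG19"): (97.98, 98.99),
    ("CK+", "VGG16"): (97.98, 100.00),
    ("KDEF", "VGG19"): (94.22, 95.92),
    ("KDEF", "VGG16"): (92.18, 92.86),
    ("FER2013", "VGG19"): (69.06, 69.44),
    ("FER2013", "VGG16"): (68.80, 69.65),
}

# Gain in percentage points from applying histogram equalization.
gains = {
    key: round(with_hist - without_hist, 2)
    for key, (without_hist, with_hist) in ACCURACY.items()
}

smallest_gain = min(gains.values())
largest_gain = max(gains.values())
mean_gain = sum(gains.values()) / len(gains)
```

The gains computed this way range from 0.38 to 2.02 percentage points, with a mean of roughly 1.1.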

In the case of the Filtered FER2013 dataset, one interesting observation is the performance obtained with the image size set to 144x144. Although the original VGG16 and VGG19 models were trained on 224x224x3 ImageNet images, the 144x144 image size showed promising results across all experiments. This deviation from the conventional image size might be attributed to the potential loss of information and degradation of image quality when resizing an image to larger dimensions. When an image is resized to a larger size, the existing pixels are stretched to fill the new dimensions, which can lead to blurriness or pixelation. This loss of information may have been mitigated by selecting a size of 144x144, which preserved the original content of the image while maintaining a balance between image quality and resolution.
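The amount of upscaling involved can be made concrete with a short calculation. It assumes that the FER2013 images have their usual native resolution of 48x48 pixels, which is not stated in this section. The function name `upscale_summary` is hypothetical.

```python
NATIVE_SIZE = 48  # assumption: native FER2013 resolution in pixels per side


def upscale_summary(target_size, native_size=NATIVE_SIZE):
    """Describe how strongly an image is stretched when resized to target_size."""
    factor = target_size / native_size
    return {
        "target_size": target_size,
        "linear_factor": factor,
        # An integer factor maps every source pixel to a whole block of output pixels.
        "integer_factor": target_size % native_size == 0,
        # Number of output pixels that must be derived from each source pixel.
        "output_pixels_per_source_pixel": factor ** 2,
    }


chosen = upscale_summary(144)
conventional = upscale_summary(224)
```

Under this assumption, 144x144 is an exact threefold enlargement (9 output pixels per source pixel), whereas 224x224 requires a non-integer factor of about 4.67 (roughly 21.8 output pixels per source pixel), so considerably more of the input would be interpolated.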

When handling a small dataset like CK+, training models like VGG16 and VGG19 can be prone to overfitting due to their extensive parameter count. However, employing regularization techniques and training techniques, coupled with data augmentation, aids in effectively training the model with this limited data. This effectiveness can be seen in Figure 4, where the model effectively identifies crucial features in facial data across various classes, showcasing its robustness even with a constrained dataset.