1. Introduction

Image stitching is a technology that aligns and blends multiple images to generate a high-resolution, wide-field-of-view and artifact-free mosaic. It has broad and promising applications in many fields such as virtual reality, remote sensing mapping, and urban modeling. The calculation of the global homography, as an important step in image stitching [1, 2], directly determines the image alignment accuracy and the final user experience. However, global homography only works for planar scenes or rotation-only camera motions. For non-planar scenes or when the optical centers of the cameras do not coincide, homography tends to cause misalignment, resulting in blurring and ghosting in the mosaic. It can also cause perspective deformation, making the final mosaic blurred and severely stretched at the edges. Many solutions have been proposed to solve the problems of parallax and perspective deformation in image stitching, so as to improve the quality of stitched images. But most state-of-the-art mesh-based [3, 4, 5] and multi-plane [6, 7, 8] methods are time-consuming and vulnerable to false matches.

In this work, an image stitching method combining sliding camera (SC) and asymmetric optical flow (AOF), referred to as the SC-AOF method, is proposed to reduce both perspective deformation and alignment error. In the non-overlapping area of the mosaic, the SC-AOF method keeps the viewpoint of the mosaic the same as those of the input images, or differing from them only by a rotation around the camera Z axis. In the overlapping area of the mosaic, the viewpoint changes gradually from one input image viewpoint to the other, which effectively reduces the perspective deformation at the edges. A global projection plane is estimated to project the input images onto the mosaic; after that, an asymmetric optical flow method is employed to further align the images. In the blending, a softmax function and the alignment error are used to dynamically adjust the width of the blending area to further eliminate ghosting and improve the mosaic quality. This paper makes the following contributions:

The SC-AOF method innovatively uses an approach based on sliding camera to reduce perspective deformation. Combined with either a global projection model or a local projection model, this method can effectively reduce the perspective deformation.

An optical flow-based image alignment and blending method is adopted to further mitigate misalignment and improve stitching quality of mosaic generated by a global projection model.

Each step in the SC-AOF method can be combined with other methods to improve the stitching quality of those methods.

This article is organized as follows. Section 2 presents the related works. Section 3 first introduces the overall method of this article, and then elaborates an edge stretching reduction method based on the sliding camera and a local misalignment reduction method based on asymmetric optical flow. Section 4 presents our qualitative and quantitative experimental results compared with other methods. Finally, Section 5 concludes the paper.

2. Related Works

For local alignment, APAP (as-projective-as-possible) [8, 9] uses the weighted DLT (direct linear transform) method to estimate location-dependent homographies and thereby eliminate misalignment. But if some key-points are matched incorrectly, the image areas near these key-points may have incorrect homographies, resulting in serious alignment errors and distortion. REW (robust elastic warping) [10, 11] uses the TPS (thin-plate spline) interpolation method to convert discrete matched feature points into a deformation field, which is used to warp the image and achieve accurate local alignment. TFT (triangular facet approximation) [6] uses Delaunay triangulation and the matched feature points to triangulate the mosaic canvas, and the warping inside each triangle is determined by the homography calculated from the three triangle vertices, so false matches will lead to serious misalignment. The warping-residual-based image stitching method [7] first estimates multiple homography matrices and calculates the warping residuals of each matched feature point with respect to them. The homography of each region is then estimated using moving DLT with the difference of the warping residuals as the weight, which allows the method to handle larger parallax than APAP but makes it less robust to incorrectly estimated homographies. The NIS (natural image stitching) [12] method estimates a pixel-to-pixel transformation based on feature matches and the depth map to achieve accurate local alignment. In [13], by increasing feature correspondences and optimizing hybrid terms, sufficient correct feature correspondences are obtained in low-texture areas to eliminate misalignment. These two methods require additional calculations to enhance robustness, yet remain susceptible to uneven distribution and false matches of feature points.

For perspective deformation, SPHP (shape preserving half projective) [14, 15] spatially combines perspective transformation and similarity transformation to reduce deformation. Perspective transformation better aligns pixels in overlapping areas, and similarity transformation preserves the viewpoint of the original image in non-overlapping areas. AANAP (adaptive as-natural-as-possible) [16] derives the appropriate similarity transform directly from matched feature points, and uses weights to transition gradually from perspective transform to similarity transform. The transitions from the homography of the overlapping area to the similarity matrix of the non-overlapping area adopted by SPHP and AANAP are artificial and unnatural, and can generate some "strange" homography matrices, causing significant distortion in the overlapping area. GSP (global similarity prior) [17, 18] adds a global similarity prior to constrain the warping of each image so that it resembles a similarity transformation as a whole and avoids large perspective distortion. In SPW (single-projective warp) [19], the quasi-homography warp [20] is adopted to mitigate projective distortion and preserve a single perspective. SPSO (structure preservation and seam optimization) [4] uses a hybrid warping model based on multi-homography and mesh-based warp to obtain precise alignment of areas at different depths while preserving local and global image structures. GES-GSP (geometric structure preserving-global similarity prior) [21] employs deep learning-based edge detection to extract various types of large-scale edges, and further introduces large-scale geometric structure preservation into GSP to preserve the curves in images and protect them from distortion. These methods all require constructing and solving a very large linear system to obtain the corresponding coordinates after mesh warping.

Based on the above analysis, generating a natural mosaic quickly and robustly remains a challenging task.

3. Methodology

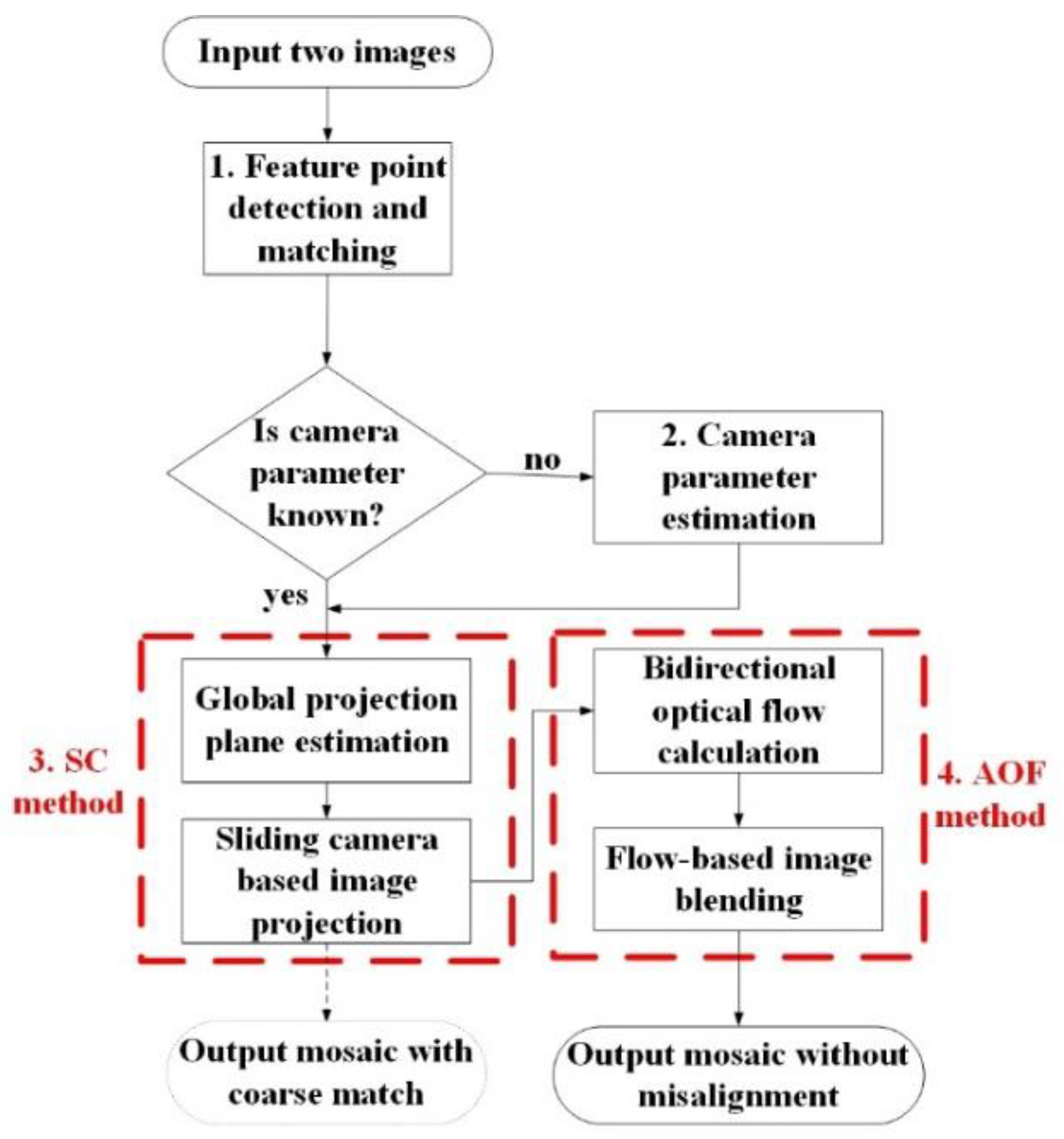

The flow chart of the SC-AOF algorithm is illustrated in Figure 1. The details of each step are described below.

Step 1: Feature point detection and matching. SIFT (scale-invariant feature transform) and SURF (speeded-up robust features) are generally used to detect and describe key-points in the two input images. Using the KNN (k-nearest neighbor) method, a group of matched points is extracted from the key-points and used for camera parameter estimation in step 2 and global projection plane calculation in step 3.

Step 2: Camera parameter estimation. The intrinsic and extrinsic camera parameters are the basis of the SC method, and can be obtained in advance or estimated. When camera parameters are known, we can skip step 1 and directly start from step 3. When camera parameters are unknown, they can be estimated by minimizing the epipolar and planar errors, as described in Section 3.3.

Step 3: Sliding camera based image projection. In this step, we first estimate the global projection plane, then adjust the camera projection matrix and generate a virtual camera in the overlapping area by interpolation, and finally obtain the warped images by global planar projection, as detailed in Section 3.1. Misalignment can still be found between the two warped images obtained in this step. Therefore, the AOF method is used in step 4 to further improve the alignment accuracy.

Step 4: Flow-based image blending. In this step, we first calculate the bidirectional asymmetric optical flow between the two warped images, and then use the optical flow to further align and blend the two warped images into the mosaic (see Section 3.2 for more details).
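The matching in Step 1 can be illustrated with a minimal NumPy sketch. It assumes the key-point descriptors are already available as arrays, uses brute-force k-nearest-neighbor search with k = 2, and filters matches with a distance ratio test. The ratio test and the threshold of 0.75 are assumptions made for illustration and are not stated in this paper; `knn_ratio_match` is a hypothetical helper name.

```python
import numpy as np

def knn_ratio_match(desc1, desc2, ratio=0.75):
    """Match descriptors with 2-nearest-neighbor search and a ratio test.

    desc1: (N1, D) array, desc2: (N2, D) array with N2 >= 2.
    Returns a list of (index_in_desc1, index_in_desc2) pairs.
    The ratio threshold is an illustrative assumption.
    """
    # Pairwise Euclidean distances, shape (N1, N2).
    dist = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)
    # Indices of the two closest descriptors in desc2 for every row of desc1.
    nearest = np.argsort(dist, axis=1)[:, :2]
    matches = []
    for i, (j1, j2) in enumerate(nearest):
        # Keep the match only if the best candidate is clearly better
        # than the second best one.
        if dist[i, j1] < ratio * dist[i, j2]:
            matches.append((i, int(j1)))
    return matches
```

Ambiguous key-points, whose two nearest neighbors are almost equally distant, are dropped by this filter, which reduces the number of false matches passed to steps 2 and 3.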

3.1. SC: Viewpoint Preservation Based on Sliding Camera

The sliding camera (SC) method is proposed here for the first time to reduce perspective deformation, and is the first stage of the SC-AOF method. This section first introduces the stitching process of this method, and then details how to calculate the global projection plane and the sliding projection matrix required by it.

3.1.1. SC Stitching Process

To ensure that the mosaic maintains the perspective of the two input images, the SC method is used. That is, in the non-overlapping areas, the viewpoints of the two input images are preserved. In the overlapping area, the viewpoint of the camera gradually transitions from that of one input image to that of the other.

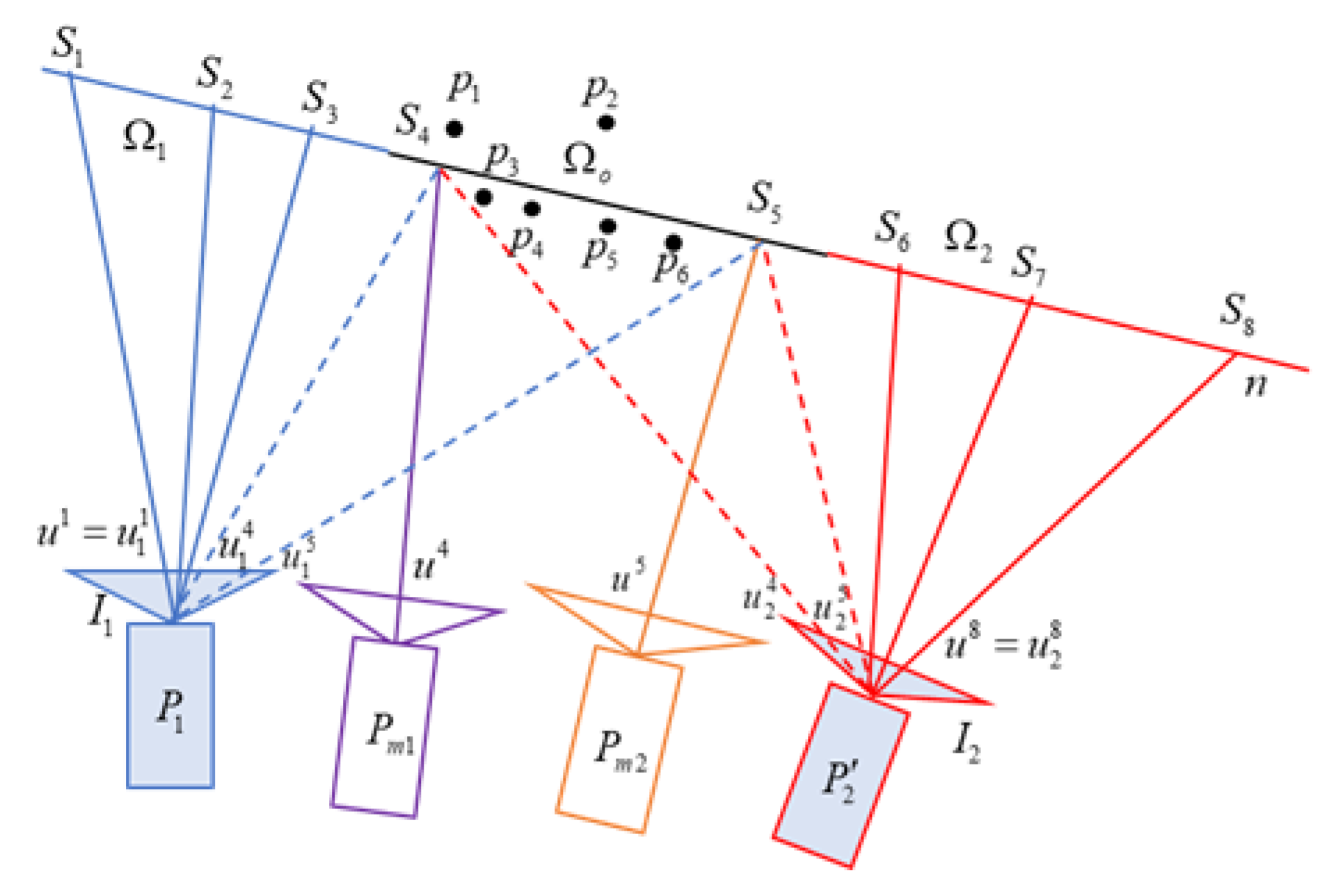

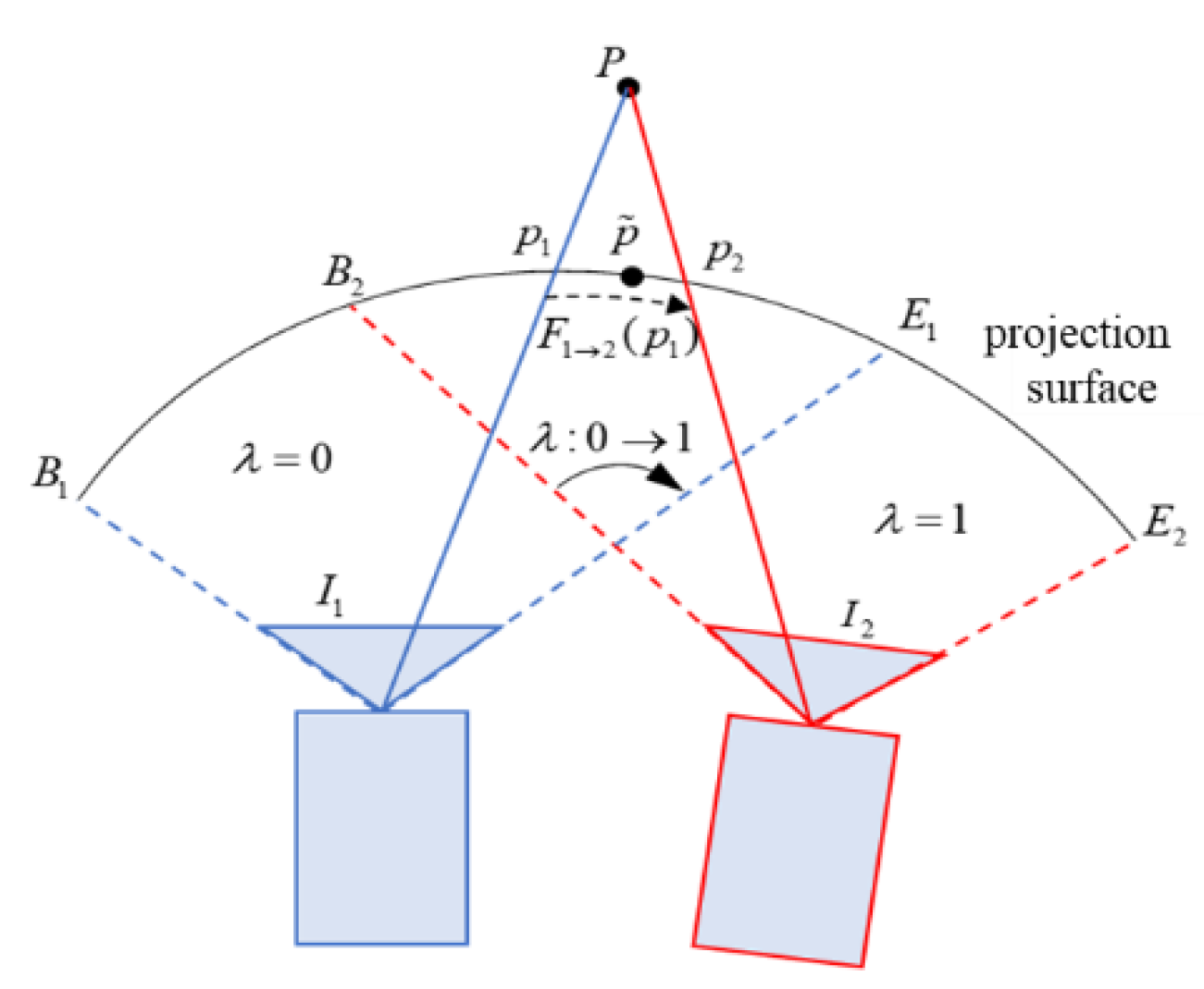

As shown in

Figure 2, the image

and

are back-projected onto the projection surface

, so that the corresponding non-overlapping areas

,

and overlapping area

are obtained. Assume that the pixels in the mosaic

are

, which correspond to the sampling points

in the projection surface

. When the sampling points are within the projection area

of image

, the mosaic is generated from the viewpoint of

.

are the intersection points of the backprojection lines of

in

and the projection surface

. Therefore,

, where

is the projection matrix of

. When the sampling points are within the projection area

of image

, the mosaic is generated from the camera viewpoint of

. Similarly, we obtain

and

, where

=6,7,8. In the overlapping area

of

and

, the SC method is used to generate a virtual camera, whose viewpoint gradually transitions from the viewpoint of

to that of

.

and

are the intersection points of the back-projection lines of

,

in the virtual camera and projection plane $n$, respectively. The virtual camera's image is generated from images

and

using perspective transformation. For example, pixel

of the virtual camera corresponds to pixel

in

and pixel

in

, and is generated by blending the latter two pixels.

Global projection surface calculation. In order to match the corresponding pixels

of

and

of

, the projection surface

needs to be as close as possible to the real scene points. The moving plane method [7, 8, 9] or the triangulation method [6] can be used to obtain a more accurate scene surface. However, since the SC-AOF method uses optical flow to further align the images, only a global plane is calculated as the projection surface, for the sake of stitching speed and stability. Section 3.1.2 calculates the optimal global projection surface using the matched points.

Sliding camera generation. Generally, since the pixel coordinates of

and

are not uniform, in the mosaic

, when

in the non-overlapping area of

,

is false in the non-overlapping area of

, where

is the sampling point on the projection surface. It is necessary to adjust the projection matrix of

to

, so that

, as illustrated by the red camera in Figure 2. Section 3.1.3 derives the adjustment of the camera projection matrix, interpolates in the overlapping area to generate a sliding camera, and obtains the warped images of

and

.

3.1.2. Global Projection Surface Calculation

The projection matrices of cameras

and

corresponding to images

and

are:

where

and

are the intrinsic parameter matrices of

and

respectively;

is the inter-camera rotation matrix; and

is the location of the optical center of

in the coordinate system

.

The relationship between the projection

in

and the projection

in

of 3D point

on plane $n$ is:

where

and

are the homogeneous coordinates of

and

respectively. The intersection point

satisfies

.

means that

is parallel to

.

If camera parameters

,

and

are known, then (3) can be derived from (2):

where

and

are the normalized coordinates of

and

.

We use (3) for all matched points to construct an overdetermined system of equations and obtain the fitted global plane by solving it. Since the optical flow-based stitching method will be used to further align the images, the RANSAC method is not used here to find the plane with the most inliers. Instead, the global plane that fits all feature points as closely as possible is selected; the misalignment caused by global plane projection is then resolved during optical flow blending.
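As an illustration of such a least-squares plane fit, the following sketch solves for a global plane from matched normalized coordinates. The parameterization is an assumption made for this example and may differ from the exact form of (2) and (3): the first camera is taken as the reference frame, the second camera has rotation `R` and optical center `t` in that frame, and the plane is written as n·X = 1. Under these assumptions a point on the plane satisfies x2 ∥ R(I − t nᵀ)x1, which is linear in n. The names `skew` and `fit_global_plane` are hypothetical.

```python
import numpy as np

def skew(v):
    """Cross-product matrix, so that skew(v) @ w equals np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def fit_global_plane(x1n, x2n, R, t):
    """Least-squares plane n with n.X = 1 in the first camera frame (assumed form).

    x1n, x2n: (N, 3) homogeneous normalized coordinates of matched points.
    R: rotation of the second camera; t: its optical center in the first frame.
    """
    Rt = R @ t
    rows, rhs = [], []
    for a, b in zip(x1n, x2n):
        S = skew(b)
        # b x [R (I - t n^T) a] = 0   =>   (S R t) a^T n = S R a
        rows.append(np.outer(S @ Rt, a))
        rhs.append(S @ R @ a)
    A = np.vstack(rows)          # (3N, 3) stacked coefficient matrix
    y = np.concatenate(rhs)      # (3N,) stacked right-hand side
    n, *_ = np.linalg.lstsq(A, y, rcond=None)
    return n
```

All matches contribute to the fit with equal weight, in line with the choice above of not using RANSAC at this stage.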

3.1.3. Projection Matrix Adjustment and Sliding Camera Generation

To preserve the viewpoint in the non-blending area of

, it is only required to satisfy

.

is a similarity transformation between

and

, and can be obtained by fitting the matched feature points:

where

and

are the homogeneous coordinates of pixels in

and

respectively.

Therefore, in the non-overlapping area of , , where is the corresponding 3D point of on plane . So we get the projection matrix =.

By RQ decomposition, the internal parameter matrix

and rotation

are extracted from

:

where

and

are an upper triangular matrix and a rotation matrix, respectively, and the third row of both matrices is

.
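For reference, an RQ decomposition of a 3×3 matrix can be obtained from NumPy's QR decomposition, as in the sketch below. This is a generic construction, not necessarily the implementation used in this work; `rq3` is a hypothetical helper name, and the matrix is assumed to be invertible so that the diagonal of the triangular factor is non-zero.

```python
import numpy as np

def rq3(M):
    """Decompose M = U @ Q with U upper triangular and Q orthogonal.

    The diagonal of U is made positive, which makes the decomposition
    unique for an invertible M.
    """
    P = np.flipud(np.eye(3))              # exchange (row-reversal) matrix
    Q_, R_ = np.linalg.qr((P @ M).T)      # QR of the flipped, transposed matrix
    U = P @ R_.T @ P                      # upper triangular factor
    Q = P @ Q_.T                          # orthogonal factor
    S = np.diag(np.sign(np.diag(U)))      # sign fix, S @ S = I
    return U @ S, S @ Q
```

Applied to the product of an intrinsic matrix and a rotation, the first factor recovers the intrinsic matrix and the second recovers the rotation.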

Compared with , has a different intrinsic parameter matrix, and its rotation matrix only differs by one rotation around axis, and its optical center is not changed.

From

to

, the projection matrix transitions from

to

.

,

and

in the projection matrix

of the virtual camera can be calculated by weighting the corresponding intrinsic parameter matrices, rotation matrices and translation vectors of

and

:

where

represent the quaternions corresponding to

,

and

,

is the angle between

and

, and

is the weighting coefficient.
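The interpolation of the virtual camera can be sketched as follows, assuming linear weighting for the intrinsic matrix and the translation vector and spherical linear interpolation (slerp) for the rotation quaternions. The quaternion ordering (w, x, y, z) and the function names are assumptions made for this example.

```python
import numpy as np

def slerp(q1, q2, m):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    q1 = q1 / np.linalg.norm(q1)
    q2 = q2 / np.linalg.norm(q2)
    dot = float(np.dot(q1, q2))
    if dot < 0.0:                  # q and -q encode the same rotation:
        q2, dot = -q2, -dot        # take the shorter arc
    theta = np.arccos(min(dot, 1.0))   # angle between the two quaternions
    if theta < 1e-8:               # nearly identical rotations
        return q1
    return (np.sin((1.0 - m) * theta) * q1 + np.sin(m * theta) * q2) / np.sin(theta)

def interpolate_camera(K1, q1, t1, K2, q2, t2, m):
    """Virtual camera parameters for weighting coefficient m in [0, 1]."""
    K = (1.0 - m) * K1 + m * K2    # linear weighting of intrinsics
    t = (1.0 - m) * t1 + m * t2    # linear weighting of translations
    return K, slerp(q1, q2, m), t
```

With m = 0 the parameters of the first camera are returned and with m = 1 those of the second, so the viewpoint slides continuously across the overlapping area.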

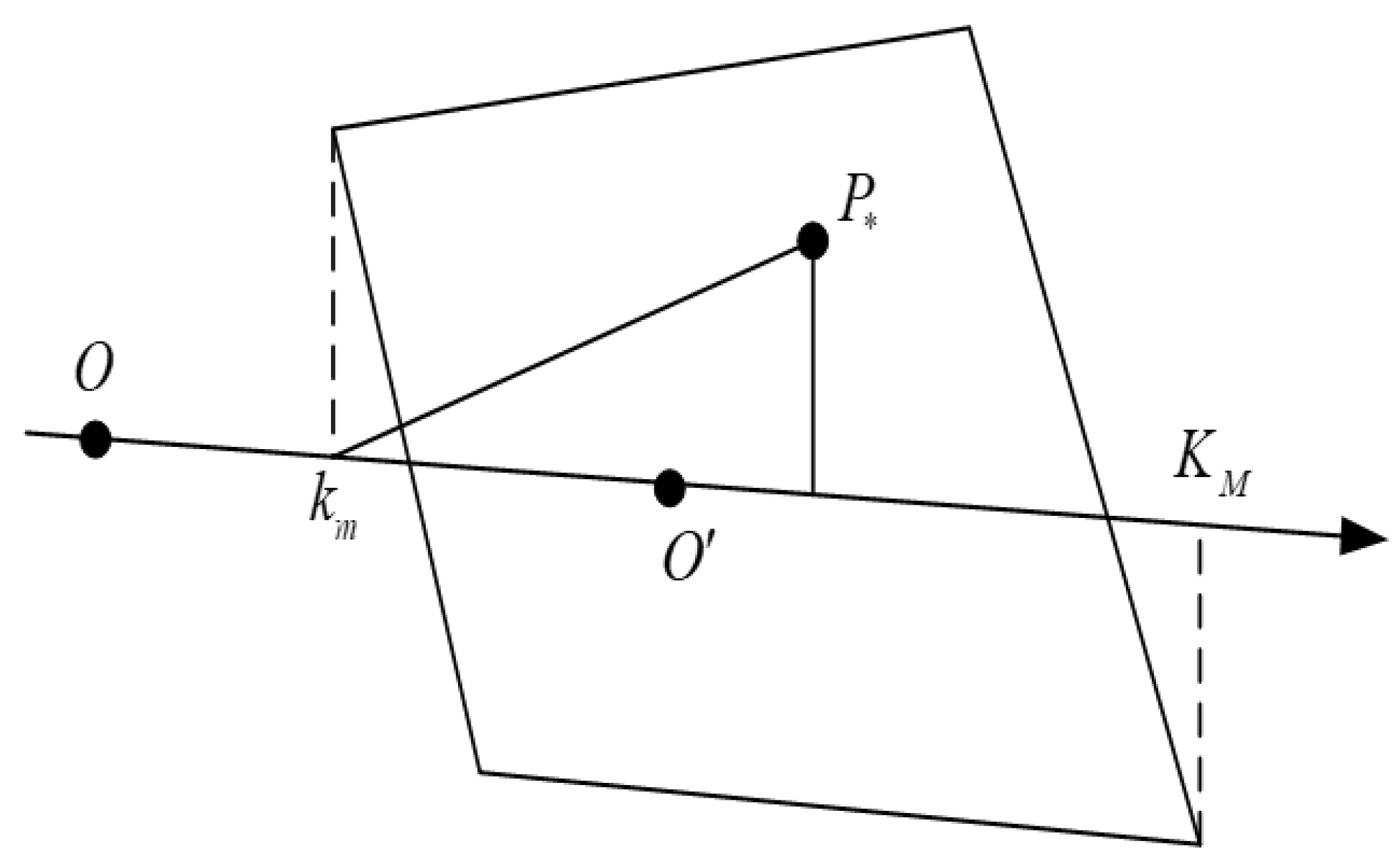

The weighting coefficient $m$ can be calculated by the method in AANAP [16]:

The meaning of each variable is shown in

Figure 3. In the overlapping area, if

corresponds to sliding camera

, then the relation between

and

in

can be expressed as:

(10) and (11) are also applicable to the non-overlapping areas. Projecting

and

through

and

onto the mosaic respectively to get warped images

and

. Obviously:

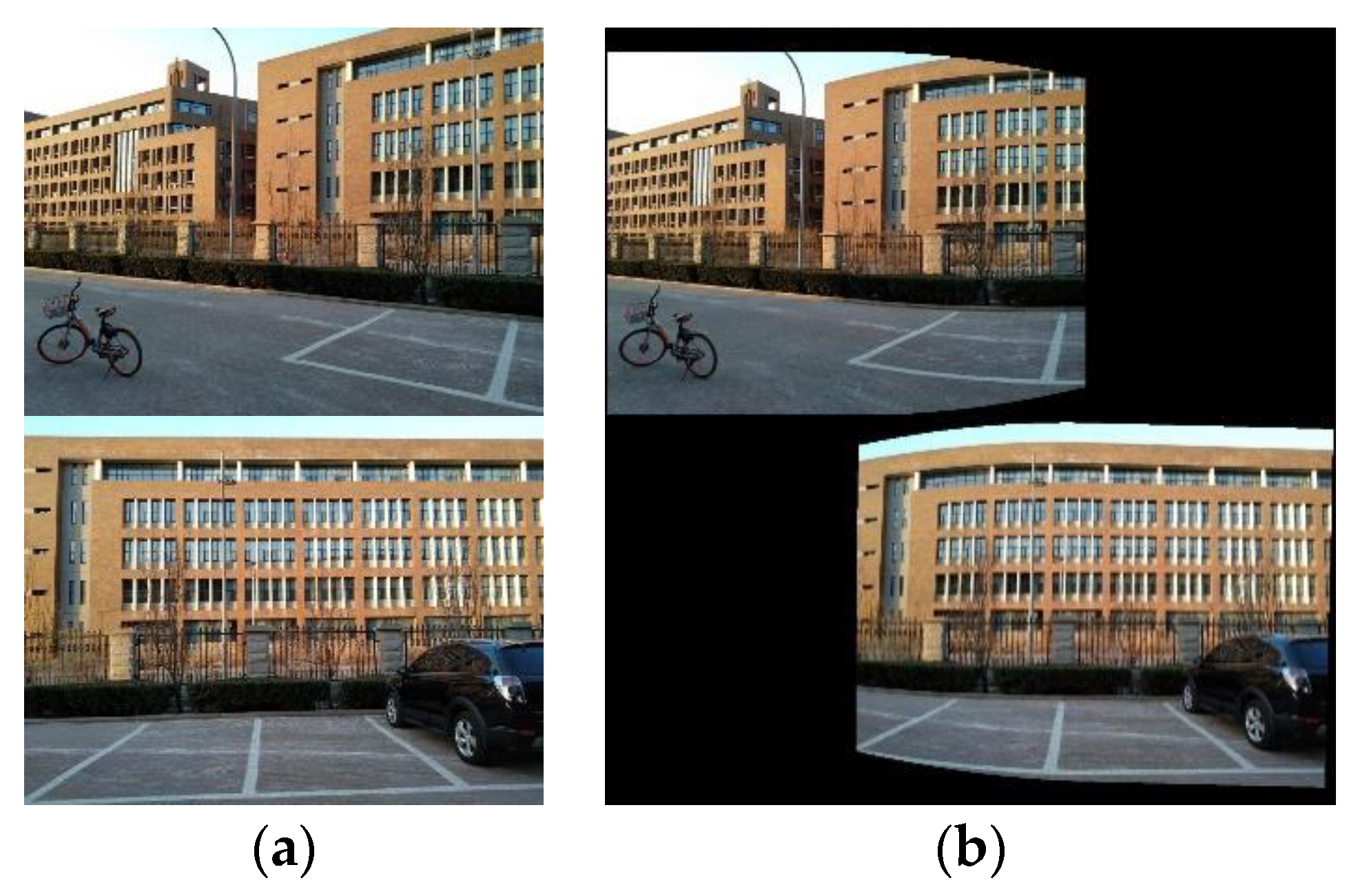

Figure 4 shows the experimental result on the two school images used in [10]. Due to the parallax between

and

, blending

and

will cause ghosting. Therefore, the next section will use an optical flow based blending method (AOF) to further align the images.

3.2. AOF: Image Alignment Based on Asymmetric Optical Flow

The mosaic generated by the SC method will inevitably have misalignment in most cases, so an optical-flow-based method is further employed to achieve more accurate alignment. This section first introduces the image alignment process based on asymmetric optical flow (AOF), and then details the calculation of AOF.

3.2.1. Image Fusion Process of AOF

and

are projected onto the custom projection surface to obtain warped images

and

, which are blended to generate the mosaic

. As the 3D points of the scene are not always on the projection plane, ghosting artifacts can be seen in the mosaic, as shown in

Figure 4 in the above section. Direct multi-band image blending will lead to artifacts and blurring. As shown in

Figure 5, point

is projected to two points

and

in the mosaic, resulting in duplication of content. To solve the ghosting problem in the mosaic, the optical flow-based blending method in [22] is adopted.

Suppose

represents the optical flow value of

in

and

represents the optical flow value of

in

. If the blending weight of pixel

in the overlapping area is

(from the non-overlapping area of

to the non-overlapping area of

,

gradually transitions from 0 to 1, as shown in Figure 5), then after blending, the pixel value of image

at

is:

Where represents the corresponding value of in , represents the corresponding value of in . That is, for any pixel in the overlapping area of the mosaic, its final pixel value can be obtained by a weighted combination of its corresponding values in the two warped images using optical flow.

To obtain a better blending effect, following the method presented by Meng and Liu [23], a softmax function is used to make the mosaic transition quickly from

to

, narrowing the blending area. And if the optical flow value of a warped image is larger, the salience is higher, and the blending weight of the warped image should be increased accordingly. Therefore, the following blending weight

can be used as the blending weight:

where

and

represents the optical flow magnitude;

is the shape coefficient of the softmax function; and

denotes the enhancement coefficient of the optical flow. The larger

and

are, the closer

is to 0 or 1, and the smaller the image transition area.
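The behavior described above can be illustrated with the following sketch of a softmax-style blending weight. The exact functional form is an assumption made for this example: the linear weight `lam` and the optical flow magnitudes `mag1` and `mag2` of the two warped images enter two logits, `alpha_s` plays the role of the shape coefficient and `alpha_m` that of the flow enhancement coefficient. The default values are placeholders, not the settings used in the experiments.

```python
import numpy as np

def softmax_blend_weight(lam, mag1, mag2, alpha_s=10.0, alpha_m=100.0):
    """Softmax-style weight of the second warped image (assumed form).

    lam: linear blending weight in [0, 1] (0 on the side of the first image).
    mag1, mag2: optical flow magnitudes of the first and second warped image.
    """
    a = alpha_s * (1.0 - lam) * (1.0 + alpha_m * mag1)   # logit of image 1
    b = alpha_s * lam * (1.0 + alpha_m * mag2)           # logit of image 2
    shift = np.maximum(a, b)                             # numerical stability
    ea, eb = np.exp(a - shift), np.exp(b - shift)
    return eb / (ea + eb)
```

With equal flow magnitudes the weight equals 0.5 at `lam = 0.5` and saturates quickly towards 0 or 1 on either side; a larger flow magnitude in one image shifts the weight towards that image.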

Also, similar to multi-band blending, a wider blending area is used in smooth and color-consistent areas, and a narrower blending area is used in color-inconsistent areas. The pixel consistency is measured using

:

The final blending parameter

is obtained:

corresponds to a fast transition from

to

,

corresponds to a linear transition from

to

. When the color difference is small, the transition from

to

is linear, and when the color difference is large, we tend to have a fast transition from

to

.

Then the pixel value of the mosaic is:

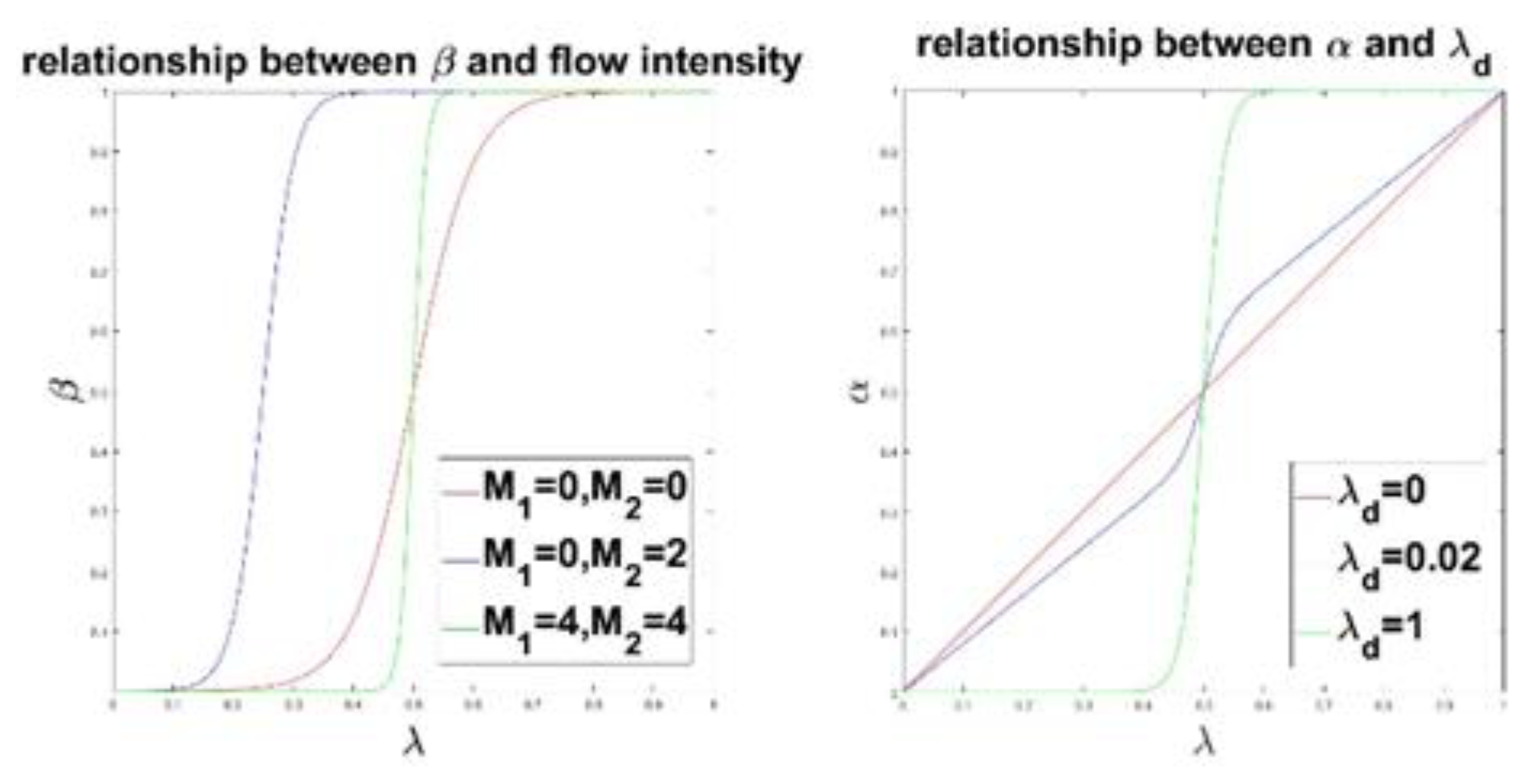

The left panel of Figure 6 shows the curve of

changing with

under different optical flow intensities.

can be used to achieve quick transition of the mosaic from

to

, narrowing the transition area. In the case of large optical flow, the blending weight of the corresponding image can be increased to reduce the transition area. The right panel of Figure 6 shows the impact of

on the curve of

as a function of

. When

is small, a wider fusion area tends to be used; otherwise, a narrower fusion area is used, which is similar to the blending of different frequency bands in multi-band blending method.

3.2.2. Calculation of Asymmetric Optical Flow

The general pipeline of optical flow calculation is to construct an image pyramid, calculate the optical flow of each layer from coarse to fine, and use the estimated current-layer optical flow divided by the scaling factor as the initial optical flow of the finer layer, until the optical flow of the finest layer is obtained [23, 24, 25]. Different methods have been proposed to better satisfy the brightness constancy assumption, handle large displacements and appearance variation [27, 28], and address edge blur and improve temporal consistency [29, 30, 31]. Recently, some deep learning methods have been proposed. For example, RAFT (recurrent all-pairs field transforms for optical flow) [32] extracts per-pixel features, builds multi-scale 4D correlation volumes for all pairs of pixels, and iteratively updates a flow field through a recurrent unit. FlowFormer (optical flow Transformer) [33], based on the transformer neural network architecture, designs a novel encoder that effectively aggregates the cost information of the correlation volume into compact latent cost tokens, and a recurrent cost decoder that recurrently decodes cost features to iteratively refine the estimated optical flow.

To improve the optical flow calculation speed, we use the method based on optical flow propagation and gradient descent adopted in Facebook surround360 [34]. For each layer, the optical flow of each pixel is first calculated from top to bottom and from left to right. Among the optical flow values of the left and top pixels in the current layer and the same-position pixel in the upper layer, the value with the minimum error given by (19) is selected as the initial value of the current pixel. A gradient descent step is then performed to update the optical flow value of the current pixel, and the result is propagated to the right and bottom pixels as a candidate for their initial optical flow. After the forward propagation from top to bottom and from left to right is completed, a reverse propagation with gradient descent is performed from bottom to top and from right to left to obtain the final optical flow value.
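The candidate selection in the forward sweep can be sketched as below. This is a simplified illustration, not the surround360 implementation: the gradient descent refinement is omitted, `init_flow` is assumed to be the flow already brought over from the coarser layer, and `error_fn` is a hypothetical stand-in for the error function (19).

```python
import numpy as np

def forward_propagate(init_flow, error_fn):
    """One top-to-bottom, left-to-right propagation sweep (no refinement).

    init_flow: (H, W, 2) initial flow taken from the coarser layer.
    error_fn(y, x, flow_vec) -> float: hypothetical stand-in for (19).
    """
    flow = init_flow.astype(float).copy()
    height, width, _ = flow.shape
    for y in range(height):
        for x in range(width):
            # Candidates: coarser-layer value, left neighbor, top neighbor.
            candidates = [flow[y, x].copy()]
            if x > 0:
                candidates.append(flow[y, x - 1].copy())
            if y > 0:
                candidates.append(flow[y - 1, x].copy())
            errors = [error_fn(y, x, c) for c in candidates]
            # Keep the candidate with the smallest error; a gradient
            # descent update of flow[y, x] would follow here.
            flow[y, x] = candidates[int(np.argmin(errors))]
    return flow
```

The reverse sweep follows the same pattern with the loop directions inverted and the right and bottom neighbors used as candidates.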

When calculating the optical flow value

of pixel

, the error function

used is:

where,

denotes the optical flow alignment error of the edge image (which is Gaussian filtered to improve the robustness),

denotes the consistency error of the optical flow,

denotes the Gaussian-filtered optical flow of pixel

;

denotes the magnitude error after normalization of optical flow, with excessively large optical flow being penalized;

and

are the width and height of current-layer image, respectively; and

denotes the diagonal matrix with diagonal elements

and

.

3.3. Estimation of Image Intrinsic and Extrinsic Parameters

The SC-AOF method requires known camera parameters of images

and

. When only the intrinsic parameters

and

of an image are known, the essential matrix

between two images can be obtained by feature point matching, and the rotation matrix

and translation vector

between images can be obtained by decomposing the essential matrix. When both intrinsic and extrinsic parameters are unknown, the intrinsic parameters can first be estimated by calibration [35, 36], and then the extrinsic parameters of the image can be estimated accordingly. In these cases, both intrinsic and extrinsic parameters of images

and

can be estimated robustly.

When none of the above methods is feasible, it is necessary to calculate the fundamental matrix from the matched feature points, and restore the camera internal and external parameters.

When the camera has zero skew and a known principal point and aspect ratio, each intrinsic parameter matrix has only 1 degree of freedom (the focal length of the camera). The total number of degrees of freedom of the camera parameters is then 7 (

has 2 degrees of freedom because the scale cannot be recovered,

has three degrees of freedom, and each camera has 1 degree of freedom), which is equal to the fundamental matrix

's degrees of freedom. The internal and external parameters of the image can be recovered using a self-calibration method [37]. But even if these constraints are met, the camera parameters solved by [37] suffer from large errors when the scene is approximately planar and the matching error is large. Therefore, we use the method of optimizing the objective function in [6] to solve for the internal and external parameters of the camera.

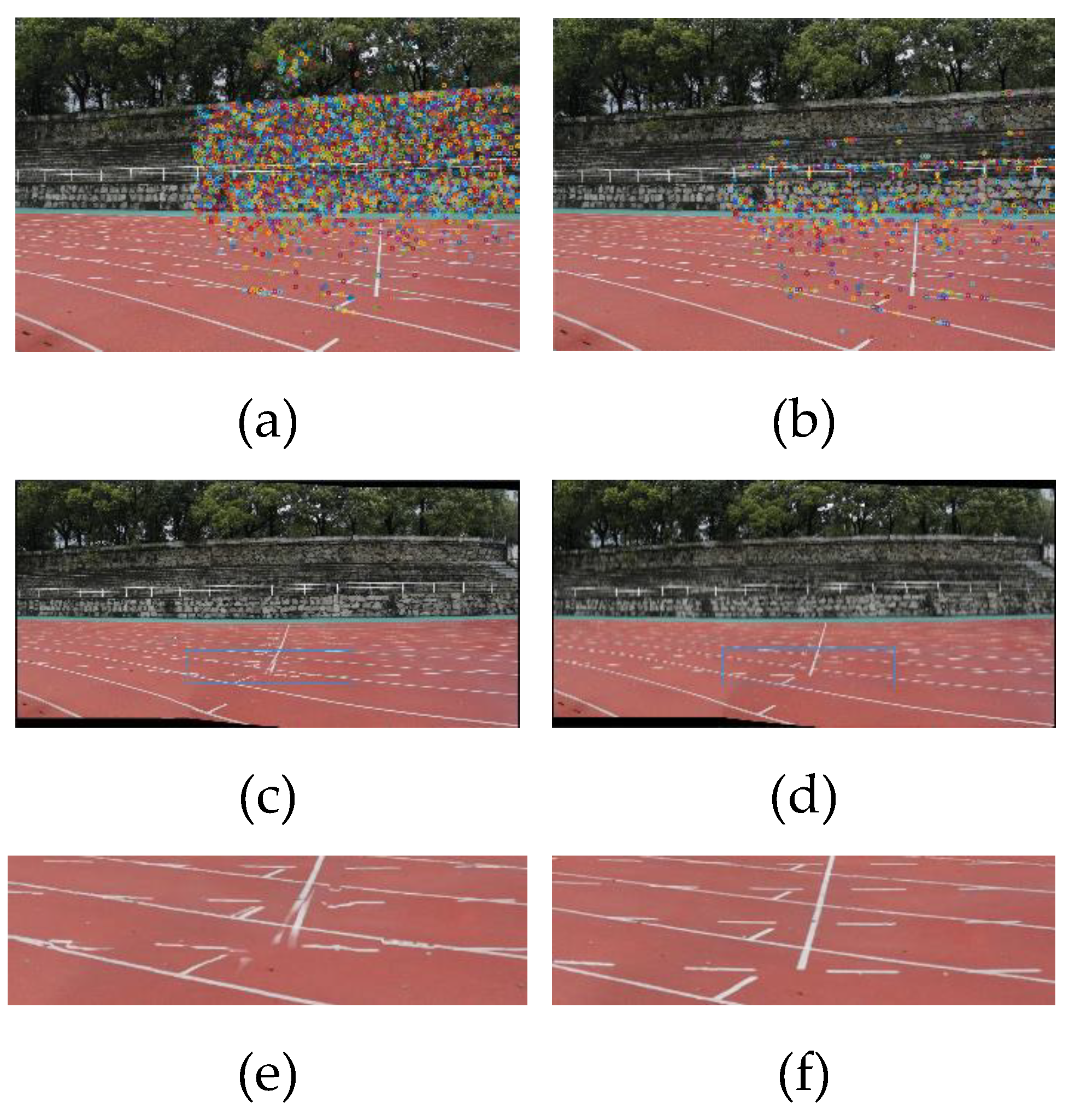

To obtain an accurate fundamental matrix, the feature points first need to be distributed more evenly in the image. As shown in Figure 7, a uniform and sparse distribution of feature points can both reduce the computation time and yield more robust intrinsic and extrinsic camera parameters and global projection plane, which leads to improved stitching results.

Secondly, it is necessary to filter the matched feature points to exclude the influence of outliers. Use the similarity transformation to normalize the matched feature points. After normalization, the mean value of the feature points is 0, and the average distance to the origin is .

Thirdly, multiple homographies are estimated to exclude outlier points. Let and denote all matched feature points and the total number of matched feature points. In , The RANSAC method with threshold is applied to compute homography and its inlier set , and the matches of isolated feature points which have no neighboring points within 50 pixel distance is removed from . A new candidate set is generated by removing from . Repeat the above steps to calculate homography matrices and corresponding inlier set until <20 or . The final inlier set is . If , then there is only one valid plane. In this case, apply the RANSAC method with threshold to recalculate homography and the corresponding inlier set .
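The similarity normalization of the matched feature points in the second step can be sketched as follows. The target average distance is not recoverable from the text here, so the value √2 commonly used in Hartley-style normalization is assumed as the default; `normalize_points` is a hypothetical helper name.

```python
import numpy as np

def normalize_points(pts, target_dist=np.sqrt(2.0)):
    """Similarity-normalize 2D points: zero mean, fixed average distance.

    pts: (N, 2) array of pixel coordinates.
    target_dist: assumed average distance to the origin after normalization.
    Returns the normalized points and the 3x3 similarity matrix T.
    """
    pts = np.asarray(pts, dtype=float)
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = target_dist / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return (T @ pts_h.T).T[:, :2], T
```

The returned matrix `T` can be used to map a homography or fundamental matrix estimated in normalized coordinates back to pixel coordinates.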

After excluding the outliers, for any matched points

in the inlier set

, the cost function is:

where the

balanced epipolar constraint and the infinite homography constraint, and generally take

.

is a robust kernel function, which can mitigate the effect of mis-matched points on the optimization of camera internal and external parameters.

and

denote the projection errors of the epipolar constraint and of the infinite homography constraint, respectively:

where

denotes the length of the vector composed of the first two components of

. That is, assuming

, then

.

represents the third component value of the vector

.

4. Experiment

To verify the effectiveness of the SC-AOF method, the mosaics generated by our method and the existing APAP [4], AANAP [16], SPHP [14], TFT [7], REW [10] and SPW [18] methods are compared on typical datasets used by other methods, in order to demonstrate the feasibility and advantages of the SC-AOF method in reducing deformation and improving alignment. Next, the SC-AOF method is used together with other methods to demonstrate its good compatibility.

4.1. Effectiveness Analysis of SC-AOF Method

In this section, various methods of image stitching are compared and analyzed based on three indicators: perspective deformation, local alignment and running speed. The experimental setup is as follows.

The first two experiments compare typical methods for solving perspective deformation and local alignment respectively, and all the methods in the first two experiments are included in the third experiment to show the superiority of SC-AOF method in all aspects.

Since averaging methods generally underperform linear blending ones, all methods to be compared adopt linear blending to achieve their best performance.

All methods other than ours use the parameters recommended by their proposers. Our SC-AOF method has the following parameter settings in optical flow-based image blending: 10, 100, and 10

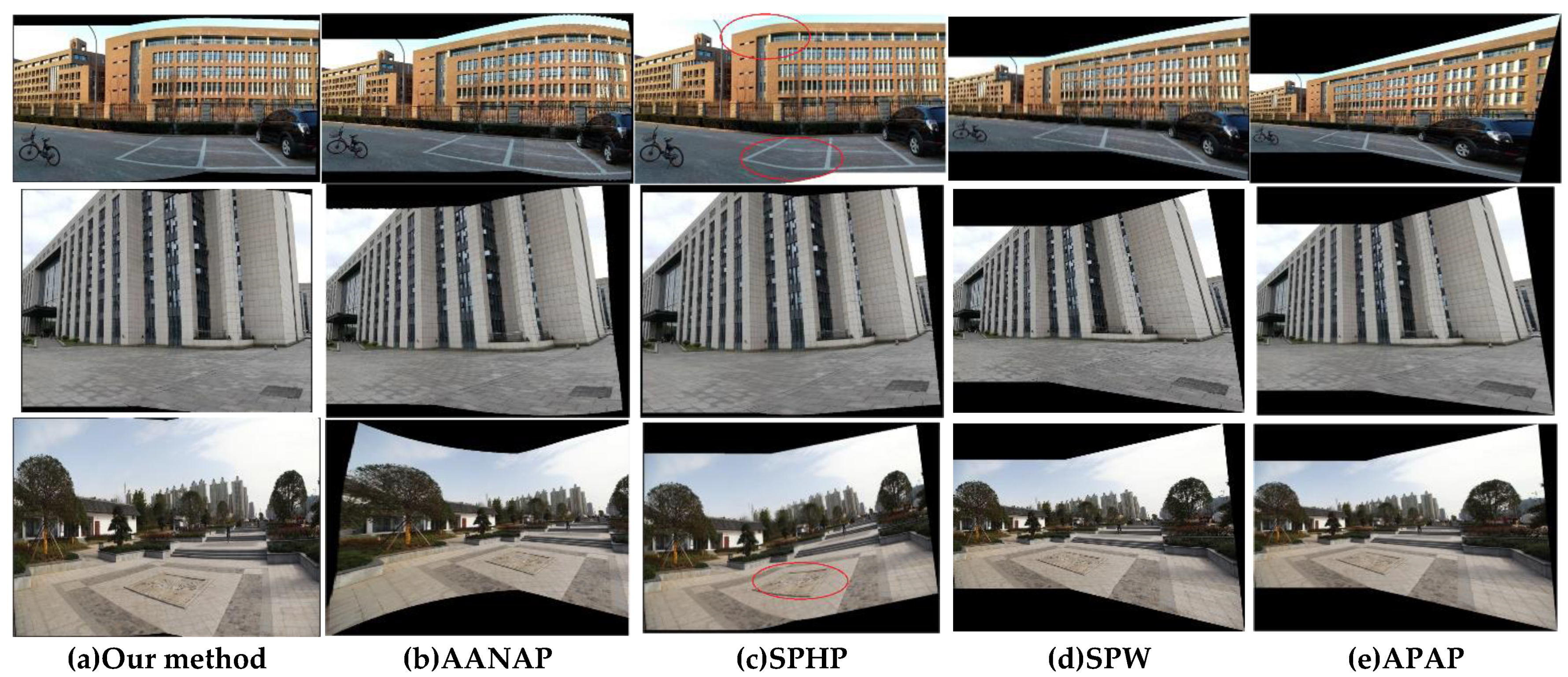

4.1.1. Perspective Deformation Reduction

Figure 8 shows the results of the SC-AOF method versus the SPHP, APAP, AANAP and SPW methods for perspective deformation reduction in image stitching. The school, building and park square datasets were used in this experiment. We can see from Figure 8 that, compared with the other methods, our SC-AOF method makes the viewpoint of the stitched image change in a more natural manner and effectively eliminates perspective deformation. As explained below, all other methods underperform our SC-AOF method.

The image stitched using the APAP method has its edges stretched to a large extent. This is because it does not process perspective deformation. This method only serves as a reference to verify the effectiveness of perspective deformation reducing algorithms.

The AANAP algorithm can achieve a smooth transition between the two viewpoints, but results in severely "curved edges". And there is even more severe edge stretching for the park square dataset than that of the APAP method. This is because, when the AANAP method extrapolates from homographies, it linearizes the homography in addition to similarity transformation, causing affine deformation in the final transformation.

Compared with the APAP method, the SPW method makes no significant improvement in perspective deformation, except for the image in the first row. Because SPW preserves a single perspective, this suggests that multiple-viewpoint methods handle perspective deformation better than single-viewpoint methods.

The SPHP algorithm performs well overall. However, it causes severe distortions in some areas (circled in red in Figure 8c) due to the rapid change of viewpoints. This is because the SPHP method estimates the similarity transformation and the interpolated homographies from the global homography. As a result, the similarity transformation cannot reflect the real scene information, and the interpolated homographies may deviate from a reasonable image projection.

4.1.2. Local Alignment

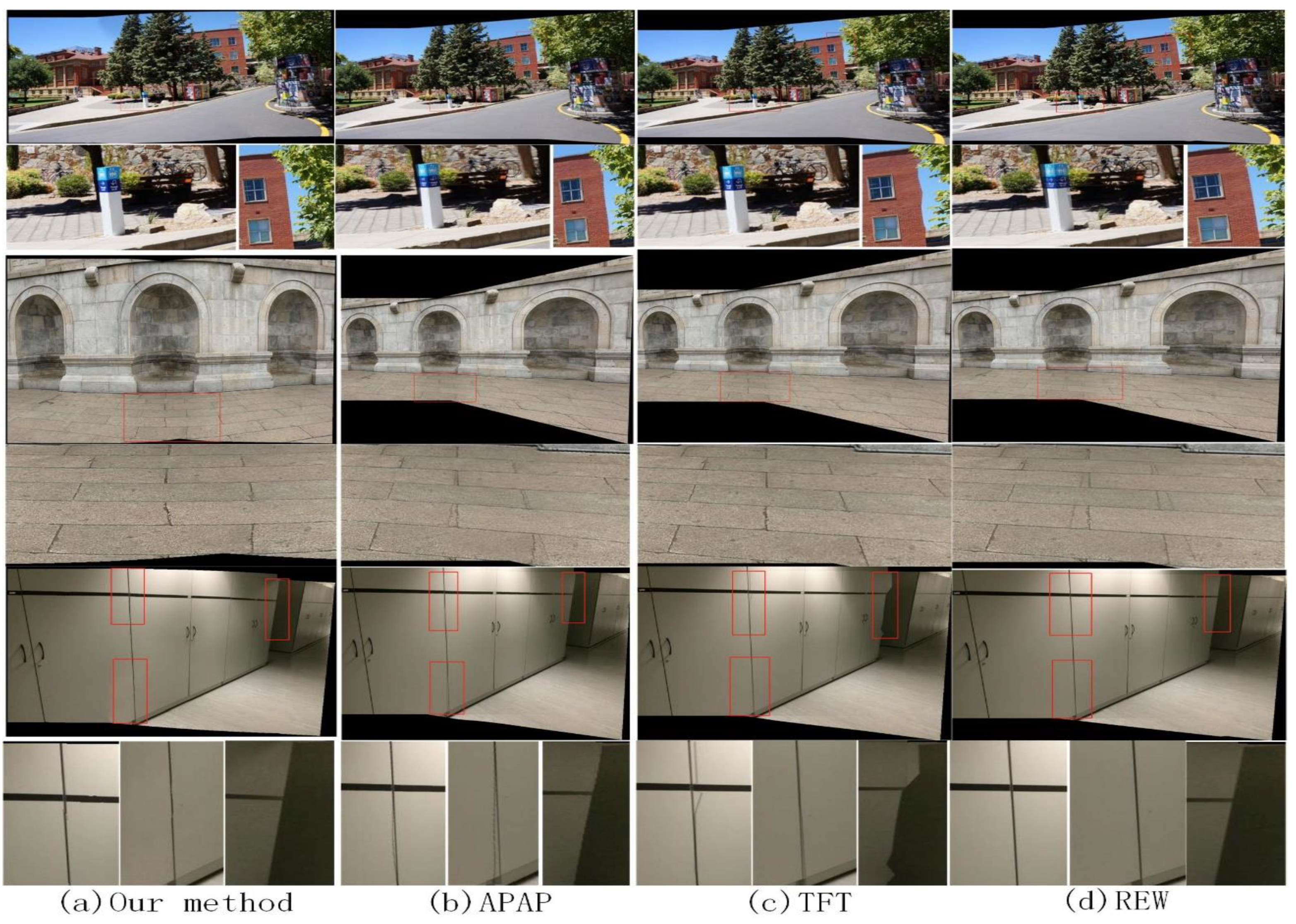

Figure 9 shows the results of the SC-AOF method versus APAP, TFT and REW methods for local alignment in image stitching. It can be seen that SC-AOF performs well in all scenes, showing the effectiveness of our method in local alignment.

The APAP method performs fairly well in most images, though with some alignment errors. This is because the moving DLT method smooths the mosaics to some extent.

The TFT method generates stitched images of excellent quality in planar areas. But when there is a sudden depth change in the scene, serious distortions appear. This is because large errors arise when a plane is calculated from the three vertices of a triangle in an area with sudden depth changes.

The REW method has large alignment error in the planar area and aligns the images better than the APAP and TFT method in all other scenes. This is because the fewer feature points in the planar area might be filtered out as mismatched points by the REW method.

The SSIM (structural similarity) is employed to objectively describe the alignment accuracy of different methods. The scores of all methods on the datasets temple, rail tracks, garden, building, school, park-square, wall, and cabinet are listed in Table 1.

APAP and AANAP have high scores on all image pairs, but the scores are lower than our method and REW, proving that APAP and AANAP blur mosaics to some extent.

When SPHP is not combined with APAP, only the global homography is used to align the images, resulting in lower scores compared to other methods.

TFT has higher scores on all datasets except the building dataset. TFT can improve alignment accuracy but also introduces instability.

SPW combines quasi-homography and content-preserving warping to align images; the additional constraints reduce the alignment accuracy, resulting in lower scores compared to REW and our method.

Both REW and our method use a global homography matrix to coarsely align the images. Afterwards, REW applies a deformation field and our method applies optical flow to further align the images. Therefore, both methods have higher scores and robustness than the other methods.
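For reference, the SSIM index used for these scores can be sketched in a simplified, single-window form. The standard formulation averages the index over local windows; the global variant below, with the conventional constants 0.01 and 0.03, is an assumption made to keep the example short, and `global_ssim` is a hypothetical helper name.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM between two grayscale images of equal shape."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2      # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2      # stabilizes the contrast term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

Evaluated on the overlapping area of the two warped images, a value closer to 1 indicates better alignment.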

4.1.3. Stitching Speed Comparison

The running speed is a direct reflection of the efficiency of each stitching method.

Table 2 shows the speed of the SC-AOF method versus the APAP, AANAP, SPHP, TFT, REW and SPW methods. The temple, rail tracks, garden, building, school, park-square, wall, and cabinet datasets contributed by [4, 10] are used in this experiment. It can be seen that the REW algorithm has the fastest stitching speed, because it only needs to calculate the TPS parameters from the feature point matches and then quickly compute the transformations of the grid points. Our SC-AOF method ranks second in terms of stitching speed, and the AANAP algorithm requires the longest running time. Both the APAP and AANAP methods calculate local homographies based on moving DLT, and the AANAP method additionally needs to calculate the Taylor expansion at anchor points. Combined with the results in Figure 8 and Figure 9, this indicates that the SC-AOF method has the best overall performance, maintaining desirable efficiency while guaranteeing the final image quality, which makes it suitable for a broad range of applications.

4.2. Compatibility of SC-AOF Method

The SC-AOF method can not only be used independently to generate stitched image with reduced perspective deformation and low alignment error, but also be decomposed (into SC method and image blending method) and combined with other methods to improve the quality of the mosaic.

4.2.1. SC Module Compatibility Analysis

The sliding camera (SC) module in the SC-AOF method can not only be used in the global alignment model, but also be combined with other local alignment models (e.g., APAP and TFT) to solve perspective deformation while maintaining the alignment accuracy. The implementation steps are as follows.

- Use the global similarity transformation to project onto the coordinate system to calculate the size and mesh vertices of the mosaic;

- Use Equations (6)-(9) to calculate the weights of the mesh vertices and the projection matrix, replace the homography in (2) with the homography matrix of the local alignment model, and substitute them into (12) to compute the warped images and blend them.
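The idea behind these two steps can be illustrated with a small sketch. It is only an illustration under stated assumptions: the per-vertex weight is taken as the clamped projection of the vertex onto a segment between two reference points (hypothetical arguments `start` and `end`), and the final vertex position is a linear blend of the positions produced by two warps. This stands in for Equations (6)-(9) and (12) rather than transcribing them, and all function names are hypothetical.

```python
def apply_homography(h, point):
    """Map a 2D point with a 3x3 homography given as nested lists."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def slide_weight(point, start, end):
    """Clamped position of `point` along the segment start -> end, in [0, 1]."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        raise ValueError("start and end must differ")
    t = ((point[0] - start[0]) * dx + (point[1] - start[1]) * dy) / length_sq
    return min(1.0, max(0.0, t))


def slide_vertex(vertex, warp_a, warp_b, start, end):
    """Blend the positions given by two warps using the slide weight."""
    m = slide_weight(vertex, start, end)
    ax, ay = apply_homography(warp_a, vertex)
    bx, by = apply_homography(warp_b, vertex)
    # m = 0 keeps warp_a, m = 1 keeps warp_b, values in between transition.
    return ((1.0 - m) * ax + m * bx, (1.0 - m) * ay + m * by)
```

In such a sketch, `warp_a` would be the local alignment homography for the mesh cell (for example from APAP or TFT) and `warp_b` the viewpoint-preserving transformation, so that vertices move smoothly from the aligned geometry to the undistorted one across the overlapping area.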

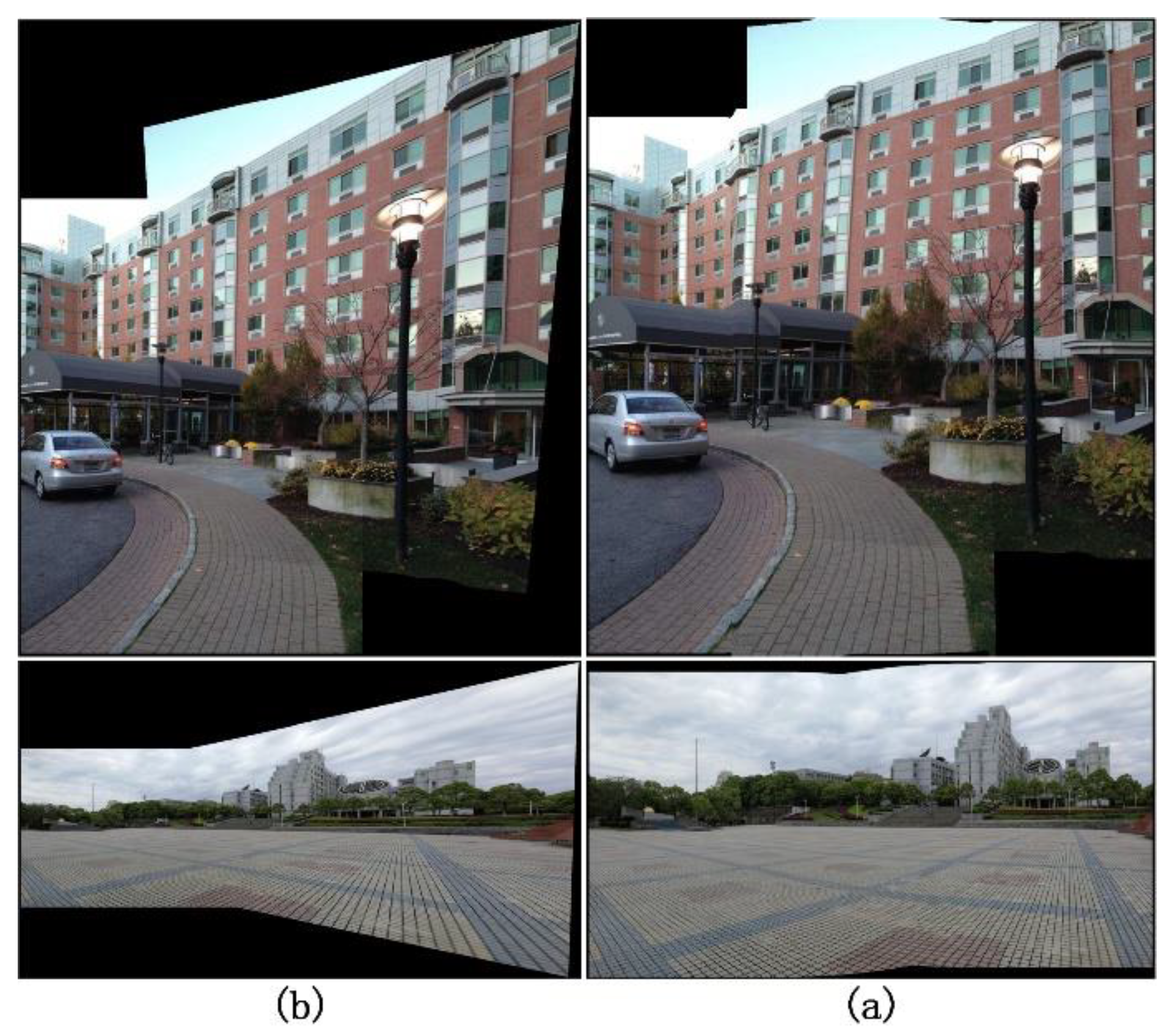

Figure 10 presents the stitched images when using the TFT algorithm alone vs. using the TFT algorithm combined with the SC method. It can be seen that the combined method is more effective in mitigating edge stretching and generates more natural images. This shows that the SC method can effectively reduce the perspective deformation suffered by local alignment methods.

4.2.2. Blending Module Compatibility Analysis

The asymmetric optical flow-based blending in the SC-AOF method can also be used in other methods to enhance the final stitching effect. The implementation steps are as follows.

- Generate two projected images using one of the other algorithms and calculate the blending parameters based on the overlapping areas;

- Set the optical flow value to 0, replace the linear blending parameter with the blending parameter in (17) to blend the warped images, and preserve the blending band width in low-frequency areas while narrowing it in high-frequency areas to get a better stitching result.
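A minimal sketch of the second step, assuming the optical flow is zero so that blending reduces to a per-pixel weighted average. The weight used here is a two-way softmax over the linear blending weights, scaled by a hypothetical `sharpness` factor that would be raised where the overlap is high-frequency or poorly aligned. It illustrates the idea of a softmax-controlled blending width and is not a transcription of Equation (17); the function names are hypothetical.

```python
import math


def softmax_blend_weight(linear_weight, sharpness):
    """Weight of the second image: softmax over s*(1 - w) and s*w."""
    if not 0.0 <= linear_weight <= 1.0:
        raise ValueError("linear_weight must lie in [0, 1]")
    # exp(s*w) / (exp(s*w) + exp(s*(1 - w))) equals a logistic function of
    # z = s * (2w - 1); both branches avoid overflow for large |z|.
    z = sharpness * (2.0 * linear_weight - 1.0)
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def blend_pixel(value_a, value_b, linear_weight, sharpness):
    """Weighted average of two aligned pixel values (zero optical flow)."""
    beta = softmax_blend_weight(linear_weight, sharpness)
    return (1.0 - beta) * value_a + beta * value_b
```

A small `sharpness` keeps a wide, gradual transition across the overlap, while a large value concentrates the transition around the middle of the overlap, which narrows the band in which two misaligned structures are visibly mixed. Unlike linear blending, this weight only approaches 0 and 1 at the borders of the overlap unless `sharpness` is large.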

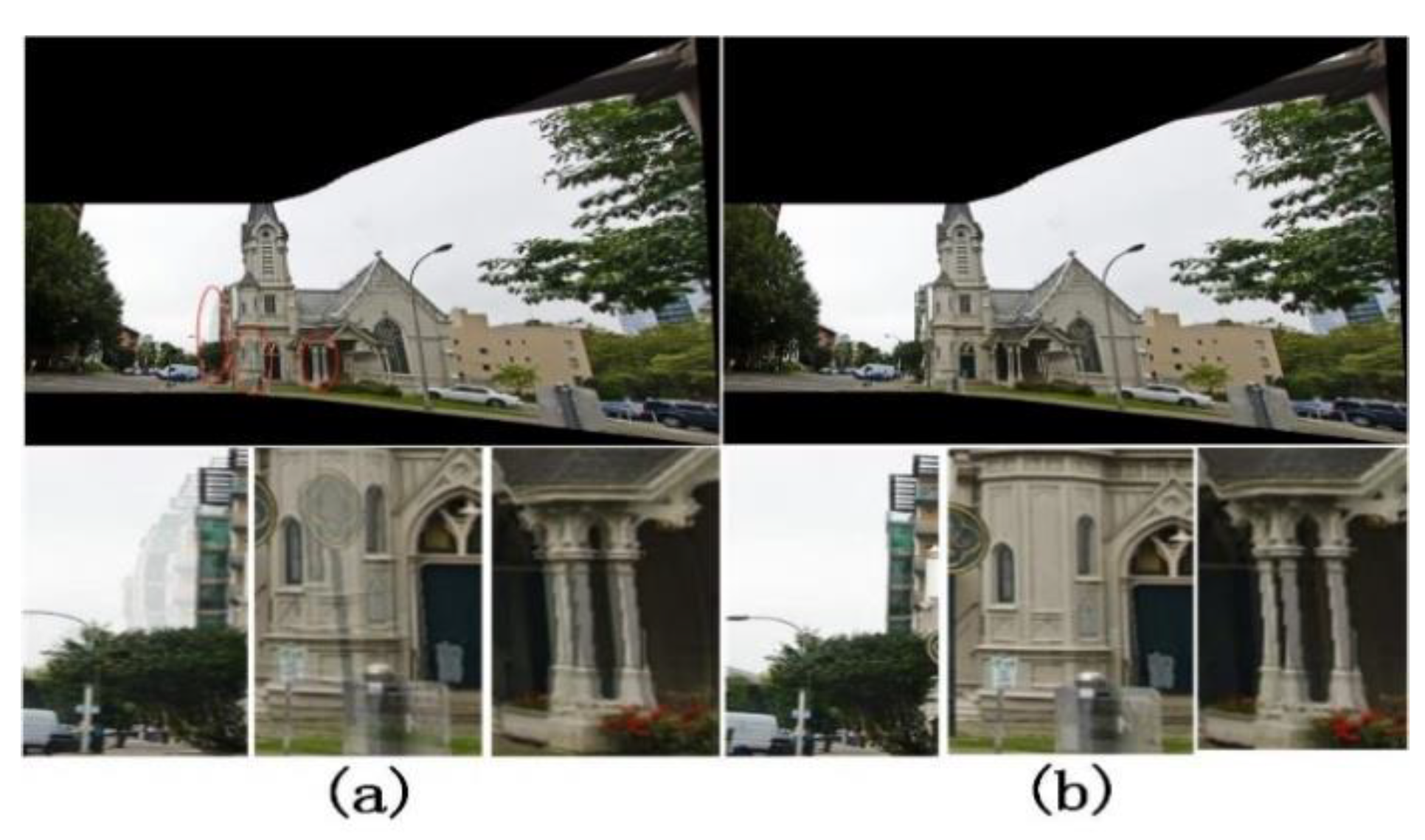

Figure 11 shows the image stitching effect of the APAP algorithm when using linear blending vs. when using our blending method. It can be seen that the blurring and ghosting in the stitched images are effectively mitigated when using our blending method. This shows that our blending algorithm can blend the aligned images better.

5. Conclusions

In this paper, an SC-AOF method is proposed to solve the perspective deformation and misalignment that arise in homography-based image stitching. In image warping, a virtual camera and its projection matrix are generated as the observation perspective in the overlapping area by interpolating between the two projection matrices. The overlapping area thus transitions gradually from one viewpoint to the other, which preserves the viewpoints, yields a smooth transition across the stitched image, and solves the perspective deformation problem. In image blending, an optical flow-based blending algorithm is proposed to further improve alignment accuracy. The width of the blending area is automatically adjusted according to the softmax function and the alignment accuracy. Finally, extensive comparison experiments demonstrate the effectiveness of our algorithm in reducing perspective deformation and improving alignment accuracy. In addition, our algorithm has broad applicability, as its component modules can be combined with other algorithms to mitigate edge stretching and improve alignment accuracy.

However, the proposed local alignment method would fail if the input images contain large parallax, which causes severe occlusion and prevents us from obtaining the correct optical flow. The problem of local alignment failure caused by large parallax also exists in other local alignment methods. Exploring more robust optical flow calculation and occlusion handling methods to reduce misalignment in large-parallax scenes is an interesting direction for future work.

Author Contributions

Conceptualization, Qing Li and Jiayi Chang; methodology, Jiayi Chang; software, Jiayi Chang; validation, Jiayi Chang; formal analysis, Qing Li; investigation, Qing Li and Jiayi Chang; resources, Yanju Liang; data curation, Yanju Liang; writing—original draft preparation, Jiayi Chang; writing—review and editing, Liguo Zhou; visualization, Liguo Zhou; supervision, Qing Li; project administration, Qing Li; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

References

- Abbadi, N.K.E.L.; Al Hassani, S.A.; Abdulkhaleq, A.H. A review over panoramic image stitching techniques[C]//Journal of Physics: Conference Series. IOP Publishing, 2021, 1999, 012115.

- Gómez-Reyes, J.K.; Benítez-Rangel, J.P.; Morales-Hernández, L.A.; et al. Image mosaicing applied on UAVs survey[J]. Applied Sciences, 2022, 12, 2729.

- Xu, Q.; Chen, J.; Luo, L.; et al. UAV image stitching based on mesh-guided deformation and ground constraint[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2021, 14, 4465–4475.

- Wen, S.; Wang, X.; Zhang, W.; et al. Structure Preservation and Seam Optimization for Parallax-Tolerant Image Stitching[J]. IEEE Access, 2022, 10, 78713–78725.

- Tang, W.; Jia, F.; Wang, X. An improved adaptive triangular mesh-based image warping method[J]. Frontiers in Neurorobotics, 2023, 16, 1042429.

- Li, J.; Deng, B.; Tang, R.; et al. Local-adaptive image alignment based on triangular facet approximation[J]. IEEE Transactions on Image Processing, 2019, 29, 2356–2369.

- Lee, K.Y.; Sim, J.Y. Warping residual based image stitching for large parallax[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020: 8198-8206.

- Zhu, S.; Zhang, Y.; Zhang, J.; et al. ISGTA: an effective approach for multi-image stitching based on gradual transformation matrix[J]. Signal, Image and Video Processing, 2023, 17, 3811–3820.

- Zaragoza, J.; Chin, T.J.; Brown, M.S.; et al. As-Projective-As-Possible Image Stitching with Moving DLT[C]//Computer Vision and Pattern Recognition (CVPR). IEEE, 2013.

- Li, J.; Wang, Z.; Lai, S.; et al. Parallax-tolerant image stitching based on robust elastic warping[J]. IEEE Transactions on Multimedia, 2017, 20, 1672–1687.

- Xue, F.; Zheng, D. Elastic Warping with Global Linear Constraints for Parallax Image Stitching[C]//2023 15th International Conference on Advanced Computational Intelligence (ICACI). IEEE, 2023: 1-6.

- Liao, T.; Li, N. Natural Image Stitching Using Depth Maps[J]. arXiv, 2022; arXiv:2202.06276.

- Cong, Y.; Wang, Y.; Hou, W.; et al. Feature Correspondences Increase and Hybrid Terms Optimization Warp for Image Stitching[J]. Entropy, 2023, 25, 106.

- Chang, C.H.; Sato, Y.; Chuang, Y.Y. Shape-preserving half-projective warps for image stitching[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2014: 3254-3261.

- Chen, J.; Li, Z.; Peng, C.; et al. UAV image stitching based on optimal seam and half-projective warp[J]. Remote Sensing, 2022, 14, 1068.

- Lin, C.C.; Pankanti, S.U.; Ramamurthy, K.N.; et al. Adaptive as-natural-as-possible image stitching[C]//Computer Vision & Pattern Recognition. IEEE, 2015.

- Chen, Y.S.; Chuang, Y.Y. Natural Image Stitching with the Global Similarity Prior[C]//European Conference on Computer Vision. Springer International Publishing, 2016.

- Cui, J.; Liu, M.; Zhang, Z.; et al. Robust UAV thermal infrared remote sensing images stitching via overlap-prior-based global similarity prior model[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2020, 14, 270–282.

- Liao, T.; Li, N. Single-perspective warps in natural image stitching[J]. IEEE Transactions on Image Processing, 2019, 29, 724–735.

- Li, N.; Xu, Y.; Wang, C. Quasi-homography warps in image stitching[J]. IEEE Transactions on Multimedia, 2017, 20, 1365–1375.

- Du, P.; Ning, J.; Cui, J.; et al. Geometric Structure Preserving Warp for Natural Image Stitching[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 3688-3696.

- Bertel, T.; Campbell, N.D.F.; Richardt, C. Megaparallax: Casual 360 panoramas with motion parallax[J]. IEEE Transactions on Visualization and Computer Graphics, 2019, 25, 1828–1835.

- Meng, M.; Liu, S. High-quality Panorama Stitching based on Asymmetric Bidirectional Optical Flow[C]//2020 5th International Conference on Computational Intelligence and Applications (ICCIA). IEEE, 2020: 118-122.

- Hofinger, M.; Bulò, S.R.; Porzi, L.; et al. Improving optical flow on a pyramid level[C]//European Conference on Computer Vision. Cham: Springer International Publishing, 2020: 770-786.

- Shah, S.T.H.; Xuezhi, X. Traditional and modern strategies for optical flow: an investigation[J]. SN Applied Sciences, 2021, 3, 289.

- Zhai, M.; Xiang, X.; Lv, N.; et al. Optical flow and scene flow estimation: A survey[J]. Pattern Recognition, 2021, 114, 107861.

- Liu, C.; Yuen, J.; Torralba, A. Sift flow: Dense correspondence across scenes and its applications[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 33, 978–994.

- Zhao, S.; Zhao, L.; Zhang, Z.; et al. Global matching with overlapping attention for optical flow estimation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 17592-17601.

- Rao, S.; Wang, H. Robust optical flow estimation via edge preserving filtering[J]. Signal Processing: Image Communication, 2021, 96, 116309.

- Jeong, J.; Lin, J.M.; Porikli, F.; et al. Imposing consistency for optical flow estimation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 3181-3191.

- Anderson, R.; Gallup, D.; Barron, J.T.; et al. Jump: virtual reality video[J]. ACM Transactions on Graphics (TOG), 2016, 35, 1–13.

- Teed, Z.; Deng, J. Raft: Recurrent all-pairs field transforms for optical flow[C]//Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23-28 August 2020, Proceedings, Part II 16. Springer International Publishing, 2020: 402-419.

- Huang, Z.; Shi, X.; Zhang, C.; et al. Flowformer: A transformer architecture for optical flow[C]//European Conference on Computer Vision. Cham: Springer Nature Switzerland, 2022: 668-685.

- https://github.com/facebookarchive/Surround360.

- Zhang, Y.J. Camera calibration[M]//3-D Computer Vision: Principles, Algorithms and Applications. Singapore: Springer Nature Singapore, 2023: 37-65.

- Zhang, Y.; Zhao, X.; Qian, D. Learning-Based Framework for Camera Calibration with Distortion Correction and High Precision Feature Detection[J]. arXiv, 2022; arXiv:2202.00158.

- Fang, J.; Vasiljevic, I.; Guizilini, V.; et al. Self-supervised camera self-calibration from video[C]//2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022: 8468-8475.

Figure 1.

Flow chart of the SC-AOF method. After the detection and matching of feature points, the camera parameters are obtained in advance or estimated. Then the two warped images are calculated using the SC method, and a coarsely aligned mosaic can be obtained. Finally, the AOF method is used to further align the two warped images to generate a blended mosaic with higher alignment accuracy.

Figure 2.

Image stitching based on sliding cameras. is the projection surface, which is fitted by scene points .Stitched image can be generated by projection of sampling points . The points in the area are generated by back-projection of pixels in . Similarly, the points in the area are generated by back-projection of pixels in . The points in the area are generated by back-projection of pixels in virtual cameras. The pixel values of correspond to the fused pixel values of projection in and .

Figure 3.

The diagram of gradient weight. A quadrilateral is the boundary of the overlapping area of and mapped image of using . where is the center of and is the warped point of the center point of using . and are the projection points closest to and on the line of the quadrilateral vertices, respectively. indicates the pixel coordinates within the overlapping area that need to calculate weighted parameter .

Figure 4.

Image stitching based on sliding cameras and global projection plane. (a) shows the two original images to be stitched together and ; (b) shows the mapped images and ; (c) shows the average blending images of and . That is, in the overlapping area, the blended value is .

Figure 5.

Image blending based on optical flow. is the projection surface of the mosaic. In the overlapping areas (denoted by ) of and , we need to blend and . The 3D point is outside the projection surface. when is projected onto the projection surface, ghosting points and points appear. Through the weighted blending of asymmetric optical flow, the and are merged into point , which solves the ghosting problem of stitching.

Figure 6.

Blending parameter curves. The figure on the left shows the curves at different optical flow intensities. The right figure shows the curve at different values.

Figure 7.

The impact of feature point distribution on stitching results. The feature points in (a) are concentrated in the grandstand. The corresponding mosaic (c) is misaligned in the playground area. The feature points in (b) are evenly distributed within a 2×2 grid. Although the total number of feature points is smaller, the mosaic (d) has better quality. (e) and (f) show the details of the mosaics.

Figure 8.

Comparison of perspective deformation processing. (a), (b), (c), (d) and (e) are the mosaics generated by our method, AANAP, SPHP, SPW and APAP on the datasets , respectively.

Figure 9.

Comparison of image registration. (a), (b), (c) and (d) show the alignment results of our method, APAP, TFT and REW on the datasets garden, wall, and cabinet, respectively.

Figure 10.

The combination of TFT and the sliding camera method. (a) shows the mosaics created using TFT, and (b) shows the mosaics obtained by adding the sliding camera method to TFT.

Figure 11.

The combination of APAP and our blending method. (a) shows the mosaic and detail view generated by APAP using linear blending. (b) shows the results of APAP combined with our blending method.

Table 1.

Comparison on SSIM.

| Dataset | APAP | AANAP | SPHP | TFT | REW | SPW | Ours |
|---|---|---|---|---|---|---|---|
| temple | 0.90 | 0.91 | 0.73 | 0.95 | 0.94 | 0.85 | 0.96 |
| rail-tracks | 0.87 | 0.90 | 0.62 | 0.92 | 0.92 | 0.87 | 0.93 |
| garden | 0.90 | 0.94 | 0.81 | 0.95 | 0.95 | 0.92 | 0.93 |
| building | 0.93 | 0.94 | 0.89 | 0.74 | 0.96 | 0.90 | 0.96 |
| school | 0.89 | 0.91 | 0.67 | 0.90 | 0.91 | 0.87 | 0.93 |
| wall | 0.83 | 0.91 | 0.68 | 0.90 | 0.93 | 0.81 | 0.92 |
| park-square | 0.95 | 0.96 | 0.80 | 0.97 | 0.97 | 0.95 | 0.97 |
| cabinet | 0.97 | 0.97 | 0.87 | 0.97 | 0.98 | 0.97 | 0.96 |

Table 2.

Comparison on elapsed time.

| Dataset | APAP | AANAP | SPHP | TFT | REW | SPW | Ours |
|---|---|---|---|---|---|---|---|
| temple | 8.8 | 27.6 | 20.5 | 2.8 | 1.1 | 4.5 | 3.5 |
| rail-tracks | 50.4 | 161.6 | 91.0 | 36.1 | 35.4 | 260.5 | 29.5 |
| garden | 57.9 | 148.0 | 72.3 | 41.1 | 20.6 | 64.4 | 32.9 |
| building | 14.3 | 47.0 | 19.3 | 53.4 | 2.8 | 10.4 | 6.6 |
| school | 8.6 | 37.6 | 3.5 | 6.8 | 4.4 | 9.8 | 10.8 |
| wall | 16.4 | 81.0 | 12.1 | 37.5 | 13.6 | 15.7 | 37.2 |
| park-square | 51.3 | 194.4 | 91.5 | 53.8 | 13.5 | 149.4 | 30.2 |
| cabinet | 6.3 | 23.8 | 4.2 | 1.6 | 0.8 | 3.8 | 3.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).