1. Introduction

Pulmonary embolism (PE) is the obstruction of arteries in the lungs by a blood clot [1]. PE is the third most prevalent cardiovascular disease and has a death rate of 30% [2,3,4]. A delay in diagnosing the condition raises the likelihood of impairment and mortality [5], so early diagnosis is crucial for treating the disease effectively [6,7]. Computed tomography pulmonary angiography (CTPA) is the preferred method for diagnosing PE due to its quick and detailed imaging capabilities [8,9]. Blood vessels appear bright on contrast-enhanced CT scans because of the contrast material, whereas the embolism appears dark because it does not absorb the contrast agent.

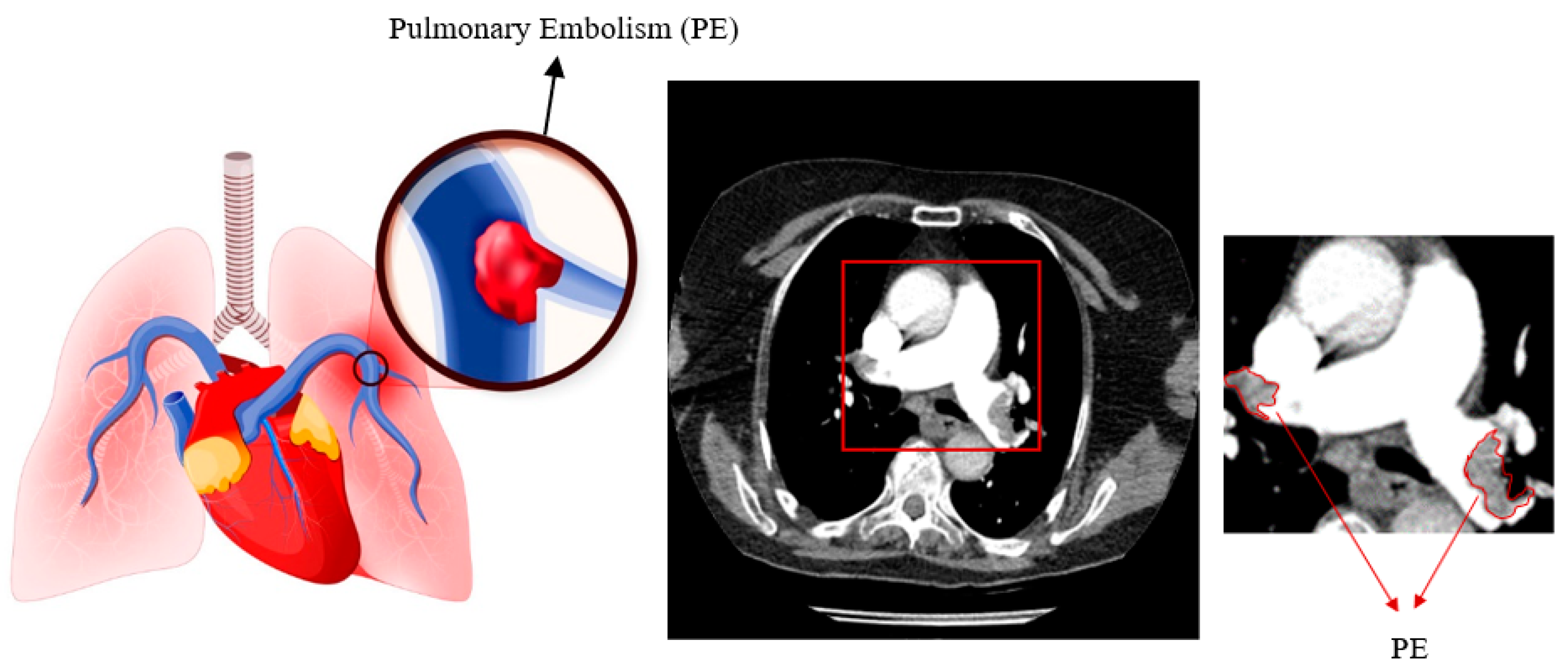

Figure 1 displays pulmonary embolism in a high-quality computed tomography scan.

Detection of PE from CTPA images is performed manually by experienced radiologists, which can be time consuming and sometimes difficult [10]. Some studies have shown a 13% discrepancy between overnight and daytime assessments for the detection of PE [11,12,13]. In addition, rapid and accurate assessment of PE is of great importance in emergency situations [14]. Semi-quantitative methods can be used to measure the degree of vascular occlusion and determine the severity of PE. The most common of these are the Mastora score and the Qanadli score, known as the Vascular Obstruction Index (VOI), which are measured by an expert [15,16]. However, different experts apply these methods inconsistently [17]. For this reason, researchers have turned to computer-assisted systems to detect PE automatically.

In this study, an improved Mask R-CNN method is proposed for the detection and localisation of pulmonary embolism from CT images. The relationship between the loss function and the performance of the Mask R-CNN algorithm was determined. A local dataset was used in the study. Details of the proposed method are given in the second section of the article, and experimental results are presented in the third section.

1.1. Related Works

Thanks to the developing technological infrastructure, PE detection performance has been increasing with the use of different algorithms. Early studies of PE detection had limited clinical application and showed poor performance. These studies used clinical findings rather than CT images as input, and feature extraction was done with simple artificial neural networks [18,19,20]. In recent years, however, studies have applied up-to-date methods to the automatic detection of PE [21,22]. These studies have shown that computer-aided systems are successful in detecting PE and that they can accurately detect small PEs that may escape the eye of the specialist [22,23]. In one study, PE was detected automatically by classifying PE features with K-Nearest Neighbors (KNN), Artificial Neural Network (ANN) and Support Vector Machine (SVM) algorithms, and a sensitivity of 98% was obtained [24]. Machine learning algorithms have been widely used in recent years and have achieved high performance. In many studies, MR and CT images have been analyzed with deep learning algorithms, yielding much higher performance [25,26,27]. With convolutional neural networks, high performance can be achieved in PE detection from CTPA images [28,29]. Pham et al. combined natural language processing and machine learning in diagnosing thromboembolic disease [30]. CT-based deep learning studies of automated PE detection face distinct challenges compared to their counterparts in other areas. For example, PE-containing data represent only a small fraction of the full CT data. Signal-to-noise problems also arise when the intravenous contrast injection protocol and the patient's breath-hold instructions are not followed [31,32]. For this reason, the correct construction of the neural network model and of the dataset strongly affects performance. In particular, it is of great importance that the ground truths in the dataset are created correctly. Examples of recent studies that use deep learning-based techniques for the detection of PE are presented in Table 1.

2. Material and Methods

2.1. Material

The dataset was obtained from the Radiology Department of Kahramanmaraş Sütçü İmam University. Computed tomography images of 50 patients (27 female, 23 male) aged between 28 and 95 were used. Images were acquired with a TOSHIBA AQUILION ONE 320/640 Slice scanner. A raw dataset of 430 images of 1212 × 1212 pixels in .tif format was created by taking only PE-containing sections; the sections in which the PE has the largest surface area were used. The CT scans are 8-bit (0-255) gray-level images. An average of 9 PE-containing sections were taken from each patient (min = 8, max = 12). Ethics committee approval was obtained for the dataset. All PEs in the images of this dataset were labeled by Kamil DOĞAN, one of the authors of the study and a radiologist with 15 years of experience, using the MATLAB Image Labeler tool.

2.2. Data Augmentation

The study included only patients with pulmonary embolism and no other accompanying tissues such as lymph nodes. Because the images were acquired from a restricted sample of 50 patients, data augmentation was applied. The most commonly used augmentation methods include image rotation, contrast adjustment, vertical and horizontal mirroring, and zooming. This study used rotation by ±90° as well as horizontal and vertical mirroring. As a result, the number of images was multiplied by five.
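The five-fold expansion described above can be sketched in a few lines. This is a minimal illustration, assuming that the five images per original are the original itself, the two ±90° rotations, and the two mirrored copies; the function names are hypothetical, and images are represented as plain nested lists rather than the study's .tif files.

```python
def rotate_cw(img):
    """Rotate a 2-D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def rotate_ccw(img):
    """Rotate a 2-D image (list of rows) by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def mirror_horizontal(img):
    """Flip the image left to right."""
    return [row[::-1] for row in img]


def mirror_vertical(img):
    """Flip the image top to bottom."""
    return [list(row) for row in img[::-1]]


def augment(img):
    """Return the original plus four transformed copies (assumed scheme)."""
    original = [list(row) for row in img]
    return [
        original,
        rotate_cw(img),
        rotate_ccw(img),
        mirror_horizontal(img),
        mirror_vertical(img),
    ]
```

Under this assumption, the 430 raw images yield 430 × 5 = 2150 images. The same transforms would also have to be applied to the ground-truth masks so that labels stay aligned with the images.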

2.3. Preprocessing

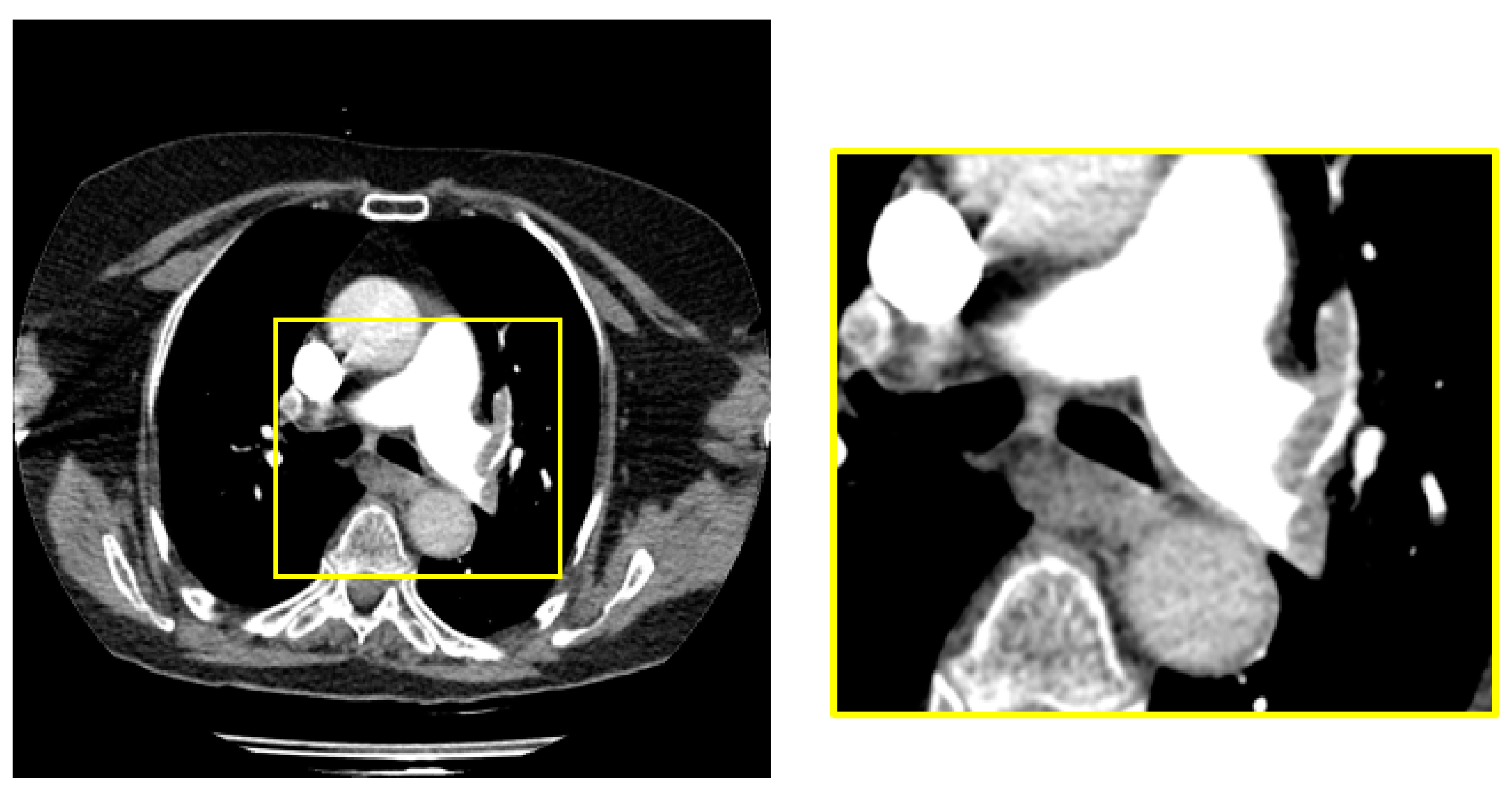

The raw images in the dataset are 1212 × 1212 pixels. PE is located in the middle region of all images in the raw dataset. A 448 × 448 sub-image was therefore cropped from the middle region of each raw image to cover the sections with pulmonary embolism.

Figure 2 shows the raw image and sample subimage with PE.
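The cropping step can be illustrated with a short sketch. It assumes the 448 × 448 window is centred exactly on the raw image, which the text implies but does not state; `center_crop` is a hypothetical helper, and the image is a plain nested list.

```python
def center_crop(img, size):
    """Return the size x size window centred in a 2-D image (list of rows)."""
    height, width = len(img), len(img[0])
    if size > height or size > width:
        raise ValueError("crop size exceeds image dimensions")
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in img[top:top + size]]


# For a 1212 x 1212 raw image the window starts at (1212 - 448) // 2 = 382
# in both directions and covers rows and columns 382..829.
raw = [[0] * 1212 for _ in range(1212)]
sub = center_crop(raw, 448)
```

If the embolism were not centred in some images, the crop origin would have to be derived from the annotation instead of the image centre.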

2.4. Mask R-CNN Network Architecture

Region-based Convolutional Neural Networks (R-CNN) [33], Faster R-CNN [34] and Mask R-CNN [35] achieve high performance in object detection. Unlike the other two models, Mask R-CNN performs both detection and segmentation. It is an extended version of the Faster R-CNN network with an additional segmentation branch that outputs a segmentation mask. Mask R-CNN has two stages. In the first stage, feature extraction is performed for the candidate regions. In the second stage, bounding box detection, class detection and segmentation are performed according to the extracted features. The Mask R-CNN architecture includes a backbone network.

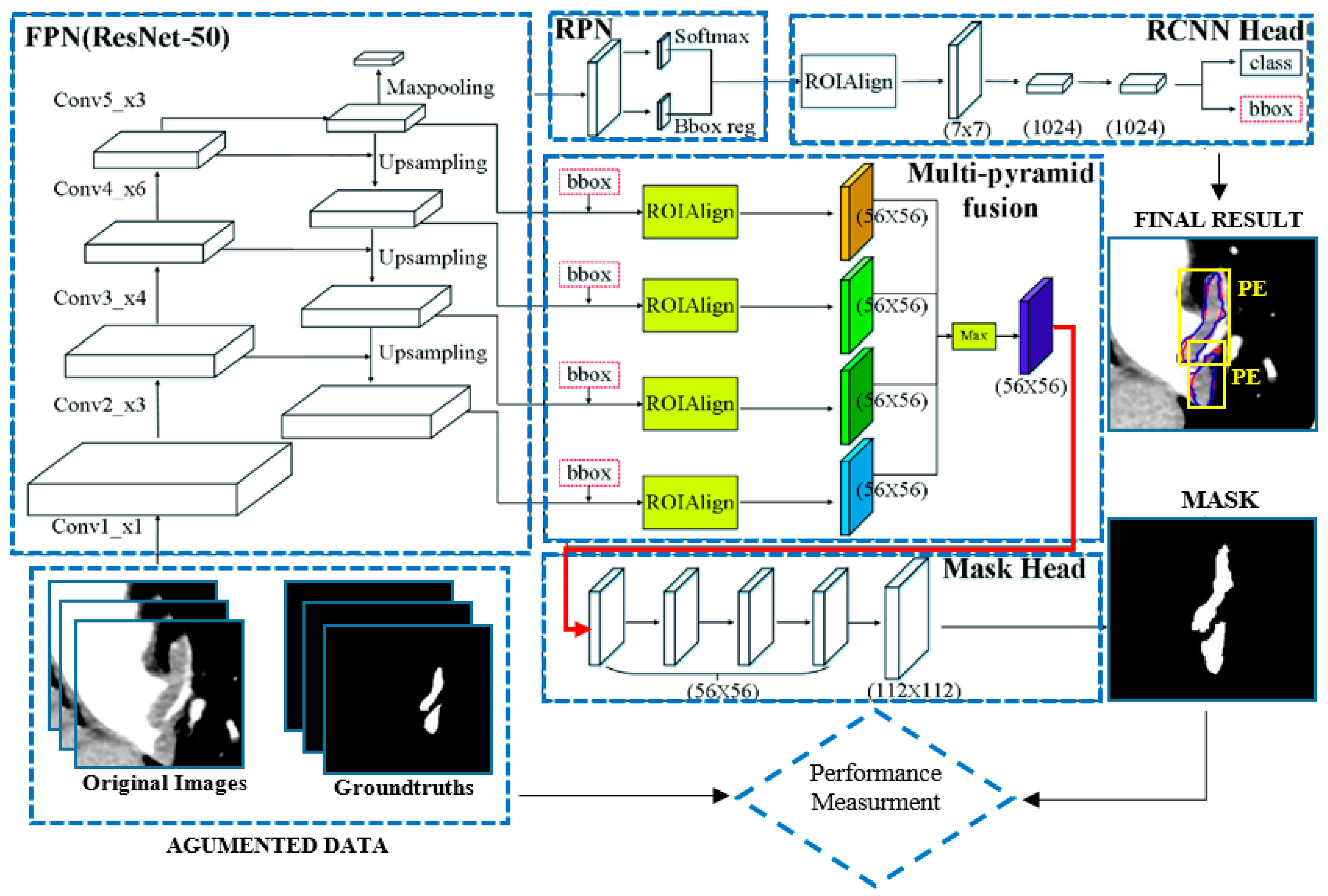

Figure 3 shows the Mask R-CNN architecture.

As seen in Figure 3, Mask R-CNN has an FPN that provides deep feature extraction. ResNet50 [36] was used as the backbone network in the study. The Feature Pyramid Network (FPN) is designed according to the pyramid concept. It stands out in speed as well as accuracy and produces a multi-scale feature map. It has a bottom-up pathway and a top-down pathway. The bottom-up pathway is a convolutional neural network for feature extraction. As the number of bottom-up layers increases, the semantic value increases and high-level structures are detected. Since ResNet has a multi-layer structure, its training speed and prediction performance are quite high. In the basic structure of ResNet, skip connections between earlier and later layers assist backpropagation when training deep networks. The FPN is followed by the Region Proposal Network (RPN), which detects regions that may contain objects. The RPN is itself a convolutional neural network. It takes input of any size and proposes bounding boxes, each with an objectness score. It makes these proposals by sliding a small window over the feature map generated by the convolutional layers. The RPN is followed by RoIAlign, which serves the same purpose as RoI pooling. However, RoIAlign uses bilinear interpolation and thereby avoids the misalignment that quantized offsets cause in segmentation problems, which improves mask accuracy. Thanks to this layer, each region is rescaled to a fixed size and passed to the fully connected layer. After the fully connected layer, the class and bounding box information of each region is obtained.
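The bilinear interpolation step of RoIAlign can be sketched as follows. This is a simplified illustration, not the implementation used in the study: it takes a single sample at the centre of each output bin (the original Mask R-CNN formulation averages several samples per bin), treats integer coordinates as pixel centres, and uses hypothetical function names.

```python
import math


def bilinear_sample(feature_map, y, x):
    """Interpolate a 2-D feature map at a fractional (y, x) location."""
    height, width = len(feature_map), len(feature_map[0])
    # Clamp the sampling point to the valid coordinate range.
    y = min(max(y, 0.0), height - 1)
    x = min(max(x, 0.0), width - 1)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, height - 1), min(x0 + 1, width - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * feature_map[y0][x0] + wx * feature_map[y0][x1]
    bottom = (1 - wx) * feature_map[y1][x0] + wx * feature_map[y1][x1]
    return (1 - wy) * top + wy * bottom


def roi_align(feature_map, box, out_size):
    """Resample a region to out_size x out_size without rounding the box.

    box is (y_min, x_min, y_max, x_max) in feature-map coordinates and may
    be fractional; one sample is taken at the centre of each output bin.
    """
    y_min, x_min, y_max, x_max = box
    bin_h = (y_max - y_min) / out_size
    bin_w = (x_max - x_min) / out_size
    return [
        [
            bilinear_sample(
                feature_map,
                y_min + (i + 0.5) * bin_h,
                x_min + (j + 0.5) * bin_w,
            )
            for j in range(out_size)
        ]
        for i in range(out_size)
    ]
```

Because the box coordinates are never rounded to integer cells, the sampled values follow the region boundaries exactly, which is the property that distinguishes this operation from RoI pooling.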

2.5. Improved Mask R-CNN Network Architecture

In the study, the Mask R-CNN structure was modified, and the resulting network was trained as an improved Mask R-CNN. In order to improve the detection of pulmonary embolism, two weighting parameters, $\lambda_1$ and $\lambda_2$, are used in the loss function. The loss function consists of the sum of the classification loss, the positioning loss and the segmentation loss, as shown in Equation 1.

$$L = L_{cls} + \lambda_1 L_{box} + \lambda_2 L_{mask} \qquad (1)$$

$L_{cls}$ measures the extent to which classes are detected incorrectly. In multi-object detection, its contribution should be increased if more than one object is not detected. $L_{box}$ measures how correctly the object's boundaries are determined; its contribution can be increased if the bounding box is incorrectly positioned when the object is detected. The weights $\lambda_1$ and $\lambda_2$ scale the positioning and segmentation losses of the object, and thus the network performance can be improved. $L_{mask}$ indicates how accurately the object is segmented and is expressed as in Equation 2, where $y$ and $\hat{y}$ denote the label and the predicted value, respectively.
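The weighted sum of the three losses can be illustrated with a short sketch. It assumes that the mask loss takes the per-pixel binary cross-entropy form of the original Mask R-CNN formulation, which the text here does not spell out; the function names, the flattened mask representation and the clipping constant `eps` are illustrative choices.

```python
import math


def mask_bce(labels, preds, eps=1e-7):
    """Mean binary cross-entropy between labels y and predictions y_hat.

    labels holds 0/1 values and preds holds probabilities, both flattened.
    """
    total = 0.0
    for y, p in zip(labels, preds):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def weighted_loss(l_cls, l_box, l_mask, lam1=1.0, lam2=1.0):
    """Total loss with lam1 weighting the box loss and lam2 the mask loss.

    lam1 = lam2 = 1 reproduces the unweighted (classical) sum.
    """
    return l_cls + lam1 * l_box + lam2 * l_mask
```

With weights below 1, the box and mask terms contribute less to the gradient than the classification term, so the balance between the three objectives shifts without any change to the network itself.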

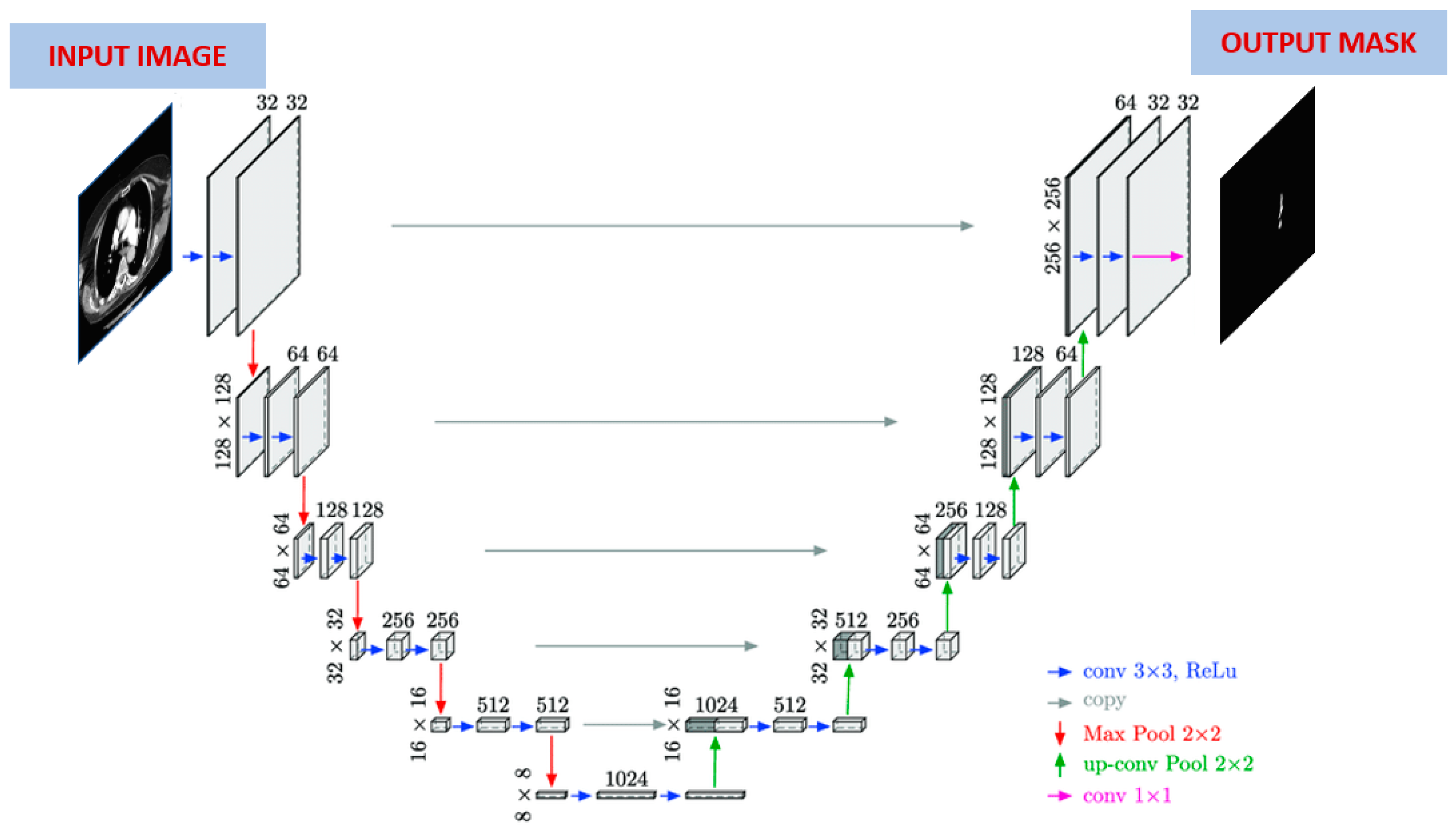

2.6. U-Net

The performance of the improved Mask R-CNN algorithm is compared with that of the U-Net algorithm. For this reason, PE segmentation was also performed with U-Net. The working principle of the U-Net model is similar to that of autoencoders. The purpose of these models is to compress the input data and learn the best representation with the least loss [37]. In this architecture, the number of neurons in the input and output layers is equal, whereas the number of neurons in the hidden layers can vary. A U-Net based neural network is shown in Figure 4. U-Net is a segmentation architecture built on a fully convolutional neural network.

The architecture takes its name from its shape, which resembles the letter U. It has two parts. The first part is known as contraction (encoding) and the second as expansion (decoding). The encoding part is a traditional CNN architecture in which the image size is gradually reduced by convolution and max pooling layers. The convolution layers use 3x3 filters, and the max pooling layers use 2x2 windows. The decoding part is completely symmetrical to the encoding part. In this part, the feature map is enlarged step by step by deconvolution towards the original size of the image. Each convolution layer in the architecture is followed by an activation layer.
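The symmetry between the two parts can be made concrete by tracing the spatial size of the feature map. The sketch below assumes padded 3x3 convolutions (so that only the 2x2 pooling and the deconvolution change the size), a 448 × 448 input, and four pooling steps; none of these settings is stated for the U-Net used here, and `unet_spatial_sizes` is a hypothetical helper.

```python
def unet_spatial_sizes(input_size, depth):
    """Return feature-map sizes along the encoder and the decoder.

    Each encoder level halves the size with 2x2 max pooling; each decoder
    level doubles it again with a stride-2 deconvolution.
    """
    encoder = [input_size]
    for _ in range(depth):
        if encoder[-1] % 2:
            raise ValueError("size must be divisible by 2 at every level")
        encoder.append(encoder[-1] // 2)
    # The decoder mirrors the encoder, skipping the bottleneck itself.
    decoder = encoder[-2::-1]
    return encoder, decoder


encoder_sizes, decoder_sizes = unet_spatial_sizes(448, 4)
# encoder_sizes: 448, 224, 112, 56, 28 (bottleneck)
# decoder_sizes: 56, 112, 224, 448
```

The matching sizes at each level are what allow encoder feature maps to be concatenated with decoder feature maps through the skip connections of the original U-Net design.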

2.7. Evaluation Metrics

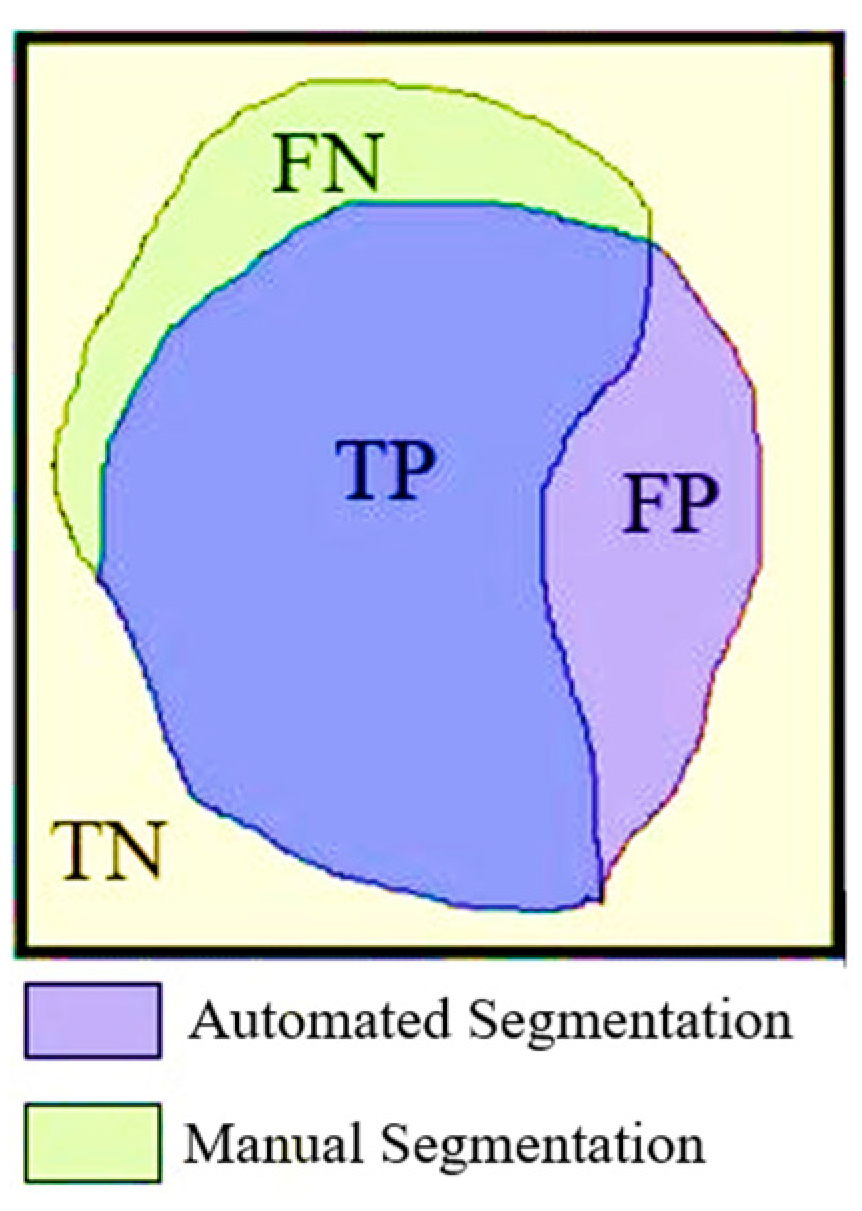

Sensitivity, specificity, accuracy, Dice and Jaccard were used to determine the performance of Mask R-CNN in detecting pulmonary embolism. Sensitivity refers to the proportion of true PE pixels that are detected. A low sensitivity value indicates that true lesions are not adequately detected, whereas a high sensitivity value indicates that the system detects a high proportion of the lesion regions. Evaluating different criteria together gives more accurate results. These criteria are expressed as follows.

Figure 5 shows the true positive (TP), true negative (TN), false positive (FP) and false negative (FN) areas of the pixel groups obtained by automatic and manual segmentation. The equations for the performance parameters are given in Equations 3, 4, 5, 6 and 7 below.
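The five metrics can be computed from the pixel counts with their standard definitions, as in the sketch below. It is assumed, not confirmed by the text, that Equations 3 to 7 follow these standard forms; the function names are hypothetical, masks are nested lists of 0/1 values, and the denominators are assumed to be non-zero.

```python
def confusion_counts(pred_mask, true_mask):
    """Count TP, TN, FP and FN pixels between two binary masks."""
    tp = tn = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn


def segmentation_metrics(tp, tn, fp, fn):
    """Standard pixel-wise metrics; assumes every denominator is non-zero."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
    }
```

Here `pred_mask` plays the role of the automatic segmentation and `true_mask` that of the manual segmentation by the expert.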

3. Experimental Results

In this study, thorax CT images of 50 patients with pulmonary embolism were used. A total of 430 images containing pulmonary embolism were created by taking the sections in which the pulmonary embolism was visible. The study was carried out in four stages: data augmentation, preprocessing, PE segmentation, and performance evaluation. Preprocessed images were used as input to the Mask R-CNN network. Images of 36 patients (1505 images) were used for training, and images of 14 patients (645 images) were used for testing. Feature extraction for PE detection was performed with the ResNet50 convolutional neural network pre-trained on the COCO dataset. Both hold-out validation (70% training, 30% testing) and 10-fold cross-validation were used for performance evaluation.
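A patient-level split of the kind described above can be sketched as follows. The hold-out part mirrors the stated 36/14 division of patients; forming the 10 folds at patient level is an assumption, since the text does not say how the folds were built. The function names, the fixed seed and the integer patient IDs are illustrative.

```python
import random


def patient_holdout(patient_ids, n_train, seed=0):
    """Split patients so that no patient appears in both sets."""
    ids = sorted(patient_ids)
    random.Random(seed).shuffle(ids)
    return ids[:n_train], ids[n_train:]


def patient_k_fold(patient_ids, k=10, seed=0):
    """Return k (train, test) pairs with disjoint patient-level test folds."""
    ids = sorted(patient_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        splits.append((train, folds[i]))
    return splits


train_patients, test_patients = patient_holdout(range(50), n_train=36)
cv_splits = patient_k_fold(range(50), k=10)
```

Splitting by patient rather than by image keeps augmented copies and neighbouring sections of the same patient out of the test set. With the reported counts, 1505 of the 2150 augmented images is exactly 70%.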

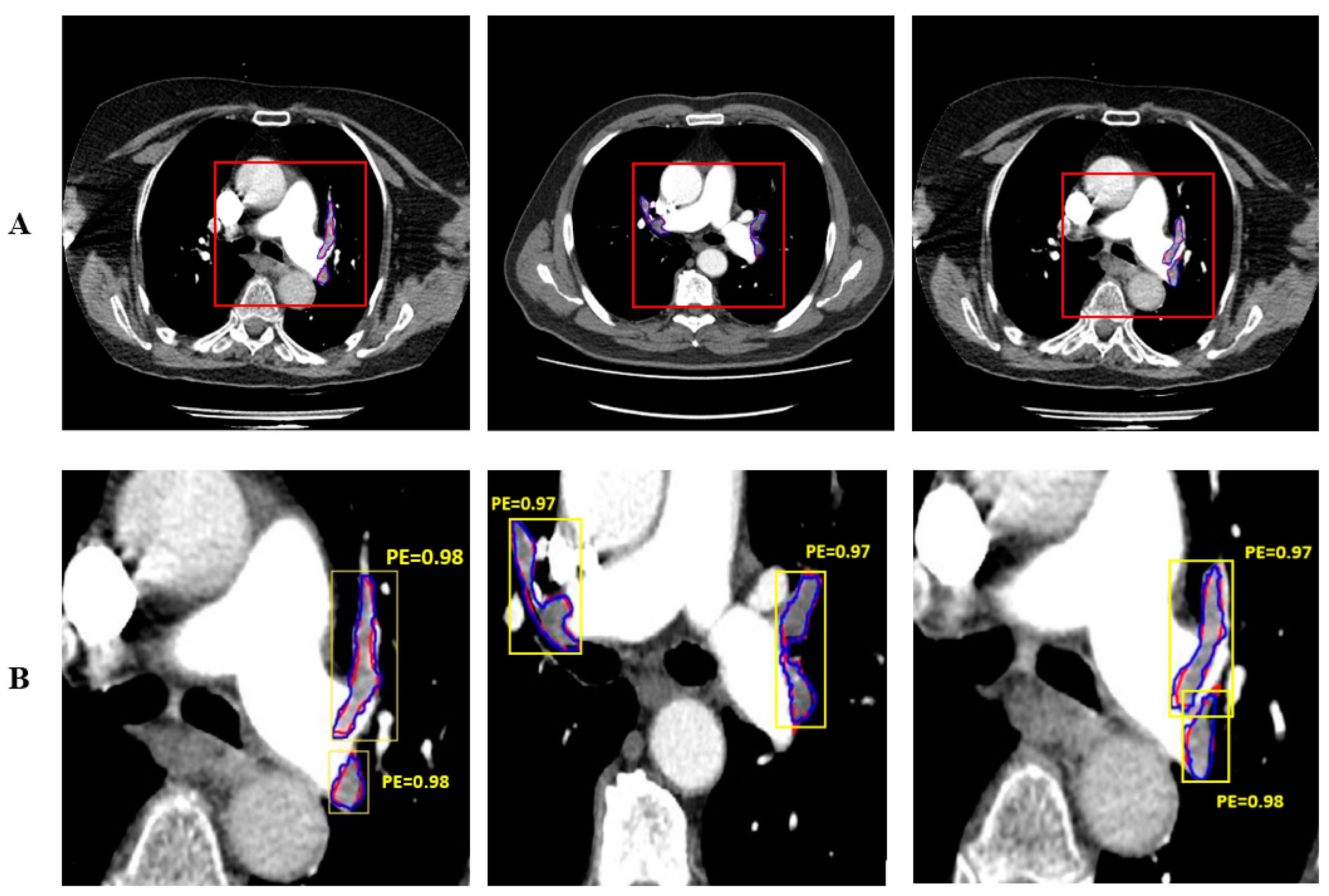

Figure 6 shows the automatically and manually segmented PEs. The PE manually segmented by the expert is shown in red, and the PE automatically segmented by the proposed system is shown in blue. Mask R-CNN performs both detection and segmentation; the detection of PE is shown with a yellow bounding box.

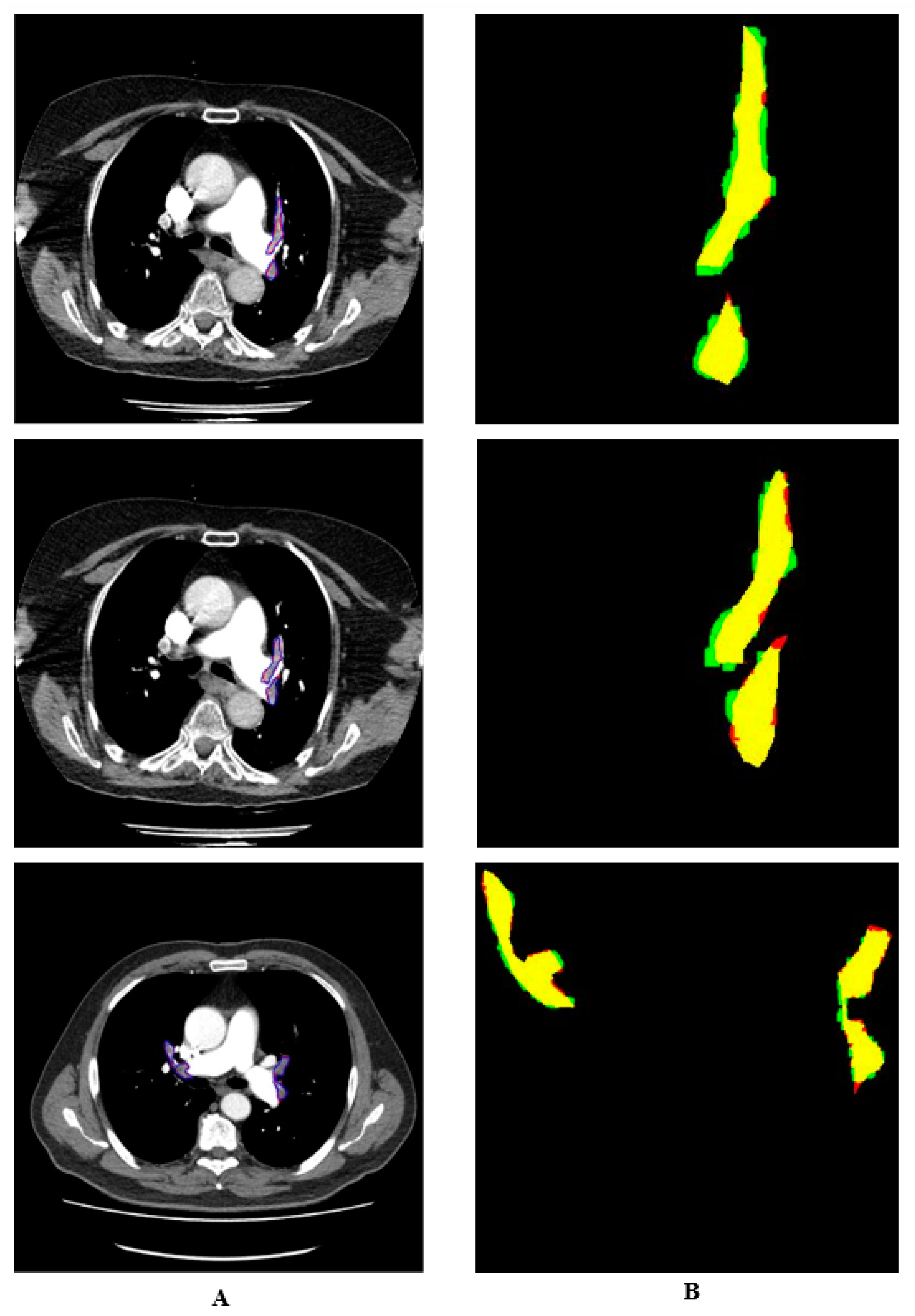

Manual and automatic segmentations are shown in colour in Figure 7. The regions identified by the expert but not detected by the system are shown in red. The regions detected by the system as PE but not by the expert are shown in green. The pixels belonging to the PE detected in both ways are shown in yellow. It is seen that the proposed system detects PE with high performance.

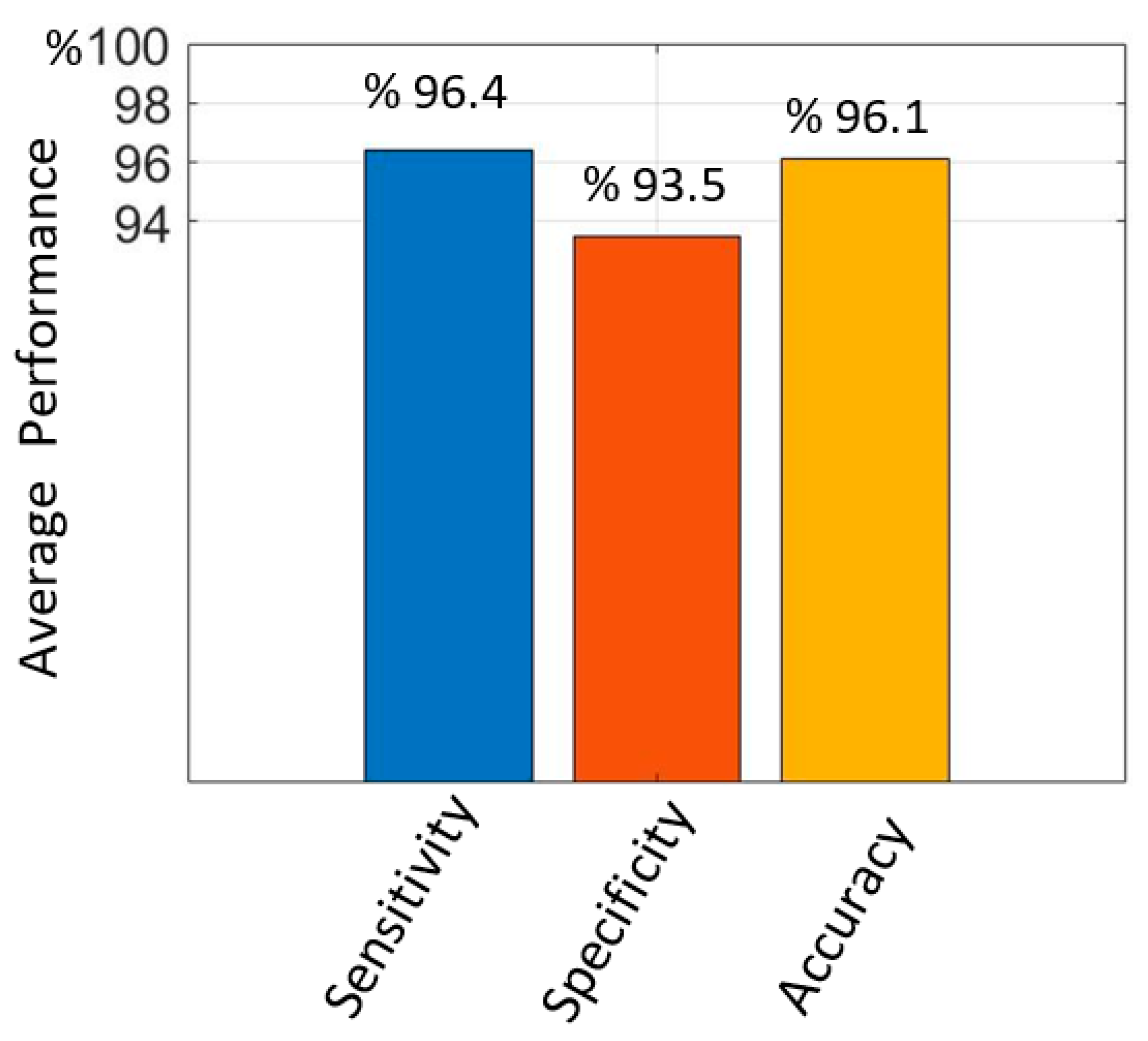

The average sensitivity, specificity, and accuracy values obtained from the test data with the proposed method for hold-out validation are given in Figure 8. The average sensitivity was 96.4%, the specificity was 93.5% and the accuracy was 96.1%. It is seen that the proposed improved Mask R-CNN has high performance in PE detection.

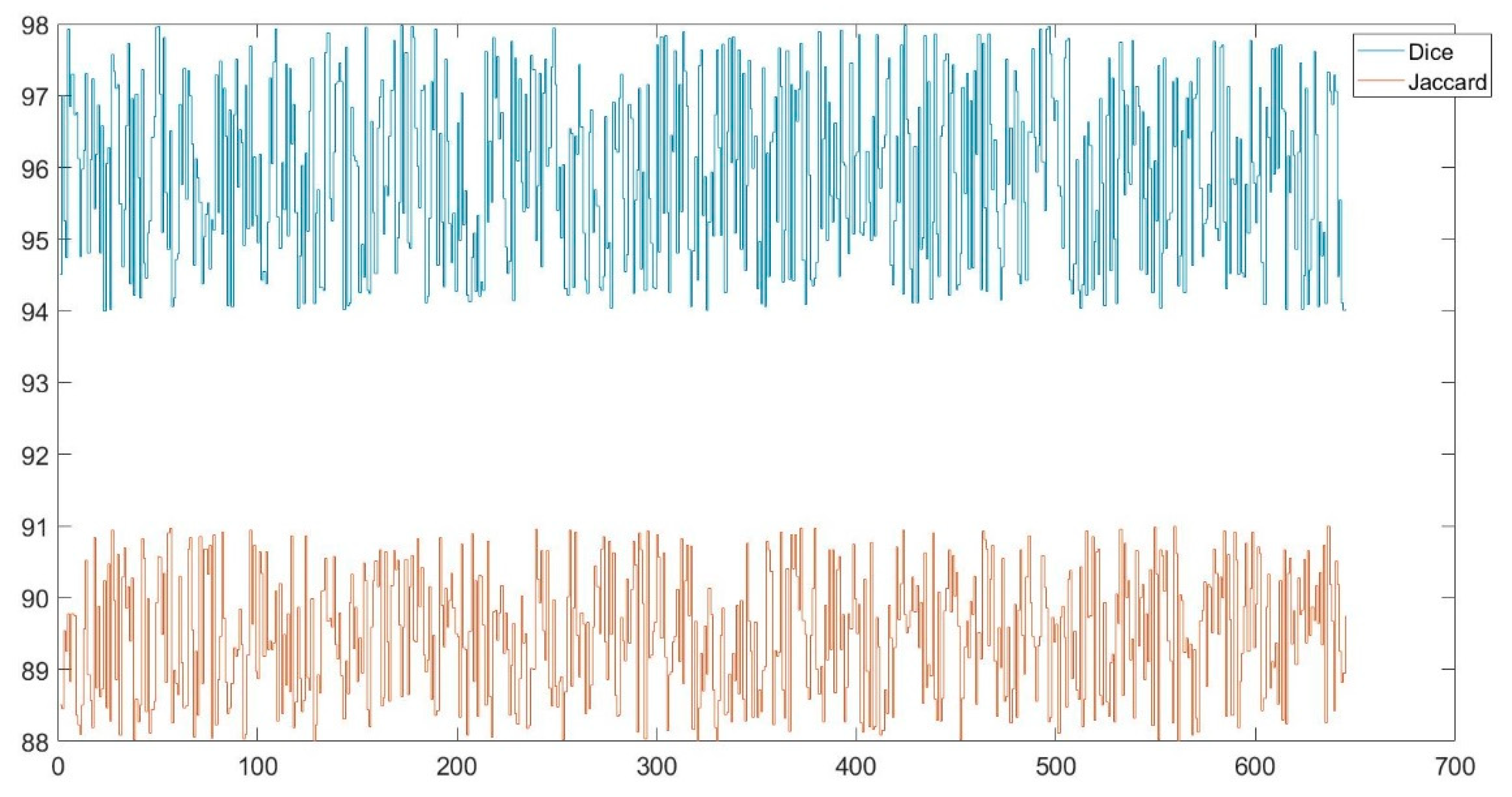

Dice and Jaccard similarity indices were also used in this study to determine the similarity between automatic and manual PE detection. The minimum and maximum Dice values were found to be 0.95 and 0.97 respectively. The minimum and maximum Jaccard values were found to be 0.88 and 0.91 respectively.

Figure 9 shows the graph of the Dice and Jaccard values obtained for the test images. The mean values obtained for the test data are given in Table 2. The mean values of the Dice and Jaccard similarity indices were 0.96 and 0.90, respectively. The performance of the study was tested using both hold-out validation and 10-fold cross-validation. Table 2 shows the PE segmentation performance of the classical Mask R-CNN and the improved Mask R-CNN. The improved Mask R-CNN was found to be more successful, especially when 10-fold cross-validation was performed.

The performances of the improved Mask R-CNN, U-Net and the classical Mask R-CNN in PE detection are compared in Table 3. The results showed that the improved Mask R-CNN provided higher sensitivity, specificity and accuracy values than the classical Mask R-CNN and U-Net models. In particular, the higher sensitivity of the improved Mask R-CNN indicates that it is more successful at detecting the pixels of the lesion than the other two methods.

The accuracy values obtained at different λ values are given in Table 4. The results show that the highest performance in this study is obtained with $\lambda_1 = 0.9$ and $\lambda_2 = 0.8$. The case $\lambda_1 = 1$, $\lambda_2 = 1$ corresponds to the classical Mask R-CNN. Here, $\lambda_1$ is the coefficient of the bounding box loss ($L_{box}$) and $\lambda_2$ is the coefficient of the mask loss ($L_{mask}$).
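A comparison across weight pairs can be organised as a simple exhaustive search, sketched below. Whether the weights in this study were selected by such a grid is not stated, so the procedure is an assumption. `grid_search` is a hypothetical helper, and `toy_accuracy` is a made-up placeholder with an arbitrary peak; it does not reproduce any result of the study and stands in for a full train-and-evaluate run.

```python
from itertools import product


def grid_search(evaluate, lam1_values, lam2_values):
    """Return (best_score, lam1, lam2) over all weight combinations.

    evaluate(lam1, lam2) must return a score where higher is better,
    for example the test accuracy of a network trained with those weights.
    """
    best = None
    for lam1, lam2 in product(lam1_values, lam2_values):
        score = evaluate(lam1, lam2)
        if best is None or score > best[0]:
            best = (score, lam1, lam2)
    return best


def toy_accuracy(lam1, lam2):
    """Placeholder scorer (NOT study data): peaks at lam1 = lam2 = 0.5."""
    return 1.0 - (lam1 - 0.5) ** 2 - (lam2 - 0.5) ** 2


best_score, best_lam1, best_lam2 = grid_search(
    toy_accuracy, [0.25, 0.5, 0.75, 1.0], [0.25, 0.5, 0.75, 1.0]
)
```

In practice each call to `evaluate` would involve training the network, so the grid has to stay coarse.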

The performance of the proposed improved Mask R-CNN and other methods in detecting PE is compared in Table 5. As can be seen, the proposed improved Mask R-CNN shows high performance in detecting PE.

4. Conclusions

The location and size of a pulmonary embolism, also known as pulmonary artery occlusion, are important for the treatment of the disease. This study developed a computer-based system for detecting pulmonary embolism from computed tomography scans. The system uses a deep neural network trained on images from a local dataset. A modified Mask R-CNN was used as the network model; the modification weights the loss functions in the Mask R-CNN algorithm with different coefficients. The results showed that the improved Mask R-CNN was more successful than the classical Mask R-CNN and U-Net in extracting the PE boundaries. In this study, only CT images with PE and without PE-like lesions such as lymph nodes were selected. Axial sections containing PEs were taken from these images, and the PEs in these sections were segmented. It is therefore possible that other regions would be detected as PEs in axial sections without PEs. The reported performance values represent how correctly the pixels of the PE are extracted. The study shows that the performance of Mask R-CNN in object segmentation applications can be improved by this modification. Since the system determines the location and boundaries of the PE, it can help specialists make decisions about the PE.

Acknowledgments

This study is related to the project “Establishment of a Decision Support System that Automatically Detects Pulmonary Artery Occlusion (Pulmonary Embolism)”. It was supported by Kahramanmaras Sutcu Imam University Scientific Research Unit within the post-doctoral researcher program (Project No: 2021/1-46 DOSAP).

References

1. Pforte, A., Epidemiology, diagnosis, and therapy of pulmonary embolism. European Journal of Medical Research, 2004. 9(4): p. 171-179.

2. Goldhaber, S.Z. and H. Bounameaux, Pulmonary embolism and deep vein thrombosis. The Lancet, 2012. 379(9828): p. 1835-1846.

3. Pena, E. and C. Dennie. Acute and chronic pulmonary embolism: an in-depth review for radiologists through the use of frequently asked questions. in Seminars in Ultrasound, CT and MRI. 2012. Elsevier.

4. Sadigh, G., A.M. Kelly, and P. Cronin, Challenges, controversies, and hot topics in pulmonary embolism imaging. American Journal of Roentgenology, 2011. 196(3): p. 497-515.

5. Kumamaru, K.K., et al., Correlation between early direct communication of positive CT pulmonary angiography findings and improved clinical outcomes. 2013. 144(5): p. 1546-1554.

6. Leung, A.N., et al., An official American Thoracic Society/Society of Thoracic Radiology clinical practice guideline: evaluation of suspected pulmonary embolism in pregnancy. 2011. 184(10): p. 1200-1208.

7. Leung, A.N., et al., American Thoracic Society documents: an official American Thoracic Society/Society of Thoracic Radiology clinical practice guideline—evaluation of suspected pulmonary embolism in pregnancy. 2012. 262(2): p. 635-646.

8. Konstantinides, S., Torbicki, A., Agnelli, G., Danchin, N., Fitzmaurice, D., Galie, N., et al., ESC Guidelines on the diagnosis and management of acute pulmonary embolism. European Heart Journal, 2014. 283: p. 1-48.

9. Hartmann, I.J.C. and M. Prokop, Spiral CT in the diagnosis of acute pulmonary embolism. Kontraste (Hamburg), 2002. 46(3): p. 2-10.

10. Stein, P.D., et al., Multidetector computed tomography for acute pulmonary embolism. 2006. 354(22): p. 2317-2327.

11. Yavas, U.S., C. Calisir, and I.R. Ozkan, The interobserver agreement between residents and experienced radiologists for detecting pulmonary embolism and DVT with using CT pulmonary angiography and indirect CT venography. Korean Journal of Radiology, 2008. 9(6): p. 498-502.

12. Rufener, S.L., et al., Comparison of on-call radiology resident and faculty interpretation of 4-and 16-row multidetector CT pulmonary angiography with indirect CT venography. 2008. 15(1): p. 71-76.

13. Joshi, R., et al., Reliability of on-call radiology residents’ interpretation of 64-slice CT pulmonary angiography for the detection of pulmonary embolism. 2014. 55(6): p. 682-690.

14. Kline, T. and T. Kline, Radiologists, communication, and Resolution 5: a medicolegal issue. Radiology, 1992. 184(1): p. 131-134.

15. Qanadli, S.D., et al., New CT index to quantify arterial obstruction in pulmonary embolism: comparison with angiographic index and echocardiography. 2001. 176(6): p. 1415-1420.

16. Mastora, I., et al., Severity of acute pulmonary embolism: evaluation of a new spiral CT angiographic score in correlation with echocardiographic data. 2003. 13(1): p. 29-35.

17. Shiina, Y., et al., Quantitative evaluation of chronic pulmonary thromboemboli by multislice CT compared with pulsed Tissue Doppler Imaging and its relationship with brain natriuretic peptide. 2008. 130(3): p. 505-512.

18. Patil, S., et al., Neural network in the clinical diagnosis of acute pulmonary embolism. 1993. 104(6): p. 1685-1689.

19. Tourassi, G.D., et al., Artificial neural network for diagnosis of acute pulmonary embolism: effect of case and observer selection. 1995. 194(3): p. 889-893.

20. Scott, J. and E. Palmer, Neural network analysis of ventilation-perfusion lung scans. Radiology, 1993. 186(3): p. 661-664.

21. Wittenberg, R., et al., Acute pulmonary embolism: effect of a computer-assisted detection prototype on diagnosis—an observer study. 2012. 262(1): p. 305-313.

22. Kligerman, S.J., et al., Missed pulmonary emboli on CT angiography: assessment with pulmonary embolism-computer-aided detection. 2014. 202(1): p. 65-73.

23. Bettmann, M.A., et al., ACR Appropriateness Criteria® acute chest pain—suspected pulmonary embolism. 2012. 27(2): p. W28-W31.

24. Ozkan, H., et al., Automatic detection of pulmonary embolism in CTA images using machine learning. 2017. 23(1): p. 63-67.

25. Hamet, P., et al., Artificial intelligence in medicine. Metabolism, 2017.

26. Litjens, G., et al., A survey on deep learning in medical image analysis. 2017. 42: p. 60-88.

27. Tao, Q., et al., Deep learning–based method for fully automatic quantification of left ventricle function from cine MR images: a multivendor, multicenter study. 2019. 290(1): p. 81-88.

28. Tajbakhsh, N., M.B. Gotway, and J. Liang. Computer-aided pulmonary embolism detection using a novel vessel-aligned multi-planar image representation and convolutional neural networks. in International Conference on Medical Image Computing and Computer-Assisted Intervention. 2015. Springer.

29. Yang, X., et al., A two-stage convolutional neural network for pulmonary embolism detection from CTPA images. 2019. 7: p. 84849-84857.

30. Pham, A.-D., et al., Natural language processing of radiology reports for the detection of thromboembolic diseases and clinically relevant incidental findings. 2014. 15(1): p. 1-10.

31. Moore, A.J., et al., Imaging of acute pulmonary embolism: an update. 2018. 8(3): p. 225.

32. Chen, M.C., et al., Deep learning to classify radiology free-text reports. Radiology, 2018. 286(3): p. 845-852.

33. Girshick, R., et al. Rich feature hierarchies for accurate object detection and semantic segmentation.

34. Ren, S., et al., Faster R-CNN: Towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems, 2015. 28.

35. He, K., et al. Mask R-CNN. 2017.

36. He, K., et al. Deep residual learning for image recognition. 2017.

37. Ronneberger, O., P. Fischer, and T. Brox. U-net: Convolutional networks for biomedical image segmentation. 2015. Springer.

38. Olescki, G., Clementin de Andrade, J. M., Escuissato, D. L., & Oliveira, L. F. A two step workflow for pulmonary embolism detection using deep learning and feature extraction. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization, 2023, 11(3), 341-350.

39. Grenier, P. A., Ayobi, A., Quenet, S., Tassy, M., Marx, M., Chow, D. S., ... & Chaibi, Y. Deep Learning-Based Algorithm for Automatic Detection of Pulmonary Embolism in Chest CT Angiograms. Diagnostics, 2023, 13(7), 1324.

40. Khan, M., Shah, P. M., Khan, I. A., Islam, S. U., Ahmad, Z., Khan, F., & Lee, Y. IoMT Enabled Computer-Aided Diagnosis of Pulmonary Embolism from Computed Tomography Scans Using Deep Learning. Sensors, 2023, 23(3), 1471.

41. Xu, H., Li, H., Xu, Q., Zhang, Z., Wang, P., Li, D., & Guo, L. Automatic detection of pulmonary embolism in computed tomography pulmonary angiography using Scaled-YOLOv4. Medical Physics, 2023.

42. Huhtanen, H., Nyman, M., Mohsen, T., Virkki, A., Karlsson, A., & Hirvonen, J. Automated detection of pulmonary embolism from CT-angiograms using deep learning. BMC Medical Imaging, 2022, 22(1), 43.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).