Submitted: 27 April 2024

Posted: 29 April 2024

Abstract

Keywords:

1. Introduction

2. Materials and Methods

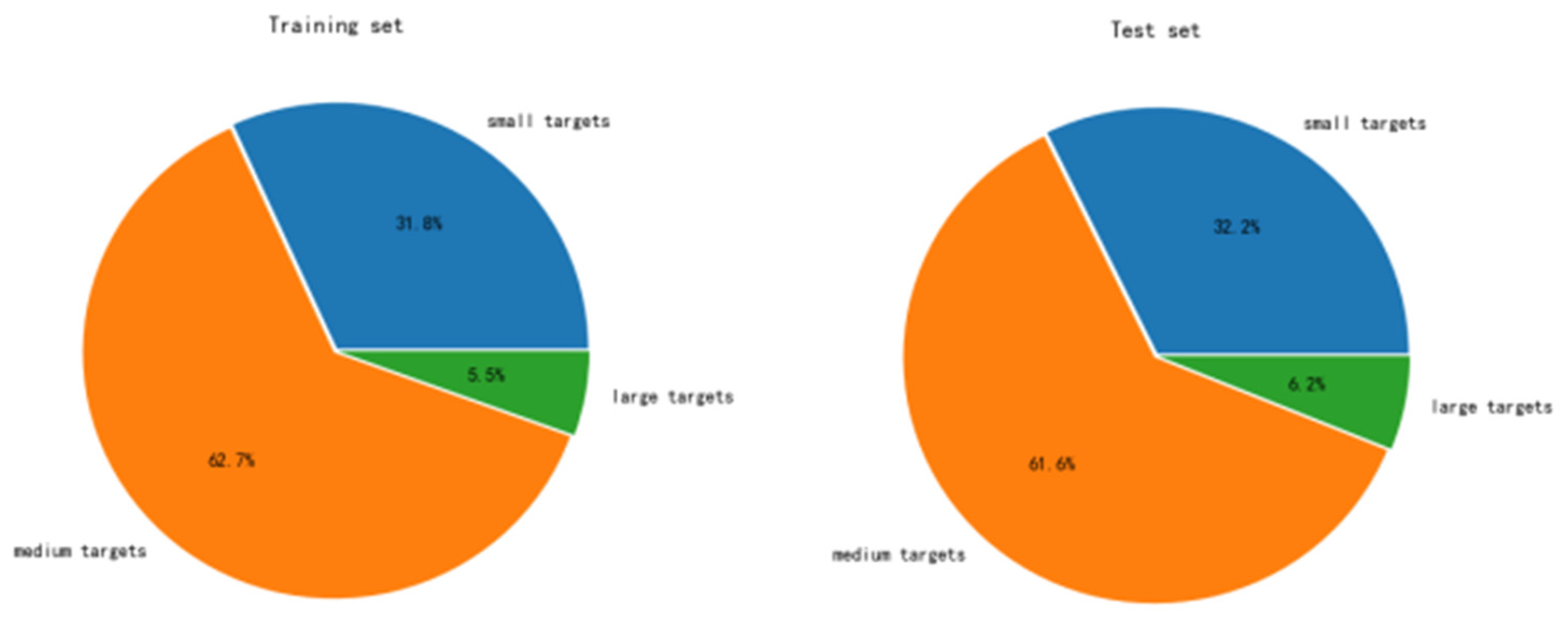

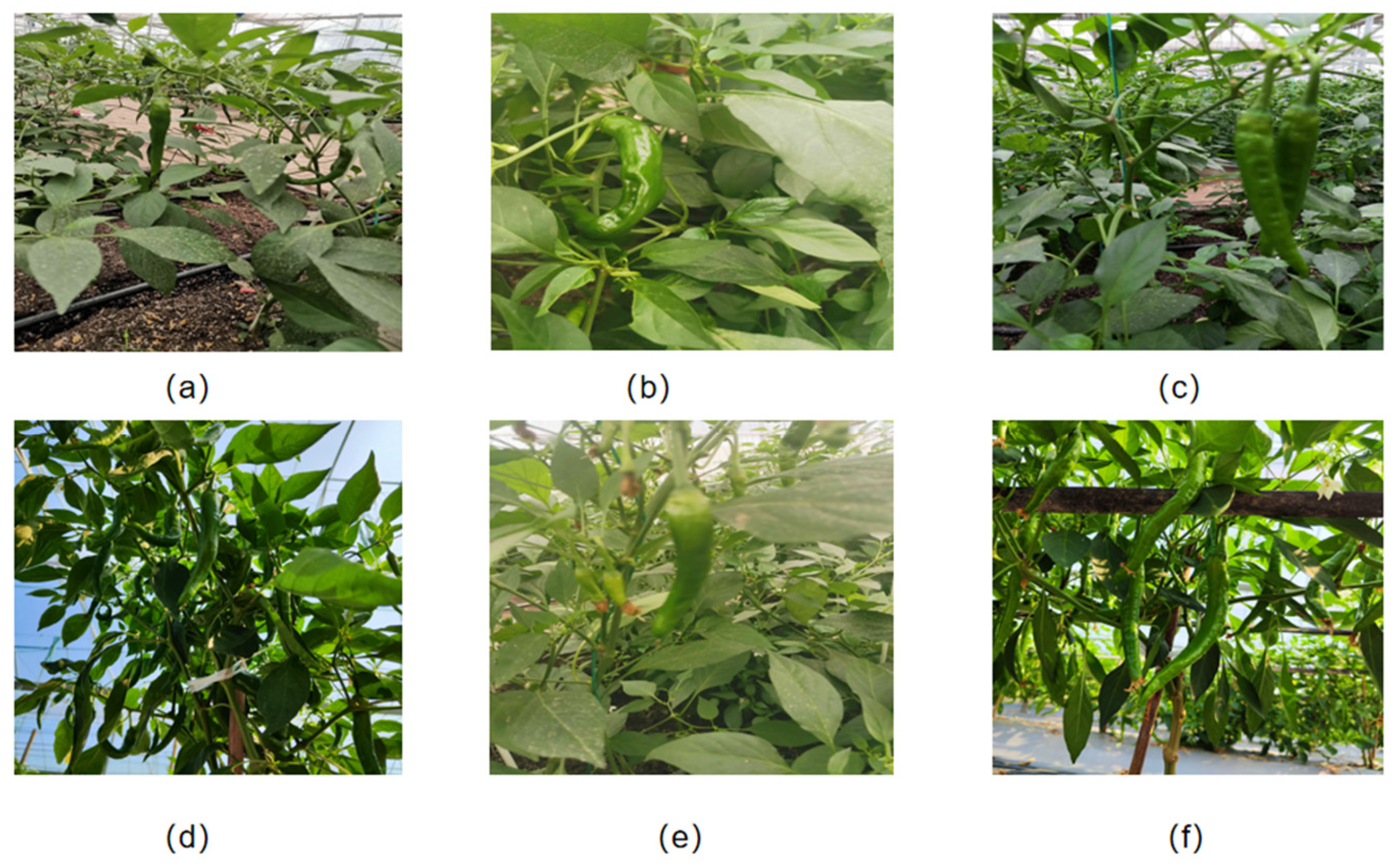

2.1. Data Acquisition

2.2. Data Acquisition

2.3. Experimental Environment

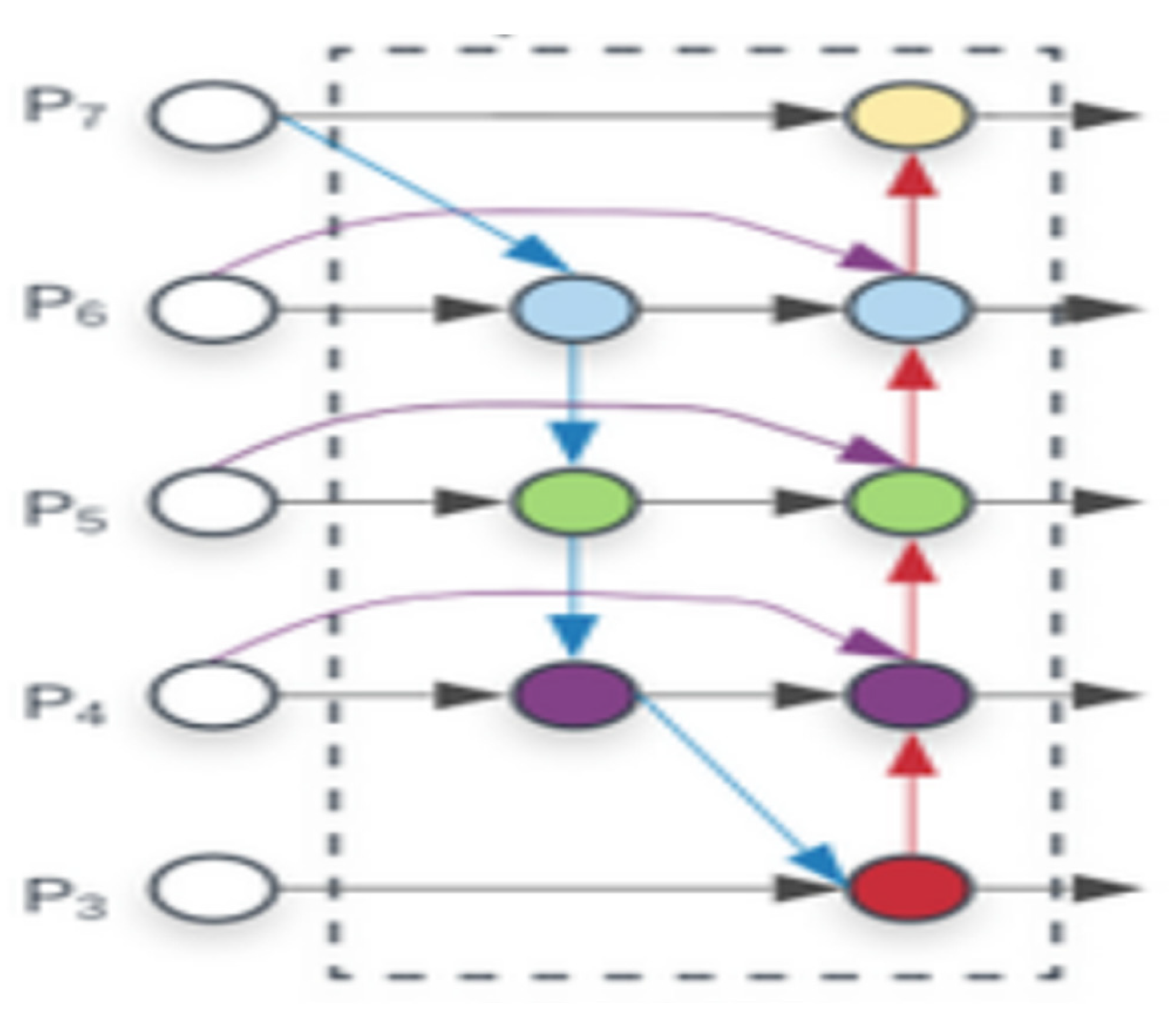

2.4. Hierarchical Feature Fusion Network (HFFN) Module

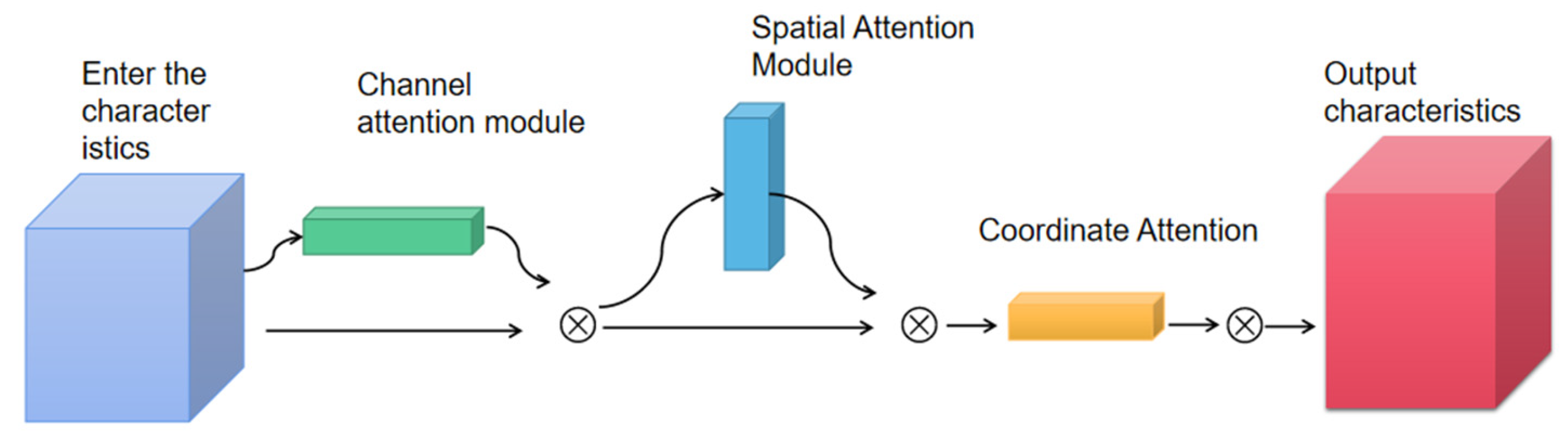

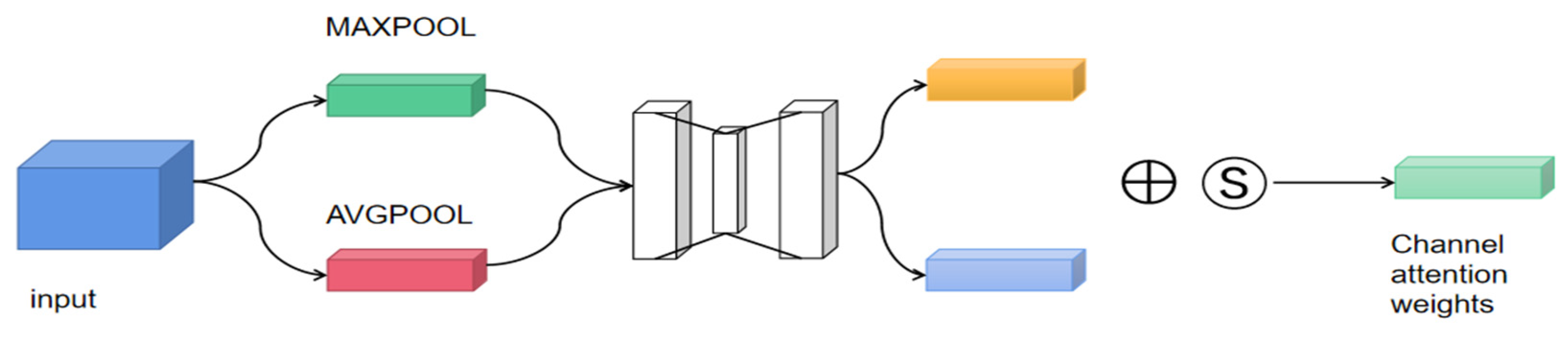

2.5. Three-Channel Attention Mechanism

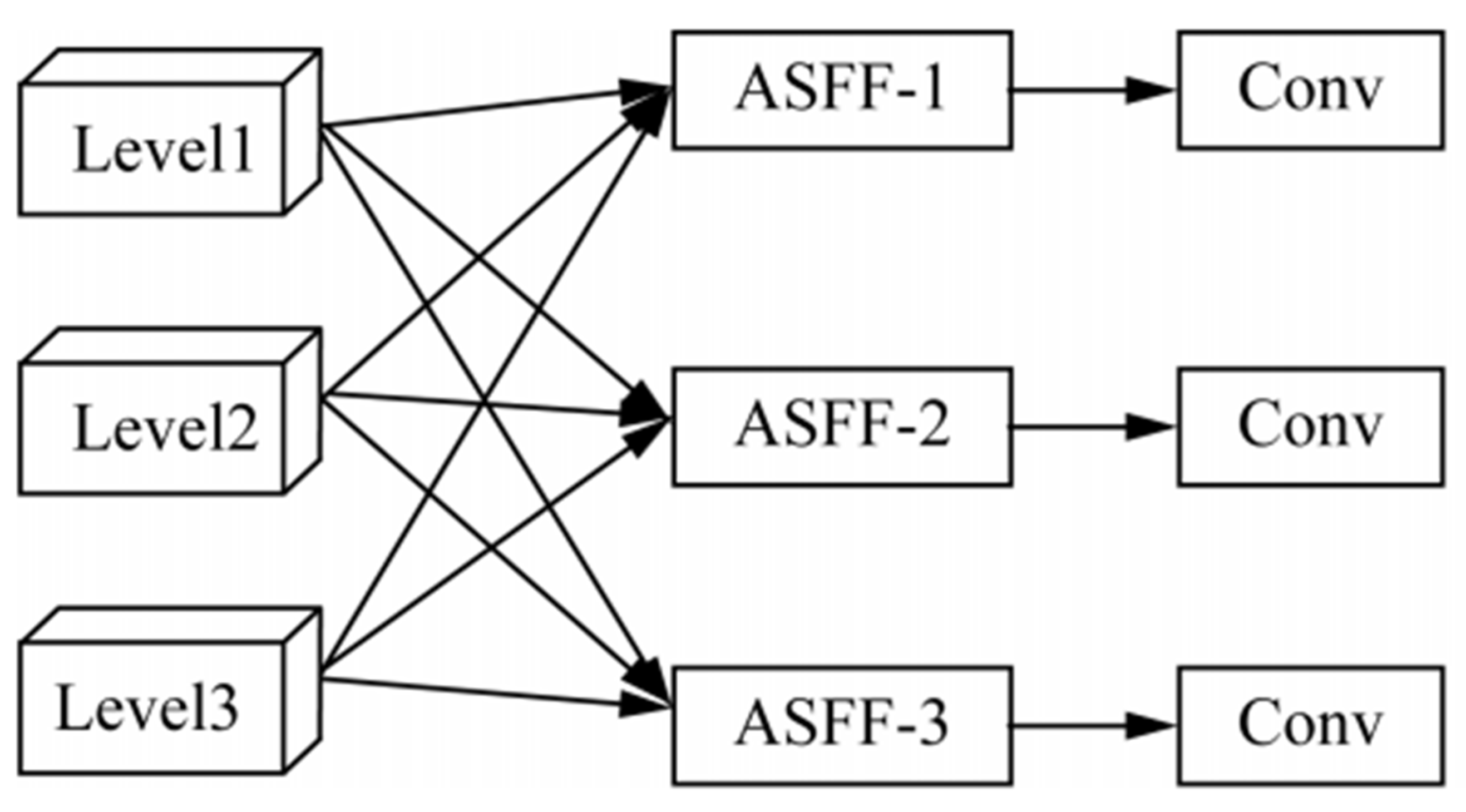

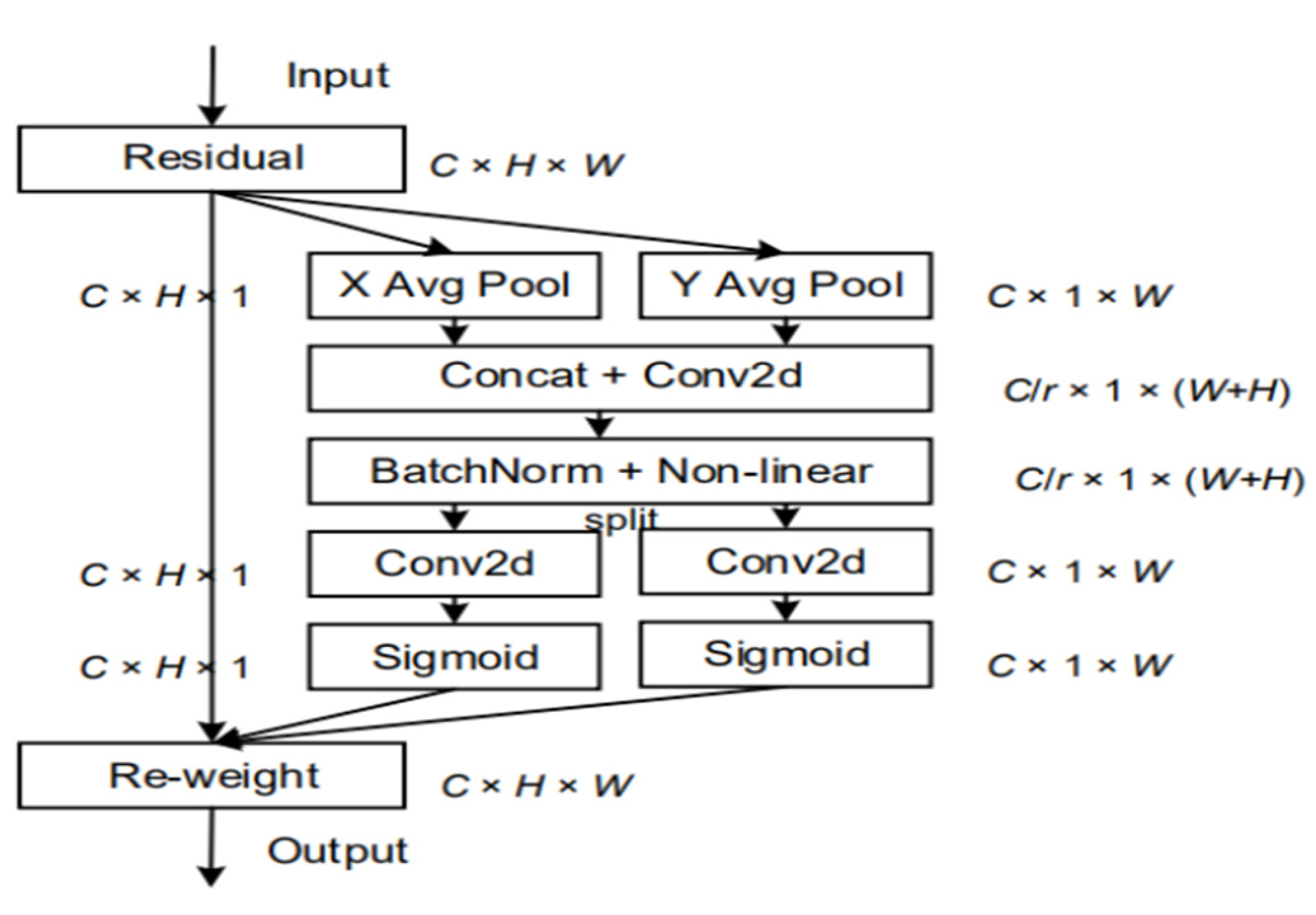

2.6. Resolution Adaptive Feature Fusion Network Module

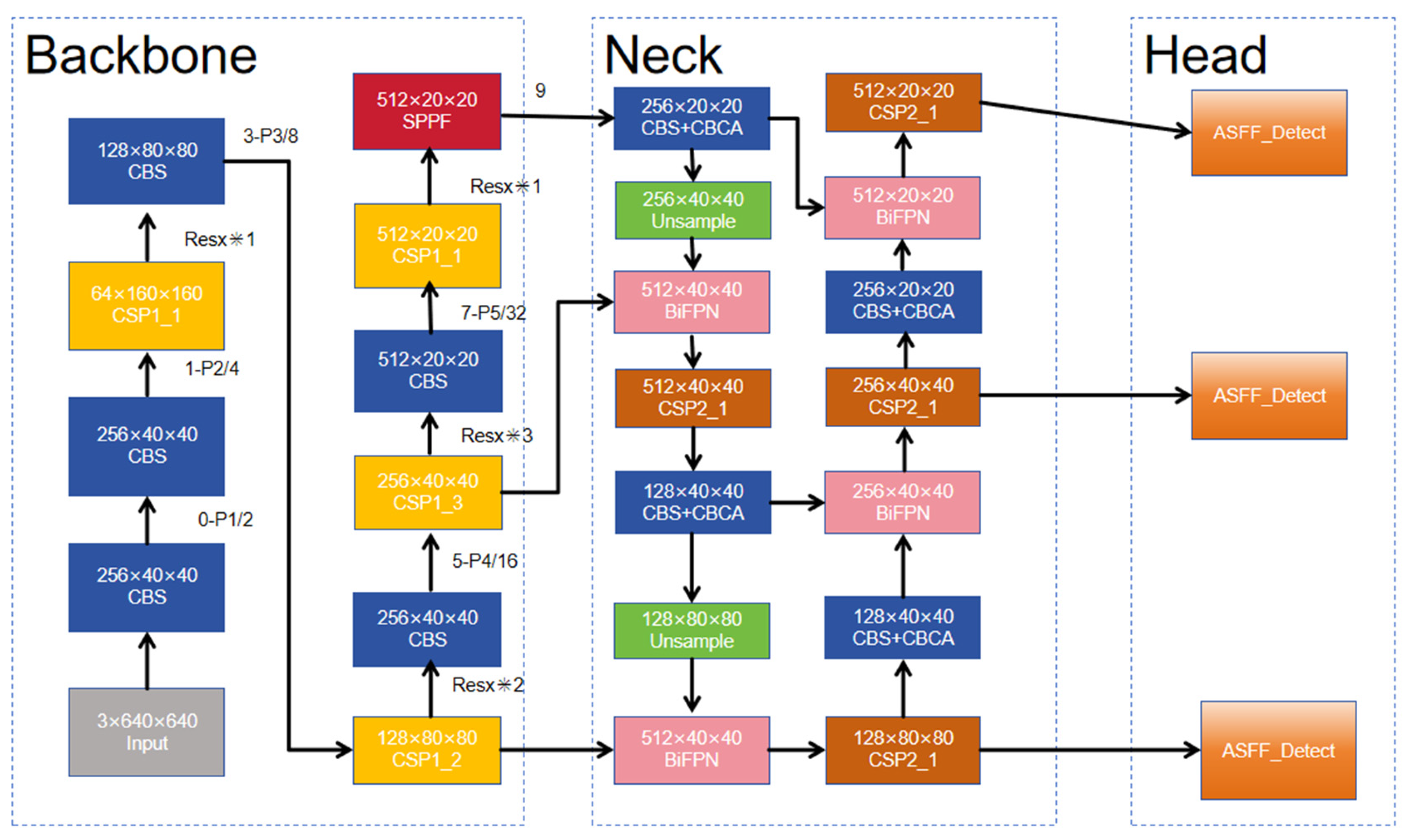

2.7. Yolo-Chili Network

3. Results and Discussion

3.1. Parameter Setting

3.2. Evaluation Indicators
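The tables report AP, precision and recall. Under the standard definitions, precision is P = TP / (TP + FP), recall is R = TP / (TP + FN), and AP is the area under the precision-recall curve. The Python sketch below computes these from a list of scored detections using all-point interpolation (the Pascal VOC 2010+ convention). It is an illustration under assumptions: the IoU threshold that decides whether a detection counts as a true positive and the interpolation scheme actually used for Yolo-Chili are not specified here, and the function names are hypothetical.

```python
from typing import List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class (assumed convention).

    scores: confidence of each detection.
    is_tp:  whether each detection matched a ground-truth box (the IoU
            threshold used for matching is an assumption, e.g. 0.5).
    num_gt: number of ground-truth objects in the evaluation set.
    """
    if num_gt == 0:
        return 0.0
    # Visit detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))

    # Sentinel points at recall 0 and recall 1.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum the rectangles under the envelope wherever recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


if __name__ == "__main__":
    # Toy data (invented): 4 detections, 4 ground-truth chilies.
    print(precision_recall(tp=3, fp=1, fn=1))  # (0.75, 0.75)
    print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4))  # 0.625
```

The envelope step is what distinguishes interpolated AP from a raw trapezoid over the PR points: a later, higher precision "lifts" earlier points, so a single false positive early in the ranking is penalized only in proportion to the recall it delays.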

3.3. Yolo-Chili Ablation Test Performance Comparison

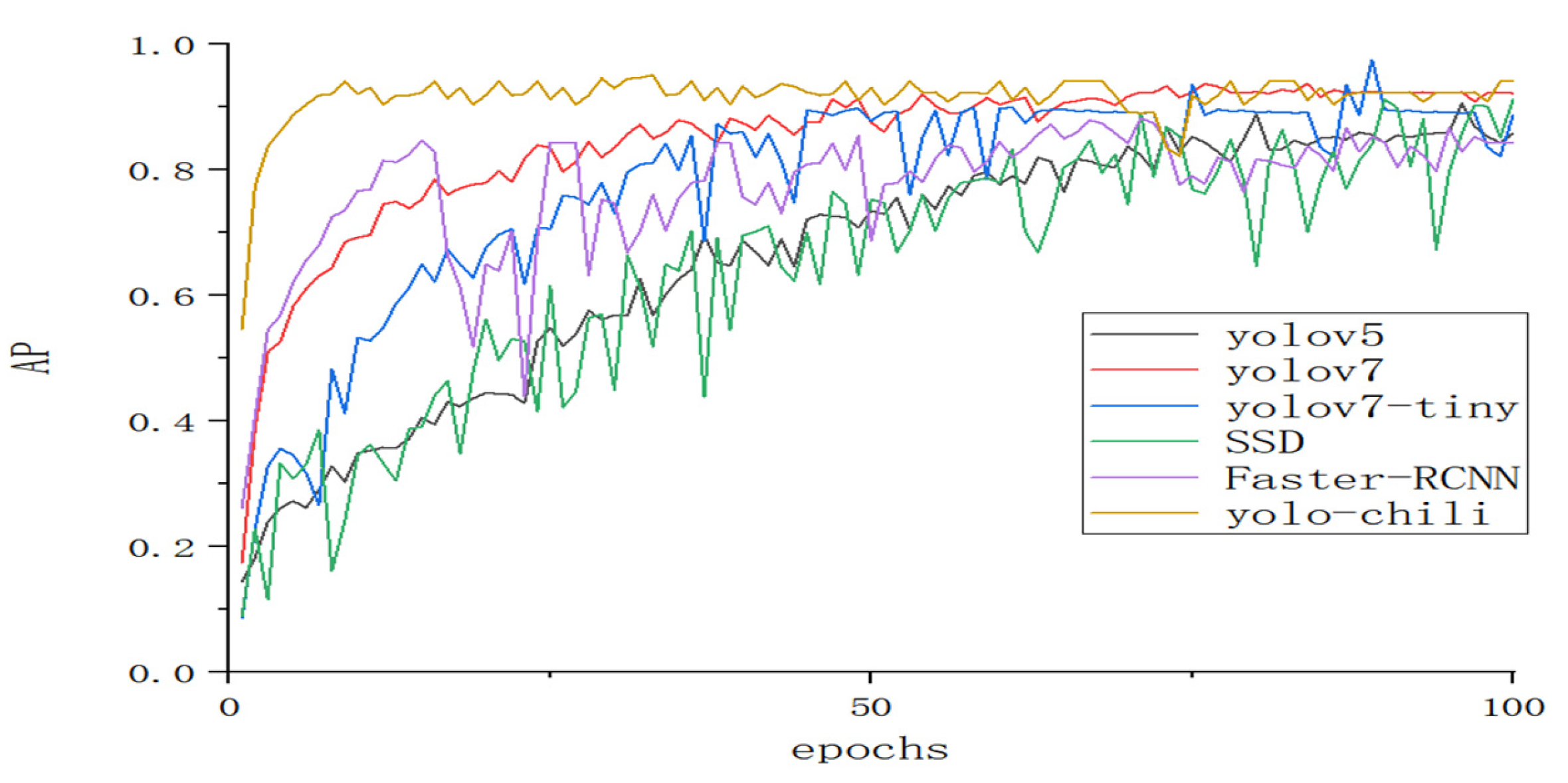

3.4. Comparison of the Performance of Different Object Detection Models

3.5. Reducing Model Size Using Pruning and Quantization

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Fu, L.; Duan, J.; Zou, X.; Lin, J.; Zhao, L.; Li, J.; Yang, Z. Fast and Accurate Detection of Banana Fruits in Complex Background Orchards. IEEE Access 2020, 8, 196835–196846.

- Mathew, M.P.; Mahesh, T.Y. Leaf-based disease detection in bell pepper plant using YOLO v5. Signal Image Video Process. 2021, 16, 841–847.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Liu, T.H.; Nie, X.N.; Wu, J.M.; et al. Pineapple (Ananas comosus) fruit detection and localization in natural environment based on binocular stereo vision and improved YOLOv3 model. Precis. Agric. 2023, 24, 139–160.

- Gai, R.; Chen, N.; Yuan, H. A detection algorithm for cherry fruits based on the improved YOLO-v4 model. Neural Comput. Appl. 2021, 35, 13895–13906.

- Jiang, M.; Song, L.; Wang, Y.; Li, Z.; Song, H. Fusion of the YOLOv4 network model and visual attention mechanism to detect low-quality young apples in a complex environment. Precis. Agric. 2021, 23, 559–577.

- Yang, G.; Wang, J.; Nie, Z.; Yang, H.; Yu, S. A Lightweight YOLOv8 Tomato Detection Algorithm Combining Feature Enhancement and Attention. Agronomy 2023, 13, 1824.

- Tian, Y.; Wang, S.; Li, E.; Yang, G.; Liang, Z.; Tan, M. MD-YOLO: Multi-scale Dense YOLO for small target pest detection. Comput. Electron. Agric. 2023, 213.

- Lin, Y.; Huang, Z.; Liang, Y.; Liu, Y.; Jiang, W. AG-YOLO: A Rapid Citrus Fruit Detection Algorithm with Global Context Fusion. Agriculture 2024, 14, 114.

- Yang, S.; Xing, Z.; Wang, H.; Dong, X.; Gao, X.; Liu, Z.; Zhang, X.; Li, S.; Zhao, Y. Maize-YOLO: A New High-Precision and Real-Time Method for Maize Pest Detection. Insects 2023, 14, 278.

- Zhao, Y.; Yang, Y.; Xu, X.; Sun, C. Precision detection of crop diseases based on improved YOLOv5 model. Front. Plant Sci. 2023, 13, 1066835.

- Karthikeyan, M.; Subashini, T.S.; Srinivasan, R.; Santhanakrishnan, C.; Ahilan, A. YOLOAPPLE: Augment Yolov3 deep learning algorithm for apple fruit quality detection. Signal Image Video Process. 2023, 18, 119–128.

- Tang, R.; Lei, Y.; Luo, B.; Zhang, J.; Mu, J. YOLOv7-Plum: Advancing Plum Fruit Detection in Natural Environments with Deep Learning. Plants 2023, 12, 2883.

- Parico, A.I.B.; Ahamed, T. Real time pear fruit detection and counting using YOLOv4 models and deep SORT. Sensors 2021, 21, 4803.

- Lawal, O.M.; Huamin, Z.; Fan, Z. Ablation studies on YOLOFruit detection algorithm for fruit harvesting robot using deep learning. IOP Conf. Ser. Earth Environ. Sci. 2021, 922, 012001.

- Li, T.; Sun, M.; Ding, X.; et al. Identification of ripening tomatoes based on YOLO v4+ HSV. Trans. Chin. Soc. Agric. Eng. 2021, 37.

- Guo, J.; Xiao, X.; Miao, J.; Tian, B.; Zhao, J.; Lan, Y. Design and Experiment of a Visual Detection System for Zanthoxylum-Harvesting Robot Based on Improved YOLOv5 Model. Agriculture 2023, 13, 821.

- Yang, J.; Qian, Z.; Zhang, Y.; et al. Real-Time Tomato Recognition in Complex Environments with Improved YOLOv4-tiny. Trans. Chin. Soc. Agric. Eng. 2022, 38.

- Wang, L.; Qin, M.; Lei, J.; et al. Blueberry Ripeness Recognition Based on Improved YOLOv4-Tiny. Trans. Chin. Soc. Agric. Eng. 2021, 37.

- Sun, F.; Wang, Y.; Lan, P.; et al. Apple Fruit Disease Recognition Based on Improved YOLOv5s and Migration Learning. Trans. Chin. Soc. Agric. Eng. 2022, 38.

- Ren, R.; Zhang, S.; Sun, H.; Gao, T. Research on Pepper External Quality Detection Based on Transfer Learning Integrated with Convolutional Neural Network. Sensors 2021, 21, 5305.

- Zhou, J.; Hu, W.; Zou, A.; Zhai, S.; Liu, T.; Yang, W.; Jiang, P. Lightweight Detection Algorithm of Kiwifruit Based on Improved YOLOX-S. Agriculture 2022, 12, 993.

- Zhang, C.; Kang, F.; Wang, Y. An Improved Apple Object Detection Method Based on Lightweight YOLOv4 in Complex Backgrounds. Remote Sens. 2022, 14, 4150.

- Wang, D.; He, D. Channel pruned YOLO V5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning. Biosyst. Eng. 2021, 210, 271–281.

- Gou, J.; Yu, B.; Maybank, S.J.; et al. Knowledge distillation: A survey. Int. J. Comput. Vis. 2021, 129, 1789–1819.

- Wang, F.; Jiang, J.; Chen, Y.; Sun, Z.; Tang, Y.; Lai, Q.; Zhu, H. Rapid detection of Yunnan Xiaomila based on lightweight YOLOv7 algorithm. Front. Plant Sci. 2023, 14, 1200144.

- Fu, L.; Yang, Z.; Wu, F.; Zou, X.; Lin, J.; Cao, Y.; Duan, J. YOLO-Banana: A Lightweight Neural Network for Rapid Detection of Banana Bunches and Stalks in the Natural Environment. Agronomy 2022, 12, 391.

- Fang, W.; Guan, F.; Yu, H.; Bi, C.; Guo, Y.; Cui, Y.; Su, L.; Zhang, Z.; Xie, J. Identification of wormholes in soybean leaves based on multi-feature structure and attention mechanism. J. Plant Dis. Prot. 2022, 130, 401–412.

- Abade, A.; Ferreira, P.A.; Vidal, F.d.B. Plant diseases recognition on images using convolutional neural networks: A systematic review. Comput. Electron. Agric. 2021, 185, 106125.

- Zeng, W.; Li, M. Crop leaf disease recognition based on Self-Attention convolutional neural network. Comput. Electron. Agric. 2020, 172, 105341.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Yu, L.; Xiong, J.; Fang, X.; Yang, Z.; Chen, Y.; Lin, X.; Chen, S. A litchi fruit recognition method in a natural environment using RGB-D images. Biosyst. Eng. 2021, 204, 50–63.

| Configuration | Parameter |
|---|---|
| CPU | Intel Core i5-11400H |
| GPU | NVIDIA GeForce RTX 3050 Ti |
| Acceleration environment | CUDA 10.1, cuDNN 7.5.0 |
| Development environment (IDE) | PyCharm 2020.1.3 |
| Operating system | Windows 10, 64-bit |
| Software environment | Anaconda 4.8.4 |
| Storage environment | 16.0 GB RAM; 2 TB mechanical hard disk |

| Yolo-Chili | HFFN | Three-channel attention mechanism | Resolution adaptive feature fusion network module | AP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| ✓ | 83.24 | 91.33 | 81.77 | | | |
| ✓ | ✓ | 91.39 | 92.74 | 91.65 | | |
| ✓ | ✓ | 82.32 | 87.93 | 81.62 | | |
| ✓ | ✓ | 85.54 | 93.54 | 82.15 | | |
| ✓ | ✓ | ✓ | 92.24 | 93.42 | 91.19 | |
| ✓ | ✓ | ✓ | 91.27 | 93.20 | 91.62 | |
| ✓ | ✓ | ✓ | ✓ | 94.11 | 94.42 | 92.25 |
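The first row of the ablation table carries a single check mark (no added modules) and the last row enables every module, so the combined contribution of HFFN, the three-channel attention mechanism and the resolution adaptive feature fusion module can be read off from those two rows alone. A minimal Python check using only values copied from the table; the helper name `absolute_gains` is illustrative.

```python
# AP, precision and recall (%) copied from the ablation table.
baseline = {"AP": 83.24, "precision": 91.33, "recall": 81.77}    # first row
full_model = {"AP": 94.11, "precision": 94.42, "recall": 92.25}  # last row, all modules


def absolute_gains(base: dict, improved: dict) -> dict:
    """Per-metric improvement in percentage points, rounded to 2 decimals."""
    return {name: round(improved[name] - base[name], 2) for name in base}


print(absolute_gains(baseline, full_model))
# {'AP': 10.87, 'precision': 3.09, 'recall': 10.48}
```

Most of the gain is in AP and recall (about 10.9 and 10.5 points), while precision rises by about 3.1 points.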

| Model | Parameters (×10⁶) | FLOPs (G) | Model size (MB) | AP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|
| YOLOv5 | 7.24 | 16.6 | 14.1 | 85.53 | 91.33 | 81.77 |
| YOLOv7 | 37.49 | 123.5 | 74.5 | 92.39 | 94.24 | 91.65 |
| YOLOv7-tiny | 6.51 | 14.2 | 12.1 | 89.32 | 93.93 | 87.62 |
| SSD | 26.29 | 62.8 | 93.3 | 91.24 | 93.42 | 73.19 |
| Faster R-CNN | 137.10 | 370.2 | 111.5 | 83.63 | 67.84 | 81.62 |
| Yolo-Chili | 11.4 | 21.2 | 18.7 | 94.11 | 94.42 | 92.25 |
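Yolo-Chili is larger than YOLOv5 and YOLOv7-tiny but much smaller than YOLOv7, so its accuracy gain is easiest to judge relative to each YOLO baseline. A minimal Python sketch using values copied from the comparison table; `relative_to` is a hypothetical helper, not part of any library.

```python
# (parameters ×10^6, FLOPs in G, AP in %) copied from the comparison table.
MODELS = {
    "YOLOv5": (7.24, 16.6, 85.53),
    "YOLOv7": (37.49, 123.5, 92.39),
    "YOLOv7-tiny": (6.51, 14.2, 89.32),
    "Yolo-Chili": (11.4, 21.2, 94.11),
}


def relative_to(reference: str, candidate: str = "Yolo-Chili") -> tuple:
    """Return (parameter ratio, FLOPs ratio, AP difference in points) of the
    candidate model with respect to the reference model."""
    p_ref, f_ref, ap_ref = MODELS[reference]
    p, f, ap = MODELS[candidate]
    return round(p / p_ref, 3), round(f / f_ref, 3), round(ap - ap_ref, 2)


for ref in ("YOLOv5", "YOLOv7", "YOLOv7-tiny"):
    print(ref, relative_to(ref))
```

Against YOLOv7, for instance, Yolo-Chili uses roughly 30% of the parameters and 17% of the FLOPs while scoring 1.72 points higher AP; against YOLOv5 it needs about 1.57 times the parameters for an 8.58-point AP gain.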

| Model | Size (MB) | AP (%) | Recall | Precision | FPS |
|---|---|---|---|---|---|
| Yolo-Chili | 18.7 | 94.11 | 92.25 | 94.42 | 94 |
| Pruned and quantized Yolo-Chili | 9.64 | 93.66 | 0.97 | 0.97 | 87 |
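The effect of pruning and quantization can be summarized with simple arithmetic on this table. The sketch below uses only the size, AP and FPS columns; recall and precision are left out because the compressed row reports them as fractions (0.97) while the Yolo-Chili row uses percentages. Variable names are illustrative.

```python
# Size (MB), AP (%) and FPS copied from the pruning/quantization table.
original = {"size_mb": 18.7, "ap": 94.11, "fps": 94}
compressed = {"size_mb": 9.64, "ap": 93.66, "fps": 87}

size_reduction = 1 - compressed["size_mb"] / original["size_mb"]  # fraction of size removed
ap_drop = original["ap"] - compressed["ap"]                        # percentage points
fps_change = compressed["fps"] - original["fps"]                   # frames per second

print(f"Size reduced by {size_reduction:.1%}")  # Size reduced by 48.4%
print(f"AP drop: {ap_drop:.2f} points")         # AP drop: 0.45 points
print(f"FPS change: {fps_change:+d}")           # FPS change: -7
```

In other words, the compressed model is roughly half the size for an AP loss of under half a point, while inference speed drops slightly (94 to 87 FPS).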

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).