1. Introduction

Perception of movements is based on sensory information that reaches the brain from peripheral somatic receptors and, in the case of intact vision, from the eyes. These processes are known as proprioception [1] and visual feedback [2], respectively. However, this idea has been challenged by the proposal that our awareness of movement execution primarily arises from our initial “intention” to move. According to this proposal, the sensation of moving is associated with increased activity in the parietal (perceptual) and frontal (motor) regions of the brain [3]. Thus, the concept of movement intention is framed as a higher-order cognitive function associated with the initial stages of movement planning, which may specify the body part involved, is centered on the target, and is contingent upon the task requirements [4].

Experiments with functional imaging in humans have suggested that conscious intentions to perform reach-and-grasp movements towards objects can be predicted from brain signals shortly before the movements are actually executed [5,6]. It remains unclear whether the concept of movement intention can be extended to covert movements, such as motor imagery, and whether the intention to engage differentially in various types of movement imagery can be predicted based solely on brain activity. Decoding the neural bases of intentions for goal-directed actions is important not only for gaining a deeper understanding of higher-level brain functions and awareness but also for developing innovative treatments for movement disorders. These treatments may include neurorehabilitation, non-invasive brain stimulation, brain–machine interfaces, and neuroprosthetics [7].

In the present study, we investigated whether the participants’ intentions to perform different types of motor imagery (left vs. right hand) elicited different spatial and temporal patterns of brain activation, as measured with blood oxygen level–dependent (BOLD) signals. Such differences have already been demonstrated for slow cortical potentials (SCP) [8], but the poor spatial resolution of SCP does not allow the anatomical-functional correlates of the direction of intention to be clearly defined. We further investigated whether a multivariate pattern classifier could use these patterns to identify movement intentions from BOLD signals obtained from different brain regions. To this end, we designed an event-related functional magnetic resonance imaging (fMRI) paradigm. In this paradigm, participants were first prompted about the upcoming motor imagery they would perform (left or right hand) but were explicitly instructed to refrain from initiating the imagery until they received a further cue. To ensure that any observed brain activations were not influenced by hidden hand or eye movements of the participants, we monitored the muscle activity in their hands with electromyography (EMG) and tracked their eye movements during the experiment. We hypothesized that intentions for different types of motor imagery could be successfully decoded from BOLD signals of the brain, particularly from the parieto-frontal regions.

2. Materials and Methods

2.1. Participants

Ten participants (five female, aged 21–26 years) took part in the study. All were free of any neurological or other major disease, were not taking medication, and had normal vision. They were right-handed as assessed by the Edinburgh Handedness Inventory [9]. All participants gave informed consent to participate in the study, which was approved by the local ethics committee of the Faculty of Medicine of the University of Tübingen, Germany.

2.2. Experimental Protocol

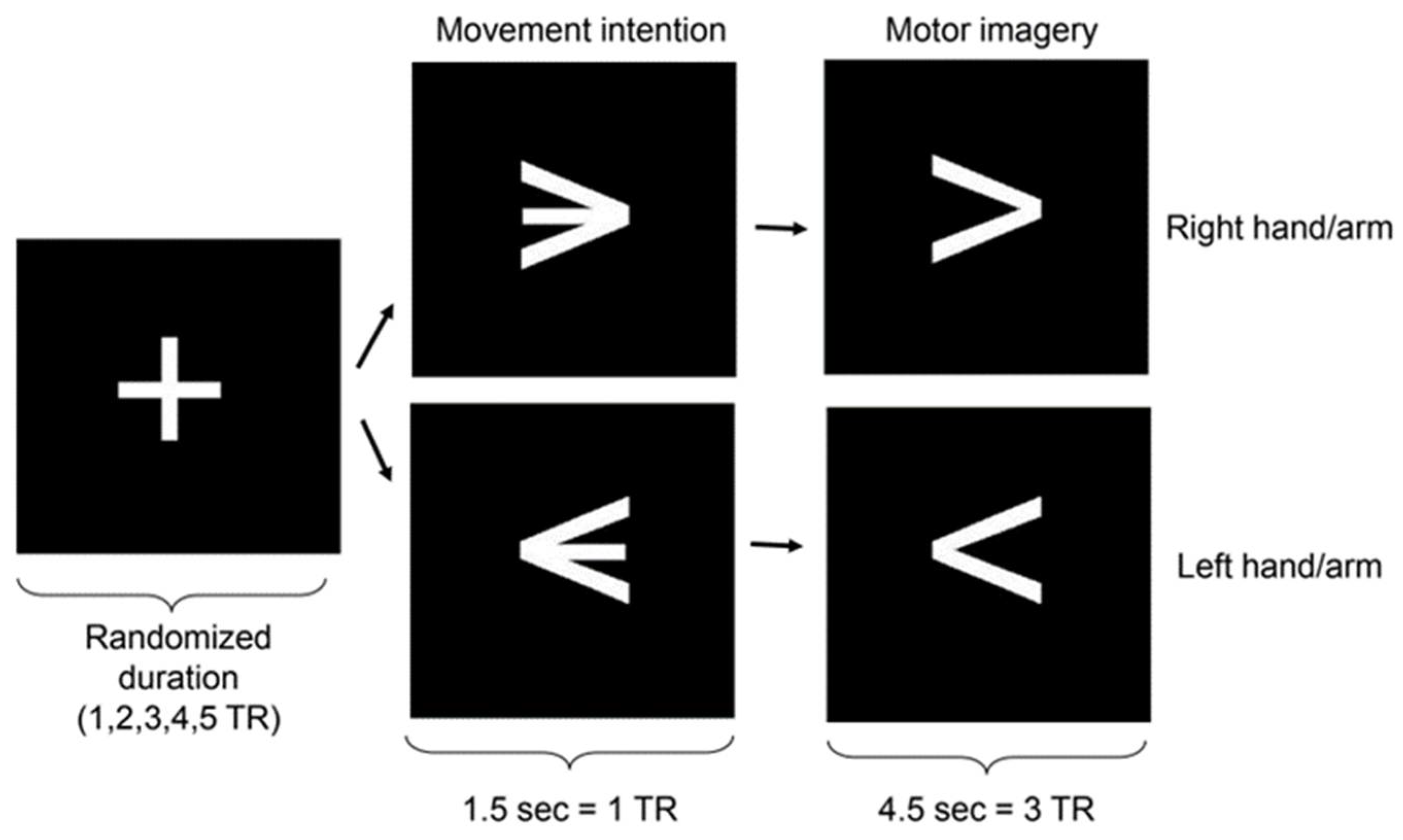

During the fMRI scanning sessions, the experimental protocol was presented visually to the participants via a mirror attached to the head coil, using the Presentation software (Neurobehavioral Systems, Inc., CA). The protocol consisted of an event-related design of successive runs composed of fixation, motor intention, and motor imagery blocks. Fixation block durations were pseudo-randomized to integer multiples (between 1 and 5) of the MRI repetition time (TR = 1.5 s). The motor intention blocks had a duration of 1 TR, whereas the imagery blocks had a duration of 3 TRs (Figure 1). During the motor intention blocks, participants were presented with a left-pointing or right-pointing arrow at the center of the screen, indicating the direction of the upcoming motor imagery block (left or right hand). Participants were explicitly instructed not to initiate the imagery until they received further cues. During the subsequent imagery blocks, participants were instructed to perform kinesthetic motor imagery (i.e., imagining the sensation of performing a hand movement). The imagined movement involved a sequence of three sub-movements: first, reaching for an imagined tool placed approximately 10 cm in front of the hand; next, grabbing it; and finally, flexing the arm to lift the imagined object towards the ipsilateral shoulder. This sequence of movements was used because complex imagined movements produce stronger brain activations [10]. Participants mentally conducted these movements of the right or left hand according to the instructions presented. Each participant underwent four scanning sessions, with each session following an identical paradigm. One session consisted of 20 runs of fixation, motor intention, and motor imagery blocks for each hand, resulting in 40 trials in total. Left-hand and right-hand trials were pseudo-randomized.
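The block timing described above can be sketched in a few lines of Python. This is only an illustration of the stated design (1 to 5 TRs of fixation, 1 TR of intention, 3 TRs of imagery, 20 trials per hand); the function name `build_session`, the fixed seed, and the use of Python's `random` module are assumptions and do not reflect how the Presentation script was actually written.

```python
import random

TR_S = 1.5  # repetition time in seconds, as stated in the protocol


def build_session(n_per_hand=20, seed=0):
    """Return a list of (block, hand, n_TRs) tuples for one session.

    Hypothetical helper: the randomization scheme is an assumption.
    """
    rng = random.Random(seed)
    hands = ["left"] * n_per_hand + ["right"] * n_per_hand
    rng.shuffle(hands)  # pseudo-randomize the order of left/right trials
    schedule = []
    for hand in hands:
        schedule.append(("fixation", None, rng.randint(1, 5)))  # 1 to 5 TRs
        schedule.append(("intention", hand, 1))                 # 1 TR
        schedule.append(("imagery", hand, 3))                   # 3 TRs
    return schedule


session = build_session()
total_trs = sum(n for _, _, n in session)
print(f"{len(session) // 3} trials, {total_trs} TRs, {total_trs * TR_S:.1f} s")
```

Because the fixation duration varies per trial, the total session length depends on the random draw; only the number of trials and the intention and imagery durations are fixed by the design.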

Before the task, participants received detailed information about the protocol. Once placed in the scanner, participants completed several practice runs in the same position that was to be used during the experiment to familiarize them with the task.

2.3. fMRI Data Acquisition and Preprocessing

Experiments were conducted using a 3-Tesla MR Trio system (Siemens, Erlangen, Germany) with a standard 12-channel head coil. Functional images had 16 slices (voxel size = 3.3 mm × 3.3 mm × 5.0 mm, slice gap = 1 mm). Slices were AC/PC aligned in axial orientation. A standard echo-planar imaging (EPI) sequence was used (TR = 1.5 s, matrix size = 64 × 64, effective echo time TE = 30 ms, flip angle α = 70°, bandwidth = 1.954 kHz/pixel). For superimposing functional maps on brain anatomy, a high-resolution T1-weighted structural scan of the whole brain was acquired for each participant (MPRAGE, matrix size = 256 × 256, 160 partitions, 1 mm³ isotropic voxels, TR = 2300 ms, TE = 3.93 ms, α = 8°). Two foam cushions immobilized the participant’s head.

Preprocessing of the fMRI images was performed with SPM12 (Wellcome Department of Imaging Neuroscience, London, UK), and classification was performed using MATLAB (The Mathworks, Natick, MA) scripts. Preprocessing comprised realignment, co-registration, normalization to Montreal Neurological Institute space, smoothing (Gaussian kernel of 8 mm full width at half maximum), and whole-brain masking. To account for differences in the variance of the BOLD signals between participants, z normalization was applied across all the time series, separately for the data of each participant.
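As a rough illustration of the z normalization step, the sketch below standardizes each voxel's time series to zero mean and unit variance. Normalizing voxel by voxel is an assumption (the text only states that the normalization was applied across the time series for each participant), and the function name `zscore_timeseries` is hypothetical.

```python
from statistics import mean, pstdev


def zscore_timeseries(voxel_by_time):
    """Z-normalize each voxel's time series (rows = voxels, columns = scans).

    Assumption: normalization is done per voxel; constant voxels map to zeros.
    """
    normalized = []
    for series in voxel_by_time:
        mu = mean(series)
        sd = pstdev(series)  # population standard deviation over scans
        if sd == 0:
            normalized.append([0.0] * len(series))
        else:
            normalized.append([(v - mu) / sd for v in series])
    return normalized


# Toy data: two voxels, four scans each.
toy = [[100.0, 102.0, 98.0, 101.0], [50.0, 50.0, 50.0, 50.0]]
z = zscore_timeseries(toy)
```

The resulting z-values are on a common scale across participants, which is what allows them to be used directly as classifier features.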

2.4. Support Vector Classification

To decode brain activations related to motor intentions, a multivariate analysis was performed using a machine learning algorithm called support vector machine (SVM) [11,12], a pattern recognition technique that has shown high performance in comparison to other existing methods of pattern classification of fMRI signals [13,14,15,16,17].

For SVM analysis, pre-processed images were obtained with SPM5, and classification was performed using an in-house MATLAB (The Mathworks, Natick, MA) toolbox [18]. For SVM classification, the previously computed z-values were used as features.

First, we examined whether it was possible to distinguish between successive conditions (fixation, intention, and imagery) by classifying the spatial patterns of brain signals extracted from brain regions of interest (ROIs) known to be involved in motor planning and execution, namely the posterior parietal cortex (PPC), supplementary motor area (SMA), premotor cortex (PMC), and primary motor cortex (M1). To explore the specificity of these regions coding for motor intention, the dorsolateral prefrontal cortex (DLPFC), a large brain region not usually considered to be involved in motor tasks, was included in the analysis. The posterior cingulate cortex [19] and fronto-polar cortex [20] were also included because of their involvement in the preparation for overt motor execution. The somatosensory area was included based on findings from neural recordings in monkeys, which demonstrated that neural activity in the postcentral cortex precedes active limb movement [21].

To evaluate the decoding accuracy from data for these brain areas, brain masks were created with the WFU PickAtlas Toolbox by using Brodmann areas (BA) as follows: BA 10 for the frontopolar cortex, BA 9 + 45 + 46 for the DLPFC, the mesial part of BA 6 for the SMA, the remaining part of BA 6 for the PMC, BA 4 for the M1, BA 1 + 2 + 3 for the primary somatosensory cortex, BA 5 + 7 + 39 + 40 for the PPC, and BA 31 for the posterior cingulate cortex. To separate the mask of the PMC from the mask of BA 6, the mask of the SMA (in the AAL labels of the WFU PickAtlas Toolbox) was subtracted from that of BA 6. The classification performance was evaluated through 4-fold cross-validation [22]. The pattern analysis accounted for the delay in the hemodynamic response with respect to the stimulus onset by introducing an equivalent delay of 3 s (2 TRs) in the input data set [23].
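The two bookkeeping steps in this paragraph, the 2-TR hemodynamic delay and the 4-fold cross-validation split, can be sketched as follows. Both helpers (`shift_labels`, `k_fold_indices`) are hypothetical names, and the use of contiguous folds is an assumption, since the text does not state how the folds were formed; the SVM training itself is not shown.

```python
def shift_labels(labels, delay_trs=2):
    """Pair the scan acquired at index t + delay_trs with the label shown at t.

    This compensates for the lag of the hemodynamic response (2 TRs = 3 s).
    """
    pairs = []
    for t, label in enumerate(labels):
        scan_index = t + delay_trs
        if scan_index < len(labels):  # drop labels whose delayed scan is missing
            pairs.append((scan_index, label))
    return pairs


def k_fold_indices(n_samples, k=4):
    """Yield (train, test) index lists for contiguous k-fold cross-validation."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


# One label per TR: fixation, intention, then three imagery TRs, then fixation.
labels = ["fix", "int", "img", "img", "img", "fix"]
pairs = shift_labels(labels)
folds = list(k_fold_indices(10, k=4))
```

In this toy example the intention label shown at TR index 1 is paired with the scan at index 3, so the classifier sees the BOLD pattern at the time the response to the cue is expected to peak.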

2.5. Multivariate Spatial Analysis with Effect Mapping

Based on the parameters of the trained SVM model, we analyzed the fMRI data with Effect Mapping (EM) [11]. To identify informative voxels from the SVM model, EM measures the effect of each voxel in multi-voxel space on the SVM output by considering two factors, namely, the input vector and the weight vector:

$$\hat{y} = \operatorname{sgn}\left(\mathbf{w}^{T}\mathbf{x} + b\right),$$

where $\hat{y}$ is the SVM output, $\mathbf{w}$ is the weight vector, and $\mathbf{x}$ is the input vector, which, together with the constant bias term $b$, determine the SVM output. The effect of each voxel on the classifier output is measured by computing the normalized mutual information (NMI) between the voxel and the SVM output. Mutual information (MI) is defined as the amount of information that one random variable contains about another random variable [24]. That is, when two random variables $X$ and $Y$ occur with a joint probability mass function $p(x, y)$ and marginal probability functions $p(x)$ and $p(y)$, the entropies of the two random variables and the joint entropy are given respectively by:

$$H(X) = -\sum_{x} p(x)\log p(x), \quad H(Y) = -\sum_{y} p(y)\log p(y), \quad H(X, Y) = -\sum_{x}\sum_{y} p(x, y)\log p(x, y). \tag{1}$$

MI, $I(X; Y)$, is the relative entropy between the joint distribution and the product distribution, i.e.,

$$I(X; Y) = \sum_{x}\sum_{y} p(x, y)\log\frac{p(x, y)}{p(x)\,p(y)}. \tag{2}$$

To correct for the variance of mutual information based on the entropies $H(X)$ and $H(Y)$, normalized mutual information is defined as [25]:

$$\tilde{I}(X; Y) = \frac{2\,I(X; Y)}{H(X) + H(Y)}. \tag{3}$$

Hence, the effect value (EV) $E_k$ of a voxel $k$ is defined as:

$$E_k = w_k\,\tilde{I}(x_k; y), \tag{4}$$

where $y = \mathbf{w}^{T}\mathbf{x} + b$ is the SVM output after excluding the sign function, and $w_k$ and $x_k$ are the SVM weight value and activation in voxel $k$, respectively.

After normalizing the absolute value of $E_k$ from Eq. (4), we obtain the relation:

$$lE_k = \operatorname{sgn}(E_k)\,\log\left(1 + \frac{|E_k|}{\sigma_{|E|}}\right), \tag{5}$$

where sgn(.) is a sign function, and $\sigma_{|E|}$ is the standard deviation of all $|E_k|$. In the present study, Eq. (5) ($lE_k$) was used to compute the EV at each voxel so that E(effect)-maps from different participants and different folds of cross-validation would be comparable.
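To make the effect-value computation more concrete, the following sketch computes an EV per voxel for a toy linear classifier. It is a minimal illustration rather than the toolbox implementation: the equal-width histogram binning, the NMI form 2·I(X;Y)/(H(X)+H(Y)), the log-scaling form sgn(E)·log(1 + |E|/std(|E|)), and all function names are assumptions made for this example.

```python
import math
from collections import Counter


def entropy(symbols):
    """Shannon entropy (in nats) of a sequence of discrete symbols."""
    n = len(symbols)
    return -sum((c / n) * math.log(c / n) for c in Counter(symbols).values())


def discretize(values, n_bins=4):
    """Map continuous values to equal-width bin indices (assumed binning)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / n_bins
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def normalized_mi(x, y, n_bins=4):
    """Assumed NMI form: 2 * I(X;Y) / (H(X) + H(Y))."""
    xb, yb = discretize(x, n_bins), discretize(y, n_bins)
    hx, hy = entropy(xb), entropy(yb)
    hxy = entropy(list(zip(xb, yb)))  # joint entropy H(X, Y)
    if hx + hy == 0:
        return 0.0
    return 2 * (hx + hy - hxy) / (hx + hy)  # I = H(X) + H(Y) - H(X, Y)


def effect_values(samples, weights, bias=0.0):
    """E_k = w_k * NMI(x_k; y), with y = w.x + b (sign function excluded)."""
    y = [sum(w * v for w, v in zip(weights, row)) + bias for row in samples]
    columns = list(zip(*samples))  # one column of activations per voxel
    return [w * normalized_mi(list(col), y) for w, col in zip(weights, columns)]


def log_normalize(ev):
    """Assumed scaling: sgn(E_k) * log(1 + |E_k| / std(|E|))."""
    mags = [abs(e) for e in ev]
    mu = sum(mags) / len(mags)
    sd = math.sqrt(sum((m - mu) ** 2 for m in mags) / len(mags))
    if sd == 0:
        return [0.0] * len(ev)  # degenerate case, handled arbitrarily here
    return [math.copysign(math.log(1 + m / sd), e) for m, e in zip(mags, ev)]


# Toy data: 4 scans x 2 voxels, with a linear "SVM" weight per voxel.
samples = [[0.0, 1.0], [1.0, 0.0], [2.0, 1.0], [3.0, 0.0]]
weights = [1.0, -0.1]
ev = effect_values(samples, weights)
lev = log_normalize(ev)
```

In this toy case the first voxel, which drives the classifier output, receives a large positive EV, whereas the second voxel receives a small negative EV whose sign follows its weight. This is the behavior the E-maps rely on: the magnitude reflects how informative a voxel is, and the sign reflects the class it favors.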

With different contrasts, i.e., intention vs. fixation, and left vs. right over time points (left and right fixations, left and right intentions, and left and right imaginations), E-maps were obtained separately for each participant from the data of all the brain areas taken together. The E-maps from a contrast for the 4-fold cross-validation (CV) of all participants (i.e., 40 E-maps; 4 E-maps from the 4-fold CV for each of the 10 participants) were averaged into one E-map for group analysis, and the averaged map was then smoothed spatially with a 5 mm full width at half maximum (FWHM) kernel to minimize distortion of the map and for ease of interpretation. In the interpretation of the E-map, positive and negative EVs were related to the design labels, 1 and −1, of the SVM classifier, respectively. That is, if the design labels of two conditions are exchanged, the signs of the EVs are also reversed.

2.6. Eye Tracking

To investigate whether classification accuracies obtained from the brain ROIs could have been influenced by eye movements, we monitored pupil positions inside the scanner in 9 participants, simultaneously with collection of the fMRI data, using the EYE-TRAC® 6 (Applied Science Laboratories, MA), a video-based infrared eye tracker with long-range optics that is specifically designed for fMRI and has a sampling rate of 60 Hz. After removal of blinks and outliers, we performed a discriminant analysis to find out whether the data could be classified according to the lateralization (left or right) of each condition (intention and imagery). Discriminant analysis [26] is a statistical method that can be used to develop a predictive model of group membership based on observed features of the data. Starting from a sample of cases with a known group membership, a discriminant function is generated based on a linear combination of the variables. This function, which provides the best discrimination between the groups, can then be applied to new cases. Three different types of discriminant analysis can be found in the literature: direct, hierarchical, and stepwise. These three methods differ in how the variables are entered into the function. In the direct method, which we used, all variables are entered together; in the hierarchical method, the researcher determines the order; and in stepwise discriminant analysis (SWDA), variables are entered step by step and are statistically evaluated to determine which one contributes the most to the discrimination. In our analysis, the lateralization of the conditions was used to determine the discriminant function. For the 9 participants (4 sessions per participant), we computed a 10-fold cross-validated discriminant analysis for each TR. The results of this analysis provided us with means and standard errors.
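A direct (all variables entered together) two-class linear discriminant on pupil positions can be sketched as below. This is a simplified illustration under several assumptions: the features are taken to be the horizontal and vertical pupil coordinates, the classes are assumed to have equal priors (midpoint threshold), and the function names are hypothetical. The 10-fold cross-validation is omitted for brevity.

```python
def fit_lda_2d(points, labels):
    """Fit a two-class linear discriminant on 2-D features (labels are 0 or 1)."""
    groups = {0: [], 1: []}
    for p, lab in zip(points, labels):
        groups[lab].append(p)
    means = {
        c: (sum(p[0] for p in g) / len(g), sum(p[1] for p in g) / len(g))
        for c, g in groups.items()
    }
    # Pooled within-class scatter matrix [[sxx, sxy], [sxy, syy]].
    sxx = sxy = syy = 0.0
    for c, g in groups.items():
        mx, my = means[c]
        for x, y in g:
            sxx += (x - mx) ** 2
            sxy += (x - mx) * (y - my)
            syy += (y - my) ** 2
    det = sxx * syy - sxy * sxy
    if det == 0:
        raise ValueError("singular scatter matrix")
    dx = means[1][0] - means[0][0]
    dy = means[1][1] - means[0][1]
    # w = S^-1 (m1 - m0), using the closed-form inverse of a 2x2 matrix.
    w = ((syy * dx - sxy * dy) / det, (-sxy * dx + sxx * dy) / det)
    # Threshold at the projection of the midpoint between the class means.
    mid = ((means[0][0] + means[1][0]) / 2, (means[0][1] + means[1][1]) / 2)
    threshold = w[0] * mid[0] + w[1] * mid[1]
    return w, threshold


def predict_lda(model, point):
    """Return 1 if the point projects above the threshold, otherwise 0."""
    w, threshold = model
    return 1 if w[0] * point[0] + w[1] * point[1] > threshold else 0


# Toy pupil positions: class 0 = "left" trials, class 1 = "right" trials.
points = [(-1.0, 0.1), (-1.2, -0.1), (-0.8, 0.0), (1.0, 0.1), (1.2, -0.1), (0.8, 0.0)]
labels = [0, 0, 0, 1, 1, 1]
model = fit_lda_2d(points, labels)
```

With well-separated toy clusters such as these, the discriminant classifies held-out points correctly. Accuracies near chance, as reported for the real pupil data, would indicate that gaze position carries no usable information about the cued side.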

2.7. Electromyography

To explore whether classification accuracies obtained from brain areas could have been influenced by muscular activity, participants were instructed to remain still and avoid any movement during the experiment. Furthermore, 6 participants performed the same experimental task again while EMG data were recorded simultaneously. The EMG data were not obtained in the same sessions in which brain data were analyzed, in order to avoid distracting participants from the task through the presence of the EMG recording, to avoid brain activations due to skin stimulation by the electrodes and wires, and to avoid possible artifacts in the brain signals due to the EMG. EMG data were acquired using 6 pairs of bipolar, sintered Ag/AgCl electrodes, which were placed based on physical landmarks on antagonistic muscle pairs, with 3 pairs on each arm. One pair was placed close to the external epicondyle over the extensor digitorum (extension), the second pair over the flexor carpi radialis (flexion), and the last pair over the external head of the biceps (flexion). The electrode wires were twisted per pair to minimize the differential effect of the magnetic field on the EMG leads. A ground electrode was placed on the ankle joint. Current-limiting resistors (5 kΩ) were attached to the EMG electrodes to prevent possible warming of the electrodes. All electrodes were connected to an electrode input box, which was in turn connected to the amplifier. The digital signals were transmitted via an optical cable and stored on a personal computer outside the MR room. Data were recorded using an MR-compatible bipolar 16-channel amplifier (BrainAmp) from Brain Products GmbH, Munich, Germany. The sampling rate of data acquisition was set at 5000 Hz with a low-pass filter of 250 Hz and a signal resolution of 0.5 µV. The acquired EMG signal was synchronized with the scanner clock using the SyncBox device from Brain Products GmbH, Munich, Germany. The SyncBox scanner interface serves as the direct receiver for pulses from the MR gradient clock (10,000 kHz). A model of the MR gradient artifact was generated by averaging EMG signals from five repetition times (TR, 1.5 s) of the echo-planar imaging (EPI) pulse sequence. The MR artifact template obtained was then subtracted from the original data to correct for the gradient artifacts. The artifact-corrected and filtered data were subsequently used for pattern classification in the following manner. EMG signals for each hand were separated into datasets for distinct motor class labels as follows: left and right intention trials and left and right imagery trials. Here, each trial corresponds to EMG data acquired during one complete TR of the EPI pulse sequence. The classes left and right intention corresponded to 1 TR, and the classes left and right imagery to 3 TRs, respectively, of the EMG data.
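The template-subtraction step for the gradient artifact can be illustrated with the sketch below. It assumes that the template is the sample-by-sample average of the first five TR-length epochs and that this single template is subtracted from every epoch; which five TRs were averaged is not stated in the text, and the function name is hypothetical. At the stated 5000 Hz sampling rate, one TR of 1.5 s corresponds to 7500 samples.

```python
def subtract_gradient_template(signal, samples_per_tr, n_template_trs=5):
    """Subtract the mean TR-locked waveform from every TR-length epoch.

    Assumption: the template is built from the first n_template_trs epochs.
    """
    n_epochs = len(signal) // samples_per_tr
    if n_epochs < n_template_trs:
        raise ValueError("signal is shorter than the template window")
    # Average the template epochs sample by sample.
    template = [
        sum(signal[e * samples_per_tr + i] for e in range(n_template_trs))
        / n_template_trs
        for i in range(samples_per_tr)
    ]
    cleaned = list(signal)
    for e in range(n_epochs):
        for i in range(samples_per_tr):
            cleaned[e * samples_per_tr + i] -= template[i]
    return cleaned


# Toy example: a repeating 4-sample "artifact" over 6 TRs, plus one EMG burst
# in the last TR that the template should leave untouched.
artifact = [0.0, 5.0, -5.0, 2.0]
signal = artifact * 6
signal[21] += 1.0  # simulated muscle activity in the sixth epoch
cleaned = subtract_gradient_template(signal, samples_per_tr=4)
```

Because the gradient artifact is locked to the scanner clock, it repeats identically in every TR and cancels out after subtraction, whereas activity that is not time-locked to the TR (such as the simulated burst) survives.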

The complexity of the EMG waveform for each TR (1.5 s) was determined as a time-domain feature, the waveform length (WL), from a moving window of 240 ms and a window overlap of 24 ms. The waveform length was computed from the equation:

$$WL = \sum_{k=2}^{L} \left| X_k - X_{k-1} \right|,$$

where $X$ is the rectified EMG data of the window from data point k = 1 to k = L, with L being the length of the window. The feature, WL, of the signal is a combined indicator of signal amplitude and frequency. The extracted feature was then transformed to a principal component space by performing a discrete Karhunen-Loève transform. Non-linear decoding filters were designed using multilayer, feed-forward artificial neural networks (ANNs) because of their use in nonlinear regression and classification. By using a tan-sigmoid transfer function for the hidden layer neurons and a log-sigmoid transfer function for the output layer, the network assigns a probability to each movement, P{Mi}, where i = 1, 2 corresponds to the 2 hand movement types (right and left). The movement type with the highest probability is chosen as the final output of the classifier. The neural network was trained using MATLAB’s scaled conjugate gradient algorithm in combination with validation-based early stopping to improve generalization. The results were validated using a 10-fold cross-validation technique.
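The windowed feature extraction can be sketched as follows. The sketch assumes the standard waveform-length definition (the sum of absolute differences between successive rectified samples) and interprets the 24 ms overlap as the amount by which consecutive 240 ms windows overlap; both the interpretation and the function names are assumptions. The subsequent Karhunen-Loève transform and neural network are not shown.

```python
def waveform_length(window):
    """WL = sum over k of |X[k] - X[k-1]|, computed on the rectified window."""
    rectified = [abs(v) for v in window]
    return sum(abs(b - a) for a, b in zip(rectified, rectified[1:]))


def sliding_wl(signal, fs_hz=5000, window_ms=240, overlap_ms=24):
    """Compute WL in windows of window_ms that overlap by overlap_ms."""
    window = int(fs_hz * window_ms / 1000)        # 1200 samples at 5000 Hz
    step = window - int(fs_hz * overlap_ms / 1000)  # 1080 samples at 5000 Hz
    return [
        waveform_length(signal[s:s + window])
        for s in range(0, len(signal) - window + 1, step)
    ]


# One TR (1.5 s) of silent EMG at 5000 Hz yields one WL value per window.
features = sliding_wl([0.0] * 7500)
```

Under these assumptions, one TR of data gives six complete windows, so each TR is summarized by a short feature vector rather than by 7500 raw samples.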

3. Results

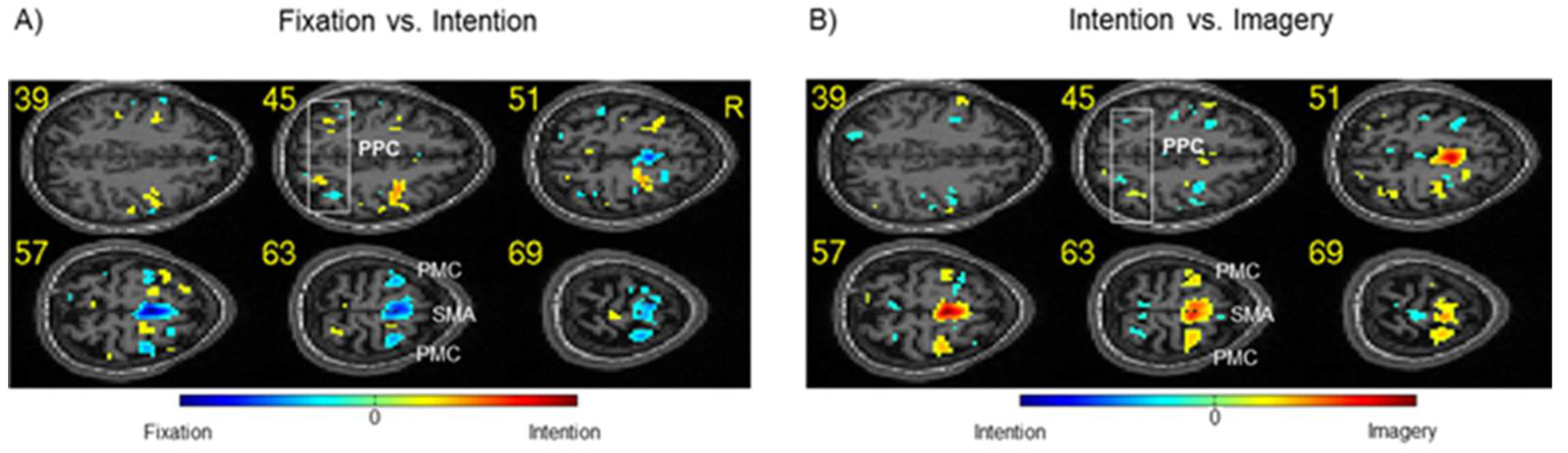

First, we examined whether it was possible to distinguish between successive conditions (fixation, intention, and imagery) by classifying the spatial patterns of brain signals. The results of multivariate pattern analysis using SVM showed that the classification accuracies for all these areas were above chance (>50%) for classification between blocks of fixation and motor intention and between motor intention and motor imagery. Furthermore, the PMC and PPC displayed the highest classification accuracies (>80%) when compared with other ROIs (see Table 1). Based on the parameters of the trained SVM model, we further analyzed the fMRI data with the EM method of multivariate functional analysis [11]. The E-maps (Figure 2) show a clear distinction between the activation patterns for the different conditions and relative differences between the ROIs in intention formation and imagery, thus confirming the presence of discriminative information in the PPC, PMC, and SMA.

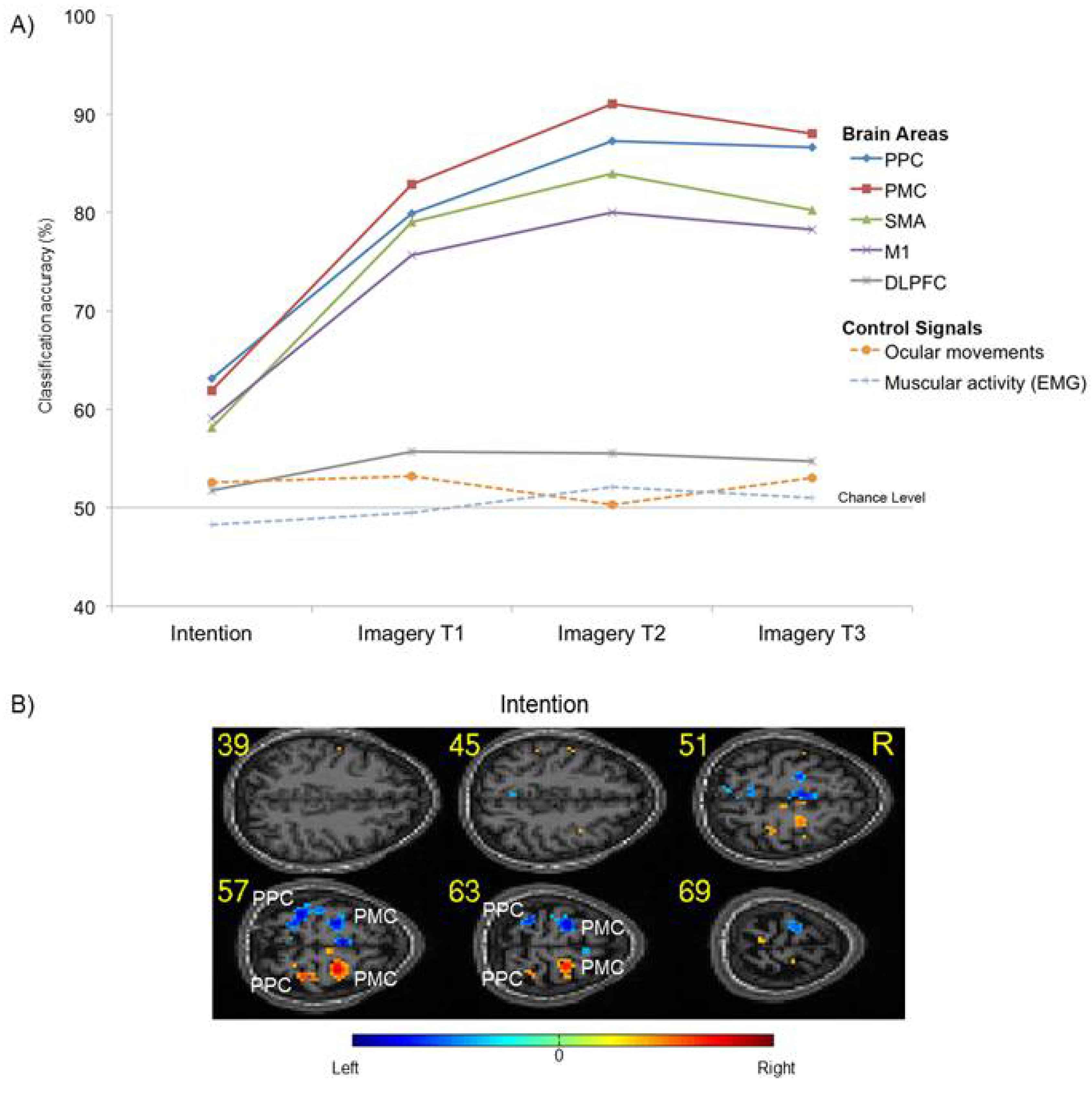

In a second step, we further investigated the specificity of the information in the ROIs by testing whether the pattern classifier could decode the laterality (left or right) of the task. In the motor intention block, the classifier could robustly distinguish between left and right tasks in the PPC (accuracy = 63.1%) and in the PMC (accuracy = 61.9%), showing that information about movement intention direction is available in these areas (Table 2). During the imagery blocks, classification accuracies of >80% distinguished left imagery from right imagery in the PMC, PPC, and SMA. Again, particularly high classification results were obtained for the PPC and PMC (mean = 86%) (Table 2 and Figure 3A). The E-maps, displaying the most informative voxels for the classification between left and right intention, confirm the important roles played by the PPC and PMC in intention formation (Figure 3B).

To confirm that these results were not influenced by physiological factors such as eye movements and hand muscle activity, we acquired eye tracker and EMG signals within the fMRI sessions and performed pattern classification of these signals by using linear discriminant and neural network analyses. Results of the classification of pupil positions for left vs. right motor intention, as well as for motor imagery, were around the chance level. Similar results were obtained by classifying the EMG signals of the left and right hands (Table 2 and Figure 3A). As intentions for movement are shown to be anatomically segregated in the PPC, with regions being specialized for planning saccades, in addition to reaches and grasps, these results show that the fMRI classification was specifically derived from movement imagery and was not related to systematic eye movements.

4. Discussion

Our results show that intentions for different types of motor imagery can be predicted from BOLD signals in the parieto-frontal regions (particularly the PPC and PMC) of the human brain with a support vector classifier. Several lines of evidence show that the PPC plays a crucial role in movement planning [27] and that it contains anatomically segregated regions (intentional maps) that code for the planning of different movements [28]. Furthermore, electrical stimulation of the inferior parietal cortex in human patients with brain tumors caused a strong intention and desire to move, whereas more intense stimulation of this area led to illusory movement awareness, thus lending credence to the hypothesis that both motor intention and motor awareness emerge from activations of parietal regions [29]. The PMC, in turn, has anatomical connections with the frontal, parietal, and motor cortical regions [30]. This establishes the PMC as a central hub in the processes of motor planning and execution, effectively transmitting information regarding the advanced cognitive functions associated with movement [31]. The high classification accuracies obtained in the present study during the intention block for the PPC and PMC suggest that these regions play analogous roles in intention formation for both overt and covert movements.

The specificity of these results could be seen in light of the comparatively lower classification accuracies obtained from the other brain areas included in the analysis. This point is further reinforced by considering the almost chance classification accuracy obtained from the DLPFC for both intention and imagery, despite this being a relatively large region in the brain.

Interestingly, for left vs. right classification during both movement intention and imagery, a general tendency for higher prediction accuracies for the PPC and PMC, as compared to that for M1, was found across every time point. These findings are concordant with the idea that premotor and parietal regions play a predominant role in different aspects of action planning [32,33,34] and that the primary motor cortex is inconsistently activated during motor imagery, usually at a lower intensity than during motor execution [35].

It might be argued that the experimental paradigm does not assure that participants did not perform mental imagery during the intention block. However, this interpretation is not tenable in view of the high classification results for differentiating between motor intention and motor imagery and the different brain areas involved in the two conditions (see Table 1 and Figure 2). It might also be argued that the high classification accuracies obtained for intention were merely the result of a nonspecific expectancy for the forthcoming go signal or imagery block. However, in this case, it would have been impossible for the classifier to distinguish between left and right motor intentions (see Table 2 and Figure 3).

We propose that the concept of intention that was studied in this experiment belongs to the class of “immediate intentions,” which are accompanied by conscious experiences of impending actions [36]. Immediate intentions can be distinguished from prospective intentions based on how early the episodic details of an action are planned. In the former case, the time lag between an intention and its action can be very short and may not even be consciously separable. In the latter case, the time difference between the formation of an intention and its actual execution may be quite prolonged, as in the planning of a holiday today and its actual execution several days later. It has been proposed that immediate intentions have a feature that makes a clear prediction about the oncoming action. Termed the content argument, this states that intentions for two different actions (e.g., left vs. right hand) have two different contents in the brain, capable of explaining or predicting which body part will be used for movement. In our study, we have shown evidence for the content hypothesis by predicting with high accuracy left movement imagery (of reaching and grasping), as distinct from right movement imagery, by using fMRI signals before the onset of imagery.

Our results suggest future applications in which high-level preparatory activity in the parieto-frontal regions can be applied to control neural prosthetics in a brain–computer interface (BCI). We assume that repeatable formations of motor intentions may persist in the absence of overt movement in those patients who can maintain or be trained to maintain mental simulations of movement, a concept that has important implications for rehabilitation and restoration of movement, e.g., after a stroke. If a paradigm similar to that used here is implemented, a portable BCI based on EEG [37] or near-infrared spectroscopy (NIRS) [38] could be built to operate a neuro-prosthetic device as an “intention brain–machine interface.” There is an advantage in using higher, cognitive areas of the sensorimotor system, in particular the PPC, for the following reasons: 1) although the motor regions may undergo degradation during paralysis, the PPC may suffer less degradation because of its close link to the visual system, which is still intact; 2) as movement restoration is accompanied by neural plasticity, it is hypothesized that the PPC plays a larger role in this recovery because of its involvement in sensorimotor re-registration of behavior; and 3) for accurate control of movement, closed-loop feedback is necessary, and this is largely lost in the motor areas as a result of lesions but often remains intact in the PPC because the re-afference to this region is also visual.

Author Contributions

Conceptualization, S.R., S.L., J.D., N.B., E.F., and R.S.; methodology, S.L., A.R., E.P., E.S., and E.G.; software, S.L. and R.S.; formal analysis, S.L., A.R., E.P., E.S., and E.G.; investigation, S.R., S.L., A.R., E.P., E.S., and E.G.; resources, N.B. and R.S.; data curation, S.L.; writing (original draft preparation), S.R., S.L., J.D., E.F., N.B., and R.S.; writing (review and editing), S.R., S.L., J.D., N.B., and R.S.; supervision, R.S. and N.B. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the "Agencia Nacional de Investigación y Desarrollo" in Chile (ANID), through "Fondo Nacional de Desarrollo Científico y Tecnológico" (Fondecyt Regular, Project No. 1211510), the National Agency for Research and Development Millennium Science Initiative, and the Millennium Institute for Research on Depression and Personality (MIDAP ICS13_005).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the local ethics committee of the Faculty of Medicine of the University of Tübingen, Germany.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets presented in this article are not readily available because they are part of an ongoing study or due to technical/time limitations. Requests to access the datasets should be directed to: ranganatha.sitaram@stjude.org.

Acknowledgments

The authors thank Keith A. Laycock, PhD, ELS, for scientific editing of the manuscript.

Conflicts of Interest

The authors have no conflicts of interest to declare.

References

- Proske, U.; Gandevia, S.C. The proprioceptive senses: their roles in signaling body shape, body position and movement, and muscle force. Physiol. Rev. 2012, 92, 1651–1697. [Google Scholar] [CrossRef] [PubMed]

- Krakauer, J.W.; Ghazanfar, A.A.; Gomez-Marin, A.; MacIver, M.A.; Poeppel, D. Neuroscience Needs Behavior: Correcting a Reductionist Bias. Neuron 2017, 93(3), 480–490. [Google Scholar] [CrossRef] [PubMed]

- Desmurget, M.; Reilly, K.T.; Richard, N.; Szathmari, A.; Mottolese, C.; Sirigu, A. Movement intention after parietal cortex stimulation in humans. Science 2009, 324(5928), 811–813. [Google Scholar] [CrossRef] [PubMed]

- Gallivan, J.P.; Chapman, C.S.; Gale, D.J.; Flanagan, J.R.; Culham, J.C. Selective modulation of early visual cortical activity by movement intention. Cereb. Cortex 2019, 29(11), 4662–4678. [Google Scholar] [CrossRef] [PubMed]

- Gallivan, J.P.; McLean, D.A.; Valyear, K.F.; Pettypiece, C.E.; Culham, J.C. Decoding action intentions from preparatory brain activity in human parieto-frontal networks. J. Neurosci. 2011, 31(26), 9599–9610. [Google Scholar] [CrossRef] [PubMed]

- Libet, B. Unconscious cerebral initiative and the role of conscious will in voluntary action. Behav. Brain Sci. 1985, 8(4), 529–566. [Google Scholar] [CrossRef]

- Bulea, T.C.; Prasad, S.; Kilicarslan, A.; Contreras-Vidal, J.L. Sitting and standing intention can be decoded from scalp EEG recorded prior to movement execution. Front. Neurosci. 2014, 8, 376. [Google Scholar] [CrossRef] [PubMed]

- Birbaumer, N.; Elbert, T.; Lutzenberger, W.; Rockstroh, B.; Schward, J. EEG and slow cortical potentials in anticipation of mental tasks with different hemispheric involvement. Biol. Psychol. 1981, 13, 251–260. [Google Scholar] [CrossRef] [PubMed]

- Oldfield, R.C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 1971, 9, 97–113. [Google Scholar] [CrossRef]

- Kline, A.; Pittman, D.; Ronsky, J.; Goodyear, B. Differentiating the brain’s involvement in executed and imagined stepping using fMRI. Behav. Brain Res. 2020, 394, 112829. [Google Scholar] [CrossRef]

- Lee, S.; Halder, S.; Kübler, A.; Birbaumer, N.; Sitaram, R. Effective functional mapping of fMRI data with support-vector machines. Hum. Brain Mapp. 2010, 31, 1502–1511. [Google Scholar] [CrossRef]

- Sitaram, R.; Lee, S.; Ruiz, S.; Rana, M.; Veit, R.; Birbaumer, N. Real-time support vector classification and feedback of multiple emotional brain states. NeuroImage 2011, 56, 753–765. [Google Scholar] [CrossRef] [PubMed]

- LaConte, S.; Anderson, J.; Muley, S.; Ashe, J.; Frutiger, S.; Rehm, K.; Hansen, L.K.; Yacoub, E.; Hu, X.; Rottenberg, D.; Strother, S. The evaluation of preprocessing choices in single-subject BOLD fMRI using NPAIRS performance metrics. NeuroImage 2003, 18, 10–27. [Google Scholar] [CrossRef]

- LaConte, S.; Strother, S.; Cherkassky, V.; Anderson, J.; Hu, X. Support vector machines for temporal classification of block design fMRI data. NeuroImage 2005, 26, 317–329. [Google Scholar] [CrossRef]

- Shaw, M.E.; Strother, S.C.; Gavrilescu, M.; Podzebenko, K.; Waites, A.; Watson, J.; Anderson, J.; Jackson, G.; Egan, G. Evaluating subject specific preprocessing choices in multisubject fMRI data sets using data-driven performance metrics. NeuroImage 2003, 19, 988–1001. [Google Scholar] [CrossRef] [PubMed]

- Strother, S.; La Conte, S.; Hansen, L.K.; Anderson, J.; Zhang, J.; Pulapura, S.; Rottenberg, D. Optimizing the fMRI data-processing pipeline using prediction and reproducibility performance metrics: I. A preliminary group analysis. NeuroImage 2004, 23 (Suppl 1), S196–207. [Google Scholar]

- Martinez-Ramon, M.; Koltchinskii, V.; Heileman, G.L.; Posse, S. fMRI pattern classification using neuroanatomically constrained boosting. NeuroImage 2006, 31, 1129–1141.

- Rana, M.; Gupta, N.; Da Rocha, J.L.D.; Lee, S.; Sitaram, R. A toolbox for real-time subject-independent and subject-dependent classification of brain states from fMRI signals. Front. Neurosci. 2013, 7, 170.

- Wang, Z.; Fei, L.; Sun, Y.; Li, J.; Wang, F.; Lu, Z. The role of the precuneus and posterior cingulate cortex in the neural routes to action. Comput. Assist. Surg. 2019, 24 (Suppl 1), 113–120.

- Di Giacomo, J.; Gongora, M.; Silva, F.; Vicente, R.; Arias-Carrion, O.; Orsini, M.; Teixeira, S.; Cagy, M.; Velasques, B.; Ribeiro, P. Repetitive transcranial magnetic stimulation changes absolute theta power during cognitive/motor tasks. Neurosci. Lett. 2018, 687, 77–81.

- Lara, A.H.; Elsayed, G.F.; Zimnik, A.J.; Cunningham, J.P.; Churchland, M.M. Conservation of preparatory neural events in monkey motor cortex regardless of how movement is initiated. eLife 2018, 7, e31826.

- Hastie, T.; Tibshirani, R.; Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer: New York, 2001.

- De Martino, F.; Valente, G.; Staeren, N.; Ashburner, J.; Goebel, R.; Formisano, E. Combining multivariate voxel selection and support vector machines for mapping and classification of fMRI spatial patterns. NeuroImage 2008, 43, 44–58.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley: New York, 1991.

- Maes, F.; Collignon, A.; Vandermeulen, D.; Marchal, G.; Suetens, P. Multimodality image registration by maximization of mutual information. IEEE Trans. Med. Imaging 1997, 16, 187–198.

- McLachlan, G.J. Discriminant Analysis and Statistical Pattern Recognition; Wiley Interscience: Hoboken, 2004.

- Pilacinski, A.; Lindner, A. Distinct contributions of human posterior parietal and dorsal premotor cortex to reach trajectory planning. Sci. Rep. 2019, 9, 1962.

- Andersen, R.A.; Buneo, C.A. Intentional maps in posterior parietal cortex. Annu. Rev. Neurosci. 2002, 25, 189–220.

- Li, Y.; Wang, Y.; Cui, H. Posterior parietal cortex predicts upcoming movement in dynamic sensorimotor control. Proc. Natl. Acad. Sci. USA 2022, 119, e2118903119.

- Huang, Y.; Hullfish, J.; De Ridder, D.; Vanneste, S. Meta-analysis of functional subdivisions within human posteromedial cortex. Brain Struct. Funct. 2019, 224, 435–452.

- Hadjidimitrakis, K.; Bakola, S.; Wong, Y.T.; Hagan, M.A. Mixed spatial and movement representations in the primate posterior parietal cortex. Front. Neural Circuits 2019, 13, 15.

- Neige, C.; Rannaud Monany, D.; Lebon, F. Exploring cortico-cortical interactions during action preparation by means of dual-coil transcranial magnetic stimulation: a systematic review. Neurosci. Biobehav. Rev. 2021, 128, 678–692.

- Orban, G.A.; Sepe, A.; Bonini, L. Parietal maps of visual signals for bodily action planning. Brain Struct. Funct. 2021, 226, 2967–2988.

- Pilacinski, A.; Wallscheid, M.; Lindner, A. Human posterior parietal and dorsal premotor cortex encode the visual properties of an upcoming action. PLoS One 2018, 13, e0198051.

- Dechent, P.; Merboldt, K.-D.; Frahm, J. Is the human primary motor cortex involved in motor imagery? Cogn. Brain Res. 2004, 19, 138–144.

- Pacherie, E.; Haggard, P. What are intentions? In Conscious Will and Responsibility: A Tribute to Benjamin Libet; Sinnott-Armstrong, W., Nadel, L., Eds.; Oxford Academic: Oxford; pp. 70–84.

- Memon, S.A.; Waheed, A.; Başaklar, T.; Ider, Y.Z. Low-cost portable 4-channel wireless EEG data acquisition system for BCI applications. In Medical Technologies National Congress (TIPTEKNO), Magusa, Cyprus, 2018.

- Paulmurugan, K.; Vijayaragavan, V.; Ghosh, S.; Padmanabhan, P.; Gulyás, B. Brain–computer interfacing using functional near-infrared spectroscopy (fNIRS). Biosensors (Basel) 2021, 11, 389.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).