1. Introduction

Nonlinear equations and systems of nonlinear equations (SNLEs) are ubiquitous in simulations of physical phenomena [

1] and play a crucial role in pure and applied sciences [

2]. These types of systems are considered among the most challenging problems to solve in numerical mathematics [

3,

4], and their difficulty increases significantly as the number of equations in the system grows.

The well-known Newton’s method and its variants [

5] are among the most common iterative numerical techniques used to solve SNLEs. The effectiveness of these solvers is strongly dependent on the quality of the initial approximations used [

6,

7], and convergence may not be guaranteed. Additionally, they can be very computationally demanding, especially for large and complex systems. Moreover, there is no sufficiently efficient and robust general numerical method for solving SNLEs [

8]. Hence, it is crucial to explore alternative approaches to tackle the challenge of obtaining accurate and efficient approximations to the solutions of SNLEs.

In recent years, there has been growing interest in population-based metaheuristic algorithms for their ability to tackle complex and challenging numerical problems, such as SNLEs. These algorithms belong to a broader category of computational intelligence techniques, offering a versatile and robust approach to optimization [

9]. However, although they are capable of addressing large-scale and high-dimensional problems, providing near-optimal solutions within a reasonable timeframe, it is important to note that they do not guarantee optimal (i.e., exact) solutions.

Population-based metaheuristic algorithms, typically drawing inspiration from natural evolution and social behavior, encompass various techniques such as particle swarm optimization, genetic algorithms, evolutionary strategies, evolutionary programming, genetic programming, artificial immune systems, and differential evolution. These algorithms utilize principles observed in nature to guide their search for optimal solutions. Numerous modifications have been proposed to make such algorithms more efficient, and their inherent characteristics make them natural candidates for parallelization. However, their effective application requires a careful selection of the appropriate strategy and the tuning of specific parameters, which is neither simple nor obvious.

Metaphor-less optimization algorithms, in contrast, operate independently of metaphors or analogies and are considered parameter-less since they do not require any parameter tuning. Originating from the pioneering work of R. V. Rao [

10], these methods have gained increasing importance due to their relative simplicity and efficiency in solving problems across various domains with varying levels of complexity. These algorithms are often more abstract and rely on mathematical principles or specific optimization strategies rather than mimicking real-world phenomena.

Given the complexities associated with solving large-scale SNLEs, high-performance computing (HPC) techniques, such as the use of heterogeneous architectures, become crucial to ensuring access to adequate computational resources and achieving accurate and timely solutions. The deployment of parallel algorithms on Graphics Processing Units (GPUs) used as general-purpose computing devices has become a fundamental aspect of computational efficiency. These hardware units are equipped with a multitude of cores, allowing for the execution of numerous parallel operations simultaneously, thus surpassing the capabilities of traditional Central Processing Units (CPUs).

The integration of parallel algorithms into GPUs represents a significant advancement in HPC, providing an effective way to achieve scalability and efficiency at a relatively affordable price and effectively accelerating computations across diverse domains, including scientific simulations, artificial intelligence, financial modeling, and data analytics. The GPU-based parallel acceleration of metaheuristics allows for the efficient resolution of large, complex optimization problems and facilitates the exploration of vast solution spaces that would otherwise be impractical with traditional sequential computation due to the computational costs.

In this paper, a systematic review and detailed description of metaphor-less algorithms is made, including the Jaya optimization algorithm [

10], the Enhanced Jaya (EJAYA) algorithm [

11], a variant of Jaya that has recently been shown by the authors to be quite effective in solving nonlinear equation systems [

12], the three Rao algorithms [

13], the best-worst-play (BWP) algorithm [

14], and the very recent max-min-greedy-interaction (MaGI) algorithm [

15]. This paper also briefly discusses a few previous parallelizations of the Jaya optimization algorithm, as well as very recent and efficient GPU-based massively parallel implementations of the Jaya, Enhanced Jaya (EJAYA), Rao, and BWP algorithms developed by the authors. In addition, a novel GPU-accelerated version of the MaGI algorithm is proposed. The GPU-accelerated versions of the metaphor-less algorithms developed by the authors and tested on a suite of GPU devices using a set of difficult, large-scale SNLEs.

SNLEs must be reformulated into optimization problems for solving them using metaheuristics. Considering a SNLE represented generically in vector form as , with components , this system of n nonlinear equations with n unknowns can be transformed into an n-dimensional nonlinear minimization problem. Transforming SNLEs into optimization problems involves defining an objective function to minimize. In this paper, the objective function is defined as the sum of the absolute values of the residuals, .

In population-based optimization algorithms, the generation of the initial population commonly involves the random or strategic generation of a group of potential candidates to serve as the starting point for the optimization process. As a standard practice, the default initialization approach for the majority of metaheuristic algorithms involves the use of random number generation, or the Monte Carlo method [

16], in which individuals within the initial population are generated randomly within predefined bounds or limitations. This approach does not guarantee an even distribution of candidate solutions across the search space [

17] and can influence the performance of the algorithms. However, tests have shown that this behavior is contingent on both the test problems and the algorithm under consideration [

16], indicating that certain combinations of problem domains and algorithms are almost impervious to this scenario. Considering the prevalent use of randomly generated starting populations and the scarce number of experiments employing alternative initialization procedures, a stochastic population initialization approach was also adopted.

The organization of this paper is as follows: The theoretical aspects and implementation details of the aforementioned metaphor-less algorithms are provided in

Section 2. Principles behind GPU programming and details about the GPU-based implementation of each algorithm, including aspects about the different parallelization strategies employed, are presented in

Section 2. All aspects of the experimental setup and methodology used to evaluate the performance of GPU-based algorithms are detailed in

Section 4, including details about the hardware and benchmark functions used. The computational experiment findings and discussion are presented in

Section 5. Finally, the conclusions drawn from the key findings are presented in

Section 6.

3. GPU Parallelization of Metaphor-Less Optimization Algorithms

3.1. Principles of CUDA Programming

The graphics processing unit (GPU) has become an essential component of modern computers. Initially designed for rendering graphics, this hardware has evolved to manage simultaneously a multitude of graphical elements, such as pixels or vertices, for which a large parallel processing capacity is required.

The Compute Unified Device Architecture (CUDA) [

22] is a computing platform developed by NVIDIA that harnesses the power of GPUs for general-purpose parallel computing, making it possible to take advantage of the massive parallelism offered by the GPU for distinct computing tasks. The CUDA software stack acts as a framework that abstracts the inherent complexities of GPU programming. This streamlines communication and coordination between the central processing unit (CPU) and GPU, providing an interface to efficiently utilize GPU resources in order to accelerate specific functions within programs. The functions designed to execute highly parallelized tasks on the GPU are referred to as kernels.

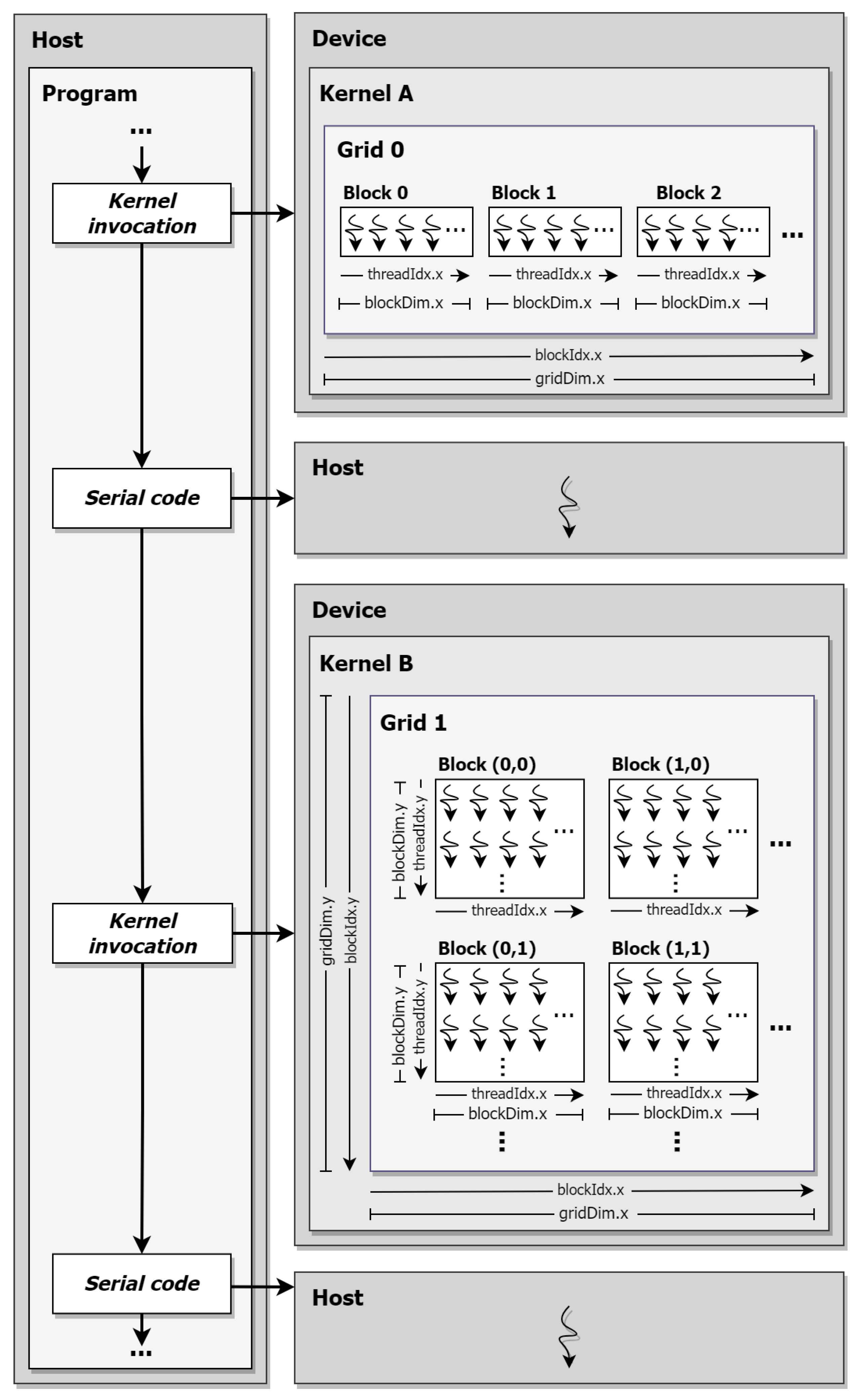

CUDA functions as a heterogeneous computing framework, consequently dictating a specific execution pattern within kernels The CPU functions as the host, responsible for initiating kernel launches and overseeing the control flow of the program. On the other hand, the kernels are executed in parallel by numerous threads on the GPU, designated as the device. In essence, the host performs tasks that are better suited for sequential execution, while the GPU handles the computationally intensive parts of the program in parallel. This relationship is illustrated in

Figure 1.

The computational resources of the GPU are organized into a grid to execute multiple kernels in parallel. This grid acts as an abstraction layer, structuring the available computational elements into blocks, each containing a set number of threads. This thread hierarchy is configurable into grids with one, two, or three dimensions, tailored to accommodate the parallelization needs of a specific kernel. The configuration parameters within the thread hierarchy play a pivotal role in determining GPU hardware occupancy, making them a critical aspect of optimizing resource utilization.

In

Figure 1,

Kernel A showcases a one-dimensional thread-block arrangement, while

Kernel B displays a two-dimensional configuration. The illustration also includes the CUDA built-in variables for accessing the grid and block dimensions (

gridDim and

blockDim), block and thread indices (

blockIdx and

threadIdx), and their respective coordinates (

x and

y). These variables also facilitate the unique identification of individual threads within a kernel through a process known as thread indexing.

The discrete nature of the host and device hardware involves separate memory spaces, preventing direct data access between them. This necessitates explicit data transfers, resulting in substantial computational overheads and performance degradation. Consequently, meticulous optimization of memory access patterns and a reduction in the frequency of data transfer are crucial to mitigating performance bottlenecks [

23].

CUDA also provides access to diverse memory types within the GPU memory hierarchy, which is an important consideration for performance optimization. The memory types encompass global, shared, and local memories, each serving distinct purposes. The global memory is the largest memory segment on the device but is also the slowest. It remains persistent throughout kernel execution, serves as the interface for data transfers between the host and device, and is accessible by all threads in the GPU, thus enabling global data sharing. The shared memory, though much smaller, offers greater speed but is exclusive to threads within a block, enabling efficient data exchange among them. Lastly, local memory is the smallest in size and is private to individual threads, allowing them to store their specific local variables and support thread local execution.

3.2. Methodology Used for GPU-Based Parallelization

The primary step in parallelizing any algorithm requires an extensive assessment of its architecture, dependencies, data structure, etc., to devise a viable parallelization strategy. While some aspects may not be conducive to parallel execution, others, albeit parallelizable, may pose implementation challenges.

Several studies focusing on parallel and distributed metaheuristic algorithms have employed implementation strategies based on decomposing the optimization problem into smaller, independent subproblems (see e.g., [

24]). These subproblems can then be distributed across multiple parallel computation units, sometimes running several sequential instances of metaheuristic algorithms concurrently. An alternative method involves running specific phases of the algorithm in parallel, while others are executed sequentially (see e.g., [

24]). However, these approaches often prove less efficient due to the loss of computational resources attributed to overheads related to data transfers, synchronization, and other communication mechanisms.

While these parallel strategies can notably enhance computational performance compared to their original sequential counterparts, they are suboptimal for GPU computing due to their tendency to underutilize the available computational resources [

24]. Furthermore, specific parallelization strategies can result in modifications in the behavior of the parallel algorithm in contrast to its original version, leading to variations in convergence characteristics and the quality of the obtained approximations.

As CUDA inherently adopts a heterogeneous approach, establishing an effective balance between the host and device is crucial. In the proposed GPU-based parallelization, the primary responsibilities of the host include initializing algorithm variables, invoking kernel functions, managing the primary loop, and reporting the best solution found. Consequently, all intensive computations are exclusively handled by the device.

Given this perspective, the proposed methodology for GPU-based parallelization of metaphor-less optimization algorithms must ensure optimal utilization of the available GPU resources while still aligning with the fundamental operating principles of the original algorithm. This is essential to ensure a similar convergence behavior and quality of the obtained solutions in the parallelized version. Additionally, maintaining the same flexibility as the original algorithm is paramount, ensuring that the parallel algorithm can operate under virtually any optimization parameters.

To accomplish these objectives and handle large-scale optimization problems efficiently, the GPU-based parallelization design emphasizes the data structures of the optimization algorithms. In this approach, the GPU computational units are assigned based on the size and structure of the data segment being processed. Consequently, scalability is achieved when the number of threads allocated closely matches the size of the data computed. This leads to the alignment of both one-dimensional (1D) and two-dimensional (2D) arrays with the corresponding CUDA thread hierarchy, facilitating their processing by the respective kernels and addressing data locality issues.

Figure 2 illustrates the data-to-thread indexing of the largest data structure, a 2D array containing population data, to a 2D grid arrangement on the device. This configuration enables parallel computation across multiple blocks of threads. In this example, data corresponding to variable 6 from candidate 8 (using 0 as the starting index) is processed by the thread (3,2) within block (1,1). For the parallel processing of 1D array data, such as fitness values from each candidate solution, a 1D thread-block arrangement is utilized.

Optimizing the number of blocks and threads per block is critical for leveraging hardware resources efficiently. However, determining the optimal configuration is not straightforward; it varies based on algorithm characteristics, GPU architecture, memory needs, and workload specifics. Traditional methods for determining this configuration involve iterative experimentation, optimization, and profiling, which can be time-consuming.

In the proposed GPU-based parallelization approach, a dynamic and generalized method to automatically determine optimal GPU hardware occupancy was developed. This process involves a function that predicts the launch configurations for each kernel by leveraging CUDA runtime methods available in devices supporting CUDA 6.5 or higher, utilizing parameters such as data size, supported block size, and kernel memory requirements. To suit the algorithmic data being processed by optimization algorithms, two kernel launch configuration functions were created, one for 1D and another for 2D thread-block kernels. This approach ensures that kernels are adaptable to diverse GPU hardware configurations and are inherently structured for horizontal scalability through the utilization of additional available threads, all achieved without the need for modifications to the parallel implementation. Although automatic methods present the potential for achieving maximum GPU occupancy, they may not consistently produce the most efficient parameters tailored to a specific hardware setup.

In this study, the method for automatically determining optimal GPU hardware occupancy has undergone revision. The modification entails the incorporation of a parameter designed to constrain the suggested block size (i.e., the number of threads allocated for computation in each block used) to a multiple of the supported warp size by the GPU. The warp size, which denotes the minimum number of threads that can be concurrently executed in CUDA, is inherently dependent on the GPU architecture and can be automatically derived from the hardware parameters. Empirical tests have revealed that constraining the suggested block size to twice the warp size yields an additional average performance improvement of 19.8% compared to the preceding version of the automated launch configuration method when using the RTX 3090 GPU. This enhancement is primarily attributed to the facilitated coalesced memory access and optimized instruction dispatch achieved through the harmonization of block size.

While both shared memory and local memory exhibit faster access compared to global memory due to their proximity to processing units, their limited size and accessibility pose challenges when handling extensive data volumes. Therefore, the proposed GPU-based implementation prioritizes the use of larger global memory. This memory type offers substantial storage capacity, accommodating extensive datasets, variables, diverse data types, and complex data structures. Moreover, it aids in thread synchronization, ensuring that when a kernel concludes execution and the subsequent one initiates, all processed data synchronizes in the GPU global memory. In scenarios where quicker memory access is more efficient, this approach presents a drawback by potentially limiting computational task performance. Nevertheless, it fosters a versatile implementation in terms of problem size, population size of the optimization algorithm, and GPU hardware compatible with this parallelization method.

The GPU parallelization strategy presented aims to be versatile and adaptable. It is not only capable of utilizing resources across different GPU hardware but can also be seamlessly tailored to suit other metaheuristic optimization methods.

3.3. GPU-Based Acceleration of the Jaya Optimization Algorithm

GPU-Jaya was the first known parallelization of the Jaya algorithm on a GPU, introduced by Wang et al. in 2018 [

25] to estimate the parameters of a Li-ion battery model. The parallelization strategy employed in this algorithm focuses on minimizing data transmission between the host and the GPU. It utilizes both global and shared memories to efficiently manage data location, and the algorithm incorporates a parallel reduction procedure to further optimize specific steps of the parallelization. Data is stored in a collection of one-dimensional arrays, leading to the establishment of a set of conversion and mapping mechanisms. This implies a potential computing overhead resulting from the use of data adaptations. The number of threads is fixed at 32, while the number of blocks is determined by the size of the population array (

) divided by 32. This suggests that the population array may need to be a multiple of 32, imposing constraints on selectable test parameters.

The testing encompassed modest population sizes, ranging from 64 to 512. The problem involved six decision variables, with a maximum allowable number of iterations set at 20,000. With the fastest GPU used for testing, a Tesla K20c GPU with 2496 CUDA cores and 5 GB of VRAM, the GPU-Jaya achieved speedup factors ranging from 2.96 to 34.48, with an average of 13.17.

Jimeno-Morenilla and collaborators proposed in 2019 [

26] another parallelization of the Jaya algorithm on the GPU. The parallel execution scheme in this implementation determines the number of blocks based on the number of algorithm runs, while the number of threads per block is established by the population size and the number of design variables. However, this approach has the disadvantage of leading to an inefficient use of GPU resources, as the hardware allocation is influenced by the number of algorithm runs rather than the dimensions of the test case. Moreover, it imposes notable constraints on the allowable range of test parameters, particularly the population size and the number of decision variables. These restrictions stem from the close correlation of these parameters with the hardware characteristics of the GPU used for testing, particularly the maximum number of threads per block.

The algorithm underwent evaluation on a GTX 970 GPU featuring 1664 CUDA cores and 2 GB of VRAM. It was utilized a set of 30 unconstrained functions with population sizes ranging from 8 to 256, encompassing 2 to 32 decision variables and constrained by a maximum iteration count of 30,000. The implementation achieved maximum speedup factors ranging from 18.4 to 189.6, with an average of 53.

A recent GPU parallelization of the Jaya algorithm proposed by the authors [

27,

28] makes use of the parallel execution strategy on GPUs detailed in the preceding section. The fundamental structure of this GPU-based version of Jaya is elucidated in Algorithm 9.

|

Algorithm 9 GPU-based parallel Jaya |

- 1:

/* Initialization */ - 2:

Initialize , and ; ▹ Host

- 3:

; - 4:

; - 5:

; ▹Host

- 6:

/* Main loop */ - 7:

while do ▹Host

- 8:

Determine and ; - 9:

; - 10:

; - 11:

; - 12:

; ▹ Host

- 13:

end while - 14:

Output the best solution found and terminate; ▹ Host and device

|

Upon initial observation, juxtaposing the GPU-based Jaya algorithm with its sequential counterpart (Algorithm 1) elucidates a notably analogous structure. They primarily adhere to identical execution procedures, diverging primarily due to the absence of nested loops iterating through the population elements (i.e., the candidate solutions) and decision variables. This aspect is a fundamental component of the established parallelization methodology, aiming to maintain the philosophical underpinnings of the original algorithm throughout its adaptation to GPU processing. As a result, the GPU-based version of Jaya retains the ability to generate identical optimization behavior to the original algorithm.

The provided procedure relies on a heterogeneous computational approach, where the computational involvement of the host is notably limited. As delineated in Algorithm 9, the primary tasks of the host entail initializing algorithmic parameters, invoking the various kernel functions, overseeing the algorithm’s iterative steps (encapsulated in the main loop), and documenting the optimal solution identified. Consequently, the host manages the sequential aspects of the algorithm, whereas all optimization-related data resides and undergoes processing exclusively within the device. This operational paradigm ensures that no data is transferred between the host and device throughout the main computational process. In the final step of the algorithm, the device identifies the best solution discovered, which is then transferred to the host for output.

Given the aforementioned details, the generation of the initial population takes place directly within the device, following the procedure outlined in Algorithm 10.

|

Algorithm 10 Kernel for generating the initial population |

- 1:

function Generate_initial_population_kernel( ) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

Determine using y dimension of , , and ; - 5:

if and then - 6:

; - 7:

end if - 8:

end function |

In the context of the GPU-based algorithm, the conventional nested loops utilized for iterating through population data during processing have become obsolete due to concurrent parallel execution. Employing a two-dimensional thread block arrangement grid, the kernel specified in Algorithm 10 facilitates the parallel creation of the initial population. This methodology assigns an individual thread to generate each individual data element within the population (X), supported by a matrix data structure with dimensions . The kernel leverages the x and y coordinates (indices) from the block dimension (blockDim), block index (blockIdx), and thread index (threadIdx) to determine the specific row and column in the data matrix allocated for computation for assigning each data element individually to a separated thread for processing. This allocation strategy maximizes the efficiency of the parallel processing, allowing each thread to perform designated computations based on its unique position within the block and grid structure.

The same parallelization technique is applied across various algorithm phases encompassed within the main loop. Consequently, each stage of the computational process now runs concurrently on all elements within a given iteration. This paradigm shift means that

now signifies all updated candidate solutions within a given algorithmic iteration, rather than representing a singular updated candidate. Subsequently, all algorithmic steps have been adjusted accordingly, commencing with the population update kernel outlined in Algorithm 11.

|

Algorithm 11 Kernel for population update (Jaya) |

- 1:

function Jaya-driven_population_update_kernel(X) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

Determine using y dimension of , , and ; - 5:

if and then - 6:

-

; ▹ Eq. (1)

- 7:

; - 8:

end if - 9:

end function |

Similar to the original algorithm, the maintenance of newly generated candidate solutions within the search space boundary remains a necessity. Within the GPU-based algorithm, this task is managed by the function delineated in Algorithm 12.

|

Algorithm 12 Function to ensure boundary integrity |

- 1:

function Check_boundary_integrity_function() - 2:

if then - 3:

; - 4:

end if - 5:

if then - 6:

; - 7:

end if - 8:

end function |

The selection of the best candidate solution by a greedy selection algorithm is parallelized in Algorithm 13.

|

Algorithm 13 Kernel for greedy selection |

- 1:

function Greedy_selection_kernel(X, ) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

if then - 5:

if is better than then - 6:

; - 7:

; - 8:

end if - 9:

end if - 10:

end function |

Considering that the data structures supporting fitness values for both the newly generated candidate solutions and the current candidate solutions consist of single-dimensional arrays, each with a length of , the kernel implementation requires solely a one-dimensional grid arrangement to conduct parallel comparisons.

Essentially, for concurrent determination of the optimal candidate solution, the greedy selection kernel operates using a total number of threads equivalent to . The indexing of data and threads is managed by the variable , which is determined using inherent variables with details about the hierarchical arrangement of blocks and threads.

The present GPU-based parallelization of Jaya distinguishes itself from the previous approaches by its efficient utilization of both the host and device during computation. Specifically, the host exclusively oversees the main loop, and data transfers between the host and device are minimized, never occurring during the most computationally demanding phases of the algorithm. Furthermore, the device directly accesses data without necessitating additional mapping or conversion. Notably, the approach to GPU grid arrangement and kernel invocations deviates from prior implementations. The dimensional structure of the grid and the determination of the necessary threads and blocks for computation are primarily driven by the size and structure of the data undergoing processing.

3.4. GPU-Based Acceleration of the Enhanced Jaya Algorithm

There is no mention in the existing literature of any previous GPU-based parallelization of the Enhanced Jaya (EJAYA) algorithm, which makes it impossible to directly compare the parallelization of the EJAYA algorithm proposed by the authors [

29,

30] and described in detail in this section with any other previous work.

While analyzing the differences between EJAYA and the original Jaya optimization algorithm, it becomes evident that EJAYA encompasses several additional steps and a more intricate formulation, primarily due to a more complex search method. Consequently, the GPU parallelization of EJAYA explained here demands a more elaborate approach than the original algorithm, requiring redesign and adaptation of its methods to meet the requisites and constraints of parallel processing. The overview of the GPU-based parallel EJAYA can be observed in Algorithm 14.

|

Algorithm 14 GPU-based parallel EJAYA |

- 1:

/* Initialization */ - 2:

Initialize , and ; ▹ Host

- 3:

; - 4:

; - 5:

- 6:

; ▹Host

- 7:

/* Main loop */ - 8:

while do ▹Host

- 9:

Determine and ; - 10:

; - 11:

if then ▹ Host

- 12:

- 13:

end if - 14:

; - 15:

; - 16:

; - 17:

; - 18:

; - 19:

; ▹ Host

- 20:

end while - 21:

Output the best solution found and terminate; ▹ Host and device

|

According to the GPU-based parallelization strategy employed, all updated candidate solutions are generated simultaneously in parallel during each algorithm iteration. This means that some parts of the implementation need to be adjusted to ensure that all essential data for the LES and GES phases (Eqs.

5 and

8) are readily available for computation in the population update kernel.

Consequently, to perform the LES phase, the GPU-based EJAYA requires the prior determination of the upper and lower local attract points (

and

), as indicated on Eqs.

2 and

4. This procedure is described in Algorithm 15.

|

Algorithm 15 Kernel for determining upper and lower attract points |

- 1:

function Determine_attract_points_kernel() - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

if then - 5:

; ▹ Eq. (2)

- 6:

; ▹ Eq. (4)

- 7:

end if - 8:

end function |

Both and are single-dimensional arrays with a length of the problem dimension (). This workload is parallelized simultaneously using a one-dimensional grid arrangement with a number of threads equal to , where each thread, denoted by the variable , handles the computation of a specific element within the arrays. The formulation of and necessitates the determination of two random numbers ( and ) and the population mean () prior to the kernel execution.

For the GES phase of the GPU-based EJAYA, the historical population (

) is determined using Eq.

6 and subsequently processed by Eq.

7. The determination of the switching probability

is conducted by the host (specifically, in line 11 of Algorithm 14). This approach is preferable as it is more computationally efficient to handle a simple instruction such as an

statement in the host rather than by the device, considering the overhead related to kernel invocation. For the permutation of the historical population, the first step involves predetermining the random permutation of the position index of the candidate solutions within the population (

). This permutation is then used as a template to shuffle the order of the candidates, as described in Algorithm 16.

|

Algorithm 16 Kernel for permuting the population |

- 1:

function Permute_population_kernel() - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

if then - 5:

; - 6:

end if - 7:

end function |

Before updating the population, the exploration strategy, denoted as

in Eq.

9, needs to be determined. In the GPU-based EJAYA, where the newly generated candidate solutions

represent the candidates for the entire population in a single iteration, the

factor must correspond to the exploration strategy for the whole population. This ensures that the exploration strategy aligns with the parallel processing of the entire population. The procedure is illustrated in Algorithm 17.

|

Algorithm 17 Kernel for determining the exploration strategy |

- 1:

function Determine_exploration_strategy_kernel( ) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

if then - 5:

if then - 6:

`LES’; - 7:

else - 8:

`GES’; - 9:

end if - 10:

end if - 11:

end function |

The functionality required for performing both the LES and GES search strategies during the population update phase is facilitated through a unified kernel, as illustrated in Algorithm 18. In this kernel, the method for updating the population in the GES phase requires predefining an array of random numbers following a normal distribution called

, with a length equal to

, in each iteration. This approach ensures a correct parallelization of Eq.

8, as the same random number is applied to update every dimension of a population candidate.

|

Algorithm 18 Kernel for population update (EJAYA) |

- 1:

function EJAYA-driven_population_update_kernel(X) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

Determine using y dimension of , , and ; - 5:

if and then - 6:

if then ▹ LES

- 7:

-

; ▹ Eq. (5)

- 8:

else ▹ GES

- 9:

-

; ▹ Eq. (8)

- 10:

end if - 11:

; - 12:

end if - 13:

end function |

3.5. GPU-Based Acceleration of the Rao Optimization Algorithms

Algorithm 19 presents a comprehensive characterization of a collective GPU-based parallelization of the three Rao algorithms recently proposed by the authors [

31]. This implementation integrates the elementary methods constituting Rao-1, Rao-2, and Rao-3 heuristics into a singular and unified parallelization.

|

Algorithm 19 GPU-based parallel Rao-1, Rao-2, and Rao-3 |

- 1:

/* Initialization */ - 2:

Initialize , and ; ▹ Host

- 3:

; - 4:

; - 5:

; ▹Host

- 6:

/* Main loop */ - 7:

while do ▹Host

- 8:

Determine and ; - 9:

if algorithm = `Rao-2’ or `Rao-3’ then - 10:

; - 11:

end if - 12:

; - 13:

; - 14:

; - 15:

; ▹ Host

- 16:

end while - 17:

Output the best solution found and terminate; ▹ Host and device

|

The three Rao algorithms employ a shared optimization approach, differing primarily in their population update strategies. Their fundamental algorithmic phases bear resemblance to those in Jaya. Notably, the main distinction arises in Rao-2 and Rao-3, where these algorithms necessitate selecting a random candidate solution from the population to compute the newly generated candidate solutions. Given the current parallelization strategy, this random candidate solution must be established in advance for all population candidates in each iteration before conducting the population update. This procedure is outlined in Algorithm 20.

|

Algorithm 20 Kernel for selecting random solutions from the population |

- 1:

function Select_random_solutions_kernel() - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

if then - 5:

- 6:

while do - 7:

- 8:

end while - 9:

end if - 10:

end function |

The kernel is organized to operate using a one-dimensional grid, as its purpose is to generate a 1D array with a length equal to containing the indices of the random candidate solutions.

Algorithm 21 provides a consolidated method that generates the updated candidate solutions for all the Rao algorithms in a unified manner.

|

Algorithm 21 Kernel for population update (Rao algorithms) |

- 1:

function Rao-driven_population_update_kernel(X) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

Determine using y dimension of , , and ; - 5:

if and then - 6:

if algorithm = `Rao-1’then ▹ Eq. (10)

- 7:

; - 8:

else if algorithm = `Rao-2’then ▹ Eq. (11)

- 9:

if is better than then - 10:

-

;

- 11:

else - 12:

-

;

- 13:

end if - 14:

else if algorithm = `Rao-3’then ▹ Eq. (12)

- 15:

if is better than then - 16:

-

;

- 17:

else - 18:

-

;

- 19:

end if - 20:

end if - 21:

; - 22:

end if - 23:

end function |

3.6. GPU-Based Acceleration of the BWP Algorithm

The main skeleton of the GPU-based parallel Best-Worst-Play (BWP) algorithm presented by the authors in [

32] is shown in Algorithm 22.

|

Algorithm 22 GPU-based parallel BWP algorithm. |

- 1:

/* Initialization */ - 2:

Initialize , and ; ▹ Host

- 3:

; - 4:

; - 5:

; ▹Host

- 6:

/* Main loop */ - 7:

while do ▹Host

- 8:

/* Leverage the Jaya heuristic */

- 9:

Determine and ; - 10:

; - 11:

; - 12:

; - 13:

/* Leverage the Rao-1 based heuristic */

- 14:

Determine and ; - 15:

; - 16:

; - 17:

; - 18:

; ▹ Host

- 19:

end while - 20:

Output the best solution found and terminate; ▹ Host and device

|

Although the BWP optimization strategy involves the consecutive use of two distinct algorithms (namely Jaya and a Rao-1 based heuristic), it is not possible to reduce the number of steps in the main loop. This limitation stems from the absence of overlapping methods, as each algorithmic phase is inherently sequential in nature.

The contents of Algorithm 23 detail the population update process within the Rao-1 based method.

|

Algorithm 23 Kernel for population update (BWP) |

- 1:

function BWP-Rao1-based_population_update_kernel(X) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

Determine using y dimension of , , and ; - 5:

if and then - 6:

; ▹ Eq. (13)

- 7:

; - 8:

end if - 9:

end function |

3.7. A Novel GPU-Based Parallelization of the MaGI Algorithm

This section introduces a novel GPU-based parallelization of the Max–Min-Greedy-Interaction (MaGI) algorithm following the methodology outlined in this paper, as illustrated by Algorithm 24.

Since the MaGI algorithm employs two sequential metaphor-less heuristics in sequence, its approach to parallel implementation closely resembles that of BWP.

In accordance with the original algorithm (Algorithm 8), the first phase of the main loop of the GPU-based parallel MaGI algorithm involves employing the Jaya heuristic to generate an updated population, followed by further refinement using a Rao-2 based heuristic. This last heuristic requires the identification of random solutions from the population that have to be precomputed for all candidate solutions before population updates take place (line 15 of Algorithm 24), in accordance with the parallelization strategy presented for the original Rao-2 algorithm.

The GPU-based parallel implementation of the modified version of the Rao-2 heuristic is presented in Algorithm 25.

|

Algorithm 24 GPU-based parallel MaGI algorithm |

- 1:

/* Initialization */ - 2:

Initialize , and ; ▹ Host

- 3:

; - 4:

; - 5:

; ▹Host

- 6:

/* Main loop */ - 7:

while do ▹Host

- 8:

/* Leverage the Jaya heuristic */

- 9:

Determine and ; - 10:

; - 11:

; - 12:

; - 13:

Determine and ; - 14:

/* Leverage the Rao-2 based heuristic */

- 15:

; - 16:

; - 17:

; - 18:

; - 19:

; ▹ Host

- 20:

end while - 21:

Output the best solution found and terminate; ▹ Host and device

|

|

Algorithm 25 Kernel for population update (MaGI) |

- 1:

function MaGI-Rao2-based_population_update_kernel(X) - 2:

/* Device code */

- 3:

Determine using x dimension of , , and ; - 4:

Determine using y dimension of , , and ; - 5:

if and then - 6:

if is better than then ▹ Eq. (14)

- 7:

-

;

- 8:

else - 9:

-

;

- 10:

end if - 11:

; - 12:

end if - 13:

end function |

5. Results and Discussion

The subsequent discussion delves into the results stemming from the parallelization of the metaphor-less optimization algorithms discussed in this article in the GPU architecture. The primary emphasis lies in evaluating the attained speedup gains, understanding their behavior concerning increases in the problem dimension, and exploring different GPU hardware configurations. This aims to unravel the implications of leveraging parallel computational resources for optimizing algorithmic performance.

The speedup factor quantifies the performance improvements resulting from the parallel implementation and is defined as the ratio of the sequential to the parallel computation time. It is important to note that the speedup values presented in this analysis were calculated using the full computational times without rounding up.

Test data is systematically organized into tables, wherein test problems are categorized by dimension (i.e., population size and number of variables). The mean sequential computational times are presented in the column labeled CPU, while the results for parallel computation are organized into distinct columns based on the GPU hardware used for testing. All reported computational times pertain to the comprehensive execution of the algorithm, encompassing the entire duration from initiation to completion.

The primary emphasis in parallel computation is on data obtained with the RTX 3090 and A100 GPUs, which are presented in columns bearing the same names. The selection of the RTX 3090 GPU aligns with its consistent use in previous parallelization of the majority of algorithms discussed in this article, while the A100 GPU represents a highly relevant hardware option in the professional sector, and it is employed here to illustrate the scalability of the parallel implementations and its adeptness in harnessing additional GPU resources without necessitating adjustments to the underlying parallelization strategy.

Data obtained from the analysis of mean computational times for both sequential and parallel implementations, along with the corresponding speedup gains for each studied algorithm, were organized into tables and grouped based on similarities in algorithmic structure. Consequently,

Table 1 presents computational results for the Jaya and EJAYA algorithms; the three Rao algorithms are showcased in

Table 2, while the outcomes of the tests conducted with the BWP and MaGI algorithms are displayed in

Table 3. Although Jaya and EJAYA are presented side by side, it is important to highlight that the EJAYA algorithm possesses a distinct structure compared to the other algorithms.

Findings reveal the superior efficiency of GPU-based algorithms compared to their corresponding sequential implementations. In all algorithms, the speedup gains increased proportionally with the growth of the problem dimension. Consequently, the lower speedup gains were observed in the smallest dimensions, while the most significant speedups were achieved in the largest dimensions. This expectation is rooted in the parallelization strategy employed for GPU-based algorithms, wherein the allocation of GPU processing capabilities (expressed in terms of the number of CUDA cores, the block size, and the number of blocks) is adjusted automatically according to the problem dimensionality and inherent characteristics of the GPU.

Regarding the GPU hardware, the A100 GPU demonstrated speedup gains ranging from a minimum of 34.4 in the EJAYA algorithm to a maximum of 561.8 in the Rao-3, achieving a global mean speedup (across all algorithms, problems, and dimensions) of 231.2. This performance surpassed that of the RTX 3090 GPU, which attained speedups ranging from 33.9 in the EJAYA algorithm to 295.8 with the Jaya algorithm, resulting in a global mean speedup of 127.9, approximately 44.7% lower than that of the A100 GPU.

Despite having fewer 3584 CUDA cores when compared to the RTX 3090 GPU (a reduction of 34.1%), the A100 GPU manages to perform more computational work by leveraging its significantly wider memory bus of 5120 bits, in contrast to the 384 bits in the RTX 3090, while sharing the same architecture. This implies that each CUDA core in the A100 GPU is able to operate more efficiently than its counterpart in the RTX 3090, mainly due to its enhanced memory subsystem, particularly under this type of workload. This outcome aligns with expectations, considering that factors such as the parallelization strategy, GPU architecture, the number of available CUDA cores, and the width of the GPU memory bus are pivotal for achieving optimal performance in CUDA programming.

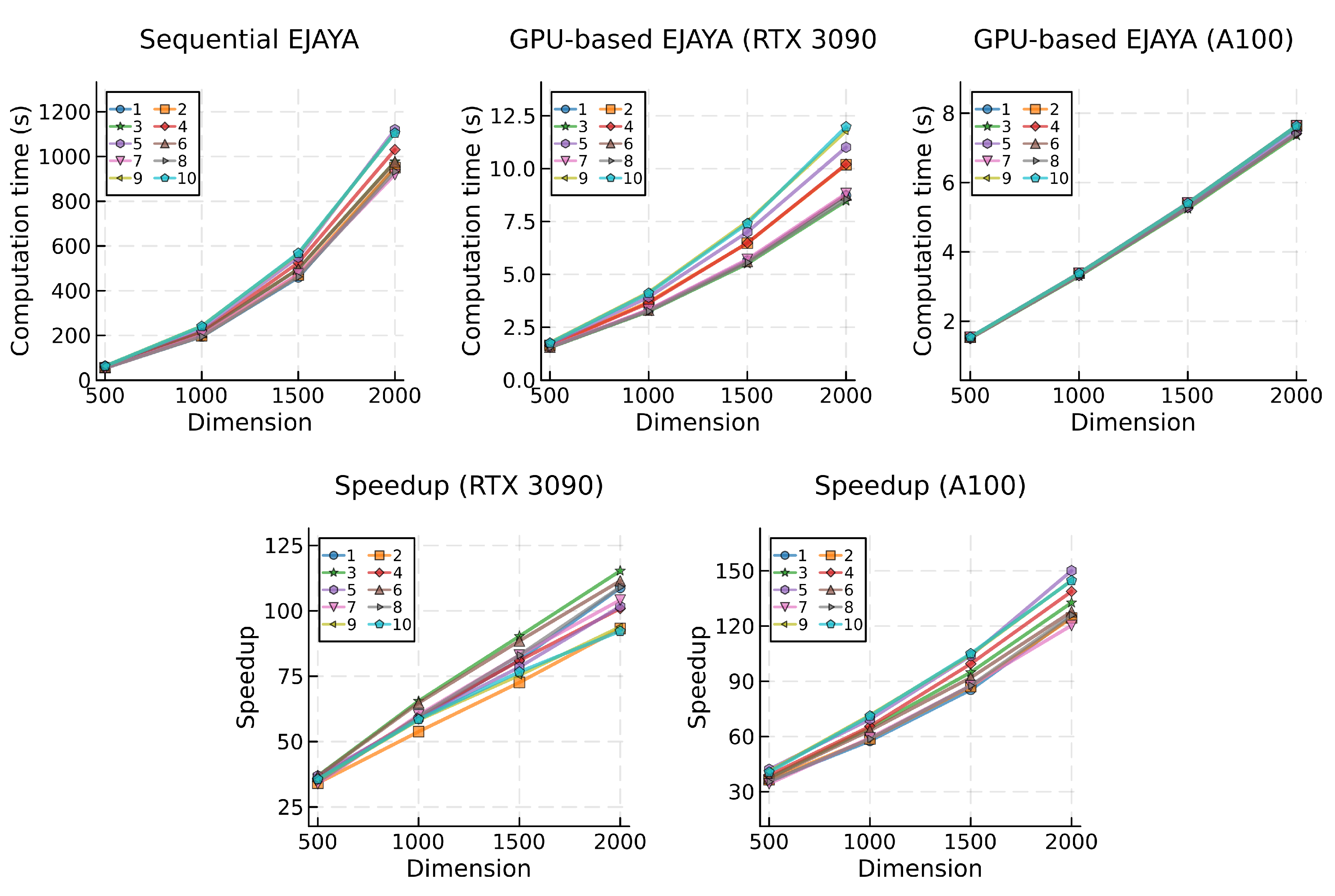

The GPU-based algorithm that most effectively leveraged the parallel processing capabilities of the GPU hardware was the Rao-3 algorithm, with an average speedup across all problems and dimensions of 169.9 for the RTX 3090 GPU and 290.0 for the A100. In contrast, the GPU-based EJAYA algorithm demonstrated the more modest, although still relevant, speedup values, with an average speedup of 69.9 for the first GPU and 82.6 with the second. This observed result could be attributed to the parallelization strategy employed for the EJAYA algorithm, which faced challenges in attaining a similar level of efficiency as the remaining algorithms. Additionally, inherent characteristics of the EJAYA algorithm, such as the utilization of a more complex exploration method involving different strategies requiring switching and selecting probabilities, mean historical solutions, and permutation of population elements, contribute to its distinct performance dynamics.

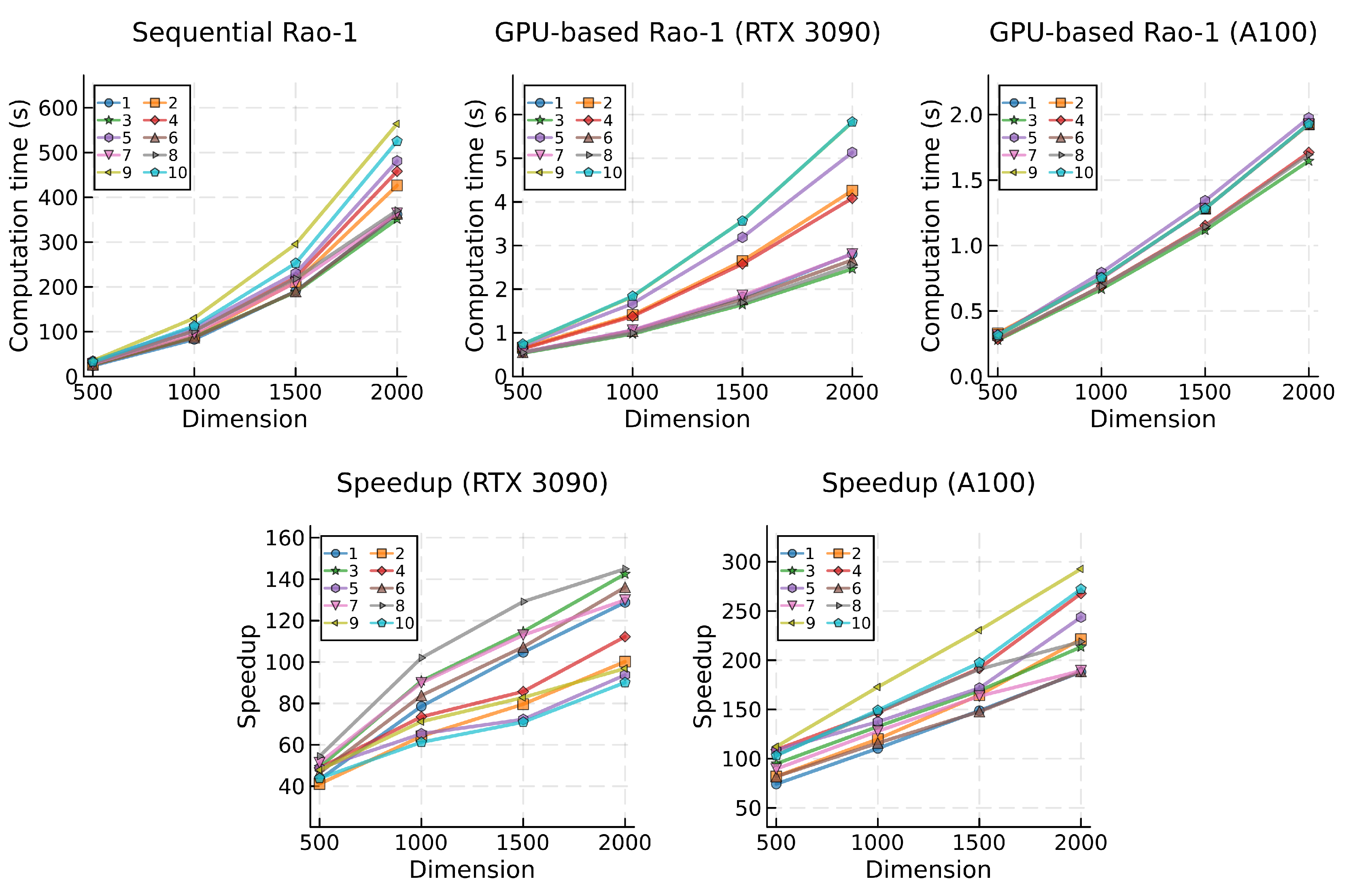

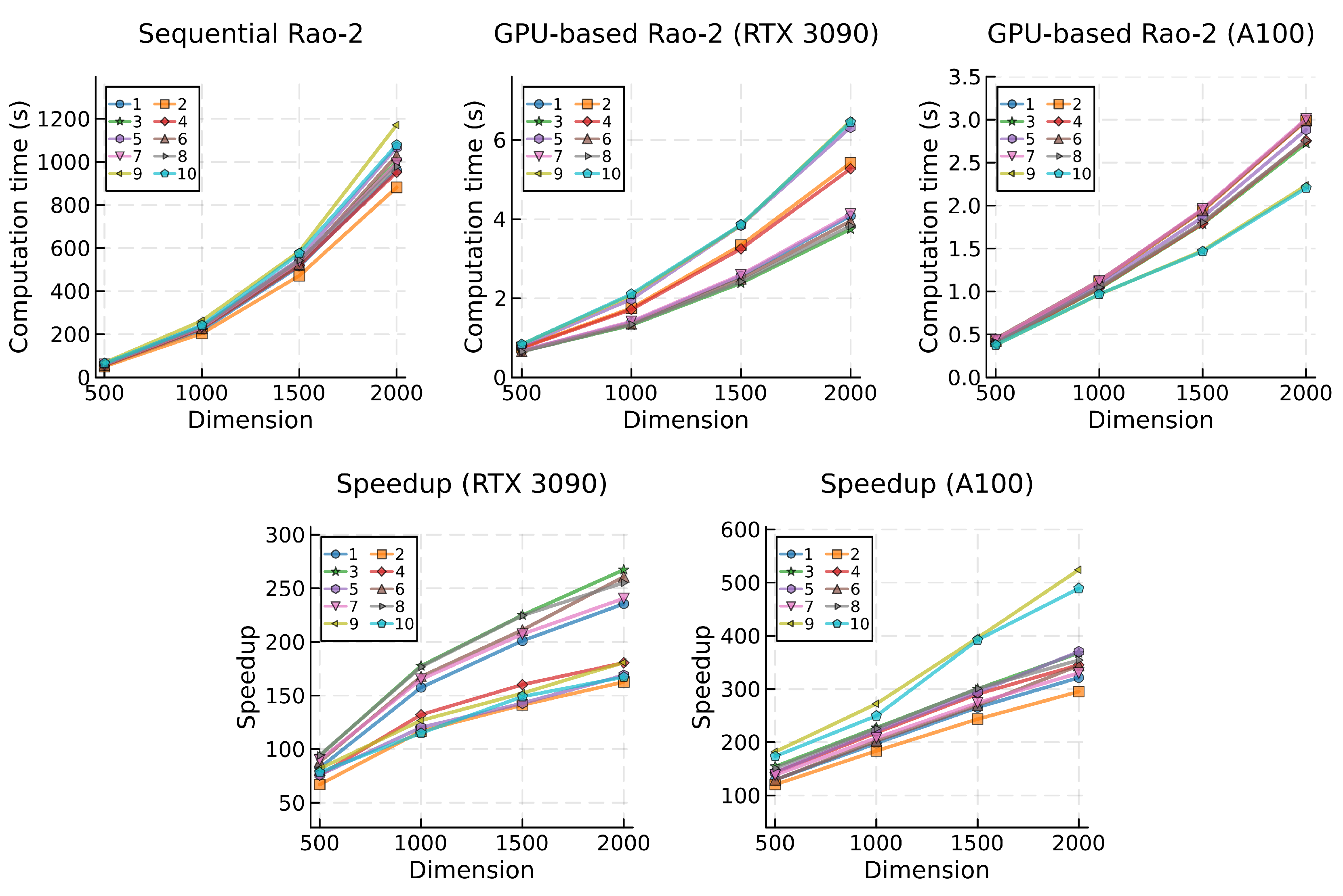

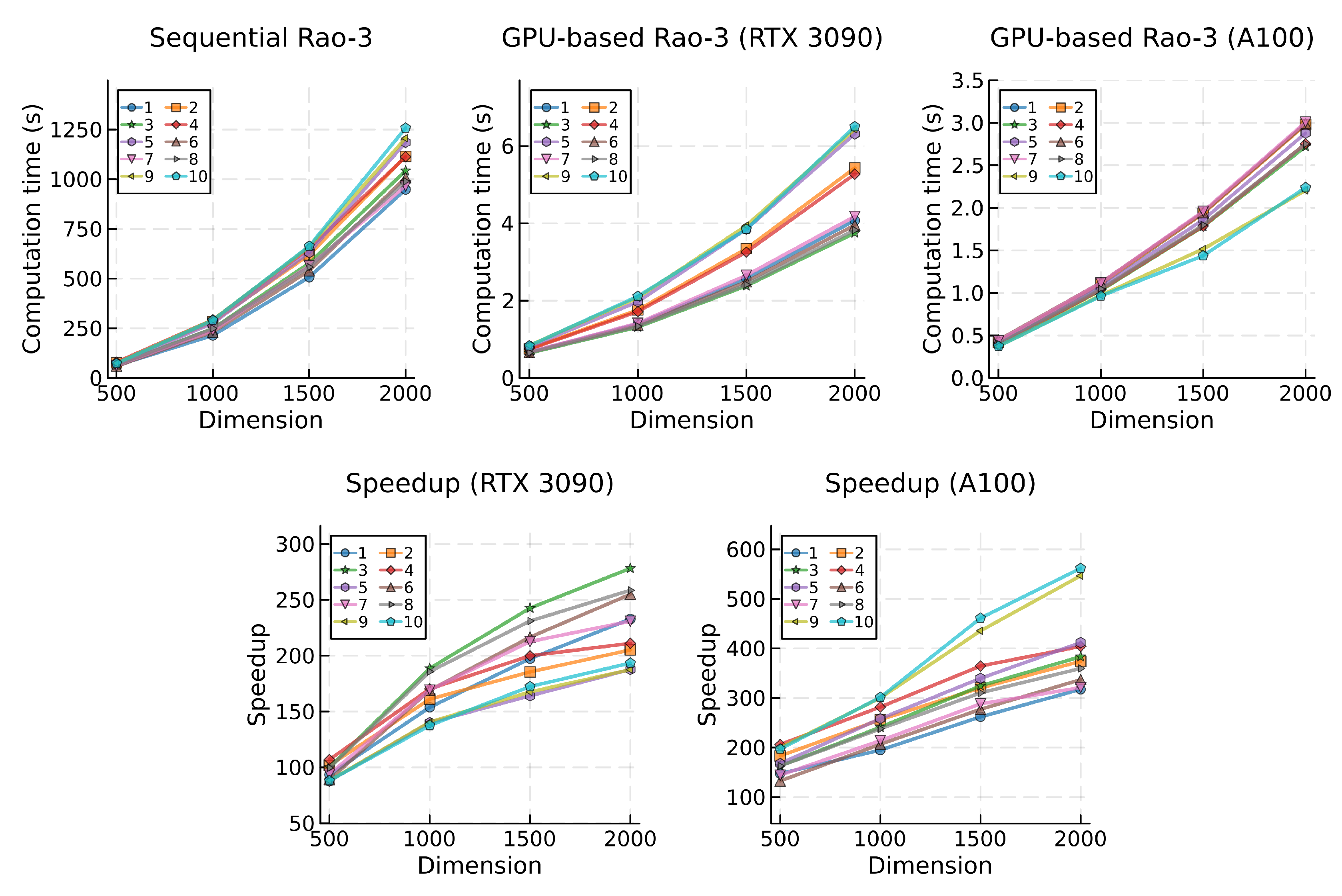

Upon analyzing the results obtained for the Rao algorithms (refer to

Table 2), a clear pattern becomes apparent. The Rao-1 algorithm, characterized by the simplest mathematical model among the three, exhibited the shortest computational time, resulting in an average of 194.23 seconds for the sequential algorithm (executed on the CPU). Conversely, Rao-2 and Rao-3 required approximately 147.9% more time on average than Rao-1 to complete identical tests, with a respective duration of 460.08 s and 502.96 s.

In a comparative evaluation, the GPU-based implementations demonstrated superior scalability amidst the increasing complexity of the Rao algorithms when juxtaposed with their sequential counterparts. With the RTX 3090 GPU, the Rao-1 algorithm yielded a global average of 2.06 seconds, while Rao-2 and Rao-3 exhibited an increase of approximately 26.7% (2.60 s for Rao-2 and 2.61 s for Rao-3). Employing the A100 GPU, the difference in the global average between Rao-1 (1.04 s) and both Rao-2 and Rao-3 (1.51 s and 1.50 s) was approximately 45.1% higher. Although this denotes a superior growth rate in computational time when compared with the RTX 3090 GPU, it is noticeable that the A100 GPU achieved an average speedup that was 73.7% higher.

This enhanced scalability of the GPU-based algorithm is further reflected in the speedup values attained across the various Rao algorithms. The algorithm with lower computational demands achieved comparatively lower speedup gains. Specifically, for the GPU-based Rao-1 algorithm running on the RTX 3090, the average speedup was 84.8, escalating to 155.4 for Rao-2 and 169.9 for Rao-3. Comparing to Rao-1, this represents an increase in speedup of 83.3% and 100.3%, respectively. A similar trend is observed with the A100 GPU, where average speedup gains for the Rao algorithms were 159.8, 261.1, and 290.0. This denotes a comparative increase in speedup achieved with Rao-2 and Rao-3 over Rao-1 of 63.4% and 81.5%, respectively.

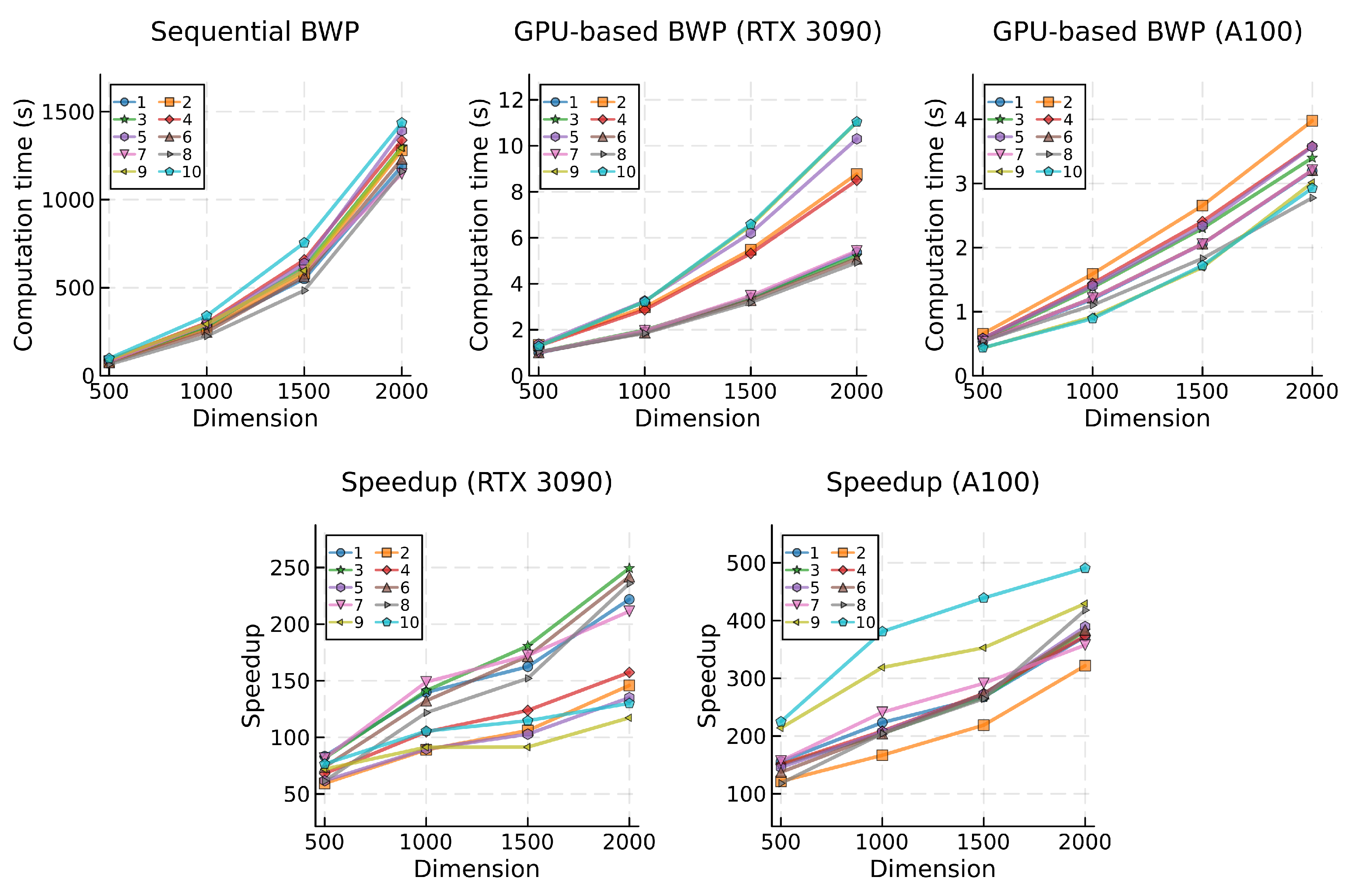

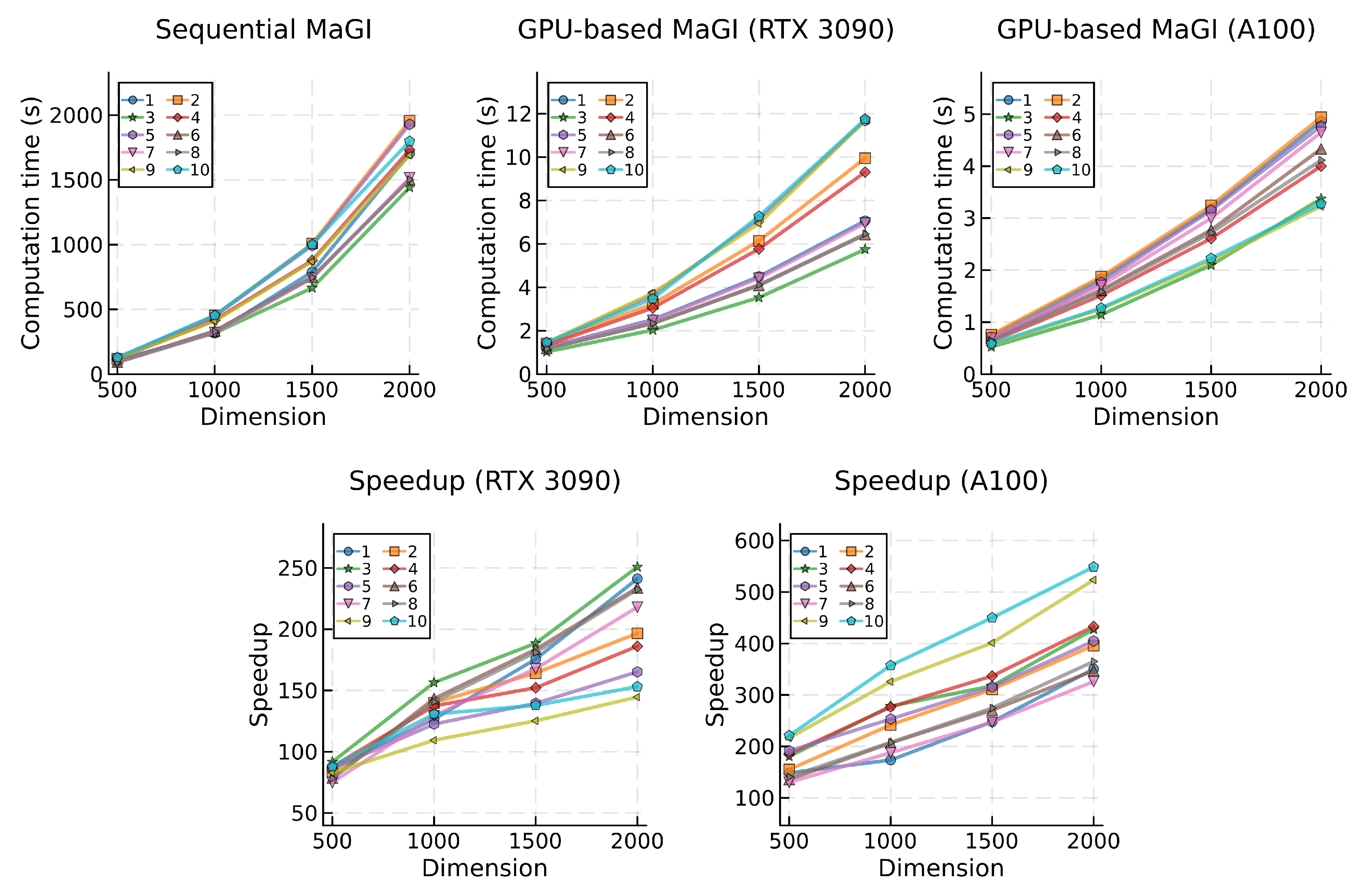

A similar scenario unfolds with the BWP and MaGI algorithms (

Table 3), where the speedup attained by the GPU-based implementation increases when transitioning to a more computationally demanding algorithm. Despite both algorithms being Jaya-based, the MaGI algorithm features a slightly more intricate mathematical model, being based on Rao-2 as opposed to Rao-1 in the case of the BWP algorithm. Consequently, the sequential implementation of the MaGI algorithm resulted in a 34.1% increase in global average computation time compared to BWP.

When comparing both algorithms using the GPU-based implementation, the observed increases were smaller. Utilizing the RTX 3090, the increase was approximately 14.7%, and it rose to approximately 26.5% with the A100 GPU. Consistent with previous findings, while the A100 exhibits a greater growth in computational times compared to the RTX 3090 GPU when transitioning to a more demanding algorithm, the speedups achieved by the A100 were superior, resulting in an average increase of 104.5% when considering both the BWP and MaGI algorithms.

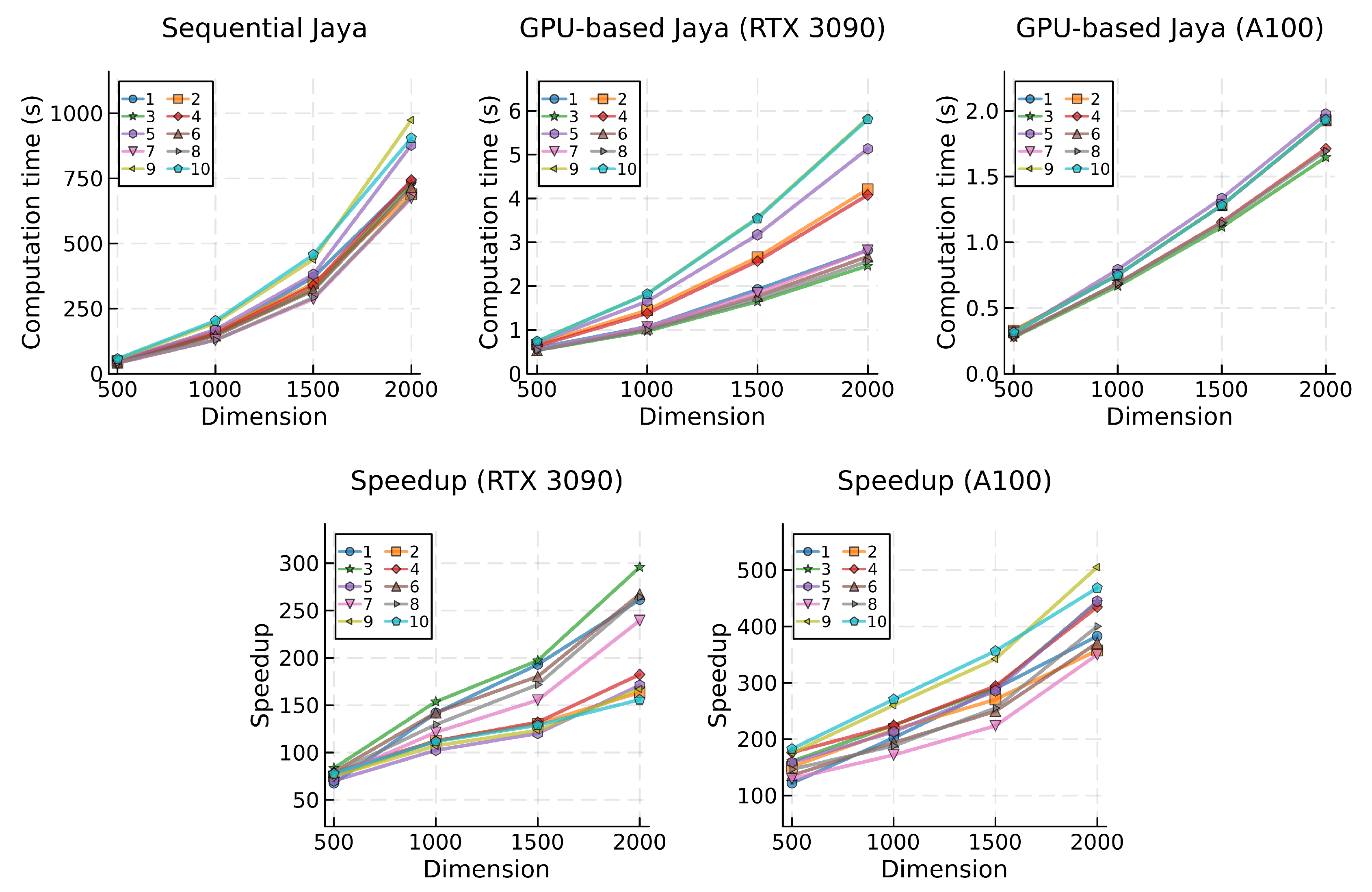

The behavior of both sequential and parallel implementations of the studied metaphor-less algorithms across the different problem dimensions, along with the achieved speedups, is depicted in

Figure 3 through

Figure 9.

While profiling the different test problems and specifically examining the top three most and least computationally demanding in terms of average execution time, certain patterns become evident. Results from the sequential implementation of the algorithms reveal that problems 5, 9, and 10 consistently rank among the most demanding, with the exception of problem 9, which does not appear in the top three most demanding for the BWP and MaGi algorithms. In parallel testing, the RTX 3090 GPU exhibits a similar pattern, consistently ranking problems 5, 9, and 10 as the most computationally demanding across all algorithms. However, when using the A100 GPU, no similar or consistent pattern emerges. No single problem ranks as the most demanding of all algorithms, but problems 1, 2, and 5 typically rank among the slowest. Analyzing the test problems per individual algorithm, problem 5 appears as one of the slowest in both sequential and parallel implementations for most tested algorithms. The exception is in EJAYA, Rao-2, and Rao-3, where problem 5 does not rank among the top three slowest problems when computing with the A100 GPU.

Figure 3.

Mean computational times (top) and speedup (bottom) for the Jaya algorithm, categorized by problem and dimension.

Figure 3.

Mean computational times (top) and speedup (bottom) for the Jaya algorithm, categorized by problem and dimension.

Figure 4.

Mean computational times (top) and speedup (bottom) for the EJAYA algorithm, categorized by problem and dimension.

Figure 4.

Mean computational times (top) and speedup (bottom) for the EJAYA algorithm, categorized by problem and dimension.

Figure 5.

Mean computational times (top) and speedup (bottom) for the Rao-1 algorithm, categorized by problem and dimension.

Figure 5.

Mean computational times (top) and speedup (bottom) for the Rao-1 algorithm, categorized by problem and dimension.

Figure 6.

Mean computational times (top) and speedup (bottom) for the Rao-2 algorithm, categorized by problem and dimension.

Figure 6.

Mean computational times (top) and speedup (bottom) for the Rao-2 algorithm, categorized by problem and dimension.

Figure 7.

Mean computational times (top) and speedup (bottom) for the Rao-3 algorithm, categorized by problem and dimension.

Figure 7.

Mean computational times (top) and speedup (bottom) for the Rao-3 algorithm, categorized by problem and dimension.

Figure 8.

Mean computational times (top) and speedup (bottom) for the BWP algorithm, categorized by problem and dimension.

Figure 8.

Mean computational times (top) and speedup (bottom) for the BWP algorithm, categorized by problem and dimension.

Figure 9.

Mean computational times (top) and speedup (bottom) for the MaGI algorithm, categorized by problem and dimension.

Figure 9.

Mean computational times (top) and speedup (bottom) for the MaGI algorithm, categorized by problem and dimension.

Problems 1, 6, 7, and 8 usually emerge as the least computationally demanding in the sequential tests. However, no single problem consistently ranks as the fastest for all algorithms. In the context of the GPU-based implementations, tests utilizing the RTX 3090 GPU consistently position problems 3, 6, and 8 as the fastest across all tested algorithms. Nonetheless, no consistent pattern is observed when using the A100 GPU. Generally, problems 3, 8, 9, and 10 rank among the top three in terms of the lowest average execution time. When individually assessing the test problems for each algorithm, problem 8 generally appears among the least computationally intensive, in both sequential and parallel implementations, with the exception of the Rao-2 and Rao-3 algorithms, where no specific pattern has emerged.

A consistent trend observed in all sequential implementations reveals an escalating impact with the growing problem dimension, giving rise to a concave upward curve characterized by an increasing slope. This trend indicates that computational time becomes more pronounced as dimensionality increases. Such behavior aligns seamlessly with the nature of the test problems, as scaling up SNLEs generally leads to a surge in computational complexity. This underscores the imperative for efficient algorithmic strategies capable of navigating the computational challenges posed by these intricate mathematical models.

Upon analyzing the computational times for GPU-based algorithms, two key observations provide insights into the performance characteristics of the proposed parallel implementation and GPU hardware. The first observation pertains to the grouping of lines representing computation times for each test problem. The general dispersion of lines observed in the data obtained with the RTX 3090 GPU suggests that this hardware may be more influenced by problem complexity, resulting in higher variability in computational performance. In contrast, the A100 GPU exhibits a more clustered grouping of computation times, indicating more stable and predictable performance across different problem dimensions. Notably, two exceptions to this trend are observed in the GPU-based implementation of BWP (

Figure 8) and MaGI (

Figure 9) algorithms.

The second and more pertinent observation concerns the gradient of computation time. When testing GPU-based algorithms with the RTX 3090 GPU, increases in problem dimension result in a less pronounced rise in computational time compared to the corresponding sequential version of the same algorithm. Conversely, most tests conducted with the A100 GPU suggest an almost linear relationship between computational time and problem dimension. It is noteworthy, however, that some results obtained with the BWP and MaGI algorithms deviate more substantially from a linear trend. This implies that the rate at which computational time increases remains more constant with the growing problem dimension when the A100 GPU is used.

This observation suggests that GPU-based algorithms can more efficiently handle the scaling of computational demands. It implies that the parallel implementation is adept at effectively distributing and managing the workload across its parallel processing cores, thereby better controlling the rise in computational time.

Insights into the scalability and efficiency gains of the proposed parallelization strategy can be derived from an analysis of the speedup achieved during the transition from sequential to parallel GPU algorithms. Across all test scenarios, the speedup lines (bottom plots in

Figure 3 through

Figure 9) exhibit a clear upward trend, indicating the proficient parallelization of the algorithms in the GPU-based implementation tested in both the RTX 3090 and A100 GPU. Furthermore, with the increase in problem dimension, the speedup consistently rises, emphasizing the effectiveness of the parallelization strategy in managing larger computational workloads and highlighting its scalability.

With the RTX 3090 GPU, variations in problem dimensions, as observed in the Jaya, BWP, and MaGI GPU-based algorithms, sometimes result in more jagged speedup lines, while the A100 GPU tends to consistently produce smoother speedup results. This observation suggests that the former GPU is more sensitive to variations in computational demands. While the speedup lines obtained with both GPUs exhibit positive growth for all algorithms across all problem dimensions, specific algorithms such as EJAYA, Rao-2, and Rao-3 display a concave downward shape in the speedups achieved with the RTX 3090, indicating a slowdown in speedup growth at higher dimensions. In contrast, speedup lines results with the A100 GPU mostly show a nearly linear or concave upward shape, signifying that the parallelization strategy effectively leverages this GPU hardware to achieve superior processing capabilities.

A noteworthy observation unfolds when examining the speedup performance across different problems. Problems 9 and 10 generally demonstrate remarkable speedup achievements with the A100 GPU. In contrast, these same problems tend to rank among the worst speedup results when executed on the RTX 3090 GPU. This disparity in speedup outcomes between the two GPUs underscores the nuanced influence of problem characteristics on the effectiveness of parallelization strategies.

A more comprehensive analysis aiming to further investigate the adaptation capabilities of the parallelization strategy across various GPU hardware configurations is presented in

Table 4. The provided data includes the average times of all test problems per problem dimension and is grouped by algorithm. The computations encompass results for the sequential implementation (in the column labeled `CPU’) and the GPU-based implementation executed on four different GPUs, along with the achieved speedup gains.

The performance hierarchy among the tested GPUs indicates that the Tesla T4 exhibits the lowest overall performance, followed by the RTX 3090, Tesla V100S, and, finally, the A100, thereby showcasing the highest performance. This alignment consistently corresponds to the inherent hardware characteristics and capabilities of each GPU. The RTX 3090, distinguished by a significantly greater number of CUDA cores and higher memory bandwidth compared to the Tesla T4, naturally positions itself higher in the performance hierarchy. Despite both the Tesla V100S and A100 having fewer CUDA cores than the RTX 3090, they are intricately engineered for parallel processing, featuring significantly more advanced memory subsystems capable of facilitating data movement at substantially higher rates.

Comparatively, the Tesla V100S and A100 GPUs differ in their configurations. The former has both a higher CUDA core count and a wider memory bus. It is important to acknowledge that critical aspects of the GPU microarchitecture, including CUDA compute capability, instruction pipeline depth, execution unit efficiency, and memory hierarchy intricacies, also contribute significantly to the overall performance of a GPU.

All tested GPUs demonstrated positive speedup ratios across all algorithms and dimensions. Nevertheless, concerning algorithms EJAYA, BWP, and MaGI, the Tesla T4 GPU exhibited a marginal decrease in average speedup ratios within some of the middle problem dimensions, followed by an increase in the highest problem dimension. Despite this marginal reduction, it is crucial to emphasize that the Tesla T4 GPU consistently maintained positive speedup ratios. This indicates a nuanced shift in the efficiency of the GPU, which could be attributed to factors related to the parallelization strategy or to GPU hardware, such as the arrangement of CUDA cores or the internal configuration of processing units.

When examining the execution times of the sequential implementations, the Rao-1 algorithm stands out as the fastest, indicative of a lower computational demand. However, this was not mirrored in the GPU results of the same algorithm. The computational times obtained with the GPU-based Rao-1, across all tested GPUs, closely resemble those acquired with the GPU-based Jaya, a mathematically more complex algorithm. This observation suggests a potential bottleneck in the performance of the parallelization strategy, possibly stemming from inherent characteristics of the parallelization design coupled with other factors related to GPU programming. These factors may encompass thread setup and coordination, overhead associated with kernel launch, thread synchronization mechanisms, and other CUDA programming nuances.

6. Conclusions

The analysis of GPU-based implementations of metaphor-less optimization algorithms, conducted within the framework of a standardized parallelization strategy, has yielded insightful conclusions regarding the efficacy, scalability, and performance characteristics of parallel computation across a diverse set of large-scale SNLEs with varying dimensions and tested using distinct GPU hardware configurations.

The utilization of parallel computational resources on GPUs proved highly effective in enhancing algorithmic performance. The GPU-based versions of the tested metaphor-less optimization algorithms consistently demonstrated superior efficiency compared to their sequential counterparts. In the detailed analysis conducted, the achieved speedup ranged from a minimum of 33.9 to a maximum of 561.8. The observed speedup gains showed a proportional correlation with the augmentation of the problem dimension. Smaller dimensions resulted in more modest speedup gains, while larger dimensions led to more significant improvements. This behavior aligns with the adaptive nature of the parallelization strategy, dynamically adjusting GPU processing capabilities based on problem complexity.

The efficiency of GPU-based implementations varied among different optimization algorithms. The Rao-3 algorithm demonstrated exceptional efficiency in leveraging parallel processing capabilities, achieving the highest average speedup across all tested dimensions. Conversely, algorithms with more intricate mathematical models, such as EJAYA, exhibited more modest yet still significant speedup values. This observation underscores the impact of algorithmic complexity on the effectiveness of parallelization.

These observations were derived from results obtained with both the RTX 3090 and A100 GPUs, with the latter consistently outperforming the former despite having fewer CUDA cores. Moreover, the RTX 3090 GPU displayed higher sensitivity to variations in problem complexity, resulting in greater variability in computational performance. On the other hand, the A100 GPU showcased more stability and predictability, delivering consistent performance across different problem dimensions. This underscores the nuanced influence of GPU hardware characteristics on algorithmic efficiency.

The dynamic adaptability of the parallelization strategy to various GPU hardware configurations was further analyzed. Results revealed that all tested GPUs exhibited positive speedup ratios across all algorithms and dimensions, leading to the emergence of a performance hierarchy. The Tesla T4 demonstrated the lowest overall performance, followed by the RTX 3090, the Tesla V100S, and finally, the A100, which showcased the highest performance. This performance hierarchy is closely aligned with the intrinsic hardware characteristics of each GPU, indicating that the parallelization strategy effectively capitalized on the specific architectural features of each hardware.

Furthermore, the results highlighted that while factors such as the number of cores and memory bandwidth play a crucial role in determining GPU capabilities, they only offer a partial view of the overall performance. Such nuances underscored the importance of interpreting performance results within the context of real-world applications.

In GPU programming, the pursuit of optimal performance should be harmonized with some degrees of flexibility. While maximizing performance remains crucial, overly rigid optimized strategies may restrict algorithmic parameters or compatibility with different GPU hardware. The parallel implementation proposed in this paper showcases the capability of a generic approach to efficiently manage GPU resource allocation while providing scalability across different hardware configurations. This adaptability is achieved automatically, without requiring any source code modification, ensuring that applications remain responsive to evolving computational needs and effectively harness the potential of GPUs for general-purpose computing.

Refining and optimizing the parallelized metaphor-less optimization algorithms on GPU architecture, as presented in this study, unveils several promising avenues for further research. An exhaustive examination of the algorithms under consideration, with a concerted effort on refining and optimizing their parallelized versions, holds the potential for substantial enhancements to their performance. Delving deeper into the specific impact of test problems on parallel computation may yield valuable insights into the nuanced effects of parallel processing across diverse computational scenarios. Exploring dynamic parallelization strategies that adapt to varying problem complexities and GPU characteristics could also lead to additional gains in efficiency, scalability, and performance across a broader spectrum of hardware configurations. As an illustrative example, revising the method responsible for determining the block size, as suggested in this study, resulted in a noteworthy improvement of approximately 19.8% in the average performance of the GPU-based implementations.