1. Introduction

The young coconut is one of the most common fruits in the world and has substantial food applications. In the Philippines alone, there are approximately 330 million coconut trees, producing 45 nuts per tree each year. In addition, 26% of the total agricultural land in the Philippines is classified as coconut land (Dar, 2019). The maturity of young coconut can be classified into three stages: mucus-like, cooked-rice, and leather-like (Albalos et al., 2021; Javel et al., 2019; Pascua, 2017). The mucus-like stage is called Malauhog in Tagalog; at this stage the coconut meat is 6-7 months old, very soft, and easily removed from the shell. In the cooked-rice stage, called Malakanin in Tagalog, the coconut meat is 7-8 months old and has the consistency of cooked rice. This stage is mostly used for making salads and buko pie. The leather-like stage, also called Malakatad, has coconut meat that is 8-9 months old. Malakatad is commonly used for making sweets. As the coconut ripens, the coconut water is gradually replaced by the hardening meat (Grant, 2021). There is no standard basis for determining the maturity of young coconut (Beach & Hamre, 2017; Javel et al., 2019), but other studies have shown that the thickness of the coconut meat correlates strongly with the maturity of the young coconut (Pandiselvam, 2020).

A skilled fruit grower can judge the maturity of young coconut only through its physical characteristics, mechanical strength, and acoustic and physiological properties (Rahmawati et al., 2019). For ordinary buyers, the maturity of a young coconut and the changes it undergoes are imperceptible (Terdwongworakul et al., 2009).

In the Philippines, the determination of the maturity stage of a coconut after harvest has remained highly subjective. Farmers and traders tap fresh young coconuts with their fingernails, their knuckles, the rounded end of a knife, or the knife itself, and use the resulting sound to differentiate maturity levels. However, this is a relatively rare skill that requires experience, and it is difficult for people with less sensitive hearing or in noisy market environments. Moreover, this approach is not feasible for the large volumes of coconuts handled in export or industrial processing (Albalos et al., 2021).

This study relates the maturity of young coconut to its sound properties. Fruit marketers often rely on a limited pool of skilled laborers. To increase the accuracy of determining the maturity level of coconut, the study aimed to develop a coconut meat maturity level identifier device using acoustic sensing and a convolutional neural network (CNN).

Traditional detection of coconut maturity relies on manually tapping the coconut with a bolo or machete, and this method is inconsistent. It can be performed only by people with sufficient knowledge of coconuts. Because the traditional procedure is manual, the proposed device will help eliminate subjective results.

Raspberry Pi products, as low-cost processors, are now used for applications that utilize neural networks (Monteiro, 2018). Image processing has proven useful for feature extraction, and classification of coconut based on this technique has been carried out (Arboleda et al., 2020; Tantrakansakul & Khaorapapong, 2014). Coconut research has also adopted machine learning techniques to determine quality (Alonzo et al., 2018). The sound of tapping the coconut can be converted to an image using spectrogram techniques, and convolutional neural networks can be used to classify spectrograms (Curilem et al., 2018; Prasetyo et al., 2019). The Raspberry Pi is capable of processing spectrograms and running deep learning algorithms. Deep learning has been used in a wide range of applications (Afrisal & Gedung, 2019; Hendry et al., 2020). One promising CNN architecture is LeNet, which is lightweight, performs well, and has been successfully applied to the recognition of handwritten characters (Akhand & Rahman, 2015; He et al., 2015; James, 2017; Purkaystha, 2017; Septianto et al., 2018; Shokoohi et al., 2013; Soleh, 2017; Syarief & Setiawan, 2020).

In this study, a portable device was developed that mechanically taps the coconut, acquires the audio, converts the audio to a spectrogram, and finally classifies the maturity using a CNN architecture. A Raspberry Pi 3B+ was used as the system processor and was configured to perform the spectrogram and deep learning tasks. The spectrograms were transformed before being fed to the LeNet CNN model. The CNN parameters were tuned to maximize performance while keeping in mind the memory and processing limitations of the system. Harvesting of the coconut is not part of the study, but attempts to automate this process have been made (Maheswaran et al., 2017; Rajesh et al., 2015).

The present article is divided into four main sections. The first section gives a brief introduction. The second section explains the operation of the proposed system for the classification of coconut maturity. The third section presents the experimental setup and the data gathered from different tests of the system. Finally, the last section draws conclusions regarding the performance of the system, according to the results obtained during the tests.

2. Research Method

This section describes the basic operation of the program for audio acquisition, conversion to spectrogram, preprocessing, and classification using a Raspberry Pi. Section 2 is divided into two subsections: the first sets out the physical working conditions for the implementation of the application, and the second describes the algorithm’s flow.

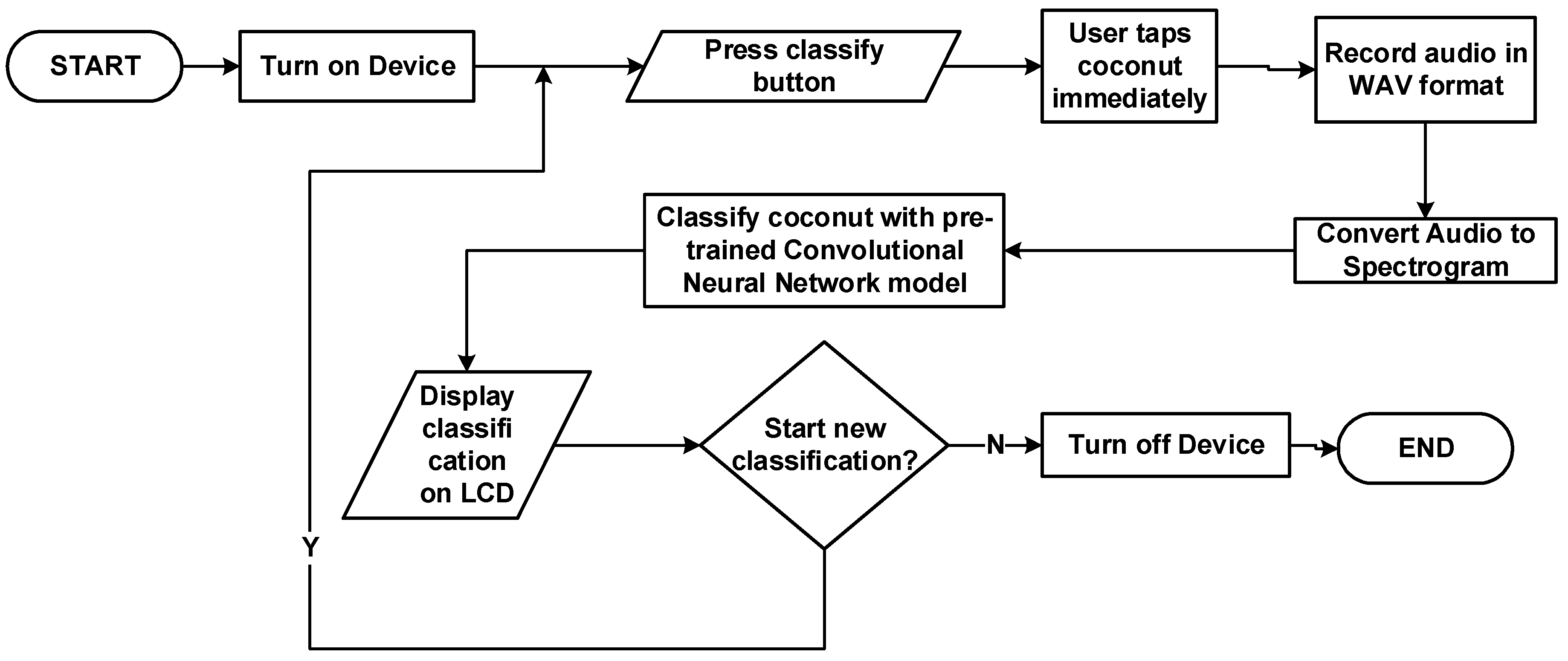

The flow of the system process is described in Figure 1. Initially, the device is manually turned on via a switch; this boots the Raspberry Pi 3B+, and the system program starts automatically. The display also turns on and, once everything has been initialized, shows a message that the device is ready to classify. To classify, the user presses a button. This triggers the program to turn on the microphone, record the sound, save it, and convert it to a spectrogram JPEG file. This image file is fed to a pre-trained CNN classifier. The classification result is then displayed on the LCD. This process can be repeated until the user switches off the device.
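The flow described above can be sketched as a simple event loop. This is a minimal illustration only, not the authors' program: the function name `run_device`, the event strings, and the four callables standing in for the microphone, spectrogram conversion, CNN classifier, and LCD are all hypothetical placeholders.

```python
def run_device(events, record_audio, to_spectrogram, classify, display):
    """Sketch of the device flow: wait for button presses until power-off.

    `events` is any iterable of "button" / "power_off" strings (hypothetical);
    the four callables are stand-ins for the real hardware and model.
    """
    display("Ready to classify")           # shown once initialization is done
    results = []
    for event in events:
        if event == "power_off":           # user switches the device off
            break
        if event == "button":              # one classification cycle
            samples = record_audio()       # tap and record the sound
            image = to_spectrogram(samples)
            label = classify(image)        # pre-trained CNN in the real system
            display(label)
            results.append(label)
    return results


if __name__ == "__main__":
    shown = []
    run_device(
        ["button", "power_off"],
        record_audio=lambda: [0.0, 0.1, 0.0],
        to_spectrogram=lambda samples: [samples],
        classify=lambda image: "malakanin",
        display=shown.append,
    )
    print(shown)
```

Passing the hardware-facing steps in as callables keeps the loop testable on a desktop machine before it is wired to the actual microphone, solenoid, and display.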

The spectrogram script implemented in the Python program uses the Fast Fourier Transform (FFT). It was chosen because many studies have demonstrated its robustness for classification and segmentation even in noisy environments (Lopez-Caudana et al., 2017; Rahmawati et al., 2019). Moreover, the use of spectrograms in classifying harmonic signals has been found to be accurate, fast, and cost-efficient (Jopri et al., 2017). These harmonic signals are a key difference in the sound produced by coconuts of different maturity levels.

Methods and Materials

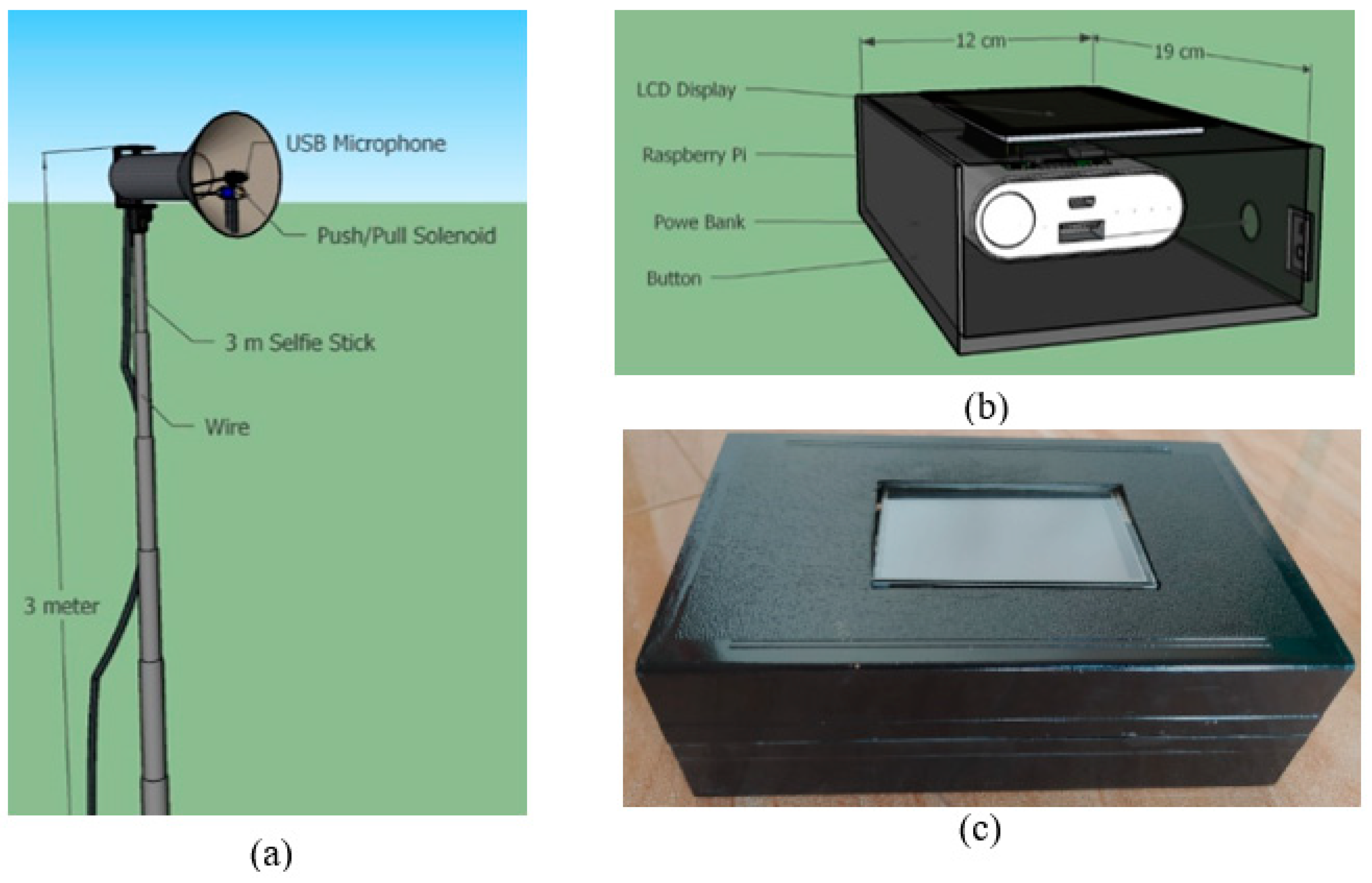

The proposed design of the device is shown in Figure 2. Figure 2(a) shows the proposed tapper portion of the system, which consists of a microphone, a push/pull solenoid, and a retractable stick. The microphone and solenoid will be installed close to each other inside a conical enclosure. This design will isolate outside noise that may affect the readings. The enclosure will then be mounted on a 3-meter selfie stick. A 5-meter USB extender cable with repeater will connect the USB microphone to the Raspberry Pi. A stranded wire at least 5 meters long will connect the solenoid to a Raspberry Pi GPIO pin. These wired connections will provide power and control signals to both the microphone and the solenoid.

The circuit of the system will be installed inside the acrylic casing, and the components will be placed close together to minimize the footprint of the whole device, since it needs to be portable and light. As shown in Figure 2(b), the circuit will be composed of the Raspberry Pi 3B+, a power bank placed behind the Raspberry Pi, and a 3.5-inch TFT touchscreen display. The touchscreen display, together with the power and control buttons, will be visible outside the casing.

Algorithm Flow

The program is written in Python using the IDLE software pre-installed in Raspbian OS.

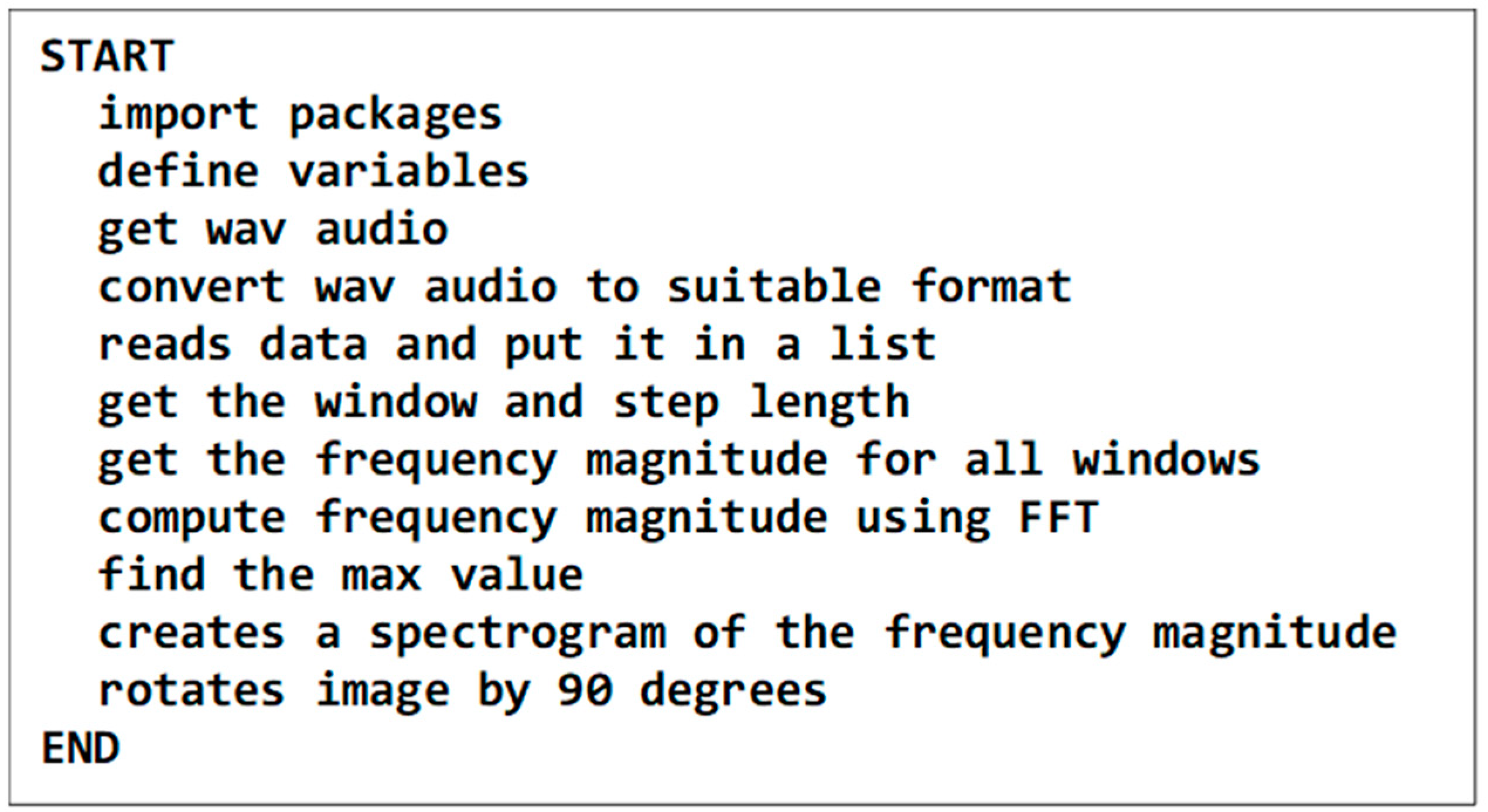

Figure 3 shows the pseudocode used to develop the source code that controls the flow of the system and converts the audio data to image data before feeding it to the CNN classifier. To perform the conversion, the necessary packages are imported and variables are defined. A script that captures the audio from the microphone is executed. The signal is converted to a suitable format, and the data is converted to a Python list, which eases the mathematical operations. The parameters needed to draw the spectrogram, such as the window and step length, are then determined. After this, the frequency magnitude is computed using a Fast Fourier Transform algorithm, and the maximum value is computed next. Finally, through decision making and loops, a spectrogram based on the frequency magnitude is drawn and rotated by 90 degrees, since the x- and y-axes are interchanged in the drawn spectrogram.
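The steps listed above (window and step length, FFT magnitude, maximum value, rotation) can be sketched with the Python standard library alone. This is a hedged illustration, not the authors' script: the function names, the window of 256 samples, the step of 128 samples, and the Hann taper are assumptions chosen for the example, and the FFT here is a plain radix-2 implementation that requires a power-of-two window.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def spectrogram(samples, window=256, step=128):
    """Return one row of normalized magnitudes per time frame."""
    # Hann taper (an assumption; the source does not name a window function).
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * i / (window - 1))
            for i in range(window)]
    frames = []
    for start in range(0, len(samples) - window + 1, step):
        chunk = [samples[start + i] * hann[i] for i in range(window)]
        spectrum = fft(chunk)
        # Keep only the non-redundant half of the spectrum of a real signal.
        frames.append([abs(c) for c in spectrum[: window // 2]])
    # The maximum value is used to scale all magnitudes into [0, 1].
    peak = max((max(row) for row in frames), default=0.0) or 1.0
    return [[m / peak for m in row] for row in frames]


def to_image_rows(frames):
    """Rotate so time runs along x and frequency along y (low bins at bottom)."""
    n_bins = len(frames[0])
    return [[frame[b] for frame in frames] for b in range(n_bins - 1, -1, -1)]


if __name__ == "__main__":
    rate = 8000  # hypothetical sampling rate in Hz
    tone = [math.sin(2 * math.pi * 1000 * n / rate) for n in range(1024)]
    image = to_image_rows(spectrogram(tone))
    print(len(image), "frequency rows x", len(image[0]), "time columns")
```

Each value in the returned grid can then be mapped to a pixel intensity or color before the image is saved and passed to the classifier.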

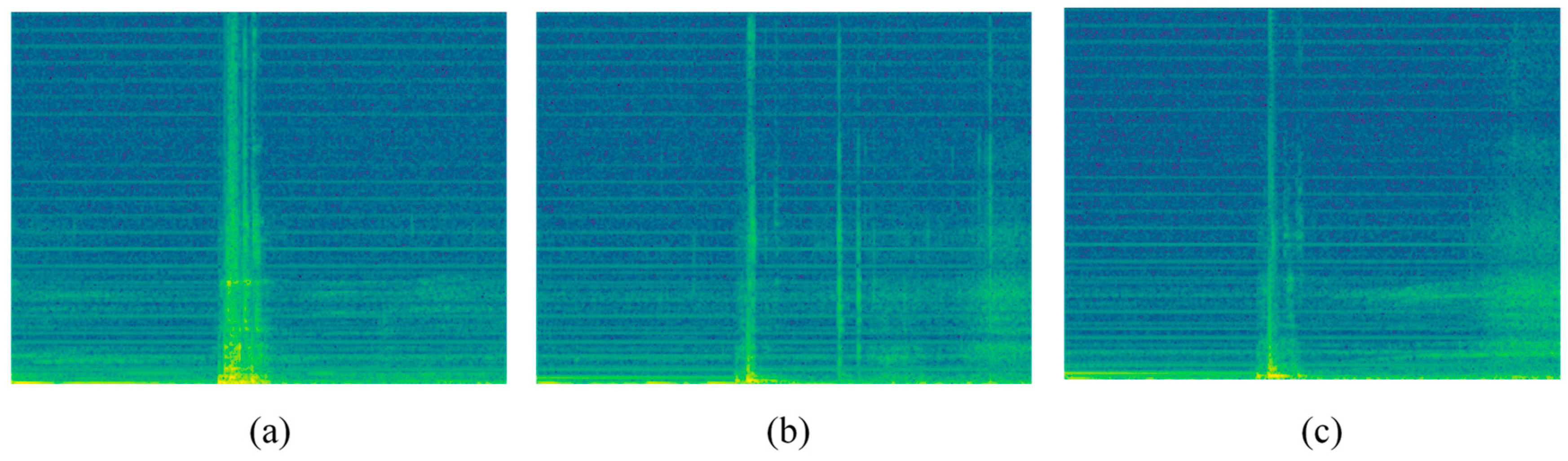

The images used as training data for the CNN classifier are spectrograms of the audio acquired by tapping the coconut using a solenoid. Ninety coconuts, 30 for each of the three maturity levels, were used for training the neural network. Each coconut was tapped at six different spots.

Figure 4 shows a sample spectrogram image for each coconut maturity. A database of 540 spectrogram images was then used for training, of which 25% was randomly set aside for testing the accuracy of the model.
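The dataset arithmetic above (90 coconuts × 6 taps = 540 images, with 25% held out) can be checked with a short sketch. The index layout, the function names, and the fixed random seed are hypothetical; only the counts and the 25% fraction come from the text.

```python
import random

MATURITIES = ["malauhog", "malakanin", "malakatad"]


def build_index(coconuts_per_class=30, taps_per_coconut=6):
    """One (maturity, coconut id, tap id) entry per recorded spectrogram."""
    return [(m, c, t)
            for m in MATURITIES
            for c in range(coconuts_per_class)
            for t in range(taps_per_coconut)]


def random_split(index, test_fraction=0.25, seed=0):
    """Shuffle the index and hold out a fraction of it for testing."""
    shuffled = index[:]
    random.Random(seed).shuffle(shuffled)   # seeded only for repeatability
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    train, test = random_split(build_index())
    print(len(train), "training images,", len(test), "test images")
```

With these numbers the split yields 405 training images and 135 test images. A purely random split of this kind can place different taps of the same coconut in both subsets.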

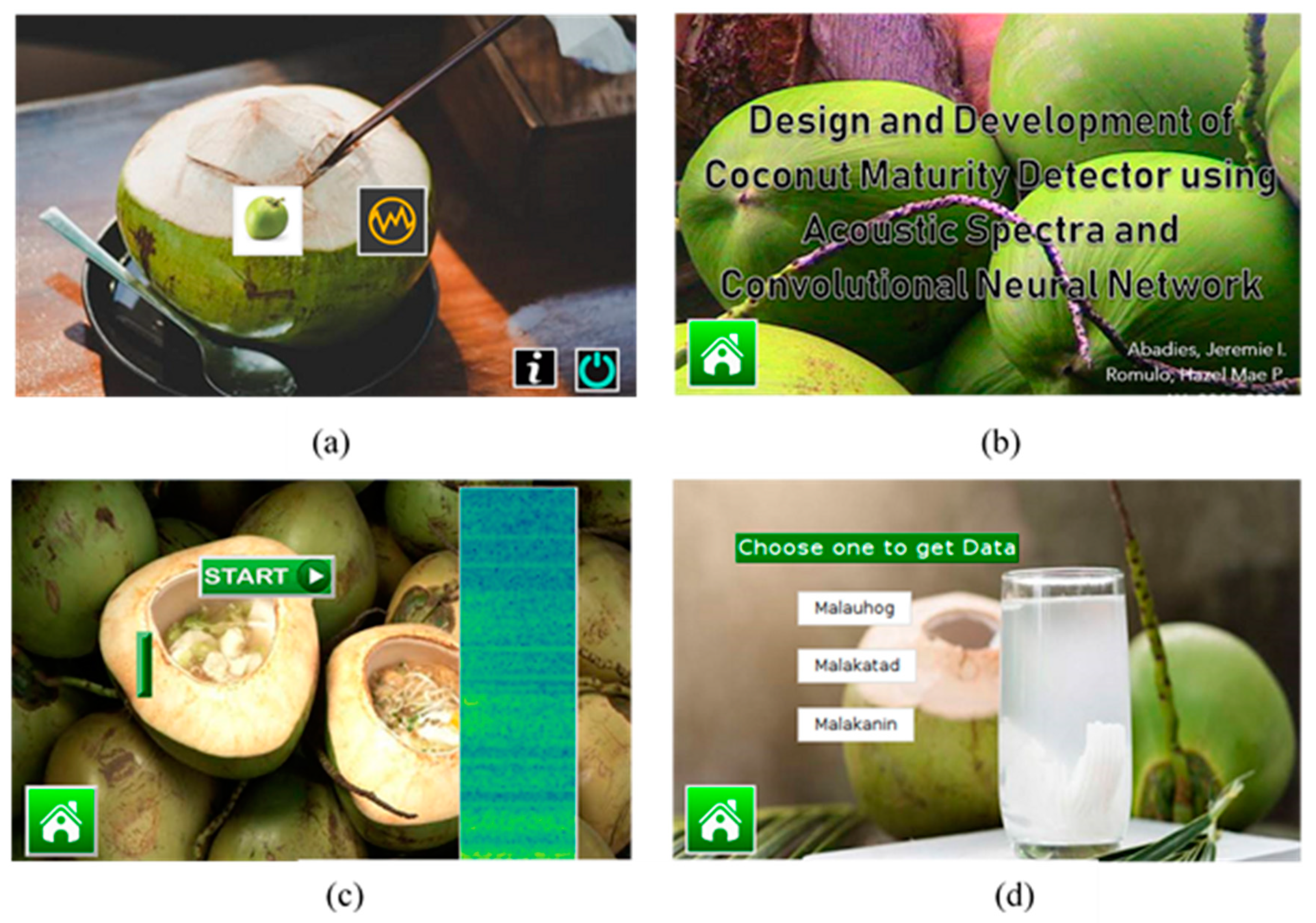

A convolutional neural network was developed in Keras. The model was trained on a dataset of spectrogram images. The LeNet architecture was chosen as the CNN classifier because of its simplicity and small size, which the Raspberry Pi can handle. It has seven layers: three convolutional layers, two sub-sampling (pooling) layers, one fully connected layer, and the output layer. The convolutional layers use 5×5 kernels with stride 1. The sub-sampling layers use 2×2 average pooling. ReLU activations were used throughout the network. Finally, the model was integrated into the system program. A graphical user interface was developed, as shown in Figure 5. The system has two modes of operation: classification and data acquisition.
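To give a feel for why a LeNet-style network fits on a Raspberry Pi, the sketch below walks the seven layers described above and computes output shapes and parameter counts. The 5×5 stride-1 convolutions, 2×2 pooling, and three output classes follow the text; the 32×32 single-channel input, the filter counts (6, 16, 120), and the 84-unit dense layer are assumptions borrowed from the classical LeNet-5 layout, since the source does not state them.

```python
def conv_out(size, kernel=5, stride=1):
    """Output width of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


def pool_out(size, window=2):
    """Output width of non-overlapping pooling."""
    return size // window


def lenet_layer_summary(input_size=32, channels=1, filters=(6, 16, 120),
                        dense_units=84, classes=3, kernel=5):
    """Return (name, output shape, trainable parameters) for each layer."""
    layers = []
    size, depth = input_size, channels
    for i, n_filters in enumerate(filters):
        # Each filter has kernel*kernel*depth weights plus one bias.
        params = (kernel * kernel * depth + 1) * n_filters
        size, depth = conv_out(size, kernel), n_filters
        layers.append((f"conv{i + 1}", (size, size, depth), params))
        if i < len(filters) - 1:            # pooling follows the first two convs
            size = pool_out(size)
            layers.append((f"avgpool{i + 1}", (size, size, depth), 0))
    flat = size * size * depth
    layers.append(("dense", (dense_units,), (flat + 1) * dense_units))
    layers.append(("output", (classes,), (dense_units + 1) * classes))
    return layers


if __name__ == "__main__":
    summary = lenet_layer_summary()
    for name, shape, params in summary:
        print(f"{name:9s} {str(shape):14s} {params:6d}")
    print("total parameters:", sum(p for _, _, p in summary))
```

Under these assumed sizes the network has roughly 61 thousand parameters; a larger input image would increase the size of the final convolutional feature map and therefore the parameter count of the dense layer.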

3. Results and Discussion

The LeNet classifier was first trained with spectrogram images without augmentation, reaching an accuracy of 83.67% after 25 epochs. Using the same spectrogram samples, the model was then trained with three types of augmentation, where X2, X4, and X6 represent rotation, shift, and shear of the image, respectively.

Table 1 summarizes the findings for these trials, with other parameters held constant. Augmentation was found not to improve classification accuracy. Thus, the model integrated into the system does not perform any augmentation, which can speed up the classification process.
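For readers unfamiliar with the three transforms named above, the following sketch applies rotation, shift, and shear to a small image stored as a nested list. It is an illustration of the geometry only, under the assumption of nearest-neighbor sampling about the image center; the function names are hypothetical and this is not the augmentation code used in the study.

```python
import math


def affine_warp(image, a, b, c, d, tx=0.0, ty=0.0, fill=0.0):
    """Nearest-neighbor warp about the image center.

    Output pixel (x, y) takes its value from the input pixel at
    (a*dx + b*dy + cx + tx, c*dx + d*dy + cy + ty), where (dx, dy) is the
    offset of (x, y) from the center (cx, cy). Samples outside the image
    are replaced by `fill`.
    """
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            sx = round(a * dx + b * dy + cx + tx)
            sy = round(c * dx + d * dy + cy + ty)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out


def rotate(image, degrees):
    t = math.radians(degrees)
    return affine_warp(image, math.cos(t), -math.sin(t), math.sin(t), math.cos(t))


def shift(image, dx, dy):
    # Moving content by (dx, dy) means sampling from (x - dx, y - dy).
    return affine_warp(image, 1, 0, 0, 1, tx=-dx, ty=-dy)


def shear(image, factor):
    # Horizontal shear: the sampling column depends on the row offset.
    return affine_warp(image, 1, factor, 0, 1)


if __name__ == "__main__":
    column = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
    for row in shear(column, 1):
        print(row)
```

In a spectrogram the two image axes carry time and frequency, so each of these transforms moves energy to a different time or frequency position.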

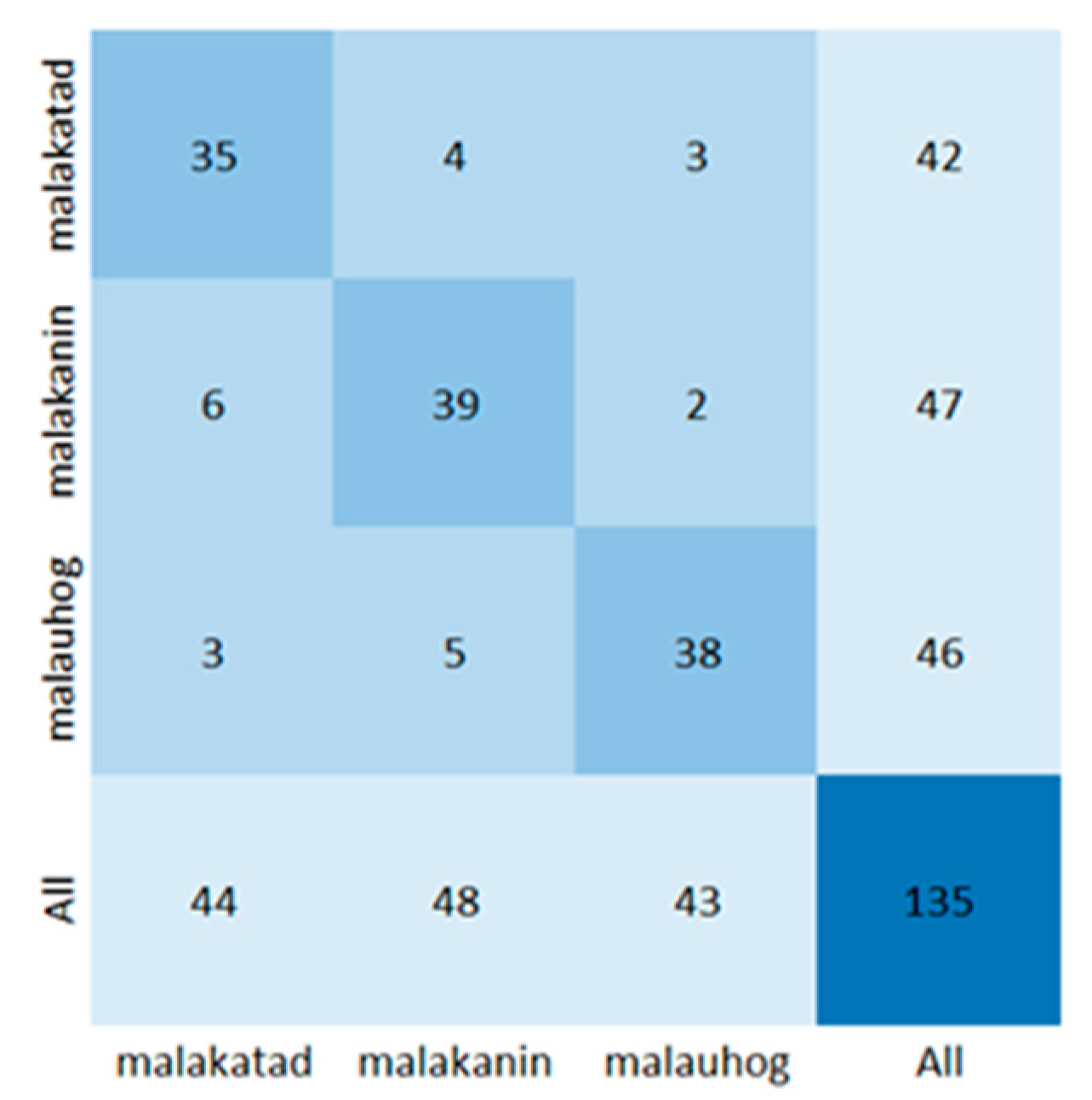

A more detailed view of the model testing is shown in Figure 6. The classification accuracy of the model is consistent across the three maturities of coconut fruit. On average, the classification process takes about 4.5 seconds, taking into account all the steps involved: tapping the coconut, recording the audio, converting the audio to a spectrogram, loading the spectrogram into the CNN model, and displaying the result.
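Consistency across the three maturities can be checked by computing accuracy separately for each class. The sketch below shows one way to do this; the function names are hypothetical, and the six labels in the usage example are made-up placeholders for illustration, not results from this study.

```python
from collections import Counter


def per_class_accuracy(true_labels, predicted_labels):
    """Fraction of correctly classified samples for each true class."""
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: correct[label] / totals[label] for label in totals}


def overall_accuracy(true_labels, predicted_labels):
    """Fraction of all samples that were classified correctly."""
    hits = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return hits / len(true_labels)


if __name__ == "__main__":
    # Placeholder labels only; they do not reproduce the paper's figures.
    truth = ["malauhog", "malauhog", "malakanin", "malakanin",
             "malakatad", "malakatad"]
    guess = ["malauhog", "malauhog", "malakanin", "malakatad",
             "malakatad", "malakatad"]
    print(per_class_accuracy(truth, guess))
    print(overall_accuracy(truth, guess))
```

A large gap between the per-class values would indicate that the overall accuracy hides a weakness for one maturity stage.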

4. Conclusions

The proposed CNN classifier based on the LeNet architecture correctly classified 82% of the coconut samples during testing of the model. Augmentation of the spectrogram data was found not to significantly increase the performance of the classifier. These results demonstrate the ability of the system to serve as a substitute for manual classification of coconut. The design of the device as a stand-alone system, with a retractable part on which the microphone and tapper are installed, gives it the versatility to classify coconut fruit even before harvesting. The classification process, from mechanically tapping the coconut to displaying the result, took an average of 4.5 seconds, which is fast considering the complexity of the algorithm that the Raspberry Pi must run with its limited memory and processing power.

Acknowledgements

This work is dedicated to the Department of Computer and Electronics Engineering of the Cavite State University.

References

- Afrisal, H. and Gedung D., “Metode Pengenalan Tempat Secara Visual Berbasis Fitur CNN untuk Navigasi Robot di Dalam Gedung Visual Place Recognition Method Based-on CNN Features for Indoor Robot Navigation,” J. Teknol. dan Sist. Komput., vol. 7, no. 2, pp. 47–55, 2019.

- Akhand, M. A. H. and Rahman M., “Bangla Handwritten Numeral Recognition using Convolutional Neural Network,” 2015 Int. Conf. Electr. Eng. Inf. Commun. Technol., no. May, pp. 21–23, 2015.

- Albalos, R. M. C., Suministrado D. C., Fernandez K., and Quilloy E. P., “Acoustic impulse response of young coconut (Cocos nucifera L.) fruits in relation to maturity,” vol. 23, no. 1, 2021.

- Alonzo, L. M. B., Chioson F. B., Co H. S., Bugtai N. T., and Baldovino R. G., “A Machine Learning Approach for Coconut Sugar Quality Assessment and Prediction,” 2018 IEEE 10th Int. Conf. Humanoid, Nanotechnology, Inf. Technol. Control. Environ. Manag., pp. 1–4, 2018.

- Arboleda, E. R., Fajardo A. C., and Medina R. P., “Green coffee beans feature extractor using image processing,” Telkomnika, vol. 18, no. 4, pp. 2027–2034, 2020.

- Beach, J. B. and Hamre C. H., Tropical and Sub-tropical Fruits, 1st ed. 2017.

- Ben Hamida, A., Samet M., Lakhoua N., and Drira M., “Sound Spectral Processing Based on Fast Fourier Transform Applied to Cochlear Implant For the Conception a Graphical Spectrogram and for the Generation of Stimulating Pulses,” IEEE, pp. 1388–1393, 1998.

- Curilem, M., Canario J. P., Franco L., and Rios R., “Using CNN to classify spectrograms of seismic events from Llaima volcano (Chile),” IEEE, 2018.

- Dar, W., “PH Coconut Industry A ‘Sleeping Giant,’” BizNewsAsia, 2019.

- Grant, A. (2021, April 16). Harvesting of coconut trees - how to pick coconuts from trees. Gardening Know How. https://www.gardeningknowhow.com/edible/fruits/coconut/when-are-coconuts-ripe.htm.

- Hendry, J., Rachman A., and Zulherman D., “Sistem deteksi ketepatan pembacaan surah al-Kautsar berbasis kata menggunakan mel frequency cepstrum coefficient dan cosine similarity Recites fidelity detection system of al-Kautsar verse based on words using mel,” vol. 8, no. January, pp. 27–35, 2020.

- Javel, I. M., Bandala A. A., Salvador R. C., Bedruz R. A. R., Dadios E. P., and Vicerra R. R. P., “Coconut fruit maturity classification using fuzzy logic,” 2018 IEEE 10th Int. Conf. Humanoid, Nanotechnology, Inf. Technol. Commun. Control. Environ. Manag. HNICEM 2018, pp. 1–6, 2019.

- James, A., “Malayalam Handwritten Character Recognition Using Convolutional Neural Network,” Int. Conf. Inven. Commun. Comput. Technol., no. Icicct, pp. 278–281, 2017.

- Keller, M. and Medler J., “Use of FFT-Based Measuring Instruments for EMI Compliance Measurements,” 2014 Int. Symp. Electromagn. Compat. Tokyo, no. 1, pp. 1–2, 2014.

- Lopez-Caudana, E., Quiroz, O., Rodríguez, A., Yépez, L., & Ibarra, D. (2017). Classification of materials by acoustic signal processing in real time for Nao Robots. International Journal of Advanced Robotic Systems, 14(4), 172988141771499.

- Maheswaran, S., Sathesh S., Saran G., and Vivek B., “Automated Coconut Tree Climber,” Int. Conf. Intell. Comput. Control, 2017.

- Monteiro, A., De Oliveira M., De Oliveira R., and Silva T., “Embedded application of convolutional neural networks on Raspberry Pi for SHM,” Electron. Lett., vol. 54, no. 11, pp. 680–682, 2018.

- Pandiselvam, R. et al., “Mechanical properties of tender coconut (COCOS NUCIFERA L.): Implications for the design of processing machineries,” J. Food Process Eng., vol. 43, no. 2, p. e13349, Feb. 2020.

- Pascua, A. M., “Impact Damage Threshold of Young Coconut (Cocos nucifera L.),” Int. J. Adv. Agric. Sci. Technol., vol. 4, no. 11, pp. 1–9, 2017.

- Prasetyo, H., Arie B., and Akardihas P., “Batik Image Retrieval using Convolutional Neural Network,” Telkomnika, vol. 17, no. 6, pp. 3010–3018, 2019.

- Purkaystha, B., “Bengali Handwritten Character Recognition Using Deep Convolutional Neural Network,” 2017 20th Int. Conf. Comput. Inf. Technol., pp. 22–24, 2017.

- Rahmawati, D., Haryanto, H., & Sakariya, F. (2019). The design of coconut maturity prediction device with acoustic frequency detection using naive Bayes method-based microcontroller. JEEMECS (Journal of Electrical Engineering, Mechatronic and Computer Science), 2(1). https://doi.org/10.26905/jeemecs.v2i1.2806.

- Rajesh, K., Thejus P., Allan P., Trayesh V., and Gokul M., “Robotic Arm Design for Coconut-tree Climbing Robot,” Appl. Mech. Mater., vol. 786, pp. 328–333, 2015.

- Shokoohi, Z., Hormat A. M., Mahmoudi F., and Badalabadi H., “Persian Handwritten Numeral Recognition Using Complex Neural Network And Non-linear Feature Extraction,” 2013 1st Iran. Conf. Pattern Recognit. Image Anal., 2013.

- Soleh, M., “Handwritten Javanese Character Recognition using Descriminative Deep Learning Technique,” 2017 2nd Int. Conf. Inf. Technol. Inf. Syst. Electr. Eng., pp. 325–330, 2017.

- Syarief, M. and Setiawan W., “Convolutional neural network for maize leaf disease image classification,” Telkomnika, vol. 18, no. 3, pp. 1376–1381, 2020.

- Tantrakansakul, P. and Khaorapapong T., “The Classification Flesh Aromatic Coconuts in Daylight,” IEEE, 2014.

- Terdwongworakul, A., Chaiyapong S., Jarimopas B., and Meeklangsaen W., “Physical properties of fresh young Thai coconut for maturity sorting,” Biosyst. Eng., vol. 103, no. 2, pp. 208–216, 2009.

- Zawawi, T. N. S. T., Abdullah A. R., and Shair E. F., “Electromyography Signal Analysis Using Spectrogram,” IEEE SCOReD, pp. 16–17, 2013.

- Jopri, M. H., Abdullah A. R., Manap M., Yusoff M. R., Sutikno T., and Habban M. F., “An Improved Detection and Classification Technique of Harmonic Signals in Power Distribution by Utilizing Spectrogram,” Int. J. Electr. Comput. Eng., vol. 7, no. 1, pp. 12–20, 2017.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).