Submitted: 03 May 2024

Posted: 07 May 2024


Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Deep Learning-Based Recommendation System

2.2. Reinforcement Learning-Based Recommendation System

3. Methodology

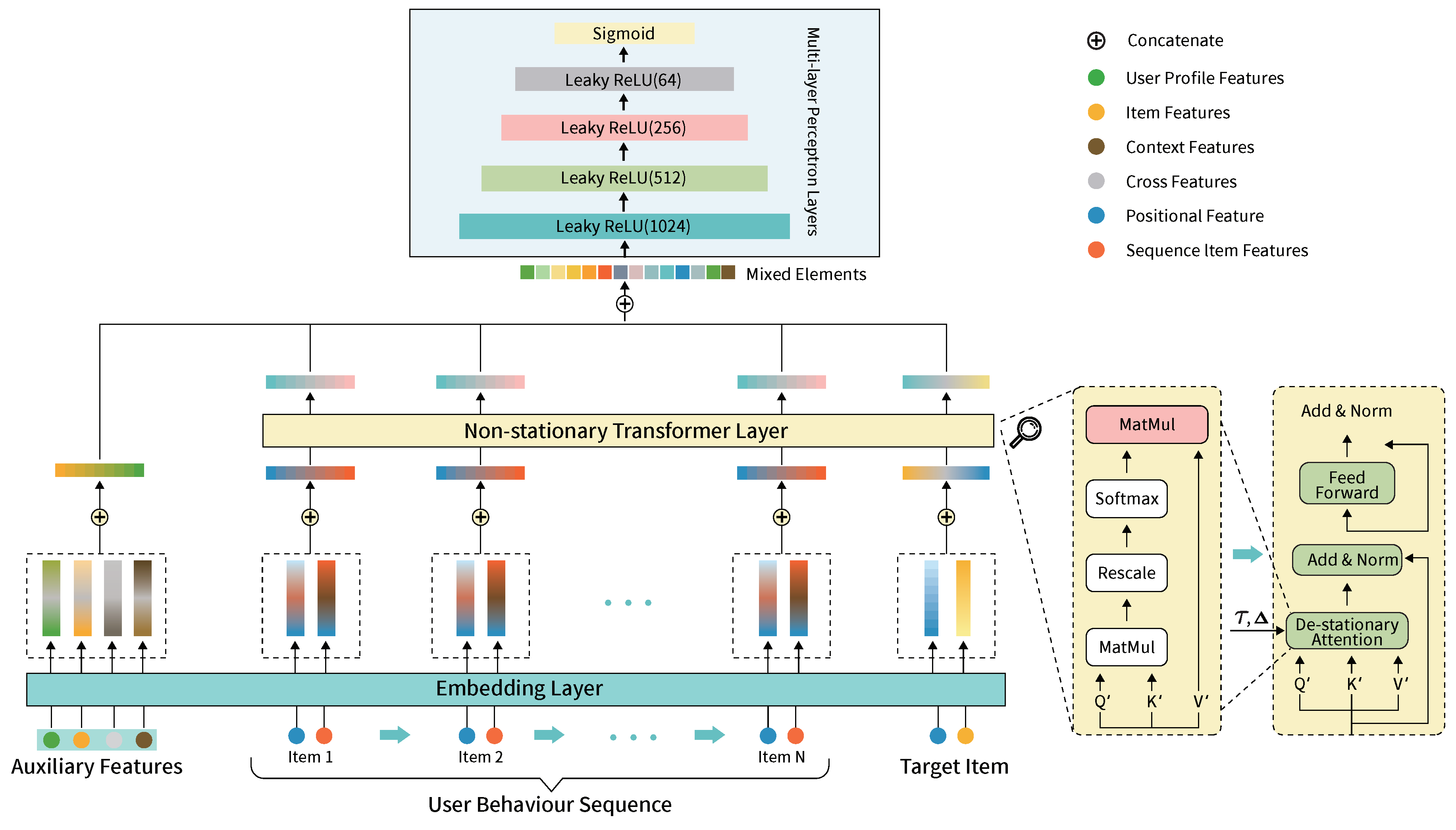

3.1. Non-stationary Transformer Structure

3.1.1. Projector Layer

3.1.2. Transformer Encoder Layer

3.2. Fusion in the Deep Learning-Based Recommendation System

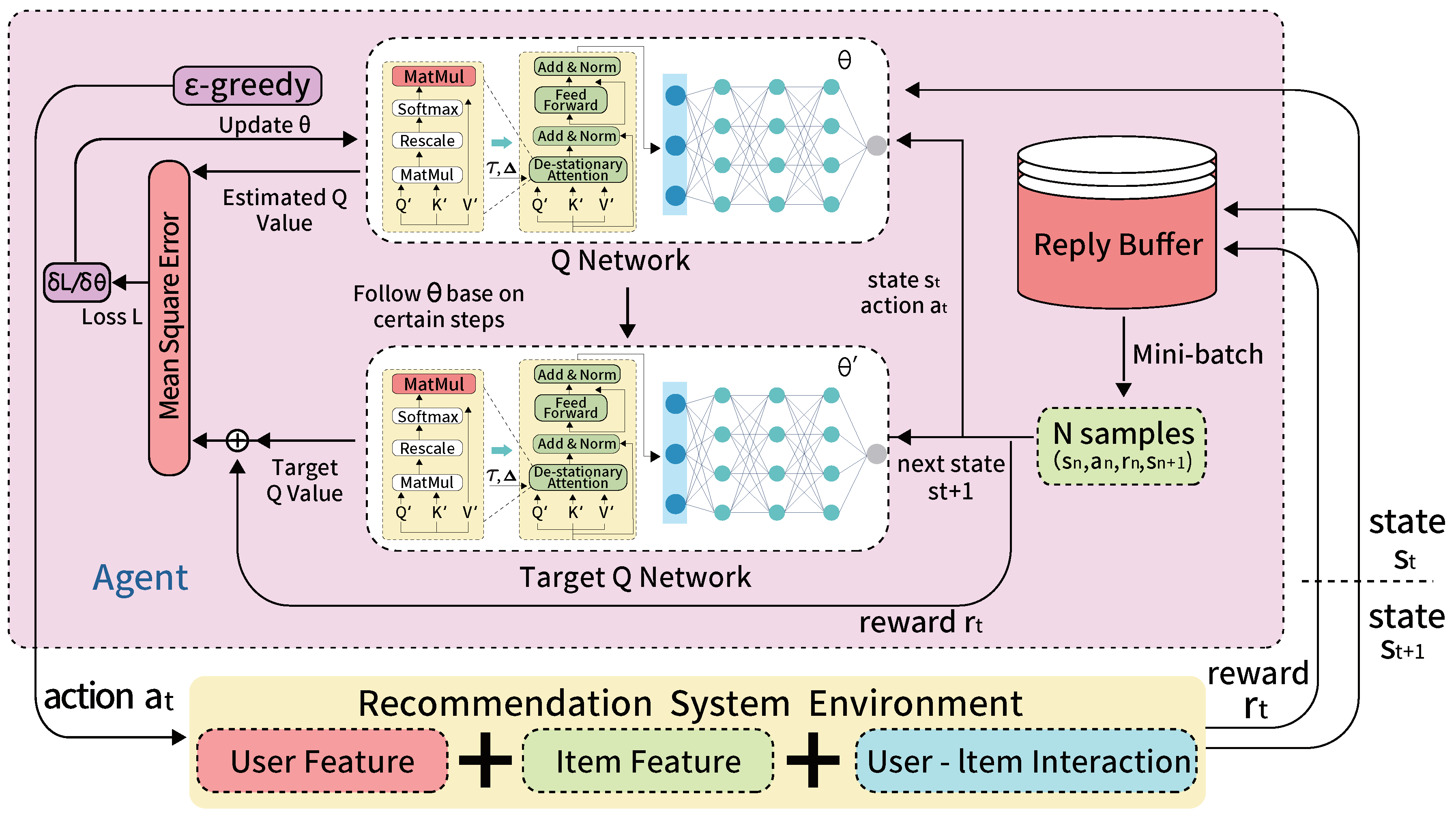

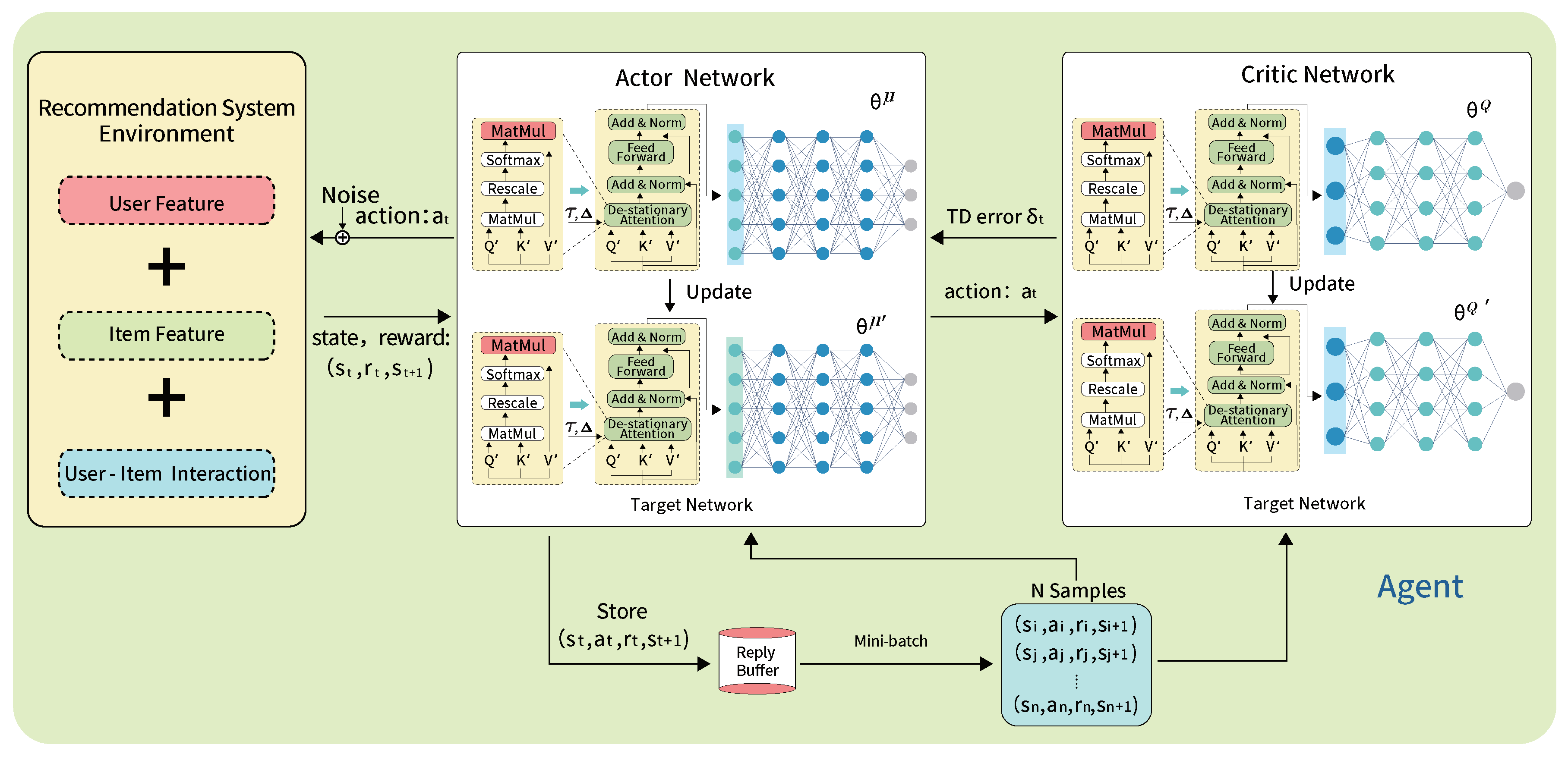

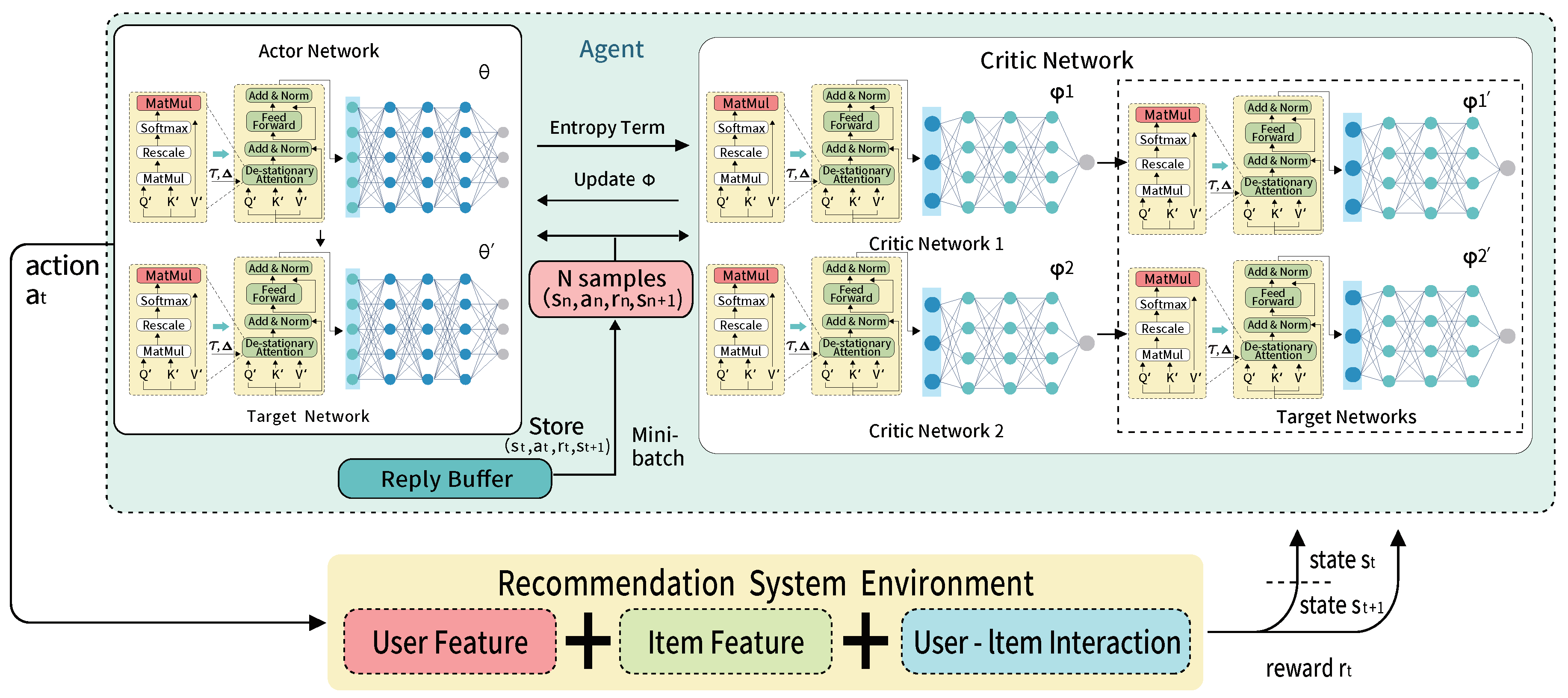

3.3. Fusion in the Reinforcement Learning-Based Recommendation System

4. Experimental Analysis

4.1. Datasets

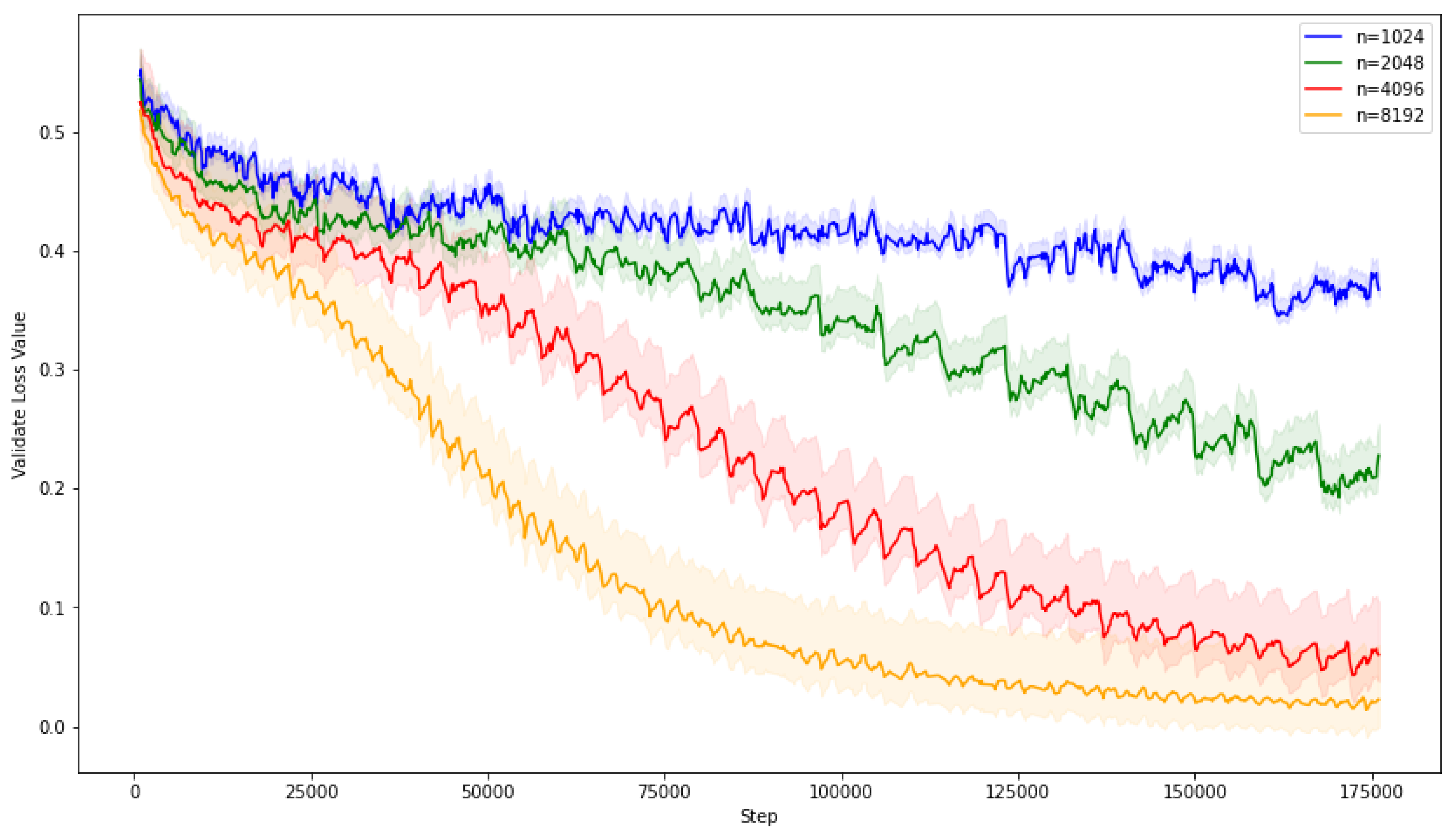

4.2. Deep Learning-Related Experiment

4.3. Reinforcement Learning-Related Experiment

- epochs: 10,000

- maximum sequence length: 64

- batch size: 64

- action size: 284

- number of classes: 2

- number of dense features: 432

- number of category features: 21

- number of sequences: 2

- embedding size: 128

- hidden units: 128
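For readers reproducing the setup, the settings listed above can be gathered into a single typed configuration object. The sketch below is a minimal, hypothetical Python `dataclass`: the class name `ExperimentConfig` and its field names are illustrative labels for the listed values, not identifiers taken from the authors' code.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ExperimentConfig:
    """Hypothetical container for the hyperparameters listed above.

    Field names are illustrative; only the values come from the list.
    """

    epochs: int = 10_000
    max_seq_len: int = 64            # maximum sequence length
    batch_size: int = 64
    action_size: int = 284
    num_classes: int = 2
    num_dense_features: int = 432
    num_category_features: int = 21
    num_sequences: int = 2
    embedding_size: int = 128
    hidden_units: int = 128


if __name__ == "__main__":
    config = ExperimentConfig()
    # Print every setting as "name: value", mirroring the list format.
    for name, value in asdict(config).items():
        print(f"{name}: {value}")
```

Making the dataclass frozen keeps a run's settings immutable once created, so a single object can be logged alongside the results it produced.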

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- E-commerce Sales by Country 2023. Available online: https://www.linkedin.com/pulse/ecommerce-sales-country-2023-julio-diaz-lg0be?trk=article-ssr-frontend-pulse_more-articles_related-content-card (accessed on 21 January 2024).

- Liu, Y.; Man, K.; Li, G.; Payne, T.R.; Yue, Y. Dynamic Pricing Strategies on the Internet. In Proceedings of the International Conference on Digital Contents: AICo (AI, IoT, and Contents) Technology, Dehradun, India, 2022; pp. 23–24.

- Global Short Video Platforms Market. Available online: https://www.grandviewresearch.com/press-release/global-short-video-platforms-market (accessed on 1 March 2023).

- Guo, H.; Tang, R.; Ye, Y.; Li, Z.; He, X. DeepFM: A factorization-machine based neural network for CTR prediction. In Proceedings of the 26th International Joint Conference on Artificial Intelligence; 2017; pp. 1725–1731.

- Cheng, H.-T.; Koc, L.; Harmsen, J.; Shaked, T.; Chandra, T.; Aradhye, H.; Anderson, G.; Corrado, G.; Chai, W.; Ispir, M.; et al. Wide & deep learning for recommender systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems; 2016; pp. 7–10.

- Zhou, G.; Mou, N.; Fan, Y.; Pi, Q.; Bian, W.; Zhou, C.; Zhu, X.; Gai, K. Deep interest evolution network for click-through rate prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Volume 33, Number 1; 2019; pp. 5941–5948.

- Chen, Q.; Zhao, H.; Li, W.; Huang, P.; Ou, W. Behavior sequence transformer for e-commerce recommendation in Alibaba. In Proceedings of the 1st International Workshop on Deep Learning Practice for High-Dimensional Sparse Data; 2019; pp. 1–4.

- Gong, Y.; Zhu, Y.; Duan, L.; Liu, Q.; Guan, Z.; Sun, F.; Ou, W.; Zhu, K.Q. Exact-k recommendation via maximal clique optimization. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; 2019; pp. 617–626.

- Van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double Q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Volume 30, Number 1; 2016.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971.

- Liu, Y.; Wu, H.; Wang, J.; Long, M. Non-stationary transformers: Exploring the stationarity in time series forecasting. Advances in Neural Information Processing Systems 2022, 35, 9881–9893.

- Liu, Z.; Cheng, M.; Li, Z.; Huang, Z.; Liu, Q.; Xie, Y.; Chen, E. Adaptive normalization for non-stationary time series forecasting: A temporal slice perspective. Advances in Neural Information Processing Systems 2024, 36.

- Liu, Y.; Mikriukov, D.; Tjahyadi, O.C.; Li, G.; Payne, T.R.; Yue, Y.; Siddique, K.; Man, K.L. Revolutionising Financial Portfolio Management: The Non-Stationary Transformer’s Fusion of Macroeconomic Indicators and Sentiment Analysis in a Deep Reinforcement Learning Framework. Applied Sciences 2023, 14.

- Ye, M.; Choudhary, D.; Yu, J.; Wen, E.; Chen, Z.; Yang, J.; Park, J.; Liu, Q.; Kejariwal, A. Adaptive dense-to-sparse paradigm for pruning online recommendation system with non-stationary data. arXiv 2020, arXiv:2010.08655.

- Jagerman, R.; Markov, I.; de Rijke, M. When people change their mind: Off-policy evaluation in non-stationary recommendation environments. In Proceedings of the Twelfth ACM International Conference on Web Search and Data Mining; 2019; pp. 447–455.

- Yuan, G.; Yuan, F.; Li, Y.; Kong, B.; Li, S.; Chen, L.; Yang, M.; Yu, C.; Hu, B.; Li, Z.; et al. Tenrec: A large-scale multipurpose benchmark dataset for recommender systems. Advances in Neural Information Processing Systems 2022, 35, 11480–11493.

- Wang, K.; Zou, Z.; Zhao, M.; Deng, Q.; Shang, Y.; Liang, Y.; Wu, R.; Shen, X.; Lyu, T.; Fan, C. RL4RS: A Real-World Dataset for Reinforcement Learning based Recommender System. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval; 2023; pp. 2935–2944.

- Koren, Y.; Rendle, S.; Bell, R. Advances in collaborative filtering. In Recommender Systems Handbook; Springer, 2021; pp. 91–142.

- Takács, G.; Tikk, D. Alternating least squares for personalized ranking. In Proceedings of the Sixth ACM Conference on Recommender Systems; 2012; pp. 83–90.

- Rendle, S. Factorization machines. In Proceedings of the 2010 IEEE International Conference on Data Mining; IEEE, 2010; pp. 995–1000.

- Zhang, W.; Du, T.; Wang, J. Deep Learning over Multi-field Categorical Data: A Case Study on User Response Prediction. In Advances in Information Retrieval: 38th European Conference on IR Research, ECIR 2016, Padua, Italy, 20–23 March 2016; Springer, 2016; pp. 45–57.

- Xiao, J.; Ye, H.; He, X.; Zhang, H.; Wu, F.; Chua, T.-S. Attentional factorization machines: Learning the weight of feature interactions via attention networks. arXiv 2017, arXiv:1708.04617.

- Li, Z.; Wu, S.; Cui, Z.; Zhang, X. GraphFM: Graph factorization machines for feature interaction modeling. arXiv 2021, arXiv:2105.11866.

- Yang, F.; Yue, Y.; Li, G.; Payne, T.R.; Man, K.L. KEMIM: Knowledge-enhanced User Multi-interest Modeling for Recommender Systems. IEEE Access 2023.

- Zhou, G.; Zhu, X.; Song, C.; Fan, Y.; Zhu, H.; Ma, X.; Yan, Y.; Jin, J.; Li, H.; Gai, K. Deep interest network for click-through rate prediction. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; 2018; pp. 1059–1068.

- Sun, F.; Liu, J.; Wu, J.; Pei, C.; Lin, X.; Ou, W.; Jiang, P. BERT4Rec: Sequential recommendation with bidirectional encoder representations from transformer. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management; 2019; pp. 1441–1450.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Liu, Y.; Man, K.L.; Li, G.; Payne, T.R.; Yue, Y. Evaluating and Selecting Deep Reinforcement Learning Models for Optimal Dynamic Pricing: A Systematic Comparison of PPO, DDPG, and SAC. In Proceedings of the 2024 8th International Conference on Control Engineering and Artificial Intelligence; 2024; pp. 215–219.

- Liu, Y.; Man, K.L.; Li, G.; Payne, T.; Yue, Y. Enhancing sparse data performance in e-commerce dynamic pricing with reinforcement learning and pre-trained learning. In Proceedings of the 2023 International Conference on Platform Technology and Service (PlatCon); IEEE, 2023; pp. 39–42.

- Zhao, X.; Zhang, L.; Ding, Z.; Xia, L.; Tang, J.; Yin, D. Recommendations with negative feedback via pairwise deep reinforcement learning. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; 2018; pp. 1040–1048.

- Zheng, G.; Zhang, F.; Zheng, Z.; Xiang, Y.; Yuan, N.J.; Xie, X.; Li, Z. DRN: A deep reinforcement learning framework for news recommendation. In Proceedings of the 2018 World Wide Web Conference; 2018; pp. 167–176.

- Lei, Y.; Wang, Z.; Li, W.; Pei, H.; Dai, Q. Social attentive deep Q-networks for recommender systems. IEEE Transactions on Knowledge and Data Engineering 2020, 34, 2443–2457.

- Zhao, X.; Gu, C.; Zhang, H.; Yang, X.; Liu, X.; Tang, J.; Liu, H. Dear: Deep reinforcement learning for online advertising impression in recommender systems. In Proceedings of the AAAI Conference on Artificial Intelligence, Volume 35, Number 1; 2021; pp. 750–758.

- Zhao, X.; Xia, L.; Zhang, L.; Ding, Z.; Yin, D.; Tang, J. Deep reinforcement learning for page-wise recommendations. In Proceedings of the 12th ACM Conference on Recommender Systems; 2018; pp. 95–103.

- Chen, X.; Huang, C.; Yao, L.; Wang, X.; Zhang, W. Knowledge-guided deep reinforcement learning for interactive recommendation. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN); IEEE, 2020; pp. 1–8.

- Liu, F.; Tang, R.; Guo, H.; Li, X.; Ye, Y.; He, X. Top-aware reinforcement learning based recommendation. Neurocomputing 2020, 417, 255–269.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning; PMLR, 2018; pp. 1861–1870.

- He, X.; An, B.; Li, Y.; Chen, H.; Wang, R.; Wang, X.; Yu, R.; Li, X.; Wang, Z. Learning to collaborate in multi-module recommendation via multi-agent reinforcement learning without communication. In Proceedings of the 14th ACM Conference on Recommender Systems; 2020; pp. 210–219.

- Zhang, W.; Liu, H.; Wang, F.; Xu, T.; Xin, H.; Dou, D.; Xiong, H. Intelligent electric vehicle charging recommendation based on multi-agent reinforcement learning. In Proceedings of the Web Conference 2021.

- Huleihel, W.; Pal, S.; Shayevitz, O. Learning user preferences in non-stationary environments. In Proceedings of the International Conference on Artificial Intelligence and Statistics; PMLR, 2021; pp. 1432–1440.

- Wu, Q.; Iyer, N.; Wang, H. Learning contextual bandits in a non-stationary environment. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval; 2018; pp. 495–504.

- Chandak, Y.; Theocharous, G.; Shankar, S.; White, M.; Mahadevan, S.; Thomas, P. Optimizing for the future in non-stationary MDPs. In Proceedings of the International Conference on Machine Learning; PMLR, 2020; pp. 1414–1425.

- Watkins, C.J.C.H.; Dayan, P. Q-learning. Machine Learning 1992, 8, 279–292.

- He, X.; Chua, T.-S. Neural factorization machines for sparse predictive analytics. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval; 2017; pp. 355–364.

- Wang, R.; Fu, B.; Fu, G.; Wang, M. Deep & Cross Network for Ad Click Predictions. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; 2017; pp. 1–7.

| Set | Records | Users | Items | Video Categories | User Features | Sequence Interactions |
|---|---|---|---|---|---|---|
| Train | 84,239,614 | 998,993 | 2,027,521 | 3 | 3 | 10 |
| Validation | 18,051,346 | 985,099 | 1,104,613 | 3 | 3 | 10 |
| Test | 18,051,346 | 985,315 | 1,104,678 | 3 | 3 | 10 |
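The record counts in the table can be checked against the proportions of a train/validation/test split. The short sketch below (the names `RECORDS` and `split_fractions` are illustrative, not from the paper) divides each split's record count by the total; with the counts above the shares come out at roughly 70.0%, 15.0%, and 15.0%, which is consistent with a 70/15/15 split of 120,342,306 records.

```python
# Record counts per split, copied from the dataset table.
RECORDS = {
    "train": 84_239_614,
    "validation": 18_051_346,
    "test": 18_051_346,
}


def split_fractions(counts: dict[str, int]) -> dict[str, float]:
    """Return each split's share of the total number of records."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    print(f"total records: {sum(RECORDS.values()):,}")
    for name, frac in split_fractions(RECORDS).items():
        print(f"{name}: {frac:.1%}")
```

The check only covers record counts; the user and item columns overlap across splits and are not expected to follow the same ratio.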

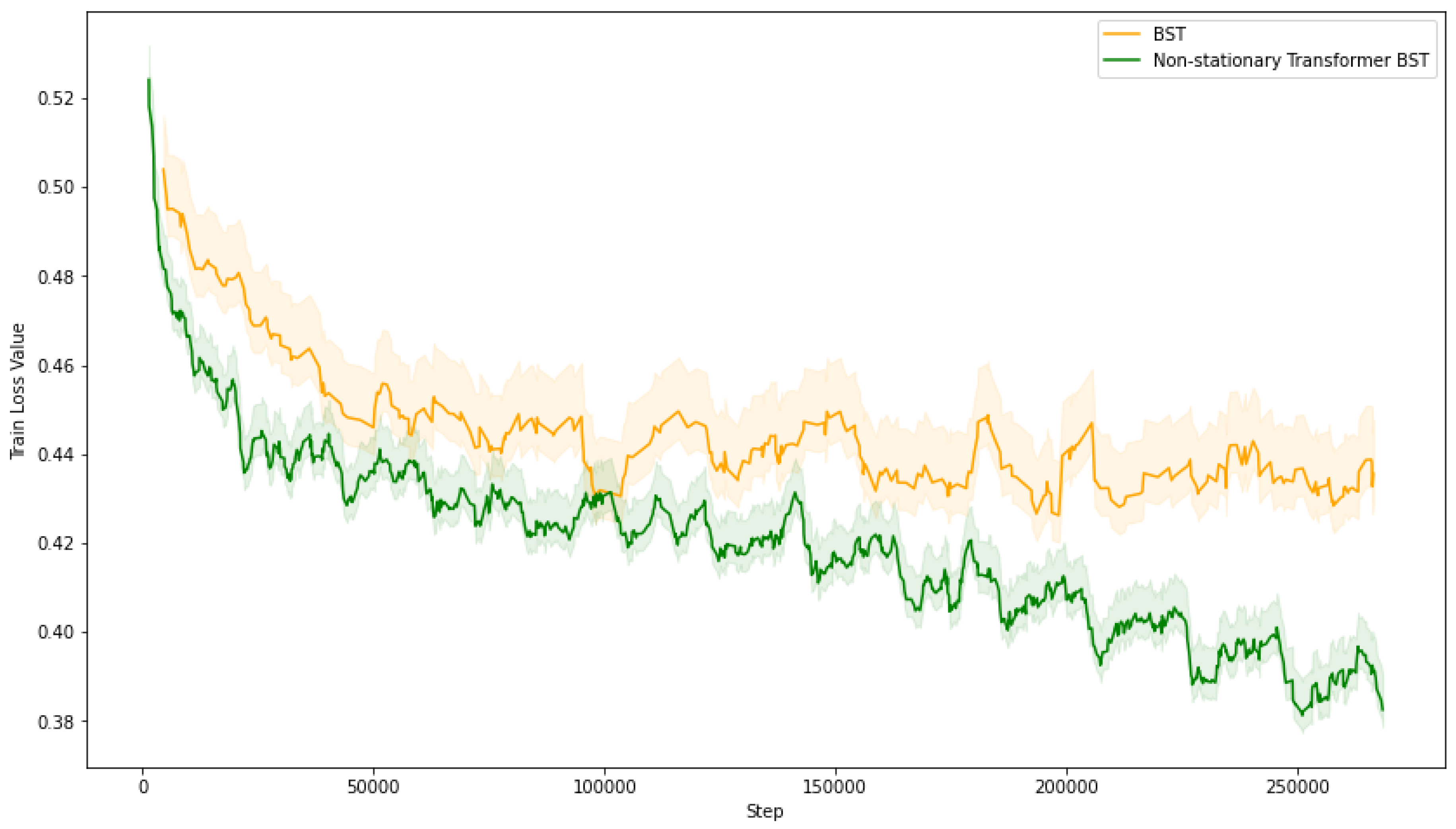

| Model | Logloss | AUC | F1 Score |
|---|---|---|---|
| BST | 0.4808 (+8.31%) | 0.7921 (-0.81%) | 0.7386 (-2.79%) |
| Non-stationary Transformer BST | 0.4439 | 0.7986 | 0.7598 |
| Wide & Deep | 0.5100 (+14.89%) | 0.7919 (-0.84%) | 0.7225 (-4.91%) |
| DeepFM | 0.5083 (+14.51%) | 0.7930 (-0.70%) | 0.7463 (-1.78%) |
| NFM | 0.5080 (+14.44%) | 0.7957 (-0.36%) | 0.7512 (-1.13%) |
| DCN | 0.5092 (+14.71%) | 0.7927 (-0.74%) | 0.7261 (-4.44%) |

Values in parentheses give each model's relative difference from Non-stationary Transformer BST.
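The parenthesised percentages in the table match the relative difference (value - reference) / reference x 100, taken against the Non-stationary Transformer BST row. The sketch below recomputes them from the reported metrics; `RESULTS`, `REFERENCE`, and `relative_diff` are illustrative names. A positive logloss difference and a negative AUC or F1 difference both mean the baseline scores worse than the reference.

```python
# Metric values copied from the table: (logloss, AUC, F1 score).
RESULTS = {
    "BST": (0.4808, 0.7921, 0.7386),
    "Non-stationary Transformer BST": (0.4439, 0.7986, 0.7598),
    "Wide & Deep": (0.5100, 0.7919, 0.7225),
    "DeepFM": (0.5083, 0.7930, 0.7463),
    "NFM": (0.5080, 0.7957, 0.7512),
    "DCN": (0.5092, 0.7927, 0.7261),
}
REFERENCE = "Non-stationary Transformer BST"


def relative_diff(value: float, reference: float) -> float:
    """Relative difference of `value` from `reference`, in percent."""
    return (value - reference) / reference * 100.0


if __name__ == "__main__":
    ref = RESULTS[REFERENCE]
    for model, metrics in RESULTS.items():
        if model == REFERENCE:
            continue
        # One signed percentage per metric, in table column order.
        diffs = [relative_diff(v, r) for v, r in zip(metrics, ref)]
        print(model, " ".join(f"{d:+.2f}%" for d in diffs))
```

Rounded to two decimals, every computed value agrees with the corresponding entry in the table.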

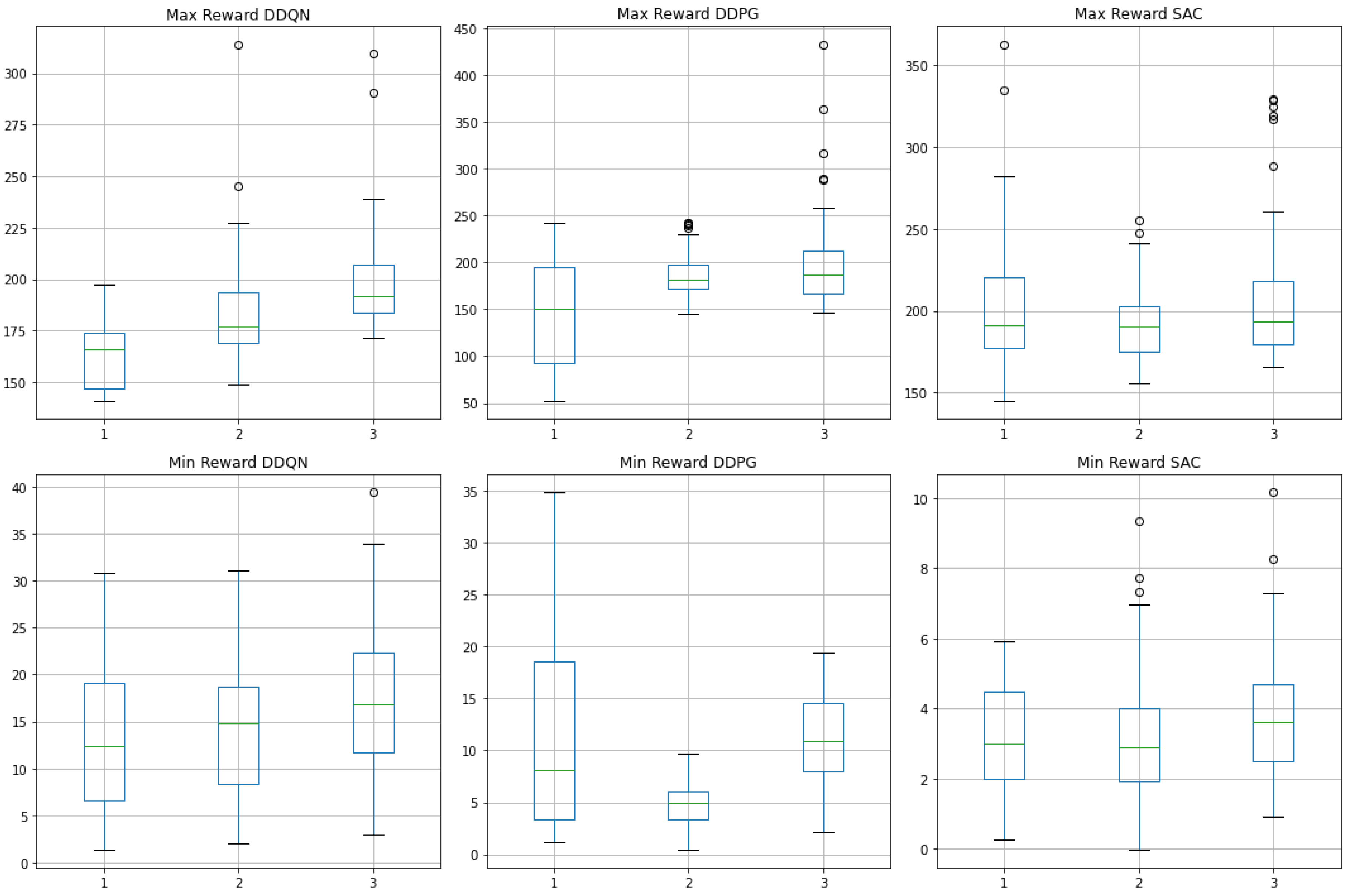

| Algorithm | Episode Reward Mean | Episode Reward Standard Deviation |
|---|---|---|
| Original DDQN | 61.93 | 8.35 |
| Transformer DDQN | 69.38 | 6.84 |
| Non-stationary Transformer DDQN | 96.71 | 7.72 |
| Original DDPG | 107.33 | 9.94 |
| Transformer DDPG | 112.16 | 8.25 |
| Non-stationary Transformer DDPG | 115.08 | 8.11 |
| Original SAC | 68.85 | 9.21 |
| Transformer SAC | 84.07 | 8.63 |
| Non-stationary Transformer SAC | 100.56 | 8.71 |
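To put the reward table on a common scale, the gain of each variant can be expressed as a percentage change in mean episode reward over the original agent of the same algorithm. The sketch below does this arithmetic from the reported means only; `MEAN_REWARD` and `gain_over_original` are illustrative names. It gives, for example, about +56.2% for Non-stationary Transformer DDQN, +7.2% for Non-stationary Transformer DDPG, and +46.1% for Non-stationary Transformer SAC. The standard deviations are not used here, so the sketch says nothing about statistical significance.

```python
# Mean episode reward per agent, copied from the table above.
MEAN_REWARD = {
    "DDQN": {"Original": 61.93, "Transformer": 69.38,
             "Non-stationary Transformer": 96.71},
    "DDPG": {"Original": 107.33, "Transformer": 112.16,
             "Non-stationary Transformer": 115.08},
    "SAC": {"Original": 68.85, "Transformer": 84.07,
            "Non-stationary Transformer": 100.56},
}


def gain_over_original(algorithm: str, variant: str) -> float:
    """Percentage change in mean reward of `variant` versus the original agent."""
    base = MEAN_REWARD[algorithm]["Original"]
    return (MEAN_REWARD[algorithm][variant] - base) / base * 100.0


if __name__ == "__main__":
    for algorithm, variants in MEAN_REWARD.items():
        for variant in variants:
            if variant == "Original":
                continue
            gain = gain_over_original(algorithm, variant)
            print(f"{variant} {algorithm}: {gain:+.1f}%")
```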

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).