Submitted:

08 May 2024

Posted:

23 May 2024


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Experiment Location and Design

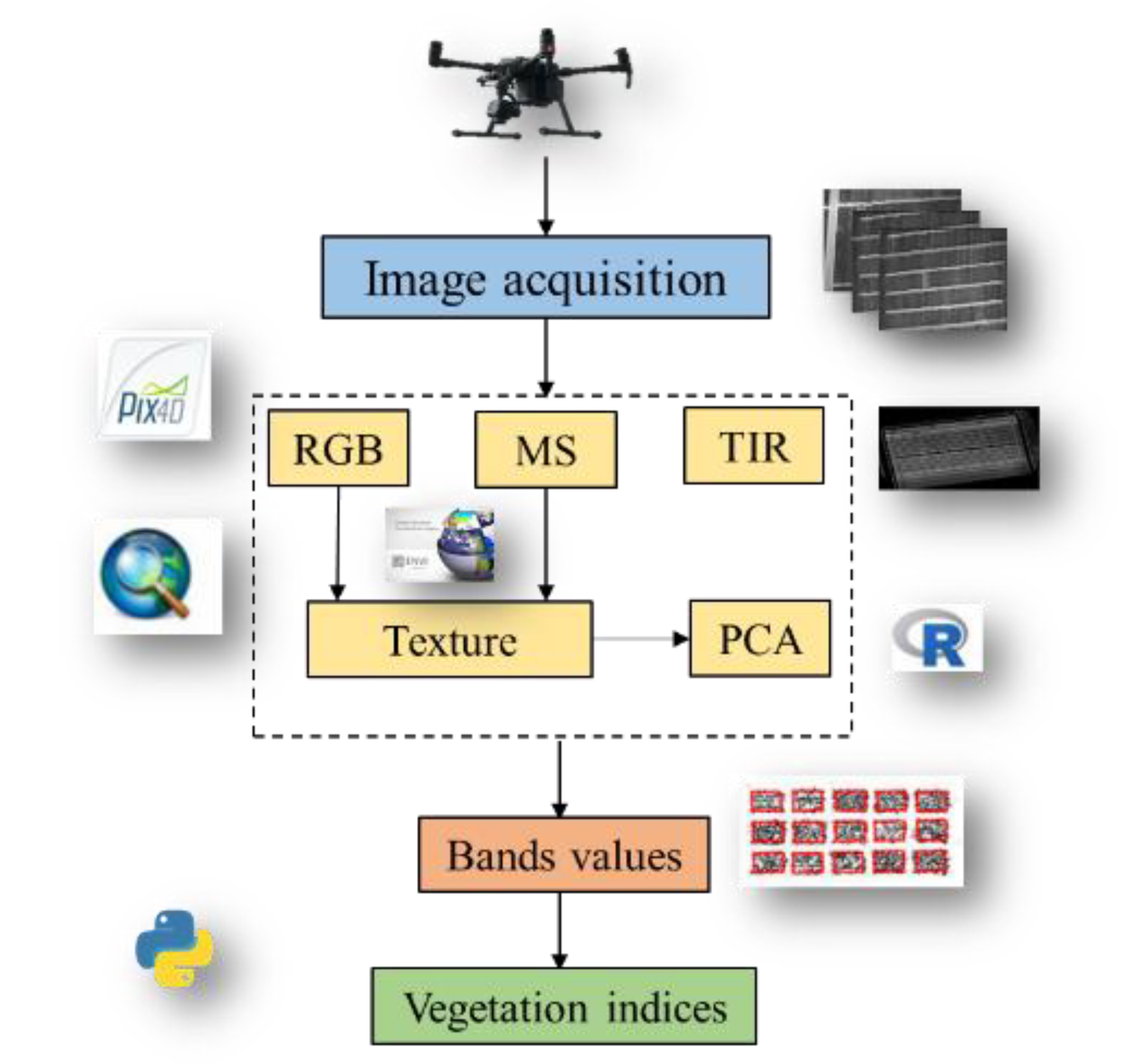

2.2. Multi-Sensor Image Acquisition and Processing Based on UAV

2.3. Extraction of Vegetation and Texture Index

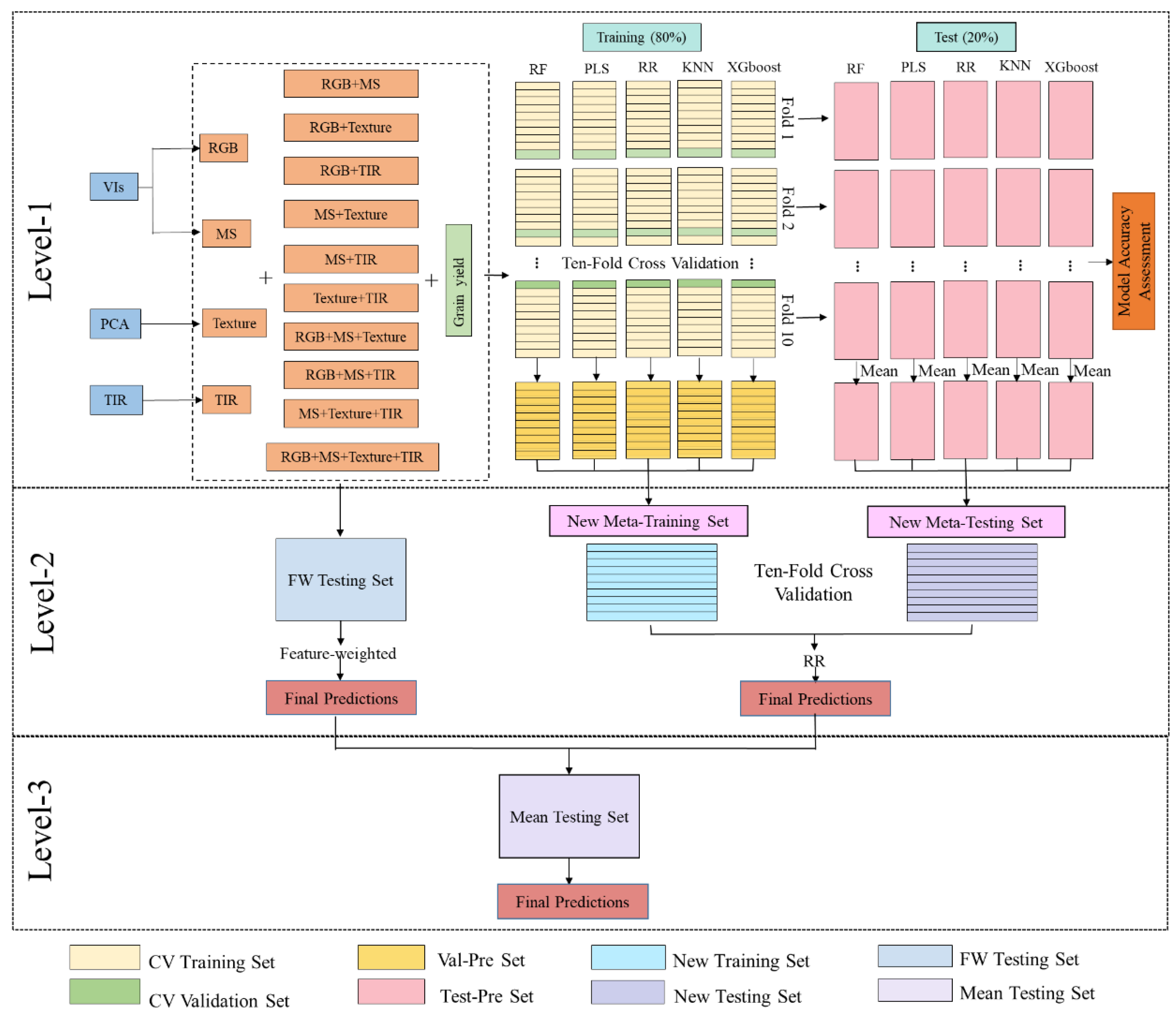

2.4. Ensemble Learning Framework

2.5. Model Performance Evaluation

3. Results

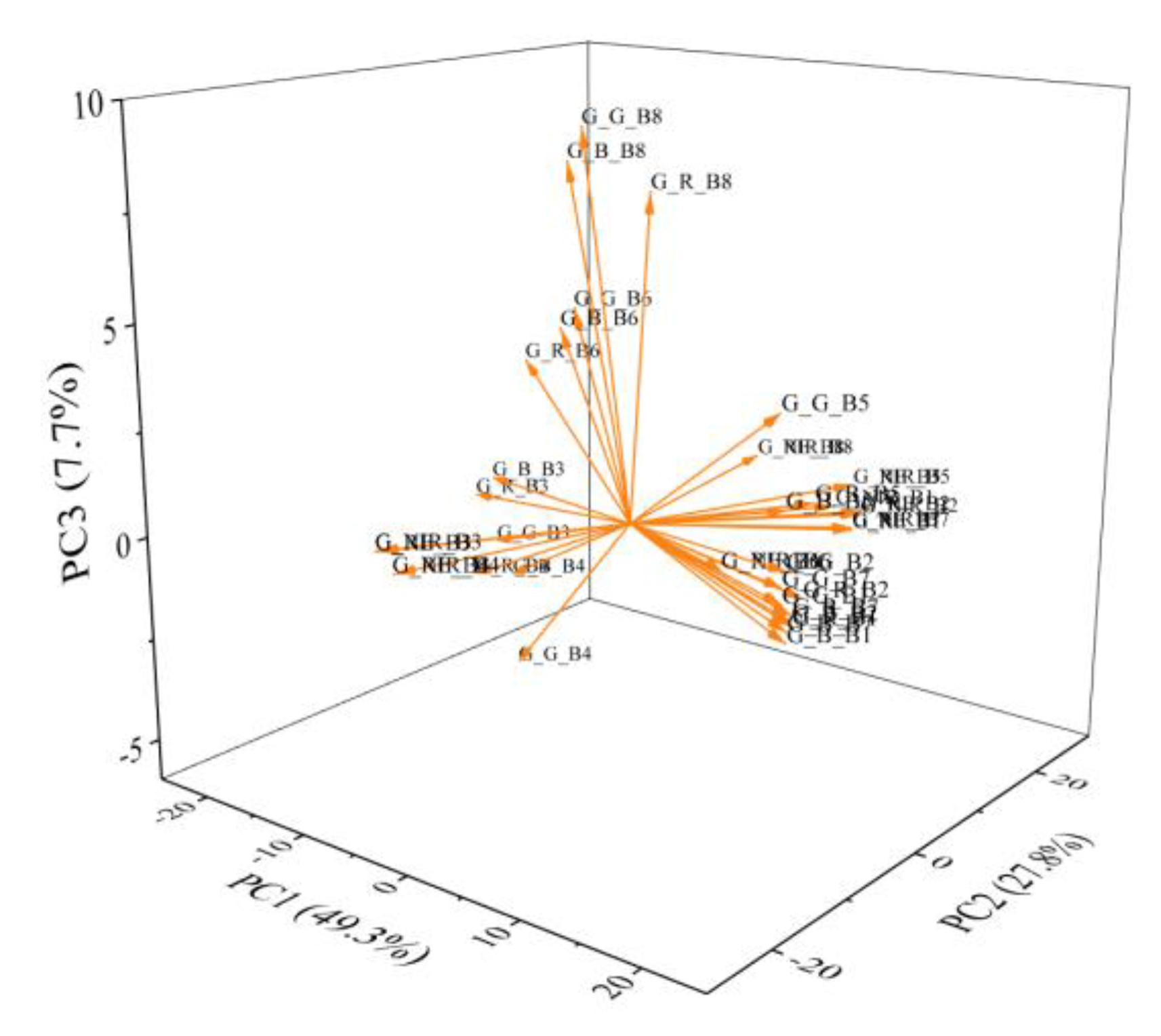

3.1. Principal Component Analysis of Texture Features

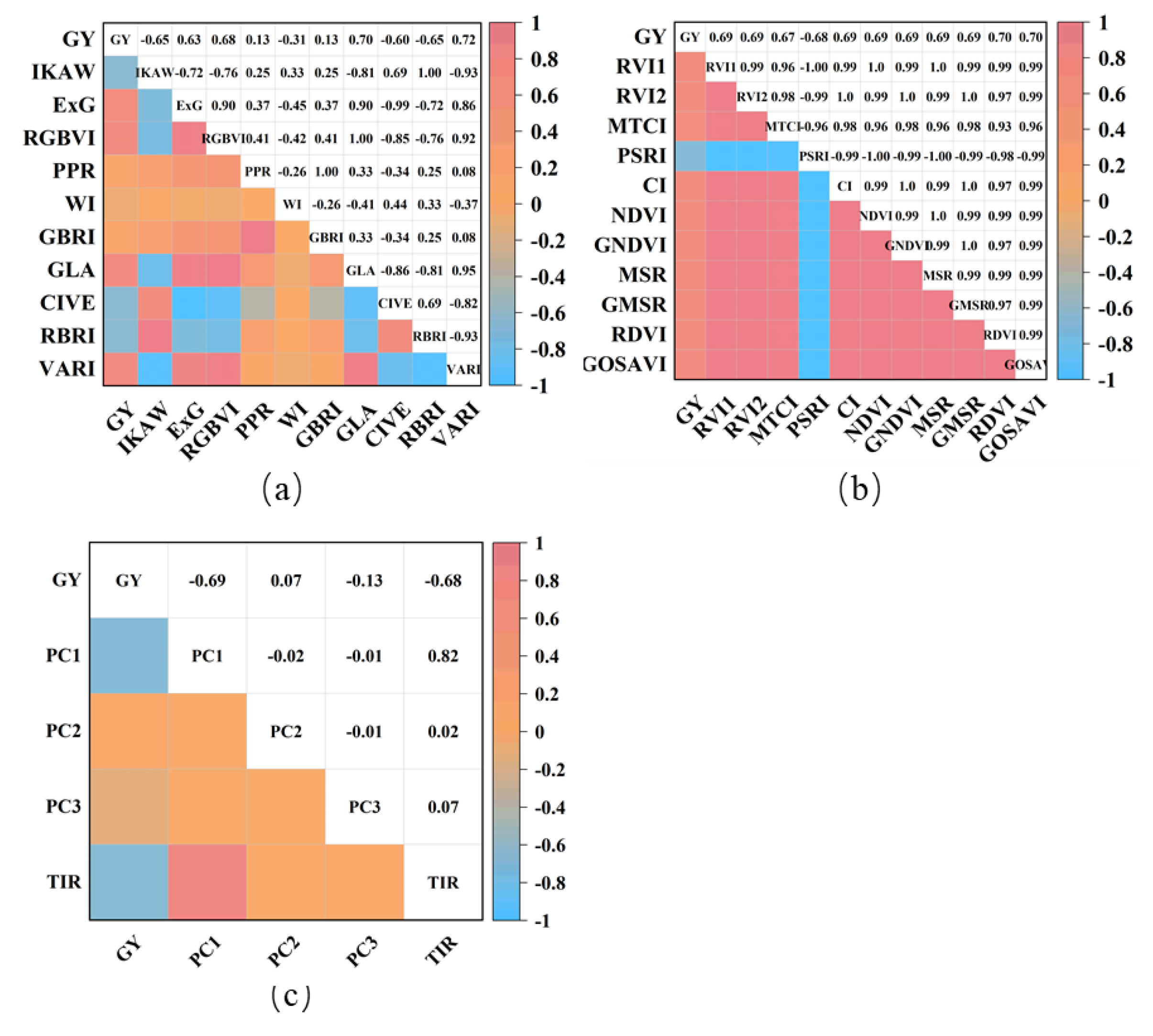

3.2. Correlation Analysis of CI, VI, Texture Features and TIR with Wheat Yield

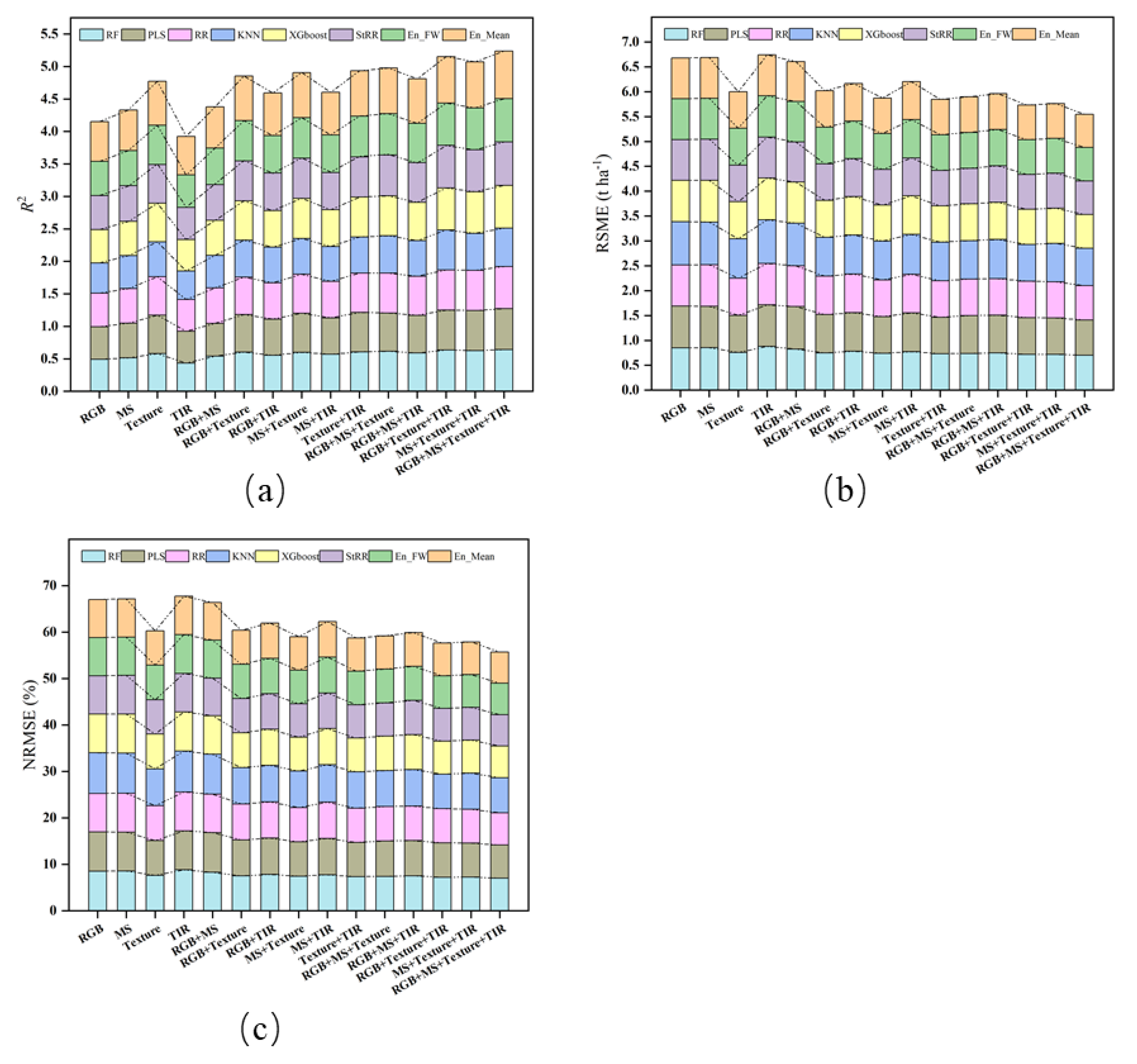

3.3. Wheat Yield Estimation for Optimal Sensor

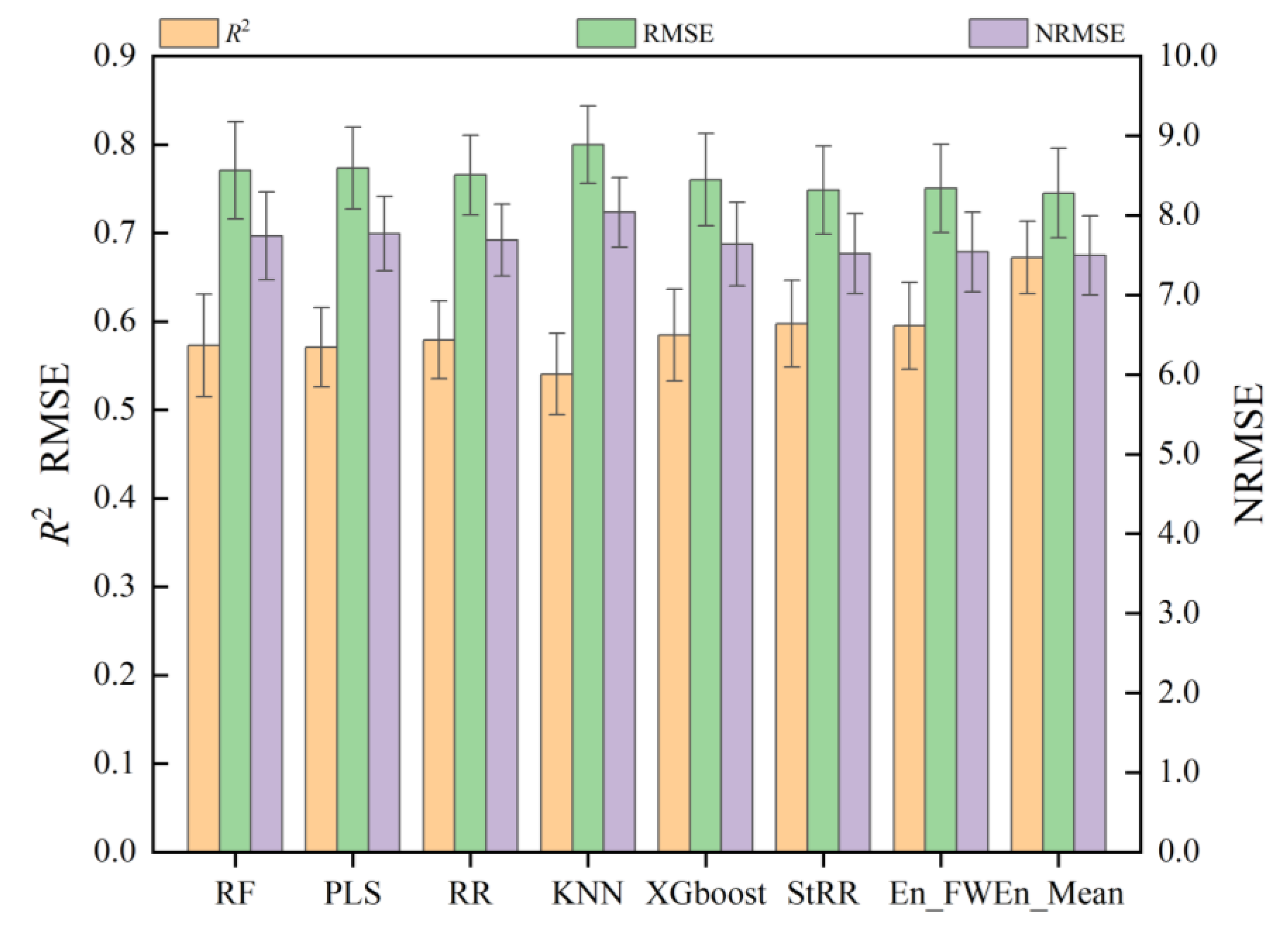

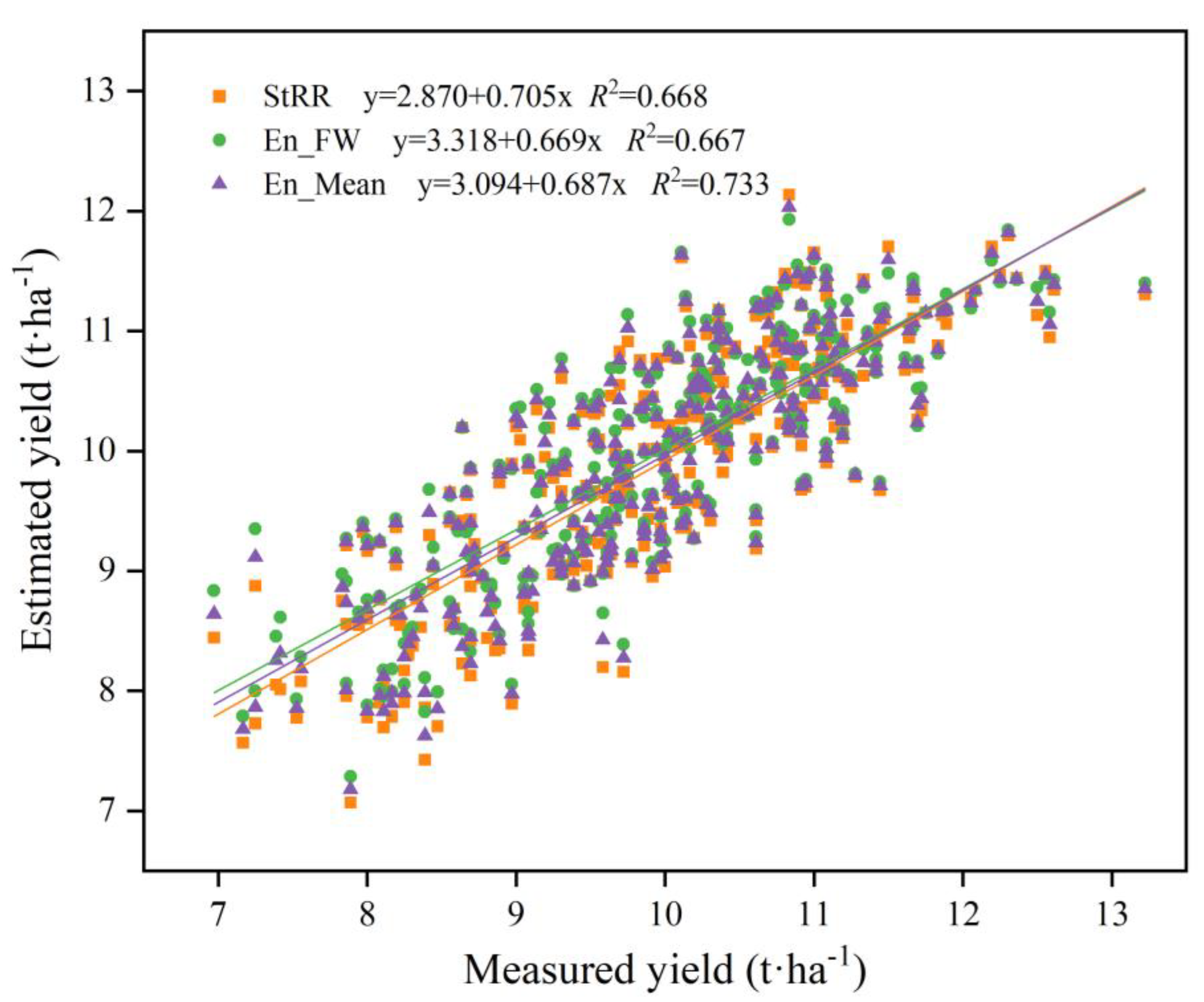

3.4. Optimal Machine Learning Algorithm for Wheat Yield Estimation

4. Discussion

4.1. Estimation of Wheat Yield from Single Sensor Data and Multi-Sensor Fusion Data

4.2. Application of Basic Model in Wheat Yield Estimation

4.3. Performance of Ensemble Learning in Wheat Yield Prediction

5. Conclusion

Funding

References

- Sun, C., Dong, Z., Zhao, L., Ren, Y., Zhang, N., & Chen, F. (2020). The wheat 660k SNP array demonstrates great potential for marker-assisted selection in polyploid wheat. Plant Biotechnology Journal, 18(6).

- Zhou, X., Zheng, H. B., Xu, X. Q., He, J. Y., Ge, X. K., Yao, X., et al. (2017). Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS Journal of Photogrammetry and Remote Sensing, 130, 246-25.

- Bian, C., Shi, H., Wu, S., Zhang, K., Wei, M., Zhao, Y., et al. (2022). Prediction of Field-Scale Wheat Yield Using Machine Learning Method and Multi-Spectral UAV Data. Remote Sensing, 14, 1474.

- Xu, W., Chen, P., Zhan, Y., Chen, S., Zhang, L., & Lan, Y. (2021). Cotton yield estimation model based on machine learning using time series UAV remote sensing data. International Journal of Applied Earth Observation and Geoinformation, 104.

- Thenkabail, P.S., Lyon, J.G., & Huete, A. (2011). Hyperspectral remote sensing of vegetation and agricultural crops: Knowledge gain and knowledge gap after 40 years of research. CRC Press, 26, 663-688.

- Li, B., Liu, R., Liu, S., Liu, Q., Liu, F., & Zhou, G. (2012). Monitoring vegetation coverage variation of winter wheat by low-altitude UAV remote sensing system. Transactions of the Chinese Society of Agricultural Engineering, 28(13), 160-165.

- Maimaitijiang, M., Sagan, V., Sidike, P., Hartling, S., & Fritschi, F.B. (2020). Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sensing of Environment, 237, 111599.

- Li, B., Xu, X., Zhang, L., Han, J., & Jin, L. (2020). Above-ground biomass estimation and yield prediction in potato by using UAV-based RGB and hyperspectral imaging. ISPRS Journal of Photogrammetry and Remote Sensing, 162, 161-172.

- Hassan, M. A., Yang, M., Rasheed, A., Yang, G., Reynolds, M., Xia, X., et al. (2019). A rapid monitoring of NDVI across the wheat growth cycle for grain yield prediction using a multi-spectral UAV platform. Plant science, 282, 95-103.

- De Grandi, G.D., Lucas, R.M., & Kropacek, J. (2009). Analysis by wavelet frames of spatial statistics in SAR data for characterizing structural properties of forests. IEEE Transactions on Geoscience & Remote Sensing, 47(2), 494-507.

- Das, S., Christopher, J., Apan, A., Choudhury, M. R., Chapman, S., Menzies, N. W., & Dang, Y. P. (2020). UAV-thermal imaging: A robust technology to evaluate in-field crop water stress and yield variation of wheat genotypes. In 2020 IEEE India Geoscience and Remote Sensing Symposium (InGARSS), 138-141.

- Rischbeck, P., Elsayed, S., Mistele, B., Barmeier, G., Heil, K., & Schmidhalter, U. (2016). Data fusion of spectral, thermal and canopy height parameters for improved yield prediction of drought stressed spring barley. European Journal of Agronomy, 78, 44-59.

- Fei, S., Hassan, M. A., Xiao, Y., Su, X., Chen, Z., Cheng, Q., et al. (2022). UAV-based multi-sensor data fusion and machine learning algorithm for yield prediction in wheat. Precision Agriculture, 24, 187-212.

- Liakos, K.G., Busato, P., Moshou, D., Pearson, S., & Bochtis, D. (2018). Machine Learning in Agriculture: A Review. Sensors, 18, 2674.

- Ramos, A. P. M., Osco, L. P., Furuya, D.E.G., Gonçalves, W.N., & Pistori, H. (2020). A random forest ranking approach to predict yield in maize with UAV-based vegetation spectral indices. Computers and Electronics in Agriculture, 178, 105791.

- Han, J., Zhang, Z., Cao, J., Luo, Y., Zhang, L., Li, Z., & Zhang, J. (2020). Prediction of Winter Wheat Yield Based on Multi-Source Data and Machine Learning in China. Remote Sensing, 12, 236.

- Maimaitijiang, M., Ghulam, A., Sidike, P., Hartling, S., Maimaitiyiming, M., Peterson, K., et al. (2017). Unmanned aerial system (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS Journal of Photogrammetry and Remote Sensing, 134, 43–58.

- Ahmed, A.A.M., Sharma, E., Jui, S.J.J., Deo, R.C., Nguyen-Huy, T., & Ali, M. (2022). Kernel ridge regression hybrid method for wheat yield prediction with satellite-derived predictors. Remote Sensing, 14(5), 1136.

- Cedric, L. S., Adoni, W. Y. H., Aworka, R., Zoueu, J. T., Mutombo, F. K., Krichen, M., & Kimpolo, C. L. M. (2022). Crops yield prediction based on machine learning models: Case of West African countries. Smart Agricultural Technology, 2, 100049.

- Sarijaloo, F.B., Porta, M., Taslimi, B., & Pardalos, P.M. (2021). Yield performance estimation of corn hybrids using machine learning algorithms. Artificial Intelligence in Agriculture, 5, 82-89.

- Chlingaryan, A., Sukkarieh, S., & Whelan, B. (2018). Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Computers and Electronics in Agriculture, 151, 61-69.

- Van der Laan, M. J., Polley, E. C., & Hubbard, A. E. (2007). Super learner. Statistical applications in genetics and molecular biology, 6(1).

- Dong, X., Yu, Z., Cao, W., Shi, Y., & Ma, Q. (2019). A survey on ensemble learning. Frontiers of Computer Science, 14(2).

- Breiman, L. (1996). Stacked regressions. Machine learning, 24, 49-64.

- Zhang, W., Ren, H., Jiang, Q., & Zhang, K. (2015). Exploring Feature Extraction and ELM in Malware Detection for Android Devices. International Symposium on Neural Networks. Springer, Cham, 489-498.

- Wei, P., Lu, Z., & Song, J. (2015). Variable importance analysis: A comprehensive review. Reliability Engineering & System Safety, 142, 399-432.

- Kelly, J.D., & Davis, L. (1991). A Hybrid Genetic Algorithm for Classification. IJCAI, 91, 645–650.

- Raymer, M. L., Punch, W. F., Goodman, E. D., Kuhn, L. A., & Jain, A. K. (2000). Dimensionality reduction using genetic algorithms. IEEE transactions on evolutionary computation, 4(2), 164-171.

- Daszykowski, M., Kaczmarek, K., Heyden, Y.V., & Walczak, B. (2007). Robust statistics in data analysis – a review: Basic concepts. Chemometrics & Intelligent Laboratory Systems, 85(2), 203-219.

- Xue, J., & Su, B. (2017). Significant remote sensing vegetation indices: A review of developments and applications. Journal of sensors, 2017, 1-17.

- Humeau-Heurtier, A. (2019). Texture feature extraction methods: A survey. IEEE access, 7, 8975-9000.

- Abdi, H., & Williams, L. J. (2010). Principal component analysis. Wiley interdisciplinary reviews: Computational statistics, 2(4), 433-459.

- Ji, Y., Liu, R., Xiao, Y., Cui, Y., Chen, Z., Zong, X., & Yang, T. (2023). Faba bean above-ground biomass and bean yield estimation based on consumer-grade unmanned aerial vehicle RGB images and ensemble learning. Precision Agriculture, 24, 1439–1460.

- Peñuelas, J., Gamon, J. A., Fredeen, A. L., Merino, J., & Field, C. B. (1994). Reflectance indices associated with physiological changes in nitrogen-and water-limited sunflower leaves. Remote sensing of Environment, 48(2), 135-146.

- Louhaichi, M., Borman, M. M., & Johnson, D. E. (2001). Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto International, 16(1), 65-70.

- Woebbecke, D. M., Meyer, G. E., Von Bargen, K., & Mortensen, D. A. (1995). Color indices for weed identification under various soil, residue, and lighting conditions. Transactions of the ASAE, 38(1), 259-269.

- Guijarro, M., Pajares, G., Riomoros, I., Herrera, P. J., Burgos-Artizzu, X. P., & Ribeiro, A. (2011). Automatic segmentation of relevant textures in agricultural images. Computers and Electronics in Agriculture, 75(1), 75-83.

- Gitelson, A. A., Kaufman, Y. J., Stark, R., & Rundquist, D. (2002). Novel algorithms for remote estimation of vegetation fraction. Remote sensing of Environment, 80(1), 76-87.

- Kawashima, S., & Nakatani, M. (1998). An algorithm for estimating chlorophyll content in leaves using a video camera. Annals of Botany, 81(1), 49-54.

- Sellaro, R., Crepy, M., Trupkin, S. A., Karayekov, E., Buchovsky, A. S., Rossi, C., & Casal, J. J. (2010). Cryptochrome as a sensor of the blue/green ratio of natural radiation in Arabidopsis. Plant physiology, 154(1), 401-409.

- Gitelson, A. A., & Merzlyak, M. N. (1996). Signature analysis of leaf reflectance spectra: Algorithm development for remote sensing of chlorophyll. Journal of plant physiology, 148(3-4), 494-500.

- Zhang, S., & Liu, L. (2014). The potential of the MERIS Terrestrial Chlorophyll Index for crop yield prediction. Remote sensing letters, 5(8), 733-742.

- Pinter Jr, P. J., Hatfield, J. L., Schepers, J. S., Barnes, E. M., Moran, M. S., Daughtry, C. S., & Upchurch, D. R. (2003). Remote sensing for crop management. Photogrammetric Engineering & Remote Sensing, 69(6), 647-664.

- Xue, L., Cao, W., Luo, W., Dai, T., & Zhu, Y. (2004). Monitoring leaf nitrogen status in rice with canopy spectral reflectance. Agronomy Journal, 96(1), 135-142.

- Chen, J. M. (1996). Evaluation of vegetation indices and a modified simple ratio for boreal applications. Canadian Journal of Remote Sensing, 22(3), 229-242.

- Roujean, J. L., & Breon, F. M. (1995). Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote sensing of Environment, 51(3), 375-384.

- Peñuelas, J., Filella, I., & Gamon, J. A. (1995). Assessment of photosynthetic radiation-use efficiency with spectral reflectance. New Phytologist, 131, 291–296.

- Gitelson, A. A., Viña, A., Arkebauer, T. J., Rundquist, D. C., Keydan, G., & Leavitt, B. (2003). Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophysical research letters, 30(5).

- Gilabert, M. A., González-Piqueras, J., García-Haro, F. J., & Meliá, J. (2002). A generalized soil-adjusted vegetation index. Remote Sensing of environment, 82(2-3), 303-310.

- Merzlyak, M. N., Gitelson, A. A., Chivkunova, O. B., & Rakitin, V. Y. (1999). Non-destructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiologia plantarum, 106(1), 135-141.

- Dong, X., Yu, Z., Cao, W., Shi, Y., & Ma, Q. (2020). A survey on ensemble learning. Frontiers of Computer Science, 14, 241-258.

- Quinlan, J. R. (1992). Learning with continuous classes. In 5th Australian joint conference on artificial intelligence, 92, 343-348.

- Croci, M., Impollonia, G., Meroni, M., & Amaducci, S. (2022). Dynamic maize yield predictions using machine learning on multi-source data. Remote sensing, 15(1), 100.

- Pham, H.T., Awange, J., Kuhn, M., Nguyen, B.V., & Bui, L.K. (2022). Enhancing Crop Yield Prediction Utilizing Machine Learning on Satellite-Based Vegetation Health Indices. Sensors, 22, 719.

- Soria, X., Sappa, A.D., & Akbarinia, A. (2017). Multispectral single-sensor RGB-NIR imaging: New challenges and opportunities. In 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), 1-6.

- Cao, X., Liu, Y., Yu, R., Han, D., & Su, B. (2021). A comparison of UAV RGB and multispectral imaging in phenotyping for stay green of wheat population. Remote Sensing, 13(24), 5173.

- Luz, B.R.D., & Crowley, J.K. (2010). Identification of plant species by using high spatial and spectral resolution thermal infrared (8.0–13.5 μm) imagery. Remote Sensing of Environment, 114(2), 404-413.

- Elarab, M., Ticlavilca, A. M., Torres-Rua, A.F., Maslova, I., & Mckee, M. (2015). Estimating chlorophyll with thermal and broadband multispectral high resolution imagery from an unmanned aerial system using relevance vector machines for precision agriculture. International Journal of Applied Earth Observation and Geoinformation, 43, 32-42.

- Beatriz, R. D. L., & Crowley, J. K. (2007). Spectral reflectance and emissivity features of broad leaf plants: Prospects for remote sensing in the thermal infrared (8.0-14.0 μm). Remote Sensing of Environment, 109, 393-405.

- Bolón-Canedo, V., & Alonso-Betanzos, A. (2019). Ensembles for feature selection: A review and future trends. Information fusion, 52, 1-12.

- Huang, L., Liu, Y., Huang, W., Dong, Y., Ma, H., Wu, K., & Guo, A. (2022). Combining random forest and XGBoost methods in detecting early and mid-term winter wheat stripe rust using canopy level hyperspectral measurements. Agriculture, 12(1), 74.

- Nagaraju, A., & Mohandas, R. (2021). Multifactor Analysis to Predict Best Crop using Xg-Boost Algorithm. In 2021 5th International Conference on Trends in Electronics and Informatics (ICOEI), 155-163.

- Li, Y., Zeng, H., Zhang, M., Wu, B., Zhao, Y., Yao, X., Cheng, T., Qin, X., & Wu, F. (2023). A county-level soybean yield prediction framework coupled with XGBoost and multidimensional feature engineering. International Journal of Applied Earth Observation and Geoinformation, 118, 103269.

- Joshi, A., Pradhan, B., Chakraborty, S., & Behera, M. D. (2023). Winter wheat yield prediction in the conterminous United States using solar-induced chlorophyll fluorescence data and XGBoost and random forest algorithm. Ecological Informatics, 77, 102194.

- Aguate, F. M., Trachsel, S., Pérez, L. G., Burgueño, J., Crossa, J., Balzarini, M., & de los Campos, G. (2017). Use of hyperspectral image data outperforms vegetation indices in prediction of maize yield. Crop Science, 57(5), 2517-2524.

- Zeng, W. Z., Xu, C., Zhao, G., Wu, J.W., & Huang, J. (2018). Estimation of sunflower seed yield using partial least squares regression and artificial neural network models. Pedosphere, 28(5), 764-774.

- Li, C., Wang, Y., Ma, C., Chen, W., Li, Y., Li, J., & Ding, F. (2021). Improvement of wheat grain yield prediction model performance based on stacking technique. Applied Sciences, 11(24), 12164.

- Pavlyshenko, B. (2018). Using stacking approaches for machine learning models. 2018 IEEE second international conference on data stream mining & processing (DSMP), 255-258.

- Anh, V.P., Minh, L.N., & Lam, T.B. (2017). Feature weighting and SVM parameters optimization based on genetic algorithms for classification problems. Applied Intelligence, 46, 455-469.

| Sensor | Spectral Index | Equation | Reference |
|---|---|---|---|
| RGB | Red Green Blue Vegetation Index | RGBVI = (G² - B×R)/(G² + B×R) | [33] |
| | Plant Pigment Ratio | PPR = (G - B)/(G + B) | [34] |
| | Green Leaf Algorithm | GLA = (2×G - R - B)/(2×G + R + B) | [35] |
| | Excess Green Index | ExG = 2×G - R - B | [36] |
| | Colour Index of Vegetation Extraction | CIVE = 0.441×R - 0.881×G + 0.3856×B + 18.78745 | [37] |
| | Visible Atmospherically Resistant Index | VARI = (G - R)/(G + R - B) | [38] |
| | Kawashima Index | IKAW = (R - B)/(R + B) | [39] |
| | Woebbecke Index | WI = (G - B)/(R - G) | [36] |
| | Green Blue Ratio Index | GBRI = G/B | [40] |
| | Red Blue Ratio Index | RBRI = R/B | [40] |
| MS | Green-NDVI | GNDVI = (NIR - G)/(NIR + G) | [41] |
| | MERIS Terrestrial Chlorophyll Index | MTCI = (NIR - R)/(RE - R) | [42] |
| | Normalized Difference Vegetation Index | NDVI = (NIR - R)/(NIR + R) | [38] |
| | Ratio Vegetation Index | RVI1 = NIR/R | [43] |
| | Ratio Vegetation Index | RVI2 = NIR/G | [44] |
| | Modified Simple Ratio Index | MSRI = (NIR/R - 1)/(NIR/R + 1)^0.5 | [45] |
| | Re-normalized Difference Vegetation Index | RDVI = (NIR - R)/(NIR + R)^0.5 | [46] |
| | Structure Insensitive Pigment Index | SIPI = (NIR - B)/(NIR + B) | [47] |
| | Colour Index | CI = NIR/G - 1 | [48] |
| | Generalized Soil-adjusted Vegetation Index | GOSAVI = (NIR - G)/(NIR + G + 0.16) | [49] |
| | Plant Senescence Reflectance Index | PSRI = (R - B)/NIR | [50] |
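As an illustration of how the index equations in the table above are evaluated, the following minimal Python sketch computes a few of them for a single pixel. The function names, the `safe_div` helper, and the sample reflectance values are assumptions made for illustration only; they are not taken from the processing pipeline used in this study.

```python
import math


def safe_div(num, den):
    """Return num / den, or NaN when the denominator is zero."""
    return num / den if den != 0 else math.nan


def rgbvi(r, g, b):
    # RGBVI = (G^2 - B*R) / (G^2 + B*R)
    return safe_div(g * g - b * r, g * g + b * r)


def exg(r, g, b):
    # ExG = 2*G - R - B
    return 2 * g - r - b


def ndvi(nir, r):
    # NDVI = (NIR - R) / (NIR + R)
    return safe_div(nir - r, nir + r)


def gndvi(nir, g):
    # GNDVI = (NIR - G) / (NIR + G)
    return safe_div(nir - g, nir + g)


def rdvi(nir, r):
    # RDVI = (NIR - R) / sqrt(NIR + R)
    return safe_div(nir - r, math.sqrt(nir + r))


def msri(nir, r):
    # MSRI = (NIR/R - 1) / sqrt(NIR/R + 1)
    ratio = safe_div(nir, r)
    return safe_div(ratio - 1, math.sqrt(ratio + 1))


if __name__ == "__main__":
    # Hypothetical band reflectances for one canopy pixel (illustrative values).
    pixel = {"r": 0.08, "g": 0.12, "b": 0.05, "nir": 0.45}
    print("NDVI :", ndvi(pixel["nir"], pixel["r"]))
    print("GNDVI:", gndvi(pixel["nir"], pixel["g"]))
    print("ExG  :", exg(pixel["r"], pixel["g"], pixel["b"]))
    print("RGBVI:", rgbvi(pixel["r"], pixel["g"], pixel["b"]))
```

The same functions apply element-wise to whole band rasters if the scalar arithmetic is replaced by array arithmetic; returning NaN for a zero denominator is one possible convention for background pixels.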

| Principal Component | Initial Eigenvalue | Variance Contribution Ratio (%) | Cumulative Variance Contribution Ratio (%) |
|---|---|---|---|
| 1 | 19.72 | 49.30 | 49.30 |
| 2 | 11.13 | 27.80 | 77.10 |
| 3 | 3.09 | 7.70 | 84.90 |
| 4 | 1.93 | 4.80 | 89.70 |
| 5 | 1.54 | 3.80 | 93.50 |
| 6 | 0.74 | 1.90 | 95.40 |
| 7 | 0.66 | 1.70 | 97.00 |
| 8 | 0.38 | 0.90 | 98.00 |
| 9 | 0.28 | 0.70 | 98.70 |
| 10 | 0.22 | 0.60 | 99.20 |
| 11 | 0.15 | 0.40 | 99.60 |
| 12 | 0.06 | 0.10 | 100.00 |
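The relation between the eigenvalues and the cumulative variance contribution listed above can be sketched as follows. The total variance of 40 is an assumption inferred from the first row (19.72 / 0.4930 ≈ 40); it is not stated in this section. Under that assumption the sketch closely reproduces the cumulative column, apart from the last listed value (99.75 versus 100.00). The helper names are hypothetical.

```python
from itertools import accumulate

# Initial eigenvalues of the 12 listed principal components (from the table).
EIGENVALUES = [19.72, 11.13, 3.09, 1.93, 1.54, 0.74,
               0.66, 0.38, 0.28, 0.22, 0.15, 0.06]

# ASSUMPTION: total variance of 40, inferred from 19.72 / 0.4930;
# the source does not state this value here.
TOTAL_VARIANCE = 40.0


def cumulative_percent(eigenvalues, total):
    """Cumulative variance contribution (%) after each component."""
    return [100.0 * s / total for s in accumulate(eigenvalues)]


def components_for(threshold, eigenvalues=EIGENVALUES, total=TOTAL_VARIANCE):
    """Smallest number of leading components reaching `threshold` percent."""
    for k, cum in enumerate(cumulative_percent(eigenvalues, total), start=1):
        if cum >= threshold:
            return k
    return None  # threshold not reached by the listed components


if __name__ == "__main__":
    for k, cum in enumerate(cumulative_percent(EIGENVALUES, TOTAL_VARIANCE), 1):
        print(f"PC{k}: {cum:.2f}%")
    print("Components for >= 85%:", components_for(85.0))
```

A threshold rule of this kind is one common way to decide how many components to retain; which threshold the study applied is not specified in this section.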

Learner groups as labelled in the table header: base learner, secondary learner, tertiary learner.

| Sensor | Metric | RF | PLS | RR | KNN | XGBoost | StRR | En_FW | En_Mean |
|---|---|---|---|---|---|---|---|---|---|
| RGB | R² | 0.492 | 0.501 | 0.517 | 0.465 | 0.514 | 0.525 | 0.524 | 0.612 |
| | RMSE (t ha⁻¹) | 0.848 | 0.841 | 0.827 | 0.871 | 0.830 | 0.820 | 0.821 | 0.818 |
| | NRMSE (%) | 8.520 | 8.449 | 8.310 | 8.750 | 8.339 | 8.241 | 8.247 | 8.172 |
| MS | R² | 0.513 | 0.534 | 0.534 | 0.507 | 0.528 | 0.542 | 0.548 | 0.625 |
| | RMSE (t ha⁻¹) | 0.853 | 0.834 | 0.834 | 0.858 | 0.839 | 0.827 | 0.821 | 0.822 |
| | NRMSE (%) | 8.565 | 8.378 | 8.383 | 8.619 | 8.433 | 8.304 | 8.249 | 8.243 |
| Texture | R² | 0.579 | 0.592 | 0.592 | 0.539 | 0.593 | 0.605 | 0.596 | 0.678 |
| | RMSE (t ha⁻¹) | 0.758 | 0.746 | 0.746 | 0.793 | 0.745 | 0.734 | 0.743 | 0.733 |
| | NRMSE (%) | 7.617 | 7.498 | 7.498 | 7.963 | 7.487 | 7.374 | 7.459 | 7.384 |
| TIR | R² | 0.434 | 0.490 | 0.490 | 0.439 | 0.482 | 0.500 | 0.495 | 0.594 |
| | RMSE (t ha⁻¹) | 0.879 | 0.834 | 0.834 | 0.875 | 0.840 | 0.826 | 0.830 | 0.823 |
| | NRMSE (%) | 8.825 | 8.382 | 8.382 | 8.791 | 8.443 | 8.295 | 8.335 | 8.292 |
| RGB+MS | R² | 0.540 | 0.506 | 0.545 | 0.503 | 0.537 | 0.561 | 0.552 | 0.636 |
| | RMSE (t ha⁻¹) | 0.825 | 0.854 | 0.820 | 0.857 | 0.827 | 0.806 | 0.814 | 0.805 |
| | NRMSE (%) | 8.285 | 8.580 | 8.241 | 8.611 | 8.307 | 8.096 | 8.173 | 8.107 |
| RGB+Texture | R² | 0.604 | 0.577 | 0.577 | 0.569 | 0.605 | 0.619 | 0.614 | 0.687 |
| | RMSE (t ha⁻¹) | 0.747 | 0.772 | 0.772 | 0.779 | 0.746 | 0.733 | 0.737 | 0.733 |
| | NRMSE (%) | 7.506 | 7.754 | 7.758 | 7.828 | 7.491 | 7.360 | 7.407 | 7.314 |
| RGB+TIR | R² | 0.554 | 0.557 | 0.560 | 0.548 | 0.561 | 0.575 | 0.580 | 0.657 |
| | RMSE (t ha⁻¹) | 0.780 | 0.777 | 0.775 | 0.785 | 0.774 | 0.762 | 0.757 | 0.756 |
| | NRMSE (%) | 7.839 | 7.806 | 7.786 | 7.889 | 7.772 | 7.650 | 7.602 | 7.620 |
| MS+Texture | R² | 0.598 | 0.604 | 0.601 | 0.551 | 0.617 | 0.623 | 0.619 | 0.694 |
| | RMSE (t ha⁻¹) | 0.741 | 0.735 | 0.738 | 0.782 | 0.723 | 0.718 | 0.721 | 0.714 |
| | NRMSE (%) | 7.443 | 7.389 | 7.410 | 7.859 | 7.263 | 7.208 | 7.246 | 7.198 |
| MS+TIR | R² | 0.569 | 0.561 | 0.563 | 0.536 | 0.566 | 0.581 | 0.571 | 0.656 |
| | RMSE (t ha⁻¹) | 0.772 | 0.780 | 0.778 | 0.801 | 0.775 | 0.762 | 0.770 | 0.763 |
| | NRMSE (%) | 7.760 | 7.833 | 7.811 | 8.049 | 7.789 | 7.654 | 7.739 | 7.660 |
| Texture+TIR | R² | 0.607 | 0.607 | 0.607 | 0.555 | 0.614 | 0.628 | 0.620 | 0.697 |
| | RMSE (t ha⁻¹) | 0.732 | 0.732 | 0.733 | 0.780 | 0.726 | 0.713 | 0.720 | 0.710 |
| | NRMSE (%) | 7.357 | 7.358 | 7.359 | 7.831 | 7.290 | 7.161 | 7.235 | 7.157 |
| RGB+MS+Texture | R² | 0.615 | 0.590 | 0.614 | 0.577 | 0.613 | 0.639 | 0.627 | 0.702 |
| | RMSE (t ha⁻¹) | 0.736 | 0.760 | 0.738 | 0.772 | 0.739 | 0.713 | 0.725 | 0.716 |
| | NRMSE (%) | 7.396 | 7.638 | 7.412 | 7.755 | 7.421 | 7.163 | 7.281 | 7.146 |
| RGB+MS+TIR | R² | 0.588 | 0.582 | 0.602 | 0.547 | 0.591 | 0.603 | 0.612 | 0.686 |
| | RMSE (t ha⁻¹) | 0.750 | 0.755 | 0.737 | 0.786 | 0.747 | 0.736 | 0.728 | 0.723 |
| | NRMSE (%) | 7.532 | 7.589 | 7.405 | 7.897 | 7.508 | 7.389 | 7.310 | 7.287 |
| RGB+Texture+TIR | R² | 0.636 | 0.614 | 0.620 | 0.615 | 0.647 | 0.652 | 0.655 | 0.717 |
| | RMSE (t ha⁻¹) | 0.718 | 0.739 | 0.733 | 0.738 | 0.707 | 0.702 | 0.698 | 0.696 |
| | NRMSE (%) | 7.210 | 7.424 | 7.367 | 7.415 | 7.098 | 7.051 | 7.014 | 7.061 |
| MS+Texture+TIR | R² | 0.627 | 0.616 | 0.620 | 0.568 | 0.641 | 0.643 | 0.645 | 0.711 |
| | RMSE (t ha⁻¹) | 0.720 | 0.730 | 0.726 | 0.774 | 0.706 | 0.704 | 0.702 | 0.699 |
| | NRMSE (%) | 7.234 | 7.336 | 7.296 | 7.777 | 7.090 | 7.072 | 7.049 | 7.046 |
| RGB+MS+Texture+TIR | R² | 0.640 | 0.631 | 0.649 | 0.589 | 0.660 | 0.668 | 0.667 | 0.733 |
| | RMSE (t ha⁻¹) | 0.701 | 0.709 | 0.692 | 0.748 | 0.681 | 0.673 | 0.674 | 0.668 |
| | NRMSE (%) | 7.038 | 7.127 | 6.949 | 7.519 | 6.842 | 6.760 | 6.771 | 6.727 |
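For readers who want to reproduce metrics of the kind reported above, the following Python sketch shows one way to compute R², RMSE, and NRMSE, and to form a simple mean ensemble. Several points are assumptions rather than statements from the source: R² is taken as the coefficient of determination (1 − SS_res/SS_tot), NRMSE is taken as RMSE divided by the mean observed yield, and `mean_ensemble` reads En_Mean as a plain average of learner predictions based only on its name. The RMSE-to-NRMSE ratio in the table (for example 0.848 / 0.08520 ≈ 9.95 t ha⁻¹) is consistent with normalization by a single yield value, but this section does not say which one.

```python
import math


def rmse(observed, predicted):
    """Root mean square error, in the units of the observations."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


def nrmse_percent(observed, predicted):
    # ASSUMPTION: RMSE normalized by the mean observed value.
    mean_obs = sum(observed) / len(observed)
    return 100.0 * rmse(observed, predicted) / mean_obs


def r_squared(observed, predicted):
    # ASSUMPTION: coefficient of determination, 1 - SS_res / SS_tot.
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def mean_ensemble(*prediction_sets):
    """Average the predictions of several learners, sample by sample."""
    return [sum(vals) / len(vals) for vals in zip(*prediction_sets)]


if __name__ == "__main__":
    # Hypothetical yields (t ha^-1) and learner predictions, for illustration.
    observed = [9.0, 10.0, 11.0]
    learner_a = [9.0, 10.0, 12.0]
    learner_b = [10.0, 10.0, 10.0]
    combined = mean_ensemble(learner_a, learner_b)
    print("R2   :", r_squared(observed, combined))
    print("RMSE :", rmse(observed, combined))
    print("NRMSE:", nrmse_percent(observed, combined))
```

Averaging is the simplest way to combine learners; stacked or weighted ensembles instead fit a second model on the base-learner predictions, which this sketch does not attempt.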

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).