1. Introduction

Prostate cancer (PCa) is a major global health concern, and effective treatment depends on precise diagnosis and grading. PCa is characterized by abnormal cell growth in the prostate gland and is the second most commonly diagnosed cancer in men worldwide. Each year, more than 1.4 million new cases are diagnosed and more than 375,000 deaths are attributed to the disease [1]. It typically starts in the cells of the prostate gland and may grow slowly while remaining confined to the gland, or it may spread rapidly to other parts of the body if left untreated. Early detection through screening tests such as prostate-specific antigen (PSA) testing and digital rectal examination (DRE) is crucial for timely treatment and improved outcomes.

The processing and scanning of tissue biopsies in the laboratory produce large whole-slide images (WSIs), which improve workflow efficiency and reproducibility. Because many PCa cases are "non-aggressive," deciding whether more serious intervention is necessary can be difficult. To support this decision, the Gleason grading system assigns tumors to numerical risk groups, the WHO/ISUP grade groups adopted by the International Society of Urological Pathology (ISUP) and the World Health Organization (WHO). Conventional grading, however, suffers from intra- and inter-observer variability, which can lead to suboptimal treatment decisions. Advances in imaging techniques and molecular biomarkers offer promising avenues for more precise diagnosis and risk stratification, guiding treatment strategies tailored to individual patients, and integrating these approaches into clinical practice could improve prognostic accuracy and therapeutic outcomes for men diagnosed with prostate cancer.

Machine learning (ML) and deep learning (DL) techniques have emerged as a promising way to address these complexities. They can improve the accuracy of diagnostic processes and reduce the variability associated with human interpretation, which in turn can lead to more reliable and personalized treatment decisions. Central to this diagnostic process is the Gleason score, which indicates the aggressiveness of prostate cancer by evaluating the patterns observed in cancerous tissue under a microscope. Higher Gleason scores correlate with more aggressive disease, helping clinicians choose treatment strategies for individual patients. By analyzing large volumes of medical data, including imaging and pathology reports, ML models can identify subtle patterns and markers indicative of disease severity. This supports more accurate grading and enables predictive models that help clinicians tailor treatment plans to individual patient characteristics.

In this paper, we perform a comparative study of ResNet models for prostate cancer diagnosis and grading. Our primary objective is to surpass the accuracies reported in previous literature by leveraging advances in deep learning, and thereby to contribute to ongoing efforts to improve patient outcomes and personalized care.

Recent advances in artificial intelligence (AI) and ML have reshaped prostate cancer diagnosis and Gleason grading.
Goldenberg et al. [

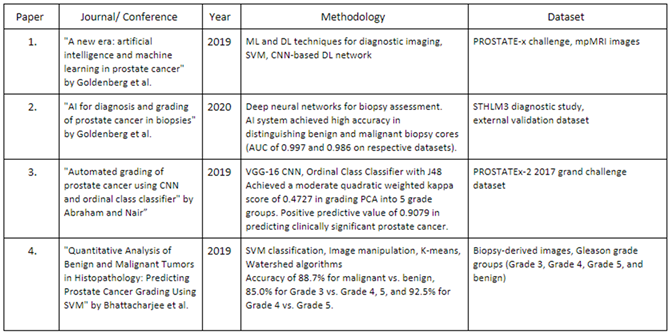

2] provide an overview of AI and ML’s potential in prostate cancer management, showing performance comparable to traditional diagnostic methods. Abraham and Nair [

4] developed a deep learning algorithm for histopathologic diagnosis and Gleason grading, focusing on the PROSTATEx-2 2017 dataset. A study in The Lancet Oncology also presents an AI system for prostate biopsies, demonstrating accuracy and performance similar to expert pathologists [

5]. SVM-based analysis in the work by Bhattacharjee et al. [

3] enriches the AI landscape, accurately classifying Gleason grading based on biopsy-derived image features. These studies highlight the promising trajectory of AI and ML applications, offering accurate and efficient methodologies for prostate cancer grading. Goldenberg et al. [

2] explored ML and deep learning (DL) techniques such as support vector machines (SVM) and convolutional neural networks (CNN) for diagnostic imaging in prostate cancer, using the PROSTATEx challenge dataset, the study shows performance comparable to radiologists. In The Lancet Oncology [

5], a study focuses on an AI system for prostate biopsies, achieving remarkable performance in distinguishing benign and malignant biopsy cores. Abraham and Nair [

4] introduced a novel approach for grading prostate cancer using a VGG-16 convolutional neural network and an ordinal class classifier, contributing to histopathologic diagnosis and Gleason grading. Bhattacharjee et al. [

3] presented a quantitative analysis of benign and malignant tumours in histopathology, predicting prostate cancer grading using SVM based on biopsy-derived images. The collective findings from these studies demonstrate AI and ML’s transformative potential in prostate cancer diagnosis and grading. These methods offer comparable performance to traditional approaches while presenting opportunities for automation, reducing workload, and providing expertise in resource-limited regions. The table summarizes the key findings in each paper.

2. Proposed Methods

The primary objective of our proposed work is to improve the accuracy of prostate cancer diagnosis and Gleason grading using advanced machine learning techniques. We implement several ResNet models and compare their accuracies for the classification and grading of prostate cancer. We also propose a model that uses a segmentation model (DeepLabv3) as a feature extractor for the trained CNN classifiers. Our work uses the histopathological dataset DiagSet, which comprises more than 2.6 million tissue patches from 430 fully annotated scans, 4675 scans with a binary diagnosis, and 46 scans diagnosed independently by histopathologists (accessible at https://aieconsilio.diag.pl). This combination of annotated patches, binary diagnoses, and expert-assigned diagnoses provides a foundation for investigating the factors that influence model performance. Our approach centres on ensembles of convolutional neural networks operating on histopathological scans at different scales. We implement a CNN framework tailored to the characteristics of DiagSet that detects cancerous tissue regions and predicts a scan-level diagnosis.
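One way to realize a scale ensemble and a scan-level diagnosis is sketched below in plain Python. It assumes that the class probabilities produced by models at different magnifications are simply averaged per patch, and that a scan is labelled cancerous when more than a hypothetical `threshold` fraction of its diagnostic patches (N or R1–R5) is predicted as R1–R5. The function names, the threshold, and the choice of which classes count as diagnostic are illustrative assumptions, not the authors' implementation.

```python
# Hypothetical sketch: combine per-scale patch predictions and derive a scan-level label.
CLASSES = ["BG", "T", "N", "A", "R1", "R2", "R3", "R4", "R5"]
CANCER = {"R1", "R2", "R3", "R4", "R5"}
DIAGNOSTIC = CANCER | {"N"}  # assumption: only N and R1-R5 patches count toward the diagnosis


def ensemble_probs(per_scale_probs):
    """Average the class-probability vectors that models at different scales give one patch."""
    n_models = len(per_scale_probs)
    return [sum(p[i] for p in per_scale_probs) / n_models for i in range(len(CLASSES))]


def top_class(probs):
    """Name of the most probable class."""
    return CLASSES[max(range(len(probs)), key=probs.__getitem__)]


def scan_diagnosis(patch_probs, threshold=0.05):
    """Return 'cancer' if more than `threshold` of diagnostic patches are predicted R1-R5."""
    labels = [top_class(p) for p in patch_probs]
    diagnostic = [label for label in labels if label in DIAGNOSTIC]
    if not diagnostic:
        return "undetermined"
    cancer_share = sum(label in CANCER for label in diagnostic) / len(diagnostic)
    return "cancer" if cancer_share > threshold else "benign"


# Two patches, each scored by a hypothetical 20x model and a 10x model.
patch_a = ensemble_probs([
    [0.0, 0.0, 0.6, 0.0, 0.0, 0.0, 0.4, 0.0, 0.0],
    [0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.8, 0.0, 0.0],
])
patch_b = ensemble_probs([
    [0.0, 0.1, 0.9, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
])
print(top_class(patch_a), top_class(patch_b), scan_diagnosis([patch_a, patch_b]))
```

Averaging probabilities is the simplest ensembling rule; weighted averages or majority voting over the per-scale labels are equally plausible alternatives.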

2.1. Dataset

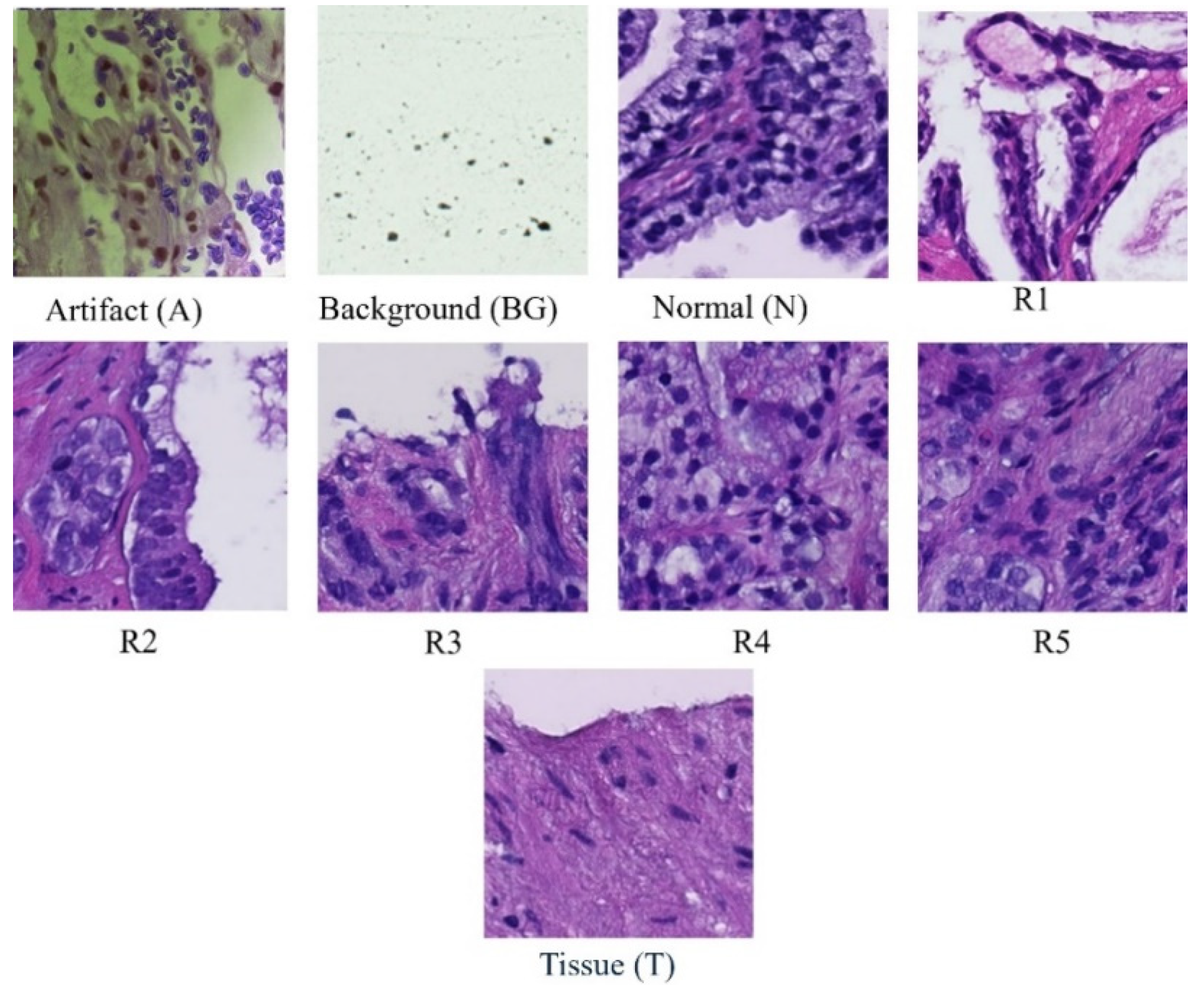

Each whole-slide image is partitioned into 256 × 256 pixel blocks, which form the input to convolutional neural network (CNN) classifiers. These classifiers assign each block to one of nine classes: scan background (BG), tissue background (T), normal healthy tissue (N), acquisition artifact (A), or one of the five Gleason grades (R1–R5). We use pre-trained ResNet models, specifically ResNet-18, ResNet-34, and ResNet-50, so that the dataset is examined with networks of increasing depth. Comparing several architectures makes the analysis more robust and shows how well each one captures the features that distinguish Gleason grades and tissue types. The same block partitioning and classification are repeated at four magnification levels: 40×, 20×, 10×, and 5×. Comparing the accuracies obtained at each magnification reveals how classification performance varies with image resolution, and therefore how well the proposed approach scales across resolutions. This multi-level evaluation matters because histopathological analysis routinely works at different magnifications.
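The block partitioning can be sketched in plain Python. This minimal example assumes that the scan is stored at 40×, so that 20×, 10×, and 5× correspond to downsampling factors of 2, 4, and 8, and that incomplete blocks at the right and bottom edges are discarded. It only computes block coordinates; the pixels themselves would be read with a slide-reading library such as OpenSlide. The slide size in the example is hypothetical.

```python
TILE = 256  # block size in pixels, as in the text
BASE_MAGNIFICATION = 40  # assumption: the full-resolution scan is 40x


def level_size(width, height, magnification, base=BASE_MAGNIFICATION):
    """Approximate slide size in pixels after downsampling from `base` to `magnification`."""
    factor = base // magnification  # 40x -> 1, 20x -> 2, 10x -> 4, 5x -> 8
    return width // factor, height // factor


def tile_origins(width, height, tile=TILE):
    """Top-left (x, y) corners of every complete, non-overlapping tile x tile block."""
    return [
        (x, y)
        for y in range(0, height - tile + 1, tile)
        for x in range(0, width - tile + 1, tile)
    ]


# Hypothetical 10240 x 7680 pixel scan at 40x.
counts = {}
for mag in (40, 20, 10, 5):
    w, h = level_size(10240, 7680, mag)
    counts[mag] = len(tile_origins(w, h))
print(counts)  # {40: 1200, 20: 300, 10: 70, 5: 15}
```

Halving the magnification quarters the number of blocks, so each block at 5× covers 64 times the tissue area of a block at 40×; this trade-off between context and detail is what the multi-magnification comparison probes.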

3. Methodology

At each magnification level (40×, 20×, 10×, and 5×), a structured methodology was used to prepare and evaluate the dataset.

3.1. Dataset

The dataset was first partitioned into separate training and testing sets with an equal distribution of images across classes, to avoid biased evaluation and to keep both sets representative of the data. To address class imbalance and make training more effective, a subset of 4000 images per class was then selected. This curated subset exposes the model to a diverse and balanced set of examples, which supports generalization across the range of tissue appearances in the data. The preparation is intended to let the model learn from a comprehensive and representative set of histopathological images, improving both its performance and its robustness for prostate cancer diagnosis and Gleason grading.
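A minimal plain-Python sketch of the two preparation steps follows: per-class subsampling capped at 4000 images, and a per-class (stratified) train/test split. The 20% test fraction, the fixed random seed, the order of the two steps, and the toy patch identifiers are assumptions for illustration.

```python
import random
from collections import Counter, defaultdict


def group_by_class(samples):
    """Map each label to the list of sample ids carrying it."""
    by_class = defaultdict(list)
    for sample_id, label in samples:
        by_class[label].append(sample_id)
    return by_class


def balanced_subset(samples, per_class=4000, seed=0):
    """Draw up to `per_class` samples per class without replacement."""
    rng = random.Random(seed)
    subset = []
    for label, ids in sorted(group_by_class(samples).items()):
        chosen = rng.sample(ids, min(per_class, len(ids)))
        subset.extend((sample_id, label) for sample_id in chosen)
    return subset


def stratified_split(samples, test_fraction=0.2, seed=0):
    """Split each class separately so train and test keep the same class proportions."""
    rng = random.Random(seed)
    train, test = [], []
    for label, ids in sorted(group_by_class(samples).items()):
        ids = ids[:]  # copy before shuffling
        rng.shuffle(ids)
        n_test = round(len(ids) * test_fraction)
        test.extend((sample_id, label) for sample_id in ids[:n_test])
        train.extend((sample_id, label) for sample_id in ids[n_test:])
    return train, test


# Toy data: an imbalanced set of patch ids for three of the nine classes.
data = (
    [(f"n{i}", "N") for i in range(50)]
    + [(f"r3_{i}", "R3") for i in range(20)]
    + [(f"a{i}", "A") for i in range(8)]
)
subset = balanced_subset(data, per_class=10)
train, test = stratified_split(subset, test_fraction=0.2)
print(Counter(label for _, label in subset))
print(len(train), len(test))
```

Classes with fewer samples than the cap (here `A`) are kept whole, so the cap limits the majority classes rather than inflating the minority ones.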

3.2. Training Phase

ResNet-18, ResNet-34, and ResNet-50 were selected because of their established effectiveness in capturing the complex features of histopathological images, which here must distinguish Gleason grades and tissue types across magnification levels. Training ran for 100 epochs, starting from pre-trained weights. Each model was fine-tuned on the curated dataset to classify tissue patches into the nine classes: scan background, tissue background, normal healthy tissue, acquisition artifact, and the five Gleason grades.

During training, 5-fold cross-validation was used. The training data were partitioned into five subsets; in each of five rounds, one subset served as the validation set and the remaining four were used for training, so that every subset was used exactly once for validation. Because each fold is evaluated by a model that was not trained on it, cross-validation exposes overfitting and gives a more reliable estimate of performance on unseen data than a single split. Averaging over the five folds also reduces the dependence of the reported metrics on any particular subset of the data, and the per-fold results can guide the choice of hyperparameters.
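The 5-fold procedure can be sketched as a generator of index splits. This plain-Python version assumes the samples are shuffled once with a fixed seed and that, when the number of samples is not divisible by five, the first folds receive one extra sample; the name `k_fold_splits` is hypothetical. In each round, a fresh model would be trained on `train_idx` and validated on `val_idx`.

```python
import random


def k_fold_splits(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; every index is used for validation exactly once."""
    order = list(range(n_samples))
    random.Random(seed).shuffle(order)  # shuffle once so folds are not ordered by source
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # spread the remainder over the first folds
        val_idx = order[start:start + size]
        train_idx = order[:start] + order[start + size:]
        yield train_idx, val_idx
        start += size


folds = list(k_fold_splits(12, k=5))
for i, (train_idx, val_idx) in enumerate(folds, start=1):
    print(f"fold {i}: {len(train_idx)} train, {len(val_idx)} validation")
```

These folds are not stratified; when classes are imbalanced, a class-aware variant such as scikit-learn's `StratifiedKFold` may be preferable.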

3.3. Testing Phase

For the testing phase, a stratified split function was used to keep the classes balanced in the test set. Stratification ensures that performance is assessed evenly across all classes and magnification levels, without bias toward any class. Together with the pre-trained ResNet models and careful data preparation, this testing strategy provides a sound basis for evaluating histopathological classification across magnification contexts.

In the next stage of model development, we integrated the DeepLabv3 segmentation model into the feature extraction process to strengthen the predictive capability of our classification models. Input images are fed to DeepLabv3, and its output, which encodes detailed spatial and semantic information, is passed to a CNN classifier that uses these features to generate predictions. Both the ResNet and DeepLabv3 models are first initialized. The parameters of both models are then frozen during training, except for the final fully connected layer of the ResNet model, which is replaced to match the classes of the dataset. In this way, the feature extraction of DeepLabv3 is preserved while the ResNet classifier is fine-tuned on the extracted features, combining the transfer learning potential of ResNet with the feature-rich representations of DeepLabv3. This is expected to be particularly useful when labelled data are limited.

The approach offers two main advantages. First, DeepLabv3 captures intricate features of the input images, giving the CNN classifier a richer representation on which to base its predictions. Second, using a segmentation model as the feature extractor lets the classifier exploit spatial relationships and semantic context within the images.
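The effect of parameter freezing, where only the final fully connected layer is trained on features from a fixed extractor, can be illustrated with a dependency-free toy. In this sketch, a fixed projection followed by ReLU stands in for DeepLabv3, and a softmax (multinomial logistic) regression layer trained with stochastic gradient descent stands in for the replaced ResNet head. Both stand-ins, the 2-D toy inputs, and the learning rate and epoch count are assumptions, not the authors' configuration. In a framework such as PyTorch, the same effect is usually obtained by setting `requires_grad = False` on the frozen parameters and passing only the new layer's parameters to the optimizer.

```python
import math

# Stand-in for the frozen feature extractor (DeepLabv3 in the text): a fixed
# projection followed by ReLU. These weights are never updated.
EXTRACTOR_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]


def extract_features(x, weights=EXTRACTOR_WEIGHTS):
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_for(W, b, f):
    return [sum(wj * fj for wj, fj in zip(W[c], f)) + b[c] for c in range(len(W))]


def train_head(features, labels, n_classes, lr=0.5, epochs=200):
    """Fit only the final linear layer (W, b) with softmax cross-entropy and SGD."""
    d = len(features[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        for f, y in zip(features, labels):
            p = softmax(logits_for(W, b, f))
            for c in range(n_classes):
                grad = p[c] - (1.0 if c == y else 0.0)  # d(loss)/d(logit_c)
                b[c] -= lr * grad
                for j in range(d):
                    W[c][j] -= lr * grad * f[j]
    return W, b


def predict(W, b, f):
    scores = logits_for(W, b, f)
    return max(range(len(scores)), key=scores.__getitem__)


# Toy inputs for three classes; the head only ever sees extractor outputs.
inputs = [(2.0, 0.0), (1.5, 0.2), (-2.0, 0.0), (-1.5, -0.2), (0.0, 2.0), (0.2, 1.5)]
labels = [0, 0, 1, 1, 2, 2]
frozen_before = [row[:] for row in EXTRACTOR_WEIGHTS]
features = [extract_features(x) for x in inputs]
W, b = train_head(features, labels, n_classes=3)
print([predict(W, b, f) for f in features])
```

Because gradients are computed only for `W` and `b`, the extractor weights are identical before and after training; only the head adapts to the target classes.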

4. Results

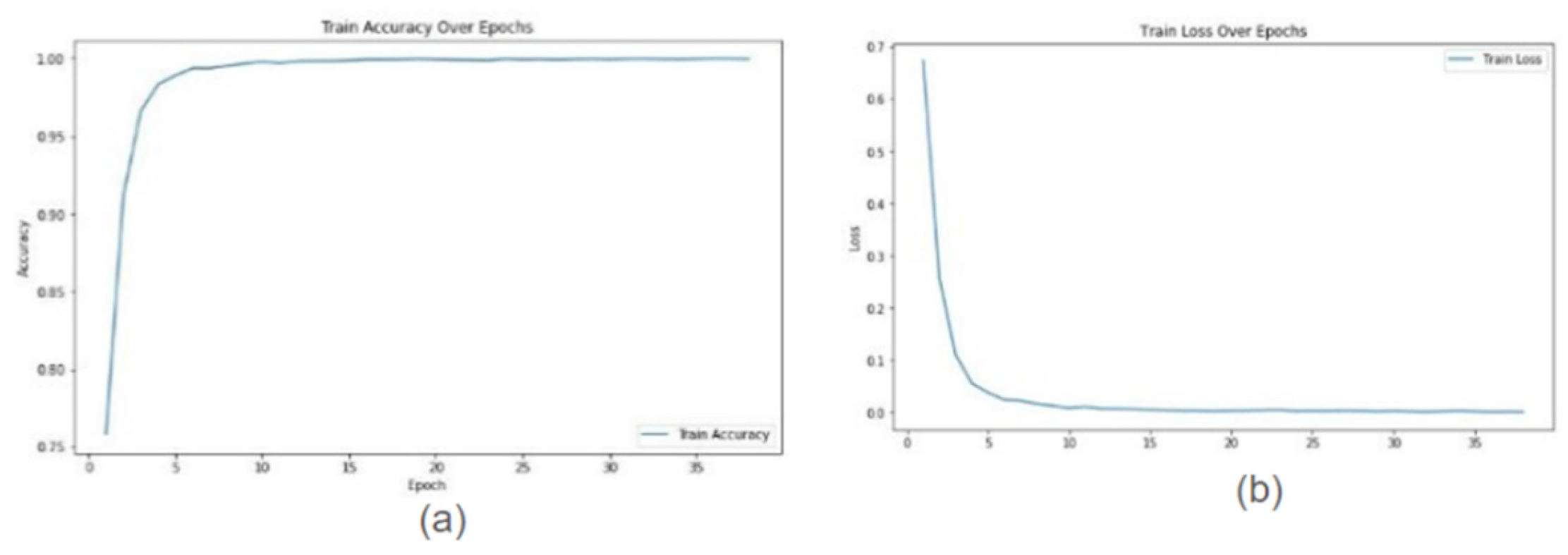

We evaluated the proposed models across four magnification levels (40×, 20×, 10×, and 5×) for prostate cancer diagnosis and Gleason grading, using the ResNet18, ResNet34, and ResNet50 architectures, all of which achieved high accuracy throughout. For ResNet18, testing accuracies ranged from 0.9956 at 10× magnification to 0.9992 at 20× magnification, indicating reliable predictions across image resolutions. ResNet34 achieved even higher testing accuracies, reaching 0.9999 at both 40× and 20× and remaining only slightly lower at the other magnifications (0.9957 at 10× and 0.9993 at 5×). ResNet50 also performed well, with testing accuracies between 0.9915 at 10× magnification and 0.9981 at 5×, maintaining high performance across magnification levels. The graphs show the training and validation accuracy and the training and validation loss for the ResNet34 model on the 20× magnification images.

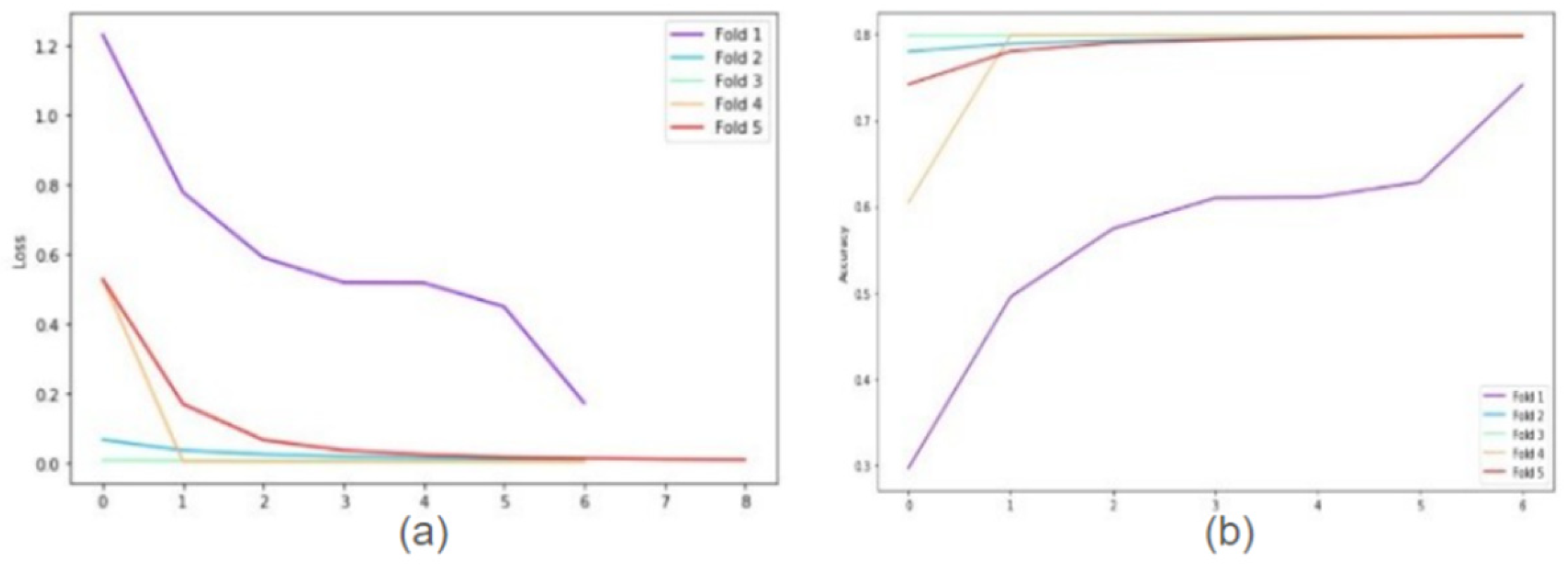

The following graphs show the training loss, accuracy, and validation loss for each fold of the ResNet34 model on the 20× magnification image set when 5-fold cross-validation is used. In the training-accuracy graph, the third and fourth folds reach higher accuracies and very low losses from the first epochs. One possible explanation is that the images in those folds closely resemble images already used to train the model. Evaluating the model both with and without 5-fold cross-validation allowed us to quantify the benefit of the technique.

Table 2 shows the robustness and generalization ability of the models, highlighting the improvements achieved through the validation process. Figure 1 and Figure 2 illustrate the model's learning trajectory and its behaviour during the training and validation stages.

Figure 2 depicts the training loss for each fold, along with the accuracies and validation loss, for the ResNet34 model at 20× magnification. The supplementary material shows the loss and accuracy curves for all ResNet architectures at all magnifications.

Figure 1.

Samples of Classes in Dataset.

Figure 1.

Graphs for the ResNet34 model on 20× magnification images: (a) training accuracy; (b) training loss.

Figure 2.

Cross-fold training loss (a) and training accuracies (b) for the ResNet34 model at 20× magnification.

Table 1.

Summary of the Literature Survey.

These findings collectively emphasize the adaptability, reliability, and versatility of ResNet18, ResNet34, and ResNet50 in prostate cancer diagnosis and Gleason grading across different magnification levels. The ability of these models to maintain high accuracy rates across varying resolutions is essential for ensuring accurate and consistent diagnostic assessments, thereby contributing to improved patient outcomes and clinical decision-making in the field of prostate cancer pathology.

Table 2.

Model performance with 5-fold cross-validation.

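| Magnification | Architecture | Average Training Accuracy | Testing Accuracy |
|---|---|---|---|
| 40× | ResNet18 | 0.9995 | 0.9977 |
| 20× | ResNet18 | 0.9996 | 0.9992 |
| 10× | ResNet18 | 0.9993 | 0.9964 |
| 5× | ResNet18 | 0.9995 | 0.9921 |
| 40× | ResNet34 | 0.9992 | 0.9999 |
| 20× | ResNet34 | 0.9993 | 0.9999 |
| 10× | ResNet34 | 0.9998 | 1.0000 |
| 5× | ResNet34 | 0.9993 | 0.9993 |
| 40× | ResNet50 | 0.9993 | 0.9957 |
| 20× | ResNet50 | 0.9991 | 0.9915 |
| 10× | ResNet50 | 0.9956 | 0.9952 |
| 5× | ResNet50 | 0.9893 | 0.9981 |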

5. Discussion

The study demonstrates the effectiveness of ResNet models (ResNet18, ResNet34, and ResNet50) for diagnosing prostate cancer and assigning Gleason grades. These models perform well across magnification levels, indicating that they capture the crucial details in histopathological images. There is, however, room to explore other algorithms: techniques such as Vision Transformers could improve the models' ability to discern subtle variations and thereby increase diagnostic accuracy. The success of the approach for prostate cancer also suggests that it may apply to other malignancies. Extending the methodology to datasets and histopathological images from other cancer types could support more accurate diagnosis and grading across cancers, contribute to a broader understanding of histopathological features, and aid the development of diagnostic tools applicable across diverse oncological contexts. Another prospect is to turn the developed models into an accessible web application. This would broaden access to advanced prostate cancer diagnostic tools and help healthcare professionals integrate machine learning into clinical practice. Such an application could offer real-time analysis of histopathological images, automated Gleason grading, and integration with existing electronic medical record systems for streamlined patient management. Future work could also incorporate additional modalities from electronic health record (EHR) data, such as radiological imaging, genetic information, and laboratory test results, to provide a more holistic approach to diagnosing and grading prostate cancer. Using patient data more comprehensively in this way could improve model accuracy, leading to better patient outcomes and more personalized treatment plans.

6. Conclusions

The proposed approach, framed as a classification problem and using ResNet models with the DiagSet dataset, shows potential as a robust tool for prostate cancer diagnosis and Gleason grading. The integration of machine learning and deep learning techniques represents a significant advance in this field. The methodology, centred on convolutional neural networks operating on histopathological scans at multiple magnification levels, draws on the rich resource provided by DiagSet and offers insights into the features that characterize prostate cancer pathology across Gleason grades and tissue types. The evaluation across magnification levels confirms the adaptability and reliability of the approach, and the consistently high accuracies achieved by the ResNet models support their stability and efficacy, suggesting that they could become valuable assets in clinical practice. Overall, the study lays a solid groundwork for advancing prostate cancer diagnosis and Gleason grading through machine learning. By exploring other algorithms and improving efficiency, future research can enhance the accuracy, scalability, and clinical utility of these models, benefiting both healthcare providers and patients.

Funding

This research received no funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rice-Stitt, T., Valencia-Guerrero, A., Cornejo, K. M., & Wu, C. L. Updates in Histologic Grading of Urologic Neoplasms. Archives of Pathology & Laboratory Medicine 2020, 144(3), 335–343.

- Goldenberg, S. L., Nir, G., & Salcudean, S. E. A new era: Artificial intelligence and machine learning in prostate cancer. Nature Reviews Urology 2019, 16(7), 391-403.

- Ström, P.; et al. Artificial intelligence for diagnosis and grading of prostate cancer in biopsies: A population-based, diagnostic study. The Lancet Oncology 2020, 21(2), 222-232.

- Bhattacharjee, S.; et al. Quantitative Analysis of Benign and Malignant Tumors in Histopathology: Predicting Prostate Cancer Grading Using SVM. Applied Sciences 2019, 9(15), 2969.

- Abraham, B., & Nair, M. S. Automated grading of prostate cancer using convolutional neural network and ordinal class classifier. Informatics in Medicine Unlocked 2019, 17, 100256.

- Alzubaidi, L.; et al. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. Journal of Big Data 2021, 8(1), 53.

- Wang, J.; et al. CNN-RNN: A unified framework for multi-label image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, 2285-2294.

- Kumar, M. D., Babaie, M., Zhu, S., Kalra, S., & Tizhoosh, H. R. A comparative study of CNN, BoVW and LBP for classification of histopathological images. In 2017 IEEE Symposium Series on Computational Intelligence (SSCI), 2017, 1-7.

- LeCun, Y., Bengio, Y., & Hinton, G. Deep learning. Nature 2015, 521(7553), 436-444.

- Pati, P.; et al. Weakly supervised joint whole-slide segmentation and classification in prostate cancer. Medical Image Analysis 2023, 89, 102915.

- Fakoor, R., Ladhak, F., Nazi, A., & Huber, M. Using deep learning to enhance cancer diagnosis and classification. In Proceedings of the International Conference on Machine Learning 2013, 28, 3937-3949.

- Xu, W., Fu, Y. L., & Zhu, D. ResNet and its application to medical image processing: Research progress and challenges. Computer Methods and Programs in Biomedicine 2023, 107660.

- Almoosawi, N. M., & Khudeyer, R. S. ResNet-34/DR: A residual convolutional neural network for the diagnosis of diabetic retinopathy. Informatica 2021, 45(7).

- Guo, M., & Du, Y. Classification of thyroid ultrasound standard plane images using ResNet-18 networks. In 2019 IEEE 13th International Conference on Anti-counterfeiting, Security, and Identification (ASID), 2019, 324-328.

- Yurtkulu, S. C., Şahin, Y. H., & Unal, G. Semantic segmentation with extended DeepLabv3 architecture. In 2019 27th Signal Processing and Communications Applications Conference (SIU), 2019, 1-4.

- Heryadi, Y.; et al. The effect of ResNet model as feature extractor network to performance of DeepLabV3 model for semantic satellite image segmentation. In 2020 IEEE Asia-Pacific Conference on Geoscience, Electronics and Remote Sensing Technology (AGERS), 2020, 74-77.

- Kayalibay, B., Jensen, G., & van der Smagt, P. CNN-based segmentation of medical imaging data. arXiv preprint arXiv:1701.03056 2017.

- Mortazi, A., & Bagci, U. Automatically designing CNN architectures for medical image segmentation. In Machine Learning in Medical Imaging: 9th International Workshop, MLMI 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, September 16, 2018, Proceedings 9, pp. 98-106. Springer International Publishing.

- Liu, F., Lin, G., & Shen, C. CRF learning with CNN features for image segmentation. Pattern Recognition 2015, 48(10), 2983-2992.

- Sharma, P., Berwal, Y. P. S., & Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Information Processing in Agriculture 2020, 7(4), 566-574.

- Dolz, J.; et al. HyperDense-Net: A hyper-densely connected CNN for multi-modal image segmentation. IEEE Transactions on Medical Imaging 2019, 38(5), 1116-1126.

- Sultana, F., Sufian, A., & Dutta, P. Evolution of image segmentation using deep convolutional neural network: A survey. Knowledge-Based Systems 2020, 201, 106062.

- Frontiers in Radiology. Cardiothoracic Imaging. Vol. 3. January 12, 2024.

- He, K., Zhang, X., Ren, S., & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, 770–778.

- Wang, X.; et al. Weakly supervised framework for detecting lesions in medical images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, 3514-3522.

- Liu, F.; et al. Deep residual network for joint analysis of breast and optical images in cancer detection. IEEE Journal of Biomedical and Health Informatics 2019, 23(4), 1395-1405.

- Zhu, H.; et al. ResNet-50 architecture for breast cancer histopathological image classification. Journal of Medical Systems 2020, 44(3), 77.

- Cao, R.; et al. Prostate cancer detection and segmentation in multi-parametric MRI via CNN and conditional random field. In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), 2019, pp. 1900-1904.

- Soni, M.; et al. Light weighted healthcare CNN model to detect prostate cancer on multiparametric MRI. Computational Intelligence and Neuroscience 2022.

- Tolkach, Y.; et al. High-accuracy prostate cancer pathology using deep learning. Nature Machine Intelligence 2020, 2(7), 411-418.

- Abbasi, A. A.; et al. Detecting prostate cancer using deep learning convolution neural network with transfer learning approach. Cognitive Neurodynamics 2020, 14, 523-533.

- De Vente, C.; et al. Deep learning regression for prostate cancer detection and grading in bi-parametric MRI. IEEE Transactions on Biomedical Engineering 2020, 68(2), 374-383.

- Anguita, D.; et al. The ‘K’ in K-fold Cross Validation. In ESANN 2012, 102, 441-446.

- Li, Z.; et al. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Transactions on Neural Networks and Learning Systems 2021, 33(12), 6999-7019.

- Albawi, S., Mohammed, T. A., & Al-Zawi, S. Understanding of a convolutional neural network. In 2017 International Conference on Engineering and Technology (ICET) 2017, 1-6.

- Shah, R. B. Current perspectives on the Gleason grading of prostate cancer. Archives of Pathology & Laboratory Medicine 2009, 133(11), 1810-1816.

- Tabesh, A.; et al. Multifeature prostate cancer diagnosis and Gleason grading of histological images. IEEE Transactions on Medical Imaging 2007, 26(10), 1366-1378.

- Kott, O.; et al. Development of a deep learning algorithm for the histopathologic diagnosis and Gleason grading of prostate cancer biopsies: A pilot study. European Urology Focus 2021, 7(2), 347-351.

- Sarwinda, D.; et al. Deep learning in image classification using residual network (ResNet) variants for detection of colorectal cancer. Procedia Computer Science 2021, 179, 423-431.

- Hou, L.; et al. Patch-based convolutional neural network for whole slide tissue image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, 2424-2433.

- Koziarski, M.; et al. Combined cleaning and resampling algorithm for multi-class imbalanced data with label noise. Knowledge-Based Systems 2020, 204, 106223.

- Deng, J.; et al. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, 248-255.

- Samaratunga, H.; et al. From Gleason to International Society of Urological Pathology (ISUP) grading of prostate cancer. Scandinavian Journal of Urology 2016, 50(5), 325-329.

- Nir, G.; et al. Automatic grading of prostate cancer in digitized histopathology images: Learning from multiple experts. Medical Image Analysis 2018, 50, 167-180.

- Gleason, D. F. Classification of prostatic carcinomas. Cancer Chemotherapy Reports 1966, 50, 125-128.

- Nagpal, K.; et al. Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. NPJ Digital Medicine 2019, 2(1), 48.

- Litjens, G.; et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Scientific Reports 2016, 6(1), 26286.

- Srinidhi, C. L., Ciga, O., & Martel, A. L. Deep neural network models for computational histopathology: A survey. Medical Image Analysis 2021, 67, 101813.

- Lu, L.; et al. Deep learning and convolutional neural networks for medical image computing. Advances in Computer Vision and Pattern Recognition 2017, 10, 978-3.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).