Submitted: 16 May 2024

Posted: 17 May 2024

Abstract

Keywords:

I. Introduction

- Event Sources: These are the systems or applications that generate the data streams, such as sensor networks, social media platforms, financial transaction logs, or industrial machinery.

- Event Stream Processing (ESP): This layer ingests the event data in real time, performs preliminary filtering and transformation, and prepares it for further analysis.

- AI and Machine Learning (ML) Models: These models are trained on historical data to identify patterns, extract insights, and make predictions based on the real-time data stream.

- Decision-Making Algorithms: Based on the results of the AI models, these algorithms determine the appropriate actions or responses to be taken in real time.

- Serverless Functions: These are modular code units that are triggered by specific events and perform the tasks associated with data processing, model execution, decision-making, and output generation.

- Enabling real-time data ingestion and processing: By utilizing event-driven triggers, the framework ensures immediate processing of incoming data streams, minimizing latency and allowing for near-instantaneous responses.

- Facilitating scalable and elastic resource allocation: Serverless platforms automatically scale resources based on the volume of incoming events, ensuring efficient resource utilization and cost optimization.

- Promoting modularity and reusability: Serverless functions encapsulate specific tasks, leading to a modular and reusable workflow architecture.

- Enhancing flexibility and adaptability: The framework allows for dynamic adjustment of AI models and decision-making algorithms based on changing data patterns and evolving requirements.

II. Related Work

III. Methodology and Implementation

A. Event Sources and Data Ingestion

- Event Producers: Diverse systems and applications can act as event producers, continuously generating data streams relevant to the AI workflow. These sources could include sensor networks, social media platforms, financial transaction logs, industrial machinery, or any system capable of producing time-series data.

- Event Ingestion Mechanisms: Dedicated streaming platforms such as Apache Kafka or cloud-based pub/sub services (Amazon Kinesis, Azure Event Hubs) are used to capture and buffer the incoming event streams. These platforms offer high throughput, scalability, and fault tolerance, ensuring reliable data ingestion even during periods of high event volume.
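The producer and buffering roles described above can be illustrated with a minimal sketch. It assumes an in-memory `queue.Queue` as a stand-in for a streaming platform such as Kafka or Kinesis; the names `Event`, `produce_events`, and `drain` are hypothetical and do not correspond to any platform API.

```python
import queue
import time
from dataclasses import dataclass, field


@dataclass
class Event:
    """A single time-stamped reading from a hypothetical event producer."""
    source: str
    value: float
    timestamp: float = field(default_factory=time.time)


def produce_events(buffer: queue.Queue, source: str, values: list[float]) -> int:
    """Publish one event per value; return how many the buffer accepted."""
    accepted = 0
    for v in values:
        try:
            buffer.put_nowait(Event(source=source, value=v))
            accepted += 1
        except queue.Full:
            # A bounded buffer applies back-pressure instead of growing without limit.
            break
    return accepted


def drain(buffer: queue.Queue, max_batch: int) -> list[Event]:
    """Pull up to max_batch events in arrival order for downstream processing."""
    batch = []
    while len(batch) < max_batch:
        try:
            batch.append(buffer.get_nowait())
        except queue.Empty:
            break
    return batch


# Assumed capacity of 4: the fifth event is rejected once the buffer is full.
buffer = queue.Queue(maxsize=4)
accepted = produce_events(buffer, "sensor-1", [0.1, 0.2, 0.3, 0.4, 0.5])
batch = drain(buffer, max_batch=3)
```

A real streaming platform would add persistence, partitioning, and consumer offsets, none of which this sketch attempts to model.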

B. AI Components

- Machine Learning Models: Pre-trained machine learning models, specifically designed for the chosen application domain, are integrated into the workflow. These models are trained on historical data to identify patterns, extract insights, and make real-time predictions based on the incoming data streams. The choice of model type (e.g., classification, regression, anomaly detection) depends on the application’s specific requirements.

- Decision-Making Algorithms: Based on the outputs generated by the machine learning models, decision-making algorithms are employed to determine the appropriate actions or responses in real time. These algorithms may involve rule-based systems, reinforcement learning techniques, or more complex optimization methods tailored to the specific use case.
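As an illustration of how a model and a decision layer might fit together, the following sketch pairs a toy z-score anomaly detector with a rule-based decision function. The class `ZScoreAnomalyModel`, the function `decide`, the thresholds, and the sample data are all assumptions chosen for the example, not the models used in this work.

```python
import statistics


class ZScoreAnomalyModel:
    """Toy anomaly detector: fit mean/std on historical data, score new values."""

    def fit(self, history):
        self.mean = statistics.fmean(history)
        self.std = statistics.pstdev(history)
        return self

    def score(self, value):
        # Distance from the historical mean, in standard deviations.
        if self.std == 0:
            return 0.0
        return abs(value - self.mean) / self.std


def decide(score, warn_at=2.0, alert_at=3.0):
    """Rule-based decision layer mapping a model score to an action.

    The thresholds are hypothetical defaults, not values from the paper.
    """
    if score >= alert_at:
        return "alert"
    if score >= warn_at:
        return "warn"
    return "ignore"


# "Training" on historical data, then scoring values from the live stream.
model = ZScoreAnomalyModel().fit([10.0, 12.0, 11.0, 9.0, 8.0])
actions = [decide(model.score(v)) for v in (10.5, 13.0, 20.0)]
# actions == ["ignore", "warn", "alert"]
```

Keeping the model and the decision rule separate means either can be replaced (for example, by a learned policy) without changing the other.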

C. Serverless Function Orchestration

- Serverless Functions: Modular and reusable serverless functions are utilized to encapsulate the distinct tasks within the workflow. These functions are triggered by specific events received from the event ingestion platform. Each function performs a designated task, such as data pre-processing, model execution, decision-making, or output generation.

- Workflow Orchestration: A serverless workflow orchestration platform is employed to coordinate the execution of these functions in the desired sequence. This platform manages the data flow between functions, ensures proper error handling, and facilitates the overall execution of the AI workflow. Cloud-based offerings like AWS Step Functions, Azure Logic Apps, or Google Cloud Workflows provide suitable options for this purpose.
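The orchestration role (sequencing functions, passing data between them, and handling errors) can be sketched in plain Python. This is not the API of AWS Step Functions, Azure Logic Apps, or Google Cloud Workflows; `run_workflow` and the three step functions are hypothetical stand-ins.

```python
from typing import Any, Callable

Step = Callable[[Any], Any]


def run_workflow(steps: list[tuple[str, Step]], payload: Any, max_retries: int = 1):
    """Run steps in order, passing each output to the next step.

    Returns (result, log). If a step still fails after its retries, the
    result is None and the log records which step failed.
    """
    log = []
    for name, step in steps:
        for attempt in range(max_retries + 1):
            try:
                payload = step(payload)
                log.append((name, "ok"))
                break
            except Exception as exc:
                if attempt == max_retries:
                    log.append((name, f"failed: {exc}"))
                    return None, log
    return payload, log


# Hypothetical functions standing in for individual serverless functions.
def preprocess(event):
    return {"value": float(event["raw"])}


def infer(features):
    return {**features, "score": features["value"] / 100.0}


def decide(prediction):
    return "alert" if prediction["score"] > 0.5 else "ignore"


steps = [("preprocess", preprocess), ("infer", infer), ("decide", decide)]
result, log = run_workflow(steps, {"raw": "72"})
bad_result, bad_log = run_workflow(steps, {"raw": "n/a"})
```

In the second call the malformed input makes `preprocess` fail on every attempt, so the workflow stops early and the later steps never run.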

D. Function Implementation Details

- Programming Languages: The serverless functions are developed using programming languages supported by the chosen serverless platform (e.g., Python, Node.js, Java).

- Data Serialization and Deserialization: Efficient data serialization and deserialization formats (e.g., JSON, Protobuf) are employed to ensure seamless data exchange between functions and minimize processing overhead.

- Performance Optimization Techniques: Caching mechanisms, data partitioning, and asynchronous programming practices are implemented within the functions to optimize performance and minimize latency during real-time processing.
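The three implementation concerns listed above can be combined in one short sketch: JSON for serialization, `functools.lru_cache` for caching, and `asyncio` for asynchronous handling. The message schema (`id`, `type`, `value`) and the threshold values are assumptions made for illustration.

```python
import asyncio
import json
from functools import lru_cache


@lru_cache(maxsize=128)
def load_threshold(sensor_type: str) -> float:
    """Stand-in for an expensive lookup (e.g., a config store); cached per type."""
    # Hypothetical thresholds, not values from the paper.
    return {"temperature": 30.0, "pressure": 5.0}.get(sensor_type, 0.0)


async def handle(message: str) -> str:
    """Deserialize one event, evaluate it, and serialize the result."""
    event = json.loads(message)
    await asyncio.sleep(0)  # placeholder for non-blocking I/O
    exceeded = event["value"] > load_threshold(event["type"])
    return json.dumps({"id": event["id"], "exceeded": exceeded})


async def handle_batch(messages: list[str]) -> list[str]:
    # gather runs the handlers concurrently and preserves input order.
    return await asyncio.gather(*(handle(m) for m in messages))


messages = [
    json.dumps({"id": 1, "type": "temperature", "value": 35.2}),
    json.dumps({"id": 2, "type": "temperature", "value": 21.0}),
    json.dumps({"id": 3, "type": "pressure", "value": 7.5}),
]
results = [json.loads(r) for r in asyncio.run(handle_batch(messages))]
cache_hits = load_threshold.cache_info().hits
```

The second temperature event reuses the cached threshold, which is the kind of saving a per-instance cache can offer while a function instance stays warm.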

E. Implementation Details

- AWS Lambda: A widely adopted platform that integrates with many other AWS services.

- Azure Functions: Integrates seamlessly with the Azure ecosystem and provides various event triggers and bindings.

- Google Cloud Functions: Offers serverless capabilities alongside other Google Cloud services and machine learning tools.
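As one possible illustration of a function on such a platform, the sketch below shows an AWS Lambda-style Python handler that consumes records from a Kinesis stream, whose payloads arrive base64-encoded under `Records[].kinesis.data`. The payload schema (`id`, `value`), the threshold, and the helper `_fake_record` are assumptions for local experimentation only.

```python
import base64
import json


def lambda_handler(event, context):
    """Decode Kinesis records from a Lambda event and flag large readings."""
    records = event.get("Records", [])
    flagged = []
    for record in records:
        # Kinesis delivers each record's payload base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        reading = json.loads(payload)
        if reading["value"] > 100:  # hypothetical threshold
            flagged.append(reading["id"])
    return {"processed": len(records), "flagged": flagged}


def _fake_record(reading):
    """Build a minimal record for local testing (only the fields used above)."""
    data = base64.b64encode(json.dumps(reading).encode("utf-8")).decode("ascii")
    return {"kinesis": {"data": data}}


sample_event = {
    "Records": [
        _fake_record({"id": "a", "value": 42}),
        _fake_record({"id": "b", "value": 180}),
    ]
}
response = lambda_handler(sample_event, None)
# response == {"processed": 2, "flagged": ["b"]}
```

Equivalent handlers on Azure Functions or Google Cloud Functions would use those platforms' own trigger bindings and event formats.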

IV. Results

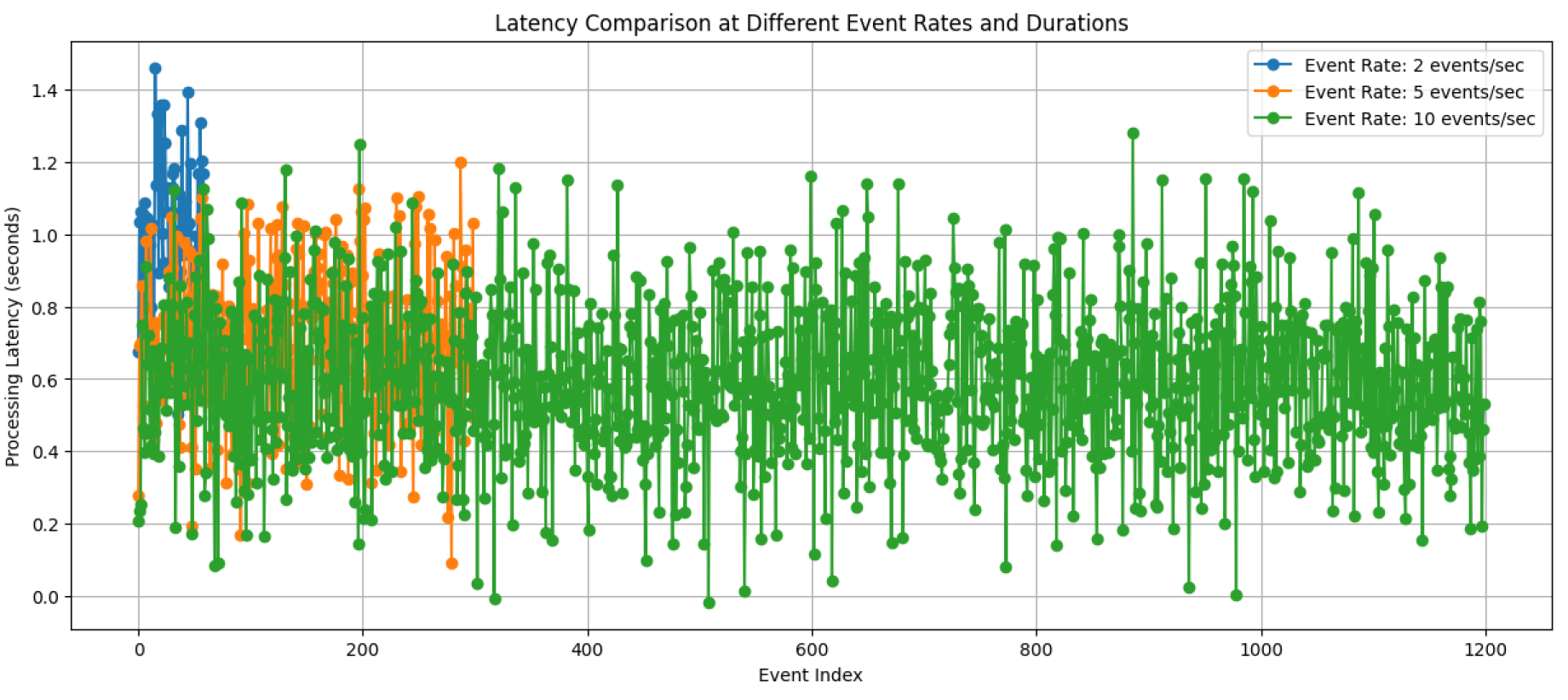

A. Latency

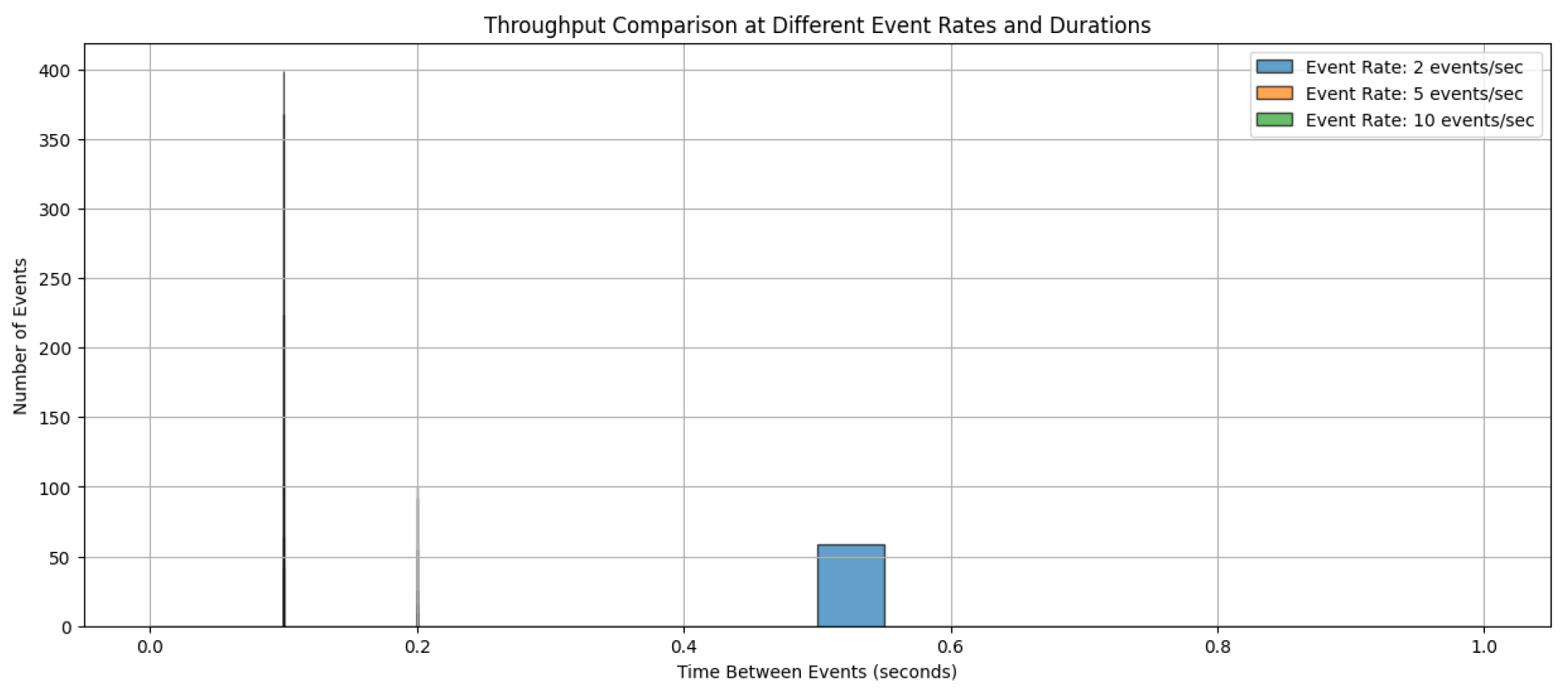

B. Throughput

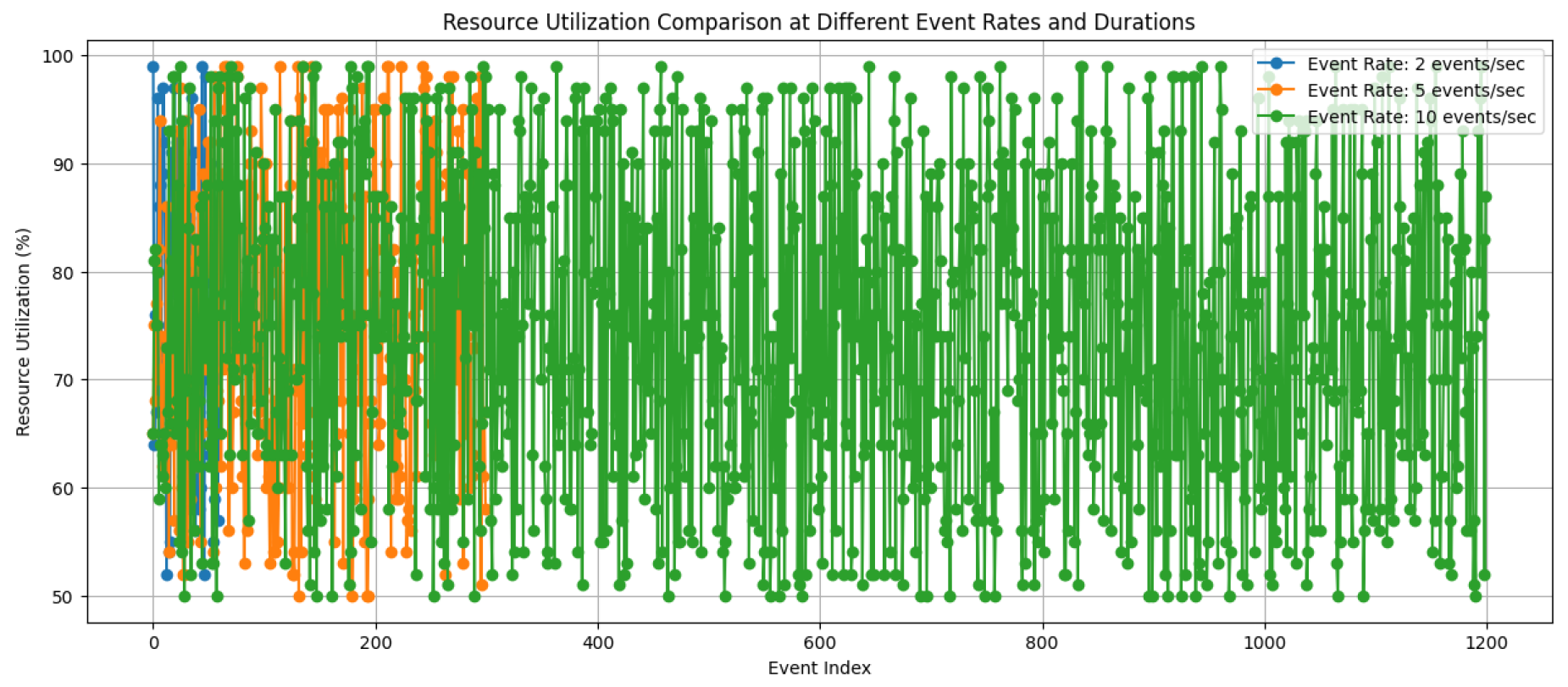

C. Resource Utilization

D. Outcomes and Effectiveness

- The provided code demonstrates a framework for simulating and visualizing the performance characteristics of an event-driven AI workflow.

- By generating data with varying event rates and durations, it showcases the potential trade-offs between latency, throughput, and resource utilization.

- This approach allows the impact of different event rates on overall workflow performance to be analyzed, which helps to identify potential bottlenecks and optimize resource allocation strategies.
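The kind of simulation described above can be approximated with a minimal sketch. It is not the code used to produce the reported results and does not reproduce the tabulated values; it assumes a single worker, evenly spaced arrivals, and a fixed service time of 0.15 s, all chosen purely for illustration.

```python
def simulate(event_rate: float, service_time: float, n_events: int) -> dict:
    """Simulate a single-worker FIFO queue with evenly spaced arrivals.

    Returns average latency (s), throughput (events/s), and utilization (0-1).
    """
    interarrival = 1.0 / event_rate
    worker_free_at = 0.0
    total_latency = 0.0
    busy_time = 0.0
    for i in range(n_events):
        arrival = i * interarrival
        start = max(arrival, worker_free_at)  # wait if the worker is busy
        worker_free_at = start + service_time
        total_latency += worker_free_at - arrival
        busy_time += service_time
    makespan = worker_free_at  # time of the last completion
    return {
        "avg_latency": total_latency / n_events,
        "throughput": n_events / makespan,
        "utilization": busy_time / makespan,
    }


# Assumed parameters: 200 events per run, 0.15 s of work per event.
results = {
    rate: simulate(rate, service_time=0.15, n_events=200)
    for rate in (2, 5, 10)
}
```

Under these assumptions the worker can serve at most 1/0.15 ≈ 6.67 events per second, so at 2 and 5 events per second latency stays at the service time, while at 10 events per second the queue grows, utilization reaches 100%, and average latency rises with the backlog.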

E. Additional Considerations

- The presented results are based on a simulated scenario with simplified data.

- Further analysis can incorporate more complex data processing logic, evaluate different serverless platforms and optimization techniques, and monitor real-time resource utilization data.

V. Discussion

A. Strengths and Limitations

Strengths:

- The provided framework offers a comprehensive approach to visualizing and analyzing the performance of event-driven AI workflows.

- It enables the exploration of the trade-offs between latency, throughput, and resource utilization across different event rates and durations.

- The use of tables alongside graphs provides a clear and concise overview of the quantitative results.

Limitations:

- The simulated data and processing logic represent a simplified scenario. Actual performance will vary significantly based on the specific implementation and chosen technologies.

- The framework requires further customization to incorporate more complex event processing pipelines and real-time resource monitoring data.

- While the code provides insights into performance metrics, it doesn’t directly address the accuracy of the AI models within the workflow.

B. Future Research Directions and Open Challenges

- Incorporating Machine Learning Complexity: Future research could involve integrating more complex machine learning models and analyzing their impact on processing times, resource utilization, and overall accuracy [19].

- Real-Time Resource Optimization: Developing dynamic resource allocation strategies based on real-time workload and resource utilization data can further optimize performance and cost efficiency [20].

- Edge Computing Integration: Exploring the integration of edge computing resources within the workflow can potentially reduce latency for time-sensitive applications.

- Explainability and Fairness in AI Decisions: As real-time AI increasingly influences decision-making, ensuring explainability and fairness of the models within the workflow becomes crucial.

- Application of the Framework: Future research could apply the proposed event-driven AI workflow framework to platforms like "Fostering Joint Innovation" and "Personalized Educational Frameworks" to enhance real-time data processing and decision-making capabilities in collaborative environments. This would involve adapting the framework to support collaborative feedback mechanisms and project management features, enabling users to collaborate effectively [21,22].

C. Ethical Considerations

- Bias and Fairness: It’s essential to continuously monitor and mitigate potential biases within the AI models used in the workflow to ensure fairness and ethical decision-making.

- Privacy and Data Security: Protecting the privacy of user data throughout the event processing pipeline and implementing robust security measures are critical considerations.

- Transparency and Explainability: Providing transparency in the decision-making processes of the AI models within the workflow is crucial for building trust and ensuring responsible AI development.

VI. Conclusion

References

- [1] Jamali, H., Karimi, A., & Haghighizadeh, M. (2018). A new method of Cloud-based Computation Model for Mobile Devices: Energy Consumption Optimization in Mobile-to-Mobile Computation Offloading. In Proceedings of the 6th International Conference on Communications and Broadband Networking (pp. 32–37). Singapore, Singapore.

- [2] Jamali, H., Shill, P.C., Feil-Seifer, D., Harris, F.C., Dascalu, S.M. (2024). A Schedule of Duties in the Cloud Space Using a Modified Salp Swarm Algorithm. In: Puthal, D., Mohanty, S., Choi, BY. (eds) Internet of Things. Advances in Information and Communication Technology. IFIPIoT 2023. IFIP Advances in Information and Communication Technology, vol 683. Springer, Cham. 10.1007/978-3-031-45878-15.

- [3] Vemulapalli, G. (2023). Architecting for Real-Time Decision-Making: Building Scalable Event-Driven Systems. International Journal of Machine Learning and Artificial Intelligence, 4(4), 1-20. doi: ....

- [4] Arjona, A., López, P. G., Sampé, J., Slominski, A., & Villard, L. (2021). Triggerflow: Trigger-based orchestration of serverless workflows. Future Generation Computer Systems, 124, 215-229. doi: ....

- [5] Burckhardt, S., Chandramouli, B., Gillum, C., Justo, D., Kallas, K., McMahon, C., … Zhu, X. (2022). Netherite: efficient execution of serverless workflows. Proc. VLDB Endow., 15(8), 1591–1604. doi: ....

- [6] Elgamal, T., Sandur, A., Nahrstedt, K., & Agha, G. (2018). Costless: Optimizing Cost of Serverless Computing through Function Fusion and Placement. In 2018 IEEE/ACM Symposium on Edge Computing (SEC) (pp. 300-312). Seattle, WA, USA.

- [7] Bardsley, D., Ryan, L., & Howard, J. (2018). Serverless Performance and Optimization Strategies. In 2018 IEEE International Conference on Smart Cloud (SmartCloud) (pp. 19-26). New York, NY, USA.

- [8] Lin, C., & Khazaei, H. (2021). Modeling and Optimization of Performance and Cost of Serverless Applications. IEEE Transactions on Parallel and Distributed Systems, 32(3), 615-632.

- [9] Mahmoudi, N., Lin, C., Khazaei, H., & Litoiu, M. (2019). Optimizing serverless computing: introducing an adaptive function placement algorithm. In Proceedings of the 29th Annual International Conference on Computer Science and Software Engineering (pp. 203–213). Toronto, Ontario, Canada. USA: IBM Corp.

- [10] Liu, X., Wen, J., Chen, Z., Li, D., Chen, J., Liu, Y., … Jin, X. (2023). FaaSLight: General Application-level Cold-start Latency Optimization for Function-as-a-Service in Serverless Computing. ACM Trans. Softw. Eng. Methodol., 32(5).

- [11] Carver, B., Zhang, J., Wang, A., Anwar, A., Wu, P., & Cheng, Y. (2020). Wukong: a scalable and locality-enhanced framework for serverless parallel computing. In Proceedings of the 11th ACM Symposium on Cloud Computing (pp. 1–15). Virtual Event, USA.

- [12] Kaplunovich, A., Joshi, K. P., & Yesha, Y. (2019). Scalability Analysis of Blockchain on a Serverless Cloud. In 2019 IEEE International Conference on Big Data (Big Data) (pp. 4214-4222). Los Angeles, CA, USA.

- [13] Hellerstein, J. M., Faleiro, J., Gonzalez, J. E., Sreekanti, V., Tumanov, A., & Wu, C. (2018). Serverless Computing: One Step Forward, Two Steps Back. arXiv preprint arXiv:1812.03651.

- [14] Aditya, P., et al. (2019). Will Serverless Computing Revolutionize NFV? Proceedings of the IEEE, 107(4), 667-678.

- [15] Paraskevoulakou, E., & Kyriazis, D. (2023). ML-FaaS: Toward Exploiting the Serverless Paradigm to Facilitate Machine Learning Functions as a Service. IEEE Transactions on Network and Service Management, 20(3), 2110-2123.

- [16] Naranjo, D. M., Risco, S., Moltó, G., & Blanquer, I. (2023). A serverless gateway for event-driven machine learning inference in multiple clouds. Concurrency and Computation: Practice and Experience, 35(18), e6728.

- [17] Datta, P., Kumar, P., Morris, T., Grace, M., Rahmati, A., & Bates, A. (2020). Valve: Securing Function Workflows on Serverless Computing Platforms. In Proceedings of The Web Conference 2020 (pp. 939-950). Taipei, Taiwan.

- [18] Raith, P., Rausch, T., Furutanpey, A., & Dustdar, S. (2023). Faas-sim: A trace-driven simulation framework for serverless edge computing platforms. Software: Practice and Experience, 53(12), 2327-2361.

- [19] Jiang, J., Gan, S., Du, B., et al. (2024). A systematic evaluation of machine learning on serverless infrastructure. The VLDB Journal, 33, 425–449.

- [20] Szalay, M., Mátray, P., & Toka, L. (2023). Real-Time FaaS: Towards a Latency Bounded Serverless Cloud. IEEE Transactions on Cloud Computing, 11(2), 1636-1650.

- [21] Jamali, H., Dascalu, S.M., Harris, F.C. (2024). Fostering Joint Innovation: A Global Online Platform for Ideas Sharing and Collaboration. arXiv preprint arXiv:2402.12718.

- [22] Shill, P. C., Wu, R., Jamali, H., Hutchins, B., Dascalu, S., Harris, F. C., & Feil-Seifer, D. (2023). WIP: Development of a Student-Centered Personalized Learning Framework to Advance Undergraduate Robotics Education. 2023 IEEE Frontiers in Education Conference (FIE), 1–5.

| Event Rate (events/second) | Average Latency (seconds) |
|---|---|
| 2 | 1.0054 |
| 5 | 0.6954 |
| 10 | 0.5964 |

| Event Rate (events/second) | Average Throughput (events/second) |
|---|---|
| 2 | 0.5000 |
| 5 | 0.2000 |
| 10 | 0.1000 |

| Event Rate (events/second) | Average Utilization (%) |
|---|---|
| 2 | 75.55 |
| 5 | 75.35 |
| 10 | 74.51 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).