Submitted: 11 May 2024

Posted: 13 May 2024


Abstract

Keywords:

1. Introduction

2. Materials and Methods

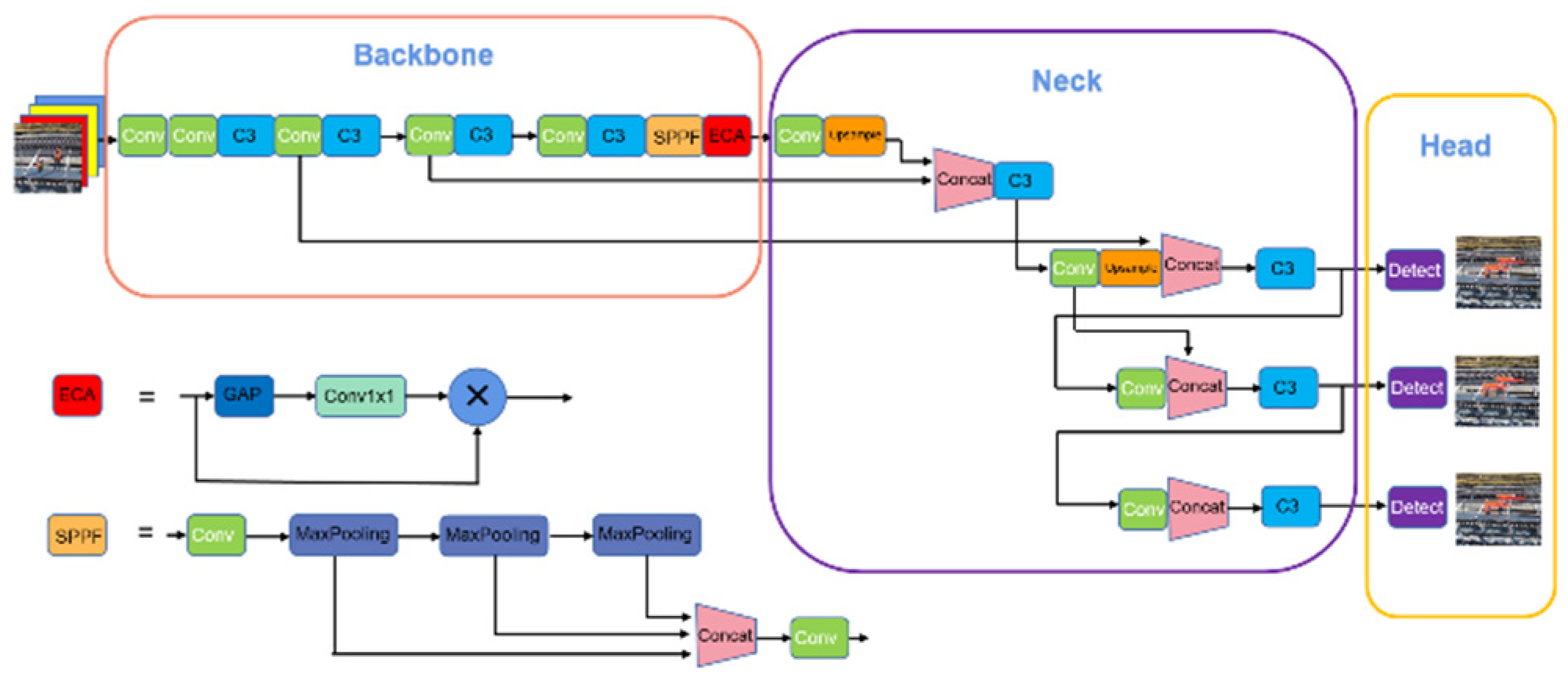

2.1. The YOLO-EA Network Structure

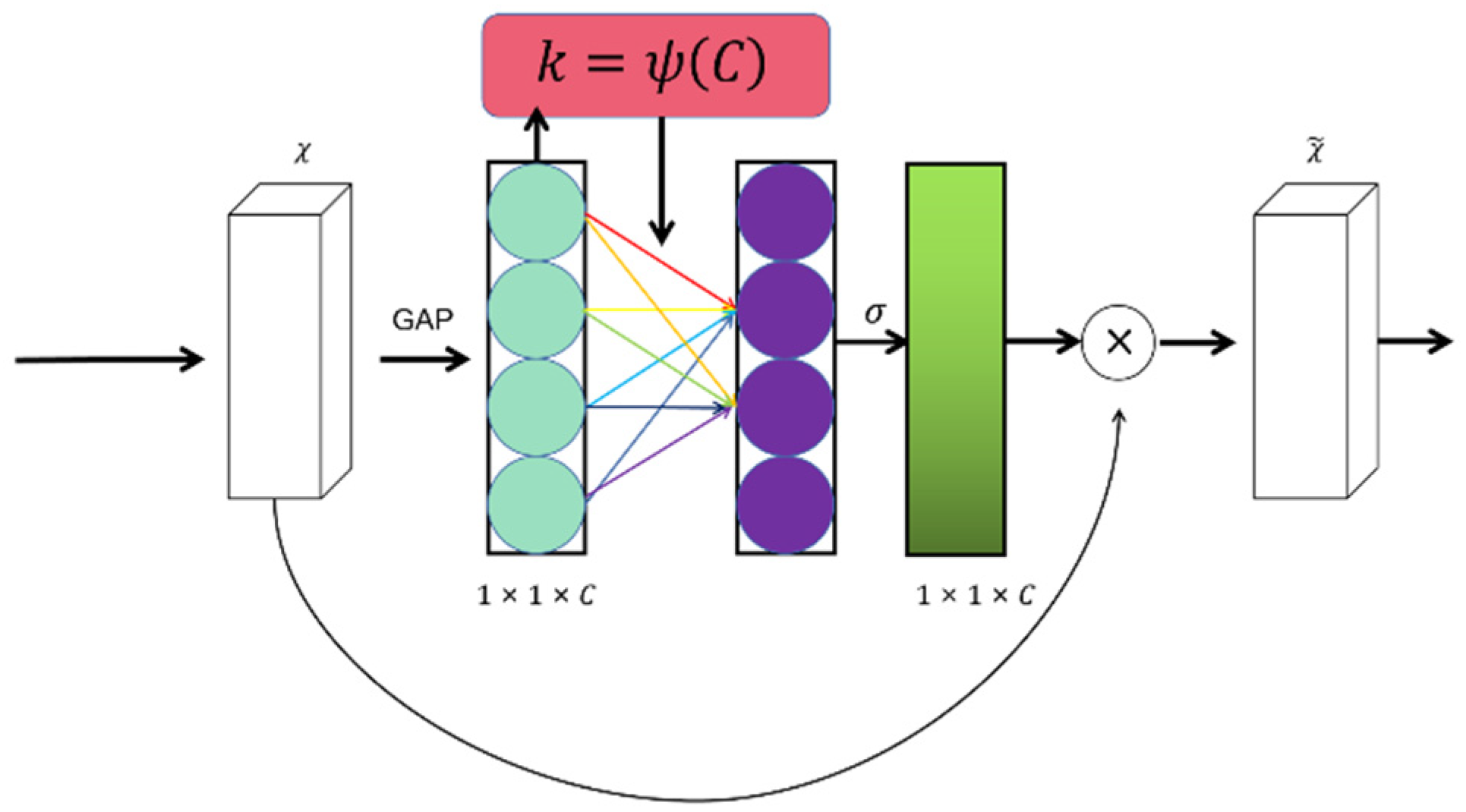

2.2. ECA Attention Mechanism Module
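As a minimal sketch of the efficient channel attention (ECA) idea named in this heading, the snippet below follows the general recipe of the cited ECA-Net paper: global average pooling per channel, a 1D convolution across neighbouring channels, a sigmoid gate, and channel-wise rescaling. It is an illustration in plain Python, not the authors' YOLO-EA code. The function names, the nested-list tensor layout `[C][H][W]`, and the convolution weights passed in by the caller are all assumptions made for this example; in a trained network the weights are learned.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # assumed layout: [channel][row][col]


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1D kernel size from ECA-Net: nearest odd value of (log2(C) + b) / gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_forward(x: FeatureMap, weights: List[float]) -> FeatureMap:
    """Apply channel attention to x using a 1D kernel (hypothetical weights)."""
    channels = len(x)
    pad = len(weights) // 2
    # 1) Global average pooling: one scalar descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # 2) 1D convolution over the channel axis with zero padding, then sigmoid.
    gates = []
    for i in range(channels):
        s = 0.0
        for j, w in enumerate(weights):
            idx = i + j - pad
            if 0 <= idx < channels:
                s += w * pooled[idx]
        gates.append(1.0 / (1.0 + math.exp(-s)))
    # 3) Rescale every value of a channel by that channel's gate.
    return [[[v * gates[i] for v in row] for row in ch] for i, ch in enumerate(x)]
```

With this kernel-size rule, a 256-channel layer would use a kernel of 5, so each channel's gate depends only on its four nearest neighbours and itself, which is what keeps the module lightweight compared with a fully connected squeeze-and-excitation block.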

2.3. EIoU Loss Function

| Box | Xmin | Ymin | Xmax | Ymax |
|---|---|---|---|---|
| Ground Truth Bounding Box | 213 | 83 | 257 | 146 |
| Predicted Box (Before Fitting) | 219 | 107 | 247 | 145 |
| Predicted Box (After Fitting) | 219 | 100 | 247 | 138 |
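The box coordinates in the table above can be used for a small worked example. The sketch below assumes the standard EIoU definition from the cited Focal-EIoU paper: 1 − IoU plus three penalties (centre distance, width difference, height difference), each normalised by the smallest enclosing box. The function names and the `(xmin, ymin, xmax, ymax)` tuple layout are choices made for this illustration, and the code assumes non-degenerate boxes.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)

# Coordinates copied from the table above.
GROUND_TRUTH: Box = (213, 83, 257, 146)
PRED_BEFORE: Box = (219, 107, 247, 145)
PRED_AFTER: Box = (219, 100, 247, 138)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def eiou_loss(pred: Box, gt: Box) -> float:
    """EIoU loss: 1 - IoU + centre, width, and height penalties."""
    # Width and height of the smallest box enclosing both inputs.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    # Squared distance between the two box centres.
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    centre_term = (dx * dx + dy * dy) / (cw * cw + ch * ch)
    # Width and height mismatch, each normalised by the enclosing box.
    dw = (pred[2] - pred[0]) - (gt[2] - gt[0])
    dh = (pred[3] - pred[1]) - (gt[3] - gt[1])
    return 1.0 - iou(pred, gt) + centre_term + (dw * dw) / (cw * cw) + (dh * dh) / (ch * ch)


for name, box in (("before", PRED_BEFORE), ("after", PRED_AFTER)):
    print(f"{name}: IoU={iou(box, GROUND_TRUTH):.4f}  EIoU loss={eiou_loss(box, GROUND_TRUTH):.4f}")
```

Under these assumptions both predicted boxes have the same IoU with the ground truth (about 0.384), because they have the same size and both lie inside it. The EIoU loss still falls from roughly 0.929 to 0.910 after fitting, since the centre of the fitted box is closer to the ground-truth centre. Plain IoU cannot register that difference.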

3. Results

3.1. Experimental Environment

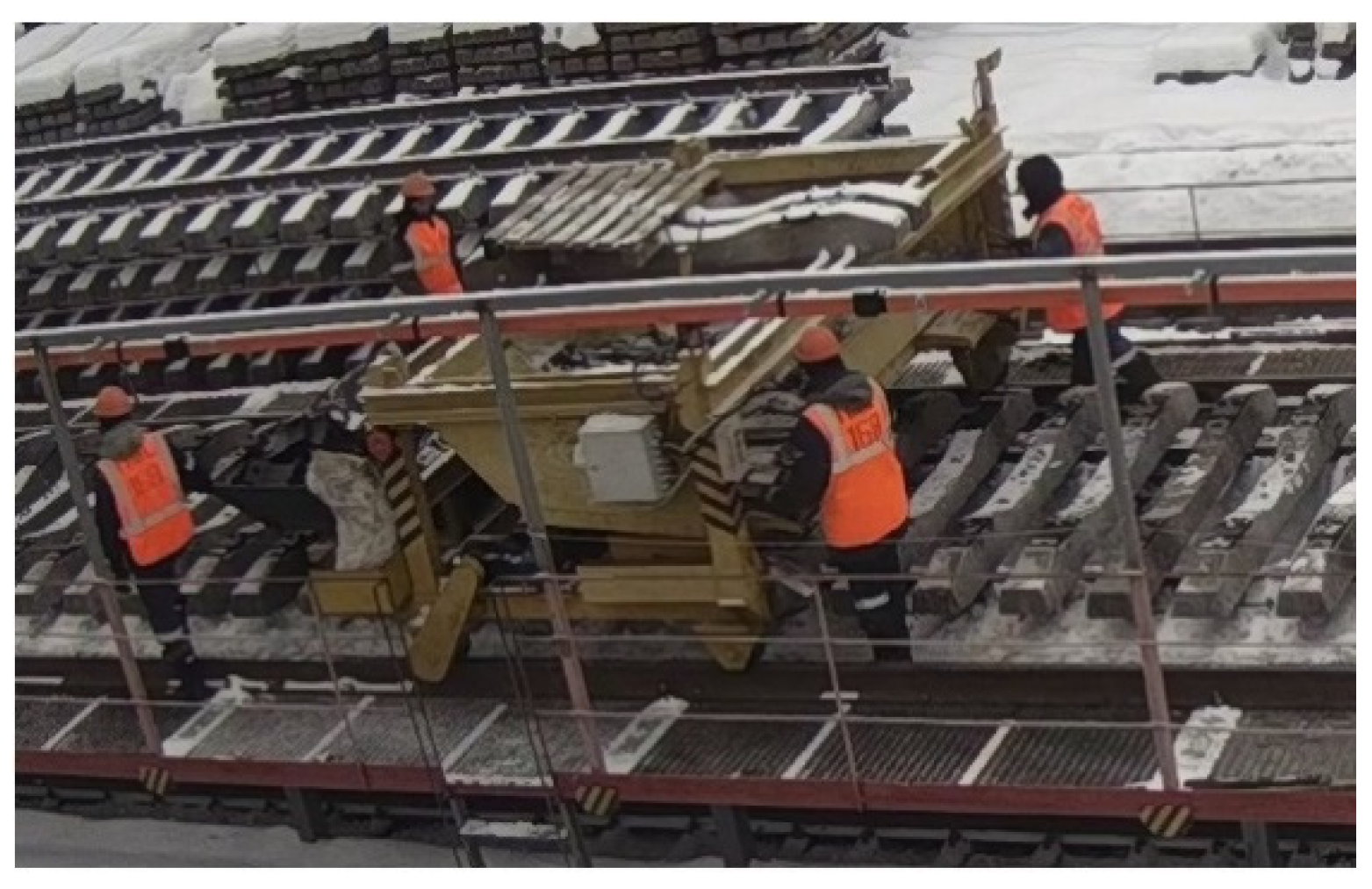

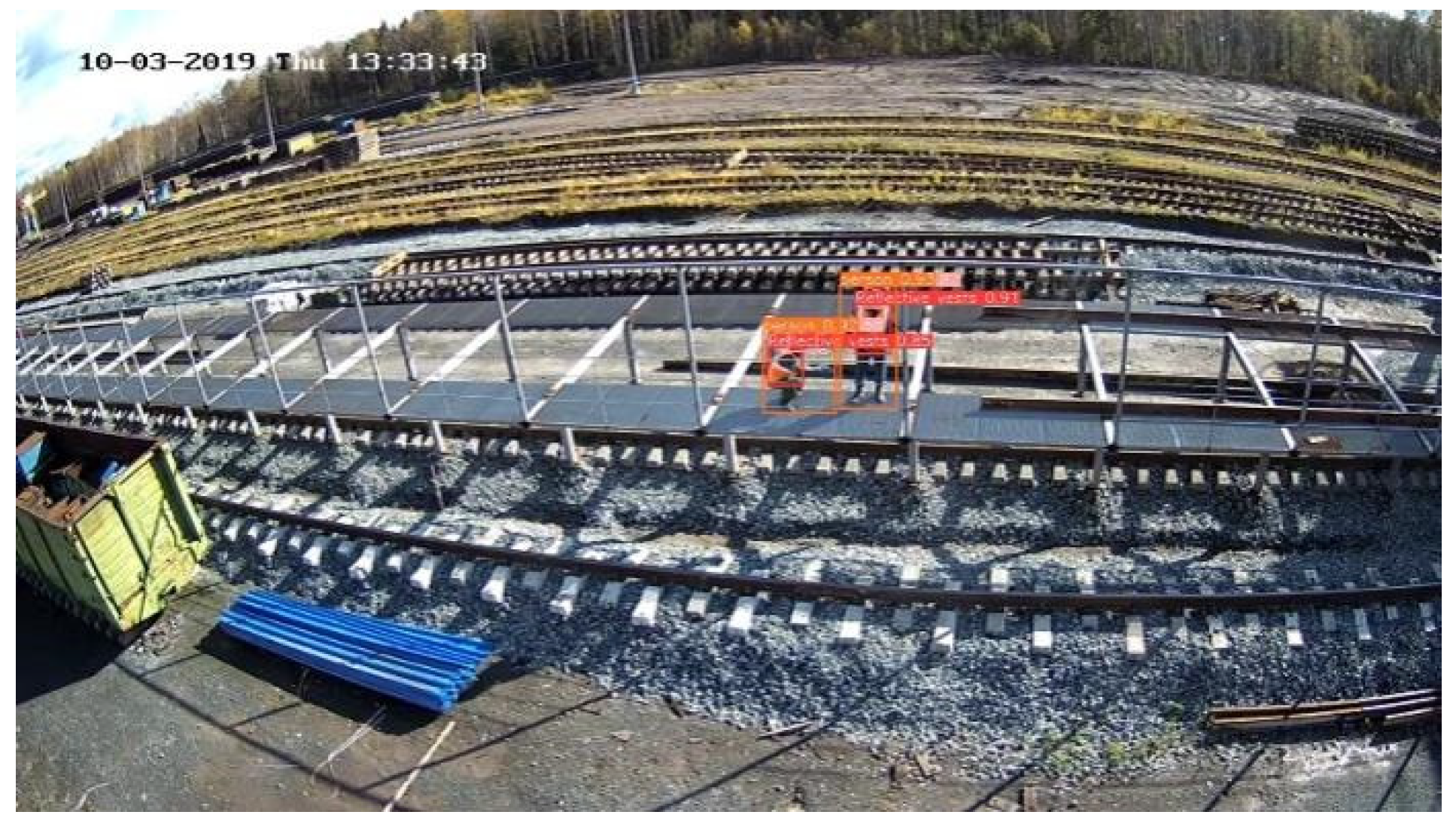

3.2. Experimental Dataset

| Label | Negative Samples | Occluded Samples | Total Samples |
|---|---|---|---|
| Reflective Vest | 1526 | 1306 | 7884 |
| Helmet | 914 | | 6516 |
| Person | / | | 7974 |

3.3. Experimental Results and Analysis

| ECA | EIoU | mAP50-95 | Precision/% | Recall/% | Epochs |
|---|---|---|---|---|---|
| | | 0.647 | 96.4 | 94.2 | 200 |
| √ | | 0.671 | 98.2 | 95.5 | 200 |
| | √ | 0.642 | 97.2 | 94.8 | 200 |
| √ | √ | 0.692 | 98.9 | 94.7 | 200 |

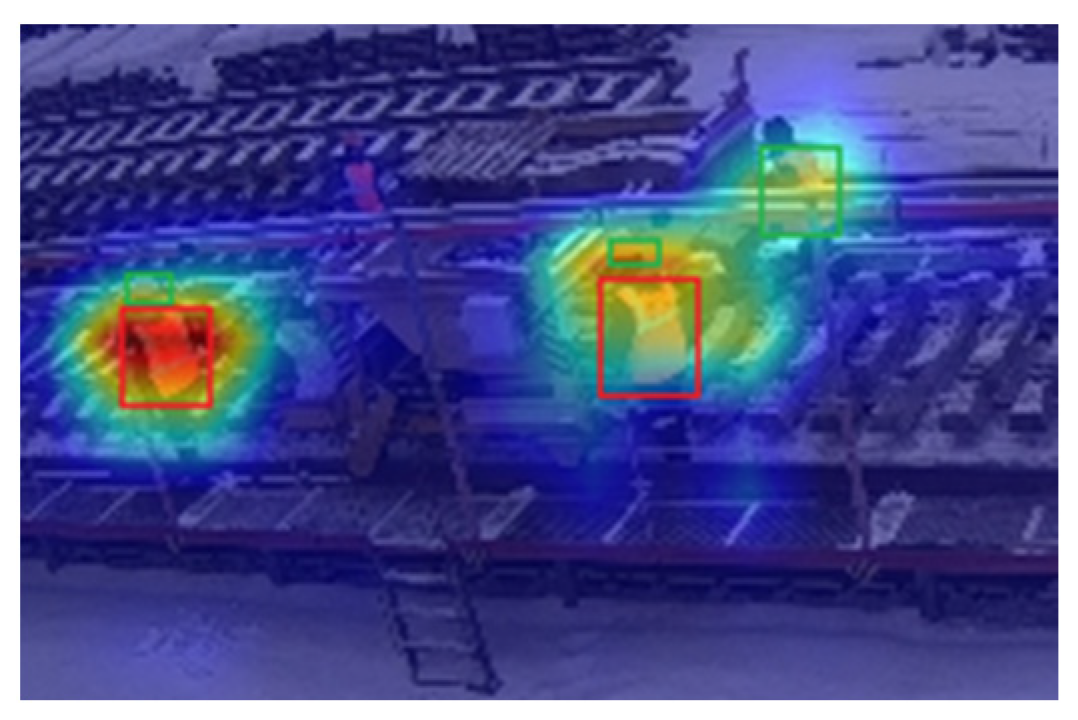

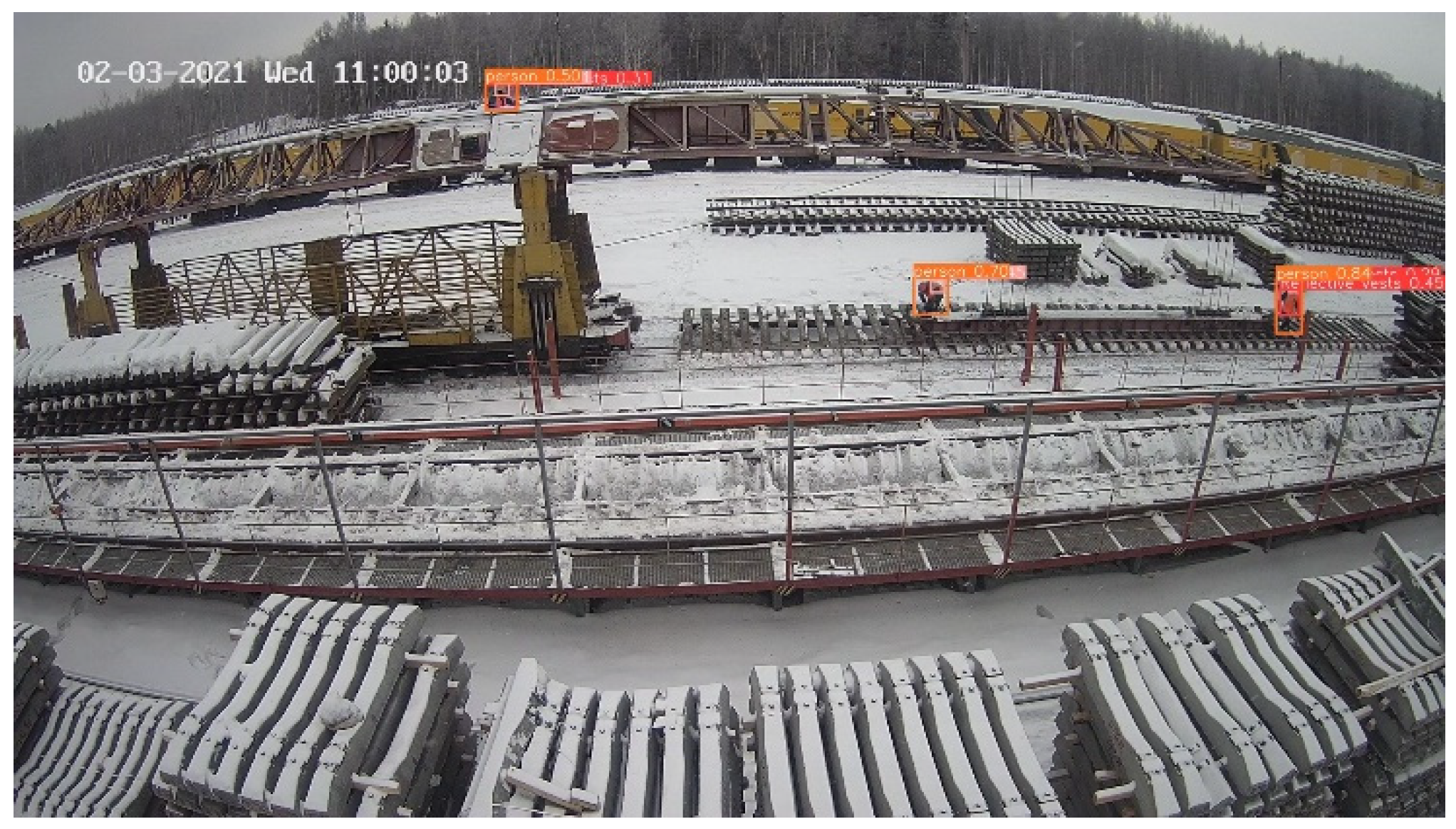

3.4. Analysis of ECA Attention Mechanism on Small Object Detection

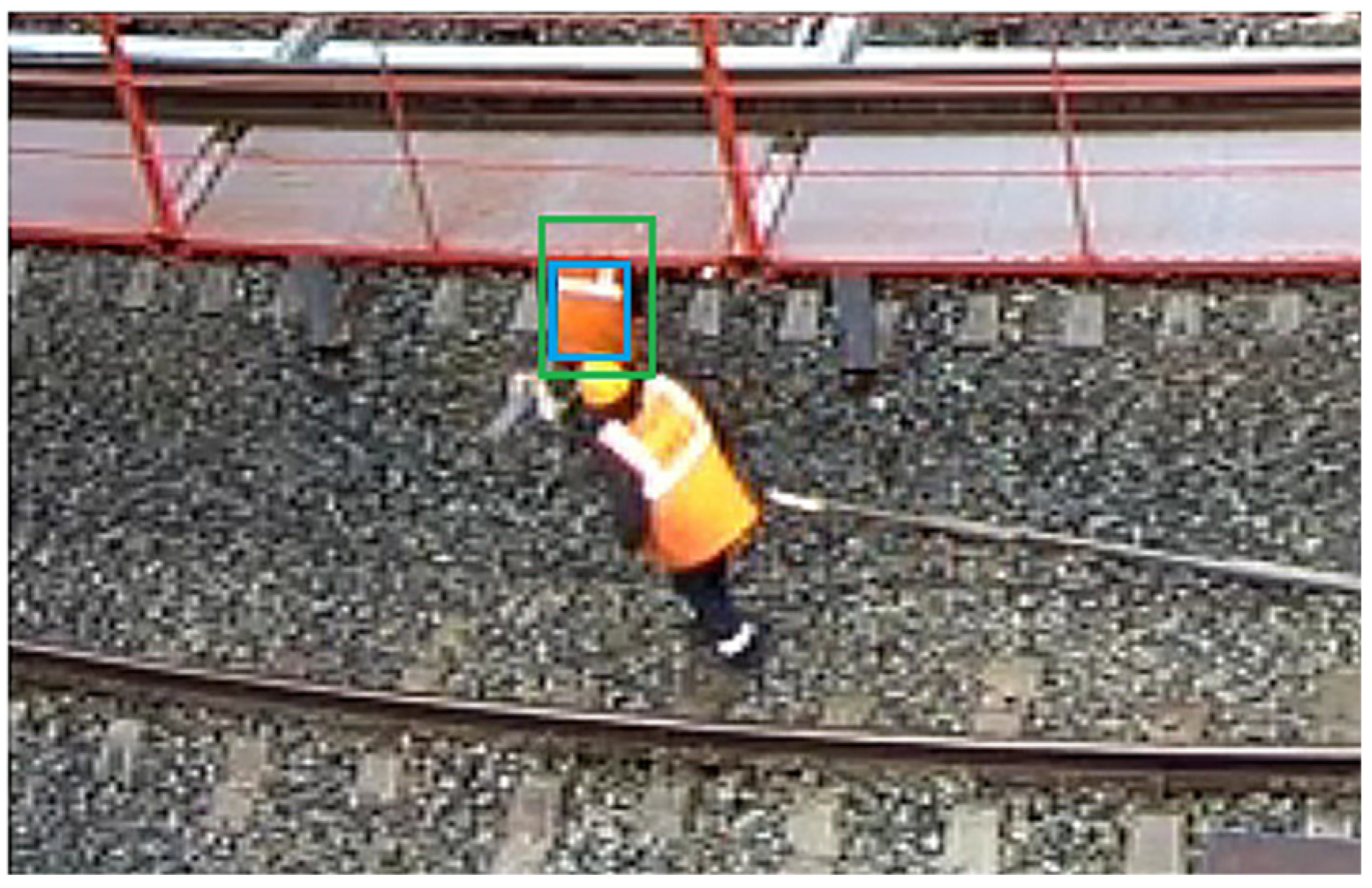

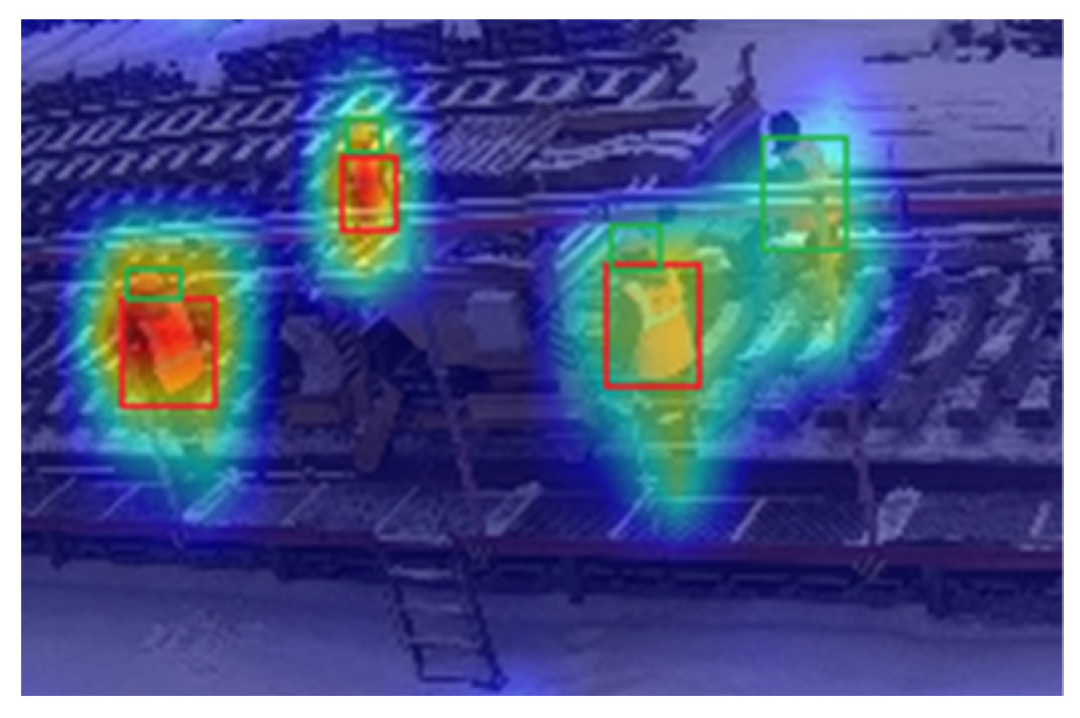

3.5. Effect of EIoU on Occluded Target Detection

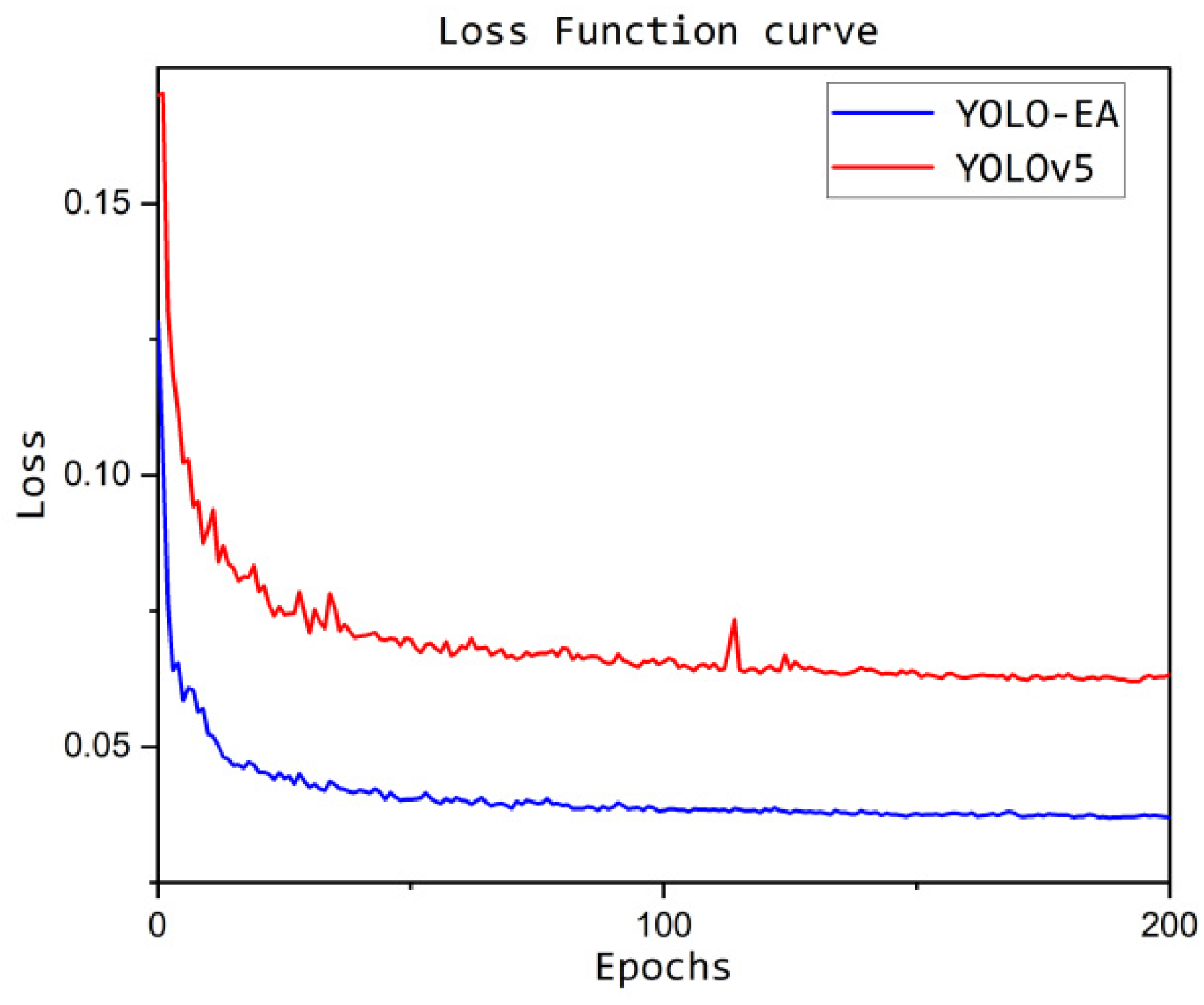

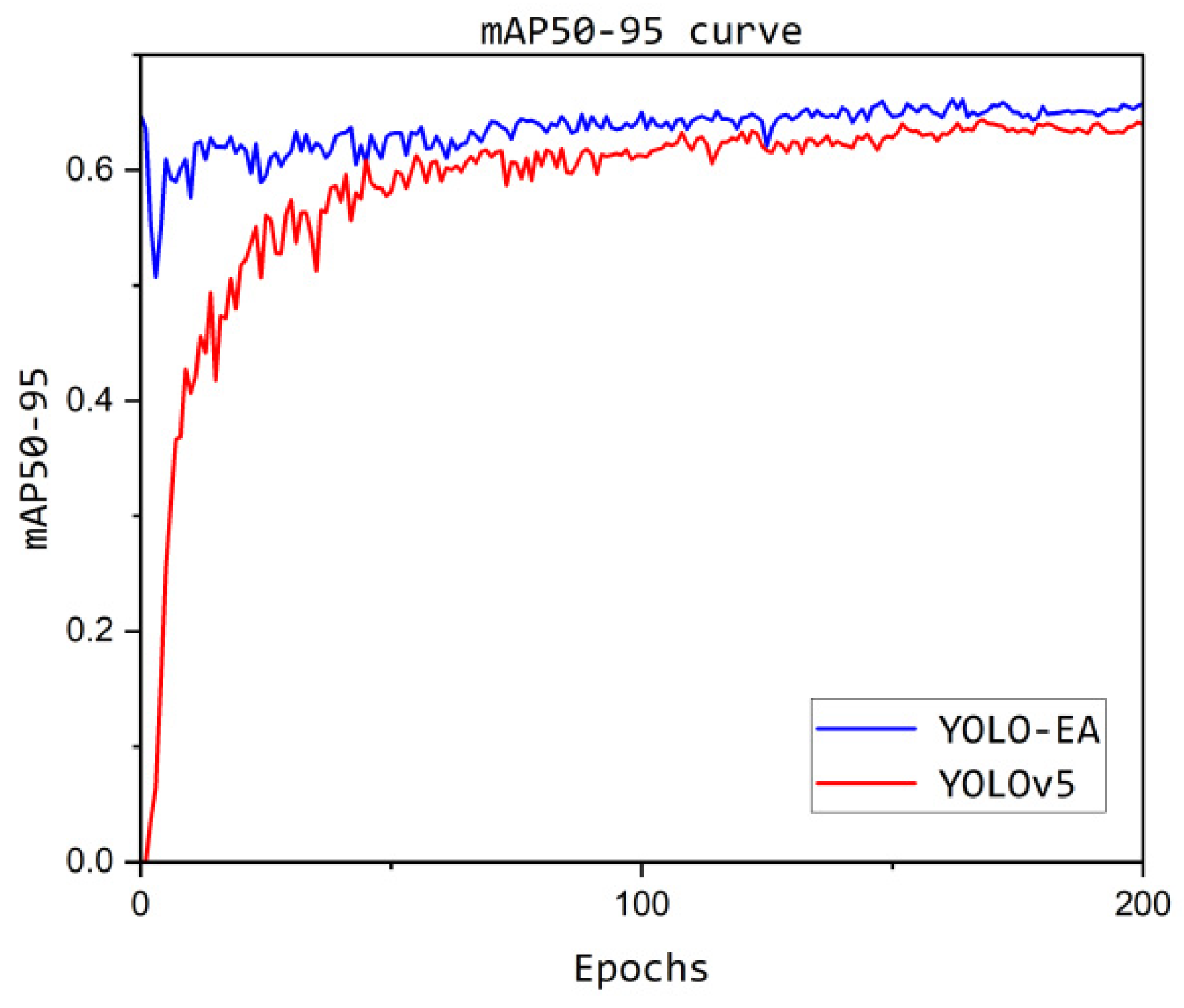

3.6. Training Process Analysis

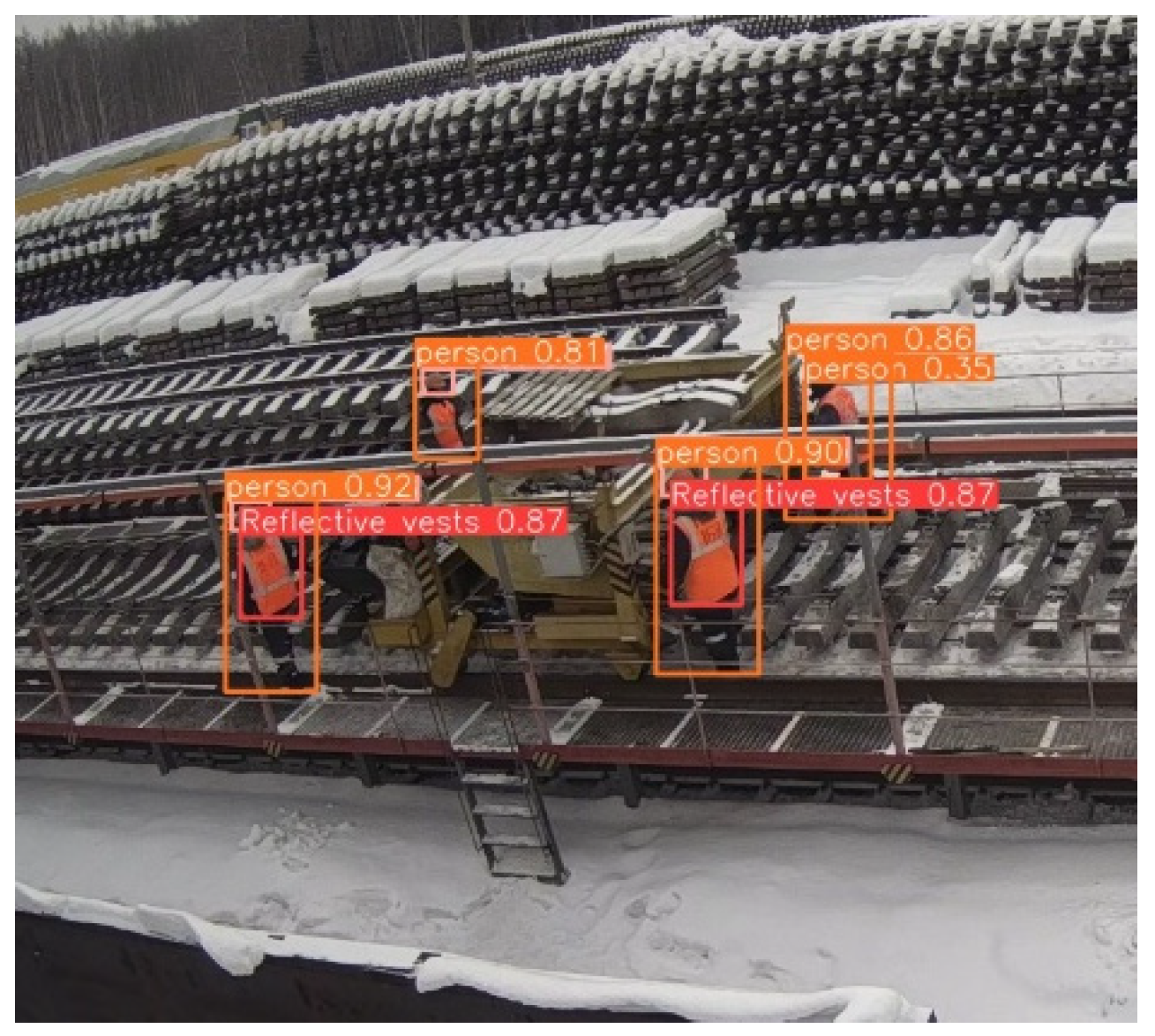

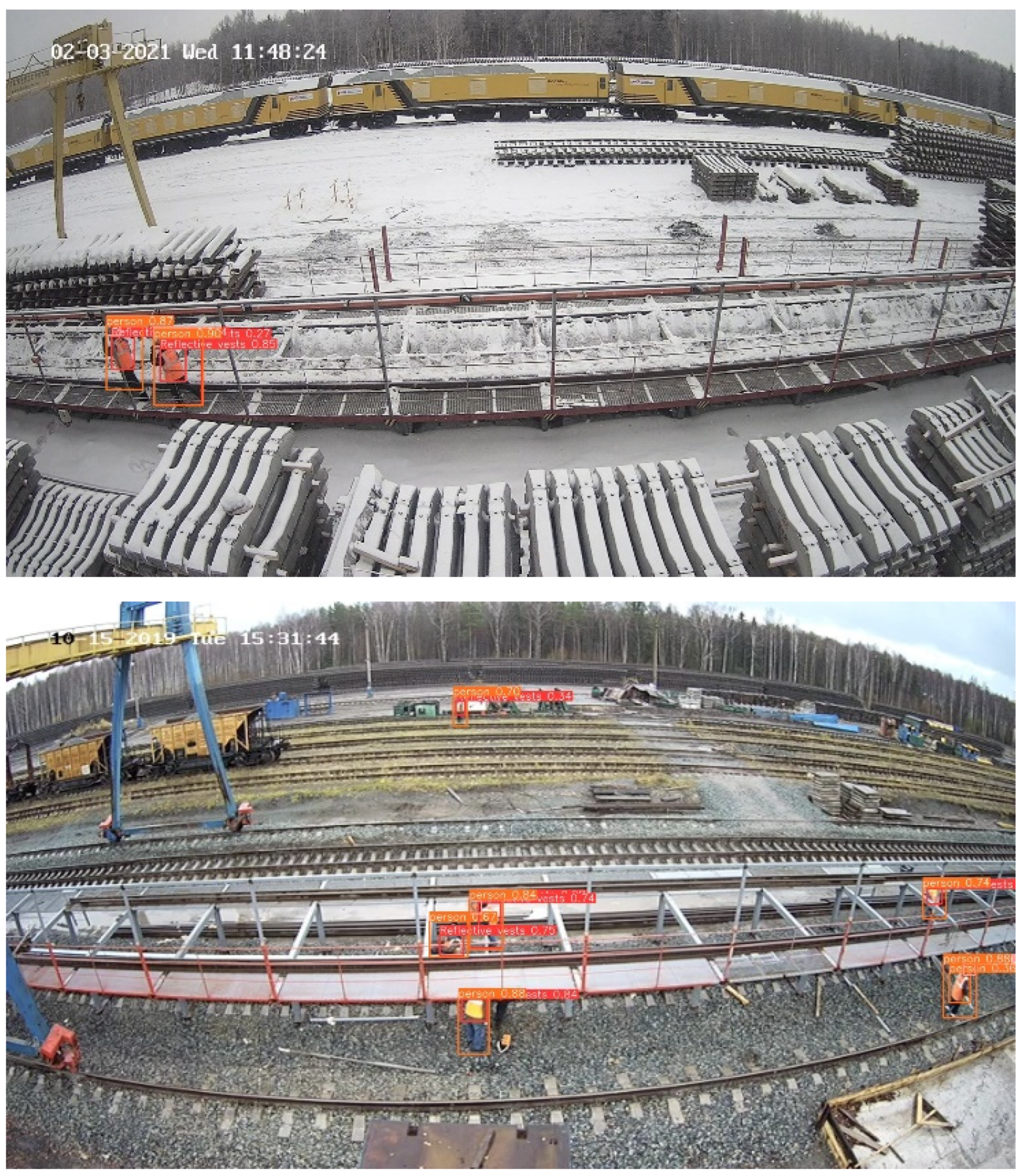

3.7. Target Detection Performance in Various Categories

| Object Category | Precision/% | Recall/% | mAP50-95 |
|---|---|---|---|
| Reflective Vest | 98.5 | 92.6 | 0.686 |
| Helmet | 98 | 94.6 | 0.635 |
| Person | 98.5 | 98.6 | 0.768 |
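Precision and recall for each category can be combined into a single F1 score (their harmonic mean) for a quick comparison. F1 is not reported in the source; the values produced below are derived purely from the table above, and the function and variable names are illustrative.

```python
def f1_score(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    p, r = precision_pct / 100.0, recall_pct / 100.0
    return 2 * p * r / (p + r) if (p + r) > 0 else 0.0


# (precision %, recall %) copied from the per-category table above.
PER_CATEGORY = {
    "Reflective vest": (98.5, 92.6),
    "Helmet": (98.0, 94.6),
    "Person": (98.5, 98.6),
}

for label, (precision, recall) in PER_CATEGORY.items():
    print(f"{label}: F1 = {f1_score(precision, recall):.3f}")
```

Because precision is nearly identical across the three categories, the derived F1 ordering (Person, then Helmet, then Reflective vest) is driven almost entirely by recall.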

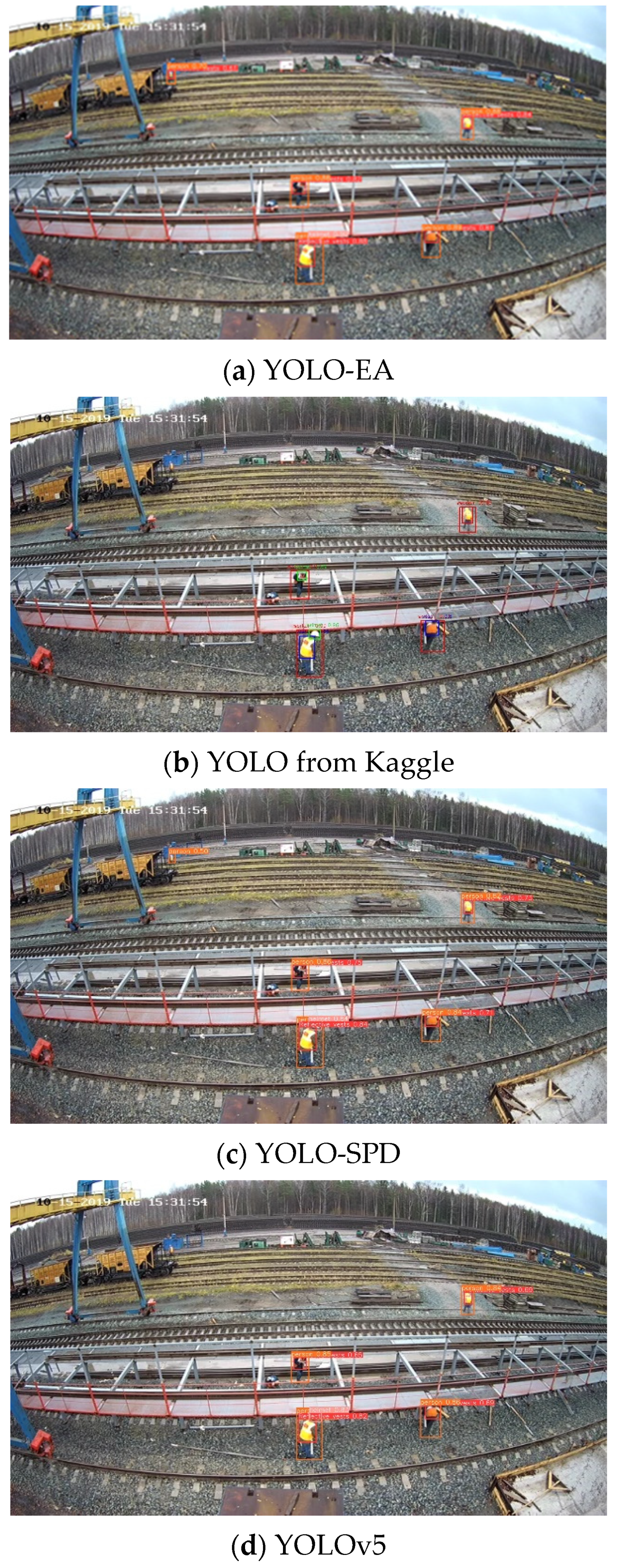

3.8. Model Performance Comparison

| Model | FPS | mAP | Precision/% | Recall/% |
|---|---|---|---|---|
| YOLOv5 from Kaggle [27] | 79.371 | 0.559 | 93.7 | 91.8 |
| YOLOv5x | 71.211 | 0.647 | 96.4 | 94.2 |
| YOLO-SPD | 77.744 | 0.601 | 97.5 | 94.0 |
| YOLO-EIOU | 70.922 | 0.642 | 97.2 | 94.8 |
| YOLO-ECA | 70.844 | 0.671 | 98.3 | 95.3 |
| YOLO-EA | 70.774 | 0.692 | 98.9 | 94.7 |
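To put the speed and accuracy trade-off in the table above into concrete terms, the following sketch converts frames per second into per-frame latency and expresses each model's mAP and FPS relative to YOLOv5x. Treating YOLOv5x as the baseline is an assumption made here, based on its figures matching the unmodified row of the ablation table; the helper names are illustrative, and all numbers are copied from the table.

```python
# (FPS, mAP) copied from the model comparison table above.
MODELS = {
    "YOLOv5x": (71.211, 0.647),
    "YOLO-SPD": (77.744, 0.601),
    "YOLO-EIOU": (70.922, 0.642),
    "YOLO-ECA": (70.844, 0.671),
    "YOLO-EA": (70.774, 0.692),
}
BASELINE = "YOLOv5x"  # assumed baseline for this comparison


def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps


def relative_change(value: float, baseline: float) -> float:
    """Change relative to the baseline, in percent."""
    return (value - baseline) / baseline * 100.0


base_fps, base_map = MODELS[BASELINE]
for name, (fps, m_ap) in MODELS.items():
    print(
        f"{name}: {latency_ms(fps):.2f} ms/frame, "
        f"mAP {relative_change(m_ap, base_map):+.1f}%, "
        f"FPS {relative_change(fps, base_fps):+.1f}%"
    )
```

Read this way, YOLO-EA gains about 7% mAP relative to YOLOv5x while losing well under 1% of throughput, which corresponds to less than 0.1 ms of extra latency per frame.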

3.9. Experimental Results Analysis

4. Discussion

5. Conclusion

References

- Yu, H. Motivation behind China’s ‘One Belt, One Road’ initiatives and establishment of the Asian infrastructure investment bank[J]. Journal of Contemporary China, 2017, 26(105): 353-368.

- Okonjo-Iweala N, Osafo-Kwaako P. Nigeria’s economic reforms: Progress and challenges[J]. Brookings Global Economy and Development Working Paper, 2007 (6).

- Huang, Y. Understanding China’s Belt & Road initiative: motivation, framework and assessment[J]. China Economic Review, 2016, 40: 314-321.

- Great Britain Health and Safety Executive, 2021/22, “Statistics - Work-related fatal injuries in Great Britain”, Great Britain Health and Safety Executive. [Online]. Available online: https://www.hse.gov.uk/statistics/fatals.htm.

- IPAF, 28 Jun. 2023, “IPAF Global Safety Report”, IPAF-JOURNAL. [Online]. Available online: https://www.ipaf.org/en/resource-library/ipaf-global-safety-report.

- Bansal, R. Khanna and S. Sharma, "UHF-RFID Tag Design for Improved Traceability Solution for workers’ safety at Risky Job sites," 2021 IEEE International Conference on RFID Technology and Applications (RFID-TA), Delhi, India, 2021, pp. 267-270. [CrossRef]

- Arboleya, J. Laviada, Y. Álvarez-López and F. Las-Heras, "Real-Time Tracking System Based on RFID to Prevent Worker–Vehicle Accidents," in IEEE Antennas and Wireless Propagation Letters, vol. 20, no. 9, pp. 1794-1798, Sept. 2021. [CrossRef]

- Doungmala P, Klubsuwan K. Helmet wearing detection in Thailand using Haar like feature and circle hough transform on image processing[C]//2016 IEEE International Conference on Computer and Information Technology (CIT). IEEE, 2016: 611-614.

- Farhadi A, Redmon J. Yolov3: An incremental improvement[C]//Computer vision and pattern recognition. Berlin/Heidelberg, Germany: Springer, 2018, 1804: 1-6.

- Yan D, Wang L. Improved YOLOv3 Helmet Detection Algorithm[C]//2021 4th International Conference on Robotics, Control and Automation Engineering (RCAE). IEEE, 2021: 6-11.

- F. Zhou, H. Zhao and Z. Nie, "Safety Helmet Detection Based on YOLOv5," 2021 IEEE International Conference on Power Electronics, Computer Applications (ICPECA), Shenyang, China, 2021, pp. 6-11. [CrossRef]

- K. He, X. Zhang, S. Ren and J. Sun, "Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 37, no. 9, pp. 1904-1916, 1 Sept. 2015. [CrossRef]

- Girshick R, Donahue J, Darrell T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2014: 580-587.

- Girshick, R. Fast r-cnn[C]//Proceedings of the IEEE international conference on computer vision. 2015: 1440-1448.

- Ren S, He K, Girshick R, et al. Faster r-cnn: Towards real-time object detection with region proposal networks[J]. Advances in neural information processing systems, 2015, 28.

- Liu W, Anguelov D, Erhan D, et al. Ssd: Single shot multibox detector[C]//European conference on computer vision. Springer, Cham, 2016: 21-37.

- Redmon J, Divvala S, Girshick R, et al. You only look once: Unified, real-time object detection[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2016: 779-788.

- Jin Z, Qu P, Sun C, et al. DWCA-YOLOv5: An improve single shot detector for safety helmet detection[J]. Journal of Sensors, 2021, 2021: 1-12.

- Yan D, Wang L. Improved YOLOv3 Helmet Detection Algorithm[C]//2021 4th International Conference on Robotics, Control and Automation Engineering (RCAE). IEEE, 2021: 6-11.

- Wang, Q., Wu, B., Zhu, P., Li, P., Zuo, W., Hu, Q.: Eca-net: Efficient channel attention for deep convolutional neural networks. arXiv preprint arXiv:1910.03151 (2019).

- Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2018: 7132-7141.

- Lei Zhu, Xinjiang Wang, Zhanghan Ke, Wayne Zhang, Rynson Lau. BiFormer: Vision Transformer with Bi-Level Routing Attention. arXiv preprint arXiv:2303.08810 (2023).

- Wang L, Zhang X, Yang H. Safety Helmet Wearing Detection Model Based on Improved YOLO-M[J]. IEEE Access, 2023, 11: 26247-26257.

- Rezatofighi, H., N. Tsoi, J. Gwak, A. Sadeghian, I. Reid, and S. Savarese. 2019. “Generalized Intersection Over Union: A Metric and A Loss for Bounding Box Regression.” Paper presented at the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, June 16–20.

- Zhang, Y., W. Ren, Z. Zhang, Z. Jia, L. Wang, and T. Tan. 2021. “Focal and Efficient IOU Loss for Accurate Bounding Box Regression.” Neurocomputing 506 (28): 146–157. [CrossRef]

- MIKE MAZUROV, Oct. 2022, “Railroad Worker Detection Dataset”, Kaggle. [Online]. Available online: https://www.kaggle.com/datasets/mikhailma/railroad-worker-detection-dataset.

- Sunkara R, Luo T. No more strided convolutions or pooling: a new CNN building block for low-resolution images and small objects[C]//Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2022, Grenoble, France, September 19–23, 2022, Proceedings, Part III. Cham: Springer Nature Switzerland, 2023: 443-459.

- MIKE MAZUROV, Jun. 2023, “Inference-Darknet-Yolov4-on-Python”, Kaggle. [Online]. Available online: https://www.kaggle.com/code/mikhailma/inference-darknet-yolov4-on-python.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).