1. Introduction

Generational advances in astrophysics have led to technologies and theories that provide better models for understanding the universe. Many of these advances are tied to new models that can accurately classify celestial bodies (radio galaxies, nebulae, dwarf galaxies, etc.). Meanwhile, growing computing power means that the amount of astronomical data processed increases each year, giving astrophysicists a bountiful supply of datasets. As technology becomes better at handling big data, each dataset can also grow in size and quality: contemporary surveys now store gigabytes of precise, detailed data. These surveys are further bolstered by instruments that can observe regions of the universe that were unobservable years before. In the context of machine learning, however, all these new details and features can cause overfitting, which clouds predictive ability and dampens a model's ability to classify images [1]. Essentially, the model fits these highly detailed features too closely instead of generalizing from them. These problems motivate the design of more efficient models, with lower run times and higher accuracies, that may resolve this issue. This is where we turn to the field of signal analysis and the use of wavelets.

2. Background

We begin by analyzing a relatively novel signal processing instrument: wavelets. The Haar wavelet dates to 1909, but most of the modern theory of wavelets was developed in the 1980s. An examination of wavelets and their transforms sheds light on their use in image pre-processing and on how they reveal non-obvious, underlying details.

2.1. Wavelets

Mathematically, a wavelet is a function of time whose average value over the whole real line is 0; as a consequence, wavelets typically decay to 0 as time approaches positive and negative infinity. A wavelet can be described as a "brief oscillation", and it plays a key role in signal analysis and wavelet transforms by uncovering detail in a signal. Typically, one starts from a single prototype, the mother wavelet, and generates a family of daughter wavelets by scaling (dilating) and shifting (translating) it, with a normalization factor that keeps every member at the same energy. Wavelets may be real- or complex-valued, and for suitably chosen mother wavelets this construction yields an infinite orthonormal set, forming a Hilbert basis. Just as sinusoidal functions with different frequencies form an orthogonal set (integrating the product of two of them gives an inner product that behaves like a Kronecker delta: 1 for identical functions and 0 for distinct ones), wavelets share this characteristic [2].
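The orthonormality property can be checked numerically in the simplest case. The sketch below is an illustration only: it swaps in the Haar wavelet (not the bior1.3 wavelet used later in this paper; biorthogonal wavelets such as bior1.3 relax orthonormality to a pair of mutually dual bases), builds the dyadic family ψ_{j,k}(t) = 2^{j/2} ψ(2^j t − k) on [0, 1), and approximates every pairwise inner product with a midpoint sum. All function names are hypothetical.

```python
import math


def haar_mother(t):
    """Haar mother wavelet: +1 on [0, 0.5), -1 on [0.5, 1), 0 elsewhere."""
    if 0.0 <= t < 0.5:
        return 1.0
    if 0.5 <= t < 1.0:
        return -1.0
    return 0.0


def haar_daughter(j, k):
    """Daughter wavelet psi_{j,k}(t) = 2^(j/2) * psi(2^j * t - k).

    The 2^(j/2) factor is the normalization that keeps every member at unit energy.
    """
    scale = 2.0 ** (j / 2)
    return lambda t: scale * haar_mother((2 ** j) * t - k)


def inner_product(f, g, n=1024):
    """Midpoint-rule approximation of the integral of f(t) * g(t) over [0, 1)."""
    dt = 1.0 / n
    return sum(f((i + 0.5) * dt) * g((i + 0.5) * dt) for i in range(n)) * dt


# Scales j = 0, 1, 2 with every translation k that fits inside [0, 1): 1 + 2 + 4 = 7 wavelets.
family = [(j, k) for j in range(3) for k in range(2 ** j)]

# Gram matrix of pairwise inner products; orthonormality means it is the identity.
gram = {
    (a, b): inner_product(haar_daughter(*a), haar_daughter(*b))
    for a in family
    for b in family
}
```

Every diagonal entry of `gram` comes out as 1 and every off-diagonal entry as 0, up to floating-point error, which is the Kronecker-delta behaviour described above. Taking the inner product with a constant function instead confirms that each wavelet has zero mean.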

2.2. Wavelet Transformation

The wavelet transform is a mathematical operation used to extract specific details and features from a signal. It takes the form:

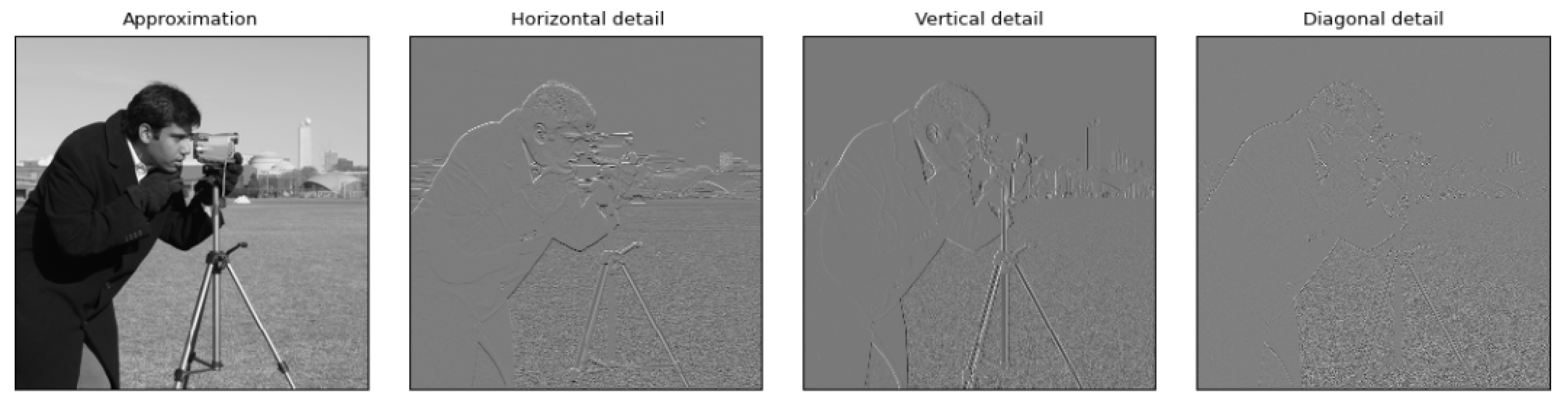

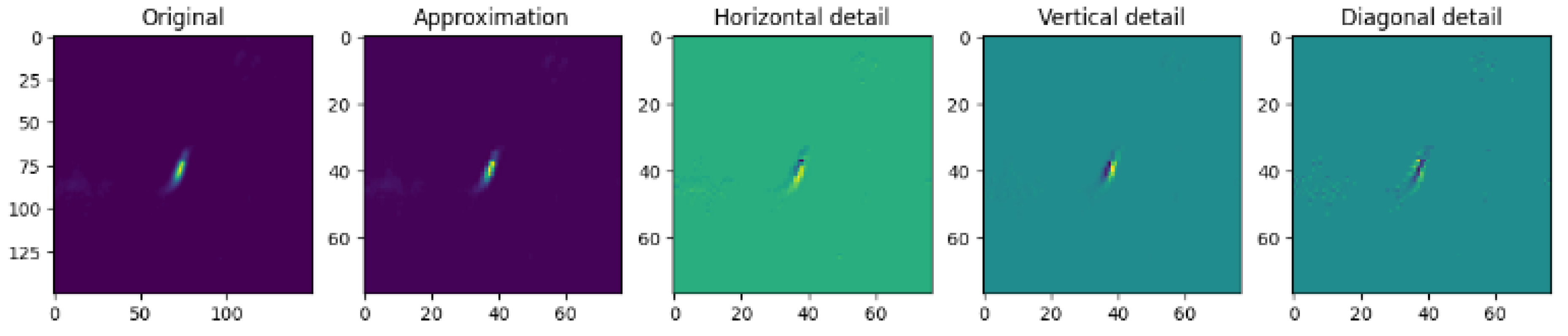
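The equation images are not reproduced in this text. For reference, the standard continuous wavelet transform is given below; we assume, without being able to confirm it from the images, that it is the form shown above. Here $x(t)$ is the signal, $\psi$ the wavelet, $\psi^{*}$ its complex conjugate, $a \neq 0$ the scale and $b$ the translation; these symbols are introduced for illustration.

$$
W_{\psi}x(a, b) = \frac{1}{\sqrt{|a|}} \int_{-\infty}^{\infty} x(t)\, \psi^{*}\!\left(\frac{t - b}{a}\right) dt
$$

Choosing $a = 2^{-j}$ and $b = k\,2^{-j}$ for integers $j, k$ turns the scaled and shifted wavelet into the dyadic family $\psi_{j,k}(t) = 2^{j/2}\,\psi(2^{j}t - k)$ that underlies discrete wavelet transforms such as those provided by PyWavelets.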

This transform resembles the Fourier transform, and the two work in a similar way: the signal is multiplied by the complex conjugate of the chosen wavelet (scaled and shifted) and integrated, or summed for discrete signals. In fact, the wavelet transform functions much like a convolution in which the kernel is the wavelet itself. As with a convolution, wavelets can weed out specific features of a signal; in particular, the wavelets we used were capable of isolating horizontal, vertical, and diagonal detail.
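To make the convolution analogy concrete, here is a minimal one-level discrete wavelet transform. It assumes the Haar wavelet for simplicity (our experiments used bior1.3 through PyWavelets): the signal is convolved with a low-pass and a high-pass kernel, and every second output is kept. The helper names are hypothetical, and the sign convention of the detail coefficients, (x[2k] − x[2k+1])/√2, is the one we believe PyWavelets uses for `'haar'`.

```python
import math

SQRT2 = math.sqrt(2.0)
LOW = [1 / SQRT2, 1 / SQRT2]    # Haar low-pass (averaging) kernel
HIGH = [-1 / SQRT2, 1 / SQRT2]  # Haar high-pass (differencing) kernel


def convolve_valid(x, h):
    """Discrete convolution of x with kernel h, keeping only fully overlapping positions.

    y[n] = sum_i h[i] * x[n + len(h) - 1 - i], i.e. numpy.convolve(x, h, 'valid').
    """
    m = len(h)
    return [sum(h[i] * x[n + m - 1 - i] for i in range(m)) for n in range(len(x) - m + 1)]


def haar_dwt(x):
    """One level of the Haar DWT: convolve with each kernel, then keep every second sample."""
    approx = convolve_valid(x, LOW)[::2]   # (x[2k] + x[2k+1]) / sqrt(2)
    detail = convolve_valid(x, HIGH)[::2]  # (x[2k] - x[2k+1]) / sqrt(2)
    return approx, detail


def haar_idwt(approx, detail):
    """Invert haar_dwt pair by pair, showing that no information is lost."""
    x = []
    for a, d in zip(approx, detail):
        x.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return x


# A flat signal with one abrupt jump between samples 4 and 5.
signal = [4.0, 4.0, 4.0, 4.0, 1.0, 9.0, 4.0, 4.0]
approx, detail = haar_dwt(signal)
print([round(d, 3) for d in detail])  # [0.0, 0.0, -5.657, 0.0]
```

The only nonzero detail coefficient sits at the pair where the signal jumps, which is the sense in which the wavelet "weeds out" a feature, and `haar_idwt` recovers the input, so splitting it into approximation and detail loses nothing.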

Figure 1.

Example of different filters applied to an image through Wavelet Transformations


An attentive reader might wonder how the Wavelet Transform differs from the Fourier Transform. The Fourier Transform decomposes a signal into sinusoids that extend over all time, uncovering which frequencies are present but not when each one occurs; the Wavelet Transform decomposes the signal into localized wavelets and therefore uncovers information in both the time and frequency domains [3].
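The difference in time localization can be seen with two signals that contain the same spike at different times. The sketch below uses plain Python, a directly computed DFT and the finest-scale Haar details, all chosen purely for illustration: the Fourier magnitudes of the two signals are identical, while the position of the largest wavelet detail tracks where the spike occurs. Strictly speaking, the timing is still encoded in the Fourier phase, but it is spread across every coefficient rather than localized in a few. Function names are hypothetical.

```python
import cmath
import math


def dft_magnitude(x):
    """|X_k| for every frequency k of the discrete Fourier transform, from the definition."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) for k in range(n)]


def haar_detail(x):
    """Finest-scale Haar detail coefficients, one per pair of neighbouring samples."""
    return [(x[2 * i] - x[2 * i + 1]) / math.sqrt(2) for i in range(len(x) // 2)]


def impulse(length, position):
    """A signal that is zero except for a single unit spike."""
    x = [0.0] * length
    x[position] = 1.0
    return x


def strongest_detail(x):
    """Index of the largest-magnitude detail coefficient (coefficient i covers samples 2i, 2i+1)."""
    d = haar_detail(x)
    return max(range(len(d)), key=lambda i: abs(d[i]))


early, late = impulse(64, 10), impulse(64, 50)

# Fourier magnitudes: identical (flat) for both signals, so "when" is not visible.
same_spectrum = all(
    math.isclose(a, b, abs_tol=1e-9) for a, b in zip(dft_magnitude(early), dft_magnitude(late))
)
print(same_spectrum)  # True

# Wavelet details: the peak moves with the spike.
print(strongest_detail(early), strongest_detail(late))  # 5 25
```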

2.3. Applications

More generally, wavelet transforms have applications in data and image compression, most notably in the JPEG 2000 format. For example, data with abrupt variations and spikes, such as audio files, is often better compressed with wavelet transforms, while data with periodic variations is better compressed with other methods such as the Fourier Transform [4].
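One way to see why localized features favour wavelets is to count how many coefficients are needed to represent a signal with a single abrupt jump. The sketch below assumes a Haar multilevel decomposition and a directly computed DFT (neither is the JPEG 2000 codec, which uses its own wavelets and quantization) and counts the coefficients above a small tolerance. Function names are hypothetical.

```python
import cmath
import math

SQRT2 = math.sqrt(2.0)


def haar_wavedec(x):
    """Full multilevel Haar decomposition of a signal whose length is a power of two.

    Returns [final approximation, coarsest details, ..., finest details].
    """
    coeffs = []
    approx = list(x)
    while len(approx) > 1:
        half = range(len(approx) // 2)
        detail = [(approx[2 * i] - approx[2 * i + 1]) / SQRT2 for i in half]
        approx = [(approx[2 * i] + approx[2 * i + 1]) / SQRT2 for i in half]
        coeffs.insert(0, detail)
    coeffs.insert(0, approx)
    return coeffs


def dft(x):
    """Discrete Fourier transform computed directly from its definition."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def count_significant(values, tol=1e-9):
    """Number of coefficients that would have to be stored (|c| above tol)."""
    return sum(1 for v in values if abs(v) > tol)


# A piecewise-constant signal with one abrupt jump, not aligned to any pair boundary.
step = [0.0] * 37 + [1.0] * 27

wavelet_coeffs = [c for band in haar_wavedec(step) for c in band]
fourier_coeffs = dft(step)

print(count_significant(wavelet_coeffs), "of", len(wavelet_coeffs), "wavelet coefficients")  # 7 of 64 wavelet coefficients
print(count_significant(fourier_coeffs), "of", len(fourier_coeffs), "Fourier coefficients")  # 64 of 64 Fourier coefficients
```

Only one detail coefficient per level plus the final approximation is nonzero, so discarding small coefficients compresses the step far more effectively in the wavelet domain than in the Fourier domain.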

Wavelet analysis has improved the accuracy of image classification models in a number of ways. Because wavelets are localized in both the frequency and time domains, wavelet functions have been used as activation functions, and wavelet transforms have been used in place of pooling layers [5]. In our project, we simply use wavelets as a pre-processing technique while leaving many of the standard machine learning techniques unchanged.

3. Method

3.1. Data Gathering and Preprocessing

We gathered data from the MiraBest Batched Dataset, which consists of 1256 images of radio-loud active galactic nuclei (AGNs) from two sky surveys: FIRST and NVSS [6]. FIRST (Faint Images of the Radio Sky at Twenty-cm) was last updated in 2011 and was carried out on the NRAO's Very Large Array (VLA), an influential radio observatory in New Mexico [7, 8]. It was largely centered on the North and South Galactic Caps, and in the years since it has become one of the most used surveys in radio galaxy classification tasks, forming the basis of much of the research in the area [9, 10, 11]. NVSS, another survey carried out on the VLA, covers the sky north of −40° declination [12]. Both surveys are publicly available through FTP, and MiraBest is available on Zenodo.

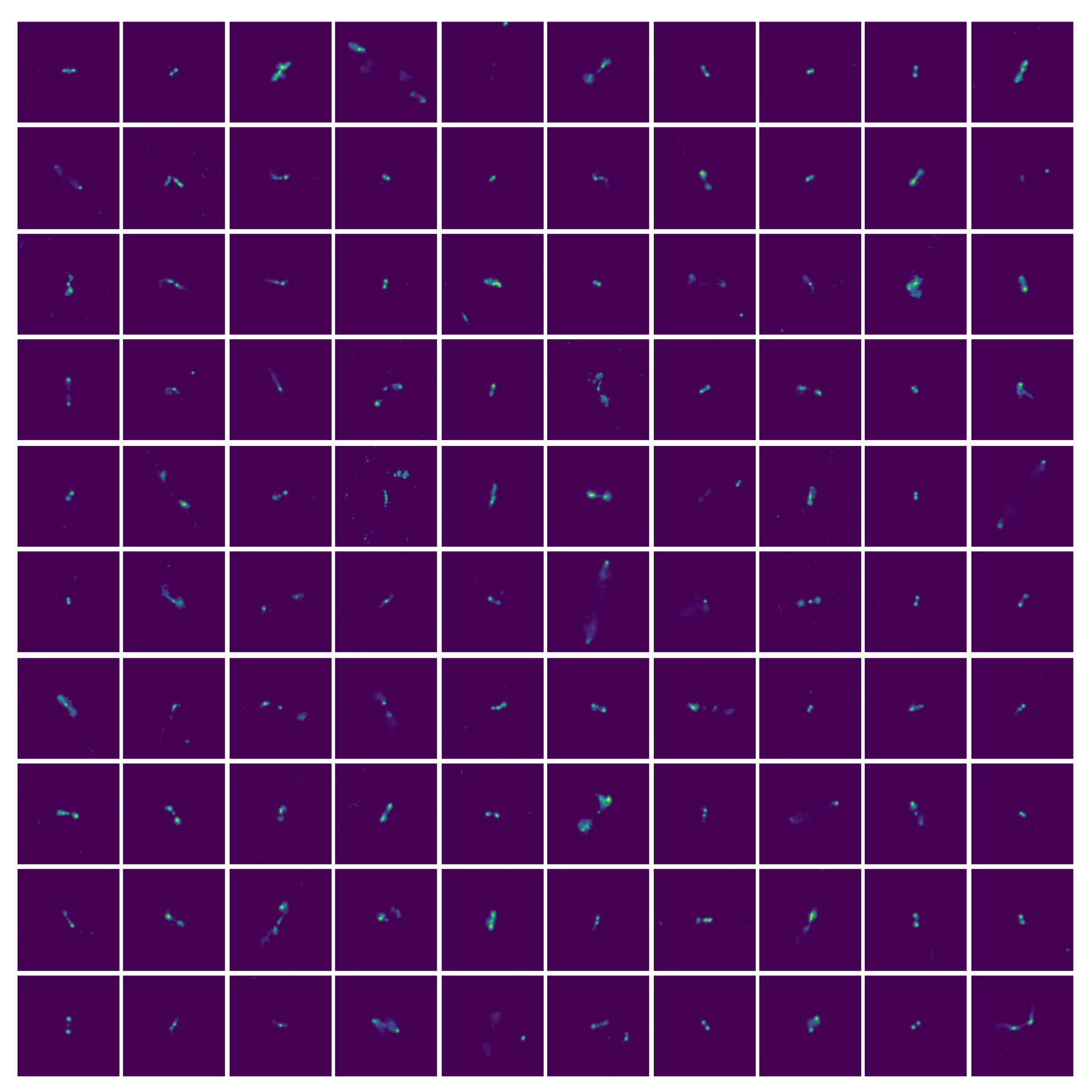

Figure 2.

Illustration of MiraBest Dataset


Using a virtual telescope, the creators of MiraBest collected images from both surveys and labeled them as Fanaroff–Riley type I (FRI) or type II (FRII) galaxies. We selected MiraBest based on a variety of factors: size, ease of use, and image quality. Additionally, the dataset was created to help train radio galaxy classification models and provides preset data loading tools that compile the AGNs into train and test sets, so its results can be readily reproduced with simple PyTorch code, as shown by its many implementations [13].

We started with an exploratory data analysis, looking mainly at the distribution of image labels to ensure that the dataset was not too unbalanced for the classification task we had in mind. We found it to contain roughly 40% FRI and 60% FRII galaxies. After concluding that the data required no further preprocessing or image restructuring, we compiled the images into the dataset's provided train and test sets, a roughly 70% to 30% split.
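The label-distribution check amounts to counting labels. A minimal sketch, assuming the labels have already been read from the dataset into a Python list; the list below is hypothetical and only mimics the reported 40/60 split, and the function names are illustrative.

```python
from collections import Counter


def class_fractions(labels):
    """Fraction of the dataset that belongs to each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in sorted(counts.items())}


def imbalance_ratio(labels):
    """Size of the largest class divided by the size of the smallest one (1.0 = balanced)."""
    counts = Counter(labels).values()
    return max(counts) / min(counts)


# Hypothetical labels with the roughly 40/60 FRI/FRII mix described above.
labels = ["FRI"] * 40 + ["FRII"] * 60
print(class_fractions(labels))  # {'FRI': 0.4, 'FRII': 0.6}
print(imbalance_ratio(labels))  # 1.5
```

A ratio this close to 1 is usually considered mild imbalance, which is consistent with leaving the dataset unchanged.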

Another dataset we considered was RadioGalaxyNET, a dataset with both radio and infrared channels [14]. Its intended purpose, automated detection rather than classification, was beyond our goal of evaluating wavelet analysis. However, because the dataset is well furnished with annotations and contains even more galaxies (4,155 across 2,800 images), it could inform dataset selection in future work.

3.2. Model Architecture

Prior research has shown Convolutional Neural Networks to hold significant promise in the field of Computer Vision for three main reasons [15]:

- High accuracies on image classification tasks
- Lower computational cost than other types of neural networks and other machine learning algorithms
- Convolutional layers that reduce dimensionality while retaining the most important information

Furthermore, a significant amount of research has already been done on the classification of celestial objects, and specifically radio galaxies, with ConvNets [13, 16]. For these reasons, a CNN was our model of choice.

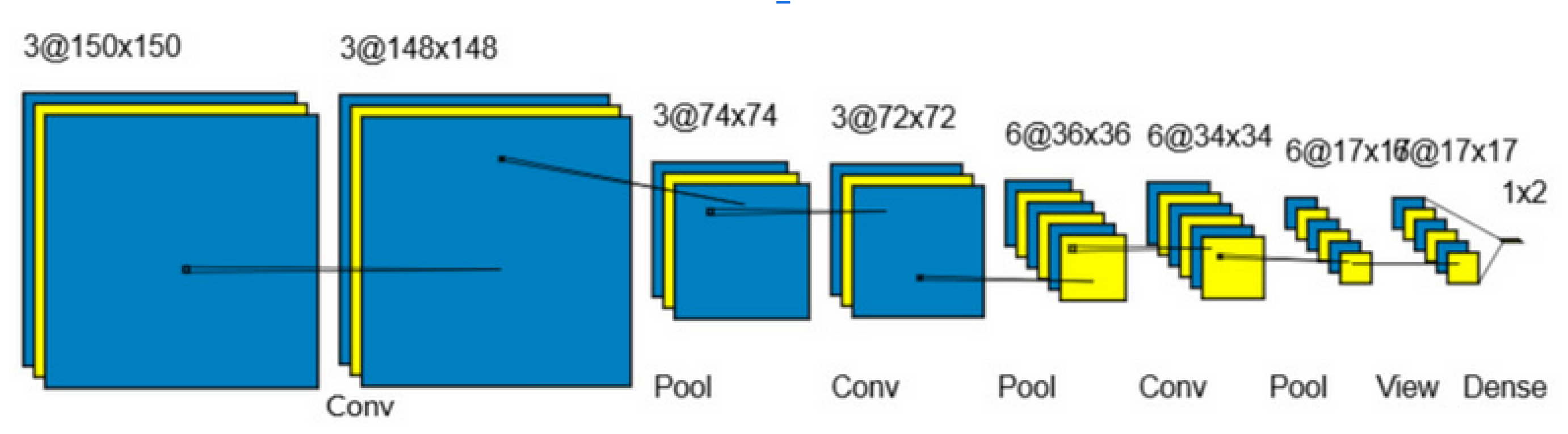

Figure 3.

Model Architecture


We first created a baseline model, a standard PyTorch Convolutional Neural Network with a total of 3,752 neurons consisting of 3 convolutional layers, 3 pooling layers, and a single flattening layer, and then trained it. The model takes in grayscale images with a resolution of 150 by 150 pixels.

As mentioned before, convolutional layers can isolate individual features (similar to how wavelet analysis will be applied later). Each is followed by a max pooling layer, which reduces the spatial dimensions and tends to help mitigate overfitting.

The first convolutional layer takes in 150 × 150 images with 1 input channel and produces 3 output channels using a 3 × 3 kernel. The result is then passed through a ReLU activation function, which outputs the maximum of 0 and its input, and a max pooling layer. The same process is repeated twice more: first with a convolutional layer with a 3 × 3 kernel, 3 input channels, and 3 output channels, and second with a layer of the same kernel size and number of input channels but 6 output channels instead of 3 (the ReLU and max pooling layers after each convolution are identical). Finally, the feature maps are flattened and passed through a fully connected layer with 2 outputs, one per class. The model was trained with a cross-entropy loss function and the Adam optimizer with a learning rate of 0.01 [17].
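The layer sizes above can be checked with a little arithmetic. This sketch assumes stride 1 and no padding for every convolution and 2 × 2 max pooling with stride 2, which is what `torch.nn.Conv2d(in_channels, out_channels, kernel_size=3)` and `torch.nn.MaxPool2d(2)` do by default; the text does not state these settings explicitly. Under those assumptions the flattened vector has 1,734 entries and the trainable parameters (weights plus biases) total 3,752, the same figure the text gives as the number of neurons, which suggests that figure counts trainable parameters. Function names are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution (PyTorch Conv2d defaults: stride 1, no padding)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial size after max pooling; a 2x2 window with stride 2 is assumed."""
    return (size - kernel) // stride + 1


def conv_params(in_ch, out_ch, kernel):
    """Trainable parameters of a conv layer: one kernel per (in, out) pair plus one bias per output."""
    return in_ch * out_ch * kernel * kernel + out_ch


CONV_CHANNELS = [(1, 3), (3, 3), (3, 6)]  # (input, output) channels from the description
KERNEL = 3
NUM_CLASSES = 2


def summarize(image_size=150):
    """Return per-block output shapes, the flattened size, and the total parameter count."""
    size, params, shapes = image_size, 0, []
    for in_ch, out_ch in CONV_CHANNELS:
        size = pool_out(conv_out(size, KERNEL))  # ReLU does not change the shape
        params += conv_params(in_ch, out_ch, KERNEL)
        shapes.append((out_ch, size, size))
    flat = CONV_CHANNELS[-1][1] * size * size
    params += flat * NUM_CLASSES + NUM_CLASSES  # fully connected layer: weights + biases
    return shapes, flat, params


shapes, flat, params = summarize()
print(shapes)  # [(3, 74, 74), (3, 36, 36), (6, 17, 17)]
print(flat)    # 1734
print(params)  # 3752
```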

We built a base model with so few layers partly because we expected it to overfit. To counter this without lowering image resolution, which could compromise important astronomical features, we simplified the model and reduced the number of neurons.

3.3. Hyperparameter Optimization

After logging the benchmark model's results (plotted per epoch with Matplotlib) and analyzing the training and validation accuracy and loss, we concluded that the model was overfitting [18].

Because of this unsatisfactory validation accuracy, we began to optimize hyperparameters, hoping to find the right balance between the number of neurons and validation accuracy. To correct the overfitting, we changed the number of convolutional and dropout layers, lowered the number of neurons, tried different activation functions, and experimented with the learning rate.

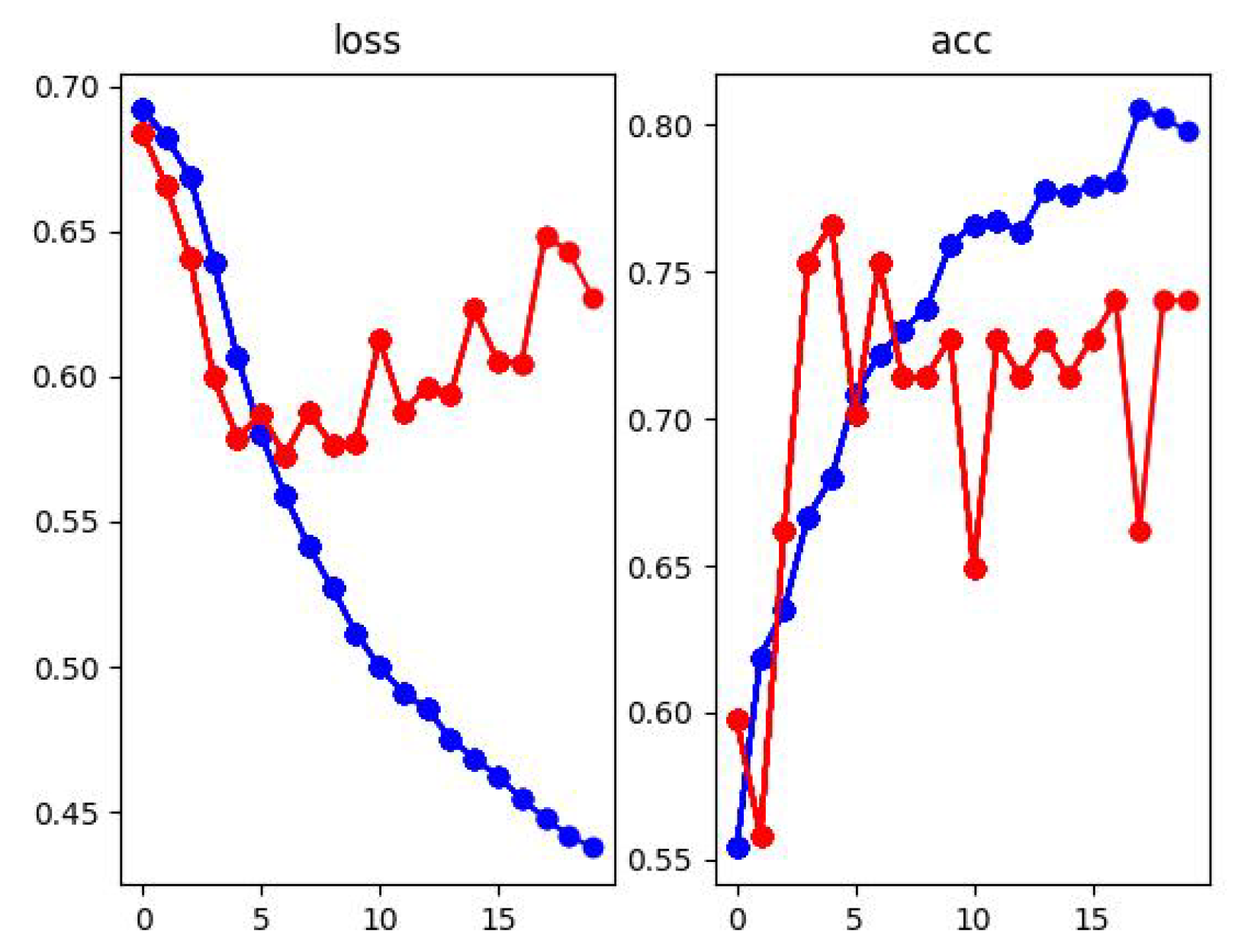

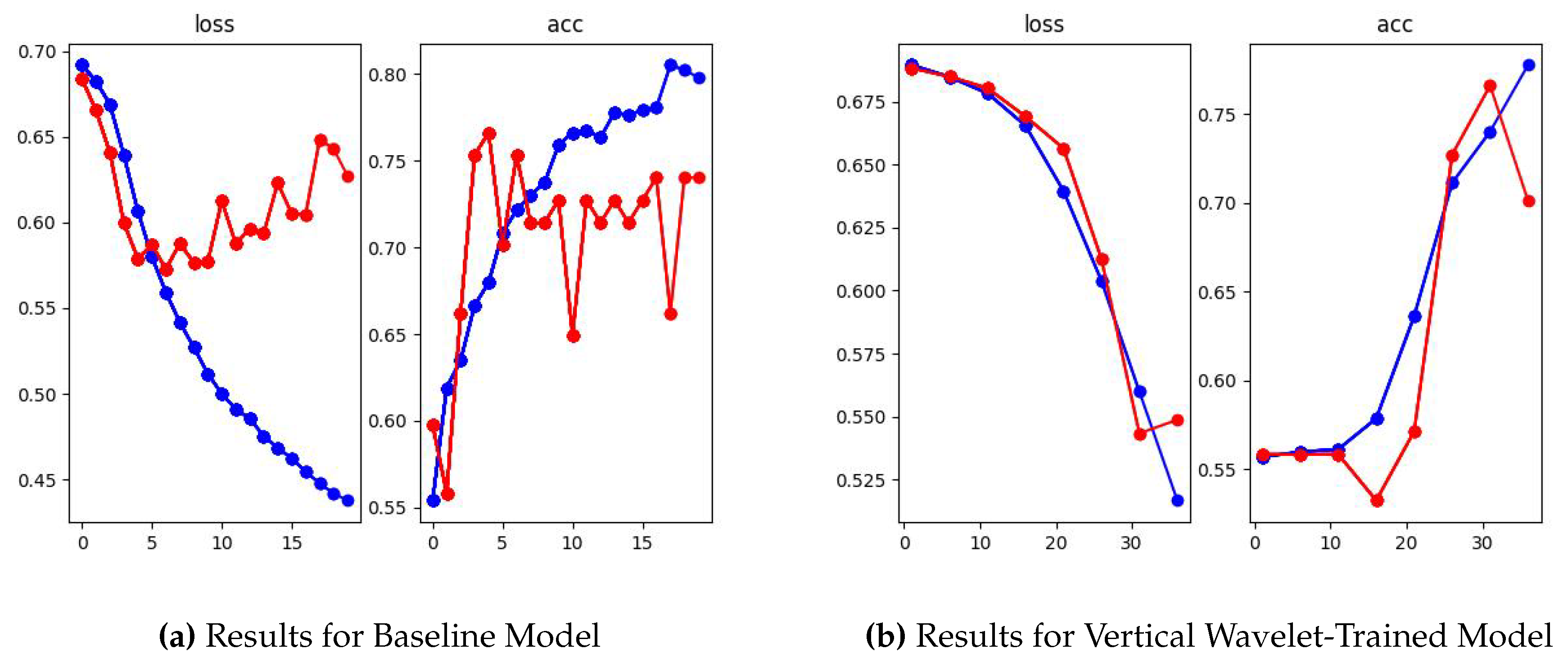

Figure 4.

Optimized Baseline Model’s Results on the 20th Epoch


As early as epoch 5, the validation loss (pictured in red) began to spike upwards, a degradation in generalization that continued to worsen as the model kept training. In contrast, the training loss and accuracy show that the model performed very well on the training set; logs of the model's results after 20 epochs show training accuracy reaching as high as 90%.
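Identifying the epoch at which overfitting begins can be automated with an early-stopping style rule. This is a minimal sketch, not the procedure we used (we read the epochs off the plots): it returns the epoch with the lowest validation loss once the loss has failed to improve on it for `patience` consecutive epochs. The function name and the loss values are hypothetical.

```python
def overfitting_onset(val_losses, patience=2):
    """Return the 1-indexed epoch with the lowest validation loss once the loss has
    failed to improve on it for `patience` consecutive epochs; None if that never happens."""
    best_epoch, best_loss, epochs_without_improvement = None, float("inf"), 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_epoch, best_loss, epochs_without_improvement = epoch, loss, 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch
    return None


# Hypothetical validation losses, not values from our logs.
losses = [0.69, 0.66, 0.63, 0.61, 0.60, 0.64, 0.70, 0.75]
print(overfitting_onset(losses))  # 5
```

A larger `patience` tolerates short spikes in validation loss before declaring overfitting, which matters when the curve is noisy.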

Even with optimized hyperparameters, our validation accuracy increased only minimally, by 5%. We therefore sought other ways of combating overfitting: methods that strike the right trade-off between training and validation accuracy without compromising image resolution or adding or removing images from the dataset.

3.4. Wavelet Analysis

Returning to the wavelet transform, we began by treating each image as the input signal of the transform, which can be extended to higher-dimensional signals such as images. To each image we applied four wavelet filters, each targeting a specific feature: approximation, horizontal, vertical, and diagonal. The approximation filter produces a lower-resolution, compressed version of the image, while the horizontal, vertical, and diagonal filters isolate horizontal, vertical, and diagonal details, respectively. We also created a “combined” details input by stacking the filter outputs together into one super-image. These functions were provided by the PyWavelets library [19].
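The four bands can be illustrated with the simplest wavelet. This sketch swaps in the Haar wavelet and plain Python so the arithmetic on each 2 × 2 block is visible; our experiments used PyWavelets with bior1.3, where the corresponding call is `pywt.dwt2(image, 'bior1.3')`, returning `(cA, (cH, cV, cD))`. The function name `haar_dwt2` is hypothetical, and the signs follow our understanding of PyWavelets' Haar convention.

```python
def haar_dwt2(image):
    """One level of a 2D Haar DWT on an image (list of rows) with even height and width.

    Returns (cA, (cH, cV, cD)), the same layout as PyWavelets' dwt2.
    """
    rows, cols = len(image), len(image[0])
    cA, cH, cV, cD = ([[0.0] * (cols // 2) for _ in range(rows // 2)] for _ in range(4))
    for i in range(rows // 2):
        for j in range(cols // 2):
            a = image[2 * i][2 * j]          # top-left of the 2x2 block
            b = image[2 * i][2 * j + 1]      # top-right
            c = image[2 * i + 1][2 * j]      # bottom-left
            d = image[2 * i + 1][2 * j + 1]  # bottom-right
            cA[i][j] = (a + b + c + d) / 2   # low-pass in both directions
            cH[i][j] = (a + b - c - d) / 2   # top minus bottom: horizontal edges
            cV[i][j] = (a - b + c - d) / 2   # left minus right: vertical edges
            cD[i][j] = (a - b - c + d) / 2   # diagonal structure
    return cA, (cH, cV, cD)


# A vertical edge between the first and second columns shows up only in cV.
vertical_edge = [[0.0, 1.0, 1.0, 1.0] for _ in range(4)]
cA, (cH, cV, cD) = haar_dwt2(vertical_edge)
print(cV)  # [[-1.0, 0.0], [-1.0, 0.0]]
print(cH)  # [[0.0, 0.0], [0.0, 0.0]]
```

Each band is half the height and width of the input, and an edge of a given orientation puts its energy into the matching band, which is what lets the vertical-detail input act as a distinct feature for the classifier.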

Figure 5.

Example of Wavelet Analysis on a specific AGN


3.5. Model Training

Using the same hyperparameter-optimized CNN architecture, we created five training loops, one for each wavelet input (approximation, horizontal, vertical, diagonal, and combined). Each ran for twenty epochs, which we found sufficient to reveal any overfitting in a model.

Within each epoch, we iterated through the batches of both the train and test sets, first isolating the relevant detail band of each image with the biorthogonal bior1.3 wavelet, and then computing the loss and taking an optimizer step. We also kept a running tally of correct predictions, which we used to graph and log both losses and both accuracies every five epochs.
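The loop structure described above can be sketched without committing to a framework. In this outline, `extract_band` and `predict` are hypothetical stand-ins for the wavelet step and the CNN, and a comment marks where a PyTorch training pass would compute the loss and call `loss.backward()` and `optimizer.step()`. Only the tally of correct predictions and the five-epoch logging schedule are shown.

```python
def run_epoch(batches, extract_band, predict):
    """One pass over (images, labels) batches; returns the fraction predicted correctly.

    extract_band and predict are hypothetical callables standing in for the
    wavelet step and the CNN forward pass.
    """
    correct = 0
    total = 0
    for images, labels in batches:
        inputs = [extract_band(image) for image in images]  # isolate one detail band per image
        predictions = predict(inputs)
        # In a PyTorch training pass, the cross-entropy loss, loss.backward()
        # and optimizer.step() would be computed here for training batches.
        correct += sum(int(p == y) for p, y in zip(predictions, labels))
        total += len(labels)
    return correct / total


def train(num_epochs, train_batches, test_batches, extract_band, predict, log_every=5):
    """Run all epochs and record (epoch, train accuracy, test accuracy) every log_every epochs."""
    history = []
    for epoch in range(1, num_epochs + 1):
        train_acc = run_epoch(train_batches, extract_band, predict)
        test_acc = run_epoch(test_batches, extract_band, predict)
        if epoch % log_every == 0:
            history.append((epoch, train_acc, test_acc))
    return history


# Toy stand-ins so the sketch runs: scalar "images" and a sign-based "model".
def identity_band(image):
    return image


def sign_model(inputs):
    return [1 if x > 0 else 0 for x in inputs]


toy_batches = [([1.0, -1.0], [1, 0]), ([2.0, 3.0], [1, 0])]
history = train(20, toy_batches, toy_batches, identity_band, sign_model)
print(history)  # [(5, 0.75, 0.75), (10, 0.75, 0.75), (15, 0.75, 0.75), (20, 0.75, 0.75)]
```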

Figure 6.

Comparison of the model trained on unaltered images and the model trained on vertical wavelet images


Table 1.

Comparison of model accuracies and losses. The model trained on vertical wavelets achieved better performance compared to other models with respect to accuracy, loss, and overfitting.


| Model | Train accuracy (%) | Validation accuracy (%) | Train loss | Validation loss |
|---|---|---|---|---|
| Baseline | 79.80 | 74.03 | 0.4377 | 0.6270 |
| Approximation | 77.20 | 67.53 | 0.4654 | 0.5588 |
| Horizontal | 79.08 | 71.43 | 0.4506 | 0.5255 |
| Diagonal | 79.51 | 72.73 | 0.4397 | 0.5224 |
| Combined | 77.63 | 68.83 | 0.4500 | 0.5843 |
| Vertical | 77.49 | 81.82 | 0.5153 | 0.5054 |
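One compact way to read Table 1 is the generalization gap, training accuracy minus validation accuracy. The short computation below uses only the table's numbers; it is a single summary statistic and does not replace the loss curves.

```python
# (train accuracy %, validation accuracy %) copied from Table 1.
TABLE_1 = {
    "Baseline": (79.80, 74.03),
    "Approximation": (77.20, 67.53),
    "Horizontal": (79.08, 71.43),
    "Diagonal": (79.51, 72.73),
    "Combined": (77.63, 68.83),
    "Vertical": (77.49, 81.82),
}

# Generalization gap in percentage points: a positive value means the model does better
# on data it has seen than on held-out data, a common symptom of overfitting.
gaps = {name: round(train - val, 2) for name, (train, val) in TABLE_1.items()}

for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name:<14}{gap:+.2f}")
```

The vertical model is the only one with a negative gap (−4.33 points), while the baseline's gap is +5.77 points.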

4. Results

4.1. Overfitting

As shown in the graph of the results for the original images, the baseline model started overfitting at epoch 6. In contrast, our best-performing model, the CNN trained on vertical details, takes 30 epochs to overfit, and even then the increase in validation loss is much smaller than the large validation loss spikes seen with the original images.

Looking beyond the vertical wavelet-trained model, the other wavelet-trained models also appear to overfit less: their validation loss is much more stable, and although it still spikes, the spikes are less frequent and much less drastic.

4.2. Accuracy

Hyperparameter optimization made the model better at predicting new data, but at a cost in overall accuracy. As a rule of thumb, when the validation loss decreases, the “real” (generalizable) accuracy tends to increase; conversely, when the validation loss rises, as when the original model starts overfitting, the “real” accuracy tends to decrease even though the accuracy shown on the graph appears to increase.

Using this reasoning, we estimate the “real” accuracy of the original model to be 74.03%. For the model trained on vertical details, we estimate a validation accuracy of 81.82%, a relative increase of about 10.52% (7.79 percentage points).
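An accuracy change can be stated in percentage points or in relative terms, and the two are easy to conflate. This small helper, whose name is hypothetical, computes both for the two validation accuracies reported above.

```python
def accuracy_change(old, new):
    """Absolute change (percentage points) and relative change (%) between two accuracies in %."""
    points = new - old
    relative = 100 * points / old
    return points, relative


points, relative = accuracy_change(74.03, 81.82)
print(f"{points:.2f} percentage points, {relative:.2f}% relative")  # 7.79 percentage points, 10.52% relative
```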

5. Future Work

The success of wavelet analysis as a pre-processor for the machine learning model is evidence for its potential use in classifying other, non-galactic images. In future applications, wavelet analysis could reveal underlying features of different kinds of images. For example, if a certain RR Lyrae star underwent a physical process that produced a diagonal feature, wavelet analysis could isolate that feature well enough for the machine learning model to recognize it. Regarding methodology, we may try other wavelets that filter different details and note the resulting changes in accuracy. Regarding the model, we believe that pre-trained models will fare better than the primitive models we used. Additionally, it should be possible to develop algorithms that isolate other kinds of detail, such as a circular detail that detects the "curl" of a signal and filters it out. A larger range of filters captures more of the predictors that may influence the classification process. In future runs of this experiment, different wavelets and pre-trained models would provide more insight into the predictability of certain astronomical bodies.

6. Conclusions

Even though only the model trained on vertical details improved validation accuracy over the baseline, every wavelet-trained model overfit less than the model trained on the original images. This suggests that applying wavelet transformations as a pre-processing filter reduces model overfitting. In the future, wavelet transformations could be used to identify which features are better indicators of certain galaxies. For our dataset, vertical features were the best indicators in classifying galaxies from the FIRST sky survey, suggesting that theory could be developed around vertical details as indicators of galaxy type. In general, wavelets are extendable to any astronomical image; details that identify certain celestial bodies can be identified through wavelets. Understanding the relevant details of celestial bodies will also help develop astrophysical theory explaining the significance of these features. As wavelets are a relatively novel idea in signal processing, more research and programming can be done to improve the model of wavelet transforms, further expanding their use in image classification and pre-processing.

References

- Krawczyk, B. Learning from imbalanced data: open challenges and future directions.

- A Really Friendly Guide to Wavelets. Available online: http://www.polyvalens.com/wavelets/theory/#7.+The+scaling+function+%5B7%5D (accessed on 02.28.2024).

- Yagle, A.E.; B.J.K. An Introduction to Wavelets, 1996.

- Selesnick, I.W. Wavelet Transforms: A Quick Study, 2007.

- Wang, L.; Sun, Y. Image classification using convolutional neural network with wavelet domain inputs. IET Image Processing 2022, 16, 2037–2048, https://ietresearch.onlinelibrary.wiley.com/doi/pdf/10.1049/ipr2.12466.

- Porter, F.A.M.; Scaife, A.M.M. MiraBest: A Dataset of Morphologically Classified Radio Galaxies for Machine Learning, 2023, [arXiv:astro-ph.IM/2305.11108].

- Becker, R.H.; Helfand, D.J.; White, R.L.; Gregg, M.D.; Laurent-Muehleisen, S.A. The FIRST Survey: Faint Images of the Radio Sky at Twenty Centimeters. Available online: http://sundog.stsci.edu/ (accessed on 02.24.2024).

- Very Large Array. Available online: https://public.nrao.edu/telescopes/VLA/ (accessed on 04.04.2024).

- Nair, P.B.; Abraham, R.G. A Catalog of Detailed Visual Morphological Classifications for 14,034 Galaxies in the Sloan Digital Sky Survey. The Astrophysical Journal Supplement Series 2010, 186, 427–456.

- Aniyan, A.; Thorat, K. Classifying Radio Galaxies with the Convolutional Neural Network. The Astrophysical Journal Supplement Series 2017, 230.

- Slijepcevic, I.V.; Scaife, A.M.M.; Walmsley, M.; Bowles, M.; Wong, O.I.; Shabala, S.S.; White, S.V. Radio Galaxy Zoo: Towards building the first multi-purpose foundation model for radio astronomy with self-supervised learning. RAS Techniques and Instruments 2023.

- Condon, J.J.; Cotton, W.D.; Greisen, E.W.; Yin, Q.F.; Perley, R.A.; Taylor, G.B.; Broderick, J.J. The NRAO VLA Sky Survey. Available online: https://www.cv.nrao.edu/nvss/ (accessed on 04.04.2024).

- Mohan, D.; Scaife, A. MCMC to address model misspecification in Deep Learning classification of Radio Galaxies, 2023.

- Gupta, N.; Hayder, Z.; Norris, R.P.; Huynh, M.; Petersson, L. RadioGalaxyNET: Dataset and Novel Computer Vision Algorithms for the Detection of Extended Radio Galaxies and Infrared Hosts, 2023, [arXiv:astro-ph.IM/2312.00306].

- Lang, N. Breaking down Convolutional Neural Networks: Understanding the Magic behind Image Recognition. Available online: https://towardsdatascience.com/using-convolutional-neural-network-for-image-classification-5997bfd0ede4 (accessed on 04.07.2024).

- Tolley, E. Wavelet Scattering Networks for Identifying Radio Galaxy Morphologies, 2024, [arXiv:astro-ph.IM/2403.03016].

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization, 2017, [arXiv:cs.LG/1412.6980].

- sybernix. Drawing Loss Curves for Deep Neural Network Training in PyTorch. Available online: https://sybernix.medium.com/drawing-loss-curves-for-deep-neural-network-training-in-pytorch-ac617b24c388 (accessed on 04.07.2024).

- Lee, G.R.; Gommers, R.; Wasilewski, F.; Wohlfahrt, K.; O'Leary, A. PyWavelets: A Python package for wavelet analysis. Journal of Open Source Software 2019, 4, 1237.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).