1. Introduction

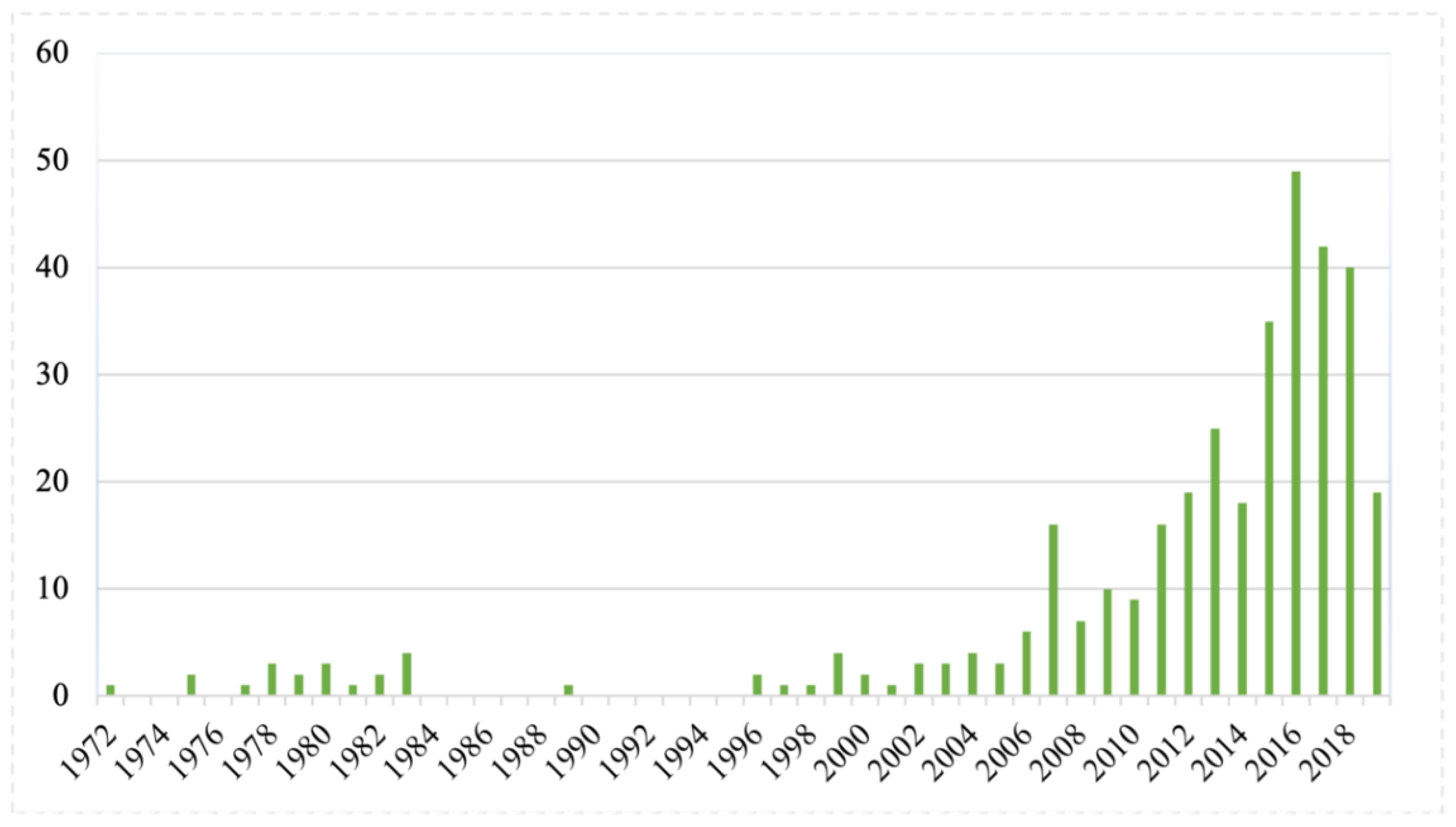

Research on student learning in the laboratory constitutes a rapidly growing body of knowledge. A recent systematic review of learning outcomes associated with laboratory instruction in university chemistry education shows that the number of publications in leading journals grew substantially over the past decades up to mid-2019, as shown in Figure 1 [1]. The review demonstrates that laboratory instruction lends itself to a variety of learning outcomes and experiences that may differ from those of other settings, such as lectures and tutorials. However, in higher education teaching practice, this claim is more often assumed than actually substantiated. Longstanding critics of the effectiveness of laboratory instruction reassert the need to improve research in this field by focusing on evidence and rigor, as well as by revisiting the philosophical foundations of what constitutes learning [2,3,4].

The studies in this review drew on widely varied theories and methodologies. Their theoretical frameworks included self-determination theory, social constructivism, active learning theory, and cognitive load theory, and their methodologies included phenomenography, (quasi-)experimental design, learning analytics, action research, and ethnography. Notably, only three of the 355 studies included in the systematic review were discourse studies. The review incorporated critical appraisals of the quality of the reviewed studies, following the criteria proposed by Zawacki-Richter and colleagues [5]. Drawing on these appraisals, it recommended that future studies could benefit from greater methodological rigor, which mirrors Hofstein and Lunetta’s [3] recommendation in their major review of laboratory education research. Although there have been advances in the theories and methodologies used for research in this setting, some areas still require improvement and refinement.

This methodology paper seeks to delineate rigor within laboratory education research and illustrate an attempt to address it with a dedicated research project. The notions of “triangulation” and “multiperspectivity” will be used to characterize it. Relevant literature in laboratory education research will be consulted in light of debates on overarching methods and methodology. While this paper is primarily situated in chemistry education research literature, many elements are transferable to other science disciplines as well as interdisciplinary contexts.

2. Contextualizing Rigor

Rigor is a critical aspect of science education research and can be conceptualized as both a theoretical and a methodological notion. It refers to the extent to which research processes are controlled, systematic, and reliable. Although the ideals of precision and accuracy, as well as validity and reliability, have been debated among qualitative and quantitative researchers [6,7,8], it is generally agreed that for a piece of research to have scientific merit, it has to meet certain quality criteria. These criteria often center on clear, theoretically informed research questions; researcher bias that is made explicit and minimized; and triangulated methods or iterative approaches within a single method.

2.1. Theory-Informed Research Questions

The research question underlies the rationale for the choices made in an inquiry. Rigor is established when the question is researchable, meaningful, and informed by theory [9,10]. In chemistry education research, Bunce [10] particularly emphasizes the importance of theory-based questions that are clearly situated within an existing knowledge structure, such as cognitive science, the learning sciences, or sociology. For example, Kiste and colleagues [11] use active learning theory to situate their study on the assessment of learning in an integrated chemistry laboratory-lecture experience. They build on the overwhelming evidence that active learning pedagogies increase student knowledge, positively influence students’ affective strategies, and decrease failure rates. Informed by this established theory and strong empirical evidence, they formulated research questions such as “What is the effect of the integrated laboratory-lecture environment on student knowledge, course grades, failure rates and learning attitudes?” Likewise, Wei and colleagues [12] frame their investigation into student interactions in chemistry laboratories within social constructivism, drawing in particular on the Model of Educational Reconstruction. They argue that the model highlights the importance of considering students’ perspectives on interactions in the laboratory for improving understanding of the principles underlying laboratory work. Accordingly, they pursue research questions such as “What do undergraduate students consider to be important interactions for effective learning in introductory chemistry laboratories?” Both studies are among the most highly regarded in the aforementioned systematic review, which illustrates how theory-informed research questions can enhance research quality and rigor.

In the illustrative case of the BLINDED project, elaborated in Section 3, the theory of competence development [13,14] is used to design interrelated lines of investigation into student learning in the laboratory. This theoretical framing dovetails with a mandate from the Danish Ministry of Education; together, they point to gaps in knowledge yet to be addressed. Accordingly, the initial design of the project seeks to address a research question such as “Which factors influence pharmaceutical students’ acquisition of laboratory-related competences, and how can such competences be assessed?”

2.2. Researcher and Participant Biases

Rigor is also established when the biases of both researchers and participants are made explicit and minimized. Researcher bias has been a particular concern in qualitative research [8,15]. From the initial stage onward, the researcher’s background, beliefs, and experiences may bias the choice of research questions, which in turn may influence which contexts and participants are involved. At later stages, these biases could also influence data collection and interpretation. Johnson and colleagues [8] maintain that, to establish rigor, these biases should be acknowledged and addressed. An example from laboratory education research is the study by Markow and Lonning [16], who use a quasi-experimental design to study the effect of constructing pre-laboratory and post-laboratory concept maps on students’ conceptual understanding. They acknowledge that having the same researcher teach both the experimental and control groups could introduce researcher bias. To minimize it, two chemical educators viewed videotapes of the pre-laboratory instruction for both groups and judged the nature of the instruction. In addition, the pre-laboratory concept maps were graded separately, before the post-laboratory concept maps, which was “purposefully done to avoid researcher bias” (p. 1021).

Because science education research is concerned with human participants, biases could also originate in participants’ backgrounds, beliefs, and experiences. In their interview study on the effects of pre-laboratory activities on cognitive focus during the experiment, Winberg and Berg [17] speak of “prestige bias”, which refers to participants’ own perceptions of how a statement would make them appear. When students know they are being evaluated, they may focus on what they think the researchers want to hear. Because the study focuses on the spontaneous use of chemistry knowledge, the authors minimize this participant bias by “avoiding explicitly examining chemistry questions” (p. 1119). Interview studies are particularly prone to both types of bias, and efforts should be made to make them explicit and reduce them. One way of addressing this problem is by operationalizing the notion of “multiperspectivity”.

Used to characterize methodological rigor, multiperspectivity in educational research can be defined as a narrative approach to investigating the lived experiences of research participants, in which multiple and often discrepant viewpoints are employed to substantiate and evaluate a subject of interest [18,19]. The philosophical foundation for addressing multiperspectivity in research is twofold. On the one hand is the partiality of knowledge and experience, which refers to the idea that our understanding of the world and reality is limited and incomplete [20]. On the other hand is intersubjectivity, which refers to the variety of possible relations between people’s perspectives [21]. In substantiating stakeholders’ perspectives on learning in the laboratory, multiple perspectives aim to offset the limitations, and presumably the biases, of any single viewpoint and lived experience by exploring alternative narratives from other stakeholders. It is also useful to establish the relations between these perspectives by identifying similarities and differences, verifying factual information, sketching nuances, and exploring the richness of the context.

2.3. Iteration and Triangulation

The third cornerstone of methodological rigor concerns data collection. In a single-method approach, such as a survey or interview study, rigor is established through iteration [8]. For example, Santos-Diaz and colleagues [22] describe how they iteratively administered a questionnaire on undergraduate students’ laboratory goals through three stages of development. After a pilot implementation involving 904 participants, they modified the survey to ensure validity and reliability. In their attempt to establish rigor, they acknowledge that surveys can cause response fatigue, so redundancy should be avoided. By comparison, in their phenomenological study of student experiences of reform in a general chemistry laboratory, Chopra and colleagues [23] emphasize the inductive and iterative processes through which five researchers reduced verbal data to the essence and meaning of experiences. This cyclical process is insightful because it demonstrates how the researchers minimize biases through refinement, agreement, and contestation. In many qualitative studies where only one researcher is involved, this may not be possible, so other measures are needed to ensure that the findings are generated in a comparably rigorous fashion. One such measure is triangulation.
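Checking reliability between iterations of a survey is commonly done with an internal-consistency index. The source does not say which index Santos-Diaz and colleagues used; the sketch below assumes Cronbach’s alpha, alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The function name and the responses are hypothetical Likert ratings, not data from any cited study.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix (assumed index)."""
    k = len(responses[0])  # number of items in the scale
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents (columns of the matrix).
    item_variances = [pvariance(item) for item in zip(*responses)]
    # Variance of each respondent's summed scale score.
    total_variance = pvariance([sum(row) for row in responses])
    # Population variances are used throughout; the n/(n-1) factor cancels in the ratio.
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings: 4 respondents x 3 items on a 1-5 scale.
responses = [
    [4, 5, 4],
    [2, 3, 3],
    [5, 5, 4],
    [1, 2, 2],
]
print(round(cronbach_alpha(responses), 3))  # 0.958
```

Because alpha tends to rise as items are added, it can also reward the kind of redundancy that causes response fatigue, so a revision cycle might weigh alpha against item overlap rather than maximize it.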

In its simplest definition, triangulation refers to a combination of quantitative and qualitative approaches in the same study [24]. As such, it is predominantly associated with mixed-methods research. However, it has also been developed within the qualitative research tradition [25]. The core argument for triangulation as a means of establishing rigor is that the use of multiple methods produces stronger inferences and addresses more diverse research questions, and hence generates more diverse findings, increases data validity, and reduces bias [24,26]. In their study on argumentation in the laboratory, Walker and Sampson [27] use three different data sources to track the development of students’ argumentative competence over time. Performance tasks evaluated at three points in time, video recordings of students engaging in argumentation in the laboratory, and the students’ laboratory reports were used to make triangulated claims about how students learn to argue in the laboratory and how engaging in argumentation also generates learning. By addressing methodological rigor, the study provides robust evidence for learning outcomes associated with higher-order thinking skills.
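The logic of triangulated claims can be sketched as a simple convergence check: for each claim, record whether each data source supports it, contradicts it, or is silent on it, and then label the overall pattern. The labels are loosely adapted from convergence-coding schemes used in qualitative triangulation; the claims, source names, and evidence codes are hypothetical and do not reproduce Walker and Sampson’s analysis.

```python
from enum import Enum


class Evidence(Enum):
    SUPPORTS = "supports"
    CONTRADICTS = "contradicts"
    SILENT = "silent"  # the source says nothing about the claim


def convergence(evidence: dict[str, Evidence]) -> str:
    """Label how a set of data sources bears on one claim (illustrative scheme)."""
    values = list(evidence.values())
    if not values:
        return "silence"  # no sources recorded for this claim
    supports = values.count(Evidence.SUPPORTS)
    contradicts = values.count(Evidence.CONTRADICTS)
    if supports and contradicts:
        return "dissonance"  # sources disagree; return to the data
    if supports == len(values):
        return "agreement"  # every source corroborates the claim
    if supports:
        return "partial agreement"  # some support, the rest are silent
    if contradicts:
        return "not supported"  # only contradicting evidence
    return "silence"  # no source speaks to the claim


S, C, N = Evidence.SUPPORTS, Evidence.CONTRADICTS, Evidence.SILENT
claims = {  # hypothetical claims and evidence codes
    "argument quality improves over time": {
        "performance tasks": S, "video": S, "lab reports": S},
    "students critique peers' claims": {
        "performance tasks": N, "video": S, "lab reports": N},
    "reports cite more evidence than talk": {
        "performance tasks": N, "video": C, "lab reports": S},
}
for claim, sources in claims.items():
    print(f"{claim}: {convergence(sources)}")
```

A claim labeled "agreement" across all three sources corresponds to a triangulated claim in the sense described above, while "dissonance" marks where further analysis is needed before a claim can be made.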

Within the qualitative research tradition, triangulation has been pursued in several ways [24,25,28]. One is to combine various methods of qualitative data collection and analysis, such as interviews, observations, visual data, discourse analysis, and document analysis. Another is to meaningfully combine the theories that underpin these methods, so that their different epistemological assumptions are made explicit and set in dialogue [28]. Lawrie and colleagues [29] combine wiki laboratory notebooks and open-response items to investigate learning outcomes and processes in an inquiry-type nanoscience chemistry laboratory course. Their study shows that the wiki environment enhances student understanding of experimental processes and communication of results. By comparison, Horowitz [30] incorporates a triangulation of theories in a study on intrinsic motivation associated with a project-based organic chemistry laboratory. By critically engaging with social constructivism, self-determination theory, and interest theory, the study demonstrates how students’ responses to curriculum implementation are mediated by their achievement goal orientations, particularly among mastery-oriented students.

3. Illustrative Case

3.1. Context

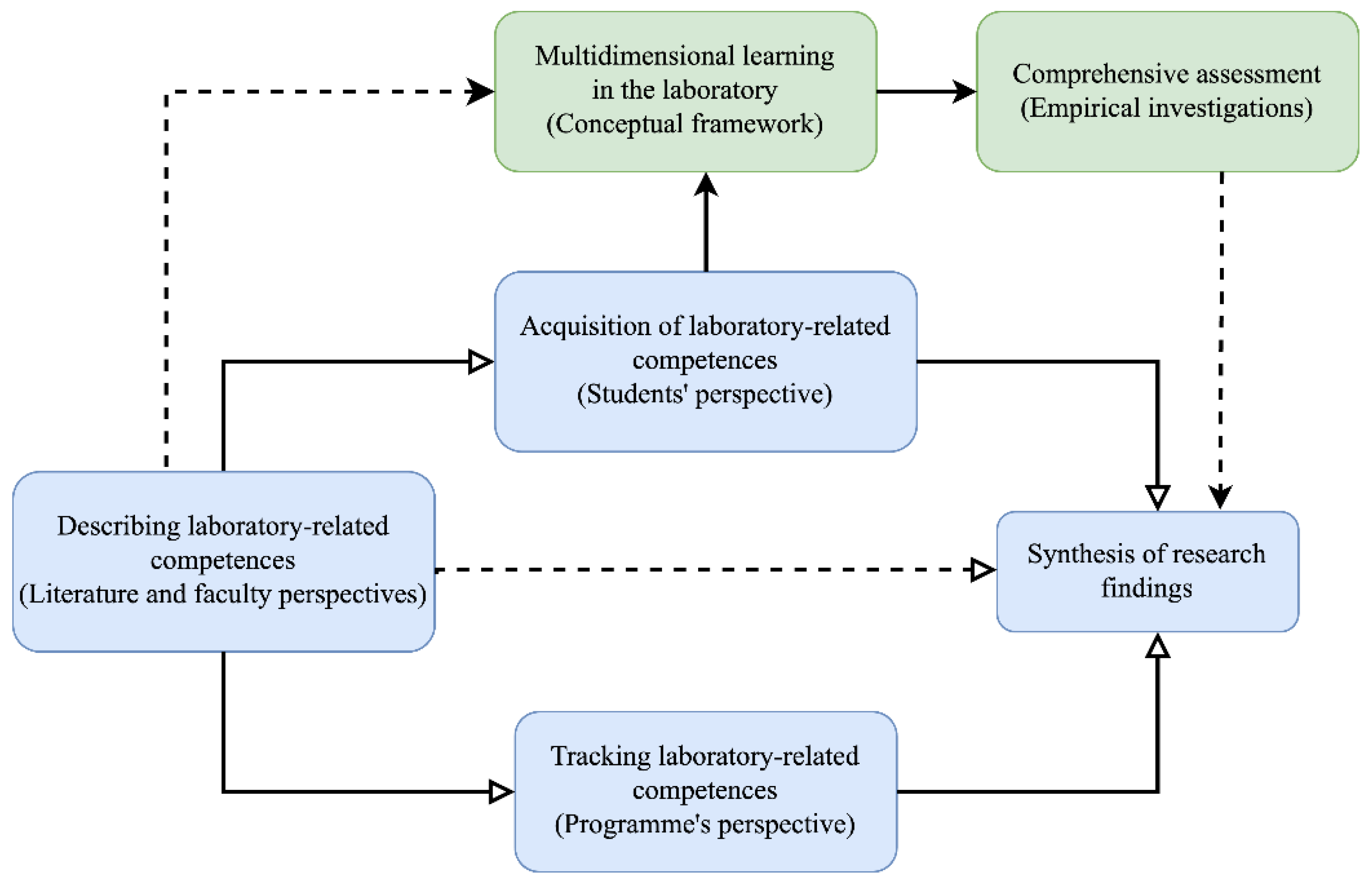

The context I use to illustrate rigor in this paper is a large research project aimed at improving learning in the laboratory at the university level. An interdisciplinary, collaborative project between Department A and Department B at a research university, it involves researchers at different career levels and from various scientific backgrounds, including chemistry, biology, pharmaceutical sciences, mathematics, and philosophy. It has been running since 2019 and is nearing completion. As of 2024, some of the research findings have been synthesized [31]. The project is described here to highlight some aspects of establishing methodological rigor, as argued in the previous section. It incorporates two research avenues, as shown in Figure 2. The first is the initial design of the project, structured in several work packages (WPs): WP2 explores the literature and faculty perspectives, WP3 explores students’ perspectives, and WP4 explores the study program perspective. These are shown in blue in Figure 2. Most of the findings from this part have been published (BLINDED). The other avenue, shown in green, is a research program within the project focusing on a comprehensive assessment of multidimensional learning in the laboratory (henceforth referred to as CAMiLLa), which is grounded in a conceptual framework and working model for the integration of learning domains in the laboratory [32].

To address methodological rigor, each study within the project was designed with careful consideration of the aspects discussed in the previous section. WP2, WP3, and WP4 were primarily designed as qualitative studies; multiperspectivity and a combination of qualitative data sources are therefore central. In CAMiLLa, an attempt has been made to enhance rigor through triangulation of methods, so this part of the project is designed within a mixed-methods paradigm. It is centered on a framework for comprehensively mapping domains of learning in the laboratory [32]. Within this framing, laboratory work is conceptualized as epistemic practice [33,34], referring to processes related to the co-construction and evaluation of knowledge, in which students activate their cognition, affect, and conation, and coordinate their bodies with those faculties of mind in continual negotiation with their peers. The conceptual framework regards student learning as a multidimensional construct that requires a more holistic and comprehensive analysis than a typical cognitive view affords. The overarching goal of these studies is to develop a comprehensive assessment instrument that does justice to the complexity and richness of the learning environment in this challenging context.

3.2. Methods

The original WPs in the project primarily used interview transcripts as data, supplemented by materials such as laboratory reports, curriculum documents, and laboratory manuals. These data were analyzed using thematic [35] and phenomenographic [36] analyses. In CAMiLLa, data are generated from multimodal transcripts, focus group interviews, laboratory reports, and questionnaires. In line with the principles of ethnography, students doing experimental work in the analytical chemistry and physical chemistry laboratories were observed and recorded (both video and audio). Their interactions and conversations with each other, with instructors, and with laboratory technicians were transcribed as lines of utterances and non-verbal cues. These form a laboratory discourse [32,37], which differs slightly from classroom discourse [38] in that it incorporates more modalities [39], including gestural-kinesthetic and instrumental-operational ones. Immediately following the laboratory exercise, students were interviewed, with the interviews guided by and contextualized in either snippets of the video recordings or excerpts from their laboratory reports. The interviews elicited students’ reasoning skills, argumentation, collaborative learning behavior, problem-solving, affective experiences, goal setting, and motivational and volitional strategies, as well as broader epistemic understanding related to the uncertainty of measurements, limitations of knowledge, and scientific practices. These prompts also informed the design of a questionnaire focusing on the conative and affective domains of learning in the laboratory, which is grounded in previously validated instruments [40,41,42,43,44].

The laboratory discourse was analyzed using epistemic network analysis (ENA) [45,46] and microanalytic discourse analysis [47]. Both types of discourse analysis were needed because the laboratory discourse itself was first characterized inductively. Pertinent themes in both verbal and non-verbal interactions were coded inductively as stanzas and microanalyzed. This was not done for the entire corpus: once a degree of saturation was reached (i.e., no new themes emerged), the data were analyzed using ENA, with the involvement of a second coder. The other data sources were analyzed differently. Interview data were analyzed thematically [35], as in previous studies [48], whereas questionnaire data were analyzed using analysis of variance.
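The text reports that a second coder was involved in the ENA coding but does not name the agreement statistic. For two coders who each assign one categorical code per unit, a common choice is Cohen’s kappa, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e the agreement expected by chance from each coder’s code frequencies. ENA studies often code each line for several codes and compute agreement per code; this simplified sketch assumes a single code per stanza line, and the labels and assignments are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who each assign one code per unit."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must code the same, non-empty set of units")
    n = len(coder_a)
    # Observed agreement: share of units given the same code by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal proportions, summed over codes.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when both coders use one identical code")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes for ten stanza lines.
coder_a = ["conceptual", "instrumental", "instrumental", "epistemic", "instrumental",
           "conceptual", "epistemic", "instrumental", "instrumental", "epistemic"]
coder_b = ["conceptual", "instrumental", "epistemic", "epistemic", "instrumental",
           "instrumental", "epistemic", "instrumental", "instrumental", "epistemic"]
print(round(cohens_kappa(coder_a, coder_b), 3))  # 0.672
```

Kappa corrects raw agreement for chance: in this example the coders agree on 8 of 10 lines (p_o = 0.80), but because both use "instrumental" heavily, chance agreement is already p_e = 0.39.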

4. Results and Discussion

4.1. Multiperspectivity on Laboratory Learning across the Work Packages

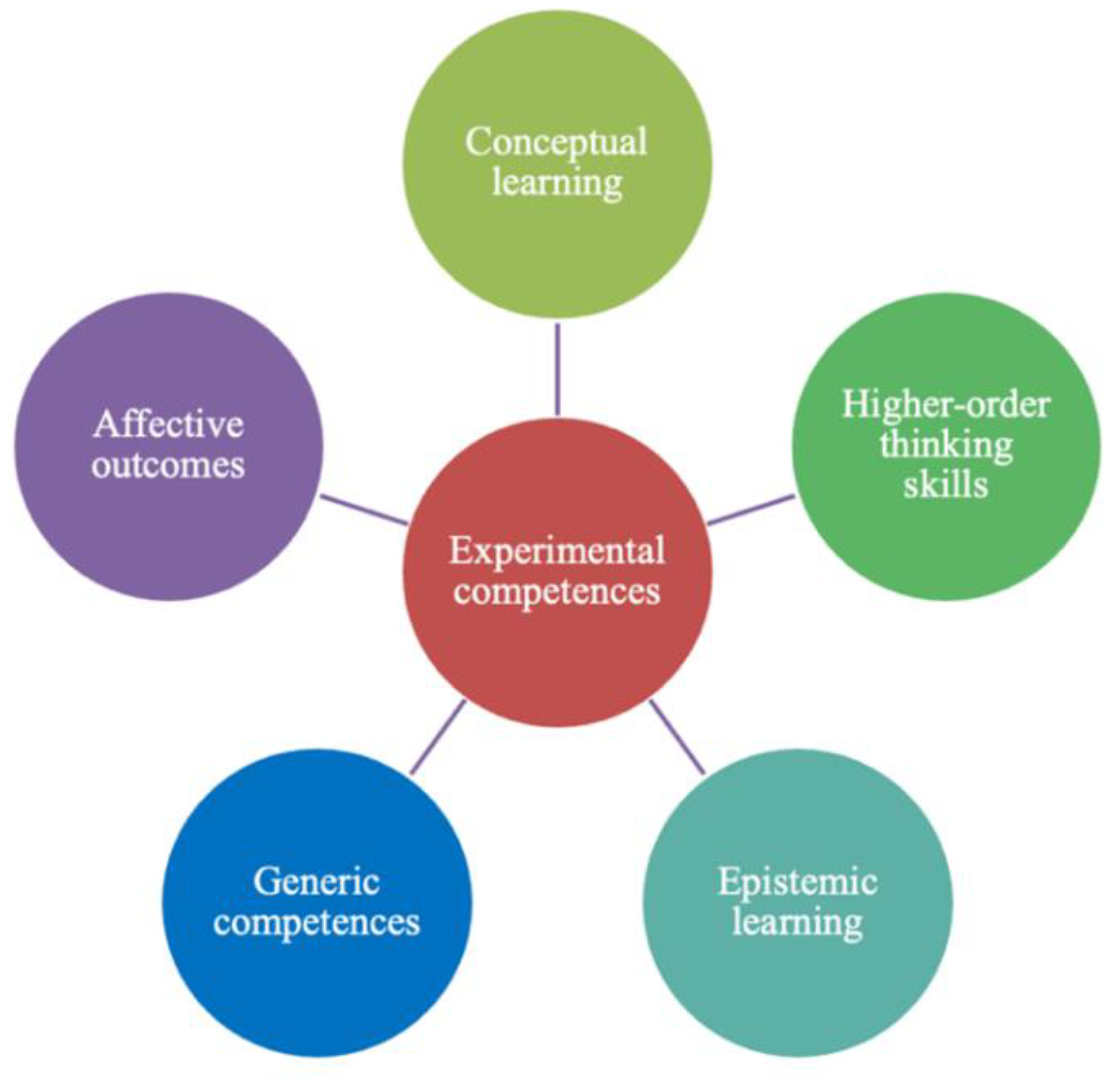

The multiple perspectives on learning in the laboratory drawn from the literature and from faculty (WP2) center on the conceptualization of experimental competences, as shown in Figure 3. Each cluster of learning outcomes in this graph comprises a host of pedagogical constructs substantiated in the literature on laboratory education research in university chemistry. For instance, within the cluster of “higher-order thinking skills”, constructs such as “reasoning” and “argumentation” have been shown to be associated with laboratory work. One faculty perspective on contextualizing higher-order thinking skills in the laboratory is that students also develop these skills when they are given opportunities to design an experiment [48]. Likewise, “conceptual learning” in the laboratory refers to learning the scientific concepts that pertain to experimental work. Scholars have been critical of justifications for laboratory work that rest on conceptual learning goals [4,49]. Therefore, within this cluster, the focus has shifted towards establishing connections between the underlying scientific principles (loosely termed “theory”) and the experiment at hand (loosely termed “practice”), which faculty members also perceive to be a central goal of laboratory work [48].

To illustrate how multiperspectivity was used to establish rigor, the notion of “theory-practice connection” has also been investigated from a student perspective (BLINDED) as part of WP3. Students’ conceptions of this notion seem to fall into at least three categories: laboratory work as a visual representation of theory, as a multimodal support of theory, and as a complementary perspective for understanding theory. Because the studies within WP2 and WP3 were conducted in the same context, it can be argued that, together, they address both the breadth and the depth of understanding what and how students learn in the laboratory, at least to a certain degree. While WP2 provides a broad overview of learning outcomes associated with laboratory work, WP3 delves deeper into one construct that originates in WP2. Some of the ideas related to conceptual learning were investigated further, as described in the following section, to provide more perspectives and forms of triangulation.

4.2. Triangulation and the Comprehensive Assessment

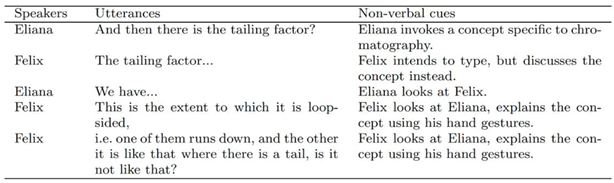

Triangulated findings from the comprehensive assessment provide meaningful insights into student learning processes in the laboratory, to complement the previous focus on learning outcomes. As mentioned above, these are currently divided into several parts. First, multimodal discourse in the laboratory. This part is primarily concerned with how laboratory discourse is characterized by multimodality [

39,

50] and multiple representations [

51]. The use of gestures in explaining a chemical concept, for example, is evinced in a stanza where Felix, a participant, explains the concept of ’tailing factor’ using his hands to Eliana, his lab partner (

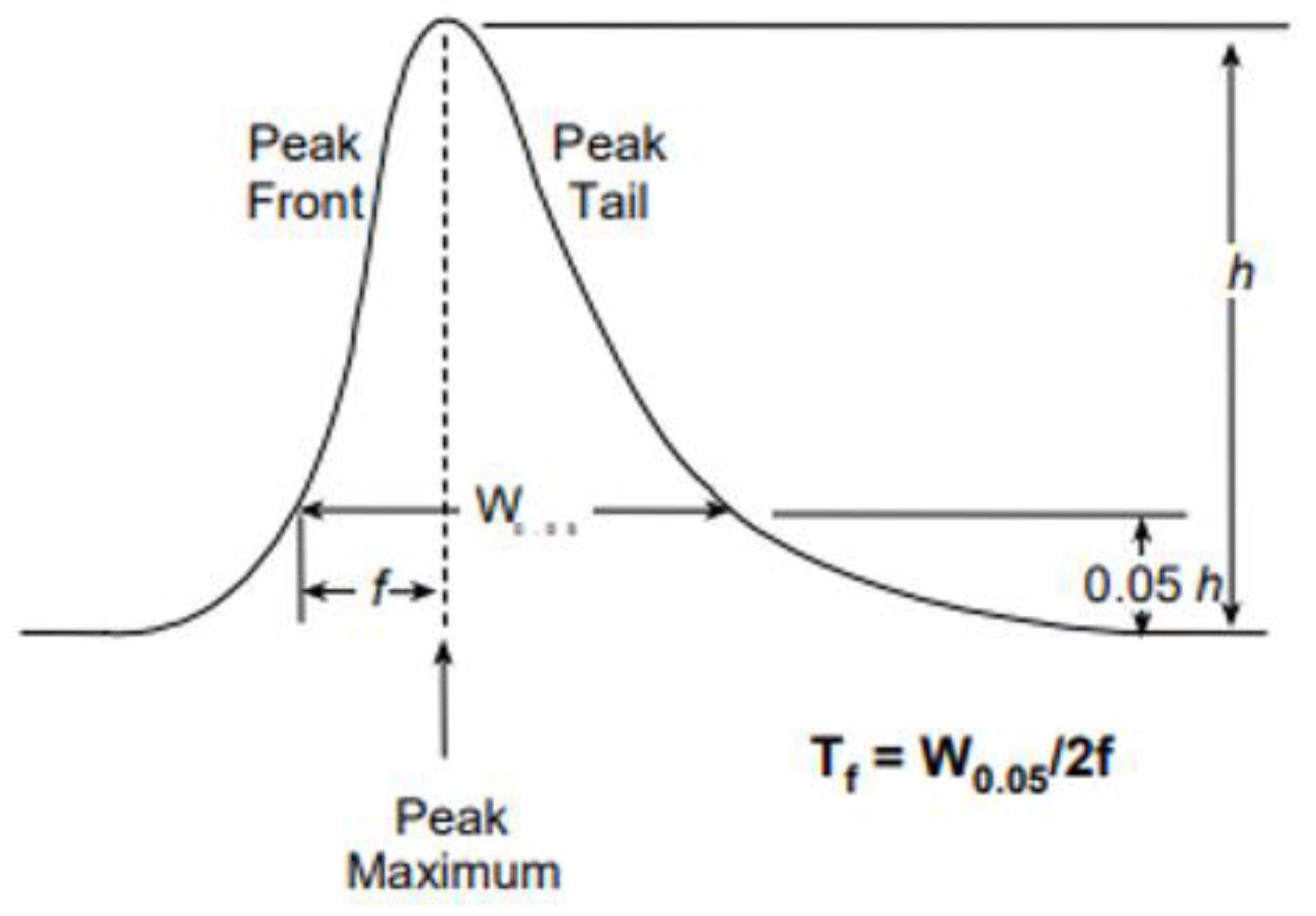

Table 1).

Tailing factor (Tf) is a measure of peak tailing that is widely used in the pharmaceutical industry. As is well known in chromatographic science, an ideal chromatographic peak has a sharp, symmetrical, Gaussian shape. In reality, a peak may look more like the one in Figure 4.
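The source does not give the formula, and it is an assumption that the students worked with this particular definition, but a widely used one (for example, in the United States Pharmacopeia) computes the tailing factor from the peak width measured at 5% of peak height:

$$
T_f = \frac{W_{0.05}}{2f}
$$

Here $W_{0.05}$ is the peak width at 5% of the peak height and $f$ is the distance, at that same height, from the leading edge of the peak to a perpendicular dropped from the peak maximum. For a perfectly symmetrical peak, $f = W_{0.05}/2$, so $T_f = 1$; tailing peaks give $T_f > 1$. With illustrative, hypothetical values $W_{0.05} = 0.30$ min and $f = 0.12$ min, $T_f = 0.30 / (2 \times 0.12) = 1.25$.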

This is an example of how students invoked a concept relevant to what they were about to do, i.e., analyzing tablets containing caffeine and paracetamol using High Performance Liquid Chromatography (HPLC) in the analytical chemistry laboratory. A typical analysis in the pharmaceutical sciences, it required them to plan an inquiry into how to analyze the content of the tablets and to determine whether the content indicated on the label was accurate. The experiment required them to attend to many aspects: technical skills in preparing solutions and operating the instrument, experimental design competence, reasoning skills, argumentative competence, safety and sustainability protocols, conceptual understanding, sensory-perceptual skills in reading scales and menisci, mind-body coordination during delicate procedures of transferring volatile (and poisonous!) liquids, group dynamics, negotiation of meaning and task distribution, time management, and emotional, motivational, and volitional control and regulation, all while trying to learn and somehow appreciate their time in the laboratory. A previous study shows that most students appreciate being able to be in the laboratory and perform laboratory exercises [52].

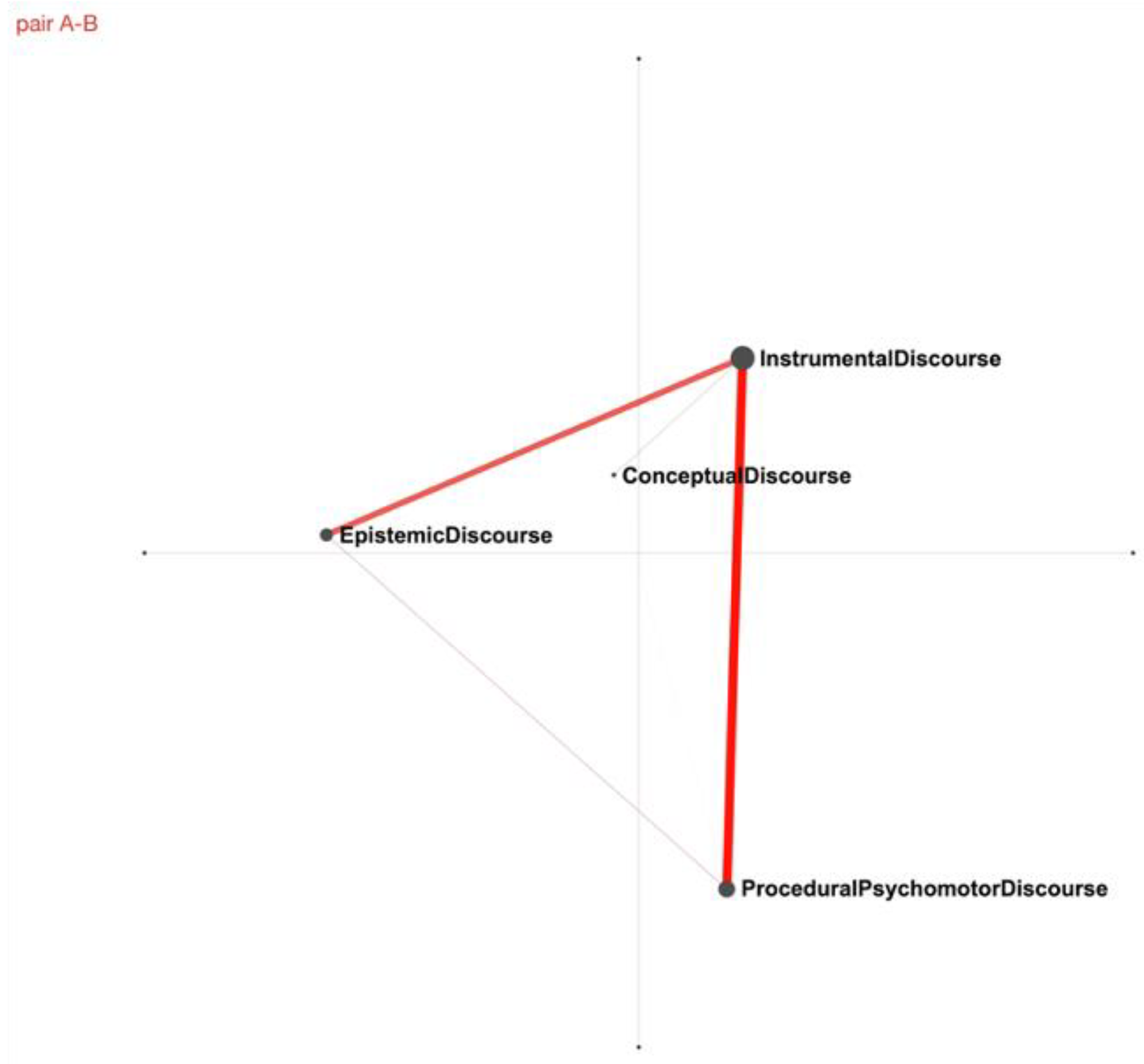

In the larger corpus of data, conceptual discourse may not be the most prominent aspect of the student learning process, as can be discerned from the structure of the different archetypes of discourse in the laboratory shown in Figure 5. This is a visualized structure of discourse drawn from a pair of students (Alice and Belle) doing an electrochemistry experiment in the physical chemistry laboratory, where the curriculum and instruction are mainly expository [53]. It shows that the connections between conceptual discourse and the other types of discourse (e.g., instrumental and epistemic) are the weakest, indicating that the interactions between students in this laboratory are concerned less with concepts than with procedures and instruments. Hitherto, this kind of insight has been gained only by quantifying such interactions, as in a previous discourse study on gas behavior [54]. However, epistemic network analysis captures much better how the types of discourse connect to each other.
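The core accumulation step behind an epistemic network can be sketched as counting, within a moving window of consecutive coded lines, which pairs of codes co-occur; weighted edges of this kind are what a network plot such as Figure 5 displays. This is a simplified illustration, not the implementation used in the study: the function names, code labels, window size, and coded lines are hypothetical, and only unit-length normalization is shown.

```python
import math
from collections import Counter
from itertools import combinations


def accumulate_connections(lines: list[set[str]], window: int = 3) -> Counter:
    """Count code co-occurrences in a moving window over the coded lines of one stanza."""
    edges: Counter = Counter()
    for i, current in enumerate(lines):
        # Codes present in the current line and the (window - 1) lines before it.
        start = max(0, i - window + 1)
        in_window = set().union(*lines[start:i + 1])
        for a, b in combinations(sorted(in_window), 2):
            # Credit a connection only when one of its codes occurs in the current line.
            if a in current or b in current:
                edges[(a, b)] += 1
    return edges


def normalize(edges: Counter) -> dict[tuple[str, str], float]:
    """Scale edge weights to unit length so stanzas of different size are comparable."""
    norm = math.sqrt(sum(w * w for w in edges.values()))
    return {pair: w / norm for pair, w in edges.items()} if norm else {}


# Hypothetical coded lines from one pair's stanza (one set of codes per utterance).
stanza = [
    {"instrumental"},
    {"instrumental", "procedural"},
    {"procedural"},
    {"epistemic", "instrumental"},
    {"conceptual"},
    {"instrumental", "procedural"},
]
edges = accumulate_connections(stanza, window=3)
print(edges.most_common(1))      # [(('instrumental', 'procedural'), 4)]
print(min(edges, key=edges.get))  # ('conceptual', 'epistemic')
```

In this toy stanza the conceptual code connects only weakly to the others, mirroring the pattern described for Figure 5. Full ENA typically goes further, projecting the normalized vectors of many units into a low-dimensional space (for example, via singular value decomposition) so that networks can be compared.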

CAMiLLa aims to provide more comprehensive and holistic information about student learning from a multidimensional view. Capturing the details of learning processes through such a theoretical lens has been a daunting task, especially as the video corpus was transcribed and analyzed with multimodal discourse analysis. Nonetheless, snippets from the discourse so far are valuable in sketching nuances and characterizing the interior architecture of student learning. Instances of authentic conversations during various discursive moves in the laboratory, as well as during the focus group interviews, point to evidence of epistemic affect [55], an emerging construct in the learning sciences that refers to affective and emotional states associated with the co-construction of (scientific) knowledge. Triangulation of methods allows for cross-checking, elaboration, and upscaling of evidentiary claims [56].

5. Conclusion

This paper explores how a pertinent concept in science education research, i.e., methodological rigor, is described in the laboratory education research literature, and illustrates it with a large research project aimed at improving learning in the laboratory at the university level. The results demonstrate that multiperspectivity and triangulation of methods are key to improving rigor in this rapidly developing field of research. Detailed discursive moves provide a first-hand account of learning processes as they unfold in their authentic, naturalistic setting, complementing the reconstructed accounts of learning that students give in response to interview and survey prompts.

Establishing methodological rigor is a prerequisite for high-quality empirical knowledge in science education research. However, it can be challenging, particularly when the research setting is a complex learning environment such as a teaching laboratory. These challenges can relate to limited resources, including the number of researchers involved in the various stages of a study. A dominant research paradigm in an institution could also be perceived as a limiting factor, as researchers may feel they have to comply with the majority. For example, certain institutions may profile themselves more strongly within a qualitative research tradition, whereas others assign more value to large-scale quantitative research. Improving rigor may entail challenging such a paradigm, as the paradigm wars of the past few decades have demonstrated [24,57]. Mixed-methods research emerged against a backdrop of debates on incommensurability, and chief among the arguments for advancing this third paradigm is that it enhances rigor [58]. Several aspects of rigor have been contextualized in laboratory education research. As illustrated in this paper, they are perhaps best addressed in a substantial research project consisting of several lines of inquiry. Any single study could and should strive to address rigor, but larger projects like this one allow for methodological and epistemological diversity.

Funding

The work presented in this article is supported by Novo Nordisk Foundation, grant NNF 18SA0034990.

Institutional Review Board Statement

The study was approved by the Institutional Review Board (Case number 514-0278/21-5000).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are available on reasonable request from the author.

Conflicts of Interest

There is no conflict of interest to declare.

References

- Authors. Article. 2022a.

- Bretz, S.L. Evidence for the Importance of Laboratory Courses. J. Chem. Educ. 2019, 96, 193–195. [Google Scholar] [CrossRef]

- Hofstein, A.; Lunetta, V.N. The laboratory in science education: Foundations for the twenty-first century. Sci. Educ. 2003, 88, 28–54. [Google Scholar] [CrossRef]

- Kirschner, P.A. Epistemology, practical work and Academic skills in science education. Sci. Educ. 1992, 1, 273–299. [Google Scholar] [CrossRef]

- Zawacki-Richter, O.; Kerres, M.; Bedenlier, S.; Bond, M.; Buntins, K. Systematic Reviews in Educational Research: Methodology, Perspectives and Application; Springer VS: Wiesbaden, 2020. [Google Scholar]

- Coryn, C.L.S. The 'Holy Trinity' of Methodological Rigor: A Skeptical View. J. Multidiscip. Evaluation 2007, 4, 26–31. [Google Scholar] [CrossRef]

- Erickson, F.; Gutierrez, K. Culture, Rigor, and Science in Educational Research. Educ. Res. 2002, 31, 21–24. [Google Scholar] [CrossRef]

- Johnson, J.L.; Adkins, D.; Chauvin, S. A Review of the Quality Indicators of Rigor in Qualitative Research. Am. J. Pharm. Educ. 2020, 84, 138–146. [Google Scholar] [CrossRef] [PubMed]

- Arfken, C.L.; MacKenzie, M.A. Achieving Methodological Rigor in Education Research. Acad. Psychiatry 2022, 46, 519–521. [Google Scholar] [CrossRef]

- Bunce, D. M. Constructing Good and Researchable Questions. In Nuts and Bolts of Chemical Education Research; ACS Publications: Washington, DC, 2008. [Google Scholar]

- Kiste, A.L.; Scott, G.E.; Bukenberger, J.; Markmann, M.; Moore, J. An examination of student outcomes in studio chemistry. Chem. Educ. Res. Pr. 2016, 18, 233–249. [Google Scholar] [CrossRef]

- Wei, J.; Mocerino, M.; Treagust, D.F.; Lucey, A.D.; Zadnik, M.G.; Lindsay, E.D.; Carter, D.J. Developing an understanding of undergraduate student interactions in chemistry laboratories. Chem. Educ. Res. Pr. 2018, 19, 1186–1198. [Google Scholar] [CrossRef]

- Ellström, P.-E.; Kock, H. Competence Development in the Workplace: Concepts, Strategies and Effects. Asia Pac. Educ. Rev. 2008, 9. [Google Scholar] [CrossRef]

- Sandberg, Jörgen. Understanding of Work: The Basis for Competence Development. In International Perspectives on Competence in the Workplace; Springer: Dordrecht, 2009. [Google Scholar]

- Hamilton, J.B. Rigor in Qualitative Methods: An Evaluation of Strategies Among Underrepresented Rural Communities. Qual. Heal. Res. 2020, 30, 196–204. [Google Scholar] [CrossRef] [PubMed]

- Markow, P.G.; Lonning, R.A. Usefulness of concept maps in college chemistry laboratories: Students' perceptions and effects on achievement. J. Res. Sci. Teach. 1998, 35, 1015–1029. [Google Scholar] [CrossRef]

- Winberg, T.M.; Berg, C.A.R. Students' cognitive focus during a chemistry laboratory exercise: Effects of a computer-simulated prelab. J. Res. Sci. Teach. 2007, 44, 1108–1133. [Google Scholar] [CrossRef]

- Hartner, M. Multiperspectivity. In Handbook of Narratology; Hühn, P., Meister, J. C., Pier, J., Schmid, W., Eds.; De Gruyter: Berlin, 2014. [Google Scholar]

- Kropman, M.; van Drie, J.; van Boxtel, C. The Influence of Multiperspectivity in History Texts on Students’ Representations of a Historical Event. Eur. J. Psychol. Educ. 2022. [CrossRef]

- Feyerabend, P. K. Knowledge, Science and Relativism; Preston, J., Ed.; Cambridge University Press: Cambridge, 1999; Vol. 3.

- Gillespie, A.; Cornish, F. Intersubjectivity: Towards a Dialogical Analysis. J. Theory Soc. Behav. 2010, 40, 19–46. [Google Scholar] [CrossRef]

- Santos-Diaz, S.; Hensiek, S.; Owings, T.; Towns, M.H. Survey of Undergraduate Students' Goals and Achievement Strategies for Laboratory Coursework. J. Chem. Educ. 2019, 96, 850–856. [Google Scholar] [CrossRef]

- Chopra, I.; O’Connor, J.; Pancho, R.; Chrzanowski, M.; Sandi-Urena, S. Reform in a General Chemistry Laboratory: How Do Students Experience Change in the Instructional Approach? Chem. Educ. Res. Pract. 2017, 18, 113–126. [Google Scholar] [CrossRef]

- Denzin, N.K. Moments, Mixed Methods, and Paradigm Dialogs. Qual. Inq. 2010, 16, 419–427. [Google Scholar] [CrossRef]

- Gorard, S.; Taylor, C. Combining Methods in Educational and Social Research; Open University Press: Maidenhead, 2004.

- Mayoh, J.; Onwuegbuzie, A.J. Toward a Conceptualisation of Mixed Methods Phenomenological Research. J. Mix. Methods Res. 2015, 9, 91–107.

- Walker, J.P.; Sampson, V. Learning to Argue and Arguing to Learn: Argument-Driven Inquiry as a Way to Help Undergraduate Chemistry Students Learn How to Construct Arguments and Engage in Argumentation During a Laboratory Course. J. Res. Sci. Teach. 2013, 50, 561–596.

- Flick, U. Triangulation in Qualitative Research. In A Companion to Qualitative Research; SAGE Publications: London, 2004.

- Lawrie, G.A.; Grøndahl, L.; Boman, S.; Andrews, T. Wiki Laboratory Notebooks: Supporting Student Learning in Collaborative Inquiry-Based Laboratory Experiments. J. Sci. Educ. Technol. 2016, 25, 394–409.

- Horowitz, G. The Intrinsic Motivation of Students Exposed to a Project-Based Organic Chemistry Laboratory Curriculum, Columbia University, 2009.

- Authors. Article. 2024.

- Author. Article. 2022.

- Author. Article. 2023.

- Kelly, G.J.; Licona, P. Epistemic Practices and Science Education. In Science: Philosophy, History and Education; Springer Nature, 2018; pp. 139–165.

- Braun, V.; Clarke, V. Using Thematic Analysis in Psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Marton, F.; Pong, W.Y. On the unit of description in phenomenography. High. Educ. Res. Dev. 2005, 24, 335–348.

- Jiménez-Aleixandre, M.P.; Reigosa Castro, C.; Díaz de Bustamante, J. Discourse in the Laboratory: Quality in Argumentative and Epistemic Operations. In Science Education Research in the Knowledge-Based Society; Psillos, D., Kariotoglou, P., Tselfes, V., Hatzikraniotis, E., Fassoulopoulos, G., Kallery, M., Eds.; Springer: Dordrecht, 2003.

- Sandoval, W.A.; Kawasaki, J.; Clark, H.F. Characterizing Science Classroom Discourse Across Scales. Res. Sci. Educ. 2020, 51, 35–49.

- Van Rooy, W.S.; Chan, E. Multimodal Representations in Senior Biology Assessments: A Case Study of NSW Australia. Int. J. Sci. Math. Educ. 2017, 15, 1237–1256.

- Author. Article. 2020.

- Galloway, K.R.; Bretz, S.L. Development of an Assessment Tool To Measure Students’ Meaningful Learning in the Undergraduate Chemistry Laboratory. J. Chem. Educ. 2015, 92, 1149–1158.

- McCann, E.J.; Turner, J.E. Increasing Student Learning through Volitional Control. Teach. Coll. Rec. 2004, 106, 1695–1714.

- Pintrich, P.R.; Smith, D.A.F.; Garcia, T.; McKeachie, W.J. Reliability and Predictive Validity of the Motivated Strategies for Learning Questionnaire (MSLQ). Educ. Psychol. Meas. 1993, 53, 801–813.

- Yoon, H.; Woo, A.J.; Treagust, D.; Chandrasegaran, A. The Efficacy of Problem-based Learning in an Analytical Laboratory Course for Pre-service Chemistry Teachers. Int. J. Sci. Educ. 2012, 36, 79–102.

- Cai, Z.; Siebert-Evenstone, A.; Eagan, B.; Shaffer, D.W.; Hu, X.; Graesser, A.C. nCoder+: A Semantic Tool for Improving Recall of nCoder Coding. In Communications in Computer and Information Science; Springer, 2019; Volume 1112, pp. 41–54.

- Shaffer, D.W.; Collier, W.; Ruis, A.R. A Tutorial on Epistemic Network Analysis: Analyzing the Structure of Connections in Cognitive, Social, and Interaction Data. J. Learn. Anal. 2016, 3, 9–45.

- Green, J.L.; Dixon, C.N. Exploring Differences in Perspectives on Microanalysis of Classroom Discourse: Contributions and Concerns. Appl. Linguist. 2002, 23, 393–406.

- Authors. Article. 2022b.

- Hofstein, A.; Kind, P.M. Learning in and from Science Laboratories. In Second International Handbook of Science Education; Fraser, B.J., Tobin, K.G., McRobbie, C.J., Eds.; Springer Science & Business Media: Dordrecht, 2012.

- Tang, K.-S.; Park, J.; Chang, J. Multimodal Genre of Science Classroom Discourse: Mutual Contextualization Between Genre and Representation Construction. Res. Sci. Educ. 2021, 52, 755–772.

- Johnstone, A.H. Chemical education research in Glasgow in perspective. Chem. Educ. Res. Pract. 2006, 7, 49–63.

- Finne, L.T.; Gammelgaard, B.; Christiansen, F.V. When the Lab Work Disappears: Students’ Perception of Laboratory Teaching for Quality Learning. J. Chem. Educ. 2022, 99, 1766–1774.

- Domin, D.S. A Review of Laboratory Instruction Styles. J. Chem. Educ. 1999, 76.

- Pabuccu, A.; Erduran, S. Investigating students' engagement in epistemic and narrative practices of chemistry in the context of a story on gas behavior. Chem. Educ. Res. Pract. 2016, 17, 523–531.

- Davidson, S.G.; Jaber, L.Z.; Southerland, S.A. Emotions in the doing of science: Exploring epistemic affect in elementary teachers' science research experiences. Sci. Educ. 2020, 104, 1008–1040.

- Ton, G. The mixing of methods: A three-step process for improving rigour in impact evaluations. Evaluation 2012, 18, 5–25.

- Lederman, N.G.; Zeidler, D.L.; Lederman, J.S. Handbook of Research on Science Education: Volume III, 1st ed.; Routledge: New York, 2023.

- Johnson, R.B.; Onwuegbuzie, A.J. Mixed Methods Research: A Research Paradigm Whose Time Has Come. Educ. Res. 2004, 33, 14–26.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).