Submitted: 13 August 2024

Posted: 16 August 2024


Abstract

Keywords:

1. Introduction

1.1. Research Contribution

- Will a strong event (M ≥ 6.0, 7.0, or 8.0) be forecast within the next year in the studied geographical region?

- Can we predict, with near-exact accuracy, the 4-tuple (latitude (°N), longitude (°E), focal depth (km), magnitude) of such a future major event, as well as the (implicit) time frame of its occurrence?

2. Proof of Methods

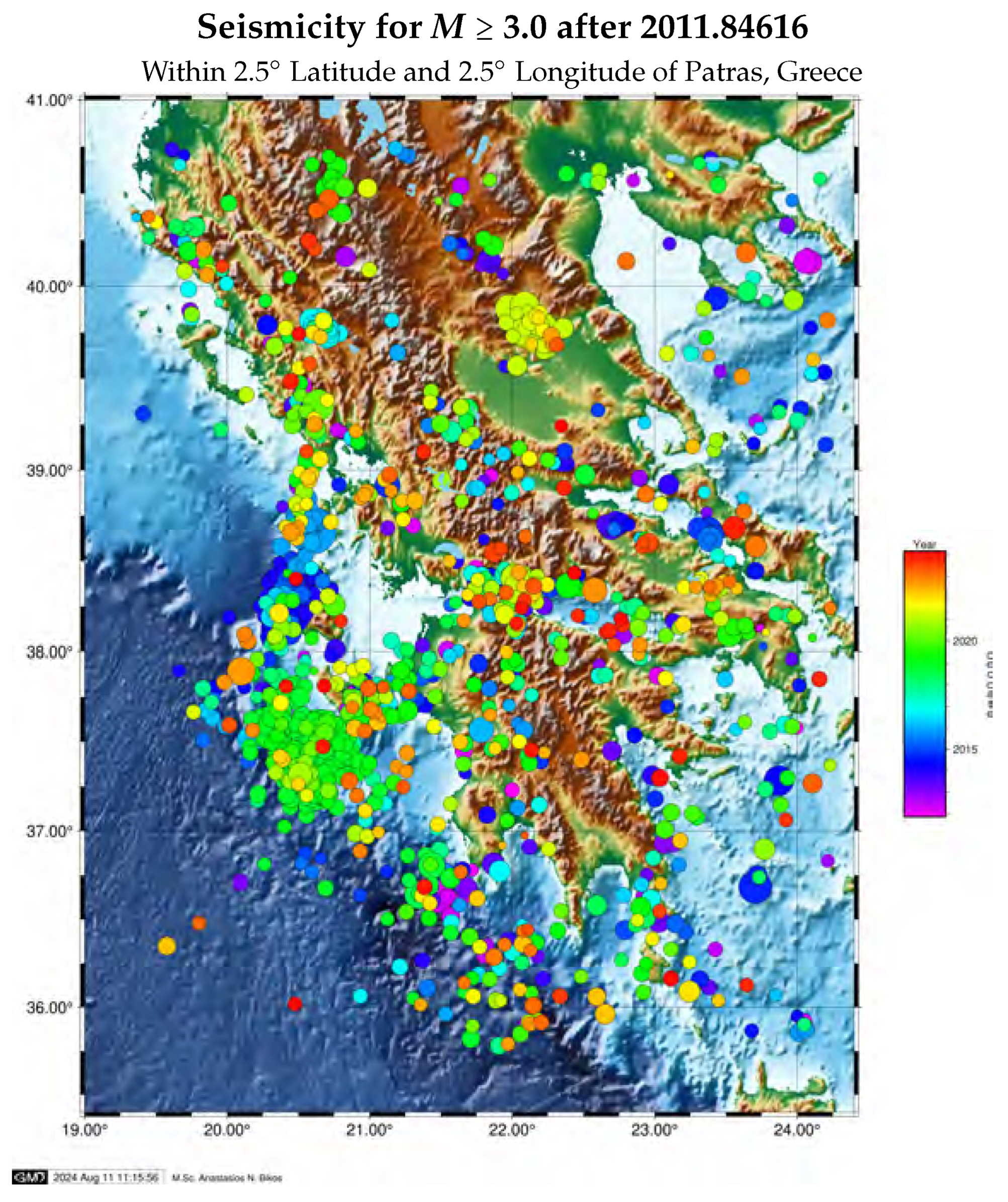

3. Datasets and Feature Engineering

4. Methodologies

4.1. Deep Learning Approach

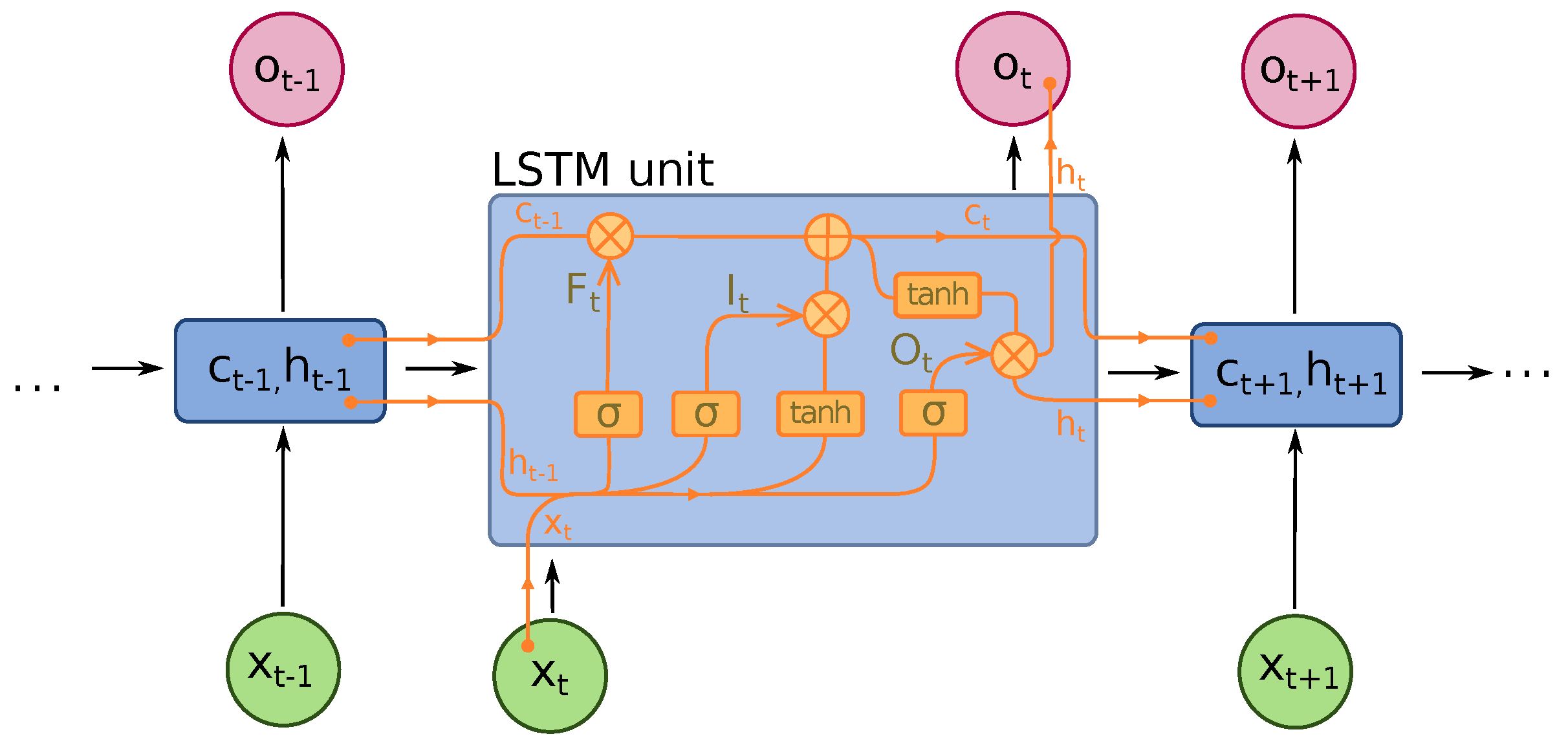

4.1.1. Recurrent Neural Networks: an elemental LSTM artificial neural network

- $x_t \in \mathbb{R}^{d}$: input vector to the LSTM unit

- $f_t \in (0,1)^{h}$: forget gate’s activation vector

- $i_t \in (0,1)^{h}$: input/update gate’s activation vector

- $o_t \in (0,1)^{h}$: output gate’s activation vector

- $h_t \in (-1,1)^{h}$: hidden state vector, also known as the output vector of the LSTM unit

- $\tilde{c}_t \in (-1,1)^{h}$: cell input activation vector

- $c_t \in \mathbb{R}^{h}$: cell state vector

- $W \in \mathbb{R}^{h \times d}$, $U \in \mathbb{R}^{h \times h}$ and $b \in \mathbb{R}^{h}$: weight matrices and bias vector parameters that are learned during training, where the superscripts $d$ and $h$ denote the number of input features and the number of hidden units, respectively.

- $\sigma_g$: sigmoid function.

- $\sigma_c$: hyperbolic tangent function.

- $\sigma_h$: hyperbolic tangent function, or the identity $\sigma_h(x) = x$.
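The quantities listed above combine into a single LSTM time step. The following is a minimal NumPy sketch of the standard LSTM update, not the network trained in this study. The function names `lstm_step` and `init_params`, the parameter dictionary layout, the layer sizes, and the random initialization are all illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid, used as the gate activation."""
    return 1.0 / (1.0 + np.exp(-z))


def init_params(d, h, seed=0):
    """Hypothetical initializer: small random weights, zero biases."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("f", "i", "o", "c"):
        params["W_" + gate] = rng.normal(scale=0.1, size=(h, d))  # input weights
        params["U_" + gate] = rng.normal(scale=0.1, size=(h, h))  # recurrent weights
        params["b_" + gate] = np.zeros(h)                         # bias
    return params


def lstm_step(x_t, h_prev, c_prev, p):
    """One forward step of a standard LSTM unit.

    x_t has shape (d,); h_prev and c_prev have shape (h,).
    """
    f_t = sigmoid(p["W_f"] @ x_t + p["U_f"] @ h_prev + p["b_f"])      # forget gate
    i_t = sigmoid(p["W_i"] @ x_t + p["U_i"] @ h_prev + p["b_i"])      # input/update gate
    o_t = sigmoid(p["W_o"] @ x_t + p["U_o"] @ h_prev + p["b_o"])      # output gate
    c_tilde = np.tanh(p["W_c"] @ x_t + p["U_c"] @ h_prev + p["b_c"])  # cell input
    c_t = f_t * c_prev + i_t * c_tilde                                # new cell state
    h_t = o_t * np.tanh(c_t)                                          # new hidden state
    return h_t, c_t


# Run a short random sequence through the cell (sizes are arbitrary).
d, h = 4, 8
params = init_params(d, h)
h_t, c_t = np.zeros(h), np.zeros(h)
for x_t in np.random.default_rng(1).normal(size=(5, d)):
    h_t, c_t = lstm_step(x_t, h_t, c_t, params)
```

Because the output gate lies in (0, 1) and the hyperbolic tangent in (-1, 1), every component of `h_t` stays strictly inside (-1, 1), whatever the input scale.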

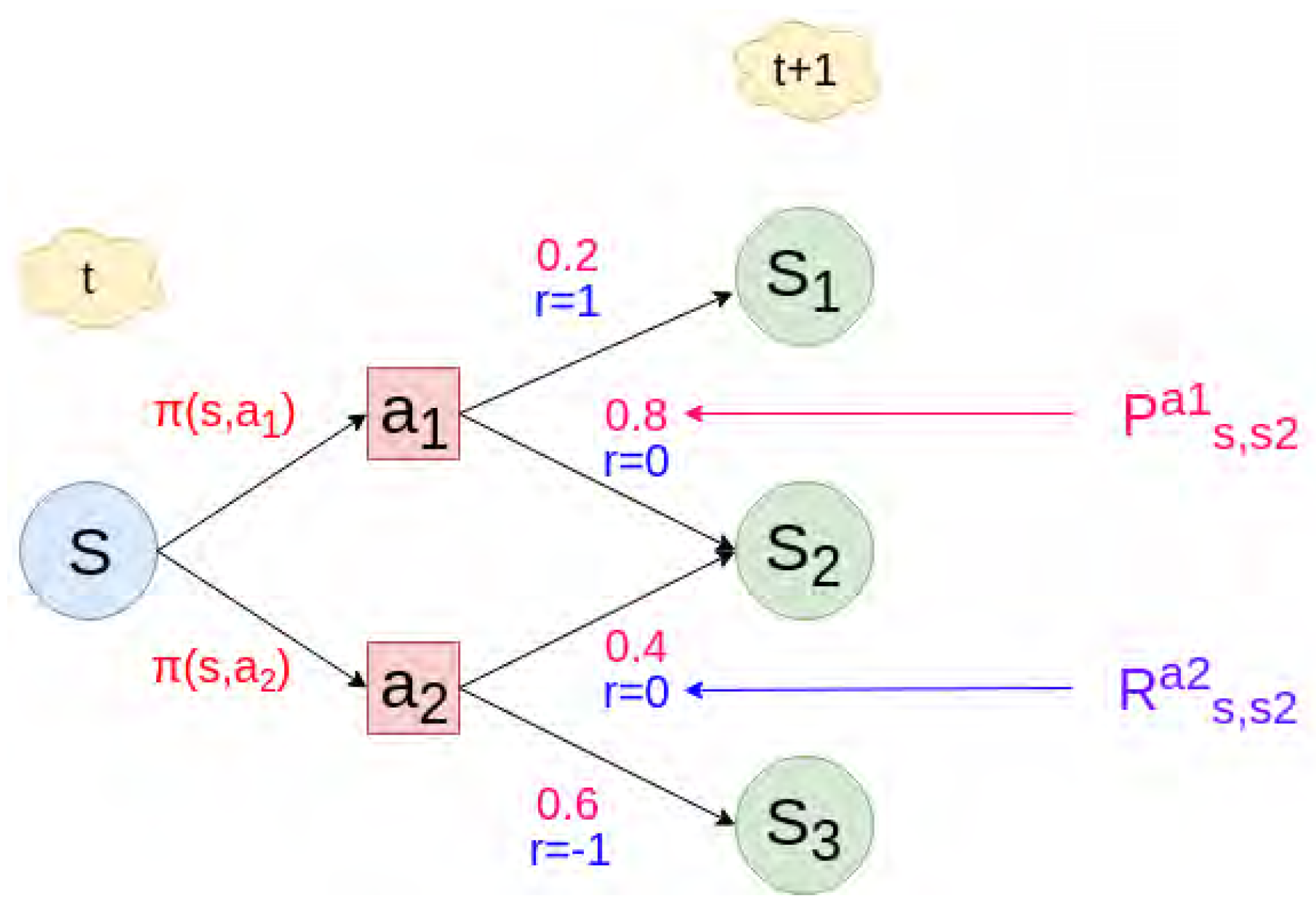

4.1.2. (Customized) Reinforcement Learning Rule

- This sum can diverge (grow without bound), which makes maximizing it ill-defined.

- Future rewards are weighted as heavily as immediate (or intermediate) rewards.
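Both issues are commonly addressed by discounting future rewards. The sketch below assumes a discount factor `gamma` in [0, 1). The symbol, its value, and the constant reward stream are illustrative assumptions, and the customized rule used in this work may differ.

```python
def discounted_return(rewards, gamma=0.9):
    """Return sum_k gamma**k * rewards[k], accumulated from the last reward backwards."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# A constant reward of 1 per step over a long horizon (made-up data).
rewards = [1.0] * 1000
undiscounted = sum(rewards)                         # grows linearly with the horizon
discounted = discounted_return(rewards, gamma=0.9)  # bounded by 1 / (1 - gamma) = 10
```

With `gamma < 1` and bounded rewards, the return stays finite however long the horizon is, and rewards further in the future contribute geometrically less than immediate ones.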

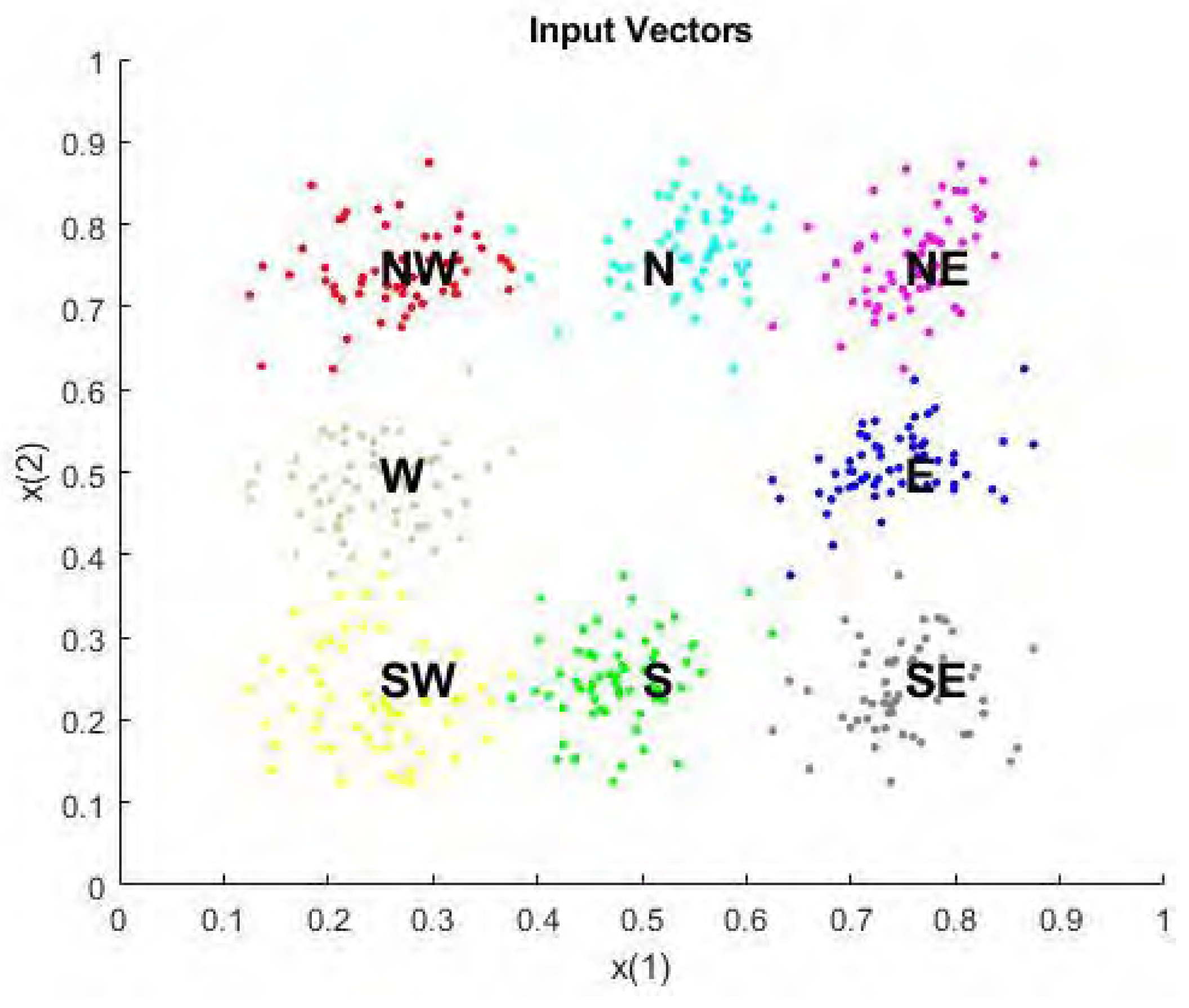

4.1.3. Competitive Learning

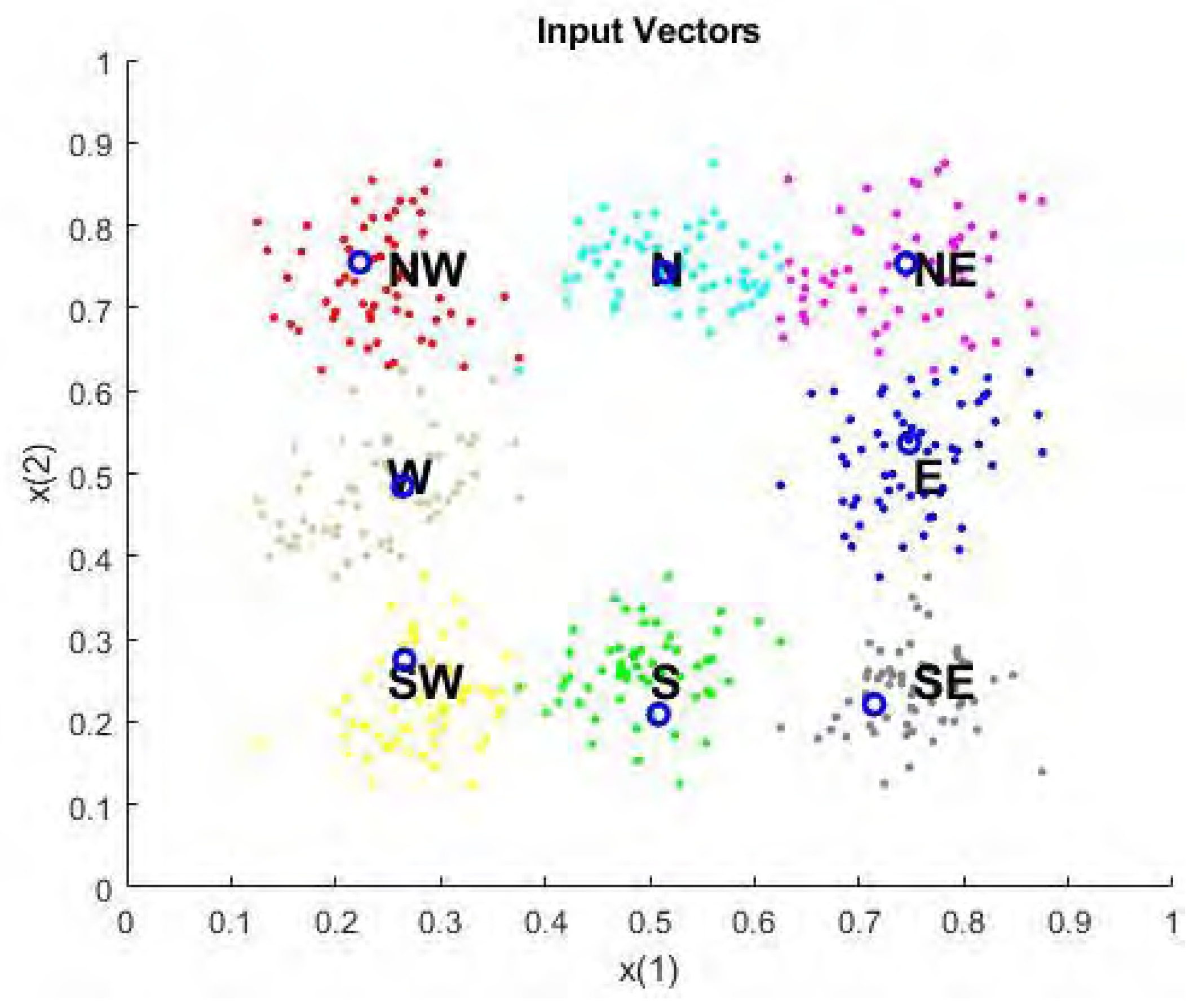

- First, we simulate (create) the eight horizon directions with a (custom) Matlab code (see Figure 4).

- Next, we set the number of training epochs and train the competitive layer (which may take several seconds). We then plot the updated layer weights on the same graph (Figure 5).

- Finally, we classify a new instance: given an input that is North-directed (e.g., with values spread around the XY coordinates of the ‘N’ direction), the network assigns it to the fifth cluster, which corresponds to North, most of the time.
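The three steps above can be sketched in NumPy as a plain winner-take-all competitive layer. This is a stand-in for the custom Matlab code, not a port of it. The noise level, learning rate, epoch count, and small-circle weight initialization (chosen here to reduce the chance of dead units) are assumptions, and all function names are hypothetical.

```python
import numpy as np


def make_direction_data(points_per_dir=50, noise=0.05, seed=0):
    """Noisy 2-D points around eight compass directions on the unit circle."""
    rng = np.random.default_rng(seed)
    angles = np.deg2rad(np.arange(8) * 45.0)  # E, NE, N, NW, W, SW, S, SE
    centers = np.column_stack([np.cos(angles), np.sin(angles)])
    data = np.repeat(centers, points_per_dir, axis=0)
    return data + rng.normal(scale=noise, size=data.shape), centers


def train_competitive_layer(data, n_units=8, epochs=20, lr=0.02, seed=0):
    """Winner-take-all learning: only the closest unit moves toward each sample."""
    rng = np.random.default_rng(seed)
    # Assumed initialization: units spread on a small circle, in random order.
    angles = rng.permutation(n_units) * (2.0 * np.pi / n_units)
    weights = 0.1 * np.column_stack([np.cos(angles), np.sin(angles)])
    for _ in range(epochs):
        for x in rng.permutation(data):  # shuffle samples every epoch
            winner = np.argmin(np.linalg.norm(weights - x, axis=1))
            weights[winner] += lr * (x - weights[winner])
    return weights


def predict_cluster(weights, x):
    """Index of the unit whose weight vector is closest to x."""
    return int(np.argmin(np.linalg.norm(weights - x, axis=1)))


data, centers = make_direction_data()
weights = train_competitive_layer(data)
north_cluster = predict_cluster(weights, np.array([0.0, 1.0]))  # North-directed input
```

Which cluster index ends up representing North depends on the initialization and the presentation order, so the index returned here need not match the fifth cluster reported in the text.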

4.2. Game-theoretic Learning Approach

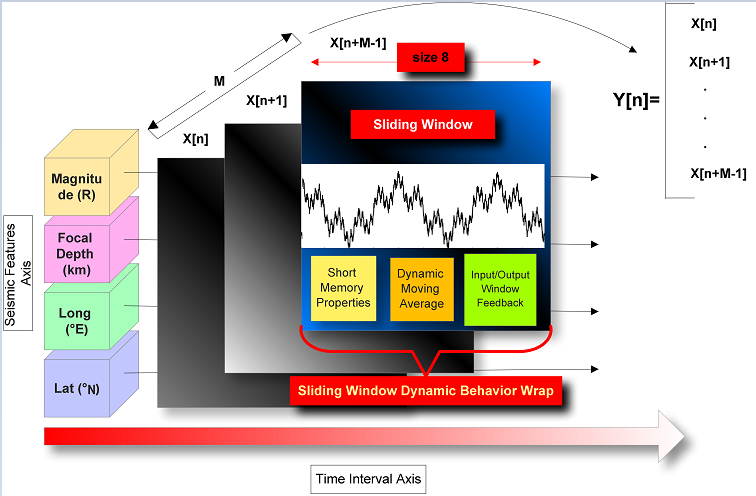

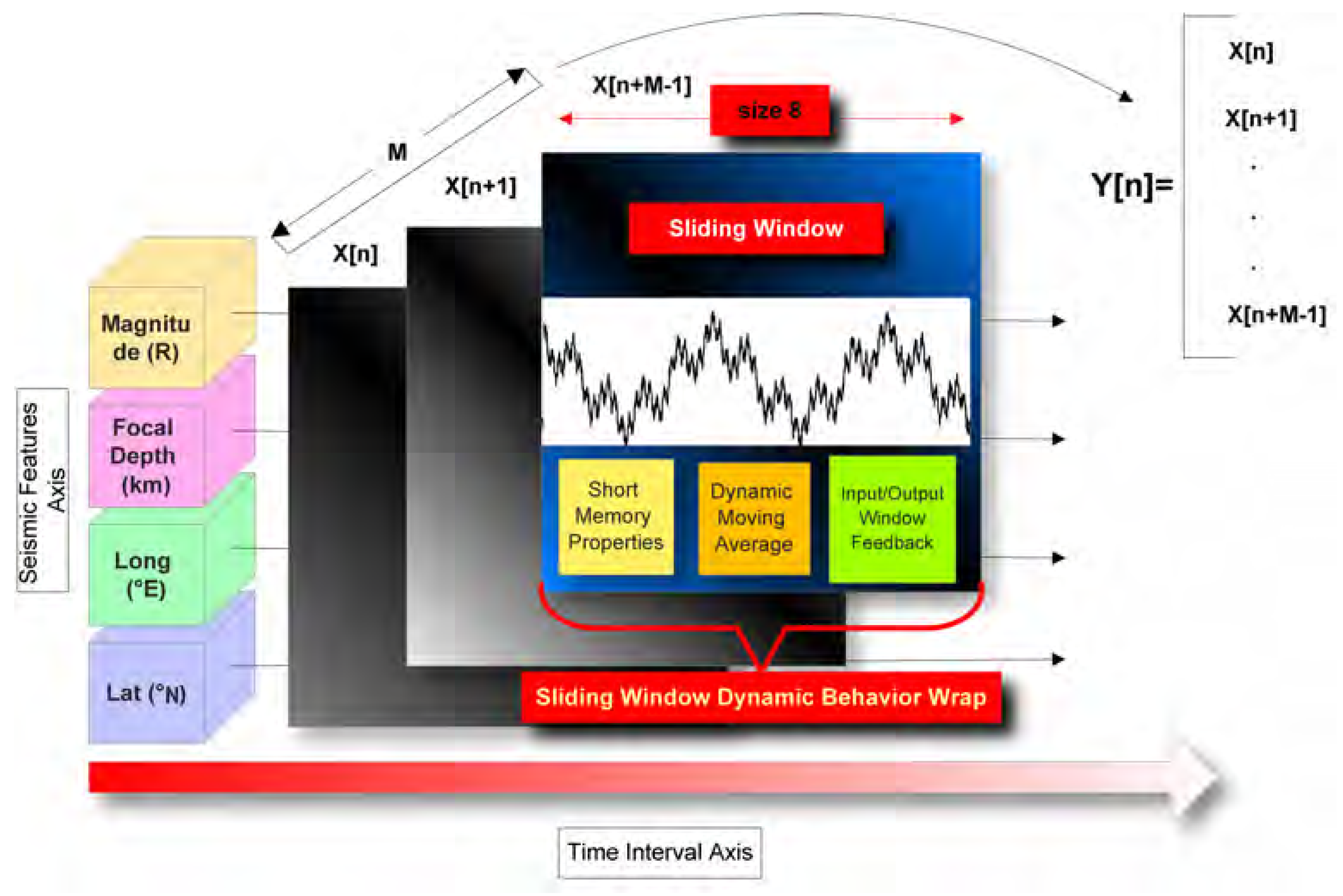

4.3. Sliding-Window Learning Approach

5. Evaluation Results

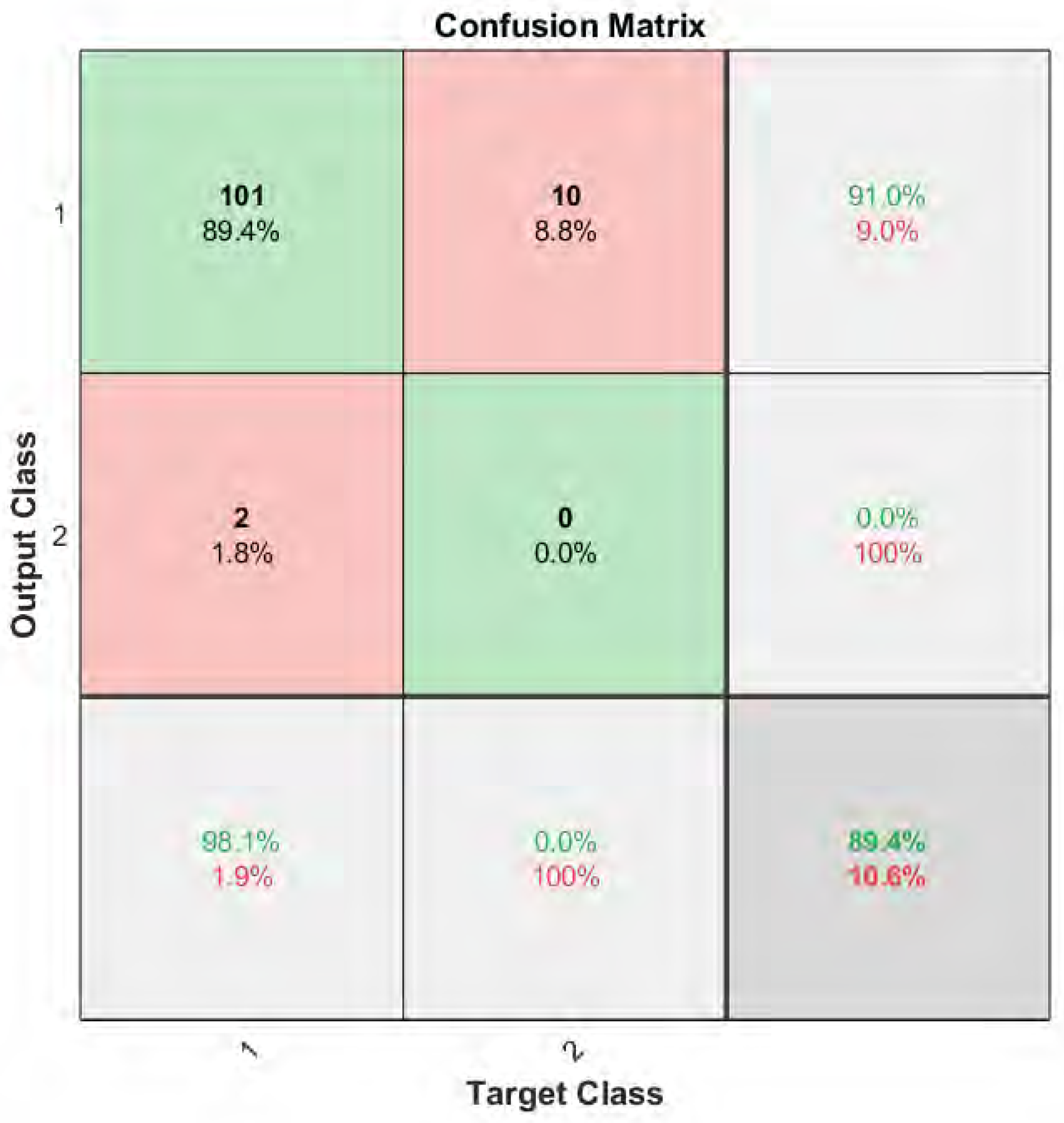

5.1. Performance Evaluation Metrics

- True Positives (TP): The number of times the model correctly forecasts the occurrence of an earthquake within the following experimental time frame.

- True Negatives (TN): The number of times the model correctly forecasts that there will be no earthquake during the following experimental time frame.

- False Positives (FP): The number of times the model incorrectly forecasts the occurrence of an earthquake within the following experimental time frame.

- False Negatives (FN): The number of times the model incorrectly forecasts that there will be no earthquake during the following experimental time frame.

| | Predicted seismic condition is positive | Predicted seismic condition is negative |
|---|---|---|
| Actual seismic condition is positive | True Positive (TP) | False Negative (FN) |
| Actual seismic condition is negative | False Positive (FP) | True Negative (TN) |
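The four counts in the table can be tallied from paired actual and predicted labels, and the usual 2 x 2 measures follow from them. The sketch below is illustrative only: the label sequences are made-up data, and the particular measures shown (accuracy, precision, recall, specificity) are assumed to be of interest rather than taken from the evaluation itself.

```python
def confusion_counts(actual, predicted):
    """Count TP, TN, FP, FN from paired binary labels (1 = earthquake, 0 = none)."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if a and p:
            tp += 1
        elif not a and not p:
            tn += 1
        elif p:        # predicted an event that did not occur
            fp += 1
        else:          # missed an event that did occur
            fn += 1
    return tp, tn, fp, fn


def derived_measures(tp, tn, fp, fn):
    """Common measures derived from a 2 x 2 table; NaN when a denominator is zero."""
    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


# Made-up labels for eight consecutive time frames.
actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0, 1, 0]
tp, tn, fp, fn = confusion_counts(actual, predicted)  # (3, 3, 1, 1)
measures = derived_measures(tp, tn, fp, fn)           # every measure equals 0.75 here
```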

5.2. Forecast Results

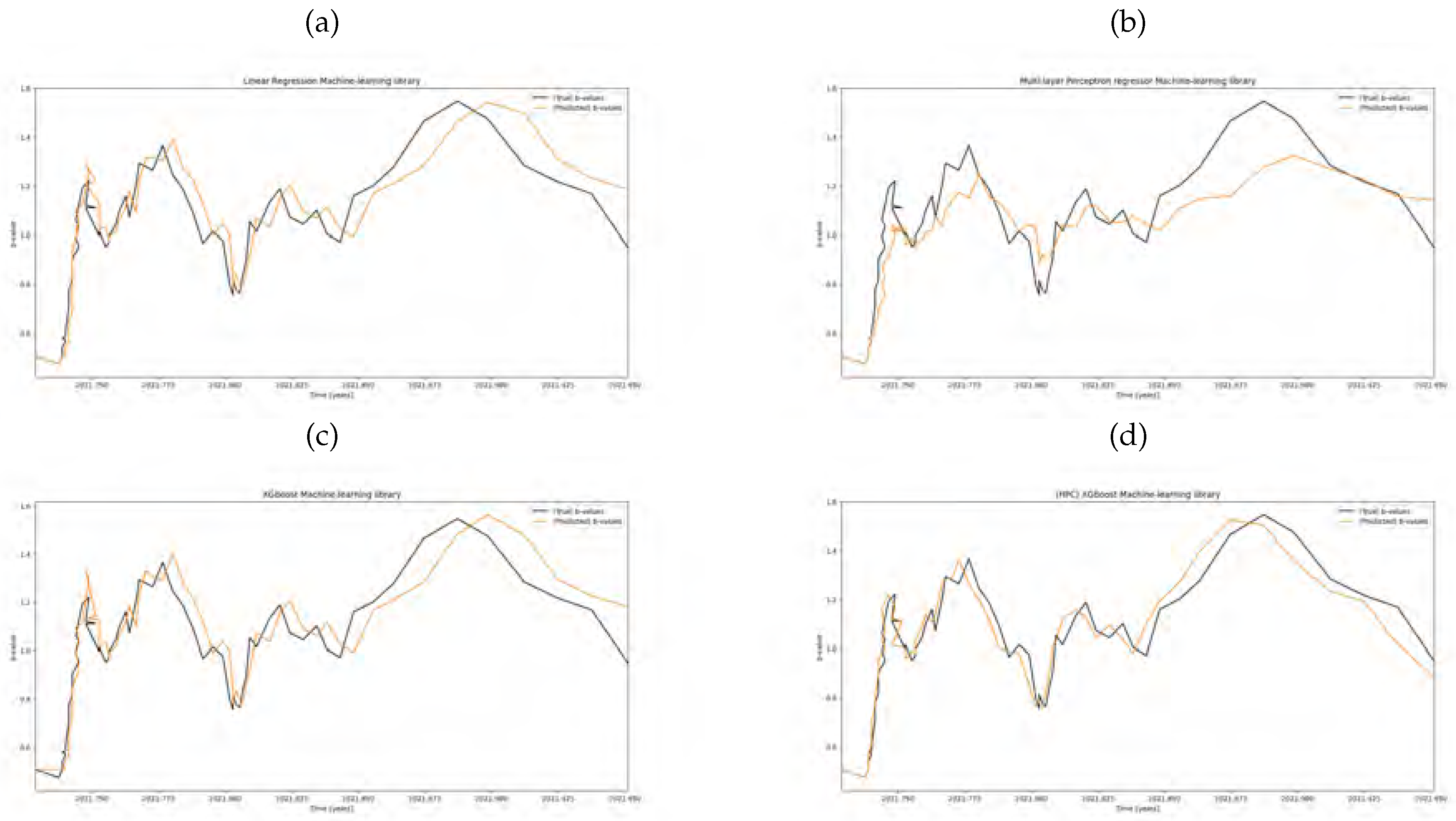

5.2.1. Predicting the next-day GR-law value

5.2.2. Predicting future GR-law values

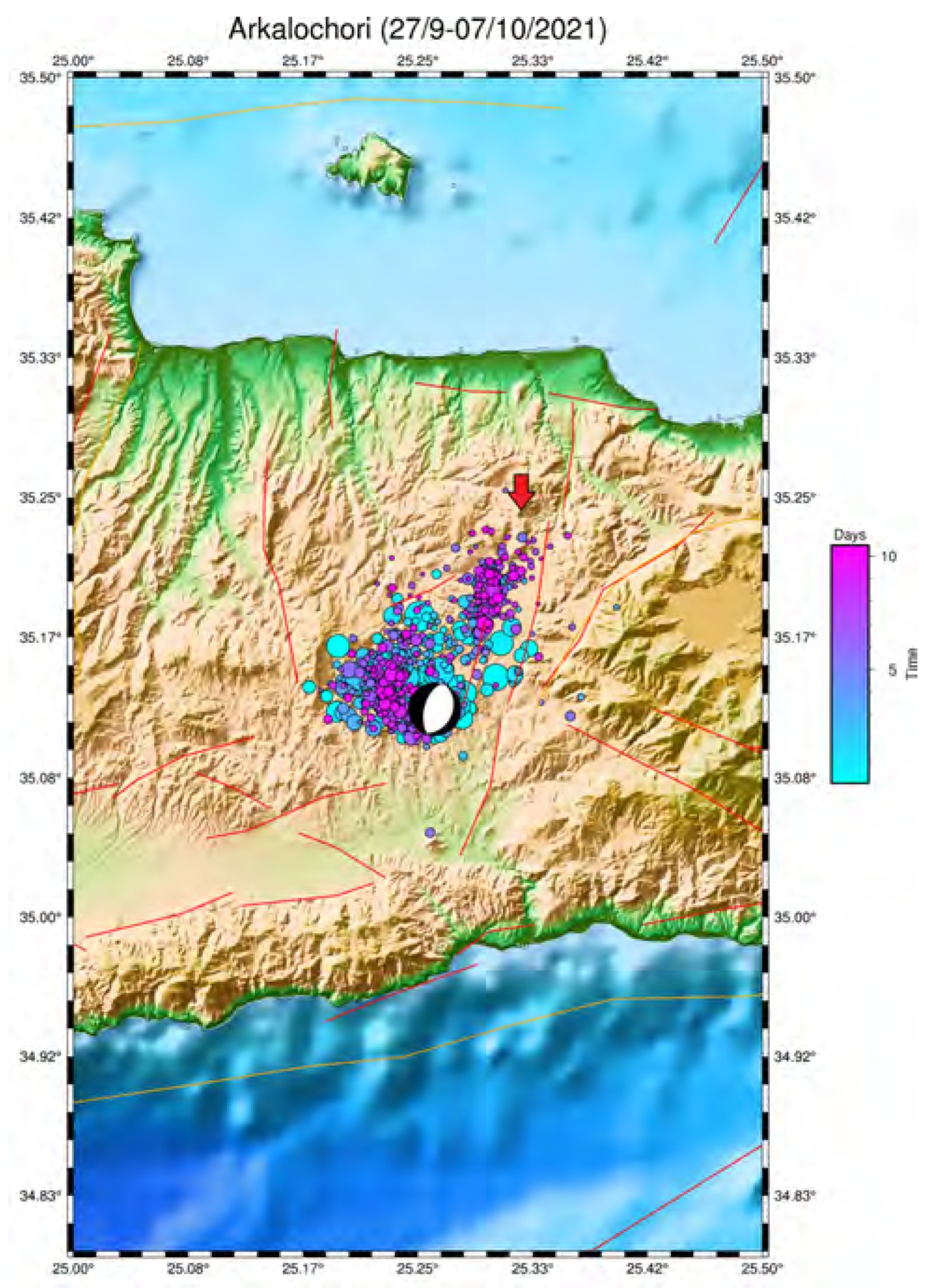

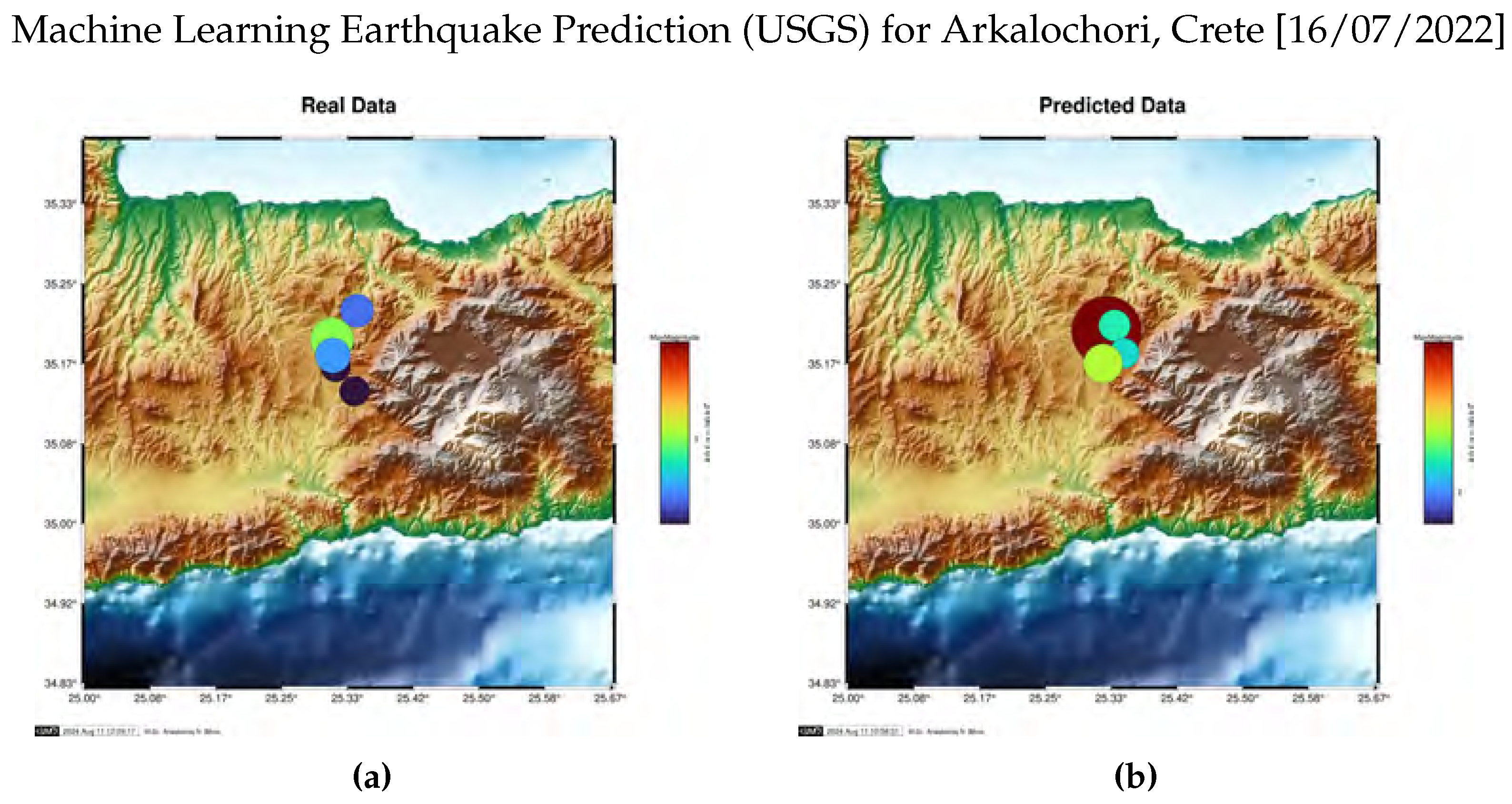

5.2.3. Next-Day Seismic Prediction for Arkalochori, Crete, Greece

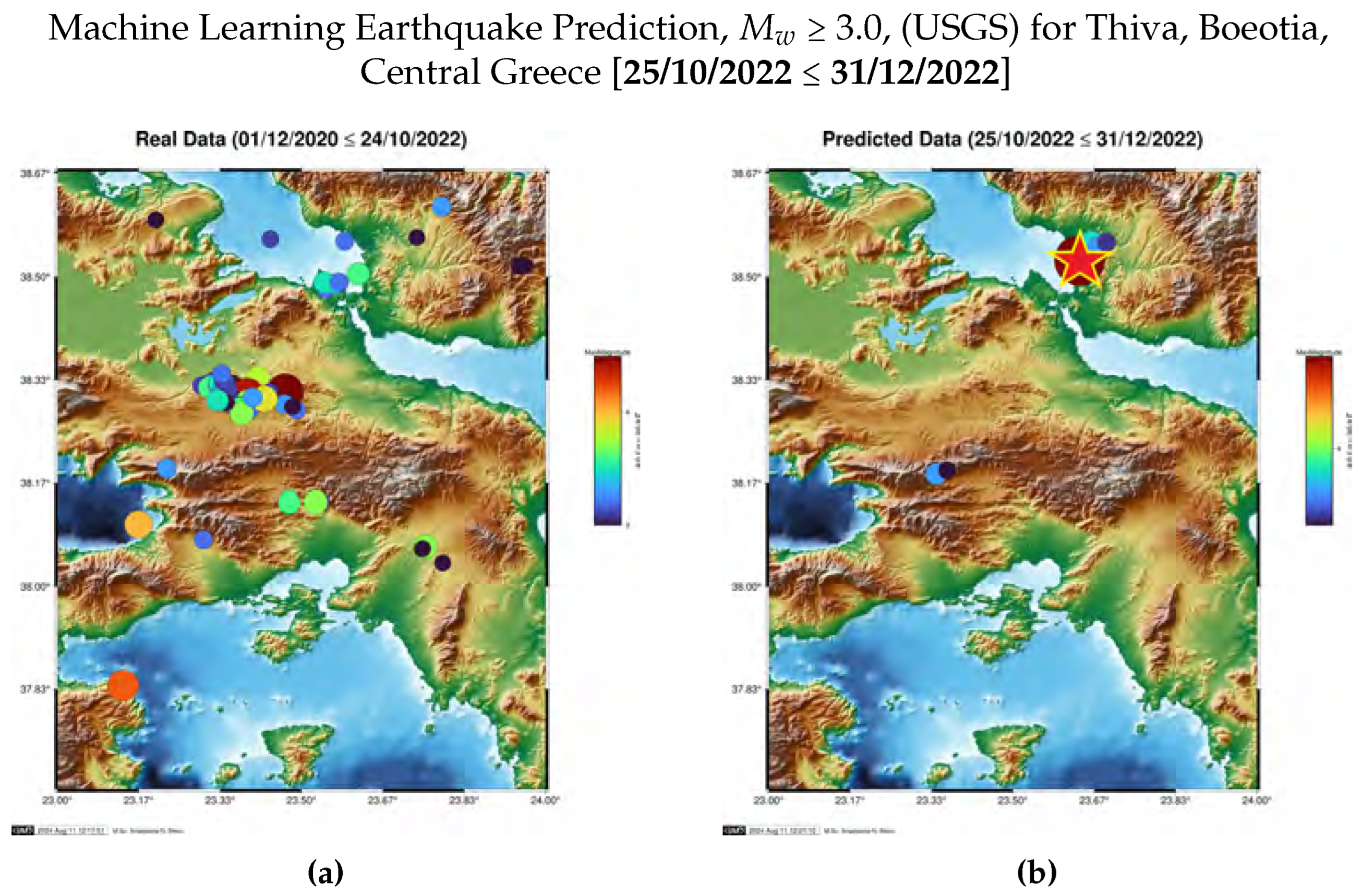

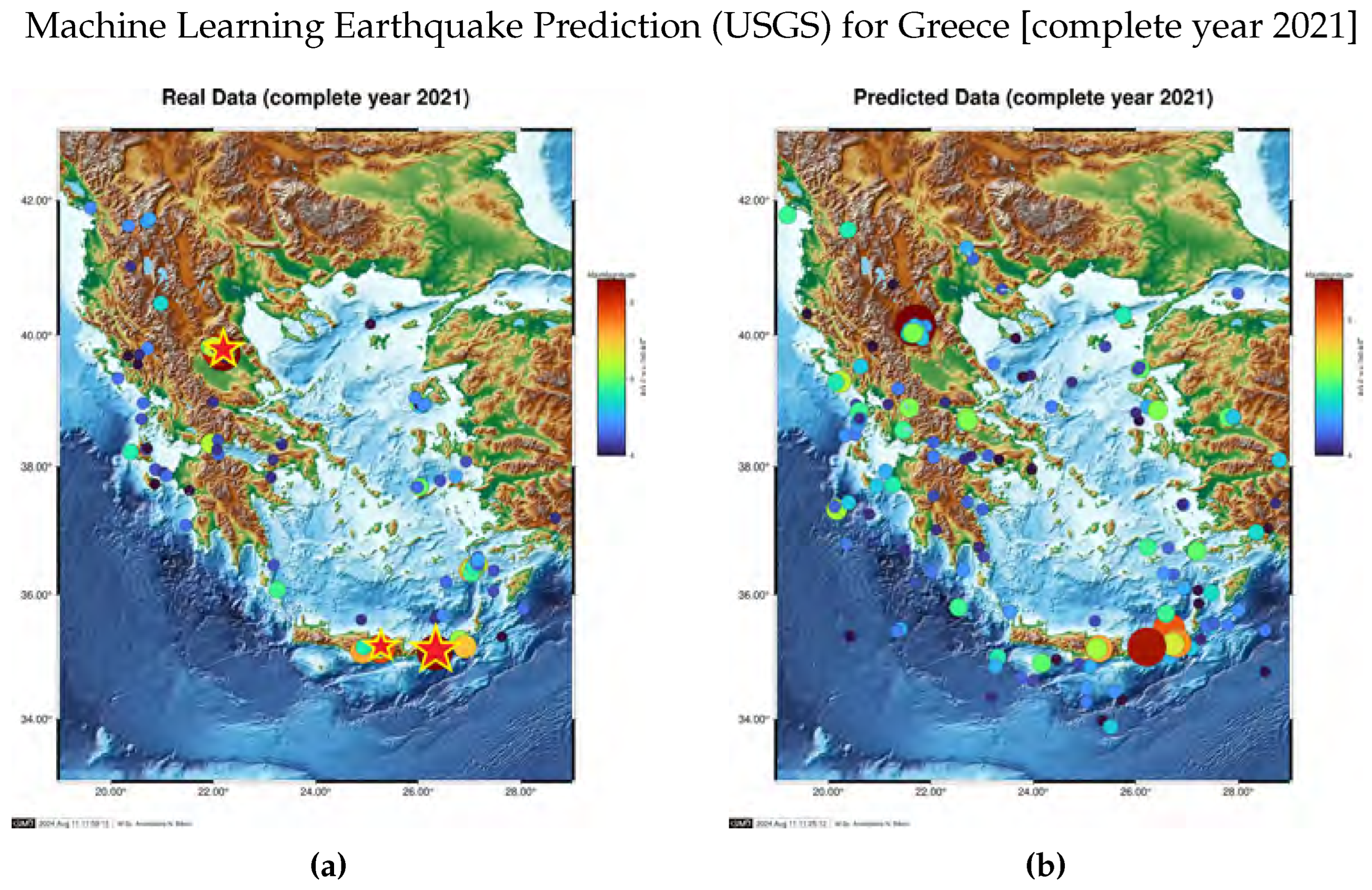

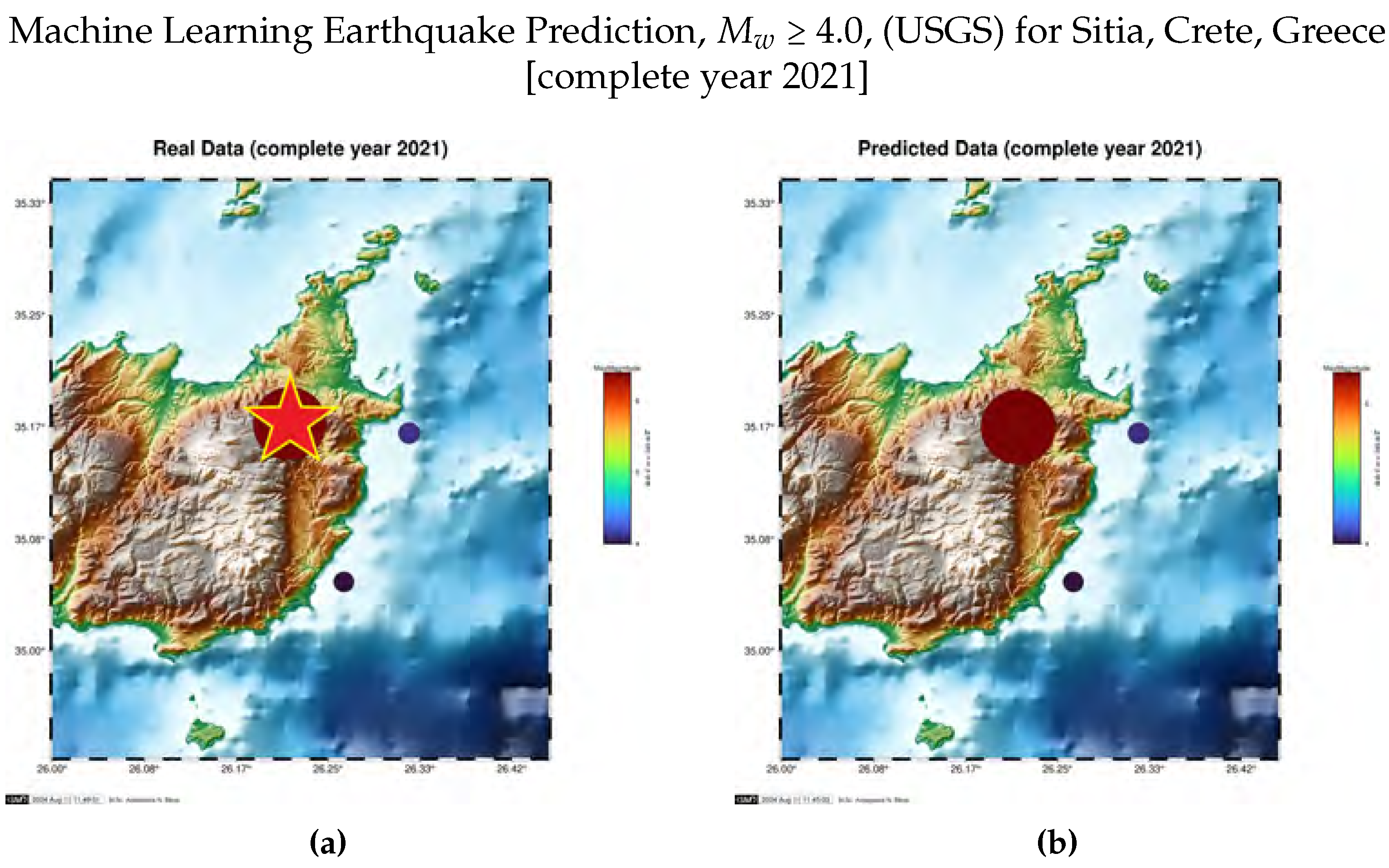

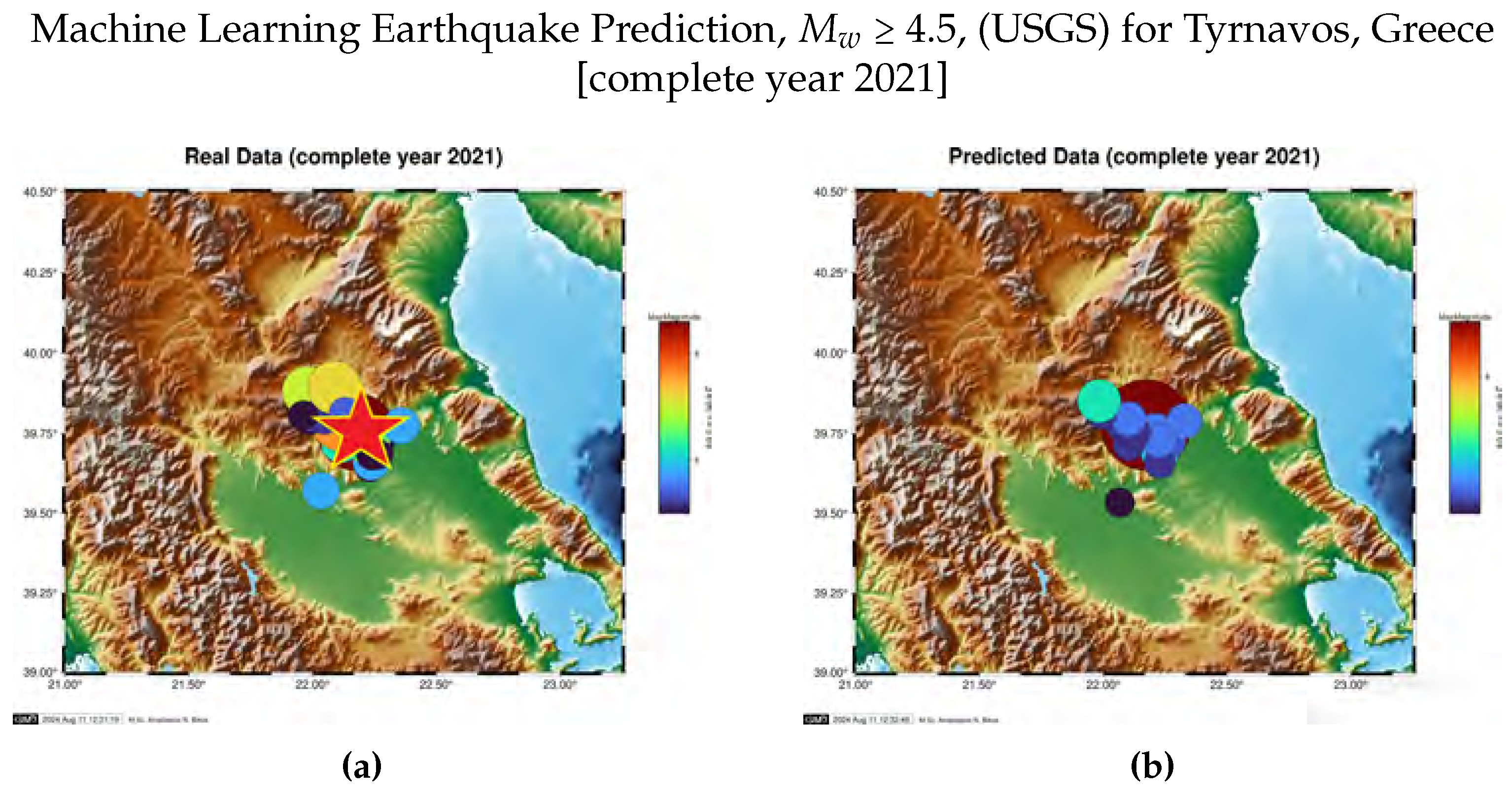

5.2.4. Long-Term Seismic Prediction

| | Lat (°N) | Long (°E) | Focal Depth (km) | Magnitude (R) |
|---|---|---|---|---|
| Predicted data* | 38.5267 | 23.6367 | 8.0 | 5.1 |
| Real data | 38.5652 | 23.6906 | 13.0 | 4.9 |
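The gap between the two rows of the table can be quantified directly. The sketch below computes the great-circle (haversine) distance between the predicted and real epicenters, along with the absolute depth and magnitude differences. The spherical-Earth radius of 6371 km and the choice of these three error measures are assumptions for illustration, not necessarily the error analysis carried out in this work.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2.0 * radius_km * math.asin(math.sqrt(a))


# Values copied from the table above.
predicted = {"lat": 38.5267, "lon": 23.6367, "depth_km": 8.0, "mag": 5.1}
real = {"lat": 38.5652, "lon": 23.6906, "depth_km": 13.0, "mag": 4.9}

epicentral_error_km = haversine_km(
    predicted["lat"], predicted["lon"], real["lat"], real["lon"]
)
depth_error_km = abs(predicted["depth_km"] - real["depth_km"])
magnitude_error = abs(predicted["mag"] - real["mag"])
```

For these values the epicenters are roughly 6 km apart, the focal depth differs by 5.0 km, and the magnitude differs by about 0.2.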

5.2.5. Error Validation Analysis

6. Discussion and Future Work

7. Conclusions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| LSTM | Long Short-Term Memory |
| SW | Sliding Window |
| XGBoost | eXtreme Gradient Boosting |
| EQ | Earthquake |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).