Introduction

Soaring demand for sustainable energy solutions has solidified solar panels as a leading technology for harnessing renewable energy. While solar energy presents a clean and abundant alternative, its intermittent nature introduces challenges in maintaining a consistent balance between energy consumption and production. This research endeavors to address this challenge by integrating machine learning techniques with climatic parameters to predict solar panel energy generation, with a specific focus on the unique environmental context of Estonia.

Previous research often focuses on predicting solar radiation, but a broader understanding is needed. Beyond sunlight intensity, factors such as cell health, cell type, configuration, angle of incidence, and weather conditions all affect the output power of a photovoltaic (PV) module. Notably, the temperature of the solar cell within a PV system is one such critical factor that significantly impacts the amount of power produced. In contrast to the prevailing emphasis on solar radiation, this research examines the broader spectrum of influences on PV module output power, offering a more comprehensive understanding of the complexities involved in predicting and optimizing solar energy generation [1].

Solar cell temperature does not depend on sunlight alone: ambient temperature, wind speed, and humidity all play a role. For this reason, researchers have explored weather data and weather classification to predict PV output power. Shi et al. [2] categorized weather into clear-sky, cloudy, foggy, and rainy conditions, and combined this classification with historical power data and Support Vector Machines (SVMs) to develop a one-day-ahead forecasting model for a single location in China. This approach demonstrates the potential of weather data for solar power prediction. Building on the importance of accurate weather data, Sangrody et al. [3] investigated uncertainties in weather forecasts for solar power prediction. Their research compared predicted and observed weather data, identified the most influential weather variables, and analyzed how data errors affect forecasting accuracy. This emphasizes the need for high-quality weather data in solar power forecasting models, a factor our proposed machine learning approach will address.

Ref. [4] investigated and compared three stacking techniques for integrating the prediction outputs of base learners; the techniques encompassed feed-forward neural networks, support vector regressors, and k-nearest neighbor regressors. Ref. [5] introduced a machine learning approach that combines Kernel Principal Component Analysis (Kernel PCA) with XGBoost to improve the accuracy of one-hour-ahead solar power predictions. That model integrates deterministic Weather Research and Forecasting (WRF) data obtained from the Taiwan Central Weather Bureau (CWB) and uses XGBoost, constructed as an ensemble of decision trees, to produce its forecasts. Ref. [6] employed various algorithmic combinations to demonstrate their predictive efficacy in contrast to a conventional Multilayer Perceptron (MLP) and a physical forecasting model, focusing on forecasting the energy output of 21 solar power plants. Ref. [7] introduced a hybrid model that combines a Long Short-Term Memory (LSTM) network and a Convolutional Neural Network (CNN) for accurate prediction of stable power generation.

The objective of this study is a comparative analysis of time series prediction models for solar energy production. Specifically, we evaluate the performance of XGBoost, LSTM, and Bi-LSTM models in forecasting solar energy generation. This comparative assessment seeks to identify the strengths and weaknesses of each model, using established models from the machine learning domain to clarify their applicability and effectiveness in predicting solar energy output. The outcomes are intended to inform future developments in renewable energy forecasting methodologies.

Methodology

To achieve the objectives of this study, a comprehensive methodology is employed, focusing on the comparison of different time series prediction models for solar energy production. The primary models under consideration include Long Short-Term Memory (LSTM) [8,9], XGBoost [10,11], and Bidirectional Long Short-Term Memory (Bi-LSTM) [12].

Data Collection

The dataset utilized in this study is sourced from Kaggle, a reputable platform known for its diverse and high-quality datasets. The Kaggle dataset selected contains relevant information on solar energy production, including key climatic variables such as sunlight intensity, temperature, and humidity.

Data Preprocessing

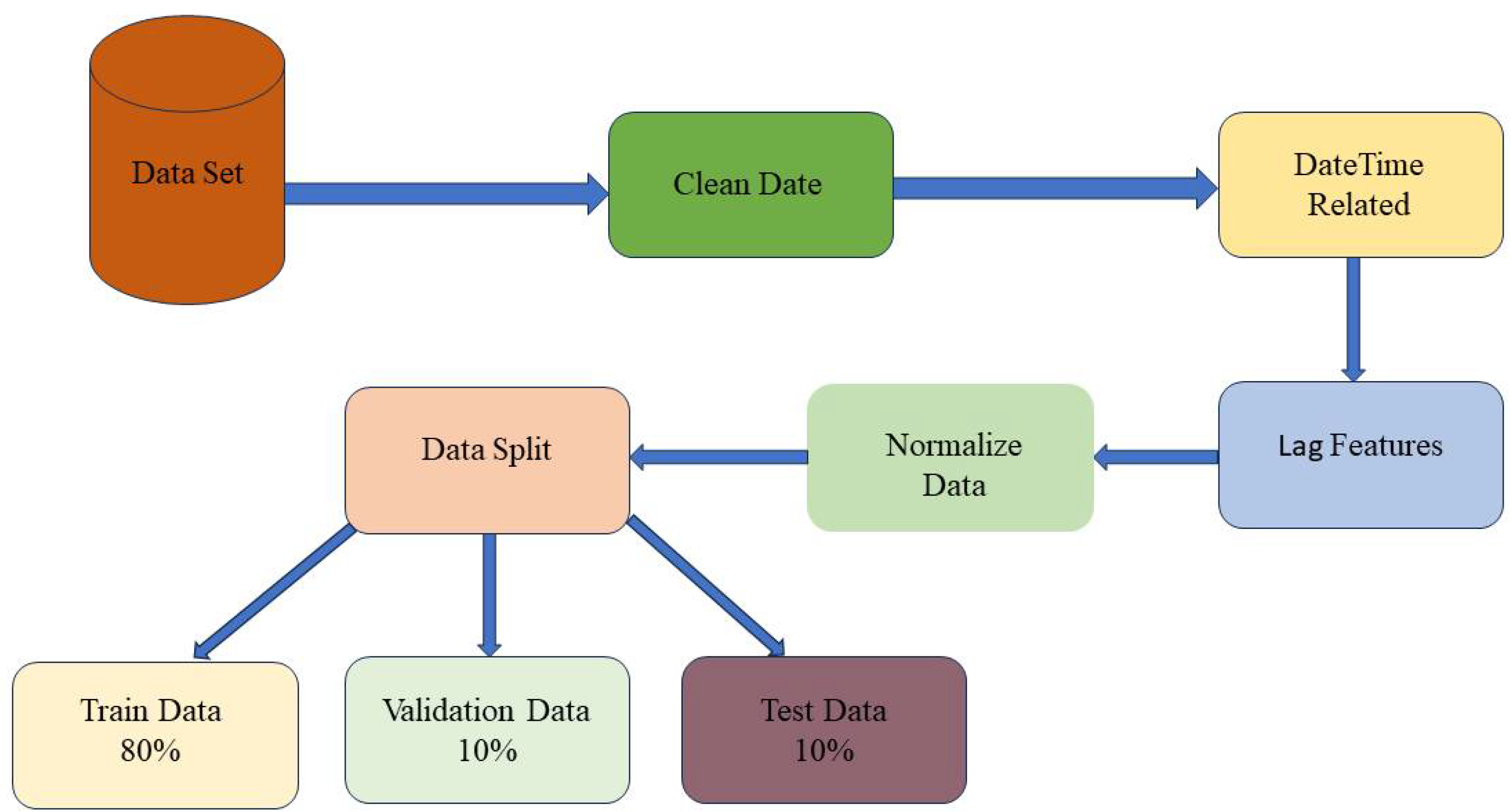

Preprocessing of the dataset involves handling missing values, normalizing the data, and extracting relevant features, with the aim of improving the quality and reliability of the model inputs. As the final step before modeling, the cleaned data are split into training (80%), test (10%), and validation (10%) sets, as shown in Figure 1.
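The split and normalization described above could be sketched as follows. This is a minimal illustration under assumptions that the text does not state: the rows are taken to be in time order, the three blocks are cut in the order train, test, validation, and min-max scaling is used with statistics fitted on the training block only. The function names and the synthetic array are hypothetical.

```python
import numpy as np

def chronological_split(data, train_frac=0.8, test_frac=0.1):
    """Cut time-ordered rows into train, test, and validation blocks (order assumed)."""
    n = len(data)
    n_train = int(n * train_frac)
    n_test = int(n * test_frac)
    train = data[:n_train]
    test = data[n_train:n_train + n_test]
    val = data[n_train + n_test:]
    return train, test, val

def fit_minmax(train):
    """Compute per-column minimum and range from the training block only."""
    lo = train.min(axis=0)
    hi = train.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant columns
    return lo, span

def apply_minmax(x, lo, span):
    """Scale x with statistics that were fitted on the training block."""
    return (x - lo) / span

# Synthetic stand-in for the cleaned dataset: 100 time steps, 3 features.
rng = np.random.default_rng(0)
data = rng.random((100, 3))

train, test, val = chronological_split(data)
lo, span = fit_minmax(train)
train_s = apply_minmax(train, lo, span)
test_s = apply_minmax(test, lo, span)
val_s = apply_minmax(val, lo, span)
```

Fitting the scaler on the training block alone keeps information from the held-out periods out of the model inputs, which is one common way to avoid leakage in time series work.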

Model Selection

The choice of models, including LSTM, XGBoost, and Bi-LSTM, is driven by their established efficacy in time series prediction. Each model will be configured and fine-tuned to cater specifically to the characteristics of solar energy generation data.

Long Short Term Memory (LSTM)

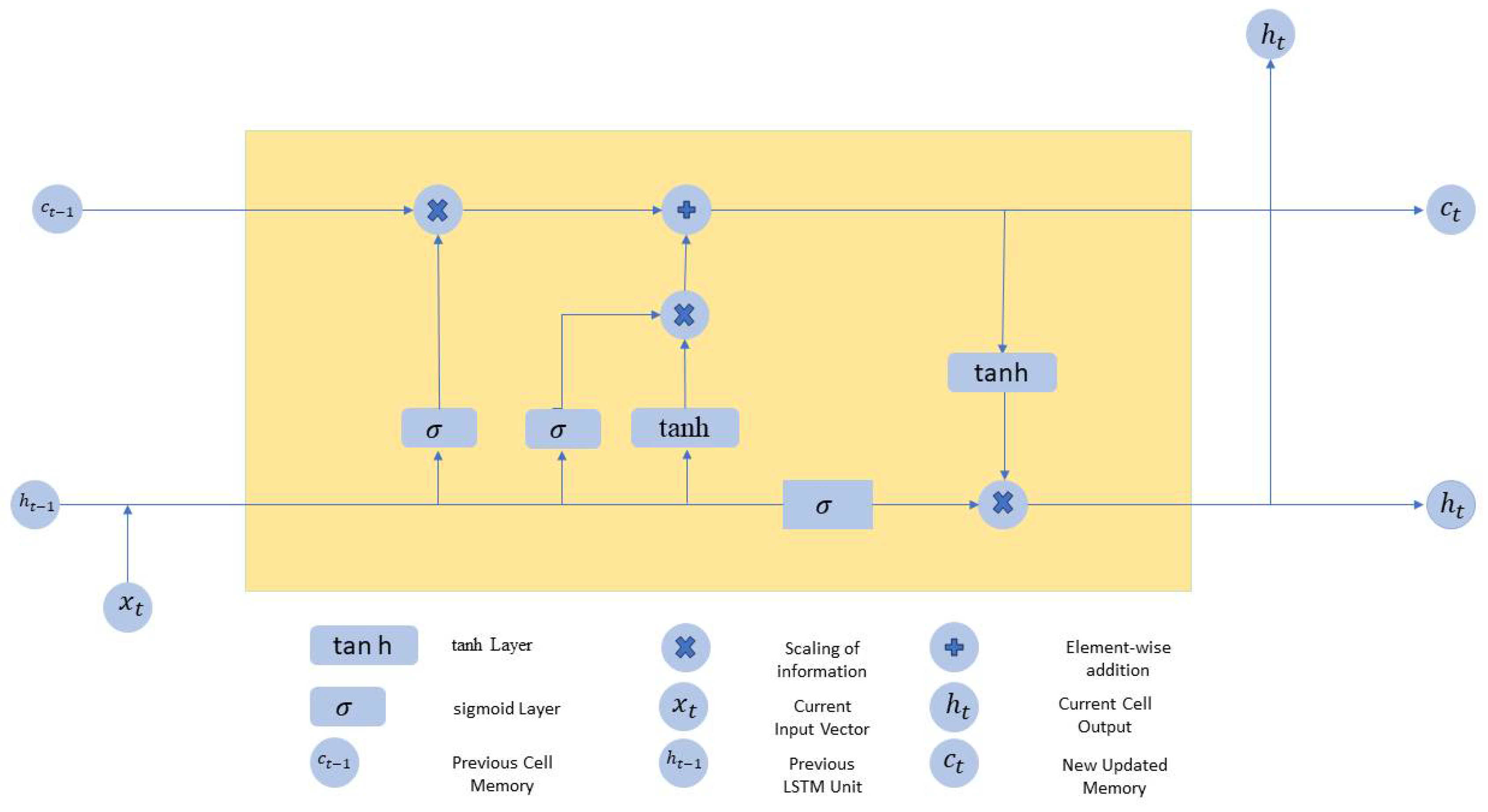

The Long Short-Term Memory (LSTM) model, a form of recurrent neural network (RNN), is well suited to sequential data and therefore to time series forecasting. LSTM incorporates a memory block responsible for retaining temporal information from the input data; this hidden-layer unit, known as the LSTM cell, is illustrated in Figure 2. In the context of predicting solar energy production, LSTM is valuable for its capacity to capture long-term dependencies and intricate patterns within historical data [13]. An LSTM cell comprises a cell state and three gates that regulate information flow: the input gate, the forget gate, and the output gate. This design enables LSTM to selectively retain and discard information from previous time steps, which helps the model represent complex temporal relationships. Because it learns sequential patterns over extended periods, LSTM can capture the influence of climatic factors such as sunlight intensity, temperature, and humidity on energy output over time, covering both short-term fluctuations and long-term trends.

The LSTM model is trained on historical time series data, where the input features are the relevant climatic variables and the target is the corresponding solar energy production. Throughout training, the model adjusts its parameters to minimize the disparity between its predictions and the actual output, refining its capacity to generalize and make precise forecasts on unseen data [14]. Tuning hyperparameters, including the number of LSTM layers, the number of hidden units, and the learning rate, is pivotal for optimizing the model's performance, and iterative experimentation with different configurations helps identify the settings that yield the most accurate predictions. Once trained, the LSTM model generates predictions of future solar energy production from new input data. Its ability to carry information forward from prior time steps lets it adapt to changing climatic conditions.
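To make the gate mechanism concrete, the following is a minimal NumPy sketch of one forward step of a standard LSTM cell, applied over a short synthetic sequence. It is not the implementation used in this study; the weight layout (gates stacked in the order input, forget, output, candidate), the dimensions, and the random inputs are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step. Shapes: W (4H, D), U (4H, H), b (4H,)."""
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much cell state to expose
    g = np.tanh(z[3 * H:4 * H])    # candidate values for the cell state
    c_t = f * c_prev + i * g       # updated cell state (long-term memory)
    h_t = o * np.tanh(c_t)         # updated hidden state (cell output)
    return h_t, c_t

# Synthetic example: 5 time steps of 3 features, 4 hidden units.
rng = np.random.default_rng(0)
D, H, T = 3, 4, 5
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h = np.zeros(H)
c = np.zeros(H)
sequence = rng.random((T, D))
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
```

The cell state update `f * c_prev + i * g` is where selective retention happens: a forget gate near 1 carries earlier information forward largely unchanged, while a value near 0 discards it.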

Bidirectional Long Short Term Memory (BiLSTM)

The Bidirectional Long Short-Term Memory (Bi-LSTM) model extends the traditional LSTM architecture by introducing bidirectional information flow. This enhancement enables the Bi-LSTM model to consider both past and future information simultaneously, thereby improving its capability to capture nuanced patterns in time series data [15].

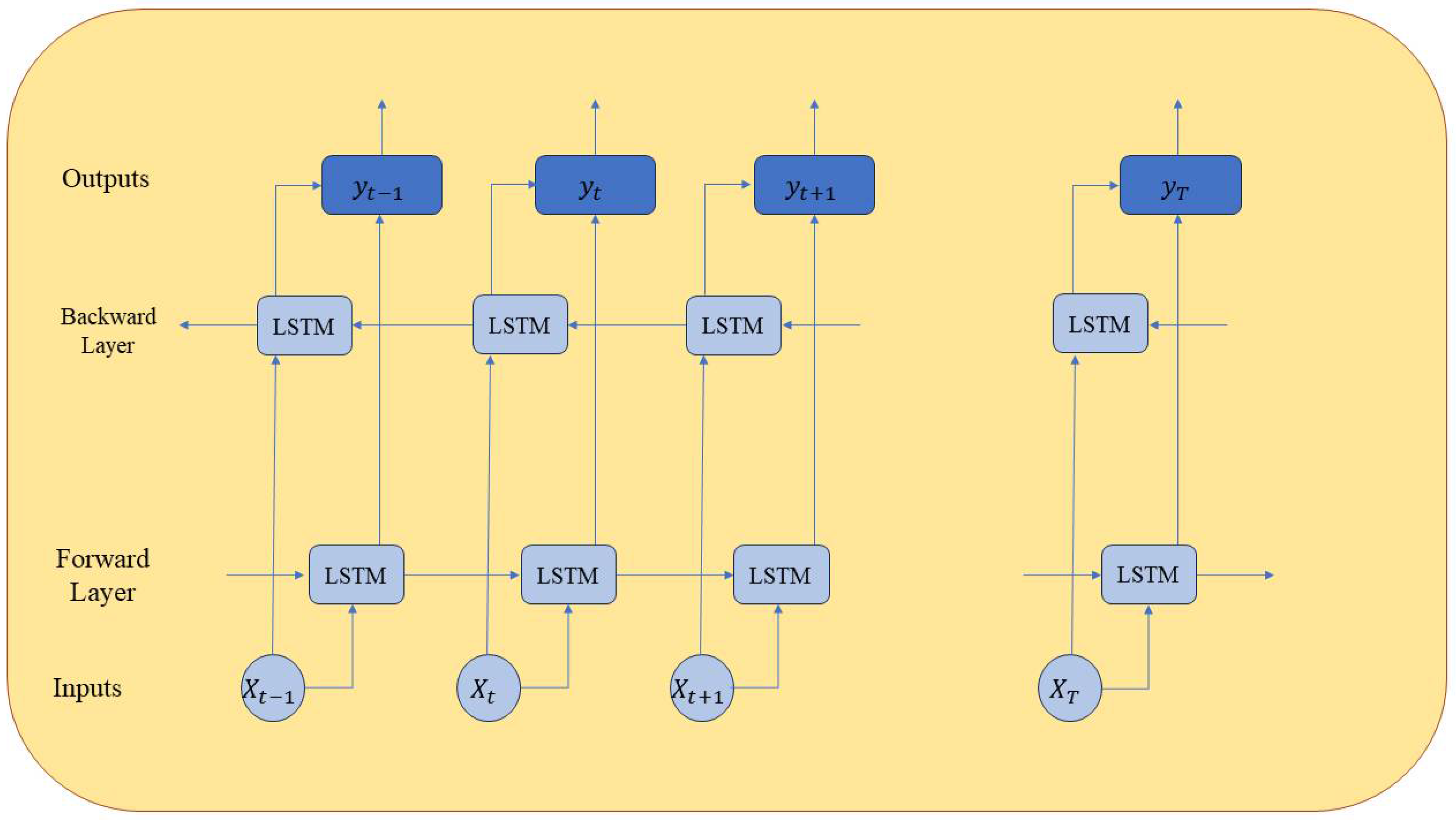

The Bidirectional LSTM (Bi-LSTM) depicted in Figure 3 operates in two time directions. This is achieved by connecting the neurons of two separate hidden layers to a single output layer. In contrast to a unidirectional LSTM, Bi-LSTM processes the input sequence in both the forward and the backward direction, so that the representation at each time step reflects earlier as well as later steps of the sequence. Here, future information refers to later time steps within the input window supplied to the model; values beyond the end of that window are not available at prediction time. Like LSTM, Bi-LSTM uses memory cells and gates to regulate information flow. The bidirectional structure introduces two sets of memory cells, one processing the sequence forward and the other backward, which enhances the model's capacity to capture complex dependencies in both directions [16].

The training process for Bi-LSTM is similar to that of LSTM and involves adjusting the model parameters on historical time series data. Tuning hyperparameters, such as the number of layers and the number of hidden units in the forward and backward directions, is essential to optimize the model's performance. In solar energy forecasting, the bidirectional context helps the model adjust its predictions in response to variations in sunlight intensity, temperature, and humidity. In summary, Bi-LSTM augments the LSTM architecture with an improved capacity to capture temporal dependencies in both directions, which makes it a strong candidate for time series forecasting of solar energy production.
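The two-direction processing can be sketched in a few lines. To keep the example short, the code below uses a plain tanh recurrent layer in place of full LSTM cells, which is a simplification and not the architecture of Figure 3; all names, sizes, and weights are hypothetical. The point it illustrates is the wiring: one pass runs forward, one pass runs over the reversed sequence, and the two hidden states are concatenated per time step.

```python
import numpy as np

def rnn_pass(seq, W, U):
    """Run a simple tanh recurrent layer over seq and return every hidden state."""
    h = np.zeros(U.shape[0])
    states = []
    for x_t in seq:
        h = np.tanh(W @ x_t + U @ h)
        states.append(h)
    return np.stack(states)

def bidirectional_pass(seq, fwd_params, bwd_params):
    """Concatenate forward-in-time and backward-in-time hidden states per step."""
    h_fwd = rnn_pass(seq, *fwd_params)
    # Process the reversed sequence, then flip back so index t aligns with h_fwd[t].
    h_bwd = rnn_pass(seq[::-1], *bwd_params)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=1)

# Synthetic example: a window of 4 time steps with 3 features, 4 hidden units.
rng = np.random.default_rng(0)
D, H, T = 3, 4, 4
fwd = (rng.normal(scale=0.5, size=(H, D)), rng.normal(scale=0.5, size=(H, H)))
bwd = (rng.normal(scale=0.5, size=(H, D)), rng.normal(scale=0.5, size=(H, H)))
seq = rng.random((T, D))

out = bidirectional_pass(seq, fwd, bwd)  # shape (T, 2 * H)
```

In this sketch, changing the last row of `seq` leaves the forward half of `out[0]` unchanged but alters its backward half, which is exactly the sense in which the first time step "sees" later steps of the window.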

XGBoost Model

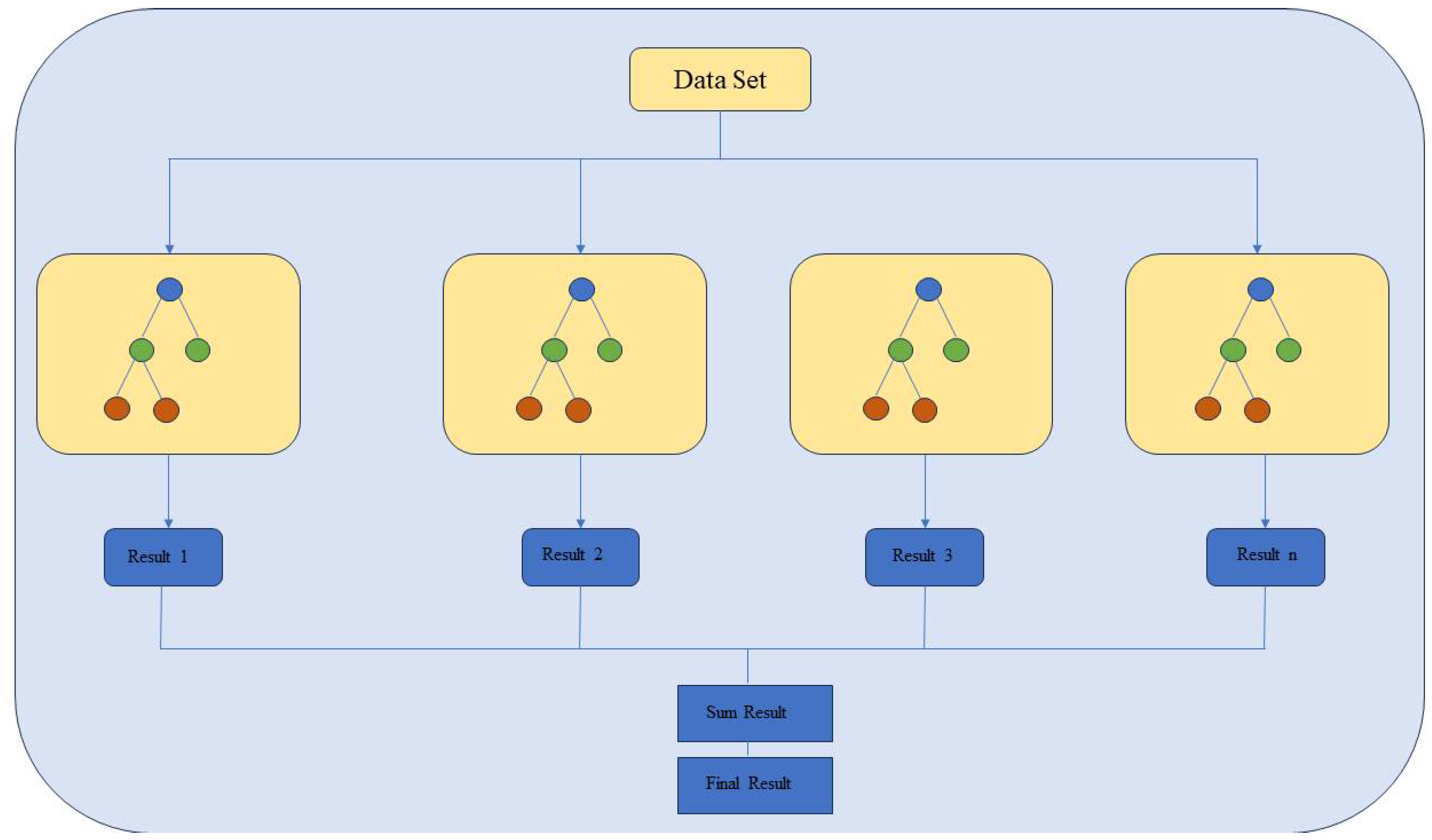

The XGBoost model, short for Extreme Gradient Boosting, is an ensemble learning algorithm known for its versatility and efficiency in handling diverse data types, including time series data. In the context of predicting solar energy production, XGBoost is employed as a robust alternative to neural network-based approaches, offering interpretability and scalability. XGBoost belongs to the family of gradient boosting algorithms, which construct a strong predictive model by combining the outputs of multiple weak learners, typically decision trees. Each decision tree is trained sequentially to correct the errors of its predecessors, leading to a robust and accurate ensemble model [17]. The structure of XGBoost is shown in Figure 4.

One notable feature of XGBoost is its capability to assess the importance of different input features. In solar energy forecasting, it can highlight the significance of climatic variables such as sunlight intensity, temperature, and humidity for the energy output, which aids in understanding the key drivers of solar energy production. XGBoost also captures nonlinear relationships within the data, providing a flexible framework for cases where the interaction between climatic variables and energy output is nonlinear. The core of XGBoost's power lies in its ensemble of decision trees: the trees collectively contribute to the model's predictive accuracy, with each tree focusing on different aspects of the input data, so that the combination captures diverse patterns and trends.

The performance of the XGBoost model is heavily influenced by its hyperparameter settings. Tuning parameters such as the learning rate, the maximum tree depth, and the regularization terms is crucial for optimizing its predictive capabilities for solar energy production forecasting. In summary, the XGBoost model offers a distinct approach to time series prediction that uses ensemble learning to capture complex relationships within solar energy production data. Its interpretability, regularization mechanisms, and ability to handle nonlinearities make it a useful point of comparison with the neural models in the context of renewable energy forecasting.
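The sequential error-correcting idea behind gradient boosting can be illustrated with a deliberately simplified sketch. The code below is plain gradient boosting with squared-error loss and one-split trees (stumps) on a single feature; it is not XGBoost itself, which adds regularization, second-order gradient information, and many engineering optimizations. The data are synthetic and all function names are hypothetical.

```python
import numpy as np

def fit_stump(x, residual):
    """Find the single threshold on 1-D x that best fits residual in squared error."""
    best = None
    for thr in np.unique(x)[:-1]:           # skip the maximum so both sides are non-empty
        left = x <= thr
        left_val = residual[left].mean()
        right_val = residual[~left].mean()
        err = np.sum((residual - np.where(left, left_val, right_val)) ** 2)
        if best is None or err < best[0]:
            best = (err, thr, left_val, right_val)
    return best[1:]

def predict_stump(stump, x):
    thr, left_val, right_val = stump
    return np.where(x <= thr, left_val, right_val)

def fit_boosting(x, y, n_rounds=50, learning_rate=0.1):
    """Each round fits a stump to the current residuals and adds a damped correction."""
    base = y.mean()
    pred = np.full_like(y, base, dtype=float)
    stumps, losses = [], []
    for _ in range(n_rounds):
        residual = y - pred                  # negative gradient of the squared loss
        stump = fit_stump(x, residual)
        pred = pred + learning_rate * predict_stump(stump, x)
        stumps.append(stump)
        losses.append(float(np.mean((y - pred) ** 2)))
    return base, stumps, losses

# Synthetic nonlinear target to show that a sum of simple trees can follow a curve.
x = np.linspace(0.0, 1.0, 200)
y = np.sin(2.0 * np.pi * x)
base, stumps, losses = fit_boosting(x, y)
```

The recorded `losses` list is the training loss after each boosting round, the same kind of curve that is typically inspected when choosing the number of rounds and the learning rate.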

A comprehensive comparative analysis will be conducted to highlight the strengths and weaknesses of each model. Insights gained from this analysis will contribute to a nuanced understanding of the applicability of LSTM, XGBoost, and Bi-LSTM in the context of solar energy production forecasting. This robust methodology ensures a systematic and thorough exploration of the selected time series prediction models, providing valuable insights that contribute to the advancement of renewable energy forecasting practices.

Results and Discussion

The empirical evaluation of Bidirectional Long Short Term Memory (BiLSTM), Long Short Term Memory (LSTM) and XGBoost models for solar energy production forecasting offers nuanced insights into their respective performances. Evaluation metrics, including Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE), were employed to assess the predictive performance of each model. These metrics serve as quantitative measures to gauge the accuracy and reliability of each model's predictions.
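For reference, the two metrics can be computed as in the short sketch below. The function names and the four-value example are illustrative only and are not taken from the study's data.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean Absolute Error: average magnitude of the prediction errors."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

def rmse(y_true, y_pred):
    """Root Mean Squared Error: square root of the average squared error."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Illustrative values: the errors are 1, 0, -2, 0.
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 7.0]

example_mae = mae(y_true, y_pred)    # (1 + 0 + 2 + 0) / 4 = 0.75
example_rmse = rmse(y_true, y_pred)  # sqrt((1 + 0 + 4 + 0) / 4) = sqrt(1.25)
```

Because RMSE squares the errors before averaging, it penalizes large individual misses more heavily than MAE and is never smaller than MAE on the same predictions.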

Prediction Analysis

The evaluation of three distinct models, Bidirectional Long Short Term Memory (BiLSTM), Long Short Term Memory (LSTM) and XGBoost, for solar energy production forecasting revealed insightful patterns. LSTM exhibited commendable accuracy in capturing both short and long-term dependencies, proving effective in handling sequential data. BiLSTM, with its bidirectional architecture, demonstrated enhanced contextual understanding by considering both past and future information, particularly beneficial for scenarios where anticipating future trends is crucial. XGBoost, employing ensemble learning, excelled in handling nonlinear relationships within the data and provided valuable insights into the importance of climatic variables. Each model showcased specific strengths and considerations; LSTM's proficiency in sequential patterns, Bi-LSTM's enhanced contextual understanding, and XGBoost's robustness in capturing nonlinearities. The choice among these models depends on the specific characteristics of the forecasting task and dataset, offering a diverse set of tools for accurate solar energy production predictions.

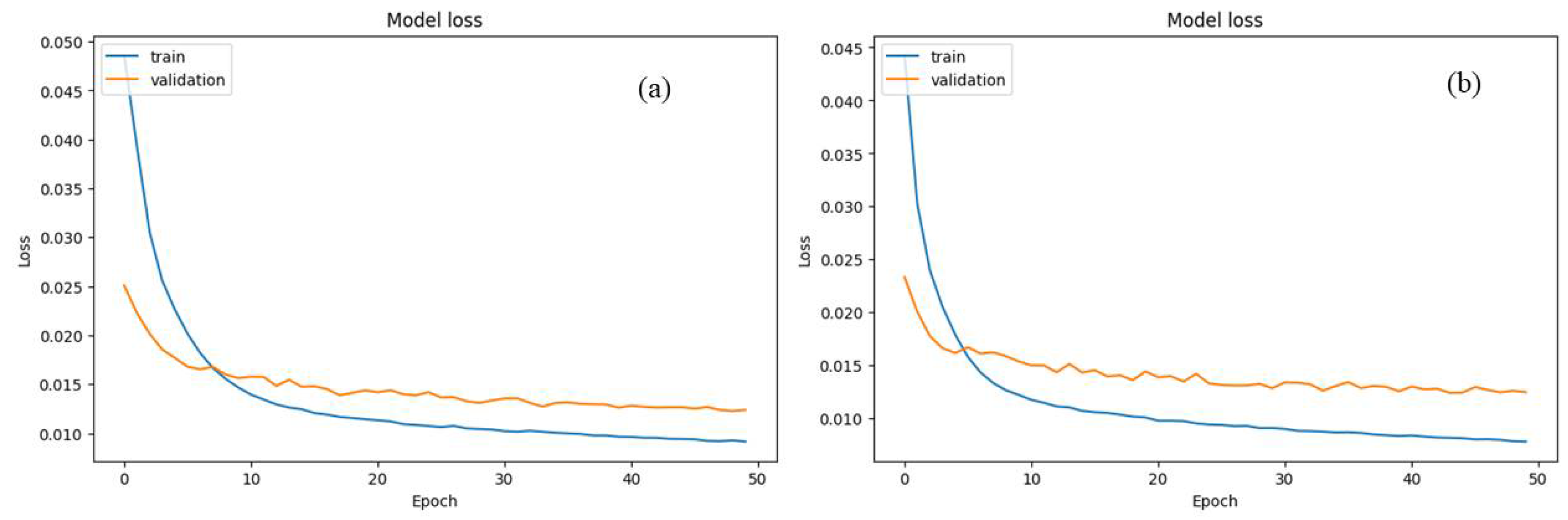

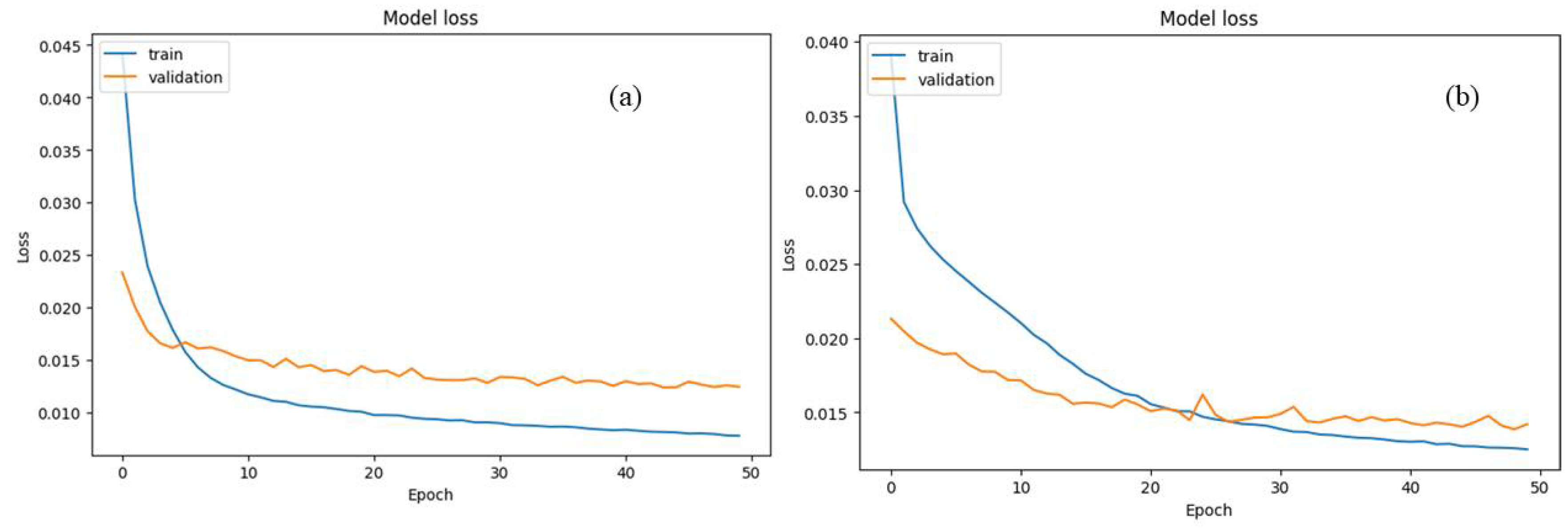

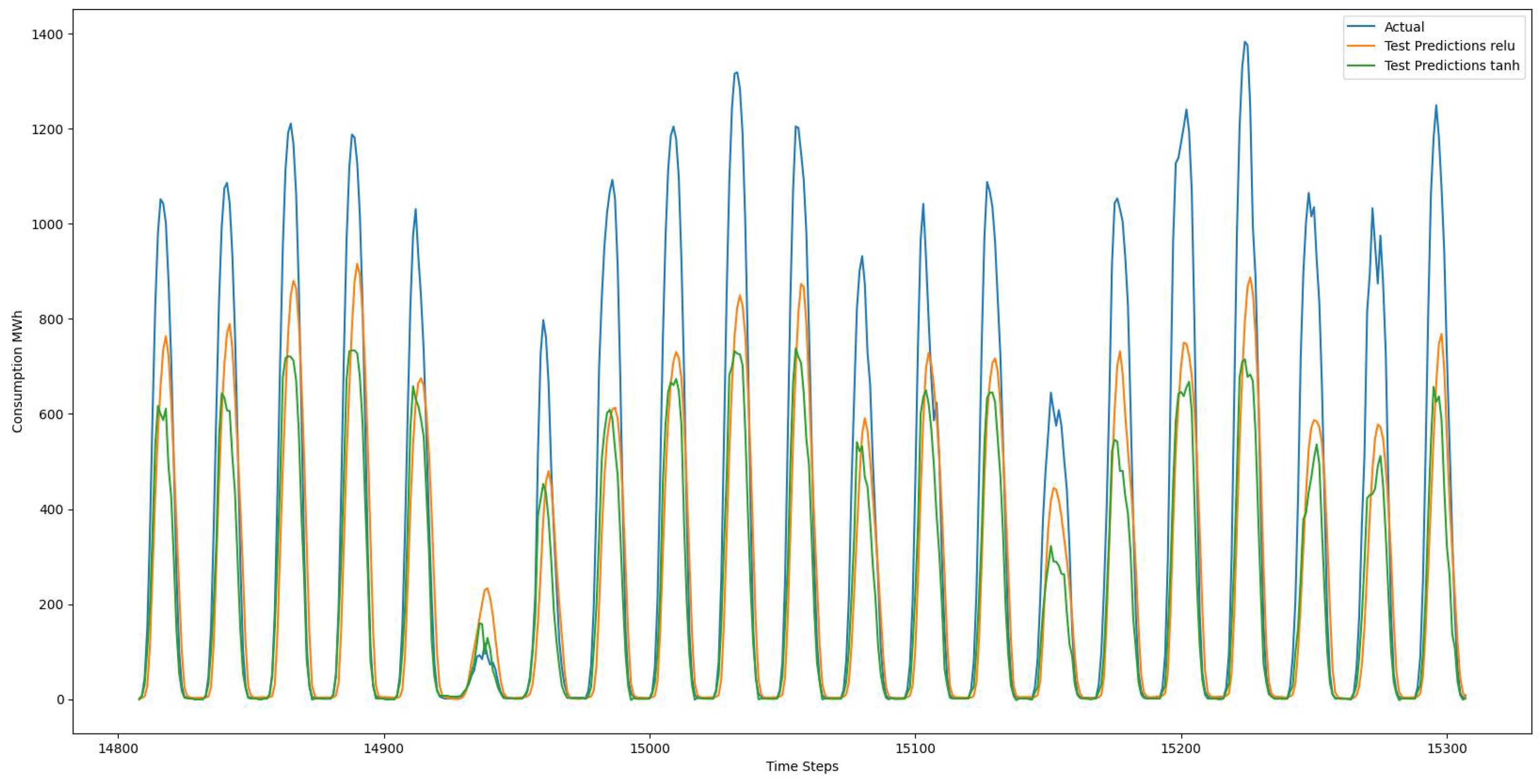

Figure 5 presents a comparative analysis of the activation functions ReLU (Rectified Linear Unit) and tanh in the Long Short-Term Memory (LSTM) model. The plot illustrates the impact of these activation functions on the LSTM's ability to capture complex patterns and dependencies within the solar energy production data. ReLU, known for mitigating the vanishing gradient problem, is contrasted with tanh, a smooth non-linearity whose output is bounded between -1 and 1. The objective is to assess how the choice of activation function influences the model's performance and its capacity to capture intricate relationships. In Figure 6, a similar examination is conducted on the Bidirectional Long Short-Term Memory (Bi-LSTM) model, again employing ReLU and tanh as activation functions. Given Bi-LSTM's bidirectional nature, understanding the impact of the activation function is important for capturing dependencies from both past and future information. Together, these figures inform the selection of activation functions for LSTM and Bi-LSTM models according to the characteristics of the dataset and the forecasting task.
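The difference between the two activation functions can be seen directly from their definitions and gradients. The sketch below is a generic illustration and is independent of the models trained in this study; the function names and sample inputs are chosen for the example.

```python
import numpy as np

def relu(x):
    """ReLU: passes positive values unchanged and clips negatives to zero."""
    return np.maximum(0.0, x)

def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise."""
    return (x > 0).astype(float)

def tanh_grad(x):
    """Gradient of tanh: 1 - tanh(x)^2, which shrinks toward 0 for large |x|."""
    return 1.0 - np.tanh(x) ** 2

x = np.array([-5.0, -1.0, 0.5, 5.0])

relu_out = relu(x)          # unbounded above
tanh_out = np.tanh(x)       # always strictly between -1 and 1
relu_slopes = relu_grad(x)
tanh_slopes = tanh_grad(x)
```

For large positive inputs the ReLU gradient stays at 1 while the tanh gradient approaches 0, which is the usual argument for ReLU helping with vanishing gradients; the bounded output of tanh, in turn, keeps activations on a fixed scale.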

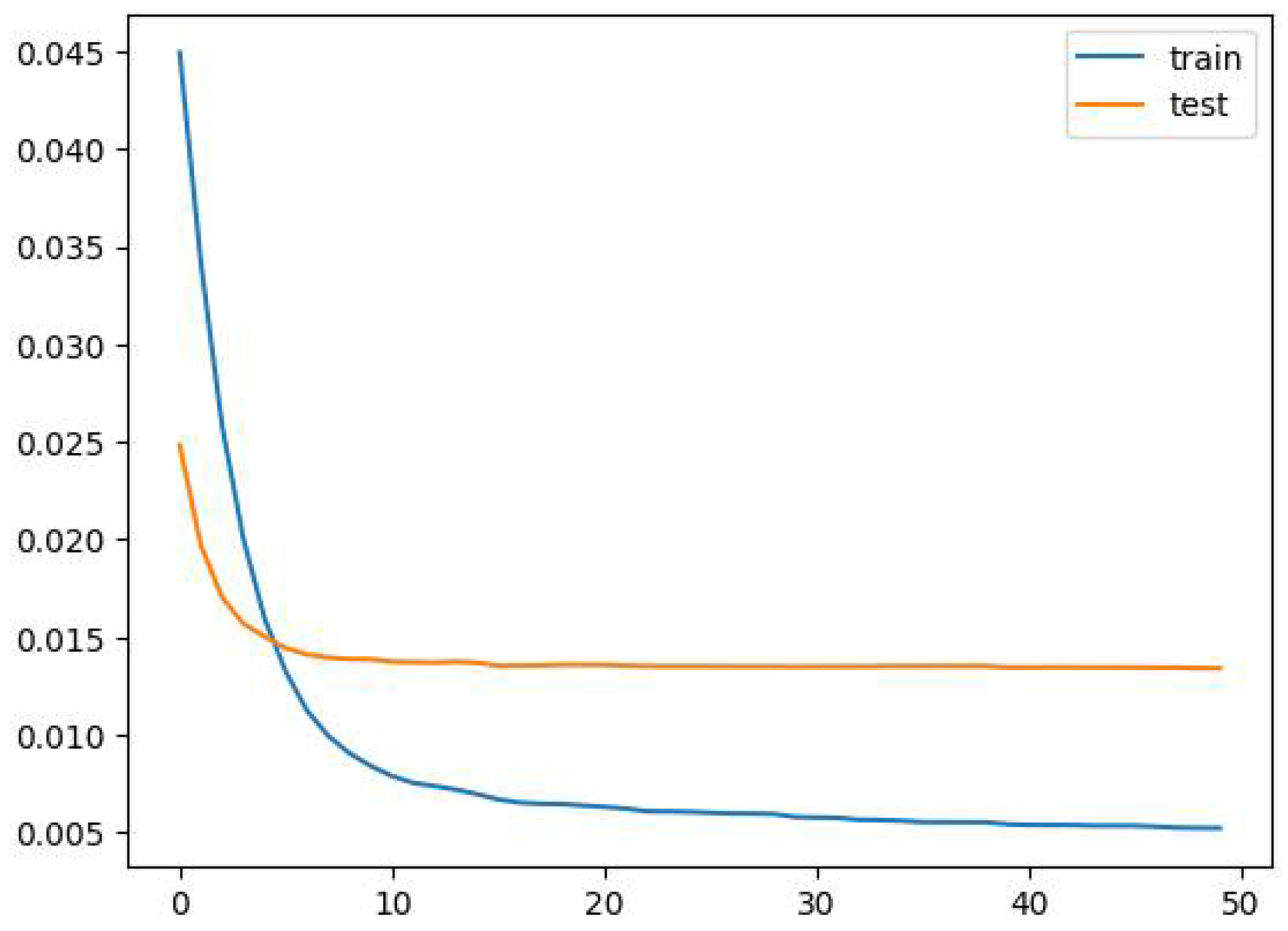

Figure 7 presents the loss as a function of training epoch (for XGBoost, a boosting round), offering insight into the training dynamics and convergence behavior of the model. The curve shows how the loss evolves over successive epochs and therefore indicates convergence speed, convergence stability, and potential overfitting or underfitting. Analyzing the trend in the loss curve helps identify the optimal number of epochs, beyond which further training may reduce generalization on unseen data. Interpreting Figure 7 in this way supports informed decisions on hyperparameter tuning, regularization, and overall model optimization.
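One common way to turn a loss curve into a stopping point is a patience rule on the validation loss. The helper below is a hypothetical sketch of that rule, not the procedure used to produce Figure 7, and the loss values are invented for illustration.

```python
def best_round(val_losses, patience=3):
    """Return the index of the lowest validation loss seen before patience runs out.

    Scanning stops once `patience` consecutive rounds fail to improve on the best loss.
    """
    best_idx, best_loss = 0, float("inf")
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_idx, best_loss = i, loss
        elif i - best_idx >= patience:
            break
    return best_idx

# Invented validation curve: improves, then drifts upward as overfitting sets in.
val_losses = [1.00, 0.80, 0.60, 0.55, 0.57, 0.60, 0.62, 0.50]

stop_short = best_round(val_losses, patience=3)
stop_long = best_round(val_losses, patience=10)
```

With `patience=3` the scan stops at index 3 and never reaches the later dip, while a larger patience finds it at index 7; the patience value therefore trades training cost against the risk of stopping on a temporary plateau.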

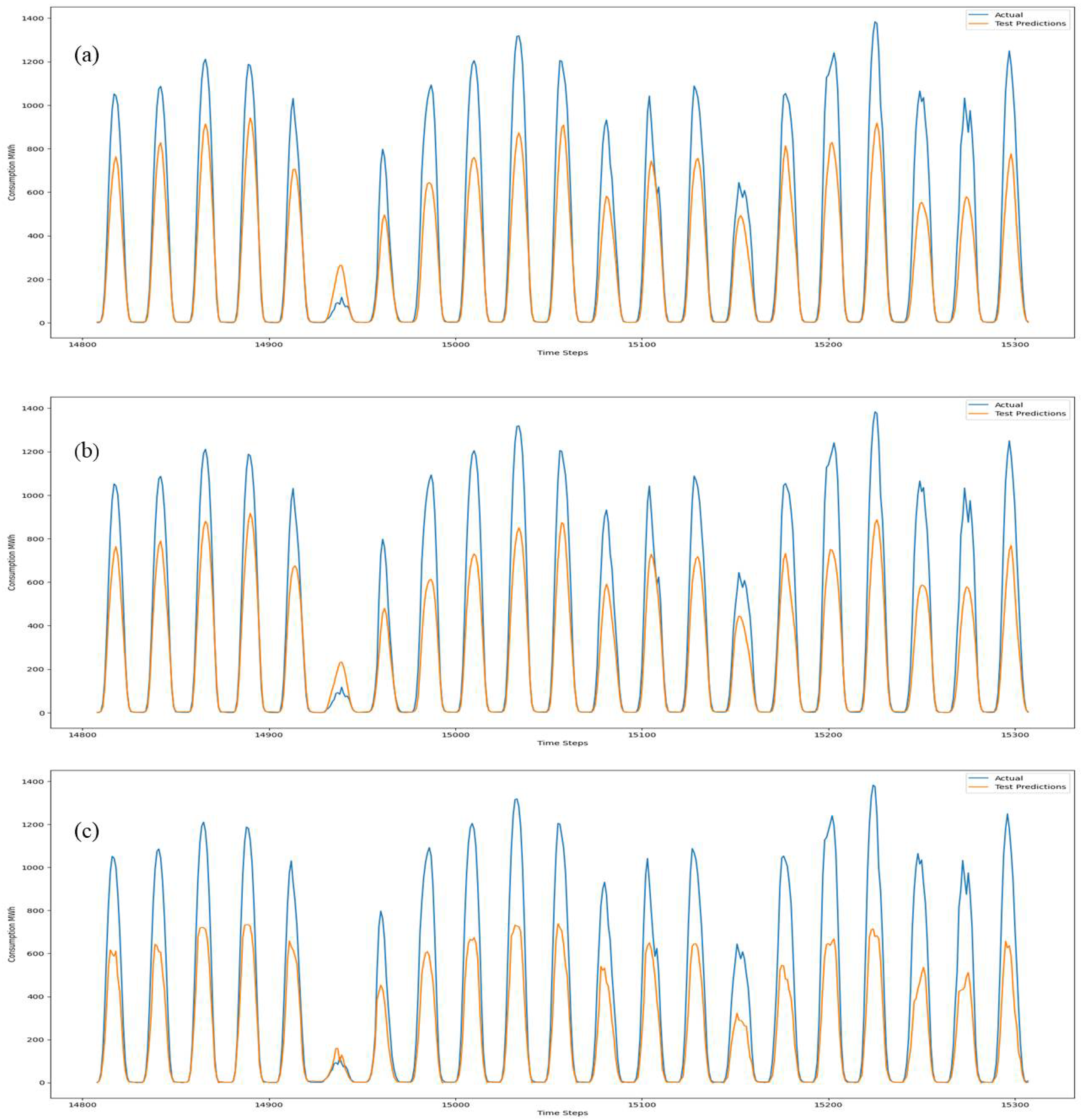

Figure 8 comprises three distinct subplots, each illustrating the comparison between real and predicted energy generation over successive timesteps for the Long Short Term Memory (LSTM), Bidirectional Long Short Term Memory (BiLSTM), and XGBoost models.

The blue line represents the actual energy generation values over different timesteps, while the orange line depicts the corresponding predicted values generated by models. This visual representation allows for a direct comparison between the model's predictions and the ground truth, providing insights into the model's accuracy in capturing temporal dependencies.

The visual assessment in Figure 8 helps identify how well each model aligns with the actual energy generation trends. Discrepancies between real and predicted values can highlight areas for improvement or strengths in the models' forecasting capabilities. Researchers and practitioners can use these visualizations to refine and optimize their chosen models for more accurate and reliable solar energy production predictions.

Figure 9 shows the influence of the activation functions ReLU (Rectified Linear Unit) and tanh on the predictions of the Bidirectional Long Short-Term Memory (Bi-LSTM) model for the test dataset. The plot contains three lines: the actual energy generation over the timesteps (blue line), the values predicted by the Bi-LSTM model with ReLU activation (orange line), and the values predicted with tanh activation (green line). This comparison allows a direct evaluation of how the choice of activation function affects the Bi-LSTM model's ability to forecast energy generation patterns.

The influence of data characteristics, including variations in sunlight intensity, temperature, and humidity, on model performance is evident. Models may exhibit varying levels of sensitivity to different climatic factors, emphasizing the importance of tailoring the choice of model to the unique attributes of the dataset. In conclusion, the comparative analysis provides a comprehensive understanding of the performance of BiLSTM, LSTM and XGBoost models in predicting solar energy production. The insights gained contribute valuable knowledge to the field of renewable energy forecasting, paving the way for improved methodologies and informed decision-making in the transition towards sustainable energy systems.

The performance metrics for the three models, Bidirectional Long Short-Term Memory (Bi-LSTM), Long Short-Term Memory (LSTM), and XGBoost, based on MAE and RMSE for both the training and testing datasets, are summarized in Table 1.

The XGBoost model exhibits the lowest Train MAE and RMSE, indicating its effectiveness in capturing patterns in the training data. However, its Test MAE and RMSE are higher than those of the Bi-LSTM and LSTM models, which points to difficulty in generalizing to unseen data. The Bi-LSTM and LSTM models achieve comparable performance, with Bi-LSTM slightly outperforming LSTM on both the Train and Test metrics. The overall performance of each model should be judged on the balance between Train and Test metrics, that is, on its ability both to capture patterns during training and to generalize to new data during testing.

Conclusion

This study conducted a comprehensive investigation into the predictive capabilities of three distinct models—Long Short Term Memory (LSTM), Bidirectional Long Short Term Memory (BiLSTM), and XGBoost—in the context of solar energy production forecasting. The evaluation encompassed a thorough analysis of training and testing metrics, activation function impact, and real vs. predicted energy generation over timesteps. The results revealed nuanced differences among the models, each demonstrating unique strengths and considerations. The Bi-LSTM model exhibited superior performance in capturing both short and long-term dependencies, achieving the lowest Test MAE and RMSE. The LSTM model, while comparable to Bi-LSTM, showed slightly higher metrics. The XGBoost model demonstrated strong training performance but faced challenges in generalizing to unseen data, evidenced by higher Test MAE and RMSE. Activation function analysis highlighted the influence of ReLU and tanh on Bi-LSTM predictions, providing insights into their impact on forecasting accuracy. Real vs. predicted energy generation visualizations illustrated the models' effectiveness in aligning predictions with actual trends. Ultimately, the choice of the most suitable model depends on specific forecasting requirements, computational considerations, and the dataset's characteristics. Future work may explore hybrid models, further tuning of hyperparameters, and the transferability of models to diverse geographical locations. Overall, this study marks a significant step toward advancing the understanding and application of machine learning techniques in renewable energy prediction.

References

1. T. Fischer, C. Krauss, Deep learning with long short-term memory networks for financial market predictions, Eur J Oper Res 270 (2018) 654–669.

2. J. Shi, W.J. Lee, Y. Liu, Y. Yang, P. Wang, Forecasting power output of photovoltaic systems based on weather classification and support vector machines, IEEE Trans Ind Appl 48 (2012) 1064–1069.

3. H. Sangrody, M. Sarailoo, N. Zhou, N. Tran, M. Motalleb, E. Foruzan, Weather forecasting error in solar energy forecasting, IET Renewable Power Generation 11 (2017) 1274–1280.

4. R. Al-Hajj, A. Assi, M. Fouad, Short-Term Prediction of Global Solar Radiation Energy Using Weather Data and Machine Learning Ensembles: A Comparative Study, Journal of Solar Energy Engineering, Transactions of the ASME 143 (2021).

5. Q.T. Phan, Y.K. Wu, Q.D. Phan, Short-term Solar Power Forecasting Using XGBoost with Numerical Weather Prediction, 2021 IEEE International Future Energy Electronics Conference, IFEEC 2021 (2021).

6. A. Gensler, J. Henze, B. Sick, N. Raabe, Deep Learning for solar power forecasting - An approach using AutoEncoder and LSTM Neural Networks, 2016 IEEE International Conference on Systems, Man, and Cybernetics, SMC 2016 - Conference Proceedings (2017) 2858–2865.

7. S.C. Lim, J.H. Huh, S.H. Hong, C.Y. Park, J.C. Kim, Solar Power Forecasting Using CNN-LSTM Hybrid Model, Energies 15 (2022) 8233.

8. H.C. de C. Filho, O.A. de C. Júnior, O.L.F. de Carvalho, P.P. de Bem, R. dos S. de Moura, A.O. de Albuquerque, C.R. Silva, P.H.G. Ferreira, R.F. Guimarães, R.A.T. Gomes, Rice Crop Detection Using LSTM, Bi-LSTM, and Machine Learning Models from Sentinel-1 Time Series, Remote Sensing 12 (2020) 2655.

9. M.J. Hamayel, A.Y. Owda, A Novel Cryptocurrency Price Prediction Model Using GRU, LSTM and bi-LSTM Machine Learning Algorithms, AI 2 (2021) 477–496.

10. J. Ma, Z. Yu, Y. Qu, J. Xu, Y. Cao, Application of the XGBoost Machine Learning Method in PM2.5 Prediction: A Case Study of Shanghai, Aerosol Air Qual Res 20 (2020) 128–138.

11. X. Zhu, J. Chu, K. Wang, S. Wu, W. Yan, K. Chiam, Prediction of rockhead using a hybrid N-XGBoost machine learning framework, Journal of Rock Mechanics and Geotechnical Engineering 13 (2021) 1231–1245.

12. S. Shan, H. Ni, G. Chen, X. Lin, J. Li, A Machine Learning Framework for Enhancing Short-Term Water Demand Forecasting Using Attention-BiLSTM Networks Integrated with XGBoost Residual Correction, Water 15 (2023) 3605.

13. S. Siami-Namini, N. Tavakoli, A. Siami Namin, A Comparison of ARIMA and LSTM in Forecasting Time Series, Proceedings - 17th IEEE International Conference on Machine Learning and Applications, ICMLA 2018 (2018) 1394–1401.

14. H. Abbasimehr, M. Shabani, M. Yousefi, An optimized model using LSTM network for demand forecasting, Comput Ind Eng 143 (2020) 106435.

15. S. Siami-Namini, N. Tavakoli, A.S. Namin, The Performance of LSTM and BiLSTM in Forecasting Time Series, Proceedings - 2019 IEEE International Conference on Big Data, Big Data 2019 (2019) 3285–3292.

16. J. Kim, N. Moon, BiLSTM model based on multivariate time series data in multiple field for forecasting trading area, J Ambient Intell Humaniz Comput (2019) 1–10.

17. J. Luo, Z. Zhang, Y. Fu, F. Rao, Time series prediction of COVID-19 transmission in America using LSTM and XGBoost algorithms, Results Phys 27 (2021) 104462.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).